
Abstract

Dialogue robots have gained widespread use in various domains. However, their ability to engage in conversations about actions that robots cannot perform, such as eating and traveling, remains a challenge. This study proposes a dialogue strategy called Agreebot Introduction Dialogue to enhance the acceptability of robot statements related to actions that robots are incapable of performing. This strategy involves referring to the presence of another robot with the same opinion. We examined our proposed method by conducting a dialogue experiment with 43 participants. The results indicated that the proposed dialogue strategy increased the acceptance of the robot's statements regarding food topics and improved user engagement behavior. This suggests that our strategy enables dialogue robots to participate effectively in diverse scenarios, including conversations on certain topics that are typically challenging for robots.

1. Introduction

The application of dialogue robots has extended to various domains of society [Citation1]. In recent years, they have been employed not only to provide weather updates [Citation2] and news [Citation3] to users but also to recommend food menus [Citation4–6] and travel destinations [Citation7,Citation8]. Additionally, dialogue robots are used to engage in chitchat with users [Citation9–11]. Personal preferences, such as favorite foods and desired travel destinations, have attracted attention as chitchat topics [Citation10,Citation11]. Therefore, in both task-oriented dialogue (e.g. recommendations) and non-task-oriented dialogue (e.g. chitchat), food and travel are common topics frequently addressed by dialogue robots.

In interactions related to these topics, it is assumed that dialogue robots will be expected to express their opinions [Citation10–12]. For instance, users may occasionally seek the dialogue robot's personal opinions and experiences during a conversation, such as by asking questions like ‘What do you think about xx?’ In such cases, it is desirable for the robot to express its opinions to meet the users' expectations and maintain their engagement in the dialogue [Citation13,Citation14]. The incorporation of the dialogue robot's opinions is considered important because previous research has indicated a positive correlation between the frequency of subjective opinion exchanges and degree of engagement in the dialogue [Citation15]. Hence, to facilitate user engagement in conversations, it is necessary for dialogue robots to express their opinions.

However, certain human-like activities, such as eating and traveling, are inherently beyond the capabilities of robots, and users may find it difficult to accept a robot's personal opinions regarding these activities (for example, ‘I like eating spicy food’ or ‘I like going to hot springs’). In general, robots cannot consume food or have taste preferences in the way humans do. Furthermore, robots cannot physically travel to destinations or enjoy experiences such as bathing in a hot spring. Consequently, even if a robot expresses its own opinions about eating or traveling during a conversation, users may struggle to accept these opinions [Citation16]. This can lead to a perceived lack of authenticity in human–robot interactions and a decrease in users' willingness to interact with robots. Previous research has reported that people are less willing to interact with robots on topics for which it is difficult to attribute experience to robots [Citation13]. Therefore, to enable dialogue robots to participate effectively in diverse scenarios, including conversations about food or travel, it is important to enhance the user's acceptance of the robot's statements.

Currently, there is little research on enhancing the acceptability of robot statements regarding actions that humans can perform but robots cannot, such as eating and traveling. Consequently, there is no established method to address this issue. An intuitive approach to tackling this problem involves altering the robot's appearance to make it more human-like [Citation17], aiming to give the user the impression that the robot can experience enjoyment from human-like activities. However, whether employing robots with hardware that closely resembles humans [Citation17], such as androids (e.g. [Citation18]), can improve the acceptability of a robot's statements remains unclear. In addition, this approach, which primarily focuses on hardware, is not considered a cost-effective or practical solution because of financial constraints and installation location limitations. Hence, it is preferable to employ methods that are not dependent on hardware and instead focus on software aspects, such as dialogue strategies.

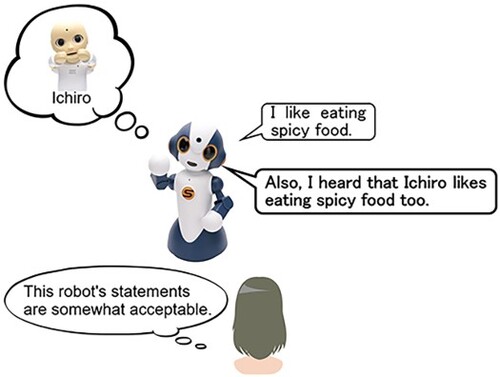

Therefore, in this study, we propose a dialogue strategy aimed at enhancing the acceptance of a robot's statements regarding actions beyond its inherent capabilities. Specifically, we suggest a dialogue strategy in which a robot refers to another robot that shares the same opinion, using a third-party reference structure (see Figure 1). We term this dialogue strategy the ‘Agreebot Introduction Dialogue.’ This strategy consists of two components: what to convey, and how to convey it. Regarding what to convey, the robot conveys the presence of another robot that shares the same opinion. Regarding how to convey it, the robot uses a third-party reference structure. These two components are described separately below.

Figure 1. Proposed dialogue strategy (i.e. Agreebot Introduction Dialogue). Ichiro is the name of the robot that previously interacted with the participant.

What to convey. In the Agreebot Introduction Dialogue, a robot mentions another robot that shares the same opinion. If a robot highlights the presence of another robot with the same opinion, it can create the perception among users that multiple robots endorse the same opinion. This perception is expected to make users more likely to agree with and conform to a robot's opinion. Several previous studies have demonstrated the effectiveness of making users perceive that multiple individuals or robots hold the same opinion. For example, Asch [Citation19] and Sherif [Citation20] found that people tend to conform to an opinion that is supported by multiple individuals, even if they hold different perspectives. Similarly, even in the context of human–robot interactions, it has been suggested that people are more likely to align with opinions supported by multiple robots [Citation21,Citation22]. These studies suggest that our dialogue strategy, which makes users aware that multiple robots share the same opinion, is expected to be effective in increasing user conformity and acceptance of the robot's statements, even when users initially hold a different perspective and may find it difficult to accept the robot's statements.

How to convey. In the Agreebot Introduction Dialogue, a third-party reference structure is used to convey the presence of another robot. That is, one robot refers to the presence of another robot in a manner similar to gossiping. Previous research suggests that when a robot refers to a third party that is not present in the conversation, it can give the impression that the robot has experience interacting with that third party. This impression can lead the user to perceive the robot as a more social and human-like entity. For instance, several studies [Citation23–25] reported that when a robot mentions humans with whom it has interacted in the past, users perceive the robot as having better social skills and a more human-like nature. Another study [Citation26] explored the effect of a robot mentioning information about another robot, making users aware of previous robot-to-robot conversations. The findings of that study indicated that referring to another robot could enhance the referring robot's perceived agency, including its communication skills. Therefore, third-party references by a robot are expected to make users perceive the robot as more human-like, thereby facilitating their acceptance of the robot's statements regarding actions that only humans can perform.
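The two components described above (what to convey, and how to convey it) can be sketched as a simple utterance template. This is a minimal illustration, not the authors' implementation: the `Opinion` structure, the `compose_turn` function, and the template wording are hypothetical, modeled on the example utterances quoted in this paper, and the robot name ‘Ichiro’ is taken from the Figure 1 caption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Opinion:
    """A robot opinion about an action it cannot physically perform (hypothetical structure)."""
    topic: str       # e.g. "food"
    statement: str   # first-person form, e.g. "I like eating spicy food"
    predicate: str   # third-person form reused in the reference, e.g. "likes eating spicy food"


def compose_turn(opinion: Opinion, agreebot: Optional[str] = None) -> list[str]:
    """Return the robot's utterances for one topic.

    With `agreebot` set (experimental condition), the robot adds a third-party
    reference to another, absent robot that shares the same opinion.
    With None (control condition), it states only its own opinion.
    """
    utterances = [f"{opinion.statement}."]
    if agreebot is not None:
        # Third-party reference: the other robot is talked about, not present.
        utterances.append(f"Also, I heard that {agreebot} {opinion.predicate} too.")
    return utterances


spicy = Opinion("food", "I like eating spicy food", "likes eating spicy food")
print(compose_turn(spicy, agreebot="Ichiro"))  # experimental condition
print(compose_turn(spicy))                     # control condition
```

Keeping the Agreebot reference as an optional argument means the control turn is the same turn with that argument omitted, so the two conditions differ only in the third-party sentence.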

Given the above, the purpose of this research was to enhance the acceptance of a robot's statements related to actions beyond its inherent capabilities by having the robot refer to another robot that shares the same opinion (i.e. Agreebot Introduction Dialogue). The hypotheses of this study are as follows:

Hypothesis 1: Agreebot Introduction Dialogue facilitates the acceptance of the robot's statements regarding actions that the robot cannot perform.

Hypothesis 2: Agreebot Introduction Dialogue enhances the user's engagement in the dialogue.

In this study, we focused on a robot's statements regarding actions beyond its inherent capabilities. Specifically, we aimed to investigate whether the proposed dialogue strategy can enhance the acceptability of a robot's statements such as ‘I like eating spicy food’ and ‘I like going to hot springs’ (Hypothesis 1). Previous research indicated that when people accept a robot's statements, they are more motivated to interact with it [Citation13]. Therefore, if the proposed dialogue strategy improves the acceptability of the robot's statements, we expect users to become more motivated to converse and thus to show increased engagement in the dialogue with the robot (Hypothesis 2).

The remainder of this paper is organized as follows: Section 2 provides a comprehensive description of the experiment conducted to test the hypotheses. Section 3 presents the experimental results. Section 4 presents a thorough discussion of the experimental findings. Finally, Section 5 concludes the study.

2. Method

2.1. Experimental design

To investigate the effectiveness of our proposed dialogue strategy, we conducted a dialogue experiment using a between-subjects design with two conditions: experimental and control. Each participant engaged in two dialogues with two different robots (Figure 2). The first dialogue was identical in both conditions. In the second dialogue under the experimental condition, the robot not only expressed its own opinion but also mentioned the presence of another robot sharing the same opinion. In contrast, in the control condition, the robot expressed only its own opinion and did not refer to the other robot.

Figure 2. Experimental flow. ‘Another robot's opinion’ in the red box appears only in the experimental condition.

The between-subjects design was chosen for two reasons. First, it mitigates potential demand effects that could arise if participants noticed the differences between the two conditions [Citation27,Citation28]. Second, it avoids order effects that could result from each participant conducting two similar dialogues, one per condition [Citation28].

This experiment was conducted with the approval of the ethics committee for research involving human subjects at the Graduate School of Engineering Science, Osaka University (No. 31-1). All participants provided written informed consent before they participated in the experiment.

2.2. Participants

A total of 43 participants took part in this experiment, all of whom were Japanese-speaking undergraduate or graduate students. Of these, 22 were assigned to the experimental condition (12 males, nine females, and one non-binary) and 21 to the control condition (12 males, nine females). The average age of the participants was 21.41 years in the experimental condition and 21.10 years in the control condition.

2.3. Apparatus

Robots: This study employed two dialogue robots, CommU and Sota (Figure 3(a)), manufactured by Vstone. CommU and Sota are compact tabletop dialogue robots, each approximately 30 cm tall, that can move their arms and necks; both are equipped with built-in speakers in their chests. CommU was used in the first dialogue session and Sota in the second.

Figure 3. Dialogue system. (a) Robots (left: CommU, right: Sota). (b) Tablet-based dialogue system and (c) System architecture.

Tablet-based dialogue system: A tablet-based dialogue system was implemented to reduce the possibility of dialogue breakdown caused by speech recognition failures or by participant utterances deviating from the scenario [Citation29] (Figure 3(b,c)). The dialogue proceeded according to pre-established scenario scripts. The robots initiated the conversation by speaking to the participants, who responded by pressing buttons on the tablet. When a response was solicited, the tablet displayed one or two candidate response sentences as buttons and synthesized speech for the sentence the participant selected, thereby speaking on their behalf. Additionally, the tablet displayed questionnaires that allowed the participants to evaluate the robot's statements during the conversation. This functionality enabled the participants to rate their impression of specific robot utterances immediately after they were made, which was intended to improve the reliability of the collected data.
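A scripted, tablet-driven flow of this kind can be sketched as a loop over scenario steps. This is a hypothetical Python sketch, not the system used in the study: `Step`, `run_scenario`, and the `choose`, `rate`, and `speak` callbacks are assumed names, with the callbacks standing in for the tablet buttons, the on-screen questionnaire, and speech output, respectively.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    """One turn of a fixed scenario script (hypothetical structure)."""
    robot_utterance: str
    # Candidate participant responses shown as tablet buttons (one or two).
    candidates: list[str] = field(default_factory=list)
    # Optional 7-point Likert item shown right after this turn.
    questionnaire_item: Optional[str] = None


def run_scenario(
    steps: list[Step],
    choose: Callable[[list[str]], int],
    rate: Callable[[str], int],
    speak: Callable[[str, str], None] = lambda who, text: None,
) -> dict[str, int]:
    """Play a scripted dialogue and return the participant's Likert ratings.

    `choose` stands in for a button press, `rate` for the on-screen
    questionnaire, and `speak` for the robot's or tablet's speech output.
    """
    ratings: dict[str, int] = {}
    for step in steps:
        speak("robot", step.robot_utterance)
        if step.candidates:
            if not 1 <= len(step.candidates) <= 2:
                raise ValueError("the tablet shows one or two response buttons")
            choice = choose(step.candidates)
            # The tablet voices the chosen sentence on the participant's behalf.
            speak("tablet", step.candidates[choice])
        if step.questionnaire_item is not None:
            score = rate(step.questionnaire_item)
            if not 1 <= score <= 7:
                raise ValueError("ratings use a 7-point scale (1-7)")
            ratings[step.questionnaire_item] = score
    return ratings


# Dry run with simulated inputs: always press the first button, always rate 5.
script = [
    Step("Do you eat sweets often?", ["Yes, I do.", "Not really."]),
    Step(
        "I like eating spicy food.",
        ["I see."],
        questionnaire_item="I really believe that this robot likes eating spicy food",
    ),
]
log: list[tuple[str, str]] = []
ratings = run_scenario(
    script,
    choose=lambda candidates: 0,
    rate=lambda item: 5,
    speak=lambda who, text: log.append((who, text)),
)
print(ratings)
```

Injecting the input and output functions as callbacks lets the same script be dry-run without a robot or tablet, as in the last lines of the block.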

Environment: Two separate rooms were prepared with one robot placed in each room. Special attention was given to ensure that the experimenter was not visible to the participants during the dialogue, allowing them to concentrate on the interaction. The participants engaged in dialogue with CommU in the first room and then moved to the second room to have a conversation with Sota.

2.4. Procedure

The experimental flow is illustrated in Figure 2. The procedure consisted of the following steps: a first dialogue session with CommU, a second dialogue session with Sota, and completion of a questionnaire.

In the first dialogue session, the participants conversed with the robot on everyday topics (see Table ). During this session, the robot made no statements regarding its ability to eat or travel. In the second dialogue session, the participants discussed two topics: their favorite food and how they spent their holidays (see Table ). We chose multiple topics instead of a single topic to assess the broader applicability of our dialogue strategy, in line with previous studies in which robots referred to other robots in their dialogues (e.g. [Citation24,Citation26]). For each topic, the robot first asked the participant a question (e.g. ‘Do you eat sweets often?’ or ‘Do you often play games on holidays?’) and then disclosed its own opinion (e.g. ‘I like eating spicy food’ or ‘I like going to hot springs on holidays’). In the experimental condition, the robot also mentioned the presence of another robot that shared the same opinion (e.g. ‘Also, I heard that [robot1] likes eating spicy food too’), whereas in the control condition it did not mention the other robot. After each topic, the participants rated their acceptance of the robot's statement on the tablet. The order of the two topics was counterbalanced across participants to avoid order effects. After completing both topics, the participants answered a questionnaire evaluating their impressions of the robot they had conversed with in the second dialogue session.
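The paper states that topic order was counterbalanced but does not describe the assignment scheme. The sketch below assumes one simple scheme: rotating through all topic orderings by a participant's index within their condition, so that each order is used equally often in each condition. The function names and topic labels are hypothetical.

```python
from itertools import permutations

# Topics of the second dialogue session (labels are illustrative).
TOPICS = ("food", "holiday")


def topic_order(participant_index: int, topics: tuple[str, ...] = TOPICS) -> tuple[str, ...]:
    """Rotate through every ordering so each order is used equally often.

    With two topics this simply alternates food-first and holiday-first.
    """
    orders = list(permutations(topics))
    return orders[participant_index % len(orders)]


def second_session_plan(participant_index: int, condition: str) -> list[tuple[str, bool]]:
    """Return (topic, mention_agreebot) pairs for one participant's second session."""
    if condition not in ("experimental", "control"):
        raise ValueError(f"unknown condition: {condition!r}")
    mention_agreebot = condition == "experimental"
    return [(topic, mention_agreebot) for topic in topic_order(participant_index)]


print(second_session_plan(0, "experimental"))  # food first, with the Agreebot reference
print(second_session_plan(1, "control"))       # holiday first, without it
```

Indexing participants within each condition, rather than globally, keeps the two orders balanced inside both groups even when group sizes differ.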

2.5. Measurements

2.5.1. Acceptance of robot's statements

To verify Hypothesis 1, we assessed the level of acceptance of the robot's statements. Specifically, during the second dialogue session with the robot (see Figure 2), the participants were presented with a questionnaire on the tablet to evaluate their acceptance of the robot's statements. The questionnaire items included ‘I really believe that this robot likes eating spicy food’ and ‘I really believe that this robot likes going to hot springs’. The participants rated each item on a 7-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree).

2.5.2. User engagement

To verify Hypothesis 2, we assessed user engagement from two different aspects. First, we evaluated whether the participants returned a bow in response to the robot's bowing motion at the end of the second dialogue session. Figure 4 shows an example of a participant bowing in return to the robot. Several previous studies have used user behavior, such as posture during conversations, as an indicator of user engagement (e.g. [Citation30]). Bowing is a widely used gesture in Japan [Citation31] and is considered one of the most important nonverbal communication cues in Japanese culture [Citation32]. In addition, returning a bow is regarded as a type of synchronous behavior that is likely to occur when a person has a positive impression of another person [Citation33–35]. Furthermore, people have been observed to bow to robots more often when they perceive the robots as remotely operated rather than autonomous [Citation36], suggesting that users are more likely to bow when robots are perceived as acting in a human-like manner. Therefore, this study used the reciprocation of the bow to assess user engagement.

Figure 4. Participant bowing back to the robot. The left figure shows the robot bowing and the right figure shows the participant bowing in return.

Moreover, as another measure of engagement, we evaluated Intention to Use [Citation37]. Intention to Use is a three-item questionnaire commonly used in human–robot interaction studies (e.g. [Citation23,Citation25]) to assess whether users intend to converse with the robot again. After completing the two dialogues, the participants rated their intention to use the second robot on a 7-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree). Because the three items showed a high Cronbach's alpha reliability coefficient, we used the mean score of the three items in the analysis.
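The reliability check and the per-participant score described above can be sketched with the standard library. This is a minimal illustration; the function names are hypothetical and the ratings are invented, so the printed alpha does not reproduce the study's value.

```python
from statistics import mean, variance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a participants x items rating matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)),
    where k is the number of items. Sample variances are used throughout;
    the n - 1 denominators cancel in the ratio.
    """
    k = len(rows[0])
    if k < 2 or any(len(r) != k for r in rows):
        raise ValueError("need a rectangular matrix with at least two items")
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def scale_scores(rows: list[list[float]]) -> list[float]:
    """Per-participant mean across the items (the score entered into the analysis)."""
    return [mean(r) for r in rows]


# Invented ratings for four participants on three 7-point items.
ratings = [[5, 6, 5], [3, 3, 4], [7, 6, 7], [2, 3, 2]]
print(round(cronbach_alpha(ratings), 3))
print(scale_scores(ratings))
```

Averaging the items is only justified when they hang together, which is why the alpha check comes first.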

3. Results

3.1. Data exclusion

During the experiment, the dialogues took place in an environment where the experimenter was not visible, which raised the concern that participants may not have paid sufficient attention to the conversations with the robot. Therefore, after the experiment, we reviewed the video of each participant's interaction with the robot and identified one participant (male, control condition) who paid minimal attention to the robot's utterances and gestures. The data from this participant were excluded, and subsequent analyses were conducted using the data from the remaining 42 participants.

3.2. Acceptance of robot's statements

To examine the differences between the conditions in the acceptance of the robot's statements, unpaired t-tests were conducted separately for each statement: ‘I like eating spicy food’ and ‘I like going to hot springs’ (see Figure 5). The acceptance score for the robot's statement about liking spicy food was significantly higher in the experimental condition than in the control condition. For the robot's statement about liking hot springs, no significant difference between the conditions was found, although the mean score was higher in the experimental condition than in the control condition.
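The unpaired comparison can be sketched with the standard library. The paper does not state whether the pooled-variance (Student's) or Welch's variant was used, so both are shown; the function names and ratings are hypothetical. After the exclusion described above, the groups contain 22 and 20 participants, which gives 40 degrees of freedom under the pooled-variance test. If SciPy is available, `scipy.stats.ttest_ind(a, b)` returns the same Student's statistic together with a two-sided p-value, and `equal_var=False` switches it to Welch's test.

```python
from math import sqrt
from statistics import mean, variance


def students_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Independent-samples (unpaired) Student's t-test with pooled variance.

    Returns the t statistic and its degrees of freedom (n1 + n2 - 2).
    """
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's variant: no equal-variance assumption, Welch-Satterthwaite df."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va**2 / (len(a) - 1) + vb**2 / (len(b) - 1))
    return t, df


# Invented 7-point acceptance ratings, for illustration only.
experimental = [5, 6, 4, 5, 6, 3, 5]
control = [3, 4, 2, 4, 3, 5, 3]
print(students_t(experimental, control))
print(welch_t(experimental, control))
```

With equal group sizes and equal variances the two variants coincide; they diverge when the groups differ in both size and spread.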

3.3. User engagement

To evaluate whether the participants reciprocated the robot's bow at the end of the conversation, two non-authors who were blind to the study's objectives first independently annotated the interaction videos (Figure 4). The initial annotations showed a high level of agreement, as indicated by a high kappa coefficient. In the three cases where the annotators disagreed, we followed previous annotation studies (e.g. [Citation38,Citation39]) and had them reach a consensus through discussion. Subsequently, a chi-square test was conducted to compare the proportion of participants who reciprocated the robot's bow between the conditions. The difference was marginally significant, with the experimental condition showing a higher proportion of bow reciprocation than the control condition (Figure 6).
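The agreement check and the between-condition comparison can be sketched as follows. This is a minimal standard-library illustration with hypothetical function names and invented inputs; the paper does not report whether a continuity correction was applied, so it is exposed as an option. For a 2x2 table, `scipy.stats.chi2_contingency` applies Yates' correction by default (`correction=True`), and `sklearn.metrics.cohen_kappa_score` computes the same kappa as below. The p-value uses the identity that a chi-square variable with one degree of freedom is the square of a standard normal variable.

```python
from collections import Counter
from math import erfc, sqrt


def cohens_kappa(labels1: list, labels2: list) -> float:
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels1) != len(labels2) or not labels1:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels1)
    p_observed = sum(x == y for x, y in zip(labels1, labels2)) / n
    c1, c2 = Counter(labels1), Counter(labels2)
    # Chance agreement from each annotator's marginal label frequencies.
    p_expected = sum(c1[c] * c2[c] for c in c1.keys() | c2.keys()) / n**2
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return erfc(sqrt(x / 2))


def chi_square_2x2(table: list[list[int]], yates: bool = False) -> tuple[float, float]:
    """Pearson chi-square test for a 2x2 table; returns (statistic, p-value).

    Rows: conditions (experimental, control); columns: bowed back, did not.
    `yates=True` applies the continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)
            chi2 += diff**2 / expected
    return chi2, chi2_sf_df1(chi2)


# Invented annotations (1 = bowed back, 0 = did not) and counts, for illustration only.
print(cohens_kappa([1, 0, 1, 1, 0], [1, 0, 1, 0, 0]))
print(chi_square_2x2([[10, 12], [5, 15]]))
```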

Next, an unpaired t-test was conducted to examine the differences between the conditions in Intention to Use. The results revealed no significant difference between the conditions.

4. Discussion

4.1. Acceptance of robot's statements

The proposed dialogue strategy significantly improved the acceptance of the robot's statement regarding its food preference. This finding partially supports Hypothesis 1, suggesting that the strategy makes it easier for participants to accept the robot's statements regarding certain actions beyond its inherent capabilities (i.e. eating spicy food). However, the effect of the strategy on acceptance appears to vary depending on the topic, as no significant effect was found for the statement about hot springs. A possible reason for this variability is that users perceive enjoying hot springs as more hazardous for a robot, and more difficult for it to perform, than eating spicy food, which makes them more reluctant to accept the robot's statement. This interpretation aligns with previous findings that the degree to which experience is attributed to robots varies depending on their capability to perform the action [Citation13,Citation16]. Therefore, it is necessary to further investigate the effectiveness of the proposed dialogue strategy for various categories of topics and to identify the topics for which it is particularly effective and those for which it is not.

Furthermore, the distribution of the acceptance scores in Figure 5 does not allow us to rule out the possibility that some participants believed that recent robots, including the robot used in our study, are inherently capable of consuming food and going to hot springs. Hence, to conduct more controlled experiments and further explore the domains in which the proposed strategy is applicable, human perceptions of the capabilities of recent robots need to be investigated.

4.2. User engagement

In the experimental condition, nearly twice as many participants bowed in return to the robot's bow as in the control condition, a marginally significant difference. Bow reciprocation is considered a behavior that occurs when the user has a positive impression of the robot and actively engages in dialogue [Citation33–35]. Our study has a potential limitation here: we did not fully account for individual differences in the tendency to bow. Given this limitation, the above result tentatively supports Hypothesis 2 and suggests that the proposed dialogue strategy not only improves the acceptance of the robot's statements regarding food topics but may also increase user engagement in the dialogue. Contrary to Hypothesis 2, however, no significant difference was observed between the conditions in terms of Intention to Use. One possible reason why the proposed strategy affected bow reciprocation but not Intention to Use is that the two measures capture different stages of engagement: bow reciprocation reflects engagement during the conversation, whereas Intention to Use reflects the user's willingness to engage in future conversations. In other words, the effect of the proposed dialogue strategy on user engagement may be limited to the current dialogue and may not extend to subsequent dialogues. Further studies are therefore required to strengthen the proposed approach. For instance, although this study employed a design with two robots expressing the same opinion, previous research on the conformity pressure exerted by robots suggested the usefulness of involving a larger number of robots, such as six [Citation21]. Thus, increasing the number of robots mentioned by the interacting robot may strengthen the influence of the proposed approach on the user's willingness to engage in future conversations with the robot.

4.3. Contributions

In this study, we confirmed that the proposed dialogue strategy could enhance the acceptance of a robot's statements and increase user engagement behavior when a robot discusses a certain topic beyond its inherent capabilities (i.e. eating spicy food). Improving the acceptability of robot statements regarding food topics is particularly important considering the increasing attempts to deploy robots that recommend food menus at restaurants [Citation4,Citation5] or bakeries [Citation6]. To the best of our knowledge, this study is the first to concentrate on increasing users' acceptance of the notion that a robot can form its own opinion regarding topics beyond its inherent capabilities.

Furthermore, an interesting observation in this study was that the proposed dialogue strategy not only increased the acceptance of the robot's statements but also potentially affected the dialogue behavior of the participants (i.e. bowing). This finding highlights the practical value of this approach, particularly considering the growing efforts to promote the widespread adoption of social robots in real-world settings [Citation40].

In addition, the proposed dialogue strategy does not require hardware modifications [Citation18] or complex machine learning algorithms [Citation41]. This strategy can be implemented by simply manipulating the speech content of a robot, making it a low-cost and highly practical solution. This enhances its feasibility and applicability to real-world scenarios.

4.4. Limitations

A limitation of this study is that we addressed only two actions that the robot cannot perform: eating spicy food and going to hot springs. Besides eating and traveling, there are various other experiences that are difficult to attribute to robots, and robots should be able to converse about these topics as well. For example, Sytsma and Machery reported that experiences involving physical or emotional suffering, such as pain or anger, are difficult to attribute to robots [Citation16]. When dialogue robots are used in counseling applications [Citation42,Citation43], the robot's empathic statements about the user's suffering (e.g. ‘I have had similar experiences as you, so I can understand your feelings well.’) are considered important [Citation44]. The proposed dialogue strategy may be effective in making such empathic statements more acceptable to the user. However, this experiment only confirmed the effectiveness of the dialogue strategy in improving the acceptance of the robot's statements about food topics, and its impact on other topics remains unclear. Therefore, as mentioned in Section 4.1, it is necessary to verify the usefulness of the proposed dialogue strategy for various topic categories.

Another limitation of this study is that our results have been demonstrated only for the specific robot used in the experiment (Sota; Figure 3(a)). In the field of human–robot interaction, robots vary widely in appearance, ranging from mechanical to very human-like [Citation17]. The degree of human-likeness in a robot's appearance may strongly influence the acceptability of statements regarding actions that humans can perform but robots cannot. Therefore, additional research is necessary to verify the effectiveness of the proposed dialogue strategy with robots that have different levels of human-likeness.

5. Conclusion

In this study, we proposed a dialogue strategy aimed at increasing the acceptability of a robot's statements regarding actions that it cannot perform. In this strategy, a robot mentions another robot that shares the same opinion. Through the experiment, we confirmed that the proposed dialogue strategy enhances the acceptance of the robot's statements and improves user engagement behavior when the robot discusses a certain type of topic beyond its inherent capabilities. An interesting observation in this study was that the proposed dialogue strategy may induce changes in the participants' dialogue behavior, which highlights its practical value. In future research, we will further investigate the effectiveness of the proposed dialogue strategy in human–robot dialogues on various topics and with different robots.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Seiya Mitsuno

Seiya Mitsuno received a Bachelor's degree in engineering science from Osaka University, Japan, in 2020. He is currently enrolled in the doctoral program in the Graduate School of Engineering Science at Osaka University. His research interests include human-robot interaction and dialogue systems.

Yuichiro Yoshikawa

Yuichiro Yoshikawa received a Ph.D. degree in engineering from Osaka University, Japan, in 2005. He is currently an associate professor at the Graduate School of Engineering Science, Osaka University. He is interested in the study of human-robot communication, especially about multiple-robot coordination and psychiatric or educational applications.

Midori Ban

Midori Ban received a Ph.D. degree in psychology from Doshisha University, Japan, in 2015. She is currently a specially appointed lecturer at the Graduate School of Engineering Science, Osaka University. Her research fields are developmental psychology and Human-Agent Interaction (HAI) studies.

Hiroshi Ishiguro

Hiroshi Ishiguro received a Ph.D. degree in engineering from Osaka University, Japan, in 1991. He is currently a professor at the Graduate School of Engineering Science, Osaka University. He is also a visiting director of ATR Hiroshi Ishiguro Laboratories. His expertise includes robotics, android science, and sensor networks.


References

- Wahde M, Virgolin M. Conversational agents: theory and applications. preprint, 2022. arXiv:2202.03164.

- Tulshan AS, Dhage SN. Survey on virtual assistant: Google Assistant, Siri, Cortana, Alexa. In: Advances in signal processing and intelligent recognition systems. Singapore: Springer; 2019. p. 190–201.

- Laban P, Canny J, Hearst MA. What's the latest? A question-driven news chatbot. preprint, 2021. arXiv:2105.05392.

- Herse S, Vitale J, Ebrahimian D, et al. Bon appetit! Robot persuasion for food recommendation. In: Companion of the 2018 ACM/IEEE International Conference on Human-Robot Interaction; HRI '18. Association for Computing Machinery; 2018. p. 125–126.

- Sakai K, Nakamura Y, Yoshikawa Y, et al. Effect of robot embodiment on satisfaction with recommendations in shopping malls. IEEE Robot Autom Lett. 2022;7(1):366–372. doi: 10.1109/LRA.2021.3128233

- Song S, Baba J, Okafuji Y, et al. Out for in! Empirical study on the combination power of two service robots for product recommendation. In: Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction; HRI '23. Association for Computing Machinery; 2023. p. 408–416.

- Yamazaki T, Yoshikawa K, Kawamoto T, et al. Tourist guidance robot based on HyperCLOVA. preprint, 2022. arXiv:2210.10400.

- Higashinaka R, Minato T, Sakai K, et al. Dialogue robot competition for the development of an android robot with hospitality. In: 2022 IEEE 11th Global Conference on Consumer Electronics (GCCE), Osaka, Japan; 2022. p. 357–360.

- Sugiyama H, Mizukami M, Arimoto T, et al. Empirical analysis of training strategies of transformer-based Japanese chit-chat systems. In: 2022 IEEE Spoken Language Technology Workshop (SLT), Doha, Qatar; 2023. p. 685–691.

- Minato T, Sakai K, Uchida T, et al. A study of interactive robot architecture through the practical implementation of conversational android. Front Robot AI. 2022;9:Article ID 905030. doi: 10.3389/frobt.2022.905030

- Uchida T, Minato T, Nakamura Y, et al. Female-type android's drive to quickly understand a user's concept of preferences stimulates dialogue satisfaction: dialogue strategies for modeling user's concept of preferences. Int J Soc Robot. 2021;13(6):1499–1516. doi: 10.1007/s12369-020-00731-z

- Uchida T, Minato T, Ishiguro H. Does a conversational robot need to have its own values?: A study of dialogue strategy to enhance people's motivation to use autonomous conversational robots. In: Proceedings of the Fourth International Conference on Human Agent Interaction, Biopolis, Singapore; 2016. p. 187–192.

- Uchida T, Minato T, Ishiguro H. The relationship between dialogue motivation and attribution of subjective opinions to conversational androids. Trans Jpn Soc Artif Intell. 2019;34(1):B-I62_1–8. doi: 10.1527/tjsai.B-I62

- Uchida T, Minato T, Ishiguro H. A values-based dialogue strategy to build motivation for conversation with autonomous conversational robots. In: 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, USA; 2016 Aug. p. 206–211.

- Tokuhisa R, Terashima R. Relationship between utterances and ‘enthusiasm’ in non-task-oriented conversational dialogue. In: Proceedings of the 7th SIGdial workshop on discourse and dialogue – SigDIAL '06; Morristown (NJ): Association for Computational Linguistics; 2006.

- Sytsma J, Machery E. Two conceptions of subjective experience. Philos Stud. 2010;151(2):299–327. doi: 10.1007/s11098-009-9439-x

- Mathur MB, Reichling DB. Navigating a social world with robot partners: A quantitative cartography of the uncanny valley. Cognition. 2016;146:22–32. doi: 10.1016/j.cognition.2015.09.008

- Glas DF, Minato T, Ishi CT, et al. ERICA: the ERATO intelligent conversational android. In: 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, USA; 2016. p. 22–29.

- Asch SE. Opinions and social pressure. Sci Am. 1955;193(5):31–35. doi: 10.1038/scientificamerican1155-31

- Sherif M. A study of some social factors in perception. Arch Psychol (Columbia University). 1935;187:60.

- Shiomi M, Hagita N. Do synchronized multiple robots exert peer pressure? In: Proceedings of the Fourth International Conference On Human Agent Interaction; Association for Computing Machinery; 2016. p. 27–33.

- Salomons N, van der Linden M, Strohkorb Sebo S, et al. Humans conform to robots: disambiguating trust, truth, and conformity. In: Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction; Association for Computing Machinery; 2018. p. 187–195.

- Mahzoon H, Ogawa K, Yoshikawa Y, et al. Effect of self-representation of interaction history by the robot on perceptions of mind and positive relationship: A case study on a home-use robot. Adv Robot. 2019;33(21):1112–1128. doi: 10.1080/01691864.2019.1676823

- Fu C, Yoshikawa Y, Iio T, et al. Sharing experiences to help a robot present its mind and sociability. Int J Soc Robot. 2021;13(2):341–352. doi: 10.1007/s12369-020-00643-y

- Mitsuno S, Yoshikawa Y, Ban M, et al. Evaluation of a daily interactive chatbot that exchanges information about others through long-term use in a group of friends. Trans Jpn Soc Artif Intell. 2022;37(3):IDS-I_1–14. doi: 10.1527/tjsai.37-3_IDS-I

- Mitsuno S, Yoshikawa Y, Ishiguro H. Robot-on-Robot gossiping to improve sense of human-robot conversation. In: 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy; 2020. p. 653–658.

- Rosenthal R. Experimenter effects in behavioral research. Enlarged. New York: Appleton-Century-Crofts; 1966.

- Charness G, Gneezy U, Kuhn MA. Experimental methods: between-subject and within-subject design. J Econ Behav Organ. 2012;81(1):1–8. doi: 10.1016/j.jebo.2011.08.009

- Williams JD, Young S. Partially observable Markov decision processes for spoken dialog systems. Comput Speech Lang. 2007;21(2):393–422. doi: 10.1016/j.csl.2006.06.008

- Lee MK, Forlizzi J, Kiesler S, et al. Personalization in HRI: a longitudinal field experiment. In: 2012 7th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Boston, MA, USA; 2012. p. 319–326.

- Mizutani N, Mizutani O. How to be polite in Japanese. Tokyo: Japan Times; 1987.

- Wierzbicka A. Kisses, handshakes, bows: the semantics of nonverbal communication. Semiotica. 1995;103(3–4):207–252.

- Cheng M, Kato M, Saunders JA, et al. Paired walkers with better first impression synchronize better. PLOS ONE. 2020;15(2):Article ID e0227880. doi: 10.1371/journal.pone.0227880

- Zhao Z, Salesse RN, Gueugnon M, et al. Moving attractive virtual agent improves interpersonal coordination stability. Hum Mov Sci. 2015;41:240–254. doi: 10.1016/j.humov.2015.03.012

- Zhao Z, Salesse RN, Marin L, et al. Likability's effect on interpersonal motor coordination: exploring natural gaze direction. Front Psychol. 2017;8:1864. doi: 10.3389/fpsyg.2017.01864

- Tanaka K, Yamashita N, Nakanishi H, et al. Blurring autonomous and teleoperated produces the feeling of talking with a robot's operator. Inf Process Soc Jpn. 2016;57(4):1108–1115.

- Heerink M, Kröse B, Evers V, et al. Assessing acceptance of assistive social agent technology by older adults: the almere model. Int J Soc Robot. 2010;2(4):361–375. doi: 10.1007/s12369-010-0068-5

- Belur J, Tompson L, Thornton A, et al. Interrater reliability in systematic review methodology: exploring variation in coder decision-making. Sociol Methods Res. 2021;50(2):837–865. doi: 10.1177/0049124118799372

- Smith B, McGannon KR. Developing rigor in qualitative research: problems and opportunities within sport and exercise psychology. Int Rev Sport Exerc Psychol. 2018;11(1):101–121. doi: 10.1080/1750984X.2017.1317357

- Leite I, Martinho C, Paiva A. Social robots for long-term interaction: a survey. Int J Soc Robot. 2013;5(2):291–308. doi: 10.1007/s12369-013-0178-y

- Wang W, Siau K. Artificial intelligence, machine learning, automation, robotics, future of work and future of humanity: a review and research agenda. J Database Manag. 2019;30(1):61–79. doi: 10.4018/JDM

- Scoglio AA, Reilly ED, Gorman JA, et al. Use of social robots in mental health and well-being research: systematic review. J Med Internet Res. 2019;21(7):Article ID e13322. doi: 10.2196/13322

- Utami D, Bickmore T. Collaborative user responses in multiparty interaction with a couples counselor robot. In: 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, South Korea; 2019. p. 294–303.

- Park S, Whang M. Empathy in human-robot interaction: designing for social robots. Int J Environ Res Public Health. 2022;19(3):1889. doi: 10.3390/ijerph19031889

Appendix. Dialogue scenario

Table A1. First dialogue scenario.

Table A2. Second dialogue scenario.