Abstract

This was a two-phase study that aimed to (a) develop a tool for assessing visual attention in individuals with Rett syndrome using AAC with a communication partner during naturalistic interactions in clinical settings; and (b) explore aspects of the tool’s reliability, validity, and utility. The Assessment of Visual Attention in Interaction (AVAI) tool was developed to assess visual attention operationalized as focused gazes (1 s or longer) at the communication partner, an object, and a symbol set. For the study, six video-recorded interactions with nine female participants diagnosed with Rett syndrome (range: 15–52 years old) were used to calculate intra- and inter-rater agreement, and 18 recorded interactions were analyzed to examine sensitivity to change and acceptability. There was a significant difference in the AVAI results between two conditions (with and without aided-language modeling). Inter-rater agreement ranged from moderate to strong. There was a range in scores, indicating that the AVAI could differentiate between participants. The AVAI was found to be reliable, able to detect change, and acceptable to the participants. This tool could potentially be used for evaluating interventions that utilize aided AAC.

Communication is a complex dynamic process in which shared engagement and active participation are important factors. In the International Classification of Functioning, Disability and Health (ICF), the World Health Organization (WHO, 2001) proposes a model that distinguishes between activity and participation. Activity refers to “how an individual executes a task or an action” while participation is “the individual’s actual involvement in a life situation” (p. 10). That participation was included in the model means that, in addition to assessing how an individual performs a task, it is also necessary to assess the frequency of the performance in naturally occurring situations and the individual’s engagement in various situations in daily life. Activity and participation are influenced by environmental factors such as context, products and technology, attitudes of the communication partners, social support, and relationships (WHO, 2001).

In a dialogue, both interaction partners need to communicate in a way that is clear and easy for the other to interpret, so that each can respond to the other’s communicative actions. Before an individual masters a language (or symbol system), the exchange is more dependent on the communication partner’s ability to support the communication. In partner-supported interaction, the communication partner often has to interpret the communicative function of the individual’s actions (Cress, Grabast, & Burgers Jerke, 2013). This can be especially difficult during interactions with individuals with significant motor and communication disabilities, such as people diagnosed with Rett syndrome (Julien, Parker-McGowan, Byiers, & Reichle, 2015; Sigafoos et al., 2011).

Rett syndrome is a neurodevelopmental disorder almost exclusively affecting females (Neul et al., 2010). The four main diagnostic criteria are (a) partial or complete loss of acquired purposeful hand skills, (b) partial or complete loss of acquired spoken language (including babbling), (c) impaired (dyspraxic) gait, and (d) stereotypic hand movements (Neul et al., 2010). Dyspraxia (i.e., difficulties in initiating and coordinating voluntary movements) contributes significantly to difficulties in controlling movements (Downs et al., 2014; Larsson & Witt Engerström, 2001), and hand function especially is often limited (Downs, Bebbington, Kaufmann, & Leonard, 2011). One common feature is severe communication difficulties. In particular, expressive communication is severely affected, and approximately 80% of individuals diagnosed with Rett syndrome lack speech (Bartolotta, Zipp, Simpkins, & Glazewski, 2011; Didden et al., 2010; Urbanowicz, Downs, Girdler, Ciccone, & Leonard, 2015). Even so, a number of studies have found that people with Rett syndrome enjoy social interaction (Fabio, Giannatiempo, Oliva, & Murdaca, 2011; Sandberg, Ehlers, Hagberg, & Gillberg, 2000; Urbanowicz, Downs, Girdler, Ciccone, & Leonard, 2016), and that the most efficient form of communication is eye pointing (Bartolotta et al., 2011; Didden et al., 2010).

Many individuals with Rett syndrome are reported to use aided augmentative and alternative communication (AAC) (e.g., speech-generating devices, eye-gaze boards, etc.), which can increase functional communication, engagement, and independence (Townend, Bartolotta, Urbanowicz, Wandin, & Curfs, 2020; Townend et al., 2016; Wandin, Lindberg, & Sonnander, 2015). As is the case for all learning, attention and alertness are important factors for learning to use aided AAC. Yet, autonomic dysfunction is common in individuals with Rett syndrome, causing seizure activity, breathing disturbances, and fluctuating levels of attention (Bartolotta et al., 2011; Fabio, Antonietti, Castelli, & Marchetti, 2009). Attention is defined as “the ability to deploy the resources of the brain so as to optimize performance towards behavioral goals” (Atkinson & Braddick, 2012, p. 589). Findlay (2003) describes visual attention as “the process by which some objects or locations are selected to receive more processing than others” (p. 1). Visual attention can be guided by higher cognitive processes or orienting events in the environment; overt signs include occurrence and duration of gaze fixations (Carrasco, 2011).

Visual attention is also important for acquisition of spoken language. For example, when a parent moves or points to an object, their child’s gaze tends to focus on the hands and the object (Yu, 2007; Yu & Smith, 2013). When the parent simultaneously labels the object, it is presumed that the connection between the object and the label is strengthened and, thus, language learning is facilitated (Yu, 2007). Communication with aided AAC also requires shifting one’s gaze to objects or symbols and thus places higher demands on the control of visual attention (Benigno & McCarthy, 2012). Moreover, it can be assumed that the individual needs to visually focus their attention on the symbols to connect the symbol to what it represents. Their ability to visually focus on the communication partner, objects, and symbols is essential.

Increased visual attention may be an early outcome when learning to use eye-gaze technology and partner-assisted scanning with communication books. Mastering eye-gaze technology (Borgestig, Sandqvist, Parsons, Falkmer, & Hemmingsson, 2016) may take a long time and require extensive support from the individual’s social network (Tegler, Pless, Blom Johansson, & Sonnander, 2019). Being able to measure early progress could facilitate and reinforce effective communication partner strategies and thus help to prevent AAC abandonment, which is a reported problem in AAC interventions (e.g., Johnson, Inglebret, Jones, & Ray, 2006).

Visual attention has been assessed mainly in laboratory settings (e.g., Forssman & Wass, 2018), with eye-gaze technology used for this purpose in several studies (e.g., Rose, Djukic, Jankowski, Feldman, & Rimler, 2016). Although there have been studies that have examined visual attention related to aided AAC (Dube & Wilkinson, 2014; Wilkinson et al., 2015), to be able to assess participation, it is important to observe visual attention in naturalistic interactions. There are tools for assessing visual attention in interaction but mainly as a component of joint engagement (Adamson, Bakeman, & Deckner, 2004; Mundy et al., 2003) or eye pointing (Sargent, Clarke, Price, Griffiths, & Swettenham, 2013). Joint engagement/attention is reported to be important to communication development (e.g., Koegel, Koegel, & McNerney, 2001). Some authors have also highlighted the importance of joint engagement to communication partners (Wilder, 2008; Wilder, Axelsson, & Granlund, 2004), although existing assessment tools require interpretation of the individual’s intent. Such interpretation is often difficult when assessing communication in individuals with Rett syndrome.

The Eye-Pointing Classification Scale (EpCS) (now under development) is a promising tool that does not require that the assessor interpret intention (Clarke et al., 2016). The tool differentiates between different levels of eye-pointing development in children with cerebral palsy. Eye pointing integrates an individual’s ability to shift and fixate gazes with social, cognitive, and motor skills in communication with others (Sargent et al., 2013). EpCS describes the development from foundation skills, such as the single ability to fix gaze, to fully functional eye pointing. For some individuals, however, it may take longer to reach a new level of eye pointing; thus, it may be valuable to also be able to assess more frequent behaviors within the same skill level. None of the aforementioned tools specifically takes AAC into account.

Currently, there is no tool for assessing visual attention during naturalistic interaction with a communication partner involving aided AAC (regardless of demonstrated intent or shared attention) that is able to detect gains that can impact participation even when an individual does not move on to a more advanced skill level. Given the importance of visual attention for learning aided AAC for individuals with Rett syndrome and the lack of such a tool, the current study aimed to (a) develop a tool for assessing visual attention in individuals with Rett syndrome using AAC with a communication partner during naturalistic interactions in clinical settings, and (b) explore aspects of the tool’s reliability, validity, and utility, including assessments of inter- and intra-rater agreement, sensitivity to change, and acceptability.

Method

This study had two phases: development of the Assessment of Visual Attention in Interaction (AVAI) tool, and assessment of the tool’s validity with a group of participants with Rett syndrome. Ethical approval for the study was obtained from the appropriate institutional review board; ethical regulations and guidelines complied with Swedish law.

Phase 1: Development of the AVAI tool

Research design

Inter-rater agreement (IRA) was assessed using Occurrence Percentage Agreement (OPA) across all three coders because the coding material was considered non-exhaustive (Yoder & Symons, 2010). The IRA and the coders’ comments regarding feasibility formed the basis for a revised version of the AVAI that was evaluated in Phase 2.

Coders

A preliminary version of the AVAI tool was developed during Phase 1, and three external coders (Coders A, B, and C) participated in evaluation of the tool. All of the coders had extensive experience of communication with individuals with communication and motor disabilities.

Participants

Convenience sampling was used to recruit participants for Phase 1 from a center that specialized in Rett syndrome diagnoses. Inclusion criteria were (a) confirmed diagnosis of Rett syndrome, (b) aged 15 and over, and (c) limited speech (i.e., no intelligible words, and word approximations used inconsistently). Two individuals were asked by proxy to participate and agreed through the following consent procedure: The caregivers or legal guardians of the participants who appeared in the video clips were given written information about the confidentiality of the study, that participation was voluntary, and that they could choose to withdraw from the study at any time. They provided written informed consent on behalf of the participants, who could at any time indirectly withdraw from participating in the session (the caregivers were asked to be attentive to any displays of discomfort caused by the situation). In such cases, the session would be changed or terminated. The communication partner in the session was also attentive to displays of discomfort from the participant and was prepared to change or terminate the session.

Materials

Materials for Phase 1 were (a) the preliminary version of the AVAI tool, (b) a Panasonic HCx920 video camera used to record video clips of interactions, (c) Adobe Premiere Pro CC3 video editing software to code the recorded interactions, and (d) a symbol set.

Preliminary version of the AVAI

This tool was used to assess visual attention and consisted of (a) definitions of the coding categories to be used, and (b) the coding procedure. The following coding categories were defined: gaze at communication partner, gaze at object, gaze at symbol set, and gaze without any specific focus. The coding procedure was as follows: to identify gaze shifts, the time of the onset of each gaze shift was noted along with the gaze focus (gaze at communication partner, gaze at object, gaze at symbol set, and gaze without any specific focus).

Panasonic HCx920 video camera

The Panasonic HCx920 video camera was used to record video clips of interactions.

Adobe Premiere Pro CC3 video editing software

The Adobe Premiere Pro CC3 video editing software has a zoom function, making it possible to enlarge selected parts of the screen. It is also possible to change the frequency of frames per second shown (to a maximum of four per s), which allows for high precision when moving the cursor back and forth along the timeline.

Symbol set

The symbol set was Picture Communication Symbols, which were arranged in pages for core words such as more, finished, and I/me; for comments such as good, fantastic, and boring; and for specific activities such as sing, play, and faster.

Procedures

Four short video clips of interactions between the two participants and the communication partner (the first author) were recorded. The interactions took place in a room that was well known to the participants, who engaged in two activities that the caregivers had considered to be motivating. One of the video clips could not be used because the eyes of the participant were not visible. The coders independently coded the first 5 min of the three video clips and noted their comments in writing while coding (e.g., when finding it difficult to categorize a specific behavior). Once the coding was complete, the first author conducted a brief interview with each of the three coders. Inter-rater agreement was assessed using OPA across all three coders because the coding material was non-exhaustive (Yoder & Symons, 2010). The formula for calculating OPA is: Number of agreements divided by the number of agreements plus disagreements, multiplied by 100.
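The OPA formula can be illustrated with a minimal Python sketch. The function name and the example counts are hypothetical and chosen only to show the arithmetic; they are not data from the study.

```python
def occurrence_percentage_agreement(agreements: int, disagreements: int) -> float:
    """OPA = agreements / (agreements + disagreements) * 100."""
    total = agreements + disagreements
    if total == 0:
        # No coded behaviors at all: the ratio is undefined.
        raise ValueError("OPA is undefined when nothing was coded")
    return 100.0 * agreements / total


# Hypothetical counts: 3 agreements and 1 disagreement.
print(occurrence_percentage_agreement(agreements=3, disagreements=1))  # 75.0
```

Because only coded occurrences enter the denominator, intervals in which no coder noted a behavior do not inflate the score, which is one reason the measure suits non-exhaustive coding.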

Results

OPA was 67% for gazes at communication partner, 55% for gazes at object, and 25% for gazes at symbol set. Disagreements were categorized, per Yoder and Symons (2010), into unitizing disagreement (i.e., only one of the coders coded an action) and classifying disagreement (i.e., when the coders coded the same action differently). Of the disagreements (n = 67), 93% were unitizing disagreements and 7% were classifying disagreements.

The coder comments revealed that there were instances when a participant’s eyes were not visible, either because of the positioning of the communication partner or because the participant’s eyes were semi-closed. The coders also noted difficulties in identifying the exact gaze focus, especially when gaze duration was very short or when the participant was looking at nothing specific or at the symbol set, and that it was easier to code when the participant was alert, engaged, and interested in the activity. They also reported coding of visual attention to be time consuming and taxing. Thus, it may have been difficult for them to remain attentive while coding the entire 5-min clip.

Discussion

The coders’ comments resulted in revisions designed to improve the reliability and feasibility of the AVAI tool. To increase the low inter-rater agreement, the overall procedure was revised and the instructions were clarified. The importance of alertness for communication, as emphasized by Munde, Vlaskamp, Ruijssenaars, and Nakken (2011), was confirmed and alertness was also concluded to be important for the inter-rater agreement of the AVAI. Therefore, assessment using the Alertness Observation List (AOL), developed by Vlaskamp, Fonteine, and Tadema (2005), was added to the AVAI to identify the periods in the video clips during which the participant was most alert. The instruction for the video-recording procedure was written so that it clearly stated that the video-recorded interaction should include two activities. The rationale was that the change of activity would increase the chances that the participant would be alert and engaged. The criterion for visual attention to be coded was limited to focused gazes (of 1 s or longer) as this was considered to increase inter-rater reliability. Gaze duration is also considered an overt sign of visual attention (Carrasco, 2011). Finally, an assistant was assigned specifically to ensure that the eyes of the participant were visible through the camera during the entire video-recorded session.

Phase 2: Assessment of the AVAI tool

Research design

Phase 2 focused on an evaluation of the psychometric and ecological characteristics of the revised AVAI tool, including aspects of reliability, validity, and utility. Sensitivity to change was explored by coding interactions under two different conditions: with and without aided language modeling. It was hypothesized that visual-attention scores would be higher when aided language modeling was used.

Coders

During exploration of the AVAI tool’s reliability, validity, and utility, the first author (Coder D) coded all video clips; during the assessment of inter-rater reliability, an external coder (Coder A) also participated.

Participants

Convenience sampling was used to recruit participants for Phase 2 of the study (assessment of the AVAI tool) from a center that specialized in Rett syndrome diagnoses. Inclusion criteria were (a) confirmed diagnosis of Rett syndrome, (b) aged 15 or older, and (c) limited speech (i.e., no intelligible words, and word approximations used inconsistently). In all, 16 individuals met the criteria and were asked by proxy to participate using the same consent procedure as in Phase 1. Of these, 13 individuals agreed, of whom three later withdrew their participation due to illness. One participant completed only one of the two sessions due to health issues and was therefore excluded from the analysis. This resulted in a total of nine participants who were between 15 and 52 years old (M = 30, Mdn = 28).

Of the nine participants, five could not walk, two could walk with support, and two could walk a minimum of 25 meters (82 feet) without support. Per caregiver report, two of the participants could sometimes move a hand toward objects; three others could rarely do so. None of the participants consistently used any form of expression recognized by the caregivers as a means to indicate accepting or rejecting. Six participants inconsistently used a recognized way to accept or reject, such as looking at a symbol for YES or NO, vocalizing, or making movements that the caregivers interpreted as yes/no responses.

Materials

Materials for Phase 2 were (a) the revised AVAI tool, (b) the AOL (Vlaskamp et al., 2005), (c) a Panasonic HCx920 video camera used to record video clips of interactions, (d) Adobe Premiere Pro CC video editing software to code the recorded interactions, and (e) a symbol set (c through e were also used in Phase 1).

AVAI tool

The revised AVAI tool was used to assess visual attention and consisted of the following: (a) instructions for video-recording sessions, (b) definitions of the coding categories to be used, and (c) the coding procedure. The instructions for video recording the sessions were:

Setting

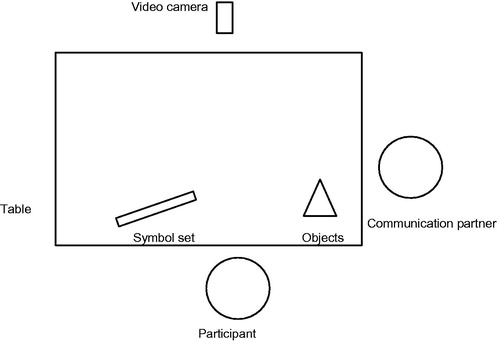

Find a room with few competing stimuli. The communication partner sits at the short end of the table, to one side of the participant who sits at the long side of the table (see Figure 1). A symbol set is placed in front of the participant, slightly to the other side to facilitate video recording of gaze direction.

Activities

Use leisure activities that the caregivers consider to be motivating to the participant (e.g., an object or a picture representing a song) or use a tablet in the activities. Examples of activities are singing, listening to music on a tablet, playing with bubbles or vibrating toys, or painting nails. Perform each activity as long as the participant shows interest in the activity (maximum 20 min). Introduce the next activity when the participant shows little or no interest in the activity after 5 min. If the participant does not show interest after two activities, introduce a third activity. End the session if the participant does not show any interest in the third activity.

Interaction

Engage the participant in the activity and encourage them to attend to the objects, for example, by pacing and adjusting the activity to the participant’s responses and by moving the objects into their visual field when necessary. In addition, engage the participant in the interaction by, for example, attracting attention to one’s self by varying voice volume and quality when necessary. Give the participant opportunities to choose and to take initiatives throughout the activities.

AVAI coding followed three steps: (a) identification of video clips to code, (b) identification of gaze shifts, and (c) identification of focused gazes (see Appendix A). To identify the time periods of the sessions during which the participant appears to be most alert and focused on the environment, the full recording of the session should be assessed using the AOL (described below). Every 15 s, 5 s of footage are coded for state of alertness. The consecutive 2 min of every activity containing the largest number of intervals when the participant is coded to be active/focused on the environment are then edited into a 4-min video clip to be coded using the AVAI. Subsequently, these video clips are coded for visual attention. To identify gaze shift, the time of the onset of each gaze shift is noted along with the gaze focus (gaze at communication partner, gaze at object, gaze at symbol set, and gaze without any specific focus). Finally, focused gazes are identified by calculating the time between each gaze shift. All gazes at the communication partner, object, or symbol set that last for 1 s or longer are coded as focused gazes.
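The gaze-shift and focused-gaze steps can be sketched in Python as follows. This is a minimal illustration rather than part of the AVAI materials: the function name, the short focus labels, and the choice to close the last gaze at the end of the clip are assumptions.

```python
from typing import List, Tuple

# Short labels for the three gaze targets (illustrative, not the tool's wording).
FOCUS_TARGETS = {"partner", "object", "symbol set"}


def focused_gazes(
    shifts: List[Tuple[float, str]],
    clip_end: float,
    min_duration: float = 1.0,
) -> List[Tuple[float, float, str]]:
    """Return (onset, duration, focus) for every gaze at a target lasting >= min_duration.

    shifts: gaze-shift onsets in seconds paired with the new gaze focus, sorted by time.
    clip_end: end of the clip in seconds; assumed here to close the final gaze.
    """
    result = []
    for i, (onset, focus) in enumerate(shifts):
        # A gaze lasts until the next shift, or until the clip ends.
        end = shifts[i + 1][0] if i + 1 < len(shifts) else clip_end
        duration = end - onset
        if focus in FOCUS_TARGETS and duration >= min_duration:
            result.append((onset, duration, focus))
    return result


# Hypothetical gaze shifts: "none" stands for gaze without any specific focus.
shifts = [(0.0, "none"), (2.5, "partner"), (4.0, "object"), (4.5, "none"), (6.0, "symbol set")]
gazes = focused_gazes(shifts, clip_end=8.0)
print(gazes)  # [(2.5, 1.5, 'partner'), (6.0, 2.0, 'symbol set')]
```

In this example the 0.5-s gaze at the object is dropped because it falls below the 1-s criterion, while gazes without any specific focus are never counted regardless of duration.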

AOL

The AOL (Vlaskamp et al., 2005) had an average inter-rater agreement of 81% and an average intra-rater agreement of 87% (Munde et al., 2011). The following codes are used: active/focused on the environment, inactive/withdrawn, sleeping/drowsy, and agitated/discontent.
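Selecting the most alert 2-min period of an activity from the AOL codes can be sketched as a sliding-window count. This is a hedged illustration only: it assumes one AOL code per 15 s (so that 2 min corresponds to 8 consecutive codes) and resolves ties in favor of the earliest window, neither of which is specified by the AOL or the AVAI; the function name is hypothetical.

```python
from typing import List

ACTIVE = "active/focused on the environment"


def most_alert_window(codes: List[str], window: int = 8) -> int:
    """Return the start index of the `window` consecutive codes with the most ACTIVE entries.

    With one code per 15 s, window=8 corresponds to 2 min.
    Ties go to the earliest window (an assumption of this sketch).
    """
    if len(codes) < window:
        raise ValueError("fewer coded intervals than the window length")
    flags = [1 if code == ACTIVE else 0 for code in codes]
    count = sum(flags[:window])
    best_start, best_count = 0, count
    for start in range(1, len(flags) - window + 1):
        # Slide the window by one interval: add the new code, drop the oldest.
        count += flags[start + window - 1] - flags[start - 1]
        if count > best_count:
            best_start, best_count = start, count
    return best_start


# Hypothetical activity: 3 withdrawn intervals, 8 active ones, then 2 drowsy ones.
codes = ["inactive/withdrawn"] * 3 + [ACTIVE] * 8 + ["sleeping/drowsy"] * 2
start = most_alert_window(codes)
print(start, start * 15)  # 3 45  (the window begins 45 s into the activity)
```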

Procedures

Data collection

Data collection consisted of recording 18 video clips of the nine participants and the communication partner (the first author) across two sessions. The recording procedure followed the AVAI protocol. The caregivers were present in the room but did not take part in the interaction. The sessions were between 8 and 44 min in duration. Each session, and its recording, started as soon as the participant and communication partner were seated and ended when the session was finished. The session was finished when the participant seemed to lose interest in the second or the third activity or after a maximum of 40 min. On one occasion, the session lasted longer because the participant showed clear signs that she did not want to finish (e.g., leaning toward objects and vocalizing). Between the two sessions, the participants and their caregivers were encouraged to engage in any preferred restorative activity, such as going for a walk, during the break, which lasted between 30 and 60 min.

To explore the tool’s sensitivity to change, the two recorded sessions were performed under two different conditions. The first condition utilized aided-language modeling (Allen, Schlosser, Brock, & Shane, 2017; Dada & Alant, 2009; Drager et al., 2006) and involved the communication partner pointing at a symbol approximately once per minute while speaking. To minimize order effects, the aided language modeling was used in the first session with every second participant and in the second session with the other participants.

Coder D used the AVAI tool to code all recorded interactions. Identification of gaze shifts was carried out by placing a marker at each onset of the target action on the timeline in the video editing software. For each marker, the coder also made a notation of the gaze focus. As soon as the gaze was shifted from the communication partner, an object, or the symbol set, a marker was placed with the notation “gaze without any specific focus.” Once the coding was complete, the markers and notations for each clip were exported into a spreadsheet showing when the marker was placed (minutes, seconds, hundredths of a second) and the categorization of gaze focus.

Data analysis

To explore inter-rater agreement, 6 (33%) of the 18 video clips were randomly selected and an external coder independently coded these using the AVAI. To explore intra-rater agreement, the same coder coded six (33%) randomly selected video clips 4 years after the first scoring. To explore acceptability, the AOL assessments from the identification of which clips to code were analyzed.

All statistical analyses were conducted using SPSS version 22.0 (SPSS Inc., Chicago, IL). The proportion of each alertness state during the entire session was calculated for each video-recorded interaction and presented as percentages. The frequencies of focused gazes directed at the (a) communication partner, (b) object, or (c) symbol set were calculated separately and in (d) total for each clip coded with the AVAI. A Wilcoxon signed-rank test was used to analyze the differences between the number of focused gazes in the condition when aided language modeling was used and in the condition when aided language modeling was not used. To check for possible order effects, the Wilcoxon test was also used to analyze differences between the first and second sessions. The hypothesis for this specific procedure was that focused gazes would be more frequent when aided language modeling was used. A non-parametric test was chosen due to the small sample size (Bridge & Sawilowsky, 1999; Fritz, Morris, & Richler, 2012). Cohen’s kappa was used to assess intra- and inter-rater agreement. The video clips were divided into 10-s intervals, and agreement for each interval was noted. When there was one agreement and one disagreement within the same interval, which occurred in two instances, it was noted as a disagreement.
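The kappa computation over 10-s intervals can be sketched as follows. The study used SPSS; this pure-Python version is only an illustration, and it assumes that each interval is reduced to a binary code per coder (1 = a focused gaze at the target was coded, 0 = none), which the text does not state explicitly. The ratings are hypothetical.

```python
from typing import Sequence


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Cohen's kappa: (observed - expected agreement) / (1 - expected agreement)."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must code the same non-empty set of intervals")
    n = len(rater_a)
    a, b = list(rater_a), list(rater_b)
    # Proportion of intervals on which the two codings match.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each rater's marginal proportions per category.
    expected = sum((a.count(c) / n) * (b.count(c) / n) for c in set(a) | set(b))
    if expected == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten 10-s intervals; the coders disagree on one interval.
coder_1 = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
coder_2 = [1, 1, 0, 0, 0, 0, 0, 0, 1, 0]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2))  # 0.78
```

Here the observed agreement is .90, but kappa is lower because agreement on the frequent "no focused gaze" intervals is partly expected by chance.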

Results

Alertness and acceptability

The AOL assessment showed a large variation in levels of alertness across the nine participants. The proportion of time that the participants were assessed as active/focused on the environment ranged from 6% to 98%. The proportion of active/focused time was relatively consistent between both sessions for each participant, with the largest difference between sessions at 11%. The proportion of time that the participants were assessed as inactive/withdrawn ranged from 3% to 63%; for sleeping/drowsy the range was 0–65%; and for agitated/discontent the range was 1% of the coded intervals.

Visual attention

As can be seen in Table 1, the number of focused gazes ranged between 0 and 22 for communication partner (M = 7.78, SD = 5.94), between 1 and 15 for objects (M = 9.22, SD = 4.65), and between 0 and 13 for symbol set (M = 4.44, SD = 3.26). The total number of all focused gazes ranged between 7 and 45 (M = 19.78, SD = 10.21).

Table 1. Number of focused gazes at partner, object, symbol set, and total number of focused gazes in interaction with and without (w/o) aided language modeling (ALM) for each participant.

Inter-rater and intra-rater agreement (IRA)

Inter-rater agreement between the two coders for communication partner, objects, and symbol set was κ = .86, p < .0001, 95% CI [.76, .95]; κ = .79, p < .0001, 95% CI [.67, .90]; and κ = .88, p < .0001, 95% CI [.78, .98], respectively. The inter-rater agreement can be interpreted as moderate for objects and strong for communication partner and symbol set (McHugh, 2012). All non-agreements were unitizing non-agreements (i.e., behaviors identified by only one of the coders). Intra-rater agreement (between one coder’s two scorings) for communication partner, objects, and symbol set was κ = .95, p < .0001, 95% CI [.89, 1]; κ = .81, p < .0001, 95% CI [.70, .92]; and κ = .93, p < .0001, 95% CI [.86, 1], respectively. The intra-rater agreement can be interpreted as strong (McHugh, 2012). All non-agreements were unitizing non-agreements (i.e., behaviors identified on only one occasion).

Sensitivity to change

The number of focused gazes was higher when aided language modeling was used (see Table 1 for results on an individual level and Table 2 for results on group level). During the session when aided language modeling was used, there were 24 more gazes at the communication partner for the whole group (M = 2.67, Mdn = 0), 15 more gazes at objects (M = 1.66, Mdn = 0), 26 more gazes at the symbol set (M = 2.89, Mdn = 1), and 81 more focused gazes in total (M = 9, Mdn = 8). According to the Wilcoxon signed-rank test, the difference was significant for gazes at symbol set, z = 2.38, p < .05, and total gazes, z = 2.17, p < .05. The effect size was .79 for symbol set and .72 for total focused gazes, which can be considered large according to Cohen’s classification of effect sizes. There were no significant differences in the number of focused gazes between the first and the second session.
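The reported effect sizes are consistent with the common conversion r = z / √N with N = 9 participants. The text does not state which formula was used, so the following Python check is an assumption that happens to reproduce the reported values; the function name is hypothetical.

```python
import math


def wilcoxon_effect_size(z: float, n: int) -> float:
    """Effect size r = |z| / sqrt(n) for a Wilcoxon signed-rank test (assumed formula)."""
    return abs(z) / math.sqrt(n)


# z values from the text; n = 9 participants is assumed to be the N in the formula.
r_symbol_set = wilcoxon_effect_size(2.38, 9)
r_total = wilcoxon_effect_size(2.17, 9)
print(round(r_symbol_set, 2), round(r_total, 2))  # 0.79 0.72
```

Both values exceed .50, the conventional threshold for a large effect in Cohen’s classification for r.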

Table 2. Number of focused gazes in interaction with and without aided language modeling (ALM) for the group.

Discussion

Reliability

The number of unitizing non-agreements between the two coders was relatively high even after the revision of the preliminary version of the AVAI tool in Phase 1. This indicates that it was still difficult to detect all gaze shifts (e.g., when the gaze shifted from no specific focus to an object). More detailed instructions could further enhance reliability. For example, the instructions to the coders did not specify the number of frames per second to use while coding; thus, the precision with which markers were placed for a specific behavior might have varied among the coders. Furthermore, the instructions did not include the amount of time that should be allocated for the coding of each clip or the number of times each clip should be watched. It is not known whether the allocated time differed among the coders; however, while not all gaze shifts were detected, the intra- and inter-rater agreements were still moderate to strong (McHugh, 2012).

Gaze-based assistive technology is advancing rapidly and portable eye-tracking solutions that can identify gaze focus, on and outside a computer screen, have been developed. Portable eye trackers would potentially make identification of gaze focus more accurate (Wilkinson & Mitchell, 2014); however, any assessment of visual attention would require a systematic procedure (such as that offered by the AVAI tool) for identification of coding units, gaze shifts, and focused gazes.

Sensitivity to change

It was hypothesized that the AVAI scores would be higher when the communication partner used the aided language modeling intervention strategy during the interaction. This hypothesis was confirmed: the results indicate that the tool is sensitive to the increase in visual attention associated with the use of aided language modeling, which supports its validity in terms of sensitivity to change.

Sensitivity to change is often used to detect changes in an individual’s abilities (e.g., when they reach a new level of a particular ability). But sensitivity to change can also be used for context-dependent measures, including changes that are induced by the communication partner’s behavior. As noted by Yoder and Symons (2010), it is important that the change be linked to a treatment. Aided language modeling was used as a treatment in a number of prior studies (Allen et al., 2017; Dada & Alant, 2009; Drager et al., 2006). The intervention in Phase 2 lasted only for one session and it remains to be seen if and how visual attention might change after a longer period of aided language modeling intervention.

Acceptability

Any assessment should be acceptable to the individual being assessed. When identifying which sequences to code using the AVAI tool, sessions were coded in their entirety for alertness using the AOL. Only a small proportion of the sessions included intervals in which the participants were agitated or upset, even though the communication partner was unfamiliar, indicating that, overall, the assessments were acceptable to the participants. It should be noted that some of the participants were only active/focused on the environment during a few of the coded intervals. It is possible that these participants did not find the situation enjoyable. Another possible explanation is that some of the intervals during which the participants were coded as inactive/withdrawn or drowsy/asleep could have been the result of commonly occurring symptoms related to Rett Syndrome such as seizures, breath holds, and stereotypies. All of these symptoms can decrease alertness and ability to focus on the environment.

General discussion

Targeting visual attention

Visual attention and the ability to voluntarily control visual attention are considered important for language acquisition and learning (Forssman & Wass, 2018; Rose et al., 2016). Visual attention is also a component of joint engagement, which is an important objective for parents of children who do not communicate at a symbolic level (Wilder et al., 2004). Furthermore, visual attention is related to eye pointing, the intentional use of the gaze for communicative purposes (Sargent et al., 2013). Consequently, visual attention is an important aspect to assess in interventions with individuals with significant motor and communication disabilities. Implementation of aided AAC often requires dedication and a high level of support from the social network, and initially, the individual may not be able to use aided AAC effectively. If increased visual attention is an early result of the process, it might encourage communication partners to persist. Aided AAC holds the potential to support language development and increase participation in daily activities. Any contribution to successful implementation is therefore of value. Earlier studies have examined aspects of visual attention in relation to interaction or aided AAC (Dube & Wilkinson, 2014; Wilkinson & Jagaroo, 2004; Wilkinson et al., 2015). The development of the AVAI appears to represent the first attempt to assess visual attention of individuals using aided AAC during naturalistic communication situations in clinical settings.

Clinical implications

The ICF model (WHO, 2001) emphasizes the importance of participation. In line with this model, environmental factors, such as the communication partner’s communicative style and strategies used, should be taken into account because they influence participation in the interaction. In aided AAC interventions, an important component of the process is to teach the communication partner strategies for facilitating communication development. The AVAI tool provides avenues for assessing visual attention in more naturalistic interactions with communication partners and, therefore, may potentially be of use both in research studies and in clinical practice. For example, the AVAI tool could be used to evaluate a communication partner-directed AAC intervention by assessing the dyad before, during, and after the intervention.

In the coding protocol, gazes were scored as frequencies, which is generally considered a sensitive measurement that readily reflects changes of actions over time (Kazdin, 2013). The scores differed among the participants, which indicated that coding with the AVAI protocol differentiated between levels of visual attention in terms of focused gazes as a context-dependent behavior. There are tools that are used to assess different levels of abilities related to visual attention such as joint engagement (Adamson et al., 2004) and eye pointing (Clarke et al., 2016); however, it may be valuable that an individual shows a behavior more often even if they do not develop skills to a more advanced level. More frequent focused gazes could therefore be an early intervention goal to help the social network to evaluate an AAC intervention.

The AVAI tool provides not only information about visual attention in general but also what the individual focuses on more and less frequently. If an individual rarely looks at objects, it is likely that they will miss important information if the objects are the focus of the conversation. Consequently, an intervention using the AVAI could attempt to redirect focus to objects and activities and pace the conversation accordingly. When the level of visual attention is low, the goal of an intervention may be to increase visual attention generally. Instructions for use of the AVAI are not standardized and so can be adjusted to align with the purpose of each study or clinical situation.

Limitations and future directions

The results of this pilot study should be interpreted with caution for a number of reasons, including the small number of participants. Moreover, there is a risk of bias because the same communication partner who interacted with the participant also coded the video clips in Phase 2, even though agreement with the external coder was acceptable. It would have been preferable for the intra-rater assessment to be performed by an external coder blind to the purpose of the study (Tate et al., 2016), which was not possible within the limits of the project. It is also important to keep in mind that evidence for the validity of a tool cannot be established in a single study but rather needs to be collected over time and across studies and is restricted to the context and the population studied.

Several aspects of validity were not explored. For example, little is known about visual attention in naturalistic interactions among individuals who are using AAC; thus, it was beyond the scope of this study to compare the AVAI with other available instruments or to examine expected differences between participant groups, which are standard methods to assess construct validity. This will be an important area for future research. A comparison option would be the Eye-Pointing Classification Scale (EpCS; Clarke et al., 2016, in press).

Regarding utility, acceptability to clinicians was not investigated; the priority was to develop a measure of visual attention that would be acceptable to the individual being assessed. Acceptability to the participants indicates that the AVAI tool could be used in research. Even though the administration procedure is time consuming, it would still be worthwhile to investigate its acceptability to and feasibility for clinicians.

Although the difference in the number of focused gazes between the two conditions was significant for gazes at symbols and total number of gazes, it is not known if this difference is of clinical importance. The participants looked at the symbol set almost once per minute on average and more often during the session when aided language modeling was used. In previous studies, the dosage of aided language modeling was between once and twice per minute (Allen et al., 2017). Therefore, it could be of clinical value if an individual is visually attentive once per minute. All psychometric properties would need to be evaluated in future studies with larger groups of participants (Prinsen et al., 2018), after which the AVAI tool could potentially be used as both an initial assessment and evaluative tool of aided communication intervention. Moreover, future research could also examine how communication partners perceive increased visual attention.

Conclusion

The AVAI is the first tool of its kind for assessing the visual attention of individuals using aided AAC during naturalistic interactions in clinical settings. In this pilot study, the protocol seems to have acceptable reliability and validity in terms of sensitivity to change for assessing visual attention. The assessment with the AVAI was also acceptable for the participants. A systematic, step-by-step procedure was necessary to enhance feasibility and to reach an acceptable level of reliability, although there is still a large potential for development.

Acknowledgments

The authors wish to thank all the participants and their caregivers as well as everyone who helped in the recruitment process. The authors would also like to thank the coders who assisted in developing AVAI: Helena Tegler, Uppsala University, Karin Cloud Mildton, Folke Bernadotte Regional Rehabilitation, and Märith Bergström-Isacsson, Swedish National Center for Rett syndrome and related disorders. Thanks also to Anna Lindam for statistical advice and Lisa Cockette for English language checking.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes

1 Panasonic HCx920 is a product of Panasonic Corporation of Dublin, www.panasonic.com.

2 Adobe Premiere Pro CC is a product of Adobe Systems Software Ireland Limited of Dublin, http://www.adobe.com/se/products/premiere.html.

3 Picture Communication Symbols is a product of TobiiDynavox LLC, Stockholm, Sweden.

References

- Adamson, L. B., Bakeman, R., & Deckner, D. F. (2004). The development of symbol-infused joint engagement. Child Development, 75(4), 1171–1187. doi:10.1111/j.1467-8624.2004.00732.x

- Allen, A. A., Schlosser, R. W., Brock, K. L., & Shane, H. C. (2017). The effectiveness of aided augmented input techniques for persons with developmental disabilities: A systematic review. Augmentative and Alternative Communication, 33(3), 149–159. doi:10.1080/07434618.2017.1338752

- Atkinson, J., & Braddick, O. (2012). Visual attention in the first years: Typical development and developmental disorders. Developmental Medicine and Child Neurology, 54(7), 589–595. doi:10.1111/j.1469-8749.2012.04294.x

- Bartolotta, T. E., Zipp, G. P., Simpkins, S. D., & Glazewski, B. (2011). Communication skills in girls with Rett syndrome. Focus on Autism and Other Developmental Disabilities, 26(1), 15–24. doi:10.1177/1088357610380042

- Benigno, J. P., & McCarthy, J. W. (2012). Aided symbol-infused joint engagement. Child Development Perspectives, 6(2), 181–186. doi:10.1111/j.1750-8606.2012.00237.x

- Borgestig, M., Sandqvist, J., Parsons, R., Falkmer, T., & Hemmingsson, H. (2016). Eye gaze performance for children with severe physical impairments using gaze-based assistive technology: A longitudinal study. Assistive Technology, 28(2), 93–102. doi:10.1080/10400435.2015.1092182

- Bridge, P. D., & Sawilowsky, S. S. (1999). Increasing physicians’ awareness of the impact of statistics on research outcomes: Comparative power of the t-test and Wilcoxon rank-sum test in small samples applied research. Journal of Clinical Epidemiology, 52(3), 229–235. doi:10.1016/S0895-4356(98)00168-1

- Carrasco, M. (2011). Visual attention: The past 25 years. Vision Research, 51(13), 1484–1525. doi:10.1016/j.visres.2011.04.012

- Clarke, M., Woghiren, A., Sargent, J., Griffiths, T., Cooper, R., Croucher, L., … Swettenham, J. (2016, June, Abstract). Eye-Pointing Classification in Non-Speaking Children with Severe Cerebral Palsy. Paper Presented at the International Conference on Cerebral Palsy and Other Childhood-Onset Disabilities, Stockholm. http://edu.eacd.org/sites/default/files/Meeting_Archive/Stockholm-16/Abstract-book-16.pdf

- Clarke, M. T., Sargent, J., Cooper, R., Aberbach, G., McLaughlin, L., Panesar, P., Woghiren, A., Griffiths, T., Price, K., Rose, C., & Swettenham, J. (in press). Development and testing of the Eye-pointing Classification Scale for Children with Cerebral Palsy. Disability and Rehabilitation.

- Cress, C. J., Grabast, J., & Burgers Jerke, K. (2013). Contingent interactions between parents and young children with severe expressive communication impairments. Communication Disorders Quarterly, 34(2), 81–96. doi:10.1177/1525740111416644

- Dada, S., & Alant, E. (2009). The effect of aided language stimulation on vocabulary acquisition in children with little or no functional speech. American Journal of Speech-Language Pathology, 18(1), 50–64. doi:10.1044/1058-0360(2008/07-0018)

- Didden, R., Korzilius, H., Smeets, E., Green, V. A., Lang, R., Lancioni, G. E., & Curfs, L. M. (2010). Communication in individuals with Rett syndrome: An assessment of forms and functions. Journal of Developmental and Physical Disabilities, 22(2), 105–118. doi:10.1007/s10882-009-9168-2

- Downs, J., Bebbington, A., Kaufmann, W. E., & Leonard, H. (2011). Longitudinal hand function in Rett syndrome. Journal of Child Neurology, 26(3), 334–340. doi:10.1177/0883073810381920

- Downs, J., Parkinson, S., Ranelli, S., Leonard, H., Diener, P., & Lotan, M. (2014). Perspectives on hand function in girls and women with Rett syndrome. Developmental Neurorehabilitation, 17(3), 210–217. doi:10.1177/0883073810381920

- Drager, K. D. R., Postal, V. J., Carrolus, L., Castellano, M., Gagliano, C., & Glynn, J. (2006). The effect of aided language modeling on symbol comprehension and production in two preschoolers with autism. American Journal of Speech-Language Pathology, 15(2), 112–125. doi:10.1044/1058-0360(2006/012)

- Dube, W. V., & Wilkinson, K. M. (2014). The potential influence of stimulus overselectivity in AAC: Information from eye tracking and behavioral studies of attention with individuals with intellectual disabilities. Augmentative and Alternative Communication, 30(2), 172–185. doi:10.3109/07434618.2014.904924

- Fabio, R. A., Antonietti, A., Castelli, I., & Marchetti, A. (2009). Attention and communication in Rett syndrome. Research in Autism Spectrum Disorders, 3(2), 329–335. doi:10.1016/j.rasd.2008.07.005

- Fabio, R. A., Giannatiempo, S., Oliva, P., & Murdaca, A. M. (2011). The increase of attention in Rett syndrome: A pre-test/post-test research design. Journal of Developmental and Physical Disabilities, 23(2), 99–111. doi:10.1007/s10882-010-9207-z

- Findlay, J. M. (2003). Visual selection, covert attention and eye movements. In J. M. Findlay & I. D. Gilchrist (Eds.), Active vision: The psychology of looking and seeing (Vol. 37, pp. 1–27). Oxford: Oxford University Press.

- Forssman, L., & Wass, S. V. (2018). Training basic visual attention leads to changes in responsiveness to social‐communicative cues in 9-month-olds. Child Development, 89(3), e199. doi:10.1111/cdev.12812

- Fritz, C. O., Morris, P. E., & Richler, J. J. (2012). Effect size estimates: Current use, calculations, and interpretation. Journal of Experimental Psychology: General, 141(1), 2–18. doi:10.1037/a0024338

- Johnson, J. M., Inglebret, E., Jones, C., & Ray, J. (2006). Perspectives of speech language pathologists regarding success versus abandonment of AAC. Augmentative and Alternative Communication, 22(2), 85–99. doi:10.1080/07434610500483588

- Julien, H. M., Parker-McGowan, Q., Byiers, B. J., & Reichle, J. (2015). Adult interpretations of communicative behavior in learners with Rett syndrome. Journal of Developmental and Physical Disabilities, 27(2), 167–182. doi:10.1007/s10882-014-9407-z

- Kazdin, A. E. (2013). Research design in clinical psychology. Boston, MA: Pearson Education.

- Koegel, R. L., Koegel, L. K., & McNerney, E. K. (2001). Pivotal areas in intervention for autism. Journal of Clinical Child Psychology, 30(1), 19–32. doi:10.1207/S15374424JCCP3001_4

- Larsson, G., & Witt Engerström, I. (2001). Gross motor ability in Rett syndrome: The power of expectation, motivation and planning. Brain and Development, 23, S77–S81. doi:10.1016/S0387-7604(01)00334-5

- McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282. doi:10.11613/BM.2012.031

- Munde, V., Vlaskamp, C., Ruijssenaars, W., & Nakken, H. (2011). Determining alertness in individuals with profound intellectual and multiple disabilities: The reliability of an observation list. Education and Training in Autism and Developmental Disabilities, 46, 116–123. https://www.jstor.org/stable/23880035

- Mundy, P., Delgado, C., Block, J., Venezia, M., Hogan, A., & Seibert, J. (2003). Early Social Communication Scales (ESCS). Miami, OH: University of Miami.

- Neul, J. L., Kaufmann, W. E., Glaze, D. G., Christodoulou, J., Clarke, A. J., Bahi-Buisson, N., … Percy, A. K, RettSearch Consortium. (2010). Rett syndrome: Revised diagnostic criteria and nomenclature. Annals of Neurology, 68(6), 944–950. doi:10.1002/ana.22124

- Rose, S. A., Djukic, A., Jankowski, J. J., Feldman, J. F., & Rimler, M. (2016). Aspects of attention in Rett syndrome. Pediatric Neurology, 57, 22–28. doi:10.1016/j.pediatrneurol.2016.01.015

- Prinsen, C. A., Mokkink, L. B., Bouter, L. M., Alonso, J., Patrick, D. L., De Vet, H. C., & Terwee, C. B. (2018). COSMIN guideline for systematic reviews of patient-reported outcome measures. Quality of Life Research, 27(5), 1147–1157. doi:10.1007/s11136-018-1798-3

- Sandberg, A. D., Ehlers, S., Hagberg, B., & Gillberg, C. (2000). The Rett syndrome complex: Communicative functions in relation to developmental level and autistic features. Autism, 4(3), 249–267. doi:10.1177/1362361300004003003

- Sargent, J., Clarke, M., Price, K., Griffiths, T., & Swettenham, J. (2013). Use of eye-pointing by children with cerebral palsy: What are we looking at? International Journal of Language & Communication Disorders, 48(5), 477–485. doi:10.1111/1460-6984.12026

- Sigafoos, J., Kagohara, D., van der Meer, L., Green, V. A., O’Reilly, M. F., Lancioni, G. E., … Zisimopoulos, D. (2011). Communication assessment for individuals with Rett syndrome: A systematic review. Research in Autism Spectrum Disorders, 5(2), 692–700. doi:10.1016/j.rasd.2010.10.006

- Tate, R. L., Perdices, M., Rosenkoetter, U., Shadish, W., Vohra, S., Barlow, D. H., … Wilson, B. (2016). The Single-Case Reporting Guideline In BEhavioural Interventions (SCRIBE) 2016 Statement. Evidence-Based Communication Assessment and Intervention, 10(1), 44–58. doi:10.1080/17489539.2016.1190525

- Tegler, H., Pless, M., Blom Johansson, M., & Sonnander, K. (2019). Speech and language pathologists' perceptions and practises of communication partner training to support children's communication with high-tech speech generating devices. Disability and Rehabilitation: Assistive Technology, 14(6), 581–589. doi:10.1080/17483107.2018.1475515

- Townend, G. S., Bartolotta, T. E., Urbanowicz, A., Wandin, H., & Curfs, L. M. G. (2020). Development of consensus based guidelines for managing communication of individuals with Rett syndrome. Augmentative and Alternative Communication. 1–12.

- Townend, G. S., Marschik, P. B., Smeets, E., van de Berg, R., van den Berg, M., & Curfs, L. M. G. (2016). Eye gaze technology as a form of augmentative and alternative communication for individuals with Rett syndrome: Experiences of families in the Netherlands. Journal of Developmental and Physical Disabilities, 28, 101–112. doi:10.1007/s10882-015-9455-z

- Urbanowicz, A., Downs, J., Girdler, S., Ciccone, N., & Leonard, H. (2015). Aspects of speech-language abilities are influenced by MECP2 mutation type in girls with Rett syndrome. American Journal of Medical Genetics Part A, 167(2), 354–362. doi:10.1002/ajmg.a.36871

- Urbanowicz, A., Downs, J., Girdler, S., Ciccone, N., & Leonard, H. (2016). An exploration of the use of eye gaze and gestures in females with Rett syndrome. Journal of Speech, Language, and Hearing Research, 59(6), 1373–1383. doi:10.1044/2015_JSLHR-L-14-0185

- Wandin, H., Lindberg, P., & Sonnander, K. (2015). Communication intervention in Rett syndrome: A survey of speech language pathologists in Swedish health services. Disability and Rehabilitation, 37(15), 1324–1333. doi:10.3109/09638288.2014.962109

- Wilder, J. (2008). Proximal processes of children with profound multiple disabilities. (Doctoral Dissertation), Stockholm University, Stockholm.

- Wilder, J., Axelsson, C., & Granlund, M. (2004). Parent-child interaction: A comparison of parents' perceptions in three groups. Disability and Rehabilitation, 26(21–22), 1313–1322. doi:10.1080/09638280412331280343

- Wilkinson, K. M., Dennis, N. A., Webb, C. E., Therrien, M., Stradtman, M., Farmer, J., … Zeuner, C. (2015). Neural activity associated with visual search for line drawings on AAC displays: An exploration of the use of fMRI. Augmentative and Alternative Communication, 31(4), 310–324. doi:10.3109/07434618.2015.1100215

- Wilkinson, K. M., & Jagaroo, V. (2004). Contributions of principles of visual cognitive science to AAC system display design. Augmentative and Alternative Communication, 20(3), 123–136. doi:10.1080/07434610410001699717

- Wilkinson, K. M., & Mitchell, T. (2014). Eye tracking research to answer questions about augmentative and alternative communication assessment and intervention. Augmentative and Alternative Communication, 30(2), 106–119. doi:10.3109/07434618.2014.904435

- Vlaskamp, C., Fonteine, H., & Tadema, A. (2005). Manual of the list ’Alertness in children with profound intellectual and multiple disabilities’. [Handleiding bij de lijst ‘Alertheid van kinderen met zeer ernstige verstandelijke en meervoudige beperkingen’]. Groningen: Stichting Kinderstudies.

- World Health Organization. (2001). International classification of functioning, disability and health (ICF). www.who.int/iris/handle/10665/42407

- Yoder, P., & Symons, F. J. (2010). Observational measurement of behavior. New York: Springer Publishing Co Inc.

- Yu, C. (2007). Embodied active vision in language learning and grounding. In L. Paletta & E. Rome (Eds.), Attention in cognitive systems. Theories and systems from an interdisciplinary viewpoint. WAPCV 2007. Lecture notes in computer science (Vol. 4840, pp. 75–90). Berlin, Heidelberg: Springer.

- Yu, C., & Smith, L. (2013). Joint attention without gaze following: Human infants and their parents coordinate visual attention to objects through eye-hand coordination. PLoS One, 8(11), e79659. doi:10.1371/journal.pone.0079659

Appendix 1

Instructions for coding using the assessment of visual attention in interaction (AVAI) tool

Use software that allows easy placement of time markers of different colors (e.g., Adobe Premiere Pro). If necessary, it is possible to change the number of frames per second or zoom in/out on the picture.

Identification of clips to code using alertness observation list (AOL)

The full recording is coded. Every 15 seconds, code 5 seconds for the following states of alertness:

Active/focused on the environment (mark with green marker in the software).

Inactive/withdrawn (mark with orange marker in the software).

Sleeping/drowsy (mark with red marker in the software).

Agitated/discontent (mark with blue marker in the software).

For every activity, identify the two consecutive minutes containing the largest number of intervals coded as active/focused on the environment, and edit these segments together into a 4-min video clip to be coded using the AVAI.
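The selection step above can be sketched as a sliding-window search. This is only an illustration under stated assumptions: one AOL code is recorded every 15 s, so two consecutive minutes correspond to eight codes; ties are resolved by taking the earliest window, which the instructions do not specify; and the code labels and function name are hypothetical.

```python
CODES_PER_WINDOW = 8   # 2 min at one coded interval every 15 s (assumption)
INTERVAL_SECONDS = 15


def best_active_window(codes, window=CODES_PER_WINDOW):
    """Return (start_index, active_count) for the window with most 'A' codes.

    codes: sequence of AOL codes for one activity, in time order, where
    'A' = active/focused, 'I' = inactive/withdrawn, 'S' = sleeping/drowsy,
    'D' = agitated/discontent (hypothetical single-letter labels).
    Ties are resolved in favor of the earliest window (assumption).
    """
    if len(codes) < window:
        raise ValueError("activity is shorter than the requested window")
    best_start, best_count = 0, -1
    for start in range(len(codes) - window + 1):
        count = sum(code == "A" for code in codes[start:start + window])
        if count > best_count:  # strict '>' keeps the earliest best window
            best_start, best_count = start, count
    return best_start, best_count


# Toy example: a 3-min activity (12 coded intervals), not study data.
activity = ["A", "I", "A", "A", "S", "A", "A", "A", "A", "A", "I", "A"]
start, active = best_active_window(activity)
print(f"cut from {start * INTERVAL_SECONDS} s, {active} of 8 intervals active")
```

In this toy example the best window starts at the third interval (30 s into the activity) and contains seven active intervals.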

AVAI implementation

Mark all gaze shifts. For each marker, make a note of the target: (a) gaze directed at communication partner (p), (b) gaze directed at object (o), or (c) gaze directed at symbol set (s). The objects are the referents of the communication, for example, nail polish, song props, or a tablet. Only a communication book or a device with a communication application is marked as a symbol set. Set a marker and note it with a dash (–) as soon as the gaze leaves the partner, object, or symbol set. Set a marker as soon as the gaze reaches the communication partner, the object, or the symbol set. Score all gazes that last 1 s or longer.
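The scoring rule can be sketched as follows. This is a minimal illustration, assuming that the markers have been exported as (time in seconds, note) pairs in time order, that each gaze lasts until the next marker, and that the last gaze ends at the end of the clip. The export format and the function name are hypothetical and not part of the AVAI instructions.

```python
TARGETS = ("p", "o", "s")  # partner, object, symbol set


def count_focused_gazes(markers, clip_end, min_duration=1.0):
    """Count gazes at each target that last min_duration seconds or longer.

    markers: list of (time_s, note) pairs sorted by time, where note is
    'p', 'o', 's', or '-' (gaze has left all targets).
    clip_end: end time of the clip in seconds, closing the last gaze.
    """
    counts = {target: 0 for target in TARGETS}
    for index, (start, note) in enumerate(markers):
        # A gaze ends at the next marker, or at the end of the clip.
        end = markers[index + 1][0] if index + 1 < len(markers) else clip_end
        if note in counts and end - start >= min_duration:
            counts[note] += 1
    counts["total"] = sum(counts[target] for target in TARGETS)
    return counts


# Toy marker list (not study data).
example_markers = [
    (0.0, "-"), (2.0, "p"), (3.5, "-"), (4.0, "s"),
    (4.6, "-"), (6.0, "o"), (7.0, "s"), (9.2, "-"),
]
print(count_focused_gazes(example_markers, clip_end=10.0))
```

In the toy example, the 0.6-s glance at the symbol set is not scored, while the gazes at the partner (1.5 s), the object (1.0 s), and the symbol set (2.2 s) are. Working in frame counts rather than seconds would avoid rounding issues near the 1-s threshold.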