Abstract

The autonomous driving algorithm studied in this paper makes a ground vehicle capable of sensing its environment via visual images and moving safely with little or no human input. Because the computing power of end side devices is limited, the autonomous driving algorithm should adopt a lightweight model while retaining high performance. Conditional imitation learning has proven to be an efficient and promising policy for autonomous driving and other applications on end side devices due to its high performance and offline characteristics. In driving scenarios, the images captured in different weathers have different styles, which are influenced by various interference factors, such as illumination and raindrops. These interference factors challenge the perception ability of deep models and thus affect the decision-making process in autonomous driving. The first contribution of this paper is to investigate the performance gap of driving models under different weather conditions. Following the investigation, we utilise StarGAN-V2 to translate images from source domains into the target clear sunset domain. Based on the images translated by StarGAN-V2, we propose Conditional Imitation Learning with a ResNet backbone, named Star-CILRS. The proposed method is able to convert an image to multiple styles using a single model, making our method easier to deploy on end side devices. Visualisation results show that Star-CILRS can eliminate some environmental interference factors. Our method outperforms other methods, achieving success rates of 98%, 74%, and 22% on the three driving tasks, respectively.

1. Introduction

Autonomous driving (Codevilla et al., 2019; Miao et al., 2022) is a technology that enables cars to drive on the road automatically without human intervention. Applications (Bai et al., 2021, 2022) related to autonomous driving are of great significance. End-to-end autonomous driving has recently attracted interest as a simple alternative to traditional modular approaches used in industry. In this paradigm, perception and control models are learned simultaneously by adopting deep networks. Explicit sub-tasks are not defined but may be implicitly learned from data. These controllers are typically obtained by imitation learning from human demonstrations.

Benefiting from the development of deep learning (Bai et al., 2022; Wang et al., 2022; Zhang et al., 2022), end-to-end visual-based driving (Bogdoll et al., 2022; Wu et al., 2022; Zablocki et al., 2022) has drawn a lot of attention. However, extracting features with very deep neural networks (Sheng et al., 2020; Wang et al., 2022; Yan et al., 2021) makes it hard to deploy such networks on autonomous driving cars. Many approaches (Badawi et al., 2022; Elsisi, 2020, 2022; Elsisi & Tran, 2021; Elsisi et al., 2022) have been proposed to deploy deep neural networks on edge devices. For example, Badawi et al. (2022) and Elsisi et al. (2022) use an artificial neural network to estimate the transformer health index and diagnose online faults for power transformers. Elsisi and Tran (2021) propose an integrated IoT architecture that handles cyber attacks based on a deep neural network. Similar to the above works, we deploy the autonomous driving algorithm on edge devices in the form of lightweight deep neural networks.

As an online method, reinforcement learning needs interactions between the environment and the agent, which is risky in autonomous driving (Mnih et al., 2016). Alternatively, due to its offline training characteristics, conditional imitation learning is a safer way and plays an important role in research on end-to-end visual-based driving policies (Codevilla et al., 2018). For autonomous vehicles, Elsisi (2022) proposes an improved grey wolf optimiser based on opposition and quasi learning for controlling the steering angle.

Different driving scenarios bring different decision-making difficulties to autonomous driving policies. For example, rural driving models are simpler than urban driving models due to their different traffic flows. There is almost no need to consider the obstacle avoidance problem and traffic lights in the uninhabited rural environment. However, in the town, obstacle avoidance becomes the main problem restricting driving, especially for the obstacle avoidance task of dynamic objects, i.e. pedestrians and vehicles (Lv et al., 2019, 2020a, 2020b).

In addition to the complexity of the task itself, the images acquired in the scene also influence the agent's perception ability. Driving scenarios under different natural conditions have different impacts on the driving strategy, especially on visual-based driving strategies that rely on RGB images captured by sensors. For example, under weather conditions such as rainy days and clear noon, the driving policy must perceive the environment properly in the presence of raindrops, strong light, and other environmental interference factors in order to make the right decisions. Correct perception of the environment is the basis for improving the success rate of the autonomous driving policy, and coping with changing natural environments remains a challenge. The end-to-end visual-based autonomous driving policy extracts features from the input RGB images and then uses these features to make decisions in the policy network, so the decisions are easily influenced by the images (Codevilla et al., 2018). To improve the decision-making ability of the driving policy network, we need to make its perception adaptive to changing natural environments.

In this paper, we concentrate on improving the perceptual ability of the driving model by introducing a translation tool. First, we collect experimental statistics on the driving performance in different natural environments, and we find that the drivability on clear sunset days is satisfactory in terms of image clarity and driving accuracy. Then, to improve the perceptual ability of the driving model for different environments, we introduce the StarGAN-V2 model (Choi et al., 2020), a scalable approach that can generate diverse images across multiple domains, to convert RGB images captured under different natural environments. We train a 6-class StarGAN-V2 model before applying it to the driving model and analyse the generated images both in general and in special cases. We find that, in the StarGAN-V2 model, taking images captured under clear sunset weather as the style provider generates more suitable images. In deployment, we pre-train two 4-class StarGAN-V2 models that translate images before they are fed into the control networks; the translation eliminates the natural disturbances in the source RGB images and enhances them. Experimental results show that the proposed method can improve the perceptual adaptive ability of the network, and we believe that this is also a way to address the sim-to-real gap in visual-based autonomous driving policies. At the level of the model itself, we explore a way to eliminate the influence of image style on the policy network and improve the perceptual adaptability of the model.

Our main contributions are as follows:

We explore the influence of different environments on autonomous driving. Based on this influence, this paper focuses on improving the environmental adaptability of driving models.

We propose a weather-style translation network based on StarGAN-V2 to reduce the style difference of images in various kinds of weather.

Based on the translation network, we propose a conditional imitation network named Star-CILRS for autonomous driving. Star-CILRS achieves better results than other approaches.

2. Related works

2.1. Image-to-image translation methods

Inspired by sim-to-real related methods, the image-to-image translation method helps to improve the generalisation ability of the algorithm and improve the perception ability of the model under different conditions. Specifically, images produced under different conditions have a unique appearance, which we call style. In the same weather conditions, the style of the images is almost the same. In different weathers, the images have different styles. This difference in style may be caused by the sensor taking images in different weathers, which have different lighting conditions, and possibly the effects of wind and raindrops. Image-to-image translation is more like a domain transfer problem and can be used to address model perception biases caused by different picture styles. Therefore, it is of great research significance to study the image style transfer problem on an end-to-end vision-based autonomous driving method.

The method of Isola et al. (2017) is one of the earliest to address the domain transfer problem, and it requires paired datasets. The Semantic Image Segmentation Network (SegNet) has also been exploited to address the sim-to-real gap (Pan et al., 2017). To solve the image style transfer problem without paired datasets, researchers have used variants of GAN. For example, CycleGAN (Zhu et al., 2017) solves the image translation problem for unpaired datasets. This method can be used in autonomous driving scenarios to convert pictures into different styles. However, a trained CycleGAN network can only convert to one style, and there are many kinds of weather, so multiple networks must be trained to cover the various weathers and improve the perceptual adaptability of the model. The disadvantage is that training these models requires a lot of computing resources and multiple deep models must be stored on the terminal device. StarGAN (Choi et al., 2020) is a more scalable and less resource-intensive approach that can generate images in multiple styles using a single model.

In addition, an image-to-image translation model is expected to learn a mapping between various style domains while having two properties: the ability to generate diverse images and scalability over different styles. However, most existing methods have only one of these properties. In this paper, we aim to adopt a translation model that satisfies both.

Inspired by these translation networks, we first explore the performance of autonomous driving models in different weather conditions. We conduct some experiments to explore the influence of the weather on driving policy. We think that the influence of different weather conditions on driving policy is due to the interference of natural factors like raindrops, sunlight, shadows, and so on. In this paper, we propose a weather-style translation network that uses a single generator to learn mappings between all available domains.

2.2. Driving control models

Autonomous driving is a technology with high safety requirements and has received more and more attention from academia and industry. Existing autonomous driving technologies have adopted deep learning techniques such as reinforcement learning and imitation learning, and have achieved good driving results. Reinforcement learning needs to interact with the environment and guides the training of the model through feedback rewards. However, in real life, this trial and error with the environment is expensive and unsafe. Therefore, a direct reinforcement learning training process cannot guarantee safety. In contrast, imitation learning has unique advantages in the study of autonomous driving due to its offline learning characteristics.

For autonomous driving, the control task divides driving actions into three categories, “turn left”, “turn right”, and “go straight”, according to real-world driving habits. Based on this setting, many methods have been studied. For example, Codevilla et al. (2018) propose a conditional imitation learning method that takes a high-level command as an input condition of the model. Four commands are issued in the form of one-hot vectors, namely “follow the road”, “turn left at the next intersection”, “go straight at the next intersection”, and “turn right at the next intersection”. Furthermore, the conditional imitation learning approach works well on the benchmark proposed by Codevilla et al. (2019), mainly because each command branch focuses on its own decision-making task.

Codevilla et al. (2019) make some modifications to this conditional imitation learning approach. The new model, called CILRS, is our baseline model. They also propose a new benchmark named NoCrash, which we adopt as our benchmark too. CILRS extends conditional imitation learning with a ResNet architecture and speed prediction. It performs well in most conditions on the NoCrash benchmark.

However, the above methods do not consider the influence caused by different weather styles. In addition, there is a strange phenomenon: the deep model performs better under the unseen new-town and new-weather condition than under the condition where only the town is changed. Thus, the gap between different natural weather environments needs to be addressed. Based on this idea, we convert input images to a new domain and propose a conditional imitation network named Star-CILRS for autonomous driving.

3. The influence of different environments

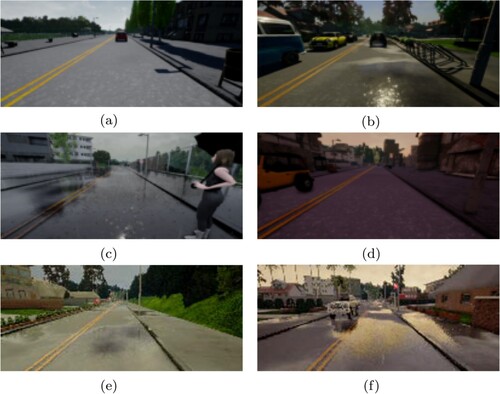

In general, autonomous driving agents perform well in training conditions but fail in unseen environments. We also observe that the performance gap of an agent under different weather conditions is very large. In this paper, we first investigate the driving performance in different weathers. Figure 1 shows the weathers of the CARLA environment.

Figure 1. Example images captured from various weathers in CARLA. The quality and clarity of these images are different, thus influencing the performance of the driving models. (a) clear noon, (b) clear noon after rain, (c) heavy rain noon, (d) clear sunset, (e) after rain sunset, (f) soft rain sunset.

When the weather condition changes, the styles of the images rendered by the simulator also change. To explore the influence of different natural climates on autonomous driving, we run the agent trained by CILRS (Codevilla et al., 2019) in Town01 with 4 different weather conditions. Note that in the NoCrash benchmark settings (Codevilla et al., 2019), heavy rain noon and soft rain sunset are unseen during training, and the remaining weather conditions are all seen in the training process. The driving policy performs differently in these training conditions. The results are shown in Table 1.

Table 1. The driving results of the CILRS (Codevilla et al., 2019) agent in different weather conditions.

The RGB images collected by the sensors are influenced by the natural factors of each weather condition, and the perception part of the network is influenced too. As shown in Figure 1, when the weather is clear noon, the images captured by the sensors show objects clearly but are easily affected by bright light. When the weather is heavy rain noon, raindrops may affect the detection of an obstacle. Under some weather conditions, the collected RGB images may be naturally enhanced by the environment, so the model drives well under those conditions, which explains the performance gap mentioned above. Therefore, in this paper, we study the influence of different weather on driving, and then improve driving performance by reducing this influence.

4. The proposed method

To improve the adaptability of autonomous driving strategies to different environmental styles, an offline model named Star-CILRS is proposed in this paper. The proposed model aims to study the influence of different weather on image style, and improves the environmental adaptability of the model by performing style transfer and enhancement of RGB images captured in changing environments.

In this section, we first introduce the translation model and the control network. Then, we introduce the whole model that combines the translation network and the control network.

4.1. Image translation network

There are 4 components in StarGAN-V2, i.e. Generator, Mapping Network, Style Encoder, and Discriminator. These components are all utilised while training, but only the Generator and the Mapping Network are deployed when driving on the road.

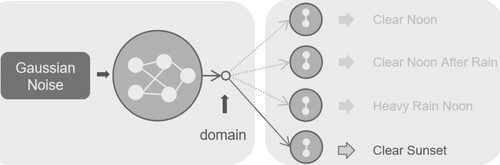

As shown in Figure 2, the Mapping Network is designed to transform random Gaussian noise into a style code. In this paper, the style codes in training environments represent several different weathers, i.e. “Clear Noon”, “Heavy Rain Noon”, “Clear Noon After Rain”, and “Clear Sunset”. The style codes are also the inputs of the generator. The mapping network is as follows:

$$s = M_y(z) \tag{1}$$

where $z$ is the Gaussian noise, $y$ is the target domain, $s$ is the generated style code of domain $y$, and $M$ is an MLP. The Style Encoder, which we do not use while driving, provides a style code from a reference RGB image instead of from Gaussian noise.

Figure 2. The mapping network. The output style codes i.e. clear noon, clear noon after rain, heavy rain noon, and clear sunset, are the input of the generator.
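The mapping step can be illustrated with a minimal NumPy sketch. It assumes a shared hidden layer followed by one linear output head per weather domain, which is how multi-domain mapping networks are commonly organised; the class name `MappingNetwork`, the layer sizes, and the random weights are illustrative assumptions and not the trained model of this paper.

```python
import numpy as np

DOMAINS = ["clear_noon", "clear_noon_after_rain", "heavy_rain_noon", "clear_sunset"]


class MappingNetwork:
    """Toy mapping network: a shared hidden layer plus one linear head per domain.

    All sizes and the random initialisation are illustrative assumptions.
    """

    def __init__(self, latent_dim=16, hidden_dim=64, style_dim=8,
                 num_domains=len(DOMAINS), seed=0):
        rng = np.random.default_rng(seed)
        self.w_shared = rng.normal(0.0, 0.1, (latent_dim, hidden_dim))
        self.b_shared = np.zeros(hidden_dim)
        # One output head per weather domain.
        self.w_heads = rng.normal(0.0, 0.1, (num_domains, hidden_dim, style_dim))
        self.b_heads = np.zeros((num_domains, style_dim))

    def __call__(self, z, y):
        """Return a style code for each noise vector in z and domain index in y."""
        h = np.maximum(z @ self.w_shared + self.b_shared, 0.0)  # ReLU
        # Evaluate every head, then keep the head matching each sample's domain.
        all_styles = np.einsum("bh,dhs->bds", h, self.w_heads) + self.b_heads
        return all_styles[np.arange(len(y)), y]


mapping = MappingNetwork()
z = np.random.default_rng(1).normal(size=(2, 16))        # Gaussian noise
y = np.array([DOMAINS.index("clear_sunset")] * 2)        # target domain indices
s = mapping(z, y)                                        # style codes, shape (2, 8)
```

The same noise vector produces a different style code for each domain because only the selected head differs, which is what lets a single model serve several weather styles.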

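The Discriminator is to determine which domain the image belongs to. The Discriminator consists of several branches, each of which learns a binary classification determining whether an image $x$ is a real image of its domain $y$ or a fake one $G(x, \tilde{s})$. The fake image is produced by the generator $G$ from the style code $\tilde{s}$ of a target domain $\tilde{y}$. Similar to the typical GAN system, the Discriminator helps the generator produce more realistic images through an adversarial loss. The adversarial loss $\mathcal{L}_{adv}$ is as follows:

$$\mathcal{L}_{adv} = \mathbb{E}_{x,y}\left[\log D_y(x)\right] + \mathbb{E}_{x,\tilde{y},z}\left[\log\left(1 - D_{\tilde{y}}(G(x,\tilde{s}))\right)\right] \tag{2}$$

where $D_y(\cdot)$ is the output of the Discriminator $D$ for domain $y$.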

The Generator translates a source image into a new one with a target style. A domain-specific style vector from the Mapping Network and a source RGB image are fed into the Generator. Adaptive instance normalisation (AdaIN) (Choi et al., 2018, 2020) is used in the generator network $G$. To make the Generator stronger, a style reconstruction loss and a diversity-sensitive loss are used to train the model. The style reconstruction loss forces the Generator to generate images using the style code. The style reconstruction loss is as follows:

$$\mathcal{L}_{sty} = \mathbb{E}_{x,\tilde{y},z}\left[\lVert \tilde{s} - M(G(x,\tilde{s})) \rVert_1\right] \tag{3}$$

where $\tilde{s}$ is the target style code and $M$ denotes the mapping network that extracts a style code from a generated image $G(x,\tilde{s})$. Theoretically, $\tilde{s}$ and $M(G(x,\tilde{s}))$ should be the same. The diversity-sensitive loss is as follows:

$$\mathcal{L}_{ds} = \mathbb{E}_{x,\tilde{y},z_1,z_2}\left[\lVert G(x,\tilde{s}_1) - G(x,\tilde{s}_2) \rVert_1\right] \tag{4}$$

where there should be a noticeable difference between the image $G(x,\tilde{s}_1)$ generated with $\tilde{s}_1$ and the image $G(x,\tilde{s}_2)$ generated with $\tilde{s}_2$.

The network draws on CycleGAN's cycle consistency loss mechanism, which computes the L1 loss between source images and cyclically generated images.

$$\mathcal{L}_{cyc} = \mathbb{E}_{x,y,\tilde{y},z}\left[\lVert x - G(G(x,\tilde{s}), s) \rVert_1\right] \tag{5}$$

where $x$ is the source image, $s$ is the source style, $\tilde{s}$ is the target style, and $G(x,\tilde{s})$ is the image generated by the Generator. The cyclically generated image $G(G(x,\tilde{s}), s)$ is generated from a fake image and the source style. Once the generator generates a fake image $G(x,\tilde{s})$ with the target style $\tilde{s}$ and a source image $x$, the fake image is fed back into the Generator with the source style code $s$ to reconstruct the source image $x$.
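The three L1-based terms (style reconstruction, diversity-sensitive, and cycle consistency) can be checked on a toy example. The sketch below assumes a deliberately simple stand-in generator that only re-centres the mean brightness of an image, and a stand-in style extractor that reads the mean brightness back; `toy_generator` and `toy_style_of` are hypothetical helpers for illustration, not the networks used in this paper.

```python
import numpy as np


def l1(a, b):
    """Mean absolute difference between two arrays (or scalars)."""
    return float(np.mean(np.abs(np.asarray(a) - np.asarray(b))))


def toy_generator(x, style):
    """Stand-in generator: shift the image so its mean brightness equals `style`."""
    return x - x.mean() + style


def toy_style_of(x):
    """Stand-in style extractor: the style of an image is its mean brightness."""
    return x.mean()


x = np.array([[0.2, 0.4], [0.6, 0.8]])   # source image
s_src = toy_style_of(x)                  # source style code
s_tgt_1, s_tgt_2 = 0.9, 0.3              # two target style codes

fake = toy_generator(x, s_tgt_1)

# Style reconstruction: the style recovered from the fake image should match the target.
loss_sty = l1(s_tgt_1, toy_style_of(fake))

# Diversity-sensitive term: two different style codes should yield different images.
loss_ds = l1(toy_generator(x, s_tgt_1), toy_generator(x, s_tgt_2))

# Cycle consistency: translating back with the source style should recover x.
loss_cyc = l1(x, toy_generator(fake, s_src))

print(round(loss_sty, 6), round(loss_ds, 6), round(loss_cyc, 6))
```

For this idealised generator the style and cycle terms vanish while the diversity term equals the gap between the two style codes, which is the behaviour the losses are meant to encourage in the real network.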

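Finally, the objective function $\mathcal{L}$ of the translation model is as follows:

$$\mathcal{L} = \mathcal{L}_{adv} + \lambda_{sty}\mathcal{L}_{sty} - \lambda_{ds}\mathcal{L}_{ds} + \lambda_{cyc}\mathcal{L}_{cyc} \tag{6}$$

where we maximise $\mathcal{L}_{ds}$ and minimise the others. $\mathcal{L}_{adv}$, $\mathcal{L}_{sty}$, $\mathcal{L}_{ds}$, and $\mathcal{L}_{cyc}$ are the losses when training the translation network and have been described above. $\lambda_{sty}$, $\lambda_{ds}$, and $\lambda_{cyc}$ are hyperparameters for each term to control their relative importance during training.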
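As a small illustration of how the weighted terms combine on the generator side, the sketch below assumes the sign convention of the published StarGAN-V2 objective, in which the diversity-sensitive term enters with a negative sign so that minimising the total encourages diversity. The function name and all numeric values are illustrative assumptions, not settings from this paper.

```python
def generator_objective(l_adv, l_sty, l_ds, l_cyc, lam_sty, lam_ds, lam_cyc):
    """Weighted sum minimised by the generator.

    Assumed sign convention: the diversity term is subtracted, so a larger
    diversity value lowers the objective.
    """
    return l_adv + lam_sty * l_sty - lam_ds * l_ds + lam_cyc * l_cyc


# Illustrative loss values and weights only.
base = generator_objective(0.7, 0.2, 0.5, 0.1, lam_sty=1.0, lam_ds=1.0, lam_cyc=1.0)
more_diverse = generator_objective(0.7, 0.2, 0.9, 0.1, lam_sty=1.0, lam_ds=1.0, lam_cyc=1.0)
print(more_diverse < base)
```

Raising a weight makes its term dominate the gradient, so the three weights trade off style fidelity, output diversity, and content preservation.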

4.2. Conditional imitation network

Conditional imitation learning is a supervised learning approach that learns policies from offline data. It requires pairs of input observations with expert actions as labels. In conditional imitation learning, we use a high-level navigation command $c_t$ to control the agent when crossing multiple types of intersections. Given an expert policy and the navigation environment, we produce an offline expert dataset, $\mathcal{D} = \{(o_t, c_t, a_t)\}_{t=1}^{N}$, where $o_t$ and $c_t$ are the observation and the high-level command, respectively. $c_t$ indicates taking the next right, taking the next left, or staying in lane. $a_t$ are the low-level controls. In addition, the observation $o_t$ contains a single image and the current speed $v_t$. We feed the above observation into the driving system to properly react on the road. Without the speed $v_t$, the driving model probably won't learn if and when it should brake or accelerate to obtain a desired speed.

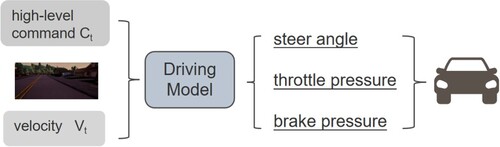

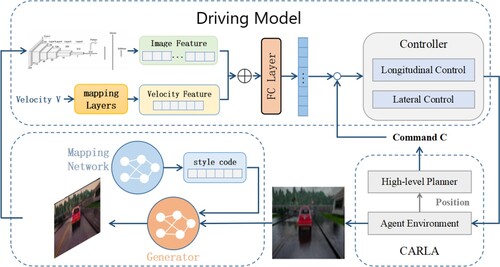

Conditional imitation learning allows an autonomous vehicle trained end-to-end to be directed by high-level commands. In this part, as shown in Figure 3, we describe the conditional imitation network of the proposed method. The inputs of the conditional imitation network consist of an RGB image, the current speed of the agent, and a high-level control command. The network outputs a three-dimensional action vector including throttle pressure, brake pressure, and steering angle. With these outputs, the agent acts at the next step and the environment changes accordingly. Finally, the sensor at the centre of the vehicle captures a new image.

We deploy ResNet (He et al., 2015) as the backbone of the driving model. Within a certain range, the deeper the network, the better the model performs. However, exploding or vanishing gradients may occur if the number of layers exceeds a certain threshold. In terms of image processing, ResNet (He et al., 2015) has been shown to extract robust and efficient visual features. Careful design of the network can significantly improve the accuracy of the task. In this paper, we adopt the 34-layer ResNet in the urban driving task. The network takes an image as input and outputs a 512-dimensional vector. Then, the vector serves as an input to the joint input module, along with the output of a measurement module that processes the speed input.

In this paper, we divide the driving task into four sub-tasks, i.e. follow the road, turn left at the next intersection, go straight at the next intersection, and turn right at the next intersection. Each sub-task has a driving model that has independent parameters. To produce low-level controls, the agent learns a policy π parametrised by θ based on observations o and high-level commands c. The parameters are trained by minimising the following imitation cost:

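$$\theta^{*} = \arg\min_{\theta} \sum_{t} l\left(\pi(o_t, c_t; \theta), a_t\right) \tag{7}$$

where $l$ represents the L1 distance and $a_t$ is the expert action.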
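The command-conditioned branches and the L1 imitation cost can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: each branch is reduced to a single linear layer on top of a 512-dimensional image feature concatenated with the scalar speed, and the cost is averaged over the batch instead of summed. `BranchedPolicy`, `imitation_loss`, and the command names are hypothetical and only mirror the structure described in the text.

```python
import numpy as np

COMMANDS = ["follow_road", "turn_left", "go_straight", "turn_right"]


class BranchedPolicy:
    """Toy conditional policy: one independent linear branch per high-level command."""

    def __init__(self, image_dim=512, num_actions=3, seed=0):
        rng = np.random.default_rng(seed)
        joint_dim = image_dim + 1  # image feature plus scalar speed
        # Independent parameters for every sub-task.
        self.weights = rng.normal(0.0, 0.01, (len(COMMANDS), joint_dim, num_actions))

    def __call__(self, image_feature, speed, command):
        joint = np.concatenate([image_feature, [speed]])
        # The command selects which branch produces (steer, throttle, brake).
        return joint @ self.weights[COMMANDS.index(command)]


def imitation_loss(policy, batch):
    """Mean L1 distance between predicted and expert actions over a batch."""
    errors = [np.abs(policy(feat, speed, cmd) - action).sum()
              for feat, speed, cmd, action in batch]
    return float(np.mean(errors))


policy = BranchedPolicy()
features = np.ones(512)                      # stand-in for a backbone output
expert_action = np.array([0.1, 0.5, 0.0])    # illustrative (steer, throttle, brake)
batch = [(features, 0.4, "turn_left", expert_action)]
loss = imitation_loss(policy, batch)
```

Because the command only selects a branch, the same image and speed can lead to different controls at an intersection, which is the purpose of the conditioning.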

4.3. Star-CILRS

After the image translation model and the conditional imitation network are trained, the two models are combined to train the Star-CILRS model. During joint fine-tuning, the translated target image is used as the input of the control network to obtain the predicted action, and the control loss is computed with the loss function of the control network. At the same time, the translated image is fed back into the generator to reconstruct the image in its original style, which yields a cycle loss. All losses are summed and back-propagated, and the parameters of the generator network are updated.

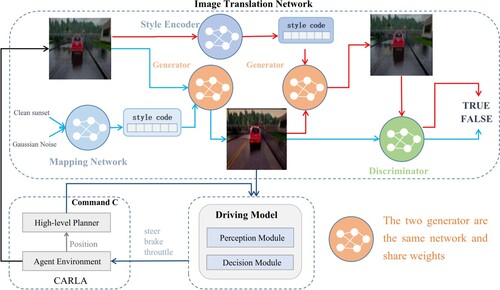

Figure 4 shows the overall workflow of our method. The generator can translate any image that is similar to the training dataset into the target style, even if the image was not seen during training. However, both the mapping network and the style encoder can only produce style codes for trained styles and cannot produce a new style code.

Figure 4. The workflow of Star-CILRS. The proposed method contains two main models: the translation network and the driving model. The translation network takes a source image as input and outputs a generated image with a specific weather style. Given the generated image, the driving model takes actions for autonomous driving.

We use style codes extracted from images with clear sunset weather to translate the source images taken by the sensor into target images in which natural disturbances are eliminated. The main reason is that both the driving model and the translation model perform better in clear sunset weather conditions. A fast and low-power deep model is essential for end side devices in autonomous driving. To deploy our method to end side devices, only a few modules are used at inference, and the rest are only used in training. The workflow of our method in the inference stage is illustrated in Figure 5. The proposed method uses the generator of the translation model to translate images and thereby improve the environmental adaptability of the driving model. With the translated image, the driving model extracts the image feature and combines it with the velocity feature as the input of the controller. Then, the controller takes actions to drive the car.

Figure 5. The inference stage of our method. The generator takes a source image and a target style code to output the target image with a specific style. Then, the translated image is fed to the driving model to control the agent.
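The inference flow in Figure 5 amounts to composing two callables: translate first, then drive. The sketch below shows that composition with stand-in functions; `drive_step`, `fake_generator`, `fake_driving_model`, and the numeric values are hypothetical placeholders for illustration, not the deployed networks.

```python
from dataclasses import dataclass


@dataclass
class Controls:
    steer: float
    throttle: float
    brake: float


def drive_step(image, speed, command, generator, target_style, driving_model):
    """One inference step: translate the camera image, then query the driving model."""
    translated = generator(image, target_style)
    steer, throttle, brake = driving_model(translated, speed, command)
    return Controls(steer, throttle, brake)


def fake_generator(image, style_code):
    """Stand-in generator: shift pixel brightness by the style code and clip to [0, 1]."""
    return [min(1.0, max(0.0, p + style_code)) for p in image]


def fake_driving_model(image, speed, command):
    """Stand-in controller: steer by command and hold a 35 km/h cruise speed."""
    steer = {"turn_left": -0.3, "turn_right": 0.3}.get(command, 0.0)
    throttle = 0.5 if speed < 35.0 else 0.0
    brake = 0.0 if speed < 35.0 else 0.2
    return steer, throttle, brake


controls = drive_step(
    image=[0.1, 0.5, 0.9],
    speed=20.0,
    command="turn_left",
    generator=fake_generator,
    target_style=0.2,          # stands in for the clear sunset style code
    driving_model=fake_driving_model,
)
print(controls)
```

Only the generator, a fixed target style code, and the driving model appear in this loop, which matches the point that the remaining translation modules are needed in training only.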

Although the proposed method is effective, it still suffers from some limitations. Our translation model is an efficient model and can transfer different weather styles. However, the efficiency of the translation model can continue to improve. When transferring a source image to the target domain, the architecture with downsampling and upsampling operations is utilised. The method also requires a downsampling process in the driving model. Thus, if we can convert the sequential process of downsampling, upsampling, and downsampling to the only downsampling operation, the efficiency of the algorithm can be further improved.

5. Experimental results

As it is difficult to evaluate an autonomous driving policy under a common benchmark directly in the real world, we conduct the experiments in the CARLA (Dosovitskiy et al., 2017) environment, which is an open-source simulator for autonomous driving. In this part, we first introduce the source of the experiment data, the data annotation information, and the experimental environment. Then, we show and analyse the results in CARLA.

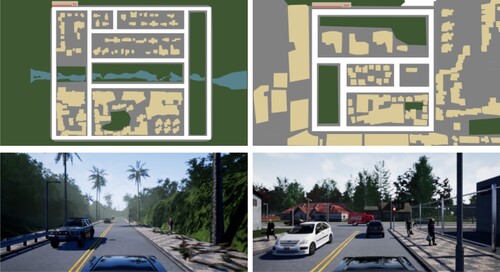

5.1. Simulated environment and experiment settings

The CARLA maps are shown in Figure 6. This paper utilises two towns in CARLA to conduct training and evaluation, respectively. Training data is collected in Town01, where an expert performs autonomous driving to obtain the open-source CARLA100 dataset. Specifically, the expert has access to the full driving-related state, including an accurate town map and the positions of the ego vehicle, all other vehicles, and pedestrians. The driving path of the expert is calculated using the standard planner. The planner uses the A* algorithm to determine the path to a given target location; this path is then converted into a series of waypoints, which a PID controller follows to generate throttle, brake, and steering angle. The expert vehicle stays at the centre of the lane, maintaining a constant speed of 35 km per hour when driving straight and reducing its speed to about 15 km per hour when turning. In addition, the expert programme responds to visible pedestrians when needed to prevent collisions. When the distance to a pedestrian is greater than 5 m and less than 15 m, the expert vehicle reduces its speed according to the collision distance. When the distance to a pedestrian is below 5 m, the expert vehicle stops completely. The expert vehicle also stops when the vehicle ahead comes within 5 m. During data collection, the expert vehicle never performs lane changes or overtakes. To avoid obstacles ahead, the expert vehicle only reduces its speed and waits for the pedestrians or vehicles in front.
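The expert's speed rules can be written as a small decision function. The sketch below is an illustration under one explicit assumption: between 5 m and 15 m the text only says the speed is reduced according to the collision distance, so a linear ramp is assumed here. The function name `expert_target_speed` and its parameters are hypothetical.

```python
CRUISE_KMH = 35.0    # speed when driving straight
TURN_KMH = 15.0      # approximate speed when turning
STOP_DIST_M = 5.0    # stop completely below this distance
SLOW_DIST_M = 15.0   # start slowing down below this distance


def expert_target_speed(turning, pedestrian_dist_m=None, vehicle_dist_m=None):
    """Return the expert's target speed in km/h for the current situation."""
    base = TURN_KMH if turning else CRUISE_KMH

    # Stop behind a vehicle that is too close.
    if vehicle_dist_m is not None and vehicle_dist_m < STOP_DIST_M:
        return 0.0

    if pedestrian_dist_m is not None:
        if pedestrian_dist_m < STOP_DIST_M:
            return 0.0
        if pedestrian_dist_m < SLOW_DIST_M:
            # Assumed linear ramp between the stop and slow-down distances.
            fraction = (pedestrian_dist_m - STOP_DIST_M) / (SLOW_DIST_M - STOP_DIST_M)
            return base * fraction

    return base


print(expert_target_speed(turning=False, pedestrian_dist_m=10.0))
```

Since the expert never changes lanes or overtakes, speed is the only quantity it adjusts in response to obstacles, so a rule of this shape covers its whole avoidance behaviour.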

Figure 6. Town01 and Town02 in CARLA (Dosovitskiy et al., 2017).

In CARLA100, the total number of pedestrians spawned around the town was randomly sampled from 50 to 100, and the total number of vehicles spawned in the town was randomly sampled from 30 to 70. There are four types of weather conditions when collecting data, i.e. Clear Noon, Clear Noon after Rain, Heavy Rain Noon, and Clear Sunset. The tasks in CARLA are defined as:

Task1: there are no dynamic objects in the town.

Task2: several dynamic objects exist in the town.

Task3: many dynamic objects exist in the town.

Each task has 25 start-end position pairs, and if any collision larger than a fixed magnitude occurs, the episode ends. When a new episode starts, the agent is sent back to a new start position. We utilise success rate to evaluate the approaches where the agent is expected to reach the goal position without any collision or timeout. Note that a traffic light violation will not lead to a termination.

5.2. Training detail

We train the StarGAN-V2 model (Choi et al., 2020) with two datasets. The datasets collected in CARLA are divided into two parts. We train two 4-class StarGAN-V2 networks, one for the empty-town task and another for the regular and dense traffic tasks, using the datasets we collected. The domain number is four, which means that the training images come from four styles, with one style per weather. We use these four-weather datasets to pre-train the StarGAN-V2 networks, which are involved in the whole driving policy. We use the Adam optimiser with and

. The learning rates for generator, discriminator, and encoder are set to

, while that of mapping network is set to

. To handle the overfitting problem, we adopt a 0.5 dropout rate after the last convolutional layer.

When training the conditional imitation network, we adopt Adam with minibatches of 120 samples, the initial learning rate is set to . At each training step, we sample a minibatch randomly from the entire dataset. When the training error has not decreased for over 1,000 steps, we divide the learning rate by 10.

Two training datasets, i.e. the less-car dataset and the more-car dataset, are utilised to train the autonomous driving models. To collect the less-car dataset, we set the number of vehicles to 30–60 and the number of pedestrians to 50–100. To collect the more-car dataset, we set the number of vehicles to 60–400 and the number of pedestrians to 100–300. Both datasets are collected in the training conditions, i.e. Town01 with four kinds of weather: “Clear Noon”, “Heavy Rain Noon”, “Clear Noon After Rain”, and “Clear Sunset”. Each dataset contains four classes of images collected from different weather styles, and each class has 10,000 images. We validate every 20K steps and stop training when the validation error increases for three consecutive validations. We use this checkpoint to test our benchmarks.
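The two training rules described above, dividing the learning rate by 10 after more than 1,000 steps without improvement and stopping after three consecutive increases of the validation error, can be sketched in plain Python. `PlateauDecay` and `should_stop` are hypothetical helpers, and the starting learning rate in the example is a placeholder, not a value from this paper.

```python
class PlateauDecay:
    """Divide the learning rate by `factor` when the training error stops improving."""

    def __init__(self, lr, patience_steps=1000, factor=10.0):
        self.lr = lr
        self.patience_steps = patience_steps
        self.factor = factor
        self.best = float("inf")
        self.steps_since_best = 0

    def step(self, train_error):
        if train_error < self.best:
            self.best = train_error
            self.steps_since_best = 0
        else:
            self.steps_since_best += 1
            # "Over 1,000 steps" is read here as strictly more than the patience.
            if self.steps_since_best > self.patience_steps:
                self.lr /= self.factor
                self.steps_since_best = 0
        return self.lr


def should_stop(val_errors, patience=3):
    """True when the validation error rose at each of the last `patience` validations."""
    if len(val_errors) <= patience:
        return False
    recent = val_errors[-(patience + 1):]
    return all(later > earlier for earlier, later in zip(recent, recent[1:]))


scheduler = PlateauDecay(lr=1.0)   # placeholder learning rate
scheduler.step(0.8)                # first step sets the best error
for _ in range(1001):              # 1,001 further steps without improvement
    scheduler.step(0.8)
print(scheduler.lr)
print(should_stop([0.50, 0.40, 0.45, 0.50, 0.60]))
```

Keying the decay on the training error and the stopping rule on the validation error keeps the two signals separate: the first reacts to optimisation stalls, the second to overfitting.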

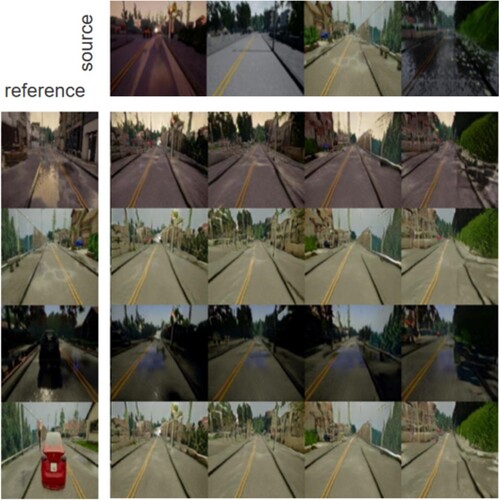

5.3. Results of the translation network

The translation network learns to translate a source image into a target image with a specific style. The generated images in empty scenarios are illustrated in Figure 7. Our method produces multiple outputs using random noise and reference images. We observe that the translated images have high visual quality. In addition, our method can successfully change the entire weather condition of the source image while preserving details. Specifically, the vehicles, pedestrians, traffic lights, and buildings are preserved, while the colour changes and the raindrops and standing water are eliminated.

Figure 7. Example images generated by the translation network in empty scenarios. Source images are in the first row and reference images are in the first column; all of these are real images. The remaining images in the middle are generated images with the style extracted from the images in the first column. These images are collected in simple task scenarios with only a few vehicles.

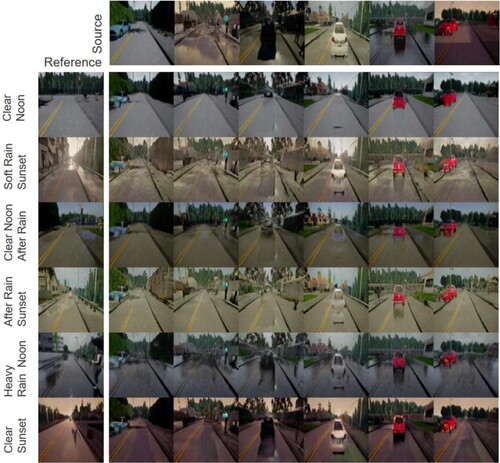

In Figure 8, we illustrate some translation examples in complex scenarios that contain vehicles. As we can see in the fourth and fifth columns, the black vehicle and the white vehicle are almost erased in some weather conditions, which may cause collisions because the vehicles are treated as natural disturbances and erased during translation. In the last row, the vehicles are best preserved while other natural disturbances are removed. We observe that the translation network performs best when taking the clear sunset weather condition as the style reference.

Figure 8. Example images generated with vehicles. Source images with vehicles are in the first row, and reference images are in the first column. The rest of the images in the middle are the generated images.

In short, the performance of our translation network varies across weather styles. With the clear sunset style, our translation network preserves vivid details, which is beneficial for autonomous driving.

5.4. Driving results

We compare our method Star-CILRS with state-of-the-art methods, i.e. CILRS and three other approaches: CAL (Sauer et al., Citation2018), MT (Li et al., Citation2018), and CIL (Codevilla et al., Citation2018). Our Star-CILRS model uses no extra supervision or interaction with the environment during training, while CAL and MT require affordances and semantic segmentation maps, respectively.

We conduct experiments in three scenarios, i.e. Town02 with training weathers, Town01 with new weathers, and Town02 with new weathers. We run the driving policy three times to obtain the mean and standard deviation. As our imitation network is trained in Town01, we refer to Town01 as the training town and Town02 as the testing town.
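Aggregating the three runs can be sketched as follows. The function name `summarise_runs` is hypothetical, the success rates are placeholder values rather than results from the paper, and the use of the sample standard deviation (instead of the population one) is an assumption, since the text does not say which is reported.

```python
from statistics import mean, stdev


def summarise_runs(success_rates):
    """Return (mean, sample standard deviation) of per-run success rates."""
    return mean(success_rates), stdev(success_rates)


# Placeholder success rates (%) from three hypothetical runs of one task.
runs = [90.0, 92.0, 94.0]
avg, std = summarise_runs(runs)
print(f"{avg:.0f} ± {std:.0f}")
```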

Results in testing town and training weathers: As shown in Table 2, in Town02 with training weathers, our model performs best on task 1 with an 8% improvement, shows average performance on task 2 with a 9% drop relative to the best-performing CILRS model, and performs worst on task 3.

Table 2. Comparison with the state-of-the-art on the CARLA benchmark in Town02 with training weathers.

Compared with other approaches, our method achieves the best success rate only in task 1. The main reason is that the other approaches are trained and tested in the same weather, where perception biases caused by different picture styles do not exist.

Results in training town and testing weathers: As shown in Table 3, in Town01 with new weather scenarios, our model performs best in both task 1 and task 2, with improvements of 4% and 1%, respectively, and performs slightly worse on task 3.

Table 3. Comparison with the state-of-the-art on the CARLA benchmark in Town01 with testing weathers.

The main reason for the improvement is that our translation model transforms the source image into an image with clearer weather, which helps the policy produce better outputs.

Results in testing town and testing weathers: In Town02 with new weathers, one of the most challenging scenarios, the results in Table 4 show that our model achieves the best performance in both task 1 and task 2, with improvements of 8% and 18%, respectively. However, the performance in task 3 is still inferior to CILRS.

Table 4. Comparison with the state-of-the-art on the CARLA benchmark in Town02 with testing weathers.

In task 1 and task 2, our Star-CILRS model performs best among the state-of-the-art methods in both the training and testing towns under new weathers. In empty towns, the success rate in testing conditions is similar to, or even better than, the success rate in training conditions. The performance in empty towns indicates that our method removes the natural disturbances that differ across weathers and improves the environmental adaptation of the driving policy. When dynamic objects such as vehicles and pedestrians are present, the performance of our model degrades. For task 3, the advantage of Star-CILRS is not clear. One possible reason is that the model is influenced by the training datasets and, as discussed in Section 5.3, the vehicles are not translated well in some cases, so that once dynamic obstacles are added the agent performs worse than it does in the empty town. Another reason is the inherent complexity of driving in towns with dense traffic.

The above results demonstrate the effectiveness of our method. The main reason is that our method utilises a weather-style translation model to reduce the style difference between images. In addition, the Star-CILRS model is based on conditional imitation learning, which can train a robust driving model.

6. Conclusion

The deployment of autonomous driving algorithms on end side devices has important research significance and economic value. This paper studies the use of networks with lower computational cost while improving autonomous driving performance. We adopt StarGAN-V2, a translation network, to improve the environmental adaptability of vision-based driving models. We introduce the translation network into the CILRS driving model and propose the Star-CILRS model to remove natural noise in different weather conditions. Furthermore, we explore the influence of weather conditions on driving strategies and select the clear sunset style as our target style based on the analysis results. During the testing phase, we validate our proposed model in the CARLA simulator and compare it with other state-of-the-art methods. Experimental results illustrate that our Star-CILRS model improves the perception of driving policies in empty cities and regular traffic. However, in towns with complex and dense traffic, we did not obtain satisfactory results. Besides the inherent difficulty of driving in such a complex environment, the performance of the translation model has yet to be improved; its dataset consists of RGB images taken in cities and towns under different weather conditions.

In future research, we will continue to explore the application of environmental adaptability models in driving strategies, hoping to further improve the adaptability of autonomous driving algorithms to different environmental styles. In addition, in order to efficiently apply the deep models on end side devices, we will study how to reuse the modules or models for improving the efficiency of the deep learning algorithms.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Badawi, M., Ibrahim, S. A., Mansour, D. E. A., El-Faraskoury, A. A., Ward, S. A., Mahmoud, K., & Darwish, M. M. (2022). Reliable estimation for health index of transformer oil based on novel combined predictive maintenance techniques. IEEE Access, 10, 25954–25972. https://doi.org/10.1109/ACCESS.2022.3156102

- Bai, X., Wang, X., Liu, X., Liu, Q., Song, J., Sebe, N., & Kim, B. (2021). Explainable deep learning for efficient and robust pattern recognition: A survey of recent developments. Pattern Recognition, 120, 108102. https://doi.org/10.1016/j.patcog.2021.108102

- Bai, X., Zhou, J., Ning, X., & Wang, C. (2022). 3D data computation and visualization. Elsevier.

- Bogdoll, D., Nitsche, M., & Zöllner, J. M. (2022). Anomaly detection in autonomous driving: A survey. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 4488–4499). IEEE.

- Choi, Y., Choi, M., Kim, M., Ha, J. W., Kim, S., & Choo, J. (2018). StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 8789–8797). IEEE.

- Choi, Y., Uh, Y., Yoo, J., & Ha, J. W. (2020). StarGAN v2: Diverse image synthesis for multiple domains. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 8188–8197). IEEE.

- Codevilla, F., Müller, M., López, A., Koltun, V., & Dosovitskiy, A. (2018). End-to-end driving via conditional imitation learning. In 2018 IEEE international conference on robotics and automation (ICRA) (pp. 4693–4700). IEEE.

- Codevilla, F., Santana, E., López, A. M., & Gaidon, A. (2019). Exploring the limitations of behavior cloning for autonomous driving. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 9329–9338). IEEE.

- Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., & Koltun, V. (2017, November). CARLA: An open urban driving simulator. In S. Levine, V. Vanhoucke, & K. Goldberg (Eds.), Proceedings of the 1st annual conference on robot learning (Vol. 78, pp. 1–16). PMLR. http://proceedings.mlr.press/v78/dosovitskiy17a.html.

- Elsisi, M. (2020). Optimal design of nonlinear model predictive controller based on new modified multitracker optimization algorithm. International Journal of Intelligent Systems, 35(11), 1857–1878. https://doi.org/10.1002/int.v35.11

- Elsisi, M. (2022). Improved grey wolf optimizer based on opposition and quasi learning approaches for optimization: Case study autonomous vehicle including vision system. Artificial Intelligence Review, 55(7), 5597–5620. https://doi.org/10.1007/s10462-022-10137-0

- Elsisi, M., & Tran, M. Q. (2021). Development of an IoT architecture based on a deep neural network against cyber attacks for automated guided vehicles. Sensors, 21(24), 8467. https://doi.org/10.3390/s21248467

- Elsisi, M., Tran, M. Q., Mahmoud, K., Mansour, D. E. A., Lehtonen, M., & Darwish, M. M. (2022). Effective IoT-based deep learning platform for online fault diagnosis of power transformers against cyberattacks and data uncertainties. Measurement, 190, 110686. https://doi.org/10.1016/j.measurement.2021.110686

- He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770–778). IEEE.

- Isola, P., Zhu, J., Zhou, T., & Efros, A. A. (2017, July). Image-to-image translation with conditional adversarial networks. In 2017 IEEE conference on computer vision and pattern recognition (CVPR) (pp. 5967–5976). IEEE Computer Society.

- Li, Z., Motoyoshi, T., Sasaki, K., Ogata, T., & Sugano, S. (2018). Rethinking self-driving: Multi-task knowledge for better generalization and accident explanation ability.

- Lv, K., Du, H., Hou, Y., Deng, W., Sheng, H., Jiao, J., & Zheng, L. (2019). Vehicle re-identification with location and time stamps. In CVPR workshops (pp. 399–406). IEEE.

- Lv, K., Sheng, H., Xiong, Z., Li, W., & Zheng, L. (2020a). Improving driver gaze prediction with reinforced attention. IEEE Transactions on Multimedia, 23, 4198–4207. https://doi.org/10.1109/TMM.2020.3038311

- Lv, K., Sheng, H., Xiong, Z., Li, W., & Zheng, L. (2020b). Pose-based view synthesis for vehicles: A perspective aware method. IEEE Transactions on Image Processing, 29, 5163–5174. https://doi.org/10.1109/TIP.83

- Miao, J., Wang, Z., Ning, X., Xiao, N., Cai, W., & Liu, R. (2022). Practical and secure multifactor authentication protocol for autonomous vehicles in 5G. Software: Practice and Experience.

- Mnih, V., Badia, A. P., Mirza, M., Graves, A., Lillicrap, T., Harley, T., Silver, D., & Kavukcuoglu, K. (2016). Asynchronous methods for deep reinforcement learning. In International conference on machine learning (pp. 1928–1937). PMLR.

- Pan, X., You, Y., Wang, Z., & Lu, C. (2017). Virtual to real reinforcement learning for autonomous driving.

- Sauer, A., Savinov, N., & Geiger, A. (2018). Conditional affordance learning for driving in urban environments. In Conference on robot learning (pp. 237–252). PMLR.

- Sheng, H., Lv, K., Liu, Y., Ke, W., Lyu, W., Xiong, Z., & Li, W. (2020). Combining pose invariant and discriminative features for vehicle reidentification. IEEE Internet of Things Journal, 8(5), 3189–3200. https://doi.org/10.1109/JIoT.6488907

- Wang, C., Ning, X., Sun, L., Zhang, L., Li, W., & Bai, X. (2022). Learning discriminative features by covering local geometric space for point cloud analysis. IEEE Transactions on Geoscience and Remote Sensing, 60, 1–15. https://doi.org/10.1109/TGRS.2022.3170493

- Wang, C., Wang, X., Zhang, J., Zhang, L., Bai, X., Ning, X., & Hancock, E. (2022). Uncertainty estimation for stereo matching based on evidential deep learning. Pattern Recognition, 124, 108498. https://doi.org/10.1016/j.patcog.2021.108498

- Wu, J., Huang, Z., & Lv, C. (2022). Uncertainty-aware model-based reinforcement learning: Methodology and application in autonomous driving. IEEE Transactions on Intelligent Vehicles, 1–10. https://doi.org/10.1109/TIV.2022.3185159

- Yan, C., Pang, G., Bai, X., Liu, C., Xin, N., Gu, L., & Zhou, J. (2021). Beyond triplet loss: Person re-identification with fine-grained difference-aware pairwise loss. IEEE Transactions on Multimedia, 24, 1665–1677. https://doi.org/10.1109/TMM.2021.3069562

- Zablocki, É., Ben-Younes, H., Pérez, P., & Cord, M. (2022). Explainability of deep vision-based autonomous driving systems: Review and challenges. International Journal of Computer Vision, 130(10), 2425–2452. https://doi.org/10.1007/s11263-022-01657-x

- Zhang, H., Lin, Y., Han, S., Wang, S., & Lv, K. (2022). Conservative distributional reinforcement learning with safety constraints. arXiv preprint arXiv:2201.07286.

- Zhu, J. Y., Park, T., Isola, P., & Efros, A. A. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision (pp. 2223–2232). IEEE.