ABSTRACT

Introduction

The aim of this study was to investigate the feasibility of an online clinical competency assessment for use with physiotherapy students as an alternative option to a traditional in-person assessment.

Methods

A mixed methods approach was used where student competency was evaluated by experienced assessors in both an in-person and an online assessment, and semi-structured interviews were conducted with all participants. Participants’ experiences and student competency outcomes were explored to evaluate the feasibility of the online assessment in terms of Implementation, Practicality, Acceptability and Demand.

Results

Participants described the online assessment as feasible in terms of both practicality and implementation. However, some concerns were raised by the assessors regarding the ability of the online assessment to capture “hands-on” skills, environment management skills and risk management skills. Competency outcomes of the online assessment were acceptable, with over 80% of students receiving the same outcome on both assessments.

Conclusion

These findings suggest that an online assessment is a feasible method for evaluating clinical competence in physiotherapy students. However, certain challenges need to be addressed and further validation completed to ensure successful implementation. Ensuring the feasibility of online methods for assessing clinical competence has important implications for the future use of online assessment in health professional education.

Introduction

The assessment of clinical competence is a fundamental component of health professional education. Clinical competence is often referred to as the ability of an individual to apply skills, knowledge, judgment and personal attributes to practice safely and effectively in a particular clinical role (Yanhua and Watson, 2011). An assessment of clinical competency attempts to evaluate an individual’s performance of this set of abilities, typically allowing judgment of the progress a learner has made toward acquiring standards or skill levels while simultaneously facilitating their learning (Boud and Dochy, 2010). In physiotherapy and other health professions, clinical competence is assessed using a variety of methods, including Objective Structured Clinical Examinations (OSCEs), standardized case-based assessments (e.g., an encounter with a standardized patient) and assessment of case-based learning on placement. These types of assessments are considered reliable and valid in the assessment of clinical skills (Khan, Gaunt, Ramachandran, and Pushkar, 2013; Patrício, Julião, Fareleira, and Carneiro, 2013; Petrusa, 2002; Wass, Van der Vleuten, Shatzer, and Jones, 2001). Hence, many accrediting bodies utilize these types of assessments of clinical competence in a high-stakes environment to determine “entry to practice” skill levels (Australian Physiotherapy Council, 2023; Federation of State Boards of Physical Therapy, 2021; National Council of State Boards of Nursing, 2023). However, the challenge with these methods of assessing clinical competence is that they typically require the subject, assessors, and patients to be physically co-located.

Assessments of clinical competence were impacted dramatically by the COVID-19 pandemic, especially those requiring in-person interaction (Kumar et al., 2021; Pokhrel and Chhetri, 2021). To minimize the spread of COVID-19, the implementation of physical distancing became a crucial preventive measure, resulting in the cancellation of assessment pathways undertaken by many accrediting bodies to determine clinical competence. As these types of in-person assessments are commonly employed in high-stakes contexts, such as university evaluations of graduating students and accrediting bodies’ assessments of competency, they play a critical role in the process of granting registration to new healthcare professionals entering the workforce. Hence, the need for assessment formats that did not require in-person interaction grew in response to the COVID-19 pandemic, and this demand was particularly evident for accreditation and exit examinations (Tognon, Grudzinskas, and Chipchase, 2021).

Using technology to shift these assessments into an online format was an obvious solution, drawing on methodologies investigated in previously established online assessments of clinical competence (Cantone, Palmer, Dodson, and Biagioli, 2019; Gupta et al., 2020; Palmer et al., 2015). For example, accreditation authorities in dentistry, medicine, and nursing were able to change their exit OSCEs from in-person to online, hybrid, video or telehealth encounters (Boursicot et al., 2020; Boyle et al., 2020; Heal et al., 2022; Khalaf, El-Kishawi, Moufti, and Al Kawas, 2020). This transition was supported by a recent systematic review that found remote clinical assessments were viable and provided an alternative method for assessing many clinical skills (Kunutsor et al., 2022). However, the review also noted that in some instances, particularly when used to evaluate practical “hands-on” clinical skills, the online format was limited compared to traditional in-person assessments. While the move to online education and assessment of clinical competence was necessary during the COVID-19 pandemic, further detailed exploration of this approach is now required, specifically within physiotherapy, to continue to investigate its potential as a more flexible and future-proof mode of assessment.

Recognizing this gap in evidence, the purpose of this study was to evaluate the feasibility of a new format of online standardized simulated case-based assessment of physiotherapy clinical competence (online examination) in relation to a current in-person standardized simulated case-based clinical assessment (in-person examination).

Methods

A prospective mixed methods approach was adopted to investigate the aim of this feasibility study, where feasibility was determined a priori to consist of four domains: (i) Implementation (e.g. can the online examination be implemented as planned), (ii) Practicality (e.g. can the online examination be conducted within participant and examination constraints), (iii) Acceptability (e.g. how do the intended recipients of the online examination respond to it), and (iv) Demand (e.g. is there sufficient demand for the online examination) (Bowen et al., 2009). Physiotherapy students completed two examinations of clinical competency (one online and one in-person) and were assessed as either competent or not competent by qualified physiotherapists using a standardized assessment form. Both student and assessor participants then completed semi-structured interviews; this method was chosen because the feasibility domains require an understanding of participants’ experiences and perspectives on the examinations. In addition, a preliminary comparison of the outcomes (competent or not competent) between the in-person and online examinations provided further information to evaluate the practicality and implementation of the online examination. Ethics approval was granted by Flinders University and La Trobe University (Project number 4683). All participants provided written informed consent.

Models of assessment

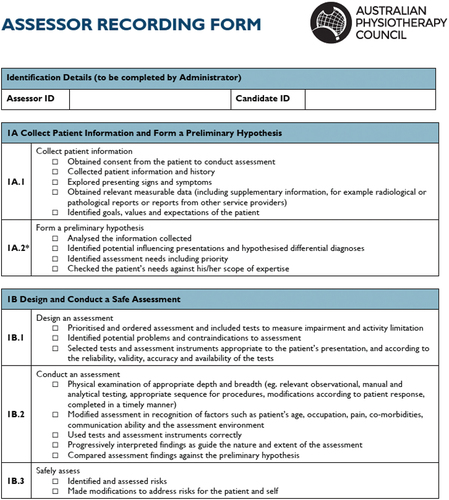

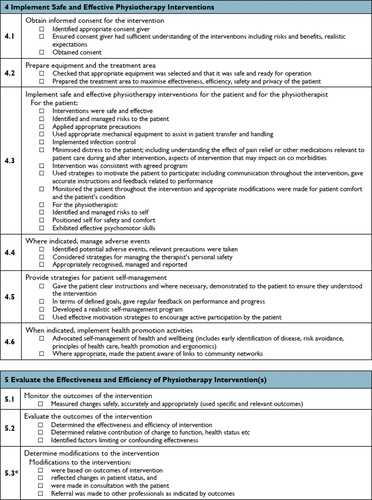

The in-person and online examinations were standardized simulated case-based assessments of clinical competence that were designed to assess physiotherapy competency using the same standardized assessment form. The in-person examination, used as the reference standard, is currently in use by the Australian Physiotherapy Council (APC) for evaluating “entry to practice” competency in overseas physiotherapists seeking registration to practice in Australia. The new online examination was developed using a cyclical process beginning with the initial idea of creating a high-quality online clinical assessment similar to the current in-person examination (Hirokawa and Poole, 1996; Segal, 1982). A working group of five experts in the field of assessment in the health professions was established to workshop potential models of online assessment. The working group used their expert knowledge, previous experiences with assessments and historic in-person examination data to refine the model. Both examination formats followed the same protocol, requiring participants to assess and treat a patient using standardized case scenarios, with the patient at the center of the case study being portrayed by a trained actor.

In-person examination

Detailed case scenarios provided comprehensive guidance on the case for the actor and assessors, and case information was provided to the participant at the start of the examination. Following the provision of case information to the participant a patient consultation was performed, lasting approximately 75 minutes. These cases were designed for participants to demonstrate skills in one of three practice areas defined as cardiorespiratory, musculoskeletal, and neurological physiotherapy. Examinations were observed and evaluated by two independent, trained assessors. Participants attended the APC simulation laboratory in Melbourne, Australia and were co-located in the clinical simulation laboratory with the patient (actor) and two assessors. The student participant interacted with the patient (actor) to complete a full assessment and treatment as per usual physiotherapy practice.

Online examination

The online examination followed the same format as the in-person examination regarding the provision of case scenario information, the time allocated to the examination, the discipline areas of assessment that were examined, and the presence of two assessors to examine the student performance. The only difference from the protocol described above was the location and the resulting interaction. The patient (actor) was based in the APC simulation laboratory, where a single camera-enabled smart device captured them and their environment. The student participant and assessors each joined via teleconference from a private location, from which they could see each other and the actor in the APC simulation laboratory. The student participant interacted with the patient (actor) to complete the subjective assessment, physical assessment and treatment as normal, substituting verbal description for any hands-on techniques.

Participants

Two groups of participants were recruited into this study: (i) physiotherapy student participants who completed the online and in-person examinations and (ii) assessor participants who evaluated the student participants for competence in both testing situations. Physiotherapy students from a range of year levels were recruited, as it was anticipated that they would represent a range of skill levels approaching “entry to practice” competency. This included students with minimal clinical experience to investigate the ability of the examination to detect a not competent performance.

Students

Students enrolled in “entry to practice” physiotherapy programs at Melbourne-based universities in early 2022 were invited to participate through e-mails, flyers and in-person information sessions at the university campus. Students enrolled in the first year of a bachelor’s degree were excluded as they had yet to complete any learning experiences in a clinical environment and were therefore considered unlikely to be able to perform the skills required. Students were provided with information booklets for both examinations as per standard APC protocol. Students were informed that their participation in this study was entirely voluntary and would have no impact on their physiotherapy studies.

Assessors

All assessors (registered physiotherapists) appointed and trained by the APC to provide in-person examinations were invited via e-mail to participate as assessors in this study. Those who were available on the day of data collection and willing to train in the new procedure were recruited. A member of the research team (BF) led a one-hour group training session with the assessors on the online examination procedure and provided them with a modified assessors’ manual.

Procedures

The order of assessments (in-person or online examination first) was randomized for each student, as well as the area of practice for both assessments (cardiorespiratory, musculoskeletal, neurological rehabilitation) using a computer-generated random number table. Students were aware of which area of practice they had been allocated to prior to the day of data collection but not the specific case scenario. Assessors were allocated to examinations as per the APC protocol. Assessors were also allocated to ensure they did not assess the same student twice to minimize measurement bias.
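The allocation described above can be illustrated with a minimal sketch. It assumes simple, unblocked randomization with a seeded pseudo-random generator, whereas the study itself used a computer-generated random number table and does not report whether blocking or stratification was applied. The function name, seed and student identifiers are hypothetical.

```python
import random

PRACTICE_AREAS = ["cardiorespiratory", "musculoskeletal", "neurological rehabilitation"]
FORMATS = ["in-person", "online"]


def allocate(student_ids, seed=2022):
    """Randomly assign each student one practice area and an examination order.

    Assumption: simple randomization, independent for each student.
    """
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    allocation = {}
    for sid in student_ids:
        order = FORMATS[:]  # copy so the shared list is not mutated
        rng.shuffle(order)  # decides which format is sat first
        allocation[sid] = {
            # one area per student, used for both examinations
            "practice_area": rng.choice(PRACTICE_AREAS),
            "order": tuple(order),
        }
    return allocation


students = [f"S{i:02d}" for i in range(1, 18)]  # 17 hypothetical identifiers
allocation = allocate(students)
```

Seeding the generator is a design choice made here so that the allocation list could be regenerated and audited; it is not a detail reported in the study.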

Data collection commenced at the beginning of the academic year (March and April 2022). Before completing the examinations of clinical competency, demographic information (age, sex, experience level, languages spoken) and a 5-point Likert scale rating of attitude (“extremely negative,” “somewhat negative,” “neither positive nor negative,” “somewhat positive,” “extremely positive”) and experience with technology (“none at all,” “a little,” “a moderate amount,” “a lot,” “a great deal”) were gathered from all participants.

Students completed an online and an in-person examination in the same practice area within ten days. Both examinations were completed within a fortnight of each other to minimize any learning effect; as the students were completing university studies, there was a potential risk they could gain new skills that may substantially alter their clinical competence.

Performance of competency for both the in-person and online examinations was evaluated using the protocols developed and implemented by the APC in their standard assessment of physiotherapy competence. In this protocol, two independent assessors used the standardized assessment form to determine the student as either “competent” or “not competent” on each of eight domains (Appendix 1). The eight domains relate to key clinical competences, such as conduct a safe assessment, create a plan, interpret findings and evaluate effectiveness, required of “entry to practice” level physiotherapists in Australia (Physiotherapy Board of Australia & Physiotherapy Board of New Zealand, 2015). Following the examination, the two assessors discussed any disparities and reached an agreed score for each domain and an outcome (competent or not competent) for the examination, which was recorded on the final moderated standardized assessment form. To obtain a competent outcome for the examination, the student needed to be evaluated as competent in all domains.
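The scoring rule in this protocol can be written as a short sketch. The domain labels (`domain_1` to `domain_8`), function names and example ratings below are hypothetical, since the actual domain wording is given in Appendix 1 and is not reproduced here. The moderation step itself is a discussion between assessors and is only represented by its agreed result.

```python
from typing import Dict, List

N_DOMAINS = 8  # number of domains on the standardized assessment form


def disparities(assessor_a: Dict[str, bool], assessor_b: Dict[str, bool]) -> List[str]:
    """Return the domains on which the two independent ratings differ."""
    return sorted(d for d in assessor_a if assessor_a[d] != assessor_b[d])


def examination_outcome(moderated: Dict[str, bool]) -> str:
    """Apply the all-domains rule to the agreed (moderated) ratings.

    `moderated` maps each domain to True (competent) or False (not competent).
    """
    if len(moderated) != N_DOMAINS:
        raise ValueError("expected an agreed rating for every domain")
    return "competent" if all(moderated.values()) else "not competent"


# Hypothetical example: the assessors disagree on one domain.
domains = [f"domain_{i}" for i in range(1, N_DOMAINS + 1)]
assessor_a = {d: True for d in domains}
assessor_b = {**assessor_a, "domain_3": False}

to_discuss = disparities(assessor_a, assessor_b)
moderated = {**assessor_a, "domain_3": False}  # assumed result of the discussion
outcome = examination_outcome(moderated)
```

Because a single not competent domain determines the overall result, one moderated disagreement is enough to change the examination outcome.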

Within one week of the second examination, semi-structured open-ended interviews were conducted via Zoom at a mutually convenient time, with the format chosen to allow flexibility and depth of insight (DiCicco-Bloom and Crabtree, 2006; Qu and Dumay, 2011). The interviews explored participants’ perceptions of the Implementation, Practicality, Acceptability and Demand of the online assessment. They lasted between 20 and 40 minutes and were audio recorded on two devices in case one failed. The research team piloted the interview before finalization, and one researcher (BF) completed all interviews. BF kept a reflective journal and completed regular debriefs with other research team members throughout this process.

Data analysis

Students and assessors were provided with a unique identifier that ensured the matching of demographic data to examination results, and de-identified data were used in the analysis.

Semi-structured interviews

All interviews were conducted by a single interviewer and transcribed using digital transcription services. Transcripts were checked by the research team against the audio recordings for accuracy and then sent back to participants for comment or correction. Nine participants responded: six replied with no changes, two provided minor grammatical corrections, and one provided clarifications of their statements, which were included in the analysis.

Thematic analysis at a predominantly semantic level was used as the flexibility of this approach facilitated a range of theoretical perspectives and orientations (Braun and Clarke, 2006; Terry, Hayfield, Clarke, and Braun, 2017). First, a deductive approach was used to investigate the domains of feasibility related to Implementation, Practicality, Acceptability, and Demand, as identified a priori from the literature (Bowen et al., 2009). Second, an inductive approach was used to investigate any additional themes. The analysis followed the predefined steps of initial familiarization, generating initial codes, searching for themes by collating codes, reviewing themes against the codes and entire data set, and defining and finalizing themes (Braun and Clarke, 2006; Braun, Clarke, and Weate, 2016; Terry, Hayfield, Clarke, and Braun, 2017). Finally, before finalizing the themes, they were reviewed against the entire data set multiple times until no new themes were generated (Terry, Hayfield, Clarke, and Braun, 2017). One researcher (BF) conducted the initial steps from initial familiarization to searching for themes from codes with regular team meetings to discuss codes and workshop themes. All researchers were involved in the final steps of reviewing and finalizing the themes. Finalized themes were sent back to a random 10% of participants for validation (Jackson, Drummond, and Camara, 2007).

Outcomes of assessments

As an additional step to evaluate the feasibility domains of Implementation and Practicality, the outcomes of student performance/competency were compared between the online and in-person examinations. Each student participant was rated as either competent or not competent by the assessors for each of the online and in-person examinations. A descriptive analysis was conducted where the number of students who were determined as competent or not competent on each assessment was reported, as well as the proportions of students who had the same outcome on each assessment (passed both or failed both) or different outcomes on each assessment (passed one and failed one).

Results

Demographics

Students

Seventeen physiotherapy students were recruited, with an average age of 24.3 (SD = 3.91) years, of whom 70.6% were female, and 70.6% spoke more than one language. Over half of the students reported “a lot” to “a great deal” of experience with technology, with no students reporting negative attitudes to technology or online examination (Table 1). One additional student enrolled in the study but withdrew before data collection commenced with no reason provided; their data were not included in the analysis.

Table 1. Participants’ demographics.

Assessors

A group of 13 assessors currently employed by the APC were recruited to complete the examinations. Three assessors completed only online examinations, four completed only in-person examinations and six completed both types of examinations. The assessors had an average age of 45.6 (SD = 11.4) years and had been an APC assessor for an average of 9 (SD = 5.5) years. Due to unforeseen circumstances related to the COVID-19 pandemic, one member of the research team was an assessor for two in-person examinations; their de-identified data are included in this group. Assessors generally had a less positive attitude toward remote assessments than students, with 23.1% reporting a negative attitude (Table 1).

Semi-structured interviews

Deductive themes

Using key areas of focus from feasibility literature to guide the deductive analysis of the interviews, several themes were identified (Bowen et al., 2009). These are summarized in Tables 2 and 3. Overall, participants were positive about the online examination regarding Implementation and Practicality. However, participants had mixed attitudes around its Acceptability and Demand. Student participants largely felt that the online examination was acceptable to use, and that there was a demand for it within the profession. In contrast, most assessors had concerns relating to the need for and ongoing use of the online examination and, overall, had a lower level of satisfaction with it.

Table 2. Feasibility themes with consensus.

Table 3. Feasibility themes without consensus.

Inductive themes

Further themes that expand on the deductive findings above, particularly those relating to Acceptability, were found through inductive analysis. These inductive themes focus on how the assessors and students perceived the online examination. The themes are detailed below, with supporting quotes.

Realistic environment

Assessors expressed a belief that to assess competence, there needs to be an element of a realistic environment and this was necessary to either support or challenge performance. Assessors reasoned that cues from the environment could help trigger students to perform the automatic tasks (e.g., hand hygiene, checking bed brakes) and provide prompts regarding performance expectations.

they are missing a lot of the visual cues that you get from actually being face to face with somebody. And that can be to their detriment. - Assessor 10

Assessors described that a realistic environment creates challenges for the candidate to test their performance in a three-dimensional way and that moving the assessment online reduced the realism offered by the in-person environment.

I feel like every time we take a step back, going from a real patient to simulation then simulation to online I feel you lose something in that. - Assessor 7

Risk management skills

Assessors described that the online format reduced their capacity to assess how a candidate would react in the moment to an environmental risk or hazard and felt that this was essential to capturing clinical competence.

They say I’ll stand in a position where I’m close enough to provide support in case you know, the patient isn’t balanced or whatever. And it’s like, yeah, but would you like, where would you actually be? Where would your hands actually be? And what is your response time like if that patient trips or stumbles? - Assessor 10

Hands-on skills

All assessors expressed the view that physiotherapy is a hands-on profession and, as such, clinical competence should be assessed in a format so that hands-on skills could be clearly visualized. Assessors also held a strong belief that the student’s ability to describe and explain a technique would not necessarily correlate to their ability to perform that same technique in person.

I feel aspects of physiotherapy need to be hands on. A candidate can describe it to us, but you still need to see it. - Assessor 9

I just, I felt that I wasn’t really able to gauge the level of their competency because it’s one thing to say they’re going to do something and it’s quite another to actually do it. - Assessor 12

Clinical knowledge and reasoning

The assessors stated that the online examination was equal to or better than the in-person examination for evaluating a student’s clinical knowledge and reasoning skills, most likely because it required more verbal communication.

in some ways because they’re talking through a little bit more what they’re doing sometimes, it was actually easy to work out what their thoughts processes were in a clinical reasoning. - Assessor 6

Challenges of online

The final theme highlights the perspectives of the students regarding their experience with the online examination. In contrast to the assessors, students reported the experience of the online examination as comparable to the in-person examination and felt confident demonstrating their clinical competence through this format.

It felt pretty comparable … just to me like it felt like that [I] was doing the same thing. - Student 8

However, students did report that certain skills were more challenging to demonstrate online. Specifically, they described difficulties in recalling the appropriate actions and providing descriptions of these actions, as well as the challenge of establishing rapport with patients through online interactions.

I couldn’t remember all the things like I should have said about, you know, stopping, to check the brake or sanitizing my hands or kneeling on the floor while I’m testing her manual muscle testing. Yeah. I couldn’t remember when I’m in the exam, … It’s just natural things that we do in person, right. - Student 2

Outcomes of assessments

Seventeen students completed 34 examinations in the areas of cardiorespiratory (n = 12, 35.3%), musculoskeletal (n = 12, 35.3%), and neurological rehabilitation (n = 10, 29.4%). Of the 34 examinations, 29 (85.3%) had an outcome of not competent and 5 (14.7%) had an outcome of competent.

Fourteen students (82.4%) received the same outcome on both examinations, with only 3 students (17.6%) receiving a different outcome on each examination (i.e., passed one and failed one). As can be seen from Table 4, one student passed the in-person examination and failed the online examination, while two students failed the in-person examination and passed the online examination.

Table 4. Cross-tabulation of student outcomes (competent/not-competent) in online versus in-person examinations.
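The cell counts of this cross-tabulation can be recovered from the totals reported in the text, which also serves as a check on the stated agreement figure. The sketch below is illustrative only: the variable names are hypothetical, and the "passed both" and "failed both" counts are inferred from the reported totals rather than quoted from the table.

```python
# Figures reported in the text
n_students = 17
competent_exams = 5   # competent outcomes among the 34 examinations
in_person_only = 1    # passed in-person, failed online
online_only = 2       # failed in-person, passed online

# Each student who passed both formats contributes two competent examinations.
passed_both = (competent_exams - in_person_only - online_only) // 2
failed_both = n_students - passed_both - in_person_only - online_only

same_outcome = passed_both + failed_both
agreement = same_outcome / n_students

print(f"passed both: {passed_both}, failed both: {failed_both}")
print(f"same outcome: {same_outcome}/{n_students} = {agreement:.1%}")
```

Run as written, this prints `passed both: 1, failed both: 13` and `same outcome: 14/17 = 82.4%`, matching the reported proportion. It also shows that the agreement is driven almost entirely by students who were not competent in both formats.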

Discussion

The change to assessment methods in response to the COVID-19 pandemic produced a solid foundation of work in the field of higher education and accreditation relating to online assessment of clinical competence. The rapid implementation of online assessments by universities and accrediting bodies allowed continued graduation of health professionals at a time when the healthcare workforce was under significant strain (Giri and Stewart, 2023; Kunutsor et al., 2022). Unfortunately, the limited opportunity to prepare for the shift meant that many studies investigating online assessment were conducted retrospectively and, therefore, were unable to control sources of bias or confounding variables (Boyle et al., 2020; Felthun, Taylor, Shulruf, and Allen, 2021; Lara, Foster, Hawks, and Montgomery, 2020). To the authors’ knowledge, this is the first published study to prospectively evaluate the feasibility of an online standardized simulated case-based assessment of physiotherapy clinical competence and to compare the assessment outcome to an in-person assessment. The findings of this study confirm the conclusions of existing literature that an online examination is feasible for use by assessors and students despite some limitations proposed by the assessors. The findings of the preliminary comparison also indicate that outcomes of an online examination of clinical competence are comparable to an in-person examination, with just over 80% of students receiving the same outcome in both examinations.

The findings from the semi-structured interviews investigating the feasibility of the online examination were overwhelmingly positive. Participants reported implementing the online examination with minimal assistance, minimal deviation from the planned procedure and no issues concerning the time, resources or skills required to complete the examination. These findings suggest that the online examination process was not complicated, which is particularly encouraging given that 40% (12 of 30) of participants reported moderate or low amounts of experience with technology. Previous studies involving online OSCEs and similar short-case style online assessments also reported positive feasibility from students and assessors (Cantone, Palmer, Dodson, and Biagioli, 2019; Chan et al., 2014; Donn, Scott, Binnie, and Bell, 2021; Mooney, Peyre, Clark, and Nofziger, 2020; Munshi, Alsughayyer, Alhaidar, and Alarfaj, 2020), with many highlighting that participants found online assessments to be an adequate alternative to in-person assessment (Chan et al., 2014; Donn, Scott, Binnie, and Bell, 2021; Mooney, Peyre, Clark, and Nofziger, 2020). This is reinforced by the perspectives of the students in our study, who found the overall experience of the online examination to be practical to implement and the format to be a potential alternative to the in-person examination.

The acceptability of, and demand for, the online examination varied between the participant groups in our study. While students readily embraced the online examination, assessors displayed a higher level of reluctance. The inductive themes present possible reasons for assessors’ hesitancy toward the online examination; specifically, that the online examination may have challenged the assessors’ established beliefs regarding the content and scope of clinical competence assessments. These concerns may be genuine or merely perceptions held by the assessors, which could be alleviated if addressed by additional educational initiatives, collaborative efforts, and increased exposure to online examinations. When taken together, the themes overall suggest the application of the online examination is feasible but highlight the need for more inquiry into the root of the assessors’ perceptions toward online assessment and how to address these.

The quantitative findings of this study also provide a preliminary indication that an online examination can yield similar determinations of clinical competence to an in-person examination. The findings are consistent with studies that have compared online to in-person OSCEs in a clinical profession. In these studies, there was no difference in failure rates between the online and in-person assessment formats (Lara, Foster, Hawks, and Montgomery, 2020) or between mean assessment scores (Arrogante et al., 2021; Chan et al., 2014; Chen et al., 2019; Gortney, Fava, Berti, and Stewart, 2022). The ability to distinguish not competent performance is often the primary focus of high-stakes assessments at “entry to practice” level in order to ensure the safety of the public (Physiotherapy Board of Australia & Physiotherapy Board of New Zealand, 2015). It is important to note that there was a high failure rate among the student participants of this study, and the similarity in outcome evaluations between formats suggests that the online examination method appropriately detected the majority of students who did not meet competence. These results are encouraging and provide support for a larger, more robust comparison between assessment formats.

Consideration must also be given to the possible explanations for the three students assessed to have different competency outcomes between the online and in-person assessment formats. These differences could stem from format disparities, individual factors, or simple chance occurrences. A potential format disparity is that during the in-person examination, assessors can move around the examination room, whereas the online examination provides only a single camera view. This reduces the information that is available to assessors to judge student performance in the online format. Another possibility may be the complexity of the cases utilized. Many studies conducted during the COVID-19 pandemic reported that the cases/tasks used online had reduced complexity to facilitate the format (Felthun, Taylor, Shulruf, and Allen, 2021; Lawrence et al., 2020). Although the research team endeavored to ensure the cases were of comparable complexity, it is plausible that the online examination used simpler cases in contrast to the more intricate cases used in the in-person examination, potentially affecting student outcomes. Considering individual factors, students may feel less anxious and more comfortable using an online assessment away from the pressures of peer anxiety (Hytönen et al., 2021; Kakadia, Chen, and Ohyama, 2021; Mak, Krishnan, and Chuang, 2022). Additionally, students’ preferences for learning and assessment formats vary and hence may impact their performance on non-preferred assessments. While it is important to consider these factors, it is crucial to note that they pertain to a small portion of the sample, and the observed differences may not necessarily represent a genuine finding.

This study adds to the growing body of research on the feasibility and validity of online assessments of clinical competence. This is the first study to prospectively evaluate an online standardized simulated case-based assessment of physiotherapy clinical competence and compare it to an in-person examination. The findings support research across health professions worldwide indicating that online assessment of clinical competence is a feasible alternative to in-person assessment. As health educators continue to adjust to the post-COVID-19 world, establishing a feasible and valid online assessment of clinical competence remains a high priority.

Limitations

The high failure rate in our sample needs to be considered when interpreting the findings of this study. An important consideration in designing this study was that the online examination be able to detect performance that is not competent. Therefore, students with minimal clinical experience were sought, as they were likely to have lower competence levels and would test the ability of the online examination to detect non-competency. However, the failure rate in this study likely does not reflect the distribution of scores typical of candidates undertaking high-stakes clinical assessments for entry to the profession.

As previously discussed, the similarly high failure rate in both the online and in-person examinations provides additional confidence that the online assessment can appropriately detect performance that is not competent. Conversely, it provides minimal information about the ability of the online examination to appropriately detect competent performance. The high failure rate may also have influenced students’ experience of the clinical assessments and their perception of the feasibility of this format as an alternative to the in-person examination. However, this impact appears minimal, as students were largely supportive of the online examination.

Conclusion

The results of this study suggest that the online examination is a feasible method of assessing clinical competence. The online examination also appears to produce comparable determinations of clinical competence (fail/pass) to an in-person examination. This study also adds insight into the perceptions of students and qualified physiotherapists concerning the use of online assessment methods. The contrasting opinions of these groups present an avenue for further investigation into how online assessment is perceived in the physiotherapy profession. This study lays the groundwork for future research, and further investigation in a larger cohort is needed to confirm the validity of the online examination.

Author contributions

BF, JM, and LC were involved in the initial concept, data analysis, data interpretation, and drafting and revising the manuscript. BF and DL were involved in the data collection. All authors read and approved the final manuscript.

Abbreviations

| APC | = | Australian Physiotherapy Council |
| COVID-19 | = | Coronavirus Disease 2019 |
| OSCE | = | Objective Structured Clinical Examination |
| SD | = | Standard Deviation |

Disclosure statement

In accordance with Taylor & Francis policy and their ethical obligations as researchers, BF reports that they are completing an industry-supported PhD in association with the APC, and authors DL and LC are affiliated with the APC. This has been disclosed fully to Taylor & Francis. Author JM has no competing interests to declare.

Data availability statement

The data that support the findings of this study are available from the corresponding author, BF, upon reasonable request.

References

- Arrogante O, López-Torre EM, Carrión-García L, Polo A, Jiménez-Rodríguez D 2021 High-fidelity virtual objective structured clinical examinations with standardized patients in nursing students: An innovative proposal during the COVID-19 pandemic. Healthcare 9: 355.

- Australian Physiotherapy Council 2023 Standard Assessment Pathway. Australian Physiotherapy Council. Melbourne, Australia. https://physiocouncil.com.au/overseas-practitioners/standard-assessment-pathway/.

- Boud D, Dochy F 2010 Assessment 2020: Seven propositions for assessment reform in higher education. p. 1–4. Sydney: Australian Learning and Teaching Council.

- Boursicot K, Kemp S, Ong TH, Wijaya L, Goh SH, Freeman K, Curran I 2020 Conducting a high-stakes OSCE in a COVID-19 environment. MedEdpublish 9: 54.

- Bowen DJ, Kreuter M, Spring B, Cofta-Woerpel L, Linnan L, Weiner D, Bakken S, Kaplan CP, Squiers L, Fabrizio C 2009 How we design feasibility studies. American Journal of Preventive Medicine 36: 452–457.

- Boyle JG, Colquhoun I, Noonan Z, McDowall S, Walters MR, Leach JP 2020 Viva la VOSCE? BMC Medical Education 20: 514.

- Braun V, Clarke V 2006 Using thematic analysis in psychology. Qualitative Research in Psychology 3: 77–101.

- Braun V, Clarke V, Weate P 2016 Using thematic analysis in sport and exercise research. In: Smith B, Sparkes A (Eds) Routledge handbook of qualitative research in sport and exercise, pp. 213–227. London: Routledge.

- Cantone RE, Palmer R, Dodson LG, Biagioli FE 2019 Insomnia telemedicine OSCE (TeleOSCE): A simulated standardized patient video-visit case for clerkship students. MedEdPORTAL 15: 10867.

- Chan J, Humphrey‐Murto S, Pugh DM, Su C, Wood T 2014 The objective structured clinical examination: Can physician‐examiners participate from a distance? Medical Education 48: 441–450.

- Chen T-C, Lin M-C, Chiang Y-C, Monrouxe L, Chien S-J 2019 Remote and onsite scoring of OSCEs using generalisability theory: A three-year cohort study. Medical Teacher 41: 578–583.

- DiCicco-Bloom B, Crabtree BF 2006 The qualitative research interview. Medical Education 40: 314–321.

- Donn J, Scott JA, Binnie V, Bell A 2021 A pilot of a virtual objective structured clinical examination in dental education. A response to COVID‐19. European Journal of Dental Education 25: 488–494.

- Federation of State Boards of Physical Therapy 2021 National Physical Therapy Examination (NPTE) Candidate Handbook. Federation of State Boards of Physical Therapy. Virginia, United States of America. https://www.fsbpt.org/Portals/0/documents/free-resources/NPTE_Candidate_Handbook.pdf

- Felthun JZ, Taylor S, Shulruf B, Allen DW 2021 Empirical analysis comparing the tele-objective structured clinical examination and the in-person assessment in Australia. Journal of Educational Evaluation for Health Professions 18: 23.

- Giri J, Stewart C 2023 Innovations in assessment in health professions education during the COVID‐19 pandemic: A scoping review. The Clinical Teacher 20: e13634.

- Gortney JS, Fava JP, Berti AD, Stewart B 2022 Comparison of student pharmacists’ performance on in-person vs. virtual OSCEs in a pre-APPE capstone course. Currents in Pharmacy Teaching and Learning 14: 1116–1121.

- Gupta TS, Campbell D, Chater AB, Rosenthal D, Saul L, Connaughton K, Cowie M 2020 Fellowship of the Australian College of Rural & Remote Medicine (FACRRM) Assessment: A review of the first 12 years. MedEdpublish 9: 100.

- Heal C, D’Souza K, Hall L, Smith J, Jones K 2022 Changes to objective structured clinical examinations (OSCE) at Australian medical schools in response to the COVID-19 pandemic. Medical Teacher 44: 418–424.

- Hirokawa RY, Poole MS 1996 Communication and group decision making. pp. 269–300. California, United States of America: Sage Publications.

- Hytönen H, Näpänkangas R, Karaharju‐Suvanto T, Eväsoja T, Kallio A, Kokkari A, Tuononen T, Lahti S 2021 Modification of national OSCE due to COVID‐19–implementation and students’ feedback. European Journal of Dental Education 25: 679–688.

- Jackson RL, Drummond DK, Camara S 2007 What is qualitative research? Qualitative Research Reports in Communication 8: 21–28.

- Kakadia R, Chen E, Ohyama H 2021 Implementing an online OSCE during the COVID‐19 pandemic. Journal of Dental Education 85: 1006.

- Khalaf K, El-Kishawi M, Moufti MA, Al Kawas S 2020 Introducing a comprehensive high-stake online exam to final-year dental students during the COVID-19 pandemic and evaluation of its effectiveness. Medical Education Online 25: 1826861.

- Khan KZ, Gaunt K, Ramachandran S, Pushkar P 2013 The objective structured clinical examination (OSCE): AMEE guide no. 81. Part II: Organisation & administration. Medical Teacher 35: e1447–e1463.

- Kumar A, Sarkar M, Davis E, Morphet J, Maloney S, Ilic D, Palermo C 2021 Impact of the COVID-19 pandemic on teaching and learning in health professional education: A mixed methods study protocol. BMC Medical Education 21: 439.

- Kunutsor SK, Metcalf EP, Westacott R, Revell L, Blythe A 2022 Are remote clinical assessments a feasible and acceptable method of assessment? A systematic review. Medical Teacher 44: 300–308.

- Lara S, Foster CW, Hawks M, Montgomery M 2020 Remote assessment of clinical skills during COVID-19: A virtual, high-stakes, summative pediatric objective structured clinical examination. Academic Pediatrics 20: 760–761.

- Lawrence K, Hanley K, Adams J, Sartori DJ, Greene R, Zabar S 2020 Building telemedicine capacity for trainees during the novel coronavirus outbreak: A case study and lessons learned. Journal of General Internal Medicine 35: 2675–2679.

- Mak V, Krishnan S, Chuang S 2022 Students’ and examiners’ experiences of their first virtual pharmacy objective structured clinical examination (OSCE) in Australia during the COVID-19 pandemic. Healthcare 10: 328.

- Mooney CJ, Peyre SE, Clark NS, Nofziger AC 2020 Rapid transition to online assessment: Practical steps and unanticipated advantages. Medical Education 54: 857–858.

- Munshi F, Alsughayyer A, Alhaidar S, Alarfaj M 2020 An online clinical exam for fellowship certification during COVID‐19 pandemic. Medical Education 54: 954–955.

- National Council of State Boards of Nursing 2023 NCLEX-RN Test Plan. National Council of State Boards of Nursing. Chicago, United States of America. https://www.nclex.com/files/2023_RN_Test%20Plan_English_FINAL.pdf.

- Palmer RT, Biagioli FE, Mujcic J, Schneider BN, Spires L, Dodson LG 2015 The feasibility and acceptability of administering a telemedicine objective structured clinical exam as a solution for providing equivalent education to remote and rural learners. Rural and Remote Health 15: 3399.

- Patrício MF, Julião M, Fareleira F, Carneiro AV 2013 Is the OSCE a feasible tool to assess competencies in undergraduate medical education? Medical Teacher 35: 503–514.

- Petrusa ER 2002 Clinical performance assessments. In: Norman GR (Eds) International Handbook of Research in Medical Education, pp. 673–709. Dordrecht: Springer.

- Physiotherapy Board of Australia & Physiotherapy Board of New Zealand 2015 Physiotherapy practice thresholds in Australia and Aotearoa New Zealand. Melbourne, Australia: Physiotherapy Board of Australia & Physiotherapy Board of New Zealand. https://cdn.physiocouncil.com.au/assets/volumes/downloads/Physiotherapy-Board-Physiotherapy-practice-thresholds-in-Australia-and-Aotearoa-New-Zealand.PDF.

- Pokhrel S, Chhetri R 2021 A literature review on impact of COVID-19 pandemic on teaching and learning. Higher Education for the Future 8: 133–141.

- Qu SQ, Dumay J 2011 The qualitative research interview. Qualitative Research in Accounting and Management 8: 238–264.

- Segal UA 1982 The cyclical nature of decision making: An exploratory empirical investigation. Small Group Behavior 13: 333–348.

- Terry G, Hayfield N, Clarke V, Braun V 2017 Thematic analysis. The SAGE Handbook of Qualitative Research in Psychology 2: 17–37.

- Tognon K, Grudzinskas K, Chipchase L 2021 The assessment of clinical competence of physiotherapists during and after the COVID-19 pandemic. Journal of Physiotherapy 67: 79–81.

- Wass V, Van der Vleuten C, Shatzer J, Jones R 2001 Assessment of clinical competence. The Lancet 357: 945–949.

- Yanhua C, Watson R 2011 A review of clinical competence assessment in nursing. Nurse Education Today 31: 832–836.