Abstract

Perception in virtual reality is often compared to the perception of pictures. There are, however, important differences. A virtual reality environment, unlike a picture, is interactive and immersive. These properties give rise to novel theoretical challenges. Building on James J. Gibson’s analysis of the perception of depictions, we raise three questions about perception in virtual reality, to which we offer tentative answers: (1) Is virtual reality a kind of picture perception? Answer: No. A virtual reality headset is better understood not as an image-based display but as a pair of electronic spectacles that allow the wearer to sample a simulated ambient optic array. (2) Who creates the information in virtual reality? Answer: The information is a joint creation of hardware developers, software engineers, and the observer. The observer is a co-creator of the information that they detect. (3) Are there affordances in virtual reality? Answer: Yes, but there are limitations on what a given virtual reality environment can provide to its users, resulting from the details of how the environment is implemented. An accurate understanding of the perceptual information available in virtual reality may contribute to current philosophical debates on the nature of experience in virtual reality.

Introduction

The ecological approach to the psychology of visual perception is primarily concerned with natural vision, or with the problem of how animals navigate their environment. However, natural vision is not the only kind of vision that exists. James J. Gibson devoted the final two chapters of his last book to problems around how we perceive depictions (Gibson, 1979). Chapter 15 of that book is devoted to the perception of static images, while chapter 16 is devoted to the perception of motion pictures. These two chapters are rich in theoretical insight, and they remain relevant texts for anyone interested in understanding the nature of pictures and picture perception (Blau, 2020; Stoffregen, 2020).

Since Gibson’s book was published, a number of innovations have occurred in the technology of depiction. Most significantly, our daily lives are now filled with forms of depiction that are interactive in nature. Had Gibson been around to update his final book today he might have felt compelled to write a seventeenth chapter on these interactive forms of depiction. While we claim no special insight into how Gibson would personally have addressed this topic, our aim in what follows is to explore some of the basic issues that arise within a perceptual psychology of interactive depictions. We will focus on two particular modes of interactive depiction: video games and immersive virtual reality.Footnote1 We start by summarizing Gibson’s discussion of the perception of non-interactive depictions, i.e. static and moving images. We then introduce some novel issues that arise with the perception of interactive depictions before moving on to discuss some specific questions around perception in virtual reality: Do users of virtual reality perceive pictures? Who generates the information in virtual reality? Are there affordances in virtual reality? These questions are of relevance to current philosophical discussions around the aesthetics of experience in virtual reality.

The information available in static and moving images

In the natural world, visual perception usually occurs from a moving point of observation. As an animal moves through its environment, the structure of solid angles of light in the optic array is transformed. These transformations are lawful, and are informative to the viewer about the layout of things in the environment. For instance, natural vision follows the law of reversible occlusion (Heft, 2020). You can walk around a thing to reveal its previously hidden side. You can locomote from one place to another to reveal a previously hidden vista, and you can retrace your steps to bring the original vista back into view. A major achievement of Gibson’s (1979) ecological approach to perception was to demonstrate that these phenomena of natural vision should be of central concern for psychologists.

Picture perception is different from natural, or ambulatory, perception. The content of a picture does not usually change as the viewer moves (there are partial exceptions, such as lenticular images). Looking at pictures is a special kind of looking, one in which the viewer sees both the actual surface (the canvas, the projection screen) and a depicted environment as it were ‘behind’ this surface. In other words, looking at a picture involves a kind of dual perception. According to Gibson, looking at a picture ‘requires two kinds of apprehension, a direct perceiving of the picture surface along with an indirect awareness of what it depicts’ (Gibson, 1979, p. 291). The viewer can see the surface on which the image is depicted, and the viewer can also see an intangible set of surfaces in the depicted environment. Usually, the viewer can readily shift between these two modes of attention.

An interesting question for the perceptual psychologist is this: How can it be that we are able to see a depicted environment of multiple apparent surfaces, even though we are really only looking at one surface (i.e. the canvas, the screen, the photographic print)? Gibson tried to address this question repeatedly throughout his career (Cutting, 2000). Part of Gibson’s (1979) answer was to propose that there are two types of information present at the same time. First, there is information about the surface as such. In natural viewing circumstances, the observer can always move their eyes or their body so as to notice the edges of the depiction surface. It is only under highly artificial laboratory conditions that we are ever placed in a situation where we do not have access to stimulus information specifying the edges of the depiction surface.

The more difficult question, however, is about how to characterize the other kind of information—the information about the apparent layout of the depicted environment.

Let us consider motion pictures first. Watching a motion picture is different from looking at a static picture. And both are different from natural vision of the kind that we engage in when we look at things in our surroundings. There are, however, some ways in which watching a motion picture is similar to natural vision. A motion picture preserves some of the dynamic structure that we generate when we move our eyes, head, or body. The video camera can often usefully be thought of as a surrogate for the body. A panning shot produces a video sequence that simulates what happens when you turn your head or your eyes. A zoom shot produces a sequence that simulates what you see when you approach an object (although motion parallax relative to the peripheral surroundings is not preserved). A dolly shot simulates what you see as you locomote through an environment. A dashboard mounted camera produces a video sequence that simulates what you see while driving a car.

The video camera, in capturing a sequence of images, is able to capture some of the same kinds of structure that we generate in our optic array as we move around in the natural environment. A panning shot resembles a turn of the head, for instance, because it preserves the characteristic pattern of disocclusion at the leading edge of the field of view, i.e. in the direction in which the head is turning, and of occlusion at the trailing edge.

More mysterious is why we are able to see the depicted environment within a static image. If natural vision is based on movement, as Gibson insisted, then it would perhaps seem odd that, in a static image, we are able to see anything at all.

The crucial question is: How is it that the creator of the static picture is able to present information for the existence of the depicted environment, even though the depicted environment is not really there? Gibson entertained several possible solutions to this problem over the decades that he thought about it (see the essays in part 3 of Reed & Jones, 1982). The explanation that he offers in his final book is perhaps less clearly worded than he would have liked. Gibson proposes that ‘a picture is a surface so treated that it makes available an optic array of arrested structures with underlying invariants of structure’ (Gibson, 1979, p. 272). This proposal is predicated on the idea that static visual perception is really a special case of natural vision, i.e. it is a special case of seeing from a moving point of observation. We learn to pick up invariants by moving around, and it is only after we have gained some experience in this activity that we are able to pick up invariants from a static point of observation. A picture, in turn, is a special case of static perception. A picture presents a persisting array of information that stays the same even as the observer moves closer to the picture surface, or even as the observer sways from side to side.

The optic array that a picture presents to a viewer is not ambient. The viewer cannot move so as to generate new information about the environment that is depicted in the picture. The picture contains only the information that was put there when the picture was created. This applies to both static pictures and moving pictures: the information in them has been put there by the picture creator. Static and moving pictures are non-interactive depictions. Interactive depictions, including virtual reality environments, work on a different set of principles.

Four modes of depiction

Since the invention of the motion picture in the nineteenth century there have been a number of further innovations in the technology of depiction. We will not here attempt to give an exhaustive survey of such technologies of depiction, which would have to include such things as video conferencing apps, computer-aided design software, drone technologies, simulators for aircraft control and car driving, and technologies such as social robots (Clark & Fischer, 2023). We focus on four distinct modes of depiction: static images; moving images; 3D graphical environments as in video games; and virtual reality environments as viewed through a virtual reality headset.Footnote2 We compare these four modes of depiction along four dimensions of analysis. The comparison is summarized in Table 1.

Table 1. Comparison of four modes of depiction.

Location of the depicted environment

The first issue concerns the apparent location of the depicted environment. When looking at a painting or watching a movie, the viewer sees an apparent environment, as it were, ‘behind’ the canvas, or ‘behind’ the projection screen. The same applies in the case of standard video games, of the kind played on a computer monitor or on a television screen. The depicted environment of the game again appears to be behind the screen. Virtual reality offers a very different experience. The viewer of a virtual reality environment experiences a depicted environment that extends all around their body. The viewer can turn their entire body around and they will still be looking at the depicted environment (although, granted, the designer of the environment may not have bothered to place anything there that is interesting to look at). Virtual reality is said to offer an ‘immersive’ experience (Calleja, 2014). The experience is immersive precisely because the viewer appears to be ‘inside’ the space of the depicted environment (Grabarczyk & Pokropski, 2016). (We explore this point in more detail in the next section.)

Apparent point of observation

A static image usually depicts a scene from a single, fixed point of observation. A camera, for example, typically captures a still image of a scene as viewed from the position of the camera lens. The apparent point of observation is a property of the image as such. It is this fixed point of observation that accounts for the familiar sense in which the eyes in a portrait will ‘follow you around the room.’ The same applies to any given frame within a motion picture. If an actor ‘breaks the fourth wall’ and looks directly at the camera, the viewer will have the experience that the actor is looking directly at them, regardless of where the viewer is sitting within the movie theater. Video games, likewise, typically offer only a single apparent point of observation at any given time. In a virtual reality headset, the viewer has much more complete control over the apparent point of observation. In fact, what the viewer sees at any moment is determined by the position and orientation of the observer’s head. A significant challenge for designers of the basic hardware and software of virtual reality headsets is to continuously track this information in order to present a depicted environment that does not cause motion sickness in the user (Chattha et al., 2020; Munafo et al., 2017).
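The dependence of what is seen on head pose can be made concrete with a minimal sketch. The Python fragment below is an illustration under simplifying assumptions, not a description of how any actual headset works: it considers only a two-dimensional ground plane, a single yaw angle, and a fixed horizontal field of view. The function name `visible_objects`, its parameters, and the example scene are all hypothetical.

```python
import math


def visible_objects(head_pos, yaw_deg, objects, fov_deg=90.0):
    """Return the names of objects inside the horizontal field of view.

    head_pos: (x, z) position of the head on the ground plane.
    yaw_deg:  heading in degrees; 0 looks along +z, positive turns toward +x.
    objects:  mapping of name -> (x, z) position in the simulated scene.
    fov_deg:  assumed horizontal field of view (hypothetical value).
    """
    seen = []
    for name, (ox, oz) in objects.items():
        dx, dz = ox - head_pos[0], oz - head_pos[1]
        # Direction from the head to the object, in the same convention as yaw.
        bearing = math.degrees(math.atan2(dx, dz))
        # Smallest signed angle between the gaze direction and that bearing.
        offset = (bearing - yaw_deg + 180.0) % 360.0 - 180.0
        if abs(offset) <= fov_deg / 2.0:
            seen.append(name)
    return seen


# A hypothetical scene surrounding the observer.
scene = {"tree": (0.0, 5.0), "door": (5.0, 0.0), "lamp": (0.0, -5.0)}
```

Calling `visible_objects((0.0, 0.0), 0.0, scene)` and then repeating the call with a yaw of 90 or 180 degrees selects a different part of the same scene each time. The scene itself is unchanged; only the sample that the observer obtains from it changes with the movement of the head.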

Possibility to interact with ‘objects’ in the depicted environment

Static images and motion pictures are limited in terms of the interactivity that they offer. You can draw a mustache on a reproduction of the Mona Lisa, but if you want to change her facial expression you will have to paint her face again from scratch. (Or at least this was the case until recently. Now we have mobile phone apps that will do the job. Who is to say whether this is progress or not.) In a video game, the user must be able to interact with objects in the depicted environment in some way. Interactivity is what distinguishes a video game from a mere animated video sequence (Myers, 2005; Nguyen, 2020). Virtual reality environments also often allow the user to interact with some of the objects in the depicted environment, although not always. Some virtual reality environments are created purely to be looked at. Even here, though, the experience is still interactive in the sense that the observer is typically able to move their head to visually explore the depicted environment. This may be the case, for example, when virtual reality technology is used as part of the creative process in industrial design settings, or in digital art and media.

Multiple observers

We have already seen above that static images and motion pictures display a scene as viewed from a single apparent point of observation, which may be a moving point of observation. This means that when several observers gather to look at the same image at the same time, as in an art gallery or a movie theater, we all see the same thing, in an important sense. Classic offline video games work in a similar way: one or several players can interact with the game via input devices, and there can be an arbitrary number of passive viewers watching the same screen. Networked video games, in contrast, offer a single depicted environment that can be accessed simultaneously by an arbitrarily large number of users, each of whom sees the environment ‘behind’ their own screen. And virtual reality environments, similarly, can allow an arbitrarily large number of simultaneous viewers. The difference is that, in virtual reality, the viewer is ‘inside’ the depiction space, in the sense discussed in the first point above. The possibility of sharing a single depicted space with other people in virtual reality raises an intriguing question over the extent to which it is possible, through the use of technology, to collapse, or negate, physical distance between people. Perhaps in the future we will visit one another in virtual reality instead of traveling over large physical distances to do the same. Perhaps. Existing technology offers this possibility only to a limited extent. There are several important technological obstacles that have not yet been fully solved, including the so-called duplex problem, i.e. the problem of how to track someone’s facial movements in real time while they are wearing a VR headset which is itself partly occluding their face (Lanier, 2017).Footnote3

In the remaining sections we look in more depth at some specific issues around perception in virtual reality.

Do users of virtual reality headsets perceive pictures?

We are assuming that virtual reality can usefully be understood as a technology of depiction. So far, we have identified some dimensions of difference and continuity with other modes of depiction. One important difference is that while other modes of depiction present a depicted environment that appears to be behind a surface of depiction, this is not the case for the wearer of a virtual reality headset. In virtual reality, the depicted environment appears to extend all around the observer’s body. This claim deserves to be explored in more depth.

One response here would be to assert that there is in fact no contradiction in saying that the virtual reality environment both surrounds the observer and is situated ‘behind’ a screen. The virtual reality headset is strapped to the viewer’s head, and the headset thus moves in such a way that the stimulus display is always situated directly in front of the eyes. This is true. In another sense, though, it feels incorrect to say that the depicted environment appears to be behind a screen, and in fact we can ask whether the viewer inside a virtual reality headset has the experience of perceiving pictures at all.

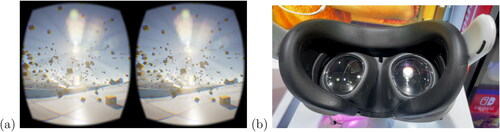

This question might seem odd. Virtual reality headsets of the kind that are commonly available to consumers today do, after all, literally contain picture screens (Anthes et al., 2016). The screens are embedded within the hardware of the headset. A pair of lenses positioned in between the screen and the eyeball (see Figure 1b) allow the two-dimensional images (Figure 1a) to appear to be a three-dimensional environment. It may seem, therefore, that wearing a virtual reality headset is necessarily a kind of image perception. This need not be the case, however. Developers have explored alternative methods for delivering optical information to the eyeball, without using an image screen. For instance, some systems, so-called virtual retinal displays, project light directly onto the retina (Lin et al., 2017; Peillard et al., 2020). In the case of such systems, it would be incoherent to say that the wearer of the headset is engaging in image perception. The only thing here that could plausibly be identified as an ‘image’ is the one that is projected on the viewer’s retina. There arises the familiar problem that this ‘image’ on the retina is simply not visible to the observer, just as the ‘image’ on a contact lens is not visible to the wearer.Footnote4

Figure 1. Commercial virtual reality systems. (a) A screen capture from the Oculus Rift system. An image is presented separately to each eyeball. The viewer sees a single, apparently three-dimensional environment, but only (b) when the images are viewed through the appropriate lenses (image shows the inside of a Meta Quest 3 headset). Image source: Wikimedia commons, CC-BY-SA.

Our suggestion is that a virtual reality headset might better be understood not as an image-based display, but as a pair of electronic spectacles that allow the wearer to sample a simulated ambient optic array. We think our suggestion aligns better with the phenomenology of being in virtual reality.Footnote5 Seeing an object in virtual reality does not feel like looking at a picture of an object, or even like seeing an object in a movie. While we are inside virtual reality, we do not experience the presence of a picture surface. If our suggestion is correct, then virtual reality does not involve picture perception. The images in Figure 1a are merely an implementation detail of one particular kind of virtual reality hardware. In other words, a given piece of virtual reality technology may happen to use picture screens, but what the virtual reality device really does is make available an array of optical information that the user’s visual system can explore.

Ecological psychologists could profit from paying more attention to the hardware of virtual reality devices. To build a virtual reality system, it is necessary to build an ambient optic array. Indeed, virtual reality development can be thought of as a kind of cognitive modeling, one in which what is being modeled is not the internal dynamics of the nervous system but the external dynamics of information arrays. Virtual reality development is, we could say, a form of ecological cognitive modeling.

If a virtual reality system can be thought of as implementing a model of an ambient optic array, then it will be useful to understand how this is achieved. An important initial question to address is the following: where does the information in virtual reality come from?

Who creates the information in a 3D virtual environment?

A key difference between motion pictures and 3D realtime depictions is that the latter allow for exploratory action on the part of the user. The user can decide what to look at, and can cause a change in what information is displayed. Many modern video games allow the user to control a character moving through an apparently three-dimensional environment. In such games, the user will sometimes wish to visually scan the parts of the environment that are currently not displayed on the screen, for instance when attempting to adjust the character’s direction of locomotion. A standard solution to this problem used in modern video game consoles is to provide the user with two joysticks: one for controlling the movement of the avatar and one for adjusting the position of the ‘camera.’ Skilled gamers are able to perform both of these control tasks fluently.

The fact that the viewer is in partial control of the display means that realtime 3D environments avoid some problems inherent in other modes of depiction. For instance, artists often have to worry about establishing perceptual continuity across a whole work. Artists cannot take for granted people’s ability to track objects across a series of images. Comic book readers can have trouble identifying characters when they see them for the first time from a new angle or in new clothing. Similar challenges arise in film-making, for instance where the narrative cuts back and forth between different locations or different points in time. Film makers can make use of various devices to help viewers keep track of the narrative. An establishing shot gives a brief view of the outside of a building to remind the viewer of where the action is currently taking place. A flashback scene can be filmed in black and white to contrast it with the ‘present day,’ which is filmed in color. The existence of these devices demonstrates that film makers, like comic book artists, are forced to think about how to convey the appropriate invariant structure to allow the viewer to keep track of the narrative.

By handing over some control to the user, developers of 3D realtime environments avoid some of the responsibility for keeping track of these invariants. Users of these 3D environments can observe the environment’s objects from varied perspectives or distances, and there is no risk of breaking the illusion of the unity of the depicted objects. If anything, the sense of unity is made more compelling because the user is free to spend as much time as they wish on inspecting and exploring the objects and layout of the depicted environment.

In the case of video games, the user may have to deliberately control the camera. In the case of virtual reality, the user does not have to think about controlling a camera because the information that is presented at any given moment is determined by the position of the user’s head. Some virtual reality experiences eschew a first-person perspective for a third-person perspective or a diorama view. Even in these cases, the user perceives the scene from the apparent position of the headset, as if they are looking down on a cardboard model.

Developers of virtual reality experiences do, however, still have to worry about perceptual continuity. For instance, how do you allow the user to move from one place in virtual reality to another place that is some distance away? You can’t allow them to simply walk, as the user will likely collide with obstacles in the physical environment. One solution is to allow the user to ‘teleport,’ which means, in effect, that the 3D virtual environment that the viewer is inside simply gets switched out for a different 3D virtual environment. The way that teleportation is implemented in virtual reality is currently quite ad hoc and unstandardized (Prithul et al., 2021). Plausibly, Gibsonian perceptual theory can contribute to a principled way of designing the user experience. Software developers might, for example, aim to present the observer with a teleportation animation that maintains a lawful transformation of invariants as the user is transported from point A to point B.

These brief considerations demonstrate that interactive depictions differ from traditional forms of depiction (i.e. static or moving images) in interesting ways. In traditional forms of depiction, it is typically the artist who chooses what information is present in the work and how it is presented. The viewer merely looks at and appreciates the finished work. In contrast, interactive depictions, including video games and virtual reality environments, must be thought of as a joint creation. The information is created by the hardware designers, the software engineers, and also by the end user who engages in exploratory movement.

If this is correct, then the end user can be said to be a co-creator of the experience that they have inside virtual reality. It is tempting to say that virtual reality closes the gap between perceiving a depiction and perceiving the natural environment (Chalmers, 2022). To what extent is this true? This brings us to the problem of affordance perception in virtual reality.

Are there affordances in virtual reality?

It is not straightforward to determine the extent to which virtual reality supports the perception of affordances. There is an empirical literature on affordance perception in virtual reality (e.g. Bhargava et al., 2020; Geuss et al., 2015; Grechkin et al., 2014). Existing studies in this tradition typically attempt to adapt some well-studied affordance phenomenon, e.g. the perception of aperture passability (Warren & Whang, 1987), and to implement this in virtual reality. These studies thus present an observer with simulated information about an affordance. The observer is tasked with engaging in some behavior with respect to this simulated information.

The assumption, in these studies, is that virtual reality supports the presentation of information about affordances. This is a useful methodological assumption within the empirical study of affordances. But our concern here is slightly different. We are not concerned with whether simulated information about affordances can be presented in virtual reality (we assume it can be). We are concerned with whether or not the virtual environment actually has its own affordances. It could be the case, after all, that information about affordances can be presented without there being any affordances there, in the same way that a painting can present information about a battle scene for a battle that never took place. Some theorists have argued that virtual reality only supports a kind of make-believe action in which the observer acts ‘as if’ the affordances are there (Rolla et al., 2022). We believe that virtual reality does sometimes afford actions in the strict sense. There are, however, at least three important obstacles to claiming that virtual reality supports affordance perception in general.

Ambiguous embodiment

The first obstacle concerns the ambiguous status of the viewer’s body in virtual reality. An affordance is usually understood in terms of a correspondence between, on the one hand, some structure in the environment and, on the other hand, some structure of the observer’s body.Footnote6 To perceive an affordance, the observer must therefore have access to both exterospecific information about the environment and propriospecific information about their own body.

Propriospecific information can, of course, be non-visual information. The observer who interacts with the virtual reality medium remains an embodied agent who continues to engage in perceptual and motor processes, including head rotation, arm movement, walking, and object manipulation. In a well-functioning virtual reality system, the user should have the experience of inhabiting the body that they see via the headset (Rolla et al., 2022). There should be no mismatch between what they see and what they feel in their body.

Allowing the user’s body to be present in the optical information presented via a virtual reality headset is a significant engineering challenge (Kilteni et al., 2012). In order to present the user’s own body to them in an accurate way, the virtual reality device must be able to constantly measure the user’s posture. The position and posture of the observer’s head can be tracked quite accurately via gyroscopes embedded in the headset. Current and recent commercial devices, such as the Oculus Rift, generally use handheld peripheral devices to keep track of the hands, although not the individual fingers (Anthes et al., 2016).Footnote7 The rest of the body, in modern commercial virtual reality devices, is usually not explicitly tracked at all. This means that the user’s own body is not reliably observable via visual information presented via the headset. This imposes a constraint on the extent to which the virtual reality system can offer affordances to the user. Actions can be performed only to the extent that the user’s bodily movements exist in the virtual reality environment, thanks to accurate tracking of posture. If the posture of the body is not reliably relayed to the user’s eyes, then confusing perceptual paradoxes are likely to arise.Footnote8

Stereotyped action

The second obstacle to affordance perception in virtual reality concerns the nature of interaction with objects. Virtual reality systems can provide feedback and responses that align with the user’s actions, allowing the user to interact with objects in the depicted environment. An example is the virtual reality game Beat Saber, in which the user wields a Star Wars style lightsaber and is tasked with slicing at a sequence of animated blocks to a rhythm set by a musical backing track. The user’s bodily movements are open-ended, and not stereotyped. The user has a great degree of freedom to move as the machine tracks the real-time posture of their head and hands. The interaction with the blocks, however, is highly stereotyped. If the user successfully makes contact between the saber and the block, then the block breaks in half exactly down the middle. The blocks are programmed to behave in this stereotyped way: either the user makes contact, in which case the block breaks in half, or they fail to make contact, in which case the block stays intact. This illustrates a limitation of interacting with objects in virtual reality. Objects in virtual reality can typically only be interacted with to the extent that the programmer has explicitly anticipated and coded for that interaction. Virtual reality objects are made of code, not material substances.
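The stereotyped character of such an interaction can be illustrated with a minimal sketch. The Python fragment below is a toy model written for this purpose and makes no claim about how Beat Saber or any other game is actually implemented; the names `Block`, `saber_touches`, and `update` are hypothetical. The tracked position of the saber tip may vary freely, yet the block can only ever be in one of the two states that the programmer has coded.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """A hypothetical game object: a cube with only two possible states."""
    center: tuple[float, float, float]
    half_size: float = 0.25
    state: str = "intact"  # the only other coded state is "halved"


def saber_touches(block: Block, tip: tuple[float, float, float]) -> bool:
    """Return True if the tracked saber tip lies inside the block's bounds."""
    return all(abs(t - c) <= block.half_size for t, c in zip(tip, block.center))


def update(block: Block, tip: tuple[float, float, float]) -> str:
    """Apply the single coded interaction: contact always yields a clean halving."""
    if block.state == "intact" and saber_touches(block, tip):
        block.state = "halved"
    return block.state
```

However the user swings, `update` returns either `"intact"` or `"halved"`. There is no code path on which the block is chipped, dented, or pushed aside, because no such outcome has been written.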

At the same time, it is not the case that all affordances of a virtual reality game can be designed a priori. Some affordances can come forth in the game. This is the case because the user is an active co-creator of their experience, in the sense discussed in the previous section. The user is free to explore the virtual environment and to select their own actions, within the limits imposed by the game design. Some games, such as the block-based construction game Minecraft, allow the user, from inside the game, to combine elements of the game world in open-ended and novel, albeit still stereotyped, ways.

Substanceless objects

A third obstacle, perhaps the most significant, concerns the lack of any substance in the depicted environment. Virtual reality environments have virtual surfaces, and there is a virtual medium (in modern systems, the software simulates how light bounces around between surfaces). But the surfaces of virtual reality objects are not the outside faces of virtual substances. They are mere surfaces with nothing behind them, reflecting virtual light but not absorbing energy. The objects themselves are entirely hollow, which sometimes creates eerie experiences wherein the user walks through another object or even through another person’s avatar, catching a glimpse of the hollow inside. This lack of substance seems to account for why some theorists are skeptical about whether virtual reality offers an environment at all (Tavinor, 2022). The lack of material substance also means that ‘our biological needs, such as nutrition, rest, and thermoregulation, cannot be met in VR—only in real life’ (Rolla et al., 2022, p. 95). This is true, and it follows from the fact that objects in virtual reality are made of code, not material substances.

Despite these obstacles, we are inclined to say that virtual reality does afford things to the user, in limited ways. Indeed, virtual reality affords both exploratory and performatory types of action (Gibson, 1966). Virtual reality affords exploratory action because it provides a new set of possibilities for perceiving virtual objects. The observer has the freedom to seek new information in the simulated ambient optic array. Consider how one can look ‘behind’ a virtual ‘wall’ in a virtual reality game. Just as in the non-virtual world, one can move one’s body and tilt one’s head to bring into view the occluded space. The information for what is behind the wall does not arise in the same way as information specifying the surface of a physical wall. In virtual reality, there is no substance that reflects the light. The information ‘about’ the wall surface is instead simulated by the virtual reality system. Nevertheless, a successfully implemented virtual reality system captures the experience of seeing the back of a wall, given the appropriate bodily movement, without there being a wall in the traditional material-bound sense.
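The wall example can be illustrated with a minimal geometric sketch. It is a deliberately simplified two-dimensional model, not a description of how any actual virtual reality system renders a scene, and all names in it (`is_visible`, `segments_cross`, the coordinates) are hypothetical. The virtual wall is a line segment, and a target point counts as visible when the straight sight line from the tracked head position to the target does not cross the wall.

```python
Point = tuple[float, float]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Signed area test: positive if c lies to the left of the line a->b."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segment p1-p2 properly crosses segment q1-q2."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def is_visible(head: Point, target: Point, wall: tuple[Point, Point]) -> bool:
    """The target is visible when the sight line does not cross the wall."""
    return not segments_cross(head, target, wall[0], wall[1])


# A simulated wall, and a simulated object placed behind it.
wall = ((-1.0, 1.0), (1.0, 1.0))
target = (0.0, 2.0)

# Standing directly in front of the wall: the object is occluded.
seen_from_front = is_visible((0.0, 0.0), target, wall)

# After stepping sideways past the wall's edge: the object comes into view.
seen_from_side = is_visible((3.0, 0.0), target, wall)
```

Nothing in this model reflects light. Whether the object is seen depends entirely on a computation over the tracked head position, which is one sense in which the observer's own movement helps to generate the information they detect.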

Virtual reality affords performatory action in some ways that we have already mentioned: slashing at virtual blocks in Beat Saber, stacking them in Minecraft. These are explicitly game-like experiences. Perhaps more significant, however, is the potential that virtual reality offers for new forms of social interaction at a distance. Jaron Lanier, a technology pioneer who is credited with coining the term virtual reality, wrote in his 2017 memoir Dawn of the New Everything about how, in the 1970s, long before the technology was actually developed, he imagined virtual reality as primarily a technology for social interaction: ‘I was immediately obsessed with the potential for multiple people to share such a place, and to achieve a new type of consensus reality, and it seemed to me that a ‘social version’ of the virtual world would have to be called virtual reality. This in turn required that people would have bodies in VR so that they could see each other, and so on, but all that would have to wait for computers to get better’ (Lanier, 2017, p. 45). Given that the computers have now improved, we suggest that the time has come to engage seriously with the possibilities for perceiving and acting in virtual worlds.

Conclusion

Virtual reality has long proved useful as a tool in the empirical study of information detection. Virtual reality offers a uniquely powerful method for making information available to an observer while allowing the observer to freely explore that information. In the above discussion, however, we have examined virtual reality, along with the perception of video games, not as an empirical tool but as a topic of research in its own right, i.e. as a realm of aesthetic experience.Footnote9 We began by comparing virtual reality to picture perception. This is a common comparison, but it deserves to be problematized. Virtual reality has some similarities with picture perception, but it differs importantly in that it is interactive and immersive. Our discussion indicates that the range of possibilities for perception and action supported by interactive media is rich, and is becoming increasingly so as technology evolves. The perception of interactive depictions deserves to be theorized and studied in greater depth.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 We must note here that we have some misgivings about describing virtual reality as a form of ‘depiction’. The term is related to the word ‘picture’, but we intend it in its broader sense of an artificially mediated portrayal. We deny that the use of the word ‘depiction’ necessarily implies a commitment to an ontology of virtual reality as a picturing medium. Indeed, we explain below that we do not think that perception in virtual reality involves picture perception at all. Other terms are, unfortunately, equally imperfect. We could say that virtual reality offers a ‘representation’ of an environment, but this invites confusion with mental representations. Possibly it would be better to talk about ‘interactive media’, but ‘medium’ already has a technical sense in Gibson’s (1979) work, so we will mostly avoid using that term here as well.

2 We restrict our discussion to full virtual reality, in which the observer’s field of view is entirely filled by light generated by the headset. We do not consider mixed or augmented reality devices, but see Raja and Calvo (2017).

3 As we were working on this paper Apple released its Vision Pro device. This device attempts to solve the duplex problem, in its FaceTime app, by generating a 3D model of the user’s head, which can be presented in the form of a floating video screen to another user who is wearing a second headset. The device uses sensors to track the user’s facial movements in real time but relies on pre-captured photographic data to render the user’s skin, hair, clothes, and make-up. In effect, the user appears in the other person’s headset as a kind of talking photograph.

4 Another reason why virtual reality is not necessarily a kind of picture perception is that, given the appropriate hardware, designers of virtual reality environments can choose to present the user with non-visual sources of information. Blind and partially sighted users of virtual reality can in principle become immersed in a virtual environment that is presented exclusively via auditory and haptic feedback, with no access to optical information at all (Kreimeier & Götzelmann, 2020). We thank Farid Zahnoun for pointing this out.

5 We do, however, agree with O’Shiel (2020) that virtual reality environments are phenomenologically distinguishable from the physical environment, at least given the limitations of existing technology.

6 The exact metaphysical status of affordances is disputed. We have formulated the wording here so as to remain neutral on this question, which is not relevant for our purposes. For discussion, see Baggs and Chemero (2021); Bruineberg et al. (2023).

7 Alternative methods for tracking the hands are possible. Early work in the development of virtual reality in the 1980s in fact focused on the development of high-fidelity haptic interfaces, including gloves (Lanier, 2017). At the time of writing, the next generation of virtual reality devices, including Apple’s Vision Pro, appears set to eschew handheld peripherals altogether, instead tracking the user’s hands using video cameras embedded in the underside of the visor.

8 When Meta CEO Mark Zuckerberg launched his flagship Horizon Worlds platform in 2021, the software was widely mocked for the fact that users’ avatars appeared as floating torsos with heads and arms but no legs. In the absence of reliable information for tracking users’ leg posture, however, this may be a sensible design choice. In terms of usability, no information may be better than information that is inaccurate.

9 We have touched upon a number of issues where an ecological analysis of the information available in virtual reality can perhaps inform future theoretical work in aesthetics. These include: the capacity for virtual reality to offer an ‘immersive’ experience, the difference in the experience of an object in virtual reality from either a picture or a moving image, the idea that the viewer can be seen as a co-creator of their experiences in virtual reality, and the possibility of ‘eerie’ experiences with substance-lacking surfaces. We thank an anonymous reviewer for highlighting these points.

References

- Anthes, C., García-Hernández, R. J., Wiedemann, M., & Kranzlmüller, D. (2016). State of the art of virtual reality technology [Paper presentation]. 2016 IEEE Aerospace Conference (pp. 1–19). Big Sky, MT, USA. https://doi.org/10.1109/AERO.2016.7500674

- Baggs, E., & Chemero, A. (2021). Radical embodiment in two directions. Synthese, 198(S9), 2175–2190. https://doi.org/10.1007/s11229-018-02020-9

- Bhargava, A., Lucaites, K. M., Hartman, L. S., Solini, H., Bertrand, J. W., Robb, A. C., Pagano, C. C., & Babu, S. V. (2020). Revisiting affordance perception in contemporary virtual reality. Virtual Reality, 24(4), 713–724. https://doi.org/10.1007/s10055-020-00432-y

- Blau, J. J. C. (2020). Revisiting ecological film theory. In J. B. Wagman & J. J. C. Blau (Eds.), Perception as information detection: Reflections on Gibson’s ecological approach to visual perception (pp. 274–290). Taylor & Francis.

- Bruineberg, J., Withagen, R., & Van Dijk, L. (2023). Productive pluralism: The coming of age of ecological psychology. Psychological Review. https://doi.org/10.1037/rev0000438

- Calleja, G. (2014). Immersion in virtual worlds. In M. Grimshaw (Ed.), The Oxford handbook of virtuality (pp. 222–236). Oxford University Press.

- Chalmers, D. J. (2022). Reality+: Virtual worlds and the problems of philosophy. Penguin.

- Chattha, U. A., Janjua, U. I., Anwar, F., Madni, T. M., Cheema, M. F., & Janjua, S. I. (2020). Motion sickness in virtual reality: An empirical evaluation. IEEE Access, 8, 130486–130499. https://doi.org/10.1109/ACCESS.2020.3007076

- Clark, H. H., & Fischer, K. (2023). Social robots as depictions of social agents. Behavioral and Brain Sciences, 46, e21. https://doi.org/10.1017/S0140525X22002825

- Cutting, J. E. (2000). Images, imagination, and movement: Pictorial representations and their development in the work of James Gibson. Perception, 29(6), 635–648. https://doi.org/10.1068/p2976

- Geuss, M. N., Stefanucci, J. K., Creem-Regehr, S. H., Thompson, W. B., & Mohler, B. J. (2015). Effect of display technology on perceived scale of space. Human Factors, 57(7), 1235–1247. https://doi.org/10.1177/0018720815590300

- Gibson, J. J. (1966). The senses considered as perceptual systems. Houghton-Mifflin.

- Gibson, J. J. (1979). The ecological approach to visual perception. Houghton-Mifflin.

- Grabarczyk, P., & Pokropski, M. (2016). Perception of affordances and experience of presence in virtual reality. AVANT. Trends in Interdisciplinary Studies, 7(2), 25–44.

- Grechkin, T. Y., Plumert, J. M., & Kearney, J. K. (2014). Dynamic affordances in embodied interactive systems: The role of display and mode of locomotion. IEEE Transactions on Visualization and Computer Graphics, 20(4), 596–605. https://doi.org/10.1109/TVCG.2014.18

- Heft, H. (2020). Revisiting ‘The discovery of the occluding edge and its implications for perception’ 40 years on. In J. B. Wagman & J. J. C. Blau (Eds.), Perception as information detection: Reflections on Gibson’s ecological approach to visual perception (pp. 188–204). Routledge.

- Kilteni, K., Groten, R., & Slater, M. (2012). The sense of embodiment in virtual reality. Presence: Teleoperators and Virtual Environments, 21(4), 373–387. https://doi.org/10.1162/PRES_a_00124

- Kreimeier, J., & Götzelmann, T. (2020). Two decades of touchable and walkable virtual reality for blind and visually impaired people: A high-level taxonomy. Multimodal Technologies and Interaction, 4(4), 79. https://doi.org/10.3390/mti4040079

- Lanier, J. (2017). Dawn of the new everything: Encounters with reality and virtual reality. Henry Holt and Company.

- Lin, J., Cheng, D., Yao, C., & Wang, Y. (2017). Retinal projection head-mounted display. Frontiers of Optoelectronics, 10(1), 1–8. https://doi.org/10.1007/s12200-016-0662-8

- Munafo, J., Diedrick, M., & Stoffregen, T. A. (2017). The virtual reality head-mounted display Oculus Rift induces motion sickness and is sexist in its effects. Experimental Brain Research, 235(3), 889–901. https://doi.org/10.1007/s00221-016-4846-7

- Myers, D. (2005). The aesthetics of the anti-aesthetics [Paper presentation]. Online Proceedings: The Aesthetics of Play Conference 2005, Bergen, Norway.

- Nguyen, C. T. (2020). Games: Agency as art. Oxford University Press.

- O’Shiel, D. (2020). Disappearing boundaries? Reality, virtuality and the possibility of ‘pure’ mixed reality (MR). Indo-Pacific Journal of Phenomenology, 20(1), e1887570. https://doi.org/10.1080/20797222.2021.1887570

- Peillard, E., Itoh, Y., Moreau, G., Normand, J.-M., Lécuyer, A., & Argelaguet, F. (2020). Can retinal projection displays improve spatial perception in augmented reality? In 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 80–89). IEEE.

- Prithul, A., Adhanom, I. B., & Folmer, E. (2021). Teleportation in virtual reality; a mini-review. Frontiers in Virtual Reality, 2, 730792. https://doi.org/10.3389/frvir.2021.730792

- Raja, V., & Calvo, P. (2017). Augmented reality: An ecological blend. Cognitive Systems Research, 42, 58–72. https://doi.org/10.1016/j.cogsys.2016.11.009

- Reed, E., & Jones, R. (Eds.). (1982). Reasons for realism: Selected essays of James J. Gibson. Lawrence Erlbaum Associates.

- Rolla, G., Vasconcelos, G., & Figueiredo, N. M. (2022). Virtual reality, embodiment, and allusion: An ecological-enactive approach. Philosophy & Technology, 35(4), 95. https://doi.org/10.1007/s13347-022-00589-1

- Stoffregen, T. A. (2020). The use and uses of depiction. In J. B. Wagman & J. J. C. Blau (Eds.), Perception as information detection: Reflections on Gibson’s ecological approach to visual perception (pp. 255–273). Taylor & Francis.

- Tavinor, G. (2022). The aesthetics of virtual reality. Routledge.

- Warren, W. H., & Whang, S. (1987). Visual guidance of walking through apertures: Body-scaled information for affordances. Journal of Experimental Psychology. Human Perception and Performance, 13(3), 371–383.