ABSTRACT

Educating healthcare professionals to work in interprofessional teams is key preparation for roles in modern healthcare. Interprofessional teams require members who are competent in their roles. Self-assessment instruments measuring interprofessional competence (IPC) are widely used in educational preparation, but their ability to measure competence accurately and reliably is unknown. We conducted a systematic review to identify variations in the characteristics and use of self-report instruments measuring IPC. Following a systematic search of electronic databases and application of eligibility criteria, 38 articles describing 8 IPC self-report instruments were included. Coverage of the IPC core competencies articulated by the Interprofessional Education Collaborative varied widely. The instruments also varied in their strength of evidence, psychometric performance and uses. Rather than measuring competence as "behaviours", they measured indirect proxies for competence, such as attitudes towards core interprofessional competencies. Educators and researchers should identify the most appropriate and highest-performing IPC instruments for the context in which they will be used.

Systematic review registration: Open Science Framework (https://archive.org/details/osf-registrations-vrfjn-v1).

Introduction

Delivering modern high-quality healthcare needs workers with expertise, competence and the ability to work collaboratively as part of an interprofessional team. Preparing professionals for interprofessional collaborative work requires reliable and feasible assessment methods.

Background

Assessment and outcomes

Interprofessional education (IPE) does not uniformly improve interprofessional competence (IPC), which refers to specific skills for specific tasks (Marion-Martins & Pinho, Citation2020; O’Keefe et al., Citation2017; Spaulding et al., Citation2021). Because “in-role” competence is a prerequisite for successful IPE, understanding why IPE varies in its effectiveness requires reliable and accurate measures of IPC.

Assessment is used variously, from grading students to contributing to course development and research, but IPC assessment tools often lack conceptual clarity (J. E. Thistlethwaite et al., Citation2014). Outcomes often reflect professional domains (e.g. medicine and nursing) and proximal outcomes, such as attitudes measured before or after a training session, rather than distal outcomes, such as demonstrable competencies at the end of a programme (Guitar & Connelly, Citation2021; O’Keefe et al., Citation2017; Wooding et al., Citation2020). IPE outcomes can be thought of hierarchically: at the base, interprofessional reactions, upwards through attitudes, knowledge, skills and behavioural change and, finally, patient benefits (Hammick et al., Citation2007). Using theory alongside outcomes can help provide clarity by specifying the professional practices included in IPC and describing them and their relationships to each other (Reeves et al., Citation2011; J. E. Thistlethwaite et al., Citation2014).

To move beyond profession-specific education and clarify IPC, a consensus framework was developed by the Interprofessional Education Collaborative (IPEC, Citation2016). The IPC framework has four core competencies: values and ethics, roles and responsibilities, interprofessional communication and teams and teamwork.

Self-assessment as a response to the challenge of observing IPC

Ideally, competence should be assessed by observing professionals working in interprofessional teams, capturing their “real-world” performance in the context of complex interactions with other professionals and patients. Observing IPC systematically in authentic settings is, however, challenging (J. Thistlethwaite et al., Citation2016). Whilst tools such as observation rubrics for assessment can encourage fidelity and inter- and intra-observer reliability, they are no panacea (Curran et al., Citation2011). IPC changes over time, so assessment techniques must be able to efficiently capture this dynamic development (Anderson et al., Citation2016; Rogers et al., Citation2017).

Self-report instruments are a popular, pragmatic means of assessing IPC (Blue et al., Citation2015; Phillips et al., Citation2017). They are convenient to distribute, straightforward to analyse and report, and allow findings to be compared between settings and over time. However, the validity of any comparisons and conclusions depends on the quality of the instrument. An instrument's quality can be judged, in part, from its psychometric properties, such as various forms of internal validity and reliability (Havyer et al., Citation2016; Oates & Davidson, Citation2015; Thannhauser et al., Citation2010), and from the empirical and theoretical adequacy of its development or validation processes (for example, the degree of non-responder bias in instrument development).

In this review, we focus on self-report instruments because they are frequently used to measure the outcomes of IPE. By increasing awareness of the benefits and limitations of specific instruments, an evaluation of existing instruments can enable an informed choice. The current literature lacks an overview of which parts of the spectrum of IPC conceptualisations are addressed by validated instruments. Moreover, judging the quality of an instrument depends on the context in which it is applied. For example, an IPC instrument used for formal academic assessment may be judged "high-quality" by criteria such as good reliability and strong internal validity, whereas if the same instrument is used as a prompt for informal educational development or a training intervention, criteria such as ease of administration, speed and ease of completion and the strength of the underpinning behavioural or educational theory will carry more weight. The contexts in which instruments are used are therefore bound up with the assessment of instrument quality.

There is a need to identify variations in the characteristics of self-report instruments and the ways they are used. To achieve the aim of exploring these variations, our review’s primary objectives are to:

Describe the components of IPC assessed in self-report instruments,

Assess the quality of IPC self-report instruments,

Describe the theoretical foundation for IPC instruments and

Describe the educational contexts.

To achieve these objectives, we performed a systematic review intended to help educators inform their assessment practice and research using self-report instruments.

Method

We conducted a systematic review based on a published protocol (Allvin et al., Citation2020).

Eligibility criteria

Studies were eligible if they (a) used a quantitative or mixed-methods design, (b) assessed undergraduate students from two or more health professions, (c) related to educational interventions assessing one or more aspects of IPC, (d) used a self-report instrument regarding IPC and (e) evaluated the instruments' psychometric properties (e.g. validity and reliability).

Studies were excluded if they (a) did not report results from students and from practitioners/faculty in the same sample separately, (b) used a self-report instrument unrelated to IPC or in which the IPC focus was secondary to other aspects (i.e. most of the instrument's items did not relate to IPC, e.g. items on simulation as a learning modality or on teamwork not specifically related to interprofessionality), (c) limited the evaluation of psychometric properties to internal reliability only (e.g. Cronbach's alpha), (d) reported only course satisfaction or (e) were not empirical research.

Information sources

We searched the PubMed (NLM), CINAHL (EBSCO), ERIC (EBSCO), Scopus (Elsevier) and Web of Science (Clarivate) databases following pilot searches in PubMed and Scopus. Covidence™ software was used to facilitate screening papers for eligibility, to record the quality assessments and to extract the data.

Search strategy

The search strategy was developed by the research team and an expert librarian. Details of the search strategies used in each database are included in the Supplementary File. The search was restricted to articles published in English between January 2010 and May 2023. The reference lists of included studies were also screened for additional studies. The searches were performed in April 2019, with updates in February 2023 (all databases except Scopus) and May 2023 (Scopus).

Table 1. Self-report instrument characteristics.

Study selection

Titles and abstracts were independently screened for eligibility by RA and SE. Potentially relevant articles were examined in full-text form by RA, and the screened articles were independently reviewed for eligibility by RA and SE. Inclusion decisions were made in consensus between RA and SE. CT was available as an arbiter in case of disagreement – there was none.

Data extraction and synthesis

A data extraction form based on the Medical Education Research Study Quality Instrument (MERSQI) (Reed et al., Citation2007) and the Best Evidence in Medical Education (BEME) guidelines (Gordon et al., Citation2019) was used. Two researchers (RA and SE) first extracted the data independently and then verified them collaboratively, with CT acting as arbiter in case of disagreement or conflict of interest (one case). Item quality was assessed using a coding rubric developed by Artino et al. (Citation2018).

We extracted the following data: (1) general information (title, author(s), country, year); (2) instrument details (name, origin, number of items, item response, response scale); (3) study characteristics (study design, number of participants, participants' profession); (4) intervention details (IPCs assessed, education context, time from intervention to assessment, conceptual framework); and (5) author statements about the strengths and/or weaknesses of the instrument.

The extracted data were analysed and compiled into tables. The instrument items were analysed in relation to the four IPC core competencies of IPEC (Citation2016) by RA and SE, followed by discussions in the local IPE community during an academic seminar attended by IPE educators and researchers, after which the analysis was further refined. No ethical approval was required, and no sensitive data were handled; therefore, no ethical vetting was performed.

Results

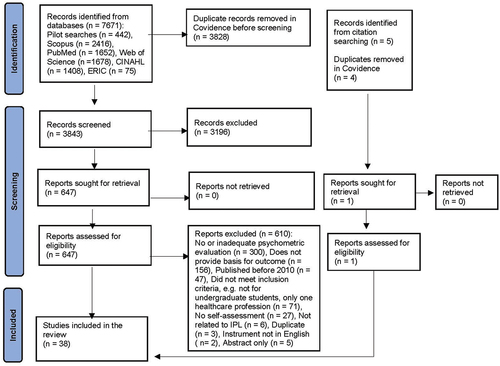

Of the 7671 articles identified, 38 met the inclusion criteria and were included in the analysis.

Characteristics of the included studies

The study characteristics are presented in the Supplementary File. Thirty-one studies used a post-intervention-only design and seven used pre–post designs. Most studies included medical students (n = 32), followed by nursing (n = 31), pharmacy (n = 18) and physiotherapy (n = 15) students. Medicine and nursing were the two professions that most frequently shared IPE (28 studies, 74%). These two professions, in turn, most frequently shared IPE with pharmacy (14 studies, 37%), physiotherapy (11 studies, 29%) and dentistry (9 studies, 24%) students. Thirty-one studies included between 2 and 7 professions, and 4 studies involved more than 10 professions. The largest number of studies was conducted in the United States (n = 9). IPE was most often delivered as activities within courses or programmes whose content and structure were not described (n = 11); other settings were simulation (n = 8), classrooms (n = 6) and clinical practice (n = 2). Eight studies were unconnected to a specific activity, and eight studies provided no information regarding the educational context. The Readiness for Interprofessional Learning Scale (RIPLS) was the most commonly used instrument (23 studies). The timing of IPC assessment relative to the learning activity varied: alongside the intervention, without specifying whether before or after (Cloutier et al., Citation2015; Ganotice & Chan, Citation2018; Tyastuti et al., Citation2014; Zaher et al., Citation2022); before the intervention (Hasnain et al., Citation2017; Kerry et al., Citation2018; Kottorp et al., Citation2019; Lie et al., Citation2013); directly after the intervention (Al-Shaikh et al., Citation2018; Edelbring et al., Citation2018; Milutinović et al., Citation2018; Shimizu et al., Citation2022; Willman et al., Citation2020); and both before and after the intervention (Peeters et al., Citation2016; Yu et al., Citation2018).
Two studies reported administering an online survey directly after completion of the activity, without specifying the response period (Lunde et al., Citation2021; Schmitz et al., Citation2017). Twenty-one studies did not report the time from intervention to assessment.

Table 2. IPC self-assessment instrument item numbers related to IPEC core competencies.

Included instruments

The 38 included studies used a total of 8 self-report instruments (Table 1). Most studies (n = 34) adopted or refined a previously developed assessment tool. Four studies described instruments developed specifically for the study in question (Fike et al., Citation2013; Hasnain et al., Citation2017; Hojat et al., Citation2015; Mann et al., Citation2012). The number of items in the instruments ranged from 9 to 38. Most instruments used Likert-type rating scales with agreement response options.

No instrument asked respondents to explicitly assess their IPC competencies. Instead, attitudes towards or ways of relating to IPC were rated: self-efficacy (Axelsson et al., Citation2022; Hasnain et al., Citation2017; Kottorp et al., Citation2019; Mann et al., Citation2012), readiness for learning IPC (Al-Shaikh et al., Citation2018; Cloutier et al., Citation2015; Edelbring et al., Citation2018; Ergönul et al., Citation2018; Ganotice & Chan, Citation2018; Kerry et al., Citation2018; Keshtkaran et al., Citation2014; King et al., Citation2012; Li et al., Citation2018; Lie et al., Citation2013; Luderer et al., Citation2017; Mahler et al., Citation2016; Milutinović et al., Citation2018; Onan et al., Citation2017; Shimizu et al., Citation2022; Sollami et al., Citation2018; Spada et al., Citation2022; Torsvik et al., Citation2021; Tyastuti et al., Citation2014; Villagrán et al., Citation2022; Williams et al., Citation2012; Yu et al., Citation2018; Zaher et al., Citation2022), attitudes towards IPC (Edelbring et al., Citation2018; Hojat et al., Citation2015; Lockeman et al., Citation2016), perceptions of interprofessional education (Fike et al., Citation2013; Ganotice et al., Citation2022; Peeters et al., Citation2016; Piogé et al., Citation2022; Pudritz et al., Citation2020; Zorek et al., Citation2016), and behaviour (Lockeman et al., Citation2016; Lunde et al., Citation2021; Schmitz et al., Citation2017; Violato & King, Citation2019).

Validity and reliability evidence

Structural validity and internal reliability were the most frequently reported psychometric properties. Analyses of content validity, structural validity and internal reliability are presented in the Supplementary File, Tables S3 and S4.

Content validity was assessed in 15 of the studies for 7 instruments: the RIPLS (Cloutier et al., Citation2015; Ergönul et al., Citation2018; Keshtkaran et al., Citation2014; Li et al., Citation2018; Luderer et al., Citation2017; Yu et al., Citation2018), the IPECC-SET (Hasnain et al., Citation2017; Kottorp et al., Citation2019), the JeffSATIC (Hojat et al., Citation2015), the IPEC competency tool (Lockeman et al., Citation2016), the SEIEL scale (Mann et al., Citation2012), the ICCAS (Lunde et al., Citation2021; Schmitz et al., Citation2017) and SPICE (Fike et al., Citation2013; Zorek et al., Citation2016).

Structural validity was most often examined with an exploratory approach, in which the scale structure is derived solely from the sample data (n = 22) (Al-Shaikh et al., Citation2018; Axelsson et al., Citation2022; Cloutier et al., Citation2015; Hojat et al., Citation2015; Kottorp et al., Citation2019; Li et al., Citation2018; Lockeman et al., Citation2016; Luderer et al., Citation2017; Lunde et al., Citation2021; Mann et al., Citation2012; Milutinović et al., Citation2018; Schmitz et al., Citation2017; Shimizu et al., Citation2022; Sollami et al., Citation2018; Spada et al., Citation2022; Torsvik et al., Citation2021; Tyastuti et al., Citation2014; Villagrán et al., Citation2022; Violato & King, Citation2019; Williams et al., Citation2012; Yu et al., Citation2018; Zaher et al., Citation2022). A confirmatory approach, i.e. testing a model specified a priori, was used in 14 studies (Edelbring et al., Citation2018; Ergönul et al., Citation2018; Fike et al., Citation2013; Ganotice & Chan, Citation2018; Ganotice et al., Citation2022; Hasnain et al., Citation2017; Kerry et al., Citation2018; Keshtkaran et al., Citation2014; King et al., Citation2012; Lie et al., Citation2013; Mahler et al., Citation2016; Onan et al., Citation2017; Piogé et al., Citation2022; Pudritz et al., Citation2020). Of these, most used factor analysis (n = 12), and three used an Item Response Theory (IRT) approach (Edelbring et al., Citation2018; Hasnain et al., Citation2017; Kerry et al., Citation2018).

Eight studies (Cloutier et al., Citation2015; Ergönul et al., Citation2018; Keshtkaran et al., Citation2014; Li et al., Citation2018; Lunde et al., Citation2021; Onan et al., Citation2017; Sollami et al., Citation2018; Tyastuti et al., Citation2014) assessed cross-cultural validity for two instruments (the RIPLS and the ICCAS), using independent translation, forward–backward translation, validation of the translation or an expert committee. The most commonly reported measure of reliability was Cronbach's alpha coefficient. Overall, internal reliability for total instrument scores ranged from α = 0.60 to 0.98. Twenty articles using the RIPLS (Al-Shaikh et al., Citation2018; Cloutier et al., Citation2015; Edelbring et al., Citation2018; Ergönul et al., Citation2018; Ganotice & Chan, Citation2018; Kerry et al., Citation2018; Keshtkaran et al., Citation2014; King et al., Citation2012; Li et al., Citation2018; Luderer et al., Citation2017; Mahler et al., Citation2016; Milutinović et al., Citation2018; Onan et al., Citation2017; Shimizu et al., Citation2022; Spada et al., Citation2022; Torsvik et al., Citation2021; Tyastuti et al., Citation2014; Villagrán et al., Citation2022; Williams et al., Citation2012; Yu et al., Citation2018) reported Cronbach's alpha for each subscale (or factor), with values ranging from α = 0.09 to 0.93. The RIPLS subscale with the lowest internal reliability was roles and responsibilities, and the highest value was found for factor 2 in Shimizu et al. (Citation2022).
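Because Cronbach's alpha is the reliability statistic reported most often in the included studies, a minimal Python sketch of how it is computed may help when interpreting the ranges above. It implements the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores) for a respondents × items matrix. The function name and the five-respondent, four-item response matrix are hypothetical illustrations, not data from any included study.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Population variances are used throughout; using sample variances
    consistently instead gives the same ratio and hence the same alpha.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across respondents
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's summed score
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 Likert responses: 5 respondents (rows) x 4 items (columns)
EXAMPLE_RESPONSES = [
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 2, 3, 2],
    [4, 4, 4, 5],
]

if __name__ == "__main__":
    print(f"alpha = {cronbach_alpha(EXAMPLE_RESPONSES):.2f}")
```

With the illustrative matrix, alpha is about 0.94. Applying the same function to the items of a single subscale gives subscale alphas of the kind reported for the RIPLS.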

Interprofessional core competencies in the instruments

Because the IPEC competency tool and the IPECC-SET 38 (with its shorter versions, the IPECC-SET 27 and IPECC-SET 9) were all based on the IPEC framework's description of IPC competencies, these self-assessment instruments matched at least some IPEC core competencies (see Table 2). However, some IPEC competencies were un- or under-represented in some instruments. The IPECC-SET 9 (Axelsson et al., Citation2022; Kottorp et al., Citation2019) and the JeffSATIC (Hojat et al., Citation2015) did not measure the values and ethics competency. The IPEC competency tool (Lockeman et al., Citation2016), the JeffSATIC (Hojat et al., Citation2015), SPICE (Fike et al., Citation2013), SPICE-R (Peeters et al., Citation2016), SPICE-2 (Zorek et al., Citation2016) and SPICE-R3 (Axelsson et al., Citation2022) did not measure interprofessional communication, and the three 16-item versions of the RIPLS (Cloutier et al., Citation2015; Tyastuti et al., Citation2014; Yu et al., Citation2018) and the IPEC competency tool (Lockeman et al., Citation2016) did not measure the roles and responsibilities competency. Many instruments (except the IPECC-SET and the IPEC competency tool) contained one or more additional items not related to any IPEC core competency (see Table 2).

Table 3. Summary of measurement characteristics for each self-assessment instrument.

Conceptual frameworks in IPC self-report instruments

The underlying theories or explicit conceptual frameworks of the self-assessment instruments were under-reported. The stated rationales reflected a pragmatic need for IPC in healthcare and, thus, a need for IPC in professional education. Shimizu et al. (Citation2022) and Sollami et al. (Citation2018) drew on social identity theory to portray IPE, and attitudes to IPE, as a function of the relationship between groups of students. Mann et al. (Citation2012) used social cognitive theory and self-efficacy constructs to evaluate student learning through interaction with their environments and the people and activities within them. Hasnain et al. (Citation2017) and Kottorp et al. (Citation2019) also suggested self-efficacy as a relevant approach to developing IPE. Zaher et al. (Citation2022) framed their study using relational coordination theory and situated learning theory.

Discussion

Our review identified eight self-assessment instruments that measure IPC in healthcare undergraduates; these vary in two distinct and important ways. First, psychometrically: they range from reliable to unreliable and from strong to questionable internal validity. Second, this evidence comes from a narrow range of interprofessional learning evaluation contexts, mainly involving medical and nursing students. None of the included studies had a strong explicit theoretical base. Although all the IPEC core competencies were represented to varying extents, some received less attention than others, notably interprofessional communication. The study authors did not explain why.

IPC developmental educational interventions are an example of complex interventions (Skivington et al., Citation2021). This complexity makes IPC and its assessment intellectually challenging, because the relationships between concepts, including their direction and causality, need to be specified. Assessing groups of students simultaneously is also practically challenging: it needs to be feasible and efficient. Because they are easy to administer and their results are easy to present, self-report measures will likely remain in use. Methodologically, though, a further challenge remains: reported intention in learners does not necessarily translate into observable behaviours; what people say they will do or feel may not equate to what they actually do or feel in a situation or interprofessional context (McConnell et al., Citation2012; Rogers et al., Citation2017). Our review suggests that IPC instrument developers and evaluators overlook this important implementation consideration, arguably overestimating the instruments' utility in non-classroom (virtual, simulated or actual) contexts.

An educator or researcher seeking an instrument for IPC assessment needs the highest-quality, most trustworthy instrument for the intended use and context. This requires assessing the instrument's content, the quality of evidence (psychometric properties) and the quality of application (user feasibility). We have therefore summarised the measurement characteristics of each self-assessment instrument to support educators and researchers in choosing an appropriate instrument (see Table 3). Any choice between instruments will involve trade-offs among these three aspects of quality.

If the educator is principally concerned with the psychometric properties of the instrument (e.g. structural validity and reliability), the IPECC-SET or SPICE are among the optimal instruments. However, the IPC challenge for the educator is to use the instrument in context, and that context may differ from the one in which the scale was developed; compare, for example, team roles in surgery for a successful error-free operation with team roles in a successful transfer of a patient from an ambulance into an emergency department. The implementation potential is therefore, in part, a function of how generic the assessed competences are and of how many repeated and reproduced evaluations have applied the instrument. The contextual dependence of scale development may help explain why a self-report instrument can demonstrate strong psychometric quality in one study but perform less well in another. Ideally, a judgement of instrument quality will come from multiple validations in varying contexts. More systematic replication of instruments across varying IPC challenges is therefore needed, a problem also faced by practice developers using other quality improvement methods (Ivers et al., Citation2014).

IPC instruments rarely demonstrate best practice in instrument development (Oates & Davidson, Citation2015). Poorly worded items are common: 95% of the survey instruments used in health professional education have been found to contain badly designed and laid-out items (Artino et al., Citation2018). Most self-assessment instruments in our review contained items formulated as statements and rated on Likert-type agreement scales (Al-Shaikh et al., Citation2018; Cloutier et al., Citation2015; Edelbring et al., Citation2018; Ergönul et al., Citation2018; Fike et al., Citation2013; Ganotice & Chan, Citation2018; Ganotice et al., Citation2022; Hojat et al., Citation2015; Kerry et al., Citation2018; Keshtkaran et al., Citation2014; King et al., Citation2012; Li et al., Citation2018; Lie et al., Citation2013; Lockeman et al., Citation2021; Luderer et al., Citation2017; Mahler et al., Citation2016; Milutinović et al., Citation2018; Onan et al., Citation2017; Peeters et al., Citation2016; Piogé et al., Citation2022; Pudritz et al., Citation2020; Shimizu et al., Citation2022; Spada et al., Citation2022; Torsvik et al., Citation2021; Tyastuti et al., Citation2014; Villagrán et al., Citation2022; Violato & King, Citation2019; Williams et al., Citation2012; Yu et al., Citation2018; Zaher et al., Citation2022; Zorek et al., Citation2016), a format that can lead to acquiescence bias, i.e. the tendency to endorse any assertion regardless of its content (Krosnick, Citation1999). Better practice is to formulate items as questions that emphasise the underlying construct (Artino et al., Citation2011), for example, "How confident are you that you can do well in this course?" instead of "I am confident I can do well in this course".
The negatively worded items used in versions of the RIPLS (Ganotice & Chan, Citation2018; King et al., Citation2012; Li et al., Citation2018; Torsvik et al., Citation2021; Tyastuti et al., Citation2014; Yu et al., Citation2018) can be difficult to comprehend and answer accurately (Artino et al., Citation2014). Furthermore, negatively worded items need to be reverse scored in a sum-score analysis. The RIPLS versions were mainly adapted from the original instrument by Parsell and Bligh (Parsell & Bligh, Citation1999). Although some authors have recommended revising the scale (Tyastuti et al., Citation2014) or generating new items (Milutinović et al., Citation2018), none has discussed the need to update the item wording or response options, even though the instrument was developed before 1999. Using or adapting an existing instrument can be advantageous, for example, by enabling cross-study comparisons, which may explain the popularity of the RIPLS. However, the differences in the structural validity of the RIPLS between contexts (Yu et al., Citation2018) offer little support for this strategy. A better strategy is to establish validity by focusing on item quality in relation to intended use. Furthermore, calls have been made to move from assessing attitudes towards learning IPC to assessing the competencies themselves (Torsvik et al., Citation2021).
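Reverse scoring maps each response on a negatively worded item to its mirror image on the response scale (on a 1–5 scale, 1 becomes 5, 2 becomes 4 and so on) before item scores are summed. The sketch below assumes a 1–5 Likert-type scale; the function names and the choice of which item is negatively worded are hypothetical and do not correspond to any specific RIPLS version.

```python
def reverse_score(score, scale_min=1, scale_max=5):
    """Mirror a response on a [scale_min, scale_max] scale (e.g. 1 <-> 5, 2 <-> 4)."""
    if not scale_min <= score <= scale_max:
        raise ValueError(f"score {score} is outside {scale_min}-{scale_max}")
    return scale_min + scale_max - score


def sum_score(responses, negative_items, scale_min=1, scale_max=5):
    """Total score for one respondent, reversing items whose index is in negative_items."""
    return sum(
        reverse_score(score, scale_min, scale_max) if i in negative_items else score
        for i, score in enumerate(responses)
    )


if __name__ == "__main__":
    # Hypothetical respondent; item index 2 is assumed to be negatively worded,
    # so strong disagreement (1) with it counts as a favourable response (5).
    answers = [5, 4, 1, 5]
    print(sum_score(answers, negative_items={2}))
```

Without the reversal, this respondent's total would be 15 instead of 19; unreversed negative items also tend to correlate negatively with the other items, which lowers alpha.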

In this review, the psychometric evidence varied. Content validity was generally appropriate, evaluated using experts and students and/or based on the literature and previously developed instruments. However, the absence of some IPC aspects (e.g. values and ethics, interprofessional communication and roles and responsibilities) meant that conclusions about overall IPC are – or should be – limited.

Many studies performed factor analysis to assess structural validity. Confirmatory factor analysis (CFA) shows how well an existing scale structure fits the data in a given context, thereby contributing useful psychometric evidence and making it possible to generalise across contexts. We also found uses of exploratory factor analysis (EFA). While EFA adds to judgements of psychometric quality, conclusions drawn from it may deviate from the original, theory-driven scale structure. One study used EFA to restructure IPEC-based scales, leaving only two of the four core competencies (teams and teamwork, and values and ethics) recommended for future use; this illustrates questionable EFA practice (Lockeman et al., Citation2016).

High total-scale internal reliability (Cronbach's alpha) was generally reported. Cronbach's alpha, however, is affected by scale length, so high alpha values may reflect a large number of items rather than the internal consistency of the items or the unidimensionality of the scale (Streiner, Citation2003). The roles and responsibilities subscale (factor) in different versions of the RIPLS showed very low values, which could be due to a small number of items, weak interrelatedness between items or a heterogeneous construct. Cronbach's alpha has also been criticised as a measure of dimensionality and has frequently been reported without adequate understanding and interpretation (Kalkbrenner, Citation2023; Tavakol & Dennick, Citation2011). Kerry et al. (Citation2018) proposed the omega coefficient as a more accurate estimate of reliability, partly because it is less sensitive to scale length. However, omega is not widely available in statistical packages and performs best only under certain data conditions (Kalkbrenner, Citation2023). More empirical and theoretical work is therefore required to establish sound common practices for developing unidimensional measurement scales for IPC.
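The dependence of alpha on scale length can be illustrated with the standardised form of alpha, α = k·r̄ / (1 + (k − 1)·r̄), where k is the number of items and r̄ is the mean inter-item correlation (the Spearman–Brown relationship). The sketch below holds r̄ at a hypothetical, modest 0.3 and varies k over lengths that appear among the reviewed instruments (9, 16, 27 and 38 items). It assumes equal inter-item correlations, an idealisation that real scales rarely meet, and the r̄ value is not an estimate for any of those instruments.

```python
def standardized_alpha(k, mean_r):
    """Standardised Cronbach's alpha for k items with mean inter-item correlation mean_r.

    alpha_std = k * mean_r / (1 + (k - 1) * mean_r)
    This assumes all inter-item correlations equal mean_r (an idealisation).
    """
    if k < 2:
        raise ValueError("alpha needs at least two items")
    return k * mean_r / (1 + (k - 1) * mean_r)


if __name__ == "__main__":
    MEAN_R = 0.3  # hypothetical, modest inter-item correlation held constant
    for k in (9, 16, 27, 38):  # scale lengths mentioned in this review
        print(f"{k:>2} items: alpha = {standardized_alpha(k, MEAN_R):.2f}")
```

Under these assumptions, alpha rises from about 0.79 with 9 items to about 0.94 with 38 items although the items are no more strongly interrelated, so a high alpha on a long scale says little, by itself, about how well the items hang together.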

Half of the studies in our review were intended for and subsequently conducted among English-speaking students. The performance of IPC self-assessment instruments in non-English-speaking students and/or using translated instruments remains uncertain. A rigorous translation process should consider both the instrument's theoretical origin and the target context.

The quality of a self-assessment instrument can also be judged functionally (in context and in relation to its fitness for purpose), structurally (the quality of the underpinning empirical and theoretical evidence) and in relation to process (implementability) (Donabedian, Citation1988). As in other educational research (Niemen et al., Citation2022), our review revealed a narrow theoretical base, with only six studies drawing on explicit theory: social cognitive theory (Bandura, Citation2001), intergroup contact theory (Allport, Citation1954), social identity theory (Tajfel & Turner, Citation1979), relational coordination theory (Gittell, Citation2011) and situated learning theory (Lave & Wenger, Citation1991). These theories focus on learning and attitudes, whereas IPC relies on team members' performance (i.e. their behaviours). This gap between exposure to educational interventions, assessment and eventual competence is an important omission in the evidence base.

Assessment is related to the intentions of the educational strategies being used. Educational approaches to interprofessional learning vary greatly between universities and include problem-based learning, e-learning and simulation-based strategies (Aldriwesh et al., Citation2022). The sparse description of teaching and learning approaches in the included reports meant that we were unable to assess these contextual factors. A related aspect, however, is the time it takes for IPC to develop to a degree that is reflected in a self-report instrument. In some studies, IPC was assessed directly after the educational intervention, which should be considered when interpreting their results. Interprofessional learning is a dynamic process, and developing IPC takes time (Rogers et al., Citation2017). The effect of time since educational exposure and the advantages and disadvantages of more dynamic research designs (for example, interrupted time series designs) remain important uncertainties. Future research should focus on systematic replication and adaptation of IPC self-assessment instruments grounded in formal, explicit theory.

Limitations

Publications were limited to the English language; therefore, we may have missed non-English IPC instruments and evaluations. Reporting in the included studies was often sparse, which limited the completeness of data extraction. Time and resource constraints meant that we did not contact the authors of individual studies for further details. Some of the potentially relevant full-text articles were initially screened by a single researcher, with the attendant risk of erroneously excluding studies through screening fatigue. After this first screening, the articles were independently reviewed by two researchers, and inclusion decisions were made by team consensus.

There are minor differences between the protocol (Allvin et al., 2020) and the study as reported: a) the objectives were formulated more clearly; b) the Scopus database, which was omitted from the protocol, was added to the search; c) the exclusion criteria were elaborated; d) the protocol specified the overall outcome as the effectiveness of instruments used to assess interprofessional competence. To address this outcome in practice, we focused on the characteristics of the instruments and how each relates to the IPEC core competencies, as a means of judging their effectiveness in measuring IPC; e) the protocol named the MERSQI, BEME, NOS-E and COSMIN quality assessment forms as the basis for quality assessment. In the study, we instead merged MERSQI, BEME and a rubric for assessing item quality developed by Artino et al. (2018).

Conclusion

IPC core competency domains are reflected to varying extents in different self-assessment instruments. Educators and researchers need to identify the most appropriate instruments for use in different contexts by considering both the quality of the evidence and the quality of its application. Interprofessional competence is a function of the work or educational context, so an IPC assessment instrument should be selected as part of the conception and design of the IPE intervention being considered. This review raises awareness of how IPC is represented in self-report instruments and provides educators and researchers with a summary of the available IPC self-assessment instruments to guide instrument selection.

Acknowledgments

We thank the participants in the workshop on IPC competences in relation to instrument items, hosted by the Medical Education group at Örebro University. We would also like to thank librarians Linda Bejerstrand and Elias Olsson from Örebro University Library for sharing their expertise and support in searches and data management.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Renée Allvin

Renée Allvin, PhD, is associate professor at the School of Health Sciences, Örebro University, Sweden. She has extensive experience of, and a long-standing research interest in, medical simulation and clinical training.

Carl Thompson

Professor Carl Thompson is Chair in Applied Health Research and Dame Kathleen Raven Chair in Clinical Research at the University of Leeds, UK. He has a long-standing interest in researching and teaching the ways professionals use information in their clinical decision-making and judgement.

Samuel Edelbring

Samuel Edelbring, PhD, is associate professor in medical education and vice dean for educational development at the School of Health Sciences, Örebro University. His research interests concern IPE, clinical reasoning and workplace-based learning.

References

- Aldriwesh, M. G., Alyousif, S. M., & Alharbi, N. S. (2022). Undergraduate-level teaching and learning approaches for interprofessional education in the health professions: A systematic review. BMC Medical Education, 22(1), 13. https://doi.org/10.1186/s12909-021-03073-0

- Allport, G. W. (1954). The nature of prejudice. Perseus Books.

- Allvin, R., Thompson, C., & Edelbring, S. (2020). Assessment of interprofessional competence in undergraduate health professions education: Protocol for a systematic review of self-report instruments. Systematic Reviews, 9(1), 142. https://doi.org/10.1186/s13643-020-01394-7

- Al-Shaikh, G. K., Al-Madi, E. M., Masood, J., Shaikh, Q., Syed, S. B., Bader, R. S., & McKimm, J. (2018). Interprofessional learning experiences: Exploring the perception and attitudes of Saudi Arabian medical and dental students. Medical Teacher, 40(sup1), S43–S48. https://doi.org/10.1080/0142159X.2018.1465180

- Anderson, E., Smith, R., & Hammick, M. (2016). Evaluating an interprofessional education curriculum: A theory-informed approach. Medical Teacher, 38(4), 385–394. https://doi.org/10.3109/0142159X.2015.1047756

- Archibald, D., Trumpower, D., & MacDonald, C. J. (2014). Validation of the interprofessional collaborative competency attainment survey (ICCAS). Journal of Interprofessional Care, 28(6), 553–558. https://doi.org/10.3109/13561820.2014.917407

- Artino, A. R., Jr., Gehlbach, H., & Durning, S. J. (2011). AM last page: Avoiding five common pitfalls of survey design. Academic Medicine: Journal of the Association of American Medical Colleges, 86(10), 1327. https://doi.org/10.1097/ACM.0b013e31822f77cc

- Artino, A. R., Jr., La Rochelle, J. S., Dezee, K. J., & Gehlbach, H. (2014). Developing questionnaires for educational research: AMEE guide no. 87. Medical Teacher, 36(6), 463–474. https://doi.org/10.3109/0142159X.2014.889814

- Artino, A. R., Jr., Phillips, A. W., Utrankar, A., Ta, A. Q., & Durning, S. J. (2018). “The questions shape the answers”: Assessing the quality of published survey instruments in health professions education research. Academic Medicine: Journal of the Association of American Medical Colleges, 93(3), 456–463. https://doi.org/10.1097/ACM.0000000000002002

- Axelsson, M., Kottorp, A., Carlson, E., Gudmundsson, P., Kumlien, C., & Jakobsson, J. (2022). Translation and validation of the Swedish version of the IPECC-SET 9 item version. Journal of Interprofessional Care, 36(6), 900–907. https://doi.org/10.1080/13561820.2022.2034762

- Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52(1), 1–26. https://doi.org/10.1146/annurev.psych.52.1.1

- Blue, A. V., Chesluk, B. J., Conforti, L. N., & Holmboe, E. S. (2015). Assessment and evaluation in interprofessional education: Exploring the field. Journal of Allied Health, 44(2), 73–82.

- Cloutier, J., Lafrance, J., Michallet, B., Marcoux, L., & Cloutier, F. (2015). French translation and validation of the readiness for interprofessional learning scale (RIPLS) in a Canadian undergraduate healthcare student context. Journal of Interprofessional Care, 29(2), 150–155. https://doi.org/10.3109/13561820.2014.942837

- Curran, V., Hollett, A., Casimiro, L. M., McCarthy, P., Banfield, V., Hall, P., Lackie, K., Oandasan, I., Simmons, B., & Wagner, S. (2011). Development and validation of the interprofessional collaborator assessment rubric (ICAR). Journal of Interprofessional Care, 25(5), 339–344. https://doi.org/10.3109/13561820.2011.589542

- Donabedian, A. (1988). The quality of care. How can it be assessed? JAMA, 260(12), 1743–1748. https://doi.org/10.1001/jama.1988.03410120089033

- Dow, A. W., DiazGranados, D., Mazmanian, P. E., & Retchin, S. M. (2014). An exploratory study of an assessment tool derived from the competencies of the interprofessional education collaborative. Journal of Interprofessional Care, 28(4), 299–304. https://doi.org/10.3109/13561820.2014.891573

- Edelbring, S., Dahlgren, M. A., & Wiegleb Edström, D. (2018). Characteristics of two questionnaires used to assess interprofessional learning: Psychometrics and expert panel evaluations. BMC Medical Education, 18(1), 40. https://doi.org/10.1186/s12909-018-1153-y

- El-Zubeir, M., Rizk, D. E., & Al-Khalil, R. K. (2006). Are senior UAE medical and nursing students ready for interprofessional learning? Validating the RIPL scale in a middle Eastern context. Journal of Interprofessional Care, 20(6), 619–632. https://doi.org/10.1080/13561820600895952

- Ergönul, E., Baskurt, F., Yilaz, N. D., Baskurt, Z., Asci, H., Koc, S., & Temel, U. B. (2018). Reliability and validity of the readiness for interprofessional learning scale (RIPLS) in Turkish speaking healthcare students. Acta Mediterranea, 34(3), 797–803. https://doi.org/10.19193/0393-6384_2018_3_122

- Fike, D. S., Zorek, J. A., MacLaughlin, A. A., Samiuddin, M., Young, R. B., & MacLaughlin, E. J. (2013). Development and validation of the student perceptions of physician-pharmacist interprofessional clinical education (SPICE) instrument. American Journal of Pharmaceutical Education, 77(9), 190. https://doi.org/10.5688/ajpe779190

- Ganotice, F. A., & Chan, L. K. (2018). Construct validation of the English version of readiness for interprofessional learning scale (RIPLS): Are Chinese undergraduate students ready for ‘shared learning’? Journal of Interprofessional Care, 32(1), 69–74. https://doi.org/10.1080/13561820.2017.1359508

- Ganotice, F. A., Fan, K. K. H., Ng, Z. L. H., Tsoi, F. H. S., Wai, A. K. C., Worsley, A., Lin, X., & Tipoe, G. L. (2022). The short version of students’ perceptions of interprofessional clinical education-revised (SPICE-R3): A confirmatory factor analysis. Journal of Interprofessional Care, 36(1), 135–143. https://doi.org/10.1080/13561820.2021.1879751

- Gittell, J. H. (2011). Relational coordination: Guidelines for theory, measurement and analysis. Brandeis University.

- Gordon, M., Grafton-Clarke, C., Hill, E., Gurbutt, D., Patricio, M., & Daniel, M. (2019). Twelve tips for undertaking a focused systematic review in medical education. Medical Teacher, 41(11), 1232–1238. https://doi.org/10.1080/0142159X.2018.1513642

- Guitar, N. A., & Connelly, D. M. (2021). A systematic review of the outcome measures used to evaluate interprofessional learning by health care professional students during clinical experiences. Evaluation & the Health Professions, 44(3), 293–311. https://doi.org/10.1177/0163278720978814

- Hammick, M., Freeth, D., Koppel, I., Reeves, S., & Barr, H. (2007). A best evidence systematic review of interprofessional education: BEME guide no. 9. Medical Teacher, 29(8), 735–751. https://doi.org/10.1080/01421590701682576

- Hasnain, M., Gruss, V., Keehn, M., Peterson, E., Valenta, A. L., & Kottorp, A. (2017). Development and validation of a tool to assess self-efficacy for competence in interprofessional collaborative practice. Journal of Interprofessional Care, 31(2), 255–262. https://doi.org/10.1080/13561820.2016.1249789

- Havyer, R. D., Nelson, D. R., Wingo, M. T., Comfere, N. I., Halvorsen, A. J., McDonald, F. S., & Reed, D. A. (2016). Addressing the interprofessional collaboration competencies of the association of American medical colleges: A systematic review of assessment instruments in undergraduate medical education. Academic Medicine: Journal of the Association of American Medical Colleges, 91(6), 865–888. https://doi.org/10.1097/ACM.0000000000001053

- Hojat, M., Fields, S. K., Veloski, J. J., Griffiths, M., Cohen, M. J., & Plumb, J. D. (1999). Psychometric properties of an attitude scale measuring physician-nurse collaboration. Evaluation & the Health Professions, 22(2), 208–220. https://doi.org/10.1177/01632789922034275

- Hojat, M., Ward, J., Spandorfer, J., Arenson, C., Van Winkle, L. J., & Williams, B. (2015). The Jefferson scale of attitudes toward interprofessional collaboration (JeffSATIC): Development and multi-institution psychometric data. Journal of Interprofessional Care, 29(3), 238–244. https://doi.org/10.3109/13561820.2014.962129

- IPEC. (2016). Core competencies for interprofessional collaborative practice: 2016 update. Interprofessional Education Collaborative.

- Ivers, N. M., Sales, A., Colquhoun, H., Michie, S., Foy, R., Francis, J. J., & Grimshaw, J. M. (2014). No more ‘business as usual’ with audit and feedback interventions: Towards an agenda for a reinvigorated intervention. Implementation Science, 9(1), 14. https://doi.org/10.1186/1748-5908-9-14

- Kalkbrenner, M. T. (2023). Alpha, Omega, and H internal consistency reliability estimates: Reviewing these options and when to use them. Counseling Outcome Research and Evaluation, 14(1), 77–88. https://doi.org/10.1080/21501378.2021.1940118

- Kerry, M. J., Wang, R., & Bai, J. (2018). Assessment of the readiness for interprofessional learning scale (RIPLS): An item response theory analysis. Journal of Interprofessional Care, 32(5), 634–637. https://doi.org/10.1080/13561820.2018.1459515

- Keshtkaran, Z., Sharif, F., & Rambod, M. (2014). Students’ readiness for and perception of inter-professional learning: A cross-sectional study. Nurse Education Today, 34(6), 991–998. https://doi.org/10.1016/j.nedt.2013.12.008

- King, S., Greidanus, E., Major, R., Loverso, T., Knowles, A., Carbonaro, M., & Bahry, L. (2012). A cross-institutional examination of readiness for interprofessional learning. Journal of Interprofessional Care, 26(2), 108–114. https://doi.org/10.3109/13561820.2011.640758

- Kottorp, A., Keehn, M., Hasnain, M., Gruss, V., & Peterson, E. (2019). Instrument refinement for measuring self-efficacy for competence in interprofessional collaborative practice: Development and psychometric analysis of IPECC-SET 27 and IPECC-SET 9. Journal of Interprofessional Care, 33(1), 47–56. https://doi.org/10.1080/13561820.2018.1513916

- Krosnick, J. A. (1999). Survey research. Annual Review of Psychology, 50(1), 537–567. https://doi.org/10.1146/annurev.psych.50.1.537

- Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Cambridge University Press.

- Lie, D. A., Fung, C. C., Trial, J., & Lohenry, K. (2013). A comparison of two scales for assessing health professional students’ attitude toward interprofessional learning. Medical Education Online, 18(1), 21885. https://doi.org/10.3402/meo.v18i0.21885

- Li, Z., Sun, Y., & Zhang, Y. (2018). Adaptation and reliability of the readiness for inter professional learning scale (RIPLS) in the Chinese health care students setting. BMC Medical Education, 18(1), 309. https://doi.org/10.1186/s12909-018-1423-8

- Lockeman, K. S., Dow, A. W., DiazGranados, D., McNeilly, D. P., Nickol, D., Koehn, M. L., & Knab, M. S. (2016). Refinement of the IPEC competency self-assessment survey: Results from a multi-institutional study. Journal of Interprofessional Care, 30(6), 726–731. https://doi.org/10.1080/13561820.2016.1220928

- Lockeman, K. S., Dow, A. W., & Randell, A. L. (2021). Validity evidence and use of the IPEC competency self-assessment, version 3. Journal of Interprofessional Care, 35(1), 107–113. https://doi.org/10.1080/13561820.2019.1699037

- Luderer, C., Donat, M., Baum, U., Kirsten, A., Jahn, P., & Stoevesandt, D. (2017). Measuring attitudes towards interprofessional learning. Testing two German versions of the tool “readiness for interprofessional learning scale” on interprofessional students of health and nursing sciences and of human medicine. GMS Journal for Medical Education, 34(3), Doc33. https://doi.org/10.3205/zma001110

- Lunde, L., Bærheim, A., Johannessen, A., Aase, I., Almendingen, K., Andersen, I. A., Bengtsson, R., Brenna, S. J., Hauksdottir, N., Steinsbekk, A., & Rosvold, E. O. (2021). Evidence of validity for the Norwegian version of the interprofessional collaborative competency attainment survey (ICCAS). Journal of Interprofessional Care, 35(4), 604–611. https://doi.org/10.1080/13561820.2020.1791806

- MacDonald, C., Archibald, D., Trumpower, D., Casimiro, L., Cragg, B., & Jelly, W. (2010). Designing and operationalizing a tool kit of bilingual interprofessional education assessment instruments. Journal of Research in Interprofessional Practice and Education, 1(3). https://doi.org/10.22230/jripe.2010v1n3a36

- Mahler, C., Giesler, M., Stock, C., Krisam, J., Karstens, S., Szecsenyi, J., & Krug, K. (2016). Confirmatory factor analysis of the German readiness for interprofessional learning scale (RIPLS-D). Journal of Interprofessional Care, 30(3), 381–384. https://doi.org/10.3109/13561820.2016.1147023

- Mann, K., McFetridge-Durdle, J., Breau, L., Clovis, J., Martin-Misener, R., Matheson, T., Beanlands, H., & Sarria, M. (2012). Development of a scale to measure health professions students’ self-efficacy beliefs in interprofessional learning. Journal of Interprofessional Care, 26(2), 92–99. https://doi.org/10.3109/13561820.2011.640759

- Marion-Martins, A. D., & Pinho, D. L. M. (2020). Interprofessional simulation effects for healthcare students: A systematic review and meta-analysis. Nurse Education Today, 94, 104568. https://doi.org/10.1016/j.nedt.2020.104568

- McConnell, M. M., Regehr, G., Wood, T. J., & Eva, K. W. (2012). Self-monitoring and its relationship to medical knowledge. Advances in Health Sciences Education: Theory and Practice, 17(3), 311–323. https://doi.org/10.1007/s10459-011-9305-4

- McFadyen, A. K., Webster, V., Strachan, K., Figgins, E., Brown, H., & McKechnie, J. (2005). The readiness for interprofessional learning scale: A possible more stable sub-scale model for the original version of RIPLS. Journal of Interprofessional Care, 19(6), 595–603. https://doi.org/10.1080/13561820500430157

- Milutinović, D., Lovrić, R., & Simin, D. (2018). Interprofessional education and collaborative practice: Psychometric analysis of the readiness for interprofessional learning scale in undergraduate Serbian healthcare student context. Nurse Education Today, 65, 74–80. https://doi.org/10.1016/j.nedt.2018.03.002

- Niemen, J. H., Bearman, M., & Tai, J. (2022). How is theory used in assessment and feedback research? A critical review. Assessment & Evaluation in Higher Education, 48(1), 1–14. https://doi.org/10.1080/02602938.2022.2047154

- Oates, M., & Davidson, M. (2015). A critical appraisal of instruments to measure outcomes of interprofessional education. Medical Education, 49(4), 386–398. https://doi.org/10.1111/medu.12681

- O’Keefe, M., Henderson, A., & Chick, R. (2017). Defining a set of common interprofessional learning competencies for health profession students. Medical Teacher, 39(5), 463–468. https://doi.org/10.1080/0142159X.2017.1300246

- Onan, A., Turan, S., Elcin, M., Simsek, N., & Deniz, K. Z. (2017). A test adaptation of the modified readiness for inter-professional learning scale in Turkish. Indian Journal of Pharmaceutical Education and Research, 51(2), 207–215. https://doi.org/10.5530/ijper.51.2.26

- Parsell, G., & Bligh, J. (1999). The development of a questionnaire to assess the readiness of health care students for interprofessional learning (RIPLS). Medical Education, 33(2), 95–100. https://doi.org/10.1046/j.1365-2923.1999.00298.x

- Peeters, M. J., Chen, Y., & S, M. (2016). Validation of the SPICE-R instrument among a diverse interprofessional cohort: A cautionary tale. Currents in Pharmacy Teaching and Learning, 8(4), 517–523. https://doi.org/10.1016/j.cptl.2016.03.008

- Phillips, A. W., Friedman, B. T., Utrankar, A., Ta, A. Q., Reddy, S. T., & Durning, S. J. (2017). Surveys of health professions trainees: Prevalence, response rates, and predictive factors to guide researchers. Academic Medicine: Journal of the Association of American Medical Colleges, 92(2), 222–228. https://doi.org/10.1097/ACM.0000000000001334

- Piogé, A., Zorek, J., Eickhoff, J., Debien, B., Finkel, J., Trouillard, A., & Poucheret, P. (2022). Interprofessional collaborative clinical practice in medicine and pharmacy: Measure of student perceptions using the SPICE-R2F instrument to bridge health-care policy and education in France. Healthcare (Basel), 10(8), 1531. https://doi.org/10.3390/healthcare10081531

- Pudritz, Y. M., Fischer, M. R., Eickhoff, J. C., & Zorek, J. A. (2020). Validity and reliability of an adapted German version of the student perceptions of physician-pharmacist interprofessional clinical education instrument, version 2 (SPICE-2D). The International Journal of Pharmacy Practice, 28(2), 142–149. https://doi.org/10.1111/ijpp.12568

- Reed, D. A., Cook, D. A., Beckman, T. J., Levine, R. B., Kern, D. E., & Wright, S. M. (2007). Association between funding and quality of published medical education research. JAMA, 298(9), 1002–1009. https://doi.org/10.1001/jama.298.9.1002

- Reeves, S., Goldman, J., Gilbert, J., Tepper, J., Silver, I., Suter, E., & Zwarenstein, M. (2011). A scoping review to improve conceptual clarity of interprofessional interventions. Journal of Interprofessional Care, 25(3), 167–174. https://doi.org/10.3109/13561820.2010.529960

- Reid, R., Bruce, D., Allstaff, K., & McLernon, D. J. (2006). Validating the readiness for interprofessional learning scale (RIPLS) in postgraduate context. Medical Education, 40(5), 415–422. https://doi.org/10.1111/j.1365-2929.2006.02442.x

- Rogers, G. D., Thistlethwaite, J. E., Anderson, E. S., Abrandt Dahlgren, M., Grymonpre, R. E., Moran, M., & Samarasekera, D. D. (2017). International consensus statement on the assessment of interprofessional learning outcomes. Medical Teacher, 39(4), 347–359. https://doi.org/10.1080/0142159X.2017.1270441

- Schmitz, C. C., Radosevich, D. M., Jardine, P., MacDonald, C. J., Trumpower, D., & Archibald, D. (2017). The interprofessional collaborative competency attainment survey (ICCAS): A replication validation study. Journal of Interprofessional Care, 31(1), 28–34. https://doi.org/10.1080/13561820.2016.1233096

- Shimizu, I., Kimura, T., Duvivier, R., & van der Vleuten, C. (2022). Modeling the effect of social interdependence in interprofessional collaborative learning. Journal of Interprofessional Care, 36(6), 820–827. https://doi.org/10.1080/13561820.2021.2014428

- Skivington, K., Matthews, L., Simpson, S. A., Craig, P., Baird, J., Blazeby, J. M., Boyd, K. A., Craig, N., French, D. P., McIntosh, E., Petticrew, M., Rycroft-Malone, J., White, M., & Moore, L. (2021). A new framework for developing and evaluating complex interventions: Update of medical research council guidance. BMJ, 374, n2061. https://doi.org/10.1136/bmj.n2061

- Sollami, A., Caricati, L., & M, T. (2018). Attitudes towards interprofessional education among medical and nursing students: The role of professional identification and intergroup contact. Current Psychology, 37(4), 905–912. https://doi.org/10.1007/s12144-017-9575-y

- Spada, F., Caruso, R., De Maria, M., Karma, E., Oseku, A., Pata, X., Prendi, E., Rocco, G., Notarnicola, I., & Stievano, A. (2022). Italian translation and validation of the readiness for interprofessional learning scale (RIPLS) in an undergraduate healthcare student context. Healthcare (Basel), 10(9), 1698. https://doi.org/10.3390/healthcare10091698

- Spaulding, E. M., Marvel, F. A., Jacob, E., Rahman, A., Hansen, B. R., Hanyok, L. A., Martin, S. S., & Han, H. R. (2021). Interprofessional education and collaboration among healthcare students and professionals: A systematic review and call for action. Journal of Interprofessional Care, 35(4), 612–621. https://doi.org/10.1080/13561820.2019.169721

- Streiner, D. L. (2003). Starting at the beginning: An introduction to coefficient alpha and internal consistency. Journal of Personality Assessment, 80(1), 99–103. https://doi.org/10.1207/S15327752JPA8001_18

- Tajfel, H., & Turner, J. C. (1979). The social identity theory of intergroup behavior. In S. Worchel & W. Austin (Eds.), Psychology of intergroup relations (pp. 7–24). Nelson-Hall.

- Tavakol, M., & Dennick, R. (2011). Making sense of Cronbach’s alpha. International Journal of Medical Education, 2, 53–55. https://doi.org/10.5116/ijme.4dfb.8dfd

- Thannhauser, J., Russell-Mayhew, S., & Scott, C. (2010). Measures of interprofessional education and collaboration. Journal of Interprofessional Care, 24(4), 336–349. https://doi.org/10.3109/13561820903442903

- Thistlethwaite, J., Dallest, K., Moran, M., Dunston, R., Roberts, C., Eley, D., Bogossian, F., Forman, D., Bainbridge, L., Drynan, D., & Fyfe, S. (2016). Introducing the individual teamwork observation and feedback tool (iTOFT): Development and description of a new interprofessional teamwork measure. Journal of Interprofessional Care, 30(4), 526–528. https://doi.org/10.3109/13561820.2016.1169262

- Thistlethwaite, J. E., Forman, D., Matthews, L. R., Rogers, G. D., Steketee, C., & Yassine, T. (2014). Competencies and frameworks in interprofessional education: A comparative analysis. Academic Medicine: Journal of the Association of American Medical Colleges, 89(6), 869–875. https://doi.org/10.1097/ACM.0000000000000249

- Torsvik, M., Johnsen, H. C., Lillebo, B., Reinaas, L. O., & Vaag, J. R. (2021). Has “The ceiling” rendered the readiness for interprofessional learning scale (RIPLS) outdated? Journal of Multidisciplinary Healthcare, 14, 523–531. https://doi.org/10.2147/JMDH.S296418

- Tyastuti, D., Onishi, H., Ekayanti, F., & Kitamura, K. (2014). Psychometric item analysis and validation of the Indonesian version of the readiness for interprofessional learning scale (RIPLS). Journal of Interprofessional Care, 28(5), 426–432. https://doi.org/10.3109/13561820.2014.907778

- Villagrán, I., Jeldez, P., Calvo, F., Fuentes, J., Moya, J., Barañao, P., Irarrázabal, L., Rojas, N., Soto, P., Barja, S., & Fuentes-López, E. (2022). Spanish version of the readiness for interprofessional learning scale (RIPLS) in an undergraduate health sciences student context. Journal of Interprofessional Care, 36(2), 318–326. https://doi.org/10.1080/13561820.2021.1888902

- Violato, E. M., & King, S. (2019). A validity study of the interprofessional collaborative competency attainment survey: An interprofessional collaborative competency measure. The Journal of Nursing Education, 58(8), 454–462. https://doi.org/10.3928/01484834-20190719-04

- Williams, B., McCook, F., Brown, T., Palmero, C., McKenna, L., Boyle, M., Scholes, R., French, J., & McCall, L. (2012). Are undergraduate health care students ‘ready’ for interprofessional learning? A cross-sectional attitudinal study. Internet Journal of Allied Health Sciences and Practice, 10(3). https://doi.org/10.46743/1540-580X/2012.1405

- Willman, A., Bjuresäter, K., & Nilsson, J. (2020). Newly graduated registered nurses’ self-assessed clinical competence and their need for further training. Nursing Open, 7(3), 720–730. https://doi.org/10.1002/nop2.443

- Wooding, E. L., Gale, T. C., & Maynard, V. (2020). Evaluation of teamwork assessment tools for interprofessional simulation: A systematic literature review. Journal of Interprofessional Care, 34(2), 162–172. https://doi.org/10.1080/13561820.2019.1650730

- Yu, T. C., Jowsey, T., & Henning, M. (2018). Evaluation of a modified 16-item readiness for interprofessional learning scale (RIPLS): Exploratory and confirmatory factor analyses. Journal of Interprofessional Care, 32(5), 584–591. https://doi.org/10.1080/13561820.2018.1462153

- Zaher, S., Otaki, F., Zary, N., Al Marzouqi, A., & Radhakrishnan, R. (2022). Effect of introducing interprofessional education concepts on students of various healthcare disciplines: A pre-post study in the United Arab Emirates. BMC Medical Education, 22(1), 517. https://doi.org/10.1186/s12909-022-03571-9

- Zorek, J. A., Fike, D. S., Eickhoff, J. C., Engle, J. A., MacLaughlin, E. J., Dominguez, D. G., & Seibert, C. S. (2016). Refinement and validation of the student perceptions of physician-pharmacist interprofessional clinical education instrument. American Journal of Pharmaceutical Education, 80(3), 47. https://doi.org/10.5688/ajpe80347