Abstract

Objectives

To develop and evaluate Cochlear™ Remote Assist (RA), a smartphone-based cochlear implant (CI) teleaudiology solution. The development phase aimed to identify the minimum features needed to remotely address most issues typically experienced by CI recipients. The clinical evaluation phase assessed ease of use, call clarity, system latency, and CI recipient feedback.

Design

The development phase involved mixed methods research with experienced CI clinicians. The clinical evaluation phase involved a prospective single-site clinical study and real-world use across 16 clinics.

Study sample

CI clinicians (N = 23), CI recipients in a clinical study (N = 15 adults) and real-world data (N = 57 CI recipients).

Results

The minimum feature set required for remote programming in RA, combined with sending replacements by post, should enable the clinician to address 80% of the issues typically seen in CI follow-up sessions. Most recipients completed the RA primary tasks without prior training and gave positive ratings for usefulness, ease of use, effectiveness, reliability, and satisfaction on the Telehealth Usability Questionnaire. System latency was reported to be acceptable.

Conclusion

RA is designed to help clinicians address a significant proportion of issues typically encountered by CI recipients. The clinical study and real-world evaluation confirm RA’s ease of use, call quality, and responsiveness.

Introduction

Key elements of the clinical management of cochlear implant (CI) recipients include counselling, device fitting, performance evaluation and habilitation. After the first year post-implantation, CI recipients are advised to visit their clinic annually for maintenance visits (American Academy of Audiology, 2019; British Cochlear Implant Group, 2020). However, adherence to clinic-scheduled follow-up appointments can be as low as 35% by the second year post-implantation (Shapiro et al., 2021). Low attendance is likely because clinic visits require CI recipients to bear additional costs, time, inconvenience and often time off work (Sach et al., 2005). Difficulty travelling to the clinic has also been cited as a potential reason CI recipients are lost to follow-up (Rooth et al., 2017). At some clinics in the USA, the median distance CI recipients travel to their clinic is 83.7 km (Nassiri et al., 2022). Although empirical evidence is limited, clinicians in many countries, such as the United Kingdom and Australia, are known to sometimes travel to outreach locations to provide care locally (Athalye et al., 2015; Psarros & Abrahams, 2022; Schepers et al., 2019). Although outreach services may be beneficial and highly desired by patients, they unavoidably transfer the inconvenience and cost of travel from the patient to the clinician. Some governments have acknowledged the increased costs to outreach service providers and now cover travel, meals, accommodation, and staff absence costs when medical services are delivered to remote and rural communities (AGDHAC, 2020). Challenges in attending in-clinic visits may not be limited to people who live far from the clinic; they may also affect people who are time-poor or have mobility issues (Barnett et al., 2017; Noblitt et al., 2018).

Teleaudiology has the potential to be an efficient and cost-effective way to overcome the barrier of distance and the burdens of cost and time for CI recipients while maintaining treatment effectiveness at the level of an in-clinic appointment (Muñoz et al., 2021). Many professional organisations encourage teleaudiology (American Academy of Audiology, 2008; ASHA, n.d.; Audiology Australia, 2020).

Although clinicians generally had a positive attitude towards teleaudiology before the COVID-19 pandemic, reports show that only a quarter of them had ever used teleaudiology services pre-pandemic (Eikelboom & Swanepoel, 2016). Use of teleaudiology surged during the COVID-19 lockdowns as clinics sought to keep running and to keep patients and clinicians safe. However, no comparable increase in adoption was observed in audiology services involving hearing implants (Bennett et al., 2022). This suggests that, in the CI field, key barriers to the widespread adoption of teleaudiology, such as remote access to clinical equipment, overall cost, ease of use for patients and clinicians, and the reliability and effectiveness of the technology, may persist despite a clear need for teleaudiology (Bennett et al., 2022; Eikelboom et al., 2022).

Traditionally, a CI teleaudiology session replicates the processes of an in-clinic appointment: the clinician first attempts to identify potential issues by asking questions and using performance tests, and then tries to resolve any identified issues using a range of tools. Scheduling routine remote follow-up appointments for all CI recipients increases the burden on the clinic and on the majority of CI recipients, who have no concerns or issues with their hearing performance. An alternative approach to remote care is to split the process into two parts: a first set of activities to identify and triage issues, and a second set of activities to resolve issues only for those CI recipients in whom issues have been detected (Maruthurkkara et al., 2022a). Cochlear™ Remote Check (Cochlear Ltd., Sydney, Australia) is a convenient, easy-to-use asynchronous teleaudiology solution that is as effective as a face-to-face session for the triage of issues (Maruthurkkara, Case, et al., 2022c). When issues are detected and further intervention is required, remote support methods can be used to resolve them. Remote Check is now used in several countries. A review of de-identified data from 7127 completed Remote Checks (75% adults and 25% children) conducted in 24 countries (as of December 2022) showed that clinicians concluded that no clinic visit was required for 84.5% of recipients; some intervention was deemed necessary for the remaining 15.5% (Cochlear Ltd, 2023).

Several studies have demonstrated that remote programming is an effective means of providing remote care for CI recipients who require fitting (Eikelboom et al., 2014; Goehring & Hughes, 2017; Hughes et al., 2012, 2018; Samuel et al., 2014; Schepers et al., 2019; Wesarg et al., 2010). However, this service has traditionally required proprietary programming hardware and a personal computer (PC) running the fitting software at the remote location. In addition, third-party software is required for controlling the remote PC and for audio-visual communication. Most of the studies above report remote programming at a clinic local to the recipient. A local facilitator/remote clinician is responsible for maintaining and connecting the remote programming setup and must be present in the same room as the recipient during the session. The need for additional hardware, software and a facilitator/remote clinician increases the cost of the intervention. While a remote programming session at a local clinic reduces the distance the recipient needs to travel, it does not eliminate the need for travel. An alternative approach is to ship the programming hardware and a PC directly to the CI recipient’s home, eliminating the need for travel and for a local facilitator/remote clinician (Slager et al., 2019). However, this approach relies on the recipient having a certain level of technical competency and incurs additional costs and administrative burden for sending and retrieving the hardware. The clinic must also take additional steps to sanitise the equipment and maintain the confidentiality of medical data before reusing the programming equipment for another recipient. Moreover, the number of people this approach can serve is limited by the availability of remote programming kits and the time taken to send and retrieve the equipment.

Today, smartphones can communicate with hearing aids and CI sound processors (Maidment & Amlani, 2020; Warren et al., 2019), and smartphone ownership is high (Pew Research Center, 2021). Because smartphones can connect to hearing aids, remote hearing aid fitting services can be provided directly to the patient’s home without shipping any hardware (Froehlich et al., 2020; Miller et al., 2020; Sonne et al., 2019). The connectivity of smartphones to CI sound processors provides an opportunity to adopt a similar approach for CI recipients. The simplest form of technology for remote support is an app that enables a video call. The development team at Cochlear Ltd identified ten additional app-based approaches to remote support that are technically feasible (Table 1). However, implementing each remote programming function in a CI system can pose significant technological and regulatory challenges (Kearney & McDermott, 2023). Unlike hearing aids, CI sound processors interact with an implanted device that delivers electrical current within the body. CI systems therefore require greater technical complexity to ensure the safe delivery of electrical stimuli when used as intended, and to prevent unintended stimulation when one of their components is faulty or under a cybersecurity threat. Adding any new functionality to an implantable medical device such as a CI system carries high implementation time and cost (Sertkaya et al., 2022). Identifying the minimum functionality required for the remote care of CI recipients is therefore essential.

Table 1. Potential remote care solutions considered, listed in increasing order of commercial implementation cost and technical complexity.

Research reported in this paper was conducted in two phases: a development phase and a clinical evaluation phase. The objective of the development phase was to determine the minimum functionality required in a smartphone app-based remote support tool to address the maximum proportion of issues typically seen in CI follow-up sessions. Based on the research in the development phase, a new synchronous CI teleaudiology solution called Cochlear Remote Assist (RA) was created. RA enables a clinician to conduct a clinical session for programming and to provide support remotely by connecting the fitting software to the recipient’s sound processor via a smartphone app over the Internet. The objectives of the clinical evaluation phase were to evaluate 1) the ease of use of RA, 2) the system’s effectiveness in terms of call clarity and system latency, and 3) recipient feedback after using RA in real-world scenarios.

Development phase

Research in this phase comprised three sequential activities: 1) characterisation of issues typically experienced by CI recipients, 2) root cause analysis of the issues, and 3) identification of the minimum functionality required to address the root causes. Experienced audiologists, defined as those with at least five years of experience in the CI field, were invited via email to participate in activities 2 and 3.

Characterisation of issues

Methods

Data from Maruthurkkara et al. (2022a) were analysed to determine the frequency of patient-related issues that required clinical intervention. In that study, clinicians at four clinics in the United Kingdom and one in Australia identified issues requiring intervention for 93 CI recipients scheduled for routine CI appointments.

Results

Clinicians identified a total of 646 issues, which could be resolved by replacing equipment (27%), counselling (32%), device programming (20%), a combination of programming and counselling (15%), or Ear, Nose and Throat (ENT) referral (6%). The majority of issues (65%, 420 issues) required equipment replacement, counselling, or ENT referral; these could be addressed using ‘low-tech’ options such as sending parts/information by post and video/audio calls. The remaining 226 issues included multiple instances of the same issue. Once duplicates were removed, 30 unique issues required programming or a combination of programming and counselling.
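The split of the 646 issues into ‘low-tech’ and programming-related groups can be checked with a short calculation. This is a minimal sketch that uses only the category percentages reported above; rounding the result to whole issues is our assumption, because the text reports only rounded percentages.

```python
# Category shares (%) of the 646 issues, as reported in the text.
TOTAL_ISSUES = 646
SHARES = {
    "replace equipment": 27,
    "counselling": 32,
    "programming": 20,
    "programming + counselling": 15,
    "ENT referral": 6,
}
LOW_TECH = ("replace equipment", "counselling", "ENT referral")


def split_issues(total: int, pct: dict[str, int]) -> tuple[int, int]:
    """Return (low-tech issue count, programming-related issue count)."""
    if sum(pct.values()) != 100:
        raise ValueError("category shares should sum to 100%")
    low_tech_pct = sum(pct[k] for k in LOW_TECH)
    # Assumption: counts are recovered by rounding, since only rounded % are reported.
    low_tech = round(total * low_tech_pct / 100)
    return low_tech, total - low_tech


low, prog = split_issues(TOTAL_ISSUES, SHARES)
print(low, prog)
```

With these inputs the low-tech share is 65%, and 646 × 0.65 = 419.9 rounds to 420, leaving 226 programming-related issues, which agrees with the counts reported above.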

Root cause analysis

Methods

Any issue that requires programming is often only a symptom of an underlying cause, and root cause analysis may reveal several possible causes for a single issue. For example, the Fitting Assistant in the Custom Sound® Pro software indicates that a report of “loud sounds uncomfortable” may have many root causes, such as 1) all upper stimulation levels (C levels) set too high, 2) electrodes causing non-auditory sensations, 3) hardware issues, 4) sensitivity set too high, 5) volume set too high, 6) acoustic loudness set too high, or 7) minimum stimulation levels (T levels) set too high. The clinician identifies and resolves the underlying problem, or root cause, producing the symptom using a trial-and-error approach. However, the approach can vary from one clinician to another. In the above example, for the issue of “loud sounds uncomfortable”, one clinician might check the hardware first, another might first review the datalogs for volume/sensitivity usage issues, and a third might start by adjusting the C levels. Accordingly, the software functionality required to resolve a given issue varies depending on which root cause the clinician chooses to tackle first. To identify the minimum functionality required to address the issues, all root causes for the issues must first be determined. Eight experienced CI clinicians were asked to identify the potential root causes, and how frequently each occurred, for the 30 unique issues described above that required programming or a combination of programming and counselling.

Results

Fifty-one potential root causes were identified by the clinicians. Of these, 25 were considered resolvable through counselling via video call, whereas the remaining 26 were deemed to require some form of programming.

Identification of minimum functionality required

Methods

The experienced CI clinicians were asked to watch a video recording that described in detail the potential remote programming solutions listed in Table 1. Online meetings were held so that clinicians could ask questions and clarify the functionality of the listed solutions as needed. The clinicians were then asked to complete a purpose-built survey rating the suitability of the different remote care solutions for resolving the root causes. The survey used the following question format: “You have reviewed the Remote Check results for your patient, and their symptom is [list specific symptom] _____. This symptom can be caused due to many possible root causes. You want to resolve the root cause [list root cause] _____. Which solution could be used to resolve this root cause for some patients?”. Clinicians were asked to select the minimal viable option that could successfully resolve (not just gain more insight into) the issue for some (not necessarily all) patients. Once they had made their choice, they were asked three follow-up questions: 1) How would you use this solution? 2) Are there any special circumstances/recipients for whom this option is unsuitable? 3) If yes, what proportion of sessions would these special circumstances/recipients represent? (rating scale of 0 to 100%).

Results

Twenty-three experienced CI clinicians completed the survey. For each root cause, the solution voted for by the majority of clinicians was identified. For 50% (13/26) of the root causes, most clinicians (>60%) voted for a single solution. A one-way chi-square test (p ≤ Bonferroni-corrected α = 0.0038) showed that, for these 13 root causes, the proportion of votes for the most popular solution was significantly higher than for the other solutions. Where no single solution had a clear majority, the solution with the greater technical complexity and implementation cost, as listed in Table 1, was selected.
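A one-way (goodness-of-fit) chi-square test compares observed vote counts with equal expected counts across the candidate solutions. The sketch below is illustrative only: the vote counts are hypothetical, and we assume the corrected threshold was obtained as 0.05 divided by the 13 tests (0.05/13 ≈ 0.0038, which matches the reported value). The p-value uses the closed-form upper tail of the chi-square distribution for integer degrees of freedom, so only the standard library is needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square distribution with integer df."""
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        term = total = 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: start from the df = 1 tail (erfc) and add the half-integer gamma terms.
    total = math.erfc(math.sqrt(half))
    for k in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (k - 0.5) / math.gamma(k + 0.5)
    return total


def one_way_chi_square(counts: list[int]) -> tuple[float, float]:
    """Goodness-of-fit test against equal expected counts; returns (statistic, p)."""
    expected = sum(counts) / len(counts)
    stat = sum((o - expected) ** 2 / expected for o in counts)
    return stat, chi2_sf(stat, len(counts) - 1)


# Assumption: family-wise alpha of 0.05 split across the 13 root causes tested.
ALPHA = 0.05
N_TESTS = 13
alpha_corrected = ALPHA / N_TESTS

# Hypothetical votes of 23 clinicians across four candidate solutions for one root cause.
votes = [17, 3, 2, 1]
stat, p = one_way_chi_square(votes)
print(f"chi2={stat:.2f}, p={p:.2g}, significant={p <= alpha_corrected}")
```

Dividing α by the number of tests keeps the chance of any false positive across the 13 root causes at or below 0.05, at the cost of a stricter per-test threshold.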

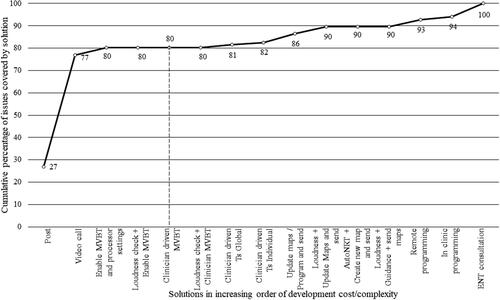

The extent to which a solution covers issues depends on the frequency of the issue, the frequency of the root cause it resolves, and the percentage of recipients for whom it is applicable. For each solution listed in Table 1, a ‘coverage score’ was calculated by factoring in the frequency of the issues, the frequency of the root causes and the percentage of recipients for whom the minimal solution is applicable. The percentage of issues covered was determined from this score. Figure 1 shows the cumulative coverage of issues by remote care solutions, plotted in increasing order of complexity/cost of commercial implementation. An app-based remote programming solution that enables the clinician to adjust Master Volume, Bass and Treble (MVBT) and processor settings remotely during a video call, combined with sending replacement equipment by post, covered 80% of the issues. Adding further functionality produced only relatively small increases in the proportion of issues covered. This solution was therefore selected for implementation and is now known as Remote Assist (RA).
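The text does not give the exact formula for the coverage score, so the following is only a sketch under a stated assumption: each root cause contributes the product of issue frequency, root-cause frequency and applicability, contributions are summed per solution, and solutions are accumulated in increasing order of cost. All numbers and solution labels below are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RootCause:
    issue_freq: float     # share of programming-related issues that are this issue (0-1), hypothetical
    cause_freq: float     # share of this issue explained by the root cause (0-1), hypothetical
    applicability: float  # share of recipients for whom the voted solution works (0-1), hypothetical
    solution: str         # minimal solution voted for this root cause (hypothetical label)


def coverage_by_solution(causes: list[RootCause]) -> dict[str, float]:
    """Assumed multiplicative score, summed over the root causes each solution resolves."""
    scores: dict[str, float] = {}
    for c in causes:
        contribution = c.issue_freq * c.cause_freq * c.applicability
        scores[c.solution] = scores.get(c.solution, 0.0) + contribution
    return scores


def cumulative_coverage(scores: dict[str, float], order: list[str]) -> list[tuple[str, float]]:
    """Running total of coverage as solutions are added in increasing order of cost."""
    running, out = 0.0, []
    for name in order:
        running += scores.get(name, 0.0)
        out.append((name, running))
    return out


causes = [
    RootCause(0.5, 0.6, 1.0, "video call"),
    RootCause(0.5, 0.4, 0.5, "MVBT"),
    RootCause(0.5, 1.0, 0.8, "MVBT"),
]
curve = cumulative_coverage(coverage_by_solution(causes), ["video call", "MVBT", "T levels"])
for name, total in curve:
    print(f"{name:>10}: {total:.0%}")
```

Plotting such a running total against implementation cost produces a curve like Figure 1, where the point at which additional features add little coverage suggests a cut-off for the feature set.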

Figure 1. Cumulative coverage of issues by remote care solutions, plotted in increasing order of implementation cost/complexity. The solutions up to the dashed vertical line denote the feature set selected for Remote Assist. Abbreviations: MVBT = Master volume, bass and treble; T = Threshold; AutoNRT = Automatic neural response telemetry; ENT = Ear, Nose and Throat.

Remote Assist overview and features

RA is a telehealth solution that enables clinicians to adjust sound processor programs remotely. It connects the Nucleus® Custom Sound Pro (CSPro) fitting software over the Internet to a CI recipient’s Nucleus 7, Nucleus 8, Kanso® 2, or newer sound processor via the Nucleus Smart App (NSA) installed on the recipient’s compatible smartphone. The clinician enables the RA functionality in the recipient’s NSA using the myCochlear™ Professional portal. The date and time of the remote session are scheduled using the clinic’s existing scheduling methods. At the scheduled time, the recipient joins the RA session at home via the NSA on their smartphone and enters a virtual waiting room until the clinician joins. While waiting, the recipient can prepare for the session using an in-app checklist. In the clinic, the clinician logs into CSPro to join the RA session. If the recipient has not yet joined when the clinician enters the session, the NSA notifies the recipient and prompts them to join. Once both the clinician and the recipient are in the session, they can communicate via video call, audio call and text chat. Although recipients hear better when phone calls are streamed from a smartphone directly to the sound processor (Wolfe et al., 2016), RA deliberately delivers the audio via the smartphone’s speakers so that any programming changes optimise sound quality as heard through the sound processor’s microphone rather than through the streamed input.
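The join sequence described above can be modelled as a small state machine. This sketch is hypothetical: the class, state names and notification text are our own illustration of the described behaviour (waiting room, clinician-side join, prompt to the recipient), not Cochlear’s implementation or API.

```python
from enum import Enum, auto


class State(Enum):
    SCHEDULED = auto()          # session booked through the clinic's own scheduling
    WAITING_ROOM = auto()       # recipient joined in the app; clinician not yet present
    CLINICIAN_WAITING = auto()  # clinician joined from the fitting software; recipient not yet present
    IN_SESSION = auto()         # both connected; video, audio and chat available
    ENDED = auto()


class RemoteSession:
    """Hypothetical model of the described join flow (illustration only)."""

    def __init__(self) -> None:
        self.state = State.SCHEDULED
        self.notifications: list[str] = []  # prompts that would be shown on the recipient's phone

    def recipient_joins(self) -> None:
        if self.state is State.SCHEDULED:
            self.state = State.WAITING_ROOM
        elif self.state is State.CLINICIAN_WAITING:
            self.state = State.IN_SESSION

    def clinician_joins(self) -> None:
        if self.state is State.WAITING_ROOM:
            self.state = State.IN_SESSION
        elif self.state is State.SCHEDULED:
            # Recipient has not joined yet: prompt them to join.
            self.state = State.CLINICIAN_WAITING
            self.notifications.append("Your clinician is ready. Join the session?")

    def end(self) -> None:
        self.state = State.ENDED


session = RemoteSession()
session.recipient_joins()   # recipient waits in the virtual waiting room
session.clinician_joins()   # clinician joins; call starts without any prompt
print(session.state, session.notifications)
```

Modelling the flow explicitly makes it easy to see that the recipient is prompted only in the case where the clinician arrives first.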

To optimise sound quality, the clinician can adjust the current sound processor programs using MVBT controls (Botros et al., 2013). The software also displays the program locations where the maps are stored and which map is currently providing stimulation (on air). Any non-active electrodes and the expected range for C levels are also displayed. The software prevents C levels from being set above compliance limits and notifies the clinician if the limit is reached. The clinician can also enable/disable processor settings such as the spatial noise-reduction setting ForwardFocus, MVBT, volume and sensitivity. Once changes are saved to the processor, they are automatically saved to the CSPro database. When the recipient finishes the session, the sound processor is restarted to commit the changes. To ensure that RA was effective, easy to use and satisfactory when used by CI recipients, it underwent a thorough assessment during the clinical evaluation phase described below.
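The compliance-limit safeguard can be illustrated with a deliberately simplified MVBT model. This is a sketch under explicit assumptions, not the algorithm of Botros et al. (2013) or the behaviour of CSPro: we assume volume shifts every C level by the same number of current-level steps, bass adds a tilt that is largest on the lowest-frequency channel, and treble adds a tilt that is largest on the highest-frequency channel. Channels are ordered from low to high frequency, and the per-channel compliance limits are supplied as inputs.

```python
def adjust_c_levels(
    c_levels: list[int],
    volume: int,
    bass: int,
    treble: int,
    compliance_limits: list[int],
) -> tuple[list[int], list[int]]:
    """Hypothetical MVBT adjustment; returns (new C levels, indices clamped at their limit)."""
    if len(c_levels) != len(compliance_limits):
        raise ValueError("one compliance limit is needed per channel")
    n = len(c_levels)
    new_levels: list[int] = []
    clamped: list[int] = []
    for i, (c, limit) in enumerate(zip(c_levels, compliance_limits)):
        pos = i / (n - 1) if n > 1 else 0.0  # 0 = lowest-frequency channel, 1 = highest
        # Tilts are rounded to whole current-level steps.
        level = c + volume + round(bass * (1 - pos)) + round(treble * pos)
        if level > limit:
            level = limit      # never exceed the compliance limit
            clamped.append(i)  # report which channels hit the limit
        new_levels.append(level)
    return new_levels, clamped


levels, hit = adjust_c_levels([180, 180, 180], volume=5, bass=10, treble=10,
                              compliance_limits=[200, 195, 190])
if hit:
    print(f"Compliance limit reached on channel(s) {hit}: {levels}")
```

Returning the clamped channel indices, rather than silently capping, mirrors the described behaviour of notifying the clinician when a limit is reached. The sketch does not check lower bounds such as T levels.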

Clinical evaluation phase

Methods: Clinical evaluation phase

The clinical evaluation phase comprised a controlled clinical study and a real-world use evaluation survey.

Clinical study design

This prospective, single-centre study used repeated-measures and usability study designs. The usability of RA for CI recipients was assessed by observer rating and via participant feedback on a questionnaire that included the Telehealth Usability Questionnaire (TUQ) (Parmanto et al., 2016). Subjective feedback and the time taken by RA to make changes to the map over the Internet were evaluated. The ability to understand live speech during a video call using RA was compared with that using a commercially available Voice over Internet Protocol (VoIP) video call app (either WhatsApp or FaceTime, depending on the smartphone allocated). The study received ethics approval at a single site in Sydney (Application No: 2021-06-680) and was registered on clinicaltrials.gov (NCT04987021; 3/8/2021).

Clinical study research participants

A minimum sample size of 15 participants was required to assess the usability of RA, as recommended in ANSI/AAMI HE75:2009/(R)2018. CI recipients who had previously consented to be contacted by Cochlear Ltd for research studies were invited to participate via email. The inclusion criteria were adults (aged ≥18 years) who had been implanted for at least three months with cochlear implants from the CI500, CI600 or Freedom series in one or both ears. Fifteen participants were enrolled. Table 2 provides the demographic details of the study participants. Thirteen of the 15 participants owned a smartphone and used it frequently. One participant did not own a smartphone, and another used theirs only for emergencies. Most participants who owned smartphones reported feeling fairly or very comfortable using their devices for a variety of tasks, including making phone calls and video calls, taking photos, sending text messages/emails, adjusting phone settings and using general-purpose apps.

Table 2. Demographic details of participants in the clinical evaluation phase.

Clinical study procedures

The study was conducted from August 2021 to January 2022. Because of physical distancing restrictions during the COVID-19 pandemic, all study procedures, including informed consent and programming, were conducted remotely. For most recipients, the preferred remote location was their home, but for a few it was an office. The median distance between the clinician and the recipient was 27.2 km (range 7.2 to 3925 km). For the study duration, participants received CP1000 or CP1150 Sound Processors loaded with two programs. Program 1 was their latest program saved during their previous in-clinic appointment. Program 2 was a ‘soft’ program created specifically for use during the RA session. The ‘soft’ program was created manually in CSPro by setting the C level profile to the population mean profile (Maruthurkkara & Bennett, 2022b) and setting the master volume to a low level, just above the thresholds. The soft program was created to simulate poor sound quality, which could then be optimised during the live RA session. Study participants were loaned a smartphone with the RA-enabled NSA installed. Android or Apple devices were allocated according to each participant’s prior experience with such devices. WhatsApp and FaceTime video call apps were installed on the Android and Apple devices, respectively. The study devices were delivered to and collected from participants by courier.

No instructions were provided to participants on how to use the app or what to do if they had questions; however, they could access the in-app guidance or the user guide. Participants were asked to complete 14 primary tasks to assess the ease of use of RA. The tasks included joining an RA session, positioning the phone, handling the camera and microphone settings, using chat, resuming the session after different kinds of interruptions, and completing fitting-related tasks. The clinician’s screen was shared via Microsoft Teams, and the session was observed remotely and unobtrusively by a project team member. Observers, trained to assess task completion against predefined acceptance criteria, rated each recipient’s ability to complete the tasks on a five-point rating scale used in an earlier study (Maruthurkkara & Bennett, 2022b): 1 = unable to complete, 2 = completed after using help, 3 = completed after trying an incorrect option, 4 = completed after some exploration, and 5 = completed on the first attempt. For each condition, the investigator presented one list of City University of New York (CUNY) sentences (Boothroyd et al., 1985) as uncalibrated live speech in a normal speaking voice to emulate natural conversation over the video call. Participants were asked to repeat the sentences heard through 1) an RA video call and 2) a VoIP video call. Three to four CUNY sentences were also presented during an audio-only call (with video turned off) with RA and with VoIP.

Participants rated the ease of understanding live speech during video and audio-only calls with RA and VoIP on a questionnaire, in response to the question: “When listening to your clinician speaking in these different situations, please rate how well you felt you understood what they were saying to you?” using a rating scale where ‘0’ was “Not at all” and ‘10’ was “Perfectly”. To obtain a baseline, participants also rated their ability to understand face-to-face conversations with their families. The investigator used the MVBT controls in RA to adjust the soft program and resolve sound quality issues. The session was video recorded to measure response times and any user errors. After a stimulation beep is presented to the sound processor over the Internet, all software controls are disabled until the processor confirms that the stimulation has been presented. The time from stimulus presentation to re-enablement of the software controls was analysed from the video recording. The clinician gave the participant a verbal cue when a stimulus was presented. Participants rated the acceptability of the system latency on a questionnaire in response to the statement: “The time taken for you to hear the beeps when the clinician adjusted Volume, Bass or Treble.” using a rating scale where ‘0’ was “Too slow” and ‘10’ was “Fast enough”. Feedback on participants’ experience of the RA session was gathered using a questionnaire that explored 1) their use of mobile phones, 2) their current clinical care experience at their clinic, and 3) the TUQ, using a seven-point Likert scale where one was ‘Strongly disagree’ and seven was ‘Strongly agree’. Participants could also mark a question as ‘not applicable’ or give a neutral response of four, ‘Neither agree nor disagree’. The nonparametric chi-square test was used to analyse the categorical data, comparing the proportion of respondents who agreed (to any degree) with those who disagreed (to any degree).
The 21 questions on the TUQ were divided into six areas: Usefulness, Ease of Use and Learnability, Interface Quality, Interaction Quality, Reliability, and Satisfaction and Future Use.
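The agree-versus-disagree comparison described above can be sketched for a single TUQ domain as follows. Assumptions: ‘not applicable’ answers are encoded as `None`, neutral (4) answers are excluded from the comparison but kept in the domain mean, and the test is a one-way chi-square with one degree of freedom against an equal split. The ratings used are hypothetical.

```python
import math
from statistics import mean
from typing import Optional


def summarise_domain(responses: list[Optional[int]]) -> dict[str, float]:
    """Summarise one TUQ domain from 1-7 Likert ratings (None = 'not applicable')."""
    rated = [r for r in responses if r is not None]
    agree = sum(1 for r in rated if r >= 5)     # 5-7: agree to any degree
    disagree = sum(1 for r in rated if r <= 3)  # 1-3: disagree to any degree
    n = agree + disagree                        # neutral (4) answers are left out of the test
    if n == 0:
        raise ValueError("no agree/disagree responses to test")
    expected = n / 2
    stat = ((agree - expected) ** 2 + (disagree - expected) ** 2) / expected
    return {
        "mean": mean(rated),
        "agree": agree,
        "disagree": disagree,
        # Upper tail of a chi-square distribution with 1 df equals erfc(sqrt(stat / 2)).
        "p": math.erfc(math.sqrt(stat / 2)),
    }


# Hypothetical ratings from seven respondents for one domain.
print(summarise_domain([7, 6, 6, 5, 4, None, 2]))
```

Collapsing the seven-point scale into agree and disagree categories is what turns the Likert data into the categorical counts that the chi-square test requires.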

Real-world use evaluation survey

Twenty-five clinicians from 16 clinics in North America, Italy and the United Kingdom used RA during a field evaluation between December 2021 and June 2022. The clinicians used RA to conduct synchronous sessions for 57 CI recipients (53 adults and four children with their carers) with compatible implants and sound processors. Online surveys were used to gather feedback from recipients and clinicians on ease of use, convenience, technical usability, satisfaction and future use of the technology. The survey also asked recipients to estimate their out-of-pocket costs for clinic visits. The Medical Research Council online decision support tool (MRC, n.d.) indicated that the survey did not require ethics committee review for sites in the United Kingdom. The Beaumont Ethics, Dublin, Ireland, and Western Institutional Review Board-Copernicus Group, WA, USA, formally reviewed the real-world evaluation, and both committees waived the need for ethical approval to conduct the survey procedures.

Results - Clinical evaluation phase

Ease of use

Thirteen of the 15 participants (86.7%) in the clinical study completed all primary tasks. One participant was unable to type in the chat function because they accidentally activated the dictation button. Another participant could not find RA in the NSA home menu and was unable to switch to the rear camera. The proportion of participants who completed the primary tasks with RA was significantly greater than the proportion who did not (p = 0.0099, one-way chi-square). Learnability, one of the usability dimensions assessed, was defined as the ease with which users accomplish tasks the first time they encounter the design. Of the tasks, 84.5% were completed on the first attempt, while 13.9% were completed after some exploration, after trying an incorrect option or after referring to help information.
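The learnability figures can be derived from the observers’ five-point ratings by grouping them into first-attempt completions (5), completions with some difficulty (2 to 4) and non-completions (1). The sketch below shows this grouping on hypothetical ratings; it assumes each rating describes one participant-task attempt.

```python
from collections import Counter

# Observer rating scale used in the study.
SCALE = {
    1: "unable to complete",
    2: "completed after using help",
    3: "completed after trying an incorrect option",
    4: "completed after some exploration",
    5: "completed on the first attempt",
}


def learnability(ratings: list[int]) -> tuple[float, float, float]:
    """Return (% first attempt, % completed with some difficulty, % not completed)."""
    if not ratings or any(r not in SCALE for r in ratings):
        raise ValueError("ratings must be a non-empty list of values 1-5")
    counts = Counter(ratings)
    total = len(ratings)
    first = 100 * counts[5] / total
    with_difficulty = 100 * (counts[2] + counts[3] + counts[4]) / total
    failed = 100 * counts[1] / total
    return first, with_difficulty, failed


# Hypothetical ratings for a handful of participant-task attempts.
print(learnability([5, 5, 5, 4, 1]))
```

Because the three groups are exhaustive, their percentages sum to 100%.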

Call clarity

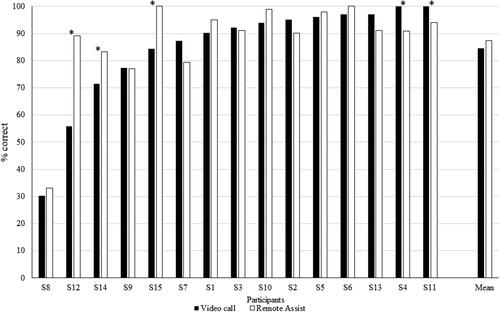

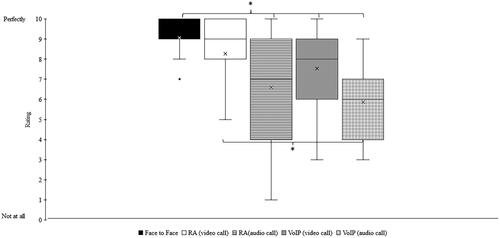

An exploratory objective of the clinical study was to characterise participants’ ability to understand the clinician’s voice during the video call, using their responses on the rating scale. A Mann-Whitney test showed no significant difference (p = 0.2) between the median ratings for face-to-face conversation (median 9) and the RA video call (median 9). However, the median rating for face-to-face conversation was significantly higher (p < 0.05) than those for RA audio-only calls (median 7), VoIP video calls (median 8) and VoIP audio-only calls (median 6). The median rating for RA video calls was also significantly higher than that for VoIP audio-only calls on the same smartphone (Figure 2). A paired t-test showed no significant difference in live-voice speech perception scores for CUNY sentences between the VoIP video call and RA (p > 0.05) (Figure 3). Binomial analysis showed that the VoIP video call was significantly better than RA for two participants (S4 and S11), and RA was significantly better than the VoIP video call for three participants (S12, S14 and S15) (p < 0.05).
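The paired t-test compares each participant’s CUNY score under the two call conditions. As a minimal illustration on hypothetical scores, the statistic is the mean of the per-participant differences divided by its standard error; converting it to a p-value requires the t distribution, which is not in the Python standard library, so this sketch returns only the statistic and degrees of freedom.

```python
import math
from statistics import mean, stdev


def paired_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched scores a[i], b[i]."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical percent-correct CUNY scores for four participants.
voip_video = [90, 85, 70, 95]
ra_video = [88, 80, 72, 90]
t, df = paired_t(voip_video, ra_video)
print(f"t = {t:.2f}, df = {df}")
```

To judge significance, |t| can be compared with the two-tailed critical value for the relevant degrees of freedom (about 2.145 at α = 0.05 for 15 participants, that is, 14 degrees of freedom).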

Figure 2. Participant ratings in response to the question “How well you felt you understood what they were saying to you?”. Black: face-to-face conversation; white: video call with Remote Assist (RA); horizontal hatching: audio call with RA; grey: video call with Voice over Internet Protocol (VoIP); checked hatching: audio call with VoIP. Box plots display quartiles and data range. Mean values are denoted by ‘x’, and ‘*’ denotes a significant difference (p < 0.05).

System latency

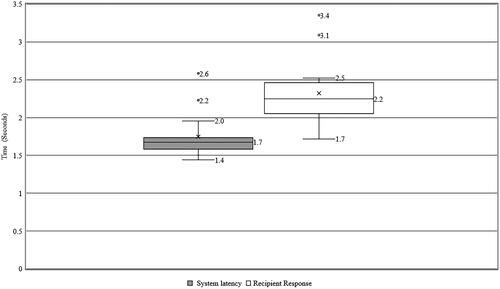

An exploratory objective of the clinical study was to characterise the time RA takes to make changes to the program over the Internet in real-world conditions. The median time taken was 1.7 seconds (range 1.4 to 2.6). Figure 4 shows the time taken for the software to make changes over the Internet and the time taken for each participant to respond. One possible reason for the 2.2-second system latency for one participant was the use of a low-performance smartphone (iPhone 6) together with a personal hotspot from another iPhone 6 for Internet access. For another participant, the system latency was 2.6 seconds, which could be attributed to the long distance (3925 km) between the participant and the clinician during the session. The median time for participants to respond to stimulation was 2.2 seconds (range 1.7 to 3.4); however, this included both the time the software took to present a stimulus and each participant’s reaction time. The participants’ median rating for the acceptability of the system latency was 9.5 on the 0 to 10 rating scale (range 7 to 10).
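System latency was read off the session recordings as the interval between stimulus presentation and re-enablement of the software controls. The sketch below shows one way to summarise such annotations. It assumes, since the text does not say, that each participant’s latencies are first reduced to a per-participant median and that the reported median and range are taken over those per-participant values. Participant IDs and timestamps are hypothetical.

```python
from statistics import median


def latencies(events: list[tuple[float, float]]) -> list[float]:
    """events: (stimulus_sent_s, controls_reenabled_s) timestamps read off a recording."""
    out = []
    for sent, reenabled in events:
        if reenabled < sent:
            raise ValueError("controls cannot be re-enabled before the stimulus is sent")
        out.append(reenabled - sent)
    return out


def group_summary(sessions: dict[str, list[tuple[float, float]]]) -> dict[str, float]:
    """Median and range of per-participant median latencies (assumed aggregation)."""
    per_participant = [median(latencies(ev)) for ev in sessions.values()]
    return {
        "median": median(per_participant),
        "min": min(per_participant),
        "max": max(per_participant),
    }


# Hypothetical annotated timestamps (seconds into each recording).
sessions = {
    "P1": [(0.0, 1.5), (5.0, 6.5)],
    "P2": [(0.0, 2.0), (4.0, 6.0)],
    "P3": [(0.0, 2.5)],
}
print(group_summary(sessions))
```

Summarising per participant first prevents participants with many stimuli from dominating the group median.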

Recipient feedback

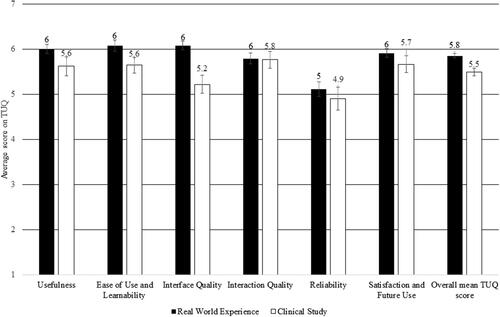

The participants in the clinical study were asked to rate the degree to which they agreed or disagreed with statements in the TUQ. A one-way chi-square test showed that, in all six areas, the proportion of participants who agreed with the positive statements regarding RA (5, somewhat agree; 6, agree; or 7, strongly agree) was significantly higher than the proportion who did not. The participants gave positive ratings in all six domains, with the lowest ratings for reliability, which may have been affected by the strength of the Internet connection. The figure above shows the participants’ TUQ ratings for RA in the clinical study and the real-world evaluation. Nine of the 15 participants felt that 50% to 100% of their clinic visits in the previous 24 months could have been avoided if RA had been available.
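The agree-versus-not-agree comparison described above can be sketched as a one-way (goodness-of-fit) chi-square test with one degree of freedom. This minimal Python example treats ratings of 5 to 7 as agreement, matching the categories above, and assumes an equal 50/50 split as the null hypothesis; the expected proportions actually used in the study are not stated, and the ratings are hypothetical.

```python
import math

AGREE_THRESHOLD = 5  # 5 = somewhat agree, 6 = agree, 7 = strongly agree on the 7-point scale

def count_agreement(ratings):
    """Split ratings into (agree, not agree) counts."""
    agree = sum(1 for r in ratings if r >= AGREE_THRESHOLD)
    return agree, len(ratings) - agree

def one_way_chi_square(observed_agree, observed_not):
    """Goodness-of-fit test against an ASSUMED equal split; df = 1."""
    n = observed_agree + observed_not
    expected = n / 2
    chi2 = sum((o - expected) ** 2 / expected for o in (observed_agree, observed_not))
    # With df = 1, chi2 equals z**2, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p

# Hypothetical ratings for one TUQ domain from 15 participants, NOT the study data.
ratings = [7, 6, 6, 5, 7, 7, 4, 6, 5, 7, 6, 3, 7, 6, 5]
agree, not_agree = count_agreement(ratings)
chi2, p = one_way_chi_square(agree, not_agree)
print(f"agree={agree}, not agree={not_agree}, chi2={chi2:.2f}, p={p:.4f}")
```

With 15 participants the expected count in each category is 7.5, which satisfies the usual rule of thumb that expected counts should be at least 5 for the chi-square approximation.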

Real-world use

In the real-world survey of CI recipients (N = 57), 93% reported a preference for their future follow-up care to include RA, either alone (30%) or in combination with in-clinic sessions (63%). When asked about the main benefits of RA compared with in-clinic appointments, most recipients responded that RA ‘helps me to get care if I can’t get to the clinic’ (79%), ‘saves me time’ (68%), and ‘is more convenient’ (61%). Other commonly cited advantages were timely access to care (58%), ‘maintaining connection with their hearing health professional outside of in-clinic visits’ (58%) and ‘cost savings’ (58%). Recipients who felt RA would save them money reported an average out-of-pocket cost per clinic visit of US$60.3 (range 10 to 125) in the USA (N = 16) and €91.1 (range 10 to 100) in Europe (N = 17).

In terms of usability, the average TUQ score in the recipient survey was 5.8, comparable to the average TUQ score reported by the clinical study participants (5.5). A one-way chi-square test showed that, in all six areas, significantly more recipients agreed than disagreed with the positive statements regarding RA. The most common reason clinicians gave for conducting the RA session was to ‘trial the new technology’ (51%). In 17% of sessions, RA was used to address specific issues reported by the recipient. Clinicians also used RA to ‘follow-up after an in-clinic appointment’ (17%) or ‘to replace an in-clinic visit’ (9%).
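The text reports an average TUQ score without specifying how the item ratings were aggregated. Assuming that the score is the mean of all item ratings on the 7-point scale, pooled across respondents, the following Python sketch computes that overall mean alongside per-domain means. Only two of the six TUQ domains are shown, the dictionary layout is an illustrative choice, and the responses are hypothetical.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical responses (NOT study data): each respondent maps a TUQ domain
# to their ratings for that domain's items on the 1-7 scale.
Responses = List[Dict[str, List[int]]]

responses: Responses = [
    {"usefulness": [6, 7, 6], "reliability": [5, 4, 6]},
    {"usefulness": [7, 7, 6], "reliability": [6, 5, 4]},
]

def domain_means(data: Responses) -> Dict[str, float]:
    """Mean item rating per domain, pooled across respondents."""
    domains = sorted({d for r in data for d in r})
    return {d: mean(x for r in data for x in r.get(d, [])) for d in domains}

def overall_mean(data: Responses) -> float:
    """ASSUMPTION: overall TUQ score = mean of every item rating, pooled."""
    return mean(x for r in data for items in r.values() for x in items)

print(domain_means(responses))
print(round(overall_mean(responses), 2))
```

Pooling all items weights each domain by its number of items; averaging the domain means instead would weight the domains equally, so the two approaches can give different overall scores.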

Discussion

The development-phase research reported in this paper showed that combining the postal replacement of equipment with a synchronous session using the video call and programming capabilities of RA could enable a clinician to address 80% of the issues typically seen in CI follow-up sessions.

Current remote programming solutions for CI recipients involve either setting up a remote programming facility managed by additional remote staff or shipping specialised equipment to the recipient’s home. These solutions have several limitations, including 1) the additional costs of procuring specialised hardware and of shipping it to and retrieving it from the CI recipient, 2) the administrative burden of sending and receiving the devices, 3) the time and effort needed to clean the devices and manage patient data so that they can be reused for other sessions, and 4) the constraints that the number of available remote programming kits places on scheduling and on the number of people who can be served. The unavailability of suitable infrastructure has been an important contributor to the low uptake of teleaudiology (Saunders & Roughley, Citation2021). This study has demonstrated the feasibility of conducting remote programming on the recipient’s own smartphone via RA, without shipping specialised equipment. RA may therefore help overcome these limitations of existing remote programming approaches.

Current remote programming solutions require CI recipients to be technically competent to manage the equipment. The clinical study reported in this paper showed that most CI recipients successfully used RA on their first attempt without prior training. To our knowledge, this is the first report to use the TUQ to evaluate a CI telehealth solution. In both the clinical study and the real-world evaluation, the majority of participants gave positive TUQ ratings for usefulness, ease of use and learnability, interface quality, interaction quality, reliability, and satisfaction and future use. The TUQ has been used to evaluate the usability of several telehealth solutions across different health contexts (Layfield et al., Citation2020; Serwe, Citation2018; Serwe et al., Citation2017; Vaughan et al., Citation2020; Yu et al., Citation2017). Across these studies, the average TUQ score for the telehealth methods evaluated is 5.58 (range 4.7 to 6.01). The TUQ scores obtained for RA in the clinical (5.5) and real-world (5.8) settings were comparable to this literature average of 5.58, suggesting that the usability of RA is on par with that of other telehealth applications.

Many clinics routinely provide CI support by telephone; however, many CI recipients are known to depend on lipreading or other visual cues to supplement their communication (Anderson et al., Citation2006). Earlier CI teleaudiology studies reported communication difficulties for CI recipients due to poor video/audio link quality, and because recipients were unable to hear while their processor was being connected to the system and while maps were being written (Hughes et al., Citation2012). RA is designed so that the recipient can continue to hear at all times, including while the processor is being connected or programs are being saved. Our clinical study results indicated that call clarity ratings with RA were comparable to those with commercially available video call platforms such as WhatsApp and FaceTime, and appeared to be on par with face-to-face communication. A previous study (Botros et al., Citation2013) indicated that a sample size of 12 or more participants provides adequate statistical power for comparing recorded CUNY sentence tests. One limitation of the present study is that the minimum sample size required for live-voice administration of CUNY sentences was unknown at the study’s onset. Future studies should replicate the speech perception comparison using an appropriate sample size estimate for live speech.

One of the critical challenges with remote programming reported in earlier studies is poor reliability due to technical issues. This may be partly due to reliance on a range of independent software and hardware, where a technical issue with any one component could disrupt the session (Kim et al., Citation2021; Slager et al., Citation2019). RA, by contrast, is designed specifically for the remote programming of CIs and is integrated within the CSPro fitting software and the recipient’s NSA, which reduces the likelihood of technical issues. In the clinical study and the real-world evaluation, significantly more recipients rated the system’s reliability positively than did not. In addition, CI recipients rated the system latency of RA (median 1.7 seconds) as acceptable. However, like other telehealth platforms, RA depends on a good Internet connection between the clinician and the recipient.

Another barrier to the adoption of telehealth is clinicians’ perception that patients prefer in-person consultations (Saunders & Roughley, Citation2021). However, studies have shown that a large proportion of patients prefer remote care, either alone or in combination with in-clinic visits (Doximity, Citation2022; IDA Institute, Citation2021; Toll et al., Citation2022). Similarly, in our real-world evaluation of RA, most recipients reported that they would prefer their future care to combine RA and in-clinic sessions, as RA is convenient, saves time and reduces the travel burden.

A study of a large hearing aid practice found that most audiology appointments were unplanned (Groth et al., Citation2017), with patients needing care when problems arose unexpectedly. When a clinical session cannot be scheduled quickly because the recipient is unable to travel, the delay may result in poor or inaccurate recall of the issue (Timmer et al., Citation2018). Because RA removes the need to attend the clinic in person, a more timely review appointment may be possible. Indeed, in the real-world evaluation, a large proportion (58%) of recipients reported that “RA provides them access to immediate care”.

One of the essential goals of CI fitting is to address issues CI recipients face in real-world environments. However, it is challenging for clinicians to replicate the real-world environment during in-clinic sessions (Cord et al., Citation2007; Wu et al., Citation2018). RA enables clinicians to conduct programming sessions while the recipients are in their homes or other non-clinical environments.

This research demonstrates that RA can be very useful for recipients who 1) have access to a compatible smartphone and 2) have that smartphone set up with their sound processor for RA. At the time of writing, clinical systems do not record whether a given CI recipient meets these two criteria, making it difficult for clinicians to determine whether a recipient can be supported via RA. The applicability of RA to the general CI recipient population is therefore unknown. While licensure requirements and reimbursement issues, and the resulting concerns about clinic profitability, may remain significant barriers to the adoption of telehealth services in general (Muñoz et al., Citation2021), the acceptable ease of use, call clarity, reliability and patient acceptance of RA may help lower some barriers to delivering quality CI care remotely after implantation.

Conclusion

Remote Assist is the first app-based teleaudiology solution that clinicians can use to address a large proportion of the common issues that arise among CI recipients post-implantation without the need for an in-clinic visit. In both a clinical study and a real-world evaluation, recipients found RA easy to use without any prior training. The call quality and the system’s responsiveness were acceptable for remote programming. Remote Care solutions such as Remote Check and Remote Assist can help CI clinicians continue to deliver quality care conveniently and in a timely manner, overcoming the distance, travel and scheduling challenges associated with in-clinic appointments.

Author Contributions

SM was involved in the design of RA, study design, ethics submission, data collection, data analysis, and write-up of the draft manuscript.

Declaration of conflict of interest

The author is an employee of Cochlear Ltd.

Acknowledgements

The author would like to thank the CI recipients and the CI clinicians who generously gave their time to the study. The author would like to thank Michael Sinclair and Suzanne Hayley for their input into the research methodology used in the development phase. Thanks to Joel Kelly and Esti Nel for their support in the conduct of the clinical study. Thanks to Ana Bordonhos, Helen Souris, Kyle Longwell and Potentiate Inc for facilitating the collection of real-world evidence. Thanks to Beejal Vyas-Price, Janine Del Dot, Joel Kelly, Kirsty Orton, and Peggy McBride for review of the manuscript. Cochlear Limited sponsored this study.

References

- AGDHAC (Australian Government Department of Health and Aged Care). 2020. Outreach Programs Service Delivery Standards. https://www.health.gov.au/sites/default/files/documents/2021/12/outreach-programs-service-delivery-standards.pdf

- American Academy of Audiology. 2008. The Use of Telehealth/Telemedicine to Provide Audiology Services. http://audiology-web.s3.amazonaws.com/migrated/TelehealthResolution200806.pdf_5386d9f33fd359.83214228.pdf

- American Academy of Audiology. 2019. “Clinical Practice Guidelines: Cochlear Implants.” Journal of the American Academy of Audiology 30 (10):827–844. https://doi.org/10.3766/jaaa.19088.

- American National Standards Institute (ANSI), & Association for the Advancement of Medical Instrumentation (AAMI). 2018. Human factors engineering—Design of medical devices HE75:2009/(R)2018

- American Speech-Language-Hearing Association (ASHA). n.d. Telepractice. Retrieved September 6, 2022, from www.asha.org/Practice-Portal/Professional-Issues/Telepractice/

- Anderson, I., W.-D. Baumgartner, K. Böheim, A. Nahler, C. Arnoldner, and P. D'Haese. 2006. “Telephone Use: What Benefit do Cochlear Implant Users Receive?” International Journal of Audiology 45 (8):446–453. https://doi.org/10.1080/14992020600690969.

- Athalye, S., S. Archbold, I. Mulla, M. Lutman, and T. Nikolopoulous. 2015. “Exploring Views On Current and Future Cochlear Implant Service Delivery: The Perspectives of Users, Parents and Professionals at Cochlear Implant Centres and in the Community.” Cochlear Implants International 16 (5):241–253. https://doi.org/10.1179/1754762815Y.0000000003.

- Audiology Australia. 2020. Position Statement Teleaudiology. https://audiology.asn.au/Tenant/C0000013/AudA Position Statement Teleaudiology 2020 Final(1).pdf

- Barnett, M., B. Hixon, N. Okwiri, C. Irungu, J. Ayugi, R. Thompson, J. B. Shinn, and M. L. Bush. 2017. “Factors Involved in Access and Utilization of Adult Hearing Healthcare: A systematic review.” The Laryngoscope 127 (5):1187–1194. https://doi.org/10.1002/lary.26234.

- Bennett, R. J., I. Kelsall-Foreman, C. Barr, E. Campbell, T. Coles, M. Paton, and J. Vitkovic. 2022. “Barriers and Facilitators to Tele-Audiology Service Delivery in Australia during the COVID-19 Pandemic: Perspectives of Hearing Healthcare Clinicians.” International Journal of Audiology 62 (12):1145–1154. https://doi.org/10.1080/14992027.2022.2128446.

- Boothroyd, A., L. Hanin, and T. Hnath. 1985. A Sentence Test of Speech Perception: Reliability, Set Equivalence, and Short Term Learning. CUNY Academic Works. https://academicworks.cuny.edu/gc_pubs/399/

- Botros, A., R. Banna, and S. Maruthurkkara. 2013. “The Next Generation of Nucleus(®) Fitting: A Multiplatform Approach Towards Universal Cochlear Implant Management.” International Journal of Audiology 52 (7):485–494. https://doi.org/10.3109/14992027.2013.781277.

- British Cochlear Implant Group. 2020. “Quality Standards Cochlear Implant Services for Children and Adults.” BCIG: 1–32. Accessed March 22, 2023. https://www.bcig.org.uk/_userfiles/pages/files/qsupdate2018wordfinalv2.pdf

- Cord, M., D. Baskent, S. Kalluri, and B. C. Moore. 2007. Disparity Between Clinical Assessment and Real-World Performance of Hearing Aids. The Hearing Review. June 2. Accessed March 1, 2023. https://hearingreview.com/practice-building/practice-management/disparity-between-clinical-assessment-and-real-world-performance-of-hearing-aids

- Doximity. 2022. State of Telemedicine Report Second Edition (Issue 2). Accessed February 27, 2023. https://assets.doxcdn.com/image/upload/pdfs/state-of-telemedicine-report-2022.pdf

- Eikelboom, R. H., R. J. Bennett, V. Manchaiah, B. Parmar, E. Beukes, S. L. Rajasingam, and D. W. Swanepoel. 2022. “International Survey of Audiologists During the COVID-19 Pandemic: Use of and Attitudes to Telehealth.” International Journal of Audiology 61 (4):283–292. https://doi.org/10.1080/14992027.2021.1957160.

- Eikelboom, R. H., D. M. Jayakody, D. W. Swanepoel, S. Chang, and M. D. Atlas. 2014. “Validation of Remote Mapping of Cochlear Implants.” Journal of Telemedicine and Telecare 20 (4):171–177. https://doi.org/10.1177/1357633X14529234.

- Eikelboom, R. H., and D. W. Swanepoel. 2016. “International Survey of Audiologists’ Attitudes Toward Telehealth.” American Journal of Audiology 25 (3S):295–298. https://doi.org/10.1044/2016_AJA-16-0004.

- Froehlich, M., E. Branda, and D. Apel. 2020. User Engagement with Signia TeleCare: A Way to Facilitate Hearing Aid Acceptance. Audiology Online. https://www.audiologyonline.com/articles/signia-telecare-hearing-aid-acceptance-26463

- Goehring, J. L., and M. L. Hughes. 2017. “Measuring Sound-Processor Threshold Levels for Pediatric Cochlear Implant Recipients Using Conditioned Play Audiometry Via Telepractice.” Journal of Speech, Language, and Hearing Research 60 (3):732–740. https://doi.org/10.1044/2016_JSLHR-H-16-0184.

- Groth, J., M. Bhatt, P. E. Raun, and A. Jahn. 2017. “Where Does the Day Go? Insights From Appointments in a Large Hearing Aid Practice.” The Hearing Journal, July 6. Accessed February 24, 2023. https://journals.lww.com/thehearingjournal/blog/onlinefirst/Lists/Posts/Post.aspx?ID=13.

- Hughes, M. L., J. L. Goehring, J. L. Baudhuin, G. R. Diaz, T. Sanford, R. Harpster, and D. L. Valente. 2012. “Use of Telehealth for Research and Clinical Measures in Cochlear Implant Recipients: A Validation Study.” Journal of Speech, Language, and Hearing Research 55 (4):1112–1127. https://doi.org/10.1044/1092-4388(2011/11-0237).

- Hughes, M. L., J. L. Goehring, J. D. Sevier, and S. Choi. 2018. “Measuring Sound-Processor Thresholds for Pediatric Cochlear Implant Recipients Using Visual Reinforcement Audiometry Via Telepractice.” Journal of Speech, Language, and Hearing Research 61 (8):2115–2125. https://doi.org/10.1044/2018_JSLHR-H-17-0458.

- IDA Institute. 2021. Future Hearing Journeys report - Introduction to the Future Hearing Journeys project. https://idainstitute.com/fileadmin/user_upload/Future_Hearing_Journeys/Report_V1/index.html#/lessons/0DBKkw-3xICoBJYRO0AIpvydAnmnfAtG

- Kearney, B., and O. McDermott. 2023. “The Challenges for Manufacturers of the Increased Clinical Evaluation in the European Medical Device Regulations: A Quantitative Study.” Therapeutic Innovation & Regulatory Science 57 (4):783–796. https://doi.org/10.1007/s43441-023-00527-z.

- Kim, J., S. Jeon, D. Kim, and Y. Shin. 2021. “A Review of Contemporary Teleaudiology: Literature Review, Technology, and Considerations for Practicing.” Journal of Audiology & Otology 25 (1):1–7. https://doi.org/10.7874/jao.2020.00500.

- Layfield, E., V. Triantafillou, A. Prasad, J. Deng, R. M. Shanti, J. G. Newman, and K. Rajasekaran. 2020. “Telemedicine for head and neck ambulatory visits during COVID-19: Evaluating usability and patient satisfaction.” Head & Neck 42 (7):1681–1689. https://doi.org/10.1002/hed.26285.

- Maidment, D. W., and A. M. Amlani. 2020. “Argumentum ad Ignorantiam: Smartphone-Connected Listening Devices.” Seminars in Hearing 41 (4):254–265. https://doi.org/10.1055/s-0040-1718711.

- Maruthurkkara, S., A. Allen, H. Cullington, J. Muff, K. Arora, and S. Johnson. 2022. “Remote Check Test Battery for Cochlear Implant Recipients: Proof of Concept Study.” International Journal of Audiology 61 (6):443–452. https://doi.org/10.1080/14992027.2021.1922767.

- Maruthurkkara, S., and C. Bennett. 2022. “Development of Custom Sound® Pro software Utilising Big Data and its Clinical Evaluation.” International Journal of Audiology 63 (2):87–98. https://doi.org/10.1080/14992027.2022.2155880.

- Maruthurkkara, S., S. Case, and R. Rottier. 2022. “Evaluation of Remote Check: A Clinical Tool for Asynchronous Monitoring and Triage of Cochlear Implant Recipients.” Ear and Hearing 43 (2):495–506. https://doi.org/10.1097/AUD.0000000000001106.

- Miller, A., L. Standaert, E. Stewart, and K. S. June. 2020. Digital Service Delivery with AudiogramDirect: being there for your clients when you can’t be with. Phonak Field Study News. https://www.phonakpro.com/content/dam/phonakpro/gc_hq/en/resources/evidence/field_studies/documents/PH_FSN_Digital_Service_Delivery_with_Audiogram_Direct_297x210_EN_V1.00.pdf

- MRC. n.d. Do I need NHS REC review? https://www.hra-decisiontools.org.uk/ethics/about.html

- Muñoz, K., N. K. Nagaraj, and N. Nichols. 2021. “Applied Tele-Audiology Research in Clinical Practice During The Past Decade: A Scoping Review.” International Journal of Audiology 60 (sup1):S4–S12. https://doi.org/10.1080/14992027.2020.1817994.

- Nassiri, A. M., M. A. Holcomb, E. L. Perkins, A. L. Bucker, S. M. Prentiss, C. M. Welch, N. S. Andresen, C. V. Valenzuela, C. C. Wick, S. I. Angeli, et al. 2022. “Catchment Profile of Large Cochlear Implant Centers in the United States.” Otolaryngology-Head and Neck Surgery: official Journal of American Academy of Otolaryngology-Head and Neck Surgery 167 (3):545–551. https://doi.org/10.1177/01945998211070993.

- Noblitt, B., K. P. Alfonso, M. Adkins, and M. L. Bush. 2018. “Barriers to Rehabilitation Care in Pediatric Cochlear Implant Recipients.” Otology & Neurotology: official Publication of the American Otological Society, American Neurotology Society [and] European Academy of Otology and Neurotology 39 (5):e307–e313. https://doi.org/10.1097/MAO.0000000000001777.

- Parmanto, B., A. N. Lewis, Jr., K. M. Graham, and M. H. Bertolet. 2016. “Development of the Telehealth Usability Questionnaire (TUQ).” International Journal of Telerehabilitation 8 (1):3–10. https://doi.org/10.5195/ijt.2016.6196.

- Pew Research Center. 2021. Demographics of Mobile Device Ownership and Adoption in the United States. https://www.pewresearch.org/internet/fact-sheet/mobile/

- Psarros, C., and Y. Abrahams. 2022. “Recent Trends in Cochlear Implant Programming and (Re)habilitation.” In Cochlear Implants: New and Future Directions, edited by S. DeSaSouza, 1st ed., 441–471. Singapore: Springer Nature Singapore.

- Rooth, M., E. R. King, M. Dillon, and H. Pillsbury. 2017. “Management Considerations for Cochlear Implant Recipients in Their 80s and 90s.” Hearing Review Aug, July 25.

- Sach, T. H., D. K. Whynes, S. M. Archbold, and G. M. O'Donoghue. 2005. “Estimating time and Out-Of-Pocket Costs Incurred by Families Attending a Pediatric Cochlear Implant Programme.” International Journal of Pediatric Otorhinolaryngology 69 (7):929–936. https://doi.org/10.1016/j.ijporl.2005.01.037.

- Samuel, P. A., M. V. S. Goffi-Gomez, A. G. Bittencourt, R. K. Tsuji, and R. d Brito. 2014. “Remote Programming of Cochlear Implants.” CoDAS 26 (6):481–486. https://doi.org/10.1590/2317-1782/20142014007.

- Saunders, G. H., and A. Roughley. 2021. “Audiology in the Time of COVID-19: Practices and Opinions of Audiologists in the UK.” International Journal of Audiology 60 (4):255–262. https://doi.org/10.1080/14992027.2020.1814432.

- Schepers, K., H. J. Steinhoff, H. Ebenhoch, K. Böck, K. Bauer, L. Rupprecht, A. Möltner, S. Morettini, and R. Hagen. 2019. “Remote Programming of Cochlear Implants in Users of All Ages.” Acta Oto-Laryngologica 139 (3):251–257. https://doi.org/10.1080/00016489.2018.1554264.

- Sertkaya, A., R. DeVries, A. Jessup, and T. Beleche. 2022. “Estimated Cost of Developing a Therapeutic Complex Medical Device in the US.” JAMA Network Open 5 (9):e2231609. https://doi.org/10.1001/jamanetworkopen.2022.31609.

- Serwe, K. M. 2018. “The Provider’s Experience of Delivering an Education-based Wellness Program via Telehealth.” International Journal of Telerehabilitation 10 (2):73–80. https://doi.org/10.5195/ijt.2018.6268.

- Serwe, K. M., G. I. Hersch, and K. Pancheri. 2017. “Feasibility of Using Telehealth to Deliver the “Powerful Tools for Caregivers” Program.” International Journal of Telerehabilitation 9 (1):15–22. https://doi.org/10.5195/ijt.2017.6214.

- Shapiro, S. B., N. Lipschitz, N. Kemper, L. Abdelrehim, T. Hammer, L. Wenstrup, J. T. Breen, J. J. Grisel, and R. N. Samy. 2021. “Real-World Compliance with Follow-up in 2,554 Cochlear Implant Recipients: An Analysis of the HERMES Database.” Otology & Neurotology: official Publication of the American Otological Society, American Neurotology Society [and] European Academy of Otology and Neurotology 42 (1):47–50. https://doi.org/10.1097/MAO.0000000000002844.

- Slager, H. K., J. Jensen, K. Kozlowski, H. Teagle, L. R. Park, A. Biever, and M. Mears. 2019. “Remote Programming of Cochlear Implants.” Otology & Neurotology: Official Publication of the American Otological Society, American Neurotology Society [and] European Academy of Otology and Neurotology 40 (3):e260–e266. https://doi.org/10.1097/MAO.0000000000002119.

- Sonne, M., L. W. Balling, and E. L. Nilsson. 2019. Right at Home: Remote Care in Real Life. Hearing Review. https://hearingreview.com/practice-building/office-services/telehealth/right-home-remote-care-real-life

- Timmer, B. H. B., L. Hickson, and S. Launer. 2018. “The Use of Ecological Momentary Assessment in Hearing Research and Future Clinical Applications.” Hearing Research 369:24–28. https://doi.org/10.1016/j.heares.2018.06.012.

- Toll, K., L. Spark, B. Neo, R. Norman, S. Elliott, L. Wells, J. Nesbitt, I. Frean, and S. Robinson. 2022. “Consumer Preferences, Experiences, and Attitudes Towards Telehealth: Qualitative Evidence from Australia.” PLOS One 17 (8):e0273935. https://doi.org/10.1371/journal.pone.0273935.

- Vaughan, E. M., A. D. Naik, C. M. Lewis, J. P. Foreyt, S. L. Samson, and D. J. Hyman. 2020. “Telemedicine Training and Support for Community Health Workers: Improving Knowledge of Diabetes.” Telemedicine Journal and e-Health: The Official Journal of the American Telemedicine Association 26 (2):244–250. https://doi.org/10.1089/tmj.2018.0313.

- Warren, C. D., E. Nel, and P. J. Boyd. 2019. “Controlled Comparative Clinical Trial of Hearing Benefit Outcomes for Users of the Cochlear™ Nucleus® 7 Sound Processor with Mobile Connectivity.” Cochlear Implants International 20 (3):116–126. https://doi.org/10.1080/14670100.2019.1572984.

- Wesarg, T., A. Wasowski, H. Skarzynski, A. Ramos, J. C. Falcon Gonzalez, G. Kyriafinis, F. Junge, A. Novakovich, H. Mauch, and R. Laszig. 2010. “Remote Fitting in Nucleus Cochlear Implant Recipients.” Acta Oto-Laryngologica 130 (12):1379–1388. https://doi.org/10.3109/00016489.2010.492480.

- Wolfe, J., M. Morais Duke, E. Schafer, G. Cire, C. Menapace, and L. O'Neill. 2016. “Evaluation of a Wireless Audio Streaming Accessory to Improve Mobile Telephone Performance of Cochlear Implant Users.” International Journal of Audiology 55 (2):75–82. https://doi.org/10.3109/14992027.2015.1095359.

- Wu, Y.-H., E. Stangl, O. Chipara, S. S. Hasan, A. Welhaven, and J. Oleson. 2018. “Characteristics of Real-World Signal to Noise Ratios and Speech Listening Situations of Older Adults With Mild to Moderate Hearing Loss.” Ear and Hearing 39 (2):293–304. https://doi.org/10.1097/AUD.0000000000000486.

- Yu, D. X., B. Parmanto, B. E. Dicianno, V. J. Watzlaf, and K. D. Seelman. 2017. “Accessibility Needs and Challenges of a mHealth System for Patients with Dexterity Impairments.” Disability and Rehabilitation. Assistive Technology 12 (1):56–64. https://doi.org/10.3109/17483107.2015.1063171.