ABSTRACT

This paper critically evaluates the quantification of psychological attributes through metric measurement. Drawing on epistemological considerations by Immanuel Kant, the development of measurement theory in the natural and social sciences is outlined. This includes an examination of Fechner’s psychophysical law and the fundamental criticism initially raised by von Kries. The distinction between theoretical and practical measurability is illuminated, addressing the question of equality within mental entities ($\Psi$) and their measures. Psychometric scaling procedures such as Rasch scaling are argued to enable interval-scaled quantification on a real number line $\mathbb{R}$, but they are insufficient to establish a genuine interval scale level of $\Psi$. Instead, the values of these measures should be regarded as qualitative statements that indicate ordinal relationships within $\Psi$. Two principles of scaling – the Guttman “model” and the Rasch model – are introduced, with their theoretical foundations explained, referencing the Rasch paradox. In the empirical section, data simulation is conducted to illustrate the Rasch paradox and to substantiate the theoretical considerations of the article. The research underscores the significance of linguistic analysis in understanding quantitative claims, suggesting a shift toward ordinal quantification within psychological measurement, drawing upon the linguistic principle of localism. Spatial metaphors are argued to play a central role in human language, even in natural sciences like physics, suggesting that systematic analysis of human language could offer a valuable method for the quantitative analysis of psychological attributes.

Introduction

This article is dedicated to the overarching topic of an objective quantification of psychological characteristics. We deal specifically with the question of which measurement level can be achieved and appropriately interpreted in such a quantification approach, for example, as proposed quite prominently by Stevens (Citation1946, Citation1958) for social science measurement theory. However, precisely the issues addressed by Stevens’s taxonomy of scale levels were controversial even before Stevens and continue to be the subject of debate in scientific psychology (see e.g. Feuerstahler, Citation2023; Lord, Citation1953; Mausfeld, Citation1994; Trendler, Citation2009; von Kries, Citation1882; Zand Scholten & Borsboom, Citation2009, just to name a few). These contributions touch on fundamental epistemological questions and questions of scientific measurement theory, and they reach so far back into history, not only that of psychology, that the controversy about the measurement of the Psychic and its scale level can be said to have accompanied psychology throughout its scientific development.

Thus, since the beginnings of scientific psychology, specifically with the foundation of Fechner’s psychophysics (see Fechner, Citation1858), there has been an ongoing, lively, and sometimes controversial debate on the idea of measurement in psychology (e.g Campbell, Citation1921; Cliff, Citation1992; Humphry, Citation2011; Kyngdon, Citation2008b, Citation2011b; Maraun, Citation1998; Markus & Borsboom, Citation2012; Maul et al., Citation2016; Michell, Citation2005, Citation2006, Citation2021; Saint-Mont, Citation2012; Salzberger, Citation2013; Schönemann, Citation1994; Sijtsma, Citation2012; Thomas, Citation2020; Trendler, Citation2009, Citation2019b; Uher, Citation2018, Citation2021b, Citation2022; Velleman & Wilkinson, Citation1993, just to name some). There are so many (more) contributions in the literature on this topic of measurement, not just psychological measurement, and its appropriate level that a more or less complete bibliography alone could easily go beyond the bounds of a journal article. We will therefore limit ourselves here to a few basic sources that address central concepts and ideas, knowing full well that some readers may miss something here.

Although there seems to be broad acceptance that Fechner’s approach to quantifying the Psychic ($\Psi$) established a fundamental paradigm shift toward quantification in the history of psychology (e.g. Michell, Citation1999), there is only limited consensus about the particular substantive conclusions derived from this paradigm, for example, with respect to measuring the Psychic ($\Psi$), or in modern language, psychological traits, on a so-called “metric” interval scale. This paper first revisits this fundamental critique of the metric measurability and interpretation of psychological variables and, second, builds a bridge to current issues of scalability and the extent to which outcome variables can be interpreted on certain scale levels, as prominently proposed, for example, by Stevens (Citation1946).

Since the measurement principles of scientific psychology were strongly oriented toward those of the natural sciences when they were founded (e.g. Cornejo & Valsiner, Citation2021), we consider it appropriate in this essay to embed the controversy surrounding the measurement of the Psychic in a somewhat larger, also scientific-theoretical framework. This will show that even the seemingly rock-solid foundation of, for example, metric measurement in physics can also be seen as an implicit circular argument, at least from a strictly epistemological perspective. With regard to this statement, which may seem somewhat surprising to the reader at this point, reference is made, for example, to comments made by Böhme (Citation1976) on the foundations of quantification in the natural sciences as already discussed in Duhem (Citation1908).

We shall begin by examining the theoretical foundations of measurement, starting with its application in the domain of natural sciences. Through a concise outline, we will highlight key milestones in the historical development of the concept of measurement, both in general and with a focus on psychology. In addition, we will delve into two dominant scaling models extensively utilized in psychometrics, presenting their implications and paradoxes.

In order to adequately address the specific questions about measurement in psychology and to embed them in a broader scientific theoretical framework, this paper is organized as follows. In the following section, we outline the social origins and scientific theoretical foundations of measurement. In the form of a brief outline, a few milestones and examples in the historical development of the concept of measurement in general and its embedding in social developments over the last centuries are presented (Frängsmyr et al., Citation1990). We will not go into too much detail about the technical aspects of physical measurement operations in the natural sciences but rather concentrate on the epistemological implications. Second, we address some central aspects of approaches to measuring $\Psi$. Third, we specifically address two basic scaling procedures that are widely used in psychometrics and present their implications and paradoxes in the context of the measurement principle of psychological research.

In a final empirical part, a small simulation study will be conducted to illustrate the theoretical issues presented in the previous sections. In the concluding discussion, we will outline the main implications for practical measurement tasks in psychology and give some suggestions for the interpretation of scaling results in psychological and social science research. The prospects for further research will be presented as a conclusion.

Human perception, social discourse and the rise of the ideal of quantification in the natural sciences

Human perceptions and the social exchange regarding our perceptions about the “external world” are fundamental to our collective existence. When individuals discuss their perceptions of the world, they frequently employ spatial metaphors (e.g., “by far” the best performance, “highest” price, hitting a new “low,” etc.), suggesting that such linguistic spatial metaphors mirror underlying mental metaphors – a hypothesis supported by empirical evidence (see e.g., Casasanto & Bottini, Citation2014; Fischer & Shaki, Citation2014; Starr & Srinivasan, Citation2021, for a review). These findings are central to our present paper. First, they address the fundamental question of whether there is any possibility of “objectivity” in shared human perceptions – in essence, “what can we know” (with certainty)? Secondly, such spatial metaphors resonate with Kant’s thesis that the fundamental structures of perceived reality – space and time – are prescribed a priori in our way of perceiving the world. Beginning with an exploration of the objectification of perception, we will discuss these two central aspects in the theoretical sections of this paper.

The onset of the Enlightenment sparked a growing interest in objective methods of perceiving and measuring the world. In this briefly outlined context, the shift toward “the rational” view of the world marked the beginning of humans’ objective measurement of the shared environment, laying the foundation for systematic natural sciences (e.g., Frängsmyr et al., Citation1990; Mason, Citation1956). This almost revolutionary commencement of human-led measurement of the shared environment not only influenced the emerging scientific community but also left a profound impact on broad segments of society and public discourse. It continues to inspire nonscientific authors to produce reflective literary works on the origins and ascent of measuring the external world (see e.g., Kehlmann, Citation2007). Francis Bacon (1561–1626) is often cited in contemporary writings on the history of science as a prominent figure symbolizing the shift toward new scientific paradigms at the end of the Middle Ages (e.g., Böhme, Citation1993; Mason, Citation1956; Meinel, Citation1984). With his Novum organon scientiarum, published around 1620 (see Bacon, Citation1762, for an available reference), Bacon established a new logic of scientific invention. In his work, Bacon documents instruments and principles for arriving at new (scientific) discoveries in orderly, systematic steps (e.g., Meinel, Citation1984). However, it must be critically noted that (1) there were already scientifically-based inventions before Bacon (gunpowder, the compass, …) and that (2) Bacon’s role as the founder and representative of a new scientific approach is overstated (e.g., Frost, Citation1927; von Liebig, Citation1874). 
For instance, in one treatise, von Liebig (Citation1874) highlights Bacon’s critical perspective on the empirical research method, noting that active natural scientists of his time knew nothing of Bacon, and that there is to this day no clear evidence of his active influence on natural science (von Liebig, Citation1874). In this context, Mason (Citation1956) observes that Bacon, serving as Lord Chancellor of England under King James I, was not primarily a natural scientist. Instead, he was among the first to recognize the historical importance and potential of science, as well as its transformative role. Consequently, he endeavored to engage others in science to actualize these potentials (Mason, Citation1956, p. 110). In this vein, the innovative perspective introduced by Francis Bacon can be perceived at a meta-level as the groundwork for a novel scientific theory (Böhme, Citation1993). Specifically, this entailed the inception or promotion of a new methodology for fostering innovations and advancing knowledge.

According to Bacon, scientists were thus no longer merely connoisseurs in regard to a fixed canon of knowledge and Aristotelian logic, but publicly exposed members of a newly forming society that was increasingly turning toward the rational, who had to (constantly) expand the corpus of collective knowledge by adding new contributions to (scientific) knowledge. This new and now also critical view of “new” science is prototypically demonstrated much later in modern times by Max Weber who stated: “Every scientist knows that what he has contributed to knowledge will be obsolete in 10, 20, 50 years” (Weber, Citation1919, p. 14). After almost 300 years of cultivating and maturing the principles of enlightened natural sciences since Bacon, Max Weber provides a disillusioned analysis of the progress made by science, particularly as it manifests in the living conditions of the modern age. For Weber, the “disenchantment of the world” [Entzauberung der Welt] (Weber, Citation1919, p. 16) is the fate of this modern age, characterized by rationalization and intellectualization, which are now taken for granted as core elements of science. Thus, for Weber, the progress in knowledge and technology associated with systematization and rationalization also implies an increasing responsibility of science toward society, particularly in ensuring that what emerges from scientific work is considered important in the sense of being “worth knowing” [wissenswert] (Weber, Citation1919, p. 21) – not only for the individual but also for society.

Epistemological distinction between intensive and extensive measures

The initiation of systematization in acquiring scientific knowledge, often attributed to Francis Bacon, is evident in the growing interest not only in the practical aspects of measurement but also in its epistemological implications. This interest pertains to understanding both the nature of what is being measured and the properties of these measurements. Roughly a century and a half after Bacon, it was Immanuel Kant (1724–1804) who, in his seminal 1781 work “Critique of pure reason” [Critik der reinen Vernunft], engaged with Aristotelian concepts of quality and quantity. Within his “Axioms of perception” [Axiomen der Anschauung] and “Anticipations of perception” [Anticipationen der Wahrnehmung] (Kant, Citation1781, pp. 162–176) he distinguished between intensive and extensive measures of natural entities (see also Böhme, Citation1974). The core idea behind the distinction between extensive and intensive measures [intensive Größen] stems from Kant’s thesis that the fundamental structures of reality, space and time, are prescriptions of our way of perceiving the world (a priori). Extensive measures [extensive Größen] are not intrinsic properties of things themselves; rather, they are ascribed to things relative to our mode of cognition and perception, emerging from our own minds as a distinctly human form of perception. In Kant’s own words, “All phenomena contain, in their form, a perception in space and time, which is their overall a priori basis. They can therefore be apprehended in no other way, i.e., they cannot be taken up into empirical awareness …” [Alle Erscheinungen enthalten der Form nach eine Anschauung im Raum und Zeit, welche ihnen insgesammt a-priori zum Grunde liegt. Sie können also nicht anders apprehendirt, d. i. ins empirische Bewusstsein aufgenommen werden …] (Kant, Citation1911, p. 148) or “I call an extensive measure the one in which the conception of the parts makes the conception of the whole possible …” [Eine extensive Grösse nenne ich dieienige, in welcher die Vorstellung der Theile die Vorstellung des Ganzen möglich macht …] (Kant, Citation1781, p. 162). It is precisely this last quote from Kant’s work that to a certain extent anticipates Fechner’s (Citation1858) approach to quantifying the Psychic and also points the way to current conceptualizations of (fundamental) measurement, such as those later undertaken by von Helmholtz (Citation1887) and Hölder (Citation1901) to link the definition of measurement to real objects.

Intensive measures, on the other hand, are, as anticipations of perception, subjective imaginations which correspond to real objects insofar as they provide sensation. In Kant’s own words, “The principle which anticipates all perceptions, as such, is called thus: In all phenomena the sensation, and the real which corresponds to it in the object, (realitas phaenomenon) has an intensive magnitude, i.e., a degree.” [Der Grundsatz, welcher alle Wahrnehmungen, als solche, anticipirt, heißt so: In allen Erscheinungen hat die Empfindung, und das Reale, welches ihr an dem Gegenstande entspricht, (realitas phaenomenon) eine intensive Grösse, d. i. einen Grad.] (Kant, Citation1781, p. 166).

Kant’s early epistemological distinction between intensive and extensive measures finds its equivalent in current natural science, particularly in physics and metrology. Extensive measures, such as length and weight, are understood to depend on the size of the system under consideration, representing properties that empirical objects can show with variations in magnitude (Hölder, Citation1901; Trendler, Citation2009; von Helmholtz, Citation1887). Kant termed these measures appearances of the real world. Additive metric measurement for these extensive measures is based a priori on the peculiarity of our human perceptual apparatus, adapted to perceive ourselves and our environment in three-dimensional spatial and temporal continuity. Such additivity results from the perceived additive relationship of the properties of empirical objects, observed in operations such as placing weights in the same weighing pan or aligning measuring rods (see e.g. Sherry, Citation2011, p. 518). Aristotle already recognized that length and weight have parts, allowing for their addition and subtraction in empirical contexts. Thus, the metric measurement of extensive quantities is reduced to counting concatenated units, equivalent in their implied order relationship (Hölder, Citation1901). For instance, the metre was defined by the French National Assembly as the forty-millionth part of the earth’s meridian passing through Paris and, from 1889 onward, was embodied in the “International Prototype Metre”, a bar made of a platinum-iridium alloy (cf. Hoppe-Blank, Citation2015, p. 7).

On the other hand, temperature, distinct from heat or thermal energy and measured in degrees Celsius or Fahrenheit, is considered an intensive quantity. Intensive quantities are physical measures that remain constant regardless of the amount of substance present in a system, unlike extensive quantities, which vary in proportion to the system’s size. While humans can measure extensive quantities, like length, using manifest reference objects and natural units, the measurement of other physical quantities, such as temperature, is not as straightforward due to the lack of perceptible units without additional assumptions. Unlike length, temperature scales cannot be divided into countable parts, making it nontrivial to determine whether thermometer measurements can be added or subtracted. For example, Sherry (Citation2011) pointed out that a metric interpretation of temperature measurement relies on theoretical model assumptions (see also Chang, Citation1995, Citation2004), particularly in substance science, regarding the physical properties of the measuring liquids used, typically alcohol or mercury.
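The contrast between the two kinds of quantity can be made concrete with a minimal Python sketch. It rests on idealizing assumptions that are not part of the text above: two samples of the same liquid with identical specific heat are poured together without any heat loss. The class `WaterSample` and the function `combine` are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WaterSample:
    mass_kg: float        # extensive: grows with the amount of substance
    temp_celsius: float   # intensive: independent of the amount of substance


def combine(a: WaterSample, b: WaterSample) -> WaterSample:
    """Pour two samples together (idealized: same specific heat, no losses)."""
    total_mass = a.mass_kg + b.mass_kg  # masses concatenate additively
    # The mixture settles at the mass-weighted mean temperature, not the sum.
    mixed_temp = (a.mass_kg * a.temp_celsius
                  + b.mass_kg * b.temp_celsius) / total_mass
    return WaterSample(total_mass, mixed_temp)


a = WaterSample(mass_kg=1.0, temp_celsius=20.0)
b = WaterSample(mass_kg=3.0, temp_celsius=60.0)
mixed = combine(a, b)

print(mixed.mass_kg)       # 4.0
print(mixed.temp_celsius)  # 50.0
```

Concatenating the samples adds their masses, so counting mass units is empirically meaningful. The thermometer readings, by contrast, do not add: the result of 50 degrees is obtained only through a physical model of heat exchange, which is the kind of additional theoretical assumption discussed above.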

Circular reasoning when measuring in science

According to Chang (Citation2004), the realization that a well-founded model or theory of the physical properties of the measuring liquids is required as a basis for classical thermometers can be traced back to early observations by the Dutch physician and chemist Herman Boerhaave (1668–1738). Boerhaave, who possessed two thermometers, one filled with mercury and one with alcohol, both made by Daniel Gabriel Fahrenheit, noted discrepancies between the values shown by the two instruments. Although both thermometers had the same scale endpoints for the freezing and boiling points of water on a 100-point scale, Fahrenheit was initially unable to explain why they showed different values for empirically identical temperatures. The realization that these discrepancies were ultimately due to the varying expansion coefficients of the measuring liquids, which change depending on the temperature level, was not self-evident in Boerhaave and Fahrenheit’s time. As Chang (Citation2004) further explains, the standard calibration procedure for thermometers at that time merely involved establishing two scale endpoints on the column of liquid used for measurement, such as the freezing and boiling points of water, while implicitly assuming a linear relationship between temperature rise and liquid expansion. However, this overlooks the fact that the entire principle of temperature measurement is justified only by the model assumption of a constant expansion coefficient over the entire measurement range, which is made a priori within the framework of scientific theory. For such a theory to serve as a valid basis for measurement, empirical evidence for it would first need to be established, which in turn would require an already validated thermometer. This epistemological circularity of measurement definition, referred to by Chang (Citation1995, Citation2004) as the problem of “nomic measurement,” also exists in other areas of physics and the natural sciences in general. 
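The discrepancy between two thermometers calibrated at the same fixed points can be illustrated with a small Python sketch. The expansion coefficients below are invented for illustration and do not describe mercury or alcohol; the function `make_thermometer` is likewise a hypothetical helper. Note that the sketch has to presuppose a "true" temperature as input, which is exactly the information that is unavailable in the situation described above.

```python
def make_thermometer(a: float, b: float):
    """Return a reading function for a liquid with volume V(T) = 1 + a*T + b*T**2.

    The coefficients are hypothetical. Calibration uses only the two fixed
    points 0 and 100 and assumes linear expansion in between.
    """
    def volume(t: float) -> float:
        return 1.0 + a * t + b * t * t

    v0, v100 = volume(0.0), volume(100.0)

    def reading(true_temp: float) -> float:
        # Position of the liquid column, rescaled linearly to a 0-100 scale.
        return 100.0 * (volume(true_temp) - v0) / (v100 - v0)

    return reading


liquid_a = make_thermometer(a=1.8e-4, b=0.0)     # constant expansion coefficient
liquid_b = make_thermometer(a=1.0e-4, b=1.0e-6)  # coefficient grows with temperature

for t in (0.0, 50.0, 100.0):
    print(t, round(liquid_a(t), 2), round(liquid_b(t), 2))
```

Both instruments agree at the two calibration points by construction, yet at the midpoint the first reads 50 and the second 37.5 under these made-up coefficients. Deciding which reading is "correct" requires a theory of the liquids' expansion, and testing that theory requires a trusted thermometer.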
Moreover, with regard to measuring energy in quantum mechanics, Chang (Citation1995) pointed out that the direct use of a physical law, derived from substance science theory, for the purpose of measurement creates a problem of circularity. The law (the theory) needs to be empirically tested in order to ensure the reliability of measurement, but testing the theory requires that we already know the quantitative properties of the quantity to be measured. Therefore, the seemingly simple statement, typically used within supposedly universally valid and broad definitions of measurement, that a measurement of a certain set belongs to a certain system only makes sense from the point of view of substance science if this system has already been classified as belonging to the relevant domain via a “justified” proposition based on theory (Van Fraassen, Citation2008). In this respect, the statement remains theoretical and ultimately depends on our (a priori) accepted theories and classifications of physical, and indeed mental or perceptual, systems. If our theories change, the conditions for the truth of this statement also change (cf. Van Fraassen, Citation2008, p. 143). Admittedly, the theory dependence of measurement statements does not manifest as conspicuously in the context of established measurement procedures that are widely accepted and taken for granted. Consider the example of measuring temperature: in everyday contexts, the practical benefits of using thermometers are generally acknowledged, even in the absence of a deep epistemological understanding, given the relatively well-established and stable underlying theory. However, from a strictly epistemological standpoint, the theoretical underpinnings of measurement statements remain crucial. This interdependence becomes particularly evident in scenarios where a theory is novel and potentially at odds with an existing framework addressing the same phenomenon. An extended, or rather alternative, definition of measurement that takes this into account is given by Van Fraassen (Citation2008), according to which “measurement is an operation that locates an item, already classified as in the domain of a given theory, in a logical space, provided by the theory to represent a range of possible states or characteristics of such items” (Van Fraassen, Citation2008, p. 164). In such cases, the behavior of any measurement device falls within the domain of the a priori theory itself, and the criteria for interpreting it as a physical, observable correlate of the measurement are described in terms of the theory itself, which constitutes circular reasoning, at least from a strict epistemological perspective (see also e.g., Böhme, Citation1976; Duhem, Citation1908). 
From the negation of this obvious paradox, which in the end is only covered by the acceptance and stability of the background theories forming the respective measurement context, the positivist illusion was derived that we could describe the measurement processes and their results free of any theoretical content (Van Fraassen, Citation2008).

Measuring the psychic, and the illusion of metric scale levels for $\Psi$

As discussed in the previous section, even in natural sciences like physics, the issue of circularity can arise during the establishment of unique measurements (metrification) if their operationalization relies on observed physical laws in the empirical domain. Furthermore, for an extensive measure, identifying units of equal magnitude is a necessary precondition for quantification and fundamental measurement (cf. Hölder, Citation1901; Trendler, Citation2019a; von Helmholtz, Citation1887). This process of fundamental measurement, which hinges on units of equal magnitude, essentially boils down to the simple act of counting concatenated units. Yet throughout roughly the last 100 years of attempts to develop psychology as a quantitative science (Campbell, Citation1921), doubts have been raised as to whether, and if so how, this might be accomplished (Díez, Citation1997a, Citation1997b).

Confusion in this debate may stem from the ambiguous use of the term “metric”. Adroher et al. (Citation2018), based on a systematic literature review, conclude that “metric” is employed in various ways, without a consensus on whether its meaning implies interval scale properties or “only” ordinal or ordered scales. To foster clarity, we draw on the definition of “metric” in a metric space from the subfield of topology in mathematics.

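Let $X$ be a non-empty set. A mapping $d: X \times X \rightarrow \mathbb{R}$ is called a metric on $X$ if for all $x, y, z \in X$ the following conditions hold:

$$d(x, y) = 0 \Leftrightarrow x = y \tag{1}$$

$$d(x, y) = d(y, x) \tag{2}$$

$$d(x, z) \leq d(x, y) + d(y, z) \tag{3}$$

where $d$ is a mapping function and the pair $(X, d)$ is called metric space.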
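The conditions of a metric (a distance of zero only between identical points, symmetry, and the triangle inequality) can be checked numerically on a finite sample. The following Python sketch is an illustration under stated assumptions: the function `is_metric` and the two candidate distance functions are hypothetical names, and a finite check of this kind can refute the metric property but never prove it for the whole set.

```python
from itertools import product
from typing import Callable, Iterable


def is_metric(points: Iterable[float],
              d: Callable[[float, float], float],
              tol: float = 1e-12) -> bool:
    """Check the metric conditions on a finite sample of points."""
    pts = list(points)
    for x, y in product(pts, repeat=2):
        if (d(x, y) == 0) != (x == y):   # zero distance only for identical points
            return False
        if d(x, y) != d(y, x):           # symmetry
            return False
    for x, y, z in product(pts, repeat=3):
        if d(x, z) > d(x, y) + d(y, z) + tol:   # triangle inequality
            return False
    return True


def absolute_difference(x: float, y: float) -> float:
    return abs(x - y)


def squared_difference(x: float, y: float) -> float:
    return (x - y) ** 2


sample = [-2.0, 0.0, 0.5, 3.0]
print(is_metric(sample, absolute_difference))  # True
print(is_metric(sample, squared_difference))   # False
```

The absolute difference passes all checks on the sample, whereas the squared difference violates the triangle inequality (for example, 25 exceeds 4 + 9 for the points -2, 0 and 3). Both functions operate purely on numbers, so the check says nothing about whether the objects those numbers are assigned to behave metrically.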

Despite the precision and clarity provided by a mathematical definition of a metric, it is essential to clarify that this definition initially applies solely to the mathematical number space, such as the set of real numbers $\mathbb{R}$. This space provides a numerical representation for the measured values of extensive physical quantities like length or mass. This representation aligns with the classical concept of measurement, which involves counting units and is justified by the potential for concatenating physically tangible objects.

For the measurement of extensive quantities in physics such as mass and length, Hölder (Citation1901) was able to prove that precisely the given additive connection of these physical characteristics in the tangible, phenomenological object domain implies a 1:1 connection to the mathematical metric space or system of positive real numbers. This fact was first formalized for the mathematical field by other authors, such as Bertrand Russell (cf. Russell, Citation1903/2010), and was later called isomorphic mapping in the field of social-scientific, psychological measurement in the context of the so-called representation theory of measurement (cf. Michell, Citation1993; Stevens, Citation1958; Weitzenhoffer, Citation1951). In tracing the development of the representational theory of measurement, Michell (Citation2021) points out that this theory originally developed from the endeavor of mathematics to establish itself as independent of its applications in empirical science and was later picked up, in particular by authors like Stevens (Citation1946, Citation1958), to give psychology the status of a quantitative science through the reconstruction of measurement as the numerical coding of psychological characteristics from the phenomenological domain.

The fundamental concept underlying the representational theory of measurement is that of a relational system or structure. A relational system consists of a non-empty set of elements, referred to as the domain of the relational system, and relationships between these elements. In his seminal work on scientific mathematical models, Tarski (Citation1954) provided a comprehensive definition of a relational system, framing it within the context of a mathematical structure. Tarski (Citation1954) defines a relational system as follows, while noting that such relational systems are also termed algebras:

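By a relational system, we understand an arbitrary system (sequence) $\mathfrak{R} = \langle A, R_0, \ldots, R_\xi, \ldots \rangle$ in which $A$ is a non-empty set, $R_0, \ldots, R_\xi, \ldots$ are finitary relations, and each relation $R_\xi$ is included in $A^{\nu_\xi}$ where $\nu_\xi$ is the rank of $R_\xi$; the type of the sequence $\langle R_0, \ldots, R_\xi, \ldots \rangle$ is called the order of $\mathfrak{R}$

(Tarski, Citation1954, p. 573)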

According to the foundations of the subdiscipline of topology in mathematics (see Fréchet, Citation1906; Hausdorff, Citation1914, for early references), which focuses on formally defining such metric spaces, the set of real numbers $\mathbb{R}$ forms a universal numerical structure, serving as a metric space. This structure enables the representation as a relational system of any one-dimensional quantitative entity within a formal framework.

In the representation theory of measurement, particularly within the context of social science measurement, two types of relational systems are posited: an empirical relational system (ers) and a numerical relational system (nrs). These systems are crucial to the framework of the representation theory, necessitating a plausible and, importantly, empirically supported mapping function to connect the empirical structure with the numerical one.
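What such a mapping function between an empirical and a numerical relational system has to achieve can be sketched in a few lines of Python. Everything in the example is hypothetical: four rods stand in for the empirical objects, a lookup table stands in for the observed outcomes of pairwise comparisons, and `represents_order` is an illustrative helper that tests only the ordinal relation, not any concatenation operation.

```python
from itertools import product

# Hypothetical empirical relational system: four rods and the observed
# outcome of pairwise comparisons ("a is at least as long as b").
rods = ["r1", "r2", "r3", "r4"]
observed_rank = {"r1": 0, "r2": 1, "r3": 1, "r4": 2}  # stands in for observations


def at_least_as_long(a: str, b: str) -> bool:
    return observed_rank[a] >= observed_rank[b]


def represents_order(objects, empirical_geq, f) -> bool:
    """True if the assignment f maps the empirical order onto >= in the reals."""
    return all(empirical_geq(a, b) == (f[a] >= f[b])
               for a, b in product(objects, repeat=2))


scale_1 = {"r1": 1.0, "r2": 2.0, "r3": 2.0, "r4": 3.0}
scale_2 = {"r1": 0.1, "r2": 5.0, "r3": 5.0, "r4": 5.5}  # monotone transform of scale_1
scale_3 = {"r1": 1.0, "r2": 3.0, "r3": 2.0, "r4": 2.5}  # contradicts the observations

print(represents_order(rods, at_least_as_long, scale_1))  # True
print(represents_order(rods, at_least_as_long, scale_2))  # True
print(represents_order(rods, at_least_as_long, scale_3))  # False
```

The first two assignments represent the observed order equally well although the differences between their values are entirely different. As long as the empirical system contains only an order relation, those numerical differences have no empirical counterpart; additional empirical relations, such as concatenation, would be needed to single out one assignment.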

Thus, despite the precision and clarity of the mathematical definitions provided for a metric and metric spaces, it is crucial to acknowledge that these initially pertain only to the numerical relational system (nrs). While such definitions offer a formal framework for the principle of unit counting in measurements, they do not address whether the defined metric is applicable within the empirical relational system (ers). In this context, a crucial question arises: how can we establish a connection, in terms of a mapping function, between the empirical object domain (the empirical relational system) and the numerical relational system? This challenge is pertinent for capturing or measuring characteristics like $\Psi$ that can only be identified indirectly, such as human feelings, mental processes, or attitudes. The strategies employed in psychology to address this issue are diverse and have been integral to the discipline since its inception.

An early representative of this question was Gustav Theodor Fechner (1801–1887). Fechner’s initial foray into measuring the Psychic, as detailed in his works (see Fechner, Citation1858, Citation1860a, Citation1860b), was likely driven by the goal of identifying a fundamental unit as the basis for quantifying psychic phenomena $\Psi$. Indeed, Fechner not only recognized that pinpointing such a unit was crucial for legitimizing the measurement process in psychology by enabling the counting of units, but also understood that psychic measurement necessitated an indirect approach. He compared it to the indirect measurement of temperature in physics, where temperature is inferred from the expansion of a liquid – a process that, in line with Kant’s theory, categorizes temperature as an intensive quantity. In his seminal 1858 essay “The Psychic Measure” (cf. “Das Psychische Maß”; Fechner, Citation1858), Fechner outlines his measurement methodology, emphasizing the project’s objective as follows:

In fact, it will be shown how our psychic measure in principle amounts to nothing other than the physical, to the counting of how many times the same thing occurs. We would, of course, try in vain to make such a direct count: Sensation does not divide itself into equal inches or degrees that we could count.

[In der That wird sich zeigen, wie unser psychisches Maß prinzipiell auf nichts anderes hinauskommt, als auf das physische, auf die Zählung eines Wievielmal des Gleichen. Umsonst freilich würden wir versuchen, eine solche Zählung direct vorzunehmen: Die Empfindung theilt sich nicht in gleiche Zolle oder Grade ab, die wir zählen könnten.]

(Fechner, 1858, p. 2; German spellings as in the original)

Fechner sought to connect mental sensations with physical stimuli by quantifying them with predefined physical and metric units of measurement. In essence, he aimed to make mental qualities measurable by defining a fundamental mental unit through a mapping function that connects psychic phenomena ($\Psi$) with physical phenomena. In fact, Fechner himself described the psychophysical function he identified as a fundamental formula [Fundamentalformel] (see Fechner, 1860b, pp. 9–10) which, on the basis of Weber’s law, formalizes the relationship between the (smallest) unit of the psychic experience ($d\gamma$), the respective physical unit ($d\beta$), and the actual physical stimulus intensity ($\beta$) measured in the latter units (see Equation 4):

$$d\gamma = k \cdot \frac{d\beta}{\beta} \tag{4}$$

Fechner considered the two smallest units $d\gamma$ and $d\beta$ of the two scales $\gamma$ and $\beta$ as differentials. To justify this approach, Fechner (1860b) writes as follows:

The fundamental formula developed at the beginning of the previous Chapter […] is based on experiments on differences which are at the limit of the intelligible. According to this, $d\gamma$ and $d\beta$ can be considered and treated as differentials in it.

[Die im Eingange des vorigen Kapitels entwickelte Fundamentalformel […] stützt sich auf Versuche über Unterschiede, welche an der Gränze des Merklichen stehen. Hienach können $d\gamma$ und $d\beta$ in ihr als Differenziale betrachtet und behandelt werden.]

(Fechner, 1860b, p. 33; German spellings as in the original; the omission refers to Equation 4)

Through integration, Fechner arrives at Equation 5:

$$\gamma = k \cdot \log_e \beta + C \tag{5}$$

Fechner replaces the integration constant $C$ in Equation 5 by introducing a lower stimulus threshold $b$, at and below which the sensory intensity is $\gamma = 0$ – i.e., the person perceives nothing. Mathematically, this corresponds to setting Equation 5 to zero, whereby Fechner uses $\beta = b$, so that Equation 6 results by solving for $C$:

$$C = -k \cdot \log_e b \tag{6}$$

Substituting Equation 6 into Equation 5 finally results in Equation 7 after factoring out $k$ and transforming according to the calculation rules for logarithms:

$$\gamma = k \cdot \log_e \frac{\beta}{b} \tag{7}$$

In the equations above, $\gamma$ and $\beta$ stand for the psychological and the physical scale, respectively, each based on its fundamental unit; $e$ is Euler’s number as the base of the natural logarithm; and $k$ is a scaling factor that is directly related to Weber’s constant and therefore varies depending on the sensory modality.

However, perhaps overshadowed by Fechner’s mathematically ingenious derivation, it is often overlooked that this approach relies on a simple yet significant additional assumption: by mapping the physical stimulus intensities (on the $\beta$ scale of the stimulus), which increase geometrically, onto the arithmetic $\gamma$ scale of sensation, the psychological equality of sensory units across the entire perception continuum is assumed. One must honestly state that this assumption lacks empirical substantiation. It parallels the empirically unproven assumption of a linear model when attempting to measure temperature via the (length of) expansion of a measurement liquid at different temperatures (see the above section).
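The mapping of a geometric stimulus series onto an arithmetic sensation series can be made concrete with a minimal sketch. The function name `fechner_sensation`, the threshold of 1.0, the scaling factor of 1.0, and the doubling stimulus series are illustrative assumptions and are not taken from Fechner or from the present article.

```python
import math


def fechner_sensation(stimulus, threshold=1.0, k=1.0):
    """Logarithmic law: sensation = k * ln(stimulus / threshold).

    `threshold` and `k` are hypothetical values chosen for illustration.
    """
    if stimulus <= 0 or threshold <= 0:
        raise ValueError("stimulus and threshold must be positive")
    return k * math.log(stimulus / threshold)


# Geometric stimulus series: each intensity is twice the previous one.
stimuli = [1.0 * 2 ** n for n in range(6)]

# Under the logarithmic law the corresponding sensations form an
# arithmetic series: every doubling adds the same increment k * ln(2).
sensations = [fechner_sensation(s) for s in stimuli]
steps = [later - earlier for earlier, later in zip(sensations, sensations[1:])]
```

The equal increments in `steps` follow from the formula alone. Whether these numerically equal steps correspond to psychologically equal sensory units is exactly the assumption that the formula cannot establish.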

Among the early critics of Fechner’s approach to measuring the Psychic is von Kries (1882). Referring to the Psychophysical Law established by Fechner (1860a, 1860b), he contends that Fechner’s apparent demonstration of measuring $\Psi$ on a metric scale ultimately relies on implicitly made (axiomatic) assumptions. von Kries (1882) defines the measurement process proposed by Fechner as the setting equal of the non-identical, referring to an entity ($\Psi$) and its measure, which in today’s psychometric measurement approaches is typically termed $\theta$ (Footnote 1). In his critique of Fechner’s approach, von Kries (1882) distinguishes between theoretical and practical measurability. The former addresses the fundamental question of the existence of equalities (fundamental units) in the entity $\Psi$ to be measured. From today’s perspective, it can be noted that von Kries’ criticism ultimately boils down to the problem of nomic measurement as formulated by Chang (1995, 2004). In other words, the theory, in Fechner’s case the assumption of the equality of sensations, falls into the domain of the measurement itself.

While Fechner focused on measuring stimulus-related sensations in psychological research, contemporary psychological and social science measurement largely centers on evaluating constructs such as personality traits, personal attitudes, cognitive abilities, political orientations, and other opinion patterns. These constructs may also encompass varying numbers of dimensions. The constructs or their single dimensions are usually assessed using questionnaires (e.g., Heine, 2020). Conceptually similar statements (items) are aggregated into psychometric scales, which are subsequently interpreted as a quantitative representation of a latent variable through scaling. The underlying concept is to regard individual items as the smallest unit of measurement (Osterlind, 1990), serving as manifest indicators of latent variables that are not directly observable in surveyed individuals (Kromrey, 1994). In empirical social science research, these latent variables represent dimensions of theoretical constructs introduced to explain the observed relationships among items, which are considered as manifest indicators (e.g., Borsboom, 2008). The primary objective is to quantify the various manifestations in the latent variables into numerical indices, thereby ostensibly measuring the corresponding trait expressions (e.g., Narens & Luce, 1986).

Now, let’s consider a slightly different definition of measurement within the framework of latent variables, contrasting it with the traditional notion of measurement as the counting of units. We will examine the classical definition of measurement provided by Stevens (1958). In the context of his definition of scale levels, Stevens (1958) states:

In its broadest sense, measurement is the business of pinning numbers on things. More specifically, it is the assignment of numbers to objects or events in accordance with a rule of some sort. (Stevens, 1958, p. 384)

While this definition appears generally comprehensive and valid from an operationalistic perspective (but see Kantor, 1938; Koch, 1992), its crucial weakness lies in the lack of specificity in the final part of the statement, particularly in the phrase “with a rule of some sort.” Whereas Fechner, in measuring $\Psi$, attempts to form a unit for mental perception and thus ultimately establishes a very specific rule for the “pinning numbers on things,” a comparably specific and theoretically justified rule for questionnaire items initially remains undefined. Instead, a comparatively trivial assignment scheme is typically used during questionnaire evaluation as part of a so-called “per fiat” or “bona fide” measurement, in which the response categories of an item are simply assigned consecutive integers. The process of applying such rules, or similar ones, is commonly referred to as “scoring” (cf. Leunbach, 1961). At the core of this procedure lies the question of which scale level is implicitly assumed when assigning numbers to empirical phenomena. The scale level remains undetermined during scoring, as the verbal content of the items is first translated into a symbolic space. Subsequently, in the data analysis phase, this symbolic representation can be treated either as “numbers” or merely as “numerals” (see, e.g., Uher, 2022). Thus, as Berglund et al. (2012) noted, there are two main strands of metrology in psychology: psychophysics and psychometrics. Although these two metrological approaches have different foundations, both rely on the shared assumption of the metric quantifiability of $\Psi$. While Fechner’s psychophysics is grounded in physics (cf. Fechner, 1858, 1860a, 1860b), psychometrics relates to the principles of social science data analysis using statistical methods.

An entertaining and classic example of the indeterminacy of the scale level of the resulting data (matrices), and of the ensuing confusion over whether symbolic representations are to be interpreted and statistically treated as “numbers” or “numerals,” can be found in Lord’s (1953) thought experiment on the statistical treatment of the shirt numbers of soccer players. In this thought experiment, the symbolic representation of soccer shirt “numbers” was initially intended solely for player identification, aligning with a nominal scale level. However, one soccer team interprets these symbols as “numbers” in the mathematical sense, particularly when comparing the average size of its numbers to those of another team. This difference in interpretation sparks a contentious debate over whether calculating the mean values of the soccer shirt numbers is appropriate to address the team’s complaint. When consulted, the imaginary statistician in Lord’s experiment responds affirmatively, dismissing the objection based on nominal scale level with the remark, “The numbers don’t know that” (Lord, 1953, p. 751). Indeed, numbers lack inherent knowledge of their intended scale. Moreover, Zand Scholten and Borsboom (2009) demonstrate in a reanalysis of the thought experiment that applying statistical methods that assume a metric scale level to the numerical values of the soccer shirt numbers is indeed valid for evaluating the team’s complaint.

Despite the elegant mathematical derivation provided by Zand Scholten and Borsboom (2009) based on the numerical values of soccer shirt numbers and the ensuing complaint by the soccer team, we believe that the purely mathematical reinterpretation of Lord’s soccer shirt numbers paradox misses the crux of the issue. One might respond pointedly in the spirit of the imagined statistician: while the numbers themselves may lack awareness, the scientific researcher should possess such insight. In other words, numerals, as symbols from a symbolic universe, can encode numbers, order relations, or qualitatively different properties (Uher, 2022, p. 2532). This highlights the importance of understanding the symbolic context and the intended meaning behind numerical representations, and of not confusing them with the research phenomena themselves (e.g., Uher, 2021a).

The example of interpreting soccer shirt numbers in Lord’s thought experiment encapsulates several key points elucidated in our preceding sections. First, the arbitrary interpretation of soccer shirt numbers highlights the fundamental question of deriving an objectively grounded scoring rule for assigning numerical values, as outlined by Stevens (1946). Secondly, the example underscores that the choice of measurement and subsequent statistical methodology hinges on the a priori perspective taken on real-world phenomena, echoing a Kantian notion. The appropriateness of interpreting soccer shirt numbers as either numbers or mere symbols rests on competing theories that offer different explanations for their significance. From a purely “soccer-centric” viewpoint, one might argue for a symbolic space merely facilitating player differentiation. Conversely, the complaint raised by the soccer team suggests a theory linking the average numerical size of shirt numbers to team reputation. In this respect, different interpretations of the data and different evaluation strategies can be justified in this example, depending on the theory pursued – the “soccer-centric” or the “reputation” theory. Thirdly, in our view, the example underlines the necessity for a broadly accepted agreement on a justified a priori theory in methodological and metrological considerations, a concept Chang (1995) terms “nomic measurement.” Depending on the chosen theory, measurement and data analysis approaches diverge, as aptly summarized by the imagined statistician’s quip, “The numbers don’t know that.”

In recent years, a contentious debate has reignited regarding the measurement level of psychological dimensions (e.g., Borsboom & Mellenbergh, 2004; Kyngdon, 2008a, 2011a; Michell, 2000, 2014; Sijtsma, 2012). Fueled by the perceived solid foundation of sophisticated scaling models in item response theory (IRT), there is a prevailing belief that such models ensure quantitative measurement on a metric, specifically interval, scale level. However, regarding interval scale measurement by axiomatizing quantification, Lehman (1983) notes: “The axioms defining the topological or Archimedean properties are empirically untestable ‘technical’ axioms, while the other axioms, e.g., order, are testable” (Lehman, 1983, p. 511). To establish fundamental measurement in psychology and the social sciences, some authors (see, e.g., Green & Rao, 1971; Narens & Luce, 1986) refer hopefully to the principle of additive conjoint measurement (cf. Luce & Tukey, 1964), specifically developed in the framework of representational measurement theory (Kyngdon, 2008b). Moreover, Irtel (1987) argued that the Rasch model, which implies specifically objective comparisons as a fundamental characteristic of science, as pointed out by Rasch (1977), shares some basic principles of conjoint measurement. This conjoint approach was seen as so promising that Green and Rao (1971), for example, went so far as to state that “… conjoint measurement procedures require only rank-ordered input, yet yield interval-scaled output” (Green & Rao, 1971, p. 355). However, as Trendler (2019a) notes, psychology has not yet succeeded in fulfilling even the first condition for a quantity, namely the identification of equal perceptual magnitudes (fundamental units) in $\Psi$, and he thus terms conjoint measurement a “... superfluous method for the investigation of measurability of psychological factors” (Trendler, 2019a, p. 102).

The problem with most applied representational theoretic approaches to psychological measurement lies at their core in neglecting the exploration of actual relations in the empirical phenomenological (object) space. This is also reflected in Stevens’ taxonomy of scale levels (Stevens, 1946, 1958), which is purely defined by admissible transformations in the number space. While these approaches, along with the psychometric models built upon them, offer insights into admissible transformations and mathematical properties, they fail to elucidate the empirical facts these scales are meant to represent (see, e.g., Díez, 1997a, 1997b). The gap between mathematical models and empirical reality has led some critical authors to deem the use of mathematical models for psychological measurement as reasonably ineffective (cf. Schönemann, 1994). Others, such as Campbell (1921), questioned psychology’s status as a science, arguing that it lacks a coherent theory about the scale level of psychological constructs ($\Psi$), distinct from their measurement ($\theta$). In essence, psychometric measurement approaches still suffer from a “lack of bridges between theory and data” (Cliff, 1992, p. 188). Moreover, Kyngdon (2008b) argued mathematically that the Rasch model’s conceptualization of an empirical relational structure does not encompass “empirical objects or events” (Kyngdon, 2008b, p. 100). Similarly, Kyngdon (2011a) demonstrated that the interval scale produced by the Rasch model can still align with the ordinal attributes of the underlying variable.

To conclude our reflections on attempts to quantify the psyche, we draw two interim conclusions: Firstly, spanning over a century, the efforts of scientific psychology have led to the widespread acceptance that inherently invisible (latent) attributes, such as a person’s intelligence, mathematical ability, or reading and writing skills, can be measured. Secondly, the observation of this now widespread acceptance may be viewed as evidence that, in general, relationships exist between spatial metaphors in our language and the conceptualization of abstract terms (such as $\Psi$ here). More specifically, there is also an inverse relationship, suggesting that linguistic metaphors not only reflect the thoughts of speakers but also influence them, altering the way humans conceptualize abstract terms (cf. Casasanto & Bottini, 2014).

However, it remains an open question whether psychological attributes can truly be measured metrically on an interval scale, or, more fundamentally, whether they possess this interval property at all. Furthermore, concerning the Kantian and current metrological distinction between extensive and intensive quantities, we align with Schönemann (1994), who suggested that “social scientists [might] have fundamental measurement, they just do not have any extensive measurement” (Schönemann, 1994, p. 153; addition in square brackets by authors).

Psychometric models and paradoxes

As outlined above, a common foundation for today’s psychometrics and measurement theory is the classification of scale levels according to Stevens (1946). Within this classification, the interval scale holds particular importance because psychological scales are often assumed to adhere to it, with test scores treated as a measure of $\Psi$ on an interval scale level (i.e., “per fiat” measurement). A commonly held viewpoint in the field of psychometrics, especially in item response theory (IRT), is that the logit scale for persons ($\theta$) of the Rasch model (RM; Rasch, 1960) implies an interval scale for $\Psi$, with the Rasch model (Equation 8) given by

$$P(x_{vi}) = \frac{\exp\left(x_{vi}(\theta_v - \sigma_i)\right)}{1 + \exp(\theta_v - \sigma_i)} \tag{8}$$

with $\sigma_i$ as the item parameter (difficulty) for items $i$ and $\theta_v$ as the person parameter for persons $v$. Thus, according to Equation 8, by multiplying the difference between $\theta_v$ and $\sigma_i$ by the ($0, 1$) coded observations (denoted $x_{vi}$) in the numerator, the probability of the occurrence of the two response categories ($x_{vi} \in \{0, 1\}$) is modeled jointly as a function of the parameters $\theta_v$ and $\sigma_i$.
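As a minimal sketch of the dichotomous Rasch model described here, the response probability can be computed directly from a person parameter and an item parameter. The function name `rasch_probability` and the argument names `theta` and `sigma` are labels assumed for this illustration.

```python
import math


def rasch_probability(x, theta, sigma):
    """Probability of response x (0 or 1) under the dichotomous Rasch model.

    theta: person parameter, sigma: item difficulty (both on the logit scale).
    The response x multiplies the difference theta - sigma in the numerator.
    """
    if x not in (0, 1):
        raise ValueError("x must be coded 0 or 1")
    return math.exp(x * (theta - sigma)) / (1.0 + math.exp(theta - sigma))


# Hypothetical parameter values: a person located 1.2 logits above an item.
p_solved = rasch_probability(1, theta=0.7, sigma=-0.5)
p_failed = rasch_probability(0, theta=0.7, sigma=-0.5)
```

Because the two categories share the same denominator, `p_solved` and `p_failed` sum to one, and only the difference between the two parameters enters the model.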

Fischer (1995, pp. 20–21) provides a proof that $\theta_v$ and $\sigma_i$ in Equation 8 are unique up to a linear transformation of the form $\theta_v' = a\theta_v + b$ and $\sigma_i' = a\sigma_i + b$, whereby $a > 0$ and $b$ are arbitrary constants. The proof does not require the common item slope $\alpha$ to be unity, as in the standard definition of the RM. Setting $\alpha$ to unity would imply an even stronger measurement scale, known as the “difference scale.” This scale is characterized by an arbitrary origin but a fixed, i.e., known measurement unit, the scale factor. As Verhelst (2019) noted, in the standard definition of the RM the unit is defined by fixing the item slope parameter “...at the value of 1, but any other positive value may be chosen” (Verhelst, 2019, p. 150; emphasis added). So, once we relax the convenient but loosely justified assumption of unit item slopes, the scale unit is no longer fixed and any arbitrary constant $\alpha$ ($\alpha > 0$) can be chosen.

Now recall that arbitrary origins and scale factors are properties of interval scales under admissible positive linear transformations of the general form $y = ax + b$, with $b$ as the arbitrary intercept and $a > 0$ as the arbitrary scale parameter. Hence, under the mildly relaxed but reasonable assumptions, Fischer (1995) demonstrated the interval scale property of the RM.
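The uniqueness up to a positive linear transformation can be checked numerically. The sketch below assumes a logistic model with a common item slope and hypothetical parameter values. It shows that rescaling person and item parameters by the same positive factor and shift, while dividing the common slope by that factor, leaves the response probability unchanged. The function names `p_correct` and `rescale` are illustrative.

```python
import math


def p_correct(theta, sigma, slope=1.0):
    """Probability of a positive response with a common item slope."""
    return 1.0 / (1.0 + math.exp(-slope * (theta - sigma)))


def rescale(value, a, b):
    """Positive linear transformation a * value + b with a > 0."""
    if a <= 0:
        raise ValueError("the scale factor a must be positive")
    return a * value + b


theta, sigma = 0.8, -0.3   # hypothetical person and item parameters
a, b = 2.5, 10.0           # arbitrary unit and origin

original = p_correct(theta, sigma, slope=1.0)
# Same shift and stretch for both parameters; the slope absorbs the new unit.
transformed = p_correct(rescale(theta, a, b), rescale(sigma, a, b), slope=1.0 / a)
```

The equality of `original` and `transformed` is a statement about the numbers of the model only. It says nothing about whether the attribute being scaled has a unit or an origin.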

While this proves the interval scale property of the Rasch model, what typically goes completely unheeded is that this proof only refers to the numerical relational system (nrs) of the mathematical model, leaving its connection to the empirical relational system (ers) entirely untouched. The successful application of the Rasch model can only be justified if there is reason to believe, based on empirical evidence, that relations in an attribute of persons themselves – i.e., the empirical relational system – exhibit these interval scale properties. We doubt that such empirical evidence has been found in psychology. As Michell (2008a) put it: “If we had independent evidence that abilities were quantitative (as opposed to merely ordinal), we might be able to legitimately apply the Rasch model to measuring them …” (Michell, 2008a, p. 122).

Despite these caveats on the interpretability of person estimates as estimates for abilities or other psychological traits $\Psi$ that are said to lie on an interval scale, there still exists a pervasive belief that the mere fitting of a Rasch model necessarily implies or generates an interval scale of the underlying attribute. This is presumably due to the increasing popularity of the Rasch model during the last decades in the context of large-scale assessment studies, such as the Programme for International Student Assessment (PISA; e.g., OECD, 2014). Skeptical readers can ascertain the frequency of the claimed connection between the Rasch model and the interval scale, in tandem with the wishful thinking of transforming ordinal observations into interval-scaled measures, by conducting a Google Scholar search with the following search strings: “Rasch model” AND “interval”, “Rasch model” AND generate interval, or “Rasch model” AND generating interval.

This reasoning regarding the quality of the interval scale level of psychological attributes, when it solely derives from the perspective of the model, is flawed. Successfully fitting a Rasch model neither empirically implies an interval scale of the underlying psychological entity/attribute, nor does it generate such a scale by mapping probabilities of getting an item correct onto a continuous number line. Merely applying the Rasch model does not magically raise simple counts of response categories to metric quantitative relations between objects. Moreover, regarding the assertion that the fit of a Rasch model at least suggests an interval scale of the psychological attribute, Michell (2004) argues that one can successfully fit a Rasch model to data that actually satisfy only partial orders (i.e., a non-metric but ordinal structure). This is because the Rasch model’s probabilistic nature implies less demanding requirements of statistical model fit than ordinal response models, which typically use deterministic approaches to directly test order relations among objects. This was later demonstrated by Kyngdon (2011a) within a Bayesian framework for ordinal statistical inference. He transformed Rasch-fitting data from the Lexile Framework for Reading into item response proportions, testing axioms of conjoint measurement (see Karabatsos, 2001; Luce & Tukey, 1964). Cancellation axioms were supported only under specific conditions, suggesting reading ability was quantitative but the theoretical framework incomplete. As a result of the findings and theoretical considerations based on proofs by Krantz et al. (1971), Kyngdon (2011a) concluded that a notable consequence of his study is that “Fit of an IRT model to ability test data may only be indicative of ordinal structure in human cognitive abilities” (Kyngdon, 2011a, p. 492). This aligns with earlier discussions regarding the quality of scale for psychological attributes, or more specifically, the type of “quantification” (e.g., ordinal or interval scale) of cognitive abilities proposed by Luce (2005); see also Perline et al. (1979).

In our view, the assertion that the Rasch model “generates” an interval scale stems from a misconception about measurement, which is rooted in a problem hidden in plain sight. It is crucial to remember that, echoing Pfanzagl (1959), the objective of a measurement model is solely to map an empirical set to a set of real numbers, enabling “conclusions concerning the relations between elements of [the empirical set] [to] be drawn from the corresponding relations between their assigned numbers” (cf. Pfanzagl, 1959, p. 283; additions in square brackets by authors). In relation to such a mapping, Pfanzagl (1971) specifically distinguished between homomorphisms and isomorphisms. The latter is characterized as an invertible, unique, and bijective connection of the relations between the origin images in the empirical set (objects in an empirical relational system, ers) and the numerical relational system (nrs) in $\mathbb{R}$. In contrast, a homomorphic mapping maps the elements of one set into another set in such a way that only certain structural features of the origin images are preserved.

Recall that Rasch scaling (e.g., by means of conditioning on score groups) typically yields one parameter estimate per raw-score group; that is, all persons from the population who share a raw score also share the same estimate – which in essence leads to a classification preserving only certain structural features of the classified objects. These preserved structural features of $\Psi$ may relate to an ordinal or interval scale level, as both can be mapped to the interval scale level of the nrs of the Rasch (person) parameters. A point that is easy to overlook here is that in both cases the mapping of the Rasch model scale values corresponds to, or rather preserves, the empirical relations between properties of objects. Roughly speaking, interval-scale properties between attributes of objects must already exist in the empirical relational system. Merely assigning real-numbered values to objects does not establish a mapping of empirical to numerical relations at all. However, in psychological measurement practice, it seems to us that there is little empirical justification or evidence that empirical relations among psychological attributes of agents/objects themselves have a relational structure on an interval scale. Instead, it appears to be a convenient or desirable assumption.
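That persons with the same raw score receive the same estimate can be illustrated with a small sketch. It assumes known, hypothetical item difficulties and uses plain maximum likelihood for the person parameter, solved by bisection, rather than the conditional estimation on score groups mentioned in the text. The function names are illustrative.

```python
import math


def expected_score(theta, sigmas):
    """Expected raw score of a person at theta over all items."""
    return sum(1.0 / (1.0 + math.exp(-(theta - s))) for s in sigmas)


def ml_person_estimate(responses, sigmas, lo=-20.0, hi=20.0, tol=1e-10):
    """Maximum likelihood estimate of theta for known item difficulties.

    The likelihood equation is expected_score(theta) == raw score, so the
    response pattern enters only through its sum. Solved by bisection;
    a finite solution exists only for 0 < raw score < number of items.
    """
    raw = sum(responses)
    if not 0 < raw < len(sigmas):
        raise ValueError("no finite estimate for zero or perfect scores")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if expected_score(mid, sigmas) < raw:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


sigmas = [-1.5, -0.5, 0.0, 0.5, 1.5]   # hypothetical item difficulties
pattern_a = [1, 1, 0, 0, 0]            # two easiest items solved
pattern_b = [0, 0, 0, 1, 1]            # two hardest items solved

estimate_a = ml_person_estimate(pattern_a, sigmas)
estimate_b = ml_person_estimate(pattern_b, sigmas)
```

Both patterns have a raw score of 2 and therefore map to the same value, so five items yield at most four distinct finite person estimates. The scaling thus acts as a classification of persons into score groups.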

However, what is proposed and discussed under the term Rasch Paradox seems to undermine this convenient assumption. The term “Rasch Paradox” is rooted in the perspective that views the Rasch model as a probabilistic version of an additive scale, allowing for error (see Michell, 2014). From this standpoint, the paradox – in the sense of an apparently anomalous result – is that eliminating all error factors, were it possible, would adversely affect the resulting measurement: it would downgrade the scale from a metric, or specifically, interval level, to an ordinal level, as realized in the so-called Guttman “model.” This paradox is noteworthy, considering the typical assumption that an improvement in accuracy (reduction of error) always leads to a better (higher) level of measurement. However, the concept of the “Rasch Paradox” essentially arises from reasoning about the nature of error, in conjunction with the implicitly made assumption – yet to be empirically tested – of a metric nature of the psychological traits themselves to be measured.
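The downgrade described here can be sketched numerically. The example treats the error-free case as the limit of an ever steeper common item slope, which is an assumption of this illustration, and all parameter values and function names are hypothetical.

```python
import math


def p_correct(theta, sigma, slope):
    """Logistic response probability with a common item slope."""
    return 1.0 / (1.0 + math.exp(-slope * (theta - sigma)))


def guttman_pattern(theta, sigmas):
    """Error-free responses: an item is solved iff theta exceeds sigma."""
    return [1 if theta > s else 0 for s in sigmas]


sigmas = [-1.0, 0.0, 1.0]   # hypothetical item difficulties
theta = 0.4                 # hypothetical person location

# Largest gap between the probabilistic and the deterministic response
# function, for increasingly steep slopes (i.e., less and less error).
max_deviation = {}
for slope in (1.0, 5.0, 50.0):
    probs = [p_correct(theta, s, slope) for s in sigmas]
    steps = guttman_pattern(theta, sigmas)
    max_deviation[slope] = max(abs(p - g) for p, g in zip(probs, steps))

# Two persons located between the same pair of items become
# indistinguishable once all error is removed.
pattern_low = guttman_pattern(0.1, sigmas)
pattern_high = guttman_pattern(0.9, sigmas)
```

As the slope grows, the probabilities approach the step pattern. In that limit the persons at 0.1 and 0.9 produce identical responses, so the data carry information about their order relative to the items but none about the distance between them.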

The crux lies in distinguishing between homomorphisms and isomorphisms concerning the unresolved question of the scale level of the psychological property itself. The nature of the homomorphic mapping in Rasch scaling implies the preservation of any scale level less than or equal to the interval scale level, based on Stevens’ taxonomy (Stevens, 1946). However, the interval scale level is only guaranteed in the nrs of Rasch parameters, as demonstrated by Fischer (1995, pp. 20–21). As with any homomorphic mapping, the direction of the mapping, and thus the corresponding inference about scale levels, is crucial. Simply put, using a scale in a mathematical metric space suitable for representing an interval scale level does not automatically confer that scale level on the psychological trait mapped to such a metric space. According to Stevens’ taxonomy, any scale level less than or equal to the interval scale of an ers can be represented in an nrs for which an interval scale level has been established. From a set-theoretical perspective, the following inclusion relationship can be formulated with regard to the informative value of Stevens’ scale levels in terms of the empirical relational systems (ers) they might represent and the transferability of specific scale levels:

The relationship between scale levels, implicitly formulated in the taxonomy of Stevens (1946), can be likened to the relations between different mathematical number ranges. A number range refers to a set of numbers with common properties, typically concerning the feasibility of certain arithmetic operations within that range. Similar to the hierarchical set relations in the scale levels of Stevens (1946), there exist hierarchical relations between different number ranges. For instance, starting with the set of natural numbers $\mathbb{N}$, it is always true that number ranges defined by extending others can be conceptualized as supersets of the respective initial sets used to derive the extended number sets. Therefore, the following inclusion relations can be established:

$$\mathbb{N} \subset \mathbb{Z} \subset \mathbb{Q} \subset \mathbb{R}$$

Once again, this does not imply that the reverse conclusion, from the eventually proven scale level of the numerical relational system (nrs) to the scale level of the empirical relational system (ers), is automatically applicable. To illustrate the importance of such a differentiated connection, or mapping, between the numerical (nrs) and the empirical relational system (ers), let us refer to a counterexample provided by Pfanzagl (1971, p. 76), which illustrates a case where the direction of the mapping plays a decisive role in the medical decision-making process. The example uses a medical blood serum test to measure the intensity of certain pathological processes. The test itself relates to the extent of sedimentation in a tube after sodium citrate is added to the blood serum. The amount of sedimentation is measured on a millimeter scale, “which might give the illusion of an interval scale” (Pfanzagl, 1971, p. 76). Furthermore, Pfanzagl (1971) points out that although the height of the sediment is monotonically related to the intensity of pathological processes, it is not possible, from a substantive scientific or medical perspective, to conclude that “a therapeutic agent which decreases the sediment from 75 to 60 [mm] is more effective than an agent decreasing the sediment from 65 to 55” (Pfanzagl, 1971, p. 76; addition in square brackets by authors). This conclusion is underscored by the fact that “changes in the procedure of its determination (such as changes in the amount of sodium citrate or the time interval after which the height of sediment is determined) will not at all lead to a linear transformation of the scale” (Pfanzagl, 1971, p. 76; emphasis added). However, as exemplified in the proof provided by Fischer (1995, pp. 20–21), such a linear transformation would be a prerequisite for a relational system to be at the interval scale level. In a similar vein, Mislevy (1987) pointed out that taking IRT models as a general solution to explain different true score distributions based on arbitrary subsets of items (on interval scale level) might be misleading (regarding the assumed interval scale level), because “this line of reasoning runs from model to data, not from data to model as must be done in practice” (Mislevy, 1987, p. 248; emphasis added).
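Why a merely monotone relation does not license comparisons of differences can be shown with the numbers from the sedimentation example. The re-expression `rescaled` below is a purely hypothetical monotone, non-linear function standing in for a changed determination procedure. It is not taken from Pfanzagl.

```python
import math


def rescaled(height_mm):
    """Hypothetical monotone increasing, non-linear re-expression of height."""
    return -math.exp(-height_mm / 10.0)


# Decreases in sediment height on the original millimeter scale.
drop_first_raw = 75 - 60    # first agent
drop_second_raw = 65 - 55   # second agent

# The same decreases after the order-preserving re-expression.
drop_first_new = rescaled(75) - rescaled(60)
drop_second_new = rescaled(65) - rescaled(55)

# The rank order of the four heights is unchanged by the re-expression.
heights = [55, 60, 65, 75]
order_preserved = [rescaled(h) for h in heights] == sorted(rescaled(h) for h in heights)
```

On the millimeter scale the first decrease is larger, while after the re-expression the second one is, although the order of all heights is preserved. A comparison of differences is therefore meaningful only if the admissible transformations are linear.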

Along such lines of argumentation, Kyngdon (Citation2008b) argued mathematically that the Rasch model’s relational system does “not contain empirical objects of events” (Kyngdon, Citation2008b, p. 100) and will then always map probabilities onto the real number line. Hence, applying the Rasch model “does not lead to a representation of properties of objects as relations between numbers but is about the creation of variables, thereby tacitly avoiding whether the psychological variable under investigation is actually quantitative” (Bond et al., Citation2020, p. 12). In addition, even Georg Rasch himself noted in a very nuanced way that the strong need apparent in psychology for “… replacing the original qualitative observations by measurable quantities.” should not lead to the assumption, simply by substituting quantitative parameters for the observations, that “… we have an appropriate measurement on a ratio scale or on an interval scale of individuals or stimuli or that even an appropriate order is available.” (Rasch, Citation1961, p. 331).

In contrast to the interval scale level supposedly formally implied by the application of the Rasch model, there are more cautious assumptions concerning the relationship between persons and items in Guttman’s “model” for dichotomously scored responses (see Guttman, Citation1944, Citation1947). This “model” was introduced under the term scalogram analysis (see Guttman, Citation1947) as a procedure for testing the hypothesis of cumulative, summative scalability of a set of items from “a universe of attributes” (see Guttman, Citation1944, p. 140; see also Footnote 2) for a given population. Although at first described by Guttman (Citation1947) as a pure technique for “data sorting” or to find “an adequate basis for quantifying qualitative data” (see Guttman, Citation1944, p. 139, emphasis added), it is typically stated that Guttman’s Cornell technique (Guttman, Citation1947) ultimately implies (in analogy to the Rasch model) two “model parameters” to “model” the persons’ answers to the items of a scale. In analogy to the RM, these two implicitly assumed “parameters” may be seen to represent the positions of persons and items on the latent continuum of the attribute dimension. They are connected in the Guttman “model” in a deterministic way in order to describe the occurrence of one of the two response categories. Note, however, that Guttman (Citation1944, Citation1947) never claimed his scalogram analysis, or in his own 1947 words “... simple scoring scheme.” (cf. Guttman, Citation1947, p. 251), to be a psychometric model to describe the process of persons’ responses. Rather, Guttman (Citation1944) describes in his paper on the “basis for scaling qualitative data” a scoring procedure “...to assign to a population a set of numerical values like 3, 2, 1, 0.”, which “...will be called the person’s score.” (Guttman, Citation1944, p. 143). He further points out that, for example, “… a score of 2 does not mean simply that the person got two questions right, but that he got two particular questions right, namely, the first and second.” (Guttman, Citation1944, p. 143; emphasis added). It is only in later writings on Guttman’s principle of scoring that a mathematical formal representation in the sense of a model is given, which is why we may speak (for convenience) of a Guttman “model” for the rest of the present article, although, as pointed out above, Guttman himself did not establish his scoring principle as a psychometric model for the response process per se. However, following Doignon et al. (Citation1984), the general principle of Guttman's scalogram analysis may be formalized retrospectively as follows (see also Narens & Luce, Citation1986):

Suppose X stands for a set of items and A is a set of persons. In the respective “model” the two permissible “probabilities” of a correct answer and

traced back to the deterministic characteristic of the resulting data can therefore be formally represented as follows (cf. Equation 9).

with the real numbers and

and

; with

as the biorder (Doignon et al., Citation1984), that is, sets equipped with two partial orders. Equation 9 is unique up to a positive monotonic function K for

and

so that

and

as an admissible transformation with ‘∘’ denoting function composition as described in Ducamp and Falmagne (Citation1969). Note that “admissible transformation” can mean any order-preserving transformation, so that the biorder comprising the two partial orders can also be mapped, for example, to the order relation of the positive natural numbers, which is typically the case in applied data evaluation scenarios when the sum of the coded item responses is formed as a person score.
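For orientation, a biorder representation of the kind discussed by Doignon et al. (Citation1984) can be sketched in generic notation. The symbols $R$, $f$, $g$, $f'$, and $g'$ below are assumptions chosen for this sketch and need not match the notation of Equation 9. The strictness and direction of the inequality are likewise a convention adopted here.

```latex
% a R x: person a gives the positive ("correct") response to item x
a \, R \, x \iff f(a) > g(x),
\qquad a \in A,\; x \in X,\; f : A \to \mathbb{R},\; g : X \to \mathbb{R}

% admissible transformation: any strictly increasing K applied to both functions
f' = K \circ f, \qquad g' = K \circ g
```

Because any strictly increasing $K$ leaves the inequality intact, only the order of the values $f(a)$ and $g(x)$ carries empirical content in this representation.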

Thus, the Guttman scale defines ordinal relationships of persons and items on the latent attribute continuum, not metric ones on at least an interval scale level. The condition expressed in Equation 9 then refers to the existence of a sample- or person-invariant (ascending) order of the items according to their difficulty. Under this “model”, the marginal sums of the data matrix are sufficient (i.e., exhaustive) statistics of the two “parameters” (see Footnote 3) for the person ability and for the item difficulty. Based on these two formal model definitions, a taxonomy widely (however misleadingly) adopted in the field of IRT is the assignment of the Rasch model for measurement at interval scale level and the Guttman model for measurement at ordinal scale level.
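The ordinal character of the Guttman “model” can be illustrated with a minimal Python sketch. All names and numerical positions below (`guttman_response`, `pattern_from_score`, `monotone_k`, and the ability and difficulty values) are hypothetical and chosen only for illustration. The sketch assumes a strict dominance rule (response 1 if the person position exceeds the item position) and shows two things: the sum score determines the complete response pattern, and a strictly increasing transformation of all positions leaves the data unchanged.

```python
def guttman_response(theta, beta):
    """Deterministic dominance rule (assumed strict): 1 if the person exceeds the item."""
    return 1 if theta > beta else 0


def pattern_from_score(score, n_items):
    """Response pattern implied by a sum score when items are ordered by difficulty."""
    return [1] * score + [0] * (n_items - score)


def monotone_k(value):
    """Hypothetical strictly increasing, non-linear transformation."""
    return value ** 3 + 10.0


# Hypothetical positions; only their order matters.
item_difficulty = [-1.0, 0.0, 1.0]        # ordered from easiest to hardest
person_ability = [-2.0, -0.5, 0.5, 2.0]

data = [[guttman_response(t, b) for b in item_difficulty] for t in person_ability]
scores = [sum(row) for row in data]

# The same responses result after transforming persons and items with monotone_k.
data_k = [
    [guttman_response(monotone_k(t), monotone_k(b)) for b in item_difficulty]
    for t in person_ability
]

for row, score in zip(data, scores):
    print(row, "score:", score, "pattern from score:", pattern_from_score(score, 3))
print("unchanged under monotone transformation:", data == data_k)
```

In this sense a score of 2 identifies two particular items, namely the two easiest ones, which matches Guttman's own description of the person's score.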

In addition to the differences between the two models briefly outlined above regarding the implicitly assumed scale level, they also differ in another important characteristic. While the Rasch model explicitly takes into account a certain amount of measurement error due to its probabilistic model formulation (cf. Equation 8), the Guttman “model” excludes error in the measurement due to its deterministic relation between a person’s ability and the item difficulty (see also Heine, Citation2020, for a detailed discussion of probabilistic and deterministic models of IRT). Regarding its probabilistic nature, Wood (Citation1978) showed that the Rasch model even fits simulated coin-tossing data very well. Conversely, Kubinger and Draxler (Citation2007) demonstrated that the fitting and testability of the Rasch model failed under idealized Guttman conditions in empirical data, due to a violation of the uniqueness condition for (conditional) maximum likelihood parameter estimates.

Despite their differences in specific model formulation, wherein the Guttman “model” refers to a deterministic relationship between persons and items and the Rasch model introduces a probabilistic element, both models share a fundamental common ground. This commonality lies in formalizing a dominance relationship between persons and items. While the Guttman “model” embodies this relationship deterministically (see Equation 9), the Rasch model adds a probabilistic component to accommodate an error element in response data. Additionally, the Rasch model provides a mathematical formulation that defines this probabilistic dominance relation as the difference between two parameters, thus classifying the model as parametric (see Equation 8). This characteristic underpins the argument for the interval scale level of person parameters within the Rasch model, a perspective that is generally accepted in psychometrics without challenge.
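The contrast between the two dominance formulations can be sketched in a few lines of Python. The code assumes the standard logistic form of the dichotomous Rasch model, in which the response probability depends only on the difference between person and item parameter. The names `rasch_probability` and `guttman_probability` and the parameter values are hypothetical and serve only as an illustration.

```python
import math


def rasch_probability(theta, beta):
    """Logistic Rasch form: depends only on the difference theta - beta."""
    return 1.0 / (1.0 + math.exp(-(theta - beta)))


def guttman_probability(theta, beta):
    """Deterministic dominance: depends only on the order of theta and beta."""
    return 1.0 if theta > beta else 0.0


theta, beta = 2.0, 1.0  # hypothetical person and item positions

p_original = rasch_probability(theta, beta)
p_shifted = rasch_probability(theta + 3.0, beta + 3.0)   # same difference
p_cubed = rasch_probability(theta ** 3, beta ** 3)       # same order, other difference

print(f"Rasch:   original {p_original:.3f}, shifted {p_shifted:.3f}, cubed {p_cubed:.3f}")
print("Guttman: original", guttman_probability(theta, beta),
      "cubed", guttman_probability(theta ** 3, beta ** 3))
```

Within the model equation, the Rasch probabilities are invariant under a common shift of both parameters but not under an arbitrary monotone transformation, whereas the Guttman rule is invariant under both. This is a property of the numerical formulation and, in line with the argument of the text, does not by itself establish anything about the empirical relational system.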

Based on this, Michell (Citation2000) provocatively diagnosed psychometric science as a pathological science, since it does not recognize the axiomatic setting of quantitative measurability of psychological traits on interval scale level as a (mere) hypothesis to be tested. Moreover, with regard to psychometric models from IRT (such as the Rasch model), Michell (Citation2008b) concludes that they have not yet met the challenges of seriously testing the relevant hypothesis for measurement at the interval scale level (see also Heene, Citation2013).

In the following paragraphs, for added clarity, and because many theoretical papers have neglected to provide an illustrative example, we will demonstrate that a well-fitting Rasch model does not inherently imply anything regarding the scale level of the empirical relational system (ers). Firstly, this is based on a simple theoretical consideration regarding the common basic conceptual idea of the Rasch and Guttman scaling. Secondly, we use a random, increasing error component as an empirical basis for model testing, starting from ideal-typical simulated dominance response process data.

Simulation as illustration

Outline and rationale

Drawing on the shared concept of a dominance relation regarding items and persons between the two scaling techniques and their discrepancy regarding an error component, the simulation presented below aims to amalgamate ideal-typical dominance “response” data (Guttman “model”) with an escalating random error element, akin to complete random “response” data stemming from coin-tossing scenarios (e.g., Wood, Citation1978). This blending process anticipates the emergence of a dataset compliant with the Rasch model, exhibiting optimal fit for a certain degree of added error. Subsequently, Rasch model parameter estimates and model fit indices are computed based on each of the simulated datasets. Additionally, coefficients for internal consistency according to Kuder and Richardson (Citation1937), but see also Cronbach (Citation1951), as well as (Rasch) residuals (cf. Wright & Masters, Citation1982) derived from IRT scaling are evaluated.

All data simulation and analyses were performed using the statistical environment R (R Core Team, Citation2023) and the current versions of the R packages Hmisc, pairwise, CTT, ggplot2, and future.apply, all published on CRAN (see Bengtsson, Citation2021; Harrell, Citation2023; Heine, Citation2023; Wickham, Citation2016; Willse, Citation2018, respectively), as well as an R package named pattern, not yet published on CRAN, which provides convenient functions for data simulation. All materials necessary for replicating the analysis presented in this paper, including the R-code, the package pattern, and the simulated datasets, are provided on OSF at the following link: https://doi.org/10.17605/OSF.IO/GQVHC.

Simulated datasets and parameter estimation

Starting from perfect Guttman data, with rows representing persons and columns representing items, we contaminated this perfect data in increments of 5% (ranging from 5% to 100%) with a random error component. For each of these data generating steps, 100 replications were created. Item and person parameters were sampled from a standard normal distribution $N(0, 1)$. For each of the resulting 2000 data matrices (20 steps × 100 replications), the parameters of the Rasch model were determined with the package pairwise (Heine, Citation2023). From the results, the likelihood ratio $\chi^2$-values of the Andersen model test (cf. Andersen, Citation1973) and the corresponding p-values were calculated. Furthermore, the squared score residuals (cf. Wright & Masters, Citation1982), averaged over persons and items, were determined. In addition, classical test theory internal consistencies in terms of the KR-20 statistic according to Kuder and Richardson (Citation1937) were calculated. The individual values for the respective coefficients are plotted on graphs, with the x-axis representing increasing levels of mixing between the Guttman data and random response data (error component).
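The data-generating idea can be sketched as follows. This is a Python illustration, not the authors' R code or the functions of the pattern package. Several details are assumptions made for the sketch: the matrix size (`n_persons`, `n_items`), the seed, the strict dominance rule, and in particular the contamination mechanism, in which each cell is replaced by a fair coin toss with probability equal to the contamination level. A 0% level is included as a baseline, and only KR-20 is computed, since the Rasch estimation and the Andersen test require dedicated software.

```python
import random
import statistics


def guttman_matrix(abilities, difficulties):
    """Perfect Guttman data: 1 if the person position exceeds the item position."""
    return [[1 if t > b else 0 for b in difficulties] for t in abilities]


def contaminate(data, proportion, rng):
    """Assumed mechanism: replace each cell by a fair coin toss with probability `proportion`."""
    return [
        [rng.randint(0, 1) if rng.random() < proportion else x for x in row]
        for row in data
    ]


def kr20(data):
    """Kuder-Richardson 20, using population variances throughout."""
    n_persons, n_items = len(data), len(data[0])
    p = [sum(row[j] for row in data) / n_persons for j in range(n_items)]
    pq_sum = sum(pj * (1.0 - pj) for pj in p)
    total_var = statistics.pvariance([sum(row) for row in data])
    return (n_items / (n_items - 1)) * (1.0 - pq_sum / total_var)


rng = random.Random(2024)             # arbitrary seed for reproducibility
n_persons, n_items = 500, 20          # hypothetical sizes, not those of the study
abilities = [rng.gauss(0.0, 1.0) for _ in range(n_persons)]
difficulties = [rng.gauss(0.0, 1.0) for _ in range(n_items)]
perfect = guttman_matrix(abilities, difficulties)

levels = [step / 20 for step in range(0, 21)]   # 0%, 5%, ..., 100%
results = {level: kr20(contaminate(perfect, level, rng)) for level in levels}

for level, value in results.items():
    print(f"{level:4.0%} contamination: KR-20 = {value:.3f}")
```

A single replication per level is drawn here. Wrapping the dictionary comprehension in a loop over replications would mirror the replicated design described above.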

Results

Figures 1–4 display the values of various coefficients obtained from fitting the Rasch model, plotted on the y-axis. The x-axis represents the percentage (in 20 levels) of contamination of the pristine Guttman data with an increasing proportion of random error. The scale endpoints of the x-axis correspond to perfect Guttman data, located on the left side of each figure, and completely random data, located on the right side of each figure.

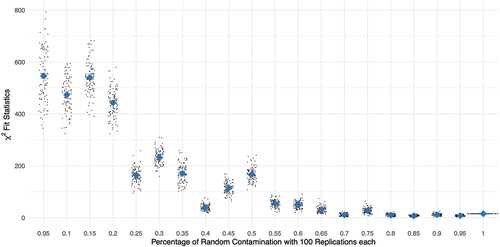

Figure 1. Distributions of $\chi^2$-values of Andersen Likelihood-Ratio-Test (cf. Andersen, Citation1973) by percent random contamination of Guttman response data with 100 replications each; diamond-shaped dots represent means across replications, respectively.

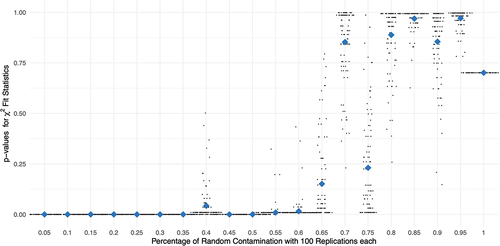

Figure 2. p-values of Andersen test (cf. Andersen, Citation1973) by percent random contamination of Guttman response data with 100 replications each; diamond-shaped dots represent means across replications, respectively.

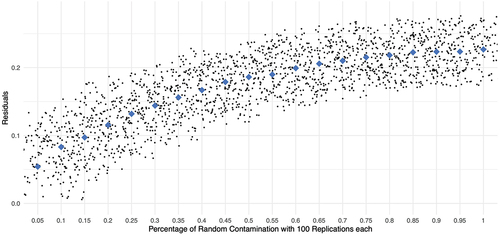

Figure 3. Mean values of squared score residuals across persons and items by percentage random contamination of Guttman response data with 100 replications each; diamond-shaped dots represent means across replications, respectively.

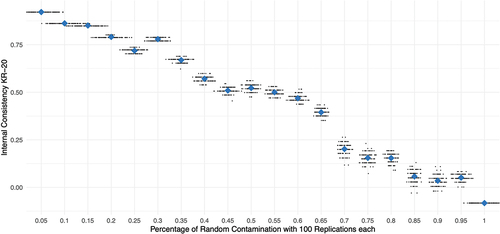

Figure 4. Values of Kuder-Richardson 20 (KR-20) as a measure of test reliability (cf. Kuder & Richardson, Citation1937) by percent random contamination of Guttman response data with 100 replications each; diamond-shaped dots represent means across replications.

Figure 1 illustrates the trend in the $\chi^2$-fit statistics of the Andersen Likelihood Ratio Test (LR-Test; cf. Andersen, Citation1973) across the simulated data matrices (x-axis). The graph shows a decreasing trend in the values of the $\chi^2$-fit statistics, with the lowest value observed for the completely random data on the right-hand side of Figure 1.

In line with the decreasing trend observed in the $\chi^2$-test statistics (Figure 1), the corresponding p-values depicted in Figure 2 indicate significant model misfit in datasets with low percentages of random error contamination. Specifically, datasets with up to approximately 35% random error suggest a misfitting Rasch model. Paradoxically, datasets with a relatively high proportion of error, starting at around 65%, appear to exhibit a perfect model fit, as evidenced by non-significant p-values (see Figure 2).

Figure 3 illustrates the progression of means across persons and items of the squared score residuals (y-axis) across simulated data matrices (x-axis). Unlike the descending trend observed in the $\chi^2$-statistic and the apparent improvement in model fit following the LR-test (as indicated by the trend in the