Abstract

This case study focuses on the instructional design and outcomes of a virtual reality (VR) application for sepsis management in healthcare education. The instructional design of the VR sepsis application follows five principles adapted from Merrill’s instructional design theory and Bloom’s taxonomy. The VR simulation is structured to provide a coherent and realistic experience, with instructional materials and feedback incorporated to guide and support the learners. A pilot study was conducted with medical students on clinical placement. Participants experienced the VR sepsis simulation and completed a questionnaire using the Immersive Technology Evaluation Measure (ITEM) to assess their immersion, intrinsic motivation, cognitive load, system usability, and debrief feedback. Descriptive analysis of the data showed median scores indicating high immersion and presence, intrinsic motivation, and perceived learning. However, participants reported a moderately high cognitive load. Comparison with a neutral response to ITEM suggested that users reported a significantly more positive experience (p < 0.05) in all compared domains. This case study highlights the potential of VR in healthcare education and its application in sepsis management. The findings suggest that the instructional design principles used in the VR application can effectively engage learners and provide a realistic learning experience. Further research and evaluation are necessary to assess the impact of VR on learning outcomes and its integration into healthcare education settings.

Background of virtual reality (VR) and the plausible experience

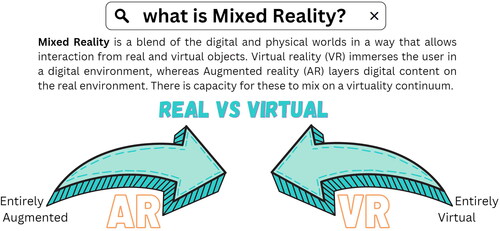

Numerous technologies can create an immersive experience. The extended reality (XR) and mixed reality (MR) continuum was first described as having augmentation at one end and virtuality at the other. The adaptation of the reality-virtuality continuum (Milgram & Kishino, 1994) in Figure 1 illustrates the differences in media and medium, and shows that the continuum affords a mix of these environments. Virtual reality (VR) is a computer-generated environment that can be fully interactive using a head-mounted display (HMD) and wireless controllers (Wang et al., 2021). This sensorimotor experience recreates the stimuli of vision, hearing, and elements of touch via haptics. Thus, a user of VR is immersed in an environment that can simultaneously provide an illusion of place and a disconnect from the real world (Jacobs & Rigby, 2022).

Figure 1. Mixed reality definition adapted from Milgram and Kishino (Jacobs, 2023).

The concepts of immersion and place illusion have been a subject of academic debate (Jung & Lindeman, 2021). Immersion can relate to the realism (fidelity) of the sensory stimulus delivered through an HMD, which simulates an activity in which a participant can look and feel, with the user’s body movements actuated by the device (Slater, 2009). Hence, increased levels of immersion can be shown when comparing a VR HMD with a traditional 2D screen (Jacobs & Maidwell-Smith, 2022). Illusion can be categorised as an equivalent term to presence, the subjective perception of the quality of the experience and of ‘being there’ (Witmer & Singer, 1998), which can be derived from plausibility and place illusions. For example, does the user feel present in the simulation, and do events follow a logical sequence?

A further characteristic that contributes to immersion and illusion is the coherence of a scenario, which relates to the consistency of the experience (Skarbez et al., 2021). For healthcare education simulations, this means that realism, or fidelity to a real-world environment, is required for a coherent experience; thus, experiential fidelity is a construct in which an objectively reasonable set of rules is provided. A mental state attuned to the users’ expectations, attitudes, and attention provides a deeper sense of presence (Beckhaus & Lindeman, 2011). Psychological fidelity supports the construct of realism and is supplemented by the physical properties and behavioural representations of the simulation, which contribute to the realness of the experience.

These terms discriminate between facets of a subjective phenomenon, and their definitions overlap. Satisfaction with a virtually created experience is multi-faceted, and the user’s psychological state influences the coherence and the degree of immersion and place illusion. The aim is to create a virtual experience that has both representational fidelity and interaction, which facilitates learning.

VR in healthcare education

Traditional formats, such as the classroom, clinical environments, books, and more recently the internet, have been the main settings for healthcare professional (HCP) education. Interactions in the clinical setting permit the integration of three learning domains (Adams, 2015): clinical knowledge (Burgess et al., 2020), psychomotor or procedural skills, and the affective domain, which incorporates non-technical skills (Prineas et al., 2021). Broadly, this maps onto the competency frameworks of knowledge, skills, and attitudes against which HCPs are assessed; however, these domains can be challenging to teach and assess (Franklin & Melville, 2015).

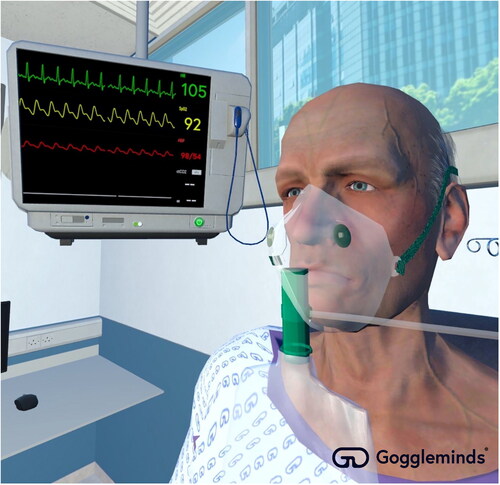

Simulation in HCP education is a common method of recreating clinical environments so that HCPs can practise and learn safely, without patient harm. The capacity of VR to emulate the clinical environment is no longer limited to the equipment of the educational simulation suite. VR education packages, with or without haptic control, can be used for medical knowledge acquisition (Zhou et al., 2021), learning procedural skills (Orland et al., 2020; Snarby et al., 2019) such as surgery (Frendo et al., 2021; Yu et al., 2021), and the development of cognitive reasoning skills (Bonnin et al., 2023; Macnamara et al., 2021). Immersive technology with sophisticated hardware and software can recreate a clinical scenario in a controlled simulation. This has the potential to emulate a realism that is clinically coherent, immersive, and convincing as an illusion of place. Targeting the key learning domains and using evidence-based instructional design principles can help learners transfer theory to practice (de Melo et al., 2022).

The gaming industry has been one of the driving forces for recent VR innovation (Kari & Kosa, 2023), alongside the military (Liu et al., 2018) and marketing (Loureiro et al., 2019). Research on VR applications in healthcare education is rapidly expanding (Jacobs et al., 2022; Tang et al., 2022); however, this has not translated into widespread adoption. The technology and associated ecosystem have numerous perceived barriers to acceptability and adoption: unit cost and lack of cost-effectiveness evaluation; technology functionality assessment; and lack of trialability for users (Laurell et al., 2019). Furthermore, a review of immersive technology in medical education identified the usability of hardware and connectivity to networks as potential barriers to adoption (George et al., 2023). Notwithstanding the foregoing, VR affords educators opportunities to save costs whilst expanding clinical training to meet the increasing demands on healthcare education settings.

The aim of this case study is to describe the use of instructional design in a VR application for HCP education, to gain an understanding of the user experience, and to interpret this alongside theory. It is hypothesised that interactive learning through VR will provide a positive learning experience for HCPs in training.

Method

VR sepsis instructional design

Sepsis is a medical emergency, and education on the condition and its management is a key curriculum feature for HCPs (McVeigh, 2020; Moore et al., 2019). Sepsis is a major cause of mortality, morbidity, and cost to health systems (Robson & Daniels, 2008). Early recognition of patients with sepsis improves outcomes and has been a key target for healthcare delivery and supportive organisations, such as the Sepsis Trust. Screening tools provide HCPs with rapid assessment and decision-support mechanisms.

Learning in the context of patient care involves multiple objectives whose constituents must be integrated into an enterprise schema. The seminal work of Gagné and Merrill (1990) in instructional design sets the goal of manifesting and discovering knowledge that facilitates transfer of training. The designer formulates a schema that harnesses intellectual skills, cognitive strategies, and sensory information.

Merrill’s (2002) five principles offer a concise interpretation of instructional design theory, centred on problem-centred instruction that demonstrates and activates learning. These can be adapted to the VR learning context using Bloom’s taxonomy:

Learners are engaged in solving real-world problems (authenticity).

Learning is promoted when existing knowledge and experience are activated as a foundation for new cognitive, affective, and psychomotor learning.

Learning is promoted when new knowledge is demonstrated to the learner.

Learning is promoted when new knowledge is applied by the learner.

Learning is promoted when new educational activity is integrated into the learner’s world.

Designing VR environments with these elements in mind, together with the use of experiential learning (Kolb, 1984) and mastery through practice, strengthens learning outcomes because VR allows users to repeat exercises indefinitely. Furthermore, problem progression with complex learning outcomes can be tailored to the individual learner’s responses. Explicit guidance on the steps demonstrates to the learner the information required, portrayed in a high-fidelity representation of a real event. Media in VR can demonstrate skills consistently, whilst branching logic in VR enables multiple user pathways that can be supported by relevant media. For example, early sepsis identification is a key outcome, and visual prompts can steer the learner to prioritise this. Alternatively, taking blood samples from a VR mannikin is a clinical skill with a prescribed sequence; departure from this sequence could promote bad practices, and thus instruction could benefit from a fixed set of instructions.

Figure 3. Virtual reality application demonstrating clinical observations of a patient with suspected sepsis.

Integrating the relevant supplementary material in the VR environment is crucial; however, so too is managing the additional cognitive load so that it does not compete excessively for attention and detract from learning.

The application should allow for practice and promote deeper learning that the user can ultimately test in real-life scenarios. The practice element should remain consistent with the learning goals of the VR experience. Furthermore, feedback on performance and on the affective domain is recognised as a key element of instruction. Errors are a normal part of successful problem solving, and support for their safe identification and correction can be integrated into the feedback tools. Debrief is a standardised format for feeding back to learners, which can be facilitated or self-facilitated when using immersive technology.

Evaluation of educational outcomes provides valuable information on the design and application of immersive technology. Kirkpatrick’s (1994) framework proposes a four-level model for classifying the evaluation of training programmes: (i) reaction, (ii) learning, (iii) behaviour, and (iv) results. Collecting evidence in simulation that indicates real-world learning improvements is challenging. Reflection on the experience through a self-reported survey is one method of collecting data on learners’ impressions.

Participants

A pilot study was conducted with medical students (n = 14) who were on placement at a regional hospital in the United Kingdom. Participation was voluntary, and the inclusion criteria were: (i) being on a clinical placement that has this simulation component, and (ii) being able to wear a headset and move in a fixed-size room. Students with prior experience of VR were not excluded. Students were able to withdraw at any time, and the data collected were anonymous. The study was approved by the Great Western Hospital medical education ethics committee (KV012023).

Procedure

Participants were recruited from educational sessions run by clinical teaching doctors and opted to have an additional simulation experience in sepsis identification and management. The VR application was provided by Gogglemind Ltd. The VR device (Quest 2 HMD with 2 controllers) was demonstrated to users, and a facilitating doctor in education remained present throughout to replicate facilitated mannikin simulation teaching. Up to 2 students and 1 facilitator were connected to the same simulation via Wi-Fi. The students were instructed to interview the VR patient using selected verbal prompts and to proceed to manage the clinical scenario. They were not pre-briefed that the selected learning scenario was sepsis. The content was based on national guidelines for managing sepsis in the acute hospital setting and was co-developed with clinicians from a teaching department of a regional hospital. The students completed the scenario using instructional materials within the simulation to guide them, with the educational doctor providing clinical and technical help as required. The room provided freedom of movement within the simulated resus room in a space of approximately 4 m × 4 m. The simulation took between 15 and 20 minutes. On completion of the simulation, the application provided tailored feedback indicating missed points of correct management. Following removal of the headsets and controllers, the participants completed a questionnaire that took 5 minutes.

Assessment

Primary outcome data were collected using the Immersive Technology Evaluation Measure (ITEM), a multi-domain instrument that evaluates users’ experience of immersion, intrinsic motivation, cognitive load, system usability, and debrief (Jacobs et al., 2023). ITEM is used after an immersive healthcare education experience and measures facets that relate to the technology and the media content, with additional debrief feedback as occurs in simulation practice. The instrument was developed for VR and AR clinical education (George et al., 2023; Jacobs & Rigby, 2022) and is based on the theoretical model of immersive technology in healthcare education (MITHE). Cognitive load is measured using the NASA TLX survey and system usability using the System Usability Scale (SUS); both are weighted scores. Jacobs et al. (2023) explored MITHE as a theory to describe the interaction in a multi-sensory environment that facilitates multimodal learning through experiential, self-determinism, and cognitive meta-theories.

ITEM consists of 40 questions: 39 use a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree), and 1 question, ‘How immersed did you feel?’, uses a ten-point Likert scale (1 = strongly disagree, 10 = strongly agree). The survey was based on MITHE theory and was developed following expert consensus on the qualities needed to measure immersive technology (Jacobs et al., 2023). In prior work, the domains of immersion and intrinsic motivation showed high criterion validity and internal reliability. Furthermore, cognitive interviewing on the survey demonstrated a high level of user understanding. The full ITEM survey is available in the supplementary material. The survey for this paper was generated using Qualtrics software (version April 2023; copyright © 2023 Qualtrics).

Statistical analysis

StatsDirect (version 3) was used for the statistical analysis of this single-group study. Descriptive analysis of the data was performed, with measures of central tendency and variability summarising the data. A single-group Wilcoxon signed-rank (non-parametric) paired comparison was performed, with the intervention group matched to a neutral-response total for ITEM, and effect sizes were calculated. Significance was set at p < 0.05, and Cohen’s d effect sizes were interpreted as ranging from 0.2 (small effect) to above 0.8 (large effect). Likert scales were treated as continuous data. Cognitive load was not matched to a neutral response, as the working memory effective load measured by NASA TLX does not have a neutral score.
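The comparison against a neutral response can be sketched in code. The following is a minimal Python illustration rather than the StatsDirect procedure used in the study: it tests hypothetical domain totals against a fixed neutral reference with a one-sample Wilcoxon signed-rank test (normal approximation with tie correction) and computes a one-sample Cohen’s d. The function names, the example scores, and the neutral total of 30 are assumptions made for illustration only.

```python
import math
from statistics import mean, stdev


def wilcoxon_vs_reference(scores, reference):
    """One-sample Wilcoxon signed-rank test against a fixed reference value.

    Returns (W_plus, two_sided_p). Uses the normal approximation with a tie
    correction and no continuity correction; zero differences are dropped.
    """
    diffs = [s - reference for s in scores if s != reference]
    n = len(diffs)
    if n == 0:
        raise ValueError("all scores equal the reference value")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        group_size = j - i + 1
        tie_term += group_size**3 - group_size
        i = j + 1

    # Sum of ranks for scores above the reference, and its null distribution.
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, p_value


def cohens_d_vs_reference(scores, reference):
    """One-sample Cohen's d: mean difference from the reference in SD units."""
    return (mean(scores) - reference) / stdev(scores)


# Hypothetical domain totals for 14 participants (not the study data).
scores = [43, 41, 45, 44, 40, 46, 42, 47, 39, 48, 38, 44, 43, 45]
neutral_total = 30  # assumed neutral-response total for a 50-point domain
w_plus, p_value = wilcoxon_vs_reference(scores, neutral_total)
d = cohens_d_vs_reference(scores, neutral_total)
print(f"W+ = {w_plus}, p = {p_value:.4f}, d = {d:.2f}")
```

With a sample this small, an exact signed-rank distribution would usually be preferred over the normal approximation; the approximation is used here only to keep the sketch short.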

Results

Fourteen participants completed the ITEM survey; 1 participant did not complete the debrief domain. As this was an optional domain, their data were included in the final analysis. A test for normality indicated a non-normal distribution, so medians and interquartile ranges were used as the descriptive outcomes. Table 1 reports the median scores, interquartile ranges, median score differences from the comparison group, and effect sizes. The full datasheet is available in the supplementary materials.
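Medians and interquartile ranges of the kind reported here can be computed as in the following sketch. The scores are hypothetical, the function name is illustrative, and the inclusive quartile method is an assumption, since the quartile definition applied by the statistical software is not stated.

```python
from statistics import median, quantiles


def median_iqr(scores):
    """Return (median, Q1, Q3) using the inclusive quartile method."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return median(scores), q1, q3


# Hypothetical domain totals (not the study data).
example_scores = [38, 41, 41, 43, 43, 44, 45, 45, 47]
med, q1, q3 = median_iqr(example_scores)
print(f"median {med} (IQR {q1}-{q3})")
```

Different quartile conventions can shift the reported bounds slightly in small samples, so the method should be stated alongside the results.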

Table 1. Immersive Technology Evaluation Measure (ITEM) results for sepsis virtual reality participant experience.

Immersion and presence

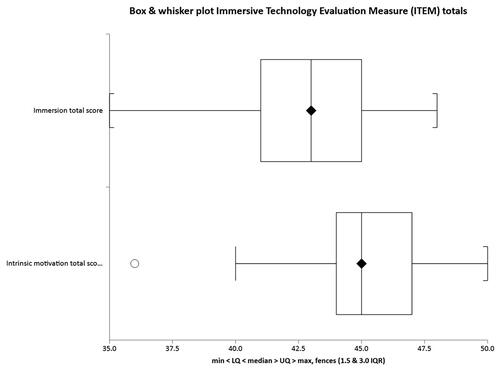

The median Immersion domain score was 43 (IQR 41-45), with a maximum score of 50. Within the immersion subscale, the median score for question 9, ‘How immersed did you feel?’, was 8.5 (IQR 7-9), with a maximum score of 10. For the presence question, ‘I felt detached from the outside world’, the median value was 4.5 (IQR 4-5), with a maximum score of 5. For fidelity, measured by question 3, ‘It was as if I could interact with the simulated environment as if I was in the real world’, the median score was 4.5 (IQR 4-5).

The median score was 13.5 greater in the intervention group than in the neutral response comparator (p < 0.001). The effect size was large, with a Cohen’s d of 3.52.

Intrinsic motivation

The median Motivation domain score was 45 (IQR 44-47), with a maximum score of 50. For question 1, on enjoyment of the activity, the median score was 5 (IQR 4-5). For question 7, on learning from the activity, the median score was 5 (IQR 4-5). For performance, rated by users in question 10, ‘After working at this task for a while, I felt pretty competent’, the median score was 4 (IQR 4-5). Immersion and intrinsic motivation scores are combined in the accompanying figure. The maximum score for both subscales was 50.

The median score was 10.5 greater in the intervention group than in the neutral response comparator (p < 0.001). The effect size was large, with a Cohen’s d of 3.00.

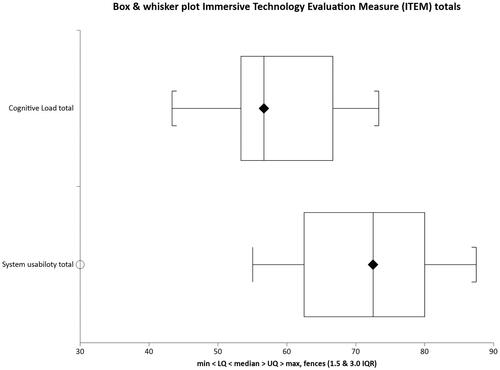

Cognitive load

The median raw weighted NASA TLX score was 56.7 (IQR 53.33-66.66), with a maximum score of 100. For question 1, ‘How mentally demanding was the task’, the median score was 4 (IQR 3-4), with a maximum score of 5. For effort to achieve the outcome, assessed in question 5, ‘How hard did you have to work to accomplish your level of performance?’, the median was 3 (IQR 3-4), with a maximum score of 5.
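As a sketch of how a workload score with a maximum of 100 could be derived from 5-point items, the snippet below assumes six workload items and rescales their sum to a percentage of the maximum possible total. This scaling is an assumption rather than the documented ITEM procedure, although an item total of 17 out of 30 gives 56.7 under it; the function name is hypothetical.

```python
def raw_tlx_percent(item_ratings, scale_max=5):
    """Rescale the sum of six workload ratings to a score out of 100.

    Assumes each item is rated from 1 to scale_max (an illustrative choice).
    """
    if len(item_ratings) != 6:
        raise ValueError("expected six workload item ratings")
    if any(not 1 <= rating <= scale_max for rating in item_ratings):
        raise ValueError("ratings must lie between 1 and scale_max")
    return 100 * sum(item_ratings) / (len(item_ratings) * scale_max)


# Hypothetical ratings for one participant (not the study data).
ratings = [4, 3, 3, 2, 3, 2]
print(round(raw_tlx_percent(ratings), 1))
```

Because each item has a minimum rating of 1, the lowest attainable score under this assumed scaling is 20 rather than 0.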

System usability

The median System usability score was 72.5 (IQR 62.5-87.5), with a maximum score of 100. For the VR technology question 3, ‘I thought the technology was easy to use’, the median score was 3 (IQR 3-4). For prior knowledge of how to use the technology, question 10, ‘I needed to learn a lot of things before I could get going with this technology’, the median was 3 (IQR 3-4). Cognitive load and system usability weighted scores are presented together in the accompanying figure, with both domains having an adjusted maximum score of 100.

The median score was 20.6 greater in the intervention group than in the neutral response comparator (p < 0.001). The effect size was large, with a Cohen’s d of 0.97.
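The standard System Usability Scale scoring rule can be sketched as follows: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score out of 100. Whether ITEM applies exactly this rule is an assumption; the function name and example responses are illustrative.

```python
def sus_score(responses):
    """Score ten SUS items (each rated 1-5) on the standard 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must lie between 1 and 5")
        if item_number % 2 == 1:
            total += response - 1  # positively worded (odd) items
        else:
            total += 5 - response  # negatively worded (even) items
    return total * 2.5


# Hypothetical responses for one participant (not the study data).
print(sus_score([4, 2, 3, 2, 4, 2, 4, 2, 4, 3]))
```

Under this rule, a respondent who answers 3 to every item scores 50.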

Debrief

The median Debrief score was 21 (IQR 19-22), with a maximum score of 25. For psychological safety, assessed in question 1, ‘Did this feel like a safe learning environment?’, the median score was 5 (IQR 4-5), and for question 2, ‘Did you explore your feelings?’, the median score was 3 (IQR 2-4); each has a maximum score of 5. For reflection on performance after the VR session, rated in question 4, ‘Was one or more of your performance domains explored? For example, knowledge or procedural skills’, the median score was 4 (IQR 4-5).

The median score was 5.5 greater in the intervention group than in the neutral response comparator (p < 0.001). The effect size was large, with a Cohen’s d of 2.52.

Discussion

This case study outlines an approach to designing a VR simulation alongside a theoretical framework. The experience was evaluated by participants using ITEM. Multimodal learning, whereby visual, auditory, and kinaesthetic senses are engaged in an immersive and interactive environment (VR), is influencing the design of digital learning platforms (Philippe et al., 2020). Problem-based instructional methods can offer positive outcomes in clinical performance for medical students, in tests of clinical knowledge, and in reflective attitudes towards the learning format (Vernon & Blake, 1993). A VR sepsis simulation was constructed using an instructional design framework, and it offered a positive experience for participants. The subjective measures using the ITEM scales of immersion, intrinsic motivation, cognitive load, system usability, and debrief were all rated high. Furthermore, the scores demonstrated significantly more positive experiences when compared with a neutral response (large effect size). The stages of development outlined in the introduction have been applied to numerous immersive technologies and have shown positive learning outcomes (Codd & Choudhury, 2011; Hartstein et al., 2022; Rim & Shin, 2021).

The technology (medium) and the media depicting the evolving clinical simulation of a deteriorating patient with sepsis were highly immersive. Participants reported the experience as highly real, yet one that dissociated them from the real world. The experience of place illusion and fidelity promotes the ability to be transported to an environment that evokes a sense of reality. Authenticity of activities is a contingency of design and fosters cognitive realism, thus having the potential to align educational needs and outcomes (Herrington et al., 2007). Jensen and Konradsen’s (2018) review of VR HMDs identified dimensions of learning, which included cognitive skills and understanding of spatial and visual information; knowledge; psychomotor skills related to head movement, such as visual scanning or observational skills; and affective skills related to controlling the emotional response to stressful or difficult situations. The review rated the quality of experimental studies using the Medical Education Research Study Quality Instrument (MERSQI), with an average score of 10.9. Another review of immersive technology using MERSQI found that VR studies in medical education scored 10.6, with the use of immersive technology favouring an association with learning (Jacobs et al., 2022).

Engaging in a complex scenario draws on prior knowledge of both the content and how to navigate the technology. An optimum cognitive load provides a perceived workload that can encourage situational awareness and the management of internal and external stressors. The NASA TLX raw score has been validated in the clinical environment and correlates with the original two-step comparison methodology (Said et al., 2020). This implies that higher cognitive load scores impair performance (Zimmerer & Matthiesen, 2021). However, as workload is a complex construct, this relationship is challenging to observe and to establish as causal (Hart, 2006). In this case, the overall raw score is a snapshot of workload as a subjective report following an activity. An extreme TLX score of above 77 was not experienced by any user (Grier, 2015). In separate work on high-fidelity simulation, post-test total TLX scores were 61 (Favre-Félix et al., 2022), compared with 56.7 in this study. In an analysis of 45 studies using medical-related tasks, the 25th to 75th percentile range of NASA TLX scores, taken here as an acceptable range for the median, was 39.4–61.5 (Grier, 2015). The stepwise nature of the scenario enabled an acceptable cognitive load whilst interfacing with the technology. The simulation requires complete interaction with both patient and environment in a virtual world, and this can introduce additional extraneous load, as clinical scenarios can be challenging when coupled with a technology interface. The novice learner should be guided at a suitable pace, with content difficulty tailored to the individual. The degree of control and immersion will influence outcomes (Birbara & Pather, 2021).

The operational aspects of technology described in MITHE affect the degree of immersion, and this influences how technology is developed, designed, and implemented for the benefit of education (Codd & Choudhury, 2011). As VR is an evolving technology, with the addition of haptic controllers for manipulating the environment, it is important to evaluate its usability. It was therefore reassuring to find that the usability score was 72.5, as ratings above 70 are considered to indicate ‘good’ usability and acceptability (Bangor et al., 2009). Furthermore, a score above 68 is a benchmark for acceptability (Hyzy et al., 2022). This feedback can generate adaptations, and the evaluation of teaching methods forms an important element of instructional design.

The intrinsic motivation subscale refers to engagement in activities from which enjoyment, interest, and volition can be derived (Standage et al., 2005). The self-regulation of motivation proposes that a student will engage in an educational activity if it produces satisfaction, which differs from an external motive. Autonomy, competence, and relatedness support the psychological functioning of students (Ryan & Deci, 2017), and facilitating these motivations in education can foster a positive learning experience. The debrief in the simulation provided a psychologically safe environment; however, this could be supplemented by greater reflection on users’ feelings after the simulation. Emotions influence our cognitive states, and the literature supports the notion that they impact performance and learning (LeBlanc & Posner, 2022).

VR has the potential to offer numerous educational benefits: repetition of any areas of weakness; asynchronicity with distribution and portability; individualisation of learning outcomes and performance metrics; and representational fidelity that can reproduce a contextually and psychologically immersive experience. However, this technology-based learning can also introduce potential barriers. In a study of co-located learning, researchers found that connectivity to Wi-Fi disturbed the user’s augmented reality experience and potentially altered presence (George et al., 2023). There are also concerns about the ethical perspective of learning in this sphere (Jacobs, 2023), and some users expressed concern that this could replace real clinical teaching (Jacobs & Maidwell-Smith, 2022). In this study, a doctor remained present to provide technical assistance and clinical interpretation to aid the participants’ debrief experience. This prototype sepsis simulation has the scope to be both co- and self-facilitated in future iterations.

This pilot was a small-sample case study without a real control group; as such, interpretation of the findings has to be treated with caution. Although effect sizes were very large when compared with a neutral response, the sample size was small, which reduces power. ITEM is a self-report measure, which has the advantage of efficiency and simplicity. However, the subjectivity of responses and the lack of other assessments to evaluate performance, i.e. objective examination, hinder the ability to assess whether the VR simulation is educationally beneficial. Furthermore, ITEM is an exploratory survey that requires further testing and factor analysis to explore whether redundancy exists and whether variables correlate. Future studies that explore performance following VR simulation and compare it with standard mannikin simulation would strengthen our understanding of user experience and interaction with new technology.

Co-development of resources by industry and healthcare is a strategy that can lead to successful outcomes for all parties. The technology and gaming industries are advancing, and manufacturers benefit from clinical expertise to complement the key process components of identification, invention, and implementation of education tools (Pai et al., 2018). The authors involved in the design of the simulation (CJ and KV) found the process rewarding, and the blended academic-commercial approach accelerated innovation in a way not traditionally possible in healthcare education infrastructures.

Conclusion

The design of a novel virtual reality (VR) simulation of a deteriorating patient with sepsis was supported by evidence-based practice and co-developed with clinicians. The simulation utilised an instructional methodology that provided an enjoyable experience and promoted immersion and presence. The Immersive Technology Evaluation Measure (ITEM) quantified the user experience, and participants reported a high level of intrinsic motivation and usability during the problem-solving simulation. The post-simulation debrief addressed the students’ emotional state and allowed them to reflect on take-home messages that are key in managing conditions with high stakes for patients and health services. Future research directed at designing immersive simulation and evaluating outcomes is needed to challenge the perceived barriers to adopting immersive technologies in healthcare education settings.

Disclosure statement

Author Dr Chris Jacobs is a scientific and technical editor for the Journal of Visual Communication in Medicine. This was disclosed from the outset, and the author had no part in the editorial process. Gogglemind Ltd provided the virtual simulation software and hardware. Gogglemind Ltd had no influence on the data collection, analysis, and subsequent write-up.

References

- Adams, N. E. (2015). Bloom’s taxonomy of cognitive learning objectives. Journal of the Medical Library Association: JMLA, 103(3), 152–153. doi:10.3163/1536-5050.103.3.010.

- Bangor, A., Kortum, P., & Miller, J. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114–123.

- Beckhaus, S., & Lindeman, R. W. (2011). Experiential Fidelity: Leveraging the Mind to Improve the VR Experience. In G. Brunnett, S. Coquillart, & G. Welch (Eds.), Virtual realities: Dagstuhl seminar 2008 (pp. 39–49). Springer Vienna.

- Birbara, N. S., & Pather, N. (2021). Instructional design of virtual learning resources for anatomy education. In P. M. Rea (Ed.), Biomedical visualisation, Vol 9 (Vol. 1317, pp. 75–110). Springer.

- Bonnin, C., Pejoan, D., Ranvial, E., Marchat, M., Andrieux, N., Fourcade, L., & Perrochon, A. (2023). Immersive virtual patient simulation compared with traditional education for clinical reasoning: A pilot randomised controlled study. Journal of Visual Communication in Medicine, 46(2), 66–74. doi:10.1080/17453054.2023.2216243.

- Burgess, A., van Diggele, C., Roberts, C., & Mellis, C. (2020). Key tips for teaching in the clinical setting. BMC Medical Education, 20(Suppl 2), 463. doi:10.1186/s12909-020-02283-2.

- Codd, A. M., & Choudhury, B. (2011). Virtual reality anatomy: Is it comparable with traditional methods in the teaching of human forearm musculoskeletal anatomy? Anatomical Sciences Education, 4(3), 119–125. doi:10.1002/ase.214.

- de Melo, B. C. P., Falbo, A. R., Souza, E. S., Muijtjens, A. M. M., Van Merriënboer, J. J. G., & Van der Vleuten, C. P. M. (2022). The limited use of instructional design guidelines in healthcare simulation scenarios: An expert appraisal. Advances in Simulation (London, England), 7(1), 30. doi:10.1186/s41077-022-00228-x.

- Favre-Félix, J., Dziadzko, M., Bauer, C., Duclos, A., Lehot, J. J., Rimmelé, T., & Lilot, M. (2022). High-fidelity simulation to assess task load index and performance: A prospective observational study. Turkish Journal of Anaesthesiology and Reanimation, 50(4), 282–287. doi:10.5152/tjar.2022.21234.

- Franklin, N., & Melville, P. (2015). Competency assessment tools: An exploration of the pedagogical issues facing competency assessment for nurses in the clinical environment. Collegian (Royal College of Nursing, Australia), 22(1), 25–31. doi:10.1016/j.colegn.2013.10.005.

- Frendo, M., Frithioff, A., Konge, L., Sorensen, M. S., & Andersen, S. A. (2021). Cochlear implant surgery: Learning curve in virtual reality simulation training and transfer of skills to a 3D-printed temporal bone - A prospective trial. Cochlear Implants International, 22(6), 330–337. doi:10.1080/14670100.2021.1940629.

- Gagné, R. M., & Merrill, M. D. (1990). Integrative goals for instructional design. Educational Technology Research and Development, 38(1), 23–30. doi:10.1007/BF02298245.

- George, O., Foster, J., Xia, Z., & Jacobs, C. (2023). Augmented reality in medical education: A mixed methods feasibility study. Cureus, 15(3), e36927. doi:10.7759/cureus.36927.

- Grier, R. A. (2015). How high is high? A meta-analysis of NASA-TLX global workload scores. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 59(1), 1727–1731. doi:10.1177/1541931215591373.

- Hart, S. G. (2006). NASA-Task Load Index (NASA-TLX); 20 years later. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 50(9), 904–908. doi:10.1177/154193120605000909.

- Hartstein, A. J., Verkuyl, M., Zimney, K., Yockey, J., & Berg-Poppe, P. (2022). Virtual reality instructional design in orthopedic physical therapy education: A mixed-methods usability test. Simulation & Gaming, 53(2), 111–134. doi:10.1177/10468781211073646.

- Herrington, J., Reeves, T. C., & Oliver, R. (2007). Immersive learning technologies: Realism and online authentic learning. Journal of Computing in Higher Education, 19(1), 80–99. doi:10.1007/BF03033421.

- Hyzy, M., Bond, R., Mulvenna, M., Bai, L., Dix, A., Leigh, S., & Hunt, S. (2022). System usability scale benchmarking for digital health apps: Meta-analysis. JMIR mHealth and uHealth, 10(8), e37290. doi:10.2196/37290.

- Jacobs, C. (2023). Augmented resuscitation- simulacrum of AR. Journal of Visual Communication in Medicine, 46(1), 51–53. doi:10.1080/17453054.2023.2169111.

- Jacobs, C., Foote, G., Joiner, R., & Williams, M. (2022). A narrative review of immersive technology enhanced learning in healthcare education. International Medical Education, 1(2), 43–72. doi:10.3390/ime1020008.

- Jacobs, C., Foote, G., & Williams, M. (2023). Evaluating user experience with immersive technology in simulation-based education: A modified Delphi study with qualitative analysis. PLOS One, 18(8), e0275766. doi:10.1371/journal.pone.0275766.

- Jacobs, C., & Maidwell-Smith, A. (2022). Learning from 360-degree film in healthcare simulation: A mixed methods pilot. Journal of Visual Communication in Medicine, 45(4), 223–233. doi:10.1080/17453054.2022.2097059.

- Jacobs, C., & Rigby, J. M. (2022). Developing measures of immersion and motivation for learning technologies in healthcare simulation: A pilot study. Journal of Advances in Medical Education & Professionalism, 10(3), 163–171. doi:10.30476/jamp.2022.95226.1632.

- Jensen, L., & Konradsen, F. (2018). A review of the use of virtual reality head-mounted displays in education and training. Education and Information Technologies, 23(4), 1515–1529. doi:10.1007/s10639-017-9676-0.

- Jung, S., & Lindeman, R. W. (2021). Perspective: Does realism improve presence in VR? Suggesting a model and metric for VR experience evaluation. Frontiers in Virtual Reality, 2. doi:10.3389/frvir.2021.693327.

- Kari, T., & Kosa, M. (2023). Acceptance and use of virtual reality games: An extension of HMSAM. Virtual Reality, 27, 1585–1605. doi:10.1007/s10055-023-00749-4.

- Kirkpatrick, D. L. (1994). Evaluating training programs: The four levels (1st ed.). Berrett-Koehler.

- Kolb, D. (1984). Experiential learning: Experience as the source of learning and development. Prentice-Hall.

- Laurell, C., Sandström, C., Berthold, A., & Larsson, D. (2019). Exploring barriers to adoption of virtual reality through social media analytics and machine learning – an assessment of technology, network, price and trialability. Journal of Business Research, 100, 469–474. doi:10.1016/j.jbusres.2019.01.017.

- LeBlanc, V. R., & Posner, G. D. (2022). Emotions in simulation-based education: Friends or foes of learning? Advances in Simulation (London, England), 7(1), 3. doi:10.1186/s41077-021-00198-6.

- Liu, X., Zhang, J., Hou, G., & Wang, Z. (2018). Virtual reality and its application in military. IOP Conference Series: Earth and Environmental Science, 170(3), 032155. doi:10.1088/1755-1315/170/3/032155.

- Loureiro, S. M. C., Guerreiro, J., Eloy, S., Langaro, D., & Panchapakesan, P. (2019). Understanding the use of virtual reality in marketing: A text mining-based review. Journal of Business Research, 100, 514–530. doi:10.1016/j.jbusres.2018.10.055.

- Macnamara, A. F., Bird, K., Rigby, A., Sathyapalan, T., & Hepburn, D. (2021). High-fidelity simulation and virtual reality: An evaluation of medical students’ experiences. BMJ Simulation & Technology Enhanced Learning, 7(6), 528–535. doi:10.1136/bmjstel-2020-000625.

- McVeigh, S. E. (2020). Sepsis management in the emergency department. The Nursing Clinics of North America, 55(1), 71–79. doi:10.1016/j.cnur.2019.10.009.

- Merrill, M. D. (2002). First principles of instruction. Educational Technology Research and Development, 50(3), 43–59. doi:10.1007/BF02505024.

- Milgram, P., & Kishino, F. (1994). A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, 77(12), 1321–1329. doi:10.1.1.102.4646.

- Moore, W. R., Vermuelen, A., Taylor, R., Kihara, D., & Wahome, E. (2019). Improving 3-hour sepsis bundled care outcomes: Implementation of a nurse-driven sepsis protocol in the emergency department. Journal of Emergency Nursing, 45(6), 690–698. doi:10.1016/j.jen.2019.05.005.

- Orland, M. D., Patetta, M. J., Wieser, M., Kayupov, E., & Gonzalez, M. H. (2020). Does virtual reality improve procedural completion and accuracy in an intramedullary tibial nail procedure? A randomized control trial. Clinical Orthopaedics and Related Research, 478(9), 2170–2177. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7431248/pdf/abjs-478-2170.pdf doi:10.1097/CORR.0000000000001362.

- Pai, D. R., Minh, C. P., & Svendsen, M. B. (2018). Process of medical simulator development: An approach based on personal experience. Medical Teacher, 40(7), 690–696. doi:10.1080/0142159X.2018.1472753.

- Philippe, S., Souchet, A. D., Lameras, P., Petridis, P., Caporal, J., Coldeboeuf, G., & Duzan, H. (2020). Multimodal teaching, learning and training in virtual reality: A review and case study. Virtual Reality & Intelligent Hardware, 2(5), 421–442. doi:10.1016/j.vrih.2020.07.008.

- Prineas, S., Mosier, K., Mirko, C., & Guicciardi, S. (2021). Non-technical skills in healthcare. In L. Donaldson, W. Ricciardi, S. Sheridan, & R. Tartaglia (Eds.), Textbook of patient safety and clinical risk management (pp. 413–434). Springer International Publishing.

- Rim, D., & Shin, H. (2021). Effective instructional design template for virtual simulations in nursing education. Nurse Education Today, 96, 104624. doi:10.1016/j.nedt.2020.104624.

- Robson, W. P., & Daniels, R. (2008). The Sepsis Six: Helping patients to survive sepsis. British Journal of Nursing, 17(1), 16–21. doi:10.12968/bjon.2008.17.1.28055.

- Ryan, R. M., & Deci, E. L. (2017). Self-determination theory: Basic psychological needs in motivation, development, and wellness. The Guilford Press.

- Said, S., Gozdzik, M., Roche, T. R., Braun, J., Rössler, J., Kaserer, A., Spahn, D. R., Nöthiger, C. B., & Tscholl, D. W. (2020). Validation of the raw national aeronautics and space administration task load index (NASA-TLX) questionnaire to assess perceived workload in patient monitoring tasks: Pooled analysis study using mixed models. Journal of Medical Internet Research, 22(9), e19472. doi:10.2196/19472.

- Skarbez, R., Smith, M., & Whitton, M. C. (2021). Revisiting Milgram and Kishino’s reality-virtuality continuum. Frontiers in Virtual Reality, 2. doi:10.3389/frvir.2021.647997.

- Slater, M. (2009). Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 364(1535), 3549–3557. doi:10.1098/rstb.2009.0138.

- Snarby, H., Gasbakk, T., Prasolova-Forland, E., Steinsbekk, A., & Lindseth, F. (2019). Procedural medical training in VR in a smart virtual university hospital. Smart Education and E-Learning 2018, 99, 132–141. doi:10.1007/978-3-319-92363-5.

- Standage, M., Duda, J. L., & Ntoumanis, N. (2005). A test of self-determination theory in school physical education. The British Journal of Educational Psychology, 75(Pt 3), 411–433. doi:10.1348/000709904X22359.

- Tang, Y. M., Chau, K. Y., Kwok, A. P. K., Zhu, T., & Ma, X. (2022). A systematic review of immersive technology applications for medical practice and education - Trends, application areas, recipients, teaching contents, evaluation methods, and performance. Educational Research Review, 35, 100429. doi:10.1016/j.edurev.2021.100429.

- Vernon, D. T., & Blake, R. L. (1993). Does problem-based learning work? A meta-analysis of evaluative research. Academic Medicine, 68(7), 550–563. https://journals.lww.com/academicmedicine/Fulltext/1993/07000/Does_problem_based_learning_work__A_meta_analysis.15.aspx doi:10.1097/00001888-199307000-00015.

- Wang, A., Thompson, M., Uz-Bilgin, C., & Klopfer, E. (2021). Authenticity, interactivity, and collaboration in virtual reality games: Best practices and lessons learned. Frontiers in Virtual Reality, 2. doi:10.3389/frvir.2021.734083.

- Witmer, B. G., & Singer, M. J. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence: Teleoperators and Virtual Environments, 7(3), 225–240. doi:10.1162/105474698565686.

- Yu, P., Pan, J. J., Wang, Z. X., Shen, Y., Wang, L. L., Li, J. L., Hao, A., & Wang, H. (2021). Cognitive load/flow and performance in virtual reality simulation training of laparoscopic surgery. In 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW 2021) (pp. 468–469).

- Zhou, Y., Hou, J., Liu, Q., Chao, X., Wang, N., Chen, Y., Guan, J., Zhang, Q., & Diwu, Y. (2021). VR/AR technology in human anatomy teaching and operation training. Journal of Healthcare Engineering, 2021, 9998427. doi:10.1155/2021/9998427.

- Zimmerer, C., & Matthiesen, S. (2021). Study on the impact of cognitive load on performance in engineering design. Proceedings of the Design Society, 1, 2761–2770. doi:10.1017/pds.2021.537.