Abstract

Computer-assisted orthopedic surgery requires precise representations of bone surfaces. To date, computed tomography constitutes the gold standard, but comes with a number of limitations, including costs, radiation and availability. Ultrasound has potential to become an alternative to computed tomography, yet suffers from low image quality and limited field-of-view. These shortcomings may be addressed by a fully automatic segmentation and model-based completion of 3D bone surfaces from ultrasound images. This survey summarizes the state-of-the-art in this field by introducing employed algorithms, and determining challenges and trends. For segmentation, a clear trend toward machine learning-based algorithms can be observed. For 3D bone model completion however, none of the published methods involve machine learning. Furthermore, data sets and metrics are identified as weak spots in current research, preventing development and evaluation of models that generalize well.

1. Introduction

Computer-assisted orthopedic surgery (CAOS) provides a number of benefits to the patient, surgeon and clinic. With CAOS, the accuracy and repeatability in the placement of e.g. screws, osteotomies or implants can be improved [Citation1–3]. The automated data processing enables personalized solutions like patient-specific instruments and implants [Citation4], where the 3D geometry is required to design rigidly fitting cutting guides and to assess the patient’s J-curve, respectively, among other aspects. These methods potentially improve mechanical alignment [Citation5] or other functional parameters [Citation6,Citation7] and decrease net costs in total knee arthroplasty (TKA) [Citation8,Citation9]. For example, implant templating in 3D as opposed to 2D increases tibial size selection accuracy from 28.7% to 93.1% [Citation10]. Finally, personalized biomechanical simulation and optimization based on 3D modeling may correlate with patient-related outcomes [Citation11–14], and thus help to improve clinical outcomes.

Therefore, for many applications in CAOS, a patient-specific 3D representation of bony anatomy is fundamental. The accuracy of this representation is of high importance: mismatch between the bone and implant geometries is associated with poor clinical outcome. Chung et al. state that femoral component overhang of over 4 mm correlates with lower maximum flexion angle (p = 0.005) [Citation15]. Mahoney et al. report frequent overhang exceeding 3 mm in 40% of men and 68% of women and found an almost twofold increased risk of knee pain [Citation16]. Conventionally, computed tomography (CT) and magnetic resonance imaging (MRI) are the gold standard for the acquisition of 3D bony geometry. These techniques provide a comprehensive 3D image of the region of interest at a high resolution, with in-plane voxel spacing of current systems in the range of 0.3–0.5 mm [Citation17], which is influenced by external factors such as the tissue properties. While CT allows for comparably easy segmentation of bony structures, MRI enables visualization of the surrounding soft tissues. However, both systems come with high costs. Yet another alternative is intra-operative 2D or 3D fluoroscopy. While it is rather cheap compared to CT or MRI, it exposes the patient and possibly the surgeon to ionizing radiation.

In contrast to computed tomography and fluoroscopy, ultrasound is a radiation-free imaging technique. Based on the reflection of ultrasound waves at impedance interfaces, it is able to visualize various tissues and structures, for example muscles, blood vessels, cartilage and bones. Its real-time capability allows for an interactive morpho-functional examination including e.g. Doppler sonography or elastography. It is widely available and represents a cheap alternative [Citation18]. The physical limit of spatial resolution depends on the imaging frequency and ranges from 0.1 mm to 1 mm for frequencies of 20 to 1 MHz. However, ultrasound comes with a number of limitations. The most apparent one is the so-called speckle noise: the interference of numerous small diffuse reflections creates the characteristic noise commonly found in ultrasound images. Accordingly, the signal-to-noise ratio (SNR) is quite low. Furthermore, bone interfaces cause a strong specular reflection of the ultrasound wave, with almost no echo of structures in the ‘bone shadow’. As such, visualization of structures behind a bone surface is not possible in general. For a number of bony anatomies this is highly relevant, as vital parts, e.g. retropatellar surfaces of the knee joint, cannot be imaged by ultrasound. The specular reflection furthermore induces a high dependence of the signal strength on the inclination angle between bone surface and ultrasound beam: in an orthogonal setting, the sound energy is reflected in the direction of the probe, producing a strong response in the image. As the angle becomes more acute, less sound energy is reflected to and measured by the probe, causing a small or even no response of the surface in the image. In a fully orthogonal setting, on the other hand, echo artifacts may occur. Imaging parameters need to be set manually, even if some progress on automation has been made by ultrasound vendors (Clarius, Vancouver, Canada). Similarly, the data acquisition itself is a highly manual process. Attempts to automate it with robots are still in their infancy [Citation19]. All of the above leads to a steep learning curve for sonographers, who require years of training and practice to be able to distinguish relevant features from artifacts [Citation20]. Most importantly, these limitations induce the need for modeling the occluded parts of the bone. This holds especially true for narrow joint gaps, e.g. in the wrist, knee or hip. Two examples of relevant medical applications in this regard are TKA, where the distal cut may be defined by an offset to the distal articulating surface, and total hip arthroplasty (THA), where the cup needs to be positioned in the joint socket such that it is covered by bone.

The ultrasound signal recorded by the probe is referred to as radio frequency (RF) data. Commonly, the signal envelope of the RF data is computed and a grey-scale Brightness-Mode (B-Mode) image is constructed from it. All papers reviewed in this work base their computation on B-Mode images, even though the original data may be used as well [Citation21]. Ultrasound signals can be measured for a single 1D beam, a 2D image or a 3D volume image. The latter can be generated by combining multiple 2D image slices into one volume. This requires information on the pose of the probe, which can be provided by a mechanically swept 1D piezo-element array, by a phased 2D piezo-element array, or by tracking a 2D probe with optical or electromagnetic tracking systems. Given the limited field-of-view of common ultrasound probes, this technique becomes highly relevant to depict large structures in a single volume. Note that the image interpolation process is often referred to as reconstruction, which is not a topic of this survey. Similarly, (multi-)modal registration of (volumetric) ultrasound images to each other, other imaging modalities or atlases is not discussed. Instead, we refer the reader to [Citation22] and [Citation23] for reviews of these topics.
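The step from RF data to a B-Mode image can be illustrated with a minimal sketch. It assumes that the envelope is taken as the magnitude of the analytic signal (computed via the FFT) and that a logarithmic compression with a 60 dB dynamic range is applied; the function name `rf_to_bmode`, the dynamic range and the toy pulse parameters are illustrative assumptions, not values taken from the reviewed papers.

```python
import numpy as np

def rf_to_bmode(rf, dynamic_range_db=60.0):
    """Convert RF scan lines (samples x lines) to a B-Mode image in [0, 1]."""
    n = rf.shape[0]
    spectrum = np.fft.fft(rf, axis=0)
    # Analytic signal: keep DC (and Nyquist), double positive, zero negative frequencies.
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    analytic = np.fft.ifft(spectrum * weights[:, None], axis=0)
    envelope = np.abs(analytic)
    # Log compression relative to the maximum, clipped to the assumed dynamic range.
    envelope = envelope / (envelope.max() + 1e-12)
    db = 20.0 * np.log10(envelope + 1e-12)
    return np.clip((db + dynamic_range_db) / dynamic_range_db, 0.0, 1.0)

# Toy RF line: a 5 MHz pulse sampled at 40 MHz, centred at sample 200 (assumed values).
t = np.arange(512)
pulse = np.exp(-((t - 200) ** 2) / (2 * 10.0 ** 2)) * np.cos(2 * np.pi * 5e6 / 40e6 * (t - 200))
bmode = rf_to_bmode(pulse[:, None])
```

In this toy case the oscillating pulse is mapped to a single smooth bright spot around sample 200, which is the kind of response a reflecting interface produces in a B-Mode image.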

Computer-assistance may be capable of handling the aforementioned limitations and allow the wide-spread use of ultrasound for 3D bone modeling. In general, two tasks need to be accomplished: first, the delineation of the bone surface in the ultrasound image, typically done via segmentation, and second, the bone model completion of the partial surface. A recent review on the first topic is given by Pandey et al. [Citation24], presenting 56 works published over a 29-year period. This work strives to continue their work by adding another 44 publications published in the past three years to the list, underlining the significance of the topic. We furthermore extend their work by providing a method tutorial and investigating bone model completion. The review most closely related to this topic by Heimann et al. mentions just a single publication involving ultrasound imaging [Citation25].

This leads to the main objectives and contributions of this work: (1) Review of the state-of-the-art in segmentation of bone surfaces in ultrasound images. (2) Review of the state-of-the-art in completion of partial bone models derived from ultrasound. From these contributions, the reader should get a comprehensive overview and detailed understanding of the methodology used. (3) Identification and discussion of past limitations in order to (4) make recommendations for future research. This work primarily addresses researchers in the fields of total knee and hip arthroplasty (TKA/THA) and biomechanics who require full 3D models as input for their methods.

2. Methods

We followed the ‘Preferred Reporting Items for Systematic Reviews and Meta-Analysis’ (PRISMA) paradigm when applicable. As the review at hand is a tutorial review, this excluded data collection, bias assessment or syntheses. The review was not registered beforehand. Our research protocol followed a multi-stage approach: first, we researched related review papers with similar scope. This allowed us to identify the need for a more recent survey publication and to point the reader to high-quality reviews of prior work. The keywords required in the title or abstract were either a combination of 3D, ultrasound and survey, or ultrasound, survey and segmentation. For each word, alternative notations and synonyms were included (see Table 1 for a complete list). Two literature databases, Google Scholar and PubMed, were used. Examining 520 survey papers, this research yielded five relevant surveys on segmentation of ultrasound images that provide a comprehensive literature review [Citation26–29]. We want to highlight the work by Pandey et al., which provides a high-quality overview up to the year 2019 [Citation24]. Three relevant surveys on shape completion of partial 3D ultrasound models were found, providing an overview of the state of the art up to 2009 [Citation25,Citation30,Citation31]. The most relevant survey on that topic is the work by Heimann et al. [Citation25]. This concludes the first stage, where other survey papers were reviewed. All papers included in these survey papers were excluded from our search.

Table 1. List of alternative keywords used in the keyword-based literature research.

In the second stage, we limited our research to more recent work published after 2019 and 2009, respectively, and searched for original articles using a similar pattern: for segmentation-related papers, the keywords were a combination of 3D, ultrasound, segmentation and orthopedics. For bone model completion, the keywords were either model, orthopedics and completion or model, 3D and ultrasound. The papers’ titles and abstracts were scanned to decide upon selection. The papers included had to present methods not published previously. As mentioned beforehand, methods on registration and volume reconstruction were excluded. Furthermore, manual or semi-manual techniques were excluded, as well as publications that were not accessible. Based on this method, we added 39 relevant papers out of 2000 examined papers to our list.

After this keyword-based analysis, we checked all authors that published more than one paper in the list so far. Their research groups were determined and all publications of their respective head of research were checked. Based on this, we added another 8 papers to our list.

Finally, we searched through all citations of the publications found so far and selected related works, adding another 8 publications. During revision, three more papers were pointed out by the reviewer, such that this review included 58 papers in total.

3. Methodological overview

As mentioned before, most of the publications addressed one of the two sub-tasks, segmentation and bone model completion. Therefore, we analyzed the respective problems separately. In the following subchapters, we present the most popular and successful methods.

3.1. Segmentation

3.1.1. Shadow peak

One of the most challenging tasks in the interpretation of ultrasound images is distinguishing bone surfaces from soft tissue interfaces. A helpful feature for identifying bone surfaces is the acoustic shadow occurring behind interfaces with a high difference in impedance, the so-called bone shadow: the largest part of the incoming sound energy is reflected, and only a very small part is transmitted. Mostly, bone shadow information is used as an additional feature for the segmentation of bone surfaces. One example is the Shadow Peak algorithm [Citation32], where a shadow confidence map S is computed by integrating the normalized and Gaussian-filtered image intensities along the ultrasound scan lines, i.e. along the vertical image axis. This shadow confidence map (see Figure 1) can then be used for the enhancement of the original ultrasound image by element-wise multiplication.
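The idea of a shadow confidence map can be sketched as follows. This is one plausible formulation under stated assumptions and not necessarily the exact definition used in [Citation32]: the image is normalized, smoothed with a Gaussian along each scan line, and the confidence at a pixel is taken as one minus the fraction of the scan line's intensity that lies below that pixel. The names `gaussian_kernel` and `shadow_confidence`, the value of `sigma` and the toy image are hypothetical.

```python
import numpy as np

def gaussian_kernel(sigma):
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def shadow_confidence(image, sigma=2.0):
    """image: 2D array (depth x scan lines) with non-negative intensities."""
    img = image.astype(float)
    img = img / (img.max() + 1e-12)                      # normalize intensities
    k = gaussian_kernel(sigma)
    smooth = np.apply_along_axis(lambda col: np.convolve(col, k, mode="same"), 0, img)
    cum = np.cumsum(smooth, axis=0)                      # integrate along the scan line
    total = cum[-1]
    below = total - cum                                  # intensity remaining below each pixel
    return 1.0 - below / (total + 1e-12)

# Toy image: soft tissue (0.2) above a bone response (1.0), dark shadow underneath.
image = np.zeros((64, 8))
image[:30] = 0.2
image[30:33] = 1.0
S = shadow_confidence(image)
enhanced = image * S                                     # element-wise multiplication
```

In the toy image, the contrast between the bone response and the overlying soft tissue increases after the multiplication, because little intensity remains below the bone surface.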

3.1.2. Phase symmetry

Intensity-based segmentation techniques suffer from the unnormalized nature of ultrasound images. While these techniques may work well for CT images, the actual intensity observed in ultrasound is influenced by a number of variables, e.g. the wave amplitude, attenuation of the surrounding tissue or the inclination angle of the reflecting interface to the US beam. However, one may use another feature inherent to bone responses: their symmetry. The ultrasound pulse generated by the ultrasound probe has a certain spatial extent. When reflecting off a surface, its waveform may change, e.g. the pulse length may increase due to a reflection off an inclined surface, but its symmetrical nature will remain [Citation33].

In an image, this feature can be searched for by transforming it into the frequency domain and conducting a wavelet analysis. Suitable wavelets include log-Gabor filters, which exhibit a frequency and a directional component. By varying the frequency component, symmetric features of different sizes can be determined. Similarly, filters for different directions can be combined. In ultrasound segmentation, a filter bank is built that exhibits rotation-invariance. The even and odd parts of the filter response relate to the symmetry and asymmetry of the image signal, respectively. Two constants account for noise and prevent division by zero, respectively. Along with the inherent intensity-invariance of the individual filters, bone responses in the image can be detected reliably. This is computed by the so-called Phase Symmetry (PS), shown in Figure 2 [Citation34].

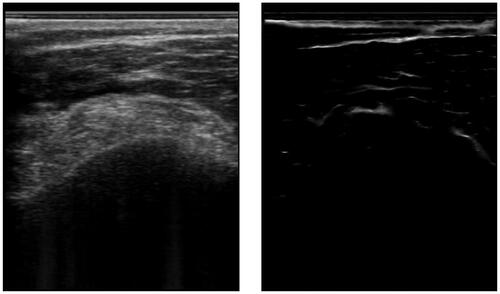

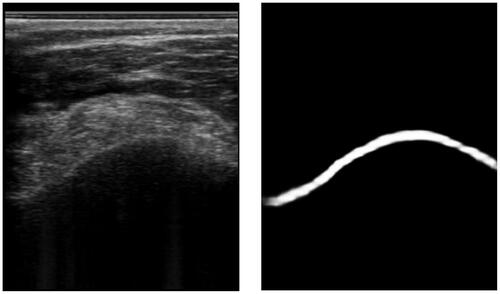

Figure 2. An example of an ultrasound image and its respective phase symmetry (PS) image. Note, that the bone surface on the left has a rather asymmetric appearance due to the hyperechoic soft tissue on top. This is why the bone surface is barely recognized.
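A one-dimensional sketch along a single scan line may help to make the phase symmetry measure concrete. It assumes the commonly used formulation in which, for every filter scale, the difference between the magnitudes of the even and odd responses minus a noise threshold `T` is clipped at zero, summed over scales, and divided by the summed response amplitudes plus a small constant `eps`. The symbol names, the filter wavelengths and the bandwidth parameter `sigma_ratio` are assumptions for illustration; 2D implementations additionally combine several filter orientations.

```python
import numpy as np

def phase_symmetry_1d(signal, wavelengths=(8, 16, 32), sigma_ratio=0.55, T=0.01, eps=1e-6):
    """PS = sum_s max(|e_s| - |o_s| - T, 0) / (sum_s sqrt(e_s^2 + o_s^2) + eps)."""
    n = signal.size
    freqs = np.fft.fftfreq(n)
    spectrum = np.fft.fft(signal)
    pos = freqs > 0
    numerator = np.zeros(n)
    denominator = np.zeros(n)
    for wl in wavelengths:
        f0 = 1.0 / wl
        # One-sided log-Gabor filter: real part = even response, imaginary part = odd response.
        log_gabor = np.zeros(n)
        log_gabor[pos] = np.exp(-np.log(freqs[pos] / f0) ** 2 / (2 * np.log(sigma_ratio) ** 2))
        response = np.fft.ifft(spectrum * 2.0 * log_gabor)
        even, odd = response.real, response.imag
        numerator += np.maximum(np.abs(even) - np.abs(odd) - T, 0.0)
        denominator += np.sqrt(even**2 + odd**2)
    return numerator / (denominator + eps)

x = np.arange(256)
ridge = np.exp(-((x - 64) ** 2) / (2 * 4.0**2))   # symmetric, bone-like response
step = (x >= 128).astype(float)                   # asymmetric intensity edge
ps_ridge = phase_symmetry_1d(ridge)
ps_step = phase_symmetry_1d(step)
```

The symmetric ridge yields a value close to one at its centre regardless of its amplitude, whereas the step edge, being purely asymmetric, yields a value close to zero at the edge location.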

Soft-tissue and other interface responses also exhibit a symmetric structure and are thus enhanced alongside the bone surfaces. However, bone surfaces are expected to have a sheet-like shape, which may differ from other interfaces like blood vessels. By conducting an eigen analysis of the PS image, one can assess the local eigenvalues. For a sheet-like structure, the eigenvalue associated with the direction across the sheet has a large magnitude, whereas the remaining eigenvalues are close to zero. These requirements can be incorporated into the PS formula to obtain the Structured Phase Symmetry (SPS) [Citation35], which comprises three terms: a blob-eliminating term, a sheet-enhancing term and a noise-canceling term.
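The eigenvalue pattern of a sheet-like structure can be checked with a small numerical sketch. It is a 2D analogue under stated assumptions: the Hessian is approximated with finite differences via `np.gradient`, and it is applied to a synthetic ridge image rather than to an actual PS image; the function name `hessian_eigenvalues_2d` is hypothetical.

```python
import numpy as np

def hessian_eigenvalues_2d(image):
    """Per-pixel Hessian eigenvalues, sorted by increasing magnitude along the last axis."""
    gy, gx = np.gradient(image.astype(float))
    gyy, gyx = np.gradient(gy)
    _, gxx = np.gradient(gx)
    hessian = np.stack([np.stack([gyy, gyx], axis=-1),
                        np.stack([gyx, gxx], axis=-1)], axis=-2)   # shape (H, W, 2, 2)
    eig = np.linalg.eigvalsh(hessian)                               # shape (H, W, 2)
    order = np.argsort(np.abs(eig), axis=-1)
    return np.take_along_axis(eig, order, axis=-1)

# Synthetic horizontal ridge (width parameter 3 px), constant along the x-axis.
y = np.arange(41)[:, None]
ridge = np.exp(-((y - 20) ** 2) / (2 * 3.0**2)) * np.ones((1, 41))
lam_small, lam_large = hessian_eigenvalues_2d(ridge)[20, 20]
```

On the ridge crest, one eigenvalue is zero (along the ridge) and the other is clearly negative (across the ridge), which is the pattern a sheet-enhancing term rewards.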

Another feature inherent to bone surfaces is the aforementioned bone shadow. It can be incorporated in the SPS by computing an attenuation map, similar to the shadow peak feature. The confidence-weighted structured phase symmetry (CSPS) map is defined as the product of the SPS with the shadow feature map. By thresholding the CSPS map, one can segment the bone surface [Citation35]. Note that the terms as well as the exact formulas used by the authors publishing about these methods slightly changed over the years.

While phase symmetry and its derivatives were among the most popular segmentation techniques prior to the era of convolutional neural networks, only two recent publications [Citation36,Citation37] fully rely on this technique for segmentation of bones. Another publication [Citation38], however, utilizes symmetry filters for detecting not just the bone surface, but the full spinal column profile. In [Citation39], phase symmetry is compared to a trimmed version utilizing just the bone shadow and scan-line derivative in order to speed up the computation.

3.1.3. Convolutional neural networks

The aforementioned methods rely on handcrafted features and heuristics. In contrast, the following methods belong to the domain of deep learning.

With the success of AlexNet [Citation40], convolutional neural networks (CNNs) became the gold standard for most vision tasks, including segmentation. See Figure 3 for an exemplary ultrasound image segmentation. In the medical domain, the U-Net [Citation41] established a gold standard architecture. However, various improvements have been adopted.

Figure 3. An example of an ultrasound image and its respective segmentation by a DeepLabv3 CNN architecture.

Most of the works analyzed in this paper rely on the original U-Net [Citation42–53], its 3D counterpart [Citation54–57], or the succeeding U-Net++ [Citation58], which densely populates the skip connections with convolutional blocks. Three publications incorporate dropout, typically used during training in order to improve the network’s robustness, into the inference scheme [Citation54,Citation59,Citation60]. Random neurons, or filters, are set to zero (‘dropped’) in order to introduce non-determinism into the inference process. This allows the uncertainty of the segmentation to be measured: if a pixel is reliably segmented over a number of runs, even though different features were dropped, the network has a high certainty regarding its segmentation. The computational burden increases linearly with the number of inference runs. This technique can also be used at run-time to identify voxels with uncertain segmentation and to focus the weight adaptation on these [Citation55]. Auxiliary phase-symmetry images can either be predicted by a second branch [Citation61] or incorporated as an additional feature [Citation62–64]. Similarly, Tang et al. propose to combine two U-Nets processing B-mode and shadow-enhanced B-mode images simultaneously, fusing their respective outputs to obtain the final segmentation [Citation56]. Several contributions do not rely on the U-Net, but on DeepLabv3+ [Citation65–68], Mask R-CNN [Citation69], a two-stage detector, or the Pyramid Attention Network (PAN) [Citation70]. DeepLabv3+ utilizes atrous (dilated) convolutions to retain high-resolution feature maps and combine multi-scale features. PAN adds an attention mechanism to the skip connections: when combining the low-level features of the skip connection into the decoder, the high-level semantic features are utilized to focus the attention on relevant parts. Additionally, multi-scale pyramid pooling is added to the highest semantic layer. Jiang et al. follow this concept and stack multi-scale features to guide attention in the final classification step [Citation71]. Banerjee et al. introduce a number of changes to the U-Net that were inspired by other architectures [Citation72]. Their ‘Light-convolution Dense Selection’ architecture (LDS-U-Net) employs depthwise-separable convolution for a reduced number of parameters, selection gates and residual connections. Similarly, the ‘BoneNet’ augments the original U-Net with ‘squeeze-and-excite’ blocks, which also introduce an attention mechanism, and depthwise-separable convolution [Citation73]. For the segmentation of volumetric images, spatial information of the neighboring slices may be incorporated. Besides 3D convolutions, this can be achieved by combining two encoders in a Siam-U-Net [Citation74], where the known region-of-interest of the previous slice is processed by a siamese encoder and combined with the encoding of the current frame via cross-correlation. This concept can also be formulated as a recurrent neural network on the basis of a gated recurrent unit, as proposed by Jiang et al. [Citation63].
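The use of dropout at inference time for uncertainty estimation can be sketched with a toy model. The snippet below does not reproduce any of the cited networks: it assumes a precomputed feature map and a linear classification head (both hypothetical stand-ins for a trained CNN), drops whole feature channels at random in every run, and reports the per-pixel mean and standard deviation of the predicted probabilities.

```python
import numpy as np

def mc_dropout_inference(features, weights, p_drop=0.5, n_runs=20, seed=0):
    """features: (H, W, C) feature map; weights: (C,) toy linear classifier head."""
    rng = np.random.default_rng(seed)
    scale = 1.0 / (1.0 - p_drop) if p_drop < 1.0 else 0.0
    probs = []
    for _ in range(n_runs):                                   # cost grows linearly with n_runs
        keep = rng.random(features.shape[-1]) >= p_drop       # drop whole channels ('filters')
        logits = (features * keep * scale) @ weights
        probs.append(1.0 / (1.0 + np.exp(-logits)))           # per-pixel foreground probability
    probs = np.stack(probs)
    return probs.mean(axis=0), probs.std(axis=0)              # segmentation and uncertainty

rng = np.random.default_rng(1)
features = rng.normal(size=(16, 16, 8))
weights = rng.normal(size=8)
mean_prob, uncertainty = mc_dropout_inference(features, weights)
mean_det, unc_det = mc_dropout_inference(features, weights, p_drop=0.0)
```

Pixels with a low standard deviation are segmented consistently across runs; without dropout (`p_drop=0.0`) all runs coincide and the estimated uncertainty vanishes.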

3.1.4. Generative adversarial networks

Generative adversarial networks (GANs) [Citation75] consist of two competing subnetworks: a generator network which learns to create synthetic samples from random distributions, and a discriminator network which learns to differentiate between synthetic and real samples. Both subnetworks are trained simultaneously in a min-max fashion. One extension are conditional generative adversarial networks (cGANs) [Citation76], where the generator is conditioned on input samples and learns to create corresponding output samples; thus, cGANs can be used for image-to-image translation [Citation77].
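For reference, the min-max training described above is commonly written as the following objectives. The notation is an assumption for illustration: generator $G$, discriminator $D$, real sample $y$, noise $z$ and, for the conditional case, an input image $x$ (as in image-to-image translation).

```latex
% Standard GAN objective
\min_G \max_D \; \mathbb{E}_{y \sim p_\text{data}}\big[\log D(y)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

% Conditional GAN objective (generator and discriminator both see the input x)
\min_G \max_D \; \mathbb{E}_{x,y}\big[\log D(x, y)\big]
  + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
```

The discriminator maximizes both terms, while the generator minimizes the second one by producing samples the discriminator cannot tell apart from real data.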

In [Citation78], the authors compare the pix2pix cGAN with the U-Net for the semantic segmentation of radial metaphysis, radial epiphysis and carpal bones in ultrasound images. Furthermore, they investigate a combination of U-Net and pix2pix, with the cGAN trained to improve U-Net predictions.

Apart from direct segmentation, cGANs can be used to generate additional input features. In [Citation79], the authors condition a cGAN on ultrasound B-mode images to generate corresponding bone shadow segmentation maps. These are subsequently fed to a multi-feature guided CNN for the segmentation of femur, tibia, radius, knee, and spine in ultrasound images.

Another use of GANs is the synthesis of ultrasound images and corresponding segmentation masks for data augmentation, as has been shown in [Citation51] for ultrasound images of radius, femur, spine and tibia. Similarly, in [Citation53] the authors use the pix2pix cGAN for the synthesis of ultrasound images of the humerus.

3.1.5. Orientation-guided graph convolutional networks

A feature specific to bone surfaces in ultrasound images is their connectivity. Typically, there are no sudden jumps or isolated patches. Rahman et al. leverage this knowledge by building a U-Net like network, but introducing graph convolution [Citation80]. The input feature map of the image is mapped to a subspace on which a learned similarity matrix is applied. This process imposes a learnable graph structure on the image, promoting continuous segmentation. Furthermore, the network predicts the surface’s orientation, adding another clue helping with continuous segmentation. This is of course limited to structures exhibiting a single closed surface, which is not the case for, e.g. spine images or cross-sections of radius and ulna.

3.1.6. Vision transformer

Large Transformer models are the basis of the recent advances in natural language processing. By computing an attention measure between all pairs of words in a sentence, their relative relevance is determined. This concept can be transferred to images by dividing the image into patches. In contrast to the convolution operation, which operates locally, global image context can be accessed in a single self-attention layer. In order to incorporate features that extend over multiple patches, shifted and hierarchically scaled patches may be established. On the one hand, Transformer models are known to require large training data sets, but on the other hand, they are able to benefit strongly from additional data. Broessner et al. confirm this when comparing a shifted-window vision transformer to the U-Net [Citation81].
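The transfer of self-attention to images can be sketched in a few lines. This is a bare single-head example under simplifying assumptions: random projection matrices stand in for learned weights, and positional encodings, multiple heads, shifted windows and the hierarchical scaling mentioned above are omitted; the function names are hypothetical.

```python
import numpy as np

def patchify(image, patch):
    """Split a 2D image into non-overlapping patches, one flattened patch per row."""
    h, w = image.shape
    rows, cols = h // patch, w // patch
    blocks = image[:rows * patch, :cols * patch].reshape(rows, patch, cols, patch)
    return blocks.transpose(0, 2, 1, 3).reshape(rows * cols, patch * patch)

def self_attention(tokens, w_q, w_k, w_v):
    """Scaled dot-product attention between all pairs of patches."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)      # numerical stability of the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return attn @ v, attn

rng = np.random.default_rng(0)
image = rng.random((32, 32))
tokens = patchify(image, 8)                           # 16 patches with 64 pixels each
w_q, w_k, w_v = (0.1 * rng.normal(size=(64, 16)) for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
```

Every row of `attn` holds the relevance of all 16 patches for one query patch, so each output token already depends on the entire image after a single layer.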

3.2. Bone model completion

All publications on ultrasound-based bone modeling reviewed in this work rely on statistical shape models (SSM) or similar approaches, typically built from CT image data bases. For a detailed description of the basic concept, we refer the reader to Heimann et al. [Citation25]. In general, the SSM has two components, the mean shape and the modes matrix. The model can adapt to any shape used in the training data by adding a linear combination of the modes, with one weight per mode, to the mean shape.

Apart from adapting the shape, all methods involve some kind of pose registration, either simultaneously or alternating with the shape adaptation. As the model can only adapt its shape within the statistical limits of the data base used for its construction, some methods involve a final free-form deformation scheme.
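The two SSM components and the linear shape generation can be sketched via a principal component analysis. The snippet assumes that the training shapes are already aligned and in point-to-point correspondence and are stored as flattened coordinate vectors; random vectors serve as stand-in training data, and the function names `build_ssm` and `instantiate` are hypothetical.

```python
import numpy as np

def build_ssm(shapes):
    """shapes: (n_shapes, n_points * dim) array of corresponding, aligned training shapes."""
    mean = shapes.mean(axis=0)
    _, s, vt = np.linalg.svd(shapes - mean, full_matrices=False)
    modes = vt.T                                   # columns are the modes of variation
    variances = s**2 / (shapes.shape[0] - 1)       # variance explained by each mode
    return mean, modes, variances

def instantiate(mean, modes, weights):
    """Shape = mean shape + linear combination of the first len(weights) modes."""
    return mean + modes[:, :len(weights)] @ weights

rng = np.random.default_rng(0)
shapes = rng.normal(size=(10, 30))                 # 10 toy shapes with 10 3D points each
mean, modes, variances = build_ssm(shapes)
b = modes.T @ (shapes[3] - mean)                   # weights that reproduce training shape 3
recon = instantiate(mean, modes, b)
```

Using all modes reproduces any training shape exactly; in practice the weights are usually restricted, for example to a few standard deviations per mode, which is the statistical limit mentioned above.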

3.2.1. Correspondence-based

In order to find the weights that adapt the model to the incomplete patient geometry, a mismatch function needs to be defined. This can be achieved by computing the squared distances between corresponding points. As the mapping from model to patient geometry is unknown, most publications define the nearest neighbors as the corresponding points [Citation82–84]. To adopt an image-based correspondence search, one can search along the vertex normal for high intensity pixels in either the original ultrasound image or the segmented one [Citation70,Citation85,Citation86]. For optimization of the mismatch function, the system of linear equations needs to be solved, e.g. by a QR decomposition.
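A minimal sketch of a correspondence-based fit is given below. It assumes that pose is already registered, uses brute-force nearest neighbors as correspondences and solves the resulting linear least-squares problem with a QR decomposition; the function name, array layout and toy model are assumptions for illustration and do not reproduce a specific cited method.

```python
import numpy as np

def fit_ssm_to_partial(mean, modes, target, n_iter=5):
    """mean: (N, 3); modes: (N, 3, M); target: (K, 3) partial surface points."""
    n_modes = modes.shape[-1]
    b = np.zeros(n_modes)
    for _ in range(n_iter):
        model = mean + modes @ b                                   # current instance, (N, 3)
        # Correspondence: nearest model vertex for every target point.
        d2 = ((target[:, None, :] - model[None, :, :]) ** 2).sum(axis=-1)
        idx = d2.argmin(axis=1)
        # Minimize the squared distances: modes[idx] b ~= target - mean[idx], via QR.
        A = modes[idx].reshape(-1, n_modes)
        y = (target - mean[idx]).reshape(-1)
        q, r = np.linalg.qr(A)
        b = np.linalg.solve(r, q.T @ y)
    return b

# Toy model: 5 x 5 grid of vertices with two small random modes of variation.
rng = np.random.default_rng(0)
mean = np.array([[i, j, 0.0] for i in range(5) for j in range(5)])
modes = 0.02 * rng.normal(size=(25, 3, 2))
b_true = np.array([1.0, -0.5])
target = (mean + modes @ b_true)[:10]              # only 10 of 25 vertices are 'visible'
b_est = fit_ssm_to_partial(mean, modes, target)
```

Although only a part of the surface is observed, the estimated weights determine the complete model instance, including the occluded vertices.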

3.2.2. Gaussian-mixture-model fitting

In a different approach, a Gaussian-Mixture-Model (GMM) is built from the model point cloud, where the vertices define the centroids of the GMM. Then the model is fitted to the incomplete patient geometry by assuming it was sampled from the GMM. This is similar to the Coherent Point Drift algorithm [Citation87], and eliminates the need to define correspondences. The target points may also be weighted according to their strength in the segmentation process. Model fitting may involve an expectation-maximization scheme [Citation88], a quasi-Newton optimization [Citation89,Citation90] or a covariance matrix adaptation evolution strategy [Citation91].

3.2.3. Ultrasound simulation and correlation

Another measure that can be optimized is the correlation of a simulated ultrasound image with the recorded ultrasound image. For this, the current instance of the SSM is sliced according to the current transformation, and an ultrasound image is simulated. During simulation, rays are cast in the direction of the simulated ultrasound wave to find intersection points with the model, which cast a bone shadow. By this process, one can eliminate the need for a segmentation, but needs to replace it with a simulation. Ghanavati et al. simultaneously optimize shape and pose by minimizing Linear Correlation by Linear Combination. Their ultrasound image simulation includes a gray gradient image as background [Citation92,Citation93]. Khallaghi et al., in contrast, alternate between optimizing shape and pose. This reduces the number of parameters optimized simultaneously and speeds up the computation. By moving this highly parallelizable process to the GPU, computation time is again reduced. Furthermore, instead of point clouds or meshes they use a data base of CT images to build the SSM [Citation94].

4. Results

Tables 2 and 3 give an overview of all publications regarding segmentation and 3D bone modeling, respectively. The remainder of this paper is structured as follows. We aggregate the publications according to different aspects to provide insight into the state of the art. Subsequently, we report quantitative evaluations and discuss their limitations. Finally, we suggest directions for future research.

Table 2. Overview of the publications addressing segmentation.

Table 3. Summary of publications on 3D bone model completion. Algorithms categorized according to the methods section.

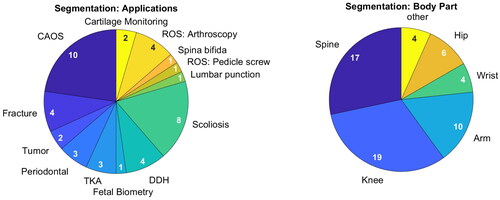

Figure 4 (left) shows the different medical applications the publications address. Several rather technical papers do not mention a specific application and are summarized under ‘CAOS’. Of those that do state an application, diagnosis of Scoliosis and Developmental Dysplasia of the Hip (DDH) are most common. Further diagnostic applications include fractures, bone tumors, periodontal tissue assessment and cartilage monitoring, among others. Finally, implant planning in TKA and robotic surgery complete the list. Figure 4 (right) shows the body parts involved during either training or testing. Here, knee and spine represent two thirds of all applications. Other relevant body parts are the hip, arm and wrist.

Figure 4. Addressed medical application (left) and targeted body parts (right) of publications on segmentation. Publications can have multiple mentions.

These findings demonstrate the wide range of medical applications that can benefit from ultrasound imaging.

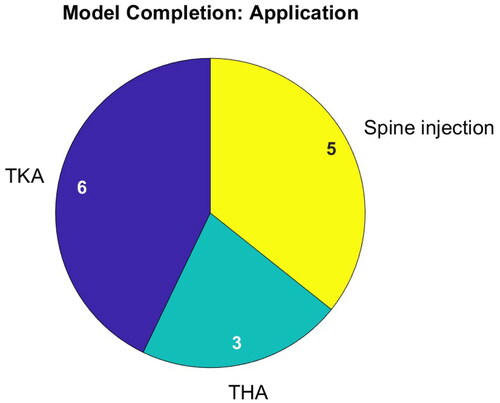

Figure 5 presents the applications addressed in publications on bone model completion. Only three applications are addressed, involving surgery of the hip, knee and spine. In TKA, patient-specific bone models are sought for implant planning. In THA, the local bone coordinate system is searched for to determine the correct cup position. Note that in surgery of the spine, solely epidural needle placement is addressed and that there are no publications on pedicle screw placement.

Depending on the application, the proportion of accessible bone surface varies strongly, but no publication reports a quantitative evaluation in this regard. In TKA, just the articulating surfaces in the joint gap need to be determined by the bone model. All THA related publications model the pelvis on the basis of just three patches, located at the iliac crest and pubic symphysis. These patches cover only a very small portion of the pelvis. Similarly, during spine imaging, just the posterior surface of the vertebral bodies is visible. Furthermore, they overlap.

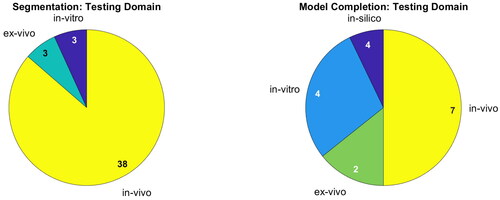

Most publications on segmentation are developed for and evaluated on in-vivo data. For 3D bone modeling, half of the publications are yet to be tested on in-vivo data. Evaluation under laboratory conditions of course differs from the actual scenario. See Figure 6 for a detailed overview. The medical applications and testing domain demonstrate that ultrasound segmentation is a well-developed field of research, which does not hold true for bone model completion.

The data set sizes differ strongly, ranging from a few dozen images up to 20,000. In total, almost 130,000 images were annotated just in recent years. This assumes disjoint data sets. Furthermore, the number of slices for some volume images is based on estimates.

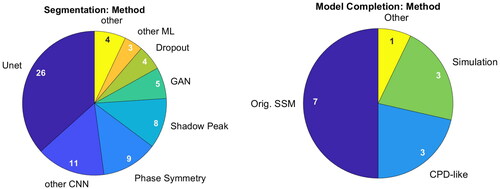

Figure 7 shows the adopted methods for segmentation and bone model completion. For segmentation, all but four publications rely on deep learning algorithms. Seventeen works incorporate variants of Shadow Peak and Phase Symmetry into their hybrid method, but CNNs dominate this chart.

Figure 7. Methods applied for segmentation (left) and bone model completion (right). Publications can have multiple mentions.

Categorization of bone model completion algorithms is not as straightforward. All published methods involve statistical shape models and none include machine learning. While seven utilize a direct correspondence-based optimization, three follow a GMM-like approach. Three simulate ultrasound images to establish a common framework and one paper does not reveal its algorithm. Accordingly, correspondence-based optimization is the most active research direction.

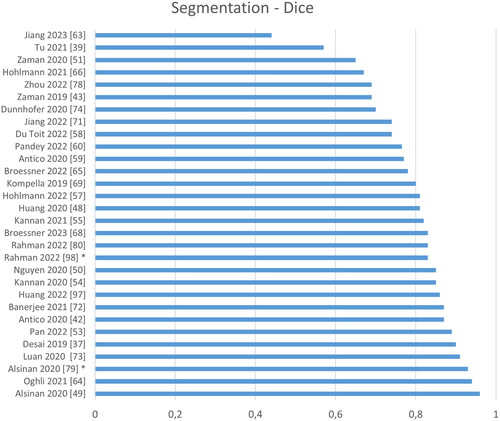

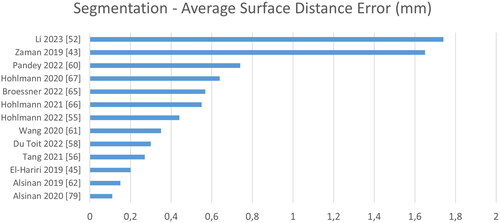

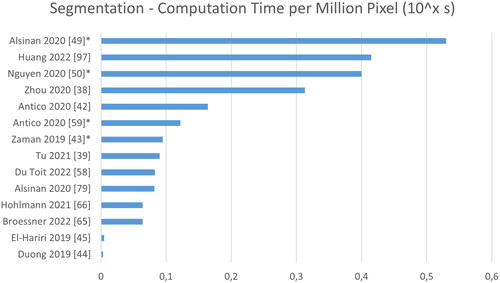

Figures 8, 9 and 10 present a ranking regarding quantitative performance in terms of Dice, average surface distance error (SDE) and computation time. We normalize the reported processing times by the number of pixels to account for varying image sizes. In case the image size is not reported, a default value of 256 × 256 pixels is assumed. Overall, a rather poor performance is reported in terms of Dice when compared to other medical imaging tasks, with 0.79 on average and 0.95 being the highest value. In terms of surface distance error, however, the performance is surprisingly good, with 0.59 mm on average and 0.11 mm being the lowest value. Regarding processing speed, extremely large differences of a factor of 150 can be found, but real-time processing, i.e. processing of images at 25 Hz, is frequently achieved.

Figure 8. Quantitative performance analysis regarding segmentation. Note that the Dice metric differs strongly depending on the size of the object: Segmenting the bone shadow (marked by asterisk) significantly improves the Dice compared to segmenting just the bone response.

Figure 9. Quantitative performance analysis regarding segmentation. Note that the metric differs strongly depending on its definition: the surface distance error can be either directed or symmetric, computed on point clouds or surface meshes and either the absolute or the root-mean-square distance.

Figure 10. Analysis of computation time normalized to time per 1,000,000 pixels. Note that some image sizes are based on estimates (marked by *), and that these times were reported for varying hardware and software.
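The pixel normalization described above amounts to simple arithmetic. The following minimal sketch assumes processing times given in milliseconds per image; the function name and the example values are hypothetical and not taken from any of the reviewed publications.

```python
# Fallback image size used when a publication does not report one.
DEFAULT_SHAPE = (256, 256)


def time_per_megapixel(time_ms, shape=None):
    """Normalize a per-image processing time to ms per 1,000,000 pixels."""
    height, width = shape if shape is not None else DEFAULT_SHAPE
    return time_ms / (height * width) * 1_000_000


# Hypothetical reports: (time per image in ms, image size or None if unreported).
reports = {"method A": (40.0, (500, 500)), "method B": (13.0, None)}
normalized = {name: time_per_megapixel(t, s) for name, (t, s) in reports.items()}

# Rank the methods from fastest to slowest after normalization.
for name, value in sorted(normalized.items(), key=lambda item: item[1]):
    print(f"{name}: {value:.1f} ms per 1,000,000 pixels")
```

In this made-up example, the method with the lower raw time per image ranks as slower after normalization, because its unreported image size falls back to the small default.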

A fair quantitative evaluation of the segmentation accuracy of the proposed methods is not possible based on the reviewed publications. This would require common test sets and identical metric definitions and evaluation scripts. In the current state, the evaluation procedures vary greatly. Furthermore, for distance-based metrics, even the definitions are inconsistent, e.g. by being either directional or symmetric, by computing the distance to a point cloud or a surface mesh or by computing the absolute or the root-mean-squared error. These variations have a huge influence on the evaluation but are rarely reported. Performance in terms of processing time suffers from similar shortcomings, as varying soft- and hardware renders direct comparison impossible.

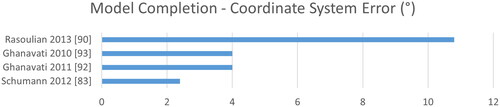

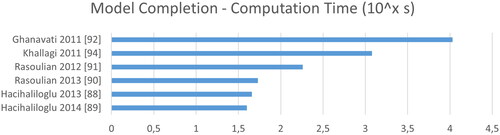

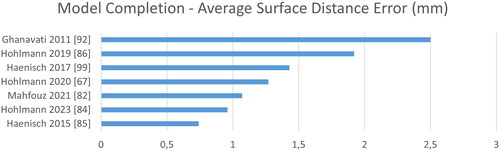

Regarding 3D bone modeling, a comparison becomes even harder, as various metrics are adopted. While Dice is not reported at all, surface distances are complemented with target registration errors (TRE) and coordinate-system (CoS) mismatch. As image sizes are rarely reported for 3D applications, we refrain from normalizing the computation time by the number of pixels. The accompanying figures, among them Figures 11 and 12, rank the methods according to time, CoS, TRE and SDE. Overall, we see larger errors, mostly above 1 mm, and much slower computation, in the range of minutes instead of milliseconds.

Figure 11. Quantitative evaluation of bone model completion algorithms regarding the average surface distance error in mm.

Figure 12. The target registration error describes the deviation of the computed transformation from the ground truth transformation.

5. Challenges and trends

Over recent years, a clear trend toward machine learning can be noticed. A number of publications compare traditional to machine learning methods and independently confirm the superiority of the latter in terms of robustness, accuracy and processing time [Citation39,Citation45,Citation56,Citation62,Citation79], although some show benefits of hybrid approaches. Presumably, the trend toward machine learning will continue. However, transferring this success from research into everyday clinical use will bring great challenges. Machine learning models resemble ‘black boxes’, yet verification, transparency and interpretability of these models are of high importance in clinical application. Few works address this problem by analyzing the model uncertainty [Citation54,Citation55,Citation59,Citation60]. Furthermore, domain shifts have proved to be an issue, especially when working on small data sets. While the scientific community has annotated a huge number of images, these are not published along with the papers. In terms of reported accuracy, this is not much of an issue, as the test data closely resembles the training data in most cases. However, it strongly limits our ability to build models that generalize well. Most publications do not employ separate validation and test sets. Some utilize cross-validation, but for the remaining models, hyperparameters are tuned on the data they are tested on. While this improves the apparent accuracy, it limits the generalizability.
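One way to keep hyperparameter tuning away from the test data is to fix three disjoint partitions before any training starts. The sketch below is only an illustration under our own assumptions: it splits at subject level, so that images of one subject never appear in two partitions, and the function name, fractions and subject identifiers are hypothetical.

```python
import random


def split_subjects(subject_ids, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Split subject IDs into disjoint train, validation and test lists."""
    ids = sorted(set(subject_ids))
    random.Random(seed).shuffle(ids)  # deterministic shuffle for reproducibility
    n_test = max(1, round(len(ids) * test_fraction))
    n_val = max(1, round(len(ids) * val_fraction))
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


# Hypothetical cohort of 20 subjects.
subjects = [f"subject_{i:02d}" for i in range(20)]
train, val, test = split_subjects(subjects)
print(len(train), len(val), len(test))
```

Hyperparameters would then be tuned on the validation partition only, and the test partition would be evaluated once at the very end.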

Currently, there is no consensus on the best metrics for validation of either segmentation or bone model completion algorithms. Intersection-based metrics like the Dice metric struggle with thin bone surface annotations: even small shifts can cause a significant drop in the metric. To counter this problem, some authors dilate the annotations and predictions, which improves the apparent performance but hampers comparability. One advantage of intersection-based metrics over surface distance-based ones is their differentiability: one can define a loss function approximating the Dice, so that the model is optimized directly on its actual task, improving its accuracy. Furthermore, the Dice can be computed for images that do not show bones, which allows false positive segmentations to be quantified.
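The sensitivity of the Dice metric to thin annotations, the effect of dilation and a Dice-approximating loss can be illustrated with a toy example. This is a minimal sketch under simplifying assumptions: masks are sets of (row, column) pixel coordinates, dilation acts along the image rows only, the soft Dice loss is one common formulation rather than that of a specific reviewed paper, and all names and values are hypothetical.

```python
def dice(a, b):
    """Dice coefficient of two pixel sets; defined as 1.0 if both are empty."""
    if not a and not b:
        return 1.0
    return 2.0 * len(a & b) / (len(a) + len(b))


def dilate_vertically(mask, radius):
    """Thicken a mask by `radius` pixels upward and downward."""
    return {(r + dr, c) for (r, c) in mask for dr in range(-radius, radius + 1)}


def soft_dice_loss(probabilities, targets, eps=1e-6):
    """Loss approximating 1 - Dice on flat lists of probabilities and labels."""
    intersection = sum(p * t for p, t in zip(probabilities, targets))
    return 1.0 - (2.0 * intersection + eps) / (sum(probabilities) + sum(targets) + eps)


# A one-pixel-thick bone surface and a prediction shifted by a single pixel.
annotation = {(50, c) for c in range(100)}
prediction = {(51, c) for c in range(100)}

dice_thin = dice(annotation, prediction)
dice_dilated = dice(dilate_vertically(annotation, 1), dilate_vertically(prediction, 1))
print(f"thin: {dice_thin:.2f}, dilated: {dice_dilated:.2f}")
```

A shift of one pixel removes all overlap of the thin surfaces, whereas the dilated masks still share two of their three rows. This is why dilated and undilated Dice values cannot be compared with each other.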

For surface distance-based metrics, the directionality varies between publications: distances are measured from prediction to ground truth or vice versa. Yet, in order to detect overcomplete (false positive) as well as undercomplete (false negative) segmentations, the metric needs to be symmetric. Other variants found in the literature include measuring the distance along the scanline direction only, or even ignoring distances above 1 mm, which artificially improves the apparent performance. In some cases, the root-mean-squared surface distance error is reported. This penalizes large errors more strongly but hampers interpretability. When bone is visible in only one of the image and the reference, the surface distance becomes undefined. This is especially a problem when evaluating 2D data, where empty image slices are frequent. Evaluating on volumetric images, even for 2D models, may solve this problem. In contrast to intersection-based metrics, surface distance errors are easy to interpret and thus facilitate communication with medical personnel.
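The difference between directed, symmetric and root-mean-squared variants can be made concrete on point clouds. The following is a minimal sketch, assuming surfaces given as lists of coordinate tuples in millimeters and a brute-force nearest-neighbor search; it follows one common definition of these distances, the function names are hypothetical, and the toy data is made up.

```python
import math


def directed_distances(source, target):
    """Distance from every source point to its nearest target point."""
    if not source or not target:
        raise ValueError("surface distance is undefined for an empty point set")
    return [min(math.dist(s, t) for t in target) for s in source]


def mean_directed(source, target):
    """Average distance in one direction only."""
    distances = directed_distances(source, target)
    return sum(distances) / len(distances)


def mean_symmetric(a, b):
    """Average over the distances of both directions."""
    distances = directed_distances(a, b) + directed_distances(b, a)
    return sum(distances) / len(distances)


def rms_symmetric(a, b):
    """Root-mean-squared variant, which weights large errors more strongly."""
    distances = directed_distances(a, b) + directed_distances(b, a)
    return math.sqrt(sum(d * d for d in distances) / len(distances))


# Toy data: the prediction covers only half of the reference surface
# (undercomplete segmentation) and is offset by 1 mm.
reference = [(float(x), 0.0) for x in range(10)]
prediction = [(float(x), 1.0) for x in range(5)]

print(f"prediction -> reference: {mean_directed(prediction, reference):.2f} mm")
print(f"reference -> prediction: {mean_directed(reference, prediction):.2f} mm")
print(f"symmetric mean: {mean_symmetric(prediction, reference):.2f} mm")
print(f"symmetric RMS:  {rms_symmetric(prediction, reference):.2f} mm")
```

The distance directed from prediction to reference does not register the missing half of the surface, while the symmetric variants do. An empty point set raises an error, mirroring the undefined case of slices without bone.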

In summary, a single comprehensive metric is yet to be found. Pandey et al. [Citation24] recommend reporting at least one distance-based and one overlap-based metric, potentially along with other, task-specific metrics. Given the highly variable definitions of the metrics discussed above, this is not sufficient from our point of view. Instead, for evaluating overall segmentation quality, we recommend reporting the average symmetric surface distance on volume images containing at least one bone fragment. This metric combines all relevant aspects into a single, easily interpretable scalar. Furthermore, it is affected by both false positive and false negative segmentations. This allows for direct comparison of algorithms by a single metric. Because it is computed in 3D, the surface distance is defined in all cases, even if some slices do not contain bone surfaces. This mandatory metric can be supplemented with Dice or application-specific metrics. Any high-quality analysis of a segmentation algorithm should also investigate individual aspects separately, e.g. the tendency toward false positive or false negative segmentation, for instance by examining directed distances. Note that the symmetric surface distance error (SSDE) does not reflect computational efficiency, which should be evaluated separately, too.

In accordance with Pandey et al., we think that a common benchmark providing a shared training set as well as a unified evaluation is essential for a fair comparison of algorithms. We want to promote their attempt to build such a benchmark [Citation100].

For the aforementioned reasons, we cannot recommend one method over another for either segmentation or bone model completion. Taking a closer look at the three best-performing segmentation algorithms in terms of mean surface distance error, their respective data sets appear to be small (1000 images or less) and the reported error is directed instead of symmetric [Citation49,Citation62,Citation79]. Still, we can rule out purely traditional methods and see a slight advantage for hybrid ones. However, some of these require heavy computational effort. For bone model completion, the two best-performing methods are either evaluated in vitro or do not publish the underlying algorithm [Citation82,Citation85].

The ultrasound vision community heavily relies on a single convolutional neural network architecture: the U-Net. This is in sharp contrast to the non-medical domain. Direct comparisons of architectures developed for the medical and non-medical domains are rare. Considering high-quality semantic segmentation challenges like ADE20k [Citation101], we see impressive performance gains with architectures that do not follow the U-Net concept of a ‘fully convolutional encoder-decoder with skip connections’. We hypothesize that great potential lies in a better scholarly exchange between the general and ultrasound vision communities. For example, the vision transformer has proven its capabilities on non-medical data. While a first analysis did not show any advantage over CNNs on a small-scale data set [Citation81], future research may benefit from additional data published by the medical ultrasound research community [Citation100]. Furthermore, cross-modal models may utilize CT and MRI images. Coming back to ADE20k, the current leading model ‘ONE-PEACE’ builds not only on images, but also on audio and text.

Regarding 3D bone modeling, the total number of publications is rather small. Also, in contrast to publications on segmentation, only half of the evaluations are performed in vivo. Although it has been discussed for decades, the topic receives little attention. Even worse, there has been little to no investigation of the effect of different segmentation strategies on the final bone model. While Hacihaliloglu et al. [Citation88,Citation89] find their proposed monogenic filters to be superior to Phase Symmetry and Shadow Peak-based segmentation, no such investigation has been performed for machine learning methods. The tradeoff between a lower coverage of the bone model and a more reliable segmentation remains unknown. Future research could build upon the segmentation uncertainty provided by ensemble models or by models using dropout. Alternatively, increasing or decreasing the segmentation threshold constitutes an even simpler experimental setup that is compatible with all presented segmentation algorithms. The final threshold may itself be subject to machine learning and adapted locally, too.
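The threshold experiment suggested above could start from a simple sweep that reports, for each threshold, how much of the true bone surface is retained and how reliable the retained pixels are. The sketch below is a toy illustration under our own assumptions: per-pixel bone probabilities and ground truth labels are given as flat lists, coverage is taken as the fraction of true bone pixels kept, reliability as the fraction of kept pixels that are true bone, and all names and values are hypothetical. It does not yet evaluate the effect on the completed bone model.

```python
def sweep_thresholds(probabilities, is_bone, thresholds):
    """Return (threshold, coverage, reliability) for each threshold."""
    n_bone = sum(is_bone)
    results = []
    for threshold in thresholds:
        kept = [label for p, label in zip(probabilities, is_bone) if p >= threshold]
        true_positives = sum(kept)
        coverage = true_positives / n_bone if n_bone else 0.0
        # Convention chosen here: an empty selection counts as fully reliable.
        reliability = true_positives / len(kept) if kept else 1.0
        results.append((threshold, coverage, reliability))
    return results


# Made-up network outputs and ground truth labels (1 = bone surface pixel).
probabilities = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
is_bone = [1, 1, 1, 0, 1, 0, 1, 0]

results = sweep_thresholds(probabilities, is_bone, [0.25, 0.50, 0.75])
for threshold, coverage, reliability in results:
    print(f"threshold {threshold:.2f}: coverage {coverage:.2f}, reliability {reliability:.2f}")
```

In this toy data, raising the threshold lowers the coverage while the reliability of the remaining pixels increases, which is the tradeoff whose influence on the final bone model remains to be studied.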

Although it receives little attention, bone model completion is crucial to enable modeling of joints using ultrasound. With this paper, we want to promote future research on this topic by providing an up-to-date review. Most importantly, all of the reviewed publications rely on statistical shape models and none employ machine learning algorithms. We hypothesize that the success of point-based models in the non-medical domain can be transferred to the medical domain, similar to the task of segmentation. The Completion3D data set constitutes one example for which the surface distance error was halved in recent years [Citation102]. Although it consists of man-made objects that differ from natural geometry, the advances of point-based models may be transferred to the medical domain. This appears especially probable as the underlying architecture of the best-performing GRNet (at the time of publication) utilizes 3D convolutions that also proved to be effective in medical image processing [Citation103].

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Baraza N, Chapman C, Zakani S, et al. 3D – Printed patient specific instrumentation in corrective osteotomy of the femur and pelvis: a review of the literature. 3D Print Med. 2020;6(1):34. doi: 10.1186/s41205-020-00087-0.

- Evrard R, Schubert T, Paul L, et al. Quality of resection margin with patient specific instrument for bone tumor resection. J Bone Oncol. 2022;34:100434. doi: 10.1016/j.jbo.2022.100434.

- Wong KC. CAOS in bone tumor surgery. In: Sugano N., editor, Computer assisted orthopaedic surgery for hip and knee: current state of the art in clinical application and basic research. Singapore: Springer; 2018. pp. 157–169.

- Radermacher K, Portheine F, Anton M, et al. Computer assisted orthopaedic surgery with image based individual templates. Clin Orthop Relat Res. 1998;354(354):28–38. doi: 10.1097/00003086-199809000-00005.

- Renson L, Poilvache P, van den Wyngaert H. Improved alignment and operating room efficiency with patient-specific instrumentation for TKA. Knee. 2014;21(6):1216–1220. doi: 10.1016/j.knee.2014.09.008.

- Meng M, Wang J, Sun T, et al. Clinical applications and prospects of 3D printing guide templates in orthopaedics. J Orthop Translat. 2022;34:22–41. doi: 10.1016/j.jot.2022.03.001.

- Asseln M, Grothues SAGA, Radermacher K. Relationship between the form and function of implant design in total knee replacement. J Biomech. 2021;119:110296. doi: 10.1016/j.jbiomech.2021.110296.

- Culler SD, Martin GM, Swearingen A. Comparison of adverse events rates and hospital cost between customized individually made implants and standard off-the-shelf implants for total knee arthroplasty. Arthroplast Today. 2017;3(4):257–263. doi: 10.1016/j.artd.2017.05.001.

- Brinkmann EJ, Fitz W. Custom total knee: understanding the indication and process. Arch Orthop Trauma Surg. 2021;141(12):2205–2216. doi: 10.1007/s00402-021-04172-9.

- Pietrzak JR, Rowan FE, Kayani B, et al. Preoperative CT-based three-dimensional templating in Robot-assisted total knee arthroplasty more accurately predicts implant sizes than two-dimensional templating. J Knee Surg. 2019;32(7):642–648. doi: 10.1055/s-0038-1666829.

- Twiggs JG, Wakelin EA, Roe JP, et al. Patient-specific simulated dynamics after total knee arthroplasty correlate with patient-reported outcomes. J Arthroplasty. 2018;33(9):2843–2850. doi: 10.1016/j.arth.2018.04.035.

- Fischer MCM, Tokunaga K, Okamoto M, et al. Implications of the uncertainty of postoperative functional parameters for the preoperative planning of total hip arthroplasty. Journal Orthopaedic Research. 2022;40(11):2656–2662. doi: 10.1002/jor.25291.

- Habor J, Fischer MCM, Tokunaga K, et al. The patient-specific combined target zone for morpho-functional planning of total hip arthroplasty. J Pers Med. 2021;11(8):817. doi: 10.3390/jpm11080817.

- Reimann P, Brucker M, Arbab D, et al. Patient satisfaction – a comparison between patient-specific implants and conventional total knee arthroplasty. J Orthop. 2019;16(3):273–277. doi: 10.1016/j.jor.2019.03.020.

- Chung BJ, Kang JY, Kang YG, et al. Clinical implications of femoral anthropometrical features for total knee arthroplasty in Koreans. J Arthroplasty. 2015;30(7):1220–1227. doi: 10.1016/j.arth.2015.02.014.

- Mahoney OM, Kinsey T. Overhang of the femoral component in total knee arthroplasty: risk factors and clinical consequences. J Bone Joint Surg Am. 2010;92(5):1115–1121. doi: 10.2106/JBJS.H.00434.

- Taubmann O, Berger M, Bögel M, et al. Medical imaging systems: an introductory guide: computed tomography. Cham (CH): Springer; 2018.

- Centers for Medicare and Medicaid Services. Physician Fee Schedule. Available from: https://www.cms.gov/medicare/physician-fee-schedule/search?Y=0&T=4&HT=1&CT=0&H1=74176&H2=72195&H3=76856&M=5.

- Von Haxthausen F, Hagenah J, Kaschwich M, et al. Robotized ultrasound imaging of the peripheral arteries – A phantom study. Current Directions in Biomedical Engineering. 2020;6(1). doi: 10.1515/cdbme-2020-0033.

- Kim DM, Seo J-S, Jeon I-H, et al. Detection of rotator cuff tears by ultrasound: How many scans do novices need to be competent? Clin Orthop Surg. 2021;13(4):513–519. doi: 10.4055/cios20259.

- Pearlman PC, Tagare HD, Sinusas AJ, et al. 3D radio frequency ultrasound cardiac segmentation using a linear predictor. Med Image Comput Comput Assist Interv. 2010;13(Pt 1):502–509. doi: 10.1007/978-3-642-15705-9_61.

- Huang Q, Zeng Z. A review on real-time 3D ultrasound imaging technology. Biomed Res Int. 2017;2017:6027029–6027020. doi: 10.1155/2017/6027029.

- Che C, Mathai TS, Galeotti J. Ultrasound registration: a review. Methods. 2017;115:128–143. doi: 10.1016/j.ymeth.2016.12.006.

- Pandey PU, Quader N, Guy P, et al. Ultrasound bone segmentation: a scoping review of techniques and validation practices. Ultrasound Med Biol. 2020;46(4):921–935. doi: 10.1016/j.ultrasmedbio.2019.12.014.

- Heimann T, Meinzer H-P. Statistical shape models for 3D medical image segmentation: a review. Med Image Anal. 2009;13(4):543–563. doi: 10.1016/j.media.2009.05.004.

- Hacihaliloglu I. 3D ultrasound for orthopedic interventions. Adv Exp Med Biol. 2018;1093:113–129. doi: 10.1007/978-981-13-1396-7_10.

- Hacihaliloglu I. Ultrasound imaging and segmentation of bone surfaces: a review. Technology (Singap World Sci). 2017;5(2):74–80. doi: 10.1142/S2339547817300049.

- Meiburger KM, Acharya UR, Molinari F. Automated localization and segmentation techniques for B-mode ultrasound images: a review. Comput Biol Med. 2018;92:210–235. doi: 10.1016/j.compbiomed.2017.11.018.

- Shin Y, Yang J, Lee YH, et al. Artificial intelligence in musculoskeletal ultrasound imaging. Ultrasonography. 2021;40(1):30–44. doi: 10.14366/usg.20080.

- Morooka K, Nakamoto M, Sato Y. A survey on statistical modeling and machine learning approaches to computer assisted medical intervention: intraoperative anatomy modeling and optimization of interventional procedures. IEICE Trans Inf Syst. 2013;E96.D(4):784–797. doi: 10.1587/transinf.E96.D.784.

- Sarkalkan N, Weinans H, Zadpoor AA. Statistical shape and appearance models of bones. Bone. 2014;60:129–140. doi: 10.1016/j.bone.2013.12.006.

- Pandey P, Guy P, Hodgson AJ, et al. Fast and automatic bone segmentation and registration of 3D ultrasound to CT for the full pelvic anatomy: a comparative study. Int J Comput Assist Radiol Surg. 2018;13(10):1515–1524. doi: 10.1007/s11548-018-1788-5.

- Kumar Jain A, Taylor RH. Understanding bone responses in B-mode ultrasound images and automatic bone surface extraction using a Bayesian probabilistic framework. SPIE; 2004. pp. 131–142.

- Hacihaliloglu I, Abugharbieh R, Hodgson AJ, et al. 2A-4 enhancement of bone surface visualization from 3D ultrasound based on local phase information. IEEE Ultrasonics Symposium, 2006: 2–6 October 2006, [Vancouver, Canada]. Piscataway (NJ): IEEE Operations Center; 2006. pp. 21–24.

- Quader N, Hodgson AJ, Mulpuri K, et al. Automatic evaluation of scan adequacy and dysplasia metrics in 2-D ultrasound images of the neonatal hip. Ultrasound Med Biol. 2017;43(6):1252–1262. doi: 10.1016/j.ultrasmedbio.2017.01.012.

- Quader N, Hodgson AJ, Mulpuri K, et al. 3-D ultrasound imaging reliability of measuring dysplasia metrics in infants. Ultrasound Med Biol. 2021;47(1):139–153. doi: 10.1016/j.ultrasmedbio.2020.08.008.

- Desai P, Hacihaliloglu I. Knee-Cartilage segmentation and thickness measurement from 2D ultrasound. J Imaging. 2019;5(4):43. doi: 10.3390/jimaging5040043.

- Zhou G-Q, Li D-S, Zhou P, et al. Automating spine curvature measurement in volumetric ultrasound via adaptive phase features. Ultrasound Med Biol. 2020;46(3):828–841. doi: 10.1016/j.ultrasmedbio.2019.11.012.

- Tu SJ, Morel J, Chen M, et al. Fast automatic bone surface segmentation in ultrasound images without machine learning. In Papiez BW, Yaqub M, Jiao J, Noble JA, Namburete AIL, editors. Medical Image Understanding and Analysis: 25th Annual Conference, MIUA 2021, Oxford, United Kingdom, July 12–14, 2021, proceedings. Cham: Springer; 2021. pp. 250–264.

- Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Pereira F, Burges CJ, Bottou L, Weinberger KQ, editors. Advances in neural information processing systems. Red Hook: Curran Associates, Inc.; 2012.

- Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In Hornegger J, editor. Medical image computing and computer-assisted intervention – MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III. Cham: Springer International Publishing AG; 2015. pp. 234–241.

- Antico M, Sasazawa F, Dunnhofer M, et al. Deep learning-based femoral cartilage automatic segmentation in ultrasound imaging for guidance in robotic knee arthroscopy. Ultrasound Med Biol. 2020;46(2):422–435. doi: 10.1016/j.ultrasmedbio.2019.10.015.

- Zaman A, Park SH, Miguel L, et al. Real-time 3D ultrasound bone model reconstruction and its registration with MR bone model for localization of intramedullary cystic Bone Lesion; 2019.

- Duong DQ, Nguyen K-CT, Kaipatur NR, et al. Fully automated segmentation of alveolar bone using deep convolutional neural networks from intraoral ultrasound images. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual International Conference; 2019. pp. 6632–6635. doi: 10.1109/EMBC.2019.8857060.

- El-Hariri H, Mulpuri K, Hodgson A, et al. Comparative evaluation of Hand-Engineered and Deep-Learned features for neonatal hip bone segmentation in ultrasound. In Shen D, editor, Medical image computing and computer assisted intervention – MICCAI 2019: 22nd international conference, Shenzhen, China, October 13–17, 2019, proceedings, Cham: Springer International Publishing; 2019. pp. 12–20.

- Ungi T, Greer H, Sunderland KR, et al. Automatic spine ultrasound segmentation for scoliosis visualization and measurement. IEEE Trans Biomed Eng. 2020;67(11):3234–3241. doi: 10.1109/TBME.2020.2980540.

- Banerjee S, Ling SH, Lyu J, et al. Automatic segmentation of 3D ultrasound spine curvature using convolutional neural network. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual International Conference; 2020. pp. 2039–2042.

- Huang Z, Wang L-W, Leung FHF, et al. Bone feature segmentation in ultrasound spine image with robustness to speckle and regular occlusion noise. 2020 IEEE International Conference on Systems, Man, and Cybernetics: Toronto, Canada, October 11-14, 2020. Piscataway (NJ): IEEE; 2020 pp. 1566–1571.

- Alsinan AZ, Rule C, Vives M, et al. GAN-Based realistic bone ultrasound image and label synthesis for improved segmentation. Cham: Springer; 2020. pp. 795–804.

- Nguyen KCT, Duong DQ, Almeida FT, et al. Alveolar bone segmentation in intraoral ultrasonographs with machine learning. J Dent Res. 2020;99(9):1054–1061. doi: 10.1177/0022034520920593.

- Zaman A, Park SH, Bang H, et al. Generative approach for data augmentation for deep learning-based bone surface segmentation from ultrasound images. Int J Comput Assist Radiol Surg. 2020;15(6):931–941. doi: 10.1007/s11548-020-02192-1.

- Li R, Davoodi A, Cai Y, et al. Robot-assisted ultrasound reconstruction for spine surgery: from bench-top to pre-clinical study. Int J Comput Assist Radiol Surg. 2023;18(9):1613–1623. doi: 10.1007/s11548-023-02932-z.

- Pan Y-C, Chan H-L, Kong X, et al. Multi-class deep learning segmentation and automated measurements in periodontal sonograms of a porcine model. Dento Maxillo Facial Radiol. 2022;51(3):20210363.

- Kannan A, Hodgson A, Mulpuri K, et al. Uncertainty estimation for assessment of 3D US scan adequacy and DDH metric reliability. In Sudre CH, editor. Uncertainty for safe utilization of machine learning in medical imaging, and graphs in biomedical image analysis: second international workshop, UNSURE 2020, and third international workshop, GRAIL 2020, held in conjunction with MICCAI 2020, Lima, Peru, October 8, 2020 proceedings. Cham: Springer; 2020. p. 97–105.

- Kannan A, Hodgson A, Mulpuri K, et al. Leveraging voxel-wise segmentation uncertainty to improve reliability in assessment of paediatric dysplasia of the hip. Int J Comput Assist Radiol Surg. 2021;16(7):1121–1129. doi: 10.1007/s11548-021-02389-y.

- Tang S, Yang X, Shajudeen P, et al. A CNN-based method to reconstruct 3-D spine surfaces from US images in vivo. Med Image Anal. 2021;74:102221. doi: 10.1016/j.media.2021.102221.

- Hohlmann B, Brößner P, Radermacher K. CNN based 2D vs. 3D segmentation of bone in ultrasound images. EasyChair; 2022. pp. 116–110.

- Du Toit C, Orlando N, Papernick S, et al. Automatic femoral articular cartilage segmentation using deep learning in three-dimensional ultrasound images of the knee. Osteoarthr Cartil Open. 2022;4(3):100290. doi: 10.1016/j.ocarto.2022.100290.

- Antico M, Sasazawa F, Takeda Y, et al. Bayesian CNN for segmentation uncertainty inference on 4D ultrasound images of the femoral cartilage for guidance in robotic knee arthroscopy. IEEE Access. 2020;8:223961–223975. doi: 10.1109/ACCESS.2020.3044355.

- Pandey PU, Guy P, Hodgson AJ. Can uncertainty estimation predict segmentation performance in ultrasound bone imaging? Int J CARS. 2022;17(5):825–832. doi: 10.1007/s11548-022-02597-0.

- Wang P, Vives M, Patel VM, et al. Robust real-time bone surfaces segmentation from ultrasound using a local phase tensor-guided CNN. Int J Comput Assist Radiol Surg. 2020;15(7):1127–1135. doi: 10.1007/s11548-020-02184-1.

- Alsinan AZ, Patel VM, Hacihaliloglu I. Automatic segmentation of bone surfaces from ultrasound using a filter-layer-guided CNN. Int J Comput Assist Radiol Surg. 2019;14(5):775–783. doi: 10.1007/s11548-019-01934-0.

- Jiang B, Xu K, Moghekar A, et al. Feature-aggregated spatiotemporal spine surface estimation for wearable patch ultrasound volumetric imaging. In Medical imaging 2023: ultrasonic imaging and tomography: 22–23 February 2023, San Diego, California, United States. Bellingham, Washington, USA: SPIE; 2023. p. 23. doi: 10.1117/12.2653114.

- Ghelich Oghli M, Shabanzadeh A, Moradi S, et al. Automatic fetal biometry prediction using a novel deep convolutional network architecture. Phys Med. 2021;88:127–137. doi: 10.1016/j.ejmp.2021.06.020.

- Broessner P, Hohlmann B, Radermacher K. Ultrasound-based navigation of scaphoid fracture surgery. In Maier-Hein KH, Deserno TM, Handels H, Maier A, Palm C, Tolxdorff T, editors. Bildverarbeitung für die Medizin 2022: proceedings, german workshop on medical image computing, Heidelberg, June 26–28, 2022. Wiesbaden, Heidelberg: Springer Vieweg; 2022. p. 28–33.

- Hohlmann B, Brößner P, Welle K, et al. Segmentation of the scaphoid bone in ultrasound images. Curr Dir Biomed Eng. 2021;7(1):76–80. doi: 10.1515/cdbme-2021-1017.

- Hohlmann B, Glanz J, Radermacher K. Segmentation of the distal femur in ultrasound images. Curr Dir Biomed Eng. 2020;6(1):34. doi: 10.1515/cdbme-2020-0034.

- Brosner P, Hohlmann B, Welle K, et al. Ultrasound-based registration for the computer-assisted navigated percutaneous scaphoid fixation. IEEE Trans Ultrason Ferroelectr Freq Control. 2023;70(9):1064–1072. doi: 10.1109/TUFFC.2023.3291387.

- Kompella G, Antico M, Sasazawa F, et al. Segmentation of femoral cartilage from knee ultrasound images using mask R-CNN. Annual international conference of the IEEE engineering in medicine and biology society. IEEE Engineering in Medicine and Biology Society. Annual International Conference; 2019. pp. 966–969. doi: 10.1109/EMBC.2019.8857645.

- Hohlmann B, Radermacher K. Augmented active shape model search – towards 3D ultrasound-based bone surface reconstruction. EPiC Series in Health Sciences. EasyChair; 2020. pp. 117–121.

- Jiang W, Mei F, Xie Q. Novel automated spinal ultrasound segmentation approach for scoliosis visualization. Front Physiol. 2022;13:1051808. doi: 10.3389/fphys.2022.1051808.

- Banerjee S, Lyu J, Huang Z, et al. Light-Convolution dense selection U-Net (LDS U-Net) for ultrasound lateral bony feature segmentation. Applied Sciences. 2021;11(21):10180. doi: 10.3390/app112110180.

- Luan K, Li Z, Li J. An efficient end-to-end CNN for segmentation of bone surfaces from ultrasound. Comput Med Imaging Graph. 2020;84:101766. doi: 10.1016/j.compmedimag.2020.101766.

- Dunnhofer M, Antico M, Sasazawa F, et al. Siam-U-Net: encoder-decoder siamese network for knee cartilage tracking in ultrasound images. Med Image Anal. 2020;60:101631. doi: 10.1016/j.media.2019.101631.

- Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. Advances in Neural Information Processing Systems; 2014. p. 27.

- Mirza M, Osindero S. Conditional generative adversarial nets. arXiv; 2014.

- Isola P, Zhu J-Y, Zhou T, et al. Image-to-Image translation with conditional adversarial networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2017. doi: 10.1109/CVPR.2017.632.

- Zhou Y, Rakkunedeth A, Keen C, et al. Wrist ultrasound segmentation by deep learning. In Michalowski M, Abidi SSR, Abidi S, editors. Artificial intelligence in medicine: 20th international conference on artificial intelligence in medicine, AIME 2022, Halifax, NS, Canada, June 14–17, 2022, Proceedings, 1st ed.. Cham: Springer International Publishing; Imprint Springer; 2022. pp. 230–237.

- Alsinan AZ, Patel VM, Hacihaliloglu I. Bone shadow segmentation from ultrasound data for orthopedic surgery using GAN. Int J Comput Assist Radiol Surg. 2020;15(9):1477–1485. doi: 10.1007/s11548-020-02221-z.

- Rahman A, Bandara WGC, Valanarasu JMJ, et al. Orientation-guided graph convolutional network for bone surface segmentation; 2022.

- Brößner P, Hohlmann B, Radermacher K. Transformer vs. CNN – a comparison on Knee segmentation in ultrasound images. EasyChair; 2022. p. 31–24.

- Mahfouz MR, Abdel Fatah EE, Johnson JM, et al. A novel approach to 3D bone creation in minutes 3D ultrasound. Bone Joint J. 2021;103-B(6 Supple A):81–86. doi: 10.1302/0301-620X.103B6.BJJ-2020-2455.R1.

- Schumann S, Nolte L-P, Zheng G. Compensation of sound speed deviations in 3-D B-mode ultrasound for intraoperative determination of the anterior pelvic plane. IEEE Trans Inf Technol Biomed. 2012;16(1):88–97. doi: 10.1109/TITB.2011.2170844.

- Hohlmann B, Broessner P, Phlippen L, et al. Knee bone models from ultrasound. IEEE Trans Ultrason Ferroelectr Freq Control. 2023;70(9):1054–1063. doi: 10.1109/TUFFC.2023.3286287.

- Hänisch C, Hsu J, Noorman E, et al. Model based reconstruction of the bony knee anatomy from 3D ultrasound images. [15th Annual Meeting of the International Society for Computer Assisted Orthopaedic Surgery, 17.06.2015-20.06.2015, Vancouver, Canada], 5 Seiten; 2015.

- Hohlmann B, Radermacher K. The interleaved partial active shape model (IPASM) search algorithm – towards 3D ultrasound-based bone surface reconstruction. EPiC Series in Health Sciences. EasyChair; 2019. pp. 177–180.

- Myronenko A, Song X, Carreira-Perpiñán M. Non-rigid point set registration: coherent point drift. In: Schölkopf B, Platt J, Hoffman T, editors. Advances in neural information processing systems. Cambridge, MA: MIT Press; 2006.

- Hacihaliloglu I, Rasoulian A, Rohling RN, et al. Statistical shape model to 3D ultrasound registration for spine interventions using enhanced local phase features. Medical image computing and computer-assisted intervention MICCAI … International Conference on Medical Image Computing and Computer-Assisted Intervention, 16(Pt 2); 2013. pp. 361–368.

- Hacihaliloglu I, Rasoulian A, Rohling RN, et al. Local phase tensor features for 3-D ultrasound to statistical shape + pose spine model registration. IEEE Trans Med Imaging. 2014;33(11):2167–2179. doi: 10.1109/TMI.2014.2332571.

- Rasoulian A, Rohling RN, Abolmaesumi P. Augmentation of paramedian 3D ultrasound images of the spine. In Barratt D, Cotin S, Fichtinger G, Jannin P, Navab N, editors. Information processing in computer-assisted interventions: 4th international conference, IPCAI 2013, Heidelberg, Germany, June 26, 2013. Proceedings. Berlin, Heidelberg: Springer; 2013. pp. 51–60.

- Rasoulian A, Rohling RN, Abolmaesumi P. Probabilistic registration of an unbiased statistical shape model to ultrasound images of the spine. In Medical imaging 2012: image-guided procedures, robotic interventions, and modeling. SPIE; 2012. p. 83161P. doi: 10.1117/12.911742.

- Ghanavati S, Mousavi P, Fichtinger G, et al. Phantom validation for ultrasound to statistical shape model registration of human pelvis. Medical imaging 2011: visualization, image-guided procedures, and modeling. SPIE; 2011. p.79642U. doi: 10.1117/12.876998.

- Ghanavati S, Mousavi P, Fichtinger G, et al. Multi-slice to volume registration of ultrasound data to a statistical atlas of human pelvis. Medical imaging 2010: visualization, image-guided procedures, and modeling. SPIE; 2010. p. 76250O. doi: 10.1117/12.844080.

- Khallaghi S, Abolmaesumi P, Gong RH, et al. GPU accelerated registration of a statistical shape model of the lumbar spine to 3D ultrasound images. In Medical imaging 2011: visualization, image-guided procedures, and modeling. SPIE; 2011. p. 79642W. doi: 10.1117/12.878377.

- Jiang W-W, Zhong X-X, Zhou G-Q, et al. An automatic measurement method of spinal curvature on ultrasound coronal images in adolescent idiopathic scoliosis. Math Biosci Eng. 2019;17(1):776–788. doi: 10.3934/mbe.2020040.

- Cengizler C, Kerem Ün M, Buyukkurt S. A novel evolutionary method for spine detection in ultrasound samples of spina bifida cases. Comput Methods Programs Biomed. 2021;198:105787. doi: 10.1016/j.cmpb.2020.105787.

- Huang Z, Zhao R, Leung FHF, et al. Joint spine segmentation and noise removal from ultrasound volume projection images with selective feature sharing. IEEE Trans Med Imaging. 2022;41(7):1610–1624. doi: 10.1109/TMI.2022.3143953.

- Rahman A, Valanarasu JMJ, Hacihaliloglu I, et al. Simultaneous bone and shadow segmentation network using task correspondence consistency. In Wang L, Dou Q, Fletcher PT, Speidel S, Li S, editors. Medical image computing and computer assisted intervention – MICCAI 2022. Switzerland, Cham: Springer Nature; 2022. pp. 330–339.

- Hänisch C, Hohlmann B, Radermacher K. The interleaved partial active shape model search (IPASM) algorithm – preliminary results of a novel approach towards 3D ultrasound-based bone surface reconstruction. In EPiC Series in Health Sciences. EasyChair; 2017. pp. 399–406.

- Pandey P, Hohlmann B, Brößner P, et al. Standardized evaluation of current ultrasound bone segmentation algorithms on multiple datasets. EasyChair; 2022. p. 148–141.

- Zhou B, Zhao H, Puig X, et al. Scene parsing through ADE20K dataset. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR): Proceedings 21–26 July 2017, Honolulu, Hawaii. Piscataway (NJ): IEEE; 2017. pp. 5122–5130. doi: 10.1109/CVPR.2017.544.

- Tchapmi LP, Kosaraju V, Rezatofighi H, et al. TopNet: structural point cloud decoder. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2019. doi: 10.1109/CVPR.2019.00047.

- Xie H, Yao H, Zhou S, et al. GRNet: gridding residual network for dense point cloud completion. ECCV; 2020.