Abstract

Introduction

The use of simulation in health professional education has increased rapidly over the past 2 decades. While simulation has predominantly been used to train health professionals and students for a variety of clinically related situations, there is an increasing trend to use simulation as an assessment tool, especially for the development of technical-based skills required during clinical practice. However, there is a lack of evidence about the effectiveness of using simulation for the assessment of competency. Therefore, the aim of this systematic review was to examine simulation as an assessment tool of technical skills across health professional education.

Methods

A systematic search of the Cumulative Index to Nursing and Allied Health Literature (CINAHL), Education Resources Information Center (ERIC), Medical Literature Analysis and Retrieval System Online (Medline), and Web of Science databases was used to identify research studies published in English between 2000 and 2015 reporting on measures of validity, reliability, or feasibility of simulation as an assessment tool. The McMaster Critical Review Form for Quantitative Studies was used to appraise the methodological quality of all full-text articles reviewed. Simulation techniques using human patient simulators, standardized patients, task trainers, and virtual reality were included.

Results

A total of 1,064 articles were identified using search criteria, and 67 full-text articles were screened for eligibility. Twenty-one articles were included in the final review. The findings indicated that simulation was more robust when used as an assessment in combination with other assessment tools and when more than one simulation scenario was used. Limitations of the research papers included small participant numbers, poor methodological quality, and predominance of studies from medicine, which preclude any definite conclusions.

Conclusion

Simulation has now been embedded across a range of health professional education and it appears that simulation-based assessments can be used effectively. However, the effectiveness as a stand-alone assessment tool requires further research.

Introduction

Assessment, in the most expansive definition, is used to identify appropriate standards and criteria and ascertain quality through judgment.Citation1 There are a multitude of assessment modes, adopted for various reasons, such as measuring performance or skill acquisition, and these can be used at different stages of the learner’s educational trajectory. There has been much debate, however, about the effectiveness of various forms of assessment, such as multiple-choice question examinations, and this has influenced educators’ desire to develop assessments that are more realistic and performance based.Citation2,Citation3 The types of assessment used in pre- and postregistration health professional education have been widely reviewed.Citation4–Citation9 A compounding challenge with assessment for health professionals and health students is determining competency of practice. This is a complex but necessary component of education and training. In more recent decades, performance-based assessment practices have gained strong momentumCitation4 as educators have sought to examine authentic learner performance with the knowledge that these types of assessments are a driving influence on learning and teaching practices. Out of this need for authentic assessment came the adoption of simulation-based assessment.

Simulation, as a technique for both training and assessment, has been used in the aeronautical industry and military fields since the early 1900s, with the first flight simulator being developed in 1929.Citation10 The complexity and sophistication of simulation improved progressively from the 1950s, driven primarily by the integration of computer-based systems. The translation of simulation into health education has resulted in an almost exponential growth in the use of simulation as an educational tool. Simulation aims to replicate real patients, anatomical regions, or clinical tasks or to mirror real-life situations in clinical settings.Citation11 The increasing implementation of simulation-based learning and assessment within health education has been driven by the opportunity to practice difficult or infrequent clinical events, limited clinical placement opportunities, increasing competition for clinical educators’ time, new diagnostic techniques and treatments, and greater emphasis being placed on patient safety.Citation11–Citation15 Accordingly, health educators have adopted simulation as a viable educational method to teach and practice a diverse range of clinical and nonclinical skills. Simulation modalities such as standardized patients (SPs), anatomical models, part-task trainers, computerized high-fidelity human patient simulators, and virtual reality are in use within health education.Citation10,Citation11,Citation16 In particular, these techniques have been used in preregistration health professional training, as simulation allows learners to practice prior to clinical placement and patient contact, maximizing learning opportunities and patient safety.Citation6,Citation17,Citation18 Simulation provides a safe environment to practice clinical skills in a staged progression of increasing difficulty, appropriate for the level of the learner. Practicing skills on real patients can be difficult, costly, time consuming, and potentially dangerous and unethical.Citation11,Citation12,Citation14,Citation15 As such, health professional educators have increasingly adopted simulation-based assessment as a viable means of evaluating student and health professional populations. In addition, simulation-based assessments are a means of creating an authentic assessment, replicating aspects of actual clinical practice.

While there has been widespread acceptance of simulation as an educational training tool, with evidence supporting its use in health education, the effectiveness of simulation-based assessments in evaluating competence and performance remains unclear. With an increasing use of simulation in health education worldwide, it is salient to review the literature related to simulation-based assessments. Therefore, the aim of this systematic literature review was to evaluate the evidence related to the use of simulation as an assessment tool for technical skills within health education.

Methods

This systematic review was undertaken using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.Citation19 The review involved searching health and education databases, followed by the application of structured inclusion and exclusion criteria, with consensus across reviewers. Two raters (TR, BJ) independently screened all abstracts for eligibility, and interrater agreement on this initial screen was excellent (Cohen’s kappa = 0.91). Any disagreements about article eligibility were reconciled via consensus or referred to a third reviewer (CJG).
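For readers less familiar with the statistic, Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance. The following is a minimal sketch only: the function name and the ten screening decisions are hypothetical and are not the review's data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for ten abstracts (illustration only).
rater_1 = ["include", "include", "exclude", "exclude", "exclude",
           "include", "exclude", "exclude", "exclude", "exclude"]
rater_2 = ["include", "include", "exclude", "exclude", "exclude",
           "exclude", "exclude", "exclude", "exclude", "exclude"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}")
```

In this toy example the raters agree on nine of ten abstracts, yet kappa is noticeably lower than 0.9 because most abstracts are excluded and chance agreement is therefore high.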

Search databases and terms

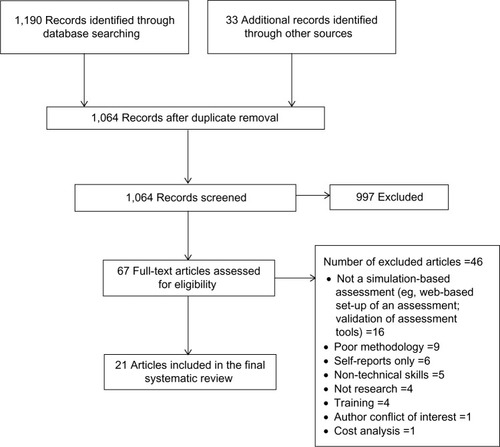

Literature was searched in the following key databases: Cumulative Index to Nursing and Allied Health Literature (CINAHL), Education Resources Information Center (ERIC), Medical Literature Analysis and Retrieval System Online (Medline), and Web of Science. The following search terms were included: allied health, medical education, nursing education, assessment, and simulation. This initial search located 1,190 articles, with another 33 located through reference list searches and gray literature (n=1,223 in total). Following removal of duplicates, 1,064 abstracts were screened for eligibility. We reviewed 67 full-text articles for eligibility, with 21 articles chosen for the final systematic review (Figure 1). An adapted critical appraisal toolCitation20 (Table 1) was used to determine the methodological rigor of the articles.
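The counts reported for each stage of the search can be cross-checked with simple arithmetic. The sketch below uses only the numbers given in the paragraph above; the variable names are illustrative, and the derived figures assume that duplicates were the only records removed before abstract screening.

```python
# Counts reported in the search description.
database_hits = 1190
other_sources = 33        # reference lists and gray literature
screened_abstracts = 1064
full_text_reviewed = 67
included = 21

# Derived counts at each stage of the flow.
total_identified = database_hits + other_sources
duplicates_removed = total_identified - screened_abstracts
excluded_on_abstract = screened_abstracts - full_text_reviewed
excluded_on_full_text = full_text_reviewed - included

flow = {
    "identified": total_identified,
    "duplicates removed": duplicates_removed,
    "abstracts screened": screened_abstracts,
    "excluded on abstract": excluded_on_abstract,
    "full texts reviewed": full_text_reviewed,
    "excluded on full text": excluded_on_full_text,
    "included": included,
}
for stage, count in flow.items():
    print(f"{stage:>22}: {count}")
```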

Table 1 Adapted critical appraisal tool

Inclusion/exclusion criteria

Our inclusion criteria required all articles to be in English and published between 2000 and 2015. Articles needed to be research based and to have examined simulation as an assessment tool for health professionals or health professional students. Because the focus of this systematic review was on technical skills, articles incorporating simulation-based assessments that explored technical skills, or technical and nontechnical skills in combination, were included. Studies that focused solely on nontechnical skills, such as communication, interpersonal skills, and teamwork, were excluded. Technical skills were defined as those requiring the participant to complete a physical assessment (or part thereof) or to perform a treatment technique(s) that required a hands-on component. The included articles all focused on simulation as an assessment tool and, ideally, compared it with other established forms of assessment.

Due to the large number of studies and reviews that have previously investigated objective structured clinical examinations (OSCEs),Citation21–Citation40 all papers that investigated OSCEs were excluded. This was beyond the scope of this systematic review. Research articles focusing on the use of simulation as a training modality only were excluded. This included studies on simulation training program validity conducted by incorporating a simulation-based assessment at the end of the training. We excluded these articles as they did not address the effectiveness of the simulation as an assessment but rather as a training tool. Studies that researched a specific simulation-based assessment grading tool were also excluded as these focused on tool validation and not on the assessment process. All search outcomes were assessed by two investigators (TR, BJ), and each abstract was read by both investigators for quality control.

Articles were also assessed for eligibility by their outcome measures. Many articles evaluated simulation as an assessment technique, but their primary outcome measure was a survey of participants’ attitudes on the simulation experience. In such studies, nearly all participants found it to be a positive experience, with only minor suggestions for improvement.Citation41–Citation50 Such studies were excluded as they did not focus on our primary aim of objectively determining the reliability, validity, or feasibility of simulation as an assessment technique.

Critical appraisal

The methodological quality of all full-text articles was assessed using a modified critical appraisal tool.Citation20 The McMaster Critical Review Form for Quantitative Studies has been used repeatedly in systematic reviews of health careCitation51–Citation54 and demonstrates strong interrater reliability. Our modified version of this tool comprised 15 items, each scored dichotomously (Yes =1, No =0; “Not addressed” and “Not applicable” were also scored 0). As such, the maximum possible score was 15, and all 67 full-text articles were appraised and scored. Both reviewers (TR, BJ) independently scored the articles, and the final inclusion of full-text articles was discussed with the third reviewer (CJG) to reach consensus, with the critical appraisal tool score used as a measure of methodological rigor. Forty-six articles were excluded for the reasons shown in Figure 1.
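The dichotomous scoring rule described above can be expressed in a few lines. This is a sketch under stated assumptions: the function name and the example responses are hypothetical, and the individual appraisal items are not reproduced here.

```python
# Scoring rule: only "Yes" earns a point; all other responses score zero.
SCORING = {"yes": 1, "no": 0, "not addressed": 0, "not applicable": 0}
N_ITEMS = 15


def appraisal_score(responses):
    """Return the total score (0 to 15) for one article's item responses."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    total = 0
    for response in responses:
        key = response.strip().lower()
        if key not in SCORING:
            raise ValueError(f"unrecognized response: {response!r}")
        total += SCORING[key]
    return total


# Hypothetical responses for a single article (illustration only).
example_article = ["Yes"] * 11 + ["No"] * 2 + ["Not addressed", "Not applicable"]
example_score = appraisal_score(example_article)
print(f"score = {example_score}/{N_ITEMS}")
```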

Findings

Of the 21 articles included, the majority were from the field of medicine (n=16), with the remainder being from the disciplines of paramedicine (n=2), nursing (n=1), osteopathy (n=1), and physical therapy (n=1) (Table 2). Studies were undertaken in Australia, Denmark, New Zealand, Switzerland, and the USA. There were no randomized controlled trials, and the majority of studies were of an observational design. As such, blinding of participants and assessors to the simulation intervention was not undertaken in any of the studies. In addition, many studies used convenience samples, and none of the studies calculated the number of participants required to detect a statistically significant effect, with one study commenting that it was not adequately powered to detect differences between its two academic sites.Citation55 Many of the studies (n=13) were pilots or had small numbers (<50) of participants (range: n=18 to n=45). A small number of studies were conducted across different health centersCitation55–Citation57; however, the majority were conducted in a single health setting or university, making it difficult to generalize the findings. The included articles had scores on the critical appraisal tool ranging from 8 to 14 out of 15. Some of the main reasons for low scores were a lack of description of the participants and a lack of either statistical analysis or description of the analysis.

Table 2 Summary of included articles

Themes

Eight (38%) of the studies used high-fidelity patient simulators to assess medical students, professionals, or applicants for a postgraduate nursing degree, and six (29%) used an SP combined with a clinical examination as the form of assessment. These studies varied in format, eg, in the number of stations, the time allowed, and the number of assessors used. The SP studies were conducted in the disciplines of medicine, physical therapy, and osteopathy and typically involved students rather than health professionals. Of the remaining studies, three (14%) used a virtual reality simulator to assess participants ranging from novices (medical students and professionals) to experienced professionals; two (10%) used manikins with varying levels of fidelity, from low-fidelity manikins to high-fidelity human patient simulators, to assess paramedics and intensive care unit (ICU) medical trainees; one (5%) used a medium-fidelity patient simulator to assess paramedics; and one (5%) included a part-task trainer to assess medical residents. Two studies compared SPs or low- to high-fidelity patient simulator assessments to other form(s) of assessment, such as paper-based examinations, oral examinations, or current university grade point averages. As such, the major themes that emerged are related to the type of simulation modality chosen for assessment (Supplementary material presents the definitions).
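Shares of this kind can be recomputed directly from the study counts. The sketch below takes the 21 included studies as the denominator (an assumption about how the percentages are meant to be read) and rounds to whole percentages; the dictionary keys are illustrative labels rather than terms defined by the review.

```python
# Number of included studies per simulation modality, as described above.
modality_counts = {
    "high-fidelity patient simulator": 8,
    "standardized patient": 6,
    "virtual reality": 3,
    "mixed-fidelity manikins": 2,
    "medium-fidelity patient simulator": 1,
    "part-task trainer": 1,
}

total_studies = sum(modality_counts.values())

# Share of each modality as a whole-number percentage of all included studies.
shares = {
    modality: round(100 * count / total_studies)
    for modality, count in modality_counts.items()
}

for modality, share in shares.items():
    print(f"{modality}: {modality_counts[modality]}/{total_studies} = {share}%")
```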

High-fidelity simulation

Eight studiesCitation58–Citation65 conducted simulation-based assessment using high-fidelity human patient simulators. The simulators used were METI Emergency Care Simulator® (Medical Education Technologies Inc., Sarasota, FL, USA)Citation58; METI HPS® (Sarasota, FL, USA)Citation63,Citation64; METI BabySim® (Sarasota)Citation58; METI PediaSIM HPS® (Sarasota)Citation61,Citation62; SimMan 3.3 (Laerdal Medical, Wappingers Falls, NY, USA)Citation65; SimNewB® (Laerdal Medical)Citation58,Citation61,Citation62; and a life-size simulator developed by MEDSIM-EAGLE® (Med-Sim USA, Inc., Fort Lauderdale, FL, USA).Citation59,Citation60

All of the studies were conducted with medical students or practicing doctors, except for one focusing on postgraduate nursing applicants.Citation65 Generally, the reliability and validity of assessment using high-fidelity human patient simulators were found to be good. All of the medical-related studies used multiple scenarios (eg, trauma, myocardial infarction, and respiratory failure) on high-fidelity human patient simulators to assess the candidates, whereas the postgraduate nursing applicants were assessed on only one anesthetic scenario within a group of three.

As all of the assessments targeted the clinical performance of students and doctors on high-risk skills, it was not surprising that high-fidelity patient simulators were a popular assessment modality. Unfortunately, this type of assessment yielded generalizability coefficients lower than those acceptable for a high-stakes examination such as a summative performance assessment (G coefficients <0.8). All high-fidelity human patient simulator assessments were nonetheless found to be suitable for low-stakes examinations (eg, a formative assessment of performance).

The evidence from these studies showed that increasing scenario numbers, rather than increasing the number of raters, increased assessment reliability.Citation58–Citation64 Some researchers suggested that 10–12 scenarios, with three to four assessors, would be required to reach an acceptable level of reliability of 0.8,Citation64 while others observed that ten scenarios with two raters did not reach this level (0.57).Citation61 When multiple raters (two to four) were used, interrater reliabilities of 0.59–0.97 (the majority being >0.8) were achieved.Citation58–Citation62 While it was unclear how some of the raters reviewed the scenarios,Citation61,Citation63 the majority rated the performance via a video recording of the scenarios.Citation59,Citation60,Citation62,Citation64 One study had a rater present at the time of the scenario as well as one scoring the performance via a video recording,Citation58 whereas another used a one-way mirror to rate participants at the time.Citation65 In the assessment of pediatric interns, residents, and hematology/oncology fellows on sickle cell disease scenarios, checklists showed higher interrater reliability than global rating scales.Citation62
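The trade-off between adding scenarios and adding raters can be illustrated with a generalizability-theory decision study. The sketch below assumes a fully crossed person × scenario × rater design and uses the standard relative G coefficient for that design. The variance components are made up for illustration (none are taken from the reviewed studies); they were chosen so that the person-by-scenario component is large, which is the situation in which extra scenarios help more than extra raters.

```python
def g_coefficient(var_p, var_ps, var_pr, var_psr_e, n_scenarios, n_raters):
    """Relative G coefficient for a crossed person x scenario x rater design.

    var_p      person (true score) variance
    var_ps     person-by-scenario interaction variance
    var_pr     person-by-rater interaction variance
    var_psr_e  three-way interaction confounded with residual error
    """
    relative_error = (
        var_ps / n_scenarios
        + var_pr / n_raters
        + var_psr_e / (n_scenarios * n_raters)
    )
    return var_p / (var_p + relative_error)


# Hypothetical variance components (illustration only).
components = dict(var_p=0.20, var_ps=0.60, var_pr=0.02, var_psr_e=0.30)

baseline = g_coefficient(**components, n_scenarios=2, n_raters=2)
more_scenarios = g_coefficient(**components, n_scenarios=10, n_raters=2)
more_raters = g_coefficient(**components, n_scenarios=2, n_raters=10)

print(f"2 scenarios, 2 raters:  G = {baseline:.2f}")
print(f"10 scenarios, 2 raters: G = {more_scenarios:.2f}")
print(f"2 scenarios, 10 raters: G = {more_raters:.2f}")
```

With these assumed components, a fivefold increase in scenarios raises G far more than a fivefold increase in raters, because rater-related variance is already small relative to scenario-related variance.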

Construct validity was high in studies that used high-fidelity human patient simulators for assessing participants with varying degrees of experience (medical students through to specialists) as they were able to differentiate between the different levels of experience.Citation58–Citation63 The pilot study investigating the correlation between high-fidelity human patient simulator assessment and face-to-face interviews for applicants applying for a postgraduate anesthetic nursing course found that there was a significant positive relationship (r=0.42) between the two and that high-fidelity human patient simulator assessment was a suitable adjunct to the admissions process.Citation65

Standardized patients

There were six studies that used SPs within a clinical examination. These simulation-based assessments varied significantly in total duration, the number of stations, the amount of time per station, the number of SPs used, the types of stations used, and skills assessed, but all had the common feature of using SPs. These studies investigated SP encounters, but with fewer SP encounters than traditional OSCEs, and allowed participants longer time with each SP and expected more than just one technical skill to be performed at each station, eg, a full physical therapy assessment and treatmentCitation66 under an assessment format. Four were from the medical profession,Citation67–Citation70 with the others being from physical therapyCitation66 and osteopathy.Citation71 Two of the studies combined the SP assessments with computer-based assessments to assess medical studentsCitation68 as well as emergency medicine, general surgery, and internal medicine doctors.Citation70 Unfortunately, only three of these articles listed the presenting problem of the SP.Citation66,Citation69,Citation71

Within SP assessments, participants’ performance was assessed by trained assessors,Citation66,Citation71 clinical experts,Citation66 self-assessment,Citation66 and the SPs themselves.Citation66,Citation67,Citation69–Citation71 In all studies, assessors were trained to score the encounters, but only one study commented on the assessors’ reliability. This study found that for physical therapy students, SP ratings did not significantly correlate with the ratings of other raters.Citation66 In contrast, strong agreement between experts and the criterion rater was evident. This suggests that experts and criterion raters are better placed than SPs to rate performances during high-stakes examinations.Citation66 When checklists were used by SPs, checklist scores correlated negatively with experience, possibly because more experienced doctors solve problems and make decisions using fewer items of information; checklists may therefore lead to less valid scores.Citation70 Results varied as to whether SP examinations were able to determine clinical experience.Citation67,Citation69 Nonetheless, they were found to be reliable in assessing osteopathic students’ readiness to treat patients.Citation71 The correlation between SP examinations and computer-based assessments varied, ranging from minimal (r=0.24 uncorrected and r=0.40 corrected)Citation68 to low-to-moderate (r=0.34–0.48).Citation70 SP examinations also showed low correlation with curriculum results within physical therapy (<0.3).Citation66 Overall, it was concluded that SP-based assessments should not be used in isolation to assess clinical competence.
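The gap between an uncorrected and a corrected correlation can be illustrated with the classical correction for attenuation, which divides the observed correlation by the square root of the product of the two measures' reliabilities. The review does not state which correction the cited study applied, so treating it as this formula is an assumption, and the reliability values below are hypothetical.

```python
import math


def disattenuate(r_observed, reliability_x, reliability_y):
    """Classical correction for attenuation due to measurement unreliability."""
    return r_observed / math.sqrt(reliability_x * reliability_y)


def implied_reliability_product(r_observed, r_corrected):
    """Product of the two reliabilities implied by a pair of correlations."""
    return (r_observed / r_corrected) ** 2


# Reported correlations: r=0.24 uncorrected and r=0.40 corrected.
implied = implied_reliability_product(0.24, 0.40)

# Hypothetical reliabilities of 0.6 for each measure reproduce the corrected value.
corrected = disattenuate(0.24, reliability_x=0.6, reliability_y=0.6)

print(f"implied reliability product = {implied:.2f}")
print(f"corrected r with assumed reliabilities = {corrected:.2f}")
```

Under this assumption, a corrected value that is so much higher than the observed one implies fairly modest reliability in at least one of the two measures.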

Virtual reality

Virtual reality is increasingly being adopted as a simulation tool. In the health professions, virtual reality simulation uses computers and human patient simulators to create a realistic and immersive learning and assessment environment.Citation72–Citation74 Three studies used virtual realityCitation55,Citation56,Citation75 in simulation-based assessments comparing novice (medical students or residents), skilled (residents), and expert medical clinicians. Three different virtual reality systems were applied: the SimSuite system (Medical Simulation Corporation, Denver, CO, USA), which includes an interactive endovascular simulatorCitation56; the GI Mentor II computer system (Simbionix Ltd, Cleveland, OH, USA)Citation75; and the Heartworks TEE Simulator (Inventive Medical Ltd, London, UK), which includes a manikin and haptic-simulated probe.Citation55 All three systems were found to have construct validity, as they were able to distinguish between levels of technical ability among the groups, and they are therefore useful in identifying those who require further training prior to clinical practice on real patients.

Mixed-fidelity patient simulators

Two studies that used low-, medium-, and high-fidelity human patient simulators during an assessment of paramedicsCitation76 and ICU medical trainees’ resuscitation skillsCitation57 were included in this review. All three levels of patient simulator fidelity were found to have high interrater reliability in these populations.Citation76 Intensive care trainees were assessed on medium- and high-fidelity human patient simulators as well as by written and oral viva examinations.Citation57 The written examination was shown not to correlate with either medium- or high-fidelity human patient simulation-based assessments, indicating that written and simulation assessments differed in their ability to evaluate knowledge and practical skills. Specific skill deficiencies were able to be determined when low- to high-fidelity simulators were used, therefore allowing subsequent training to be targeted to individuals’ needs.Citation76

Medium-fidelity simulation

One studyCitation77 investigated a medium-fidelity simulator (human patient simulator designed to allow limited invasive procedures with lower fidelity needs) and a volunteer with moulage. The paramedics were assessed in pairs on two simulated scenarios (acute coronary syndrome and a severe traumatic brain injury). Both their technical and nontechnical skills were assessed via separate checklists; the two assessors rated the performance from a video-recording and were allowed to rewind as necessary. Interrater reliability (between an emergency physician and psychologist) showed good correlations, especially for technical skills such as assessment of primary airway, breathing, circulation, and defibrillation. A positive and significant relationship was found between technical and nontechnical skills. Accordingly, one rater was found to be sufficient to adequately assess technical skills, but two raters were required to demonstrate equivalent reliability for nontechnical skills.

Task trainer

One pilot studyCitation78 investigated the performance of pediatric residents in lumbar puncture using a neonatal lumbar puncture task trainer. This simulation-based assessment used a video-delayed format, in which six raters reviewed the video and assessed the pediatric residents’ performance based on seven criteria: preparation, positioning, analgesia administration, needle insertion technique, cerebrospinal fluid (CSF) return/collection, diagnostic purpose/laboratory management of CSF, and creating and maintaining a sterile field. There was good interrater reliability and validity in regard to the response process (potential bias if the raters recognized the residents; voices were not altered, but faces were not shown) and relationship to external variables (eg, previous experience in neonatal or pediatric ICUs).

Discussion

We undertook a systematic review to examine simulation as an assessment tool across health professional education. Although this review demonstrated that simulation-based assessments of technical skills can be reliable and valid, the evidence was constrained by the finding that simulation-based assessments were commonly used in isolation, rather than in combination with other assessment forms or with more than one simulation scenario. This review also demonstrated that assessments using high-fidelity simulators and SPs have been more widely adopted than other modalities. High-fidelity simulation was most widely adopted in medicine and commonly used in the emergency and anesthetic specialties, in which high-risk skill assessments are used more frequently. The evidence suggests that participants can be assessed reliably with high-fidelity human patient simulators combined with multiple-station assessment tasks and well-constructed scenarios. Overall, the results are promising for the future use and development of simulation-based assessment in the health education field.

Due to the multiplicity of simulation-based assessments, it was difficult to compare data between studies and to make definitive statements about which form of assessment would be best for particular health disciplines and for students and practicing health professionals. In regard to health students, standardizing assessments creates a fairer and more consistent approach, leading to greater equity and reliability. Simulation appears to achieve this in competency-based assessments as well as being a useful tool for predicting future performance. This area of research needs exploration as it may have the potential to determine the future performance of students and their competency, especially in relation to whether students are ready for clinical environments and exposure to real patients. Simulation-based assessments may also assist newly graduated health professionals, who could be deemed competent by using reliable and valid authentic assessments prior to commencing practice in a new area. In addition, simulation-based assessment is a promising approach for determining skill level and capability for safe practice, as it appears to be able to distinguish between different levels of performance among novice and expert groups and to identify poor performers.

The methodological rigor was an issue, with many of the studies having scores on the critical appraisal tool ranging from 8 to 14/15. Many of the studies had modest participant numbers, a common limitation noted in several studies,Citation58,Citation62,Citation64,Citation70,Citation78 which may limit the generalizability of the results. Sample size was not justified in many instances, and there was little mention of participant dropouts. In contrast, three studies had substantive participant numbers (>120 participants)Citation68,Citation71,Citation76 and provided robust analyses, which increased external validity.

A noticeable gap in this literature is that only three of the articles reviewed compared their simulation-based assessment to another assessment form or simulation type. These comparative studies provided a higher degree of critique of the assessment type and permitted observation of differences, which may be of assistance for health educators. We believe that comparative studies should be conducted in future research to provide evidence of which assessments are superior and to support better-informed assessment choices.

A continuation of this theme is that studies examining the reliability and validity of simulation-based assessments need stronger research approaches, such as blinding assessors and participants, providing precise details of the intervention, and, where possible, avoiding contamination. While we appreciate that educational research is often challenging, robust study design should be paramount.

Overall, further research is required to determine which form of simulation-based assessment is best suited to specific health professional learner situations. While it is suggested that simulation-based assessments should not be used in isolation to make an overall assessment of an individual’s clinical and theoretical skills, simulation-based assessments are being widely used, sometimes for this very purpose. Development of simulation-based assessment needs to continue as it will provide clarity and consistency for the assessors and participants, in addition to furthering the use of simulation in health education. As simulation is increasingly being used to replace a proportion of health students’ clinical practice time,Citation79,Citation80 it is expected that simulation-based assessment will become an integral component of health professional curricula; it therefore needs to be evidence based and valid. Further research of this kind will support stronger conclusions about the use of simulation-based assessment in health professional education.

Limitations

There were several limitations of this systematic review. Studies included were limited to the English language, and there may well be other studies published in non-English-language publications. Due to the varying nature of the studies, we were unable to complete any form of pooled data analysis. We did not include studies that investigated the cost-effectiveness and cost analysis of simulation-based assessments. Studies of this type may highlight practical considerations not addressed in this systematic review. As already mentioned, studies investigating OSCEs were also excluded due to the extensive previous research conducted in this area. The inclusion of OSCE-based studies may have helped to strengthen the argument for SP use, as this tends to be the most common form of simulation used within the OSCE literature.

Conclusion

The use of simulation within health education is expanding, particularly in the training of health professionals and students. The evidence from this review suggests that the use of SPs would be a practical approach for many clinical situations, with part-task trainers or patient simulators used in areas in which the actors are unable to “act” or in cases in which invasive procedures are undertaken. For assessments in which clinical skills need to be evaluated in high-pressure situations, the evidence suggests that patient simulators in high-fidelity environments may be more suitable than task trainers. High-fidelity simulation assessments could also be used to assess multidisciplinary team performance. Overall, there is a clear need for further methodologically robust research into simulation-based assessments within health professional education.

Supplementary materials

Definitions

High-fidelity patient simulators

These are designed to allow a large range of noninvasive and invasive procedures to be performed and offer realistic sensory and physiological responses, with outputs such as heart rate and oxygen saturation usually displayed on a monitor. They can be run by a computer technician or preprogrammed to react to the participant’s actions.

Objective structured clinical examinations

These involve participants progressing through multiple stations at predetermined time intervals. They may have active or simulation-based stations that assess practical skills or passive stations such as written or video analysis, commonly used to assess theoretical knowledge.

Standardized patients

These are people trained to portray a patient in a consistent manner and present the case history of a real patient using predetermined subjective and objective responses.

Task trainers

These are models designed to look like a part of the human anatomy that allow individuals to perform discrete invasive procedures, for example, a pelvis for internal pelvic examinations or an arm for practicing cannulation.

Disclosure

The authors report no conflicts of interest in this work.
