Abstract

Purpose: Community-based rehabilitation (CBR) must demonstrate that it makes a significant difference for people with disabilities in low- and middle-income countries. Yet evaluation is not common practice, and the evidence for its effectiveness is fragmented and largely insufficient. The objective of this article was to review the literature on best practices in CBR program evaluation in relation to the evaluative process, frameworks, and methods of data collection. Method: A systematic search was conducted in five rehabilitation databases and on the World Health Organization website using keywords associated with CBR and program evaluation. Two independent researchers selected the articles. Results: Twenty-two documents were included. The results suggest that (1) the evaluative process needs to be conducted in close collaboration with the local community, including people with disabilities, and followed by sharing the findings and taking action; (2) many frameworks have been proposed to evaluate CBR, but no agreement has been reached; and (3) qualitative methodologies have so far dominated CBR evaluation, but combining them with quantitative methods holds considerable potential to better capture the effectiveness of this strategy. Conclusions: To facilitate and improve evaluations in CBR, there is an urgent need to agree on a common framework, such as the CBR matrix, and to develop best practice guidelines based on the available literature and on consensus among a group of experts. These guidelines will need to strike a good balance between community development and the standards for effective evaluations.

Implications for Rehabilitation

- In the quest for evidence of the effectiveness of community-based rehabilitation (CBR), a shared program evaluation framework would better enable the combination of findings from different studies.

- The evaluation of CBR programs should always include sharing findings and taking action for the benefit of the local community.

- Although qualitative methodologies have dominated the scene in CBR and remain highly relevant, there is also a call for the inclusion of quantitative indicators in order to capture the progress made by people participating in CBR programs.

- The production of best practice guidelines for evaluation in CBR could foster accountable and empowering program evaluations that are congruent with the principles at the heart of CBR and the standards for effective evaluations.

Introduction

People with disabilities represent approximately 15% of the world’s population and are among the poorest and most marginalized members of many communities [Citation1]. In 1978, in an attempt to reduce the burden of disability in low- and middle-income countries, the World Health Organization (WHO) launched a strategy called community-based rehabilitation (CBR) [Citation2]. CBR is now implemented in more than 90 countries and is defined as an inclusive community development strategy that aims at the equalization of opportunities, rehabilitation, poverty reduction, and social inclusion of people living with a disability [Citation3]. Evaluating existing CBR programs and demonstrating their effectiveness is critical to promote their sustainability and ongoing financial support, and to guide the development of new programs based on the lessons learned from more than 30 years of experience in CBR. Yet program evaluation does not seem to be common practice in the field, nor is it always congruent with the standards for effective evaluations.

A review of the effectiveness of CBR programs by Finkenflugel et al. [Citation4] reached an unfortunate conclusion: evidence on the effectiveness of CBR programs, and on the conditions under which they are most effective, remains fragmented and largely insufficient. These authors retrieved few program evaluations that documented both implementation and outcomes, and highlighted that rigorous controlled studies of the efficacy of CBR were extremely rare. In addition, the wide range of outcomes analyzed across studies makes it almost impossible to form an overall picture of the efficacy and effectiveness of CBR. Ultimately, there is very little consensus on how program evaluation and evaluative research should be conducted to come as close as possible to scientific standards while remaining in harmony with CBR philosophy and the context of its implementation in low- and middle-income countries.

Like Boyce and Ballantyne [Citation5], we believe that CBR will not survive unless better and more systematic program evaluation systems are used to document its outcomes and effectiveness. This systematic review aims to document: (1) the characteristics of the process that could be followed in the evaluation of CBR programs, (2) the way in which a framework or classification model could guide the choice of outcome measures, and (3) the characteristics of the data collection methods that should be favored. This study offers a thorough look at the recommendations available in the literature and represents the first step in the preparation of best practice guidelines for program evaluation in CBR.

Method

Search strategy

A systematic search was conducted by the first author (MG) in the main rehabilitation databases (CINAHL, Embase, MEDLINE, PsycINFO, and Scopus) with the keywords “community-based rehabilitation” AND (“program evaluation” OR evaluative research OR process OR evidence OR evidence-based OR framework OR classification model OR conceptual model OR methods). Because the literature often confuses rehabilitation delivered in the community with CBR as a community development strategy, no synonym was included for the first keyword. The years 1994–2011 were searched because 1994 is the year in which the first joint position statement on CBR was published [Citation3]; articles published in this period are considered more representative of CBR today. In addition, a manual search was done on the WHO website. Finally, the reference lists of the included articles were reviewed.

Inclusion and exclusion criteria

Two researchers selected the articles that could provide guidance on the process, models, and methods to be favored in CBR program evaluation; they screened all titles and abstracts and read the full articles when in doubt. Disagreements on inclusion were resolved through discussion. Inclusion criteria were: (1) addressing CBR as defined in the 1994 or 2004 joint position paper (i.e., as a community development strategy), (2) having evaluation as a major theme, more precisely how evaluations should be done, and (3) being published in English or French. Articles reporting program evaluations without explicitly reflecting on the methodologies used were excluded. Peer-reviewed articles and guidelines were included, but book chapters, newsletter articles, and articles published in journals without archives were excluded. Consistent with the suggestions of Kuipers and Harknett [Citation6] and Mitchell [Citation7], both program evaluation and evaluative research were considered: program evaluations can contribute to the evidence base if process and outcomes are described together, and if the outcomes that stand out across varied contexts and programs are verified using analytic study designs.

Methodological quality assessment of the studies

To judge whether the recommendations for program evaluation represent a high level of evidence, two researchers assessed the methodological quality of all included studies using tools appropriate for each type of study: the CASP tool for observational qualitative studies [Citation8], the AMSTAR scale for systematic reviews [Citation9], the STROBE statement checklist for observational quantitative studies [Citation10], and the AGREE II instrument for practice guidelines [Citation11]. No appraisal tool was used to assess the quality of editorials (expert opinions), since these are generally considered the lowest level of evidence. The two researchers compared their assessments of each article and reached consensus on its global rating through discussion.

Both researchers also evaluated the strengths and weaknesses of each document with respect to its congruence with best practices in program evaluation and CBR. The four standards for effective evaluations from the Centers for Disease Control and Prevention (i.e., utility, feasibility, propriety, accuracy) served as the reference for best practices in program evaluation [Citation12]. The principles of CBR applicable to program evaluation (i.e., inclusion, participation, empowerment) served as the reference for the CBR philosophy [Citation2].

Results

Articles and documents included

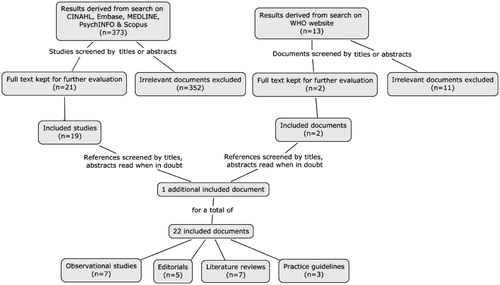

Some 373 articles were retrieved from the databases and 13 documents from the WHO website. In total, 22 documents met all inclusion criteria; two of these were found on the WHO website, and one was added after review of the reference lists of the included articles (Figure 1). They included observational studies, practice guidelines, literature reviews, and editorials. The characteristics of the included documents and the results of the quality assessments are provided in Appendix A. A list of the excluded articles is provided in Appendix B.

Characteristics of the program evaluation process

Table 1 provides a detailed description of the characteristics of the evaluation process found in the nine documents that offered specific suggestions on the program evaluation processes most relevant to CBR. These suggestions were divided into three categories that emerged from the findings: the people who should be involved in the evaluation process, the steps to follow, and the time when the evaluation should take place.

Table 1. Findings about the characteristics of the evaluation process for CBR.

Regarding who should be involved in the evaluation, the findings of this review suggest that the participation of the local community, including people with disabilities, is critical to ensure local relevance [Citation5,Citation14]. The documents also indicate that both self-assessment and external evaluation are suitable options [Citation2,Citation17,Citation18].

Concerning the steps to follow when conducting a CBR program evaluation, the need to begin by focusing the evaluation emerged [Citation2,Citation5,Citation18]. Two guidelines suggested that relevance, efficiency, effectiveness, sustainability, and social impact could be used to focus the evaluation question [Citation2,Citation18]. Another element recommended by many authors is completing the evaluation cycle by reporting the findings and taking action to improve the program [Citation2,Citation5,Citation7,Citation14,Citation17,Citation18].

As for the most appropriate time to conduct an evaluation, the findings indicate that evaluation should be part of the regular activities of CBR programs, from the planning phase through follow-up after the program is completed [Citation17,Citation18]. Many authors highlighted that the lack of baseline data in many CBR evaluations makes it very challenging to capture change over time [Citation2,Citation13,Citation15,Citation16].

Framework: process and outcomes used in program evaluation

A total of 13 classifications for CBR were identified in this review, covering a broad variety of potential process and outcome measures, as well as indicators. Not all of them were presented as evaluation models by their authors, but all included categories describing what should be measured when evaluating CBR programs. The different frameworks are presented in Table 2, along with process and outcome measures when available. The authors’ suggestions are divided into two categories: those associated with the implementation of the program and those associated with the outcomes of CBR.

Table 2. Frameworks proposed in the CBR literature and their associated process and outcome measures.

Four articles suggest that CBR would certainly benefit from using outcomes and indicators derived from classification models [Citation13,Citation15,Citation27,Citation28]. Thomas [Citation15] suggested that the desirable outcomes proposed in the CBR Guidelines for each element of the CBR matrix should guide the choice of outcomes and indicators in CBR evaluations. Wirz and Thomas [Citation27] believe that a bank of ready-to-use indicators derived from classification systems is needed, whereas others argue that it is best to create a framework and indicators that meet the specific needs of the program being evaluated [Citation19,Citation20].

Methods of data collection in program evaluation

Of the 22 documents, 15 made specific recommendations on the characteristics of the data collection methods most relevant to CBR (see Table 3). Most authors agree that it is usually best to use more than one method to enable triangulation [Citation2,Citation6,Citation18,Citation20,Citation22,Citation26]. The findings highlight that traditional qualitative methodologies have been used extensively in CBR evaluations [Citation29], but some authors argue that mixed and quantitative methodologies have greater potential to help demonstrate the effectiveness of CBR programs [Citation13,Citation18,Citation27]. One point of disagreement in the literature is whether tools should be applicable across all contexts or tailored to specific contexts.

Table 3. Characteristics of the methods of data collection in CBR.

Discussion

This review provides insight into what should be considered best practices in CBR program evaluation. First, the need for the evaluative process to focus on taking action in light of the results is clearly congruent with the utility and propriety standards for effective evaluations and with the empowerment principle in CBR [Citation2,Citation7,Citation14]. However, only Boyce and Ballantyne [Citation5] appear to have emphasized the need for the process itself to be empowering, so that the local community can lead its own evaluation. Based on our field experience in CBR, we believe that CBR would gain from applying some of the principles proposed by Fetterman and Wandersman [Citation31] to design and conduct empowering evaluations that foster greater community ownership and sustainability.

Second, the lack of consensus on which classification model to use contributes to a very fragmented evidence base for CBR, as there is no common language [Citation4]. The authors of three articles suggest that CBR needs a common framework from which to derive evaluation outcomes [Citation15,Citation27,Citation28], while two other groups propose that a localized framework would be better suited to a bottom-up approach like CBR [Citation19,Citation20]. We propose that evaluators join forces towards a common framework that enables the combination of findings in the quest for evidence of CBR effectiveness, but this framework must be clearly defined, comprehensive, and flexible enough to allow local adaptation. We agree with Thomas [Citation15] that the CBR matrix could be an important piece of this framework, since it was developed with contributions from experts and managers in the field, and each of its elements is now explicitly described in the CBR Guidelines. The other frameworks available in the literature could help determine the most relevant evaluation questions while providing additional ideas for process and outcome measures appropriate to the particular context. Table 4 presents a proposed shared framework for program evaluation in CBR. It integrates the CBR matrix with the highlights of the other frameworks and is structured according to the hierarchy of evaluations [Citation32] to facilitate the development of effective evaluations. The idea is to situate each evaluation on two axes: the component and element of the CBR matrix being evaluated, and the type(s) of question studied (i.e., relevance, process, outcomes, or cost). Further validation with experts and in the field is now needed.

Table 4. Proposed framework for program evaluation and evaluative research in CBR.

Although qualitative methods have so far dominated CBR program evaluation and remain highly relevant, the need to incorporate quantitative indicators to capture the progress made by people participating in CBR programs has been explicitly identified in the literature [Citation13,Citation27] and is clearly congruent with a quest for greater accuracy in evaluations. The challenge is to determine how to do so without losing flexibility in evaluations and without giving up the richness and power that qualitative methodologies can have in engaging local communities. That said, the most important consideration is that the methods be suitable for the local community.

Most of the recommendations in the documents included in this review were highly congruent with the CBR principles of inclusion and participation and with the context of CBR implementation in resource-poor areas, but less consistently so with the empowerment principle and with the standards for effective evaluations and good research. This weakness, along with the lack of a common language and framework in CBR before the publication of the CBR Guidelines, has certainly posed serious threats to the recognition and advancement of this strategy. Even though this review does not provide level 1 evidence for one type of evaluation over another, it offers insights into what could be considered best practices in the field and highlights the need to move towards a culture of evaluation in which reflection and action are incorporated into the regular activities of CBR programs. We believe that CBR now requires best practice guidelines for program evaluation that clarify the proper balance between community development and the standards for good evaluation and research. Ideally, these guidelines would be based on the evidence found in this systematic review and on consensus among a group of experts, and would describe a clear, detailed, and empowering process, with explicit links to a shared framework and potential methods of data collection. A field validation of the guidelines would be essential to ensure their applicability.

Conclusion

In conclusion, we strongly believe that it is high time for the CBR community to engage in program evaluation and in the production of best practice guidelines for accountable and empowering evaluations. Since moderate levels of evidence in favor of particular practices are available, experts in CBR program evaluation need to reach a consensus on best evaluative practices in the context of CBR. Further research and evaluation on the conditions under which CBR programs are most effective for different populations are clearly needed, respecting both CBR principles and the standards for effective research and evaluation. Ideally, these studies can be situated within the framework proposed in this manuscript. We hope that this review provides guidance on how to demonstrate the effectiveness of this strategy, in order to foster the institutionalization of existing CBR programs showing positive results and the development of new CBR programs that address the needs of people living with a disability in resource-poor areas.

Declaration of interest

This article is included in the first author’s doctoral thesis in rehabilitation sciences at the University of Ottawa, Canada. She is supported financially by a Vanier Scholarship from the Canadian Institutes of Health Research, an Excellence Scholarship from the University of Ottawa and a Doctoral scholarship from the Canadian Occupational Therapy Foundation. She also received an Ontario Graduate Scholarship from the Ministry of Training, Colleges and Universities at the beginning stages of the preparation of this manuscript. The authors report no conflicts of interest.

Acknowledgements

Special thanks go to Jessica Morin who helped in the selection and evaluation of the articles.

References

- WHO and World Bank. World report on disability. Geneva: WHO Press; 2011

- World Health Organization (WHO), United Nations Educational, Scientific and Cultural Organization (UNESCO), International Labour Organization (ILO), International Disability and Development Consortium (IDDC). [Internet]. Community-based rehabilitation: CBR Guidelines. Geneva: WHO Press; 2010. Available from: http://www.who.int/disabilities/cbr/en/ [last accessed 12 Apr 2013]

- International Labour Organization (ILO), United Nations Educational, Scientific and Cultural Organization (UNESCO), World Health Organization (WHO). [Internet]. Joint position paper. CBR: a strategy for rehabilitation, equalization of opportunities, poverty reduction and social inclusion of people with disabilities. Geneva: WHO Press; 2004. Available from: http://www.who.int/disabilities/cbr/en/ [last accessed 12 Apr 2013]

- Finkenflugel H, Wolffers I, Huijsman R. The evidence base for community-based rehabilitation: a literature review. Int J Rehabil Res 2005;28:187–201

- Boyce W, Ballantyne S. Developing CBR through evaluation. Asia Pacific Disabil Rehabil J 2000;2:69–83

- Kuipers P, Harknett S. Guest editorial. Considerations in the quest for evidence in community based rehabilitation. Asia Pacific Disabil Rehabil J 2008;19:3–14

- Mitchell R. The research base of community-based rehabilitation. Disabil Rehabil 1999;21:459–68

- Critical Appraisal Skills Programme (CASP) [Internet]. Qualitative research: appraisal tool. 10 questions to help you make sense of qualitative research. Oxford: Public Health Resource Unit; 2006:1–4. Available from: http://www.casp-uk.net [last accessed 12 Apr 2013]

- Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol 2007;7:1–10

- von Elm E, Altman DG, Egger M, et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. J Clin Epidemiol 2008;61:344–9

- Brouwers M, Kho ME, Browman GP, et al. AGREE II: advancing guideline development, reporting and evaluation in healthcare. Can Med Assoc J 2010;51:421–4

- Centers for Disease Control and Prevention (CDC). Framework for program evaluation in public health. MMWR 1999;48:1–40

- Cornielje H, Velema JP, Finkenflügel H. Community based rehabilitation programmes: monitoring and evaluation in order to measure results. Leprosy Rev 2008;79:36–49

- Price P, Kuipers P. CBR action research: current status and future trends. Asia Pacific Disabil Rehabil J 2000;2:55–68

- Thomas M. Reflections on community-based rehabilitation. Psychol Dev Soc 2011;23:277–91

- Velema J, Finkenflugel H, Cornielje H. Gains and losses of structured information collection in the evaluation of ‘rehabilitation in the community’ programmes: ten lessons learnt during actual evaluations. Disabil Rehabil 2008;30:396–404

- WHO and IDC [Internet]. Guidelines for conducting, monitoring and self-assessment of community-based rehabilitation programmes: using evaluation information to improve programmes. Geneva: WHO Press; 1996. Available from: http://www.who.int/disabilities/cbr/en/ [last accessed 12 Apr 2013]

- Zhao T, Kwok J. Evaluating community based rehabilitation: guidelines for accountable practice. 1999. Available from: http://unipd-centrodirittiumani.it/public/docs/34011_planning.pdf [last accessed 12 Apr 2013]

- Adeoye A, Seeley J, Hartley S. Developing a tool for evaluating community-based rehabilitation in Uganda. Disabil Rehabil 2011;33:1110–24

- Chung EY, Packer TL, Yau M. A framework for evaluating community-based rehabilitation programmes in Chinese communities. Disabil Rehabil 2011;33:1668–82

- Cornielje H, Nicholls P, Velema J. Making sense of rehabilitation projects: classification by objectives. Leprosy Rev 2000;71:472–85

- Evans PJ, Zinkin P, Harpham T, et al. Evaluation of medical rehabilitation in community based rehabilitation. Soc Sci Med 2001;53:333–48

- Mannan H, Turnbull AP. A review of community based rehabilitation evaluations: quality of life as an outcome measure for future evaluations. Asia Pacific Disabil Rehabil J 2007;18:29–45

- McColl MA, Paterson J. A descriptive framework for community-based rehabilitation. Can J Rehabil 1997;10:297–306

- Pal DK, Chaudhury G. Preliminary validation of a parental adjustment measure for use with families of disabled children in rural India. Child: Care Health Dev 1998;24:315–24

- Sharma M. Evaluation in community based rehabilitation programmes: a strengths, weaknesses, opportunities and threats analysis. Asia Pacific Disabil Rehabil J 2007;18:46–62

- Wirz S, Thomas M. Evaluation of community-based rehabilitation programmes: a search for appropriate indicators. Int J Rehabil Res 2002;25:163–71

- Finkenflugel H, Cornielje H, Velema J. The use of classification models in the evaluation of CBR programmes. Disabil Rehabil 2008;30:348–54

- Sharma M. Viable methods for evaluation of community-based rehabilitation programmes. Disabil Rehabil 2004;26:326–34

- Sharma M. Using focus groups in community based rehabilitation. Asia Pacific Disabil Rehabil J 2005;16:41–50

- Fetterman DM, Wandersman A, eds. Empowerment evaluation principles in practice. New York (NY): Guilford Press; 2005

- Rossi PH, Lipsey MW, Freeman HE. Evaluation: a systematic approach. 7th ed. Thousand Oaks (CA): Sage; 2004

Appendix A

Table A1. Characteristics of the included articles and highlights of the quality assessments.

Appendix B

List of excluded studies (available upon request from the corresponding author).