Abstract

This paper discusses how varied policy analytical capacity can be evaluated at the systemic level through observed gaps in environmental data monitoring and reporting among countries. Such analytical capacity at the knowledge-system level facilitates the transparency and credibility needed for nation-states to cooperate on issues requiring global coordination, including “super-wicked” environmental issues like climate change. So far, relatively little attention has been paid to whether countries will have the ability (or policy analytical capacity) to report the data and indicators required for the next round of global Sustainable Development Goals (SDGs) now being proposed. In this paper, I argue that the varied policy analytical capacity within the global environmental knowledge system necessitates the participation of new institutions and actors. Identifying gaps in data availability at a global, systemic scale, this paper presents a proxy measure of policy analytical capacity based on publicly reported national statistics on air and water quality performance. These discrepancies, evaluated at a systemic level, make a case for channels through which citizen scientists, independent watchdogs, private sector companies and third-party organizations can participate to enhance the policy analytical capacity of governments.

1 Introduction

Policy analytical capacity is critical to advancing evidence-based approaches to environmental decision-making and governance. It is defined as the ability of governments to analyze information and to apply research methods and advanced modeling techniques, and it is considered one of the core competencies required for “governance success” (Howlett, 2009; Wu, Ramesh, & Howlett, 2014). Such analytical ability is required to build trust between individual actors and organizations, who evaluate the credibility of policy interventions based on their performance, which is often substantiated in terms of data and statistical results (Blind, 2007). On a larger systemic level, the use of data and evidence facilitates the transparency and credibility needed for nation-states to cooperate on issues requiring global coordination.

The consideration of policy analytical capacity is particularly salient in the context of environmental issues, where “super-wicked” problems of the commons like climate change (Levin, Cashore, Bernstein, & Auld, 2007) necessitate global environmental governance. Effective commons governance for global-scale problems depends upon “good, trustworthy information about the stocks, flows, and processes within the resource systems being governed” (Dietz, Ostrom, & Stern, 2003). However, data gaps, information asymmetries and uncertainty (arguably the result of low and varied policy analytical capacity among governments) have long plagued sound management and policy practices (Esty, 2001). When aggregated to the global systemic scale, these knowledge disparities result in the inability to effectively track performance and progress toward universal goals, such as the Sustainable Development Goals (SDGs) to be decided in September 2015. So far, relatively little attention has been paid to whether countries will have the ability (or policy analytical capacity) to monitor, collect and report the data and indicators required for the range of targets proposed.

In this paper, I argue that the varied policy analytical capacity within the global environmental knowledge system necessitates the participation of new institutions and actors. Identifying gaps in data availability at a global, systemic scale, this paper presents a proxy measure of policy analytical capacity based on publicly reported national statistics on air and water quality performance. The method is a crude approximation at best and makes no attempt to explain the causal factors behind missing data. It is, however, a first step toward highlighting potential disparities in policy analytical capacity that could threaten global environmental management, as well as policymakers’ ability to establish appropriate benchmarks for the future SDGs. These discrepancies, evaluated at a systemic level, make a case for channels through which citizen scientists, independent watchdogs, private sector companies and third-party organizations can participate to enhance the policy analytical capacity of governments.

2 Evidence-based approaches and policy analytical capacity

The relationship between knowledge and policymaking is central, if not the central relationship, for public policy studies (Parsons, 2004). With respect to the environment, scholars point to the lack of knowledge and resources, and to weak institutions, as factors that limit management (Jänicke, 1997). Historically, environmental law and policy have not treated information and its disclosure as a primary concern, making uncertainty the “hallmark of the environmental domain” (Esty, 2004). Technical and analytical limitations, inadequate and incomplete monitoring systems that prevent accurate assessment, market failures, and institutional deficiencies all result in information gaps (Esty, 2004; Metzenbaum, 1998). The recognition that these knowledge deficiencies lie at the root of policy failure has motivated a shift toward investigating the role of information, its disclosure and transparency in environmental decision-making (Esty, 2004; Mol, 2006).

International practice has demonstrated that increased information facilitates pollution reduction by allowing for the identification of target areas and the allocation of resources where they are most needed. A growing number of environmental regulators have sought to accompany enforcement systems with information programs that reveal the environmental performance of polluters (Wang et al., 2004; Foulon et al., 2002). However, the rise of information- and data-based approaches has been “piecemeal and inchoate” (Kleindorfer & Orts, 1999). Only within the last two decades have information and knowledge, along with their networks and infrastructures, increasingly been seen as critical components for understanding social processes in the Information Age (Castells, 1996, 1997a, 1997b; Mol, 2006). Scholars (Florini, 2007; Gupta, Christopher, Wang, Gehrig, & Kumar, 2006; Mol, 2006, 2009; Tietenberg, 1998; Van Kersbergen & Van Warden, 2004) note an increasing emphasis on information and its disclosure as an effective policy mechanism to drive improvements in environmental performance, or what Case (2001) refers to as “informational regulation.”

Proponents of such evidence-based approaches, however, tend to overlook the role of capacity in adopting these methods. At their core, these approaches attribute policy failure to information gaps but do not necessarily acknowledge the ability of actors or systems to use information effectively in decision-making. The growing emphasis on evidence-based policymaking can stretch the analytical resources of organizations to a “breaking point” (Howlett, 2009; Hammersley, 2005). Such analytical resources, and the ability to acquire and use knowledge in policy processes, constitute what Howlett (2009) refers to as “policy analytical capacity,” which he defines as:

“the amount of basic research a government can conduct or access, its ability to apply statistical methods, applied research methods, and advanced modeling techniques to this data and employ analytical techniques such as environmental scanning, trends analysis, and forecasting methods in order to gauge broad public opinion and attitudes, as well as those of interest groups and other major policy players, and to anticipate future policy impacts. It also involves the ability to communicate policy related messages to interested parties and stakeholders.”

Wu et al. (2014) identify three levels within a system at which policy analytical capacities are necessary for a government to succeed: the individual, the organizational, and the systemic. At the individual level, policy analytical capacity refers to the ability of individuals not only to analyze problems and implement policies, but also to contribute to the design and evaluation of the policies themselves. At the organizational level, there is recognition that institutions and resources are needed to provide an enabling context (existing institutional, economic or information opportunity structures, according to Jänicke, 2005) for individuals to perform the functions related to policy analysis. Finally, at the systemic level, the general state of educational (e.g., universities or other higher-learning institutions) and scientific facilities, and the availability of and access to high-quality information (e.g., penetration of information and communication technologies), are critical considerations for a government's policy analytical capacity. Riddell (2007) summarizes the requirements of policy analytical capacity as grounded in “a recognized requirement or demand for research; a supply of qualified researchers; ready availability of quality data; policies and procedures to facilitate productive interactions with other researchers; and a culture in which openness is encouraged and risk taking is acceptable.” All of these elements point to a larger systemic, cultural milieu necessary for governments’ policy analytical capacity.

While Wu et al. (2014) admit that systemic policy analytical capacity is to some extent limited to the individual or organizational level, the failure to account for it can undermine evidence- or data-based approaches to policy interventions. For example, Alshuwaikhat (2005) points to the case of environmental impact assessments (EIAs) in many Asian countries (e.g., Sri Lanka, Vietnam and Saudi Arabia) that, when first introduced in the early 1990s, did not take into consideration the policy analytical capacities required for their successful implementation. Insufficient experience with monitoring and evaluation, together with a lack of baseline data, meant that governments were unprepared to undertake the EIAs often required of them by multilateral lending institutions. “It seems that a political decision was taken without considering the technical and infrastructural aspects required to carry out assessments smoothly,” Alshuwaikhat (2005) concludes.

Even when data are available, low levels of policy analytical capacity can mean a failure to effectively incorporate scientific knowledge into decision-making processes (Howlett, 2009). The “overloading” of users’ capacity to assimilate information is what Dietz et al. (2003) identify as a potential source of governance failure in complex environmental systems. Weak policy analytical capacity, then, can defeat the core tenet of evidence-based approaches, which is that better decisions result when the best available information is incorporated and applied (Howlett, 2009). Such integration is part of the policy learning cycle, in which states, organizations and actors transfer knowledge from one setting or period of time to another and build what Wu et al. (2014) refer to as knowledge system capacity. Scholars of policy learning would contend that improved policy analytical capacity influences the learning process by enhancing information processing and utilization, increasing know-how and the likelihood of successful policy outcomes (Bennett & Howlett, 1992; Sabatier & Jenkins-Smith, 1993).

Low levels of policy analytical capacity among government actors can affect not only the ability to process information, disrupting the policy learning cycle, but also the ability to collect the types of data necessary for environmental management. In practice, I argue that low policy analytical capacity can influence what data and information are collected. Of course, environments may be considered “information-poor” for a variety of reasons, and information and data collection may be restricted by factors other than weak policy analytical capacity. Governments sometimes have an incentive to distort or limit the flow of information (Stiglitz, 2002). Environments may also be “information-poor” due to economic or political constraints that limit informational processes and access; poor institutional structure and lack of capacity that undermine information collection and distribution; and complex cultural or ideological contexts that impede the flow of information (Mol, 2009). Countries that do not comply with international standards or norms for environmental management may also choose to hide or obstruct data.

China's decentralized mode of environmental policy implementation provides a prime example of how varied policy analytical capacity translates into stark differences between provinces in what environmental data are collected and reported. While policies are formulated by the central government, it is left to provincial and other sub-national administrative units to implement them. The result is often a gap between central policy formulation and local execution, as sub-national officials can be selective about which national policies to implement and which to relegate to the back burner (Economy, 2004; Lieberthal, 1992).

This implementation gap, on a sub-national scale, gives rise to wide variations in environmental data availability between provinces in China (Yale Center for Environmental Law and Policy (YCELP), Center for International Earth Science Information Network at Columbia University, Chinese Academy for Environmental Planning, & the City University of Hong Kong, 2011). More data on a wider range of issues are available in provinces that have higher levels of economic development (as measured by GDP) and greater policy analytical capacity (as measured by the number of employees with post-graduate degrees) (Hsu, 2013). In some provinces, such as Inner Mongolia, officials at the provincial environmental protection bureau (EPB) pointed to the lack of any personnel with doctoral degrees, while places like Shanghai have multiple staff with doctorates in relevant environmental science and engineering fields. Shanghai's EPB stands out as one of the agencies with relatively greater policy analytical capacity, with its monitoring center regularly collaborating on advanced environmental data collection with international counterparts, including the U.S. Environmental Protection Agency (idem). Suzhou, a prefecture-level city ranked among the top 10 Chinese cities in terms of economic development as measured by GDP, boasted a real-time monitoring system for air and water pollution discharges that sent text messages when factories exceeded pollution limits. No other EPB in the study had similar technology or analytical capacity (idem).

The case of China suggests that policy analytical capacity varies and influences what data are collected. EPBs with seemingly greater “capacity” are able to collect a range of data using sophisticated technologies and in collaboration with international counterparts, while those with far less human and technical capacity tend to collect only the environmental data mandated through government directives. Such discrepancies become problematic when aggregated to a systemic scale: comparing the sum of provincial carbon emissions with the national total, Guan, Liu, Geng, Lindner, and Hubacek (2012) found a gap roughly the size of Japan's emissions. A loss of trust in the government's analytical capacities followed when Chinese netizens began to question the validity of official air quality statistics for Beijing compared with those released by the U.S. Embassy (AFP, 2011), threatening social unrest. How such deficiencies in policy analytical capacity can be assessed systematically and at a broader scale is the question discussed in the next section.
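The provincial-versus-national discrepancy reported by Guan et al. (2012) is, at its core, an aggregation check: sum the sub-national inventories and compare the result with the national total. The minimal Python sketch below illustrates that arithmetic only; the province names and emission figures are invented placeholders, not Guan et al.'s data, and the function names are hypothetical.

```python
# Hypothetical illustration of an aggregation check between sub-national and
# national inventories. All names and figures are invented placeholders,
# not the data analysed by Guan et al. (2012).


def aggregation_gap(subnational_totals: dict[str, float], national_total: float) -> float:
    """Sum of sub-national totals minus the national total.

    Positive: provinces collectively report more than the national inventory.
    Negative: provinces collectively report less.
    """
    return sum(subnational_totals.values()) - national_total


def relative_gap(subnational_totals: dict[str, float], national_total: float) -> float:
    """The same gap expressed as a fraction of the national total."""
    return aggregation_gap(subnational_totals, national_total) / national_total


# Made-up emissions in arbitrary units for three hypothetical provinces.
provinces = {"Province A": 120.0, "Province B": 95.0, "Province C": 60.0}
national = 250.0

print(aggregation_gap(provinces, national))  # 25.0
print(relative_gap(provinces, national))     # 0.1
```

A gap of zero would mean the two scales are consistent; any non-zero value signals that at least one level of reporting is incomplete or uses different methods.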

3 Measuring policy analytical capacity for environmental governance

While Howlett (2009) makes a strong case for the relevance of policy analytical capacity to evidence-based decision-making, assessing capacity is a difficult endeavor. Defining “governance” as the “capacity to govern,” Fukuyama (2013) points to the limitations of most existing measures of state quality and capacity, which rely almost exclusively on subjective expert survey data and are often viewed narrowly through the lens of democratic regimes. Datasets such as the World Bank Institute's Worldwide Governance Indicators (WGI) or the quality of government data from Bo Rothstein's Quality of Government Institute are constrained in terms of time series and rely on perception data that can be skewed depending on sampling. Furthermore, because the WGI metrics are highly correlated with gross domestic product (GDP), they offer little ability to differentiate the drivers of “good governance,” particularly since a positive relationship between GDP and some environmental indicators is well established (Bradshaw, Giam, & Sodhi, 2010; Dinda, 2004; Grossman & Krueger, 1995; Hsu, 2013; Mukherjee & Chakraborty, 2010; Stern, 2003).

Scholars in other disciplines also find attempts to measure capacity challenging and even problematic. Evaluating five indices that assess and compare technological capacity across countries, Archibugi and Coco (2005) found relative consensus on what defines “technology” (e.g., the number of patents as a measure of innovative capacity) but substantial divergence in the end results. Their analysis, while recognizing a degree of subjectivity on the part of each index's authors, concludes that the lack of international coordination and standardization of measurement is partly to blame. At the individual level, Howlett and Joshi-Koop (2011) evaluate policy analytical capacity through survey data on perceptions of policy capacity and an evaluation of personnel's post-secondary training, although their study was limited to a single country and similar data are not available at a global scale. Fukuyama (2013) suggests proxy measures of state capacity, such as tax extraction rates or the ability to generate accurate census data, as more indicative of a government's capacity to govern and achieve results. However, Rotberg (2014) cautions against such input measures, arguing instead for metrics that equate governance with performance and use outputs as the basis of evaluation. Yet even Rotberg (2014) stops short of providing concrete performance measures by which to evaluate governance.

One approach, although not without its own limitations, is to use data availability as a proxy for capacity. Riddell (2007) specifies that the requirements for policy analytical capacity include the “ready availability of quality data,” among other factors. The World Bank's (2004) index of “statistical capacity” (i.e., the ability to adhere to internationally accepted statistical standards and methods) captures three dimensions: statistical practice, data collection, and indicator availability. While these measures cannot speak to the efficacy of statistical systems or the willingness of decision-makers to formulate policies based on data, the International Development Association (IDA, 2004) has found the evaluation particularly useful in identifying countries with weak statistical capacity that need investment. Its analysis found that the lowest-scoring countries are those without established data collection systems as well as those that do not benefit from external financial or international support (idem). Further, the results were not aligned with (i.e., not positively correlated with) income levels, meaning weak statistical capacity can be found in poor and rich countries alike.
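To make the idea of a composite capacity score concrete, the sketch below combines scores on the three dimensions named above into a single number and flags low scorers. It is a hypothetical illustration only: the equal weighting, the 0 to 100 scale, the threshold of 50, and the country scores are assumptions, not the World Bank's published methodology.

```python
from statistics import mean

# Hypothetical composite "statistical capacity" score. Dimension names follow
# the text; equal weights, the 0-100 scale and the threshold are assumptions.
DIMENSIONS = ("statistical_practice", "data_collection", "indicator_availability")


def capacity_score(scores: dict[str, float]) -> float:
    """Unweighted mean of the three dimension scores (each assumed 0-100)."""
    return mean(scores[d] for d in DIMENSIONS)


def weak_capacity(countries: dict[str, dict[str, float]], threshold: float = 50.0) -> list[str]:
    """Names of countries whose composite score falls below the threshold, sorted."""
    return sorted(name for name, s in countries.items() if capacity_score(s) < threshold)


example = {
    "Country X": {"statistical_practice": 40.0, "data_collection": 30.0, "indicator_availability": 50.0},
    "Country Y": {"statistical_practice": 80.0, "data_collection": 70.0, "indicator_availability": 90.0},
}

print(capacity_score(example["Country X"]))  # 40.0
print(weak_capacity(example))                # ['Country X']
```

Because the score depends only on reporting practice, nothing in it ties capacity to income, which is consistent with the IDA finding that weak statistical capacity appears in rich and poor countries alike.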

Adopting a similar approach in the environmental domain, the availability of environmental data or the existence of monitoring infrastructure could indicate whether a country has the policy analytical capacity to collect such data. If the availability of environmental data is related to environmental performance (e.g., a positive correlation between data availability and higher levels of performance or quality), such a relationship might suggest the importance of policy analytical capacity to environmental governance. To evaluate these two indicators, data availability and environmental performance, the Environmental Performance Index (EPI) provides a useful source of information.
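The relationship proposed here can be checked with a simple correlation. When data availability is coded as a binary flag (1 = recent data reported, 0 = not), the Pearson correlation with a performance score is the point-biserial correlation. The sketch below assumes made-up scores for six hypothetical countries; a positive coefficient would be consistent with, but would not by itself establish, a link between capacity and performance.

```python
from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; with a 0/1 x this equals the point-biserial correlation."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical countries: 1 = recent data reported, 0 = no recent data.
availability = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
# Hypothetical performance scores on a 0-100 scale (invented values).
performance = [70.0, 65.0, 80.0, 40.0, 55.0, 35.0]

r = pearson(availability, performance)
print(f"r = {r:.3f}")
```

With real data, the same calculation would need care about confounders such as GDP, which the previous paragraphs note is correlated with both governance measures and some environmental indicators.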

The EPI is a global, biennial ranking of how well countries perform on high-priority environmental issues such as climate change, air quality and water resources. It is a composite index built on 19 indicators, which are weighted into policy issue categories and then grouped into two broad objectives, providing national comparisons at multiple levels of aggregation. The 2014 edition, the fifth produced by Yale and Columbia universities, covers 178 countries, representing 99% of the global population, 98% of global land area, and 97% of global gross domestic product (GDP) (Hsu et al., 2014).
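The EPI's structure (indicators weighted into issue categories, which are then grouped into objectives) amounts to nested weighted averages. The sketch below shows that aggregation under loudly hypothetical assumptions: the category assignments, weights and scores are illustrative and should not be read as the 2014 EPI's actual weighting scheme.

```python
# Hypothetical nested weighting in the spirit of the EPI:
# indicators -> issue categories -> objectives -> overall score.
# Category assignments, weights and scores are illustrative assumptions only.


def weighted_average(pairs) -> float:
    """Weighted mean of (value, weight) pairs; weights need not sum to 1."""
    pairs = list(pairs)
    total_weight = sum(w for _, w in pairs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in pairs) / total_weight


# objective -> (objective weight, {category: (category weight, {indicator: weight})})
STRUCTURE = {
    "Environmental Health": (0.4, {
        "Air Quality": (0.5, {"pm25_exposure": 1.0}),
        "Water and Sanitation": (0.5, {"drinking_water": 0.5, "sanitation": 0.5}),
    }),
    "Ecosystem Vitality": (0.6, {
        "Water Resources": (1.0, {"wastewater_treatment": 1.0}),
    }),
}


def composite(indicator_scores: dict[str, float], structure=STRUCTURE) -> float:
    """Aggregate indicator scores (0-100) up to a single overall score."""
    objective_pairs = []
    for objective_weight, categories in structure.values():
        category_pairs = []
        for category_weight, indicators in categories.values():
            category_score = weighted_average(
                (indicator_scores[name], w) for name, w in indicators.items()
            )
            category_pairs.append((category_score, category_weight))
        objective_pairs.append((weighted_average(category_pairs), objective_weight))
    return weighted_average(objective_pairs)


scores = {"pm25_exposure": 60.0, "drinking_water": 80.0,
          "sanitation": 40.0, "wastewater_treatment": 50.0}
print(composite(scores))  # 54.0
```

Because each level is a weighted average, a missing indicator cannot simply be skipped without changing the weights, which is one reason data gaps matter for composite indices like this one.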

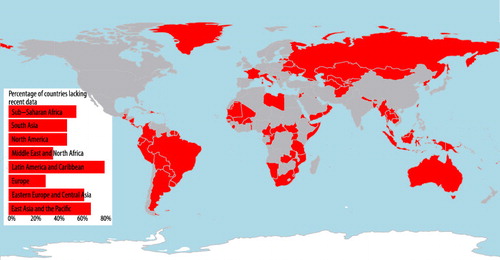

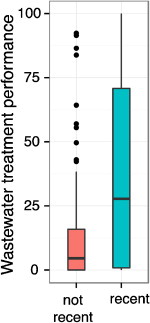

The 2014 EPI provides two cases through which to consider the environmental policy analytical capacity of countries, using data availability as a rough proxy. The first is the availability of recent data on wastewater treatment, where wastewater is defined as water that has been used by households, industries, and commercial establishments and that, unless treated, no longer serves a useful economic purpose and contains excessive nutrients or contaminants (Raschid-Sally & Jayakody, 2009; UNSD, 2012, p. 196). Fig. 1 highlights countries that lack any recent (after 2005) measure of wastewater treatment in country environment or statistical reports, data agencies, or reports to intergovernmental agencies (Malik, Hsu, Johnson, & de Sherbinin, 2015). The Latin America and Caribbean region has the largest number of countries lacking recent data, followed by the East Asia and Pacific region. Gaps in recently reported data do not necessarily seem related to economic development, as Australia and France are both identified as lacking recent data. Comparing the wastewater treatment performance of the two groups, countries that report more recent data tend to perform better overall than those that fail to report recent data, although the range for the former group is much wider than that of the latter (Fig. 2). This result suggests that countries reporting more recent wastewater treatment data tend to achieve higher environmental performance, as measured on the 2014 EPI wastewater treatment indicator.

Fig. 1 A map assessing the recentness of the world's wastewater reporting. The map does not measure treatment performance but rather reporting status. Countries whose reported data (if any) are not recent (i.e., not from after 2005) are shaded in red, while countries whose data are recent are shaded in gray. An absence of reported data is counted as “not recent,” and a reported value of “zero treatment” is counted as reported data.

Fig. 2 Countries that report more recent data (i.e., after 2005) tend to perform higher overall on the 2014 EPI wastewater treatment indicator than countries that lack recent data.
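The reporting rule in the Fig. 1 caption, together with the group comparison in Fig. 2, can be expressed compactly. In this hypothetical sketch, a country's data count as recent only if the latest reporting year is after 2005; missing data count as not recent, while a reported value of zero treatment still counts as reported. The country records are invented, and summarizing each group by median, minimum and maximum is one assumed way to compare them.

```python
from statistics import median
from typing import Optional

CUTOFF_YEAR = 2005  # "recent" means reported after 2005 (Fig. 1 caption)

# Hypothetical records: country -> (latest reporting year or None, indicator score 0-100).
# Every value is invented for illustration; none is EPI data.
RECORDS = {
    "A": (2010, 85.0),
    "B": (2003, 20.0),
    "C": (None, 0.0),   # nothing reported; the score of 0 is purely illustrative
    "D": (2008, 0.0),   # reported zero treatment still counts as reported data
    "E": (2012, 60.0),
    "F": (1999, 10.0),
}


def is_recent(latest_year: Optional[int]) -> bool:
    """Missing data counts as not recent; otherwise the year must be after the cutoff."""
    return latest_year is not None and latest_year > CUTOFF_YEAR


def summarize(records):
    """Median, minimum and maximum score for each reporting group."""
    groups = {"recent": [], "not_recent": []}
    for year, score in records.values():
        groups["recent" if is_recent(year) else "not_recent"].append(score)
    return {name: (median(s), min(s), max(s)) for name, s in groups.items()}


print(summarize(RECORDS))
# {'recent': (60.0, 0.0, 85.0), 'not_recent': (10.0, 0.0, 20.0)}
```

In this toy data the "recent" group has both the higher median and the wider range, mirroring the pattern described for Fig. 2.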

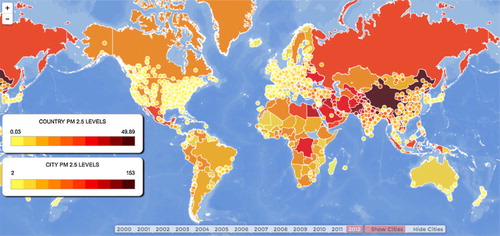

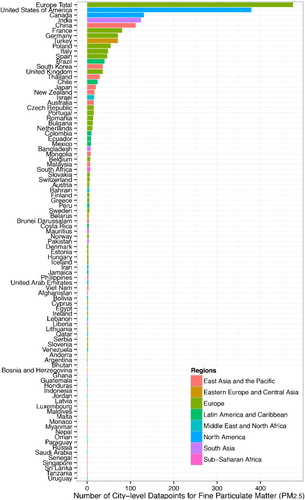

A second example is illustrated through globally available air quality data. Figs. 3 and 4 illustrate differences in the data available to assess exposure to fine particulate matter (PM2.5), pollutants invisible to the human eye that have the greatest health effects because of their ability to penetrate human lung and blood tissue (US EPA, 2013). The majority of ground-based city-level monitors for PM2.5 are located in developed regions in North America and Western Europe, with exceptions in China and India, which have the fourth- and fifth-largest numbers of monitors (WHO, 2014). Major gaps in available PM2.5 data can be found in Latin America, sub-Saharan Africa, Central Asia, and Russia, which has data only for its capital, Moscow. The scarcity of ground-based monitors is one reason the authors of the 2014 EPI opted to use satellite-derived measures of exposure to PM2.5 to develop national-level metrics of air quality (Hsu et al., 2014). City-level air monitoring data are clearly unavailable in many countries where national-level air quality is poor (as illustrated through the country-level shading in Fig. 3), including parts of Southeast Asia, Central Asia, North Africa and the Middle East, and sub-Saharan Africa. However, China and India, identified as the two countries with the world's worst air quality, have relatively high numbers of ground-based monitors despite their high levels of fine particulate pollution.

Fig. 3 Map of the WHO's Ambient (outdoor) air pollution in cities database (WHO, 2014), showing disparities in reported data throughout the world. An interactive version of this map can be found online: http://www.theatlantic.com/health/archive/2014/06/the-air-we-breathe/372411/.

Fig. 4 Number of city-level data points for fine particulate matter (PM2.5) available in the WHO's 2014 Ambient (outdoor) air pollution database (WHO, 2014).
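Counts like those in Fig. 4, and the observation that poor air quality often coincides with sparse monitoring, can be reproduced from a city-level table with a few lines of Python. The sketch below uses invented records; the exposure threshold of 25 and the two-city minimum are illustrative assumptions, not WHO guideline values or EPI criteria.

```python
from collections import Counter

# Hypothetical city-level records (country, city); not drawn from the WHO (2014) database.
CITY_RECORDS = [
    ("Country P", "City 1"), ("Country P", "City 2"), ("Country P", "City 3"),
    ("Country Q", "City 4"),
    ("Country R", "City 5"), ("Country R", "City 6"),
]

# Hypothetical national PM2.5 exposure in micrograms per cubic metre (e.g. satellite-derived).
NATIONAL_PM25 = {"Country P": 12.0, "Country Q": 30.0, "Country R": 45.0, "Country S": 50.0}


def monitor_counts(records) -> Counter:
    """Number of city-level data points per country."""
    return Counter(country for country, _city in records)


def poorly_monitored_hotspots(records, exposure, threshold=25.0, min_cities=2) -> list[str]:
    """Countries above an illustrative exposure threshold with fewer than min_cities data points."""
    counts = monitor_counts(records)  # a Counter returns 0 for countries with no records
    return sorted(c for c, pm in exposure.items() if pm > threshold and counts[c] < min_cities)


print(monitor_counts(CITY_RECORDS).most_common())
# [('Country P', 3), ('Country R', 2), ('Country Q', 1)]
print(poorly_monitored_hotspots(CITY_RECORDS, NATIONAL_PM25))
# ['Country Q', 'Country S']
```

Pairing a ground-monitor count with a satellite-derived exposure estimate is what makes "Country S" visible at all: it has no city records, so it would not appear in a monitor-only table.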

4 New actors to enhance policy analytical capacity

The cases of global air and water data availability presented in the previous section demonstrate one factor, varied environmental monitoring and reporting, that could affect policy analytical capacity. While the Rio+20 Earth Summit's outcome document, “The Future We Want,” emphasizes the need to incorporate indicators and specific targets to track progress toward the SDGs, whether countries will have the ability to do so remains an open question. This section discusses the potential for new actors and sources of data, outside “official” government data collection channels, to address these shortcomings. How might these new actors and sources of data bolster countries’ policy analytical capacity for environmental monitoring and governance?

As Jänicke (1997) states, environmental policy capacity is not restricted to national policies but increasingly relies on “societal forces of all kinds.” The participation of multiple actors can contribute to a form of analytic deliberation that, in turn, can enhance systemic policy analytical capacity across society. Dietz et al. (2003) argue that such analytic deliberation improves information provision and builds the trust and social capital that allow actors to deal with change and inevitable conflict, and ultimately to reach consensus on governance actions. In this sense, the analytic deliberation afforded by multiple actors can be the catalyst that data- and evidence-based approaches need in order to proceed.

India's introduction of an air quality index in 2014 illustrates how emerging analytic deliberation among government and non-government actors can enhance policy analytical capacity. New data presented in the New York Times juxtaposing New Delhi's air quality with that of Beijing (Harris, 2014), a city infamous for “airpocalyptic” levels of pollution (Lim, 2013), sparked a national conversation. Scientists, the media, and non-government organizations such as the Centre for Science and Environment began to question the veracity of government denials of the capital city's poor air quality (Mazoomdaar, 2014). The debate eventually led to New Delhi's government announcing new measures to improve real-time air quality data provision in major cities, which will be used to construct an index to communicate health risks to the public (Hsu & Yin, 2014).

As the example of India's air quality shows, citizen scientists, independent watchdogs, private sector companies and third-party organizations can not only contribute to the demand for new or improved data, but can also contribute data in a way that enhances the policy analytical capacity of governments. Within the international relations literature, the science-policy interface has generally been characterized as a dialectic between scientists and policymakers, largely ignoring the role of citizens and other third parties in knowledge generation (Bäckstrand, 2003). The last decade has witnessed growth in the generation and use of geographic information, due particularly to the proliferation of open-source data (such as the user-generated database OpenStreetMap) and the increasing realization that citizens can play a key role in contributing data through crowdsourcing, user-contributed data, and what is called Volunteered Geographic Information (VGI). The World Water Monitoring Challenge, for example, encourages people to monitor local water quality and share the results. Other research efforts ask citizens to monitor plankton biodiversity in the oceans, or to donate spare computer time to run climate simulations and models as a cost-effective means of sourcing processing power (Carrington, 2014; Kinver, 2014). The Air Quality Egg, a “community-led air quality sensing network,” allows individuals with the device to monitor and report health-related air pollutants in real time (www.airqualityegg.com).

In terms of supply, user-contributed data could serve as ground truth for government or other top-down data, reducing uncertainty in official accounts and statistics. Mobile phones equipped with ambient sensors would allow citizens to monitor pollutants in the air or contaminants in their drinking water (US EPA, 2014). User-contributed data could also improve upon existing sources of data used to construct metrics to track progress. Already, citizen scientists are contributing data on species’ locations through projects like eBird (www.ebird.org) to refine habitat ranges (Sullivan et al., 2009). The Water Reporter smartphone app (www.waterreporter.org) allows citizens to upload photos or report polluted runoff within a watershed. Photos of potential problems in waterways are sent to designated water managers, who are responsible for resolving them. If made low-cost and readily available, such technologies could equip citizen scientists with an arsenal of tools with which to contribute vast amounts of environmental data.
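One simple way to use citizen readings as ground truth is to pair them with official readings taken at the same place and time and summarize the differences. The sketch below computes mean bias and root-mean-square error (RMSE); the readings and function name are hypothetical, and real comparisons would also need to account for sensor calibration and siting.

```python
from math import sqrt


def compare_readings(official: list[float], citizen: list[float]) -> tuple[float, float]:
    """Mean bias and RMSE of citizen minus official readings at paired sites/times."""
    if len(official) != len(citizen) or not official:
        raise ValueError("need equal-length, non-empty paired readings")
    diffs = [c - o for o, c in zip(official, citizen)]
    bias = sum(diffs) / len(diffs)                      # systematic over/under-reading
    rmse = sqrt(sum(d * d for d in diffs) / len(diffs))  # typical size of disagreement
    return bias, rmse


# Hypothetical paired PM2.5 readings (same sites and hours), invented values.
official = [35.0, 40.0, 55.0, 60.0]
citizen = [38.0, 44.0, 52.0, 66.0]

bias, rmse = compare_readings(official, citizen)
print(f"bias={bias:.2f}, rmse={rmse:.2f}")  # bias=2.50, rmse=4.18
```

A small bias with a large RMSE would point to noisy but unbiased sensors; a large bias would suggest either miscalibrated citizen devices or an under-reporting official network, which is exactly the question ground-truthing is meant to raise.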

So far, user-contributed or crowdsourced data have not been considered for integration into official policy processes, such as the SDG implementation dialogs. Adopting these new data raises a series of new questions. How can leaders engage citizens to contribute data meaningfully? Through what pathways can citizens participate to maximize transparency, as a way of ensuring that governments are held accountable to the SDGs? Another question is how, and by what means, citizen science can be made credible and legitimate in policy processes. Bäckstrand (2003) notes that the lack of a theoretical foundation for coupling democratic citizen participation with scientific assessment is a major cause of the separation between civic science and policy. The uptake of citizen science data into official policy processes remains relatively uncharted territory.

Furthermore, if citizens are to contribute data for the purpose of enhancing government policy analytical capacity, protocols and guidelines must be established to protect individual rights and privacy. Individuals should know how their data will be used and be assured that their privacy will be maintained. Following controversies surrounding the National Security Agency (NSA) and security breaches at companies like Target, citizens in the United States are particularly wary of government surveillance, intrusions on privacy, and misuse of personal data (Stout, 2014). The OECD's recently updated Guidelines on the Protection of Privacy and Transborder Flows of Personal Data provide a starting point for harmonizing privacy laws to protect transborder flows of personal information. In areas of relative information poverty, where information and communication technologies are still emerging, more can be done to equip citizens with the tools to participate equally in the data revolution. Establishing low-cost technology transfer mechanisms that provide citizens in these countries with free or affordable personal environmental monitoring devices or community-based systems could be a specific task of the UN SDG process.
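As an illustration of the kind of protocol described above, the sketch below pseudonymizes contributor IDs with a keyed hash (HMAC-SHA256 from Python's standard library) and rounds coordinates before release. This is one possible design under stated assumptions, not a requirement of the OECD Guidelines; the record fields, key handling and two-decimal rounding are hypothetical choices.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash of the contributor ID; the key stays with the data steward."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def coarsen(lat: float, lon: float, decimals: int = 2) -> tuple[float, float]:
    """Round coordinates (2 decimals is roughly 1 km) to blur exact home locations."""
    return round(lat, decimals), round(lon, decimals)


def prepare_for_release(record: dict, secret_key: bytes) -> dict:
    """Drop the raw ID, keep a pseudonym, coarse location and the measurement."""
    lat, lon = coarsen(record["lat"], record["lon"])
    return {
        "contributor": pseudonymize(record["user_id"], secret_key),
        "lat": lat,
        "lon": lon,
        "pm25": record["pm25"],
    }


# Hypothetical submission from a personal monitoring device.
raw = {"user_id": "alice", "lat": 28.61394, "lon": 77.20902, "pm25": 150.0}
released = prepare_for_release(raw, secret_key=b"replace-with-a-secret-key")
print(released["lat"], released["lon"])  # 28.61 77.21
```

Using a keyed hash rather than a plain hash means outsiders cannot recompute pseudonyms from guessed user IDs, while the steward can still link repeat submissions from the same contributor.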

4.1 Business and third-party engagement

Some businesses are better positioned than governments to collect environmental data. Coca-Cola operates in over 200 countries and since 2004 has invested more than USD 1.5 million in recording and assessing physical water risk parameters, including water quantity, baseline and groundwater stress, and drought severity (Coca-Cola, 2012). As a beverage company that requires 333 ounces of water to generate $1 of revenue, Coca-Cola depends on accurate knowledge of water resources for its bottom line. The company has also been criticized for over-extracting water in water-stressed areas of countries such as India, one of its biggest growth markets. In 2011 it partnered with the environmental think tank World Resources Institute to make all of its proprietary data publicly available through a web platform called Aqueduct, as a way to encourage other businesses to evaluate their own water impacts.

Third-party organizations can also validate data. The Sea Around Us, a research group at the University of British Columbia, for example, regularly “reconstructs” global fisheries data that governments often report incompletely or inaccurately. Its researchers have noted that the UN Food and Agriculture Organization (FAO), which maintains the only global database on fisheries, underestimates the percentage of overexploited and collapsed fish stocks (Froese, Zeller, Kleisner, & Pauly, 2012). This discrepancy arises largely from the variable quality of reported catch data, but also because the FAO overlooks other valuable sources of data, such as reconstructed catch data. The result is a myopic view of the status of the world's fisheries, which could have disastrous consequences for global aquaculture and ocean health.

Yet SDG negotiations have so far not explored the full range of possibilities for private-sector and non-governmental engagement. The most recent progress report on the SDG discussions states that “business should be part of the solution,” but proposes only to encourage “greater private sector uptake of sustainability reporting.” A 2013 KPMG survey shows that 71 percent of companies worldwide already conduct sustainability reporting (KPMG, 2013), so reporting alone is not the main gap. The more critical issue is how companies can be incentivized to share data and contribute to measuring progress toward the SDGs. If Coca-Cola collects the best global water data, why not use its data to measure progress toward a global water SDG? If Google is best able to process vast amounts of satellite data, why not use its computing power?

Corporate or private sponsorship of new data streams, though it may be opposed by those who fear the commercialization of the SDGs, could encourage innovative, sustainability-minded companies and individuals to share data. Developers or technology companies could also be crowdsourced to build a transparent, centralized online “dashboard” that makes it easy for individuals, businesses, and third-party institutions to contribute and share data. Such a platform could be administered by the UN Environment Programme (UNEP), which countries at the Rio+20 Earth Summit pledged to strengthen.

4.2 Potential drawbacks

While the engagement of new actors, from citizens to scientists and businesses, presents opportunities, it also poses a series of challenges. Citizens may lack the training, or the policy analytical capacity of their own, to collect and report data accurately. Monitoring environmental phenomena or collecting data often requires training in specific scientific protocols; if citizens are not appropriately trained, the reliability of the data they collect could be compromised. Measures to anonymize or protect the identity of data contributors could also have the adverse effect of allowing spurious or false data to be reported if devices fall into the hands of unqualified users. Businesses, which face political incentives similar to those of governments, may also choose which data to report and which to conceal. Finally, the emergence of multiple streams of information raises the question of who has the authority to determine which data are accurate or represent the “truth.”

While determining authority is a more philosophical and contentious problem, a range of methods has been proposed to address the verification and quality of citizen data. First, citizen science does not imply a complete lack of training or qualification on the part of contributors. Rather, contributors are “specialized citizens” (Fischer, 1993), or even scientists acting as citizens, and citizen scientists often must complete protocols and training before contributing data. Second, some organizations that integrate citizen science design data collection to be foolproof, requiring no specialized training or equipment. For example, the Creek Watch project, in which participants use an iPhone application and website to report water flow and trash data from creeks and rivers, defines parameters such as flow rate that are simple enough for anyone to collect (Kim, Robson, Zimmerman, Pierce, & Haber, 2011). Third, other projects use expert review to ensure the quality of citizen data (Wiggins, Newman, Stevenson, & Crowston, 2011).

Despite these challenges, evidence-based approaches rest on the belief that better decisions are made when the most information is at hand, and that broad access to such data improves results (Bennett & Howlett, 1992; Howlett, 2009). Repeated monitoring and evaluation of results from a range of sources and sensors, whether technical or human, make it possible to cross-check and verify data and, ultimately, to improve environmental decision-making and management.
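The kind of cross-checking described above can be sketched in a few lines of Python. The sketch assumes that several sources (an official monitor, citizen sensors, and a company sensor, all hypothetical, with made-up values) report the same pollutant for the same site and time window. It flags readings that deviate from the consensus using one common robust outlier rule, the modified z-score based on the median absolute deviation with a cutoff of 3.5. This is an illustrative verification rule only, not a procedure used by the projects cited here.

```python
from statistics import median


def flag_outliers(readings, threshold=3.5):
    """Flag readings that disagree strongly with the consensus of all sources.

    readings: dict mapping a source label to a value measured at the same site
    and time window (e.g. PM2.5 in micrograms per cubic metre).
    Returns a dict mapping each source label to True (flagged) or False.
    """
    values = list(readings.values())
    med = median(values)
    # Median absolute deviation (MAD): a spread measure a few bad readings cannot inflate.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # At least half the sources report the median exactly; flag any value that differs.
        return {src: v != med for src, v in readings.items()}
    # Modified z-score (0.6745 rescales the MAD to be comparable with a standard deviation).
    return {src: abs(0.6745 * (v - med) / mad) > threshold for src, v in readings.items()}


# Hypothetical readings from official, citizen, and company sensors at one site.
readings = {
    "official_monitor": 52.0,
    "citizen_sensor_a": 55.0,
    "citizen_sensor_b": 49.0,
    "company_sensor": 51.0,
    "citizen_sensor_c": 140.0,
}
flags = flag_outliers(readings)
print([src for src, flagged in flags.items() if flagged])  # ['citizen_sensor_c']
```

A median-based rule is used here rather than a mean and standard deviation because a single faulty sensor cannot pull the consensus toward itself. Flagged readings would still call for human or expert review before being discarded.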

5 Conclusion

This paper has discussed how varied policy analytical capacity can be evaluated at the systemic level through observed gaps in environmental data monitoring and reporting among countries. By examining global data availability for air and water quality performance, it has developed a rough proxy of policy analytical capacity for global environmental systems. Although this proxy is an approximation at best, examining data availability can help identify areas where the capacity to monitor and report on environmental issues may be low.
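As a rough illustration of a data-availability proxy, the following sketch scores each country by the share of a required indicator set for which it publicly reports data. The indicator names, country records, and the 0.5 cutoff are hypothetical, and the scoring is a simplified assumption rather than the exact construction used in this paper.

```python
def availability_score(reported, required):
    """Share of required indicators for which a country publicly reports data (0 to 1)."""
    required = set(required)
    if not required:
        raise ValueError("the required indicator set must not be empty")
    # Indicators reported but not required do not raise the score.
    return len(set(reported) & required) / len(required)


# Hypothetical indicator set and reporting records, for illustration only.
REQUIRED = {"PM2.5", "PM10", "NO2", "wastewater_treatment", "drinking_water_quality"}
reported_by_country = {
    "Country A": {"PM2.5", "PM10", "NO2", "wastewater_treatment"},
    "Country B": {"PM10"},
    "Country C": set(),
}

scores = {c: availability_score(r, REQUIRED) for c, r in reported_by_country.items()}
# Hypothetical cutoff: a score below 0.5 marks a potential area of low policy analytical capacity.
low_capacity = sorted(c for c, s in scores.items() if s < 0.5)
print(scores)        # {'Country A': 0.8, 'Country B': 0.2, 'Country C': 0.0}
print(low_capacity)  # ['Country B', 'Country C']
```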

In the context of the current debate surrounding the SDGs, addressing varied policy analytical capacity among countries is critical if global targets are to be measured and progress tracked. In November 2013, a High-Level Panel of the United Nations called for a “Data Revolution” to address the lack of reliable statistics for many countries (UN, 2013). It is in this gap that a new set of actors, such as private-sector businesses, third-party organizations, and individuals, can catalyze enhanced analytical capacity for policy change.

References

- AFP. Beijing air pollution ‘hazardous’: US embassy. Agence France-Presse. 2011. Available from: http://www.google.com/hostednews/afp/article/ALeqM5iJTkt3-cVITDVI6xipFX4aAyYjpw?docId=CNG.d957d0999e1088b0ce61729ec5b6c9f1.5f1 (accessed 1.11.12).

- H.M. Alshuwaikhat. Strategic environmental assessment can help solve environmental impact assessment failures in developing countries. Environmental Impact Assessment Review. 25(4): 2005; 307–317.

- D. Archibugi, A. Coco. Measuring technological capabilities at the country level: A survey and a menu for choice. Research Policy. 34(2): 2005; 175–194.

- K. Bäckstrand. Civic science for sustainability: Reframing the role of experts, policy-makers and citizens in environmental governance. Global Environmental Politics. 3(4): 2003; 24–41.

- C.J. Bennett, M. Howlett. The lessons of learning: Reconciling theories of policy learning and policy change. Policy Sciences. 25(3): 1992; 275–294.

- P.K. Blind. Building trust in government in the twenty-first century: Review of literature and emerging issues. 7th Global Forum on Reinventing Government: Building Trust in Government. 2007, June 26–29.

- C.J.A. Bradshaw, X. Giam, N.S. Sodhi. Evaluating the relative environmental impact of countries. PLoS ONE. 5(5): 2010; e10440.

- D. Carrington. Citizen scientists: Now you can link the UK winter deluge to climate change. The Guardian. 2014. Available from: http://www.theguardian.com/environment/damian-carrington-blog/2014/mar/04/citizen-scientists-now-you-can-link-the-uk-winter-deluge-to-climate-change

- D.W. Case. The law and economics of environmental information as regulation. Environmental Law Reporter. 31: 2001; 10773–10789.

- M. Castells. The rise of the network society. Volume I of The information age: Economy, society, and culture. 1996; Blackwell: Malden, MA.

- M. Castells. The power of identity. Volume II of The information age: Economy, society, and culture. 1997; Blackwell: Malden, MA.

- M. Castells. End of millennium. Volume III of The information age: Economy, society, and culture. 1997; Blackwell: Malden, MA.

- Coca-Cola. Water stewardship. 2012. Available from: http://www.coca-colacompany.com/sustainabilityreport/world/water-stewardship.html#section-looking-ahead-a-more-nuanced-approach-to-replenishment

- T. Dietz, E. Ostrom, P.C. Stern. The struggle to govern the commons. Science. 302(5652): 2003; 1907–1912.

- S. Dinda. Environmental Kuznets curve hypothesis: A survey. Ecological Economics. 49: 2004; 431–455.

- E.C. Economy. The river runs black: The environmental challenge to China's future. 2004; Cornell University Press: Ithaca, NY.

- D.C. Esty. Toward data-driven environmentalism: The environmental sustainability index. The Environmental Law Reporter: News & Analysis. 2001, May; 2001.

- D.C. Esty. Environmental protection in the information age. New York University Law Review. 79: 2004; 115–211.

- F. Fischer. Citizen participation and the democratization of policy expertise: From theoretical inquiry to practical cases. Policy Sciences. 26(3): 1993; 165–187.

- A. Florini. Introduction: The battle over transparency. In A. Florini (Ed.), The right to know: Transparency for an open world. 2007; Columbia University Press: New York. 1–16.

- J. Foulon, P. Lanoie, B. Laplante. Incentives for pollution control: Regulation or information? Journal of Environmental Economics and Management. 44(1): 2002; 169–187.

- R. Froese, D. Zeller, K. Kleisner, D. Pauly. What catch data can tell us about the status of global fisheries. Marine Biology. 2012. doi:10.1007/s00227-012-1909-6.

- F. Fukuyama. What is governance? Governance. 26(3): 2013; 347–368.

- G.M. Grossman, A.B. Krueger. Economic growth and the environment. The Quarterly Journal of Economics. 110(2): 1995; 353–377.

- D. Guan, Z. Liu, Y. Geng, S. Lindner, K. Hubacek. The gigatonne gap in China's carbon dioxide inventories. Nature Climate Change. 2: 2012; 672–675.

- P.S.A. Gupta, J. Christopher, R. Wang, Y. Lee Gehrig, N. Kumar. Atmospheric Environment. 40: 2006; 5880–5892.

- M. Hammersley. Is the evidence-based practice movement doing more good than harm? Reflections on Iain Chalmers’ case for research-based policy making and practice. Evidence & Policy: A Journal of Research, Debate and Practice. 1(1): 2005; 85–100.

- G. Harris. Beijing's air would be a step up for smoggy Delhi. The New York Times. 2014, January 26.

- M. Howlett. Policy analytical capacity and evidence-based policy-making: Lessons from Canada. Canadian Public Administration. 52(2): 2009; 153–175.

- M. Howlett, S. Joshi-Koop. Transnational learning, policy analytical capacity, and environmental policy convergence: Survey results from Canada. Global Environmental Change. 21(1): 2011; 85–92.

- A. Hsu. Limitations and challenges of provincial environmental protection bureaus in China's environmental monitoring, reporting, and verification. Environmental Practice. 15(3): 2013; 280–292.

- A. Hsu, J. Emerson, M. Levy, A. de Sherbinin, L. Johnson, O. Malik. The 2014 environmental performance index. 2014; Yale Center for Environmental Law and Policy: New Haven, CT. Available from: http://www.epi.yale.edu

- A. Hsu, D. Yin. New air quality index may help India's cities breathe easier. The Huffington Post. 2014, August. Available from: http://www.huffingtonpost.com/angel-hsu/new-air-quality-index-may_b_5674853.html

- International Development Association. Measuring results: Improving national statistics in IDA countries. 2004; IDA.

- M. Jänicke. The political system's capacity for environmental policy. In National environmental policies. 1997; Springer: Berlin, Heidelberg. 1–24.

- M. Jänicke. Trend-setters in environmental policy: The character and role of pioneer countries. European Environment. 15(2): 2005; 129–142.

- S. Kim, C. Robson, T. Zimmerman, J. Pierce, E.M. Haber. Creek watch: Pairing usefulness and usability for successful citizen science. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 2011, May; ACM. 2125–2134.

- M. Kinver. Citizen science study to map the oceans’ plankton. BBC News. 2014. Available from: http://www.bbc.co.uk/news/science-environment-26483166

- P.R. Kleindorfer, E.W. Orts. Informational regulation of environmental risks. Risk Analysis. 18: 1999; 155–170.

- KPMG. The KPMG survey of corporate sustainability reporting 2013. 2013. Available from: http://www.kpmg.com/Global/en/IssuesAndInsights/ArticlesPublications/corporate-responsibility/Documents/corporate-responsibility-reporting-survey-2013.pdf

- K. Levin, B. Cashore, S. Bernstein, G. Auld. Playing it forward: Path dependency, progressive incrementalism, and the ‘Super Wicked’ problem of global climate change. International Studies Association 48th Annual Convention, February (Vol. 28). 2007, February.

- K. Lieberthal. Introduction. In K.G. Lieberthal, D.M. Lampton (Eds.), Bureaucracy, politics, and decision making in post-Mao China. 1992; University of California Press: Berkeley.

- L. Lim. Beijing's ‘Airpocalypse’ spurs pollution controls, public pressure. NPR. 2013. Available from: http://www.npr.org/2013/01/14/169305324/beijings-air-quality-reaches-hazardous-levels (accessed 25.07.13).

- O. Malik, A. Hsu, L. Johnson, A.A. de Sherbinin. A global indicator of wastewater treatment to inform the Sustainable Development Goals (SDGs). Environmental Science and Policy. 48: 2015; 172–185.

- J. Mazoomdaar. Why Delhi is losing its clean air war. BBC News. 2014, February. Available from: http://www.bbc.com/news/world-asia-india-26012671

- S. Metzenbaum. Making measurement matter: The challenge and promise of building a performance-focused environmental protection center. Report CPM-98-2. 1998; Brookings Institution's Center for Public Management: Washington, DC. Available from: http://www.brook.edu/gs/cpm/metzenbaum.pdf

- A.P.J. Mol. Environmental governance in the information age: The emergence of informational governance. Environment and Planning C: Government and Policy. 24: 2006; 497–514.

- A.P.J. Mol. Environmental governance through information: China and Vietnam. Singapore Journal of Tropical Geography. 30: 2009; 114–129.

- S. Mukherjee, D. Chakraborty. Is environmental sustainability influenced by socioeconomic and sociopolitical factors? Cross-country empirical analysis. Sustainable Development. 2010.

- OECD. Guidelines on the protection of privacy and transborder flows of personal data (2013 revision). 2013; OECD: Paris. Available from: http://www.oecd.org/sti/ieconomy/privacy.htm#newguidelines

- W. Parsons. Not just steering but weaving: Relevant knowledge and the craft of building policy capacity and coherence. Australian Journal of Public Administration. 63(1): 2004; 43–57.

- L. Raschid-Sally, P. Jayakody. Drivers and characteristics of wastewater agriculture in developing countries: Results from a global assessment. Vol. 127. 2009; IWMI.

- N. Riddell. Policy research capacity in the federal government. 2007; Policy Research Initiative: Ottawa.

- R.I. Rotberg. Good governance means performance and results. Governance. 2014.

- P. Sabatier, H.C. Jenkins-Smith. Policy change and learning: An advocacy coalition approach. 1993; Westview Press: Boulder.

- D. Stern. The rise and fall of the environmental Kuznets curve. Rensselaer Working Papers in Economics. 2003. Available from: www.economics.rpi.edu/workingpapers/rpi0302.pdf (accessed 27.12.12).

- J. Stiglitz. Transparency in government. In The right to tell. 2002; The World Bank: Washington, DC.

- H. Stout. Newly wary, shoppers trust cash. The New York Times. 2014, February. Available from: http://www.nytimes.com/2014/02/03/business/newly-wary-shoppers-trust-cash.html?_r=0

- B.L. Sullivan, C.L. Wood, M.J. Iliff, R.E. Bonney, D. Fink, S. Kelling. eBird: A citizen-based bird observation network in the biological sciences. Biological Conservation. 142(10): 2009; 2282–2292.

- T. Tietenberg. Disclosure strategies for pollution control. Environmental & Resource Economics. 11: 1998; 587–588.

- U.S. Environmental Protection Agency (USEPA). Particulate matter. 2013. Available from: http://www.epa.gov/pm/ (accessed 10.02.13).

- U.S. Environmental Protection Agency (USEPA). Next generation air monitoring. 2014. Available from: http://www.epa.gov/airscience/next-generation-air-measuring.htm

- UN. What is the data revolution? UN High-Level Panel on the Post-2015 Development Agenda. 2013. Available from: http://www.post2015hlp.org/wp-content/uploads/2013/08/What-is-the-Data-Revolution.pdf

- United Nations Statistics Division (UNSD). System of environmental-economic accounting for water. 2012; United Nations: New York. 196 pp. Available from: http://unstats.un.org/unsd/envaccounting/seeaw/seeawaterwebversion.pdf

- K. Van Kersbergen, F. Van Waarden. ‘Governance’ as a bridge between disciplines: Cross-disciplinary inspiration regarding shifts in governance and problems of governability, accountability and legitimacy. European Journal of Political Research. 43: 2004; 143–171.

- H. Wang, J. Bi, D. Wheeler, J. Wang, D. Cao, G. Lu. Environmental performance rating and disclosure: China's GreenWatch program. Journal of Environmental Management. 71: 2004; 123–133.

- A. Wiggins, G. Newman, R.D. Stevenson, K. Crowston. Mechanisms for data quality and validation in citizen science. 2011 IEEE Seventh International Conference on e-Science Workshops. 2011, December; 14–19.

- World Health Organization (WHO). Ambient (outdoor) air pollution in cities database 2014. 2014; World Health Organization: Geneva. Available from: http://www.who.int/phe/health_topics/outdoorair/databases/cities/en/

- X. Wu, M. Ramesh, M. Howlett. Blending skills and resources: A matrix model of policy capacities. Paper presented at the Lee Kuan Yew School-Zhejiang Workshop on Policy Capacity, Hangzhou, China. 2014, May.

- Yale Center for Environmental Law and Policy (YCELP), Center for International Earth Science Information Network at Columbia University, Chinese Academy for Environmental Planning, and the City University of Hong Kong. Towards a China environmental performance index. 2011. Available from: http://environment.yale.edu/envirocenter/files/China-EPI-Report.pdf