?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Data-driven most powerful tests are statistical hypothesis decision-making tools that deliver the greatest power against a fixed null hypothesis among all corresponding data-based tests of a given size. When the underlying data distributions are known, the likelihood ratio principle can be applied to conduct most powerful tests. Reversing this notion, we consider the following questions. (a) Assuming a test statistic, say T, is given, how can we transform T to improve the power of the test? (b) Can T be used to generate the most powerful test? (c) How does one compare test statistics with respect to an attribute of the desired most powerful decision-making procedure? To examine these questions, we propose one-to-one mapping of the term “most powerful” to the distribution properties of a given test statistic via matching characterization. This form of characterization has practical applicability and aligns well with the general principle of sufficiency. Findings indicate that to improve a given test, we can employ relevant ancillary statistics that do not have changes in their distributions with respect to tested hypotheses. As an example, the present method is illustrated by modifying the usual t-test under nonparametric settings. Numerical studies based on generated data and a real-data set confirm that the proposed approach can be useful in practice.

1 Introduction

Methods for developing and examining data-based decision-making mechanisms have been widely established in both theoretical and experimental statistical frameworks. The common approach for evaluating modern data-based testing algorithms follows the standards and foundations formulated nearly a century ago. In this context, for an extensive review and associated examples we refer the reader to Lehmann and Romano (Citation2005). The criteria for which statistical tests are commonly competed against each other uses the following prescription: (a) Type I error (TIE) rates of considered tests are fixed at the same level, say α; and (b) power levels of the tests are compared. This classical principle was largely created and advocated by J. Neyman and E. S. Pearson in a series of substantive papers published during 1928–1938 (e.g., Lehmann Citation1993). In this framework, the likelihood methodology is associated with the likelihood ratio concept, which allows for the development of powerful statistical inference tools in decision-making tasks.

In view of this, the likelihood ratio principle can be employed across a wide range of decision-making problems, although likelihood ratio tests are not completely specified in many practical applications. Cases exist in which estimated parametric likelihood ratio statistics can have different formulations depending upon the underlying schemes of estimations. Other times, the relevant likelihood functions may be quite complicated when, for example, the observations belong to correlated longitudinal data subject to some type of missing data mechanism. There are other nonparametric scenarios that limit our ability to write corresponding likelihood ratio statistics. A systematic study of the inherent properties of likelihood ratios is necessary for proposing policies to advise on procedures for constructing, modifying, and/or selecting test statistics.

Without loss of generality, and in order to simplify the explanations of the main aim of this article, we state the following formal notations. Assume we observe the underlying data D with the goal of testing a simple null hypothesis H0 against its simple alternative H1. To this end, we let denote a real valued one-dimensional statistic based on D that supports rejection of H0, if T(D) > C, where C is a fixed test-threshold. We write

and

to denote the probability and expectation under Hk,

, respectively. Throughout most of this article, we suppose that the probability distributions

of D are absolutely continuous with respect to a given sigma finite measure τ defined over

, where

is an additive class of sets in a space, say

, over which D is distributed. Then, we have nonnegative probability density functions f0 and f1 with respect to τ that satisfy

Note that f0 and f1 need not belong to the same parametric family of distributions. Define the probability density functions and

of T such that

where

means the indicator function, Hk is assumed to be true, and

. In this framework, the likelihood ratio

is the most powerful (MP) test statistic, if

and

, for all

. In scenarios when the random observable data D is multidimensional, f0 and f1 are generalized joint probability density with respect to τ (e.g., Lehmann Citation1950).

For the sake of simplicity, it will be assumed that in a case when a researcher plans to employ a test statistic , where L(u) is a monotonically increasing function with the inverse function

, we suppose the test statistic

is in use and T(D) is MP.

The starting point of our study is associated with the following property of Λ that can be found in Vexler and Hutson (Citation2018) and is included for the sake of completeness.

Proposition 1.1.

The likelihood ratio statistic Λ satisfies for all u > 0.

The proof is deferred to the supplementary materials.

An interesting observation is that the likelihood ratio , based on the likelihood ratio Λ is itself, forms the likelihood ratio Λ, that is,

. Consider the situation when a value of a statistic or a single data point, say X, is observed. The best transformation of X for making a decision with respect to H0 against H1 is the ratio

. In the case

, the observed statistic cannot be improved, and the transformation

is invertible at

, since the likelihood ratio Λ is a root of the equation

.

Let us for a moment assume that we could improve Proposition 1.1 by including the idiom “if and only if” in its statement, thereby asserting: a statistic T has a likelihood ratio form if and only if . Then, since Λ is the MP test statistic, having a given test statistic

, we will try to modify the structure of T, minimizing the distance between

and

, for at least some values of u. In this framework, comparing two test statistics, say T and B, we can select T, if, for example,

. Note that in nonparametric settings we can approximate and/or estimate distribution functions of test statistics in many scenarios. Unfortunately, the simple statement of Proposition 1.1 cannot be used to characterize MP test statistics. For example, when

, where X1 is from a normal distribution with

,

, and

, the statistic

satisfies

whereas the MP test statistic is

. That is, to characterize MP tests, Proposition 1.1 needs to be modified.

Remark 1.1.

In this article, we avoid using the term “Uniformly Most Powerful” in order to be able to study cases when parameters do not play an essential role in testing procedures as well as to consider situations where uniformly most powerful tests do not exist, e.g., nonparametric testing statements. For example, let H0 infer that observations are from a standard normal distribution versus that the observations follow a standard logistic distribution, under H1. In this case, we have the likelihood ratio MP test, whereas invoking the term “uniformly most powerful” can be misleading. In Section 3, the MP concept has a hypothetical context to which we aim to approach when we develop test procedures. (See also item (iii) in Remark 2.2 and note (d) presented in Section 6, in this aspect.)

The goal of the present article is 2-fold: (a) through an extension of Proposition 1.1, we describe a way to characterize MP tests, and (b) we exemplify the usefulness of the theoretical characterization of MP tests via corrections of test statistics that can be easily applied in practice. Under this framework, Section 2 considers one-to-one mapping of distribution properties of test statistics to their ability to be most powerful. The proven characterization is shown to be consistent with the principle of sufficiency in certain decision problems extensively evaluated by Bahadur (Citation1955). In Section 3, to exemplify potential uses of the proposed MP characterization, we apply the theoretical concepts shown in Section 2 toward demonstrating an efficient principle of improving commonly used test procedures via employing relevant ancillary statistics. Ancillary statistics have distributions that do not depend on the competing hypotheses. However, we show that ancillary statistics can make significant contributions to inference about the hypotheses of interest. For example, although it seems to be very difficult to compete against the well-known one sample t-test for the mean, we assert that a simple modification of the t-test statistic can increase its power. This can be accomplished by accounting for the effect of population skewness on the distribution of the sample mean. Section 3 demonstrates modifications of testing procedures that can be implemented under nonparametric assumptions when there are no MP decision-making mechanisms. Then, in Section 4, we show experimental evaluations that confirm high efficiency of the presented schemes in various situations. Furthermore, as described in Section 5, when used to analyze data from a biomarker study associated with myocardial infarction disease, the method proposed in Section 3 for one-sample testing about the median is more sensitive as compared with known methods to detect asymmetry in the data distributions. Finally, this paper is concluded with a discussion in Section 6.

2 Characterization and Sufficiency

In order to gain some insight into the purpose of this section, the following illustrative example is offered.

2.1 One-Sample Test of the Mean

Let X1, X2 be a random sample from a normal population with mean μ and variance . We consider testing

versus

, where δ is a fixed value. We present this simple toy example to illustrate our results shown in Section 2.2, not to offer a contender to the usual t-test. In this example, the statistic

can be used for MP testing. However, one may feel that, for example, the statistic

could be reasonable for assessing the competing hypotheses H0 and H1, for some

. The vector

has a bivariate normal density function, with

, and

. Then, by defining a joint density function of

-based statistics A1, A2 in the form

, it is easy to observe that the ratio

does not depend on v, where v is an argument of the joint density

relating to A’s component. In particular, this means that after surveying the two data points

and A, we can improve the

-based decision-making mechanism by creating the MP statistic for testing H0 versus H1 in the likelihood ratio form

, which only requires the computation of

. Thus, one might pose the question: can the observation above be generalized to extend Proposition 1.1? In this case, it seems to be reasonable that to provide an essential property of the MP concept, relationships with other D-based statistics should be taken into account.

The second aspect of our approach is to characterize a scenario where, say, statistic A1 is more preferable in the construction of a test than statistic A2. In this context, as will be seen later, A1 is superior to A2, if the ratio does not depend on v. To exemplify the benefits of this rule, let us pretend that it is unknown that

is the best statistic in this section such that we can consider the following task. The problem then is to indicate a value of

in the statistic

such that T outperforms

, for all

. Since the density

is bivariate normal, simple algebra shows that

is not a function of v, if

. Then, the solution is a = 0.5. In reality, we do know that T, with a = 0.5, is the best statistic in this framework. This example illustrates our point.

2.2 Theoretical Results

In this section, the main results are provided in Propositions 2.1–2.5 that establish the characterization of MP tests. The proofs of Propositions 2.1–2.5 are included in the supplementary materials for completeness and contain comments that augment the description of the obtained results. Proposition 2.5 revisits the characterization of MP tests in the light of sufficiency.

To extend Proposition 1.1, we define a joint density function of statistics and

in the form

, provided that Hk is true,

. Then, the likelihood ratio test statistic, Λ, has the following property.

Proposition 2.1.

For any statistic , we have

, for all

and

.

Proposition 2.1 emerges as a generalization of Proposition 1.1, since yields

In the following claim, it is shown that Proposition 2.1 can be augmented to imply a necessary and sufficient condition on test statistics distributions to present MP decision-making techniques. Define C to be a test threshold.

Proposition 2.2.

Assume a statistic for testing, , satisfies

, one rejects H0 when T > C. The test statistic T is MP if and only if (iff)

, for any statistic

and all

.

Note that the condition “T is strictly nonnegative” is employed in Fisher and Robbins (Citation2019), where a monotonic logarithmic transformation of T, a test statistic, may improve the power of the T-based test when the corresponding TIE rate is asymptotically controlled at α. However, the requirement is not critical, because if we evaluate a test statistic, say

, that can be negative, then a monotonic transformation

can assist in this case.

We can remark that, in scenarios where density functions of test statistics do not exist, the arguments employed in the proof of Proposition 2.2 can be applied to obtain the next statement.

Proposition 2.3.

The test statistic T(D) > 0 is MP iff , for every function

of D.

Remark 2.1.

Since , the condition

implies

, for every

, which means, with probability one under f0, we have

. Note also that, in Proposition 2.3, we can use g(D), satisfying

, for all m > 0.

The scheme used in the proof of Step (2) of Proposition 2.2 yields the following result.

Proposition 2.4.

A statistic is more powerful than a statistic T2, if the ratio

Z

, for all

.

It is interesting to note that, by virtue of Propositions 1.1 and 2.2, for any D-based statistic , we have

and then

if T is MP. That is to say,

, where the notation

means a conditional density function of A given T under Hk,

. In this case, when A is independent of T, we obtain

, and then

cannot discriminate the hypotheses. We can write that

is ancillary, meaning

. This motivates us to associate the results above with the principle of sufficiency.

According to Bahadur (Citation1955), in the considered framework, we can call to be a sufficient test statistic, if

, for each

and all

. In this context, the statements mentioned above assert the next result.

Proposition 2.5.

The following claims are equivalent:

is sufficient and

;

T is a MP statistic for testing the competing hypotheses H0 and H1.

Proposition 2.5 presents an argument to the reasonableness of making a statistical inference based solely on the corresponding sufficient statistics.

Remark 2.2.

We can note the following facts:

Kagan and Shepp (Citation2005) have exemplified a sufficiency paradox, when an insufficient statistic preserves the Fisher information.

In order to extend Proposition 2.5, statements related to a wide spectrum of Basu’s theorem-type results (e.g., GhoshCitation2002) can be employed, in certain situations. To the best of our knowledge, there are no direct applications of Basu’s theorem to the questions considered in the present article.

In Bayesian styles of testing (e.g., Johnson Citation2013), Proposition 1.1 can be extended to treat Bayes Factors, see, for example, Proposition 5 of Vexler (Citation2021), in this context. Then, Propositions 2.1 and 2.2 can be easily modified to establish integrated MP tests with respect to incorporated prior information (Vexler, Wu, and Yu Citation2010).

3 Applications

Section 2 carries out the relatively general underlying theoretical framework for the MP characterization concept. In this section, we outline three applications of the proposed MP characterization principle, by modifying well-accepted statistical tests in an easy to implement manner. It is hoped that the proposed MP characterization can provide different benefits for developing, improving, and comparing decision-making algorithms in statistical practice.

A common problem arising in statistical inference is the need for methods to modify a given test statistic in order to improve the performance of controlling the TIE rate and power of the corresponding decision-making scheme. For example, the accuracy of asymptotic approximations for the null distribution of a test statistic may be increased by incorporating Bartlett correction type mechanisms or/and location adjustment techniques. In this context, we refer the reader to the following examples: Hall and La Scala (Citation1990), for modifying nonparametric empirical likelihood ratios; Chen (Citation1995), for different transformations of the t-test statistics assessing the mean of asymmetrical distributions. Recently, Fisher and Robbins (Citation2019) proposed to use a logarithmic transformation to obtain a potential increase in power of the transformed statistic-based test.

This section demonstrates use of the considered MP principle, following the simple idea outlined below. Suppose that we have a reasonable test statistic To and we wish to improve to be in a form, say TN, approximately satisfying the claim

, for any statistic

and all

. Given that in general nonparametric settings there are no MP tests, it would be attractive to reach the MP property

at least for some statistic A, especially for some ancillary statistic. Informally speaking, by having A with

, we can remove the influence of A from To to create TN such that the ratio

is a function of u only. In this case, Proposition 2.4 could insure that TN outperforms A. This can be achieved via an independence between TN and A that is exemplified in Sections 3.3–3.5 in detail.

Through the following examples, we aim to show our approach in an intuitive manner.

3.1 Examples of the Use of Ancillary Statistics

We begin with displaying the toy examples below that illustrate our key idea.

Let independent data points X1 and X2 be observed; when it is assumed that and

are known. One can use the simple statistic

to test

μ = 0 against

. Easily, one can confirm that

is an ancillary statistic. We now consider a mechanism for transforming T and making a modified test statistic that is independent of

. Define

, a transformed version of T, where γ is a root of the equation

. Then, we obtain

. Thus, the derived statistic

is certainly a successful transformation of the initial statistic T, which presents the MP test statistic. For instance, we denote the power

when

and

. depicts the function

, the difference between the power levels of the

-based test and those of the T-based test at

, plotted against the function

, for

. As expected, the function

reaches its maximum when

. Moreover, it turns out that we do not need much accuracy in approximating the equation

to outperform the T-based test when we use the modified test statistic

. Then, intuitively, we can suppose that a transformed test statistic could include estimated elements while still providing good power characteristics for its decision-making algorithm.

Fig. 1 Graphical evaluations related to the examples shown in Section 3.1. Panel (a) plots , the power of the

-based test minus the power of the T-based test at the

level, against the covariance

, for

, where

. Panel (b) plots the powers

(solid line),

(longdashed line), and

(dotted line) at the

level, where

, and

, for

.

![Fig. 1 Graphical evaluations related to the examples shown in Section 3.1. Panel (a) plots P(a)−P(0), the power of the (T+a(X1−X2))-based test minus the power of the T-based test at the α=0.05 level, against the covariance cov(a)=cov(T+a(X1−X2),X1−X2), for a∈[−0.01,0.9], where T=0.5(X1+X2), X1∼N(μ,1), X2∼N(μ,42), E0(Xi)=0, E1(Xi)=5, i∈[1,2]. Panel (b) plots the powers PTO(μ)=Prμ{TO>CαTO} (solid line), PTN(μ)=Prμ{TN>CαTN} (longdashed line), and PT(μ)=Prμ{T>CαT} (dotted line) at the α=0.05 level, where T=(X1+X2+X3)/3, TN=T+γ(X1−X2), TO=(X1/σ12+X2/σ22+X3/σ32)/(1/σ12+1/σ22+1/σ32), γ=(σ22−σ12)(σ22+σ12)−1/3, X1∼N(μ,1), X2∼N(μ,42), and X3∼N(μ,32), for μ∈[0,5].](/cms/asset/d51609b1-84fe-4dd1-b342-8ab2e71a96ea/utas_a_2192746_f0001_b.jpg)

In various situations, we shall not exclude the possibility that there exists more than one ancillary statistic for a given testing statement. Let us exemplify such case, assuming we observe X1, X2, and X3 from the normal distributions , and

, respectively, where

, are known. Suppose we are interested in testing

μ = 0 versus

. The statistic to be modified is

. The observation

is an ancillary statistic with respect to μ. Define

with

, thereby obtaining that

. Then, it is clear that TN is somewhat better than T, but

is superior to TN in the terms of this example. Define the powers

, and

, where the test thresholds

, and

satisfy

exemplifies the behavior of the functions

, and

, when

, and

.

Thus, to improve a given test statistic, say T, we can suggest that one pays attention to a relevant ancillary statistic, say A, modifying T to be independent (or approximately independent) of A.

Note that, although the concept of ancillarity asserts that ancillary statistics do not provide information about the parameters of interest, different roles of ancillary statistics in parametric estimation have been dealt with extensively in the literature. In this context, for an extensive review, we refer the reader to Ghosh, Reid, and Fraser (Citation2010). For example, assume we observe the vectors , from a bivariate normal distribution with

,

, and

, where

is unknown. The statistics

and

are ancillary. According to Ghosh, Reid, and Fraser (Citation2010), to define unbiased estimators of ρ, it can be recommended to use the statistics

. As another example, when ancillary statistics are applied, we outline a case of so-called Monte Carlo swindles, simulation based methods that allow small numbers of generated samples to produce statistical accuracy at the level one would expect from much larger numbers of generated samples. Boos and Hughes-Oliver (Citation1998) discussed the following procedure. To estimate the variance of the sample median M of a normally distributed sample

, the Monte Carlo swindle approach estimates

(instead of

) by using the N Monte Carlo samples of

and then

is added to obtain an efficient estimate of

. In order to justify this framework, we employ that the statistic

is ancillary. Now, since

is complete sufficient,

and V are independent by Basu’s theorem. Then,

is independent of the sample median of V. Therefore,

It is clear that, when X1 has a normal distribution, the contribution from to

is much larger than the contribution from

, where the component

is proposed to be estimated by simulation. This limits the error in estimation by simulation to a small part of

(for details, see Boos and Hughes-Oliver Citation1998).

3.2 Theoretical Support

The point of view mentioned above can be supported by the following results. Assume we have a test statistic , and the ratio

is a monotonically increasing function that has an inverse function, say W(u). In this scenario, Y can be transformed into the form

, thereby implying that

This means that the likelihood ratio

(See Proposition 1.1, in this context.) Then, we state the next proposition. Let a statistic satisfy

. Suppose we have the decision-making procedure based on a statistic

, and we can modify T to TN to achieve TN and A as independent terms under H0 and H1, when

, for a bivariate function ψ. We conclude that:

Proposition 3.1.

The TN-based test considered above is superior to that based on T, if the ratio is a monotonically increasing function.

The proof is deferred to the supplementary materials.

Note that Proposition 3.1 gives some insight into the connection between the power of statistical tests and ancillarity, concepts that seem to be unrelated, since ancillary statistics cannot solely discriminate the competing hypotheses H0 and H1.

Proposition 3.1 depicts the rationale for modifying the following well-known test statistics.

3.3 One Sample t-test for the Mean

Assume we observe iid data points that provide

. For testing the hypothesis

μ = 0 versus

, where

, the well-accepted statistic is

, where

, and

.

In this testing statement, it seems that the statistic , the sample variance, is approximately ancillary with respect to μ. Note also that To and S2 are independent, when

(To is MP, in this case). Then, we denote the statistic

and derive a value of γ, say γ0, that insures

. The statistic

is a basic ingredient of the modified test statistic we will propose. To this end, we define

, and employ the results from O’Neill (Citation2014) in order to obtain

The additional argument for using in a test for H0 versus H1 can be explained in the following simple fashion. It is clear that the stated testing problem can be treated in the context of a confidence interval estimation of μ. Thus, there is a relationship between the quality of testing

μ = 0 and the variance of an estimator of μ involved in corresponding decision-making schemes. (e.g., the t-test, To, uses

to estimate μ.) For the sake of simplicity, consider

that satisfies

and

. To find a value of γ that minimizes

, we can solve the equation

, where it is assumed we can write

Then, the root

minimizes

and is

, where γ0 implies

. That is,

. The statistic

includes

multiplied by

. This confirms that the statistic

can be somewhat more powerful than To, in the terms of testing H0 versus H1, for various scenarios of X1’s distributions.

Finally, we standardize the test statistic to be able to control its TIE rate, denoting the α level decision-making rule: the null hypothesis H0 is rejected if

where

and

estimate μ3 and

, respectively;

is the sample estimator of

; and the threshold

satisfies

with

. It can be interesting to rewrite TN in the form

, where

. The statistic TN is asymptotically N(0, 1)-distributed, under H0. Certainly, in the case of

, meaning To is MP, we have

. In the form TN, an adjustment for the skewness of the underlying data is in effect.

Note that, in the transformation of the test statistic shown above, we achieve uncorrelatedness between TN and S2, thus, simplifying the development of the nonparametric procedure. It is clear that the equality is essential to the asymptotic independence between TN and S2 (e.g., Ghosh, Balakrishnan, and Ng Citation2021, pp. 181–206).

Section 4 uses extensive Monte Carlo evaluations to demonstrate an efficiency of the statistic TN for testing H0 against various alternatives.

The testing procedures based on To and TN require σ to be known. This restriction can be overcome, for example by using bootstrap type strategies, Bayesian techniques and/or p-value-based methods introduced by Bayarri and Berger (Citation2000). In this article, we only note that there are practical applications in which it is reasonable to assume σ is known, for example, Maity and Sherman (Citation2006), Boos and Hughes-Oliver (Citation1998, sec. 3.2), as well as Johnson (Citation2013, p. 1729). In many biostatistical studies, biomarkers values are scaled in such a way that their variance . We also remark that developments of simple test statistics improving the t-test, To, can be of a theoretical interest.

3.4 One-Sample Test for the Median

A sub-problem related to comparisons between mean and quantile effects can be considered as follows: Let be continuous iid observations with

. We are interested in testing the hypothesis

ν = 0 versus

, where ν denotes the median of X1. This statement of the problem can be found in various practical applications related to testing linear regression residuals as being symmetric, and pre-and post-placebo paired comparison of biomarker measurements as well as, for example, when researchers investigate data associated with radioactivity detection in drinking water, where the population mean is known; see Section 4 in Semkow et al. (Citation2019).

To test for H0, it is reasonable to use a statistic in the form

where

is the sample estimator of ν based on the order statistics

, and

is a measure of scale, with f(u) being the density function of X1. The statistic

can be selected as approximately ancillary with respect to ν, since

. The known facts we use are: (a) the statistics To and A have an asymptotic bivariate normal distribution with the parameters shown in Ferguson (Citation1998); and (b) if

then Basu’s theorem asserts that

and

are independent, since

is a complete sufficient statistic and

is ancillary. That is to say, in a similar manner to the development shown in Section 3.3, we can improve To by focusing on the statistic

that satisfies

and

, as

. Thus, the test we propose is as follows: to reject H0, if

where

, and

means a kernel estimator of the density function f. In order to estimate

, we suggest employment of the R-command (R Development Core Team Citation2012):

It is clear that, for two-sided testing ν = 0 versus

, we can apply the rejection rule:

, where the threshold

satisfies

with a random variable Z having a Chi-square distribution with one degree of freedom.

It can be remarked that the testing algorithm shown in this section can be easily extended to make decisions regarding quantiles of underlying data distributions; see Section 6, for details.

3.5 Test for the Center of Symmetry

In many practical applications, for example, paired testing for pre-and post-treatment effects, we may be interested in testing that the center of symmetry of the paired observations is zero. To this end, we assume that iid observations are from an unknown symmetric distribution F with

. According to Bickel and Lehmann (Citation2012), the natural location parameter, say ν, for F is its center of symmetry. We are interested in testing the hypothesis

ν = 0 versus

.

The statistics and

are reasonable to be employed for testing H0, where

and

estimates

. The components of To and T1 are specialized in Sections 3.3 and 3.4. It is clear that if F were known to be a normal distribution function, then To outperforms T1, whereas when F were known to be a distribution of, for example, the random variable

, where

are independent and identically Exp(1)-distributed, T1 outperforms To.

Consider, for example, the statistic To as a test statistic to be modified and the statistic having a role of an approximately ancillary statistic, where

. Then, following the concept and the notations defined in Sections 3.3 and 3.4, we propose to reject H0, if

We will experimentally demonstrate that the TN-based test can combine attractive power properties of the To-and T1-based tests.

Remark 3.1.

Note that, in this section, the test statistics TN are targeted to improve the statistics To. In this section’s framework, there are no MP decision-making mechanisms. Thus, in general, it can be assumed we can find decision-making procedures that outperform the TN-based tests in certain situations.

Section 4 numerically examines properties of the decision-making schemes derived in Section 3.

4 Numerical Simulations

We conducted a Monte Carlo study to explore the performance of the proposed transformations of the tests about the mean, median, and center of symmetry as described in Section 3. In terms related to evaluations of nonparametric decision-making procedures, it can be noted that there are no MP tests, in the frameworks of Sections 3.3–3.5. We therefore compare the tests based on the given statistics, To, with those based on the corresponding statistics TN, the modifications of To, under various designs of H0/H1-underlying data distributions. The aim of the numerical study is to confirm that the proposed method can provide improvements in the context of statistical power. In Sections 4.2 and 4.3, for additional comparisons, we demonstrate the Monte Carlo power of the one-sample Wilcoxon-Mann-Whitney test that is frequently used in applications, where researchers are interested in assessing the hypothesis ν = 0 when ν is the median of observations. Note that, in practice, it is very difficult to find a nonparametric alternative to the one sample t-test for the mean. Then, in Section 4.1, where the t-test and its transformation defined in Section 3.3 are evaluated, we include a bootstrapped (nonparametric resampling) version of the original t-statistic To to be compared with the corresponding statistic TN, expecting that the bootstrapped t-test may outperform the original t-test in several nonparametric scenarios (Efron Citation1992).

To evaluate the tests, we generated independent samples of size

from different distributions corresponding to, say, designs Dkm,

. In this scheme, designs Dkm,

, fit hypotheses Hk,

, respectively. Each of the presented bootstrap simulation results are based on

replications with 1000 bootstrap samples.

Let the notation represent a test statistic T conducted with respect to design Dkm,

. To judge the experimental characteristics of the proposed tests, we obtained Monte Carlo estimators, say

and

of the following quantities:

and

, where

is the α-level critical value related to the asymptotic H0-distribution of T and

means a value of the

-quantile of

’s distribution, respectively. The criterion

calculated under

, examines our current ability to control the TIE rate of a T-based test using an approximate H0-distribution of T. In this framework,

calculated under

, displays the expected power of T. Values of

can be used to evaluate the actual power levels of T, supposing we can accurately control the TIE rates of the corresponding T-based test. It can be theoretically assumed that we can correct T to produce a statistic, say

, in order to minimize the distance

, by employing a method based on, for example, a Bartlett type correction, location adjustments, and/or bootstrap techniques. In this framework, an accurate higher order approximation to the H0-distribution of

might be needed. In several situations,

could indicate potential abilities to improve practical implementations of studied tests.

4.1 One-Sample t-test for the Mean

In order to examine the TN-based test generated by modifying the t-test, To, in Section 3.3, the following designs of underlying data distributions were applied:

;

with

;

;

with

; where

and

. The experimental results presented in are the power comparisons of the t-test based on To, its modification based on TN and the bootstrap test TB, the bootstrapped version of the t-test, when the significance level,

, of the tests was supposed to be fixed at 5%.

Table 1 Monte Carlo rate of rejections at of the following statistics: the t-test statistic To and its modification TN, defined in Section 3.3; the t-test statistic’s bootstrapped version TB

.

Designs , exemplify scenarios, where To is MP. In these cases, values of

testify that To is slightly superior to TN. Designs

, correspond to negatively skewed distributions. In these scenarios, TN is clearly somewhat better than To, having approximately 27

30% power gains as compared with To. Designs

, represent positively skewed distributions. The proposed test

is about two times more powerful than

. However, we should note that the asymptotic TIE rate control related to

suffers from the skewness of the H0-distribution. According to the values of

computed under D13, the procedure

, will clearly dominate the strategy

, if the TIE rate control related to TN could be improved. To this end, for example, a Chen (Citation1995)-type approach can be suggested to be applied. The present article does not aim to achieve improvements of test-algorithms for controlling the TIE rate of TN. The computed values of the criterion

shown in confirm that the TN-based strategy is reasonable. The results related to D04 and D14 support the conclusions above. Although, under D04, the corresponding

’s values indicate that the Monte Carlo asymptotic TIE rates of TN are smaller than those related to To, the proposed test is superior to To in both the

and

contexts under D14.

In , we also report the experimental results related to the Monte Carlo implementations of the test based on a bootstrapped version of the To statistic, denoted TB, where are resampled with replacement. In these cases, asymptotic approximations for the corresponding TIE rates were not applied. Thus, we denote the criterion PowA = Pow. The applied bootstrap strategy required a substantial computational cost. However, we cannot confirm that the TB-based test is significantly superior to the t-test based on To, under

. Moreover, under the designs D02 and D04, the the bootstrap t-test cannot be suggested to be used.

4.2 One Sample Test for the Median

To gain some insight into operating characteristics of the test statistic TN defined in Section 3.4, we considered various designs of underlying data distributions corresponding to the hypotheses ν = 0 and

, where ν denotes the median of X1’s distribution. To exemplify the results of the conducted Monte Carlo study, we employ the following schemes:

;

;

;

; where

, and

. In this study, attending to the statements presented in Section 3.4, the one-sample, one-sided Wilcoxon-Mann-Whitney test, say W, the To-based test and its modification, the TN-based test, were implemented. Note that, for the W test, the criterion PowA = Pow, since H0-distributions of the Wilcoxon-Mann-Whitney test statistic do not depend on underlying data distributions. represents the typical results observed during the extensive power evaluations of W, To, and TN, when the significance level,

, of the considered tests was supposed to be fixed at 5%. For example, in scenario

, TN improves T0 providing about a 25% power gain.

Table 2 Monte Carlo rate of rejections at of the following statistics: the one-sample Wilcoxon-Mann-Whitney test statistic (W), To and its modification, TN, defined in Section 3.4.

Regarding the two-sided -based test derived in Section 3.4, the following outcomes exemplify the corresponding Monte Carlo power evaluations: PowA =0.223, 0.585, and 0.838 provided by the two-sided W-test,

-based test and

-based test, respectively, when n = 50 and generated data satisfy D11. Note that, in the scenario above, we can employ the method proposed in Fisher and Robbins (Citation2019). According to Fisher and Robbins (Citation2019), since

, and

are

, where k = 0 and k = 1, under H0 and H1, respectively, the test statistics

and

are reasonable to be examined. These monotonic transformations demonstrated slight PowA increases of approximately 1.4% and 1.1% for the

- and

-based strategies, respectively.

4.3 Test for the Center of Symmetry

In this section, we examine implementations of the proposed TN-modification of the To-based test developed in Section 3.5. The To- and T1-based tests as well as the one-sample, one-sided Wilcoxon-Mann-Whitney test (W) were compared with the TN-based test with respect to the setting depicted in Section 3.5. To exemplify the results of the conducted numerical study, the following designs of data generations were employed: for

and

,

, when To can be expected to be superior to T1, TN, and W;

, where ηi and ξi are independent Exp(1)-distributed random variables, and then T1 can be expected to be superior to To, TN, and W;

, where

;

, where

.

summarizes the computed Monte Carlo outputs across scenarios Dkj, , when n = 50, 150 and the significance level,

, of the tests is supposed to be fixed at 5%. It is observed that: under D01 and D11, To and TN have very similar behavior; under D02 with n = 50, TN does improve To in terms of the TIE rate control; under D12, the values of the measurement Pow related to TN and T1 are close to each other and greater than those of To; under Dkj,

, TN shows the Monte Carlo power characteristics that outperform those of To, and W. For example, under D14 with n = 50, TN has approximately 22%, 23%, and 65% power gains as compared with W, To, and T1, respectively.

Table 3 Monte Carlo power levels at of the one-sample Wilcoxon-Mann-Whitney test (W) as well as the To, T1, and TN-based tests defined in Section 3.5.

Based on the conducted Monte Carlo study, we conclude that the proposed testing strategies exhibit high and stable power characteristics under various designs of alternatives.

5 Real Data Example

By blocking the blood flow of the heart, blood clots commonly cause myocardial infarction (MI) events that lead to heart muscle injury. Heart disease is a leading cause of death affecting about or higher than 20% of populations regardless of different ethnicities according to the Centers for Disease Control and Prevention, for example, Schisterman et al. (Citation2001).

The application of the proposed approach is illustrated by employing a sample from a study that evaluates biomarkers associated with MI. The study was focused on the residents of Erie and Niagara counties, 35–79 years of age. The New York State department of Motor Vehicles drivers’ license rolls was used as the sampling frame for adults between the age of 35 and 65 years, while the elderly sample (age 65–79) was randomly chosen from the Health Care Financing Administration database. The biomarkers called “thiobarbituric acid-reactive substances” (TBARS) and “high-density lipoprotein” (HDL) cholesterol are frequently used as discriminant factors between individuals with (MI = 1) and without (MI = 0) myocardial infarction disease, for example, Schisterman et al. (Citation2001).

The sample of 2910 biomarkers’ values was used to estimate the parameters a and b in the linear regression model related to {MI

}’s cases, where

are log-transformed HDL-cholesterol measurements,

denote log-transformed TBARS measurements, and

represent regression residuals with

. It was concluded that

(see Table S1 in the supplementary material, for details). Assume we aim to investigate the distribution of ϵi based on n = 100 biomarkers’ values, when

. In this case, it was observed that the sample mean and variance were

and

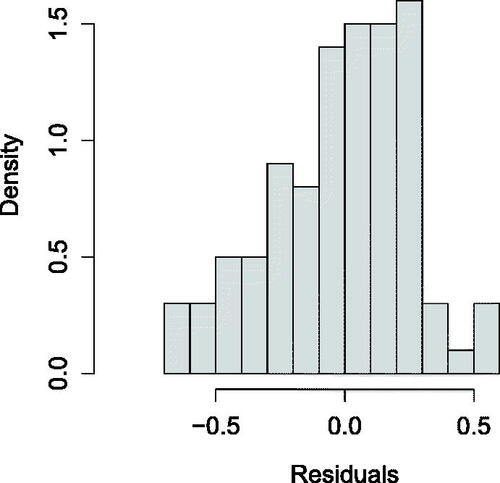

, respectively. depicts the histogram based on corresponding values of

.

In order to test for ν = 0 versus

, where ν is the median of ϵ’s distribution, we implemented the two-sided

-based test and its modification, the

-based test denoted in Section 3.4, as well as the two-sided Wilcoxon-Mann-Whitney test (W). Although the histogram shown in displays a relatively asymmetric distribution about zero, the

-based test and the W test have demonstrated a p-value

and p-value

, respectively. The proposed

-based test has provided p-value

. Then, we organized a Bootstrap/Jackknife type study to examine the power performances of the test statistics. The conducted strategy was that a sample with size

was randomly selected with replacement from the data

to be tested for H0 at a 5% level of significance. This strategy was repeated

times to calculate the frequencies of the events {

rejects H0}, {W rejects H0}, and {

rejects H0}. The obtained experimental powers of

, W, and

were: 0.238, 0.106, 0.535, when nb = 90; 0.198, 0.104, 0.463, when nb = 80; 0.176, 0.100, 0.415, when nb = 70, respectively. The experimental power levels of the tests increase as the sample size nb increases. This study experimentally indicates that the

-based test outperforms the classical procedures in terms of the power properties when evaluating whether the residuals of the association

, are distributed asymmetrically about zero. That is, the proposed test can be expected to be more sensitive as compared with the known methods to rejecting the null hypothesis

ν = 0 versus

, in this study.

6 Concluding Remarks

The present article has provided a theoretical framework for evaluating and constructing powerful data-based tests. The contributions in this article have touched on the principles of characterizing most powerful statistical decision-making mechanisms. Proposition 2.2 provides a method for one-to-one mapping the term “most powerful” to the properties of test statistics’ distribution functions via analyzing the behavior of corresponding likelihood ratios. We demonstrated that the derived characterization of MP tests can be associated with a principle of sufficiency. The concepts shown in Section 2 have been applied to improving test procedures by accounting for the relevant ancillary statistics. Applications of the presented theoretical framework have been employed to display efficient modifications of the one-sample t-test, the test for the median, and the test for the center of symmetry, in nonparametric settings. The effectiveness of the proposed nonparametric decision-making procedures in maintaining relatively high power has been confirmed using simulations and a real data example across various scenarios based on samples from relatively skewed distributions. We also note the following remarks. (a) Propositions 2.4 and 3.1 can be applied to different decision-making problems. (b) Effective corrections of the classical t-test can be of theoretical and applied interest. The modification of the t-test as per Section 3.3 involves using the estimation of the third central moment. This moment plays a role in some corrections of the t-test structure for adjusting its null distribution when the underlying data are asymmetric, for example, Chen (Citation1995). Overall, the proposed modification is somewhat different from those that are used to improve control of the TIE rates of t-test type procedures. Thus, in general, basic ingredients of the methods mentioned above can be combined. (c) The scheme presented in Section 3.4 can be easily revised to develop a test for quantiles, by using the observation that and the sample pth quantile, say

, are asymptotically bivariate normal with

(d) Sections 3–5 have exemplified applications of the treated MP principle in the nonparametric settings. In many parametric problems, the corresponding likelihood ratios do not have explicit forms or have very complicated shapes, for example, when testing statements are based on longitudinal data, dependent observations, multivariate outcomes, data subject to different sorts of errors, and/or missing-values mechanisms. In such cases, issues related to comparing/developing tests via the considered MP principle can be employed.

A plethora of decision-making algorithms touches on most fields of statistical practice. Thus, it is not practical in one paper to focus on all the relevant theory and examples. This paper studies only one approach to characterize a class of MP mechanisms. That is, there are many potential future directions that seem to be promising targets for research, including, for example: (i) examinations of the relationships between general MP characterizations, Basu’s theorem-type results (e.g., Ghosh Citation2002), the concepts of sufficiency, completeness, and ancillarity under different statements of decision-making policies. In this aspect, for example, a research question can be as follows: when can we claim that a statistic T is MP iff T and A are independently distributed, for any ancillary statistic A? (ii) Various parametric and nonparametric applications of MP characterizations in different settings can be developed. (iii) Proposition 2.4 can be used and extended to compare different statistical procedures in practice. (iv) In light of the present MP principle, relevant evaluations of optimal combinations of test statistics (e.g., Berk and Jones Citation1978) can be proposed. (v) Large sample properties of test statistics modified with respect to MP characterization can be analyzed. (vi) Perhaps, Proposition 3.1 can be integrated into various testing developments, where characterizations of underlying data distributions under corresponding hypotheses can be used to define relevant ancillary statistics. Leaving these topics to the future, it is hoped that the present paper will convince the readers of the benefits of studying different aspects and characterizations related to MP data-based decision-making techniques.

Supplementary Materials

The supplementary materials contain: the proofs of the theoretical results presented in the article; and Table S1 that displays the analysis of variance related to the linear regression fitted to the observed log-transformed HDL-cholesterol measurements using the log-transformed TBARS measurements as a factor, in Section 5.

Supplemental Material

Download PDF (147.4 KB)Acknowledgments

The authors are grateful to the Editor, Associate Editor, and an anonymous referee for suggestions that led to a substantial extension and improvement of the presented results.

Additional information

Funding

References

- Bahadur, R. R. (1955), “A Characterization of Sufficiency,” Annals of Mathematical Statistics, 26, 286–293. DOI: 10.1214/aoms/1177728545.

- Bayarri, M. J., and Berger, J. O. (2000), “P Values for Composite Null Models,” Journal of the American Statistical Association, 95, 1127–1142. DOI: 10.2307/2669749.

- Berk, R. H., and Jones, D. H. (1978), “Relatively Optimal Combinations of Test Statistics,” Scandinavian Journal of Statistics, 5, 158–162.

- Bickel, P. J., and Lehmann, E. L. (2012), “Descriptive Statistics for Nonparametric Models I. Introduction,” in Selected Works of EL Lehmann, ed. J. Rojo, pp. 465–471, New York: Springer.

- Boos, D. D., and Hughes-Oliver, J. M. (1998), “Applications of Basu’s Theorem,” The American Statistician, 52, 218–221. DOI: 10.2307/2685927.

- Chen, L. (1995), “Testing the Mean of Skewed Distributions,” Journal of the American Statistical Association, 90, 767–772. DOI: 10.1080/01621459.1995.10476571.

- Efron, B. (1992), Bootstrap Methods: Another Look at the Jackknife, New York: Springer.

- Ferguson, T. S. (1998), “Asymptotic Joint Distribution of Sample Mean and a Sample Quantile,” Unpublished. Available at http://www.math.ucla.edu/∼tom/papers/unpublished/meanmed.pdf.

- Fisher, T. J., and Robbins, M. W. (2019), “A Cheap Trick to Improve the Power of a Conservative Hypothesis Test,” The American Statistician, 73, 232–242. DOI: 10.1080/00031305.2017.1395364.

- Ghosh, I., Balakrishnan, N., and Ng, H. K. T. (2021), Advances in Statistics-Theory and Applications: Honoring the Contributions of Barry C. Arnold in Statistical Science, New York: Springer.

- Ghosh, M. (2002), “Basu’s Theorem with Applications: A Personalistic Review,” Sankhyā: The Indian Journal of Statistics, 64, 509–531.

- Ghosh, M., Reid, N., and Fraser, D. A. S. (2010), “Ancilllary Statistics: A Review,” Statistica Sinica, 20, 1309–1332.

- Hall, P., and La Scala, B. (1990), “Methodology and Algorithms of Empirical Likelihood,” International Statistical Review/Revue Internationale de Statistique, 58, 109–127. DOI: 10.2307/1403462.

- Johnson, V. E. (2013), “Uniformly Most Powerful Bayesian Tests,” The Annals of Statistics, 41, 1716–1741. DOI: 10.1214/13-AOS1123.

- Kagan, A., and Shepp, L. A. (2005), “A Sufficiency Paradox: An Insufficient Statistic Preserving the Fisher Information,” The American Statistician, 59, 54–56. DOI: 10.1198/000313005X21041.

- Lehmann, E. L. (1950), “Some Principles of the Theory of Testing Hypotheses,” The Annals of Mathematical Statistics, 21, 1–26. DOI: 10.1214/aoms/1177729884.

- Lehmann, E. L. (1993), “The Fisher, Neyman-Pearson Theories of Testing Hypotheses: One Theory or Two?” Journal of the American Statistical Association, 88, 1242–1249.

- Lehmann, E. L., and Romano, J. P. (2005), Testing Statistical Hypotheses, New York: Springer.

- Maity, A., and Sherman, M. (2006), “The Two-Sample t test with One Variance Unknown,” The American Statistician, 60, 163–166. DOI: 10.1198/000313006X108567.

- O’Neill, B. (2014), “Some Useful Moment Results in Sampling Problems,” The American Statistician, 68, 282–296. DOI: 10.1080/00031305.2014.966589.

- R Development Core Team. (2012), R: A Language and Environment for Statistical Computing, Vienna, Austria: R Foundation for Statistical Computing. http://www.R-project.org.

- Schisterman, E. F., Faraggi, D., Browne, R., Freudenheim, J., Dorn, J., Muti, P., Armstrong, D., Reiser, B., and Trevisan, M. (2001), “TBARS and Cardiovascular Disease in a Population-based Sample,” Journal of Cardiovascular Risk, 8, 1–7. DOI: 10.1097/00043798-200108000-00006.

- Semkow, T. M., Freeman, N., Syed, U. F., Haines, D. K., Bari, A., Khan, J. A., Nishikawa, K., Khan, A., Burn, A. J., Li, X., and Chu, L. T. (2019), “Chi-Square Distribution: New Derivations and Environmental Application,” Journal of Applied Mathematics and Physics, 7, 1786–1799. DOI: 10.4236/jamp.2019.78122.

- Vexler, A. (2021), “Valid p-values and Expectations of p-values Revisited,” Annals of the Institute of Statistical Mathematics, 73, 227–248. DOI: 10.1007/s10463-020-00747-2.

- Vexler, A., and Hutson, A. (2018), Statistics in the Health Sciences: Theory, Applications, and Computing, New York: CRC Press.

- Vexler, A., Wu, C., and Yu, K. (2010), “Optimal Hypothesis Testing: From Semi to Fully Bayes Factors,” Metrika, 71, 25–138. DOI: 10.1007/s00184-008-0205-4.