ABSTRACT

The Cauchy problem for the heat equation is a model of a situation where one seeks to compute the temperature, or heat-flux, at the surface of a body by using interior measurements. The problem is well-known to be ill-posed, in the sense that measurement errors can be magnified and destroy the solution, and thus regularization is needed. In previous work it has been found that a method based on approximating the time derivative by a Fourier series works well [Berntsson F. A spectral method for solving the sideways heat equation. Inverse Probl. 1999;15:891–906; Eldén L, Berntsson F, Regińska T. Wavelet and Fourier methods for solving the sideways heat equation. SIAM J Sci Comput. 2000;21(6):2187–2205]. However, in our situation it is not reasonable to assume that the temperature is periodic, which means that additional techniques are needed to reduce the errors introduced by implicitly assuming that the solution is periodic in time. Thus, as an alternative approach, we instead approximate the time derivative by using a cubic smoothing spline. This avoids the periodicity assumption, which leads to slightly smaller errors at the end points of the measurement interval. The spline method is also shown to satisfy stability estimates similar to those of the Fourier series method. Numerical simulations show that both methods work well and provide comparable accuracy, and also that the spline method gives slightly better results at the ends of the measurement interval.

1. Introduction

In many industrial applications one wishes to determine the temperature, or heat-flux, on the surface of a body. Often the surface itself is inaccessible for measurements [Citation1–7]. In such cases one can instead measure the temperature at a location in the interior of the body and compute the surface temperature by solving an ill-posed boundary value problem for the heat equation.

In a one-dimensional setting a mathematical model of the above situation is the following: Determine the temperature , satisfying the heat equation

(1)

where k is the thermal conductivity, ρ is the density, and

is the specific heat capacity of the material, with the Cauchy data

is given along the line x = a. In addition we specify the initial data

, for

.

Of course, since g and h are measured, there exist measurement errors, and we would actually have functions , for which

(2)

where

represents a bound on the measurement error.

Although in this paper we mostly discuss the heat equation in its simplest form, , our interest is in numerical methods that can be used for more general problems, e.g. non-linear equations

(3)

which occur in applications since the thermal properties of most materials are dependent on the temperature. For such problems one cannot use methods based on reformulating the problem as a linear operator equation [Citation4,Citation8,Citation9]. Instead, we solve the problem, essentially, as an initial value problem in the space variable [Citation10–12]. This approach works very well if the time-derivative is approximated by a bounded, discrete operator [Citation2].

In this paper, we study two different methods for approximating the time derivative and their numerical implementation. First, the time derivative is approximated by a matrix representing differentiation of a trigonometric interpolant. This method has proved to work very well in practice, see [Citation7,Citation13–15], but has the drawback that by using the Fourier transform we implicitly assume that the solution is periodic in the time variable. This is generally not the case and thus the method is affected by additional errors at the ends of the time interval. Implicit assumptions of periodicity also occur in other popular methods, such as those based on Meyer wavelets [Citation16,Citation17].

In order to avoid problems with periodicity we instead use cubic splines to construct a discrete approximation of the time derivative. In previous work cubic splines have been shown to work well for this purpose, see [Citation18]. In our work we use cubic smoothing splines [Citation19], which include a parameter λ that controls the smoothness of the result, and construct a matrix approximation of the time derivative. We also derive stability estimates that suggest that the spline method has regularizing properties similar to those of the Fourier series approach. In addition, numerical experiments show that the method works well.

2. The Cauchy problem for the heat equation

In this work we are concerned with the following non-standard boundary value problem for the heat-equation: Find the temperature , for

, such that

(4)

where the function κ represents the material properties, and

,

are the data.

The problem (4) is ill-posed in the sense that the solution, if it exists, does not depend continuously on the data. In the case of constant material properties, i.e. κ is a constant, this can be seen by solving the problem in the Fourier domain. In order to simplify the analysis we define all functions to be zero for t<0. Let

be the Fourier transform of the solution. The heat equation takes the form

(5)

and it can be verified that the solution is given by

(6)

where

, and

denotes the principal value of the square root. Since the real part of

is positive, and the solution

, is assumed to be in

, we see that both the data functions

, must decay rapidly as

. Also, small perturbations in

and

, for high frequencies, may blow-up and drastically change the solution. This behavior is typical for ill-posed problems [Citation20].
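The growth of high-frequency errors can be made concrete with a small numerical sketch. It assumes constant κ and relies only on the standard fact that, for the second-order ODE in x obtained in the Fourier domain, data given at one point are carried a distance d by the factor cosh(d·sqrt(iξ/κ)). The function names, the distance d = 1 and the sample frequencies are illustrative choices, not values taken from the paper. The bound uses the identity |cosh(p + iq)|² = sinh²p + cos²q ≤ cosh²p together with Re sqrt(iξ/κ) = sqrt(|ξ|/(2κ)).

```python
import cmath
import math

def amplification(xi, distance, kappa=1.0):
    """Modulus of cosh(distance * sqrt(i*xi/kappa)), the growth factor at frequency xi."""
    mu = cmath.sqrt(1j * xi / kappa)   # principal value of the square root
    return abs(cmath.cosh(distance * mu))

def amplification_bound(xi, distance, kappa=1.0):
    """Upper bound cosh(distance * sqrt(|xi| / (2*kappa))) for the growth factor."""
    return math.cosh(distance * math.sqrt(abs(xi) / (2.0 * kappa)))

# Illustrative frequencies: the factor grows roughly like exp(sqrt(|xi|/2)).
for xi in (1.0, 10.0, 100.0, 1000.0):
    print(f"xi = {xi:7.1f}   factor = {amplification(xi, 1.0):.3e}"
          f"   bound = {amplification_bound(xi, 1.0):.3e}")
```

Under these assumptions a perturbation of fixed size in the data is magnified more and more strongly as ξ increases, which is why some form of frequency limitation is needed.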

2.1. Stabilization by discretizing the time variable

In this section we stabilize the heat conduction problem by discretizing the time-variable, see [Citation2,Citation13]. By replacing the time derivative by a bounded operator, e.g. a matrix, we effectively regularize the problem. For this purpose we rewrite (4) as an initial-value problem

(7)

with initial-boundary values

(8)

and

(9)

We discretize (7) on a uniform grid

, with

. For simplicity we also introduce a sampling operator such that

i.e. the function is sampled on the time grid. By introducing semi-discrete representations of the solution and its derivative, i.e.

we obtain the initial value problem,

(10)

with initial data

and

.

In the initial value problem (10) the matrix D represents a discretization of the time derivative. In [Citation21] it was observed that since, for any matrix,

is bounded the system of ODEs (10) is well-posed, and the solution depends in a stable way on the data used. By controlling the accuracy of the approximation

the degree of stability can be adjusted to be suitable for a problem with a specific noise level. For solving the problem numerically, we use a standard ODE solver, and usually it is sufficient to use an explicit method, e.g. a Runge–Kutta code.
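A minimal sketch of the space-marching idea is given below. It is not the authors' code: it assumes constant κ, writes the semi-discrete system as U_x = V, V_x = D U/κ (the exact form of (10) in the paper may differ), uses a fixed-step classical Runge–Kutta scheme rather than an adaptive solver, and takes a periodic central-difference matrix as a placeholder for D. The function name `march_to_surface` and the manufactured test solution are assumptions made for illustration.

```python
import numpy as np

def march_to_surface(D, g, h, a, kappa=1.0, steps=200):
    """March U_x = V, V_x = (D U)/kappa from x = a down to x = 0 with classical RK4."""
    def rhs(U, V):
        return V, (D @ U) / kappa

    U, V = np.array(g, dtype=float), np.array(h, dtype=float)
    dx = -a / steps                      # negative step: from x = a towards x = 0
    for _ in range(steps):
        k1u, k1v = rhs(U, V)
        k2u, k2v = rhs(U + 0.5 * dx * k1u, V + 0.5 * dx * k1v)
        k3u, k3v = rhs(U + 0.5 * dx * k2u, V + 0.5 * dx * k2v)
        k4u, k4v = rhs(U + dx * k3u, V + dx * k3v)
        U = U + dx * (k1u + 2 * k2u + 2 * k3u + k4u) / 6.0
        V = V + dx * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
    return U                             # approximation of the temperature at x = 0

# Placeholder bounded D: periodic central differences on n points in [0, 1).
n, a = 128, 1.0
dt = 1.0 / n
t = np.arange(n) * dt
shift = np.roll(np.eye(n), 1, axis=1)    # (shift @ y)[i] = y[i + 1], indices modulo n
D = (shift - shift.T) / (2.0 * dt)

# Exact time-periodic solution of u_t = u_xx, used only to manufacture noise-free data.
omega = 2.0 * np.pi
r = np.sqrt(omega / 2.0)
theta = omega * t - a * r
g = np.exp(-a * r) * np.cos(theta)                          # u(a, t)
h = r * np.exp(-a * r) * (np.sin(theta) - np.cos(theta))    # u_x(a, t)
f_exact = np.cos(omega * t)                                 # u(0, t)
f_rec = march_to_surface(D, g, h, a)
print("max error at x = 0:", np.max(np.abs(f_rec - f_exact)))
```

Because D is a matrix of finite norm, the right-hand side is Lipschitz continuous and an explicit solver can be used; the choice of D then decides how strongly noise in g and h is amplified.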

In the rest of this paper, we will discuss concrete implementations where the matrix D in (10) is approximated by a Fourier method and also a method where the derivative is computed using a smoothing spline. In both cases we will derive stability estimates and also investigate the properties of the methods using numerical examples.

2.2. Differentiation using the trigonometric interpolant

The unique trigonometric polynomial interpolating a function , on the uniform grid

is [Citation22],

(11)

where the sequence

are the discrete Fourier coefficients, with the assumption that n is even. The discrete Fourier coefficients are computed by taking the FFT of the vector

.

It is known that very accurate approximations of the derivative, of periodic functions, can be computed by differentiation of the trigonometric polynomial. This leads us to the following definition:

Definition 2.1

The matrix is defined as

, where F is the Fourier matrix, and

is the diagonal matrix

(12)

and

is the cut-off frequency.
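The action of such a truncated Fourier differentiation matrix on a vector can be sketched with the FFT, without forming the matrix. The sketch assumes that ξ denotes angular frequency (2π times cycles per unit time), that the grid is t_j = j·b/n for j = 0, …, n−1, and that the Nyquist mode is discarded for even n; the paper's scaling conventions may differ, and the function name `fourier_derivative` is hypothetical.

```python
import numpy as np

def fourier_derivative(y, b=1.0, xi_c=np.inf):
    """Differentiate samples y on a uniform periodic grid over [0, b), dropping |xi| > xi_c."""
    n = y.size
    xi = 2.0 * np.pi * np.fft.fftfreq(n, d=b / n)   # angular frequencies of the DFT modes
    symbol = 1j * xi                                # differentiation multiplies mode xi by i*xi
    symbol[np.abs(xi) > xi_c] = 0.0                 # frequencies above the cut-off are removed
    if n % 2 == 0:
        symbol[n // 2] = 0.0                        # the Nyquist mode has no well-defined derivative
    return np.real(np.fft.ifft(symbol * np.fft.fft(y)))

# Usage: a smooth signal plus a high-frequency component that the cut-off removes.
t = np.arange(64) / 64
y = np.sin(2 * np.pi * t) + 0.1 * np.sin(2 * np.pi * 20 * t)
dy = fourier_derivative(y, b=1.0, xi_c=50.0)
print(np.max(np.abs(dy - 2 * np.pi * np.cos(2 * np.pi * t))))
```

In this form the operator is a unitary transform, a diagonal scaling and the inverse transform, so its 2-norm equals the largest retained |ξ| and therefore cannot exceed the cut-off.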

By keeping only frequencies below the cut-off, i.e. , we effectively remove the high frequency content from the solution (6) and thus stability is restored. The stability of the problem (10) will be explored as a sequence of lemmas.

Lemma 2.2.

The matrix is bounded and

.

Proof.

The Fourier matrix F is orthogonal and therefore . Also, since

is diagonal the norm is bounded by its largest diagonal element.

Next, we prove that the problem (10) is well-posed if the approximation

is used. In the case of constant coefficients, i.e. constant material properties κ, we have the following result:

Lemma 2.3.

Let , where

is the cut-off frequency, and let

and

be two different solutions to (10), corresponding to Cauchy data

and

, respectively. Then

(13)

Proof.

We use the Discrete Fourier Transform and let . Then (10) simplifies to

(14)

with boundary conditions

and

. Since

is a diagonal matrix, the problem can be solved one frequency

at a time, and

(15)

and

otherwise. Thus

Since F is orthogonal

and

and thus the result follows by summation over all the frequencies

.

It is easy to see that , for

, as was used above. In order to treat the second component of (6) we need a similar property for

. This is demonstrated by the following two lemmas.

Lemma 2.4.

It holds that .

Proof.

Let . Then a direct calculation shows that

(16)

Expanding the squares, and using the trigonometric identity

, yields the desired result.

Lemma 2.5.

The function ,

, is monotonically increasing.

Proof.

Let and

. According to l'Hôpital's monotone rule, see [Citation23], the function

is monotonically increasing if the same is true for

. The result follows by applying the monotone rule twice since a direct calculation shows that

, for

.

Finally, the two previous lemmas can be combined into the following result.

Corollary 2.6.

The function is monotonically increasing.

Lemma 2.7.

Let , where

is the cut-off frequency, and let

and

be two different solutions to (10), corresponding to Cauchy data

and

. Then

(17)

Proof.

The proof is similar to that of Lemma 2.3. Let and note that

and

. It can be verified that the solution, for one frequency

, is

(18)

and

otherwise. Taking absolute values and using the monotonicity of Corollary 2.6 we obtain

(19)

Using Lemma 2.4 leads to

(20)

Finally, the result follows by using the orthogonality of F, the fact that

, and by summation over all the frequencies

.

Remark 2.8

Note that when deriving the bounds (13) and (17) we used that the eigenvalues of

, i.e. the frequencies

, are located on the imaginary axis. Thus we get a slightly better bound compared to just using

.

2.3. Periodization using splines

When using the FFT algorithm we implicitly assume that the vector fΔ represents a periodic function. This is not realistic in our application. In order to avoid wrap-around effects, see [Citation2], we extend the data fΔ from the interval [0,1] to [0,2]. This is done by first computing two first degree polynomials that approximate the first, and last, six points of the vector fΔ in the least squares sense. The two first degree polynomials are then sampled on the original grid creating a total of 12 interpolation points. By moving the six interpolation points located near

to after

we obtain suitable points for creating a cubic spline, defined on the interval [1,2], that can be used to extend the vector fΔ to a periodic vector of double length in such a way that the transitions at t = 0 and t = 1 are as smooth as possible.
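The extension step can be sketched as follows. This is a simplified stand-in, not the procedure of the paper: instead of a cubic spline through the 12 sampled points, it uses a single cubic Hermite segment that matches the value and slope of the two least-squares lines at the ends of the gap. It also assumes the grid t_j = j·b/n, j = 0, …, n−1, so that the extended vector has exactly 2n entries; the function name `periodize` and the parameter `m` are hypothetical.

```python
import numpy as np

def periodize(y, b=1.0, m=6):
    """Extend samples y on [0, b) to 2n samples that are smooth and periodic on [0, 2b)."""
    n = y.size
    dt = b / n
    t = np.arange(n) * dt
    # Least-squares straight lines through the first and last m samples.
    s0, c0 = np.polyfit(t[:m], y[:m], 1)       # y ~ s0 * t + c0 near t = 0
    s1, c1 = np.polyfit(t[-m:], y[-m:], 1)     # y ~ s1 * t + c1 near t = b
    # Hermite segment from the last data point to t = 2b, which is identified with t = 0.
    t_left, t_right = t[-1], 2.0 * b
    length = t_right - t_left
    p_left, p_right = s1 * t_left + c1, c0     # line values at the two ends of the gap
    s = (np.arange(n, 2 * n) * dt - t_left) / length   # normalized positions in (0, 1)
    bridge = ((2 * s**3 - 3 * s**2 + 1) * p_left
              + (s**3 - 2 * s**2 + s) * length * s1
              + (-2 * s**3 + 3 * s**2) * p_right
              + (s**3 - s**2) * length * s0)
    return np.concatenate([y, bridge])

# Usage: a non-periodic function whose naive periodic extension would jump by about 1.
n = 100
t = np.arange(n) / n
ext = periodize(1.0 + t**2)
print(ext.size, np.max(np.abs(np.diff(np.append(ext, ext[0])))))
```

Fitting lines to several samples, rather than using the end values directly, keeps the bridge from inheriting the noise of individual data points.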

The procedure is illustrated in Figure 1, where a noisy vector fΔ, representing a function f(t) defined on [0,1], has been smoothly extended to double length. By fitting first degree polynomials to six data points, near t = 0 and t = 1, we obtain the interpolation points needed to create the cubic spline defined on the interval [1,2]. We also display the exact derivative f′(t) and the approximation DξcfΔ, where the FFT of the extended data vector is used to approximate the derivative. In this particular experiment the noise level was

and the cut-off frequency ξc = 35 was used. We manage to filter out most of the noise from the derivative, and a sufficient number of terms in (11) are included so that the computed derivative is reasonably accurate. Note that Gibbs phenomena near the end points of the interval [0,1] are avoided and the derivative is reasonably accurate in the whole interval.

Figure 1. We illustrate the periodization process by displaying a noisy data vector fΔ (left graph) representing a non-periodic function f(t) defined on [0,1]. The cubic spline, defined on [1,2], that matches the slopes of f(t) at t = 0 and t = 1 is also illustrated (left graph). The combined vector represents a periodic function defined on [0,2]. We also show the exact derivative f′(t) (right graph) and the approximate derivative DξcfΔ for ξc = 35 (right graph). The approximation is reasonably good both for t = 0 and t = 1.

![Figure 1. We illustrate the periodization process by displaying both a noisy data vector fΔ (left graph) representing a non-periodic function f(t) defined on [0,1]. The cubic spline, defined on [1,2] and that matches the slopes of f(t) at t = 0 and t = 1 is also illustrated (left graph). The combined vector represents a periodic function defined on [0,2]. We also show the exact derivative f′(t) (right graph) and the approximate derivative DξcfΔ for ξc=35 (right graph). The approximation is reasonably good both for t = 0 and t = 1.](/cms/asset/a79b477f-b2b1-47d4-94a1-873b7d4a31a8/gapa_a_1876224_f0001_oc.jpg)

2.4. Implementation of the Fourier method

The numerical implementation of the Fourier series-based approach consists of implementing the procedure for computing derivatives described in Section 2.2, together with the periodization presented in Section 2.3, i.e. writing a procedure for computing the product of Dξc and a vector. Once such a procedure is available we can solve the initial value problem (10) using a Runge–Kutta method (ode45 in Matlab) with automatic step size control.

In order to create test problems, with a known solution, we select a function , and set

, for

, and used the initial data

, for 0<x<a. We then discretized the heat equation using the Crank–Nicolson implicit scheme and computed the corresponding temperature gradient

. For our experiments a grid of size n = 200 in space and m = 500 in time was used. By adding normally distributed noise to the computed Cauchy data

we obtain the noisy data vectors

, from which we attempt to reconstruct an approximation of the exact surface temperature

by solving the Cauchy problem (10).

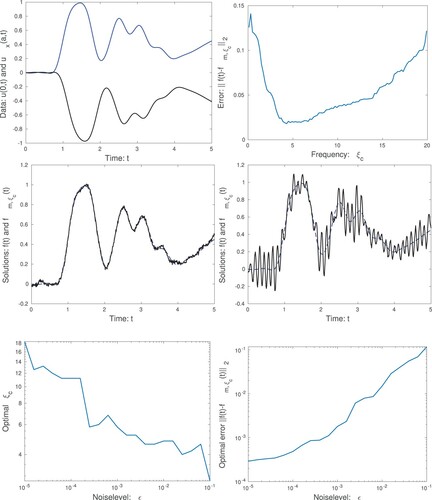

The performance of the Fourier method is illustrated by selecting a function and computing the corresponding Cauchy data

, as described above. Recall that

is used here. The exact surface temperature

, and also the thermal gradient

, are illustrated in Figure 2. Note that the function

is not periodic in time and therefore we might expect errors due to wrap-around effects.

Figure 2. We display the functions and

in the top-left graph. In the top-right graph we show the error

, as a function of

, for the noise level

. Note that there is an optimal value for

. In addition we display the solution

, for

(middle left), which is close to the optimum, and for

(middle right). Also the exact solution

is displayed. In the bottom-left graph we show the optimal

as a function of the noise level ϵ and in the bottom-right graph we show the corresponding error, for the optimal

, as a function of ϵ. Note that for a larger noise level ϵ, we need a smaller value of

, and obtain a larger error in the computed surface temperature

.

First, we set the noise level to and compute an approximate surface temperature

, for a range of frequencies

, and compute the error

. The total error can be divided into two parts. First we have the truncation error

, due to the fact that not all frequencies are included in the numerical solution. Second we have the propagated data error

. The stability results, see Lemmas 2.3 and 2.7, say that

is increasing as a function of

, while the truncation error

is decreasing as a function of

. Thus the error curve has a clear minimum and an optimal

representing the appropriate trade-off between

and

. For this case we also plot two solutions

, for

and

. We see that in the first case the level of regularization is just right but in the latter case there is too much noise magnification.

Next we pick noise levels in the range . For each level of noise we find the optimal value of

, which gives the smallest error. We see that a smaller noise level ϵ means that we can use a larger cut-off frequency

. We also compute the errors, for the optimal value of

, and, as expected, larger noise in the data leads to a larger error in the computed approximations

.

3. Regularization by using smoothing splines

In this section we discuss the regularization of the problem (4) using cubic smoothing splines. As previously we will implement a differentiation matrix

using cubic splines and solve the discretized initial value problem (10) numerically. The goal is to reach similar accuracy and stability properties as reported for the Fourier-based derivative

, while avoiding the practical difficulties that occur when the data vectors do not represent periodic functions.

In the following subsections we introduce a differentiation matrix and also give a bound for its norm. Also we show that the matrix

is skew-symmetric. Thus stability results, similar to Lemmas 2.3 and 2.7, can be derived.

3.1. Differentiation using smoothing splines

In this section we present known facts about splines and introduce the notation needed in our work. For simplicity we restrict ourselves to considering the interval . Thus we have a uniform grid Δ, such that

, with grid parameter h. We work in an

setting and introduce the Sobolev space

(21)

together with the standard norms and semi-norms, see [Citation24].

Let be a vector of data values on the grid. The smoothing cubic spline, that approximates

on the grid, is defined as follows:

Definition 3.1

Let be a vector. The cubic smoothing spline

is obtained by solving

(22)

where

is the regularization parameter and

denotes a semi-norm.

It is well known that the solution to (22) is a natural cubic spline. It is also known that the corresponding operator, mapping

onto

, is linear, see [Citation24,Citation25]. Thus we can introduce a matrix as follows:

Definition 3.2

The matrix is defined by

(23)

The matrix has many interesting properties. For instance

. Also, the problem (22) is symmetric in the sense that

, where

is the vector taken in reverse order. A consequence is the following result:

Lemma 3.3.

The matrix is symmetric.

Proof.

Let . By introducing a basis, e.g. the B-splines

, for the space of cubic splines, and writing the minimizer of (22) as

, we can derive an expression for

as follows: First

, where

. Second, the seminorm

can be written in the form

, where

. Thus the normal equations are

. Thus

is symmetric. For the case

, i.e. interpolating natural cubic splines, this is a known result [Citation25].

Now we are ready to introduce the differentiation matrix . More precisely, we make the following definition:

Definition 3.4

The matrix is defined by

(24)

The product is computed by finding the smoothing spline

and sampling its derivative on the grid.
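One way to form both the smoothing matrix and the differentiation matrix explicitly is the classical Reinsch construction for natural cubic smoothing splines, sketched below. The sketch assumes that the functional in Definition 3.1 has the unweighted form Σ (y_i − u(t_i))² + λ ∫ (u″)² dt; if the paper scales the terms differently, λ has to be rescaled accordingly. The function name `smoothing_spline_matrices` and the variable names are hypothetical, and dense matrices are used only for clarity.

```python
import numpy as np

def smoothing_spline_matrices(n, h, lam):
    """Return (S, D): S maps data to spline values, D maps data to spline derivatives at the knots."""
    Q = np.zeros((n, n - 2))            # second differences divided by h
    R = np.zeros((n - 2, n - 2))        # tridiagonal matrix linking values and second derivatives
    for j in range(n - 2):
        Q[j, j], Q[j + 1, j], Q[j + 2, j] = 1.0 / h, -2.0 / h, 1.0 / h
        R[j, j] = 2.0 * h / 3.0
        if j + 1 < n - 2:
            R[j, j + 1] = R[j + 1, j] = h / 6.0
    RinvQt = np.linalg.solve(R, Q.T)
    K = Q @ RinvQt                      # g^T K g equals the integral of (s'')^2 for a natural spline
    S = np.linalg.inv(np.eye(n) + lam * K)
    G = np.zeros((n, n))                # second derivatives at the knots; zero at both ends
    G[1:-1, :] = RinvQt
    E = np.zeros((n, n))                # divided differences of the spline values
    W = np.zeros((n, n))                # weights of the second derivatives in s'(t_i)
    for i in range(n - 1):              # right-sided formula on the interval [t_i, t_{i+1}]
        E[i, i], E[i, i + 1] = -1.0 / h, 1.0 / h
        W[i, i], W[i, i + 1] = -h / 3.0, -h / 6.0
    E[-1, -2], E[-1, -1] = -1.0 / h, 1.0 / h        # left-sided formula at the last knot
    W[-1, -2], W[-1, -1] = h / 6.0, h / 3.0
    D = (E + W @ G) @ S
    return S, D

# Usage on the unit interval with a small amount of smoothing.
n = 101
h = 1.0 / (n - 1)
t = np.linspace(0.0, 1.0, n)
S, D = smoothing_spline_matrices(n, h, 1e-8)
print(np.max(np.abs(D @ np.sin(2 * np.pi * t) - 2 * np.pi * np.cos(2 * np.pi * t))))
```

Under these assumptions S is symmetric by construction and reproduces straight lines exactly, since the penalty term vanishes for them. For large grids a banded solver would be preferable to the dense inverse used here.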

Lemma 3.5.

The matrix is skew-symmetric.

Proof.

The matrix can be written in the form

, where the matrix

is symmetric,

, and

, see the proof of Lemma 3.3. A direct calculation shows that

(25)

Since the B-spline basis function

is even, with respect to

, and the derivative

is odd with respect to

, we see that for the product

. Thus we can rearrange the indices to show that

.

A consequence of the fact that is skew-symmetric is that its eigenvalues are purely imaginary. This fact is used to derive stability estimates when (10) is solved using the smoothing spline method.

3.2. A bound for the matrix

In this section we derive a bound for . Most of the theory presented in this section represents simplifications, with simpler proofs, of more general results found in [Citation24], where an error estimate

was derived. For our work we instead need a bound for

which can be obtained using similar techniques. We need a series of Lemmas.

Lemma 3.6.

Let ,

be a uniform grid, and

. Then, if h is chosen so that

, it holds that

(26)

where

and

are constants.

Proof.

We do the proof of one inequality as the other is obtained in a similar way. Let , with

and

. For

we have

Now we can integrate over

, sum over all the intervals, and then use the discrete Cauchy-Schwarz inequality, to obtain

(27)

The result follows by using

. Also note that

.

The above Lemma can be used to estimate the discrete norm in terms of the continuous norm

, and also the other way around.

In order to obtain the desired bound for we need a result that follows directly from the fact that the cubic smoothing spline is the minimizer of (22).

Lemma 3.7.

Let be a vector and

be the corresponding smoothing spline. Then

(28)

Proof.

A norm, and scalar product, for is given by

(29)

The problem defining the cubic smoothing spline, see Definition 3.1, can then be written as: Find

that minimizes

. The solution of the least squares problem is characterized by the residual

being orthogonal to

, for all

. This means that

(30)

Insert v = u and the result follows.

Proposition 3.8.

The matrix , defined in Definition 3.4, satisfies

(31)

where

is a constant.

Proof.

From the definition of , and using Lemma 3.6, we obtain

(32)

where

is the smoothing cubic spline corresponding to the vector

. Next we use the inequality

(33)

where

is a constant, which is valid for

, and all

, see [Citation24, Lemma 3.9] or, originally, [Citation26]. We obtain

(34)

where we again used Lemma 3.6. For the final step we write

and expand

into

(35)

By inserting Lemma 3.7 into the above expression we obtain

(36)

From (36) we obtain the two estimates

(37)

Inserting these two inequalities into (34) yields

(38)

The result follows by inserting

into (38).

3.3. Stability analysis for cubic splines

In this section we establish stability results, for the initial value problem (10), when the approximation

is used. The proofs are based on the idea that since

is skew-symmetric we have an eigenvalue decomposition

(39)

where the eigenvector matrix X is unitary, the

are real, and are bounded by

. The factor b is due to the fact that the theory in the previous section was developed under the assumption that the time interval was 0<t<1.

Lemma 3.9.

Let , where

is the regularization parameter, and let

and

be two different solutions to (10), corresponding to Cauchy data

and

, respectively. Then

(40)

Proof.

Let X be as defined in (39) and introduce a new variable

. The system (10) simplifies to

(41)

with boundary conditions

and

. This is exactly the same situation as in the proof of Lemma 2.3 and the result follows by repeating the same steps.

Similarly, we can repeat the steps of the proof of Lemma 2.7 to obtain the following result:

Lemma 3.10.

Let , where

is the regularization parameter, and let

and

be two different solutions to (10), corresponding to Cauchy data

and

. Then

(42)

The above results mean that the problem (10) is well-posed when the approximation

is used. The regularization parameter λ fills the same role as the cut-off frequency

for the Fourier method. For this method a small value for λ leads to a more accurate derivative and thus less stability for the inverse problem. If

then both methods satisfy similar stability estimates.

4. Simulated numerical examples

In this section we present numerical examples intended to illustrate the properties of the smoothing spline method. The experiments are constructed essentially the same way as in Section 2.4. There are many codes available for solving the minimization problem (22), and for our work we use the Matlab function csaps, which can be used to find the cubic smoothing spline

for a given vector y, a step size h, and regularization parameter λ.

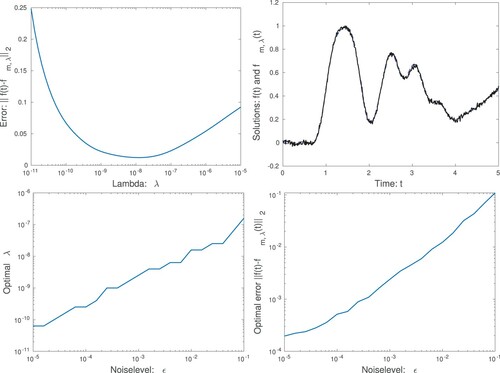

Test 1 As a first experiment we solve the same problem as was used in Section 2.4. Thus we first set the noise level to be and solve the inverse problem for a wide range of regularization parameters λ. In Figure 3 we present the results. We note that, as previously, there is an optimal regularization parameter λ, which represents the appropriate trade-off between accuracy and stability. We also display the computed solution for

which is close to the optimal value. The accuracy of the numerical solution is comparable to that computed by the Fourier method.

Figure 3. In the top-left graph we show the error , as a function of λ, for the noise level

. Note that there is an optimal value for λ. In the top-right graph we display the surface temperature for

which is close to the optimal value. In the bottom-left graph we show the optimal λ as a function of the noise level ϵ and in the bottom-right graph we show the corresponding error, for the optimal λ, as a function of ϵ. Note that for a larger noise level ϵ, we need a larger value of λ, and obtain a larger error in the computed surface temperature

.

Next we illustrate the regularization properties of the method by letting the noise level vary in the range . For each different noise level we find the optimal λ and compute the corresponding error. We see that a smaller noise level ϵ means that we can use a smaller λ, and thus compute the derivatives more accurately. We also see that a larger noise in the data leads to a larger error in the computed surface temperatures

. The results are comparable to those reported in Section 2.4.

Test 2 In order to further investigate the properties of the two numerical methods we select a variable coefficient

(43)

This choice of

gives a slightly less ill-posed problem compared to our previous test. Note that since the problem is now non-linear, iteration is needed to solve the problem and create numerical test data. The numerical code for the inverse problem (10) remains the same. For this experiment we chose a smoothed out step function

as the exact surface temperature and the time interval is 0<t<b, where b = 5. The size of the time grid is again n = 400. Our objective is to verify that the Fourier transform method leads to a larger error at the beginning of the time interval, due to the implicit periodicity assumption. This motivates our choice of

as a step function. The idea is that Gibbs phenomena near the discontinuity cause a larger error in the second half of the interval

, and that this should also cause errors near t = 0 for the Fourier method. We use a smoothed out step function, and not a true discontinuity, to make the test a bit easier for the Fourier method.

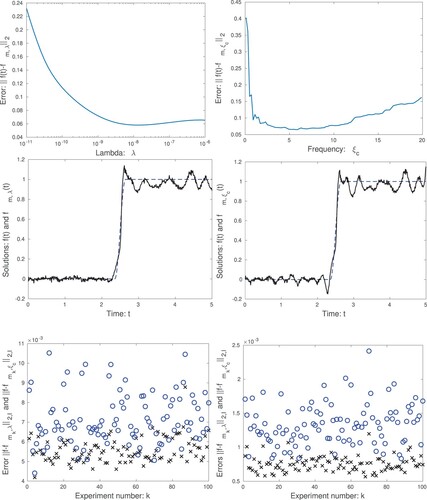

First we compute the errors and

for a range of regularization parameters. For this test we use the noise level

. The results are displayed in Figure 4. We see that for both methods too little regularization leads to a large error due to instability. However, also for both methods, the truncation error is fairly large due to Gibbs phenomena regardless of the choice of the regularization parameter. There is no clear optimal choice for the level of regularization. We also display two approximate solutions for

and

, respectively. Both solutions are about equally accurate; however, the spline method clearly leads to a smaller error initially. This is due to the absence of wrap-around effects.

Figure 4. We present tests where the exact solution is a smoothed step function. The top graphs show the error

(left) for the spline method and the error

(right) for the Fourier method. The middle graphs display the numerical solutions

(left) obtained using the spline method and

and the solution

computed using the Fourier method and

. The lower graphs show the errors

(x markers) and

(o markers) for different random noise sequences

. In the left graph the variance of the noise is

,

and

. In the right graph instead

,

and

. In both cases 100 different sets of random noise were generated.

The noise level represents the variance of the normally distributed random noise added to the data

at individual grid points. However an observation is that different random sequences can give quite different errors in the computed solutions. Thus we perform 100 different experiments, with the same parameters b = 5,

and

as specified above. For each experiment we compute the error using the Euclidean norm

, where only grid points

inside the interval

count towards the sum. This means that we only look at the errors at the beginning of the time interval.

To select the appropriate levels of regularization is difficult since the methods behave differently. In this case we did the following: We use the exact solution , and thus

. This means we only have noise. We picked

and adjusted

until both methods give the same mean error, over 100 tests. In this case that happens for

. Thus the parameters are chosen so that random noise is magnified equally by both methods. The results are shown in Figure 4. We see that for the step function

the error, measured using

, is on average significantly larger for the Fourier method which shows that the method suffers from problems with wrap-around effects.

We also tried a lower noise level . In this case the appropriate regularization parameters are

and

. Again when we run 100 tests, with different noise sequences, we see that the spline method has a smaller error initially, due to avoidance of wrap-around effects.

5. Concluding remarks

In this paper we have developed a regularization method for solving the inverse heat conduction problem by using smoothing cubic splines. Previously, the same problem has been solved using Fourier transforms [Citation2,Citation7,Citation13]. The Fourier method works well but makes the implicit assumption that the data vectors represent periodic functions. This is not true for the problem under consideration and can potentially lead to very large errors in practice. We present one of the standard techniques, called periodization, that is used to deal with non-periodic data vectors and thus avoid wrap-around effects. In our work we show that, while the periodization works reasonably well, there are increased errors in the numerical solution due to the periodicity assumptions needed for the Fourier method. We introduce the smoothing spline method as an alternative precisely to avoid the need for a periodicity assumption. Our experiments show that we do indeed avoid an increased error due to wrap-around effects.

The inverse heat conduction problem is ill-posed in the sense that small errors in the data can cause large errors in the numerical solution. Thus regularization is needed. We demonstrate that the Fourier method is a regularization of the problem by providing stability estimates. The stability theory is developed for the discrete problem that is solved numerically. A complication, however, is that the periodization procedure is not included in the stability analysis.

For the smoothing spline method we introduce a matrix that represents differentiation of the smoothing spline obtained from a vector y consisting of function values on the grid. Our goal is to develop stability estimates similar to those for the Fourier method. Thus we derive a bound on the norm

and also show that the matrix is skew-symmetric. Our theory is based on a previous paper [Citation24], but since our work concerns only a special case we can obtain simpler proofs. Since, in contrast to the Fourier method, no periodicity assumption is needed, one advantage is that the stability theory corresponds more directly to the discrete problem that is actually solved by our codes. From our experiments we also conclude that the smoothing spline method can effectively be used as a regularization method for solving the Cauchy problem.

In this paper we only present a stability analysis that bounds the errors in the solution caused by random errors in the data. In [Citation24] an error estimate is derived. In future work we intend to make use of a similar error estimate for our matrix approximation

and also limit the truncation error for our method. We also intend to apply our method to similar problems and compare with the work of other authors, e.g. [Citation16,Citation18]. We are also looking to apply the method to industrial problems with measured data.

Acknowledgments

The work of Mary Nanfuka is supported by the SIDA bilateral programme (2015–2020) with Makerere University; Project 316: Capacity building in Mathematics. The authors also thank Prof. Matti Heiliö for valuable discussions during the work.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 The code used for our experiments is available upon request from the corresponding author.

References

- Beck JV, Blackwell B, Clair SR. Inverse heat conduction. Ill-posed problems. New York: Wiley; 1985.

- Eldén L, Berntsson F, Regińska T. Wavelet and Fourier methods for solving the sideways heat equation. SIAM J Sci Comput. 2000;21(6):2187–2205.

- Gardarein J-L, Gaspar J, Corre Y, et al. Inverse heat conduction problem using thermocouple deconvolution application to the heat flux estimation in a tokamak. Inverse Probl Sci Eng. 2013;21(5):854–864.

- Jahedi M, Berntsson F, Wren J, et al. Transient inverse heat conduction problem of quenching a hollow cylinder by one row of water jets. Int J Heat Mass Transf. 2018;117(Supplement C):748–756.

- Li D, Wells MA. Effect of subsurface thermocouple installation on the discrepancy of the measured thermal history and predicted surface heat flux during a quench operation. Metallurg Mater Trans. 2005;36B:343–354.

- Taler J, Weglowski B, Pilarczyk M. Monitoring of thermal stresses in pressure components using inverse heat conduction methods. Int J Numer Meth Heat Fluid Flow. 2017;27(3):740–756.

- Wikström P, Blasiak W, Berntsson F. Estimation of the transient surface temperature, heat flux and effective heat transfer coefficient of a slab in an industrial reheating furnace by using an inverse method. Steel Res Int. 2007;78(1):63–70.

- Berntsson F. An inverse heat conduction problem and improving shielded thermocouple accuracy. Numer Heat Transfer, Part A: Appl. 2012;61(10):754–763.

- Xiong X-T, Fu C-L. A spectral regularization method for solving surface heat flux on a general sideways parabolic. Appl Math Comput. 2008;197(1):358–365.

- Carasso AS. Slowly divergent space marching schemes in the inverse heat conduction problem. Numer Heat Transfer, Part B. 1993;23:111–126.

- Guo L, Murio DA, Roth C. A mollified space marching finite differences algorithm for the inverse heat conduction problem with slab symmetry. Computers Math Appl. 1990;19:75–89.

- Mejía CE, Murio DA. Numerical solution of generalized IHCP by discrete mollification. Computers Math Appl. 1996;32:33–50.

- Berntsson F. A spectral method for solving the sideways heat equation. Inverse Probl. 1999;15:891–906.

- Blevins LG, Pitts WM. Modeling of bare and aspirated thermocouples in compartment fires. Fire Saf J. 1999;33:239–259.

- Kemp SE, Annaheim S, Rossi RM, et al. Test method for characterising the thermal protective performance of fabrics exposed to flammable liquid fires. Fire Mater. 2016;41:750–767.

- Karimi M, Rezaee A. Regularization of the Cauchy problem for the Helmholtz equation by using Meyer wavelet. J Comput Appl Math. 2017;320:76–95.

- Regińska T. Sideways heat equation and wavelets. J Comput Appl Math. 1995;63:209–214.

- Foadian S, Pourgholi R, Hashem Tabasi S. Cubic B-spline method for the solution of an inverse parabolic system. Appl Anal. 2018;97(3):438–465.

- Reinsch CH. Smoothing by spline functions. Numer Math. 1971;16(5):451–454.

- Isakov V. Inverse problems for partial differential equations. New York: Springer; 1998.

- Eldén L. Solving the sideways heat equation by a ‘method of lines’. J Heat Transfer, Trans ASME. 1997;119:406–412.

- Gustafsson B, Kreiss H-O, Oliger J. Time dependent problems and difference methods. New York: Wiley Interscience; 1995.

- Anderson GD, Vamanamurthy MK, Vuorinen MK. Conformal invariants, inequalities, and quasiconformal maps. New York: John Wiley & Sons, Inc.; 1997.

- Ragozin DL. Error bounds for derivative estimates based on spline smoothing of exact or noisy data. J Approx Theory. 1983;37(4):335–355.

- Schoenberg IJ. Spline interpolation and the higher derivatives. Proc Nat Acad Sci USA. 1964;51:24–28.

- Agmon S. Lectures on elliptic boundary value problems. Princeton (NJ): D. Van Nostrand Co.; 1965.