Abstract

The nonlinear rational model is a generalized nonlinear model that has gradually been applied to the modelling of many dynamic processes. This paper studies the parameter identification of a class of nonlinear rational models. The identification problem is challenging because of the complexity of the rational model and the coupling between the model inputs and outputs. To identify the nonlinear model, a bias compensated multi-innovation stochastic gradient algorithm is presented. The multi-innovation technique, which replaces the scalar innovation with an innovation vector, is adopted to accelerate the traditional stochastic gradient algorithm. However, the estimate obtained by the accelerated algorithm is biased because of the correlation between the information vector and the noise. To overcome this difficulty, a bias compensation strategy is used: the bias is calculated and compensated to obtain an unbiased estimate. Theoretical analysis shows that the proposed algorithm can give unbiased estimates with linear complexity. The proposed algorithm is validated by a numerical experiment and by the modelling of propylene catalytic oxidation.

1. Introduction

To describe the dynamic characteristics of nonlinear systems, many nonlinear structures have been developed, such as the NARMAX model, the Volterra series model and the block-oriented nonlinear model [Citation1–4]. In recent years, the nonlinear rational model (NRM) has gradually been applied to the modelling and control of nonlinear systems, particularly chemical processes and mechanistic systems [Citation5–8]. The NRM is a generalized nonlinear model: the traditional rational model, the NARMAX model, the integral model, the output affine model and the linear difference equation model can all be seen as special cases of it [Citation9].

The NRM is defined as the ratio of two polynomial expansions of past inputs, outputs and prediction errors [Citation10]. Identifying the NRM is quite difficult because the NRM cannot be parameterized as a linear-in-parameter system [Citation9–11] and because the model input and output are coupled. Despite these difficulties, researchers have reported some results [Citation7,Citation9,Citation12]. For example, to identify the parameters of the NRM, a prediction error algorithm and a rational model estimation (RME) algorithm were proposed [Citation11,Citation13]. To decrease the computational cost of these two algorithms, a recursive RME algorithm, an error back propagation algorithm, an implicit least-squares iterative algorithm, two maximum likelihood algorithms and a globally convergent algorithm were derived [Citation5,Citation7,Citation10,Citation14,Citation15]. To determine the structure of the NRM, an orthogonal RME algorithm and a genetic algorithm were investigated [Citation9,Citation11]. Zhu et al. summarized the advances in NRM identification and control [Citation8].

Although these algorithms work well for many NRMs, they have at least O(n^2) complexity, which makes them unsuitable for online applications. To decrease the complexity, the stochastic gradient (SG) algorithm is an alternative because it costs only O(n) flops per iteration [Citation16]. Many gradient-based algorithms have been reported. For example, a key term separation gradient iterative algorithm was derived to identify a fractional-order nonlinear system [Citation17], a three-stage forgetting factor SG method was proposed for a Hammerstein system [Citation18], and an auxiliary model stochastic gradient method was studied for a Wiener–Hammerstein system [Citation19].

However, the estimate for the NRM given by the traditional SG algorithm is biased because the information vector is correlated with the noise [Citation10,Citation20,Citation21]. To obtain unbiased estimates, the bias compensation (BC) technique and the instrumental variable (IV) technique are often used. For example, a BC-based method was proposed to estimate the state of charge of lithium-ion batteries [Citation22]; a BC-based sign algorithm was developed to estimate the weight vector of an unknown system [Citation23]; an IV method was implemented for detecting and correcting parameter bias within structural equation models [Citation24]; and a unified model-implied IV approach was reported for structural equation modelling with mixed variables [Citation25]. For the IV method, although some guiding principles exist, it is often still very difficult to select an appropriate instrumental variable. Therefore, the BC method is adopted in this paper to obtain an unbiased estimate for the NRM [Citation10].

This paper considers the parameter identification of the so-called ARX-NRM. This NRM contains a process model in nonlinear rational form, and its noise model has the same denominator as the process model. The identification is investigated in the time domain, without considering modelling error, time-delay estimation, uncertainties in real systems or frequency-domain identification [Citation26–29]. In addition, this paper discusses only integer-order adaptive methods for nonlinear models. Fractional adaptive methods have recently become an important part of adaptive algorithms; for details, see [Citation30–33].

The main contributions of this paper are as follows:

- The parameter identification of an ARX-type nonlinear rational model is considered. This is quite difficult because the model is a nonlinear-in-parameter system and its output is coupled with the input.

- To accelerate the stochastic gradient algorithm, the multi-innovation technique is integrated into the algorithm, so that the scalar innovation is replaced by an innovation vector.

- The bias of the multi-innovation stochastic gradient algorithm is calculated from the observations and the previous estimates, and then compensated to obtain an unbiased estimate.

- The proposed algorithm is validated by numerical examples and a case study. The results indicate that the proposed algorithm can obtain accurate estimates with a fast convergence speed.

2. Problem description

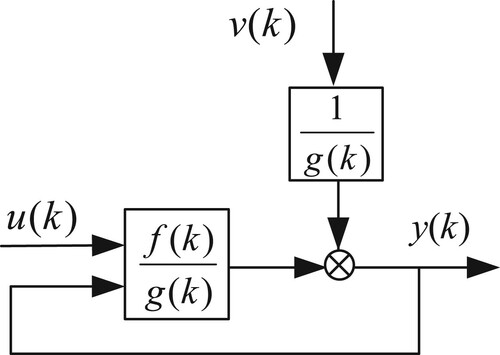

Consider the ARX-NRM depicted in Figure 1, which has an input, an output and a noise. The model contains two nonlinear polynomials, the numerator and the denominator, which are formed from the model inputs and/or outputs.

From Figure 1, we can express the output by

(1)

where

(2)

It can be seen from Figure 1 and Equation (1) that the structure of this NRM is similar to that of the ARX model [Citation21]. The difference is that the numerator and denominator of the NRM are both nonlinear polynomials, and the input is implicit in the nonlinear transfer function. Thus the NRM in Figure 1 is named ARX-NRM.

Multiplying both sides of Equation (1) by the denominator polynomial yields

(3)

with

(4)

in which the terms of the two polynomials are scalars built from the input and output data. Then we can parameterize the ARX-NRM as follows:

(5)

where

(6)

The identification of the ARX-NRM in Figure 1 is thus transformed into the estimation of the parameter vector from the observations, the number of which is the data length.
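To make the parameterization step concrete, the following Python sketch uses a small hypothetical ARX-NRM. The polynomial terms, the parameter values and the names `u`, `y`, `v` and `theta` are assumptions chosen for illustration and are not taken from the paper. The sketch simulates y(k) = (a(k) + v(k)) / b(k), multiplies through by the denominator, and checks numerically that the output is linear in the parameters once the regressor is allowed to contain the current output.

```python
import numpy as np

def simulate_arx_nrm(theta, u, v):
    """Simulate a hypothetical ARX-NRM: y(k) = (a(k) + v(k)) / b(k).

    Assumed polynomials (illustrative only):
      a(k) = t1*u(k-1) + t2*y(k-1)*u(k-1)
      b(k) = 1 + t3*y(k-1)**2
    """
    t1, t2, t3 = theta
    y = np.zeros(len(u))
    for k in range(1, len(u)):
        a = t1 * u[k - 1] + t2 * y[k - 1] * u[k - 1]
        b = 1.0 + t3 * y[k - 1] ** 2
        y[k] = (a + v[k]) / b
    return y

def regressor(u, y, k):
    """Information vector after multiplying the model by b(k).

    y(k) = phi(k)^T theta + v(k); the last entry contains y(k) itself.
    """
    return np.array([u[k - 1], y[k - 1] * u[k - 1], -y[k] * y[k - 1] ** 2])

rng = np.random.default_rng(0)
theta_true = np.array([0.5, 0.2, 0.3])   # assumed values
u = rng.normal(size=200)                 # zero-mean Gaussian input
v = 0.1 * rng.normal(size=200)           # zero-mean noise
y = simulate_arx_nrm(theta_true, u, v)

# The linear-in-parameter form should reproduce the data up to rounding.
residual = np.array(
    [y[k] - regressor(u, y, k) @ theta_true - v[k] for k in range(1, len(u))]
)
max_abs_residual = float(np.max(np.abs(residual)))
print(max_abs_residual)
```

In this sketch the third regressor entry contains y(k), which itself depends on v(k). This is the kind of correlation between the information vector and the noise that leads to a biased gradient estimate.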

3. Identification algorithm

3.1. Stochastic gradient (SG) algorithm

Consider the parameterized system in Equation (5) and denote the error as

(7)

where the error is evaluated with the latest available parameter estimate. This error is sometimes also called the innovation [Citation16].

Define a cost function as follows:

(8)

The SG algorithm updates the parameter estimate along the negative gradient direction of this cost function until the cost function reaches its minimum. The stochastic gradient of the cost function with respect to the parameter vector at each time instant is

(9)

The SG algorithm for identifying the parameter vector is as follows [Citation20]:

(10)

where the variable step size can be calculated by [Citation16]

(11)
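The recursion described above can be sketched in Python as follows. This is a minimal sketch that assumes the commonly used SG form for a linear-in-parameter model, with step size 1/r(k) and r(k) = r(k-1) + ||phi(k)||^2; the exact expressions in Equations (10) and (11) may differ. The function name `sg_identify`, the initial value `r0` and the test data are illustrative assumptions.

```python
import numpy as np

def sg_identify(Phi, y, r0=1.0):
    """Stochastic gradient estimate for y(k) = phi(k)^T theta + v(k).

    Phi : (N, n) array whose k-th row is the information vector phi(k)
    y   : (N,) array of outputs
    r0  : assumed initial value of the step-size denominator
    """
    n_samples, n_params = Phi.shape
    theta = np.zeros(n_params)
    r = r0
    for k in range(n_samples):
        phi = Phi[k]
        e = y[k] - phi @ theta        # scalar innovation
        r += phi @ phi                # r(k) = r(k-1) + ||phi(k)||^2
        theta = theta + phi * e / r   # O(n) work per iteration
    return theta

# Illustrative data: a linear regression with noise-independent regressors.
rng = np.random.default_rng(1)
theta_true = np.array([1.0, -0.5])
Phi = rng.normal(size=(2000, 2))
y = Phi @ theta_true + 0.05 * rng.normal(size=2000)

theta_sg = sg_identify(Phi, y)
err_initial = np.linalg.norm(theta_true)          # error of the zero initial guess
err_sg = np.linalg.norm(theta_sg - theta_true)    # error after 2000 samples
print(err_initial, err_sg)
```

Each iteration uses only inner products and vector scalings of length n, which is where the linear per-iteration cost comes from.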

3.2. Multi-Innovation SG (MI-SG) algorithm

Although the SG algorithm requires less computation than the least-squares algorithm, it converges slowly. To accelerate the algorithm, the multi-innovation technique is introduced [Citation16]. Replacing the single innovation in Equation (9) with the multi-innovation vector, and replacing the single information vector with the information matrix, gives a stacked gradient as follows:

(12)

which involves the stacking length, the stacked gradient, the stacked innovation vector and the stacked information matrix. Expanding the stacked gradient yields

(13)

in which each term is the gradient at one of the most recent time instants.

It can be seen from Equation (13) that the stacked gradient is the sum of the most recent gradients, as many as the stacking length. It can also be regarded as a weighted sum of information vectors, in which each weighting coefficient is the innovation at the corresponding time. In short, this summation or weighting increases the size of the gradient, modifies its direction and helps to accelerate the gradient algorithm. The gradient algorithm using the multi-innovation is called the multi-innovation SG (MI-SG) algorithm.

Considering Equation (13), the SG estimator in Equation (10) is rewritten as

(14)

Equations (7)–(14), except Equation (10), constitute the MI-SG algorithm.
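The multi-innovation update can be sketched as below. This is a hedged illustration that assumes the usual MI-SG form, in which the last p information vectors and innovations are stacked while the step-size denominator is still updated with the current information vector only; the paper's Equations (12)–(14) may differ in detail. The function name `mi_sg_identify`, the stacking length `p` and the test data are assumptions. With `p=1` the sketch reduces to the plain SG recursion, which gives a simple baseline.

```python
import numpy as np

def mi_sg_identify(Phi, y, p=3, r0=1.0):
    """Multi-innovation SG estimate for y(k) = phi(k)^T theta + v(k).

    Phi : (N, n) array whose k-th row is phi(k)
    p   : stacking length (number of recent innovations used per update)
    """
    n_samples, n_params = Phi.shape
    theta = np.zeros(n_params)
    r = r0
    for k in range(n_samples):
        start = max(0, k - p + 1)
        Phi_p = Phi[start:k + 1]               # rows: the most recent information vectors
        E_p = y[start:k + 1] - Phi_p @ theta   # stacked innovation vector
        r += Phi[k] @ Phi[k]                   # same step-size denominator as SG
        theta = theta + Phi_p.T @ E_p / r      # sum of recent gradients, O(p*n) work
    return theta

# Illustrative comparison on noise-independent regressors.
rng = np.random.default_rng(1)
theta_true = np.array([1.0, -0.5])
Phi = rng.normal(size=(2000, 2))
y = Phi @ theta_true + 0.05 * rng.normal(size=2000)

err_p1 = np.linalg.norm(mi_sg_identify(Phi, y, p=1) - theta_true)  # plain SG
err_p3 = np.linalg.norm(mi_sg_identify(Phi, y, p=3) - theta_true)  # MI-SG
print(err_p1, err_p3)
```

Because the regressors in this toy example are independent of the noise, no bias appears here; the sketch only illustrates the acceleration obtained by stacking.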

3.3. Bias compensated MI-SG (BC-MI-SG) algorithm

Let us study the properties of the parameter estimate given by the MI-SG algorithm.

Considering Equations (7) and (9), the stacked gradient in Equation (12) is rewritten as

(15)

where the true parameter vector appears explicitly.

Considering Equation (15), Equation (14) becomes

(16)

Taking the expectation of both sides of Equation (16) yields

(17)

Supposing that the two quantities concerned are unrelated, and using the conditional expectation formula [Citation20]

(18)

the fourth term on the right side of Equation (17) can be written as

(19)

Equation (17) then becomes

(20)

When the corresponding conditions hold, we have

(21)

It can be seen from Equation (21) that the parameter estimate obtained by the MI-SG algorithm is biased. The bias is caused by one term on the right side of Equation (16). To obtain an unbiased estimate, this term must be subtracted from the right side of Equation (14), i.e.

(22)

However, this term cannot be calculated directly because it depends on an unknown quantity. For the ARX-NRM in Figure 1, considering Equation (6) and replacing the unknown quantity by its estimate, the bias term is calculated by

(23)

Substituting Equation (23) into Equation (22) yields

(24)

Equations (7)–(13) and Equation (24), except Equation (10), constitute the bias compensated MI-SG (BC-MI-SG) algorithm.
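The mechanism behind the bias and its compensation can be summarized in the following LaTeX sketch. The notation is assumed for illustration and is not recovered from the paper: \theta is the true parameter vector, \hat\theta(k) the estimate, \Phi(p,k) the stacked information matrix, V(p,k) the stacked noise vector, r(k) the step-size denominator and \hat\Delta(k) an estimate of the noise-correlation term. It is a generic outline of the argument, not a reproduction of Equations (15)–(24).

```latex
% Stacked linear-in-parameter model and stacked innovation (assumed notation)
\begin{align*}
Y(p,k) &= \Phi^{\mathsf T}(p,k)\,\theta + V(p,k), \\
E(p,k) &= Y(p,k) - \Phi^{\mathsf T}(p,k)\,\hat\theta(k-1)
        = \Phi^{\mathsf T}(p,k)\bigl[\theta - \hat\theta(k-1)\bigr] + V(p,k).
\end{align*}
% MI-SG update with the innovation expanded
\begin{align*}
\hat\theta(k) &= \hat\theta(k-1) + \frac{1}{r(k)}\,\Phi(p,k)\,E(p,k) \\
              &= \hat\theta(k-1) + \frac{1}{r(k)}
                 \Bigl\{\Phi(p,k)\Phi^{\mathsf T}(p,k)\bigl[\theta - \hat\theta(k-1)\bigr]
                 + \Phi(p,k)\,V(p,k)\Bigr\}.
\end{align*}
% If \mathrm{E}[\Phi(p,k)V(p,k)] \neq 0, the last term pushes the estimate away from \theta.
% Compensated update: subtract an estimate of that term.
\begin{equation*}
\hat\theta(k) = \hat\theta(k-1) + \frac{1}{r(k)}
                \Bigl[\Phi(p,k)\,E(p,k) - \hat\Delta(k)\Bigr],
\qquad \hat\Delta(k) \approx \mathrm{E}\bigl[\Phi(p,k)\,V(p,k)\bigr].
\end{equation*}
```

For the ARX-NRM the information vector contains the current output, so the expectation of the product of the information matrix and the noise does not vanish, which is why a compensation term is needed at all.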

4. Performance analysis

4.1. Computational analysis

The computational costs of each iteration of the SG, MI-SG, RLS and BC-MI-SG algorithms are shown in Table 1. It is seen that:

- The computational burden of each of the three SG-type algorithms grows linearly with the number of parameters.

- The MI-SG and BC-MI-SG algorithms require more computation than the SG algorithm because of the multi-innovation.

- The proposed algorithm requires less computation than the recursive least squares (RLS) algorithm, whose complexity is quadratic [Citation9,Citation16].

Table 1. Computational costs of the SG, MI-SG, BC-MI-SG, and RLS algorithms.
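The scaling behaviour discussed above can be illustrated with a rough operation count. The formulas below are back-of-the-envelope multiplication counts for textbook forms of the SG, MI-SG and RLS recursions; they are assumptions made for illustration and are not the entries of Table 1. The bias compensation step is omitted because its cost depends on details that are not reproduced here. The symbols `n` (number of parameters) and `p` (stacking length) are likewise assumed names.

```python
def sg_cost(n):
    """Rough multiplications per SG iteration.

    n for the innovation, n for the step-size update, n for the parameter update.
    """
    return 3 * n

def mi_sg_cost(n, p):
    """Rough multiplications per MI-SG iteration with stacking length p.

    p*n for the stacked innovation, n for the step-size update,
    p*n for the stacked gradient.
    """
    return (2 * p + 1) * n

def rls_cost(n):
    """Rough multiplications per RLS iteration.

    About n^2 for P*phi, about n^2 for the covariance update, plus O(n) terms.
    """
    return 2 * n * n + 4 * n

n, p = 10, 3
costs = {"SG": sg_cost(n), "MI-SG": mi_sg_cost(n, p), "RLS": rls_cost(n)}
print(costs)
```

Under these assumed counts the SG-type recursions grow linearly in n for a fixed p, while the RLS recursion grows quadratically, so the gap widens as the number of parameters increases.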

5. Experiment results

5.1. Numerical example

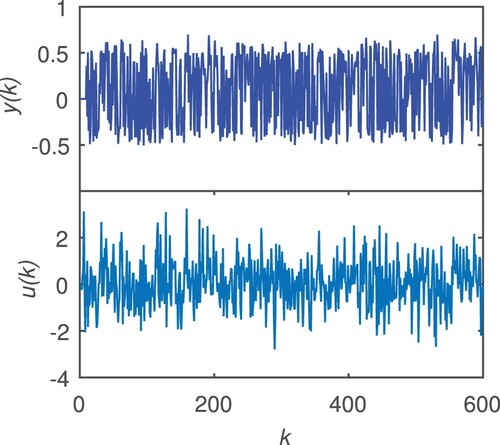

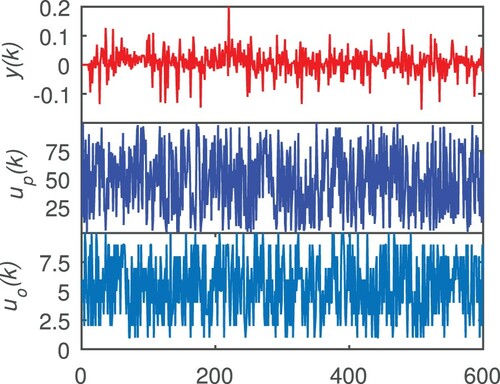

Consider an ARX-NRM in Equation (1) with

(28)

where the input is a zero-mean Gaussian signal and a zero-mean noise is added to the model. 600 observations are collected and depicted in Figure . Every entry of the parameter vector is given the same initial value, and an estimation error is defined to measure the accuracy of the estimates.
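An estimation error of this kind is often computed as a relative error between the estimate and the true parameter vector. The helper below is a minimal sketch that assumes the relative 2-norm error, which is a common choice in the identification literature; the paper's own definition is not reproduced here, and the name `relative_error` and the sample values are hypothetical.

```python
import numpy as np

def relative_error(theta_hat, theta_true):
    """Relative 2-norm estimation error ||theta_hat - theta|| / ||theta|| (assumed definition)."""
    theta_hat = np.asarray(theta_hat, dtype=float)
    theta_true = np.asarray(theta_true, dtype=float)
    return float(np.linalg.norm(theta_hat - theta_true) / np.linalg.norm(theta_true))

# Hypothetical values: an estimate that is off by 0.1 in the first entry.
delta = relative_error([1.1, 2.0], [1.0, 2.0])
print(delta)
```

Evaluating this quantity after every recursion gives an error curve over time, which is how the algorithms can be compared on the same data.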

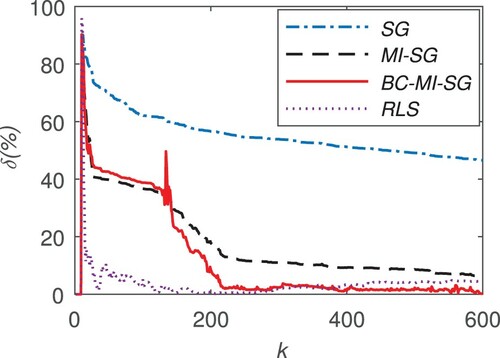

Results using BC-MI-SG, MI-SG, SG and RLS algorithms

The parameter estimates obtained with the proposed BC-MI-SG algorithm are shown in Table 2, and the estimation errors are depicted in Figure . For comparison, the parameter estimates given by the SG, RLS and MI-SG algorithms are also listed in Table 2, and the estimation errors of these three algorithms are also depicted in Figure .

Table 2. Estimates using the SG, MI-SG, RLS and BC-MI-SG algorithms.

It can be seen that:

- In Figure , all curves decrease as k increases, which means that the estimation errors of the algorithms become smaller as new data are used.

- The error curves of the two algorithms with MI are almost the same, and both are far lower than that of the SG algorithm. In other words, the parameter estimates given by the two algorithms with MI are more accurate than those given by the SG algorithm.

- Of the two curves with MI, the curve of the proposed BC-MI-SG algorithm is the lower one, which shows that the estimation error given by the proposed algorithm is smaller. That is to say, the bias compensation improves the estimation accuracy of the MI-SG algorithm.

- In the second half of the identification, the curve of the RLS algorithm differs little from that of the proposed algorithm, which implies that the RLS algorithm can also give an accurate estimate for the ARX-NRM. However, the RLS algorithm requires so much computation that it is unsuitable for some situations that need fast identification (see Table 1 for more details).

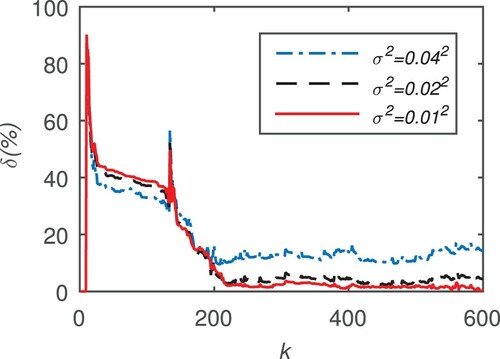

Results using the BC-MI-SG algorithm with different noise variances

It can be seen that:

- For a given noise variance, the overall trend of the estimation error decreases as k increases.

- When the variance is small, the curve of the estimation error is relatively smooth. As the variance increases, the fluctuation of the error curve increases.

- The estimation error for the smallest noise variance is smaller than those for the two larger variances. That is, a larger noise variance is not conducive to improving the accuracy of the estimate.

5.2. Case study

A chemical model describing propylene catalytic oxidation [Citation5,Citation15,Citation34] is used to validate the proposed algorithm. Its two inputs are the oxygen and propylene concentrations, and the rate of disappearance of propylene is taken as the output variable. The two inputs are taken as random integers drawn from two given ranges, and the noise is taken as a white noise sequence with mean zero. The curve of the 600 observed data is shown in Figure .

An ARX-NRM structure is used to model the data. The estimate obtained by the proposed BC-MI-SG algorithm is listed in Table 3, together with the corresponding estimation error.

Table 3. Results using the SG, MI-SG and BC-MI-SG algorithms for the propylene catalytic oxidation data.

For comparison, the estimates of the SG and MI-SG algorithms are also shown in Table 3. It can be seen that the estimation error of the proposed algorithm is the smallest among the three algorithms, which means that the model obtained by the BC-MI-SG algorithm is the most accurate of the three.

6. Conclusion

To identify the parameters of an ARX-NRM, a bias compensated multi-innovation stochastic gradient algorithm is presented. To accelerate the traditional stochastic gradient algorithm, the multi-innovation technique is integrated into it, replacing the scalar innovation in the SG algorithm with an innovation vector. Theoretical analysis shows that the MI-SG algorithm gives a biased estimate because the output contained in the information vector is correlated with the noise. To obtain an unbiased estimate, the bias is first calculated and then compensated in the MI-SG algorithm. The proposed algorithm is validated by numerical experiments and by the modelling of propylene catalytic oxidation. The results indicate that the proposed algorithm gives accurate estimates with less computation than the RLS algorithm.

Data availability statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Becerra JA, Ayora MM, Reina-Tosina J, et al. Sparse identification of Volterra models for power amplifiers without pseudoinverse computation. IEEE Trans Microwave Theory Tech. 2020;68(11):4570–4578.

- Jing S. Identification of a deterministic Wiener system based on input least squares algorithm and direct residual method. Int J Model Ident Control. 2020;34(3):208–216.

- Obeid S, Ahmadi G, Jha R. NARMAX identification based closed-loop control of flow separation over NACA 0015 airfoil. Fluids. 2020;5(3):100.

- Wu H, Chen SX, Zhang YH, et al. Robust bearings-only tracking algorithm using structured total least squares-based Kalman filter. Automatika. 2015;56(3):275–280.

- Chen J, Ding F, Zhu Q, et al. Maximum likelihood based identification methods for rational models. Int J Syst Sci. 2019;50(14):2579–2591.

- Kambhampati C, Mason JD, Warwick K. A stable one-step-ahead predictive control of non-linear systems. Automatica (Oxf). 2000;36(4):485–495.

- Mu B, Bai E, Zheng W, et al. A globally consistent nonlinear least squares estimator for identification of nonlinear rational systems. Automatica (Oxf). 2017;77:322–335.

- Zhu Q, Wang Y, Zhao D, et al. Review of rational (total) nonlinear dynamic system modelling, identification, and control. Int J Syst Sci. 2015;46(12):2122–2133.

- Zhu Q, Billings SA. Parameter estimation for stochastic nonlinear rational models. Int J Control. 1993;57(2):309–333.

- Zhu Q, Billings SA. Recursive parameter estimation for nonlinear rational models. Dept of Automatic Control and System Engineering. Sheffield: University of Sheffield; 1991.

- Billings SA, Chen S. Identification of non-linear rational systems using a prediction-error estimation algorithm. Int J Syst Sci. 1989;20(3):467–494.

- Mao K, Billings SA, Zhu Q. A regularised least squares algorithm for nonlinear rational model identification. Department of Automatic Control and Systems Engineering. Sheffield: University of Sheffield. 1996.

- Billings SA, Zhu Q. Rational model identification using an extended least-squares algorithm. Int J Control. 1991;54(3):529–546.

- Zhu Q. A back propagation algorithm to estimate the parameters of non-linear dynamic rational models. Appl Math Model. 2003;27(3):169–187.

- Zhu Q. An implicit least squares algorithm for nonlinear rational model parameter estimation. Appl Math Model. 2005;29(7):673–689.

- Ding F. System identification: New theory and methods. Beijing: Science Press; 2013.

- Wang J, Ji Y, Zhang C. Iterative parameter and order identification for fractional-order nonlinear finite impulse response systems using the key term separation. Int J Adapt Control Signal Process. 2021;35(8):1562–1577.

- Ji Y, Kang Z. Three-stage forgetting factor stochastic gradient parameter estimation methods for a class of nonlinear systems. Int J Robust Nonlinear Control. 2021;31(3):971–987.

- Xu L, Ding F, Yang E. Auxiliary model multiinnovation stochastic gradient parameter estimation methods for nonlinear sandwich systems. Int J Robust Nonlinear Control. 2021;31(1):148–165.

- Fang C, Xiao D. Process identification. Beijing: Tsinghua University Press; 1988.

- Ljung L. System identification (2nd Ed.): Theory for the user. USA: Prentice Hall PTR; 1999.

- Ouyang T, Xu P, Chen J, et al. A novel state of charge estimation method for lithium-ion batteries based on bias compensation. Energy. 2021;226:120348.

- Ni J, Gao Y, Chen X, et al. Bias-compensated sign algorithm for noisy inputs and its step-size optimization. IEEE Trans Signal Process. 2021;69:2330–2342.

- Grace JB. Instrumental variable methods in structural equation models. Methods Ecol Evol. 2021;12(7):1148–1157.

- Jin S, Yang-Wallentin F, Bollen KA. A unified model-implied instrumental variable approach for structural equation modeling with mixed variables. Psychometrika. 2021;86(2):564–594.

- Filipovic V, Nedic N, Stojanovic V. Robust identification of pneumatic servo actuators in the real situations. Forsch Ingenieurwes. 2011;75(4):183–196.

- Tao H, Li X, Paszke W, et al. Robust PD-type iterative learning control for discrete systems with multiple time-delays subjected to polytopic uncertainty and restricted frequency-domain. Multidimension Syst Signal Process. 2021;32(2):671–692.

- Xu Z, Li X, Stojanovic V. Exponential stability of nonlinear state-dependent delayed impulsive systems with applications. Nonlinear Analysis: Hybrid Systems. 2021;42:101088.

- Zhang X, Wang H, Stojanovic V, et al. Asynchronous fault detection for interval type-2 fuzzy nonhomogeneous higher-level Markov jump systems with uncertain transition probabilities. IEEE Trans Fuzzy Syst. 2021. doi:https://doi.org/10.1109/TFUZZ.2021.3086224

- Chaudhary NI, Raja MAZ, Khan AUR. Design of modified fractional adaptive strategies for Hammerstein nonlinear control autoregressive systems. Nonlinear Dyn. 2015;82(4):1811–1830.

- Chaudhary NI, Raja MAZ, He Y, et al. Design of multi innovation fractional LMS algorithm for parameter estimation of input nonlinear control autoregressive systems. Appl Math Model. 2021;93:412–425.

- Khan AA, Shah SM, Raja MAZ, et al. Fractional LMS and NLMS algorithms for line echo cancellation. Arab J Sci Eng. 2021;46:9385–9398.

- Raja MAZ, Chaudhary NI. Two-stage fractional least mean square identification algorithm for parameter estimation of CARMA systems. Signal Process. 2015;107:327–339.

- Dimitrov SD, Kamenski DI. A parameter estimation method for rational functions. Comput Chem Eng. 1991;15(9):657–662.