?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We introduce a solution scheme for portfolio optimization problems with cardinality constraints. Typical portfolio optimization problems are extensions of the classical Markowitz mean–variance portfolio optimization model. We solve such types of problems using a method similar to column generation. In this scheme, the original problem is restricted to a subset of the assets resulting in a master convex quadratic problem. Then the dual information of the master problem is used in a subproblem to propose more assets to consider. We also consider other extensions to the Markowitz model to diversify the portfolio selection within given intervals for active weights.

Introduction

In portfolio optimization, an investor allocates funds among available assets. The objective is to select the best portfolio among a set of feasible portfolios, where the quality of a portfolio is measured in terms of different factors, classically expected return and risk. The portfolio optimization problem is, naturally, a multi-objective problem where there is a trade-off between risk and return: typically a higher expected return implies facing higher risk and vice versa. This trade-off is set according to the investor’s risk aversion. A standard model for portfolio optimization is the traditional Markowitz mean–variance portfolio problem (Markowitz, Citation1952). In this model, the trade-off between expected return and risk is represented by a weighted combination of return expectation and return variance. The Markowitz model is theoretically very strong but has received a lot of criticism because the setting is not realistic; thus, it is important to extend the simple Markowitz model with cardinality and quantity constraints (see, e.g., Cesarone, Scozzari, & Tardella, Citation2013).

In this article, we consider the traditional Markowitz mean–variance portfolio problem extended by some practical constraints including cardinality and quantity constraints. Existing methods for tackling such hard constraints are based on heuristics and evolutionary computing. We propose a novel methodology based on a column generation approach to quadratic optimization problems. To do this, the constraints are divided into two groups. The first group, the “easy” ones, is a set of linear constraints. The second group of constraints includes the hard ones in terms of computational complexity. This group consists of tracking error constraints, which are not convex, and cardinality constraints, which are of a combinatorial nature.

The basic model includes constraints setting limits on the assets’ weights based on multiple features such as active weights, market capital quantile, sector, and deviation from a benchmark. We define the basic model to be the Markowitz model extended by the first group of constraints. Because all of the constraints included are linear, the basic model is a (convex) quadratic optimization problem and can be solved very efficiently to optimality. Indeed, our experimental analysis indicates that commercial solvers are able to easily solve this problem. Our methodology will be based on iteratively solving several instances of the basic model; thus, efficiency is critical.

The second group consists of three type of constraints, active share constraints on the deviation from the benchmark, tracking error constraints on the correlation between the returns of the selected portfolio and the benchmark, and cardinality constraints on the number of active assets in the portfolio. We tackle the tracking error constraints by continuous adjustment of the risk parameter. To handle the cardinality constraints, we propose a novel asset selection subproblem based on the marginal effect of investing in those assets. Our method could be considered an extension of column generation to the quadratic setting.

In the literature, significant attention is paid to real-life trading costs and monitoring availability; in particular, cardinality constraints are studied. Cesarone et al. (Citation2013) provide a discussion on the computational complexity of this type of problems. Though the classical Markowitz model is a convex quadratic programming model, the cardinality constraint results in a significantly more complex NP-hard problem, which can be modeled via a mixed-integer quadratic program. An exact approach is provided by Bienstock (Citation1996) with a branch-and-cut algorithm. Even though this work provides theoretically strong results, such an approach is not practical for real-life problems (see Cesarone et al., Citation2013). Therefore, the algorithms considered in the literature are mainly based on local search and multi-objective evolutionary algorithms, which fail to guarantee global optimality. The effect of genetic algorithms, tabu search, and simulated annealing on the cardinality constraint is seen in the detailed work of Chang, Meade, Beasley, and Sharaiha (Citation2000) and references therein. Extensions to evolutionary algorithms, such as memetic algorithms, are also studied for tackling the cardinality constraint (Streichert, Ulmer, & Zell, Citation2004). More experiments and comparisons in evolutionary algorithms can be found in the work of Anagnostopoulos and Mamanis (Citation2011).

The rest of this article is structured as follows. In the following section, we provide the notation and formulate the problem mathematically. Then we present the methodology to solve the problem. Next, we perform a numerical test of our methods. We describe the data we use and evaluate the performance of our solution by analyzing the results. The conclusion, alternative methods to adjust the solution, and final remarks are provided in the last section.

Preliminaries

The portfolio construction problem follows the model of Markowitz (Citation1952), where a risk-averse investor’s goal is to construct a portfolio that maximizes expected return and minimizes risk. Risk aversion is quite a realistic assumption given large experimental evidence involving, for instance, lotteries (Holt & Laury, Citation2002). The Markowitz model uses the volatility of the portfolio returns as measure of risk. Given Ω, the variance–covariance matrix of the assets’ return, and α, the vector of assets’ expected returns, we formulate the following optimization model:

(1)

(1)

where the vector of decision variables w represents the percentage of wealth invested in each asset, and

denotes the set of all available assets. The parameter

reflects the investor’s risk aversion, balancing the preference between risk and return. The first constraint restricts the weight allocation to be nonnegative (short positions allowed), and the second constraint ensures that the total allocation of asset weights sums up to 1 (simply indicating that all of the funds have to be invested in our asset universe).

It has been assumed that the investor cares here only about the mean and variance of portfolio returns and we neglect all of the higher moments such as skewness (tail risk) or kurtosis (fatness of tails). Thus, up until this point we have used the same assumptions under which in a frictionless environment a mean–variance efficient frontier can be easily constructed (see, e.g., Cochrane, Citation2009) and the optimal portfolio chosen.

The basic model

We add constraints to model (1) to bring the analysis closer to practical implementation and more recent developments in the literature. We use MCAPQk to denote the set of assets corresponding to companies in the kth market capital quintile, where The index k = 1 stands for the largest quintile and k = 5 stands for the smallest. The assets in the given problem are being distributed into sectors: each asset i belongs to some sector j. We define the set sectorj as the set of all assets corresponding to companies belonging to sector

All of the extensions are financially quite intuitive. We assume that a benchmark with weights wb is exogenously specified. We introduce the auxiliary decision variable which measures the deviation from the given benchmark in terms of assets’ weights.

The first difference with model (1) is that we use as the objective function instead of

In our approach, instead of considering λ as an exogenously fixed parameter, the value of λ is dynamically adjusted in order to satisfy risk exposure constraints. More details are provided in the Solution Approach section.

We add constraints on the deviation from the benchmark according to different features:

The deviation from benchmark weight constraint restricts the individual deviation from the benchmark weight from −5% to +5%. The assets in the given problem are being distributed into sectors; each asset belongs to a sector. Thus, the sector active weight constraint restricts the total summed deviation for each of the sectors to be less than 10%.

The market capitalization of an asset captures the asset’s capitalization size relative to the market. The market cap quintile constraint ensures that the total summed deviation per quintile capitalization size does not exceed 10%. The beta active weight constraint ensures that the total sum of the product of beta, an exogenously specified measure of each of the asset’s sensitivity to the whole market, and the deviation from the benchmark is restricted to no more than 10%. These constraints ensure that the constructed portfolio does not deviate much from the benchmark, as well as that the weight allocation of the assets is distributed across sectors, capitalization, and betas. This is relevant from a risk management perspective and other policies that limit the portfolio exposure to idiosyncratic (e.g., firm specific) shocks.

Computationally hard constraints

The basic model discussed in the previous subsection could be solved efficiently. In this section, the constraints we introduce are the ones that make the problem computationally intractable.

Active share

The first nonconvex constraint we introduce is the active share constraint. The active share concept originally proposed by Cremers and Petajisto (Citation2009) and further analyzed by Cremers (Citation2017) is a measure of the relative activeness of a portfolio. There are several reasons why an investor could be interested in the active share of a portfolio. For example, in mutual funds, it is very important to know how active the fund manager is. After all, any active portfolio management services involve certain costs (management fees) that are considered to be a compensation for a portfolio manager’s effort to generate positive abnormal returns (in finance jargon, positive “alphas”). This example with a mutual fund manager might sound restrictive, but the concept is quite broad and could be applied in any portfolio selection procedure.

Because by construction the portfolio fully invested in a benchmark has a zero active share, our optimization problem simply tries to find an optimal deviation from a given benchmark. Moreover, a deviation from the benchmark can be observed by comparing the amounts invested in each asset. The active share constraint is then given by

The mathematical correctness of this constraint is also intuitive. Assume that the portfolio selected in the current solution is exactly the same as the benchmark. Then, for every asset i, resulting in

This is clearly a violation. However, if there are more significant deviations in the selected portfolio, then the constraint will be satisfied. We reformulate the active share constraint in terms of the sum of the absolute value of the deviations using Lemma 2.1.

Lemma 2.1.

Under the full portfolio constraint, for any a:

Proof.

For all i we have Thus,

and the lemma follows. □

Thus, the active share constraint is equivalent in our case to

Tracking error

The tracking error calculation introduced by Roll (Citation1992) and analyzed by Rudolf, Wolter, and Zimmermann (Citation1999) can also be seen as a measure of how the portfolio returns are dispersed relative to the benchmark.

The left part of the constraint is concave and the right part is convex. Because it is typically difficult to solve a minimization problem with concave constraints, we use an alternative method to satisfy the tracking error constraint, namely, by adjusting the risk aversion parameter values λ. Notice that the tracking error constraint is a constraint on the risk measure and thus it makes sense that the selection of λ will reflect the tracking error. Therefore, the algorithm dynamically adjusts the value of λ to satisfy the tracking error constraint. The details for this approach are given in the Solution Approach section.

Cardinality

The cardinality constraint closely relates to the idea that financial markets are not frictionless and there are substantial transaction costs and divisibility limitations. The rebalancing of a portfolio that contains hundreds of assets can be extremely costly and erode all of the net returns. This is motivation for allowing a limited number of positions in the portfolio to change. Another idea is that a portfolio with fewer assets is easy to oversee and analyze. Thus, the cardinality constraint has practical advantages. In our model, the cardinality constraint ensures that the number of active assets (i.e., assets with nonzero weight) is at least 50 and at most 70.

This constraint is a combinatorial constraint, and adding this type of constraint to a quadratic optimization problem makes the problem computationally very hard to solve. Our main contribution is a new methodology to tackle this constraint. In a nutshell, in the proposed methodology, interactively we maintain a small set of candidate assets to ensure the cardinality constraint. We find the optimal portfolio restricted to this set of assets. Then the set of assets is dynamically updated by computing the marginal effect that each asset has on the objective and including as new candidates the most promising assets, while dropping those of smaller weight. The marginal effect plays a role similar to the reduced cost in column generation for linear programs. Details are discussed in the following section.

Solution approach

In our methodology we divide the problem into a master problem and a subproblem. Given a set of candidate assets, we call the master problem the basic model introduced previously, restricted to

The master problem Psi is a (convex) quadratic optimization problem. There are efficient ways to solve this type of problem; for example, interior point methods (Wright, Citation1997).

Now we need to take care of the hard constraints introduced previously. First we look at the active share constraint or, equivalently, the total absolute deviation constraint. To fulfill this constraint, we derive the following sufficient condition:

Thus, implies the total absolute deviation constraint. Therefore, to satisfy the active share constraint, we randomly deselect assets in

to reduce the sum of the benchmark weights such that

The tracking error constraint is attained during each step by readjusting the value of λ when the constraint is violated. Notice that is one of the objective terms; hence, by adjusting λ we can make Psi

change the priority: decreasing λ gives more importance to minimizing the tracking error, ergo reducing

whereas increasing λ gives more weight to maximizing the expected revenue by allowing a higher risk, hence increasing

To satisfy the cardinality constraint, we construct the portfolio by solving problem Psi to optimality on a set of 70 selected candidate assets (all of the other assets are considered to have weight 0 in the solution). In this way, the cardinality constraint is satisfied. Iteratively the candidate set is modified by dropping assets of zero or small weight and replacing them with new candidate assets selected from the given universe of assets.

As we solve problem (Psi) many times it is important that we use an efficient solver. We use Mosek version 8 (MOSEK ApS, Citation2017) as solver under the Yalmip (Lofberg, Citation2004) environment (release R20180926, MATLAB 2017b). This solver was the most efficient of the solvers we tested for our problem.

Now we explain how we initially pick the set of assets and how we update it in each iteration. For each given review period, we initialize the algorithm by setting as the set of assets that were selected in the portfolio of the previous period. In the case when a candidate asset from the previous balance portfolio is no longer in the market, it is replaced in

by a random asset.

Iteratively, after solving Psi over a set

of candidate assets, we remove from

the assets with the lowest weight and all assets that we do not invest in (investment

) and remove them from

Then, to adjust the cardinality—that is, the number of assets that we consider in the portfolio to 70—we reselect assets not in

to be added to

We do this based on the marginal effect of investing in those assets. Take an asset

and let

We are interested in knowing whether i will have a positive or 0 weight when solving (Psi

). To check this, we fix

in (Psi

). Notice that this new problem is very similar to (Psi

), and we call it (Psi

).

The objective of (Psi) is the same objective of (Psi

) plus the term

The marginal direct effect on the objective from including asset i can be split into three main parts. These three parts are the marginal effect on the portfolio variance, the marginal effect on the mean portfolio return, and the marginal effect on the turnover costs. The marginal direct effect on the objective function and the turnover from including asset i is obtained from EquationEquation (2)(2)

(2) .

(2)

(2)

In the formulation of marginal costs, denotes the amount invested in asset i in the previous portfolio. Correspondingly,

is an indicator function. This indicator function takes a value of 1 if during the previous period a numerically significant amount has been invested in asset i—that is, at least 0.001% of the current budget—and 0 otherwise.

The constraints of (Psi) are the same constraints of (Psi

) except for the constant term. The indirect effect can be obtained by using the dual (shadow) values. Shadow values are typically described for linear optimization problems, but under strong duality they can be used for nonlinear models as well. Write the constraints of (Psi

) as

and let s denote the corresponding dual values. Then the indirect effect of investing in an asset i, as in the case of column generation, is given by EquationEquation (3)

(3)

(3) .

(3)

(3)

Then the marginal effect δi of investing in asset i is obtained by using EquationEquation (4)(4)

(4) .

(4)

(4)

The assets with the most negative marginal effect are added to the list of candidate assets, such that the cardinality of the new set is 70. Then the convex problem (Psi

) is solved again. We choose considering investment in a fixed number of 70 assets because investing in fewer than 70 assets leads to a smaller feasible region for (Psi

) and therefore leads to a worse objective than considering investment in more assets. Algorithm 2.1 describes the process of optimizing the portfolio.

Algorithm 2.1:

CG for review period t

Data:

α: Mean return parameter

Ω: Variance–covariance matrix

: Previous period portfolio

wb: Benchmark portfolio

: Risk parameter

λ = 5: Adjusted risk parameter

: Adjusted obtained weights in period t − 1

Removed

: Assets in which we do not invest in period t − 1

: Number of assets in which we do not invest

: Initial portfolio

: Difference in weights compared to the benchmark

: Turnover penalty

Result:

while time minutes do

| while do

| | Add arbitrary asset to Removed

| End

| Obtain wt and dt from Psim with

| if then

| | Set

| else

| | if then

| | | Set

| | else

| | | if Psim(wt,)+

turnover(wt,wb) <Psim(

))+

| | | | then

| | | Set

| | | end

| | | set oldRemoved = Removed

| | | set

}

| | | set

| | | set

| | | set

| | | Select assets based on with best marginal effect at wt on the objective

| | | newRemoved = Removed excluding the selected assets

| | | if (oldRemoved = newRemoved) then

| | | | reselect random non-removed assets

| | | | newRemoved = Removed excluding the reselected assets

| | | end

| | | Removed = newRemoved

| | end

| end

end

Application

Next, we apply the solution approach on the data set used and evaluate our solution with relevant performance metrics.

Data

The problem is defined by Principal Inc., a global investment company. The company also provided the data to test the solution approach. We were provided with 10 years of time series data starting at January 3, 2007, which we refer to as S&P 500 data, and estimators for the 4-week variance–covariance matrix of the return of those assets over the same time interval. The entries are updated every 28 days (4 weeks). The elements of each entry for a date are: the identifier which is the unique identifying SEDOL code, the sector of the company which will be used to set the sector active weight in an interval, the beta value which reflects the volatility of an asset compared to the whole market, the alpha score which is the performance estimation shown as the expected return, name of the asset, benchmark weight which is the investment amount in the provided benchmark and the market cap quintile which reflects the size of the asset’s company by the quantile in the market.

In the results sheet we are given the historical returns for each 4 week period. We assume that the returns on a date are the result of the investment done 4 weeks before. Thus, the return entry at the first date January 3, 2007, is actually the return coming from the investment made on December 6, 2006.

Performance measurements

The basis of the cost calculations are the portfolio weights w and the 4-week returns of the given portfolio r. For the calculations of our performance measures we also include the turnover-adjusted returns. The turnover is penalized by 0.5% in our method to avoid high costs corresponding to changing portfolio. The turnover is calculated by the following two formulas:

We evaluate our solution by comparing the following results with the benchmark results both including and excluding the turnover costs:

Cumulative return is the total return obtained. It is used to see how well the chosen portfolio scheme has performed at the end of the final period.

Annual return converts the cumulative period into an average annual return. The advantage of using annual returns is that this performance measure is independent of the measured time interval.

Annualized excess return is defined as the difference between the annual return of the proposed portfolio and the annual return of the benchmark.

Tracking error is the standard deviation of the difference between the returns of the chosen portfolio and the benchmark. We use annualized tracking error by considering the number of rebalances in a year. The tracking error can be used to analyze how closely the portfolio follows the benchmark.

Sharpe ratio is a measure to show the return of the portfolio compared to the risk it carries (Sharpe, Citation1994). It is computed by subtracting the best risk-free option from the final return and dividing it by the standard deviation of the observed returns. Because a risk-free option is not investing at all, we take this value as 1. This ratio helps us to understand how choosing riskier assets affects the extra return we have compared to the risk-free option. This ratio is helpful to see the trade-off between the return and the risk.

Information ratio is a measure to show how good the portfolio returns compared to the benchmark given, with a tracking error normalization. According to Grinold and Kahn (Citation2000, p. 110): “The information ratio measures achievement ex post (looking backward) and connotes opportunity ex ante (looking forward).” It is found by dividing the difference between the returns of the portfolio and benchmark by the tracking error. The information ratio is similar to Sharpe ratio; however, the information ratio gives the risk and return by taking the benchmark as the base case. A higher information ratio is given by a greater difference between the portfolio and benchmark and a lower tracking error. Therefore, a higher information ratio can be used to see how closely the benchmark is followed, with how much better return.

Results and analysis

We used a run time limit of 170 s for each review period for the algorithm. To check the performance of our method, we compare our portfolio’s performance on the Principal data set to the benchmark. In this comparison, we do not consider turnover costs for the returns of the benchmark portfolio. We therefore split our result analysis into two parts. We first compare the results of our method excluding turnover costs. In the second part, we do include the turnover costs in our portfolio.

It can be seen from our performance statistics in that the result of our method excluding turnover costs outperforms the benchmark. In each of the performance statistics, our model’s results are better than the performance statistics of the benchmark.

Table 1. Portfolio performance statistics excluding turnover costs.

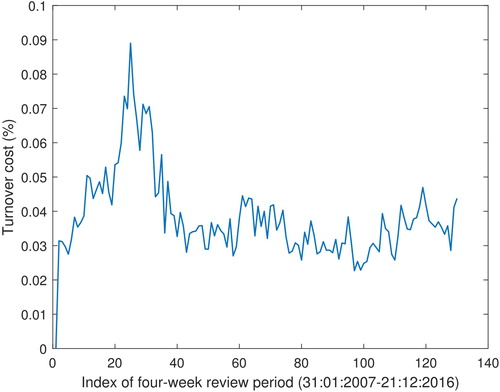

Our model handles turnover cost indirectly, but the obtained portfolios still have a large turnover. This can also be seen in , which plots the turnover costs of our portfolios over the review periods. These turnover costs are calculated as a 0.5% cost per unit turnover. indicates that our method does not focus enough on having a small turnover.

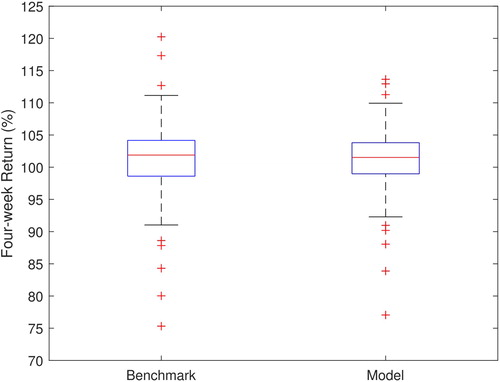

The relatively high turnover costs of our portfolios lead to our method performing slightly worse than the benchmark. These performance statistics can be seen in . These differences in performance suggest that our method adjusts the portfolio too much each period. That is why, for future development of this model, it is important to focus more on the minimization the turnover costs. In the Discussion section, we propose a few adjustments to the method to achieve lower turnover.

Table 2. Portfolio performance statistics including turnover costs.

The difference in returns is due to the 0.5% turnover cost per unit turnover. illustrates the turnover costs per 4-week review date from January 31, 2007, to December 21, 2016. shows that the resulting returns including turnover costs are on average not as high as the benchmark. This is in part compensated for by a smaller risk, which is shown as a smaller spread of the box plot. Therefore, our resulting portfolio can be seen as more robust.

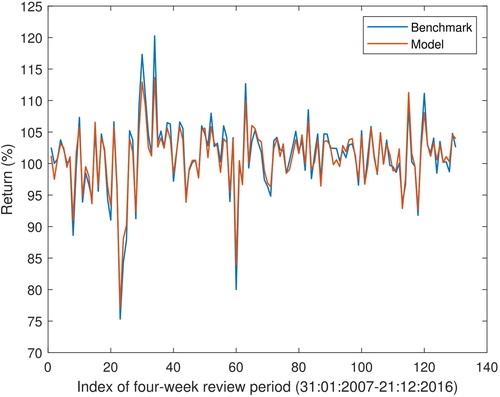

Next, we show the performance of our method over each review period. These results are shown in , which shows that the model follows the benchmark quite closely and our portfolios even lead to smaller peaks. This can be interpreted as our method leads to more robust portfolios than the benchmark.

Discussion

Our method shows promise for solving portfolio optimization problems with cardinality constraints. shows that the performance without the turnover costs is better than the benchmark, in terms of both risk and expected return. But the method could be improved in terms of turnover. and both show that the method has difficulties with the limitations of the turnover cost. Therefore, for future research we recommend constraining the allowed turnover. Although the turnover-adjusted performance in terms of cumulative return is slightly worse than the benchmark, this is partially due to the absence of the turnover costs for the benchmark. Overall, this method incorporates a comprehensive model that performs close to the benchmark and considers many practical issues. The method should work similarly on quadratic optimization problems (in particular in linear problems) with cardinality constraints.

Because in our model we do not allow infeasible solutions to exist, it is necessary to make our model more flexible or to allow (small) violations of the constraints if no solution has been found. Another improvement that could be made is to consider multiple-period portfolio selection to reduce the significant turnover costs. The turnover costs can also be reduced by considering the changes in the performance parameters α and Ω of the assets over time, which can be used to make more consistent portfolios or find trends in the performance of the assets.

One possible variation of the method is a column generation algorithm based on a random selection of the new assets; this would make the solution approach more diversified because in each iteration there would be more potential assets. In addition, this may help to escape possible local optima. The idea is to select the assets that leave and enter the set of candidate assets randomly. They could be chosen uniformly or with probabilities proportional to the marginal effects or reduced cost, where the reduced costs are determined by the shadow values.

In our algorithm, for each time period we have the initial portfolio referring back to the previous portfolio and the algorithm changes it by improvements. However, because we initiate the previous portfolio, the portfolio will end up close to the previous portfolio. In this way, we made sure that the algorithm is efficient and can be terminated quickly. This allows the model to produce good results on even a much larger scale data set (10-fold or 20-fold). On the other hand, one could look at every possible asset to initialize the portfolio for the algorithm in each time period. This will increase the computational burden but will allow further diversification of the selections.

Additional information

Notes on contributors

Lorenz M. Roebers

Lorenz M. Roebers is currently completing a master’s degree in operations research at Tilburg University. His research interests lie in mathematical optimization and heuristics, ranging from theoretical design to implementation. In fall 2019 he is starting a Ph.D. program in operations research at Tilburg University.

Aras Selvi

Aras Selvi is currently completing a master’s degree in operations research at Tilburg University. His research focuses on the theory and applications of nonconvex optimization. In fall 2019 he is starting a Ph.D. program in business analytics at Imperial College London.

Juan C. Vera

Juan C. Vera is an associate professor of operations research at Tilburg University. His main research interests are mathematical programing, probabilistic combinatorics, and their applications in operations research and theoretical computer science.

References

- Anagnostopoulos, K. P., & Mamanis, G. (2011). The mean–variance cardinality constrained portfolio optimization problem: an experimental evaluation of five multiobjective evolutionary algorithms. Expert Systems with Applications, 38(11), 14208–14217.

- Bienstock, D. (1996). Computational study of a family of mixed-integer quadratic programming problems. Mathematical Programming, 74(2), 121–140.

- Cesarone, F., Scozzari, A., & Tardella, F. (2013). A new method for mean-variance portfolio optimization with cardinality constraints. Annals of Operations Research, 205(1), 213–234.

- Chang, T.-J., Meade, N., Beasley, J. E., & Sharaiha, Y. M. (2000). Heuristics for cardinality constrained portfolio optimisation. Computers & Operations Research, 27(13), 1271–1302.

- Cochrane, J. H. (2009). Asset pricing: revised edition. Princeton University Press, New Jersey, USA.

- Cremers, M. (2017). Active share and the three pillars of active management: skill, conviction, and opportunity. Financial Analysts Journal, 73(2), 61–79.

- Cremers, M., & Petajisto, A. (2009). How active is your fund manager? A new measure that predicts performance. Review of Financial Studies, 22(9), 3329–3365.

- Grinold, R. C., & Kahn, R. N. (2000). Active portfolio management. McGraw Hill, New York.

- Holt, C. A., & Laury, S. K. (2002). Risk aversion and incentive effects. American Economic Review, 92(5), 1644–1655.

- Lofberg, J. (2004). YALMIP: A toolbox for modeling and optimization in MATLAB. In Computer Aided Control Systems Design, 2004 IEEE International Symposium on (pp. 284–289).

- Markowitz, H. (1952). Portfolio selection. The Journal of Finance, 7(1), 77–91.

- MOSEK ApS. (2017). The MOSEK optimization toolbox for MATLAB manual. version 8.1. [Computer software manual]. Retrieved from http://docs.mosek.com/8.1/toolbox/index.html

- Roll, R. (1992). A mean/variance analysis of tracking error. The Journal of Portfolio Management, 18(4), 13–22.

- Rudolf, M., Wolter, H.-J., & Zimmermann, H. (1999). A linear model for tracking error minimization. Journal of Banking & Finance, 23(1), 85–103.

- Sharpe, W. F. (1994). The Sharpe ratio. The Journal of Portfolio Management, 21(1), 49–58.

- Streichert, F., Ulmer, H., & Zell, A. (2004). Evolutionary algorithms and the cardinality constrained portfolio optimization problem. Operations Research Proceedings, 2003, 253–260.

- Wright, S. J. (1997). Primal-dual interior-point methods. SIAM, Philadelphia.