Abstract

In search and rescue missions, teleoperated rovers equipped with sensor technology are deployed into harsh environments to search for targets. To support the search task, unimodal/multimodal cues can be presented via visual, acoustic and/or haptic channels. However, human operators often perform the search task in parallel with the driving task, which, according to multiple resource theory, can cause the two tasks to compete for attentional resources. Navigating corners can be a particularly challenging aspect of remote driving, as described by the Cornering Law. Therefore, search cues should not interfere with cornering. The present research explores how unimodal/multimodal search cues affect cornering performance, with typical communication delays of 50 ms and 500 ms. One hundred thirty-one participants, distributed into two delay groups, performed a target search task with unimodal/multimodal search cues. Search cues did not interfere with cornering performance with 50 ms delays. For 500 ms delays, search cues presented via the haptic channel significantly interfered with the driving task.

Practitioner summary: Teleoperated rovers can support search and rescue missions. Search cues may assist the human operator, but they may also interfere with the task of driving. The study examined interference of unimodal and multimodal search cues. Haptic cues should not be implemented for systems with a delay of 500 ms or more.

1. Introduction

Teleoperation of mobile ground robots is frequently used for exploring hazardous environments (Opiyo et al. 2021), such as in lunar, deep-sea, or search and rescue (SAR) operations. These ‘harsh’ environments are unstructured and dynamically changing (Wong et al. 2017), making successful navigation of ground robots, such as rovers, challenging for human operators. SAR operations, in which rovers are deployed to explore the scenery and search for victims (Rogers et al. 2017) or bomb threats (Nelles et al. 2020), can induce substantial workload for operators (Khasawneh et al. 2019). Most teleoperation systems are prone to communication delays between the operator and teleoperator environment (hereafter: delays), which can additionally increase the operators’ workload (Lu et al. 2019) and frustration (Yang and Dorneich 2017), and impair the performance of the human-machine system (e.g. Lu et al. 2019; Winck et al. 2014). SAR tasks with unmanned ground vehicles are often composed of two sub-tasks that operators need to coordinate simultaneously (Yang and Dorneich 2017): the remote search task and the remote driving task (e.g. Luo et al. 2019). For the remote search task, operators typically need to search for and identify the position of targets in order to initiate further steps (e.g. communicate the position). The remote driving task comprises the navigation of the rover while searching for these targets. Operators need to avoid collisions with obstacles or getting stuck (Casper and Murphy 2003; Jones, Johnson, and Schmidlin 2011), which is particularly difficult when navigating corners (i.e. cornering; Helton, Head, and Blaschke 2014).
Hence, operators should be supported in coordinating both tasks during critical stages, which can be achieved by incorporating user-centred approaches based on Human Factors/Ergonomics (HF/E) principles into the user interface design of SAR teleoperation systems.

Multimodal feedback has been identified as a particularly prominent approach to support operators in teleoperation contexts (Chen, Haas, and Barnes 2007). For the search task, bimodal or trimodal combinations of visual, acoustic, and haptic feedback can be provided for cueing errors (Yang and Dorneich 2017), threats (Gunn et al. 2005), or improving target detection (e.g. by providing directional cues to support orientation; Hancock et al. 2013). While multimodal feedback constitutes a promising approach for teleoperation, its effects on performance when compared to unimodal feedback via the respective channels have been rather inconclusive. Some studies found positive effects (Hancock et al. 2013; Hopkins et al. 2017; Triantafyllidis et al. 2020; for a meta-analysis see Burke et al. 2006) that were context- or outcome-specific, whereas other studies found no effects (Lathan and Tracey 2002) or even negative effects (Benz and Nitsch 2017). Hence, to study the question of how multimodal feedback benefits search task performance and situation awareness in SAR contexts, we conducted an experiment with 131 participants, in which operators had to navigate a rover through a simulated virtual environment to search for targets (Benz 2019). Visual, acoustic and haptic stimuli indicating the direction in which a target could be found were presented as unimodal, bimodal or trimodal cues for the search task (Benz 2019). Results suggested that the addition of search cues generally benefitted search task performance (i.e. the number of targets found), while multi- or bi-modal feedback was not significantly related to increased performance when compared to the successful unimodal conditions (using the visual or acoustic channel; Benz 2019). Hence, with these results we examined how search cues affect the search task.
Yet, because teleoperation in SAR scenarios is related to dual-task loads, it is not clear how much (multimodal) search cues presented through these channels interfere with the driving task.

Therefore, the objective of the present research was to examine how unimodal or multimodal visual, acoustic and haptic search cues are related to performance decrements in the remote driving task of the same experiment. The SAR task was performed with a constant delay of either 50 ms, a realistic ‘real-time’ level when accounting for network connectivity, video streams, etc. (Condoluci et al. 2017; Zulqarnain and Lee 2021), or 500 ms, a level which is realistic for SAR missions for disaster response (e.g. due to weakened communication networks; Chen and Barnes 2014, as cited by Khasawneh et al. 2019). Delays of 500 ms have been shown to complicate remote driving, in particular cornering (e.g. Cross et al. 2018; Storms and Tilbury 2018). For the present research, cornering time (i.e. the time for navigating through a corner; Chan, Hoffmann, and Ho 2019; Cross et al. 2018) was the central measure of driving task performance for the following reasons: First, since the search cues provided spatial/directional information that indicated the direction of the next target, they would have changed most dynamically during cornering and potentially interfered most strongly with the driving task. Second, because cornering has been identified as a particularly relevant aspect of remote driving (Cross et al. 2018; Helton, Head, and Blaschke 2014) that has been shown to be increasingly affected by delays (Hoffmann and Drury 2019; Hoffmann and Karri 2018; Scholcover and Gillan 2018; Storms and Tilbury 2018), it has received increased attention from teleoperation research over the last decade, leading to the formulation of the Cornering Law (Helton, Head, and Blaschke 2014; Pastel et al. 2007).
Parallel to Fitts’ Law (Fitts 1954), the Cornering Law predicts cornering time based on the index of difficulty of a corner (IDC), which is determined by the width of its aperture (w) and the vehicle width (p; IDC = p/(w − p); Pastel et al. 2007). Hence, cornering performance is modelled as a function of objective properties of the cornering task and has thus mostly been studied in geometric driving circuits with squared or round corners (Chan, Hoffmann, and Ho 2019; Cross et al. 2018; Helton, Head, and Blaschke 2014; Storms and Tilbury 2018). While this approach has allowed gathering empirical support for the formulation of the Cornering Law, SAR scenarios are often characterised by unstructured, planetary-like environments that require cornering around less regular corners. With the present research, we therefore seek to extend this knowledge by examining in a realistic SAR scenario how cornering is affected by potential interference from unimodal/multimodal search cues, communicated through visual, acoustic, or haptic channels. Whilst the study described in Benz (2019) only investigated the effects of these search cues on search performance in the SAR context, the present study extends this research by examining their effects on operating performance as indicated by cornering times, which is also a crucial determinant of overall teleoperation performance.
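
To make the index of difficulty concrete, the following minimal sketch evaluates IDC = p/(w − p) for a few corners. The function name and the widths are hypothetical illustrations and are not values from the study.

```python
def index_of_difficulty(aperture_width: float, vehicle_width: float) -> float:
    """Cornering Law index of difficulty: IDC = p / (w - p).

    aperture_width (w) and vehicle_width (p) must share the same unit.
    """
    if aperture_width <= vehicle_width:
        raise ValueError("The aperture must be wider than the vehicle.")
    return vehicle_width / (aperture_width - vehicle_width)


# Hypothetical widths in metres (assumption, not taken from the study).
vehicle = 0.5
for aperture in (1.5, 1.0, 0.75):
    idc = index_of_difficulty(aperture, vehicle)
    print(f"w = {aperture:.2f} m, p = {vehicle:.2f} m -> IDC = {idc:.2f}")
```

As the aperture narrows towards the vehicle width, the denominator shrinks and the IDC grows quickly, which is why tight corners are predicted to take disproportionately longer.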

1.1. Remote driving task and remote search task

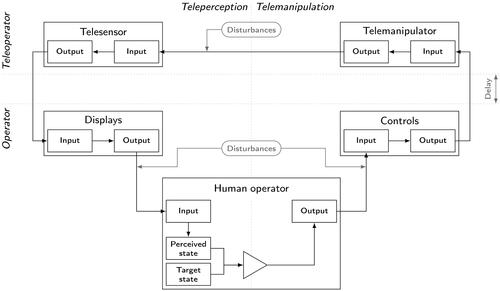

Remote navigation of rovers has been theoretically approached based on human-machine control loops (e.g. Glendon, Clarke, and McKenna 2006, 136) that consider operator and teleoperator processes. Operators perceive signals from displays (perception), process them cognitively (cognition) and respond accordingly (behavioural output) with the controls (Benz 2019; Nitsch 2012). These control behaviours constitute the inputs for the rover (system inputs), which are processed and transformed into rover behaviours (system output) that, again, can be monitored on displays by operators. This conception is similar to control-theoretic frameworks of road-driver behaviour (e.g. Fuller 2005; Summala 2007), which additionally assume that behaviours are regulated based on a comparator. For teleoperation, the comparator compares perceived states (perception component) with target states (cognitively derived) of the rover, and the operator’s control behaviours aim at reducing discrepancies between both states (i.e. a negative control loop). Therefore, we argue that remote driving can be modelled in affinity with control-theoretic frameworks of driver behaviour, which results in the dimensions of teleperception and telemanipulation (Figure 1).

Figure 1. Control-theoretic framework of Teleperception and Telemanipulation. The comparator is depicted as a triangle. In contrast to road-vehicle driving, the operator’s control inputs are performed to reduce discrepancies between an actual and a target state in the teleoperator environment, and the actual state is itself perceived from the teleoperator environment only through (e.g. visual, acoustic, or haptic) displays.

In contrast to road-vehicle driving, sensory information available to operators is restricted to what is communicated through displays during teleoperation, and delays further complicate successful control (Hoffmann and Drury 2019; Lu et al. 2019; Scholcover and Gillan 2018; Yang and Dorneich 2017). This becomes particularly crucial during cornering, because, to avoid collisions with obstacles or getting stuck (Casper and Murphy 2003; Jones, Johnson, and Schmidlin 2011), perceived states need to be integrated and compared to target states within a very short time relative to the speed of forward motion.

From a dual-task perspective, remote driving can be seen as the primary task and remote searching as the secondary task. In the present research, the remote search task is characterised by searching for and identifying targets and can thus be described in the taxonomy of the teleperception and telemanipulation (TPTM) framework. The rover collects information in the teleoperator environment, which is then transferred and presented to operators via displays, resulting in a strong load on the teleperception dimension. In contrast, the search task does not require telemanipulation, because targets are identified by button presses that do not actuate the rover’s effectors. Hence, remote searching requires constant teleperception, but not telemanipulation.

While visual information provided by the rover’s cameras constitutes the basic information relevant for the search and driving task, sensors can be utilised to provide additional support, for example with directional search cues pointing towards a target. Visual search cues can be given as arrows, acoustic search cues can be implemented as sounds, and force feedback can be used as a haptic search cue (Benz and Nitsch 2017; Nitsch and Färber 2013). To integrate the directional information from these cues (Benz 2019; Benz and Nitsch 2018), multimodal combinations of search cues can be implemented (Chen, Haas, and Barnes 2007). When considering only the search task, the relevant question is how these search cues are integrated on the teleperception dimension (Benz 2019). Yet, from a dual-task perspective, it is essential to understand how much the potential facilitation of the search task through search cues interferes with the remote driving task.

1.2. Dual-task interference

During teleoperated SAR, operators must process and perform the remote driving and search task in parallel, which can strain their limited attentional resources (Kahneman 1973). As outlined by Koch et al. (2018), theoretical accounts of dual-task interference can be separated into cognitive structure, flexibility, and plasticity. Among those accounts, multiple resource theory (MRT; Wickens 1984) stands out by postulating that resources are modality-specific, extending the assumption of one overarching attentional resource (Kahneman 1973). Generally, MRT hypothesises that tasks interfere more strongly when they occupy resources of the same modality (Wickens 1984), which can result in performance decrements. MRT assumes that resources can be distinguished based on four dimensions (Wickens 1984, 2008, 2021), of which three are crucial for the present research:

Processing stages: Perceptual and cognitive processes are assumed to require different resources than response processes.

Processing code: Spatial and verbal codes are assumed to occupy different resources for perception, cognition and responding.

Perceptual modality: For perception, visual, auditory and tactile perception use different resources. Haptic kinaesthetic perception has not been labelled as a separate resource, but could either be additionally located under this dimension or subsumed with tactile perception (e.g. as done in the literature search of the meta-analysis by Prewett et al. 2006).

When combining these dimensions with the TPTM model, an understanding of the potential interference of the remote search and driving task during teleoperated SAR missions can be derived: Regarding the processing stages, teleperception requires perceptual and cognitive resources, while telemanipulation requires responding resources. The driving task is characterised by visual/spatial teleperception (perceptual modality dimension) and manual/spatial telemanipulation (processing code dimension; Wickens 2008, 2021). Finally, the cued search task is characterised by visual, acoustic and haptic teleperception (perceptual modalities dimension) and manual, though not telemanipulative, responses.

1.3. Present research

The objective of the present research was to examine if and how unimodal, bimodal, and trimodal search cues interfere with the remote cornering performance of operators when controlling a teleoperated rover, with delays of 50 ms or 500 ms. Accordingly, the effects of the experimental factors search cue modality (control, visual, acoustic, haptic, visual-acoustic, visual-haptic, acoustic-haptic, visual-acoustic-haptic) and delay (50 ms vs. 500 ms) on cornering time were studied. Search cues contained directional and proximity information, indicating the shortest route to the next target. They were implemented as arrows (visual), beep-tones (acoustic) and forces pushing the joystick with which the rover was controlled (haptic). Research questions (Q) and hypotheses (H) were derived (see Table 1) as reported below.

Table 1. Research questions and hypotheses.

With Q1, we wanted to examine the effect of delay on cornering times. In accordance with previous research (e.g. Lu et al. 2019; Storms and Tilbury 2018; Winck et al. 2014; Yang and Dorneich 2017), we hypothesised that higher levels of delay increasingly impair cornering times, as formulated with H1.

Q2 targeted the interference of the remote search and driving task when unimodal search cues were provided. Hypotheses were derived from MRT based on the processing code, processing stages and perceptual modalities dimensions (Wickens 1984, 2002, 2008). Information provided by the search cues and visual information available from cameras for the driving task both constitute spatial processing codes. Because resources from the visual perceptual modality are required for both tasks, we hypothesised that visual search cues would interfere with cornering performance. Haptic search cues should not show strong interference with the driving task, since their spatial information refers to the (tele-)perception stage, whereas the manual/spatial haptic input for the driving task refers to the responding (telemanipulation) stage. Furthermore, we derived from MRT that unimodal acoustic search cues would also not interfere with remote driving, since the two tasks do not share resources from this perceptual modality. Accordingly, H2a-H2c were formulated.

Q3 focussed on the interference of multimodal search cues, for which it is, to our knowledge, unknown to what extent they interfere with remote cornering. We therefore initially formulated Q3 and H3a-H3d.

Q4 was formulated to examine which effects delays have on the interfering effects of search cue modality on cornering. Although the negative effects of delay on remote driving are well understood (Lu et al. 2019; Winck et al. 2014; Yang and Dorneich 2017), it has, to our knowledge, not been examined how delays interact with search cues. This question is particularly relevant, since both factors can interfere with cornering performance. Therefore, H4 was formulated.

2. Materials and method

2.1. Participants

The total sample consisted of N = 131 participants, n = 64 in the 50 ms-delay group (20 female, M_age = 23.4; SD_age = 3.5), and n = 67 in the 500 ms-delay group (12 female, M_age = 23.5; SD_age = 2.8). All were recruited from the Bundeswehr University Munich, had normal or corrected-to-normal vision, no hearing impairment, and were native German speakers. Participation took approximately one hour and was compensated with 10 €. All participants provided written informed consent. The research design was approved by the ethics committee of the Bundeswehr University Munich.

2.2. Apparatus and stimuli

Data were collected on an MSI Z170A-G45 personal computer with 32 GB RAM, an Intel Core i7-6700K processor and a GeForce GTX 980 Ti graphics card. A modified Microsoft Sidewinder Force Feedback 2 joystick was used for inputs and haptic cues, controlled by an SDL2 plug-in. The joystick was rather small and could be used with three fingers (all participants used it with the right hand). Simultaneous movements on the X- and Y-axis allowed diagonal control. Visual information was provided on a 27-inch monitor at a distance of 90 cm from display to participant. The free-field auditory cues were realised with an OpenAL plug-in and presented with five loudspeakers (Edifier C6XD), which were mounted in a radius of 230 cm around the participant seating position. The rover for the simulated SAR operation resembled the KUKA YouBot, an omnidirectional robotic platform with four wheels that are actuated by separate motors, which enables the omnidirectional steering concept. Accordingly, longitudinal movements of the YouBot were controlled through the joystick’s Y-axis, whereas lateral movements were controlled through its X-axis.

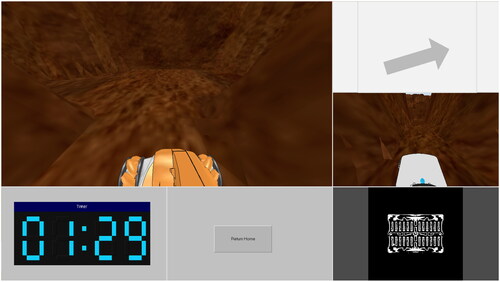

The spatial stimuli for search cue modality all contained directional and proximity information. The directional information would indicate the direction of the shortest route (linear distance) to the target, relative to the longitudinal axis of the YouBot (i.e. the front camera of the YouBot). Visual search cues were represented as an arrow on a white background, which pointed in the direction of the closest target. The colour of the arrow indicated proximity, from black (1 m distance to target) to white (no target detected). Acoustic cues appeared as monotone beep tones at a frequency of 350 Hz, with increasing loudness representing closer proximity to a target and maximum loudness at 1 m distance from target. Haptic force cues were provided as artificial force reflections that pushed the joystick in the direction of the closest target, with increasing force for closer proximity to the target. The direction in which the force was pushing the small stick constituted the most direct route towards the closest target. The simulation and visual cues including robotic view were implemented using V-REP. With a red button on the right of the joystick, participants identified targets. To receive cues that navigated back to the start position, the space key was used. The operator screen is shown in Figure 2.
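
The cue logic described above can be sketched as two small functions: one for the direction of the target relative to the rover's longitudinal axis, and one for a proximity intensity shared by all three channels. This is only an illustration under stated assumptions: the function names, the 10 m detection range, the linear ramp, and the heading convention (counter-clockwise from the map's X-axis) are hypothetical and not taken from the study. The sketch also does not model cue presentation stopping inside the 1 m radius.

```python
import math


def relative_bearing(rover_xy, heading_rad, target_xy):
    """Angle from the rover's longitudinal axis to the target, in (-pi, pi].

    Assumption: heading is measured counter-clockwise from the map's X-axis,
    so positive bearings mean the target lies to the left of the rover.
    """
    dx = target_xy[0] - rover_xy[0]
    dy = target_xy[1] - rover_xy[1]
    angle = math.atan2(dy, dx) - heading_rad
    # Wrap the difference back into (-pi, pi].
    return math.atan2(math.sin(angle), math.cos(angle))


def cue_intensity(distance_m, detection_range_m=10.0, full_at_m=1.0):
    """Proximity intensity: 0.0 = no target detected, 1.0 = maximum cue.

    The maximum at 1 m follows the text; the detection range and the linear
    ramp in between are assumptions made for this sketch.
    """
    if distance_m >= detection_range_m:
        return 0.0
    if distance_m <= full_at_m:
        return 1.0
    return (detection_range_m - distance_m) / (detection_range_m - full_at_m)


def arrow_grey_level(intensity):
    """Map intensity to an 8-bit grey value: white (255) far, black (0) near."""
    return round(255 * (1.0 - intensity))


bearing = relative_bearing((0.0, 0.0), 0.0, (0.0, 3.0))
strength = cue_intensity(3.0)
print(math.degrees(bearing), strength, arrow_grey_level(strength))
```

The same intensity value could drive arrow colour, beep loudness, or joystick force, which is one way to keep the three channels redundant in a multimodal condition.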

Figure 2. Operator screen. One front-view and one rear-view camera of the rover are shown on the screen, together with a bird’s-eye map on which only the start position (but not the current position of the rover) is indicated. Targets were only visible through the front-view or (potentially) rear-view camera, but not on the map. The front-view camera is presented in the biggest window (upper left). In the bottom-left, time was presented. The bottom-middle contained the “return home” button. In the bottom-right, the bird’s-eye map was presented. In the middle-right, the rear-view camera was presented. In the upper right, the visual search cues were presented in conditions that included visual cues.

2.3. Scenario

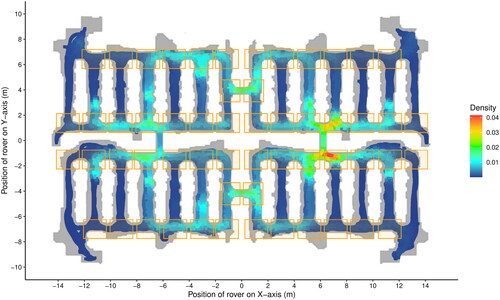

Adapted from Brooks et al. (2015), the simulation was based on an unstructured planetary environment, with vertical and horizontal corridors, connected by apertures and 90° curves. The corridors constrained the movement of the YouBot when it was navigated too close to an edge. For example, the YouBot would ‘bounce off’ a wall when running into it. From a bird’s-eye perspective, the corridors and apertures were approximately mirrored at the X- and Y-axis of the coordinate system. Twelve targets were distributed in an ellipse around the centre-point, all lying on corridors, and four further targets were placed in dead ends of the outermost corridors (see Figure 3).

Figure 3. Bird’s-eye view of the map and the X- and Y-coordinates of the rover. The density of the rover positions recorded every ≈ 50 ms is indicated. The defined curve fields are depicted as orange squares. The maximum possible speed of the YouBot is 0.8 m/s; owing to the complexity and planetary structure of the environment, the maximum speed recorded in the experiment was 0.53 m/s.

Before participants started with the task, they received information about their SAR mission. They were instructed to find as many targets as possible and return to the start position within 4 minutes; returning in time was more important than finding more targets. Furthermore, participants were informed that a detection system would indicate the direction in which a target could be found. To identify a detected target, they should push the respective button when the rover was within a 1 m radius, which was indicated when cue presentation stopped. To receive direction cues for returning to the start position, participants were instructed to press the ‘home button’ on the keyboard.

Each SAR mission would start at one of four start positions, each located in a corridor in which no target was present. Depending on the start position, the timer started with a base time of one, two, three, or four minutes. This approach was used so that participants would pay attention to the expiring time themselves and remember when to return to the start position (which was four minutes after the base time, i.e. before the timer showed five, six, seven, or eight minutes, respectively).

2.4. Procedure

Participants received standardised instructions in German and gave written consent to voluntary participation. They filled out a demographic questionnaire. Participants first received training in rover navigation and the direction cues, in the same simulated environment. Before the experiment started, they were reminded of the goal to find as many targets as possible and get back to the start position within four minutes. Then, the simulation started and each participant completed four trials, after which they completed another short questionnaire.

2.5. Design

The present research followed a mixed 2 × 8 design. The experimental factors were search cue modality (control, visual, acoustic, haptic, visual-acoustic, visual-haptic, acoustic-haptic, visual-acoustic-haptic) and delay (50 ms, 500 ms). Search cue modality was a within-participant factor, which followed a fractional factorial design (i.e. each participant would complete four of the factor’s eight levels). Assignment of the four levels followed a balanced permutation scheme. Delay was a between-participant factor. One group of participants completed the task with 50 ms delay, the other group with 500 ms delay.

2.6. Cornering time

For the experimental trials, the position of the YouBot on the X- and Y-axis was recorded every ≈ 50 ms. To derive cornering times, all intersections (i.e. areas with a clear corner) were first defined. Intersection fields had a height of 1.75 m and a width of 1.5 m, which prevented overlapping. They were specified to lie with their horizontal mid-line in the middle of the vertical aperture that required a 90° curve in one of two directions. Fifty-six intersections were defined accordingly (Figure 3).

Next, curves were defined. To this end, data points that did not lie in intersection fields were removed and a time-difference variable (within condition, participant, and intersection) was calculated by subtracting the previous from the current time point (ΔT = T_{i+1} − T_i). This variable was used to identify continuous driving activities (passages) within an intersection field: Continuous data points would have values of ΔT ≈ 50 ms (corresponding to the 50 ms time-frame of data recordings), and non-continuous data points in the same intersection field would generally be indicated by ΔT > 50 ms. To account for slight variations in recording times, we treated a value of ΔT > 60 ms as the start of a new passage, and the last value of ΔT ≈ 50 ms as its end.
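
The passage segmentation described above can be sketched as follows. The function name and the sample timestamps are hypothetical; only the 60 ms gap threshold comes from the text, and the input is assumed to be the sorted timestamps of one participant in one condition within one intersection field.

```python
def split_passages(timestamps_ms, gap_threshold_ms=60):
    """Group sorted timestamps (in ms) into passages.

    A new passage starts whenever the gap to the previous sample exceeds
    gap_threshold_ms, i.e. whenever the rover had left the intersection field.
    """
    passages = []
    current = []
    for t in timestamps_ms:
        if current and t - current[-1] > gap_threshold_ms:
            passages.append(current)
            current = []
        current.append(t)
    if current:
        passages.append(current)
    return passages


# Hypothetical samples: two visits to the same field with slight timing jitter.
samples = [0, 50, 100, 151, 900, 950, 1001]
print(split_passages(samples))  # [[0, 50, 100, 151], [900, 950, 1001]]
```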

Curves were defined as passages with an exit area orthogonal to the entry area (i.e. 90° curves). For filtering the curves from all passages, entry and exit areas on the intersection field were recorded. These areas were defined as lying inside 0.10 m of each of the four sides (east, south, west, north) of an intersection field. It should be noted that for a given intersection, a passage with, for instance, entry at south and exit at east, would be a different curve than a passage with entry at east and exit at south. Passages which ended in the intersection field (i.e. without exit area) were first excluded. Second, passages that had the same entry and exit area were excluded. Third, passages that had an entry and exit area on opposite sides (i.e. east ↔ west or south ↔ north), corresponding to straight passages, were excluded. Fourth, passages that had data points in more than two entry/exit areas were excluded. Finally, all curves for which minimum speed was 0.005 m/s or lower were excluded. This value was chosen because it covered a small distribution of ‘stopping times’ between 0.000 m/s and 0.005 m/s, with a mode at 0.004 m/s. Ultimately, cornering times were computed by subtracting the entry time from the exit time.
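
The exclusion rules above can be read as a sequence of checks on each passage. The sketch below assumes that every passage has already been labelled with its entry side, exit side, the set of edge areas it touched, and its minimum speed; these inputs and all names are hypothetical. Treating a missing entry area like a missing exit area is also an assumption, since the text only names the missing-exit case.

```python
OPPOSITE = {"east": "west", "west": "east", "north": "south", "south": "north"}


def is_curve(entry, exit_side, sides_touched, min_speed_ms, stop_speed_ms=0.005):
    """Return True if a passage counts as a 90-degree curve."""
    if entry is None or exit_side is None:
        return False  # passage started or ended inside the field
    if entry == exit_side:
        return False  # rover turned around and left where it came in
    if OPPOSITE[entry] == exit_side:
        return False  # straight passage through the field
    if len(sides_touched) > 2:
        return False  # data points in more than two entry/exit areas
    if min_speed_ms <= stop_speed_ms:
        return False  # rover stopped during the passage
    return True


def cornering_time(entry_time_s, exit_time_s):
    """Cornering time is the exit time minus the entry time."""
    return exit_time_s - entry_time_s


# Hypothetical passage: enters at south, leaves at east, never stops.
if is_curve("south", "east", {"south", "east"}, min_speed_ms=0.21):
    print(cornering_time(12.5, 16.0))  # 3.5
```

Because entry and exit are kept as an ordered pair, a south-to-east passage and an east-to-south passage through the same field remain distinct curves, as the text requires.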

2.7. Analysis

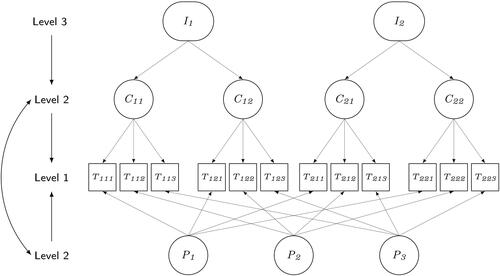

Since the simulated environment contained a total of 56 intersections that could be used for the analysis, each with potentially four to six curves that had (partly) different attributes and navigation challenges and would be taken by different participants, a statistical analysis was required that can model these dependencies. Cross-classified multilevel models are an appropriate analysis for this type of data (Judd, Westfall, and Kenny 2017), since comparisons between the conditions of experimental factors can be made while accounting for the random factors participant, curve, and junction. On the lowest level (L1), the dependent variable cornering time was modelled. Each cornering time was nested in one participant and one curve, which itself was nested in an intersection (L3). Hence, on the second level (L2), participants and curves constituted crossed random factors. Therefore, a three-level model with crossed random factors on L2 and fixed effects similar to an ANOVA was estimated (Figure 4).

Figure 4. Depiction of the general structure of the data, with cornering times (T) clustered in three participants (P) and two intersections (I), with two curves (C) each.

Statistical analyses were based on the R-packages lme4 (to fit the generalised cross-classified mixed effects model; Bates et al. 2015), car (to derive type-III-ANOVA estimates; Fox and Weisberg 2018) and emmeans (to derive marginal means, confidence intervals (CIs), and adjusted p-values; Lenth 2022). To test the hypotheses, main effects of delay and search cue modality, as well as their interaction, were first estimated. Subsequently, to estimate the specific interfering effects, adjusted significance tests of pairwise comparisons between the control and other search cue conditions were performed, for the average effects as well as for both delay groups. P-values were adjusted family-wise based on multivariate t-tests as implemented in the emmeans R-package. Cohen’s d0 was calculated as effect size for pairwise comparisons (see Judd, Westfall, and Kenny 2017), with d0 = ±0.20 corresponding to a small, d0 = ±0.50 to a medium, and d0 = ±0.80 to a large effect size (Cohen 1992). The significance level for all analyses was α = .05.

3. Results

Based on the procedure described above, N_T = 5,827 cornering times from N_P = 131 participants, nested in N_C = 182 curves which were clustered in N_J = 55 junctions, were computed, of which 3,785 came from the 50 ms-delay and 2,042 from the 500 ms-delay group. To account for potential outliers, minimal truncation of 0.1% on the left and right tail was applied (based on Ulrich and Miller 1994), resulting in a final sample of N_T = 5,815 cornering times, whose distribution was positively skewed (SK = 2.57).
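
The tail truncation can be sketched as below. The function name is hypothetical, and rounding the number of dropped values per tail to the nearest integer is an assumption; it is, however, consistent with the reported counts, since 0.1% of 5,827 rounds to 6 values per tail and 5,827 − 12 = 5,815.

```python
def truncate_tails(values, proportion=0.001):
    """Drop the given proportion of observations from each tail.

    The number of values removed per tail is rounded to the nearest integer
    (assumption made for this sketch).
    """
    ordered = sorted(values)
    k = round(len(ordered) * proportion)
    return ordered[k:len(ordered) - k]


# Placeholder data with the same sample size as reported in the text.
placeholder_times = list(range(5827))
print(len(truncate_tails(placeholder_times)))  # 5815
```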

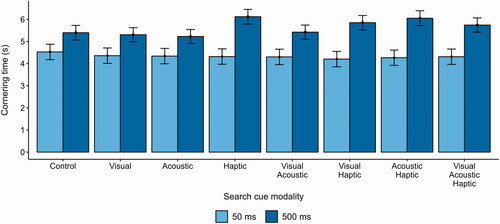

To account for this non-normality by selecting an adequate response distribution, three null-models (to avoid type-1-error inflation; Arend and Müsseler 2021) that accounted for the cross-nested structure were fitted with recommended types of response distributions (Gaussian normal, inverse Gaussian and Gamma, all with identity-link; Lo and Andrews 2015). Following Lo and Andrews (2015), the null-models were compared based on the Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC), with lower values indicating better fit. The inverse Gaussian response distribution (AIC = 14,827; BIC = 14,861) provided the best fit, followed by the Gamma (AIC = 15,839; BIC = 15,872) and Gaussian response distribution (AIC = 19,342; BIC = 19,375). Hence, the full model was estimated with inverse Gaussian response distribution and identity-link, from which a type-III Wald Chi-square (χ²) test was calculated. The main effects of delay [χ²(1) = 38.10; p < .001] and search cue modality [χ²(7) = 35.67; p < .001] were significant, as was the two-way interaction between both factors [χ²(7) = 172.72; p < .001]. Hence, the main effects of delay and search cue modality should not be generalised without consideration of their interaction. Figure 5 provides an overview of these effects.

Figure 5. Two-way interaction of delay and search cue modality. Bars represent marginal means, with 95%CIs as error bars.
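The comparison of the three null models by information criteria can be retraced with a few lines of arithmetic. The sketch below only uses the AIC and BIC values reported in the text; the dictionary layout and the function name `rank_models` are illustrative assumptions.

```python
# AIC and BIC values of the three null models as reported in the text.
fits = {
    "inverse Gaussian": {"AIC": 14827, "BIC": 14861},
    "Gamma": {"AIC": 15839, "BIC": 15872},
    "Gaussian": {"AIC": 19342, "BIC": 19375},
}


def rank_models(fits, criterion):
    """Return model names ordered from best (lowest value) to worst."""
    return sorted(fits, key=lambda name: fits[name][criterion])


aic_ranking = rank_models(fits, "AIC")
bic_ranking = rank_models(fits, "BIC")

# Difference of each model's AIC from the best-fitting model.
best = aic_ranking[0]
delta_aic = {name: fits[name]["AIC"] - fits[best]["AIC"] for name in fits}

print(aic_ranking)
print(delta_aic)
```

Both criteria yield the same ordering, and the AIC of the Gamma and Gaussian models exceeds that of the inverse Gaussian model by 1,012 and 4,515 points, respectively.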

3.1. Q1: Does delay affect the driving task?

The significant main effect of delay indicated that, on average, the 50 ms-delay group (M = 4.25; 95%CI [3.93, 4.58]) had lower cornering times than the 500 ms-delay group (M = 5.35; 95%CI [5.06, 5.63]; d0 = −0.89; p < .001). Hence, delay had a significant effect on cornering times (H1 supported).

3.2. Q2: Do unimodal search cues interfere with the driving task?

We computed the average interfering effects of the unimodal search cues (i.e. averaged over the levels of delay; Table 2). The results indicated that cornering was significantly faster with acoustic search cues than in the control condition. Haptic search cues had a small average interfering effect on cornering. The effect of visual search cues was not significant. Hence, the average effects supported H2b and H2c, but not H2a.

Table 2. Inference statistics for pairwise comparisons of search cue modalities and control condition.

3.3. Q3: Do multimodal search cues interfere with the driving task?

To understand how multimodal search cues interfered with the driving task, their average cornering times were also compared to the control condition (Table 2). Results indicated that, apart from the bimodal acoustic-haptic search cues, which had a small and significant interfering effect, none of the effects of multimodal search cues was significant. Thus, only H3c was supported.

3.4. Q4: Does delay influence the effects of search cue modality on the driving task?

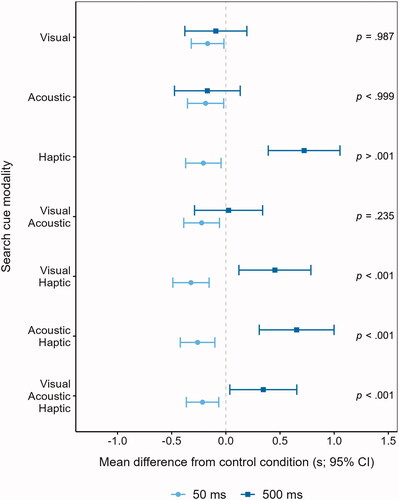

Although the pairwise comparisons of the average interfering effects of search cue modality on cornering times were mostly non-significant, the significant Wald test for the two-way interaction indicated that the interfering effects depend on delay. Therefore, the same pairwise comparisons were computed for each level of delay (see Table 2 and Figure 6).

Figure 6. Forest plot of the interfering effects for 50 ms vs. 500 ms delay. Effects are mean differences of each search cue modality condition from the control condition and their 95% CIs. Positive values suggest interference with cornering performance, negative values suggest performance benefits.

With 50 ms delay, cornering times were significantly lower in the unimodal visual, acoustic, and haptic conditions than in the control condition. The same was true for the bimodal conditions: visual-acoustic, visual-haptic, and acoustic-haptic search cues were related to significantly lower cornering times. Finally, cornering times were significantly lower with trimodal search cues than in the control condition. All effects benefitted cornering performance, but were rather small.

With 500 ms delay, unimodal haptic search cues significantly affected cornering when compared to the control condition, with a medium interfering effect. The visual and acoustic unimodal condition did not show significant differences from the control condition. For bimodal conditions, visual-haptic and acoustic-haptic search cues interfered with the driving task, with small to medium effects. The effects of visual-acoustic search cues were not significant. Trimodal search cues significantly interfered with cornering performance, with a small effect. Hence, with 500 ms delay, unimodal and multimodal search cues including the haptic communication channel showed small to medium and interfering effects on cornering performance.

In summary, search cue modalities did not interfere with the driving task for 50 ms delays, whereas there was considerable interference related to the haptic modality for 500 ms delays. To obtain further indications of these interfering effects, additional comparisons were computed. First, the difference in the interfering effect (i.e. the pairwise comparison of each search cue modality with the control condition) between the 50 ms- and 500 ms-delay groups was considered (see Table 2 for the adjusted p-values). Results showed that when the haptic modality was included, differences between the delay groups were significant, whereas when only the visual or acoustic modality was included, differences were not significant. Furthermore, the results indicated a redundancy loss in cornering performance when the search cues were presented via combinations of the haptic with the visual and/or acoustic channel, compared to when the search task was cued via the visual or acoustic channel alone. Hence, to estimate the general interfering effect of the haptic modality dimension with 500 ms delays, a contrast was tested that compared the haptic, visual-haptic, acoustic-haptic, and visual-acoustic-haptic conditions with the control, visual, acoustic, and visual-acoustic conditions. This contrast revealed an average medium interfering effect of approximately 600 ms per curve in the 500 ms-delay group (MDiff = 0.60; d0 = 0.51; p < .001).
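The contrast between the four conditions that include the haptic channel and the four that do not can be written as a set of weights that sum to zero. The sketch below shows this construction; the marginal means are hypothetical placeholders chosen for illustration, not the estimates of the study, and the function names are assumptions.

```python
CONDITIONS = [
    "control", "visual", "acoustic", "visual-acoustic",
    "haptic", "visual-haptic", "acoustic-haptic", "visual-acoustic-haptic",
]


def haptic_contrast_weights(conditions):
    """Weight haptic conditions positively and all others negatively (sum = 0)."""
    with_haptic = [c for c in conditions if "haptic" in c]
    without_haptic = [c for c in conditions if "haptic" not in c]
    weights = {c: 1 / len(with_haptic) for c in with_haptic}
    weights.update({c: -1 / len(without_haptic) for c in without_haptic})
    return weights


def contrast_estimate(means, weights):
    """Weighted sum of condition means, i.e. the estimated contrast."""
    return sum(weights[c] * means[c] for c in weights)


# Hypothetical marginal cornering times in seconds (placeholders, not study results).
hypothetical_means = {
    "control": 5.0, "visual": 5.1, "acoustic": 4.9, "visual-acoustic": 5.0,
    "haptic": 5.8, "visual-haptic": 5.6, "acoustic-haptic": 5.5,
    "visual-acoustic-haptic": 5.5,
}

weights = haptic_contrast_weights(CONDITIONS)
estimate = contrast_estimate(hypothetical_means, weights)
print(round(estimate, 2))
```

A positive estimate means that cornering took longer, on average, in the conditions with haptic cues than in those without. The significance test and standardisation of such a contrast would still require the model-based standard errors.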

Table 3 provides a summary of results.

Table 3. Summary of results.

4. Discussion

4.1. Summary of results

The objective of the present research was to examine how unimodal, bimodal, and trimodal search cues interfered with the cornering performance of operators when teleoperating a rover, with delays of 50 ms or 500 ms. In an experimental study, participants controlled a rover in a simulated SAR environment. Cross-classified models were estimated with participants and curves, nested in junctions, as crossed random factors. Delay had a significant negative effect on remote driving (Q1). While on average (Q2 and Q3), search cue modality was only selectively related to cornering performance, its effects differed significantly between delay groups (Q4). Hence, the effects of search cue modalities should not be generalised without considering delays. Theoretical and practical implications are discussed below.

4.2. Implications

First, based on models of human-machine interaction (Glendon, Clarke, and McKenna 2006) and driver behaviour (Fuller 2005; Summala 2007), the TPTM framework has been conceptualised as a theoretical approach to describe teleoperation tasks and integrate them with HF/E or engineering frameworks. The present research constitutes a first step in this respect, by interfacing the teleperception and telemanipulation dimensions with MRT’s processing stages to predict operator performance in dual-task situations. With additional application and development, the consideration of the TPTM dimensions can contribute to standardising the terminology of teleoperation research.

Second, MRT was used to derive hypotheses on the interference of search cues with the remote driving task. Contrary to these hypotheses, our research did not find interfering effects of search cues for 50 ms delays. With 500 ms delays, results did not support the hypothesised interfering effects of the visual modality, and the haptic modality interfered considerably with cornering. Yet, as hypothesised, the acoustic modality did not interfere with cornering and thus seems to be appropriate for providing unimodal search cues in SAR-scenarios regardless of delays up to 500 ms.

Third, although the results regarding the visual channel did not support assumptions of MRT, the framework offers alternative explanations for the non-interfering (with 500 ms delay) or even beneficial effect (with 50 ms delay) of visual search cues: Because the visual information for the driving and search task was presented together on the operator screen and both tasks communicate spatial codes, their perception could be integrated (Wickens 2002). Therefore, augmenting visual search cues into the front camera display on the operator screen could be a promising approach to extend the beneficial effects found for the visual modality in the low-delay group to higher-delay systems.

Fourth, the interfering effects of approximately 0.6 s higher cornering times found for the haptic dimension in the high-delay condition were substantial. In the present research, force feedback via the input device was used as the haptic search cue, providing forces in the direction of the target. Similarly, operators would make their driving inputs by moving the joystick in the direction in which they wanted to steer. Hence, the inputs (responding, or telemanipulation, stage) and feedback (teleperception stage) both communicate spatial codes. The very notion of MRT is that time-shared tasks, which utilise common structures, interfere (Wickens 2002). In the present research, the joystick was a common structure for the perception and responding stages. Hence, a potential explanation for the interfering effects of haptic search cues is that delays evoke a similar effect as time-sharing, which would result in the interference of responding and perception stages. Accordingly, the stages of processing would not interfere with lower delays (as, for example, in an auditory-auditory task examined by Shallice, McLeod, and Lewis 1985). If this account can be supported by future research, substantial theoretical and practical implications would arise, for example as an approach for understanding the heterogeneity of effects of haptic feedback in teleoperation (as identified in a meta-analysis; Nitsch and Färber 2013), which often uses common structures for perception and responding.

Furthermore, by using cornering times as the central indicator of remote driving performance, our research also contributes to the literature on the Cornering Law. First, the delay introduced in the present research substantially increased cornering times, which supports previous research (Cross et al. 2018; Storms and Tilbury 2018). Second, our study simulated a complex, unstructured environment, as has been recommended (Helton, Head, and Blaschke 2014). This makes a strict formulation of the Cornering Law difficult because, in contrast to geometric circuits, the objective properties of a corner were not constant. Nevertheless, it indicates that cornering times are also a relevant measure for corners that are part of more realistic teleoperation environments. In addition, our research is the first to suggest that, beyond delay and the index of corner difficulty, elements of secondary tasks can influence cornering times. This can result in higher uncertainty of estimates derived from the Cornering Law for telenavigation tasks that are characterised by delays and the presence of secondary tasks. Hence, accounting for these influences when using the Cornering Law can increase the validity of certification or risk management processes.

Since the main objective of the present research was to study the potential interference of directional search cues with remote driving performance in a realistic SAR scenario, we chose a planetary environment for the simulation. Yet, it is also important to consider the rendering quality, since insufficient visual information from the environment could alter cornering performance and make operators rely on (visual) search cues for cornering instead. To derive an indication of whether this was the case, the results in the 50 ms-delay group can be considered: The mean differences in cornering times between the control condition and the search cue conditions were only small (see Table 2 and Figure 6). Furthermore, unimodal visual search cues did not show a different pattern of results than unimodal auditory or haptic search cues in this group (see Table 2 and Figure 6). This indicates that visual information from the environment was sufficient for cornering, so that operators did not need to rely on the visual information offered by search cues instead. Accordingly, we infer that the rendering provided sufficient detail.

Finally, direct design recommendations can be derived from the results. When designing operator interfaces for SAR rovers, the consideration and anticipation of delays should be part of the first steps in the development process. For systems whose delays will not exceed 50 ms, unimodal or multimodal search cues can be recommended, as they are not expected to interfere with the driving task. Yet, since delays commonly occur and often cannot be precluded with absolute certainty, it needs to be considered which search cues also work for higher delays. Our research indicated that unimodal visual or acoustic search cues could be used, since they were not prone to dual-task interference in the 500 ms-delay group. Furthermore, when delays are anticipated, the haptic communication channel should generally be avoided for cueing the search task. This is further emphasised when considering that for the unimodal haptic condition, search task performance was also reduced (Benz 2019).

4.3. Conclusion

The objective of the present research was to examine how unimodal and multimodal search cues interfered with the cornering performance of operators when teleoperating a rover, with two levels of delays. We found beneficial effects of all search cue modalities for delays of 50 ms. For delays of 500 ms, cueing the search task via the haptic channel was related to substantial performance decrements in the remote driving task. The present research constitutes a first step in considering dual-task interference in remote SAR missions, indicating that the consideration of modality-specific resources does not suffice to derive useful cueing approaches for the search task.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Arend, M. G, and J. Müsseler. 2021. “Object Affordances from the Perspective of an Avatar.” Consciousness and Cognition 92: 103133. doi:10.1016/j.concog.2021.103133.

- Bates, D., M. Mächler, B. Bolker, and S. Walker. 2015. “Fitting Linear Mixed-Effects Models Using lme4.” Journal of Statistical Software 67 (1): v067.i01. doi:10.18637/jss.v067.i01.

- Benz, T. M, and V. Nitsch. 2017. “Using Multisensory Cues for Direction Information in Teleoperation: More is Not Always Better.” In 2017 IEEE International Conference on Robotics and Automation (ICRA) (pp. 6541–6546). doi:10.1109/icra.2017.7989773.

- Benz, T. M. 2019. Multi-Modal User Interfaces in Teleoperation. doi:10.18154/RWTH-2019-03285.

- Benz, T. M, and V. Nitsch. 2018. “Is Cross-Modal Matching Necessary? A Bayesian Analysis of Individual Reference Cues.” In Haptics: Science, Technology, and Applications. doi:10.1007/978-3-319-93445-7_2.

- Brooks, D. J., K. M. Tsui, M. Lunderville, and H. Yanco. 2015. “Methods for Evaluating and Comparing the Use of Haptic Feedback in Human-Robot Interaction with Ground-Based Mobile Robots.” Journal of Human-Robot Interaction 4 (1): 3–29. doi:10.5898/JHRI.4.1.Brooks.

- Burke, J. L., M. S. Prewett, A. A. Gray, L. Yang, F. R. B. Stilson, M. D. Coovert, and E. Redden. 2006. “Comparing the Effects of Visual-Auditory and Visual-Tactile Feedback on User Performance.” In ICMI ’06: Proceedings of the 8th International Conference on Multimodal Interfaces (pp. 108–117). doi:10.1145/1180995.1181017.

- Casper, J, and R. R. Murphy. 2003. “Human-Robot Interactions during the Robot-Assisted Urban Search and Rescue Response at the World Trade Center.” IEEE Transactions on Systems, Man, and Cybernetics, Part B 33 (3): 367–385. doi:10.1109/tsmcb.

- Chan, A. H. S., E. R. Hoffmann, and J. C. H. Ho. 2019. “Movement Time and Guidance Accuracy in Teleoperation of Robotic Vehicles.” Ergonomics 62 (5): 706–720. doi:10.1080/00140139.2019.1571246.

- Chen, J. Y. C., E. C. Haas, and M. J. Barnes. 2007. “Human Performance Issues and User Interface Design for Teleoperated Robots.” IEEE Transactions on Systems, Man and Cybernetics, Part C 37 (6): 1231–1245. doi:10.1109/TSMCC.2007.905819.

- Chen, J. Y. C, and M. J. Barnes. 2014. “Human–Agent Teaming for Multirobot Control: A Review of Human Factors Issues.” IEEE Transactions on Human-Machine Systems 44 (1): 13–29. doi:10.1109/THMS.2013.2293535.

- Cohen, J. 1992. “A Power Primer.” Psychological Bulletin 112 (1): 155–159. doi:10.1037/0033-2909.112.1.155.

- Condoluci, M., T. Mahmoodi, E. Steinbach, and M. Dohler. 2017. “Soft Resource Reservation for Low-Delayed Teleoperation over Mobile Networks.” IEEE Access. 5: 10445–10455. doi:10.1109/ACCESS.2017.2707319.

- Cross, M., K. A. McIsaac, B. Dudley, and W. Choi. 2018. “Negotiating Corners with Teleoperated Mobile Robots with Time Delay.” IEEE Transactions on Human-Machine Systems 48 (6): 682–690. doi:10.1109/THMS.2018.2849024.

- Fitts, P. M. 1954. “The Information Capacity of the Human Motor System in Controlling the Amplitude of Movement.” Journal of Experimental Psychology 47 (6): 381–391. doi:10.1037/h0055392.

- Fuller, R. 2005. “Towards a General Theory of Driver Behaviour.” Accident; Analysis and Prevention 37 (3): 461–472. doi:10.1016/j.aap.2004.11.003.

- Glendon, A. I., S. Clarke, and E. McKenna. 2006. Human Safety and Risk Management. Boca Raton: CRC Press. doi:10.1201/9781420004687.

- Gunn, D. V., J. S. Warm, W. T. Nelson, R. S. Bolia, D. A. Schumsky, and K. J. Corcoran. 2005. “Target Acquisition with UAVs: Vigilance Displays and Advanced Cuing Interfaces.” Human Factors 47 (3): 488–497. doi:10.1518/001872005774859971.

- Hancock, P. A., J. E. Mercado, J. Merlo, and J. B. F. Van Erp. 2013. “Improving Target Detection in Visual Search through the Augmenting Multi-Sensory Cues.” Ergonomics 56 (5): 729–738. doi:10.1080/00140139.2013.771219.

- Helton, W. S., J. Head, and B. A. Blaschke. 2014. “Cornering Law: The Difficulty of Negotiating Corners with an Unmanned Ground Vehicle.” Human Factors 56 (2): 392–402. doi:10.1177/0018720813490952.

- Hoffmann, E. R, and C. G. Drury. 2019. “Models of the Effect of Teleoperation Transmission Delay on Robot Movement Time.” Ergonomics 62 (9): 1175–1180. doi:10.1080/00140139.2019.1612954.

- Hoffmann, E. R, and S. Karri. 2018. “Control Strategy in Movements with Transmission Delay.” Journal of Motor Behavior 50 (4): 398–408. doi:10.1080/00222895.2017.1363700.

- Hopkins, K., S. J. Kass, L. D. Blalock, and J. C. Brill. 2017. “Effectiveness of Auditory and Tactile Crossmodal Cues in a Dual-Task Visual and Auditory Scenario.” Ergonomics 60 (5): 692–700. doi:10.1080/00140139.2016.1198495.

- Jones, K. S., B. R. Johnson, and E. A. Schmidlin. 2011. “Teleoperation through Apertures.” Journal of Cognitive Engineering and Decision Making 5 (1): 10–28. doi:10.1177/1555343411399074.

- Fox, J, and S. Weisberg. 2018. An R Companion to Applied Regression. Retrieved from https://www.ebook.de/de/product/33769893/john_fox_sanford_weisberg_an_r_companion_to_applied_regression.html

- Judd, C. M., J. Westfall, and D. A. Kenny. 2017. “Experiments with More than One Random Factor: Designs, Analytic Models, and Statistical Power.” Annual Review of Psychology 68: 601–625. doi:10.1146/annurev-psych-122414-033702.

- Kahneman, D. 1973. Attention and Effort. New Jersey: Prentice-Hall.

- Khasawneh, A., H. Rogers, J. Bertrand, K. C. Madathil, and A. Gramopadhye. 2019. “Human Adaptation to Latency in Teleoperated Multi-Robot Human-Agent Search and Rescue Teams.” Automation in Construction 99: 265–277. doi:10.1016/j.autcon.2018.12.012.

- Koch, I., E. Poljac, H. Müller, and A. Kiesel. 2018. “Cognitive Structure, Flexibility, and Plasticity in Human Multitasking—An Integrative Review of Dual-Task and Task-Switching Research.” Psychological Bulletin 144 (6): 557–583. doi:10.1037/bul0000144.

- Lathan, C. E, and M. Tracey. 2002. “The Effects of Operator Spatial Perception and Sensory Feedback on Human-Robot Teleoperation Performance.” Teleoperators and Virtual Environments 11 (4): 368–377. doi:10.1162/105474602760204282.

- Lenth, R. 2022. Emmeans: Estimated Marginal Means, Aka Least-Squares Means. R package version 1.8.2. https://CRAN.R-project.org/package=emmeans

- Lo, S, and S. Andrews. 2015. “To Transform or Not to Transform: Using Generalized Linear Mixed Models to Analyse Reaction Time Data.” Frontiers in Psychology 6: 1171. doi:10.3389/fpsyg.2015.01171.

- Lu, S., M. Y. Zhang, T. Ersal, and X. J. Yang. 2019. “Workload Management in Teleoperation of Unmanned Ground Vehicles: Effects of a Delay Compensation Aid on Human Operators’ Workload and Teleoperation Performance.” International Journal of Human–Computer Interaction 35 (19): 1820–1830. doi:10.1080/10447318.2019.1574059.

- Luo, R., Y. Wang, Y. Weng, V. Paul, M. J. Brudnak, P. Jayakumar, M. Reed, J. L. Stein, T. Ersal, and X. J. Yang. 2019. “Toward Real-Time Assessment of Workload: A Bayesian Inference Approach.” Proceedings of the Human Factors and Ergonomics Society Annual Meeting 63 (1): 196–200. doi:10.1177/1071181319631293.

- Nelles, J., M. Arend, A. Mertens, A. Henschel, C. Brandl, and V. Nitsch. 2020. “Investigating Influence of Complexity and Stressors on Human Performance during Remote Navigation of a Robot Plattform in a Virtual 3D Maze.” In 2020 13th International Conference on Human System Interaction (HSI). doi:10.1109/hsi49210.2020.9142671.

- Nitsch, V. 2012. Haptic Human-Machine Interaction in Teleoperation Systems. Retrieved from https://www.ebook.de/de/product/19030636/verena_nitsch_haptic_human_machine_interaction_in_teleoperation_systems.html

- Nitsch, V, and B. Färber. 2013. “A Meta-Analysis of the Effects of Haptic Interfaces on Task Performance with Teleoperation Systems.” IEEE Transactions on Haptics 6 (4): 387–398. doi:10.1109/TOH.2012.62.

- Opiyo, S., J. Zhou, E. Mwangi, W. Kai, and I. Sunusi. 2021. “A Review on Teleoperation of Mobile Ground Robots: Architecture and Situation Awareness.” International Journal of Control, Automation and Systems 19 (3): 1384–1407. doi:10.1007/s12555-019-0999-z.

- Pastel, R., J. Champlin, M. Harper, N. Paul, W. Helton, M. Schedlbauer, and J. Heines. 2007. “The Difficulty of Remotely Negotiating Corners.” In Proceedings of the Human Factors and Ergonomics Society 51st Annual Meeting 51 (5): 489–493. doi:10.1177/154193120705100513.

- Prewett, M. S., L. Yang, F. R. B. Stilson, A. A. Gray, M. D. Coovert, J. Burke, and L. R. Elliot. 2006. “The Benefits of Multimodal Information.” In ICMI ’06: Proceedings of the 8th International Conference on Multimodal Interfaces. doi:10.1145/1180995.1181057.

- Rogers, H., A. Khasawneh, J. Bertrand, and K. C. Madathil. 2017. “An Investigation of the Effect of Latency on the Operator’s Trust and Performance for Manual Multi-Robot Teleoperated Tasks.” In Proceedings of the Human Factors and Ergonomics Society Annual Meeting 61 (1): 390–394. doi:10.1177/1541931213601579.

- Scholcover, F, and D. J. Gillan. 2018. “Using Temporal Sensitivity to Predict Performance under Latency in Teleoperation.” Human Factors 60 (1): 80–91. doi:10.1177/0018720817734727.

- Shallice, T., P. McLeod, and K. Lewis. 1985. “Isolating Cognitive Modules with the Dual-Task Paradigm: Are Speech Perception and Production Separate Processes?” The Quarterly Journal of Experimental Psychology 37 (4): 507–532. doi:10.1080/14640748508400917.

- Storms, J, and D. Tilbury. 2018. “A New Difficulty Index for Teleoperated Robots Driving through Obstacles.” Journal of Intelligent & Robotic Systems 90 (1–2): 147–160. doi:10.1007/s10846-017-0651-1.

- Summala, H. 2007. “Towards Understanding Motivational and Emotional Factors in Driver Behaviour: Comfort through Satisficing.” In Modelling Driver Behaviour in Automotive Environments 2007: 189–207. doi:10.1007/978-1-84628-618-6_11.

- Triantafyllidis, E., C. Mcgreavy, J. Gu, and Z. Li. 2020. “Study of Multimodal Interfaces and the Improvements on Teleoperation.” IEEE Access. 8: 78213–78227. doi:10.1109/ACCESS.2020.2990080.

- Ulrich, R, and J. Miller. 1994. “Effects of Truncation on Reaction Time Analysis.” Journal of Experimental Psychology: General 123 (1): 34–80. doi:10.1037/0096-3445.123.1.34.

- Wickens, C. D. 1984. “Processing Resources in Attention.” In Varieties of Attention, edited by R. Parasuraman, 63–102. New York, NY: Academic Press.

- Wickens, C. D. 2002. “Multiple Resources and Performance Prediction.” Theoretical Issues in Ergonomics Science 3 (2): 159–177. doi:10.1080/14639220210123806.

- Wickens, C. D. 2008. “Multiple Resources and Mental Workload.” Human Factors 50 (3): 449–455. doi:10.1518/001872008x288394.

- Wickens, C. D. 2021. “Attention: Theory, Principles, Models and Applications.” International Journal of Human–Computer Interaction 37 (5): 403–417. doi:10.1080/10447318.2021.1874741.

- Winck, R. C., S. M. Sketch, E. W. Hawkes, D. L. Christensen, H. Jiang, M. R. Cutkosky, and A. M. Okamura. 2014. “Time-Delayed Teleoperation for Interaction with Moving Objects in Space.” In 2014 IEEE International Conference on Robotics and Automation (ICRA). doi:10.1109/icra.2014.6907736.

- Wong, C., E. Yang, X.-T. Yan, and D. Gu. 2017. “An Overview of Robotics and Autonomous Systems for Harsh Environments.” In 2017 23rd International Conference on Automation and Computing (ICAC). doi:10.23919/iconac.2017.8082020.

- Yang, E, and M. C. Dorneich. 2017. “The Emotional, Cognitive, Physiological, and Performance Effects of Variable Time Delay in Robotic Teleoperation.” International Journal of Social Robotics 9 (4): 491–508. doi:10.1007/s12369-017-0407-x.

- Zulqarnain, S. Q, and S. Lee. 2021. “Selecting Remote Driving Locations for Latency Sensitive Reliable Teleoperation.” Applied Sciences 11 (21): 9799. doi:10.3390/app11219799.