Abstract

The Industry 5.0 concept has placed human needs at the heart of industrial processes. This raises the question of how new technologies can enhance employee decision-making processes and influence the evolution of team autonomy. Recent studies have shown that the best way to measure these impacts is to conduct experiments in complex and realistic environmental settings. However, the most common methods cannot meet this requirement while also controlling events and their associated variables, whereas a set of use cases can. Therefore, a model is needed to generate and structure these use cases while validating their relevance. Building on a decomposition of the global research objective and on case-definition recommendations, this study proposes a framework for designing complementary use cases to evaluate the impact of new technologies on emerging autonomy models in a structured, realistic, and global manner. Based on widely recognised related work, the six-step framework helps define a coherent context specifying the business process model, the agents, their autonomy, the technologies to be implemented and their fields of action, a detailed variable collection protocol, and the experimental setup. A cross-analysis of existing cases from the literature and an empirical application of the framework validated the relevance of the model for designing experimental environments close to real-world settings.

1. Introduction

The term Industry 5.0, adopted by the European Commission (European Commission. Directorate General for Research and Innovation Citation2021), has emerged as a concept complementary to Industry 4.0. Various research and technology organisations and funding agencies agreed on the need to better integrate the EU's social and environmental priorities into technological innovation by shifting from an individual-technology perspective to a systemic one. While Industry 4.0 places new technologies at the centre of production and supply chains (Roblek, Meško, and Krapež Citation2016), Industry 5.0 aims to reinforce this digital transformation through more meaningful and effective collaboration between humans, machines, and systems within their digital ecosystem. The partnership between humans and intelligent machines combines the precision and speed of industrial automation with human creativity, innovation, and critical thinking. With Industry 5.0, value-driven and human-centred initiatives overlay the technological transformations of Industry 4.0, creating more fluid interactions between humans and machines (Maddikunta et al. Citation2021; Müller Citation2020). In this study, we use the term Industry 5.0 (I5.0). Over the last decade, organisations have focused on implementing new technologies to increase productivity, sometimes neglecting the human dimension (Eslami et al. Citation2021). In this context, the question arises as to whether these technologies, at the interface between workers and industrial processes, enhance workers’ autonomy in decision-making processes. In practice, the uses of new technologies in industry are being completely rethought, which has the potential to radically transform work. To better measure this effect, further studies are required to capture the interactions between technologies (Xu, Xu, and Li Citation2018) and all stages of the decision-making process (Ivanov Citation2022).
A safe and inclusive working environment is essential for prioritising autonomy, which is considered a worker’s fundamental right (European Commission. Directorate General for Research and Innovation Citation2021; Nahavandi Citation2019; Xu, Xu, and Li Citation2018). Notably, many experts and observers believe that the key element of I5.0 lies in placing humans, in collaboration with machines, at the centre of the decision-making process. As human-machine interaction develops within I5.0, autonomy appears to be a necessary condition for industrial resilience: humans are developing a degree of dependence on systems, which they must be able to overcome in the event of a disruption. Moreover, organisational approaches such as Lean management, agile management, and frugal innovation increasingly prioritise autonomy. Consequently, we should ensure that the development of digitalisation does not undermine the emergence of this autonomy. Finally, autonomy is seen as one of the most fundamental aspects of the transition from a technocentric to a value-centric Industry 5.0 (Enang, Bashiri, and Jarvis Citation2023). Thus, autonomy in human decision-making, supported by new technologies, is particularly crucial for the future (Kumar et al. Citation2021).

In this context, research should focus on how new technologies improve decision making and affect employee autonomy. Such research is challenging because studying real-world situations requires evaluating or measuring human-centric experimental variables that are complex and difficult to isolate. Fortunately, human-centred processes and technology transfer issues can be studied in an observational laboratory (de Paula Ferreira et al. Citation2022; Zeisel Citation2020); however, this requires the development of appropriate scenarios. While most research is based on case studies (Nguyen Ngoc, Lasa, and Iriarte Citation2022), case studies do not permit quantitative measurement of the performance and behaviour of the actors involved in a process. They have also been criticised for their subjectivity, data interpretation bias, and lack of rigour in their reproduction. By contrast, use cases, which are artificial replications of real contexts, allow for laboratory experimentation and control of specific variables without anticipating the phenomena that will occur (Yin Citation1981b; Citation1981a; Citation2018). A use case is an empirical method that examines a contemporary phenomenon in depth and in its actual context, particularly when the boundaries between the phenomenon and its context may not be clear (Yin Citation1981b; Citation1981a; Citation2018). The essence of a use case is to describe and explain complex phenomena that occur in real life. A set of use cases built in a complementary and mutually consistent manner around an overall research question can therefore make it possible to study phenomena occurring in complex contexts, as a case study does, but without its usual shortcomings. All these types of complex cases address ‘how’ and ‘why’ research questions, but without controlling behavioural, contemporary events (Yin Citation1981b; Citation1981a; Citation2018).
The model proposed in this study addresses this problem by decomposing an overall research question into specific, mutually consistent research sub-questions, resulting in a coherent set of use cases. This type of case has also been shown to be relevant when the main object of the study concerns decision-making, as it may be used to explain why decisions were made, how they were implemented, and what results were obtained (Meyer Citation2001; Schramm Citation1971). Use cases are primarily used in systems engineering and user experience (UX) research to assess and clarify user behaviour when using technology or systems. Compared with a case study, a use case can help clarify the outcomes related to a technology and its use by controlling the effects of the usage context (Jacobson, Spence, and Bittner Citation2011). This appears more consistent with the I5.0 ambition of improving technology and adapting it to employees’ requirements.

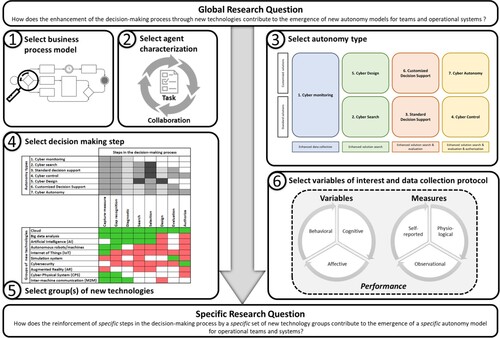

This study proposes a use case development framework to evaluate the impact of new technologies on new autonomy models in a structured, realistic, and comprehensive manner. This framework is closely related to our current work, which focuses on how enhancing the decision-making process through new technologies contributes to the emergence of new autonomy models for work centres. This broader question, which lies beyond the scope of the present study, constitutes our overall research question. Addressing it involves studying many complex phenomena in as realistic an environment as possible and requires the consideration of a large number of variables of interest. Because the overall research question is too broad to be addressed in a single use case, we need to create conditions for decomposing it into specific, mutually consistent research sub-questions, each of which can then be the subject of a particular use case. The framework proposed in this study is designed to support this decomposition.

The remainder of this study is organised as follows. In Section 2, we present a literature review of use cases that exploit new technologies. In Section 3, we outline the methodology used to develop our framework. In Section 4, we propose a framework for designing the use cases. Section 5 demonstrates the framework’s completeness by analysing the I5.0 cases reported in the literature. Section 6 discusses the results of the study, including the proposed framework and its validation. Finally, Section 7 concludes the study with a presentation of future perspectives offered by this framework and future research opportunities.

2. Literature review

2.1. People and technologies in Industry 5.0

Many authors have indicated that there is currently no clear definition of autonomy as applied to a production system (Everaere Citation2007). The term ‘autonomy’ comes from the Greek autos (oneself) and nomos (law, rule, organisation), and therefore refers to the idea of determining one's own rules or having the ability to govern oneself according to one's own rules (de Terssac Citation2012). From a different angle, Cirillo et al. (Citation2021) considered that "autonomy is an expression of the leader's power, because it allows him to modify all actions". Brey (Citation1999) argued that worker autonomy is related to ‘the control that workers have over their own work situation,’ and drew on a definition of job autonomy as ‘the worker’s self-determination, discretion or freedom inherent in the job, to determine several task elements’ (De Jonge Citation1995). These task elements include the method of work, pace of work, procedures, scheduling, work criteria, work goals, workplace, work evaluation, working hours, work type, and amount of work. For Brey (Citation1999), worker autonomy therefore refers to the degree to which employees have control over some or all of these task elements. Moreover, it is difficult to imagine that autonomy has no impact on collaboration within the team in which the operator works. The role of I5.0 employees will evolve toward that of decision-makers actively involved in a decision-making process that considers the whole context (Frazzon et al. Citation2013; Schuh et al. Citation2014). In this study, autonomy is considered in light of these perspectives. Ultimately, it refers to the freedom an agent has to make a decision, which leads us to define the concept of decision making. Simon (Citation1960) was among the first scholars to formulate a decision-making model. According to him, a decision begins with an investigation phase that involves identifying the gap between the current and desired situations. This is followed by a design phase, in which possible actions to resolve the situation are defined; these actions are then compared, and one is chosen in the final selection phase. In this study, we adhere to this definition and restrict ourselves to decisions arising from a situation gap analysis. The agent concept used here is similar to that established by Macal and North (Citation2009): an autonomous decision-making entity that receives sensor information from an environment and acts based on that information. Finally, this research was conducted at the scale of the work centre, which APICS defines as ‘a specific production area, consisting of one or more people and/or machines with similar capabilities, that can be considered as one unit for capacity requirements planning and detailed scheduling’ (Pittman and Atwater Citation2022).
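To make the decision model and the agent concept above more concrete, the following Python sketch encodes Simon's three phases as functions and wraps them in a minimal agent that senses a situation and acts only when a gap is detected. It is an illustration under stated assumptions, not the authors' implementation: the single numeric indicator, the `Situation`, `investigate`, `design`, `select`, and `Agent` names, and the rule of choosing the action that leaves the smallest residual gap are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Situation:
    """Observed and desired values of one indicator (hypothetical, e.g. units produced per shift)."""
    current: float
    desired: float


def investigate(situation: Situation, tolerance: float = 0.0) -> Optional[float]:
    """Investigation phase: return the gap between desired and current situations, or None if there is no gap."""
    gap = situation.desired - situation.current
    return gap if abs(gap) > tolerance else None


def design(gap: float, actions: dict[str, float]) -> dict[str, float]:
    """Design phase: keep only candidate actions whose expected effect points in the direction of the gap."""
    return {name: effect for name, effect in actions.items() if effect * gap > 0}


def select(gap: float, candidates: dict[str, float]) -> Optional[str]:
    """Selection phase: choose the candidate leaving the smallest residual gap (assumed criterion)."""
    if not candidates:
        return None
    return min(candidates, key=lambda name: abs(gap - candidates[name]))


class Agent:
    """Minimal agent: receives sensed information about its environment and acts on it."""

    def __init__(self, actions: dict[str, float]) -> None:
        # Hypothetical action repertoire: action name -> expected effect on the indicator.
        self.actions = actions

    def decide(self, sensed: Situation) -> Optional[str]:
        gap = investigate(sensed)
        if gap is None:
            return None  # no situation gap, so no decision is triggered
        return select(gap, design(gap, self.actions))


if __name__ == "__main__":
    agent = Agent({"add_overtime": 15.0, "rebalance_line": 22.0, "reduce_batch": -10.0})
    print(agent.decide(Situation(current=80.0, desired=100.0)))  # rebalance_line
```

In this example the agent detects a gap of 20 units, discards the action that would widen it, and retains the candidate whose expected effect is closest to the gap. A real work centre would involve richer states and decision criteria; the sketch only shows the order of the phases.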

The European Commission (European Commission. Directorate General for Research and Innovation Citation2021) identified Industry 5.0 as a complementary and expanded vision of Industry 4.0 that recognises the importance of creating employee-centric factories that are more resilient and sustainable. At the same time, technology affects operational work. Technology can also empower employees to achieve higher productivity, as demonstrated by MIT’s Work of the Future Initiative (Autor et al. Citation2022). Assessing the implications of new technology adoption for employees is imperative, particularly when health, learning, performance, and decision making are affected (Pinzone et al. Citation2020). Meindl et al. (Citation2021) showed that the interfaces among the people involved, the technologies used, and the operational processes for improving work are often not made explicit.

Recent studies in the field of operations management have investigated aspects of the dynamics between new technologies and work, addressing topics such as new relationships between people and technology (Longo, Nicoletti, and Padovano Citation2017; Peruzzini, Grandi, and Pellicciari Citation2020), the impact on work design (Cagliano et al. Citation2019), and the adoption of wearables in industrial settings (Maltseva Reiby Citation2020; Zheng, Glock, and Grosse Citation2022). Fifteen operation-related technologies were identified in a literature review by Dornelles et al. (Citation2022). For example, augmented and virtual reality are often mobilised to help operators perform complex tasks faster and with greater confidence (Uva et al. Citation2018). These technologies are used in assembly operations (Lai et al. Citation2020), training (Tao et al. Citation2019), and quality control (Szajna and Kostrzewski Citation2022). In assembly operations, augmented reality is used to indicate the assembly steps to follow (De Pace et al. Citation2020), to signal and prevent errors, and to let employees communicate remotely with a supervisor or engineer in case of doubt (Calzavara et al. Citation2020).

Of note is the emergence of wearable technologies that operators can use in various work situations. These wearables are generally used to collect data on operators’ movements, concentration, and state in order to improve working conditions and ergonomics (Guo et al. Citation2019; Sun et al. Citation2020). In assembly, wearables are used for the real-time collection of operator data, mainly on movements and workflows (Maltseva Reiby Citation2020). However, to our knowledge, no research has examined the impact of these technologies on engagement or decision-making.

Complementary studies have highlighted the contributions of technologies to production planning and control (Bueno, Godinho Filho, and Frank Citation2020) and to decision-making activities (Ivanov Citation2022). These mechanisms have been extensively studied at the strategic level (Olhager and Feldmann Citation2022). However, decision-making mechanisms at the operational level have yet to be explored in the context of implementing new technologies (Ivanov Citation2022). Such an exploration would also shed light on the impact of new technologies on employee decision-making autonomy (Rosin et al. Citation2021).

2.2. Use cases in operations management research

Research on I5.0 is mostly based on literature reviews (Panagou, Neumann, and Fruggiero Citation2023), text mining (Grosse Citation2023), interview analyses (van Oudenhoven et al. Citation2022), numerical experiments (Abdous et al. Citation2022), and industrial case studies (Kaasinen et al. Citation2020). The use of rich experimental settings in operations management research is limited. Gao, Li, and Sun (Citation2022) identified only 192 experiment-based publications, most of which were based on non-field experiments, such as laboratory experiments or quasi-experiments. Whether conducted in the field within organisations or in controlled laboratory settings, experiments provide a rich means of establishing causal links between the multiple elements under study (Eden Citation2017) and lead to a finer understanding of the mechanisms at work and of agents’ behaviour (Highhouse Citation2009).

Laboratory experiments can be designed to test analytical models and verify the underlying theory by providing scenarios that are similar to real ones (Katok Citation2011). Researchers have also called for combining laboratory-type and experimental field approaches (Gao, Li, and Sun Citation2022) to increase the validity and generalizability of the research results.

Given the increasing centrality of technology and of how employees use it, the development of use cases seems to fulfil two needs: verifying the impact of the technology on the user and considering the user’s requirements and context. Use cases offer a unique advantage by providing operations close to real-world settings in controlled environments. In the literature, use cases are generally scenarios replicating specific organisational processes and technologies, which is important because there is often a dynamic interplay between a technology and its ecosystem (Maghazei, Lewis, and Netland Citation2022). Accordingly, a call for use cases has been issued (Saihi, Awad, and Ben-Daya Citation2021). However, the few use cases that have been carried out focus either primarily on the technology or on a single type of technology, such as digital twins (Attaran and Celik Citation2023), the Internet of Things (IoT), and cyber-physical systems (CPS) (Lesch et al. Citation2023), leaving little room to interpret how technology affects the individual in the workplace. Employee behaviour and the ethical aspects of using technology seem to be missing from existing use cases (Ordieres-Meré, Gutierrez, and Villalba-Díez Citation2023). Nevertheless, two recent studies have addressed the impact of technology on employees. The first presents the impact of artificial intelligence (AI) of varying capability on an operator's engagement, stress level, and cognitive load (Passalacqua et al. Citation2023), whereas the second enriches the same use case by adding augmented reality technology to the experimental protocol (Joblot et al. Citation2023). If further research is to be conducted on this topic, a framework for structuring it will be essential.

When a use case approach is used, it tends to be simple (Katok Citation2011) or focused exclusively on a particular technology (Rožanec et al. Citation2022). The design of the use case itself is often not described. This can be partially explained by the lack of specific models for designing use cases that measure the impact of technology use on employees. Among the available approaches, Ordieres-Meré, Gutierrez, and Villalba-Díez (Citation2023) offered an architecture that integrates human and machine data to improve operational transparency. Golan, Cohen, and Singer (Citation2020) proposed a framework for investigating future operator-workstation interactions in the I4.0 era, in which a complex system can identify the degradation of an operator's performance or of the system state and correct it through different interventions. Moencks et al. (Citation2022) introduced a tool to guide practitioners in their decision-making processes. This tool takes a macro view of human-technology interfaces and ensures that their implementation generates value. However, it does not address the more concrete aspects of the direct impact of new technologies on humans, their autonomy, or their ability to make decisions. Autonomy has only been considered for manufacturing systems themselves (Mo et al. Citation2023). Moreover, none of these models allows for the design of larger use cases involving multiple actors, and measurement methods are not addressed.

By proposing a more structured approach to conducting these empirical studies through use cases, we aim to contribute to the trend of empirical approaches to the relationship between technology, decision-making, and work in Industry 5.0 (e.g. Dornelles et al. Citation2022; Peruzzini, Grandi, and Pellicciari Citation2020).

3. Methodology

This study aims to propose a framework for designing complementary use cases to assess the impact of new technologies on autonomy in an operational context in a structured, realistic, and comprehensive manner. This framework should make it possible to structure a set of use cases to answer the following global research question: ‘How does the enhancement of the decision-making process through new technologies contribute to the emergence of new autonomy models for work centres?’

3.1. Choice of empirical research method

Yin (Citation2018) compared different empirical research methods that can be applied to this type of study and suggested distinguishing them based on three conditions:

The nature of the research question,

The degree of control the researcher has over actual behavioural events, and

The extent to which the study focuses on contemporary rather than historical events.

Because our research question is of an explanatory nature, framed as a ‘How’ question, and primarily focuses on contemporary events, two methods stand out: case studies and experiments. These two methods differ in terms of the control a researcher has over actual behavioural events:

Case studies are employed when the researcher has limited or no control over events and the boundary between a phenomenon and its context cannot be clearly delineated. Case studies allow for an in-depth examination of a contemporary phenomenon (referred to as the ‘case’) within its real-world context, which entails the consideration of numerous variables of interest.

Conversely, experiments require the researcher to exert direct, precise, and systematic control over actual behavioural events. Experiments aim to isolate and focus on the phenomenon of interest by deliberately separating it from its context. They are typically conducted in controlled laboratory settings; however, field experiments are also possible. Experiments concentrate on one or a few selected variables to establish causal relationships.

Both case studies and experiments have their strengths and are applicable to different research scenarios, depending on the research question and the level of control desired over the events being studied.

The generic research question addressed in our work calls for the study of complex phenomena that can only be understood after many variables of interest have been considered. This rules out experiments, which concentrate on only one or a few variables. The question also aims to study these phenomena in a realistic context so that the results are credible and exploitable in a real environment. However, the number of possible combinations of new technologies and of the ways in which they can be mobilised to reinforce a decision-making process implies that the number of contexts to be studied is extremely large. Therefore, conducting a case study for each of these contexts is unrealistic.

The literature frequently describes and analyses the implementation of use cases to study the use of new technologies for I5.0. Use cases represent a compromise between case studies and experiments for studying complex phenomena in a context that closely resembles a real environment, in which event control is not systematic. However, to the best of our knowledge, no generic methodology exists for designing them.

3.2. General framework design methodology

Given the complexity of the phenomenon, we aimed to study it in the most realistic environment possible, which required considering many variables of interest. Therefore, we employed a case study methodology to structure our framework for designing complementary use cases, using the methodology proposed by Yin (Citation2018), a widely recognised reference, as a foundation.

Yin (Citation2018) indicated that in the context of a case study, a research plan is structured around five key components:

Case study questions;

Its propositions, if any;

Its case(s);

The logic linking the data to the propositions; and

Criteria for interpreting the results.

The design of the proposed framework is based on an approach that defines the components that constitute a use case and its scope (How? Why? What? Who? Where?):

3.2.1. Breaking down the defined global research question

Following the recommendations of Yin (Citation2018), the framework was designed to adhere to a logical sequence that connects the empirical data and conclusions to the research questions addressed in the use case.

Given that the overall research question is too broad to be addressed through a single use case, it is necessary to create conditions for breaking it down into specific, mutually consistent sub-questions that can be addressed through individual use cases. Thus, the overall research question was broken down as follows:

The research question is necessarily a ‘how?’ or ‘why?’ question.

The phenomenon under study: Enhancement of the decision-making process through new technologies (what?), and their potential impact on the emergence of new autonomy models (on what?).

The contextual elements affected by the phenomenon: in the case of our global research question, the agents (who?) whose autonomy is likely to be affected.

Other contextual elements to be characterised: the environment in which the phenomenon under study occurs (where?).

Note that at this stage, that of the global research question, the context can only be partially defined; however, the question should at least be expressed in the form ‘How/why does a phenomenon (what?) have an impact on something (on what?) that affects some of the contextual elements?’
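The decomposition above can be captured as a small data structure whose fields mirror the how/why, what, on what, who, and where components. The following Python sketch is a hypothetical illustration (the `ResearchQuestion` class and its field names are not part of the framework); it leaves the environment optional, since at the global stage the context is only partially defined, and it rejects questions that are not of the ‘how’ or ‘why’ type.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ResearchQuestion:
    kind: str                          # "how" or "why"
    phenomenon: str                    # what?
    impact_object: str                 # on what?
    agents: str                        # who? contextual elements affected by the phenomenon
    environment: Optional[str] = None  # where? may remain undefined for the global question

    def __post_init__(self) -> None:
        # The framework only admits explanatory questions.
        if self.kind.lower() not in {"how", "why"}:
            raise ValueError("the research question must be a 'how' or 'why' question")

    def render(self) -> str:
        """Fill the generic form: How/why does <what> have an impact on <on what> that affects <who>?"""
        text = (f"{self.kind.capitalize()} does {self.phenomenon} have an impact on "
                f"{self.impact_object} that affects {self.agents}")
        if self.environment:
            text += f" in {self.environment}"
        return text + "?"


GLOBAL_QUESTION = ResearchQuestion(
    kind="how",
    phenomenon="the enhancement of the decision-making process through new technologies",
    impact_object="the emergence of new autonomy models",
    agents="work centres",
)

if __name__ == "__main__":
    print(GLOBAL_QUESTION.render())
```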

3.2.2. Characterise the phenomenon under study, its impact, and their relationship

Defining the ‘what’ is central to the research plan. We characterised the phenomenon under study, its impact, and the relationship between the phenomenon and its impact. The phenomenon studied encompasses two key objects: the decision-making process and technology types. To characterise the decision-making process, we employed the model proposed by Rosin et al. (Citation2021), which builds on the model of Mintzberg, Raisinghani, and Théorêt (Citation1976). Rosin et al. (Citation2021) also created a model outlining different autonomy types based on this decision-making process. To characterise the technology types, we referred to the ten technology groups proposed by Danjou, Pellerin, and Rivest (Citation2017). This classification draws upon and enriches the widely cited classification of Rüßmann et al. (Citation2015). To establish the relationships between the studied phenomenon and the object of its impact (i.e. new autonomy models), we formalised these relationships through the matrix structure presented in Subsection 4.5 (Step 5). This structure is based on the work of Rosin et al. (Citation2022), who examined the potential of new technologies to enhance the decision-making process and its connection to new autonomy types (Rosin et al. Citation2021).

From this characterisation of the phenomenon studied, the object of its impact, and their relationships, it is possible to identify a coherent set of specific research questions that can be addressed through use cases. Each use case that can be extracted from the framework aims to answer a particular research question of the type ‘How does the reinforcement of specific steps in the decision-making process by a specific set of new technology groups contribute to the emergence of a specific autonomy model for operational teams?’
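As an illustration of how the Step 5 matrix could yield a coherent set of specific research questions, the following Python sketch groups matrix cells by the autonomy model they may support and fills in the question template quoted above. The cell contents are hypothetical: the step names borrow the identification, development, and selection phases of Mintzberg, Raisinghani, and Théorêt (Citation1976), while the technology groups and autonomy-model labels are placeholders rather than the classifications of Rosin et al. or Danjou, Pellerin, and Rivest. Grouping by autonomy model is one possible decomposition, assumed here for illustration.

```python
from __future__ import annotations

# Hypothetical Step 5 matrix: (decision-making step, technology group) -> autonomy model the pairing may support.
# Step names follow Mintzberg et al.'s phases; technology groups and autonomy-model labels are placeholders.
MATRIX: dict[tuple[str, str], str] = {
    ("identification", "IoT"): "autonomy model A",
    ("identification", "augmented reality"): "autonomy model A",
    ("development", "artificial intelligence"): "autonomy model B",
    ("selection", "artificial intelligence"): "autonomy model B",
}

TEMPLATE = ("How does the reinforcement of the {steps} step(s) of the decision-making process by "
            "{techs} contribute to the emergence of {model} for operational teams?")


def sub_questions(matrix: dict[tuple[str, str], str]) -> list[str]:
    """Group matrix cells by autonomy model and emit one specific research question per model."""
    by_model: dict[str, tuple[set[str], set[str]]] = {}
    for (step, tech), model in matrix.items():
        steps, techs = by_model.setdefault(model, (set(), set()))
        steps.add(step)
        techs.add(tech)
    # Sorting makes the generated set of questions deterministic.
    return [
        TEMPLATE.format(steps=", ".join(sorted(steps)), techs=", ".join(sorted(techs)), model=model)
        for model, (steps, techs) in sorted(by_model.items())
    ]


if __name__ == "__main__":
    for question in sub_questions(MATRIX):
        print(question)
```

Each generated question could then be assigned to one or more use cases, which keeps the set of use cases mutually consistent with the global question.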

3.2.3. Characterise elements of the context

Two contextual elements should be distinguished: those directly affected by the phenomenon under study and other factors that contribute to defining the context of the use case.

First, we should characterise the agents (who) affected by the phenomenon and their relationship with the object of the phenomenon's impact, which in the context of our study is autonomy. For this analysis, we relied on the work-centre concept defined by APICS (Pittman and Atwater Citation2022). The autonomy referred to in this study can be achieved by one or more individuals who potentially interact with one or more machines.

Based on the characterisation of the studied phenomenon, the object of its impact, and their relationships, along with the specific research question at hand and the characterisation of the agents’ level of autonomy, we can formalise the propositions (as identified by Yin Citation2018) that we aim to validate through future use cases.

Finally, we should characterise other contextual elements that are not directly affected by the phenomenon under study. In our case, they correspond to the organisational framework chosen for the study (where?). Designing use cases (cf. Yin's components) requires defining and bounding the cases (Yin Citation2018). This involves determining the organisational framework (where) in which the phenomenon under study occurs, which is the subject of Step 1 of the framework. In our study, this phenomenon generally relates to ‘the enhancement of decision-making processes using new technologies.’ Here, we should link the decision-making process model enhanced by new technologies to the operational process model within which decisions are made.

In Step 1 of our framework, the design of the organisational context is based on the definition of the business process model, which defines the tasks within business processes for which decision-making is required (Object Management Group Citation2023). It is essential to specify the exact scope of the business process reproduced in the use case, which may be a subpart of a global business process. The organisational framework supporting the use cases can be more or less complex, depending on whether the use cases are developed in a company or in learning laboratories. At the end of this step, the cases to be studied, which correspond to one of the components identified by Yin (Citation2018), can be properly defined and formalised.
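Step 1 can be illustrated by representing the business process as an ordered list of tasks, flagging those that require a decision, and cutting out the subpart reproduced in the use case. The following Python sketch assumes a purely sequential process with hypothetical task names; an actual BPMN model (Object Management Group) would also contain gateways, events, and lanes, which are omitted here. The `Task`, `scope`, and `decision_points` names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    requires_decision: bool  # True if an agent must make a decision during this task


# Hypothetical sequential business process (gateways, events and lanes omitted).
PROCESS = [
    Task("receive order", False),
    Task("plan production", True),
    Task("assemble product", True),
    Task("inspect quality", True),
    Task("ship order", False),
]


def scope(process: list[Task], first: str, last: str) -> list[Task]:
    """Return the sub-process reproduced in the use case, from task `first` to task `last` inclusive."""
    names = [task.name for task in process]
    start, end = names.index(first), names.index(last)  # ValueError if a task name is unknown
    if start > end:
        raise ValueError("the first task must precede the last task")
    return process[start:end + 1]


def decision_points(sub_process: list[Task]) -> list[str]:
    """List the tasks in the scoped sub-process for which decision-making is required."""
    return [task.name for task in sub_process if task.requires_decision]


if __name__ == "__main__":
    print(decision_points(scope(PROCESS, "assemble product", "ship order")))
```

Bounding the process in this way makes explicit which decisions the agents in the use case will actually face.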

3.2.4. Characterise the type of variables of interest and the type of measurements

In the first instance, we consider the cognitive, affective, and behavioural variables of interest to be characteristics of autonomy (Gagné and Deci Citation2005; Hackman and Oldham Citation1976; Oldham and Cummings Citation1996; Peruzzini, Grandi, and Pellicciari Citation2017). Concentration and mental absorption during task performance characterise the cognitive variables of interest. The affective variables of interest include perceptual aspects (reaction and mobilisation of the five senses) and emotional factors (valence of positive and/or negative emotions, as well as their activation) during task performance. The behavioural variables of interest are characterised by observable elements of autonomy in task performance.

There are many different data collection methods in Industry 5.0 research, as summarised by Passalacqua et al. (Citation2022). I5.0 research is still in its early stages, and questionnaires are the most widely used data collection method. Psychophysiological methods have also been used, but neurophysiological methods have not. To extend the scope of use of the framework, we took advantage of all types of data collection related to an experimental case (in the laboratory, in situ, or through use cases), which we describe in more detail below (cf. Table 1). Table 1 summarises the results of the literature analysis on the types of measurements. Column 1 lists the main measurement methods used (self-reported, observational, and physiological), column 2 the variables of interest they were used to measure, and column 3 the articles in which they were used.

Table 1. Measure types associated with examples of variables according to current literature.

The three variables of interest and the three identified measures constitute Step 6, encompassing the final components outlined by Yin (Citation2018). Additionally, while we focused on measuring the conditions under which autonomy emerges, it was crucial to ensure that these conditions do not compromise performance. Therefore, performance is measured in every use case to ensure that it is maintained. The added value of the framework lies in providing a means to measure an individual’s perspective on what is happening.
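One way to operationalise Step 6 is to describe the data collection protocol as a list of planned measurements and to check it before the experiment, in particular that performance is measured alongside the variables of interest. The following Python sketch is hypothetical: the `Measurement` and `check_protocol` names, the instrument labels, and the pairings of variables with measure types in `EXAMPLE_PLAN` are illustrative assumptions and do not reproduce Table 1.

```python
from __future__ import annotations

from dataclasses import dataclass

VARIABLES = {"cognitive", "affective", "behavioural"}
MEASURE_TYPES = {"self-reported", "observational", "physiological"}


@dataclass(frozen=True)
class Measurement:
    variable: str    # a variable of interest, or "performance"
    measure: str     # one of MEASURE_TYPES
    instrument: str  # hypothetical instrument label


def check_protocol(plan: list[Measurement]) -> list[str]:
    """Return a list of problems found in the plan (an empty list means the plan passes)."""
    problems = []
    for m in plan:
        if m.variable not in VARIABLES | {"performance"}:
            problems.append(f"unknown variable: {m.variable}")
        if m.measure not in MEASURE_TYPES:
            problems.append(f"unknown measure type: {m.measure}")
    covered = {m.variable for m in plan}
    for variable in sorted(VARIABLES - covered):
        problems.append(f"variable of interest not covered: {variable}")
    if "performance" not in covered:
        problems.append("performance is not measured")
    return problems


# Illustrative plan; the variable/measure pairings are assumptions.
EXAMPLE_PLAN = [
    Measurement("cognitive", "physiological", "eye-tracking fixation data"),
    Measurement("affective", "self-reported", "post-task emotion questionnaire"),
    Measurement("behavioural", "observational", "video coding of task execution"),
    Measurement("performance", "observational", "task completion time"),
]

if __name__ == "__main__":
    print(check_protocol(EXAMPLE_PLAN) or "protocol OK")
```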

Thus, the proposed framework encompasses six steps, collectively covering yin’s essential components. Furthermore, the performance measurement in each use case ensures that autonomy is studied without detriment to performance. The framework offers a valuable contribution by providing means of measuring the viewpoint of an individual.

4. Use cases development framework

The proposed framework aims to support different research methodologies by defining a series of use cases that lead to experimentation in an observation environment, allowing for qualitative and quantitative data collection without disrupting the actual functioning of an organisation.

The framework permits the detailed design of use cases in six steps. As shown above, each generated use case contains the five components that Yin (2018) identifies as required for a correctly defined case study: the study question, its proposals (if any), its case(s), the logic linking the data to the proposals, and the criteria for interpreting the results. This framework is embedded in a more general research approach guided by the overall research question (‘how does the enhancement of the decision-making process through new technologies contribute to the emergence of new autonomy models for work centres?’). The framework enables this overall research question to be broken down into several specific research questions (‘how does the reinforcement of specific steps in the decision-making process by a specific set of new technology groups contribute to the emergence of a specific autonomy model for operational teams and systems?’) and provides use case(s) for each specific research question. Thus, the framework ensures that the overall research question is addressed in a coherent, structured, and rigorous manner.
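The decomposition of the overall research question into specific research questions can be sketched as template filling. Each combination of choices yields one specific question. This is an illustrative sketch only: the template wording follows the specific question quoted above, while the function name `specific_questions` and the example choices are hypothetical.

```python
from itertools import product

# Hypothetical template mirroring the specific research question quoted in the text;
# the slots are filled from choices made later in the framework.
SPECIFIC_RQ = (
    "How does the reinforcement of {steps} in the decision-making process "
    "by {technologies} contribute to the emergence of {autonomy} "
    "for {agent}?"
)


def specific_questions(steps, technologies, autonomy_models, agents):
    """Enumerate one specific research question per combination of choices."""
    return [
        SPECIFIC_RQ.format(steps=s, technologies=t, autonomy=a, agent=g)
        for s, t, a, g in product(steps, technologies, autonomy_models, agents)
    ]


questions = specific_questions(
    steps=["Gap Recognition", "Evaluation"],          # hypothetical choices
    technologies=["augmented reality"],
    autonomy_models=["a task-focused autonomy model"],
    agents=["an operator"],
)
for q in questions:
    print(q)
```

Each specific question would then receive its own use case(s).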

Step 1 defines the section of the business process to be studied, so that the use case is structured around a concrete element. This step clearly defines the perimeter within which the agent evolves and exercises autonomy and decision making.

Step 2 defines the agent and its autonomy dimensions. This step ensures that human beings are involved in the use cases as early as possible.

Step 3 defines the autonomy granted to an agent. This step structures the decision-making possibilities of the agent and defines the full scope of its autonomy in the identified sections of the business process.

Step 4 defines the decision-making step(s) enhanced by new technologies. This step establishes the decision(s) that the agent is called upon to make in the use case.

Step 5 defines group(s) of new technologies to enhance previously selected decision-making step(s). It determines the proposals for the use case based on the perceived capacity of the new technologies selected in the context defined here.

Finally, Step 6 defines the experimental part of the use case aimed at testing the proposals described in the first five steps of the framework. This step consists of selecting the variable(s) of interest and measurement protocol(s) for these experiments, and structuring different experimental configurations.
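The six steps can be recorded as a simple data structure that shows which parts of a use case are still undefined while it is being designed. This is a minimal sketch. The class `UseCase`, its field names and the `missing_steps` helper are hypothetical and are not part of the framework's formal definition.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class UseCase:
    """Hypothetical record of the choices made in the six steps of the framework."""
    business_process_section: Optional[str] = None   # Step 1: where?
    agent: Optional[str] = None                      # Step 2: who?
    autonomy_type: Optional[int] = None              # Step 3: Types 1 to 7
    decision_steps: Optional[list] = None            # Step 4: enhanced decision-making steps
    technology_groups: Optional[list] = None         # Step 5: technologies and proposals
    experimental_protocol: Optional[str] = None      # Step 6: variables, measures, configurations

    def missing_steps(self):
        """Return the 1-based numbers of the steps that are still undefined."""
        return [i for i, f in enumerate(fields(self), start=1)
                if getattr(self, f.name) in (None, [], "")]


draft = UseCase(business_process_section="final assembly", agent="operator", autonomy_type=1)
print(draft.missing_steps())  # [4, 5, 6]
```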

The general framework is illustrated in Figure 1. The following subsections describe each step of the framework.

Figure 1. Industry 5.0 use cases development framework.

4.1. Step 1: select business process model (where?)

As a reminder, Step 1 defines the section of the business process to be studied, so that the use case is structured around a more concrete element. This step clearly defines the perimeter within which the agent evolves and exercises autonomy and decision making.

An agent's autonomy is expressed through well-defined business processes. However, the experiment linked to the use case does not involve the entire business process. Therefore, in the first step, the business process step (or step transition) should be precisely targeted. The business process may be taken from a real-life example, either opportunistically or to meet a specific industrial need. The aim here is to target the relevant step that requires the agent to make a decision. However, it may also be useful to recreate a context adapted to the phenomenon to be studied. In that case, the business process should be considered as a whole: it is first defined completely, and the relevant step is then targeted in the use case.

The definition of context then continues to Step 2, which characterises the agent.

4.2. Step 2: select agent characterisation (who?)

Step 2 defines the agent at the core of the use case. This agent corresponds to all or part of a work centre, that is, one or more individuals, potentially interacting with one or more machines. The use case enables us to study the agent's autonomy in the face of the decisions it must make. The agent can perform a set of tasks related to the business process defined in Step 1 and enjoys varying degrees of freedom in organising its work to accomplish them. This autonomy can have a strictly personal dimension (how an operator organises their tasks) or a more collaborative dimension (how operators interact to organise work-centre tasks). At the highest level of organisational maturity, collaboration between actors is complete: objectives are shared, and work routinely follows coordinated and synchronised operations (Mo et al. 2023).

We defined an agent as a human entity observed and studied throughout the use case. The agent has one of two statuses: operator or team. An operator is a single individual or a machine that performs a specific technical operation and is partly or wholly responsible for its performance and scope of action (task execution and decision making). A team is a group of individuals and/or machines sharing a collective work situation, subject to common objectives and mutual responsibilities (Piquet 2009). Thus, responsibility is linked to each member's actions and expected results.

Most importantly, this step involves defining the dimension of autonomy from which the agent benefits. The focus can be on the autonomy specific to the task or, more globally, on its impact on collaboration. If the focus of autonomy is on the task, the use case relates to the autonomy of the agent in performing their tasks. This concerns the agent's authority and freedom to define their tasks: actions on the sequence of tasks, method of execution, pace of work, and tools used to perform the work. If the focus of autonomy is on collaboration, the use case will focus on the agent's power to influence the organisational and collective environment: involvement in improving the work organisation, ability to influence decisions, and dynamics of cooperation at work. Therefore, collaboration between individuals and/or machines within the work centre can be studied, as can collaboration between different work centres.

In this step, the agent is identified, and the scope of its autonomy (over its tasks, or over collaboration within or outside the work centre) is specified. The agent should be the main actor in the business process defined in Step 1. The next step delimits the autonomy granted to the agent.

4.3. Step 3: select autonomy type (on what?)

Step 3 defines the autonomy granted to an agent. This step structures the decision-making possibilities of the agent and defines the full scope of its autonomy in the identified sections of the business process. This subsection is divided into three parts. The first presents the decision-making model chosen for this framework, based on Rosin et al. (2021). Sections 4.3.1 to 4.3.7 then present the seven autonomy types derived from this model. Finally, to help the user, Section 4.3.8 proposes tools for classifying the autonomy types and identifying the one most likely to correspond to the use case to be designed.

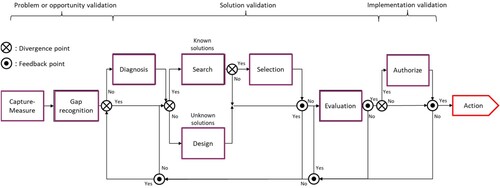

Different types of autonomy can be described or structured based on the decision-making process proposed by Rosin et al. (2021). Following the model of Mintzberg, Raisinghani, and Théorêt (1976), this process is divided into three phases: (1) problem or opportunity validation, (2) solution validation, and (3) implementation validation. Figure 2 illustrates this model.

Figure 2. Decision-making process (Rosin et al. 2021).

The problem/opportunity validation phase includes the Capture-Measure and Gap Recognition steps. The Capture-Measure step collects real-time information from the production system, whereas the Gap Recognition step recognises an abnormal situation that requires a response. The process then moves to the solution validation phase through the Diagnosis, Search, Design, Selection, and Evaluation steps. The Diagnosis step characterises the problem by establishing cause-and-effect relationships in the situation under study. This determines whether solutions already exist, in which case the process proceeds to the Search step, or whether the situation is new, in which case it moves to the Design step. The Search step determines the solution(s) most likely to solve the problem. The Design step is used to design a new solution. If multiple solutions exist, this leads to a Selection step that acts as a filter to reject inappropriate solutions. Finally, the Evaluation step compares the solutions and validates whether the selected solution solves the problem. Phase 3 follows, with its single step, Authorisation: authorisation to implement the solution is provided by the operator, the machine, or a higher authority. This generic model allows seven types of autonomy to be defined.
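The function below gives one simplified reading of a single pass through the eight-step process. It assumes a strictly linear pass and ignores the feedback loops towards the Design step mentioned for cyber design. The function name `decision_path` and its two boolean parameters are hypothetical.

```python
def decision_path(solution_known: bool, multiple_solutions: bool) -> list[str]:
    """Ordered steps of one pass through the process, without feedback loops (simplification)."""
    path = ["Capture-Measure", "Gap Recognition"]      # Phase 1: problem/opportunity validation
    path.append("Diagnosis")                           # Phase 2: solution validation begins
    # Diagnosis decides whether known solutions exist (Search) or the situation is new (Design).
    path.append("Search" if solution_known else "Design")
    if multiple_solutions:
        path.append("Selection")                       # filter out inappropriate solutions
    path.append("Evaluation")                          # validate that the solution solves the problem
    path.append("Authorisation")                       # Phase 3: implementation validation
    return path


print(decision_path(solution_known=True, multiple_solutions=True))
```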

4.3.1. Type 1: cyber monitoring

In this type of autonomy, the cyber-physical production system (CPPS) should identify a situation or stimulus that triggers an analysis and a decision. The decision-making process is then completed by the teams responsible for managing the situation without further assistance from the CPPS. Cyber monitoring scenarios include the Capture-Measure and Gap Recognition steps, which generate the stimuli that lead to a decision. By enabling more data to be captured and analysed in real time, new technologies can immediately, or in some cases predictively, identify performance gaps, errors, and problems in production. The decision-making process can then be initiated more quickly to identify the actions to be taken, thereby improving operational efficiency.
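The Capture-Measure and Gap Recognition steps can be illustrated with a deliberately simple rule: each captured value is compared with a standard, and values outside a tolerance band are flagged. This is a sketch under stated assumptions only. The target, the tolerance, the cycle-time data and the function name `recognise_gaps` are all hypothetical. Predictive gap recognition would require a model and is not shown.

```python
def recognise_gaps(measurements, target, tolerance):
    """Return (index, value) for each captured value outside target +/- tolerance."""
    return [(i, v) for i, v in enumerate(measurements) if abs(v - target) > tolerance]


# Hypothetical cycle times (s) captured in real time against a hypothetical 60 s standard.
cycle_times = [59.8, 60.4, 63.1, 60.0, 56.5]
print(recognise_gaps(cycle_times, target=60.0, tolerance=2.0))  # [(2, 63.1), (4, 56.5)]
```

Each flagged gap is the kind of stimulus that would trigger the rest of the decision-making process, which the teams then complete without further support.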

4.3.2. Type 2: cyber search

For this type of autonomy, the CPPS should propose one or more solutions to an encountered problem based on a pre-established set of possible corrective actions. Faced with an identified situation, the cyber search scenario reinforces the Diagnosis and Search steps to quickly analyse the situation and target known solutions to correct a problem or respond to an opportunity. An agent's attention and working memory are particularly challenged at this stage of the decision-making process; they are critical factors that limit the interpretation of information from the environment. Simulation and immersion logic can also reinforce the Diagnosis step by allowing the current situation to be compared in real time with the situation simulated on a virtual replica of the production system.

4.3.3. Type 3: standard decision support

In this autonomy type, the CPPS should identify a problem, identify a set of possible solutions, and, after possibly filtering them, evaluate the most relevant one(s) and propose a viable solution. The specificity of this scenario is that it reinforces the Evaluation step of the decision-making process. This step is preceded by a Selection step, which provides one or more possible solutions. Based on systematised data processing, the Selection step limits the number of solutions to be processed in the subsequent Evaluation step. The Evaluation step is generally more demanding in terms of time and implementation complexity, as it aims to identify, among the selected solutions, the one most likely to meet the set objectives. Previous research has shown that an agent recognised for its expertise in operational decision situations evaluates a plan of action by using mental simulation to anticipate what would happen if this plan were applied in the current situation (Klein 2008). Simulation and immersion technologies are therefore vital to support operational teams and reduce the cognitive load required for this step.

4.3.4. Type 4: cyber control

This autonomy type goes beyond standard decision support by reinforcing the Authorise step. It also facilitates the implementation of the action plan selected in the Evaluation step by transmitting the necessary information to the operational level. This last point does not necessarily imply task automation, because information can be passed on to the operational team for subsequent translation into action. The Authorise step is generally necessary when applying the chosen solution involves a scope of responsibility other than that of the production centre managing the problem or opportunity. This approval may require horizontal information sharing across organisations, for example, when the root cause of the problem encountered or a key lever for action lies outside the scope of the operational team. Authorisation may also require a vertical flow of information, which occurs when approval to implement the chosen solution is linked to other tactical or strategic decisions. New technologies that improve the horizontal or vertical integration of systems are required at this stage of decision-making.

4.3.5. Type 5: cyber design

The cyber design type is characterised by the reinforcement of the Design step to develop tailor-made solutions. This applies either when the operational team must handle an unknown situation or when no known solution is perfectly adapted to the current situation. In an operational context, the Design step is usually activated after known solutions have been searched for and evaluated without success. In a completely unknown situation, the Design step can be initiated immediately after a problem or opportunity is identified. There are two prominent cases: the ‘pure design’ case, in which tailor-made solutions must be developed without relying on known solutions, and the case in which solutions are obtained by modifying known alternatives. In an operational context, the latter is preferred because it is generally less time-consuming, less costly, and less demanding in terms of the resources and skill levels required. For this reason, it seems more worthwhile to implement the cyber search or standard decision support types beforehand. The feedback loops leading to the Design step can then take place as soon as possible after the Search-Selection or Evaluation steps.

4.3.6. Type 6: customised decision support

A unique feature of customised decision support is the enhancement of the Evaluation step after a custom solution has been developed in the Design step. In terms of enhanced steps, this type is similar to the standard decision support type; however, it is distinguished by the evaluation of previously unknown custom solutions. This modified context introduces subtleties into the evaluation process. Multicriteria decision methods are better suited to assessing standard solutions associated with prototypical situations; when used alone, they are not suitable for customised decision support. This type of autonomy first requires the implementation of simulation technologies to simulate and numerically test the evolution of the production system under the newly envisaged solutions. Generally, this involves specifying an action plan associated with the implementation of a given solution. This action plan can then be translated into parameters to be updated (which often corresponds to solutions developed by modifying a known standard solution) or into a scenario to be tested in the simulation model. The simulation then supports decision analysis to assess the likelihood and usefulness of each possible future state and to estimate the maximum and minimum achievable results.

4.3.7. Type 7: cyber autonomy

This last autonomy type differs from the previous one in that it reinforces the Authorise step when needed, as well as the implementation of the action plan for customised solutions. The Authorise step is generally used more often here than in the cyber control type. This is because implementing a customised solution more often depends on validation from an area of responsibility other than that entrusted to the operational team. Strengthening the Authorise step facilitates the implementation of delegation logic. This notion, already well known in organisations, consists of a manager with authority over the operational team transferring part of their responsibilities and of their ability to act and make decisions (Verrier and Bourgeois 2016). Delegation is generally accompanied by control exercised by the manager, the rules of which are easier to define in advance when standard solutions are already known.

4.3.8. Classification and identification of autonomy types

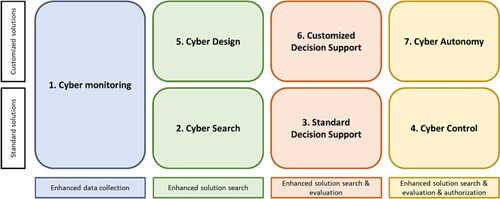

Each autonomy type differs from the others in the steps of the decision-making process enhanced by new technologies and in whether the solution to be designed, validated, or authorised is already known. Setting aside whether the solution is known, the types of autonomy can be classified into four main categories: enhanced data collection (Type 1), enhanced solution search (Types 2 and 5), enhanced solution evaluation (Types 3 and 6), and enhanced solution authorisation (Types 4 and 7). Based on whether the solution is known, the types of autonomy can also be divided into two classifications: standardised solutions (Types 1, 2, 3, and 4) and customised solutions (Types 1, 5, 6, and 7). Combining the two classifications with the four categories yields a matrix that maps the types of autonomy along two axes, as shown in Figure 3.

Figure 3. Classification of autonomy types. Adapted from Rosin et al. (2021).
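The category-by-classification matrix described above can be expressed as a lookup table. Type 1 is the only type that belongs to both classifications. The types, categories and classifications are taken from the text. The dictionary layout and the helper `matrix_cell` are a hypothetical illustration.

```python
# Type -> (name, category, solution classifications), as listed in the text.
AUTONOMY_TYPES = {
    1: ("Cyber monitoring", "enhanced data collection", {"standardised", "customised"}),
    2: ("Cyber search", "enhanced solution search", {"standardised"}),
    3: ("Standard decision support", "enhanced solution evaluation", {"standardised"}),
    4: ("Cyber control", "enhanced solution authorisation", {"standardised"}),
    5: ("Cyber design", "enhanced solution search", {"customised"}),
    6: ("Customised decision support", "enhanced solution evaluation", {"customised"}),
    7: ("Cyber autonomy", "enhanced solution authorisation", {"customised"}),
}


def matrix_cell(category, classification):
    """Types found at one intersection of the category x classification matrix."""
    return [t for t, (_, cat, classes) in AUTONOMY_TYPES.items()
            if cat == category and classification in classes]


print(matrix_cell("enhanced solution evaluation", "customised"))  # [6]
```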

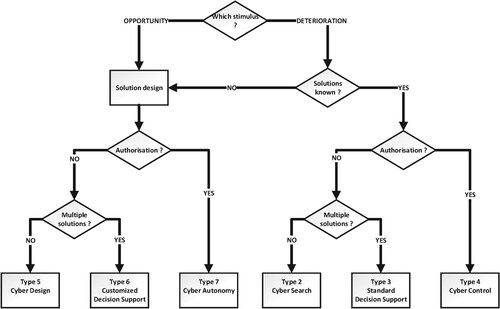

To quickly identify the type of autonomy involved in the use case, the user should answer a series of questions, as illustrated in Figure 4.

Figure 4. Flowchart for autonomy type selection.

What types of stimuli trigger decision-making? The stimulus may be related to deterioration, in which current performance differs from that of the previous situation. In this case, the solution may already be known, leading to the standardised solutions classification, or it may be new, leading to the customised solutions classification. Alternatively, the stimulus may be an opportunity, that is, the possibility of achieving, under certain controlled conditions, a level of performance better than that of the current situation. An opportunity generally lies outside the scope of standardised work, whose gap analysis is based on deviations from a standard. The solution is therefore much more likely to be completely new, which makes the customised solutions classification more suitable.

Are all solutions known? When the solution is known, we automatically move toward the types of autonomy in the standardised solution classification, namely Types 2, 3, and 4. Conversely, when the solution is unknown, Types 5, 6, and 7 from the customised solution classification are favoured.

Is hierarchical approval required? When approval from the hierarchy is needed, the autonomy type will necessarily come from the enhanced solution authorisation category (Types 4 and 7).

Are there multiple solutions available? This question assesses the usefulness of the Evaluation step and thus the extent to which new technologies should support the decision-making process.
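The four questions can be condensed into a small decision function. This is a simplified reading of the questions above, not a transcription of the flowchart. The stimulus question is folded into `solution_known`, since an opportunity usually implies an unknown solution. The `detection_only` flag, which marks use cases that only support the triggering stimulus (Type 1), is a hypothetical addition. The function name is also hypothetical.

```python
def suggest_autonomy_type(detection_only: bool, solution_known: bool,
                          approval_required: bool, multiple_solutions: bool) -> int:
    """Simplified reading of the selection questions (not a transcription of the flowchart)."""
    if detection_only:
        # Only the triggering stimulus is supported: enhanced data collection.
        return 1
    # Known solutions lead to the standardised classification, unknown ones to the customised one.
    if approval_required:
        return 4 if solution_known else 7      # enhanced solution authorisation
    if multiple_solutions:
        return 3 if solution_known else 6      # the Evaluation step becomes useful
    return 2 if solution_known else 5          # enhanced solution search


print(suggest_autonomy_type(False, solution_known=False,
                            approval_required=False, multiple_solutions=True))  # 6
```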

Once the autonomy type is selected, the next step is to identify the decision-making step(s) most relevant to the use case.

4.4. Step 4: select decision-making step(s) (on what?)

This step defines the decision-making step(s) enhanced by new technologies. It establishes the decision(s) that the agent is called upon to make in the use case.

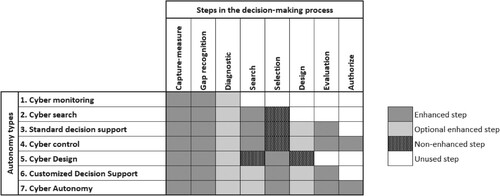

As described previously, the decision-making process includes eight steps. For a specific type of autonomy, studying all the proposed steps can be counterproductive, and it seems wise to limit the number of steps studied. To achieve this, it is necessary to distinguish between the necessary, optional, and unnecessary steps for each type of autonomy. In addition, some necessary and optional steps will be enhanced by one or more new technologies. Others will still be present but will be performed without strong technological support. Figure 5 shows the necessary and enhanced steps for each of the seven types of autonomy.

Figure 5. Enhanced decision-making step(s) in the chosen type of autonomy.

This figure illustrates the similarities among certain types of autonomy. The most complex types of autonomy, which include the largest number of steps enhanced by new technologies, can be seen as extensions of less complex types. For example, the cyber autonomy type can be considered equivalent to a customised decision support type to which the Authorise step has been added and enhanced. Consequently, studying a complex type of autonomy is of interest only if the study focuses on steps specific to that type. Thus, it seems inappropriate to build a use case around the cyber autonomy type if the study focuses on the Capture-Measure step. By contrast, the cyber monitoring type allows the same study without the added complexity of the later steps.
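The "extension" view can be sketched as an inheritance chain. Each type adds its own enhanced steps to those of the simpler type it extends. A helper then returns the least complex type that still enhances the step under study. This is a hypothetical reading. The steps each type adds are taken from Sections 4.3.1 to 4.3.7, and the chains 1→2→3→4 and 1→5→6→7 are inferred from the two classifications. Figure 5 may mark some steps (e.g. Selection) differently. Ties in complexity are broken in favour of the lower type number.

```python
# Steps each type adds on top of the type it extends (hypothetical reading of the text).
OWN_STEPS = {
    1: {"Capture-Measure", "Gap Recognition"},
    2: {"Diagnosis", "Search"},
    3: {"Evaluation"},
    4: {"Authorisation"},
    5: {"Design"},
    6: {"Evaluation"},
    7: {"Authorisation"},
}
EXTENDS = {1: None, 2: 1, 3: 2, 4: 3, 5: 1, 6: 5, 7: 6}


def enhanced_steps(t):
    """All steps enhanced by type t, including those inherited from simpler types."""
    steps = set()
    while t is not None:
        steps |= OWN_STEPS[t]
        t = EXTENDS[t]
    return steps


def depth(t):
    """Number of simpler types that t extends (a rough complexity measure)."""
    d = 0
    while EXTENDS[t] is not None:
        t = EXTENDS[t]
        d += 1
    return d


def simplest_type_for(step):
    """Least complex type that still enhances the step under study."""
    candidates = [t for t in OWN_STEPS if step in enhanced_steps(t)]
    return min(candidates, key=lambda t: (depth(t), t))


print(simplest_type_for("Capture-Measure"))  # 1: cyber monitoring, not cyber autonomy
```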

At this stage, the context of the use case is well-defined. The specific research question then takes the following form: ‘How does the reinforcement of specific steps in the decision-making process contribute to the emergence of a specific autonomy model for operational teams and systems?’ To answer this specific research question, the next step is to select the group(s) of new technologies to be included in the use case.

4.5. Step 5: select group(s) of new technologies (what?)

This step defines group(s) of new technologies that enhance previously selected decision-making step(s). This step determines the use case proposals according to the perceived capacity of the new technologies chosen in the context defined here. This subsection introduces the concept and usage of a relevance matrix before explaining how to design it using an example adapted from Rosin et al. (2022).

4.5.1. Concept and usage of a relevance matrix

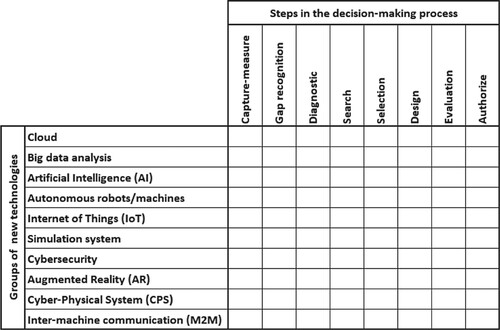

This step is structured around a relevance matrix between groups of new technologies and the eight decision-making steps. At each intersection of this matrix, a capability indicator can be green, white, or red. The structure of this matrix is illustrated in Figure 6.

Figure 6. Relevance matrix between groups of new technologies and the eight decision-making steps.

The use case proposal(s) are derived directly from this capability indicator. The enhanced and control configurations referred to below are defined in Step 6 (Section 4.6.2).

A green indicator signals a positive consensus on the capability of the technology involved to enhance the decision-making process. The proposal therefore takes the following form: [group(s) of new technologies] promote the autonomy of the [agent] in its decision-making by enhancing its ability to [decision-making step(s)] in [section of the business process]. The modus operandi expects the agent's autonomy to be more significant in the enhanced configuration than in the control configuration.

A red indicator signals a negative consensus: the technology does not appear suitable for enhancing the decision-making steps involved. The proposal therefore takes the following form: [group(s) of new technologies] do not promote the autonomy of the [agent] in its decision-making because they do not enhance its ability to [decision-making step(s)] in [section of the business process]. The modus operandi expects the agent's autonomy to be just as significant, if not less significant, in the enhanced configuration as in the control configuration.

A white indicator signals a lack of consensus on the capability of the technology concerned to enhance the decision-making steps involved. The proposal is the same as that for a green indicator: [group(s) of new technologies] promote the autonomy of the [agent] in its decision-making by enhancing its ability to [decision-making step(s)] in [section of the business process]. The modus operandi has no particular expectation but aims to detect even the slightest effect on the agent's autonomy in both the enhanced and control configurations.
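The mapping from a capability indicator to a proposal and its expected outcome can be written as a small template function. The proposal wording follows the three forms given above. The function name `make_proposal`, the wording of the expected outcomes and the example arguments are hypothetical.

```python
PROMOTE = ("{tech} promote the autonomy of the {agent} in its decision-making "
           "by enhancing its ability to {steps} in {section}.")
NOT_PROMOTE = ("{tech} do not promote the autonomy of the {agent} in its decision-making "
               "because they do not enhance its ability to {steps} in {section}.")
EXPECTATION = {
    "green": "autonomy higher in the enhanced configuration than in the control configuration",
    "red": "autonomy no higher in the enhanced configuration than in the control configuration",
    "white": "no directional expectation; detect any effect in either configuration",
}


def make_proposal(indicator, tech, agent, steps, section):
    """Return (proposal, expected outcome) for one cell of the relevance matrix."""
    if indicator not in EXPECTATION:
        raise ValueError(f"unknown indicator: {indicator!r}")
    # Green and white cells share the 'promote' wording; only red cells negate it.
    template = NOT_PROMOTE if indicator == "red" else PROMOTE
    text = template.format(tech=tech, agent=agent, steps=steps, section=section)
    return text, EXPECTATION[indicator]


proposal, expected = make_proposal("white", "Augmented reality tools", "operator",
                                   "recognise gaps", "the final assembly operation")
print(proposal)
print(expected)
```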

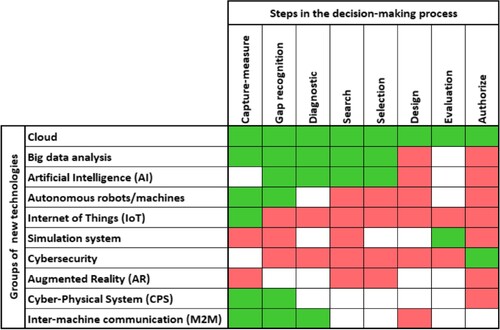

4.5.2. Design of a relevance matrix

This matrix can be completed by various means: a literature review for a theoretical matrix, a survey of experts for a more pragmatic matrix, or experiments for a more realistic matrix. A Delphi–Régnier panel composed of equal numbers of academics, experienced industrialists, and new technology providers was interviewed. This generated a list of the new technologies most likely to support one or more of the eight steps of the decision-making process (Rosin et al. 2022). This type of work fits well into this step because it reflects current industrial and scientific requirements and remains valid in a broader operational context. The results of this study are presented in Figure 7 in the format expected at this step of the framework.

Figure 7. Proposed relevance matrix of new technologies to the steps in the decision-making process. Adapted from Rosin et al. (2022).

These expert recommendations can be used to define suitable combinations of scenarios and technologies. Although certain technologies, such as AI and the cloud, seem naturally linked to several autonomy types, the study also reveals disagreement among experts about the application of some of these new technologies. Nevertheless, Figure 7 can guide researchers in choosing the new technologies that the use case will explore and the type of experimentation it will be able to support.

Figure 8. Step 6: Observational variables and measuring methods.

At the end of this step, the specific research question is completed, proposal(s) are identified, and the context of the use case is established. All that remains is to define the logic linking the data to the proposals and criteria for interpreting the results in Step 6.

4.6. Step 6: select variables of interest and data collection protocol

This step defines the experimental part of the use case, which aims to test the proposals defined in the first five steps of the framework. This subsection is divided into two parts. The first describes how to select the variable(s) of interest and the measurement protocol(s) for the experiments. The second explains how to structure the different experimental configurations.

4.6.1. Variable of interest and measure

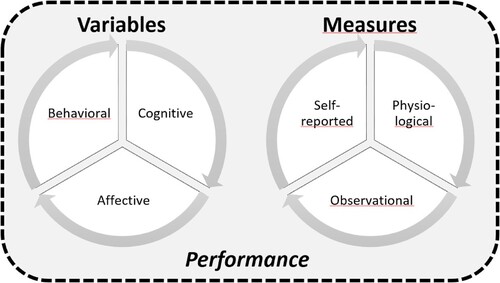

The final step in the framework is to identify the variables to be collected directly from the use case during the various experimental phases. Note that these data remain entirely independent of the status of the agent, although they seem to correspond to the capabilities of an individual: we are interested in the capabilities of an individual as well as those of a team or an organisation. However, selecting one or more variables of interest is insufficient if no data collection protocol is defined: the measurement protocol (measures) is as important as the type of data (variables) to be measured. In this step, the user should therefore choose both the variables of interest and the way to measure them. Figure 8 presents the three variables of interest and their measures.

The variables are divided into three main categories: cognitive, affective, and behavioural. Cognitive variables relate directly to an agent's mental abilities, such as memory, attention, and decision-making. Affective variables broadly relate to sensory perception (e.g., visual acuity and olfactory sensitivity), intuition, and emotions. Finally, behavioural variables refer to the agent's behaviour or variations in behaviour, such as initiative, excitement, stress, or aggressiveness.

Three types of measurements can be defined: self-reported, physiological, and observational. Self-reported measures are those in which participants report their thoughts, feelings, or behaviours through surveys, questionnaires, and interviews. These measures are subject to bias because the reported facts are first interpreted by the individuals reporting them. Physiological measures assess bodily functions such as heart rate, blood pressure, brain activity, or hormone levels. These measures are much more objective but usually require invasive equipment. Observational measures assess observable actions or behaviours, for example, by counting the number of times a behaviour occurs, determining its duration, or observing and rating its quality. These measures are only partially objective because they can be influenced by observer bias.
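The requirement that every selected variable of interest has a measurement protocol can be checked mechanically. A plan maps each variable category to the measure types used to collect it. This is a minimal sketch. The category and measure names come from the text, but the function `protocol_gaps` and the example plan are hypothetical. The example does not reproduce the pairings of Table 1.

```python
VARIABLES = {"cognitive", "affective", "behavioural"}
MEASURES = {"self-reported", "physiological", "observational"}


def protocol_gaps(plan):
    """List problems in a plan mapping each variable of interest to a set of measures."""
    problems = []
    for variable, measures in plan.items():
        if variable not in VARIABLES:
            problems.append(f"unknown variable: {variable}")
        elif not measures:
            problems.append(f"no measurement protocol for {variable} variables")
        else:
            problems.extend(f"unknown measure for {variable}: {m}"
                            for m in sorted(measures - MEASURES))
    return problems


plan = {  # hypothetical plan
    "cognitive": {"self-reported"},
    "affective": {"self-reported", "physiological"},
    "behavioural": set(),
}
print(protocol_gaps(plan))  # ['no measurement protocol for behavioural variables']
```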

Finally, Figure 8 shows that these variables of interest are measured in addition to performance measures. It is crucial to ensure that the new technology group(s) do not degrade the agent's performance while it performs its business process. Therefore, the agent's performance is continuously measured to ensure that, in the enhanced configuration, it remains at least similar to that in the control configuration.
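One way to operationalise "at least similar" is to compare mean performance in the two configurations within a relative margin. This is a descriptive sketch only. The 5% margin, the example data and the function name `performance_maintained` are hypothetical. A real protocol would more likely use an appropriate statistical test, such as a non-inferiority test, chosen for the design.

```python
from statistics import mean


def performance_maintained(control, enhanced, tolerance=0.05, higher_is_better=True):
    """True if mean enhanced performance is not worse than the control mean by more than
    `tolerance` (a hypothetical relative margin standing in for 'at least similar')."""
    c, e = mean(control), mean(enhanced)
    if higher_is_better:
        return e >= c * (1 - tolerance)
    return e <= c * (1 + tolerance)


# Hypothetical throughput (units/hour): slightly lower, but within the margin.
print(performance_maintained([20, 22, 21], [20, 21, 20]))                       # True
# Hypothetical cycle times (s), where lower is better: degraded beyond the margin.
print(performance_maintained([60, 62, 61], [66, 65, 67], higher_is_better=False))  # False
```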

4.6.2. Enhanced configuration and control configuration

Several configurations can be used to develop an experimental protocol in line with the use case proposals. In this study, we call ‘enhanced configuration’ any experimental configuration involving one or more groups of new technologies supporting the agent, and ‘control configuration’ the configuration without any new technology supporting the agent. The principle of experimentation is therefore to compare one or more enhanced configurations with a control configuration.

Certain new technologies, and the way they are used, can differ in capability: low, medium, or high. Thus, it is possible to design low-, medium-, and/or highly enhanced configurations. In addition to comparing these enhanced configurations with the control configuration, the following implementation conditions can be used to examine the impact of changing the configuration during the experiment:

Maintaining capability: no configuration change;

Increasing the capability of the new technology: moving from a low-enhanced to a highly enhanced configuration;

Degrading the capability of the new technology: moving from a highly enhanced to a low-enhanced configuration;

Stopping/adding capability: moving from an enhanced configuration to a control configuration, and vice versa.

The control group, however, remains in the control configuration throughout the experiment.
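The four implementation conditions can be derived from the capability levels before and after a configuration change. The control configuration is treated as level 0. This is an illustrative sketch. The numeric levels and the function name `classify_change` are hypothetical. A change between medium and high is classed as increasing or degrading, by analogy with the low/high examples in the text.

```python
LEVELS = {"control": 0, "low": 1, "medium": 2, "high": 3}


def classify_change(before, after):
    """Name the implementation condition for a change of configuration during an experiment."""
    b, a = LEVELS[before], LEVELS[after]
    if b == a:
        return "maintaining capability"
    if b == 0 or a == 0:
        return "stopping/adding capability"    # to or from the control configuration
    return "increasing capability" if a > b else "degrading capability"


print(classify_change("low", "high"))      # increasing capability
print(classify_change("high", "control"))  # stopping/adding capability
```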

5. Validation

The validation of this framework is based on three elements:

Theoretical validation was conducted through a precise description of the methodology applied to the framework design (cf. Section 3, Methodology). This validation ensures that all framework design choices, as well as all tools referred to throughout the six steps, originate in recognised work.

Empirical validation (below) presents an experiment: the step-by-step use of the framework to generate a relevant use case. This experiment ensured that the framework could generate a relevant use case containing all the information required for its experimental application.

Retrospective validation (below) compares the different choices offered by the framework at each step with data from actual cases reported in the current literature. This validation ensures that the framework is complete, or at least as exhaustive as possible.

5.1. Empirical validation

It is essential to ensure that the framework generates comprehensive and relevant use cases. To demonstrate this, we present an experiment: the application of the framework to generate an actual use case. We followed the structure of the framework and report the choices made at each step in detail.

Step 1: The business process under consideration is the snowshoe assembly line in our factory laboratory. This step requires isolating the section of the business process to be studied; we focused on the final assembly operation. It involves two actions: a quality control of the received sole and, if the sole is correct, completion of the assembly by inserting two latches. Otherwise, the snowshoe is rejected. This yields a concrete decision-making situation (Is the sole correct?). The selected section has a clearly defined beginning and end, and the actions and decisions it involves are identified.
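The section of the business process isolated in Step 1 can be captured as a small, explicit decision flow. The sketch below is a hypothetical illustration: `ProcessSection`, `final_assembly`, and the wording of the start and end events are assumptions introduced here, not artefacts of the factory laboratory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessSection:
    """Hypothetical record of the process section isolated in Step 1."""
    start: str
    end: str
    decision: str


FINAL_ASSEMBLY = ProcessSection(
    start="sole received from the previous operation",
    end="snowshoe assembled or rejected",
    decision="Is the sole correct?",
)


def final_assembly(sole_is_correct: bool) -> list:
    """Return the actions performed in the final assembly operation."""
    actions = ["quality control of the received sole"]
    if sole_is_correct:
        # Finalise the assembly by inserting the two latches.
        actions += ["insert latch 1", "insert latch 2"]
    else:
        # A defective sole leads to the snowshoe being rejected.
        actions.append("reject snowshoe")
    return actions


if __name__ == "__main__":
    print(final_assembly(True))
    print(final_assembly(False))
```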

Step 2: The business process, particularly the selected section, guides the choice of the agent's characteristics. The decision of interest here is made by an agent with operator status who is focused on their own tasks. It would also have been possible, for example, to focus on the decisions the operator could make to prevent an identified quality defect from recurring on the assembly line, thereby adding a cooperative dimension.

Step 3: We used the framework's classification of autonomy types (Figure ). In the selected section of the business process, the agent's decision derives directly from its ability to detect quality defects. Because gap analysis is at the core of cyber monitoring, we chose autonomy Type 1. However, one could imagine a business process in which the operator corrects the defective sole instead of rejecting it. Selecting autonomy Type 2 or Type 5, which enhance the search for a solution (a known solution for Type 2, an unknown one for Type 5), would then be more relevant.

Step 4: The table for this step of the framework (Figure ) indicates that, for autonomy Type 1, the capture measure and gap recognition decision-making steps are reinforced. Simply detecting a gap between the analyzed sole and the standard sole is sufficient for the agent to decide to discard it. We therefore focus on these two decision-making steps and, more specifically, on the gap recognition step, which appears to trigger the decision. However, we could have asked the agent to qualify the gap, thereby integrating the diagnostic decision-making step into the use case.
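Gap recognition in this use case amounts to comparing the captured measurements of a sole with those of the standard sole. The sketch below assumes that the capture measure step yields numeric features; the feature names (`length_mm`, `width_mm`), nominal values, and tolerances are hypothetical and do not come from the snowshoe line.

```python
# Hypothetical nominal values and tolerances for the standard sole.
STANDARD = {"length_mm": 600.0, "width_mm": 200.0}
TOLERANCE = {"length_mm": 2.0, "width_mm": 1.0}


def recognise_gaps(measured, standard=STANDARD, tolerance=TOLERANCE):
    """Gap recognition: return the deviation of every out-of-tolerance feature.

    A feature that was not captured is reported as an infinite gap.
    """
    gaps = {}
    for feature, nominal in standard.items():
        value = measured.get(feature)
        if value is None:
            gaps[feature] = float("inf")
            continue
        deviation = abs(value - nominal)
        if deviation > tolerance.get(feature, 0.0):
            gaps[feature] = deviation
    return gaps


def decide(measured, standard=STANDARD, tolerance=TOLERANCE):
    """Autonomy Type 1 decision: any recognised gap is enough to discard the sole."""
    return "reject" if recognise_gaps(measured, standard, tolerance) else "accept"


if __name__ == "__main__":
    print(recognise_gaps({"length_mm": 601.0, "width_mm": 203.0}))  # {'width_mm': 3.0}
    print(decide({"length_mm": 601.0, "width_mm": 203.0}))          # reject
```

Qualifying the gap (the diagnostic step mentioned above) would go beyond this sketch, which only detects whether a gap exists.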

Step 5: We used the relevance matrix constructed from the Delphi–Régnier study by Rosin et al. (Figure ). The matrix highlights the gap recognition decision-making step, and experts agree that AI is relevant for enhancing it. A use case validating this assertion within a specific context would therefore be of interest. The specific research question can then be completed: ‘How does the reinforcement of the gap recognition step in the decision-making process by AI contribute to the emergence of a cyber-monitoring autonomy type for operational teams and systems?’ The resulting proposal is: ‘AI promotes operator autonomy in decision-making during routine tasks by enhancing the operator's ability to recognise a gap as part of quality control in an assembly process.’ Another group of new technologies, such as augmented reality, could also be selected or added. Because this group of new technologies caused dissensus among experts, the aim of the use case would then be to clarify this dissensus.
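Step 5 combines the highlighted decision-making step, the selected technology, and the autonomy type into a specific research question. The sketch below encodes only the two expert judgements mentioned in this step (positive consensus for AI and dissensus for augmented reality, both for gap recognition); it does not reproduce the relevance matrix of Rosin et al., and `RELEVANCE`, `research_question`, and `use_case_aim` are hypothetical names.

```python
# Only the two judgements reported in the text are encoded; the full
# Delphi-Régnier relevance matrix is NOT reproduced here.
RELEVANCE = {
    ("gap recognition", "AI"): "consensus",
    ("gap recognition", "augmented reality"): "dissensus",
}

QUESTION_TEMPLATE = (
    "How does the reinforcement of the {step} step in the decision-making "
    "process by {technology} contribute to the emergence of a {autonomy} "
    "autonomy type for operational teams and systems?"
)


def research_question(step, technology, autonomy):
    """Fill the question template with the choices made in Steps 3 to 5."""
    return QUESTION_TEMPLATE.format(step=step, technology=technology, autonomy=autonomy)


def use_case_aim(step, technology):
    """Derive the aim of the use case from the expert judgement, if encoded."""
    status = RELEVANCE.get((step, technology))
    if status == "consensus":
        return "confirm the expected contribution in a specific context"
    if status == "dissensus":
        return "clarify the dissensus among experts"
    return "no expert judgement encoded for this pair"


if __name__ == "__main__":
    print(research_question("gap recognition", "AI", "cyber-monitoring"))
    print(use_case_aim("gap recognition", "augmented reality"))
```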

Step 6: In the final step, we defined the measures and variables of interest and set up several experimental configurations for the first experiment. We aimed to observe and measure the operator's involvement with the implemented artificial intelligence, and therefore selected behavioural variables of interest. To limit bias, we chose physiological measurements, using equipment that captures heart rate, respiratory rate, sweating, etc. We then defined two experimental configurations: the control configuration, in which the operator works without AI support, and an enhanced configuration, in which the operator is supported by an AI that is reliable in 100% of cases. During the first experiment, we made one configuration change of the stopping type (from the enhanced to the control configuration). The control group remained in the control configuration throughout the experiment. Given the positive expert consensus on AI in the context of our use case, the proposal would move towards confirmation if the agent's involvement were found to be greater in the enhanced configuration than in the control configuration. Finally, to extend the experiment, we added brain activity measurement equipment to measure cognitive load, and defined a medium-enhanced configuration involving an AI that is 80% reliable to further vary the experimental protocol.
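The reliability of the AI support is the parameter that distinguishes the enhanced (100%) and medium-enhanced (80%) configurations. The following sketch shows one way such support could be simulated when preparing a protocol; it assumes that reliability means the exact share of trials on which the AI advice is correct, and the helper names and the boolean labelling convention are hypothetical.

```python
import random

# Reliability of the AI support in each configuration of the use case
# (None = control configuration, no AI support).
CONFIGURATIONS = {"control": None, "medium-enhanced": 0.8, "enhanced": 1.0}


def simulate_ai_advice(true_labels, reliability, seed=0):
    """Return AI advice that is correct on exactly round(reliability * n) trials.

    Labels are booleans (True = defective sole, a hypothetical convention).
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    n = len(true_labels)
    n_errors = n - round(reliability * n)
    rng = random.Random(seed)  # seeded so that a session can be replayed
    wrong = set(rng.sample(range(n), n_errors))  # trials on which the AI errs
    return [(not label) if i in wrong else label for i, label in enumerate(true_labels)]


def accuracy(advice, true_labels):
    """Share of advice that agrees with the true labels."""
    matches = sum(a == t for a, t in zip(advice, true_labels))
    return matches / len(true_labels)


if __name__ == "__main__":
    labels = [i % 5 == 0 for i in range(1000)]  # hypothetical 20% defect rate
    for name, reliability in CONFIGURATIONS.items():
        if reliability is None:
            print(name, "no AI support")
        else:
            print(name, accuracy(simulate_ai_advice(labels, reliability), labels))
```

Fixing the exact number of erroneous trials, rather than drawing each error independently, keeps the realised reliability identical across participants; this is a design choice of the sketch, not a detail stated for the experiment.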

This experiment, which illustrates each step of the framework, shows that a coherent and relevant use case can be defined in just a few steps, with a clear and precise context and several experimental protocols that could validate the proposals put forward. The use case thus answers a specific research question that fits naturally into a broader research approach. The works presented by Passalacqua et al. (Citation2023) and, more recently, by Joblot et al. (Citation2023) present use cases representative of the proposed example that incorporate all these elements.
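The output of the six steps can be gathered in a single record, which also makes it easy to check that no step has been left undefined. This is a hypothetical data structure filled with the choices described above for the snowshoe use case; the class and field names are assumptions, not a format prescribed by the framework.

```python
from dataclasses import dataclass, fields


@dataclass
class UseCase:
    """Hypothetical record of the choices made at each step of the framework."""
    business_process: str = ""   # Step 1
    agent: str = ""              # Step 2
    autonomy_type: str = ""      # Step 3
    decision_steps: tuple = ()   # Step 4
    technologies: tuple = ()     # Step 5
    protocol: tuple = ()         # Step 6: measures and configurations

    def missing_steps(self):
        """Names of the fields that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


SNOWSHOE = UseCase(
    business_process="snowshoe final assembly: sole quality control, latch insertion",
    agent="operator focused on own tasks",
    autonomy_type="Type 1 (cyber-monitoring)",
    decision_steps=("capture measure", "gap recognition"),
    technologies=("AI",),
    protocol=("physiological measures", "control vs 100%-reliable AI"),
)

if __name__ == "__main__":
    print(SNOWSHOE.missing_steps())  # []
```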

5.2. Retrospective validation

The framework was also compared with case studies and use cases reported in the literature on I5.0. Restricting the search to I5.0 papers ensures that the selected cases are recent and deliberately human-centred. The I5.0 literature includes several articles that use case studies and use cases to assess the impact of new technologies on employees. Because case studies are sufficiently close to use cases, both were included in the article search to make the analysis of the literature as complete as possible. The experiments reported in these cases were conducted in realistic and complex environments. We sought to validate the relevance of our framework by analyzing whether it could generate use cases that reproduce the characteristics of these cases.

The main aim of this validation was to ensure that the framework is complete, that is, that every parameter of the studied cases corresponds to an option proposed in one of the steps of the framework. The current literature was analyzed for this purpose.

The validation process began by identifying journal and conference articles in the Scopus database that present case studies and use cases focusing on Industry 5.0 practices. The following query was used: TITLE-ABS-KEY ((‘industrie 5.0’ OR ‘industry 5.0’) AND (‘case stud*’ OR ‘use case’)) AND (LIMIT-TO (DOCTYPE, ‘cp’) OR LIMIT-TO (DOCTYPE, ‘ar’)) AND (EXCLUDE (SUBJAREA, ‘MEDI’) OR EXCLUDE (SUBJAREA, ‘ENER’) OR EXCLUDE (SUBJAREA, ‘PHYS’) OR EXCLUDE (SUBJAREA, ‘PSYC’) OR EXCLUDE (SUBJAREA, ‘ARTS’) OR EXCLUDE (SUBJAREA, ‘MATE’)). As of December 2023, the search returned 118 papers.
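Because the query combines three families of filters (search terms, document types, and excluded subject areas), it can be assembled programmatically, which makes it easier to rerun or adapt. The sketch below builds a query string following the structure of the one above; the function name is hypothetical, and the exact spacing and quoting may differ from what the Scopus interface exports.

```python
def scopus_query(topic_terms, case_terms, doc_types, excluded_areas):
    """Assemble a Scopus advanced-search string (hypothetical helper)."""
    def any_of(terms):
        # Quote each term and join the alternatives with OR.
        return " OR ".join(f'"{t}"' for t in terms)

    query = f"TITLE-ABS-KEY(({any_of(topic_terms)}) AND ({any_of(case_terms)}))"
    query += " AND (" + " OR ".join(f'LIMIT-TO(DOCTYPE, "{d}")' for d in doc_types) + ")"
    query += " AND (" + " OR ".join(f'EXCLUDE(SUBJAREA, "{a}")' for a in excluded_areas) + ")"
    return query


if __name__ == "__main__":
    print(scopus_query(
        ["industrie 5.0", "industry 5.0"],
        ["case stud*", "use case"],
        ["cp", "ar"],
        ["MEDI", "ENER", "PHYS", "PSYC", "ARTS", "MATE"],
    ))
```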

Before using this set of articles to validate the framework, it was essential to ensure that each article included a case study assessing the influence of one or more groups of new technologies on an agent, preferably within a decision-making context. An initial reading of each article made it possible to exclude articles that primarily addressed the validation of a technology implementation, the training of individuals on new technologies, or the prevention of accidents between humans and machines. In the end, 21 articles presenting relevant cases were retained and analyzed further. The articles by Rožanec et al. (Citation2022) and Longo, Padovano, and Umbrello (Citation2020) each propose multiple cases, which brought the total to 24 relevant case studies and use cases.
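The case count can be checked with a short calculation, assuming that each of the other 19 retained articles presents a single case. Here n_R and n_L are notation introduced only for this check, denoting the numbers of cases taken from Rožanec et al. and from Longo, Padovano, and Umbrello; their individual values are not stated in the text.

```latex
\underbrace{(21 - 2) \times 1}_{\text{single-case articles}} + (n_R + n_L) = 24
\quad\Longrightarrow\quad
n_R + n_L = 24 - 19 = 5
```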

The framework was validated using Table 2 below, which serves as an analytical grid for each case identified in the literature. Each case is represented by one row in the table. The table is divided into five coloured sections corresponding to Steps 2–6 of the framework (Step 1 does not involve any choice). Each section is further divided into items that characterise the corresponding framework step, and includes two additional columns:

The column at the beginning of the section indicates whether a particular case can be characterised by any item within the considered framework step.

An ‘other’ column at the end of the section indicates if a given case was characterised by an item not explicitly structured within the framework.

Table 2. Validation of the use cases development framework.

The validation process involved completing the table by marking the corresponding box when an item (column) was identified in a specific case (row). The final row of the table provides a summary, counting the cases characterised by each item. The framework was considered incomplete if a case required an item not covered by the corresponding step; otherwise, it was considered complete and validated against the current literature.
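The completion and summary of the grid can be expressed in a few lines of code. In this hypothetical sketch, the grid maps each case to the set of items marked for it, items are labelled 'Step N: item', and each step's 'other' column is labelled 'Step N: other'; the example cases and items are invented for illustration and are not taken from Table 2.

```python
# Invented example data; not the content of Table 2.
ITEMS = ["Step 5: AI", "Step 5: augmented reality", "Step 5: other",
         "Step 6: performance", "Step 6: other"]
GRID = {
    "case A": {"Step 5: AI", "Step 6: performance"},
    "case B": {"Step 5: AI", "Step 5: augmented reality"},
}


def summarise_grid(grid, items):
    """Final row of the table: number of cases in which each item is marked."""
    return {item: sum(item in marks for marks in grid.values()) for item in items}


def is_complete(grid):
    """Complete if no case needed an item outside the framework ('other' column)."""
    return not any(
        mark.endswith(": other") for marks in grid.values() for mark in marks
    )


if __name__ == "__main__":
    print(summarise_grid(GRID, ITEMS))
    print(is_complete(GRID))  # True
```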

Each of the 24 selected cases was studied in depth by several readers, each of whom completed the analysis grid independently to avoid mutual influence. In addition, each reader had to provide a formal justification for the proposed positioning. The results were then compared and analyzed before the final placement of each case in the table.
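The text does not specify how the readers' grids were compared. One simple possibility, sketched below under that caveat, is to list for each case the items on which the readers' grids differ, so that they can be discussed before final placement; the data format and the function name are hypothetical, and the sketch computes no agreement statistic.

```python
def disagreements(gradings):
    """Return, for each case, the items marked by some readers but not all.

    `gradings` maps reader -> case -> set of marked items (hypothetical format).
    """
    readers = list(gradings)
    cases = set().union(*(gradings[r].keys() for r in readers))
    result = {}
    for case in sorted(cases):
        # A reader who did not grade a case is treated as marking nothing.
        marks = [gradings[r].get(case, set()) for r in readers]
        disputed = set().union(*marks) - set.intersection(*marks)
        if disputed:
            result[case] = disputed
    return result


if __name__ == "__main__":
    gradings = {
        "reader 1": {"case A": {"Type 1", "AI"}},
        "reader 2": {"case A": {"Type 1", "AI", "augmented reality"}},
    }
    print(disagreements(gradings))  # {'case A': {'augmented reality'}}
```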

We encountered no case that could not be characterised using the proposed framework. Nor did we find any case that required an item outside the choices offered by the framework, as shown by the absence of marks in the ‘other’ columns. This provides an initial level of validation based on the literature (see Table 2).

6. Discussion

This framework does not require starting from a specific technology to construct a use case. Instead, it suggests first defining a context and a need, and then identifying, through Step 5, the new technology or technologies most relevant to that context. The framework is therefore not technology-centred. In addition, defining the agent is particularly important within the framework because it strongly influences subsequent decisions. By prioritising a human-centred approach, the framework aligns fully with the principles of Industry 5.0.

Most steps of the framework are based on established and recognised works. For instance, Step 2 draws on the APICS work centre concept (Pittman and Atwater Citation2022), whereas Step 5 uses the matrix from the research of Rosin et al. (Citation2022). Although the structure of the framework, as outlined at the beginning of the methodology, must be retained, the specific references used to illustrate each step can be replaced by alternatives. It is important to distinguish between the structure of the framework and its content. New references may better match the objectives of the framework's users or offer more recent insights, keeping the framework up to date.

For example, Step 2 could be modified to use models that take a broader view than the work centre. Based on a think tank of academics and industrialists, Bourdu, Péretié, and Richer (Citation2016) analyzed the notion of autonomy at work in the context of several emerging models of work organisation, such as lean management, liberated enterprises, and responsible enterprises. They proposed a model of autonomy at work based on three dimensions (the task, cooperation, and governance) that delineate an agent's space of involvement, direct participation, and ability to influence and decide on work.

The framework consists of six distinct steps presented in a specific order. In practice, however, it is often beneficial to work through these steps simultaneously when generating use cases. Strong interactions and dependencies exist between the steps, and the choices made in one step can affect the decisions made in previous steps. As a result, the order in which the steps are addressed has little impact on the outcome, provided there is a continuous feedback loop. The six-step framework can thus be viewed as multidimensional, with each dimension representing a different aspect of the use case generation process.

The framework’s validation reveals three configurations worth analyzing:

Configuration 1: an item proposed by the framework is not found in any of the cases studied. This occurred for items such as cybersecurity technology (Step 5), M2M communication (Step 5), and autonomy Type 7 (Step 3). This suggests that these themes and their implications for autonomy have not been thoroughly explored in existing research, and points to new avenues for future research.

Configuration 2: none of the items proposed for a given step was identified in the case studied. Steps 3 and 4 of our framework are not detailed by Doyle-Kent and Shanahan (Citation2022): it was difficult to identify which step of the decision-making process was emphasised, because the case study was more concerned with the benefits perceived by people than with how employees deal with problems. Similarly, Step 6 of our framework is not detailed in Helm, Malikova, and Kembro (Citation2023), Lo (Citation2023), Nourmohammadi, Fathi, and Ng (Citation2022), Ordieres-Meré, Gutierrez, and Villalba-Díez (Citation2023), and Sit and Lee (Citation2023). In these cases, performance is the primary measure, which our framework also supports; however, our framework aims to enrich the analysis by incorporating other variables of interest to provide a more comprehensive understanding.