Abstract

In this paper, the optimal control problem for dynamical systems represented by general high-order fully actuated (HOFA) models is formulated. The problem aims to minimise an objective in the quadratic form of the states and their derivatives of certain orders. The designed controller is a combination of the linearising nonlinear controller and an optimal quadratic controller for a converted linear system. In the infinite-time output regulation case, the solution is in essence a nonlinear state feedback dependent on a well-known Riccati algebraic equation. In the sub-fully actuated system case, the feasibility of the controller is investigated and guaranteed by properly characterising a ball restriction area of the system initial values. Application of the optimal control technique for sub-fully actuated systems to a spacecraft attitude control provides very smooth and steady responses and well demonstrates the effect and simplicity of the proposed approach.

1. Introduction

Optimal control was born in the late 1950s with the appearance of the linear quadratic regulator (LQR), dynamic programming and the maximum principle. Kalman (1960) first introduced the LQR for linear systems. The method determines an optimal control in an analytic feedback form and plays a fundamental role in linear control systems theory (Anderson & Moore, 1990). Fruitful results have since appeared in the literature. Constrained LQR has been proposed for linear systems subject to constraints on the inputs and states (Chmielewski & Manousiouthakis, 1996; Scokaert & Rawlings, 1998). Stochastic systems (Chen et al., 1998) and descriptor systems (Bender & Laub, 1987a, 1987b) have also been studied, with corresponding LQRs developed. Terra et al. (2014) consider robust linear quadratic regulators (RLQRs) for discrete-time linear systems with parametric uncertainties, while noise in the state and control is taken into account in Rami et al. (2002). Agrawal (2006) and Li and Chen (2008) extend optimal control theory to fractional dynamic systems and give the solution to fractional LQR problems. In addition, Li and Todorov (2004) present an iterative LQR design for nonlinear biological movement systems. In the context of switched and hybrid systems, a number of important properties associated with the discrete-time switched LQR (DSLQR) problem have been derived (Zhang et al., 2009, 2012).

Dynamic programming was first proposed by Bellman (1952) with the intention of solving multistage decision process problems. This approach transforms the discrete-time optimal control problem into solving the Hamilton–Jacobi–Bellman (HJB) equation (Bellman & Kalaba, 1957, 1960). For problems involving conflicting objectives, major developments in multi-objective dynamic optimisation have been made, e.g. the fuzzy dynamic programming proposed in Abo-Sinna (2004). To break the ‘curse of dimensionality’ associated with the traditional dynamic programming approach, approximate dynamic programming has been developed by combining simulation and function approximation (Wang et al., 2009), where heuristic dynamic programming (HDP) (Werbos, 1977), action-dependent HDP (Werbos, 1989), neuro-dynamic programming (Bertsekas & Tsitsiklis, 1995) and learning-based algorithms (Lewis & Vrabie, 2009; Tsitsiklis & Roy, 1997) can be used.

Around the time Bellman proposed dynamic programming, Pontryagin's maximum principle appeared for general continuous-time nonlinear control problems, by finding the solution of boundary conditions of the HJB equation (Pontryagin et al., 1962; Rozonoer, 1959). Later, a discrete version was presented in Hwang and Fan (1967). Nowadays, these types of optimal control techniques have been widely generalised, with extensions to infinite-dimensional state spaces (Seierstad, 1975), hybrid control systems with the Hybrid Maximum Principle (Dmitruk & Kaganovich, 2008; Sussmann, 1999), constrained impulsive control problems with necessary conditions in the form of Pontryagin's maximum principle (Arutyunov et al., 2012), Boolean control networks (Laschov & Margaliot, 2011a, 2011b) and optimal control problems with time delays (Bokov, 2011).

As a type of optimal control, model predictive control (MPC) techniques provide a methodology to tackle model uncertainty and dynamical constraints on states and inputs without expert intervention (Findeisen et al., 2003; Garcia et al., 1989). The idea originally comes from dynamic matrix control (DMC), and an explosion of activity in MPC has been witnessed in the current century. For deterministic systems, hybrid MPC, economic MPC, explicit MPC and distributed MPC have attracted much attention. On the other hand, robust MPC and stochastic MPC have been proposed to deal with the control of uncertain systems subject to disturbances, or state and control constraints. More details can be found in Lee (2011) and Mayne (2014) and the references therein.

Besides the above, $H_\infty$ optimal control is a celebrated breakthrough, developed to deal with worst-case control design for systems subject to input disturbances (Ball & Helton, 1990; Doyle et al., 1988). The case in which parameter uncertainty appears in plant modelling motivates research on the robust $H_\infty$ control problem, and the results cover both continuous and discrete systems (Xie & de Souza, 1990; Xu et al., 2000). Robust $H_\infty$ control theory has also been extended to time-delay systems (Xu & Chen, 2002). In addition, as an effective approximation method, state-dependent Riccati equation (SDRE)-based methods address nonlinear optimal control problems and provide systematic and effective design of feedback controllers (Çimen, 2008, 2012), where constrained SDRE for systems with state constraints and SDRE in combination with $H_\infty$, sliding mode control, neural networks, etc. are also studied (Nekoo, 2019).

1.1. Fully actuated system approaches

Most of the approaches mentioned above are in the general state–space framework. Except for those optimal control techniques that cope with linear systems, e.g. linear quadratic optimal control and linear $H_2$/$H_\infty$ optimal control, most of the reported optimal control approaches are, more or less, complicated in theory and hence difficult to apply in practice because of theoretical or practical obstacles, such as complicated nonlinear differential or algebraic equations that cannot be solved exactly.

As argued in Duan (2020a), Duan (2020b), Duan (2020d) and Duan (2020j), high-order fully actuated (HOFA) models also serve as a general model for dynamical control systems, and are especially convenient and effective in dealing with control problems. This has been demonstrated for robust control, adaptive control and disturbance rejection control in Duan (2020f), Duan (2020g), Duan (2020h) and Duan (2020i). A major advantage of the HOFA models is that their full-actuation feature allows one to cancel the known nonlinearities in the system and hence to convert, to an extent, a nonlinear problem into a linear one. This advantage is confirmed again in this paper for the problem of nonlinear optimal control.

An optimal control problem is generally posed with a performance index, the most basic form of which is quadratic. In this paper, an optimal control problem for general dynamical systems described by HOFA models is formulated to minimise an index in the quadratic form of the state and its derivatives of certain orders. As a consequence of this requirement, the system responses eventually behave very smoothly and steadily.

As mentioned above, utilising the full-actuation feature of the HOFA models, the nonlinear optimal control problem is converted essentially to a linear quadratic optimal control problem, and a nonlinear optimal controller in a state feedback form is then obtained. Following such an outline, the finite-time optimal tracking control problem is first treated, and then the infinite-time output regulation problem is also solved.

Another contribution of the paper is the treatment of the sub-fully actuated system case. Sub-fully actuated systems are defined in Duan (2020a) and Duan (2020j), and are relatively more difficult to handle due to a problem of feasibility. Using a feature of linear quadratic optimal control, a feasibility condition is established for the optimal control of sub-fully actuated systems. It turns out that feasibility is guaranteed when a condition on only the initial values of the system is met.

To demonstrate the proposed nonlinear optimal control approach, control of the attitude system of a spacecraft is considered. It is well known that the spacecraft attitude system is highly nonlinear when the attitude angles vary over a wide range. Hence accurate attitude manoeuvring with smooth and steady transient performance has long remained a major challenge. With the new design, stabilisation of the attitude with very smooth and steady transient performance is achieved, and very efficient rapid turning elimination is also realised. The design and simulation results demonstrate that the proposed approach serves as a very effective and simple way to tackle such spacecraft attitude control problems.

In the subsequent sections, $I_n$ denotes the identity matrix, $\varnothing$ denotes the null set and $\Omega\setminus\Theta$ represents the complement of the set Θ in set Ω. For a square matrix P, $\lambda_{\max}(P)$, $\lambda_{\min}(P)$ and $\det P$ denote its maximum and minimum eigenvalues and its determinant, respectively, while for a nonsingular matrix P, its condition number is denoted by $\operatorname{cond}(P)$. Furthermore, for

and

as in the former papers in the series,

denotes a block diagonal matrix with the i-th diagonal block being

and

The paper is organised into seven sections. The next section formulates the nonlinear optimal control problem to be solved in the paper, and a solution to the problem is then given in Section 3. In Sections 4 and 5, the cases of output regulation and sub-fully actuated systems are treated, respectively. An application of the proposed optimal control technique to a spacecraft attitude control problem is presented in Section 6, followed by a brief concluding remark in Section 7. The appendix gives the general attitude model of a spacecraft.

2. Problem formulation

2.1. The HOFA model

Consider the following general HOFA system with multiple orders proposed by Duan (2020j):

(1)

where

is the control vector,

may represent a parameter vector, an external variable vector, a time-delayed state vector, an unmodelled dynamic state vector, etc.;

are a set of distinct integers,

are a set of vectors of proper dimensions, with

being a set of integers satisfying

(2)

Further,

are a set of nonlinear vector functions, and

is a sufficiently smooth matrix function satisfying the following full-actuation condition:

Assumption A1

, and t>0.

The system (1) satisfying the above Assumption A1 is called a (globally) fully actuated system (Duan, 2020j).

Remark 2.1

The above HOFA model (3) might easily be mistaken as representing only a very small portion of systems because of the full-actuation Assumption A1. However, as discussed in Duan (2020a), Duan (2020d) and Duan (2020j), it serves as a general model for dynamical control systems. Many systems in state-space form can be converted into HOFA systems (see Duan (2020a), Duan (2020b), Duan (2020d) and Duan (2020e)), and practical systems can also be modelled as HOFA systems.

If we denote

then the HOFA system (1) can be compactly written as

(3)

Recall that

,

we have

Denote

(4)

then it is easy to see that

For the above system (3), we can impose the output equation

(5)

where

is a known matrix.

Particularly, in the case that the above system (3) has the following set of output equations

(6)

where

are a set of constant matrices of appropriate dimensions, we can define

which obeys the output Equation (5).

2.2. Statement of the problem

The design objective is to let the output y track a properly given sufficiently differentiable signal . To realise this, we introduce the following objective:

(7)

where

are two semi-positive definite matrices, while

is a positive definite one. The term

in the integrand of the above index

penalises the rate of change of the system states. As a consequence, smoothness and steadiness in the state vector

will be achieved.

Based on the above description, the problem to be solved in this paper can now be stated as follows.

Problem 2.1

Given the HOFA system (3) with the output Equation (5), two semi-positive definite matrices

and a positive definite matrix

, find a feedback controller in the following form:

(8)

such that the index given by (7) is minimised, where in (8)

(9)

is some function which is continuous with respect to its variables.

To end this section, let us finally make some remarks about the considered HOFA model (1), or equivalently, (3).

Remark 2.2

Note that a special case of the above general HOFA model (1) is the following HOFA model with a single order:

(10)

which forms the basic part of the system models involved in the problems of robust control, adaptive control and disturbance rejection treated in Duan (2020f), Duan (2020g), Duan (2020h) and Duan (2020i).

Remark 2.3

Most physical systems are governed by certain physical laws, such as Newton's laws, the Lagrangian equation, the theorems of linear and angular momentum, and Kirchhoff's current and voltage laws. When such physical laws are used in modelling, a series of second-order subsystems is originally obtained (Duan, 2020a). From this stage we can obtain a state–space model by variable extension or, alternatively, a HOFA model by variable elimination if the system is controllable (Duan, 2020j). It should be noted that many physically fully actuated systems exist that are already in the form of second-order HOFA systems at the modelling stage.

3. Solution to problem

The solution to Problem 2.1 can be derived in three steps.

3.1. Step I. Deriving the linear system

In this step, we choose for the system (3) a control input transformation in the form of (8), with

being an introduced control vector. Under this control transformation, the system (3) is turned into the following series of linear systems:

(11)

which can be equivalently written in the state-space form as

(12)

where, by our notations,

(13a)

Define

then the set of systems in (12) can be more compactly written as

(14)
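As a rough illustration of the control input transformation in Step I, the following Python sketch uses a scalar second-order plant of our own choosing (a damped pendulum with hypothetical coefficients) rather than the general model (3). It assumes the transformation takes the common feedback-linearising form u = B⁻¹(v − f), which may differ in detail from the controller form (8); the names `linearising_control`, `pendulum_terms` and `closed_loop_accel` are illustrative only.

```python
import math


def linearising_control(f, B, v):
    """Return u = (v - f) / B for a scalar plant x'' = f + B * u.

    B must be nonzero, which is the scalar analogue of the
    full-actuation requirement (assumed form, not the paper's (8)).
    """
    if B == 0.0:
        raise ZeroDivisionError("B vanishes: the state is a singular point")
    return (v - f) / B


def pendulum_terms(theta, omega):
    """Hypothetical plant: theta'' = -sin(theta) - 0.1 * omega + 2 * u."""
    f = -math.sin(theta) - 0.1 * omega
    B = 2.0
    return f, B


def closed_loop_accel(theta, omega, v):
    """Acceleration of the plant once the linearising control is applied."""
    f, B = pendulum_terms(theta, omega)
    u = linearising_control(f, B, v)
    return f + B * u  # the nonlinear term cancels, leaving v
```

For any state at which B is nonzero, `closed_loop_accel(theta, omega, v)` returns v up to rounding, so the plant seen from the new input v behaves as a double integrator, which is the kind of linear system the subsequent steps work with.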

3.2. Step II. Index conversion

In this step, let us consider the index defined by (7).

In view of (3), we have

(15)

Further using (8), we obtain

(16)

Substituting the above relation (16) into (15) gives

(17)

Finally, substituting (17) into (7) turns the index

into the following form:

(18)

3.3. Step III. Solving the linear optimal problem

In this step, we design a state feedback control law for the linear system (14), with the output Equation (5), to minimise the index

defined in (18). According to the well-known optimal control result for linear systems (see, e.g. Theorem 8.5.1 in Duan (2016)), the controller is readily obtained as

(19)

where

is the solution to the following differential Riccati equation:

(20)

with the final value condition

(21)

and

is a vector function satisfying the differential equation

(22)

with

(23)

and the final value condition

(24)

To sum up, we have the following theorem about the solution to Problem 2.1.

Theorem 3.1

Let Assumption A1 be met. Then a solution to Problem 2.1 is given by

(25)

where

is the solution to the differential Riccati Equation (20) subject to the final value condition (21), and

is a vector function satisfying the differential Equations (22)–(23) and the final value condition (24).
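To make the role of the differential Riccati equation concrete, here is a minimal Python sketch for the simplest possible case: a scalar first-order integrator x' = v with the regulation index ∫(q x² + r v²) dt and terminal weight p_final. This toy setting and the names `riccati_rhs` and `solve_scalar_dre` are our assumptions; the tracking term and the matrix structure of (20)–(24) are not reproduced. The equation is integrated in the reversed time τ = T − t, starting from the final value condition.

```python
import math


def riccati_rhs(p, q, r):
    """dP/dtau for the scalar plant x' = v (A = 0, B = 1), tau = T - t."""
    return q - p * p / r


def solve_scalar_dre(q, r, p_final, horizon, steps=2000):
    """Integrate the scalar Riccati equation with classical RK4.

    Returns P sampled at tau = 0, h, ..., horizon, i.e. values[k] is P at
    time t = horizon - k * h, so values[-1] is P at t = 0.
    """
    h = horizon / steps
    p = p_final
    values = [p]
    for _ in range(steps):
        k1 = riccati_rhs(p, q, r)
        k2 = riccati_rhs(p + 0.5 * h * k1, q, r)
        k3 = riccati_rhs(p + 0.5 * h * k2, q, r)
        k4 = riccati_rhs(p + h * k3, q, r)
        p += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        values.append(p)
    return values
```

In this scalar case with p_final = 0 the exact solution is P = sqrt(q r) tanh(sqrt(q/r) τ), which gives a convenient check on the integrator; the time-varying feedback is then v = −(P/r) x.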

In the case of output regulation, that is, the case of , the index

becomes

(26)

In this special case, the function

vanishes, and the above Theorem 3.1 reduces to the following result.

Corollary 3.1

Let Assumption A1 be met, and be semi-positive definite, and

be positive definite. Then, for the system (3) with the output Equation (5), a feedback controller in the form of (8), which minimises the index

is given by

(27)

where

is the solution to the differential Riccati Equation (20) subject to the final value condition (21).

To end this section, let us make the following remark.

Remark 3.1

In linear systems theory, the time-varying case is a very important part. Unlike constant linear systems, time-varying linear systems are usually much more difficult to handle. As a matter of fact, the well-known linear quadratic optimal control problem is generally formulated for time-varying linear systems. We point out that, with HOFA approaches, problems related to time-varying linear systems generally do not arise, since the time-varying terms in the system may be included in the nonlinear term of the HOFA system and hence cancelled by using the full-actuation property.

4. The infinite-time case

4.1. The problem

Let us look into the case of infinite-time output regulation, that is, the case of and

Now the final-time term in the objective

given in (7) vanishes, and the objective

becomes

(28)

where

is a semi-positive definite matrix, while

is a positive definite matrix.

Particularly, when the infinite-time state regulation problem is considered, that is, when or

the above index can be simply written as

(29)

bearing in mind that the matrix R is required to be symmetric positive definite.

Corresponding to Problem 2.1, now the infinite-time optimal output regulation problem can be stated as follows.

Problem 4.1

Given the HOFA system (3) with the output Equation (5), a semi-positive definite matrix

and a positive definite matrix

, find for the system a feedback controller in the form of (8), with

(30)

such that the index

given by (28) is minimised.

Remark 4.1

For the infinite-time optimal control problem, tracking an arbitrary signal , as in the finite-time optimal control problem formulated in Section 3, is generally not realisable. However, for a certain signal

generated by a proper dynamical system, the infinite-time tracking problem can indeed be well formulated and solved (see, e.g. Anderson and Moore (1990)).

4.2. The solution

To derive the solution to the above Problem 4.1, the following well-known result is needed (see, e.g. Lemma 8.3.1 and Theorem 8.3.2 in Duan (2016)).

Lemma 4.2

Let ,

, and

be controllable. Further let R>0, and

be observable. Then

(1) the particular solution

(2) this limit matrix P is the unique positive definite solution to the following Riccati algebraic equation

(3) the following matrix

Due to the above lemma, we introduce the following assumption:

Assumption A2

The matrix pair is observable, where

.

Since , are all controllable, it is easy to see that

is also controllable. Thus it follows from the above Lemma 4.2 and Assumption A1 that the particular solution

to the Riccati differential Equation (20) satisfying the final condition

converges, as

, to the unique solution of the following Riccati algebraic equation:

(32)

According to optimal control theory, for the linear system (14) the optimal controller which minimises the index

(33)

is given by

and the corresponding closed-loop system is

(34)

which is asymptotically stable according to the above Lemma 4.2.
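The algebraic Riccati equation can be solved in closed form for the smallest interesting instance of a linearised subsystem, a double integrator x'' = v. The Python sketch below assumes a diagonal weight Q = diag(q1, q2), a scalar weight r on v and no cross-weighting between state and input, which is a simplification of ours and not necessarily the structure of the index (33). The function names are hypothetical.

```python
import math


def double_integrator_lqr(q1, q2, r):
    """Solve A'P + PA - P B B' P / r + Q = 0 for A = [[0, 1], [0, 0]],
    B = [0, 1]', Q = diag(q1, q2), with q1 > 0, q2 >= 0 and r > 0.

    Returns the entries (p11, p12, p22) of the positive definite solution
    and the state feedback gain (k1, k2) of v = -k1 * x - k2 * x'.
    """
    p12 = math.sqrt(q1 * r)
    p22 = math.sqrt(r * (q2 + 2.0 * p12))
    p11 = p12 * p22 / r
    gain = (p12 / r, p22 / r)
    return (p11, p12, p22), gain


def are_residual(p, q1, q2, r):
    """Entries (1,1), (1,2), (2,2) of the Riccati residual for the same data."""
    p11, p12, p22 = p
    return (
        q1 - p12 * p12 / r,
        p11 - p12 * p22 / r,
        2.0 * p12 + q2 - p22 * p22 / r,
    )
```

Both gains are positive whenever q1 > 0, so the closed-loop polynomial s² + k2 s + k1 is Hurwitz, in line with the stability statement above. For larger chains of integrators a numerical solver would normally be used instead of hand-derived formulas.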

Based on the above analysis, it is easy to derive the following theorem about the solution to Problem 4.1.

Theorem 4.3

Let Assumptions A1 and A2 be met, and be semi-positive definite, and

be positive definite. Then, for the system (3) with the output Equation (5), a feedback controller in the form of (8), which minimises the index

is given by

(35)

where

is the unique solution to the algebraic Riccati Equation (32). Furthermore, the closed-loop system (34) is linear and asymptotically stable, and the minimum index is given by

(36)

Regarding the above result, we make the following remark.

Remark 4.2

Due to the above Theorem 4.3, the optimal controller (35) may be viewed as a stabilising controller for the system (3). However, different from a general stabilising controller for the system, the controller (35) also assures that the state vector

of the closed-loop system goes to zero with a smooth and steady transient performance, and, particularly,

converges to zero monotonically.

Remark 4.3

Generally speaking, it is hard to achieve global stabilisation (including infinite-time optimal control) of nonlinear systems. However, as demonstrated by the above Theorem 4.3, once a nonlinear system is represented in a HOFA system form, global stabilisation can be easily realised. What is more, the closed-loop system is even linear (which obviously implies global exponential stabilisation). This fact again demonstrates the great advantage of the HOFA design approaches.

5. The sub-fully actuated case

Firstly, let us recall the concept of singular points of sub-fully actuated systems, introduced in Duan (2020j).

5.1. Set of singular points

For a sub-fully actuated system in the form of (1), the following concept is essential.

Definition 5.1

If satisfies

(37)

then it is called a singular point of system (1) at time t.

Let be the set of all singular points of system (1) at time t, that is,

Then we call, particularly,

or, equivalently,

the set of singular initial value points of system (1). Furthermore, the following set

is called the set of singular points of system (1). Clearly, for a HOFA system (1) satisfying Assumption A1, there holds

Let us define

then

is called the set of feasible points of system (1). Similarly, the following sets

and

are called the set of feasible points of system (1) at time t, and the set of feasible initial value points of system (1), respectively. In general, the system (1) is called a sub-fully actuated system if

is a set with dimension not less than 1 (Duan, 2020j).

5.2. Optimal output regulation

With the above preparation, we can now solve the optimal control problem of sub-fully actuated systems. For simplicity, let us again illustrate the idea with the output regulation problem, but with Assumption A1 replaced with the following one.

Assumption A3

The set of singular points, meets the following two conditions:

it does not depend on time t, that is,

it does not contain the origin, that is,

Clearly, the above is the distance of the set

from the origin. As a consequence of the above Assumption A3, we also have

With the above preparation, the optimal control problem to be solved can be now stated as follows.

Problem 5.1

Let the HOFA system (3), with the output Equation (5), satisfy Assumptions A2 and A3, and

be a semi-positive definite matrix, and

a positive definite one. Find a feedback controller in the form of (8) and (30), such that

(1) the index

(2) the following feasibility condition is met:

To solve the above problem, we need to present the following important lemma about stabilisation of sub-fully actuated systems.

Lemma 5.2

Let Assumption A3 be met. Further, let be a matrix making

Hurwitz, and

be a positive definite matrix satisfying the Lyapunov matrix equation

where

and

is observable. Then,

(1) the following feedback controller

(2) if, further, the initial values are chosen to satisfy

Proof.

Under the given conditions, the first conclusion can be easily proven, so we need only show that the feasibility requirement (38) is met when condition (40) holds.

First of all, since it follows from (40) that

(41)

Thus we have

(42)

Next, let us define

then, following a typical treatment in stability analysis of linear systems, we can prove that

Therefore,

that is,

(43)

From this we obtain, for

,

which further gives, together with condition (40), the following

This implies the relation (38). The proof is then completed.
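For orientation, the standard Lyapunov level-set bound that underlies arguments of this kind can be written out as follows. This is a sketch in our own notation (V for the Lyapunov function, Q for the right-hand side weight of the Lyapunov matrix equation, x for the closed-loop state and d for the distance of the singular set from the origin); it is not a transcription of (41)–(43), and the exact form of condition (40) may differ.

```latex
\begin{aligned}
V(x) &= x^{\mathrm T} P x, \qquad
\dot V\bigl(x(t)\bigr) = -x^{\mathrm T}(t)\, Q\, x(t) \le 0, \\
\lambda_{\min}(P)\,\|x(t)\|^{2} &\le V\bigl(x(t)\bigr) \le V\bigl(x(0)\bigr)
\le \lambda_{\max}(P)\,\|x(0)\|^{2}, \\
\|x(t)\| &\le \sqrt{\frac{\lambda_{\max}(P)}{\lambda_{\min}(P)}}\;\|x(0)\|
= \sqrt{\operatorname{cond}(P)}\;\|x(0)\|, \qquad t \ge 0 .
\end{aligned}
```

Under these assumptions, choosing the initial state with norm smaller than d divided by the square root of cond(P) keeps the whole trajectory inside the open ball of radius d, so it cannot reach a singular point.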

Based on Theorem 4.3 and the above lemma, a solution to the above Problem 5.1 can be given as follows.

Theorem 5.3

Let Assumptions A2 and A3 be met, and be semi-positive definite, and

be positive definite. Further, let the feedback controller in the form of (8) for the system (3) be given by (35), with

being the unique solution to the algebraic Riccati Equation (32). If, further, the initial values are chosen to satisfy

(44)

then the two requirements in Problem 5.1 are both met.

Proof.

Denote

(45)

(46)

then, by Theorem 4.3,

is Hurwitz. Further note

it is clear that the Riccati Equation (32) is equivalent to the following Lyapunov matrix equation

(47)

with

(48)

Due to Assumption A2, we have

and

is observable. Further let

we can write

(49)

Remembering that

is observable, by the well-known PBH criterion we have

Using this relation, we immediately have

(50)

that is, the matrix pair

is also observable, again by the PBH criterion. Therefore, the conclusion of the theorem can now be easily drawn by combining Theorem 4.3 and Lemma 5.2.
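A ball restriction on the initial values of the kind used here can be evaluated numerically once the Riccati solution is known. The Python sketch below assumes a radius of the form d / sqrt(cond(P)), where d is the distance of the set of singular points from the origin and P is a 2×2 symmetric positive definite matrix; this form follows from a standard Lyapunov bound and is our assumption, since the exact expression in condition (44) is not reproduced here. The names `sym2_eigs` and `feasible_radius` are hypothetical.

```python
import math


def sym2_eigs(p11, p12, p22):
    """Eigenvalues (min, max) of the symmetric matrix [[p11, p12], [p12, p22]]."""
    mean = 0.5 * (p11 + p22)
    dev = math.hypot(0.5 * (p11 - p22), p12)
    return mean - dev, mean + dev


def feasible_radius(p11, p12, p22, d):
    """Radius d / sqrt(cond(P)) of a ball of admissible initial states.

    Assumes P is positive definite, so cond(P) = lambda_max / lambda_min.
    """
    lam_min, lam_max = sym2_eigs(p11, p12, p22)
    if lam_min <= 0.0:
        raise ValueError("P must be positive definite")
    return d / math.sqrt(lam_max / lam_min)
```

The radius shrinks as P becomes worse conditioned, which gives one way to see why such a ball can be a conservative estimate of the set of feasible initial values.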

5.3. Further discussions

This section further looks into two circumstances.

5.3.1. Case of the set of singular points containing the origin

For simplicity, let us consider the following time-invariant HOFA system:

(51)

By definition, its set of singular points is

When the above set of singular points contains the origin, we can introduce a state transformation

(52)

where

is a constant vector. Under this transformation, the system (51) is converted into

(53)

Often, with a proper choice of the constant vector q, the set of singular points of the new system (53), namely,

does not contain the origin. Hence Assumption A3 holds for the system (53). Therefore, the optimal control of system (51) can then be solved by applying the above Theorem 5.3 to the transformed system (53).

5.3.2. Decoupled designs

It is easily recognised from the proof of Theorem 5.3 that the subset of the feasible initial values given by Theorem 5.3, that is, the set of points satisfying condition (44), may be conservative. In certain cases, e.g. when

is dependent on only some, but not all,

's and their derivatives, less conservative initial value ranges can be provided.

For convenience, let us simply assume that is dependent on only

that is, there exists a scalar function

such that

In this case, instead of converting (51) into a whole system (14), we can convert (51) into two subsystems in state–space forms, as

(54)

and

(55)

where

Now, in Step III of Section 3, we can apply the linear quadratic regulation technique to the systems (54) and (55) separately, as treated in the proof of Theorem 3.1. As a consequence, the initial values of the system (55) can be freely chosen, while only the initial values of the system (54) need to be properly restricted to ensure the feasibility of the controller (8), as treated in the proof of the above Theorem 5.3. In this way, a much less conservative subset of feasible initial values can be provided. The next section demonstrates this idea with an application to spacecraft attitude control.

6. Spacecraft attitude control

6.1. The system model

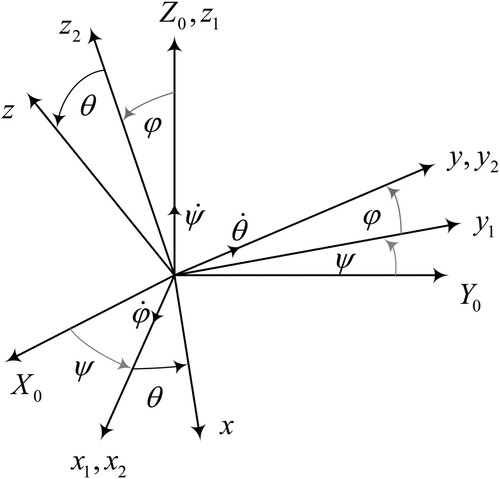

Consider the modelling of the attitude system of a spacecraft. The coordinate system on the spacecraft is shown in Figure 1. The origin of the coordinate system is taken to be the centre of the spacecraft, the z-axis points from the centre of the spacecraft to the centre of the earth, the x-axis points along the flight direction of the spacecraft, and the y-axis completes the right-handed coordinate system.

Denote by ψ the yaw angle, φ the roll angle, and ϑ the pitch angle, and put

(56)

then, when the order of rotation of the coordinate system is

, the dynamical model for the spacecraft attitude system can be written in the following second-order matrix form (Duan, 2014):

(57)

where u is the torque vector, and

(58)

the functions

and

are given in the appendix. Thus the system can be rewritten in the following standard HOFA system form:

(59)

where

(60)

(61)

Note that

the above system (59)–(61) has the following set of singular points:

(62)

Clearly, the distance of

from the origin is

6.2. Optimal control design

The design objective is to stabilise the HOFA system (59)–(61) with a nonlinear controller in the form of (8), with the particular intention of keeping the spacecraft moving smoothly and steadily. Theoretically, this requires

and also

to be all as small as possible, and a proper choice of the index in the form of

in (28) will adequately meet this need. Therefore, the optimal control problem for the system (59)–(61) can be well established and solved by applying Theorem 5.3.

However, in order to obtain a larger feasible set of initial values to cope with the singularity in the system, we here adopt the decoupled design idea illustrated in Subsection 5.3.2, and minimise separately the following two indices:

(63)

(64)

where

is positive definite, while

and

are positive semi-definite.

With the following controller

the system is turned into two separate linear subsystems:

(65)

and

(66)

Their state-space forms are, respectively,

(67)

and

(68)

where

(69)

Therefore, the controller finally designed for the system is

(70)

where

(71)

with

satisfying the Riccati equation

(72)

and

(73)

with

given by

(74)

and

(75)

The closed-loop system is composed of

(76)

and

(77)

In order to meet the feasibility of the system (59)–(61), by Theorem 5.3 it is sufficient to require the following initial value restriction:

(78)
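As a minimal illustration of the Riccati-based gain computation used in this design, the following Python sketch solves the algebraic Riccati equation in closed form for a single double-integrator channel ẍ = v with a standard quadratic cost. The scalar channel, the weights `q_pos`, `q_vel` and `r`, and the function names are assumptions introduced here for illustration only; they are not the matrices in (69)–(75), and the index used in this paper may also weight higher-order derivatives.

```python
import math

def double_integrator_lqr(q_pos, q_vel, r):
    """Closed-form LQR for x'' = v with cost int(q_pos*x^2 + q_vel*x'^2 + r*v^2) dt.

    Returns (P, K), where P solves A'P + PA - P B B' P / r + Q = 0 for
    A = [[0, 1], [0, 0]], B = [0, 1]', Q = diag(q_pos, q_vel), and K = B'P / r.
    Assumes q_pos > 0, q_vel >= 0 and r > 0.
    """
    p12 = math.sqrt(q_pos * r)
    p22 = math.sqrt(r * (q_vel + 2.0 * p12))
    p11 = p12 * p22 / r
    P = [[p11, p12], [p12, p22]]
    K = [p12 / r, p22 / r]
    return P, K

def riccati_residual(P, q_pos, q_vel, r):
    """Independent entries of A'P + PA - P B B' P / r + Q (all should be ~0)."""
    p11, p12, p22 = P[0][0], P[0][1], P[1][1]
    return [
        q_pos - p12 * p12 / r,              # (1,1) entry
        p11 - p12 * p22 / r,                # (1,2) entry
        q_vel + 2.0 * p12 - p22 * p22 / r,  # (2,2) entry
    ]

# Hypothetical weights: penalise position and control equally, no rate penalty.
P, K = double_integrator_lqr(q_pos=1.0, q_vel=0.0, r=1.0)
residual = riccati_residual(P, 1.0, 0.0, 1.0)
print(K, residual)
```

For these assumed weights the gain is K = [1, √2], the familiar optimal state feedback v = −x − √2 ẋ for a double integrator.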

6.3. Simulation results

Consider a spacecraft with the following moments of inertia (Gao et al., 2013):

(79)

The weighting matrices

,

and

are chosen to be

(80)

where γ is a positive parameter.

According to (73) and (74), the matrices

and

are solved as

(81)

and

(82)

respectively. In such a case, we have

(83)

and hence, according to (78), the initial values of

need to satisfy

(84)
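A restriction of this kind can be motivated by a standard Lyapunov argument: if V = xᵀPx is non-increasing along the closed loop, then ‖x(t)‖ ≤ sqrt(λmax(P)/λmin(P)) ‖x(0)‖ for all t ≥ 0. The Python sketch below computes the resulting radius of admissible initial values for a symmetric 2×2 matrix. The matrix `P`, the distance `math.pi / 2` to the singular set and the function names are hypothetical values chosen for illustration, and the bound may differ from the exact condition (78) given by Theorem 5.3.

```python
import math

def sym2_eigs(P):
    """Return (lambda_min, lambda_max) of a symmetric 2x2 matrix P."""
    a, b, d = P[0][0], P[0][1], P[1][1]
    mean = 0.5 * (a + d)
    radius = math.hypot(0.5 * (a - d), b)
    return mean - radius, mean + radius

def initial_ball_radius(P, dist_to_singular):
    """Radius rho such that ||x(0)|| < rho implies ||x(t)|| < dist_to_singular.

    Relies on the assumption that V = x'Px does not increase along the
    closed loop, so that lambda_min*||x(t)||^2 <= V(t) <= lambda_max*||x(0)||^2.
    """
    lam_min, lam_max = sym2_eigs(P)
    if lam_min <= 0.0:
        raise ValueError("P must be positive definite")
    return dist_to_singular * math.sqrt(lam_min / lam_max)

# Hypothetical Riccati solution of a double integrator with unit weights
# on position and control, and an assumed distance pi/2 to the singular set.
P = [[math.sqrt(2.0), 1.0], [1.0, math.sqrt(2.0)]]
rho = initial_ball_radius(P, math.pi / 2)
print(rho)
```

The bound is conservative, since it restricts the whole state vector rather than only the attitude angles that enter the singularity condition.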

6.3.1. Attitude stabilisation

Choose , we can obtain

(85)

(85) hence the feedback gain can be obtained as

(86)

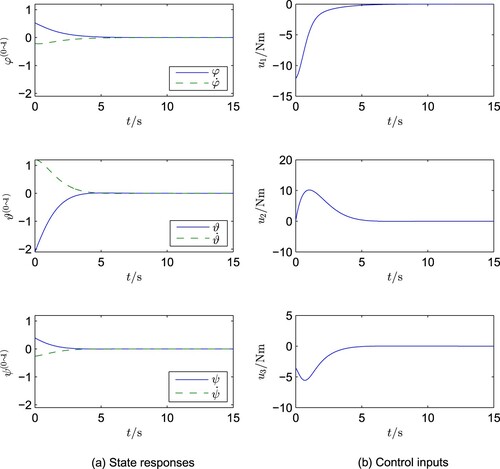

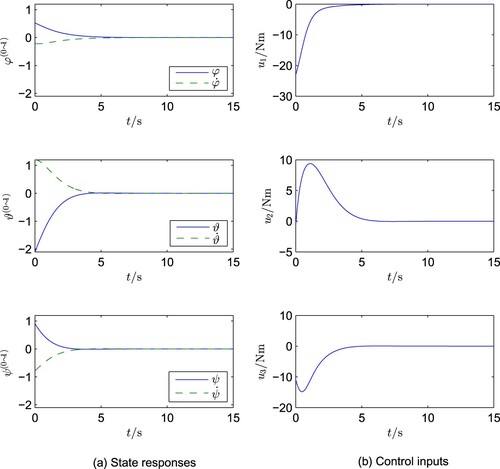

(86) Case A1: When the initial values are taken as

(87)

(87)

(88)

(88) and

(89)

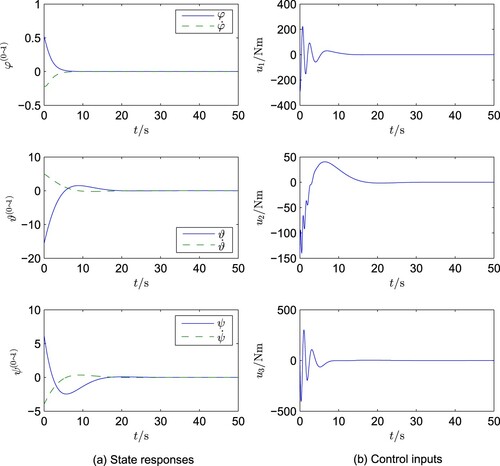

(89) the simulation of the designed control system has been carried out and the results are shown in Figure .

Case A2: When only the initial values in (Equation89(89)

(89) ) is replaced to

(90)

(90) while keeping the other ones unchanged as in Case A1, the simulation results are shown in Figure .

The simulation results clearly indicate the following:

- as desired, in both cases the control technique provides very smooth and steady system responses, and

- a slight change in the initial values causes a big change in the corresponding control input.

The second phenomenon above reflects the difference between nonlinear and linear systems. The reason lies in the fact that, although the two closed-loop linear subsystems are decoupled, all the control inputs are still closely coupled via the function.
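The mechanism can be seen on a toy scalar example. The Python sketch below assumes a hypothetical sub-fully actuated system ẍ = f(x, ẋ) + b(x)u with f = −sin x and b = cos x, which is singular at x = ±π/2, together with a linearising controller u = (v − f)/b and v = −k₁x − k₂ẋ. None of these functions or gains comes from the spacecraft model; they only illustrate how the closed loop stays linear while the control input depends nonlinearly on the initial value.

```python
import math

def f(x, xdot):
    """Hypothetical drift term of the toy model."""
    return -math.sin(x)

def b(x):
    """Hypothetical input gain; it vanishes at x = +-pi/2 (singular points)."""
    return math.cos(x)

def control(x, xdot, k1=1.0, k2=math.sqrt(2.0)):
    """Linearising controller u = (v - f) / b with v = -k1*x - k2*xdot."""
    gain = b(x)
    if abs(gain) < 1e-9:
        raise ValueError("controller is not feasible at a singular point")
    v = -k1 * x - k2 * xdot
    return (v - f(x, xdot)) / gain

# Two nearby initial positions, both at rest, approaching the singular point.
u_a = control(1.4, 0.0)
u_b = control(1.5, 0.0)
print(u_a, u_b)
```

Both initial values lead to the same linear closed loop ẍ = v, yet the initial control at x(0) = 1.5 is roughly three times that at x(0) = 1.4, because the input gain b(x) shrinks as the singular point is approached.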

6.3.2. Rapid turning elimination

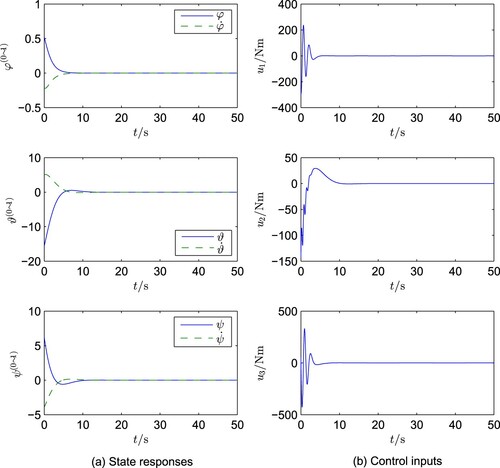

In this section, we test the turning elimination control of the spacecraft. Let us assume that the spacecraft is turning in the ϑ and ψ directions at very high rates. Specifically, this is reflected in the following choice of the initial values:

(91)

(92)

and

(93)

With such large initial values, it is impractical to stop the turning of the spacecraft within too short a time. Therefore, we decrease the weighting matrix

in (80) by reducing the factor γ.

Case B1. In the case of we can obtain

(94)

and the simulation results are shown in Figure .

Case B2. In the case of we can obtain

(95)

and the corresponding simulation results are shown in Figure .

It is clearly seen from Figures and the following:

Very effective rapid turning elimination is achieved in both Cases B1 and B2, with a smooth transient process. In particular, the turning in Case B1 is stopped in approximately 10 seconds, and that in Case B2 in approximately 25 seconds.

In both cases, the control amplitudes required are still in a reasonable range. The control amplitude can be reduced accordingly if the time required to stop the turning is relaxed.
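The trade-off between stopping time and control amplitude can be reproduced qualitatively on a single double-integrator channel. The following Python sketch uses the closed-form LQR gain for ẍ = v with state weight γI and unit input weight, and simulates the closed loop from a unit initial rate. The scalar channel, the values of `gamma`, the 2% settling threshold and all function names are assumptions for illustration and do not correspond to Cases B1 and B2.

```python
import math

def lqr_gain(gamma, r=1.0):
    """Closed-form LQR gain for x'' = v with Q = gamma*I and input weight r."""
    p12 = math.sqrt(gamma * r)
    p22 = math.sqrt(r * (gamma + 2.0 * p12))
    return p12 / r, p22 / r

def simulate(gamma, rate0=1.0, dt=0.01, t_end=40.0):
    """RK4 simulation of x'' = -k1*x - k2*x' from x(0) = 0, x'(0) = rate0.

    Returns (settling_time, peak_control), where settling_time is the last
    sampled instant at which |x'| exceeds 2% of |rate0|.
    """
    k1, k2 = lqr_gain(gamma)

    def deriv(x, v):
        return v, -k1 * x - k2 * v

    x, v, t = 0.0, rate0, 0.0
    settle, peak = 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        peak = max(peak, abs(k1 * x + k2 * v))
        if abs(v) > 0.02 * abs(rate0):
            settle = t
        a1, b1 = deriv(x, v)
        a2, b2 = deriv(x + 0.5 * dt * a1, v + 0.5 * dt * b1)
        a3, b3 = deriv(x + 0.5 * dt * a2, v + 0.5 * dt * b2)
        a4, b4 = deriv(x + dt * a3, v + dt * b3)
        x += dt * (a1 + 2.0 * a2 + 2.0 * a3 + a4) / 6.0
        v += dt * (b1 + 2.0 * b2 + 2.0 * b3 + b4) / 6.0
        t += dt
    return settle, peak

# Two hypothetical weighting factors: a larger and a smaller state weight.
settle_hi, peak_hi = simulate(gamma=1.0)
settle_lo, peak_lo = simulate(gamma=0.1)
print(settle_hi, peak_hi, settle_lo, peak_lo)
```

With these assumed values, the smaller γ gives the longer settling time and the smaller peak control, which is the same qualitative behaviour as described above.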

7. Conclusion

Apart from the well-known linear quadratic optimal control technique, general nonlinear optimal control in the state-space framework remains a major open problem in the field of systems and control. Theoretical results are difficult to apply in practice because of the nonlinear equations involved.

Parallel to the state-space approaches, HOFA approaches have recently been proposed and demonstrated to be much more effective in dealing with system control problems (Duan, 2020a, 2020b, 2020c, 2020d, 2020e, 2020f, 2020g, 2020h, 2020i, 2020j). It is further shown in this paper that, with the proposed HOFA approach, a type of general nonlinear optimal control problem can be well formulated and solved.

The problem is formulated to minimise an index in a quadratic form of the state and its derivatives of certain orders. It is shown that, with the help of the full-actuation feature of the HOFA models, the problem is linked to an optimal control problem of a linear system. Therefore, a nonlinear state feedback optimal controller can be easily obtained based on the solution to a Riccati differential or algebraic equation.

It is also shown that the formulation can be conveniently generalised to the case of sub-fully actuated systems. It turns out that the feasibility of the controller can be met by only restricting the system initial values to be within a proper ball area.

Spacecraft attitude systems are highly nonlinear ones when the attitude angles are not restricted to vary within very small ranges. Therefore, spacecraft attitude control with smooth and steady transient processes and accurate attitude manoeuvring has always remained a very hard problem. The proposed approach has been demonstrated to provide a very effective and simple way to handle such nonlinear spacecraft attitude control problems.

The results in this paper can be extended to other types of systems, e.g. discrete-time systems and uncertain systems. In addition, how to reduce the control magnitude in nonlinear optimal control certainly remains a very difficult problem.

Acknowledgments

The author is grateful to his Ph.D. students Guangtai Tian, Qin Zhao, Xiubo Wang, Weizhen Liu, Kaixin Cui, and Liyao Hu, and also his visiting scholar, Prof. Yang Cui, for helping him with reference selection and proofreading. His particular thanks go to his students Bin Zhou and Tianyi Zhao for their useful suggestions and help with the simulations, respectively.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Guangren Duan

Guangren Duan received his Ph.D. degree in Control Systems Sciences from Harbin Institute of Technology, Harbin, P. R. China, in 1989. After two years of post-doctoral experience at the same university, he became professor of control systems theory there in 1991. He is the founder and currently the director of the Center for Control Theory and Guidance Technology at Harbin Institute of Technology. He visited the University of Hull, the University of Sheffield, and also the Queen's University of Belfast, UK, from December 1996 to October 2002, and has served as Member of the Science and Technology Committee of the Chinese Ministry of Education, Vice President of the Control Theory and Applications Committee, Chinese Association of Automation (CAA), and Associate Editor of several international journals. He is currently an academician of the Chinese Academy of Sciences, and a fellow of CAA, IEEE and IET. His main research interests include parametric control systems design, nonlinear systems, descriptor systems, spacecraft control and magnetic bearing control. He is the author or co-author of 5 books and over 270 SCI indexed publications.

References

- Abo-Sinna, M. A. (2004). Multiple objective (fuzzy) dynamic programming problems: A survey and some applications. Applied Mathematics and Computation, 157(3), 861–888. https://doi.org/10.1016/j.amc.2003.08.083

- Agrawal, O. P. (2006). A formulation and a numerical scheme for fractional optimal control problems. IFAC Proceedings Volumes, 39(11), 68–72. https://doi.org/10.3182/20060719-3-PT-4902.00011

- Anderson, B. D. O., & Moore, J. B. (1990). Optimal control: Linear quadratic methods. Prentice Hall.

- Arutyunov, A. V., Karamzin, D. Y., & Pereira, F. (2012). Pontryagin's maximum principle for constrained impulsive control problems. Nonlinear Analysis: Theory, Methods and Applications, 75(3), 1045–1057. https://doi.org/10.1016/j.na.2011.04.047

- Ball, J. A., & Helton, J. W. (1990). Nonlinear H∞ control theory: A literature survey. In Robust Control of Linear Systems and Nonlinear Control (pp. 1–12). Birkhäuser.

- Bellman, R. (1952). On the theory of dynamic programming. Proceedings of the National Academy of Sciences of the United States of America, 38(8), 716–719. https://doi.org/10.1073/pnas.38.8.716

- Bellman, R., & Kalaba, R. (1957). On the role of dynamic programming in statistical communication theory. IRE Transactions on Information Theory, 3(3), 197–203. https://doi.org/10.1109/TIT.1957.1057416

- Bellman, R., & Kalaba, R. (1960). Dynamic programming and adaptive processes: Mathematical foundation. IRE Transactions on Automatic Control, 5(1), 5–10. https://doi.org/10.1109/TAC.1960.6429288

- Bender, D. J., & Laub, A. J. (1987a). The linear-quadratic optimal regulator for descriptor systems. IEEE Transactions on Automatic Control, 32(8), 672–688. https://doi.org/10.1109/TAC.1987.1104694

- Bender, D. J., & Laub, A. J. (1987b). The linear-quadratic optimal regulator for descriptor systems: Discrete-time case. Automatica, 23(1), 71–85. https://doi.org/10.1016/0005-1098(87)90119-1

- Bertsekas, D. P., & Tsitsiklis, J. N. (1995, December). Neuro-dynamic programming: An overview. Proceedings of 1995 34th IEEE Conference on Decision and Control (pp. 560–564). IEEE.

- Bokov, G. V. (2011). Pontryagin's maximum principle of optimal control problems with time-delay. Journal of Mathematical Sciences, 172(5), 623–634. https://doi.org/10.1007/s10958-011-0208-y

- Chen, S., Li, X., & Zhou, X. Y. (1998). Stochastic linear quadratic regulators with indefinite control weight costs. SIAM Journal on Control and Optimization, 36(5), 1685–1702. https://doi.org/10.1137/S0363012996310478

- Chmielewski, D., & Manousiouthakis, V. (1996). On constrained infinite-time linear quadratic optimal control. Systems & Control Letters, 29(3), 121–129. https://doi.org/10.1016/S0167-6911(96)00057-6

- Çimen, T. (2008). State-dependent Riccati equation (SDRE) control: A survey. IFAC Proceedings Volumes, 41(2), 3761–3775. https://doi.org/10.3182/20080706-5-KR-1001.00635

- Çimen, T. (2012). Survey of state-dependent Riccati equation in nonlinear optimal feedback control synthesis. Journal of Guidance, Control, and Dynamics, 35(4), 1025–1047. https://doi.org/10.2514/1.55821

- Dmitruk, A. V., & Kaganovich, A. M. (2008). The hybrid maximum principle is a consequence of Pontryagin maximum principle. Systems & Control Letters, 57(11), 964–970. https://doi.org/10.1016/j.sysconle.2008.05.006

- Doyle, J., Glover, K., Khargonekar, P., & Francis, B. A. (1988). State-space solutions to standard H2 and H∞ control problems. In 1988 American control conference (pp. 1691–1696).

- Duan, G. R. (2014, July). Satellite attitude control–a direct parametric approach. Proceeding of the 11th World Congress on intelligent control and automation (pp. 3989–3996). IEEE.

- Duan, G. R. (2016). Linear system theory (Volume II) (3rd ed). The Science Press.

- Duan, G. R. (2020a). High-order system approaches: I. Full-actuation and parametric design. Acta Automatica Sinica, 46(7), 1333–1345. https://doi.org/10.16383/j.aas.c200234 (in Chinese)

- Duan, G. R. (2020b). High-order system approaches: II. Controllability and fully-actuation. Acta Automatica Sinica, 46(8), 1571–1581. https://doi.org/10.16383/j.aas.c200369 (in Chinese)

- Duan, G. R. (2020c). High-order system approaches: III. Observability and observer design. Acta Automatica Sinica, 46(9), 1885–1895. https://doi.org/10.16383/j.aas.c200370 (in Chinese)

- Duan, G. R. (2020d). High-order fully actuated system approaches: Part I. Models and basic procedure. International Journal of Systems Science, 52(2), 422–435. https://doi.org/10.1080/00207721.2020.1829167

- Duan, G. R. (2020e). High-order fully actuated system approaches: Part II. Generalized strict-feedback systems. International Journal of Systems Science, 52(3), 437–454. https://doi.org/10.1080/00207721.2020.1829168

- Duan, G. R. (2020f). High-order fully actuated system approaches: Part III. Robust control and high-order backstepping. International Journal of Systems Science, 52(5), 952–971. https://doi.org/10.1080/00207721.2020.1849863

- Duan, G. R. (2020g). High-order fully actuated system approaches: Part IV. Adaptive control and high-order backstepping. International Journal of Systems Science, 52(5), 972–989. https://doi.org/10.1080/00207721.2020.1849864

- Duan, G. R. (2020h). High-order fully actuated system approaches: Part V. Robust adaptive control. International Journal of Systems Science. https://doi.org/10.1080/00207721.2021.1879964

- Duan, G. R. (2020i). High-order fully actuated system approaches: Part VI. Disturbance attenuation and decoupling. International Journal of Systems Science. https://doi.org/10.1080/00207721.2021.1879966

- Duan, G. R. (2020j). High-order fully actuated system approaches: Part VII. Controllability, stabilizability and parametric designs. International Journal of Systems Science. https://doi.org/10.1080/00207721.2021.1921307

- Findeisen, R., Imsland, L., Allgöwer, F., & Foss, B. A. (2003). State and output feedback nonlinear model predictive control: An overview. European Journal of Control, 9(2–3), 190–206. https://doi.org/10.3166/ejc.9.190-206

- Gao, C. Y., Zhao, Q., & Duan, G. R. (2013). Robust actuator fault diagnosis scheme for satellite attitude control systems. Journal of the Franklin Institute, 350(9), 2560–2580. https://doi.org/10.1016/j.jfranklin.2013.02.021

- Garcia, C. E., Prett, D. M., & Morari, M. (1989). Model predictive control: Theory and practice – A survey. Automatica, 25(3), 335–348. https://doi.org/10.1016/0005-1098(89)90002-2

- Hwang, C. L., & Fan, L. T. (1967). A discrete version of Pontryagin's maximum principle. Operations Research, 15(1), 139–146. https://doi.org/10.1287/opre.15.1.139

- Kalman, R. E. (1960). Contributions to the theory of optimal control. Boletín De La Sociedad Matemática Mexicana, 5(2), 102–119. https://doi.org/10.1109/9780470544334.ch8

- Laschov, D., & Margaliot, M. (2011a). A maximum principle for single-input Boolean control networks. IEEE Transactions on Automatic Control, 56(4), 913–917. https://doi.org/10.1109/TAC.2010.2101430

- Laschov, D., & Margaliot, M. (2011b). A Pontryagin maximum principle for multi-input Boolean control networks. In Recent advances in dynamics and control of neural networks. Cambridge Scientific Publishers.

- Lee, J. H. (2011). Model predictive control: Review of the three decades of development. International Journal of Control, Automation and Systems, 9(3), 415–424. https://doi.org/10.1007/s12555-011-0300-6

- Lewis, F. L., & Vrabie, D. (2009). Reinforcement learning and adaptive dynamic programming for feedback control. IEEE Circuits and Systems Magazine, 9(3), 32–50. https://doi.org/10.1109/MCAS.7384

- Li, Y., & Chen, Y. Q. (2008, October). Fractional order linear quadratic regulator. 2008 IEEE/ASME international conference on mechatronic and embedded systems and applications (pp. 363–368). IEEE. https://doi.org/10.1109/MESA.2008.4735696

- Li, W., & Todorov, E. (2004, August). Iterative linear quadratic regulator design for nonlinear biological movement systems. ICINCO 2004 Proceedings of the first international conference on informatics in control, automation and robotics, Setúbal, Portugal (pp. 222–229). Springer.

- Mayne, D. Q. (2014). Model predictive control: Recent developments and future promise. Automatica, 50(12), 2967–2986. https://doi.org/10.1016/j.automatica.2014.10.128

- Nekoo, S. R. (2019). Tutorial and review on the state-dependent Riccati equation. Journal of Applied Nonlinear Dynamics, 8(2), 109–166. https://doi.org/10.5890/JAND.2019.06.001

- Pontryagin, L. S., Boltyanskii, V. G., Gamkrelidze, R. V., & Mishchenko, E. F. (1962). The mathematical theory of optimal processes. John Wiley and Sons.

- Rami, M. A., Chen, X., & Zhou, X. Y. (2002). Discrete-time indefinite LQ control with state and control dependent noises. Journal of Global Optimization, 23(3-4), 245–265. https://doi.org/10.1023/A:1016578629272

- Rozonoer, L. I. (1959). The maximum principle of LS Pontryagin in optimal system theory. Automation and Remote Control, 20(10), 11.

- Scokaert, P. O. M., & Rawlings, J. B. (1998). Constrained linear quadratic regulation. IEEE Transactions on Automatic Control, 43(8), 1163–1169. https://doi.org/10.1109/9.704994

- Seierstad, A. (1975). An extension to Banach space of Pontryagin's maximum principle. Journal of Optimization Theory and Applications, 17(3-4), 293–335. https://doi.org/10.1007/BF00933882

- Sussmann, H. J. (1999, December). A maximum principle for hybrid optimal control problems. Proceedings of the 38th IEEE conference on decision and control (pp. 425–430). IEEE. https://doi.org/10.1109/CDC.1999.832814

- Terra, M. H., Cerri, J. P., & Ishihara, J. Y. (2014). Optimal robust linear quadratic regulator for systems subject to uncertainties. IEEE Transactions on Automatic Control, 59(9), 2586–2591. https://doi.org/10.1109/TAC.2014.2309282

- Tsitsiklis, J. N., & Van Roy, B. (1997). An analysis of temporal-difference learning with function approximation. IEEE Transactions on Automatic Control, 42(5), 674–690. https://doi.org/10.1109/9.580874

- Wang, L., Peng, H., Zhu, H., & Shen, L. (2009, August). A survey of approximate dynamic programming. 2009 international conference on intelligent human-machine systems and cybernetics, Hangzhou, China (pp. 396–399). IEEE.

- Werbos, P. J. (1977). Advanced forecasting methods for global crisis warning and models of intelligence. General System Yearbook, 22, 25–38.

- Werbos, P. J. (1989, August). Neural networks for control and system identification. Proceedings of the 28th IEEE conference on decision and control, Tampa, FL, USA (pp. 260–265). IEEE.

- Xie, L., & de Souza, C. E. (1990). Robust H∞ control for linear time-invariant systems with norm-bounded uncertainty in the input matrix. Systems & Control Letters, 14(5), 389–396. https://doi.org/10.1016/0167-6911(90)90088-C

- Xu, S. Y., & Chen, T. W. (2002). Robust H-infinity control for uncertain stochastic systems with state delay. IEEE Transactions on Automatic Control, 47(12), 2089–2094. https://doi.org/10.1109/TAC.2002.805670

- Xu, S., Yang, C., & Zhou, S. (2000). Robust H∞ control for uncertain discrete-time systems with circular pole constraints. Systems & Control Letters, 39(1), 13–18. https://doi.org/10.1016/S0167-6911(99)00066-3

- Zhang, W., Hu, J., & Abate, A. (2009). On the value functions of the discrete-time switched LQR problem. IEEE Transactions on Automatic Control, 54(11), 2669–2674. https://doi.org/10.1109/TAC.2009.2031574

- Zhang, W., Hu, J., & Abate, A. (2012). Infinite-horizon switched LQR problems in discrete time: A suboptimal algorithm with performance analysis. IEEE Transactions on Automatic Control, 57(7), 1815–1821. https://doi.org/10.1109/TAC.2011.2178649

Appendix. Other terms in model (57)

The terms and

in the spacecraft attitude system (57) are given by

(A1)

and

(A2)

with

(A3)

(A4)

(A5)

where in the above series of equations,

(A6)

is the rotational angular velocity of the earth, and the set of π-functions that appear are given by

(A7)

(A8)

and

(A9)