Abstract

The present study investigated the impact of a utility-value intervention on students’ behavioral, cognitive, and emotional engagement. Students assigned to the intervention condition wrote an essay connecting the course content with their personal hobbies, interests, or goals three times during the course. The results showed that the students who completed all the intervention assignments were more behaviorally engaged in the course (as evaluated by continued assignment submission), while those in the control condition became less engaged later in the course. Moreover, the students who completed the intervention assignments remained cognitively engaged even at the end of the course. We discuss how a utility-value intervention affects students’ engagement and the practical implications for amplifying its effectiveness.

Introduction

Appreciating the value of learning is an important predictor of students’ academic achievement. Psychologists have shown that the utility-value intervention can help students discover the relevance of learning content to their personal lives and encourage them to attain higher academic achievement (Hulleman & Harackiewicz, 2020). Although the effectiveness of this intervention has been repeatedly reported, it remains unclear how it leads students to engage in a course. The present research aims to extend the theoretical model of the utility-value intervention by investigating its impact on students’ behavioral, cognitive, and emotional engagement (e.g., Fredricks et al., 2004).

Utility-value interventions

The utility-value intervention was developed based on expectancy-value theory (Eccles et al., 1983), which proposes that learning motivation is proximally determined by expectancy beliefs and subjective values. Subjective values are categorized into four facets: intrinsic value, utility value, attainment value, and cost (negative value). Past research has revealed that subjective values, except cost, contribute to students’ motivation and achievement in educational contexts (e.g., Hulleman et al., 2008).

Hulleman and Harackiewicz (2009) initially reported the effectiveness of the utility-value intervention in a double-blind, randomized controlled classroom experiment. This intervention requires students to write about the relevance and usefulness of particular course content in the context of their personal goals and lives. Previous research has shown that students who completed utility-value intervention assignments achieved higher grades than those who simply summarized what they had learned (for a review, see Hulleman & Harackiewicz, 2020). The effectiveness of utility-value interventions has been tested in a number of fields, including psychology, mathematics, physics, biology, and agriculture.

Extending to students’ engagement mechanisms

The utility-value intervention is considered to foster students’ motivation and engagement in the learning process (Hulleman & Harackiewicz, 2020). However, little research has directly addressed how the utility-value intervention affects students’ engagement. Students’ engagement is conceptualized as a multidimensional construct, generally consisting of behavioral, cognitive, and emotional engagement (e.g., Fredricks et al., 2004). Behavioral engagement refers to effort-investment behaviors such as persistent attendance, assignment completion, and participation in class discussion. Cognitive engagement refers to the qualitative aspect of learning processes, including the approach to learning and metacognitive activities. Emotional engagement refers to positive emotional experiences in the learning process, such as interest and enjoyment. It is unclear which of these dimensions the utility-value intervention encourages.

Although the theoretical model claims that the utility-value intervention makes students behaviorally engaged in the learning process (Hulleman & Harackiewicz, 2020), this claim has not been directly validated. In most cases, previous research has tested the effectiveness of the utility-value intervention on the final course grade or grade point average, which are consequences of behavioral engagement. Research is needed that addresses this gap by assessing the impact of the utility-value intervention on observable behaviors, such as persistent assignment submission.

It can be expected that students who complete a utility-value intervention protocol will work persistently until the end of a course. In general, although students are highly motivated to learn at the beginning of a course, they tend to become less motivated over time. As motivational factors are important predictors of students’ persistent learning (Robbins et al., 2004), fostering students’ motivation through the intervention should lead to persistent learning behaviors. The effect of the utility-value intervention can also be expected to be long-lasting, as it has been repeatedly reported that this intervention led to higher grades throughout the semester.

Previous research has given little attention to the impact of the utility-value intervention on cognitive engagement. One way to address this issue is to focus on the effect of the utility-value intervention on the approach to learning. The approach to learning is a concept that describes qualitative differences in students’ process of learning and can be classified into three distinct approaches: deep, surface, and strategic (Entwistle & Ramsden, 1983). Researchers have mainly focused on the difference between the deep approach (e.g., structuring the information to understand it) and the surface approach (e.g., memorizing to reproduce in tests), as the former facilitates students’ understanding and achievement in educational settings while the latter impairs them (e.g., Senko, 2019). It can be expected that the utility-value intervention promotes the deep approach to learning, as it also requires students to connect learned content to their personal lives.

In contrast to behavioral and cognitive engagement, past findings support the idea that the utility-value intervention encourages emotional engagement. Previous research has reported that students who completed a utility-value intervention found a higher utility value in the learning content and had higher interest than those who did not (e.g., Hulleman et al., 2010; Hulleman & Harackiewicz, 2009). The utility-value intervention is also considered to help students be more autonomous, be more involved in the learning process, and identify with the learning content. These effects would lead students to have positive emotional experiences in the learning process.

The aim and overview of the present research

The aim of the present research was to investigate how the utility-value intervention encourages students’ engagement, focusing on behavioral, cognitive, and emotional engagement. Based on previous findings and theoretical accounts, we hypothesized that the utility-value intervention would encourage all three types of engagement. Behavioral engagement was estimated by students’ persistence, based on whether they continued to submit course assignments until the end of the semester. Cognitive engagement was assessed by students’ approach to learning, using a self-report scale and their scores on tasks that required surface or deep cognitive processing. Emotional engagement was assessed by self-report at the end of the course.

We investigated the intervention effects on students’ engagement through a quasi-experimental design, with the course in AY2020 as the control condition and the course in AY2021 as the intervention condition. In a quasi-experimental design, some factors may be confounded with the experimental manipulation. To control for such confounding factors, we assessed three covariates associated with students’ engagement as baseline measures: achievement goals, self-efficacy, and trait self-control. Achievement goals are reasons for engaging in achievement behavior, traditionally classified as mastery goals (to develop competence) and performance goals (to demonstrate competence; Elliot & Church, 1997). Researchers have shown that mastery goals and performance-approach goals are beneficial to academic success, while performance-avoidance goals impair student performance (Harackiewicz et al., 1998). Previous research has also shown that achievement goals predict individual differences in learning approaches (Senko, 2019). Self-efficacy is an individual’s belief in their capability to execute the behaviors necessary to obtain specific achievements (Bandura, 1977). It is well known that self-efficacy predicts high engagement and achievement in educational settings. Trait self-control is a well-known personality trait that predicts high persistence and achievement (Tangney et al., 2004). We first tested for bias in participants’ characteristics across conditions by examining group differences in these measures. We then tested whether the intervention effect was robust by statistically controlling for these covariates.

Methods and materials

Participants

Participants were students at a private university in Japan who enrolled in an intermediate-level psychology course offered in two different years (AY2020 and AY2021). This course, “Psychology of Learning and Language,” was a prerequisite for becoming a certified public psychologist in Japan. Of the 255 students enrolled, 107 (81.1%) in AY2020 and 90 (73.2%) in AY2021 provided informed consent and agreed to provide their learning data. Thus, 197 students participated in the present study (58.9% female; 69.0% sophomores, 24.4% juniors, and 7.1% seniors). Most of the students majored in clinical psychology (93.9%), and the rest majored in sociology (6.1%). The AY2020 course was implemented as the control condition and the AY2021 course as the intervention condition.

Course design and research procedure

This course took place over 15 weeks and was offered online in an on-demand format in accordance with COVID-19 guidelines. As the courses were offered in the second semester (from September to January), the students in both AY2020 and AY2021 had already experienced and become accustomed to on-demand online courses. Each week, students were asked to study the course contents using the lecture video (provided via YouTube), PowerPoint documents, and the textbook, and then submit an assignment via a learning management system. Course contents became available on Wednesday evenings, and the deadline for assignments was 23:59 on the following Monday. Thus, students had almost four days and eight hours to work on the course contents each week. Due to technical issues, the deadlines were extended in week 5 of AY2020 (by three days) and week 13 of AY2021 (by one day). The weekly assignments consisted of two tasks: the surface processing task, which could be completed by recalling, outlining, or highlighting the course content, and the deep processing task, which called for exemplifying, applying, analyzing, or evaluating with reference to the course content (i.e., students needed to relate the course contents to other experiences or knowledge). The surface and deep processing tasks were designed based on Bloom’s taxonomy (Bloom et al., 1956). In the intervention condition (i.e., AY2021), in weeks 4, 8, and 12, students were asked to complete the intervention protocol (described in the following section) as the deep processing task. Except for the deep processing task in weeks 4, 8, and 12, the course contents were identical in both conditions. Table S1 presents the course schedule and a summary of each week’s assignments.

Students were asked to complete two surveys, one in week 1 and one in week 15. In week 1, students completed a survey about their motivation (i.e., achievement goals and self-efficacy) and trait self-control as baseline measures. In week 15, students completed a survey about their approach to learning and emotional experiences. The research procedure was approved by the ethics committee of the authors’ affiliation.

Intervention protocol

The students in the intervention condition completed the intervention assignment based on the build connection material provided at www.characterlab.org. The students first made a list of their personal interests, hobbies, and goals. Next, they created a list of important topics that they had learned. They then drew lines between their own interests and learned topics that they thought were relevant. Finally, they wrote a short essay describing the connection between their interests and the learned topics. Students were asked to submit only the essay part through the learning management system.

Baseline measures

Achievement goals

We used the achievement goal scale developed by Tanaka and Fujita (2003) based on Elliot and Church (1997). This scale consists of three subscales with five items each: mastery goal, performance-approach goal, and performance-avoidance goal. Ratings were made on a 6-point Likert-type scale ranging from 1 (not true for me) to 6 (extremely true for me).

Self-efficacy

We used the self-efficacy scale developed by Ito (1996) based on the scale of Pintrich and De Groot (1990). This scale consists of six items measured on a 6-point Likert-type scale from 1 (not true for me) to 6 (extremely true for me).

Trait self-control

We used the Japanese version of the Brief Trait Self-Control Scale (Ozaki et al., 2016; originally developed by Tangney et al., 2004). This scale consists of 13 items measured on a 5-point Likert-type scale from 1 (not at all) to 5 (very much).

Engagement measures

Evaluation of assignment performance

First, we estimated behavioral engagement based on assignment submission itself. For each week, we evaluated students’ engagement on a binary criterion of whether they submitted the assignment or not. Then, we estimated cognitive engagement based on the performance on each assignment. All submitted assignments were evaluated by two trained raters. Due to a technical error, we could not evaluate the surface processing task in week 3. To calibrate their scoring standards, before evaluating each assignment, the raters viewed the course contents and completed the course assignments as the students did in the online course. The author then marked and corrected the raters’ assignments by providing examples of correct answers and the scoring standards. After these training sessions, the two raters independently evaluated each student’s response on both tasks as 2 (adequate), 1 (partly adequate), or 0 (not adequate). Cohen’s kappa for the two raters’ ratings was .48, indicating that the evaluations were moderately reliable. The average of the two raters’ evaluations was used as the assignment performance score.
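As a point of reference for the reliability coefficient, the following minimal sketch computes unweighted Cohen’s kappa for two raters on the 0/1/2 scale. The ratings are invented for illustration and are not the study’s data. The text does not state whether an unweighted or a weighted kappa was used, so the unweighted form is an assumption.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of responses given the same score by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = set(count_a) | set(count_b)
    p_chance = sum(count_a[c] * count_b[c] for c in categories) / n**2
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical scores (2 = adequate, 1 = partly adequate, 0 = not adequate).
rater_a = [2, 2, 1, 0, 2, 1, 1, 0, 2, 2]
rater_b = [2, 1, 1, 0, 2, 2, 1, 0, 1, 2]
kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))
```

In this toy example the raters agree on 7 of 10 responses, but kappa is lower than 0.7 because part of that agreement is expected by chance given how often each rater uses each score.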

Approach to learning

To assess students’ cognitive engagement, we used the self-report scale on the approach to learning developed by Kawai and Mizokami (2012) based on the scale of Entwistle et al. (2002). This scale consists of eight items each for deep learning and surface learning. While this scale was developed for assessing individual differences in students’ general dispositions, we modified the instructions to assess individual differences in course-specific dispositions by instructing students to “rate to what degree you have experienced those described in each item during this course”. Ratings were made on a 6-point Likert-type scale from 1 (not at all) to 6 (very much).

Emotional experiences

To assess students’ emotional engagement, we asked students to rate six types of emotional experiences during the course, using a single item for each: interest (I became interested in the course contents), convergent curiosity (I would like to learn about things that I still do not understand), divergent curiosity (I would like to learn more than what I learned in this course), surprise (I was surprised at what I learned), boredom (I found the course boring), and frustration (I was frustrated because there were things that remained unclear). Ratings were made on a 6-point Likert-type scale from 1 (not at all) to 6 (very much).

Results

Preliminary analysis

We analyzed the data of students who submitted all the assignments in weeks 4, 8, and 12 in both the control and intervention conditions. We restricted the analysis to these students for two reasons. First, some students missed the intervention assignments in weeks 4, 8, and 12 or had already dropped out of the course. Second, as some students in the control condition also dropped out of the course before week 12, we might have underestimated their engagement if we had analyzed all the data. Because the comparison should involve students who equally intended to complete the course, we report the results obtained from the data of 139 (70.6%) students, 82 (76.6%) in AY2020 and 57 (63.3%) in AY2021, who submitted all of the assignments in weeks 4, 8, and 12. Although the trends in the main findings were largely the same when we analyzed the data of the 192 students (97.5%) who submitted at least one of the three targeted assignments, we report the results under the strict exclusion criterion to make fair comparisons between conditions. There were no significant differences in any of the baseline measures (Table S2), indicating that students’ motivation and characteristics were very similar between conditions.

Moreover, as some students did not complete the survey in week 15, we analyzed the self-report data of 81 students (58.3%), 51 (62.2%) in AY2020 and 30 (52.6%) in AY2021. As a regression analysis revealed that none of the demographic variables or baseline measures significantly predicted completion of the survey in week 15, we assumed that the respondents were not a biased subset of the whole sample.

Intervention effect on behavioral engagement

We compared the submission rates (the proportion of submitted assignments out of the total number of assignments) between the conditions. The t-test results revealed that students in the intervention condition (M = 0.965, SD = 0.080) submitted more assignments than those in the control condition (M = 0.916, SD = 0.115), t (137) = 2.790, p = .006, g = 0.478. The intervention effect remained significant in a regression analysis controlling for baseline measures (b = 0.057, SE = 0.018, p = .002).
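The reported test statistic and effect size can be approximately recovered from the summary statistics alone. The sketch below assumes a pooled-variance Student’s t-test (consistent with the reported 137 degrees of freedom) and the common approximate small-sample correction for Hedges’ g; the function name is illustrative, and the group sizes (57 and 82) are taken from the preliminary analysis. Because the published means and standard deviations are rounded, the output matches the reported values only to about two decimal places.

```python
import math

def pooled_t_and_hedges_g(m1, sd1, n1, m2, sd2, n2):
    """Student's t (equal variances assumed) and Hedges' g from group summaries."""
    df = n1 + n2 - 2
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    t = (m1 - m2) / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    cohen_d = (m1 - m2) / pooled_sd
    # Approximate small-sample correction that converts Cohen's d into Hedges' g.
    g = cohen_d * (1 - 3 / (4 * df - 1))
    return t, df, g

# Submission rates: intervention (n = 57) vs. control (n = 82).
t, df, g = pooled_t_and_hedges_g(0.965, 0.080, 57, 0.916, 0.115, 82)
print(f"t({df}) = {t:.2f}, g = {g:.2f}")
```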

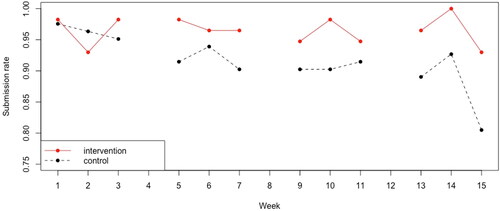

Next, we compared the weekly trends in assignment submission. The submission rates for assignments each week (except for weeks 4, 8, and 12) are shown in Figure 1. We fitted a generalized linear mixed-effects model to predict weekly assignment submission (0 = not submitted, 1 = submitted) from baseline measures, week, and condition (0 = control, 1 = intervention). We entered the fixed effects of all independent variables and the random effect of week. All baseline measures were grand-mean centered. We also entered the interaction term of week and intervention. The results revealed that the interaction effect of week and intervention was significant (b = 0.118, SE = 0.059, p = .046). There were few differences between the submission rates before the intervention (i.e., weeks 1–3), but the submission rates after the intervention (i.e., after week 4) were higher in the intervention condition than in the control condition.
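One way to write down a model of this kind is as a logistic mixed model. The notation below is illustrative and not taken from the original analysis; in particular, treating the random effect of week as a random intercept is an assumption.

$$
\operatorname{logit} \Pr(y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{week}_j + \beta_2\,\mathrm{condition}_i + \beta_3\,(\mathrm{week}_j \times \mathrm{condition}_i) + \sum_{k} \gamma_k x_{ik} + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
$$

Here $y_{ij}$ indicates whether student $i$ submitted the assignment in week $j$, $x_{ik}$ are the grand-mean-centered baseline measures, and $u_j$ is the week-level random effect. Under this reading, the reported interaction coefficient corresponds to $\beta_3$: a positive value means that the log-odds of submission decline less steeply (or rise more) over the weeks in the intervention condition than in the control condition.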

Intervention effect on cognitive engagement

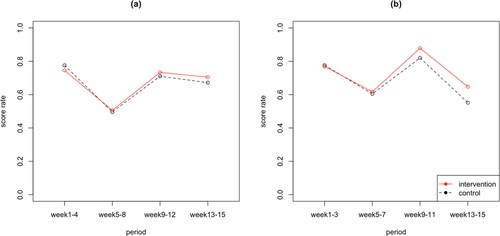

The course was divided into four periods (weeks 1–4, 5–8, 9–12, and 13–15) based on the implementation of the intervention in weeks 4, 8, and 12. The score rate for each period (the ratio of the evaluated score to the full score) was compared between conditions. We conducted a 4 (within: period) × 2 (between: condition) analysis of variance (ANOVA) to compare the score rates for each task. The mean score rates for the assignments in each period are shown in Figure 2. The results of the ANOVA revealed neither a significant difference between the conditions nor a significant interaction effect in the surface processing task. For the deep processing task, the interaction effect was significant, F (3, 411) = 2.732, p = .044, ηp² = 0.020. The results of a simple effect analysis with Shaffer’s correction for multiple comparisons revealed that students in the intervention condition obtained higher score rates in the fourth period (i.e., weeks 13–15) than those in the control condition. This difference remained significant in a regression analysis on the score rates in the fourth period controlling for baseline measures (b = 0.116, SE = 0.038, p = .003). There were no such differences in the first, second, or third periods. As the students in the intervention condition had not yet completed any of the intervention protocols in the first period, it is reasonable that the intervention effect emerged only later in the course. Rather, the absence of a difference in the first period suggests that there were no group differences in the students’ basic academic skills.
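The reported effect size for the interaction can be checked against the F statistic and its degrees of freedom. The sketch below uses the standard identity relating partial eta squared to F; the function name is illustrative, and the degrees of freedom are derived assuming the 139 analyzed students, four periods, two conditions, and no sphericity correction, which matches the reported F (3, 411).

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Degrees of freedom for the period x condition interaction in a 4 x 2 mixed design.
n_students, n_periods, n_groups = 139, 4, 2
df_effect = (n_periods - 1) * (n_groups - 1)
df_error = (n_periods - 1) * (n_students - n_groups)

eta_p2 = partial_eta_squared(2.732, df_effect, df_error)
print(df_effect, df_error, f"{eta_p2:.3f}")
```

The result rounds to the reported value of 0.020, that is, the interaction accounts for roughly 2% of the variance not explained by other effects in the model.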

Figure 2. The mean score rates for assignments in each period for each condition. The mean scores for the surface processing tasks are presented in panel (a), and those for the deep processing tasks are presented in panel (b).

Then, we tested the intervention effect on the approach to learning. The results of the t-test revealed that students in the intervention condition (M = 4.167, SD = 0.603) were more likely to report that they had engaged in a deep approach to learning than those in the control condition (M = 3.855, SD = 0.706), t (79) = 2.018, p = .047, g = 0.460. The trend in this difference remained consistent in a regression analysis controlling for baseline measures, although it fell just short of significance (b = 0.289, SE = 0.151, p = .059). This measure was also positively correlated with the total assignment performance score on the deep processing tasks (r (79) = .218, p = .050). These results suggest that the current intervention had an impact on the deep approach to learning, which is consistent with the results for assignment performance. On the other hand, there was no significant difference in the surface approach to learning, t (79) = 0.947, p = .347, g = 0.216 (M = 3.476, SD = 0.730 in the intervention condition vs M = 3.305, SD = 0.815 in the control condition). This measure was negatively correlated with the total assignment performance score on the surface processing tasks (r (79) = −.209, p = .060), although the coefficient was not significant.
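To see why both correlations sit near the conventional significance threshold, the sketch below converts each reported coefficient into the t statistic used to test a Pearson correlation against zero. The function name is illustrative; the degrees of freedom (79) are those reported with the coefficients.

```python
import math

def t_from_r(r, df):
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * math.sqrt(df) / math.sqrt(1 - r**2)

# Reported correlations between self-reported approach and task performance.
for label, r in [("deep", 0.218), ("surface", -0.209)]:
    print(label, round(t_from_r(r, 79), 2))
```

With 79 degrees of freedom, the two-tailed critical value at the .05 level is roughly 1.99, so the deep-approach correlation lies almost exactly on the threshold and the surface-approach correlation falls slightly below it in absolute value.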

Intervention effect on emotional engagement

We tested the intervention effect on emotional experiences. There were no significant differences in any emotions (Table S2). Although the differences were not significant, students in the intervention condition reported higher interest, curiosity, and surprise and less boredom than those in the control condition. This trend is consistent with previous studies, which have shown that students who received a utility-value intervention were more likely to be highly interested in the course content.

Discussion

The aim of the present research was to investigate how the utility-value intervention encourages students’ engagement, focusing on behavioral, cognitive, and emotional engagement. The results showed that this intervention prevented a weekly decrease in assignment submission and enhanced students’ deep approach to learning, suggesting that it can encourage behavioral and cognitive engagement.

The results can help extend theoretical models of the utility-value intervention to students’ engagement mechanisms. Regarding behavioral engagement, the students who completed the intervention protocol continued to submit assignments until the end of the course, while submission rates decreased in the control condition. This finding supports the theoretical account that the utility-value intervention encourages students’ behavioral engagement (Hulleman & Harackiewicz, 2020). While most previous research focused on the final grade, a consequence of behavioral engagement, our study directly tested the intervention effect on the dynamic trend in students’ persistence.

Our results provide new insight into the beneficial effects of the utility-value intervention. We found that students who completed the intervention protocol cognitively engaged in a deep approach to learning, which is vital for academic achievement along with behavioral engagement (Fredricks et al., 2004). The results were consistent across self-report measures and actual task performance; that is, the students who completed the intervention protocol perceived that they were engaged in the deep approach to learning more than those who did not, and they actually performed better on the task requiring deep processing. The utility-value intervention may have encouraged a deep approach to learning because deep processing tasks require students to make the course content relevant to their own experiences or knowledge, similar to the process of the utility-value intervention. These structural similarities may have facilitated the transfer of activities in the intervention to task completion. However, we cannot infer why the intervention effect on the deep approach was observed only at the end of the course, since we neither equalized the difficulty of the assignments nor assessed the self-reported approach to learning during the course. Research that addresses dynamic changes in the approach to learning would help develop the theoretical model of the utility-value intervention.

Future research should address the interacting influences of the utility-value intervention and task characteristics. The results regarding the approach to learning suggest that the effect of the utility-value intervention can be enhanced when assignments require a deep approach to learning. Even if a lecturer includes a utility-value intervention in their course, it may not be fully effective without opportunities for students to demonstrate what they have learned from the intervention. Recently, it has been argued that such a fit between motivation and task characteristics influences academic achievement for other motivational concepts as well (Higgins, 2008; Senko, 2019). Our results provide practical implications for amplifying the effectiveness of the utility-value intervention. However, we cannot rule out the possibility that the assignments requiring a deep approach to learning themselves also worked as a utility-value intervention. Future research can address this issue through field experiments that use these assignments as a summative assessment or through laboratory experiments.

It should be noted that although the utility-value intervention did not encourage the surface approach, it did not inhibit it either. These findings need to be addressed in future studies that consider the role of surface processing in learning. The literature on the approach to learning claims that the surface approach is insufficient and even maladaptive (Entwistle & Ramsden, 1983). In contrast, the learning taxonomy does not treat it as maladaptive; rather, surface processing is fundamental for developing deep processing (Bloom et al., 1956). We did not adequately incorporate these theoretical differences in the role of the surface approach into our research design. In fact, our results showed that the self-reported measure of the surface approach was negatively correlated with surface processing task performance. Future research should separate the maladaptive and adaptive aspects of the surface approach, and then test whether the utility-value intervention is more effective when combined with interventions that encourage the adaptive aspect of the surface approach to learning.

We could not find an intervention effect on emotional engagement. Although the trend is promising, our results did not provide significant evidence that this intervention promotes emotional engagement. However, previous research has repeatedly reported that the intervention promoted students’ interest (e.g., Hulleman & Harackiewicz, 2009, 2020). The use of a single item per emotion in this study may have resulted in unreliable measurements and high variance in individual differences, which may explain why no significant differences were obtained.

Limitations and future directions

The current study had a few limitations that should be noted. First, as the present research used a quasi-experimental design, we cannot rule out the possibility that the results were contaminated by cohort effects. The finding that the intervention effect was observed even after controlling for baseline measures, and only after the intervention was implemented, argues against a cohort effect. However, the possibility of unobserved variable effects cannot be ruled out, and it is desirable to follow up with randomized controlled experiments. Second, although we observed significant effects of the intervention, the effect sizes were generally small. This may be because the course was a mandatory one for obtaining a certification, which may have made students engage more persistently than they would in other courses. As the present research investigated the intervention effects in a single course, future studies with similar designs are needed to test the generalizability of its findings.

Conclusion

The utility-value intervention provides useful tips for educational practices based on the psychological theory of human motivation. While it is necessary to understand how robustly this intervention affects academic achievement, it is also important to understand the causal process through which it operates. We showed that the utility-value intervention encourages behavioral and cognitive engagement. Our research provides useful insight for course design and will encourage future researchers to test the intervention’s effect by focusing on detailed learning processes.

Supplemental Material

Disclosure statement

The authors declare that they have no conflict of interest.

Data availability statement

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

- Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215. https://doi.org/10.1037//0033-295x.84.2.191

- Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. In Handbook I: Cognitive domain. David McKay Company.

- Eccles, J. S., Adler, T. F., Futterman, R., Goff, S. B., Kaczala, C. M., Meece, J. L., & Midgley, C. (1983). Expectancies, values and academic behaviors. In J. T. Spence (Ed.), Achievement and Achievement Motivation (pp. 75–146). W. H. Freeman.

- Elliot, A. J., & Church, M. A. (1997). A hierarchical model of approach and avoidance achievement motivation. Journal of Personality and Social Psychology, 72(1), 218–232. https://doi.org/10.1037/0022-3514.72.1.218

- Entwistle, N. J., & Ramsden, P. (1983). Understanding student learning. Croom Helm.

- Entwistle, N., McCune, V., & Hounsell, D. (2002). Approaches to studying and perceptions of university teaching-learning environments: Concepts, measures and preliminary findings. Occasional Report 1. ETL Project (ESRC).

- Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109. https://doi.org/10.3102/00346543074001059

- Harackiewicz, J. M., Barron, K. E., & Elliot, A. J. (1998). Rethinking achievement goals: When are they adaptive for college students and why? Educational Psychologist, 33(1), 1–21. https://doi.org/10.1207/s15326985ep3301_1

- Higgins, E. T. (2008). Regulatory fit. In J. Y. Shah & W. L. Gardner (Eds.), Handbook of motivation science (pp. 356–372). Guilford Press.

- Hulleman, C. S., Durik, A. M., Schweigert, S., & Harackiewicz, J. M. (2008). Task values, achievement goals, and interest: An integrative analysis. Journal of Educational Psychology, 100(2), 398–416. https://doi.org/10.1037/0022-0663.100.2.398

- Hulleman, C. S., Godes, O., Hendricks, B. L., & Harackiewicz, J. M. (2010). Enhancing interest and performance with a utility value intervention. Journal of Educational Psychology, 102(4), 880–895. https://doi.org/10.1037/a0019506

- Hulleman, C. S., & Harackiewicz, J. M. (2009). Promoting interest and performance in high school science classes. Science, 326(5958), 1410–1412. https://doi.org/10.1126/science.1177067

- Hulleman, C. S., & Harackiewicz, J. M. (2020). The utility-value intervention. In G. W. Walton & A. Crum (Eds.), Handbook of wise interventions: How social-psychological insights can help solve problems (pp. 100–125). Guilford Press.

- Ito, T. (1996). Self-efficacy, causal attribution and learning strategy in an academic achievement situation. The Japanese Journal of Educational Psychology, 44(3), 340–349. https://doi.org/10.5926/jjep1953.44.3_340

- Kawai, T., & Mizokami, S. (2012). Analysis of learning bridging: Focus on the relationship between learning bridging, approach to learning and connection of future and present life. Japan Journal of Educational Technology, 36(3), 217–226.

- Ozaki, Y., Goto, T., Kobayashi, M., & Kutsuzawa, G. (2016). Reliability and validity of the Japanese translation of Brief Self-Control Scale (BSCS-J). Shinrigaku Kenkyu: The Japanese Journal of Psychology, 87(2), 144–154. https://doi.org/10.4992/jjpsy.87.14222

- Pintrich, P. R., & De Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82(1), 33–40. https://doi.org/10.1037/0022-0663.82.1.33

- Robbins, S. B., Lauver, K., Le, H., Davis, D., Langley, R., & Carlstrom, A. (2004). Do psychosocial and study skill factors predict college outcomes? A meta-analysis. Psychological Bulletin, 130(2), 261–288. https://doi.org/10.1037/0033-2909.130.2.261

- Senko, C. (2019). When do mastery and performance goals facilitate academic achievement? Contemporary Educational Psychology, 59, 101795. https://doi.org/10.1016/j.cedpsych.2019.101795

- Tanaka, A., & Fujita, T. (2003). Achievement goals, evaluation of instructions, and academic performance of university students. Japan Journal of Educational Technology, 27(4), 397–403.

- Tangney, J. P., Baumeister, R. F., & Boone, A. L. (2004). High self-control predicts good adjustment, less pathology, better grades, and interpersonal success. Journal of Personality, 72(2), 271–324. https://doi.org/10.1111/j.0022-3506.2004.00263.x