ABSTRACT

Cooperation for public goods poses a dilemma, where individuals are tempted to free ride on others’ contributions. Classic solutions involve monitoring, reputation maintenance and costly incentives, but there are important collective actions based on simple and cheap cues only, for example, unplanned protests and revolts. This can be explained by an Ising model with the assumption that individuals in uncertain situations tend to conform to the local majority in their network. Among initial defectors, noise such as rumors or opponents’ provocations causes some of them to cooperate accidentally. At a critical level of noise, these cooperators trigger a cascade of cooperation. We find an analytic relationship between the phase transition and the asymmetry of the Ising model, which in turn reflects the asymmetry of cooperation and defection. This study thereby shows that in principle, the dilemma of cooperation can be solved by nothing more than a portion of random noise, without rational decision-making.

People may want to realize or preserve public goods, for example, democracy and clean air, but because contributors are disadvantaged in the face of free riders, there is a dilemma (Gavrilets, 2015; Hardin, 1968; Olson, 1965; Ostrom, 2009). Solutions typically require participants to monitor one another (Rustagi, Engel, & Kosfeld, 2010) and to spread information (gossip) reliably through their network (Nowak & Sigmund, 2005), which establishes reputations (Panchanathan & Boyd, 2004). On the basis of these reputations, some participants have to deliver individual rewards or (threats of) punishments (Fehr & Fischbacher, 2003), under pro-social norms that preclude arbitrariness. These provisions are not always (sufficiently) available, though, and in certain situations participants still manage to self-organize cooperation, even without leaders. Cases in point are impromptu help at disasters, non-organized revolts against political regimes (Lohmann, 1994; Tilly, 2002; Tufekci, 2017) and spontaneous street fights between groups of young men.

These examples have in common a high uncertainty of outcomes, and unknown benefits and costs. Rational decision-making is therefore not feasible. Participants who identify with their group or its goal (Van Stekelenburg & Klandermans, 2013), and thereby feel group solidarity (Durkheim, 1912), use the heuristic of conformism to the majority of their network neighbors (Wu, Li, Zhang, Cressman, & Tao, 2014), which can be based on no more than visual information. Human ancestors lived in groups for millions of years (Shultz, Opie, & Atkinson, 2011) and in all likelihood, solidarity and conformism are both cultural and genetic (Boyd, 2018). On an evolutionary timescale, conformism must have been beneficial on average when future benefits and costs were unknown (Van den Berg & Wenseleers, 2018). To explain cooperation under conformism, we use an Ising model (Weidlich, 1971; Galam, Gefen, & Shapir, 1982; Jones, 1985; Stauffer, 2008; Castellano, Fortunato, & Loreto, 2009). This model recovers the critical mass that makes cooperation self-reinforcing, but without the rationality assumptions of critical mass theory (Marwell & Oliver, 1993). Other applications of the Ising model to a range of social science problems are reviewed by Castellano et al. (2009).

1. Model

A group of individuals who share an interest in a public good is modeled as a network with weighted and usually asymmetric ties $A_{ij}$ denoting $i$ paying attention to $j$. There is no assumption that $i$ and $j$ know each other before they meet at the site where collective action might take place. Consistent with other models of social influence (Friedkin & Johnsen, 2011), the adjacency matrix is row-normalized, yielding cell values $a_{ij}$, hence $\sum_j a_{ij} = 1$.
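Row-normalization can be sketched in a few lines. The example below is only an illustration: the function name `row_normalize` and the raw attention weights are hypothetical, and self-ties are assumed to be absent.

```python
def row_normalize(A):
    """Return a copy of the weighted adjacency matrix A in which every row sums to 1.

    Self-ties (the diagonal) are ignored, which is an assumption of this sketch.
    """
    normalized = []
    for i, row in enumerate(A):
        total = sum(w for j, w in enumerate(row) if j != i)
        if total == 0:
            raise ValueError(f"individual {i} pays attention to nobody")
        normalized.append([0.0 if j == i else w / total for j, w in enumerate(row)])
    return normalized


# Hypothetical raw attention weights among three individuals (asymmetric).
A = [
    [0, 2, 2],
    [1, 0, 3],
    [4, 0, 0],
]
a = row_normalize(A)
for row in a:
    print(row, sum(row))
```

After normalization the ties remain asymmetric: here individual 0 gives half of its attention to individual 1, whereas individual 1 gives only a quarter of its attention to individual 0.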

Individuals have two behavioral options, defect ($D$) and cooperate ($C$), with $D < C$, and all defect at the start. The average degree of cooperation among $n$ individuals is described by an order parameter $M = \frac{1}{n} \sum_{i=1}^{n} S_i$, where the behavioral variable $S_i$ can take the value $C$ or $D$. Everybody gets an equal share of the public good but cooperators incur a cost. A widely used definition of payoffs for cooperators and defectors in a group (Eq. 1; Perc et al., 2017) has as its parameters an enhancement, or synergy, factor of cooperation and the number of cooperators when the focal player decides. In our case, the payoffs take a form (Eq. 2) that is identical to Eq. (1) in standard game theory when $C = 1$ and $D = 0$. Our payoffs can be negative but that does not matter because they are used only comparatively. Other, for example non-linear, payoff functions (Marwell & Oliver, 1993) may also be used. The key point, however, is that under high uncertainty, participants do not maximize their payoff (directly) but align with others instead, thereby forming a collective lever that can increase their payoff while avoiding exploitation. Behavior and network ties are expressed in the conventional, but here asymmetric, Ising model

$$H = -\sum_{i \neq j} a_{ij} S_i S_j. \tag{3}$$

Solving the model boils down to minimizing $H$, where $H/n$ can be interpreted as average dissatisfaction. Minimizing $H$ can be done computationally with a Metropolis algorithm (Barrat, Barthelemy, & Vespignani, 2008), where individuals decide sequentially as in many network models, or through a mean-field analysis, elaborated below.
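A Metropolis run on such a model can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the Hamiltonian is taken to be $H = -\sum_{i \neq j} a_{ij} S_i S_j$, the noise level `T` plays the role of temperature, and the behavioral values `C = 1.0` and `D = -0.5`, the network and all function names are hypothetical choices made for the example.

```python
import math
import random

C, D = 1.0, -0.5  # illustrative behavioral values (assumed for this sketch)


def hamiltonian(a, S):
    """H = -sum over i != j of a[i][j] * S[i] * S[j]."""
    n = len(S)
    return -sum(a[i][j] * S[i] * S[j] for i in range(n) for j in range(n) if i != j)


def metropolis_sweep(a, S, T, rng):
    """Let n randomly chosen individuals reconsider their behavior, one at a time."""
    n = len(S)
    for _ in range(n):
        k = rng.randrange(n)
        proposal = C if S[k] == D else D
        # Only the terms of H that contain S[k] change when k switches behavior.
        field = sum((a[k][j] + a[j][k]) * S[j] for j in range(n) if j != k)
        delta_H = -(proposal - S[k]) * field
        # Accept improvements always, and deteriorations with Boltzmann probability.
        if delta_H <= 0 or rng.random() < math.exp(-delta_H / T):
            S[k] = proposal


def simulate(a, T, sweeps, seed=0):
    """Start from full defection and return the order parameter M after the run."""
    rng = random.Random(seed)
    S = [D] * len(a)
    for _ in range(sweeps):
        metropolis_sweep(a, S, T, rng)
    return sum(S) / len(S)


# Hypothetical fully connected group of 20 with equal, row-normalized attention.
n = 20
a = [[0.0 if i == j else 1.0 / (n - 1) for j in range(n)] for i in range(n)]
M_low = simulate(a, T=0.01, sweeps=50)    # hardly any noise
M_high = simulate(a, T=1.0, sweeps=200)   # strong noise
print(M_low, M_high)
```

At very low noise an accidental switch to cooperation is practically never accepted, so the group stays at full defection; at high noise such switches do occur and the average level of cooperation moves away from $D$.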

High-uncertainty situations to which the model applies are characterized by turmoil $T$, which is the temperature in the original model. It causes arousal, measurable as heart rates (Konvalinka et al., 2011), and produces noise (Lewenstein, Nowak, & Latané, 1992) in individuals’ information about the situation, which in turn becomes partly false, ambiguous, exaggerated or objectively irrelevant. Turmoil and its noise may consist of rumors, fire, provocations and violence. Some social movements produce turmoil by themselves, for instance through an increasingly frequent posting of online messages (Johnson et al., 2016). Arousal and noise entail “trembling hands” (Dion & Axelrod, 1988), as game theorists say, which means a chance that some individuals accidentally change their behavior. The model shows that a few accidental cooperators can entail a cascade of cooperation. An example of turmoil and its ramifications is the self-immolation of a street vendor in December 2010, which, in the given circumstances, set off the Tunisian revolution. Other examples are the revolts in East Germany (Lohmann, 1994) and Romania in 1989 and in Egypt and Syria in 2011 (Hussain & Howard, 2013), where protesters were agitated by rumors about the events in neighboring countries. Autocratic rulers try to prevent revolts by suppressing turmoil, for instance by tightening media control.

Noise is different from a stable bias, for instance the ideology of an autocratic regime, which entails revolts against it less often than a weakened regime or stumbling opponents in street fights. Opponents’ weakness gives off noisy signals that they might be overcome, which readily entail collective actions against them (Collins, 2008; Goldstone, 2001; Skocpol, 1979). Whereas responses to noise are typically spontaneous, collective responses to stable signals, biased or not, tend to be mounted by organized groups with norms, incentives and the like (Goldstone, 2001; Tilly, 2002; Tufekci, 2017). Combinations of signal and noise also occur, of course, which can result in, for example, an organized peaceful demonstration suddenly turning violent. Our focus is on spontaneous cooperation.

2. Results

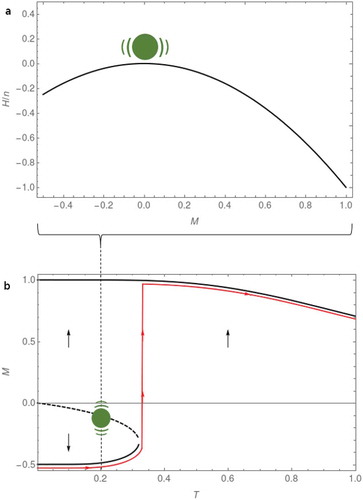

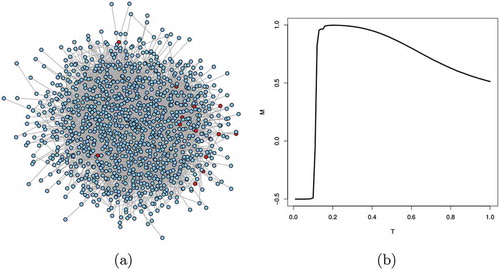

Our general result is that within finite time and at low turmoil, cooperation does not get off the ground, but it does emerge at a critical level of turmoil $T_c$. This pattern is illustrated by the red line in Figure 1(b) along the direction of the arrows. The figure was obtained with a mean-field approach, but numerical simulations with the Metropolis algorithm show the same pattern, with lower $T_c$ for small networks (Figure 2). Other topological variations, of density, clustering and degree distribution, are elaborated elsewhere (Bruggeman & Sprik, 2020). Moreover, if a certain cluster is exposed to locally higher turmoil, cooperation emerges there. The model thus shows that at a critical level of turmoil, few accidental cooperators can trigger a cascade of cooperation. If the turmoil $T$ keeps increasing far beyond $T_c$, cooperators co-exist with increasing numbers of defectors, until the two behaviors become equally frequent. Whether in actuality cooperation then collapses completely is an issue for further study. Otherwise, cooperation ends when the public good is achieved, the participants run out of steam, or others intervene.

Figure 1. Cooperation for public goods. (a) Mean dissatisfaction $H/n$ and level of cooperation $M$. When all defect, $H/n$ is at a local minimum, on the left, but to proceed to the global minimum where all cooperate, on the right, participants are hindered by a hill. (b) Mean-field analysis shows below $T_c$ one stable state with mostly cooperators, at the top, and another state that is stable in finite time with mostly defectors, at the bottom. A metastable state in between, indicated by the dotted line, corresponds to the hilltop in Figure 1(a). Above $T_c$ only one state remains, where with increasing $T$, cooperators are joined by increasing numbers of defectors.

Figure 2. Numerical simulation on a university e-mail network; data from Guimerà (2003). (a) The network at a near-critical turmoil level. Some nodes start cooperating (red) whereas most still defect (blue). (b) $T_c$ is smaller than in the mean-field approximation, but the overall pattern is qualitatively the same (compare to Figure 1(b)).

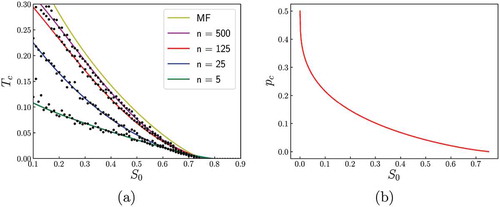

Figure 3. Consequences of shifting $S_0$ with the mean-field approach (MF), keeping $\Delta$ fixed. (a) With increasing $S_0$, less agitation is necessary to turn defectors into cooperators. For comparison, numerical simulations on several random networks with density = 0.8 are shown as well. (b) The proportion of defectors at $T_c$ decreases with increasing $S_0$.

Alternatively, if participants get to understand an enduring situation, their uncertainty will decrease and they may start acting strategically, which it takes pro-social norms to prevent. If the participants then develop such norms prescribing rewards and punishments, these norms can be easily modeled as field(s) by adding term(s) to the Hamiltonian (Eq. 3). Consequently, cooperation emerges without a phase transition. The actual maintenance of these norms, however, will entail additional costs over and above the contributions, whereas spontaneous cooperation is relatively cheap.

2.1. Comparison with the symmetric Ising model

Rewriting the asymmetric Ising model in a symmetric form enables a direct comparison with results in the literature for symmetric models and a generalization to arbitrary values of $S$. A model with asymmetric values $S = \{C, D\}$ can be reformulated as a symmetric model with an offset, or bias, $S_0$ and an increment $\Delta$ by the mapping

$$C = S_0 + \Delta, \qquad D = S_0 - \Delta, \tag{4}$$

so that $S_0 = (C + D)/2$ and $\Delta = (C - D)/2$. Accordingly, the values of $C$ and $D$ chosen for the mean-field analysis in Figure 1(b) fix $S_0$ and $\Delta$. For given $\Delta$, increasing $S_0$ means increasing interest in the public good, or, in line with the literature on protests, increasing grievances (Van Stekelenburg & Klandermans, 2013).
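As a small worked example of this reformulation, the sketch below converts between the asymmetric values and the offset and increment, assuming the offset is the midpoint of the two behavioral values and the increment is half their distance. The function names and the values `C = 1.0`, `D = -0.5` are illustrative assumptions.

```python
def to_symmetric(C, D):
    """Map behavioral values (C, D) to an offset S0 and an increment delta."""
    S0 = (C + D) / 2
    delta = (C - D) / 2
    return S0, delta


def to_asymmetric(S0, delta):
    """Inverse mapping: recover (C, D) from the offset and the increment."""
    return S0 + delta, S0 - delta


S0, delta = to_symmetric(1.0, -0.5)
print(S0, delta)                 # 0.25 0.75
print(to_asymmetric(S0, delta))  # (1.0, -0.5)
```

With $S_0 = 0$ the two behaviors are mirror images of each other, which is the conventional symmetric Ising model; a positive $S_0$ tilts the model toward cooperation.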

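Substitution of $S_i = S_0 + \Delta s_i$, with $s_i$ chosen from $\{1, -1\}$, in $H = -\sum_{i \neq j} a_{ij} S_i S_j$ yields

$$H = -\sum_{i \neq j} a_{ij} (S_0 + \Delta s_i)(S_0 + \Delta s_j). \tag{5}$$

Expanding in orders of $S_0$ yields

$$H = -\Delta^2 \sum_{i \neq j} a_{ij} s_i s_j - \Delta S_0 \sum_{i \neq j} a_{ij} (s_i + s_j) - S_0^2 \sum_{i \neq j} a_{ij}. \tag{6}$$

The first term in the expansion is a symmetric model with the same adjacency matrix as the original asymmetric model. The second term, $H_{\mathrm{local}}$, is proportional to $S_0$ and can be interpreted as a local field acting on the $s_i$. The contribution of this local field can be expressed in terms of row and column sums of $a_{ij}$ as

$$H_{\mathrm{local}} = -\Delta S_0 \sum_i s_i \Big( \sum_{j \neq i} a_{ij} + \sum_{j \neq i} a_{ji} \Big). \tag{7}$$

For row-normalized adjacency matrices, with $\sum_j a_{ij} = 1$ for all rows $i$, $H_{\mathrm{local}}$ becomes

$$H_{\mathrm{local}} = -\Delta S_0 \sum_i s_i \sum_{j \neq i} a_{ji} - \Delta S_0 \sum_i s_i, \tag{8}$$

where the first term is a local field varying for each $i$, and the second term is a homogeneous external field independent of $i$. The third term in the expansion of $H$ is independent of the values of $s_i$ and is a constant depending on $S_0$ only. Hence, it does not play a role in the minimization of $H$. For a connected network with row-normalization, the last expression can be further simplified (Eq. 9). The asymmetry in $S$ is then equivalent to a symmetric system with an external field. In another paper, we simulate the effect of different $S_0$ values in different network clusters on the tipping point (Bruggeman & Sprik, 2020).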
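The decomposition can be checked numerically. The sketch below assumes the Hamiltonian $H = -\sum_{i \neq j} a_{ij} S_i S_j$ and the substitution $S_i = S_0 + \Delta s_i$ with $s_i = \pm 1$. It then compares the direct value of $H$ with the sum of a symmetric term, a local-field term and a constant. The random network, the parameter values and all function names are hypothetical.

```python
import random


def hamiltonian(a, S):
    """Direct evaluation of H = -sum over i != j of a[i][j] * S[i] * S[j]."""
    n = len(S)
    return -sum(a[i][j] * S[i] * S[j] for i in range(n) for j in range(n) if i != j)


def expansion_terms(a, s, S0, delta):
    """Symmetric term, local-field term and constant of the expanded Hamiltonian."""
    n = len(s)
    pairs = [(i, j) for i in range(n) for j in range(n) if i != j]
    symmetric = -delta ** 2 * sum(a[i][j] * s[i] * s[j] for i, j in pairs)
    local = -delta * S0 * sum(a[i][j] * (s[i] + s[j]) for i, j in pairs)
    constant = -S0 ** 2 * sum(a[i][j] for i, j in pairs)
    return symmetric, local, constant


def local_field_from_sums(a, s, S0, delta):
    """The local-field term written with row sums and column sums of a."""
    n = len(s)
    total = 0.0
    for i in range(n):
        row_sum = sum(a[i][j] for j in range(n) if j != i)
        col_sum = sum(a[j][i] for j in range(n) if j != i)
        total += s[i] * (row_sum + col_sum)
    return -delta * S0 * total


rng = random.Random(1)
n, S0, delta = 6, 0.25, 0.75  # illustrative values
raw = [[0.0 if i == j else rng.random() + 0.01 for j in range(n)] for i in range(n)]
a = [[w / sum(row) for w in row] for row in raw]  # row-normalized, asymmetric
s = [rng.choice([1, -1]) for _ in range(n)]
S = [S0 + delta * s_i for s_i in s]

direct = hamiltonian(a, S)
symmetric, local, constant = expansion_terms(a, s, S0, delta)
print(abs(direct - (symmetric + local + constant)) < 1e-12)
print(abs(local - local_field_from_sums(a, s, S0, delta)) < 1e-12)
print(abs(constant + n * S0 ** 2) < 1e-12)  # row sums of 1 make the constant -n * S0**2
```

The last check illustrates why the constant term plays no role in the minimization: with row-normalization it depends only on $n$ and $S_0$, not on anybody's behavior.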

2.2. Mean-field analysis

The expected value of $M$ as a function of $T$ can be obtained by assuming that the network is very large and by abstracting away from its topology; in the language of thermodynamics, by approximating the interaction energy by the energy of one spin (here, behavior) in the mean field of its neighbors (Barrat et al., 2008). The value of $M$ can now be expressed in closed form in terms of the probabilities given by the exponential of the Hamiltonian energy and $T$ (Eq. 10). This reduces to an implicit relation for $M$ (Eq. 11), in which only dimensionless ratios of the model's quantities remain. The mean degree, defined for binary ties, does not occur in it because the adjacency matrix is row-normalized, so that the mean weighted outdegree equals 1.
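To make the mean-field step concrete, here is a sketch under explicit assumptions that may differ from the authors' equations by constant factors. Each individual is assumed to feel an energy $-S M$ in the mean field $M$ of its neighbors, and chooses $C$ or $D$ with Boltzmann weights $e^{SM/T}$. Averaging over the two options then gives the self-consistency condition $M = S_0 + \Delta \tanh(\Delta M / T)$, which is solved by fixed-point iteration. The values $S_0 = 0.25$, $\Delta = 0.75$ and the function name are illustrative.

```python
import math


def mean_field_M(S0, delta, T, M_start, iterations=5000):
    """Iterate M -> S0 + delta * tanh(delta * M / T) until it settles."""
    M = M_start
    for _ in range(iterations):
        M = S0 + delta * math.tanh(delta * M / T)
    return M


S0, delta = 0.25, 0.75            # illustrative values (assumed)
C, D = S0 + delta, S0 - delta     # cooperate = 1.0, defect = -0.5

for T in (0.1, 0.2, 0.5, 1.0):
    from_defection = mean_field_M(S0, delta, T, M_start=D)
    from_cooperation = mean_field_M(S0, delta, T, M_start=C)
    print(T, round(from_defection, 3), round(from_cooperation, 3))
```

Under these assumptions, a group that starts from full defection stays close to $D$ at low $T$, whereas at higher $T$ the defecting state no longer exists and the iteration ends at the same cooperative level as a group that started from full cooperation.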

By analyzing the intersection of the line defined by the left-hand side and the tanh term on the right-hand side of Eq. (11), the possible values for $M$ at a given $T$ can be found. For $T > T_c$ there is one stable high-$M$ solution and for $T < T_c$ there is one stable solution of (nearly) full cooperation, another solution that is stable in finite time with (nearly) full defection, and one unstable solution. At $T = T_c$ the two stable solutions merge and the intersecting line coincides with the tangent line touching the tanh function; see Figure 1(b). At that point, a closed relation for $T_c$ in terms of $S_0$ and $\Delta$ can be found (Eq. 12), which is used in Figure 3(a). It shows that if $S_0$ increases while $\Delta$ is kept constant, less agitation is required to motivate defectors to cooperate. When $T_c$ decreases to zero, defection loses its appeal. The figure also shows that numerical simulations yield very similar results for large networks but diverge for small ones. This also holds true for $T_c$ in Figure 2, which is lower for smaller networks (not shown).
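The dependence of the critical level on the offset can also be explored numerically rather than in closed form. The sketch below is not the authors' Eq. (12). It assumes the self-consistency condition $M = S_0 + \Delta \tanh(\Delta M / T)$ (single-spin energy $-SM$, Boltzmann weights) and locates by bisection the highest $T$ at which a group starting from full defection still settles on a defecting state with $M < 0$. It requires $0 < S_0 < \Delta$. All names and values are illustrative.

```python
import math


def settle(S0, delta, T, M_start, iterations=5000):
    """Fixed-point iteration of the assumed mean-field condition."""
    M = M_start
    for _ in range(iterations):
        M = S0 + delta * math.tanh(delta * M / T)
    return M


def critical_turmoil(S0, delta, T_low=1e-3, T_high=5.0, steps=30):
    """Bisect for the noise level at which the defecting state disappears."""
    D = S0 - delta  # value of defection; negative when S0 < delta
    for _ in range(steps):
        T_mid = (T_low + T_high) / 2
        if settle(S0, delta, T_mid, M_start=D) < 0:
            T_low = T_mid   # still stuck in defection: the critical level is higher
        else:
            T_high = T_mid  # cascade to cooperation: the critical level is lower
    return (T_low + T_high) / 2


delta = 0.75
results = {S0: critical_turmoil(S0, delta) for S0 in (0.1, 0.25, 0.5)}
for S0, T_c in results.items():
    print(S0, round(T_c, 3))
```

In this sketch the critical level drops as the offset grows while the increment is held constant, in line with the statement that less agitation is then required to turn defectors into cooperators.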

From the mean-field approximation follows the proportion of defectors and cooperators at given $T$ and pertaining $M$, after a time long enough for the system to settle down. The proportion of cooperators at $T_c$ is the critical mass (Marwell & Oliver, 1993), and can be inferred from the value of $M$ at the phase transition (Eq. 13). The mean-field analysis yields a closed expression for this value (Eq. 14). Note that the function in Eq. (14) yields a result only within a restricted range of parameter values, which sets an upper limit to $S_0$ for given $\Delta$. Solving for the proportion of defectors yields the expression (Eq. 15) used for Figure 3(b). It shows that the proportion of defectors at $T_c$ decreases with increasing $S_0$. In contrast to critical mass theory, however, the Ising model has no assumptions about initiative takers or leaders who win over the rest, rational decision-making (Marwell & Oliver, 1993), or learning that would require fairly stable feedback (Macy, 1991).
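As a worked step, the link between the order parameter and the two proportions follows from the definition of $M$ as the average behavior alone. The symbols $p_C$ and $p_D$ for the proportions of cooperators and defectors are our own shorthand and may differ from the authors' notation. The sketch also assumes $C = S_0 + \Delta$ and $D = S_0 - \Delta$.

```latex
\begin{align}
M   &= p_C\,C + (1 - p_C)\,D, \\
p_C &= \frac{M - D}{C - D} = \frac{M - S_0 + \Delta}{2\Delta}, \\
p_D &= 1 - p_C = \frac{S_0 + \Delta - M}{2\Delta}.
\end{align}
```

For instance, with $C = 1$ and $D = -1/2$ (illustrative values), $M = 1/4$ corresponds to half of the group cooperating.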

3. Discussion and conclusion

We have shown that under high uncertainty, the dilemma of collective action can be solved by nothing more than a portion of random noise. The asymmetric Ising model does not require any knowledge or accurate expectations of the participants, and only depends on conformism, which can be empirically observed in synchronous motion, gestures or shouting (McNeill, 1995; Jones, 2013). In particular, it has no assumptions about actors’ rationality, in contrast to critical mass theory, whereas it supports that theory’s key findings of the critical mass and the tipping point. Simulations add to our mean-field result that turmoil-driven cooperation is most likely in small groups, where cooperation starts at relatively low levels of noise.

Shortly before we finished this manuscript, two other papers appeared where an Ising model was used to solve this dilemma (Adami & Hintze, 2018; Sarkar & Benjamin, 2019), but their symmetric model requires complex quantum physics to define payoffs, in contrast to our simple definition. Along with empirical testing, perhaps also on other species, a future direction might be to explicitly model noisy information transmission on the group’s network (Quax, Apolloni, & Sloot, 2013).

Acknowledgments

Thanks to José A. Cuesta, Raheel Dhattiwala, Don Weenink and Alex van Venrooij for comments.

References

- Adami, C., & Hintze, A. (2018). Thermodynamics of evolutionary games. Physical Review E, 97, 062136.

- Barrat, A., Barthelemy, M., & Vespignani, A. (2008). Dynamical processes on complex networks. New York: Cambridge University Press.

- Boyd, R. (2018). A different kind of animal: How culture transformed our species. Princeton, NJ: Princeton University Press.

- Bruggeman, J., & Sprik, R. (2020). Cooperation for public goods under uncertainty. (arXiv:2001.02677)

- Castellano, C., Fortunato, S., & Loreto, V. (2009). Statistical physics of social dynamics. Reviews of Modern Physics, 81, 591.

- Collins, R. (2008). Violence: A micro-sociological theory. Princeton: Princeton University Press.

- Dion, D., & Axelrod, R. (1988). The further evolution of cooperation. Science, 242, 1385–1390.

- Durkheim, E. (1912). Les formes élémentaires de la vie religieuse. Paris: Presses Universitaires de France.

- Fehr, E., & Fischbacher, U. (2003). The nature of human altruism. Nature, 425, 785.

- Friedkin, N. E., & Johnsen, E. C. (2011). Social influence network theory: A sociological examination of small group dynamics. Cambridge, MA: Cambridge University Press.

- Galam, S., Gefen, Y., & Shapir, Y. (1982). Sociophysics: A new approach of sociological collective behaviour: Mean-behaviour description of a strike. Journal of Mathematical Sociology, 9, 1–13.

- Gavrilets, S. (2015). Collective action problem in heterogeneous groups. Philosophical Transactions of the Royal Society B, 370, 20150016.

- Goldstone, J. A. (2001). Toward a fourth generation of revolutionary theory. Annual Review of Political Science, 4, 139–187.

- Hardin, G. (1968). The tragedy of the commons. Science, 162, 1243–1248.

- Hussain, M. M., & Howard, P. N. (2013). What best explains successful protest cascades? ICTs and the fuzzy causes of the Arab Spring. International Studies Review, 15, 48–66.

- Johnson, N. F., Zheng, M., Vorobyeva, Y., Gabriel, A., Qi, H., Velásquez, N., & others. (2016). New online ecology of adversarial aggregates: ISIS and beyond. Science, 352, 1459–1463.

- Jones, D. (2013). The ritual animal. Nature, 493, 470–472.

- Jones, F. L. (1985). Simulation models of group segregation. The Australian and New Zealand Journal of Sociology, 21, 431–444.

- Konvalinka, I., Xygalatas, D., Bulbulia, J., Schjødt, U., Jegindø, E.-M., Wallot, S., … Roepstorff, A. (2011). Synchronized arousal between performers and related spectators in a fire-walking ritual. Proceedings of the National Academy of Sciences, 108, 8514–8519.

- Lewenstein, M., Nowak, A., & Latané, B. (1992). Statistical mechanics of social impact. Physical Review A, 45, 763–776.

- Lohmann, S. (1994). The dynamics of informational cascades: The Monday demonstrations in Leipzig, East Germany, 1989–91. World Politics, 47, 42–101.

- Macy, M. W. (1991). Chains of cooperation: Threshold effects in collective action. American Sociological Review, 56, 730–747.

- Marwell, G., & Oliver, P. (1993). The critical mass in collective action. Cambridge, MA: Cambridge University Press.

- McNeill, W. H. (1995). Keeping together in time: Dance and drill in human history. Cambridge, MA: Harvard University Press.

- Nowak, M. A., & Sigmund, K. (2005). Evolution of indirect reciprocity. Nature, 437, 1291–1298.

- Olson, M. (1965). The logic of collective action: Public goods and the theory of groups. Cambridge, MA: Harvard University Press.

- Ostrom, E. (2009). A general framework for analyzing sustainability of social-ecological systems. Science, 325, 419–422.

- Panchanathan, K., & Boyd, R. (2004). Indirect reciprocity can stabilize cooperation without the second-order free rider problem. Nature, 432, 499–502.

- Perc, M., Jordan, J. J., Rand, D. G., Wang, Z., Boccaletti, S., & Szolnoki, A. (2017). Statistical physics of human cooperation. Physics Reports, 687, 1–51.

- Quax, R., Apolloni, A., & Sloot, P. M. (2013). The diminishing role of hubs in dynamical processes on complex networks. Journal of the Royal Society Interface, 10, 20130568.

- Rustagi, D., Engel, S., & Kosfeld, M. (2010). Conditional cooperation and costly monitoring explain success in forest commons management. Science, 330, 961–965.

- Sarkar, S., & Benjamin, C. (2019). Triggers for cooperative behavior in the thermodynamic limit: A case study in public goods game. Chaos, 29, 053131.

- Shultz, S., Opie, C., & Atkinson, Q. D. (2011). Stepwise evolution of stable sociality in primates. Nature, 479, 219–222.

- Skocpol, T. (1979). States and social revolutions: A comparative analysis of France, Russia and China. Cambridge, UK: Cambridge University Press.

- Stauffer, D. (2008). Social applications of two-dimensional Ising models. American Journal of Physics, 76, 470–473.

- Tilly, C. (2002). Stories, identities, and political change. Lanham: Rowman and Littlefield.

- Tufekci, Z. (2017). Twitter and tear gas: The power and fragility of networked protest. New Haven: Yale University Press.

- Van den Berg, P., & Wenseleers, T. (2018). Uncertainty about social interactions leads to the evolution of social heuristics. Nature Communications, 9, 2151.

- Van Stekelenburg, J., & Klandermans, B. (2013). The social psychology of protest. Current Sociology, 61, 886–905.

- Weidlich, W. (1971). The statistical description of polarization phenomena in society. British Journal of Mathematical and Statistical Psychology, 24, 251–266.

- Wu, J.-J., Li, C., Zhang, B.-Y., Cressman, R., & Tao, Y. (2014). The role of institutional incentives and the exemplar in promoting cooperation. Scientific Reports, 4, 6421.