Abstract

Iron is an essential element in the construction materials for fission and fusion reactors. Because of the complexity of the underlying nuclear data, the evaluation of iron cross sections continues to represent a challenge for the international nuclear data community. A comprehensive validation of any new nuclear data evaluation (and of the computational procedure) against experimental benchmarks is therefore needed. The shielding benchmark database SINBAD includes a relatively large number of experiments with iron as a shielding material: 27 benchmarks altogether, and several more are known but have not yet been evaluated for the database. However, in order to use the benchmark information with confidence and to rely on the predictions based on integral benchmark calculations, it is crucial to verify the quality and accuracy of the measurements themselves, as well as the completeness of the available experimental information. This is done in the scope of the benchmark evaluation process. A further check of the reliability of the experimental information can be achieved by intercomparing the results of similar types of benchmark experiments and checking the consistency among them.

I. INTRODUCTION

Shielding benchmark experiments with well characterized neutron sources, geometry setups, and material compositions offer a powerful means for the verification and validation of the computational methods and models used for nuclear reactor analysis. Due to the importance of iron as an essential construction material for fission and fusion reactors and the complexity of the underlying nuclear data, a large number of benchmarks have been performed over the years for the validation of iron cross sections. The shielding benchmark database SINBAD (Ref. 1) includes numerous experiments with iron and/or steel as a shielding material: 27 out of a total of 102 benchmarks, and several more are known but have not yet been evaluated for the database. Three among them, the ASPIS Iron88, the Pool Critical Assembly (PCA) Replica, and the Oak Ridge National Laboratory (ORNL) PCA Pressure Vessel Facility (1980) (PCA ORNL), performed in the scope of the reactor pressure vessel (PV) surveillance program in the 1980s, are examined in this paper.

In order to use the benchmark information with confidence and to rely on the predictions based on integral benchmark calculations, it is crucial to verify the quality and accuracy of the measurements themselves, as well as the (completeness of) available experimental information. This is done in the scope of projects such as the International Criticality Safety Benchmark Evaluation Project (ICSBEP), the International Reactor Physics Benchmark Experiments (IRPhE), and SINBAD through a detailed examination of the available experimental documentation, preferably in cooperation with the experimentalists if (still) available. Further checks on the reliability of the experimental information can be achieved by intercomparing the results of similar types of benchmark experiments and checking the consistency between them.

Note that in the current SINBAD evaluations, the term benchmark experiment refers to experimental measurements performed under controlled, well understood, and specified conditions and does not involve any computational adjustment or “correction” of experimental values to account for the impact of computational model simplifications. This definition may differ from the definitions used in some other databases, such as the ICSBEP/IRPhE. Still, model simplifications are necessarily present because a detailed description of the setup, such as the neutron source and its surroundings, is not available for these older experiments.

II. VALIDATION OF IRON CROSS SECTIONS AGAINST SINBAD SHIELDING BENCHMARKS

Several iron benchmarks with different degrees of similarity, the ASPIS Iron88 (Refs. 2 and 3), the PCA ORNL (Ref. 4), and the PCA Replica (Ref. 5) (ASPIS) benchmarks, were analyzed using Monte Carlo (MCNP, Tripoli, and Serpent) and deterministic (DORT/TORT) codes to verify the trends observed for various nuclear data (Refs. 6–10), including JEFF-3.3 (Ref. 11), JENDL-4.0u (Ref. 12), ENDF/B-VIII.0 (Ref. 13), and FENDL-3.2 (Ref. 14), among others. Because their experimental uncertainties are uncorrelated, the PCA ORNL and PCA Replica experiments, although very similar in geometrical and measurement setups, represent a unique set of uncorrelated experimental data.

Two of these benchmarks have already been through the SINBAD quality review process (Refs. 15 and 16) and received the best score (♦♦♦, i.e., “Valid for nuclear data and code benchmarking”). MCNP computational models were prepared in the scope of the quality evaluation and are included in the evaluations. For the PCA ORNL benchmark (Refs. 17 and 18), the quality review still needs to be done.

A few drawbacks found in the SINBAD evaluations are listed in Table I. Supplementary information on the experiment was recently received in the Jacobs report (Ref. 19), covering the geometrical arrangement of the fission plate and ASPIS cave, the geometry and material of the detectors, the measurement arrangements and background contributions, and the 235U fission chamber measurements. The document (Ref. 19) is available to the working group participants from the Working Party on International Nuclear Data Evaluation Co-operation Subgroup 47 (WPEC SG47) GitHub repository (Ref. 20), and it is planned to be included in the SINBAD distribution.

TABLE I Drawbacks Identified in the SINBAD Benchmark Documentation for the ASPIS Iron88, PCA ORNL, and PCA Replica Benchmarks

These benchmarks have been extensively used in the past for the validation of nuclear data, probably starting with JEF-2.2 and ENDF/B-V. In Ref. 2, it was already concluded from an ASPIS Iron88 analysis that

The JEF2.2 iron data give good results for 32S(n,p)32P, 103Rh(n,n’) 103mRh and 197Au(n,γ) 198Au/Cd reaction rates through up to 67 cm of mild steel but poorer results for 115In(n,n’) 115mIn reaction rates suggest possible errors in the cross-sections of iron between 0.6 MeV and 1.4 MeV.

More recently, the analyses of the previously mentioned benchmarks indicated an underperformance of some recent iron cross-section evaluations. Since 2014, the authors have signaled discrepancies in the Collaborative International Evaluated Library Organisation (CIELO) iron evaluations (later ENDF/B-VIII.0) and JEFF-3.3 at high neutron energies, where the new evaluations performed considerably worse than older evaluations such as ENDF/B-VI and B-VII, JENDL-4.0, and others (Refs. 6 and 7). On the other hand, no major problems have been observed in the analyses of critical benchmarks. Despite the reported poor performance in shielding benchmarks, these evaluations were later selected for the official ENDF/B-VIII.0 and JEFF-3.3 libraries. The WPEC SG47 report, entitled “Use of Shielding Integral Benchmark Archive and Database for Nuclear Data Validation” (Ref. 20), was initiated in 2019 in order to improve the feedback loop.

Due to the importance of elements such as iron for fission and fusion reactor shielding applications, it is now well understood that validation against shielding benchmarks is crucial, which requires the availability of reliable benchmarks with well characterized and understood experimental uncertainties.

II.A. ASPIS Iron88, PCA Replica, and PCA ORNL Benchmark Descriptions

The ASPIS Iron88 benchmark (Refs. 2 and 3) studied the neutron transport for penetrations up to 67 cm in mild steel using neutrons emitted from a 93%-enriched uranium fission plate driven by the NESTOR research reactor. The shield was made from 13 mild steel plates, each approximately 5.1 cm thick, 182.9 cm wide, and 191.0 cm high, and a backing shield manufactured from mild and stainless steel (). The Au, Rh, In, S, and Al activation foils were inserted at up to 14 experimental positions between the 13 mild steel plates. The experimental uncertainties of the measured activation reaction rates were generally around 5%, including power normalization uncertainty (4%), counting statistics (in general 1% to 2%, but as high as 20% at the deepest S detector position), and detector calibration (systematic) uncertainty (1% to 5%). Systematic and statistical uncertainties were well separated and characterized in the reports (Refs. 2 and 3), which allowed for the construction of the correlation matrix of the measured reaction rates as needed for nuclear data adjustment studies (Ref. 6).
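The separation of systematic and statistical components is what makes such a correlation matrix possible. As a minimal sketch, and not the procedure of the cited reports, assume that the 4% power normalization is fully correlated across all positions, that the counting statistics are uncorrelated, and that the detector calibration term is ignored. The function name and the three-position example values are hypothetical.

```python
import math


def correlation_matrix(stat_pct, sys_pct):
    """Build covariance and correlation matrices of measured reaction rates.

    stat_pct: per-position uncorrelated (statistical) uncertainties, in %.
    sys_pct:  one fully correlated (systematic) uncertainty, in %.
    Assumption: the systematic term is common to all positions.
    """
    n = len(stat_pct)
    cov = [
        [sys_pct ** 2 + (stat_pct[i] ** 2 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    std = [math.sqrt(cov[i][i]) for i in range(n)]
    corr = [[cov[i][j] / (std[i] * std[j]) for j in range(n)] for i in range(n)]
    return cov, corr


# Illustrative values only: counting statistics of 1%, 2%, and 20%
# (the range quoted in the text) with a 4% power normalization.
stat = [1.0, 2.0, 20.0]
cov, corr = correlation_matrix(stat, sys_pct=4.0)
for row in corr:
    print(["%.3f" % c for c in row])
```

Under these assumptions, positions with small counting uncertainties are strongly correlated through the common normalization, while a position dominated by counting statistics is nearly independent of the others.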

The PCA ORNL 12/13 configuration (Ref. 4) consisted of a water/iron shield reproducing the ex-core region of a pressurized water reactor, simulating the thermal shield (5.9 cm thick), PV (22.5 cm), and the cavity with two light water interlayers (12/13 cm each) (). The neutron source was composed of 25 material test reactor fresh fuel elements, with a 93% 235U enrichment. The 237Np, 238U, 103Rh, 115In, 58Ni, and 27Al activation foils were measured at up to seven positions in the mock-up, including at 1/4, 1/2, and 3/4 of the PV thickness. The uncertainties in the measured equivalent fission fluxes were between 6% and 10%.

The PCA Replica reproduced the ORNL PCA experiment with a highly enriched fission plate driven by the NESTOR research reactor at Winfrith, United Kingdom, as a neutron source instead of the reactor core (). The experiment was performed at Winfrith in the early 1980s. The Rh, In, S, and Mn activation foils were inserted at up to seven experimental positions selected similarly to the PCA ORNL benchmark. The experimental uncertainties were around 5%, including absolute power calibration uncertainty (3.6%), counting statistics (generally about 2%), absolute calibration (systematic) uncertainties (3%, 2%, and 4%, respectively, for Rh, In, and S), and background (~1%).
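The quoted total of around 5% can be checked against the listed components. The sketch below assumes that the components are independent and combine in quadrature, which the text does not state explicitly; the variable and function names are illustrative.

```python
import math


def total_uncertainty(*components_pct):
    """Quadrature sum of independent relative uncertainties, in %."""
    return math.sqrt(sum(c * c for c in components_pct))


# Component values as quoted in the text for PCA Replica (in %).
POWER_CALIBRATION = 3.6
COUNTING_STATISTICS = 2.0
BACKGROUND = 1.0
FOIL_CALIBRATION = {"Rh": 3.0, "In": 2.0, "S": 4.0}

totals = {
    foil: total_uncertainty(POWER_CALIBRATION, COUNTING_STATISTICS, cal, BACKGROUND)
    for foil, cal in FOIL_CALIBRATION.items()
}
for foil, value in totals.items():
    print(f"{foil}: {value:.1f}%")
```

With these inputs the totals come out at roughly 5.2% (Rh), 4.7% (In), and 5.8% (S), in line with the "around 5%" stated above.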

The PCA Replica benchmark experiment was an almost exact replication of the PCA ORNL benchmark, with the exception that a well-defined fission plate containing 93%-enriched 235U was used as the neutron source instead of the reactor core, which resulted in a better characterization and lower uncertainty in the source description. Since the two benchmarks were performed independently, i.e., by different experimentalists using independent measurement systems, experimental block fabrications, and different neutron sources, the comparison between both results provides a valuable validation and verification of the uncertainties involved in the measurement results.

III. COMPUTATIONAL ANALYSIS

The computational analyses of the three benchmarks were performed independently by the United Kingdom Atomic Energy Authority (UKAEA) (ASPIS Iron88 and PCA Replica) and the Nuclear Research and Consultancy Group (NRG) (PCA ORNL), each using its own computational tools, in the same way that the experimental work at ORNL (PCA) and ASPIS (PCA Replica and Iron88) was independent. The analyses included transport calculations using the MCNP code and nuclear data sensitivity and uncertainty (S/U) analyses using deterministic SUSD3D/DORT-TORT (UKAEA) and Monte Carlo MCNP (NRG) techniques.

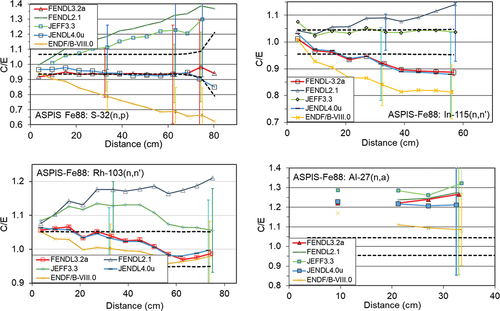

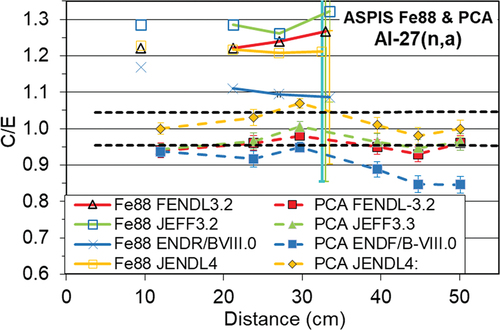

An example of the results of the transport calculations of the ASPIS Iron88 benchmark using the MCNP code is shown in Fig. 4 in terms of calculated-to-experimental (C/E) ratios. The results show a relatively large spread among the modern nuclear data evaluations, but are still roughly consistent with the experimental and nuclear data computational uncertainties shown in and . The MCNP results are considered a reference solution here, but consistent C/E values within 5% to 10% were also obtained using deterministic discrete ordinates codes (DORT/TORT). Good agreement between the deterministic and MCNP results provided confidence in the S/U results obtained using the SUSD3D code based on the direct and adjoint neutron fluxes calculated by the DORT and TORT codes.

TABLE II Experimental and Computational Nuclear Data Uncertainties for the Reaction Rates Measured in the ASPIS Iron88 Benchmark

TABLE III Experimental and Computational Nuclear Data Uncertainties for the Reaction Rates Measured in the PCA Replica Benchmark

Fig. 4. ASPIS Iron88 benchmark: C/E ratios for the 103Rh(n,n’) and 115In(n,n’) reaction rates calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. Examples of ±1σ computational (nuclear data) uncertainties calculated using the SUSD3D codes are shown.

The uncertainties in the calculated reaction rates due to nuclear data uncertainties are given in Tables II and III. Covariance matrices were taken from the JEFF-3.3, ENDF/B-VI.1, ENDF/B-VIII.0, and JENDL-4.0 evaluations, which are included in the XSUN-2023 package (Ref. 20). Owing to the large thickness of its iron slab, the uncertainties in the calculated reaction rates of the ASPIS Iron88 benchmark are a factor of 2 to 3 higher than in the case of PCA Replica. The ASPIS Iron88 benchmark therefore represents the most severe test of iron cross-section data among the previously described benchmarks.
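Uncertainties of this kind are commonly obtained by folding relative sensitivity profiles with relative covariance matrices using the first-order "sandwich" rule, var(R)/R² = Sᵀ C S. The following is a generic sketch of that rule and not the SUSD3D implementation; the three-group sensitivities, standard deviations, and correlations are hypothetical numbers chosen only to show the arithmetic.

```python
import math


def sandwich_variance(sens, rel_cov):
    """First-order relative variance of a response: S^T C S."""
    n = len(sens)
    return sum(sens[i] * rel_cov[i][j] * sens[j] for i in range(n) for j in range(n))


# Hypothetical 3-group example (not taken from any evaluation).
sens = [-0.5, -1.0, -0.2]      # relative sensitivities (%/%)
rel_std = [0.05, 0.10, 0.04]   # relative cross-section standard deviations
corr = [
    [1.0, 0.5, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 0.5, 1.0],
]
# Relative covariance matrix built from standard deviations and correlations.
rel_cov = [
    [corr[i][j] * rel_std[i] * rel_std[j] for j in range(3)] for i in range(3)
]

variance = sandwich_variance(sens, rel_cov)
print(f"relative uncertainty of the response: {100 * math.sqrt(variance):.1f}%")
```

Because the response uncertainty scales with the magnitude of the sensitivities, a thicker iron slab (larger sensitivities to the iron cross sections) leads directly to larger calculated uncertainties, which is the behavior described above.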

Note that the “Distance (cm)” in the tables and figures is measured in the case of the PCA ORNL and PCA Replica benchmarks from the aluminum window simulator (see and ), and in the case of ASPIS Iron88, from the beginning of the fission plate (A1 position in ). For ASPIS Iron88, this represents approximately the iron block thickness (minus 7.4 mm voids for foil positioning), but not for the PCA ORNL and PCA Replica experiments, where the total iron block thickness was only ~27 cm.

III.A. Consistency Between the PCA Replica and PCA ORNL Benchmark Results

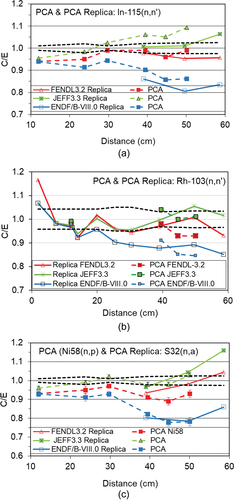

The consistency between the PCA ORNL and the PCA Replica experiments is most easily studied for the 103Rh and 115In activation foil results because these dosimetry reactions have been measured in both experiments. Figure 5 demonstrates a good consistency in the C/E results for the cross-section libraries that have been used in both cases, i.e., ENDF/B-VII.1, ENDF/B-VIII.0, FENDL-3.2a, and JEFF-3.3. For both In and Rh, the C/Es for the two experiments agree within about 5%, which is consistent with the 1σ experimental uncertainties of the measurements. The Ni and S reaction rates, measured in the PCA ORNL and PCA Replica benchmarks, respectively, have reasonably comparable responses and thresholds (~0.4 and ~1 MeV). Their C/E values are compared in Fig. 5 and show similar trends. The Ni foil measurements in the PCA ORNL benchmark also confirm the results of the In foils, both having roughly similar thresholds (~0.4 MeV). This confirms that these benchmarks represent a reliable basis for a comprehensive iron cross-section validation.
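A simple way to quantify such agreement is to express the difference between two C/E values in units of their combined 1σ experimental uncertainty. The sketch below assumes that the two measurements are uncorrelated and that the uncertainty of C/E is dominated by the measurement; the C/E values and relative uncertainties are hypothetical and are not results from this work.

```python
import math


def consistency_score(ce_a, rel_unc_a, ce_b, rel_unc_b):
    """Difference of two C/E values in units of the combined 1-sigma uncertainty.

    rel_unc_*: relative experimental uncertainties (e.g., 0.05 for 5%).
    Assumption: the two experiments are uncorrelated.
    """
    sigma_a = ce_a * rel_unc_a
    sigma_b = ce_b * rel_unc_b
    return abs(ce_a - ce_b) / math.sqrt(sigma_a ** 2 + sigma_b ** 2)


# Hypothetical C/E values for the same foil type in two experiments.
score = consistency_score(0.97, 0.05, 0.93, 0.08)
print(f"difference = {score:.2f} combined standard deviations")
```

A score below 1 means that the two C/E values differ by less than one combined standard deviation, which is the sense in which the two experiments are called consistent here.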

Fig. 5. PCA Replica and PCA ORNL benchmarks: C/E ratios for the 103Rh, 115In, and 32S/58Ni activation foils calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. (c) Comparison of the C/Es for two reactions having approximately similar thresholds: 32S(n,p) (PCA Replica) and 58Ni(n,p) (PCA ORNL).

For both experiments, the ENDF/B-VII.1, FENDL-3.2a, and JEFF-3.3 results are reasonably satisfactory, although the JEFF-3.3 results are on the high side for the 115In and 32S reaction rates and the FENDL-3.2 results are slightly low for the 115In and 58Ni reaction rates. ENDF/B-VIII.0 underestimates the measurements and shows a downward trend with increasing distance in steel.

An exception to the good agreement is the set of 27Al(n,α) reaction rate measurements discussed in Sec. III.C.

III.B. Comparison Between ASPIS Iron88 and PCA Replica Benchmark Results

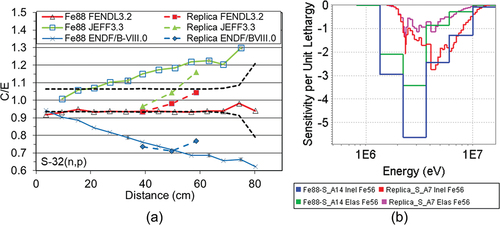

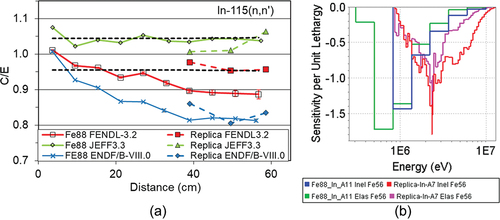

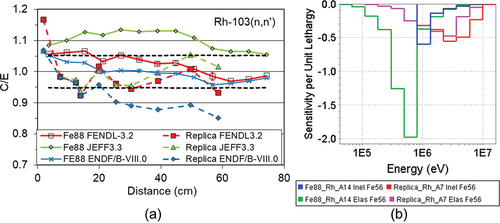

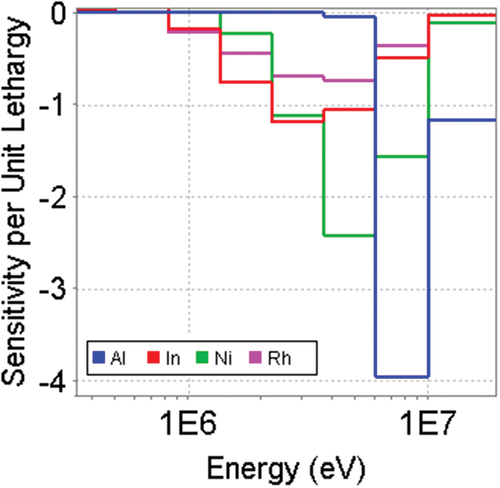

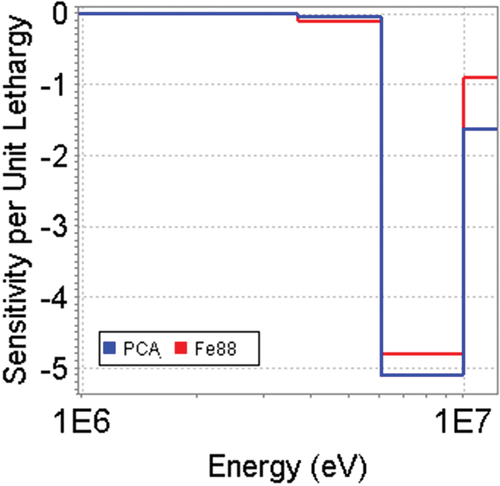

The analysis further demonstrated a good consistency among the ASPIS Iron88, PCA Replica, and PCA ORNL benchmark results, taking into account the differences in the nuclear data sensitivities and uncertainties. As shown in and , the ASPIS Iron88 benchmark, with a steel thickness more than two times larger than in the PCA experiments, represents the most severe test of iron cross-section data among the benchmarks, with considerably larger sensitivities of the calculated reaction rates to the underlying iron cross sections. In the case of the ASPIS Iron88 benchmark, the sensitivities and the nuclear data uncertainties for high-energy reactions, such as 32S, are up to about three times higher than in PCA Replica.

However, in addition to the differences in magnitude, the energy dependences of the sensitivity profiles also differ considerably, in particular for the In and Rh activation foils. As demonstrated in Figs. 6, 7, and 8, which compare the sensitivity of the measured reaction rates to the 56Fe elastic and inelastic cross sections, the sensitivities at deep positions in the ASPIS Iron88 block are shifted toward lower neutron energies compared to PCA Replica. Sensitivity profiles were calculated using the SUSD3D code and the direct and adjoint fluxes calculated by the TORT code and the JEFF-3.3 175- and/or 33-group cross sections. The sensitivity of PCA ORNL to the 56Fe inelastic cross section is similar to that of PCA Replica, as evidenced in Fig. 9, which shows the sensitivities at position D6 at about 50 cm in the experimental setup calculated using the MCNP code.
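For orientation, the sensitivity profiles discussed here can be read with the conventional first-order definition of a relative sensitivity coefficient. The notation below is a generic textbook form and an assumption on our part, not a transcription of the SUSD3D or MCNP formulation: $R$ is a reaction rate, $\sigma_x^g$ is the cross section of reaction $x$ in energy group $g$, and $S_x^g$ is the corresponding relative sensitivity.

```latex
S_x^{g} \;=\; \frac{\sigma_x^{g}}{R}\,\frac{\partial R}{\partial \sigma_x^{g}},
\qquad
\frac{\delta R}{R} \;\approx\; \sum_{x}\sum_{g} S_x^{g}\,\frac{\delta \sigma_x^{g}}{\sigma_x^{g}} .
```

In this reading, a profile shifted toward lower energies means that the groups $g$ carrying most of the sensitivity lie at lower neutron energies, so the same reaction rate tests a different energy range of the 56Fe cross sections in the two benchmarks.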

Fig. 6. Comparison of the ASPIS Iron88 and PCA Replica benchmark results for the 32S reaction rates. (a) C/E ratios calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. (b) Sensitivity of the 32S(n,p) reaction rates at the deepest measurement positions in the ASPIS Iron88 (position A14 at 74 cm) and PCA Replica (position A7 at 59 cm) benchmarks to the 56Fe inelastic and elastic cross sections. Legend: “Fe88_S_A14 Inel Fe56” stands for the sensitivity of the 32S reaction rate at position A14 to the inelastic cross section of 56Fe, etc.

Fig. 7. Comparison of the ASPIS Iron88 and PCA Replica benchmark results for the 115In reaction rates. (a) C/E ratios calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. (b) Sensitivity of the 115In reaction rates at the deepest measurement positions in the ASPIS Iron88 (position A11 at 57 cm) and PCA Replica (position A7 at 59 cm) benchmarks to 56Fe inelastic and elastic cross sections. Legend: “Fe88_In_A11 Inel Fe56” stands for the sensitivity of the 115In reaction rate at position A11 to the inelastic cross section of 56Fe.

Fig. 8. Comparison of the ASPIS Iron88 and PCA Replica benchmark results for the 103Rh reaction rates. (a) C/E ratios calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. (b) Sensitivity of the 103Rh reaction rates at the deepest measurement positions in the ASPIS Iron88 (position A14 at 74 cm) and PCA Replica (position A7 at 59 cm) benchmarks to 56Fe inelastic and elastic cross sections. Legend: “Fe88_Rh_A14 Inel Fe56” stands for the sensitivity of the 103Rh reaction rate at position A14 to the inelastic cross section of 56Fe, etc.

Fig. 9. Sensitivity of the 27Al(n,α), 58Ni(n,p), 103Rh(n,n’), and 115In(n,n’) reaction rates at the measurement position D6 (~50 cm) in the PCA ORNL benchmark to the 56Fe inelastic cross sections calculated using the MCNP6 code.

These differences in the magnitude and energy distribution of the sensitivities are reflected in the differences in the C/E behavior between ASPIS Iron88 and PCA Replica shown in Figs. 6, 7, and 8.

III.C. Discrepancy in Al Activation Foil Measurements Between the ASPIS Iron88 and PCA ORNL

As shown in Fig. 10, the 27Al(n,α) reaction rates, sensitive to the high-energy neutron flux above ~3 MeV, are severely overestimated for the ASPIS Iron88 experiment, by 20% to 30%. The exception is ENDF/B-VIII.0, with a 10% overestimation; however, these cross sections severely underperform for the other threshold reactions. In principle, the overestimation could be explained by the nuclear data uncertainties, which were found to be of the same order of magnitude (see and ), with the main contribution coming from the prompt fission neutron spectra uncertainties (~17%, ~29%, and ~14% using, respectively, the JEFF 3.3, ENDF/B-VII.1, and JENDL 4.0u covariance matrices) at high energies. On the other hand, a reasonably good agreement, or even an underestimation, was observed for the same reaction rates in the PCA ORNL benchmark. Moreover, the sensitivities of the 27Al(n,α) reaction rates with respect to the iron cross sections are very similar for the ASPIS Iron88 and PCA ORNL benchmarks (see Fig. 11).

Fig. 10. The ASPIS Iron88 and PCA ORNL benchmarks: C/E ratios for the 27Al(n,α) reaction rates calculated using the MCNP code and cross sections from the FENDL-3.2 and 2.1, JEFF-3.3, ENDF/B-VIII.0, and JENDL-4.0u evaluations. Dashed lines delimit the ±1σ measurement standard deviations. Examples of ±1σ computational (nuclear data) uncertainties calculated using the SUSD3D codes are shown.

Fig. 11. Sensitivity of the 27Al(n,α) reaction rate at the deepest measurement positions in the ASPIS Iron88 and PCA ORNL benchmarks to 56Fe inelastic cross sections.

This suggests that the C/E discrepancy between the two benchmark results could point to possible measurement issues, probably in ASPIS Iron88, since a reasonably good C/E agreement was observed for other reactions and the 27Al results in ASPIS Iron88 are the only outliers. Furthermore, Al activation foils were not regularly used in the other ASPIS benchmarks.

IV. CONCLUSIONS

In order to build confidence in the results of nuclear data verification and validation analyses, the integral benchmark experiments and the benchmark measurements themselves must be evaluated and checked to ensure that the experimental information is complete and consistent. Three benchmark experiments from the SINBAD database, PCA ORNL, PCA Replica, and ASPIS Iron88, which were performed independently by different experimental teams with different experimental equipment, were computationally analyzed independently by two analysts using Monte Carlo and deterministic transport and S/U computational tools.

Although some systematic uncertainties may be present in the two ASPIS benchmarks due to the use of common equipment (e.g., NESTOR reactor-driven fission plate, foil measurement system), they are expected to be minor. A good consistency was demonstrated among the three benchmark results, which gives confidence in the quality of the experimental information and measured results as described in the SINBAD evaluations. This confirms that these SINBAD benchmark evaluations represent a reliable basis for iron data validation. Owing to the large thickness of the iron slab, the ASPIS Iron88 benchmark clearly represents the most severe test of iron cross-section data among the three experiments.

A large spread of C/E results was observed among the modern iron nuclear data evaluations. Among the recent nuclear data evaluations, FENDL-3.2 and JENDL-4.0 demonstrated good performance for the ASPIS Iron88, PCA ORNL, and PCA Replica benchmarks. An underestimation of the 115In reaction rates by about 5% to 10% using the recent FENDL-3.2 was nevertheless observed for all three studied benchmark experiments.

It is highly recommended to integrate shielding benchmarks, such as the three benchmarks studied here, in the nuclear data verification and validation procedures. It was also demonstrated that S/U analysis combined with benchmark C/E comparisons can assist in the comprehensive verification and validation process and pinpoint nuclear data deficiencies.

Acknowledgments

This work has been partly funded by the RCUK Energy Programme (grant number EP/T012250/1). To obtain further information on the data and models underlying this paper, please contact [email protected]. Some fusion benchmark analyses have been partly carried out within the framework of the EUROfusion Consortium, funded by the European Union via the Euratom Research and Training Programme (grant agreement no. 101052200—EUROfusion) and from the Engineering and Physical Sciences Research Council (EPSRC) (grant no. EP/W006839/1). The views and opinions expressed, however, are those of the authors only and do not necessarily reflect those of the European Union or the European Commission. Neither the European Union nor the European Commission can be held responsible for them.

Disclosure Statement

No potential conflict of interest was reported by the authors.

References

- I. KODELI and E. SARTORI, “SINBAD—Radiation Shielding Benchmarks Experiments,” Ann. Nucl. Energy, 159, 108254 (2021); https://doi.org/10.1016/j.anucene.2021.108254.

- G. A. WRIGHT and M. J. GRIMSTONE, “Benchmark Testing of JEF-2.2 Data for Shielding Applications: Analysis of the Winfrith Iron 88 Benchmark Experiment,” Report No. AEA-RS-1231, EFF-Doc-229, and JEF-Doc-421, OECD/NEA (1993).

- G. A. WRIGHT et al., “Benchmarking of the JEFF2.2 Data Library for Shielding Applications,” Proc. 8th Int. Conf. on Radiation Shielding, Vol. 2, p. 816 (1994).

- “LWR Pressure Vessel Surveillance Dosimetry Improvement Program: PCA Experiments and Blind Test,” W. N. MCELROY, Ed., NUREG/CR-1861 (HEDL-TME 80-87 R5), Hanford Engineering Development Laboratory (July 1981).

- J. BUTLER et al., “The PCA Replica Experiment. PART I, Winfrith Measurements and Calculations,” AEEW-R 1736, UKAEA, AEE Winfrith (1984).

- I. KODELI and L. PLEVNIK, “Nuclear Data Adjustment Exercise Combining Information from Shielding, Critical and Kinetics Benchmark Experiments ASPIS-Iron 88,” Progress in Nuclear Energy, 106, 215 (2018).

- I. KODELI, “Transport and S/U Analysis of the ASPIS-Iron-88 Benchmark Using Recent and Older Iron Cross-Section Evaluations,” Proc. Physics of Reactors Int. Conf. 2018 (PHYSOR 2018), American Nuclear Society (2018) (CD-ROM).

- A. HAJJI et al., “Interpretation of the ASPIS Iron 88 Programme with TRIPOLI-4® and Quantification of Uncertainties due to Nuclear Data,” Ann. Nucl. Energy, 140, 107147 (2020); https://doi.org/10.1016/j.anucene.2019.107147.

- M. PESCARINI, R. ORSI, and M. FRISONI, “PCA-Replica (H2O/Fe) Neutron Shielding Benchmark Experiment—Deterministic Analysis in Cartesian (X,Y,Z) Geometry Using the TORT-3.2 3D Transport Code and the BUGJEFF311.BOLIB, BUGENDF70.BOLIB and BUGLE-96 Cross Section Libraries,” ENEA-Bologna Technical Report, UTFISSM-P9H6-009, ENEA Bologna (2014).

- P. C. CAMPRINI and K. W. BURN, “Calculation of the NEA-SINBAD Experimental Benchmark: PCA-Replica,” LA-CP-13-00634, ENEA-Bologna Technical Report, SICNUC-P000-014, ENEA Bologna (2017).

- “JEFF-3.3, The Joint Evaluated Fission and Fusion File,” Nuclear Energy Agency; https://www.oecd-nea.org/dbdata/jeff/jeff33/.

- “JENDL-4.0,” Japan Atomic Energy Agency; https://wwwndc.jaea.go.jp/jendl/j40/j40.html.

- “ENDF/B-VIII.0 Evaluated Nuclear Data Library,” National Nuclear Data Center, Brookhaven National Laboratory; https://www.nndc.bnl.gov/endf-b8.0/.

- “Fusion Evaluated Nuclear Data Library—FENDL-3.2b,” Nuclear Data Service, International Atomic Energy Agency; https://www-nds.iaea.org/fendl/.

- A. MILOCCO and I. KODELI, “Quality Assessment of SINBAD Evaluated Benchmarks: Iron (NEA-1517/34), Graphite (NEA-1517/36), Water (NEA-1517/37), Water/Steel (NEA-1517/49), Water/Iron (NEA-1517/75), Status Report,” Nuclear Energy Agency (2015).

- A. MILOCCO, B. ZEFRAN, and I. KODELI, “Validation of Nuclear Data Based on the ASPIS Experiments from the SINBAD Database,” Proc. RPSD-2018, 20th Topl. Mtg. Radiation Protection and Shielding Division, August 26–31, 2018, Santa Fe, New Mexico, American Nuclear Society (2018).

- I. REMEC and F. B. K. KAM, “Pool Critical Assembly Pressure Vessel Facility Benchmark,” NUREG/CR-6454 ORNL/TM-13205, Oak Ridge National Laboratory (1997).

- S. VAN DER MARCK, “The PCA Pressure Vessel Facility Benchmark—Results with JEFF Q19, ENDF/B, JENDL,” JEFF Mtg., Processing, Verification, Benchmarking, Validation, JEFDOC-2016, Nov. 25, 2020, OECD/NEA (2016).

- D. HANLON, “Responses to Quality Assessment Concerns: ASPIS PCA Replica and NESDIP 3,” Jacob’s report, Jacobs, Dorset (2021).

- I. KODELI et al., “Outcomes of WPEC SG47 on Use of Shielding Integral Benchmark Archive and Database for Nuclear Data Validation,” EPJ Web Conf., 284, 15002 (2023) ND2022, https://doi.org/10.1051/epjconf/202328415002.