Abstract

Design decision-making in infrastructure tenders is a challenging task for contractors due to limited time and resources. Multi-criteria decision analysis (MCDA) promises to support contractors in dealing with this challenge. However, the ability of MCDA to ensure decision quality in the specific context of infrastructure tenders has received little attention. This study investigates the relationship between MCDA and decision quality through a longitudinal case study of early design decisions in a tender for a design-build project in the Netherlands. The case results show that, in the early tender phase, decision-making relies heavily on the experience and knowledge of engineers. If MCDA is used inappropriately in such a context, it can create the impression of soundly underpinned evaluations of design options while neglecting uncertainties and leading to low-quality decisions. Although MCDA defines “what” is required for structuring the decision problem, it does not support decision-makers in “how” to do it. Explicitly considering decision quality elements in MCDA can support the “how” and can make decision-makers aware of the importance, scope and uncertainty of criteria.

Introduction

In public infrastructure projects that integrate design and construction, contracting firms are required to explore and decide on various design alternatives during the tender phase. During this tender period, which can last from 3 months for smaller projects to 1 year for larger projects, contractors have to evaluate a number of design options with varying levels of detail, based either on a preferred design that reflects different and sometimes conflicting customer needs or on prescribed functional requirements. In addition, developing an overall design solution for an infrastructure tender requires an early understanding of the impact of design choices on later project stages (Van Der Meer et al. 2015). Whether these early design decisions will lead to the most competitive and economically feasible solution remains unknown until the client has evaluated all submitted solutions and selected a preferred bidder. Even then, the preferred bidder still runs the risk of having submitted an economically unfeasible solution due to mistakes made during the tender. These mistakes will manifest themselves in later project phases such as the detailed engineering phase or the construction phase.

To address the large variety of criteria involved in design decisions for infrastructure tenders, multi-criteria decision analysis (MCDA) tools and methods (e.g. Analytic Hierarchy Process, Multi-Attribute Utility Theory) appear beneficial for systematically structuring both the decision problem and the stakeholders' considerations and preferences regarding the different alternatives. MCDA promises to significantly improve the quality of the decision-making process by introducing transparency, analytic rigour, auditability and conflict resolution for multidimensional decision problems (Kabir et al. 2014). Not surprisingly, MCDA has gained popularity in various industries (Wang et al. 2009, Huang et al. 2011, Kabir et al. 2014, Mardani et al. 2016) and also for decision problems in the construction and infrastructure sector (Jato-Espino et al. 2014, Bueno et al. 2015, Tscheikner-Gratl et al. 2017). This also includes the tender phase of construction projects. Previous research has suggested several MCDA approaches that can support construction clients in selecting an appropriate contractor (Hatush and Skitmore 1998, Fong and Choi 2000, Mahdi et al. 2002, Cheng and Li 2004, Singh and Tiong 2005) or contractors in selecting a suitable bidding strategy (Fayek 1998, Marzouk and Moselhi 2003). However, these studies have also shown that applying MCDA typically requires the decision maker either to make sharp criteria judgements on a rather weak information basis or to follow a time-consuming process to account for decision uncertainties. This raises doubts about the suitability of MCDA for ensuring high-quality design decisions in the early tender phases of integrated projects. In these phases, contractors are often forced by limited time and resources to make design decisions without sufficient information to fully understand the entire set of infrastructure requirements, the operational environment of the infrastructure and the emergent infrastructure behaviour (Laryea 2013, Van Der Meer et al. 2015).

By conducting a longitudinal case study of early design decisions in a tender for a design-build project in the Netherlands, this research aims to explore the suitability of MCDA for ensuring decision quality in the context of infrastructure tenders. It extends the understanding of MCDA application in the construction sector by showing that inappropriately used MCDA tools and methods can create the impression of soundly underpinned evaluations of design options while neglecting uncertainties and leading to premature, low-quality decisions. In particular, it shows that MCDA in the early tender phases of integrated projects cannot prevent variations in problem framing between engineers, differences in how engineers use and rely on criteria in decision-making, or inconsistencies in the desired outcomes resulting from insufficiently detailed design solutions.

The next section introduces the concept of decision quality and integrates it with the general steps of building an MCDA, yielding a framework for analyzing the decision quality achieved in a tender for a Dutch infrastructure design-build project. Next, the research approach of the longitudinal case study is outlined, including how the MCDA process, which used the weighted-sum method (WSM) with a trade-off matrix (ToM) as its tool, is evaluated. Thereafter, the case study results are presented. The discussion section reflects on decision quality in infrastructure tenders when MCDA is used and identifies possible improvements to the quality of the decision-making process. The conclusion section makes some general remarks on the possibility of safeguarding decision quality in the tender context by combining decision quality elements with MCDA.

Conceptual basis

Decision quality

The quality of decision-making can be assessed in two ways: (1) by the process of making a decision and (2) by the outcomes of a decision (Hershey and Baron 1992, Keren and Bruin 2005). The outcome perspective emphasizes the actual consequences of a decision, which are, however, very hard to determine because no objective criterion is available when the decision is made. That is, evaluating decision quality requires knowing the possible outcomes of a decision, which are not readily accessible before the decision is made (Timmermans and Vlek 1996). For construction projects, many evaluations of comparable projects would be required to determine the possible outcomes of decisions made in tenders. Although such evaluations might be valuable for contractors, they are impossible to compare. Decisions made in construction projects are highly interdependent, which makes it impossible to isolate the actual consequences of each decision. Some outcomes cannot be evaluated even if the evaluation data are available. For example, the bidding strategy of competitors is an uncertain determinant that cannot be judged before the decision and can lead to losing the bid despite the best analysis during the tender. From a process-oriented perspective, the effort invested in making the decision determines its quality. The main idea here is that the quality of a decision is determined not by its outcome but by the quality of the analysis and thought that went into making it (Abbas 2016). Consequently, the quality of a decision does not necessarily determine its outcome. For example, a carpenter decides to quickly repair a rooftop without using his safety equipment. The repair is successful and completed without any accidents. In this case, the decision itself would not be classified as high quality, even though its outcome is successful.
The carpenter can influence the quality of his decision only before making it. He has no control over its outcome because of external circumstances such as a sudden gust of wind. Therefore, the quality of a decision is better measured by the process of making it. This process-oriented view of decision quality suits the tender context because the outcome of design decisions remains unknown until the project is awarded or eventually built. For example, suppose a decision is made to repair an existing structure instead of rebuilding it. The contractor learns the outcome (and the corresponding consequences) of the chosen option only if the tender is awarded; the consequences of the other alternative remain unknown. Without this outcome information, the evaluation of the decision contains an inherent component of uncertainty (Einhorn and Hogarth 1978). Thus, the main argument for a process-based approach is that all decisions in an infrastructure tender are made under uncertainty and risk, or as Vlek (1984, p. 7) put it: “A decision is, therefore, a bet, and evaluating it as good or not must depend on the stakes and the odds, not on the outcome”. The difficulty, however, is to obtain an appropriate structure and problem space that reflects all possible outcomes, the degree to which they fulfil the goals, the contingencies between decision and outcome, and the probability of occurrence of different outcomes. The best decision, then, is the alternative with the highest chance of fulfilling the decision maker’s goals. Therefore, the process-oriented approach evaluates a decision’s quality by its structure, including how well it represents the decision maker’s goals (Keren and Bruin 2005).

High-quality decisions can be characterized by the following six elements, all of which need to be present in the decision-making process (Howard 1988, Spetzler et al. 2016). The first element is an appropriate frame of the decision, which includes a clear understanding of the problem and the determination of the boundaries of the decision. These boundaries are set by what is given, what needs to be decided during the tender and what can be decided after the contract is awarded. They vary from tender to tender depending on the client's wishes, contractual boundaries such as the price and non-price factors in the economic scoring formula (Ballesteros-Pérez et al. 2012), and the connection with existing infrastructure. The second element is the identification of creative and feasible alternatives. Design alternatives in tenders range from a higher level of detail, when they build on a preferred design reflecting the client's needs, to a lower level of detail, when they are based only on functional requirements. The third element is the availability of meaningful, reliable and unbiased information that reflects all relevant uncertainties and risks. Information in a tender is either made available by the client or requires additional resources from the contractor for inspections, tests or research on site. During a tender, specific (governmental) regulations that guarantee transparency and equal opportunity for bidders limit the availability of information that could reduce uncertainty and risk. Examples are all sorts of inspections needed to analyze the current state of structures or soil conditions, and specific stakeholder requirements and wishes, as contacting stakeholders is often not allowed. The fourth element is clarity about the desired outcomes, including acceptable trade-offs. This element relates to the subjective assessment of the potential outcomes of each alternative, described in terms of qualitative (e.g. scores) and quantitative (e.g. predicted costs) values, and the corresponding assessed outcome probabilities. The fifth element is the logic by which the decision is made. This includes considering uncertainty and risk at an appropriate level of complexity. In infrastructure tenders, under-defined and conflicting objectives, such as the economic impact of client wishes, and incomplete knowledge of the infrastructure's behaviour in later project stages are just a few of the uncertainties to be considered. The decision maker may select the alternative with the highest expected value or the most certain alternative, or apply any other decision logic. The sixth element is the commitment of all stakeholders to action, so that the decision is implemented effectively.
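The fifth element implies that the decision logic is itself a choice. As a minimal sketch, assuming hypothetical outcome values and probabilities for the repair-versus-rebuild example used earlier (none of these numbers come from the case), the snippet below shows that a highest-expected-value logic and a most-certain logic can select different alternatives from the same information:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Alternative:
    name: str
    # Hypothetical (probability, value) pairs; probabilities should sum to 1.
    outcomes: list[tuple[float, float]]

    def expected_value(self) -> float:
        return sum(p * v for p, v in self.outcomes)

    def spread(self) -> float:
        # Probability-weighted standard deviation around the expected value.
        ev = self.expected_value()
        return sum(p * (v - ev) ** 2 for p, v in self.outcomes) ** 0.5


def choose_by_expected_value(alternatives: list[Alternative]) -> Alternative:
    return max(alternatives, key=lambda a: a.expected_value())


def choose_most_certain(alternatives: list[Alternative]) -> Alternative:
    return min(alternatives, key=lambda a: a.spread())


# Hypothetical values in arbitrary units (higher is better for the contractor).
alternatives = [
    Alternative("repair existing structure", [(0.6, 10.0), (0.4, -5.0)]),
    Alternative("rebuild structure", [(1.0, 3.0)]),
]

print(choose_by_expected_value(alternatives).name)  # repair existing structure
print(choose_most_certain(alternatives).name)       # rebuild structure
```

Neither logic is correct in general. In line with the process view above, the point is that the chosen logic should be made explicit, because the realized outcome of the repair option could still turn out negative.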

These decision quality elements (DQ elements) provide criteria for evaluating the performance of the decision maker in (1) obtaining relevant information and (2) constructing the problem space and inserting the relevant information appropriately into the decision problem structure.

Multi-criteria decision analysis and decision quality

The aim of multi-criteria decision analysis (MCDA) is to help decision-makers deal with complex problems characterized by conflicting objectives. It supports a decision maker by organizing and synthesizing the available information to identify the most important criteria for selecting a solution, comparing alternative solutions on those criteria and finally deciding on one solution. This process typically requires scoring or ranking various alternatives against multiple criteria. The result of the analysis is derived from these scores: the alternative with the highest score or rank is the most preferred solution (Keeney 1988). The decision maker is expected to be consistent and rational in his/her preferences and to avoid post-decision regret or drawbacks in the decision process.

Literature on multi-criteria decision-making has increased tremendously since the 1970s, and a multitude of decision-making methods and tools have been developed for a variety of decision problems (Belton and Stewart 2003, Mela et al. 2012). Numerous reviews have been conducted on the application of MCDA methods in different fields such as agriculture (Hayashi 2000), environmental planning (Huang et al. 2011), forest management (Ananda and Herath 2009), sustainable energy (Wang et al. 2009) and supply chain management (Ho et al. 2010), but also construction (Jato-Espino et al. 2014) and infrastructure (Kabir et al. 2014). These reviews reveal the advantages and disadvantages of MCDA methods, which are often designed for a specific decision context. They also show that, despite their variety, the methods share the aim of structuring and guiding the decision-making process to support rational, well-informed and committed decisions. In this sense, they inherently intend to improve the decision quality of the MCDA process, which can be described by the following four steps (Guitouni and Martel 1998), each related to elements of decision quality (Table 1):

Determine various alternatives: The identification of alternatives is required to start a multi-criteria decision analysis. This first step in an MCDA is linked with the DQ element “alternatives”, as the identified alternatives should fit the problem at hand. The first step of an MCDA therefore supports decision quality by structuring the considered alternatives. In infrastructure tenders, the client often provides a reference design that can serve as input for the contractor’s design alternatives. Contractors typically consider this reference design and use their design and construction knowledge to develop other feasible alternatives.

Determine the criteria that need to be considered: This second step in an MCDA determines the criteria required to compare alternatives and is linked to the DQ elements “frame” and “information”. The “frame” represents the boundaries of the decision, which are determined by the criteria considered in an MCDA. These criteria determine what relevant information is required or should be known, including the uncertainty in this information. For example, the price and non-price criteria stated in the economic scoring formula used in public tendering, as well as the contract requirements and possible opportunities and risks, can serve as input for determining the criteria in an MCDA for an infrastructure tender.

Determine the scoring of each alternative per criterion: The scoring is linked with the DQ elements “logic” and “information”. The scoring of each alternative in an MCDA is affected by individual decision-making behaviour (Barfod et al. 2011) or group decision-making behaviour (Skorupski 2014) and can require consideration of cognitive limitations (Simon 1979) or personal biases (Laing et al. 2014). It also includes considerations of uncertainty and risk, which are affected by the increased level of complexity in a tender.

Summarize the scores and determine the solution: The scores on the different criteria are combined for each alternative to find the best choice. The DQ elements “desired outcomes”, “logic” and “commitment to action” are linked in this last step of an MCDA. The potential outcomes of each alternative are described in terms of qualitative and quantitative values, and a decision is made based on sound reasoning. The key questions are: do we agree on the chosen solution, and are we committed to this decision?

Table 1. Linking decision quality and MCDA.

These four steps cover all DQ elements, indicating the potential of MCDA in safeguarding the quality of design decisions in infrastructure tenders.
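The coverage claim can be checked mechanically. The sketch below encodes the links between the four MCDA steps and the six DQ elements exactly as described above (the identifiers are illustrative) and confirms that no element is left uncovered. Such a check only shows that each element is addressed by some step, not how well it is addressed in practice:

```python
from __future__ import annotations

DQ_ELEMENTS = {
    "frame",
    "alternatives",
    "information",
    "desired outcomes",
    "logic",
    "commitment to action",
}

# Links between MCDA steps and DQ elements as described in the text.
MCDA_STEPS = {
    "1 determine alternatives": {"alternatives"},
    "2 determine criteria": {"frame", "information"},
    "3 score alternatives per criterion": {"logic", "information"},
    "4 summarize scores and decide": {"desired outcomes", "logic", "commitment to action"},
}


def uncovered(elements: set[str], steps: dict[str, set[str]]) -> set[str]:
    """Return the DQ elements that no MCDA step addresses."""
    covered = set().union(*steps.values())
    return elements - covered


def steps_for(element: str, steps: dict[str, set[str]]) -> list[str]:
    """Return the MCDA steps linked to a given DQ element, in step order."""
    return [step for step, linked in steps.items() if element in linked]


print(uncovered(DQ_ELEMENTS, MCDA_STEPS))  # set()
print(steps_for("information", MCDA_STEPS))
```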

Multi-criteria decision analysis for design decisions in infrastructure tenders

Recent research has shown that decisions made in a construction tender do not hold up well once the project is awarded, due to premature tender documents, too many changes in the owner’s requirements and unrealistically low tender-winning prices (Rosenfeld 2014). With the increased design responsibility of contractors in integrated projects, the quality of design decisions is additionally at stake, since the tender phase introduces uncertainties related to the internal and external environment of the decision-making process (Durbach and Stewart 2012). Uncertainties related to the external environment of design decisions arise from the multiple stakeholders in integrated projects, with their often under-defined and conflicting objectives, changing and unique decision criteria, and unclear preferences over alternatives (Kim and Augenbroe 2013). Uncertainties related to the internal environment stem from the limited time and resources for design tasks in a tender. Design teams are often forced to advance the design by taking decisions without fully understanding the entire set of requirements, the operational context and the emergent behaviour of the solution (Laryea 2013, Van Der Meer et al. 2015). Typically, decision-makers try to control internal uncertainties and assess external uncertainties of design decisions, and MCDA is supposed to support them in this. However, two opposing challenges that design teams face in a tender may undermine the potential of MCDA to ensure decision quality. On the one hand, there is pressure to propel the design process by taking decisions under resource and time constraints. It has been shown that decision makers under time pressure process less information by narrowing their field of attention and rigidly reverting to known behaviour (Klapproth 2008).
MCDA does not provide guidance on how to obtain relevant information, how to construct the problem space or how to link relevant information appropriately to it. Thus, in pressurized situations, the different frames, levels of information or logics of the individuals involved are likely to persist. On the other hand, there is a need to cope with design uncertainties. Applying MCDA requires the decision maker either to assess criteria deterministically or to assign probability distributions to criteria and establish utility curves to account for uncertainties. For design decisions in the tender context, the former can rely only on incomplete and insufficient information, and the latter is a time-consuming and methodologically demanding process (Velasquez and Hester 2013). If, in addition, the decision maker does not understand how MCDA methods work and whether they are appropriate for the decision at hand, the outcome of an MCDA can create the illusion of a consistent and rational choice (Polatidis et al. 2006, Scholten et al. 2015). Although scholars have extensively addressed the methodological differences and challenges of MCDA methods, the relationship between MCDA and decision quality has received little attention so far, and there are currently no studies on this relationship for design decisions in integrated project tenders.
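The difference between a deterministic and a probabilistic assessment of criteria can be illustrated with a small weighted-sum example. This is a minimal sketch under explicit assumptions: the criteria, weights and score ranges are hypothetical, uncertain scores are modelled as uniform ranges on a 0 to 10 scale (higher is better), and simple Monte Carlo sampling stands in for the fuller probability-distribution and utility-curve approach mentioned above. Collapsing each range to its midpoint yields a sharp ranking, while sampling shows how fragile that ranking is:

```python
import random

# Hypothetical criteria weights (sum to 1).
WEIGHTS = {"costs": 0.5, "traffic nuisance": 0.3, "construction risk": 0.2}

# Hypothetical (low, high) performance ranges on a 0-10 scale, higher is better.
SCORE_RANGES = {
    "alternative A": {"costs": (6, 9), "traffic nuisance": (3, 7), "construction risk": (4, 8)},
    "alternative B": {"costs": (5, 8), "traffic nuisance": (6, 8), "construction risk": (5, 7)},
}


def weighted_total(scores, weights):
    return sum(weights[c] * s for c, s in scores.items())


def deterministic_ranking(ranges, weights):
    """Rank alternatives after collapsing each score range to its midpoint."""
    totals = {
        alt: weighted_total({c: (lo + hi) / 2 for c, (lo, hi) in crit.items()}, weights)
        for alt, crit in ranges.items()
    }
    return sorted(totals, key=totals.get, reverse=True)


def probability_best(ranges, weights, n=10_000, seed=1):
    """Estimate how often each alternative ranks first when scores are sampled."""
    rng = random.Random(seed)
    wins = dict.fromkeys(ranges, 0)
    for _ in range(n):
        totals = {
            alt: weighted_total({c: rng.uniform(lo, hi) for c, (lo, hi) in crit.items()}, weights)
            for alt, crit in ranges.items()
        }
        wins[max(totals, key=totals.get)] += 1
    return {alt: count / n for alt, count in wins.items()}


print(deterministic_ranking(SCORE_RANGES, WEIGHTS))  # ['alternative B', 'alternative A']
print(probability_best(SCORE_RANGES, WEIGHTS))
```

In this invented example the midpoint ranking puts B first, yet B comes out on top in only slightly more than half of the sampled scenarios: the kind of uncertainty a single sharp score hides.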

Research design

Longitudinal case study of an infrastructure tender

To explore the relationship between MCDA and decision quality in the tender context, a single longitudinal case study was set up. The chosen case was a large infrastructure tender covering the integrated design, engineering and (re)construction of a large traffic junction with more than 30 km of highway and at least 40 civil engineering objects. The case study covered a period of 7 months, from the start of the tender until the submission of the tender. This time window represents a valid boundary for the investigation (Street and Ward 2012), since it reveals the consistency and rationality of the decision-making process during the tender and thus the quality of the decisions made. The tender can be considered complex because of its large size, its multidisciplinary scope, the integration of design, engineering and (re)construction phases and the limited preparation time of 7 months. The budget was capped at about €420 million. The tender organization consisted of a consortium of three contractors supported by a consultancy firm specializing in the planning phase of projects. The three contractors set up a separate firm for this project, while the consultancy firm was involved as a special partner. The scope of the research was limited to decision-making for the design of the traffic junction; the design decisions for the 40 objects and other parts of the highway were excluded.

The rationale for choosing a single case was that the investigated tender represents a “typical case” (Yin 2003) for integrated projects in the Dutch infrastructure sector in terms of the contractors' responsibility for integrating design and construction for an infrastructure composed of multiple objects, the involvement of multidisciplinary teams in the design process, and the restricted time frame for preparing the tender. The case study results are therefore expected to be insightful for similar projects. A further rationale was the longitudinal and exploratory character of the study (Yin 2003), which made it possible to reveal the influence of the tender phase on the quality of design decisions.

Data collection

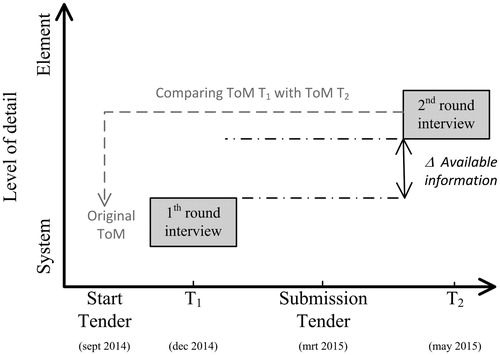

During the 7-month tender period, 6 observations and 10 interviews took place. Another 15 interviews were held 2 to 3 months after the tender submission (see Figure 1). Selecting the entire tender period as the time unit ensures time unit validity (Street and Ward 2012), since it allowed changes in the decision quality elements over the course of the tender process to be captured.

The first author was actively involved in the project but took no part in the team responsible for the design decisions. This gave the researchers full access to all project information, including the trade-off matrices used for the design decision and the memos of the design meetings. This involvement also made it possible to quickly notice sudden changes in the situation, for example changes in the attitude of the team after a meeting with the client or changes in contract requirements, and to observe how the team reacted to them. The observations were carried out during weekly meetings between the management team and the head engineers. Their objective was to identify the group process when discussing possible alternatives and the general opinion of the group regarding the current state of the design. The observations were carried out by the first author, who took notes during the meetings. The observations, desk research and interviews allowed for triangulation of the data. To assess the quality of the design decisions about the traffic junction and the related decision-making behaviour, the 25 interviews were divided into two separate rounds, during and after the tender (Figure 1).

First round of interviews

In the first round (at T1), 10 interviews were conducted with individuals from the management team and head engineers, which included the tender, design, process, road-design, construction-design, traffic, planning and construction managers, the scheduler and the calculator. The interviews were designed to determine the influence of the interviewee on the decision-making process and on the drawing up of the trade-off matrix. The 10 individuals were chosen because they were key players in the design and tender processes. The interviews were held half-way through the tender to ensure that the chosen solution in the MCDA at the end of the tender would not interfere with the responses given by the interviewees. A list of predetermined questions regarding the determination of the alternatives and the criteria, the availability of required information for scoring the MCDA and the logic behind the scoring formed the basis for the semi-structured interviews. Each interview lasted ∼1 h. All interviews were recorded, transcribed and compared with the observations made during the tender.

Second round of interviews

The second round (at T2) consisted of 15 interviews with key individuals to understand the decision-making process during their design task. The same team members as in the first round were interviewed again. In addition, five domain-specific specialists (geotechnical engineer, traffic specialist, architect, road engineer and civil construction engineer) were interviewed because they were involved in the decision-making process for the traffic junction. The interviews were held directly after the tender submission to evaluate the decision process. This created the opportunity to re-create the decision-making process with the participants, who this time could draw on all the knowledge and information they had gathered during the tender. The outcome of this re-created decision-making process was compared with the original outcomes of the tender. These semi-structured interviews lasted between 1 and 1.5 h and were recorded and transcribed.

To understand the decision-making process of the key individuals, the conceptual content cognitive map (3CM) method of Kearney and Kaplan (1997) was used. The 3CM method is a technique for exploring and measuring, in a graphical representation, the engineer’s perspective on the multi-criteria decision-making process (Tegarden and Sheetz 2003). Such decisions are based on an engineer’s mental model, as each engineer interprets information differently (Steiger and Steiger 2007). Mental models are knowledge structures that integrate the ideas, assumptions, beliefs, facts and misconceptions that together shape the way an individual views and interacts with reality (Kearney and Kaplan 1997). Explicitly mapping the different perspectives frames information in a way that encourages evaluation of the engineers’ decision-making process at an individual level (Ahmad and Azman Ali 2003). This individual perspective on the decision-making process is valuable because each engineer holds a different cognitive map owing to differences in experience and training. Using cognitive maps allowed us to compare all the individual mental models with the overall decision-making process during the tender. The method was especially valuable under the challenging circumstances of this tender, in which many multidisciplinary criteria had to be considered within just 7 months without knowing the impact of the chosen alternative on the planning and construction phases. The team had to re-design the junction within an existing junction, understand the consequences for the environmental impact assessment, and assess whether the new junction could be built with minimal nuisance to traffic.

In preparation for the second round of interviews, a list of criteria considered relevant for general decision-making in infrastructure tenders using trade-offs was developed (Table 2). This list was developed by three experts with more than 10 years’ experience in decision-making in construction projects, none of whom were involved in the case study itself. Using this list ensured that all participants began with the same set of initial criteria, an approach that is suitable for large sample sizes and less time-consuming (Kearney and Kaplan 1997).

Table 2. List of criteria.

The interviews were structured by the following steps:

The predetermined list of criteria was used to support the interviewee when choosing the most important criteria for the trade-off. To control for bias, the interviewees were told that they could also write down criteria that were not listed.

The interviewee had to give a short explanation of each chosen criterion.

The interviewee had to cluster all chosen criteria and state the relationships between the clusters. Within each cluster, the most important criteria were identified.

The same steps were repeated to list the information sources they considered important for each criterion.

Upon completion of the map, the interviewee had to reflect on the decision-making process during the tender. This step allowed us to validate each created map, which was the result of the engineer’s perspective regarding the multi-criteria decision-making process based on his experience during the tender. The interviewee had to state the similarities and differences between the created map and the decision-making process during the tender. The interviewee was also asked whether all relevant criteria were mentioned.

The interviewee had to compare the original trade-off, i.e. the criteria considered in the ToM, with the created cognitive map.

The interviewee had to re-score the trade-off used during the tender. For this purpose, all scores given by the engineers during the tender were erased. During the tender, the scoring was done by domain-specific engineers who scored only the criteria of their own domain. For example, the road design manager scored the criteria for the road design, while the civil design manager scored only the criteria for the structures. During the interview, we simulated the same situation by asking the interviewee to re-score only the domain-specific criteria. At both scoring moments (T1 and T2), the engineers had to give a score of −1 (this design solution is worse than the other design solutions), 0 (this design solution is as good as the other design solutions) or 1 (this design solution is better than the other design solutions). At T1, the engineers scored 11 design solutions. These were variants, with small design changes, of 5 design solutions (turbine-stack hybrid, windmill interchange, two-level turbine, clover-stack interchange and a hybrid interchange). At T2, the engineers scored only the top 3 design solutions because the interview time was limited.

We ended the interview by presenting the original scores to allow for a short narrative of the differences and similarities. We discussed the scoring variations with the engineer to account for possible differences caused by the reduced number of compared alternatives.

Table 3. Results of consistency scoring.

Data analysis

The tender teams of the project case applied the weighted-sum method (WSM) (Triantaphyllou Citation2000) for the MCDA and used a trade-off matrix (ToM) as a tool for comparing and scoring design options on various criteria. In the case study, the ToM also served as a means to understand the MCDA process and the quality of the design decisions, since difficulties in achieving decision quality were expected in steps two (determine the criteria) and three (determine the scoring) of the MCDA. The data analysis therefore focused on steps 2 and 3 of building an MCDA. The creative process of step 1 is required as input for studying the decision process itself; this step is briefly described in the results to clarify the case. Steps 2 and 3 were analyzed to create an understanding of the quality of the decision process. The combination of active involvement, observations and interviews made it possible to analyze the development of the design decisions in the context of a tender. A summary of all steps in the analysis is presented in Table 4.
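The weighted-sum aggregation behind a ToM can be illustrated with a minimal Python sketch. Only the −1/0/1 scale is taken from the case; the criterion weights, the scores and the `coverage` helper are hypothetical, added to show how criteria left unscored (`None`) silently drop out of a weighted sum.

```python
from typing import Dict, Optional

# Three-point scale used in the case ToM; None marks a criterion left unscored.
VALID_SCORES = (-1, 0, 1)


def weighted_sum(scores: Dict[str, Optional[int]], weights: Dict[str, float]) -> float:
    """Weighted-sum (WSM) score of one alternative; unscored criteria are skipped."""
    total = 0.0
    for criterion, weight in weights.items():
        score = scores.get(criterion)
        if score is None:
            continue  # skipped silently, so the total hides the missing criteria
        if score not in VALID_SCORES:
            raise ValueError(f"score for {criterion!r} must be -1, 0 or 1")
        total += weight * score
    return total


def coverage(scores: Dict[str, Optional[int]], weights: Dict[str, float]) -> float:
    """Hypothetical helper: share of total weight carried by scored criteria."""
    scored = sum(w for c, w in weights.items() if scores.get(c) is not None)
    return scored / sum(weights.values())


if __name__ == "__main__":
    # Hypothetical weights and scores, for illustration only (not case data).
    weights = {"road design": 2, "constructions": 2, "cost": 3, "risk": 3}
    tom = {
        "windmill interchange": {"road design": 1, "constructions": 1, "cost": None, "risk": None},
        "two-level turbine": {"road design": 0, "constructions": 1, "cost": None, "risk": None},
    }
    for alternative, scores in tom.items():
        print(alternative, weighted_sum(scores, weights), coverage(scores, weights))
```

With these hypothetical inputs the two alternatives receive different totals although only 40% of the weight was scored for either; reporting coverage next to the total is one way to keep that gap visible.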

Table 4. Summary of the steps taken in the analysis.

The analysis started by manually coding both the interviews and the cognitive maps using software for qualitative data analysis (ATLAS.ti). First, the number of times each criterion was mentioned in the cognitive maps was counted. Because people learn during their involvement in a project, it was assumed that the engineers would include the criteria from the ToM template in their cognitive maps. Besides these criteria used in the tender, we also wanted to reveal the criteria that were important to the individual engineer. To reduce the impact of learning, each engineer was asked to give a relative weight to the most important criteria listed in his or her cognitive map. Second, the criteria counted in the cognitive maps were ordered based on their relative weight. Criteria considered by only one or two engineers were excluded from the analysis. For the most mentioned criteria, the engineers’ interpretations were analyzed for similarities and differences. This strategy of combining interviews and cognitive maps was followed because the cognitive maps acted as a trigger to map the criteria, while the interviews acted as a trigger to interpret them.
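The counting and filtering steps can be sketched in Python as below. The data layout (one dictionary of relative weights per engineer), counting one mention per engineer, ordering by number of mentions with the mean relative weight as tie-breaker, and the threshold of three engineers are all assumptions made for illustration; the study coded its maps manually in ATLAS.ti and does not specify an aggregation formula.

```python
from collections import Counter
from typing import Dict, List, Tuple


def rank_criteria(
    maps: Dict[str, Dict[str, float]], min_engineers: int = 3
) -> List[Tuple[str, int, float]]:
    """Rank criteria from cognitive maps (hypothetical aggregation).

    maps: engineer -> {criterion: relative weight that engineer assigned}.
    Returns (criterion, number of engineers mentioning it, mean relative weight),
    keeping only criteria mentioned by at least `min_engineers` engineers.
    """
    mentions: Counter = Counter()
    weight_sum: Dict[str, float] = {}
    for criteria in maps.values():
        for criterion, weight in criteria.items():
            mentions[criterion] += 1
            weight_sum[criterion] = weight_sum.get(criterion, 0.0) + weight
    kept = [
        (criterion, n, weight_sum[criterion] / n)
        for criterion, n in mentions.items()
        if n >= min_engineers  # drop criteria raised by only one or two engineers
    ]
    # Most mentioned first; ties broken by the higher mean relative weight.
    return sorted(kept, key=lambda row: (-row[1], -row[2]))


if __name__ == "__main__":
    # Hypothetical cognitive maps of three engineers (not case data).
    maps = {
        "engineer A": {"cost": 0.5, "risk": 0.3, "creativity": 0.2},
        "engineer B": {"cost": 0.4, "risk": 0.6},
        "engineer C": {"cost": 0.2, "risk": 0.5, "schedule": 0.3},
    }
    print(rank_criteria(maps))
```

In this example "creativity" and "schedule" are each mentioned by only one engineer and are therefore excluded, leaving "risk" and "cost".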

In order to assess the DQ elements “frame”, “information”, “desired outcomes” and “logic”, the consistency and rationality of the design criteria were analyzed. The element “frame” was analyzed through criteria consistency, i.e., the extent to which the engineers had a shared understanding of the conceptual meaning of the criteria used at T1 and T2. The most mentioned criteria and the interpretations given to them were analyzed to identify differing and similar interpretations between engineers. The element “desired outcomes” was analyzed through scoring consistency, i.e., the difference between the original scores given at T1 and the scores given at T2. The element “logic” was analyzed through the rationality of the decision making, i.e., the extent to which the decision process used the criteria considered important in the cognitive maps and relied upon these criteria during the tender. The relative importance of each criterion at T2 was compared with the criteria considered in the original ToM at T1. This resulted in a 2 × 2 matrix in which the importance of the criteria based on the cognitive maps is set out against whether or not the criterion was considered in the ToM during the tender. The element “information” was analyzed at T1 and T2 by comparing the available and required information for evaluating and deciding on the design options.
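The 2 × 2 rationality matrix amounts to a simple classification of each criterion. In this Python sketch the quadrant keys and the function name are hypothetical; the example criteria and their classification are a subset of those reported in the Results section.

```python
from typing import Dict, Iterable, List, Tuple

# Quadrant key: (important in the cognitive maps at T2, considered in the ToM at T1)
Quadrant = Tuple[bool, bool]


def rationality_matrix(
    importance: Dict[str, bool], tom_criteria: Iterable[str]
) -> Dict[Quadrant, List[str]]:
    """Place each cognitive-map criterion in the 2 x 2 rationality matrix."""
    tom = set(tom_criteria)
    matrix: Dict[Quadrant, List[str]] = {
        (imp, used): [] for imp in (True, False) for used in (True, False)
    }
    for criterion, important in importance.items():
        matrix[(important, criterion in tom)].append(criterion)
    # ToM criteria absent from every cognitive map are not captured here;
    # in the case, all ToM criteria also appeared in the maps.
    return matrix


if __name__ == "__main__":
    # Subset of the classification reported in the Results section.
    importance = {
        "schedule": True, "risk": True,
        "traffic safety": False, "sustainability": False,
        "strategy to win": True, "collaboration": True,
    }
    tom = ["schedule", "risk", "traffic safety", "sustainability"]
    for (important, used), criteria in rationality_matrix(importance, tom).items():
        print(f"important={important}, in ToM={used}: {criteria}")
```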

Results

The results that define the quality of the decisions for the traffic junction in the case study are presented in the order of the four generic steps of an MCDA. First, we briefly report on the identification of alternatives and the understanding of the problem (step 1). Thereafter, we report on the DQ element “frame” based on the results of criteria consistency and on the DQ element “logic” based on the rationality of the criteria used (step 2). Then, we report on the DQ element “desired outcomes” based on the consistency in the scoring of the criteria (step 3). The decision made (step 4) is reported last.

Determine various alternatives (Step 1)

A small team started by creating broad alternatives for the layout of the interchange to obtain a first impression of the problem. A creative session resulted in approximately 25 alternatives. These were reduced to five alternatives, including the reference design of the client, by eliminating the alternatives that did not comply with the functional requirements in the contract: the required traffic speed, the minimum required connections and traffic safety. This analysis was carried out by the design manager, traffic engineer, road engineer and architect. The remaining five alternatives formed the basis for the decision frame and differed from one another mainly in the type of interchange connecting the four directions: turbine-stack hybrid, windmill interchange, two-level turbine, clover-stack interchange and hybrid interchange. After the five alternatives were chosen, the management team together with the design managers created a so-called “strategy to win” the tender. This “strategy to win” resulted from translating the assessment criteria stated in the contract: (1) reduction of nuisance during construction, (2) process approach, (3) sustainability, (4) CO2-ambition, (5) number of included wishes and (6) price.

Determine the criteria (Step 2)

At the start of the tender, the team decided on the criteria that should be included in the ToM. The “strategy to win” together with the contract requirements shaped the main criteria used in the MCDA. These criteria were translated into the sub-criteria listed in the ToM by each responsible discipline itself (see ).

Criteria consistency

The most mentioned criteria in the cognitive maps are summarised (from most to least mentioned) in Table 5. For each criterion, the interpretations given by the engineers are included as italicized phrases. The differences and commonalities in interpretation are described in the analysis column of Table 5. The engineers interpreted the criteria “schedule”, “integrated team” and “phasing” consistently, whereas their interpretations of the criteria “risk”, “requirements”, “cost”, “strategy to win” and “support” were inconsistent. In other words, these criteria were framed differently by the team members. Observations revealed that design discussions during the tender focussed on the technical effects of the design without developing a common understanding of the predetermined criteria in the ToM. For example, the contract required a design speed of 100 km/h, while a possible design speed of 80 km/h was discussed during a meeting with the client. This new customer need flowed into the discussion of the technical design effects (e.g. a smaller curve radius) without being explicitly incorporated in the framing of the decision criterion “requirements”. As a result, some engineers interpreted the criterion “requirements” solely based on the scope of the contract, while others also included the new customer need. The use of the ToM tool supported the team in structuring the criteria but did not result in a shared framing of the problem.

Table 5. Most frequently mentioned and most important criteria with interpretations.

Rationality of criteria

Table 6 represents the rationality in using the criteria, which resulted from a comparison of the criteria used in the tender with the important criteria mentioned in the cognitive maps (Table 5). The criteria “schedule”, “costs”, “risks”, “phasing” and “requirements” were considered important criteria during the tender and are mentioned as important criteria in the cognitive maps.

Table 6. Overview of the rationality of criteria.

The criteria “traffic flow”, “traffic safety”, “amount of engineering objects”, “architectural design”, “impact studies”, “Economically Most Viable Bid (EMVB)” and “sustainability” were considered relevant during the tender but were identified as less important in the cognitive maps at T2. Although relevant, the criteria “impact studies”, “EMVB” and “sustainability” were not scored in the tender: differentiating between alternatives on these criteria required the geographical location of the current junction, a level of detail the design did not yet provide. The other criteria (traffic flow, traffic safety, amount of engineering objects and architectural design) could be scored because the alternatives could be compared at a functional level of the design, for example, by simply counting the number of engineering objects.

The criteria “strategy to win”, “integrated team”, “support”, “creativity”, and “collaboration” were seen as important in the cognitive maps at T2 but were not explicitly mentioned in the MCDA during the tender. However, the interviews revealed that these criteria were implicitly considered as preconditions required for performing an MCDA. The various cognitive maps showed that, for example, the “strategy to win” was required as input for defining the criteria. The criteria “integrated team”, “support”, “creativity” and “collaboration” were preconditions to ensure that people interact and work together.

All criteria used in the tender were also mentioned in the cognitive maps. However, the criteria considered less important in the cognitive maps were project-specific criteria determined by the required functionalities, whereas the criteria mentioned as important were those that are crucial for any construction tender, such as “schedule”, “cost”, “risk” and “requirements”.

Determine the scoring (step 3)

The scoring range of −1, 0, 1 was determined by the process manager at the start of the tender and formalized by the management team before the template of the ToM was used in the tender.

Consistency in scoring criteria

The results in Table 3 show that three criteria (critical requirements, functional traffic design and constructions) received equal scores at T1 and T2, two criteria (road design and architectural design) received different scores, and all remaining criteria (impact studies, schedule, procedures and support, risks, fictive disturbance hours, cost and EMVB) were not scored at all.
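The three outcome categories (equal, different, not scored) can be made explicit with a small comparison routine. This Python sketch assumes that scores are stored per criterion and per alternative and that only alternatives scored at both moments are compared (at T2, the top three of the eleven T1 alternatives); the function name and the scores in the test are hypothetical, not the case data.

```python
from typing import Dict, Optional

# criterion -> alternative -> score on the -1/0/1 scale, or None if left unscored
Scores = Dict[str, Dict[str, Optional[int]]]


def scoring_consistency(t1: Scores, t2: Scores) -> Dict[str, str]:
    """Classify each criterion as 'equal', 'different' or 'not scored' between T1 and T2."""
    result: Dict[str, str] = {}
    for criterion in sorted(t1.keys() | t2.keys()):
        s1, s2 = t1.get(criterion, {}), t2.get(criterion, {})
        # Only alternatives scored at both moments can be compared.
        pairs = [(s1[alt], s2[alt]) for alt in sorted(s1.keys() & s2.keys())]
        if all(x is None and y is None for x, y in pairs):
            result[criterion] = "not scored"
        elif all(x == y for x, y in pairs):
            result[criterion] = "equal"
        else:
            # Also covers a score given at only one of the two moments.
            result[criterion] = "different"
    return result
```

Treating a score that exists at only one moment as "different" is a design choice of this sketch; the case itself did not report such a mixed situation.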

The criteria that received equal scores at both moments were scored on information that was available during the tender and did not change. For example, the criteria “functional traffic design” and “critical requirements” were scored based on information regarding the functionality (traffic flow) of the design, which did not change. Similarly, the number of engineering objects could easily be counted and did not change during the tender.

The scoring of the criteria “road design” and “architectural design” was not consistent between T1 and T2. This inconsistency was caused by a difference in the amount of information available at the two moments. At T1, the design manager scored the criterion “road design” based on his experience; at T2, the score was based on the available information regarding the required traffic speed, curve radius and project contour boundaries. As explained by the architect and the road design manager, the inconsistency in the scoring of the criterion “architectural design” stemmed from additional information on customer needs that was received halfway through the tender. This information changed the way in which the architectural design would be assessed by the client.

A striking result for the criteria that were not scored at both moments is that four of these criteria (schedule, costs, risk and phasing) were considered important criteria (). Engineers were not able to score these criteria because the level of design detail in the tender was insufficient to assess and compare alternatives on the criteria. For example, details about the exact geographical location of the current junction were required to score the criteria “phasing” and “costs” because this information determined the required space and the location of existing constructions. However, this detailed level of design and thus more detailed information were unavailable during the tender (see ). Limited time and resources prevented the identification of other possible competitive solutions and the iteration between various (more detailed) alternatives. The interviewees and the observations during the weekly meetings indicated that engineers struggled with the limited available time. They requested more technical information about alternatives and were hesitant to make decisions. Additional client wishes to be incorporated in the design aggravated the time pressure. Eventually, there was no time left to find more detailed information and the engineers were forced by the management team to make choices and use the remaining time to finalize the bid.

Determine the solution (step 4)

To choose the economically most feasible solution (step 4), the team members discussed the results of the ToM at T1 and based their decision on the conclusion of this discussion. Observations revealed that this logic was based on the engineers’ preferences and experiences, given the available drawings of the alternatives. The ToM was only used to summarise and log the outcome of the decision after it had been made. In addition, the ToM did not make the decision makers aware of the uncertainty involved in their decision: the scoring did not account for variations in criteria outcomes, and the criterion “risk” was not scored at all. The ToM thus suggested that the decision rested on a well-underpinned comparison of alternatives scored on different criteria, while the actual decision was experience-driven and subject to risk and uncertainty.

Discussion

A decision process based on MCDA is expected to result in consistent and rational decisions. MCDA tools and methods should support the decision maker in structuring a complex decision-making problem by organizing and synthesizing the available information, identifying the criteria for selecting a solution, comparing design solutions and choosing a solution (Kabir et al. Citation2014). Scholars broadly agree that, depending on the decision situation and the decision maker, different MCDA tools and methods can lead to different decision outcomes and should therefore fit the decision context in order to be supportive (Parkan and Wu Citation2000, Mela et al. Citation2012). The presented case study adds to this research line on the usability and appropriateness of MCDA tools and methods by addressing their capability to ensure decision quality. Instead of comparing MCDA tools and methods for a particular decision problem, it reveals the extent to which quality aspects of a decision can be at stake, despite the use of an MCDA, in a setting of time pressure and limited information. Design decisions in tenders for integrated infrastructure projects have to be made in such a context. Whereas a traditional design process produces more detailed design information through iterative loops of designing, testing and evaluating, the number of iterations in the design process for an infrastructure tender is restricted by the tender duration. This increases design uncertainty because the detailed design information needed to find an economically feasible solution is unavailable. Decision makers have to judge criteria based on a limited amount of information in a period of just a few months. The results of the case study suggest that, in the tender context, an MCDA does not necessarily support decision makers in making the criteria judgements needed for consistent and rational decisions, nor does it ensure high-quality decisions. It can even create the illusion of a rational decision-making process while the decision quality is characterized by several shortcomings, as discussed below.

Variations in problem framing

The variations in the interpretation of the identified criteria indicate that the DQ elements “frame” and “information” were not agreed upon. The engineers’ interpretation of the criteria defines the boundaries of the decision frame and, consequently, the information required within this frame. For example, different information is required if the criterion “risk” is interpreted as a “potential showstopper” than if it is interpreted as a “cost-related risk”. A “potential showstopper” requires information at the functional level of a solution, while a “cost-related risk” requires more detailed information about the possible consequences, for example in terms of costs. The boundaries of the “frame” thus determine the required level of information, which in turn results in different levels of uncertainty when that information is not available. Without knowing and addressing this uncertainty, it is impossible to foresee whether one alternative is better than another and thus to make rational decisions. The MCDA was not able to prevent these variations in problem framing and could not create awareness of the uncertainties emanating from them.

Differences in the logic of using and relying on criteria

The differences in the rationality of using criteria suggest that the “logic” of the decision making was not aligned with the level of design detail that was available. The criteria considered important (risk, costs, schedule) during and after the tender were not scored because of the shortage of design details. Besides being considered important by the interviewees, these criteria are also often classified as important for any tender. However, without aligning these criteria with the scope of the tender, it is not possible to score them in a way that accounts for risk and uncertainty; instead, the criteria were discussed within the tender team in an attempt to understand the consequences of each alternative. The final decision was based on the partially filled ToM together with the results of this discussion, but without explicitly including the related uncertainties in the ToM. The ToM supported the decision-making process by structuring the criteria and scoring them where possible, but it was not used to make the final decision based on the scoring results. Rather, the ToM was used to give the decision a rational character by structuring it at a level of detail that the available information did not support, while ignoring the incomplete and uncertain information underlying the decision.

Inconsistencies in the desired outcomes

The inconsistencies in the scoring of the alternatives during and after the tender indicate the influence of the available information on the DQ element “desired outcomes”. Where the scores were based on the engineers’ experience, roughly the same scores were given during and after the tender. Where the scores depended on the availability of information, they differed between the two moments. For example, more information regarding the “client wishes” and the “curve radius” became available during the tender and led to different scores. These re-scoring results point to the insufficient information and the time pressure faced during the tender, and to the reliance on experience when making decisions (Klapproth Citation2008). Combined with the lack of awareness of the uncertainties in the design decision, this again shows that the MCDA was insufficient to support design decisions in a tender context and may create the impression of soundly made decisions while neglecting the uncertainties.

Managerial implications

Using MCDA tools and methods other than those in the case study would probably yield similar results, because the decision process would still be based on the same amount of information and the same problem frame. Instead, incorporating DQ elements into the MCDA process by adding a few important steps can create the opportunity to better track the quality of the decision process. These steps should support a tender team in defining the scope of the criteria and determining the uncertainty involved in the decision, and should create situational awareness. In this case, the decision quality achieved for the traffic junction could have been improved by focusing on the DQ elements “frame” and “information”. The boundaries of each criterion in an MCDA could be set simply by discussing the definition of each criterion. Such a discussion creates awareness of which criteria to consider and a common understanding of the criteria. These definitions can then be used to identify the information required for evaluating the alternatives and the associated information uncertainties. The DQ elements then indicate when more focus on specific elements is required to increase the probability of finding the best competitive solution and to make quality decisions without knowing the outcome of a decision.
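The framing step proposed here can be sketched as a small data structure that records, for each criterion, its agreed definition and the information required to score it, and then lists the information that is still missing. This Python sketch is a hypothetical operationalization, not a procedure used in the case; the class, function and information labels are illustrative, and the two readings of “risk” follow the example given in the discussion of problem framing.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class CriterionFrame:
    """Agreed framing of one MCDA criterion (DQ element 'frame')."""
    name: str
    definition: str
    required_info: Set[str] = field(default_factory=set)


def information_gaps(frames: List[CriterionFrame], available: Set[str]) -> Dict[str, List[str]]:
    """Per criterion, the required information that is not yet available.

    Criteria listed here cannot be scored without unaddressed uncertainty
    (DQ element 'information').
    """
    gaps: Dict[str, List[str]] = {}
    for frame in frames:
        missing = frame.required_info - available
        if missing:
            gaps[frame.name] = sorted(missing)
    return gaps


if __name__ == "__main__":
    frames = [
        CriterionFrame("risk (showstopper)", "could the alternative fail a requirement?",
                       {"functional layout"}),
        CriterionFrame("risk (cost-related)", "what extra cost could the alternative incur?",
                       {"functional layout", "exact junction location"}),
    ]
    print(information_gaps(frames, available={"functional layout", "traffic flow"}))
```

Here only the cost-related reading of “risk” is flagged, because it depends on the exact location of the junction, which was unavailable during the tender.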

Limitations

A limitation of the research design is that only the quality of the decision process could be assessed; whether the most competitive solution was found could not be determined. Furthermore, the research findings need to be interpreted within the specific context of the Dutch construction market and of public tenders. The results are based on a single Dutch project, which is considered a typical tender and therefore informative for other tenders. Nevertheless, further research should verify, test and compare our results from a broader perspective, for example in other similar tenders. This study focused on the interpretation and selection of criteria required for building a ToM; the way a ToM is scored was only briefly researched. We encourage further research into the scoring method itself and into the possibility of influencing the engineer’s perception of the problem. Each engineer has his/her own specific preferences or risk perceptions of the alternatives, which he/she uses to score them. The influence of both individual and team preferences and perceptions on the outcome of using a ToM is unknown. Therefore, further research is required not only to explore the link between decision analysis and decision quality, but also to explore the impact of engineers’ preferences and perceptions on the scoring of alternatives.

Conclusions

Using an exploratory, longitudinal case study approach, we analyzed a tender for an integrated infrastructure project in the Netherlands to capture the capability of MCDA to ensure the quality of design decisions made by engineers in the tender phase. The study contributes to our understanding of MCDA in the construction sector by taking a contractor’s perspective on design decisions in public tenders, which is currently missing in the literature. It shows that in the tender context the decision making relies heavily on the experience and knowledge of the engineers, and that an inappropriately used MCDA can create the impression of soundly underpinned evaluations of design options while neglecting uncertainties and leading to low-quality decisions. Based on insights into how a ToM is used as an MCDA tool in the design practice of a tender, it can be concluded that an MCDA defines “what” is required for structuring the decision problem but does not define “how” to do it. The explicit consideration of DQ elements in MCDA can support the “how” by defining the decision frame for each criterion and by supporting the evaluation of whether the quality of the information used is in line with the defined problem frame. Incorporating DQ elements in MCDA can create awareness among decision makers of the importance, scope and uncertainty of the criteria to consider in their search for a competitive solution without knowing the outcome of the decision.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Abbas, A.E., 2016. Perspectives on the use of decision analysis in systems engineering: workshop summary. In: Annual IEEE Systems Conference (SysCon) proceedings, 18–21 April 2016. Orlando, FL: IEEE, 1–6.

- Ahmad, R. and Azman Ali, N., 2003. The use of cognitive mapping technique in management research: theory and practice. Management research news, 26 (7), 1–16.

- Ananda, J. and Herath, G., 2009. A critical review of multi-criteria decision making methods with special reference to forest management and planning. Ecological economics, 68 (10), 2535–2548.

- Ballesteros-Pérez, P., González-Cruz, M.C., and Cañavate-Grimal, A., 2012. Mathematical relationships between scoring parameters in capped tendering. International journal of project management, 30 (7), 850–862.

- Barfod, M.B., Salling, K.B., and Leleur, S., 2011. Composite decision support by combining cost-benefit and multi-criteria decision analysis. Decision support systems, 51 (1), 167–175.

- Belton, V. and Stewart, T.J., 2003. Multiple criteria decision analysis: an integrated approach. Boston: Kluwer Academic Publishers.

- Bueno, P.C., Vassallo, J.M., and Cheung, K., 2015. Sustainability assessment of transport infrastructure projects: a review of existing tools and methods. Transport reviews, 35 (5), 622–649.

- Cheng, E.W.L. and Li, H., 2004. Contractor selection using the analytic network process. Construction management and economics, 22 (10), 1021–1032.

- Durbach, I.N. and Stewart, T.J., 2012. Modeling uncertainty in multi-criteria decision analysis. European journal of operational research, 223 (1), 1–14.

- Einhorn, H.J. and Hogarth, R.M., 1978. Confidence in judgment: persistence of the illusion of validity. Psychological review, 85 (5), 395–416.

- Fayek, A., 1998. Competitive bidding strategy model and software system for bid preparation. Journal of construction engineering and management, 124 (1), 1–10.

- Fong, P.S.-W. and Choi, S.K.-Y., 2000. Final contractor selection using the analytical hierarchy process. Construction management and economics, 18 (5), 547–557.

- Guitouni, A. and Martel, J.M., 1998. Tentative guidelines to help choosing an appropriate MCDA method. European journal of operational research, 109 (2), 501–521.

- Hatush, Z. and Skitmore, M., 1998. Contractor selection using multicriteria utility theory: an additive model. Building and environment, 33 (2), 105–115.

- Hayashi, K., 2000. Multicriteria analysis for agricultural resource management: a critical survey and future perspectives. European journal of operational research, 122 (2), 486–500.

- Hershey, J.C. and Baron, J., 1992. Judgment by outcomes: when is it justified? Organizational behavior and human decision processes, 53 (1), 89–93.

- Ho, W., Xu, X., and Dey, P.K., 2010. Multi-criteria decision making approaches for supplier evaluation and selection: a literature review. European journal of operational research, 202 (1), 16–24.

- Howard, R.A., 1988. Decision analysis: practice and promise. Management science, 34 (6), 679–695.

- Huang, I.B., Keisler, J., and Linkov, I., 2011. Multi-criteria decision analysis in environmental sciences: ten years of applications and trends. Science of the total environment, 409 (19), 3578–3594.

- Jato-Espino, D., et al., 2014. A review of application of multi-criteria decision making methods in construction. Automation in construction, 45, 151–162.

- Kabir, G., Sadiq, R., and Tesfamariam, S., 2014. A review of multi-criteria decision-making methods for infrastructure management. Structure and infrastructure engineering, 10 (9), 1176–1210.

- Kearney, A.R. and Kaplan, S., 1997. Toward a methodology for the measurement of knowledge structures of ordinary people. Environment and behavior, 29 (5), 579–617.

- Keeney, R.L., 1988. Value-driven expert systems for decision support. Decision support systems, 4 (4), 405–412.

- Keren, G. and Bruin, W.B.D., 2005. On the assessment of decision quality: considerations regarding utility, conflict and accountability. In: D. Hardman and L. Macchi, eds. Thinking: psychological perspectives on reasoning, judgment and decision making. Hoboken, NJ: John Wiley & Sons.

- Kim, S.H. and Augenbroe, G., 2013. Decision support for choosing ventilation operation strategy in hospital isolation rooms: a multi-criterion assessment under uncertainty. Building and environment, 60, 305–318.

- Klapproth, F., 2008. Time and decision making in humans. Cognitive, affective & behavioral neuroscience, 8 (4), 509–524.

- Laing, G., Ross, S., and Joubert, M., 2014. Economic decision making and theoretical frameworks: in search of a unified model. e-Journal of social & behavioural research in business, 5 (1), 36–49.

- Laryea, S., 2013. Nature of tender review meetings. Journal of construction engineering and management, 139 (8), 927–940.

- Mahdi, I.M., et al., 2002. A multi-criteria approach to contractor selection. Engineering, construction and architectural management, 9 (1), 29–37.

- Mardani, A., et al., 2016. Multiple criteria decision-making techniques in transportation systems: a systematic review of the state of the art literature. Transport, 31 (3), 359–385.

- Marzouk, M. and Moselhi, O., 2003. A decision support tool for construction bidding. Construction innovation, 3 (2), 111–124.

- Mela, K., Tiainen, T., and Heinisuo, M., 2012. Comparative study of multiple criteria decision making methods for building design. Advanced engineering informatics, 26 (4), 716–726.

- Parkan, C. and Wu, M.-L., 2000. Comparison of three modern multicriteria decision-making tools. International journal of systems science, 31 (4), 497–517.

- Polatidis, H., et al., 2006. Selecting an appropriate multi-criteria decision analysis technique for renewable energy planning. Energy sources, part B: economics, planning, and policy, 1 (2), 181–193.

- Rosenfeld, Y., 2014. Root-cause analysis of construction-cost overruns. Journal of construction engineering and management, 140 (1), 1–10.

- Scholten, L., et al., 2015. Tackling uncertainty in multi-criteria decision analysis – an application to water supply infrastructure planning. European journal of operational research, 242 (1), 243–260.

- Simon, H.A., 1979. Rational decision making in business organizations. The American economic review, 69 (4), 493–513.

- Singh, D. and Tiong, R.L.K., 2005. A fuzzy decision framework for contractor selection. Journal of construction engineering and management, 131 (1), 62–70.

- Skorupski, J., 2014. Multi-criteria group decision making under uncertainty with application to air traffic safety. Expert systems with applications, 41 (16), 7406–7414.

- Spetzler, C., Winter, H., and Meyer, J., 2016. Decision quality: value creation from better business decisions. Hoboken, NJ: John Wiley & Sons.

- Steiger, D.M. and Steiger, N.M., 2007. Decision support as knowledge creation: an information system design theory. In: 40th Annual International conference on System sciences. Hawaii: IEEE.

- Street, C.T. and Ward, K.W., 2012. Improving validity and reliability in longitudinal case study timelines. European journal of information systems, 21 (2), 160–175.

- Tegarden, D.P. and Sheetz, S.D., 2003. Group cognitive mapping: a methodology and system for capturing and evaluating managerial and organizational cognition. Omega, 31 (2), 113–125.

- Timmermans, D. and Vlek, C., 1996. Effects on decision quality of supporting multi-attribute evaluation in groups. Organizational behavior and human decision processes, 68 (2), 158–170.

- Triantaphyllou, E., 2000. Multi-criteria decision making methods: a comparative study, applied optimization. Dordrecht/Boston/London: Kluwer Academic Publishers.

- Tscheikner-Gratl, F., et al., 2017. Comparison of multi-criteria decision support methods for integrated rehabilitation prioritization. Water, 9 (2), 68.

- Van Der Meer, J., et al., 2015. Challenges of using systems engineering for design decisions in large infrastructure tenders. Engineering project organization journal, 5 (4), 133–145.

- Velasquez, M. and Hester, P.T., 2013. An analysis of multi-criteria decision making methods. International journal of operations research, 10 (2), 56–66.

- Vlek, C., 1984. What constitutes ‘a good decision’?: A panel discussion among Ward Edwards, István Kiss, Giandomenico Majone and Masanao Toda. Acta psychologica, 56 (1), 5–27.

- Wang, J.-J., et al., 2009. Review on multi-criteria decision analysis aid in sustainable energy decision-making. Renewable and sustainable energy reviews, 13 (9), 2263–2278.

- Yin, R.K.-Z., 2003. Case study research: design and methods, 3rd ed. Thousand Oaks, CA: Sage.