ABSTRACT

Game-based learning (GBL) is widely utilised in various domains and continues to receive interest and attention from researchers and practitioners alike. However, there is still a lack of empirical evidence concerning its effectiveness, making GBL evaluation a critical undertaking. This paper proposes an integrated approach for planning and executing GBL evaluation studies and presents its application by evaluating the effectiveness of a GBL approach to improve the Arabic reading skills of migrant refugee children in an informal learning setup. The study focuses on how children’s age group, learning modality preference, and prior mobile experience affect their learning, usability, and gameplay performance. A quasi-experiment with a one-group pretest-posttest design was conducted with 30 children (5–10 years old) from migrant refugee backgrounds. The results show a statistically significant improvement in their reading assessment score. The results also outline a clear impact of children’s age groups on their learning gain, usability score, and total levels played. Moreover, learning modality preference and prior mobile experience both had a statistically significant effect related to usability and gameplay performance parameters. However, no effect was found on learning gain. Based on the findings, some design recommendations are suggested for more inclusive design focusing on user characteristics.

1. Introduction

In recent years, educational games have become more prevalent, and game-based learning (GBL) is now a well-established and growing research area that receives substantial attention from researchers and practitioners (Salah et al. Citation2016; Backlund and Hendrix Citation2013; Ariffin and Sulaiman Citation2014). Although GBL is considered an alternative learning tool for education and training and is widely utilised in various settings and domains (Prensky Citation2003), there is still a need for more empirical studies to prove its effectiveness (Ariffin and Sulaiman Citation2014; Boyle et al. Citation2016). Thus, it is essential to evaluate educational games’ effectiveness before being used in a real context (Becker Citation2013).

With the increasing complexity and cost of learning game design, evaluating learning games is essential (Becker Citation2013; Wouters, Van der Spek, and Van Oostendorp Citation2009). Although plenty of resources are available for the design of educational applications, approaches to guide the evaluation are not (Becker Citation2013; Schleyer and Johnson Citation2003; Kafai, Franke, and Battey Citation2002). Mohamed and Jaafar (Citation2010) identified three challenges in educational game evaluation: evaluation criteria, evaluators, and the evaluation process. The evaluation criteria are important as they address the essential elements that need to be evaluated in the educational game to fulfil the evaluation goal. According to the literature on GBL evaluation (Calderón and Ruiz Citation2015; Tahir and Wang Citation2017), educational games are evaluated at different development stages and, depending on the evaluation goal, different characteristics are assessed by selecting different criteria. The GBL evaluation literature also shows that different methods, techniques, and procedures have been used to evaluate learning games. However, when assessing the impact of learning games, the main interest is usually in determining the educational effectiveness (learning outcomes), usability, and user experience of the game. Most authors prioritise the evaluation criteria with respect to the evaluation goals and to verifying that the game has satisfied its specified objectives (Calderón and Ruiz Citation2015). Patton (Citation2008) and other researchers pointed out that there is no single universal approach for designing an evaluation study (Diamond, Horn, and Uttal Citation2016). Each evaluation study is therefore distinct. When developing the evaluation plan, it is important to select the criteria and measures specific to the evaluation goal to guide the learning game's assessment. Calderón and Ruiz (Citation2015) proposed a taxonomy of models useful for evaluating different quality characteristics. Hence, a guiding approach for developing an evaluation plan would be useful for planning and designing an evaluation study, from articulating the purpose/goal, through selecting evaluation characteristics/criteria and establishing evaluation questions, to specifying metrics and analysis methods that guide the assessment of a learning game (Diamond, Horn, and Uttal Citation2016).

Several researchers have highlighted the importance of GBL in language learning to improve students’ performance and make learning more active, mainly focusing on classroom practice (Godwin-Jones Citation2014; Ju and Adam Citation2018; Sahrir and Yusri Citation2012). Language learning becomes even more important for migrants, as it is crucial to learn the language to integrate into a new society (Lou and Noels Citation2020). The Syrian crisis (Yazgan, Utku, and Sirkeci Citation2015) deprived over 2.25 million Syrian children of school education, both within Syria and in other countries. Refugee children have to cope with high levels of stress and trauma that affect their learning ability, and few means of education have been available to them. It is important to teach these children basic literacy skills in Arabic (Wofford and Tibi Citation2018) for further integration into schools, or we might end up with a whole generation that cannot read or write in their mother tongue. Since smartphones are commonly used among migrants to stay connected (Gordano Peile and Ros Hijar Citation2016; Ros Citation2010), they can serve as a means for language learning (Gaved and Peasgood Citation2017; Castaño-Muñoz, Colucci, and Smidt Citation2018). In their research, Bradley et al. (Citation2020) focused on the mobile literacy of Arabic-speaking migrants and the use of mobile technology to support migrants’ language learning process and integration in Sweden. Researchers (Sahrir and Yusri Citation2012) have highlighted a lack of research regarding instructional and learning support in Arabic. Many have made an effort to improve this situation by focusing on Arabic language learning games and have indicated positive results concerning GBL effectiveness for students’ acquisition of Arabic language skills. However, here also, the focus has been mostly on classroom teaching (Sahrir and Yusri Citation2012; Eltahir et al. Citation2021; Sahrir and Alias Citation2012). According to Boyle et al. (Citation2016), investigating informal learning in games can provide important insights into game mechanisms that can improve learning game design.

Moreover, limited educational gaming research has focused on user characteristics and their influence on performance outcomes and game experience in GBL environments (Orvis, Horn, and Belanich Citation2009). Learner characteristics affect online learning (Lim and Kim Citation2003), and the growing use of learning technologies demands a sound understanding of learner characteristics that affect learning with technology (Nakayama, Yamamoto, and Santiago Citation2007). However, this relation is less explored in learning games since the greater focus has been on learning effectiveness and usability (Calderón and Ruiz Citation2015; Tahir and Wang Citation2017).

This paper outlines the simple and effective integrated LEAGUÊ-GQM approach to create an evaluation plan for assessing learning games’ effectiveness by establishing the goals, defining questions, and identifying measures for the evaluation process with the LEAGUÊ evaluation guide. The main novelty of the presented approach is its simplicity and its GBL-specific guidance. The proposed approach is applied in practice for planning and conducting an evaluation study on the Arabic language learning game Feed the Monster (FTM). With the backdrop of the Syrian crisis and the ‘EduAppSyria’ initiative project focusing on Arabic language learning games for Syrian refugee children (Nordhaug Citation2019), this user study's main objective was to evaluate the effectiveness of the GBL approach for migrant refugee children to learn Arabic reading skills in an informal learning environment. The study employed a quasi-experimental design with 30 children (5–10 years old) and collected quantitative and qualitative data to answer five research questions. The research questions focused on the learning gain using the GBL intervention; age-group differences between younger and older children; the correlation between children’s learning modality preferences and their learning gain, usability score, and gameplay performance; the correlation between children’s mobile usage experience and their learning gain, usability score, and gameplay performance; and the usability issues faced by children when playing the learning game for the first time. This study used mixed methods, including pre/post-test, questionnaire, interview, game logs, observation checklist, and notes, to analyse the learning gain with GBL and the effect of user characteristics (age group, learning modality preferences, and mobile usage experience) on the learning gain, usability, and game performance.

The contribution of this paper is two-fold. First, it proposes an integrated approach (LEAGUÊ-GQM) for planning a GBL evaluation. Second, it presents implications and design recommendations for effective learning game design, based on the results of an evaluation study on the potential of game-based language learning for teaching refugee children Arabic reading skills in an informal learning setup. The rest of this paper is organised as follows: Section 2 presents an overview of the related work. Section 3 describes the methodology, which includes the proposed integrated LEAGUÊ-GQM approach for planning GBL evaluation and its application to the user study presented in this article (the evaluation of the Arabic language learning game Feed the Monster). Section 4 presents the results from the quasi-experiment concerning the five research questions. Section 5 discusses the results, design recommendations, and limitations of the study. Lastly, Section 6 concludes the article and gives directions for future research.

2. Related work

This section presents the related work describing the potential of GBL identified by relevant research studies, the importance of GBL evaluation, and the challenges in educational game evaluation. Further, it highlights the scarcity of empirical research concerning the effectiveness of digital game-based language learning (DGBLL) in general and the lack of DGBLL studies in Arabic language learning. Moreover, this section underlines the need for research concerning learner characteristics to understand the mitigating factors (such as age, gender, learning styles, and prior knowledge) that influence learning with serious games.

2.1. Game-based learning effectiveness evaluation

Game-based learning (GBL) refers to games for education and learning purposes (Tang, Hanneghan, and El Rhalibi Citation2009). Many researchers have investigated the potential of learning games, and the evidence about their impact is mixed (Salah et al. Citation2016). Some research studies found positive effects, whereas others found no significant effect of using games for learning (Boyle et al. Citation2016; Wouters, Van der Spek, and Van Oostendorp Citation2009). López-Fernández et al. (Citation2021) reported, based on their research findings, that students who used educational games for learning were more motivated and experienced fun. Moreover, the majority of students preferred GBL over the traditional teaching approach. Similarly, Eltahir et al. (Citation2021) found in their study that students using GBL showed more improved knowledge of concepts and higher motivation compared to students taught with a traditional strategy. Akçelik and Eyüp (Citation2021) investigated the effects of educational games on vocabulary knowledge and found that students enjoyed learning vocabulary with games and learned more easily. Although many researchers have reported that games can increase motivation and interest, there is still a need for more empirical studies to assess the educational effectiveness of learning games, as most of the studies base their claims on subjective judgment and personal encounters (Ariffin and Sulaiman Citation2014). According to the systematic review conducted by Kalogiannakis, Papadakis, and Zourmpakis (Citation2021), digital technologies such as gamification have the potential to heavily influence the learning process. However, the review outlined that mostly small longitudinal studies revealing mixed results have been conducted, highlighting the need for more research exploring long-term effects to clarify the impact of such technology on education. Boyle et al. (Citation2016) reported an increase in the empirical evidence concerning positive outcomes of playing games. However, they suggested that detailed experimental studies are crucial for future research to systematically explore the game features most effective in supporting learning. Behnamnia et al. (Citation2020) investigated whether digital game-based learning applications can improve creativity skills in preschool children. The study focused on the components of creativity and learning levels when using Digital Game-Based Learning (DGBL) for children under the age of six and found that DGBL can potentially affect young children’s ability to develop creative skills, knowledge transfer, critical thinking, acquisition of digital experience skills, and a positive attitude for deep and insightful learning. According to Bellotti et al. (Citation2013), educational games, like any other educational tool, must be able to show that the necessary learning has occurred. Therefore, to affirm their impact, it is crucial to systematically evaluate them (Marciano, de Miranda, and de Miranda Citation2014). Moreover, the costly and time-consuming development of educational games demands continued assessment of their efficacy and the identification of principal criteria (De Freitas and Oliver Citation2006; De Freitas and Liarokapis Citation2011). The diverse GBL characteristics make its evaluation a difficult task (Djelil et al. Citation2014). Mohamed and Jaafar (Citation2010) identified that establishing the evaluation criteria and process are the main challenges in educational game evaluation. Although different aspects important for GBL have been highlighted by previous research, an overarching approach is required to guide evaluation and design iterations (De Freitas and Liarokapis Citation2011; Van Staalduinen and De Freitas Citation2011; Oprins et al. Citation2015).
Depending on the evaluation goal, educational games are evaluated at different development stages, and different criteria are selected for assessing different characteristics (Calderón and Ruiz Citation2015; Tahir and Wang Citation2017). Patton (Citation2008) and other researchers pointed out that there is no single approach for designing an evaluation study (Diamond, Horn, and Uttal Citation2016). However, All, Castellar, and Van Looy (Citation2021) evaluated the feasibility of previously defined best practices for assessing DGBL effectiveness and focused on research design components, providing insights into feasible experimental designs to further guide the design of DGBL effectiveness studies. Dondi and Moretti (Citation2007) pointed out that identifying criteria is a time-consuming and complex process, and not many approaches are available to guide the evaluation process of learning games (Becker Citation2011). Ak (Citation2012) emphasised the need to define the critical aspects of educational games that make them effective. These aspects could serve as evaluation criteria and guide the evaluation process. Calderón and Ruiz (Citation2015) proposed that having a taxonomy of models could be helpful for evaluating different quality characteristics. Moreover, according to Zourmpakis, Papadakis, and Kalogiannakis (Citation2022), teachers play a key role in understanding the individual needs of students, providing them with proper learning material, and evaluating the complete learning process. Therefore, it is also important to explore how teachers design and integrate gamified environments into education and teaching.

2.2. Educational games in language learning, refugee context and Arabic language

The use of learning games for language acquisition is not new, and many researchers have focused on digital games for language and second language learning (Hung et al. Citation2018; Poole and Clarke-Midura Citation2020; McKiddy Citation2020). Most studies have investigated the following categories of language acquisition using games: alphabets, listening, reading, speaking, writing, vocabulary, grammar, pronunciation, and mixed or integrated skills, focusing mainly on formal education (Hung et al. Citation2018; Kocaman and Cumaoglu Citation2014; Neville, Shelton, and McInnis Citation2009; Jalali and Dousti Citation2012; Chen and Yang Citation2013). Despite the increase in the emerging literature on digital GBL and its educational value, empirical evidence is scarce concerning its effectiveness in language education (Godwin-Jones Citation2014). Chen and Yang (Citation2013) investigated the effects of using an adventure game for college students’ foreign language learning. They found no significant difference in the vocabulary score of the two groups. However, the students perceived the game to be helpful in improving their motivation and language skills. Jalali and Dousti (Citation2012) explored the impact of educational games on grammar and vocabulary gain of elementary students and found no significant differences between the two groups (experimental and control).

The devastating impact of the Syrian conflict on refugee children and their education led researchers to focus on literacy education interventions for serving Syrian refugee children (Wofford and Tibi Citation2018; Al Janaideh et al. Citation2020). A review study juxtaposing refugee needs with mobile learning apps characteristics found that mobile learning is beneficial for refugees (Drolia et al. Citation2020). The study concluded that mobile learning provides access to education and also improves the quality of education provided to refugees. Drolia et al. (Citation2022) highlighted in their study that mobile learning research focusing on social groups such as refugees and learners with disabilities or learning difficulties is very limited. The review study focused on existing mobile learning applications for refugees and their characteristics, and the most important characteristics included refugees’ cultural features and interwoven psychological and educational features. Many researchers have focused on language learning tools for refugees and on identifying their language learning needs (Castaño-Muñoz, Colucci, and Smidt Citation2018; Abou-Khalil et al. Citation2019). Akçelik and Eyüp (Citation2021) found that educational games are effective for teaching vocabulary and improved refugee students’ Turkish vocabulary knowledge. According to Hung et al. (Citation2018), English as a second language is the most targeted in the digital game-based language learning (DGBLL) literature, whereas other languages like Arabic are lacking. Although research has highlighted the need for Arabic language and literacy skills for the academic achievement of refugee students (Baddour Citation2020), very few DGBLL initiatives focus on Arabic literacy skills (Czauderna and Guardiola Citation2019). Azizt and Subiyanto (Citation2018) investigated the effects of digital GBL on high school students’ Arabic reading skills. They found it useful for increasing students’ academic performance in Arabic learning (the experimental group scored significantly higher). Kenali et al. (Citation2019) investigated the impact of using smartphone language games on Arabic speaking skills in non-native speakers by conducting an experiment with university students. The findings showed a significant positive effect on the students’ speaking skills. Sahrir and Alias (Citation2012) found a positive perception of learning Arabic using online games among university students. Moreover, Putri et al. (Citation2021) reported in their study that educational games are effective in increasing Arabic vocabulary in higher education by increasing learning motivation and making it easier for students to understand the content. Eltahir et al. (Citation2021) also investigated the impact of GBL on an Arabic language grammar course in higher education and found that students using GBL showed more improved knowledge of Arabic grammar concepts and higher motivation compared to students taught with a traditional strategy. According to a review by Murtadho (Citation2021), there is increased interest in digital tools among Arabic language educators. However, their availability and usage are still limited in Arabic classrooms and require more successful management, acquisition, and use.

Moreover, as highlighted by Masrop et al. (Citation2019), there is a lack of DGBLL studies in Arabic language learning, and only a few Arabic learning games are dedicated to children. According to bin Zainuddin et al. (Citation2021), digital game-based language learning applications for the Arabic language are much needed for primary school children to improve their Arabic language proficiency. Ali Ramsi (Citation2015) analysed Arabic learning games for children and found that they are generally simplistic, revolve around the same trivial idea, lack a systematic design process, and do not have quality animation, colours, graphics, and voice-over. Similar results were reported by Masrop et al. (Citation2019) from their analysis of existing games. In addition, they found that Arabic language learning games are mostly limited to alphabet content and lack engaging features. The majority of studies in DGBLL focused on higher education/university students, and only a few studies have explored the individual differences of learners and how these affect their learning and content knowledge (Hung et al. Citation2018). Younger age groups, informal learning setups, and learner characteristics are less explored areas and need attention.

2.3. Learner characteristics

Previous research indicates that not all students perform equally in technology-enhanced learning environments, due to factors related to learner characteristics (such as learning styles and prior knowledge) and/or the learning environment itself that influence student success (Terrell and Dringus Citation2000; Birch and Bloom Citation2002; Wojciechowski and Palmer Citation2005). With the growing use of learning technologies, there is an increased demand for research concerning learner characteristics (Nakayama, Yamamoto, and Santiago Citation2007; Wojciechowski and Palmer Citation2005). The review on serious game research by Wouters, Van der Spek, and Van Oostendorp (Citation2009) identified the lack of understanding of mitigating factors (such as age and gender) that affect learning with serious games. Nakayama, Yamamoto, and Santiago (Citation2007) emphasised the need to better understand the learner characteristics that influence learning and the need for an effective design that customises the learning activities to serve the individual characteristics and learning needs of different users. Bontchev, Terzieva, and Paunova-Hubenova (Citation2021) focused on principles for the personalisation of gameplay and learning content in serious games based on player and learner-related aspects of the student’s profile. The research findings highlighted the importance of including characteristics such as the student’s age, goals, level of knowledge, and learning style in the student model for the personalisation of learning content in serious games. Belay, McCrickard, and Besufekad (Citation2016) emphasised the need to consider parameters such as technology exposure, computing literacy, and level of help required for user classification and to cater to these differences in the design. Ariffin (Citation2013) found that none of the 16 identified game evaluation frameworks concentrated on learner background (such as culture, spoken language, and ethnicity). However, Jossan, Gauthier, and Jenkinson (Citation2021) investigated the impact of culture (cultural integration and cultural associations) on students’ views and acceptability of GBL while adjusting for exposure to video gaming and gender. The study found differences between individuals associating with different cultural groups, providing insights into cultural considerations for GBL design and evaluation for international populations. The study suggested that culture should be assessed more broadly, as culturally aware design of GBL can further support learning. Moreover, Osman and Bakar (Citation2012) stressed that factors related to learners’ background should be included in the educational game design model, as this helps to provide effective learning experiences and further refine the game design.

The learner characteristics that researchers focus on most are gender, prior experience, age, background, and learning style (Nakayama, Yamamoto, and Santiago Citation2007; Chen and Huang Citation2013). Sundqvist and Sylvén (Citation2014) explored the English language-related activities and digital game playing of learners outside of school and found significant gender differences in gaming habits between girls and boys, the latter being more frequent gamers. Sundqvist and Wikström (Citation2015) investigated the relationship between the students’ English learning performance in school and the frequency of out-of-school gameplay. The results indicated that, particularly for male students, the English learning measures are correlated with their gameplay experience. Similar results were found by Erfani et al. (Citation2010). In addition to gender, they also found a significant influence of age on performance. Chen and Huang (Citation2013) demonstrated that prior knowledge (prior digital games experience and digital games playing frequency) has positive effects on GBL, but only in the context of declarative knowledge. In contrast, results from Kim and Chang (Citation2010) showed that individual differences in computer experience, prior mathematics knowledge, and English language skills had no significant effect on students’ achievement when using a computer game. Ariffin and Sulaiman (Citation2014) investigated the effectiveness of GBL in higher education, focusing on the learner’s background (culture, ethnicity, and language) and the GBL environment. They found a strong correlation between learners’ background and learners’ motivation, and between learners’ motivation and learners’ performance. The research proposed that the learner’s background parameters affect the learner’s learning performance and should be integrated within the GBL environment. Numerous research studies focus on students’ learning modality preferences and how they affect learning (Alkhasawneh et al. Citation2008; Aslaksen et al. Citation2020). Although many research studies show that modality does not affect learning, some researchers think that different media may afford different instructional methods. Therefore, different instructional media's distinctive characteristics and functional capabilities might be relevant to the learning process, which determines their effectiveness (Moreno Citation2006; Rummer et al. Citation2011). Moreover, based on the results from studies focusing on learning style, many researchers advocate the notion of multimodal learning (Aslaksen et al. Citation2020).

3. Materials and methods

This section presents the methodology adopted for this research study. To define the study dimensions and develop a GBL evaluation plan, the authors propose an integrated approach as a guide for planning a GBL evaluation. The approach is inspired by the LEAGUÊ (Learning, Environment, Affective–cognitive reactions, Game factors, Usability, UsEr) framework (Tahir and Wang Citation2020) and the GQM (Goal Question Metric) model (Caldiera and Rombach Citation1994). The LEAGUÊ-GQM approach is used to identify and select the GBL evaluation criteria with respect to evaluation purposes to verify the specified objectives of a learning game. In this way, the approach guides researchers and practitioners interested in evaluating learning games in different domains (Calderón and Ruiz Citation2015). The next sections describe the approach and its application in a user study to develop an evaluation plan and demonstrate its use.

3.1. Integrated LEAGUÊ-GQM approach for GBL evaluation

The proposed approach facilitates the GBL evaluation process by providing GBL-specific evaluation criteria on three levels to create a strategy and plan for evaluating learning games. The proposed integrated LEAGUÊ-GQM approach is made up of two parts (Table 1) and provides a LEAGUÊ-GQM evaluation guide (Table 2) for guiding the steps in the approach. The two parts are as follows:

P1) Define the evaluation type and data based on the GBL development stage and the rationale behind the evaluation.

P2) Develop a LEAGUÊ tree and evaluation plan using a three-step parallel process based on the LEAGUÊ-GQM evaluation guide.

Table 1. Integrated LEAGUÊ-GQM approach.

Table 2. LEAGUÊ-GQM evaluation guide.

Educational games are evaluated at different development stages with different purposes/rationale (Calderón and Ruiz Citation2015; Tahir and Wang Citation2017). The type of GBL evaluation is linked with the educational game development stages and the rationale behind the evaluation (Steiner et al. Citation2015; Connolly, Stansfield, and Hainey Citation2009; Zaibon and Shiratuddin Citation2010). Likewise, when designing an evaluation study, it is important to select the criteria and measures in line with the evaluation rationale, type, and data to guide the learning game's evaluation process (Calderón and Ruiz Citation2015), as there is no single approach for designing an evaluation study (Patton Citation2008; Diamond, Horn, and Uttal Citation2016). Each evaluation study is distinct and, as highlighted by Dondi and Moretti (Citation2007), identifying criteria for GBL evaluation is a time-consuming and complex process. Hence, a guiding approach like LEAGUÊ-GQM outlines the important criteria in three levels that can guide planning and designing an evaluation strategy, from articulating the goal, through formulating questions and deciding data sources, to specifying measures and analysis methods. The process is useful for creating a GBL evaluation strategy and plan to guide the evaluation of learning games for both the analytical (single aspect) and global (holistic) evaluation process, depending on the required evaluation type. The LEAGUÊ framework (Tahir and Wang Citation2020) serves as the GBL theoretical foundation in the proposed approach, providing the core GBL components. It presents the GBL criteria, listing six dimensions, twenty-two factors, seventy-four sub-factors, ten relations, and five metric types, which are incorporated in the LEAGUÊ-GQM evaluation guide (see Table 2) for developing a LEAGUÊ tree. The LEAGUÊ tree provides the structure for the evaluation plan, listing the selected GBL evaluation criteria. GQM, on the other hand, provides three measurement levels (conceptual, operational, and measurement) for defining the evaluation plan. Moreover, the evaluation guide also presents data sources (adopted from Petri and von Wangenheim Citation2017; Tahir and Wang Citation2019) and analysis methods (adapted from Petri and von Wangenheim Citation2017) for guiding the steps in the LEAGUÊ-GQM approach. The complete approach is presented in Table 1, and the evaluation guide is presented in Table 2.

In the first part (P1), the type of evaluation (Steiner et al. Citation2015) (pre-prototype, formative, summative) and the kind of data that will be collected (Petri and von Wangenheim Citation2017) (qualitative, quantitative, both) are determined depending on the learning game's development stage (Zaibon and Shiratuddin Citation2010) and evaluation rationale (the purpose behind the GBL evaluation) (Steiner et al. Citation2015; Connolly, Stansfield, and Hainey Citation2009). Three main types of evaluation can be conducted over the GBL development life-cycle, and identifying the type can help make better decisions by providing the right kind of data at the right time (Aslan and Balci Citation2015; Faizan et al. Citation2019). Pre-prototype evaluation is conducted before the development stage begins, to incorporate and assess design ideas (for example, through participatory design Danielsson and Wiberg [Citation2006] or user acceptance testing of a pre-prototype Davis and Venkatesh [Citation2004]) and to collect benchmark data for subsequent comparative analyses concerning the impact on game project outputs (Steiner et al. Citation2015). Formative evaluation is conducted during the development process to highlight any weaknesses or ambiguity in the learning game prototype or its different versions (Steiner et al. Citation2015; Connolly, Stansfield, and Hainey Citation2009). Summative evaluation is conducted at the end of the game development process, or after the game has been launched, to evaluate the potential of the end-product (Steiner et al. Citation2015; Connolly, Stansfield, and Hainey Citation2009).

In the second part (P2), a three-step parallel process is used to incrementally develop a LEAGUÊ tree and an evaluation plan, using the elements from the LEAGUÊ-GQM evaluation guide (see ) and following the GQM levels, as described in . The evaluation guide for the integrated LEAGUÊ-GQM approach (presented in ) specifies the GBL evaluation criteria of the three GQM levels, and evaluators can pick and choose components for each step. Depending on what is to be evaluated, dimensions, factors/sub-factors, relations, data sources, metric types, and analysis methods can be selected for pre-prototype evaluation to verify the idea, formative evaluation to identify issues and inform design changes, and summative evaluation to determine the effectiveness of the developed game. Each of the three steps of the approach corresponds to one of the three levels of GQM: conceptual, operational, and measurement. There are two simultaneous activities at each level: development of the LEAGUÊ tree and development of the evaluation plan. The LEAGUÊ tree is developed by selecting relevant dimensions, factors/sub-factors, relations, and metric types from the evaluation guide for the specific evaluation study. The evaluation plan is developed by elaborating on the LEAGUÊ tree elements in each step (which act as a guide) to establish the goal, questions, data sources, specific measures, and analysis methods.

The first step is related to the conceptual level, in which the preliminary evaluation purpose is defined and dimensions are selected from the LEAGUÊ-GQM evaluation guide to refine that purpose into the final evaluation goal. The second step is the operational level, in which this goal is broken down using factors/sub-factors and defined using relations (if required) from the evaluation guide to formulate quantifiable and assessable questions that assess the goal. The data source(s) for collecting the required evaluation data are also specified at this level using the evaluation guide. The third and final step is the measurement level, where measures and analysis methods are defined for each question by selecting metric types from the evaluation guide. Each metric type is elaborated with specific measures based on the selected data sources. The specific evaluation measures are easy to define given the selected data sources and metric types. For example, the specific measures for the metric type ‘score’ can be defined from the selected data sources ‘pre/post-test’ and ‘usability checklist’ as pre/post-test score and usability checklist score (illustrated in the user study, see ). Since the metric types are categorised as objective or subjective, this categorisation guides the selection of relevant analysis methods. The data analysis methods can be selected from the evaluation guide based on qualitative or quantitative data to provide information, answer the formulated questions, and accomplish the goals set in the previous steps. At this point, the evaluation guide incorporates only two qualitative analysis methods based on our experience in GBL evaluation. However, it would be helpful to incorporate a list of different qualitative methods used for GBL evaluations in the LEAGUÊ-GQM guide in the future.
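The three levels described above can be pictured as a small nested data structure. The following is only a minimal sketch under stated assumptions: the class names `EvaluationPlan` and `Question`, the helper `derive_score_measures`, and the goal and question wording are hypothetical illustrations and are not part of the LEAGUÊ-GQM guide. It shows how a ‘score’ metric type might be elaborated into one specific measure per selected data source.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Question:
    """Operational level: a question, its factors, and its data sources."""
    text: str
    factors: List[str]
    data_sources: List[str]
    # Measurement level: metric type -> specific measures for this question.
    measures: Dict[str, List[str]] = field(default_factory=dict)
    analysis_methods: List[str] = field(default_factory=list)


@dataclass
class EvaluationPlan:
    """Conceptual level: the evaluation goal and the selected dimensions."""
    goal: str
    dimensions: List[str]
    questions: List[Question] = field(default_factory=list)


def derive_score_measures(data_sources: List[str]) -> List[str]:
    """Name one 'score' measure per selected data source (hypothetical helper)."""
    return [f"{source} score" for source in data_sources]


# Illustrative wording only; not the goal or questions used in the study.
plan = EvaluationPlan(
    goal="Evaluate the effectiveness of a learning game",
    dimensions=["learning", "usability"],
)
question = Question(
    text="Does the reading assessment score change after play?",
    factors=["learning outcome"],
    data_sources=["pre/post-test", "usability checklist"],
)
question.measures["score"] = derive_score_measures(question.data_sources)
plan.questions.append(question)

print(question.measures)
```

Keeping the measures attached to each question mirrors the idea that every question is answered by its own measures and analysis methods.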

Table 3. User study: FTM.

3.2. User study: evaluating a language learning game using integrated LEAGUÊ-GQM approach

This section illustrates how the integrated LEAGUÊ-GQM approach was used to develop an evaluation plan for the user study on evaluating the potential of the Feed the Monster (FTM) GBL application for reading skills of migrant refugee children.

3.2.1. Feed the monster: Arabic language learning game

Feed the Monster (FTM) is an Arabic language learning game developed as part of the EduApp4Syria project for refugee children to improve their literacy skills in Arabic. FTM is about helping kind monsters grow and prosper by feeding them letters, words, and sentences. The storyline depicts a world where an evil character, ‘Harboot’, destroys and conquers the land of the friendly monsters, sends them into exile, and casts a magic spell that turns them into eggs. The player needs to feed these eggs with Arabic letters, syllables, and words in each game level to help them grow and evolve. The storyline was designed to mirror the experience of refugee children, to nurture hope and to support their native language acquisition. The ‘friendly monsters’ are used as game characters to help children cope with their fears. In the game, the players act as the monsters’ main caregivers, helping them grow and manage their emotions. The players can unlock new monster friends as they progress in the game.

The main game mechanism is feeding the Monster with the correct answer (letters, syllables, words) based on the level activity. The game divides the Arabic alphabet into small clusters (letter groupings) of five to six letters. Each cluster introduces four or five visually distinct letters and one vowel. Every cluster starts with letters (shape and sound); players then practise vowel variations (shape and sound), letters in a syllable segment (written form and sound), and letter sequences within a word; lastly, they practise words (made of letters already learned in the cluster) and their sounds. The small clusters make learning Arabic easy and practical for children because they start learning full words just after the first 5–6 letters, making it fun and engaging. In each task/activity, the player must feed the Monster the correct letter, vowel variation, syllable segment, or letter sequence in the correct spelling order, either by matching the letter to a copy of the letter or by matching the letter to its sound. Each cluster takes 15–20 min to complete, and then the player moves to the next cluster (letter grouping). The player can earn one to three stars in each level depending on performance (the number of correct answers and the time taken to answer). Correct answers make the Monster happy. Players earn scores in each level, and periodically the Monster grows bigger. Incorrect answers make the Monster sad, but the child can pet the Monster to make it happy. Instructive feedback is provided by the Monster spitting out the incorrect answer. There are also three mini-games: drawing letters, a memory game, and collecting gifts.

This GBL application is designed for out-of-school children as a supplementary resource to play at home with minimum adult supervision. The GBL design aimed to provide effective literacy learning opportunities to Arabic-speaking refugee children to improve their literacy skills and psychosocial well-being. Therefore, the game elements used in this GBL application focused on three pillars: ease of use and an engaging game experience, Arabic reading acquisition, and improvement in psychosocial well-being. The game design uses an intriguing storyline and the character of a friendly monster to engage children by providing a journey of friendship and discovery. With refugee children in mind, the game’s storyline presents a fantasy world designed to nourish hope. The monster character and simple interactions such as feeding are used to build an association with children while keeping the game easy to use.

3.2.2. Evaluation plan using the LEAGUÊ-GQM approach

We started by defining the evaluation type and data and planned to conduct a summative evaluation of the final released version of the FTM game with target users using quantitative and qualitative data to test the hypothesis and explore user behaviour and experience in-depth. Next, we used the integrated LEAGUÊ-GQM evaluation guide and followed the three-step parallel process to develop the LEAGUÊ Tree and evaluation plan. presents the complete evaluation plan.

The rationale of this evaluation, which is related to the EduApp4Syria project (Nordhaug Citation2019), was to evaluate the effectiveness of a GBL approach (the FTM game) for teaching Arabic skills to refugee children in an informal learning setup and without the need for parents’ help. The dimensions in the LEAGUÊ evaluation guide directed us to choose learning, game factors, usability, and user characteristics, which matched the initial rationale and defined our evaluation goal. Further, the LEAGUÊ evaluation guide made it easier to formulate the assessment plan by outlining the factors, relations, and metric types that were relevant for our selected dimensions and motivated by the employed game. Each level is elaborated below.

For the conceptual level, the dimensions learning, game factors, usability, and user were selected from the LEAGUÊ evaluation guide, which helped define the evaluation goal using the GQM template as shown in . For the operational level, the factors/subfactors selected from the evaluation guide are listed in . In addition, we targeted the relation User & (Learning, Game factors, Usability) to formulate five evaluation questions (see ). The data sources for collecting the relevant data were selected with the chosen factors/subfactors in mind: pre/post-test for obtaining children's learning gain (learning outcome) with the game; game log data for recording gameplay performance; observation checklist, observation notes, and short interview for collecting data related to usability (interface, learnability, satisfaction); demographic questionnaire for collecting the bio-demographics and experience data; and learning modality preference questionnaire (VARK) for recording children's preferences related to the learner profile. Finally, for the measurement level, we selected four metric types from the evaluation guide: Scores, Time, Number of occurrences, and Reviews/responses/opinions, focusing on both objective and subjective data. Each metric type was used to identify the specific measures for the defined data sources (from the previous step), as shown in . Lastly, four analysis methods (quantitative and qualitative) were selected to answer the formulated questions.

3.2.3. Sampling

During Spring 2018, 30 children aged 5–10 years from refugee migrant backgrounds, who spoke Arabic but did not have reading or writing skills in Arabic, participated in our study. None of the participants had previous experience with the FTM game. These participants were selected because FTM is an Arabic language learning game specifically designed for refugee children to improve their literacy skills in Arabic. Therefore, it was important that participants did not have Arabic literacy skills or previous experience with this game, so that the results on the effectiveness of GBL would be accurate. The sample comprised 14 girls (mean age: 7.14, SD: 1.875) and 16 boys (mean age: 7.125, SD: 1.746).

In this study, the participants were recruited and contacted through teachers of the weekend class at Muslim Society Trondheim (MST). The study was organised in nine sessions over one month with migrant refugee children selected through this weekend class program at MST, Norway. The weekend program is an initiative organised at MST, a non-profit, religious, and cultural organisation in Trondheim, Norway. The sessions took place before the weekend class program, and 3–5 children participated in each session. A translator was also present in each session. The study was conducted in two rooms assigned by an MST representative.

3.2.3.1. Ethical issues in research with refugee children

The ethical issues when researching with refugee children included gaining access, privacy, language barriers, and consent, as participants are mostly reached through trusted NGOs, qualification programs, or religious societies. The details are presented in our previous study (Tahir and Wang Citation2019). Petousi and Sifaki (Citation2020) highlighted in their research the need for building trust and confidence. Therefore, the research study was thoroughly explained to the MST representatives and teachers at the weekend school to gain their trust before obtaining informed consent from children and parents. Later, the researcher contacted the participants’ parents to obtain consent from the legal guardian for the data collection. The researcher also confirmed the child’s consent to participate in the study.

The study had been notified to the Data Protection Official for Research, Norwegian Centre for Research Data (NSD), and ethical approval was obtained. The parents were required to give consent on behalf of their children if they were willing to let their children participate in the research, and they could request to see the observation checklist, pre/post questionnaire, and interview guide. The parents were asked to sign the written informed consent form. The consent form provided the background and purpose of the research, along with information to enable parents to understand what participation in the project implied and to decide voluntarily whether to give permission to participate. If parents agreed, assent was obtained orally from the child. The researcher explained the research activity and asked whether the child wished to participate, and the child decided whether the research (as he/she understood it) was an activity in which he/she wanted to participate. If the child said no, he/she was free to withdraw from the research. However, as the research involved young children whose decisional capacities may fluctuate, the researcher came back after some time to a child who had said ‘no’ to see whether he/she felt differently. Participation in the research was voluntary, and participants could withdraw their consent at any time without giving any reason, in which case their data would be removed. Moreover, there were no negative consequences if they chose not to participate or later decided to withdraw. They were also informed that all information would be anonymised, that personal data would be processed confidentially and in accordance with data protection legislation, and that personally identifiable information would be removed, re-written, or categorised so that participants would not be recognisable in the publication.

3.2.4. Study design

A quasi-experiment with a one-group pre–post-test design was used for this study. The experiment was designed to be one-week-long and comprised two parts: a playtest session (40–55 min duration) and one-week play at home. Children and their parents were invited to MST (for the playtest session and instructions for playing at home). Two rooms were specified for this study, where children played the FTM game using smartphones provided by the researchers. The children's demographic and learning modality preferences data were collected from parents using questionnaires. Children played the game individually with one or two observers. The user study was conducted with children without (or with a minimum) Arabic reading and writing skills. A translator and two to three observers (two GBL experts and one novice) were present throughout the intervention focusing on observing, taking notes, and managing the user study's overall execution. A translator (with Arabic and English fluency) was required as most of the parents were not fluent in English.

The first part of the user study (playtest session) had three main sections: (1) a pre-test (10–15 min) to examine children's previous knowledge of Arabic reading skills; (2) a gameplay session (20–25 min), where children played the game; and (3) a short interview (10–15 min) to ask children follow-up questions regarding their game experience and the issues encountered while playing. Each child played the FTM game individually with one observer. The observer was responsible for taking notes using an observation checklist and helping the child if he/she got stuck. An example observation checklist form and guidelines for filling out the observation checklist were provided to all observers before the study to ensure a shared understanding. Children were free to stop playing the game before the session was finished if they did not like it. In the follow-up interview, children were asked to do a few simple tasks (pause game, replay, turn music on/off, return to level screen) if they had not explored these features during the gameplay session, and were asked some questions related to their experience. After the playtest session, smartphones with FTM installed were handed to the parents to let children play the game at home daily for one week (for at least 20 min per day). Finally, a post-test was conducted with children when the parents returned the smartphone after one week.

3.2.5. Data sources

The data sources used in this study were (listed in ):

Pre/Post Test: The Early Grade Reading Assessment (EGRA) was used (before and after playing the FTM game) to test Arabic reading skills, focusing on the following five tasks: Arabic print orientation knowledge, Arabic letter knowledge, Arabic syllable knowledge, recognition of the initial sound in an Arabic word, and Arabic word segmentation. Considering the short duration (only one week) of the experiment, we only focused on the Arabic letters in the game's first two clusters (see Section 4.2.2 for game clusters). Therefore, the EGRA test was adapted to include only 10 Arabic letters, syllables, and possible words focusing on these clusters.

Game Log Data: The main data in the game log files included the following details: Session Start, Profile ID, Level Start, Question Start, Answer Response, Wrong Answer, Level End, Rewards Gained, Level Score, Session End. The game log data were recorded for both the gameplay session and the one-week play at home.

Observation checklist: An observation checklist was developed to record all the actions, behaviours, and expressions of children while observing them playing the game. The design of the checklist was adapted from the LEAGUÊ framework (Tahir and Wang Citation2020) to our research context. It contained 36 items (constructed using positive phrasing [Diah et al. Citation2010]) focusing on factors/subfactors of the usability dimension, mapped to the functionalities of the game and the order in which the players are supposed to perform activities/tasks in the ideal scenario (Diah et al. Citation2010).

Demographic questionnaire: This included questions related to bio-demographics (such as age, gender, education of the child, level of education of parents, digital literacy, refugee background, country, and spoken language) and mobile usage experience (mobile usage expertise, dependency, frequency, time spent and years of use).

Learning modality preference questionnaire: VARK for littles was used to collect the learning modality preference of children. VARK stands for visual (11 questions), aural (13 questions), read/write (11 questions), and kinesthetic (11 questions). The parents were asked to fill in this form based on what matched their child's activities and preferences for each category. Each category has a score, and the highest score is considered the preferred modality.
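The scoring rule for the modality questionnaire can be sketched in a few lines. This is a minimal illustration, not the official VARK scoring procedure: the function name `preferred_modality` is hypothetical, the example scores are invented, and labelling a tie between top categories as "multimodal" is an assumption that the text does not specify.

```python
def preferred_modality(scores):
    """Return the category with the highest score.

    Assumption (not stated in the study): if two or more categories share
    the highest score, the child is labelled 'multimodal'.
    """
    top_score = max(scores.values())
    leaders = [name for name, value in scores.items() if value == top_score]
    return leaders[0] if len(leaders) == 1 else "multimodal"


# Invented example scores, for illustration only.
single = preferred_modality(
    {"visual": 4, "aural": 9, "read/write": 3, "kinesthetic": 5}
)
tied = preferred_modality(
    {"visual": 7, "aural": 7, "read/write": 2, "kinesthetic": 5}
)
print(single, tied)
```

Because the categories have different numbers of questions, a real analysis might first normalise each score by its question count; that refinement is left out here.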

3.2.6. Data analysis

For the quantitative data analysis (RQ1–4 in ), the IBM SPSS Statistics v27 software was used. We conducted a Wilcoxon Matched Pairs Test to test for any difference in the Arabic reading assessment (EGRA) score of refugee children following a GBL intervention. To investigate differences between younger and older children, we split the sample using the age of six as a threshold, following Piaget's theory of cognitive development (Huitt and Hummel Citation2003) and focusing on the pre-operational (younger children) and concrete operational (older children) stages. We conducted the Mann–Whitney test to examine any differences between the children’s age groups in learning gain, usability score, and gameplay performance. Spearman correlation was used to identify any correlations between children's learning modality preference and their learning, usability score, and gameplay performance, and between mobile usage experience and their learning, usability score, and gameplay performance. Furthermore, the grounded theory approach proposed by Gioia, Corley, and Hamilton (Citation2013) was used to analyse and interpret the qualitative data (collected through observation notes and interviews) and to present a data structure for usability issues.
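The data preparation implied by this analysis plan can be sketched as follows. The sketch assumes that learning gain is the post-test score minus the pre-test score and that children aged six or younger form the younger group; the function names and the records are hypothetical and are not data from the study.

```python
from statistics import median

AGE_THRESHOLD = 6  # children aged 6 or below are treated as the younger group


def learning_gain(pre_score, post_score):
    """Learning gain as post-test score minus pre-test score."""
    return post_score - pre_score


def age_group(age):
    """Assign a child to the 'younger' or 'older' group by age."""
    return "younger" if age <= AGE_THRESHOLD else "older"


# Hypothetical records, for illustration only.
children = [
    {"age": 5, "pre": 2, "post": 9},
    {"age": 6, "pre": 3, "post": 8},
    {"age": 8, "pre": 6, "post": 9},
    {"age": 10, "pre": 7, "post": 9},
]

gains_by_group = {"younger": [], "older": []}
for child in children:
    gain = learning_gain(child["pre"], child["post"])
    gains_by_group[age_group(child["age"])].append(gain)

# Medians are reported because the later tests are rank-based.
median_gain = {group: median(gains) for group, gains in gains_by_group.items()}
print(median_gain)
```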

4. Results

This section presents the results from the quasi-experiment concerning the five research questions. The first four questions concern quantitative data analysis using statistical tests. We report the differences in the learning outcome, examine differences between children's age groups, and identify the effect of learning modality preference and mobile usage experience on learning gain (LG), usability score, and gameplay performance. Lastly, the fifth question concerns qualitative analysis and examines the usability issues faced by migrant refugee children when playing the game for the first time.

4.1. Learning outcome with game-based learning (RQ1)

This research question focused on the potential learning gain (difference in pre and post-test of Arabic reading assessment scores) of migrant refugee children after using a game-based language learning approach in an informal learning setup. Our null hypothesis was that there is no difference in the Arabic reading assessment score of migrant refugee children from playing the FTM language learning game for one week at home.

A Wilcoxon Matched Pairs Test was conducted to evaluate whether there was a difference in the pre- and post-test scores of refugee children following a GBL intervention. The results revealed a statistically significant positive change in the Arabic reading assessment score after playing the Arabic language learning game (‘Feed the Monster’), z = −4.7821, p < .00001. The result is significant at p < .05, with a large effect size (r = 0.873) according to Cohen’s (Citation1988) criteria.
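The reported effect size is consistent with the common conversion r = |z| / √N, taking N as the 30 participants. The text does not state which formula was used, so treating this as the calculation is an assumption. The function names below are illustrative, and the thresholds (0.1 small, 0.3 medium, 0.5 large) are Cohen's conventional benchmarks for r.

```python
import math


def effect_size_r(z, n):
    """Convert a z statistic to the effect size r = |z| / sqrt(n)."""
    return abs(z) / math.sqrt(n)


def cohen_label(r):
    """Classify r using Cohen's conventional benchmarks."""
    r = abs(r)
    if r >= 0.5:
        return "large"
    if r >= 0.3:
        return "medium"
    if r >= 0.1:
        return "small"
    return "negligible"


# z and N as reported in the text; N = 30 participants is the assumed divisor.
r = effect_size_r(-4.7821, 30)
print(round(r, 3), cohen_label(r))  # 0.873 large
```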

4.2. Differences in age groups (learning gain, usability score, and gameplay performance) (RQ2)

This research question investigated how children's learning gain (LG), usability score, and gameplay performance from using a learning game differ between two age groups: younger (5–6 years old) and older children (7–10 years old). Our null hypothesis was that there are no differences between younger and older children’s learning gain, usability score, and gameplay performance with the FTM language learning game.

A Mann–Whitney test was used to examine the differences between younger and older children. The independent variable was the children’s age (younger or older). The dependent variables were learning gain, usability score, and gameplay performance parameters (total levels, total time, total wrong, total sessions, score in gameplay session). This test is appropriate for our quasi-experiment as it is a nonparametric test used to compare differences between two independent groups where the samples can be of different sizes and when the dependent variable is either ordinal or continuous. The results from the Mann–Whitney Test are shown in .
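To make the test concrete, the following is a minimal pure-Python sketch of the Mann–Whitney U statistic with a normal approximation. It is not the SPSS procedure used in the study: it omits the tie correction of the variance and the continuity correction, the function names are illustrative, and the two samples are hypothetical values rather than study data.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U, z, r) for two independent samples.

    z uses the normal approximation without tie or continuity correction,
    and r = |z| / sqrt(n1 + n2).
    """
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n1])
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    u_b = n1 * n2 - u_a
    u = min(u_a, u_b)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    r = abs(z) / math.sqrt(n1 + n2)
    return u, z, r


# Hypothetical 'total levels played' values, for illustration only.
younger_levels = [4, 6, 7, 9]
older_levels = [8, 10, 12, 15, 11]
u, z, r = mann_whitney_u(younger_levels, older_levels)
print(u, round(z, 3), round(r, 3))
```

The groups here have different sizes on purpose, since the test does not require equal sample sizes.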

Table 4. Mann-Whitney test results.

shows significant differences in learning gain (with medium effect size r = 0.4365) and usability score (with large effect size r = 0.5895) of younger and older children. The younger children had higher learning gain compared to the older children, while the older children had better usability scores than the younger children. Observations from the playtest session confirm these differences between younger and older children. Most younger children had difficulty recognising some icons/buttons/concepts and performing gestures (such as extended drag). In comparison, the older children thought that the game was too easy with no option to increase the difficulty. The results showed a statistically significant difference in total levels played (with medium effect size r = 0.4325) by younger and older children. The older children played more levels than younger children. However, there was no significant difference in the total time played, total sessions played, and total wrong answers between the two groups (p ≥ 0.05).

4.3. Effect of learning modality preferences (learning gain, usability score, and gameplay performance) (RQ3)

This research question investigated the effect of learning modality preference on the learning gain, usability score, and gameplay performance of migrant refugee children when playing the language learning game (FTM). Descriptive statistics showed that 33.33% of children had multimodal learning preference, 30% preferred aural, 23.33% preferred kinesthetic, and 13.33% preferred visual. Interestingly, none of the children preferred read/write as a distinct preference, as it was always preferred within multimodal learning preference.

A series of Spearman’s correlations were conducted to identify any correlation between the children’s learning modality preference parameters (visual, read/write, kinesthetic, and aural) and their learning gain (LG), usability score, and gameplay performance parameters (total levels, total time, total wrong, total sessions, score in gameplay session). Spearman correlation measures the strength and direction of the monotonic relation between two variables and is a nonparametric alternative to Pearson correlation. Spearman was used because the assumptions for Pearson correlation were not met. All results for Spearman’s correlations are presented in .
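The idea behind the rank correlation can be sketched with the classic formula ρ = 1 − 6Σd² / (n(n² − 1)), where d is the difference between the two ranks of each observation. This simplified form is valid only when neither variable contains tied values; real questionnaire scores often contain ties, in which case statistical software computes the Pearson correlation of average ranks instead. The function names and the data are hypothetical.

```python
def ranks_without_ties(values):
    """Rank distinct values from 1 (smallest) to n (largest)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def spearman_rho(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)); no ties allowed."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    if len(set(x)) != len(x) or len(set(y)) != len(y):
        raise ValueError("this simplified formula assumes no tied values")
    n = len(x)
    rank_x = ranks_without_ties(x)
    rank_y = ranks_without_ties(y)
    sum_d_squared = sum((a - b) ** 2 for a, b in zip(rank_x, rank_y))
    return 1 - 6 * sum_d_squared / (n * (n * n - 1))


# Hypothetical modality scores and usability scores, for illustration only.
modality_scores = [1, 2, 3, 4, 5]
usability_scores = [10, 30, 20, 50, 40]
rho = spearman_rho(modality_scores, usability_scores)
print(rho)
```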

Table 5. Spearman correlation results for learning modality preference.

The two-tailed test of significance indicated no significant relationship between the four learning modality preference variables and children's learning gain. This suggests that the preferred modality of learning did not affect children’s learning with the educational game. However, regarding the association between the four learning modality preference variables and the usability score, Spearman’s test revealed a relatively strong relationship with one of the four learning modality variables, as indicated in . There was a significant positive relationship between the preference for the read/write learning modality and children's usability score; that is, children who had a higher preference for the read/write modality had higher usability scores. The observations from the playtest session also revealed that many children were uninterested in the textual instructions provided in the game and would try to skip them without reading.

Lastly, Spearman’s test for the correlation between the four learning modality preferences and the four gameplay performance variables indicated a significant positive relationship between three out of the four learning modality preferences and two out of four gameplay performance variables, as shown in . Children's total time playing the learning game was positively related to their preference for visual, read/write, and aural learning modalities. Moreover, children's total levels were positively related to their preference for read/write and aural learning modalities. On the other hand, no significant relation was found between preference for the kinesthetic learning mode and gameplay performance variables. Overall, the results showed that usability and gameplay performance were higher for children who prefer the read/write and aural modalities for learning. These results are relevant in the context of the employed game (FTM), as the game strongly emphasised these two modalities of learning.

4.4. Effect of mobile usage experience (learning gain, usability score, and gameplay performance) (RQ4)

This research question investigated the effect of mobile usage experience on the learning gain, usability score, and gameplay performance of migrant refugee children when playing the language learning game (Feed the Monster). Again, Spearman’s correlation was used to identify any correlation between the children’s mobile usage experience parameters (mobile usage expertise, years of mobile use, dependency in mobile usage, frequency of usage, and time spent on mobile) and their learning gain (LG), usability score, and gameplay performance parameters (total levels, total time, total wrong, total sessions, score in gameplay session). The results are presented in .

Table 6. Spearman correlation results for mobile usage experience.

Spearman’s test indicated no significant relationship between learning gain and any of the four mobile usage experience parameters. This suggests that children’s experience in using mobile technology did not affect their learning with the educational game. On the other hand, Spearman’s test revealed a significant positive relationship between usability score and two of the four mobile usage experience variables, as indicated in . Children who had more mobile usage expertise and more years of mobile use had higher usability scores when playing the learning game for the first time. Regarding the association between mobile usage experience and gameplay performance parameters, Spearman’s test revealed a significant positive relation between mobile usage dependency and the total sessions played by children, and between years of mobile use and score in the play session. Interestingly, children who had higher mobile usage dependency (i.e. they do not use the mobile alone, but someone else mostly plays with them) played a greater number of sessions. In comparison, children with greater mobile experience (years of use) performed better in the game (higher score).

4.5. Usability issues faced by migrant refugee children (RQ5)

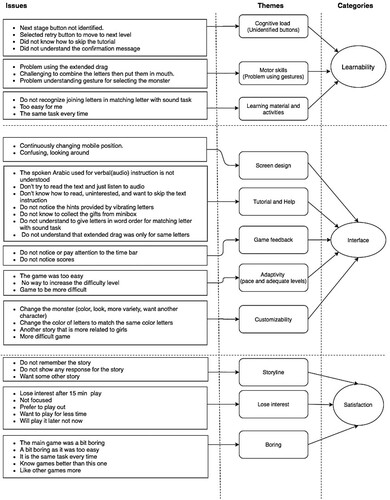

The last research question investigated the issues faced by migrant refugee children when playing the learning game (FTM) for Arabic reading skills. In addition to the usability observation checklist score, we also collected qualitative data from observation notes and short interviews with children. As reported by Creswell and Creswell (Citation2017), it is important to analyse the qualitative data of the research to deepen the understanding of research participants’ critiques. Therefore, the results reported here () are based on the observations (children's behaviour in the playtest session) and the interview responses. The interview gathered children's feedback regarding what they learned from the game (learning objectives, retention, learning task), their understanding of the game (game definition, narrative, gameplay, rewards), what they liked or disliked about the game, their favourite part of the game (game aesthetics, enjoyment, engagement, motivation), what difficulties they faced (interface), and whether they wanted to change anything (satisfaction and attractiveness).

We followed the procedure described by Gioia, Corley, and Hamilton (Citation2013) for analysing the data and presenting the issues found in the learning game. We developed a data structure with three levels (shown in ): the first level contains the issues highlighted in the raw data (from observations and children's interview responses), the second level identifies the themes for these issues, and the third level distils these themes into categories of usability issues. The LEAGUE framework (Tahir and Wang Citation2020) and the usability model (Tahir and Arif Citation2015) were used to analyse and combine the groups of codes into themes, which were then compared against each other to form categories.

According to the qualitative analysis (presented in ), the learnability category problems were related to cognitive/motor skills and to learning material and activities. Many children could not recognise the icons/buttons/concepts, or found it difficult to perform a gesture. Learning material and activities were not suitable for all users, were not comprehensive enough, and offered few opportunities. Older children in particular found them too easy. Deeper analysis showed that most children with cognitive or motor skills-related issues belonged to the younger age-group (5–6), which is in line with the quantitative results from RQ2. Moreover, issues related to learning material and activities and to adaptivity were also age-related: older children (7–10) wanted the game to be more difficult.

The interface category issues were related to screen design, tutorial and help, game feedback, adaptivity, and customisability. For screen design, mostly younger children experienced some confusion and had difficulty holding the mobile device while playing the game. For tutorial and help, the qualitative data from observation and interviews showed that children most liked the visual and audio instructions; however, some children had difficulty understanding the audio used in the game when they spoke a different dialect. On the other hand, many children were either uninterested in the textual instructions or did not know how to read them, and therefore tried to skip them. The instructional support provided through hints such as vibrating letters was not noticed by many children. Moreover, children did not completely understand some tasks and activities on their own, as details were not provided in the audio instructions. For game feedback, most children did not notice the time bar or score. For adaptivity, the game's pace was not suitable for many children (especially older ones), who thought it was not challenging enough, and the game offered no variation in difficulty level. For customisability, in addition to the need for different difficulty levels, many children wanted options to change characters, colours, and scenarios in the game.

Lastly, the satisfaction category issues were related to the following themes: storyline, lose interest, and boring. Many children were not engaged in the story of the game and did not remember it. For most children, the game held their interest for only a short duration. Moreover, some children found the game boring because of the limited variation in gameplay, and they thought they had other, better games to play.
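The three-level data structure described in this section (raw issues, themes, categories) can be sketched as a nested mapping. The encoding below is a hypothetical illustration in Python: the category and theme names follow the text above, the first-level entries are a shortened selection of the reported issues rather than the complete coded data, and the helper name `category_of` is an assumption.

```python
# category -> theme -> first-level issues (selection taken from the text above)
DATA_STRUCTURE = {
    "learnability": {
        "cognitive/motor skills": [
            "could not recognise icons/buttons/concepts",
            "difficult to perform a gesture",
        ],
        "learning material and activities": [
            "not suitable for all users",
            "too easy for older children",
        ],
    },
    "interface": {
        "screen design": ["confusion and difficulty holding the mobile"],
        "tutorial and help": [
            "audio hard to understand in a different dialect",
            "textual instructions skipped",
            "hints such as vibrating letters not noticed",
        ],
        "game feedback": ["time bar or score not noticed"],
        "adaptivity": ["pace not challenging enough"],
        "customisability": ["wanted to change characters, colours, scenarios"],
    },
    "satisfaction": {
        "storyline": ["not engaged in the story and did not remember it"],
        "lose interest": ["interest held for only a short duration"],
        "boring": ["little variation in gameplay"],
    },
}


def category_of(theme):
    """Return the category a theme belongs to, or None if it is unknown."""
    for category, themes in DATA_STRUCTURE.items():
        if theme in themes:
            return category
    return None


if __name__ == "__main__":
    for category, themes in DATA_STRUCTURE.items():
        print(category, "->", ", ".join(themes))
```

Keeping the raw issues at the leaves makes it possible to trace each category back to the observations or interview responses that support it.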

5. Discussion

This section discusses the results from the previous section, presents design recommendations derived from the main findings, and discusses some limitations of this evaluation study.

5.1. Discussion of the results

In this section, we discuss the results from our evaluation study with migrant refugee children (5–10 years old), in which we used quantitative and qualitative data to examine the effectiveness of a GBL approach for Arabic reading skills in an informal learning context (see Section 3.2.1 for the evaluation plan).

5.1.1. Impact on learning gain

The findings from this research study suggest that GBL is an effective learning tool for increasing the Arabic reading skills of refugee children in informal learning setups (RQ1). The test showed a statistically significant positive difference in the children's Arabic reading assessment score after they played the game (Feed the Monster) for one week at home. This is in line with similar research conducted in formal teaching setups, which also showed an increase in learning gain (Salah et al. Citation2016; Azizt and Subiyanto Citation2018; Kenali et al. Citation2019).

5.1.2. Age-group differences and need for adaptivity in educational games

To investigate differences between children's age-groups when using GBL, we divided the sample into younger children (5–6 years) and older children (7–10 years), following Huitt and Hummel (Citation2003). The key motivation was to analyse differences in learning gain, usability, and gameplay performance across age groups that could suggest design improvements for children’s learning games. Surprisingly, the younger children in this study outperformed the older children in terms of learning gain. The qualitative analysis revealed that the main reason for this difference was linked to the educational material and difficulty level of the game, which was too easy for older children; they therefore had lower learning gain and felt bored. According to a study by Greenberg et al. (Citation2010), the strongest motivator for 5th-grade children is perceived challenge. Therefore, the learning game should provide children with challenges (related to the main task) that are balanced with their skill level (Kiili et al. Citation2012; Csikszentmihalyi Citation2000). The research findings indicate the need for adaptivity in the learning game (Streicher and Smeddinck Citation2016; Plass and Pawar Citation2020; Peirce, Conlan, and Wade Citation2008). The game should increase the difficulty level in relation to player skills, and game designers should ensure that the challenges become more difficult as the player's skill level increases (i.e. generate game levels tailored to the player's knowledge) (Steiner et al. Citation2012; Kickmeier-Rust Citation2012; Lopes and Bidarra Citation2011; Andersen Citation2012).

However, as expected, the older children had a better usability score than the younger children. This led us to explore whether the age-groups differed in their mobile usage experience, which could explain the better usability among older children (who might be assumed to have more mobile experience). Therefore, we conducted another Mann–Whitney test and found no significant differences in mobile usage experience between the two age groups, suggesting that the differences in usability score between younger and older children were due to age-related factors and not to greater mobile usage experience. These results are also in line with the findings from the qualitative data, where younger children had difficulty performing complex gestures (such as extended drag) and recognising icons/buttons/concepts, and some also found the screen design a bit confusing. According to Piaget's theory of cognitive development (Huitt and Hummel Citation2003), these issues are related to cognitive and motor skills that are not fully developed in younger children. Furthermore, the results revealed a statistically significant difference in the number of levels played between younger and older children, with older children playing more levels than younger children. This can be linked to older children being more motivated by competition, as claimed by Greenberg et al. (Citation2010), and wanting to win the game by completing all levels.

To conclude, it appears that children’s age can be associated with their learning gain, usability, and gameplay performance (RQ2). Therefore, adaptivity is important in learning games, so that the game can adapt to each child individually for improved effectiveness (Andersen Citation2012; Vandewaetere et al. Citation2013).
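The adaptivity recommendation in this subsection, raising the challenge as the player's skill grows, can be illustrated with a very simple rule. The following Python sketch is a hypothetical example, not the mechanism of FTM or of any cited system: the function name, the level range, and the success-rate thresholds are all assumptions chosen for illustration.

```python
def next_difficulty(level, recent_results, min_level=1, max_level=10,
                    raise_at=0.8, lower_at=0.4):
    """Pick the next difficulty level from the outcomes of recent tasks.

    recent_results is a list of booleans (True = task solved). All
    thresholds and the level range are illustrative assumptions.
    """
    if not recent_results:
        return level  # no evidence yet, keep the current level
    success_rate = sum(recent_results) / len(recent_results)
    if success_rate >= raise_at:
        level += 1  # the player finds the tasks easy: raise the challenge
    elif success_rate <= lower_at:
        level -= 1  # the player is struggling: ease off
    # Keep the level inside the supported range.
    return max(min_level, min(max_level, level))


if __name__ == "__main__":
    # A child who solves every recent task moves up one level.
    print(next_difficulty(3, [True, True, True, True, True]))
```

A rule of this kind adjusts the level per child rather than per age-group, which matches the observation that older children found the game too easy while younger children struggled with some interactions.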

5.1.3. Learning modality preferences and multimodal learning approach

We found no significant correlation between children’s learning modality preferences and their learning gain. This is consistent with previous research in this area, which debunked the theory that presenting material in a preferred modality can improve learning (Lodge, Hansen, and Cottrell Citation2016). Although many research studies have shown that modality does not affect learning, some researchers argue that different media may afford different instructional methods and be effective for the learning process (Moreno Citation2006).

We found positive and significant correlations between the children’s preference for the read/write learning mode and their usability score: children who had a higher preference for the read/write learning modality had a higher usability score. According to the results, the older children (0.63) had a higher mean score for the read/write modality than the younger children (0.33). Therefore, we conducted a Mann–Whitney test and found that read/write preference in the older group was statistically significantly higher than in the younger group (U = 40.0, Z = −3.0139, p = 0.00257). The qualitative data from observation showed that most children (especially younger ones) skipped or tried to skip the textual instructions provided in the game, either because they were uninterested or because they did not know how to read the instructions. On the other hand, some children also had difficulty understanding the audio instructions provided in the game, mainly because of the spoken dialect. However, the majority understood and followed the visual instructions. According to Harskamp, Mayer, and Suhre (Citation2007), the principle of modality is more likely to occur when instructions aim to promote learner understanding. Therefore, the game instructions must be delivered through different media (Moreno Citation2006). The research by Harskamp, Mayer, and Suhre (Citation2007) recommended accompanying graphics with concurrent narration instead of on-screen text. When auditory media are used to process the words, children are not forced to divide their limited visual working memory between pictorial information and on-screen text, thus expanding the capacity of effective working memory (Moreno, Low, and Sweller Citation1995).
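As a rough consistency check on the statistics reported in this subsection, the two-sided p-value can be recovered from the Z value under the usual normal approximation. The Python sketch below uses only the standard library; the function names are hypothetical, the small data set in the usage example is invented for illustration, and the z formula omits tie and continuity corrections, so it need not reproduce the reported Z from the reported U.

```python
from math import erfc, sqrt


def mann_whitney_u(x, y):
    """U statistic for sample x: number of (x, y) pairs with x > y, ties count 0.5."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


def z_from_u(u, n1, n2):
    """Normal approximation to U (no tie or continuity correction)."""
    mean_u = n1 * n2 / 2
    sd_u = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    return (u - mean_u) / sd_u


def two_sided_p(z):
    """Two-sided p-value for a standard normal z: 2 * (1 - Phi(|z|))."""
    return erfc(abs(z) / sqrt(2))


if __name__ == "__main__":
    # Z as reported in the text; the result is close to the reported p = 0.00257.
    print(two_sided_p(-3.0139))

    # Hypothetical read/write preference scores, not the study's data.
    younger = [0.2, 0.3, 0.3, 0.4]
    older = [0.5, 0.6, 0.6, 0.8]
    u = mann_whitney_u(younger, older)
    print(u, z_from_u(u, len(younger), len(older)))
```

The test compares ranks rather than means, which is why it is suited to small samples and to preference scores that are not normally distributed.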

Finally, we also found that total time played was positively related to preference for the visual, read/write, and aural learning modalities. Similarly, total levels completed was positively related to preference for the read/write and aural modalities. These results were predictable, since the game (FTM) targets Arabic reading skills and hence contains more audio and text focusing on the sound and written form of letters and words. Therefore, children with a higher preference for the read/write, visual, and aural modalities played the game more than those with a higher preference for the kinesthetic modality. This was also evident from observation of the playtest sessions, where some children were bored after 15 min and instead wanted to play other, more physical games. Therefore, it is recommended to provide different learning activities incorporating multiple modalities for the game to be effective for most children (Alkhasawneh et al. Citation2008; Ward et al. Citation2017). Overall, multimodal learning is also supported by theoretical development, as opposed to the modality-specific learning style theory (Aslaksen et al. Citation2020). Although learners might prefer a particular modality in certain situations, a multimodal approach is more plausible overall. According to the results of this study, many children exhibited a multimodal learning preference, which is similar to the results of Alkhasawneh et al. (Citation2008).

To conclude, children’s learning modality preferences are not associated with their learning gain, but they do affect their usability score and gameplay performance parameters (RQ3). This means that children’s learning with educational games is not affected by their preferred modality of learning; however, the functional capabilities of different media can be used to enable effective learning methods based on learning theories and research (Moreno Citation2006). The use of a multimodal approach can enhance the usability and gameplay experience for most users (Aslaksen et al. Citation2020).

5.1.4. Mobile usage experience and need for customisability

Similar to learning modality preferences, we found no significant relationship between children’s mobile usage experience and their learning gain using FTM, which is in line with previous research by Kim and Chang (Citation2010). However, these non-significant findings contrast with Sylvén and Sundqvist (Citation2012), who reported a positive relationship between the time spent playing games and language proficiency. In another study, by Chen and Huang (Citation2013), ANOVA results indicated significant differences in the learning performance of groups with different prior knowledge (frequency of playing games and experience of playing games). However, the effect of prior knowledge (positive or negative) depended on the nature of the knowledge (i.e. declarative or procedural) delivered by the game, and significant differences were found in only a few groups. Thus, one explanation could be that prior experience affects the learning outcome only when that experience or knowledge is used in the game to solve tasks that induce learning.

Consistent with prior research (Orvis, Horn, and Belanich Citation2006), this study found that mobile usage experience parameters (usage expertise and years of use) are positively related to the usability score when playing the learning game for the first time. Children who have more years of mobile use and who require less help to use a mobile are already familiar, from prior experience, with many of the interface characteristics shared by the current game (Salanova et al. Citation2000). Therefore, researchers argue that experience parameters (such as technology exposure, computing literacy, and level of assistance required) must be considered when designing the user interface (Belay, McCrickard, and Besufekad Citation2016). According to research investigating expertise in various domains (Ericsson Citation2002), the performance difference between experts and novices results from deliberate practice accumulated over a period of time. MacDorman et al. (Citation2011) note that an effective interface is not necessarily the one that is easiest for novices to use, since an interface with a steep learning curve can be much more efficient for performing tasks quickly once the user has sufficient experience. Bunt, Conati, and McGrenere (Citation2007) recommended providing customisation suggestions tailored to user characteristics, expertise, use patterns, and features of the interface to maximise user performance. Many researchers have supported user interface customisation: Burkolter et al. (Citation2014) showed that it enhances user acceptance and reduces errors, whereas Jorritsma, Cnossen, and van Ooijen (Citation2015) pointed out that users work more efficiently when provided with customisation support.