Abstract

Simulation models, in particular System Dynamics (SD) models, can be used in a group modelling setting to communicate, integrate, learn, collaborate, organize knowledge and derive new insights. Such models can act as conceptual integrators, representations, learning tools or predictive tools. In this ethnographic study of two in-depth SD group modelling projects, we found that SD models can be active agents in the group model-building process by initiating a cognitive transition in participants’ (model- and case-based) modes of reasoning. This transition was achieved through a series of surprises or shocks that refuted participants’ prior conceptions and forced them to switch between case-based and model-based reasoning during the model-building process. Based on these insights, we present a framework that describes how simulation models change the mode of reasoning in group modelling projects and explains the model’s agency role. The study responds to calls in earlier OR articles for more ethnographic case studies that examine simulation artefact agency.

Introduction

Information (or software) technologies undertake significant cognitive processing on behalf of the user and are thus partners in what Pea (1993) calls "distributed intelligence." Such intelligent technologies have a significant effect on human cognition and learning (Salomon et al., 1991) and can be seen as exerting agency (Ueno et al., 2017). Simulation models in general, and System Dynamics (SD) models in particular, are intelligent technologies that are effective in solving complex, ill-structured, fuzzy problems through systemic functions such as exploring scenarios, testing hypotheses or predicting behaviour (Tako, 2008; Tako & Robinson, 2010). Simulation modelling often involves diverse groups of professionals in creating a shared understanding, encapsulated in an explanatory story (or storyline), and in testing scenarios.

Over the last decade, operational researchers have paid increasing attention to the process of model building (Tako & Robinson, 2010), in particular to how groups build simulation models collaboratively and how modellers facilitate that process (Franco & Montibeller, 2010; Hovmand, 2014; Tako & Kotiadis, 2015; Vennix, 1996). These studies examined how to design a facilitated group-modelling process that makes explicit the implicit mental models of clients (Andersen et al., 1997; Franco et al., 2016), the roles of participants, their interactions and engagement (de Gooyert et al., 2017), and how mental models are shaped and influenced by participation in the modelling process (Rouwette et al., 2002; Scott et al., 2013). A further line of operational research (OR) studies has extended this work to highlight the interactions between simulation artefacts and human participants in order to explain the OR process (Burger et al., 2019; Ormerod, 2010, 2014, 2017, 2020; Tavella & Lami, 2019).

The success or smooth progress of group model-building projects is not a given, and there is therefore a need to reduce risks during the simulation-building process (Andersen et al., 2007). The interaction between group model-building participants and the model is important, particularly the way participants’ reasoning changes during the modelling process. Our paper investigates the effect of the artefact on changing participants’ thinking (cognitive transition). While there is significant research on technology artefacts from perspectives such as Actor-Network Theory, materiality and socio-materiality, and boundary objects, as well as several studies of simulation group-modelling processes, the link between simulation agency and cognitive transition has not been widely explored. Our study is based on the principles of the socio-technical perspective (Burger et al., 2019; Ueno et al., 2017) and focuses on the cognitive agency of the artefact (Laasch et al., 2020) in order to contribute to the OR studies that initiated work on artefact agency.

We examine the effects of simulation artefacts on user reasoning in OR studies. Our premise is that the cognitive integration achieved through interaction between human and artefact defines the agency of both. We argue that cognitive transition takes place as a result of this integration and that it can be documented through an ethnographic method (Mingers, 2007). Doing so makes it possible to uncover the influence and agency of simulation artefacts while model-building groups conceptualise, build and experiment with the model. Using the lens of artefact agency, we ask: How do simulation models change the mode of reasoning in group modelling projects?

In the remainder of the article, we first review the literature on simulation group modelling, Actor-Network Theory, materiality, socio-materiality and boundary objects to discuss artefact agency, and then discuss project phases and cognitive processes. We then present our ethnographic method and case studies. In the discussion section, we provide two analytic tables comparing the case studies. We conclude by offering a framework that describes the process linking the simulation artefact and cognitive reasoning.

Literature review: Artefact agency and cognitive transitions in group model-building projects

Human participants can treat an artefact as a communication partner, as an active part (or actant) of an activity, or as a component of activities. A central question in philosophical and sociological accounts of technology artefacts is how artefact agency should be conceived, that is, how we understand the constitutive roles of artefacts in the actions performed by assemblages of humans and artefacts (Rosenberger, 2014). Three perspectives discuss artefact agency through different lenses: the actor-network, the socio-technical and the socio-material.

To study the agency of simulation artefacts and the cognitive transition of humans in group model-building projects, we adopt the socio-technical perspective’s definition of artefact agency (Burger et al., 2019): it is the interaction between human and artefact that defines their agency. Accordingly, the study focuses on the cognitive integration that results from this interaction, which can be documented through an ethnographic method. From this perspective, the simulation artefact has the ability to challenge and change individuals’ modes of reasoning and, eventually, to lead to the integration of model- and case-based modes of reasoning through the structure of the artefact.

Our study takes a socio-technical perspective (Burger et al., 2019; Ueno et al., 2017) and, by focusing on the cognitive agency of the artefact (Laasch et al., 2020), contributes to the OR literature on artefact agency (Burger et al., 2019; Ormerod, 2010, 2014, 2017, 2020; Tavella & Lami, 2019). Our premise is that the cognitive integration achieved through interaction between human and artefact defines the agency of both; the study focuses on the cognitive transition that occurs as a result of this integration, documented through an ethnographic method (Mingers, 2007).

To examine cognitive transitions in group model building using the concept of artefact agency, we draw on diverse streams of literature, structured as follows. We begin by examining the discourse on cognitive reasoning from a story-making perspective to introduce our theoretical basis of case- and model-based reasoning. We then provide two review sections focused on the concepts we draw on. First, we review the agency of models from an Actor-Network perspective. Second, we look into the group model-building literature, including a brief review of facilitation and mental models, an examination of the concept of simulation as an artefact, and a discussion of project phases in simulation projects.

Cognitive-reasoning functions through the lens of story-making

The modes of thinking in empirical sciences include inductive and deductive thinking. According to Develaki (2017a, 2017b), induction is the inference of general conclusions about a phenomenon from observations and experiments within a limited number of systems. Deduction derives specific proposals or predictions about a phenomenon from a general hypothesis or model; these are then compared with empirical data to check the validity of the initial hypothesis or model (Popper, 1959). Inductive and deductive modes of thinking are fundamental to simulation modelling (Develaki, 2017b) and to the interplay of case- and model-based reasoning. These two related modes of reasoning have been observed in experiments in which participants interact with intelligent technologies and simulation (Parnafes & Disessa, 2004; Zhang & Alem, 1996).

Case-based reasoning happens when experiences of prior episodes are recalled and adapted to interpret a similar situation. It is the process of learning and abstracting from storied cases and adapting them to interpret and structure similar problems (Graham et al., 2004), and it emphasises the role of prior experience: new problems are solved by reusing and, if necessary, adapting the solutions to similar problems that were solved in the past (González et al., 2013).

Model-based reasoning happens when people correlate data using a mental model that can then be used to make sense of the “real world” (Develaki, 2017a, 2017b). Model-based reasoning is a creative process that, as part of deductive reasoning, includes a series of practices such as simplifying, justifying, modifying and adapting models to empirical and theoretical constraints (Gilbert & Justi, 2016). Parnafes and Disessa (2004) posited that model-based reasoning includes imagery as well as inference. In this sense, model-based reasoning adopts elements of the process of “envisioning” in the context of qualitative simulation: one creates a mental model of a scenario and “inspects” this mental imagery (Schwartz & Black, 1996a, 1996b) through testing.

Understanding the cognitive transition between case- and model-based reasoning is important for understanding the active ways in which simulation models influence how people make sense of complex problems and systems. Cognitive transition occurs in both inductive (case-based) and deductive (model-based) reasoning, in the formation of hypotheses, experimental tests and analogues (Meadows & Robinson, 1985, p. 419). When human cognition and artefacts interact (Hernes & Maitlis, 2010, p. 31), inductive (case-based) (Graham et al., 2004) and deductive (model-based) reasoning (Develaki, 2017b, p. 1003) occur simultaneously or iteratively. In the process of story-making, cognitive transition occurs when people switch between these two types of reasoning.

Story-making is the process of turning facts or information into meaningful stories (Gabriel, 1991). It is more than describing facts or events: it is a process of symbolic reconstruction (Gabriel, 1991) through which we assimilate bits of knowledge, technical language, anecdotes and story fragments (terse stories or ante-narratives; Boje, 1991) from the retrospective interpretation of events (Weick, 2012), and abstract meaningful causal links to create a story. Story-making is therefore predominantly a discursive process of “emplotment” (Ricoeur, 1984) in which strings of knowledge are constantly compared, contrasted, combined, corroborated or refuted to construct a bigger story. Because story-making is an intermediary between human sense-making and discursive artefacts (Hernes & Maitlis, 2010, p. 31), the concept can be applied to case studies in which inductive (case-based) and deductive (model-based) reasoning occur.

Story-making is also a polyphonic, incomplete process, unfolding during the interplay between conceptual and illustrative empirical material (Cunliffe & Coupland, 2012, p. 65), that involves contestation and overlap between sense-making, sense-giving and the emotions that arise from responding to and taking into account the voices of others (Weick, 2012, p. 80). The process is prone to “counterfeit coherence,” in which order is imposed inappropriately on (fragments of) stories and explanations are shaped by multi-layered experiences and desires (Boje, 1991). Humle and Pedersen (2015) proposed an antenarrative approach to making sense of fragmented stories using discontinuity, tensions and editing.

Group model building can be seen as a process of story-making, because it abstracts individual stories into a shared cognitive model that looks precise and legitimate (Abolafia, 2010). Johansson (2004a) posited that a consultancy process is a co-creative and reflective act between the parties, in which plot lines and characters are constructed and negotiated. Johansson (2004b) emphasised the difference between the storytelling and story-making approaches; consultancy processes exemplify the latter. Storytelling presumes a story that fits an already existing plot. Story-making presumes a story that needs to be devised, and a plot (or storyline) that must be invented. While storytelling can be associated with communication and persuasion, story-making emphasises reflection and action.

Section one: Operations research and artefact agency—perspectives from actor network theory

A number of papers published in JORS have highlighted artefact agency as an area for future OR research. Zhu (2011) used socio-technical analysis to examine subject-object agency as relational and distributed. Richard Ormerod wrote a series of articles raising the issue of agency in OR. Drawing on Pickering (1995), Ormerod (2014, 2017) suggested that OR case studies would be enhanced by applying concepts emerging from the sociology of scientific knowledge (SSK) and Actor-Network Theory (ANT), in particular the concept of the material agency of objects. Ormerod (2014) noted that ANT treats human and material (non-human) agency symmetrically. Mingers (2007) pointed out that applying sociological perspectives such as ANT, discourse analysis or ethnography in OR promises interesting insights, but that these perspectives first have to be applied to practical cases. Ormerod (2017) identified a need for OR case studies to help behavioural OR researchers ground their theories, and proposed the “performative idiom” (Pickering, 1995, p. 144). This view extends the human-centred concept of agency in OR by claiming that material technology (such as computer software) can also possess agency. Ormerod (2017) encouraged the use of perspectives such as ANT and material agency in OR, in both technical and non-technical cases, although he commented in a later paper (Ormerod, 2020) that the idea of material agency seems far from obvious: how could an inanimate object be considered an “actor” on an equal footing with human actors, and not simply an add-on to a model of human behaviour? Tully et al. (2019) used ANT to support empirical analysis of the OR consulting sales process. Tavella and Lami (2019) found that models exert agency because they trigger particular group behaviours and determine the performativity of Problem Structuring Modelling processes. Although these studies have advanced the process perspective and incorporated both human and artefact agency, they did not investigate the connection with human reasoning.

This study contributes to this debate by further exploring the impact of artefact agency. From an ANT perspective, artefacts are placed on the same ontological level as human agents, forming a person-artefact dyad; analysis therefore focuses on their interactions (Knouf, 2007). Callon (2006) argued that agency is a hybrid of humans and artefacts, which should not be considered separate entities in this interaction. Recent research has identified two important shifts in human-computer interaction (Wiberg et al., 2013): (1) a move away from treating human and computer as two separate entities toward treating them as one whole, and (2) a move away from how computing is used and interpreted toward a focus on the agency of technology. Laasch et al. (2020) identified five types of artefact agency in these interactions: cognitive, action, interpersonal, development and material.

From an ANT approach, then, artefact agency is constructed within an ongoing interaction between human and artefact agents. Researchers have taken different approaches to analysing these interactions. For example, Krummheuer (2015) used a conversation-analytical perspective on situated practices to analyse the interaction between human and artefact agents. Ormerod (2010) provided a case example of how an IBM/Compower system exerted agency when interacting with operatives, analysing the relational activity formed in that interaction with a focus on its processual aspects.

Ueno et al. (2017) expanded on the ANT perspective of agency. They argued that integrated human-artefact arrangements are socio-technical systems, and that both human and artefact agencies depend on the level of cognitive integration between the two. Human agents and artefacts form integrated systems that perform information-processing tasks together. In such systems, intelligent technologies undertake some of the cognitive processing, thereby sharing and affecting the cognitive processing undertaken by humans. Human cognitive ability therefore emerges from this integrated arrangement (Ueno et al., 2017, p. 95). Wachowski’s (2018) studies show how human-artefact interaction stimulates cognitive action, for example reflection, and even morality. Wachowski argued that shared agency relies on the degree to which humans and artefacts are cognitively integrated. Cognitive integration depends on the nature and intensity of information flow, and on the accessibility, durability, user trust, degree of transparency and ease of interpretation of the information.

Section two: Group model building

Facilitation and mental models

Within operational research, increasing attention has been paid in the past decade to the process of simulation model building (Tako & Robinson, 2010), in particular to collaborative model building with stakeholder groups in which the modellers act as facilitators (Franco & Montibeller, 2010; Franco, 2013). Researchers have discussed processes for participative model building not only in regard to System Dynamics (SD), where group model building is a well-established and highly valued practice (Hovmand, 2014; Vennix, 1996), but also for Discrete-Event Simulation (Tako & Kotiadis, 2015), where participative modelling is less common. Studies in SD focus more on the modelling process, examine the role of stakeholders (de Gooyert et al., 2017) and emphasise facilitation.

Andersen et al. (1997) designed an improved facilitation process for group modelling in order to make explicit the implicit mental models of decision-makers. Their starting assumption was that the causal structures of simulation models could improve these implicit mental models and make them more precise. However, the same authors concluded that “this is not the case…” (Andersen et al., 1997, p. 188), as other authors (e.g., Vennix, 1990) have also argued. Further, Verburgh (1994) argued that, even after extensive training in modelling, no real improvement in participants’ mental models was observed. The link between a group modelling process and an improvement in group mental models is therefore not automatic. To establish a process that refines mental models through group model building, Andersen et al. (1997) focused on improving the facilitation process using specific activity scripts. Studies of the facilitation process also look at how the visual representations of simulations facilitate shared understanding and socially constructed shared meaning (Black, 2013). Andersen et al. (2007) claimed that SD models impose a considerable level of formalism and analytic burden on participants’ interactions in group model building, stifling and blocking emergent conversation patterns in the construction activities, while the reified (concrete) version, i.e., the model itself when coded in SD software, imposes an empirical constraint on their conversations. This view assumes that participants cannot integrate their reasoning with the artefact in a flexible way, since it focuses on the rigidity of the model’s technical structure. In response, scholars have suggested using more conceptual artefacts, such as maps, in the modelling process (Howick et al., 2006; Richardson, 2006).

Scott et al. (2013) explored how individual mental models are shaped and influenced by participation in the modelling process. They observed that group interaction changed participants’ views so that they became more alike. Similarly, Rouwette et al. (2002) observed that the modelling process results in the refinement and alignment of group mental models, consensus, and commitment to a decision.

This body of research considers the process of modelling and participation in relation to individual or group mental models. A mental model is “a relatively enduring and accessible, but limited, internal conceptual representation of an external system” (Scott et al., 2013, p. 216). According to this definition, a mental model resembles a stereotype or an internal representation, which is quite different from our focus on types of reasoning, which are essentially cognitive functions.

Simulation as an artefact

Previous studies from a socio-material perspective, together with studies of boundary objects and of simulation modelling processes, have provided important insights into artefacts such as simulation models. These studies identify the effects of human action on, and interpretation of, the artefact, the boundary roles the artefact can play, and the effects of the artefact on human and organisational action. However, they do not investigate the cognitive transition that happens within the interaction between humans and artefacts.

Materiality and socio-materiality perspectives assign agency to both humans and technologies through a relational lens (Leonardi & Barley, 2008). Barad (2003, p. 801) dispensed with the distinction between subject and object and called for an “agential realism” that emphasises “the ontological inseparability of intra-acting agencies” (Barad, 2007, p. 206). In this perspective, artefact agency emerges through intra-actions and is grounded in relationships. Despite methodological differences, the various formulations of materiality and socio-materiality share two premises.

First, materiality and socio-materiality perspectives focus on the artefacts’ transformative capacity on human action, without an analytical interest in ascertaining causal relationships. Second, they build on a relational ontology, meaning that “people and things only exist in relation to each other” and therefore “entities have no inherent properties, but acquire form, attributes, and capabilities through their interpenetration” (Slife, 2004, p. 455). Socio-material research questions any separation between artefacts and people and instead focuses on their mutual constitution. Artefacts facilitate new practices by bounding the performance of work practices through their material properties (Orlikowski & Scott, 2008), so their roles in work practices are not given a priori but emerge both temporally and situationally. Taking these material properties into account, social actors enact technology-in-practice as a set of rules and resources. Artefacts “enframe predictable and coordinated action” (Kallinikos, 2005, p. 199) or materialise into a tool for the temporal structuring of tasks and routines (Orlikowski, 2007) because they are deployed as platforms supporting predictable interactions. This practice is influenced by technological hindrance or affordance. The concept of affordance (Gibson, 1977) was initially tied to the material properties of an artefact, but affordance also stems from the particular ways users make sense of the artefact, because each individual may consider it useful for a different set of activities (Jarrahi & Nelson, 2018). From a socio-material perspective, the materiality of the artefact affords or constrains human agency: “the materiality of an object favors, shapes or invites, and at the same time constrains, a set of specific uses” (Jarzabkowski & Kaplan, 2015; Zammuto et al., 2007, p. 752). This approach does not, however, examine whether (and if so, how) intelligent artefacts can affect the cognitive transition that results from the combination of artefact and human agency.

The branch of research that investigates the roles of technology artefacts as boundary objects perceives them as complementary organising tools that may enhance or hinder collaboration depending on the complexity of interfaces, the knowledge creation and codification involved (Bresnen et al., 2003; Hoegl et al., 2004), or the strength of interdependency among group members. Artefacts are attached to the broader framework of managerial practices and partially reflect and embody hierarchy (Eriksson-Zetterquist et al., 2009). Other studies suggest that artefacts are representations of the interactions between agents in different parts of routines (D’Adderio, 2008). Artefacts as boundary objects, for example, mediate the relationships between the group and the organisation (Garrety et al., 2004). As such, they are used in aligning objectives and handling conflict, negotiations, creativity and contracts (Alderman et al., 2005; Jensen et al., 2006; Koskinen & Makinen, 2009). A second approach suggests that boundary objects are used to create, integrate, codify and sustain knowledge and symbolic value (Swan et al., 2007), which supports learning (Scarbrough et al., 2004; Yakura, 2002) and sense-making (Papadimitriou & Pellegrin, 2007) among stakeholders. However, artefacts can also serve to intensify tensions, divisions and information asymmetry and to reinforce roles, norms, identity, power or status within group relations (Carlile, 2002; Dodgson et al., 2007), thereby reifying cultural boundaries (Barrett & Oborn, 2010). This line of research does not consider artefacts’ agency (Chongthammakun & Jackson, 2012) and sees them merely as carriers or representations of knowledge, meaning, learning and organising structure (Ewenstein & Whyte, 2009), not as agents that integrate with the human agent.

Process and phases

Tako (2008) and Tako and Robinson (2010) found that, because of the systemic model structure of SD (i.e., built on relationships and interactions within a whole system), SD modellers are compelled to consider the broader aspects of a problem in a more holistic manner. SD does not always involve the development of a quantitative model, but if one is developed, the model-building process consists of two distinct phases, which are typically iterated several times. The first is the construction of a shared conceptual frame: the model structure. Here, the whole group identifies model elements and links between them from diverse stories, and these links are used to develop the model structure and define the data that need to be collected. The construction phase includes activities in problem definition and in building, testing and formalising the model structure. In the second phase, reification, the modellers determine the equations describing the mathematical relationships, codify and complete the model in a simulation package or programming language, and parameterise the model with the data. The results from the computer model are then discussed with the whole group and validated against real data. Typically, this leads to another iteration of the construction phase, followed by reification. The process repeats until the group reaches agreement, after which the model can be used to explore different experimental scenarios.
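To make the reification phase more concrete, the Python sketch below shows what a codified and parameterised SD model can look like in its simplest form. It is illustrative only: the single-stock structure, the names `StockFlowModel`, `simulate` and `rmse`, and all parameter values are hypothetical assumptions, not drawn from either case study. The stock changes at the rate of inflow minus outflow, with the outflow assumed to be the stock divided by an average residence time; the equation is integrated step by step with Euler's method, and `rmse` stands in for the comparison of model output with real data.

```python
import math
from dataclasses import dataclass


@dataclass
class StockFlowModel:
    """Hypothetical one-stock SD model (illustrative, not from the case studies).

    d(stock)/dt = inflow_rate - stock / residence_time
    """
    initial_stock: float   # starting level of the stock (assumed value)
    inflow_rate: float     # units entering the stock per time unit (assumed constant)
    residence_time: float  # average time a unit stays in the stock (assumed)

    def simulate(self, horizon: float, dt: float = 0.25) -> list[float]:
        """Integrate the stock with Euler's method; return the stock at every step."""
        if dt <= 0 or self.residence_time <= 0:
            raise ValueError("dt and residence_time must be positive")
        steps = int(round(horizon / dt))
        stock = self.initial_stock
        trajectory = [stock]
        for _ in range(steps):
            outflow = stock / self.residence_time       # first-order drain
            stock += dt * (self.inflow_rate - outflow)  # net flow over one step
            trajectory.append(stock)
        return trajectory


def rmse(simulated: list[float], observed: list[float]) -> float:
    """Root-mean-square error between model output and observed data of equal length."""
    if not observed or len(simulated) != len(observed):
        raise ValueError("series must be non-empty and of equal length")
    return math.sqrt(sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(observed))


if __name__ == "__main__":
    model = StockFlowModel(initial_stock=0.0, inflow_rate=10.0, residence_time=4.0)
    trajectory = model.simulate(horizon=40.0)
    # The stock approaches its equilibrium, inflow_rate * residence_time = 40.
    print(round(trajectory[-1], 2))  # prints 40.0
```

In an actual project, the equations, parameter values and comparison data would come out of the group's construction-phase discussions rather than being fixed in advance; a poor fit against observed data would typically send the group back into another construction iteration.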

Problem definition is the most important, intense, time-consuming and risky activity in the construction phase (Cochran et al., 1995). The systems being modelled are usually complex, and the problems are “fuzzy” or ill-structured and often not well defined (Simon, 1978). In the reification phase, this can be particularly challenging when participants react against models they disagree with.

When scenarios are tested, models constrain the ways in which output can be interpreted (Knuuttila & Voutilainen, 2003) and can produce results that collide with priors, i.e., conceptual preconceptions, assumptions or expectations. The existence of priors is a significant risk to achieving group consensus in problem definition activities (Urban, 1974). A complex model will often challenge people’s understanding. Major hindrances include a lack of familiarity with modelling and an inability to think in abstract terms or to prioritise information from multiple sources (Cochran et al., 1995).

The success or smooth progress of the simulation-building process is not guaranteed, and there is therefore a need to identify and reduce risks (Andersen et al., 2007). In addition to technical challenges, a group model-building project might face risks arising from the diversity of participants’ knowledge representations and organisational domains, and from their ability, as a group, to develop a shared and collectively owned understanding of the relevant features of the system. Group model-building projects fail when stakeholders develop separate representations rather than a shared insight (Black, 2013).

A lack of access to data or expertise can make it impossible for participants to develop an adequate model and insight, either collectively or individually (Rouwette et al., 2002).

A further risk stems from the need to decide how detailed or abstract the model structure should be (Urban, 1974). Unsuitable levels of abstraction or disaggregation can impede the development of a model. A mismatch between the levels of abstraction that participants use in their reasoning can lead to disagreement and misunderstanding within the group. Trying to bridge these gaps brings its own challenges: for instance, inappropriate disaggregation driven by the desire to add excessive detail can significantly prolong the required effort (Eskinasi & Fokkema, 2006).

Power relationships within the group may hinder the sharing of expertise and joint decision-making. Participants might not feel ownership of the model either because the model is seen as being the facilitator’s model, or because they feel dominated by other stakeholders and consequently disengage from the process or reject the project outcomes.

Research design

The method of developing theory in this study, referred to as theory elaboration, is based on the works of Blumer (Citation1969) and Glaser and Strauss (Citation1967). Theory elaboration applies concepts borrowed from an existing theoretical perspective to explain a new phenomenon (Maitlis, Citation2005). It therefore suits this study, whose focus is to apply prior theoretical concepts of technology and simulation artefact agency and develop them to explain SD modelling processes. We examined the agency of SD models as artefacts in two different modelling projects, using an ethnographic approach that involved direct observation, examination of secondary data (project documentation) and in-depth interviews. Our aim was to examine, in each case, whether (and if so, how) the SD model caused changes in the type of reasoning (case-based vs. model-based) during different phases of the group model-building project, and then to compare the results. The research design, the selection of case studies and the data analysis all follow this theory-elaboration approach.

Data collection

We collected evidence from two group-modelling projects undertaken by the same SD consultancy. Both projects ran over the course of one year (Bolt et al., Citation2020). We participated in a series of modelling workshops (11 sessions in total), in which we observed how the groups worked. In the following year, we conducted interviews with the participants in both projects, including clients, consultants and expert advisory members (17 interviews in total). We also collected project documentation, including minutes of their meetings and model versions shared.

Observations: The researchers produced observation notes at all group meetings. These notes included direct quotations from the discussions the researchers heard, together with any relevant comments and reactions. In particular, the notes summarised conversations about what should be included in the model (the scope of the model), how it was used, the types and sources of data, and any issues raised. The notes also contained diagrams of the model while it was being constructed.

Interviews: A semi-structured interview protocol was agreed. The aim was to discover any changes in participants’ experiences of developing the model, their understanding of its functionality, the way they worked because of the model, and the ways they thought about the problem before, during and after the process. The interviews were also intended to corroborate our notes and interpretations of what had actually happened in the group meetings. The interview protocol covered five topics:

Participant’s background and role in the modelling process;

How the project activities were organised;

Participant’s experience of interactions in group meetings;

A detailed account of the participant’s experiences developing and then testing/using the model (structure, effect, benefits or issues); and

Comparison with similar prior projects they had been involved in.

Context and case studies

According to the method of theory elaboration, verification and discovery become possible when cases are chosen that refer to similar phenomena but also include well-defined differences (Glaser & Strauss, Citation1967). Following the guidance of Bluhm et al. (Citation2011), we created an in-depth case design that provides a strong foundation for elaborating theory: the similarities between the cases allow meaningful comparisons, and their differences provide a basis for discovering new themes. The groups we selected had two important similarities and two important differences.

First, both groups were heterogeneous, in contrast with the majority of story-making studies, which to date have been conducted only in tightly coupled groups. Both groups were managed by experts from the same consultancy who were experienced in simulating the same health-care processes, and both groups were organised in a similar way. Group members had loose ties because they did not belong to the same organisation and none were contractually obliged to participate in the project. Rather, they responded to the invitation of the project owners, mostly out of professional interest. Participation was inconsistent, low-risk and minimally coupled. The groups had a very simple, flat structure and clear roles; hierarchical norms or power structures were lacking because there was not enough time for a hierarchy to emerge.

The second similarity was that both groups focused on modelling public health and healthcare interventions for the same clinical problem. The overall aim of both projects was to reduce utilisation of NHS services and improve patient outcomes. The results of the simulation models would then be used for commissioning (procurement) decisions for related services.

The first difference was geographical scope. In group A, the client was a local health commissioning body and the task was to model the local services in a specific area in England. Group B, in contrast, had to develop a generic model that could be used by local commissioning bodies across the country. The owner was a Government Department, and the aim was to make the model available across the UK after completion in order to inform local commissioning.

The second important difference between the groups was their composition. In the local group (A), the members worked in different parts of the local healthcare system, mainly occupying operational or managerial positions, and therefore had a “grassroots” view of services and the issues. In group B, the members worked in research, in academia, policymaking and the Civil Service; therefore they all had a “helicopter” view of the problem and relevant services. Interestingly, the members of group B had a better knowledge of each other’s viewpoints through prior engagement in discussions, while the members of group A had less prior knowledge of each other’s stance even though they worked in the same local health economy.

Data analysis

As mentioned above, the method of developing theory used in this study, theory elaboration, is based on the work of Blumer (Citation1969) and Glaser and Strauss (Citation1967). The pre-existing concepts within this method remove the need to rely purely on grounded analysis to develop theory (Bluhm et al., Citation2011). According to Blumer (Citation1969), using concepts as reference points rather than as variables allows them to be refined: the relationships among them are identified, and they can then be confirmed, refuted, or qualified by specifying the circumstances in which they may or may not be strong explanatory tools for previously unexplained phenomena. Theory elaboration is conducted through qualitative analysis and depends on comparison: data from each case are used to refine concepts, and the outcomes are then compared. We used content analysis and comparative matrix analysis (Miles & Huberman, Citation1994) to identify how participants interacted with the SD model, and then mapped what happened to capture the roles and effects of the model.

Case study findings

Group A: From case to model-based reasoning

We begin with the first case, that of the local group A, which had to produce a simulation for a specific local health commissioning body. The project group consisted of 12 people, including a project manager who organised the meetings and liaised with the client, a simulation consultant, a data manager and nine local modelling group members. These included a commissioner at the local health commissioning body and representatives of different NHS services (four members), the local council (two members) and third-sector service providers (three group members). The group met five times over a period of five months, and our team observed these meetings.

The composition of group A shaped the model-building process in three respects. First, most members were not familiar with modelling, and their views were mainly based on their operational experience. They were therefore unfamiliar with the type of abstraction required for modelling, and they came to the project without strongly defined expectations: “we were more open-minded because we didn’t know what to expect” (commissioner). Second, because members worked in different roles in different parts of the system, they had diverse perceptions of the problem. Some participants focused on public health measures and prevention, while others cared mostly about service provision and treatment.

The client’s primary objective was a reduction in hospital admissions. This objective produced the first in a series of conflicts with members’ conceptual frames and identities; people had to consider: where am I in this story, and what is my significance?

PHASE 1: Construction

During the construction process, the group members also disagreed about the definition of the concepts and categories used: “… we did spend a lot of time talking about definitions of the stocks, key stocks in the model, and that was important because everybody had come in with different definitions about how best to define the groups of patients; there was a national definition which differed from the local definition and that was part of the debate … there were two meetings, at least, before we determined what the stocks finally were…” (consultant); “… the group had a different definition for every word that you could possibly come up with” (data manager). Because their backgrounds varied significantly, the members did not share common interpretations of the same issues. In addition, the discussion took place mainly among NHS participants, while the third-sector service providers felt left out: “… A lot of it went straight over my head, I’ll have to admit because the things they talked about… I understood the principles of what they were saying but a lot of it … that the NHS are talking to each other, so they understand what they’re talking about … NHS talk” (manager 2). The arguments in the discussion arose largely from differences in group members’ positions and experiences. The resulting partial views, explanations and individual interpretations were based on these positions and personal experiences, rather than on systematically collected and robust evidence. It was a challenge for the group to unite these fragmentary snippets and abstract the “bigger picture”: “…, they were little in the big picture. They had their views and were based on personal experience rather than on proven science” (commissioner). Therefore, the consultant decided to adopt an inductive, case-based approach, constructing the model structure from the details of the individual stories the group members offered.

The group members reacted in different ways to this inductive approach, which moved from the specific to the general. The members mistrusted more abstract and conceptual explanations and insisted that the model structure should be made more detailed. Instinctively, they also emphasised some data and de-emphasised others to support their interpretations. In addition, the majority of members took a shorter-term perspective than the problem required: “… what we’re looking at there was a project that went well into the future” (manager 1). Reflecting on this phase, some participants pointed to the diversity in the group, which made agreement both challenging and interesting: “… I didn’t fully understand everything that was being spoken of in there. It was interesting. It was, you know… It was an eye-opener to see all these different people coming together to … that are all real, that I didn’t know existed and started there…” (manager 3).

PHASE 2: Reification

During the reification process, similar issues emerged. The members needed to find data to parameterise the model and start testing it. Suitable data sources were hard to find, and the data were incomplete, so this stage was protracted: “… it was difficult for me to sit there as a local manager trying to link in your facts and figures with our facts and figures, because I didn’t have those figures to hand” (manager 4). Some of the data were not considered robust: “That research basically sat on one of the clipboards in one hospital ten years ago…” (commissioner). There was therefore doubt about the model’s role as a representation of what really happens. This doubt made the members sceptical, reinforced their disbelief in the abstract view of modelling, and revived the conflict about definitions of the basic concepts and variables in the model. However, chasing data made them keep asking questions, and they continued in cycles, immersing themselves in a re-examination of definitions as they tried to distil a coherent story from the data: “… I think that’s why we learnt so much because we had to keep on looking for data…” (manager, local health commissioning body). By that point in the modelling process, the group started to see a shared story, even as they acknowledged that the story might not be completely accurate because of data problems. It was considered at least plausible and coherent, which let them revise their previous, more partial and anecdotal beliefs.

The group members also changed their previous beliefs partly because they encountered a series of “surprises” that challenged them. It was both a confusing and an illuminating turning point for the members when simulation experiments with the model generated scenario outcomes that differed from their expectations. While the model was being reified, it started to have a voice; it was as if it kept telling them not only to look for different, more robust data, but also to rethink how the system works and which relationships affected different interventions. Some people started to feel that they did not really know the answers anymore: “… they thought oh, actually not, maybe our assumptions were wrong, and they later changed their approach,” (manager, local health commissioning body). They also started asking themselves why: “… there was a little bit of why, because when we could see that the effects of some of the things which we may have logically thought would have had an effect, didn’t, or because something else did at some point” (data manager). This was a recursive process: as they used more inductive case-based reasoning, they changed the model, which in turn gave them results they did not expect, triggering more analysis: “… I saw their understanding change the resource for certain things. They obviously see how it changed the model…” (manager 2). An interesting dialogue emerged in which people speculated about what the model would say in response to their explanations: in this “dialogue” the model was changed, but the model also triggered a change in the way participants offered explanations.

The first surprise was that the service, once they confronted it, was more complex than group members had originally thought. Reflecting on this learning experience, one participant considered: “Is it the fact that it highlighted that actually it’s a lot more complicated than what we think? You know, we think working in this field, we think it’s quite simple” (manager 3). Initially some had questioned the value of modelling for what they perceived to be a simple system: “… I can see them working on this sort of work with, you know, like BP and British Airways. Do you know what I mean? I can see that, but for the town, this little process of getting people into treatment services isn’t that complicated…” (manager 4). This prior expectation was challenged when members saw that patients go in and out of remission and therefore use the same services repeatedly, mixing across pathways that had initially been presumed to be linear. The consultant used the analogy of the road network to describe the phenomenon: “… It surprised me, so then it would surprise them even more, … you tend to stay within a cycle. It’s very, very difficult to get out of it completely. The only way out of that true cycle is to die…” (data manager). This insight into the complex nature of the issues generated a lot of discussion on “… where they were, what they were doing and what needed to be done” (manager 5).

The second surprise concerned whether prevention or treatment services would have the greater impact. The members still struggled with the fact that the problem was about reducing hospital admissions; eventually a more holistic point of view won them over, and they concurred with the storyline that preventive and treatment services are complementary: “… I think the link-in with the different services was helpful because it allowed them to understand where we could action things from the front” (manager 1); and “… I can see in the grand scheme of things where we do fit in” (manager 2). The third surprise generated strong arguments because the model highlighted the chronic, long-term components of the condition as the main cause of hospital admissions, whereas all the group members had previously believed the cause to be short-term, acute issues. The engagement with the model led them to change their stories; the model refuted their prior beliefs and triggered their questioning of why they had held these beliefs originally.

The final major surprise came when the model challenged the presumption that established interventions were effective. The model showed that the key intervention that could work long-term to reduce admissions of chronic patients was a public health measure, not changes to treatment services: “… when people started to see the effect when changing some of the parameters, some of the things you might have expected to make a huge difference might have made a difference in the beginning but then levelled out. I was very sceptical about the whole thing, but when the model showed what the effect was, it was quite surprising to me to see that” (data manager); “We looked at a whole range of interventions, both proactive and reactive, … and nothing really made too much difference. That was another thing that was worth learning, actually…” and “… they got out of that the single most effective thing they could do is (identified specific policy intervention). … There’s quite a bit of resistance to that theory” (consultant). Naturally, there was much resistance to this revelation: interventions that had been taken for granted as effective suddenly did not seem to be quite so effective.

The results in the reification phase were so dramatic that group members again questioned the accuracy of the model structure and the adequacy of the available data. Whenever they saw surprising results, they went back almost to the beginning to redefine parts of the model structure: “… understandings were actually growing because each time they put forward ideas … they went back to almost the beginning on a lot of occasions, you know…” (manager). In the final analysis, many of the participants understood the value of inductive abstraction, whereby the truth in the model lies in how the service is perceived, not in the results: “… It is not so much an accurate tool but a way to think about things, projecting…” (manager 1). This iterative process changed the way they made sense of the problem, turning their specific views towards a more abstract understanding: “… but the most useful part of the whole process was not what we had at the end; it was the conversations we had along the way and the extra research it made us go out to do to find out answers to questions and ask more questions along the way, helped everybody understand it a lot more. Every time you come up with an answer, you end up with another question, which is good for research” (local commission body manager).

In the final presentation of the model to senior management in the client organisation, the model again produced surprises, but this time justifying what the model was showing was difficult. The board members had not participated in the story-making process, so their reasoning had not changed as the group members’ had, and they did not share the group’s trust in what the model was saying: “… Because the slides were demonstrating there was a weakness in an area and she (senior manager) was saying, no, we’ve invested quite a lot of money in here and that those figures can’t be right. I don’t think the presentation captivated their attention enough” (manager 3). Senior management questioned the validity of the model, citing data issues; as the client observed, because they had not been part of the story-making process, they did not trust what the model was telling them.

Group B: From model to case-based reasoning

We continue with group B, charged with developing a simulation model for hospital admissions for use by local health commissioning bodies across the country. Group B consisted of 14 members, including a project manager and a simulation consultant. Of the 12 other group members, seven of the members worked at the Department of Health (Government Department) and the National Health Service (NHS) in decision-making roles (clinical, statistics and policy), two were local commissioners, three were senior academics who were nationally recognised experts in the specific health-care area, one was from the third sector, and one was from the National Audit Office. The group met six times across six months, all of which we observed.

Most of the policy, academic and NHS group members were acquainted with each other’s work and had interacted previously (e.g., through conferences). Most of the group members had a broad overview of the relevant issues and deep knowledge of the scientific evidence around different interventions. In addition, many had previous experience in modelling the health service using other types of tools. Although they were not close collaborators, many members held a common understanding of the system: “… you know, it’s something we’ve spent our lives thinking about” (clinician). This common understanding, however, was not shared by the third-sector service providers, who expected that the Government Department would use the model to direct commissioners towards their services. Nevertheless, all members had an abstract, “helicopter” view of the service in question. The modelling consultants felt that a more deductive, model-based approach was appropriate for this group because there was broad consensus about the system, and the underlying concepts and data for parameter values were available to, or even known by, the participants.

Despite their holistic view, the members nevertheless disagreed in the construction phase, but this time about the project’s objective: “… the debate was about the brief, not about the model” (consultant). The disagreement concerned whether the model should use hospital admissions as the outcome measure. It was difficult for them to set priorities because the policy agenda was ambiguous: “… the problem probably was the case that this is still an emerging piece of work; a lot of hard work has gone into developing the objective so far, but it is still developing, and will extend well beyond the end of the 2015 target” (consultant). An important factor was the complexity of the NHS and its transition towards a system with more private provision: “… So, I think there are problems because we don’t really know what sort of healthcare system we’ve got at the moment. We seem to be, you know, in this transition from the NHS as we all knew it, and a new kind of private sector, or at the moment it’s a sort of quasi private sector with the doctors’ management trying to paper over the cracks, I think” (lead clinician).

PHASE 1: Construction

The construction phase started with model-based reasoning. First, the consultants sketched an initial model structure (also assuming that data would be available to parameterise the model). Then, they narrowed down the structure to fit the service. However, “… narrowing the scope just to the NHS service did not paint the full picture of what other services in the rest of the system can contribute” (policy analyst).

Academics and clinicians objected to this process: they thought that it should be more exploratory and that the approach resulted in an explanation that was too linear and too simplistic. Others expected a fast process that would produce a forecasting tool for commissioners, and they also disagreed with the deductive process: “… start with a simpler explanation rather than the more complex to the simple” (economic advisor). Some thought “… that the group understanding of the modelling process, and the group’s input at discussion was out of sync” (clinician). Disputes over the group’s objectives prolonged the construction phase, so that it took three or four meetings to arrive at a final model structure.

The model structure produced was aggregate and highly abstract. Initial simulation experiments predicted costs which seemed excessive to the group members. For this reason, they did not trust the model and assumed it was overly simplistic. They then decided to alter the model structure and to disaggregate some of the services.

PHASE 2: Reification

During reification, several data issues emerged. The results obtained by simulating the revised model seemed to be trustworthy, even though there were issues with data availability. While the group had access to databases and information resources from the Government Department and academic research, some of the data were based on limited evidence, or not available at a local level, or inconsistent throughout the country. This lack of robust data raised many questions: “does the data exist; is the data correct; is the data suitable; is the data utilised properly” (statistician, Government Department).

Questioning the data led to much creativity during reification and, generally, this group experimented with the model very actively. They raised many questions about how to utilise the data, analysed relationships and experimented with parameter values. During experimentation, the participants saw how the model reacted and that they were not in control of the results. They also faced the fact that the model imposed a mental discipline that constrained certain ways of thinking. One participant described this as the model exerting a “gravitational force because of the focus it commands,” although “sometimes the focus on building the model overshadows the effort to learn” (academic). While not everyone liked the modelling process, most group members found experimenting with the model stimulating: “… people would physically get up off the table and sort of go and point at the screen and say you know, ‘why?’” (consultant). Testing was “… Absolutely fantastic! It was an eye opener to me anyway … useful in understanding the consequences of potential policies” (clinician); “… Yes, yes, yes, most definitely, most definitely. I think being allowed to play with ideas, to play with sort of ‘Monopoly’ money if you like, well you know… It is primarily a creative tool” (economic advisor); “… Yeah absolutely, it’s the ultimate ‘flight simulator’ for the public sector; you have a risk-free environment in which to play out policy changes and see whether the indications are going to work” (associate).

Some members arranged individual meetings with the consultants to obtain more guidance for testing the model themselves. This is the point at which people made sense of what the model was saying and started to converse with the storyline, creating new scenarios to see how they could achieve lower hospital admissions. They had to “… choose evidence, challenge it and make extrapolations from it” (academic); they had to challenge and let go of what they thought they knew. It was enjoyable for most and produced surprising new insights for the group members.

A surprising insight for them was to discover which services were actually the most expensive, contradicting what the members thought they knew about the service. This discovery led to large changes in their stories and “created a shift, and people directed the shift within the model” (commissioner). After changing the model structure, and despite the fact that the trials were based on quite limited data, participants gained confidence in the model: “… I felt reasonably comfortable, primarily because what was coming out … made intuitive sense, and so from a purely sensecheck perspective, you know, it held true…” (statistician, Government Department). It took two months to digest what the model was saying about the treatment, but during this time, the model generated much discussion and curiosity. Although it was difficult to structure initially, the model helped the group members to think in a more sophisticated way: “… it’s about helping people be more sophisticated about the treatments…” (policy analyst).

The members of this group seemed more comfortable when the model generated surprising results that contradicted their priors. They responded by adopting a more case-based approach in the reification phase. They identified one limitation of their work: the model was not an adequate representation of front-line service delivery, and they pointed out the impact this had on the level of aggregation and usability of the model: “… the model is a hybrid suitable to be used at the regional grouping of” … (local health commissioning bodies) … “since it is not specific enough or abstract” (Government Department client). They also faced new questions about how to convey these messages to local NHS organisations. However, the members were satisfied that the model provided a focus towards the right changes in commissioning and that it added to the body of knowledge instead of just implementing it. Most importantly, participants understood that problem solving is not about using a forecasting tool that provides estimates; rather, the process changed the way they perceived a complex system.

Discussion: The SD model as an agent and the reasoning of people

Returning to our research question (how do simulation models change the mode of reasoning in group modelling projects?), we observed the cognitive transition of participants’ reasoning in the following ways.

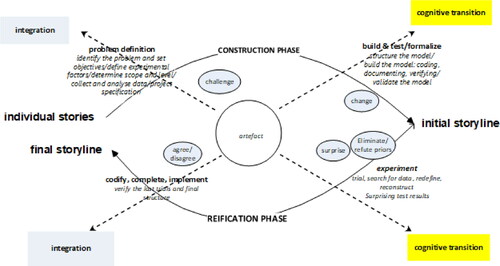

Interaction with the artefact during each phase of the modelling projects changed the mode of reasoning that prevailed in each group (from case-based to model-based reasoning, and vice versa). This happened, first, through a process of cognitive integration between the two and, second, through a series of shocks or surprises that challenged the participants’ a priori assumptions. These shocks were the medium through which the model acted, causing people to switch their mode of reasoning. The artefact calibrated their reasoning in a stepwise, recursive manner through these shocks, revealing the flaws in their individual inferences and shared storylines. Specifically, we observed the following during the project activities (Table 1):

Table 1 (a). The analysis of the artefact agency in the story-making process in Group A.

Table 1 (b). The analysis of the artefact agency in the story-making process in Group B.

Table 1 compares the results from the two groups and shows the incidents in which cognition shifted. The first observation is that the cognitive transitions ran in opposite directions. However, the process through which artefact agency developed, and the way it affected cognition, was similar in both projects. This process had two elements. First, interaction with the model started during its construction, while it was still an abstract entity, and stimulated cognitive action (Wachowski, Citation2018) as participants reflected on the artefact’s dimensions and data needs. The agency of the model in the construction phase depended on the central control of the consultant, who directed the conversations. As the artefact was systematically constructed, it gradually gained more independence. As participants became more confident interacting with it and cognitively assimilated the model’s logic, the model changed their mode of reasoning. Cognitive integration grew as the model artefact absorbed more information and became more explicit in its structure, more transparent in its function, and more familiar and easier to interpret. Over time, the reasoning process of the participants started to align with the behaviour of the simulation experiments. The interaction with the artefact eventually created a socio-technical arrangement from which a changed human cognitive mode of reasoning emerged (Ueno et al., Citation2017, p. 95). This was the gradual element of the process. The second element was a series of “shocks” or surprises: incidents that challenged a priori assumptions, previously held storylines or expectations, resulting in conflict that was resolved only when people started thinking differently and switched from case-based to model-based reasoning, or vice versa. The specific activities in which the model exerted this type of agency are depicted in Table 1.

The comparison also shows that the difference in the direction of transition between the groups relates to the type of reasoning with which the participants started, which in turn relates to the composition of each group. Group A consisted of operational-level professionals, who used case-based reasoning, whereas the strategically oriented professionals in Group B initiated the construction process using deductive reasoning. Eventually, through the process of integration and a series of shocks, Group A participants transitioned to deductive, model-based reasoning, while Group B switched to case-based reasoning. When a group followed a line of reasoning that was too inductive or too deductive, the artefact calibrated their reasoning in a stepwise, recursive manner by revealing the flaws in their inferences.

Cognitive transitions peaked during the formalisation and experimentation activities in the reification phase in both groups, when artefact agency had the most impact. We therefore observed that the model-artefact is more than a representation (or mental model) of people’s stories, as identified in other studies (Scott et al., 2013, p. 216): it also made them think differently. SD models may (or may not) create convergence of opinions and mental models (Rouwette et al., 2002), particularly when participants interact with the model long enough; but the function of the artefact clearly transcends these roles and, as both cases exhibit, it exerts agency through the integration process and the shock incidents.

Contribution to theory

As a catalyst for cognitive transition, artefact agency is a powerful construct for explaining the process of story-making, emphasising the iteration between reflection and action while the story represented by the model is devised and a plot (or storyline) is invented. Group model building can be seen as a process of story-making because it abstracts individual stakeholders’ stories into a shared cognitive model that looks precise and legitimate (Abolafia, 2010). In story-making, plot lines and characters are constructed and negotiated between humans in a co-creative and reflective way (Johansson, 2004a, 2004b). Our study exhibits how such a process unfolds when humans interact with a simulation model, revealing what can be described as a process of transformation through “shocks.” These shocks can disengage participants from the process, but they can also motivate them to learn more about the problem they are attempting to simulate.

In the earlier review section we discussed pertinent work from different fields. Table 2 summarises how we see the contribution of this study to each of these fields.

Table 2. The contribution of this study to previous literature (the authors).

Our first contribution complements the more typical focus of OR studies of group model building, i.e., facilitation and mental models. These studies focus on the modelling activities and group participation processes required to achieve consensus between individuals and to develop a mental model that is shared by the group. We shift the focus towards reasoning as an outcome of interacting with the artefact, and show how visual representations facilitate understanding rather than consensus. In doing so, we address earlier calls to demonstrate how the visual representations of simulations facilitate shared understanding and socially constructed shared meaning (Black, 2013).

We see our second contribution as bringing a theorisation of the construct of “artefact agency” into the OR literature, a contribution that several studies published in JORS have called for (e.g., Burger et al., 2019; Ormerod, 2010, 2014, 2017, 2020; Tavella & Lami, 2019). More specifically, this line of research has called for more OR case studies using an ethnographic method (Mingers, 2007), and for a new theoretical direction that focuses on human-artefact interaction and shared agency (Burger et al., 2019; Ueno et al., 2017), in particular on the cognitive agency of the artefact (Laasch et al., 2020). Our study responds to these calls by exploring how artefact agency causes human cognitive transition. We also identify the triggers, within the different phases of model development, that instigate this cognitive transition.

Third, we hope to contribute to studies of materiality and socio-materiality that assign agency to both humans and technologies through a relational lens. Our study examines how changes in the type of reasoning are encouraged or inhibited (afforded or constrained) by artefact agency. Adopting a performative and process perspective, we shift the focus from the effects of individual or organisational behaviour to the cognitive transition that precedes such behaviour.

Our fourth contribution is to the discourse on risks at different phases of the group model-building process. Previous studies have identified the need to investigate human-artefact interaction in order to understand how to overcome or mitigate such risks. Our study shows how artefact agency relates to these process risks.

The final contribution is to the field of case-based and model-based reasoning in simulation projects. Our study provides an empirical theorisation of cognitive transition as a process and describes how, as a result of artefact agency, inductive (case-based) and deductive (model-based) reasoning iterate in the formation of hypotheses, experimental tests and analogues. We explore how simulation artefacts challenge and change individuals’ modes of reasoning, eventually leading to the integration of model- and case-based modes of reasoning through the structure of the artefact.

Implications for practice

The findings have shown how simulation exerts artefact agency during the group model-building process by causing cognitive transition. Knowledge of simulation agency has the important added benefit of improving facilitators’ and modellers’ understanding of human interaction with the technological artefact, giving them more information on how to use the model structure and data to support different and diverse groups more effectively. This can help modelling practitioners in two ways.

First, the modeller is not a passive observer in this process: the modeller facilitates the whole process and is instrumental in bringing the participants together into a group. This study shows which elements of the simulation structure influence participants through the two phases (construction of a shared conceptual frame, and reification). In addition, the modeller enables the model to exert agency by eliciting ideas from the group and modifying diagrams and models (Black, 2013). However, the modeller could also inhibit the model’s agency, for example by “cherry-picking” certain ideas or data, or by privileging some participants over others. Moreover, the modeller acts as a facilitator by directing the attention of the group, which depends on them to experiment with the model, and can encourage certain types of experimentation over others. The case studies showed that the understanding achieved through this process was not easily available to people who had not engaged with the model.

Second, the theory of simulation agency does not diminish the role of the modeller: on the contrary, it emphasises the key role the modeller plays in the group model-building process. We suggest that awareness of the model’s powerful agency enables the modeller to use the model structure and data most effectively, supporting the creation and transmission of knowledge. This includes ensuring that group members work together effectively, helping them to conceptualise and build the model, and supporting them in experimenting with the model.

Conclusion

Interaction with the artefact during each phase of the modelling projects changed the mode of reasoning that prevailed among the participants in each group (from case-based to model-based reasoning, and vice versa). This happened, first, through a process of cognitive integration between the two modes and, second, through a series of shocks or surprises that challenged the participants’ a priori assumptions. Through these shocks, the artefact calibrated participants’ reasoning in a stepwise, recursive manner by revealing the flaws in their individual inferences and shared storylines. Cognitive transitions peaked during the formalisation and experimentation activities in both groups, when artefact agency had the greatest impact. Rather than merely representing a synthesis of participants’ fragmented stories, the model-artefact made them think differently and change the storyline of the problem being addressed. SD models are representations (or mental models), as identified in other studies (Scott et al., 2013, p. 216), and may create convergence of individual representations (Rouwette et al., 2002) if participants interact with the model long enough; but they also clearly transcend these roles and, as both cases exhibit, exert agency through the integration process and the shock incidents.

To study the agency of SD artefacts and the cognitive transition of humans in group model-building projects, we adopted the socio-technical definition of artefact agency: agency is shared and emerges from the interaction between human and artefact, which eventually becomes a socio-technical arrangement from which the human cognitive mode of reasoning emerges (Ueno et al., 2017, p. 95). Using an ethnographic method, the case studies provided documented evidence that cognitive transition results, first, from a process of interaction and, second, from a series of shocks or surprises.

We therefore created a conceptual framework to show how and when artefact agency causes cognitive transition in human modes of reasoning. The framework incorporates not only the relation between these concepts but also the phased activities during which the transition occurs (a conceptual-process model).

The agency perspective on simulation models that we developed here allowed us to investigate the connection between artefact agency and cognitive transition. We identified several contributions that this framework offers to prior literature. Future research in these fields could look more deeply into the polyphonic interactions between human and artefact during integration and shocks. Several studies have examined human-artefact interaction, focusing on the translation process within it; adding human cognition to such investigations would strengthen them. Our study complements research on simulation-supported group problem-solving by adding the elements of simulation agency and human cognition to this literature, and it points to an additional direction that may provide further answers in group modelling.

Acknowledgements

We are grateful to the consultants and their clients who allowed us to interview them and observe their modelling work.

Disclosure statement

No potential conflict of interest was reported by the authors.

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

References

- Abolafia, M. Y. (2010). Narrative construction as sensemaking: How a Central Bank thinks. Organization Studies, 31(3), 349–367. https://doi.org/10.1177/0170840609357380

- Alderman, N., Ivory, C., McLoughlin, I., & Vaughan, R. (2005). Sense-making as a process within complex service projects. International Journal of Project Management, 23(5), 380–385. https://doi.org/10.1016/j.ijproman.2005.01.004

- Andersen, D. F., Richardson, G. P., & Vennix, J. A. (1997). Group model building: Adding more science to the craft. System Dynamics Review: The Journal of the System Dynamics Society, 13(2), 187–201. https://doi.org/10.1002/(SICI)1099-1727(199722)13:2<187::AID-SDR124>3.0.CO;2-O

- Andersen, D. F., Vennix, J. A., Richardson, G. P., & Rouwette, E. A. (2007). Group model building: Problem structuring, policy simulation and decision support. Journal of the Operational Research Society, 58(5), 691–694. https://doi.org/10.1057/palgrave.jors.2602339

- Barad, K. (2003). Posthumanist performativity: Toward an understanding of how matter comes to matter. Signs: Journal of Women in Culture and Society, 28(3), 801–831. https://doi.org/10.1086/345321

- Barad, K. (2007). Meeting the universe halfway: Quantum physics and the entanglement of matter and meaning. Duke University Press.

- Barrett, M., & Oborn, E. (2010). Boundary object use in cross-cultural software development teams. Human Relations, 63(8), 1199–1221. https://doi.org/10.1177/0018726709355657

- Black, L. J. (2013). When visuals are boundary objects in system dynamics work. System Dynamics Review, 29(2), 70–86. https://doi.org/10.1002/sdr.1496

- Bluhm, D. J., Harman, W., Lee, T. W., & Mitchell, T. R. (2011). Qualitative research in management: A decade of progress. Journal of Management Studies, 48(8), 1866–1891. https://doi.org/10.1111/j.1467-6486.2010.00972.x

- Blumer, H. (1969). Symbolic interactionism: Perspective and method. Prentice-Hall.