?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

The calibration of complex computer codes using uncertainty quantification (UQ) methods is a rich area of statistical methodological development. When applying these techniques to simulators with spatial output, it is now standard to use principal component decomposition to reduce the dimensions of the outputs in order to allow Gaussian process emulators to predict the output for calibration. We introduce the “terminal case,” in which the model cannot reproduce observations to within model discrepancy, and for which standard calibration methods in UQ fail to give sensible results. We show that even when there is no such issue with the model, the standard decomposition on the outputs can and usually does lead to a terminal case analysis. We present a simple test to allow a practitioner to establish whether their experiment will result in a terminal case analysis, and a methodology for defining calibration-optimal bases that avoid this whenever it is not inevitable. We present the optimal rotation algorithm for doing this, and demonstrate its efficacy for an idealized example for which the usual principal component methods fail. We apply these ideas to the CanAM4 model to demonstrate the terminal case issue arising for climate models. We discuss climate model tuning and the estimation of model discrepancy within this context, and show how the optimal rotation algorithm can be used in developing practical climate model tuning tools. Supplementary materials for this article are available online.

1 Introduction

The design and analysis of computer experiments, now part of a wider cross-disciplinary endeavor called “Uncertainty Quantification” or “UQ,” has a rich history in statistical methodological development as far back as the landmark article by Sacks et al. (Citation1989). The calibration of computer simulators, a term reserved for methods that locate simulator input values with outputs that are consistent with physical observations (the inverse problem), is a well-studied problem in statistical science, with Kennedy and O’Hagan’s Bayesian approach based on Gaussian processes the most widely used (Kennedy and O’Hagan Citation2001).

The essence of the statistical approach to calibration is to combine a formal statistical model relating the computer simulator to real-world processes for which we have partial observations (Kennedy and O’Hagan Citation2001; Goldstein and Rougier Citation2009; Williamson et al. Citation2013), with a statistical representation of the relationship between inputs and outputs of the simulator based, typically, on Gaussian processes (Haylock and O’Hagan Citation1996).

Extensions for computer simulators with spatio-temporal output have centered around projecting the output onto a basis and adapting calibration methods to the lower-dimensional projections of these fields. Though wavelets (Bayarri et al. Citation2007) and B-splines (Williamson, Goldstein, and Blaker Citation2012) have been tried, the approach due to Higdon et al. (Citation2008), based on the principal components of the simulator output, has become the default method. Statistical methodological developments in UQ have built on principal component methods (e.g., (Wilkinson Citation2010; Chang et al. Citation2014, Citation2016)), and they have seen wide application, particularly in the analysis of climate models (Sexton et al. Citation2011; Chang et al. Citation2014; Pollard et al. Citation2016).

What statisticians term calibration is referred to as “tuning” in the climate modeling community, a process that has a huge influence on the projections made by each modeling center and by the Intergovernmental Panel on Climate Change (Stocker et al. Citation2013). Each modeling center submits integrations of their climate model for four different forcing scenarios (known as Representative Concentration Pathways) to each phase of the Coupled Model Intercomparison Project (Meehl et al. Citation2000), with the input parameters of the model “tuned” prior to submission so that the model output compares favorably with certain key observations. The resulting integrations, and not the simulators themselves, are what most climate scientists call “climate models” (i.e., simulators are not considered to be functions of these now fixed parameters). These integrations are used to discover physical mechanisms (Scaife et al. Citation2012), projected trends (Screen and Williamson Citation2017), drivers of variability (Collins et al. Citation2010), and future uncertainty to aid policy making (Harris et al. Citation2006).

Despite the application of UQ methods to the calibration of “previous-generation” climate models, referred to in the articles above and many others, UQ is not used for tuning within any of the major climate modeling centers (Hourdin et al. Citation2017). Instead, climate model parameters are often explored individually and tuning done by hand and eye, with the parameters changed, and the new run either accepted or rejected based on heuristic comparison with the current “best” integration. Different descriptions of these processes are offered by Mauritsen et al. (Citation2012), Williamson, Blaker, and Sinha (Citation2017), and Hourdin et al. (Citation2017).

This lack of uptake of state-of-the-art statistical methodology for calibration among some of the world’s most important computer simulators should give us pause for thought. The “off-the-shelf” methodology, Bayesian calibration with principal components, is widely used elsewhere, well published, and is applied to many lower resolution climate models within the climate science literature. Is the lack of uptake a communication issue, or are there features of our methodology that mean it does not scale up well to climate simulators?

In this article, we show how the terminal case, wherein a simulator cannot be satisfactorily calibrated, manifests in the inference of standard UQ methodologies. We then demonstrate that even when there is a good solution to the inverse problem, the use of standard basis representations of spatial output (e.g., principal components across the design) can and regularly do lead to the terminal case and incorrect inference. We develop a simple test to see whether an analysis will lead to the terminal case before performing the calibration and, when the terminal case is not guaranteed, provide a methodology for finding an optimal basis for calibration, via a basis rotation. The efficacy of our methodology is demonstrated through application to an idealized example, and its relevance to climate model tuning through application to the calibration of the atmosphere of the current Canadian climate model, CanAM4.

In Section 2, we review UQ methodologies for calibration and present the terminal case for scalar model output. Section 3 reviews the standard approach to handling spatial output and demonstrates the implications of the terminal case for these methods through an idealized example. Section 4 presents novel methods for finding optimal bases for calibration that overcome the terminal case issues and demonstrates the efficacy of calibrating with optimal bases for our example. In Section 5, we see that standard approaches always lead to terminal analyses in CanAM4 and show how our optimal basis methodology can be used in the process of climate model tuning. Section 6 contains discussion.

2 Calibration Methodologies and the Terminal Case

We consider a computer simulator to be a vector-valued function , with input parameters

that we wish to estimate/constrain, and “control” or “forcing” parameters, x, both of which can be altered to perform computer experiments. For example, x might represent future CO2 concentrations in a climate model.

simulates a physical system

, and we have access to measurements or observations z, of part or all of

. The goal of calibration methods is to use z to learn about

. In what follows we remove the control parameters, x, to simplify the notation, as they are not involved in calibration, but in subsequent prediction.

The two statistical methodologies for calibration that we focus on here are Bayesian (or probabilistic) calibration (Kennedy and O’Hagan Citation2001; Higdon et al. Citation2008), and history matching with iterative refocusing (Craig et al. Citation1996; Vernon, Goldstein, and Bower Citation2010; Williamson, Blaker, and Sinha Citation2017). Both begin with the same type of assumption, namely that there exists a best input setting, , so that

(1)

(1)

for mean-zero independent observation errors, e, and model discrepancy,

(though history matching differs in only requiring uncorrelated terms in (1) rather than independent terms).

Both methods require an emulator, usually a Gaussian process representation of function , trained using runs

based on design

. For scalar

, the general model is

(2)

(2)

where

is a vector of specified regressors,

their coefficients, and

a weakly stationary covariance function with parameters

. The model is completed by specifying a prior on the parameters,

, and posterior inference given F follows naturally with

with

There are many variants on emulation, with some practitioners preferring no regressors (Chen et al. Citation2016), different types of correlation function (including no correlation) (Kaufman et al. Citation2011; Salter and Williamson Citation2016), and different priors, , with some leading to partially analytic posterior inference (Haylock and O’Hagan Citation1996). As history matching only requires posterior means and variances of the emulator, Bayes linear analogues are sometimes used (Vernon, Goldstein, and Bower Citation2010). Generalizations to multivariate Gaussian processes are natural (Conti and O’Hagan Citation2010), and we address the difficulty with high-dimensional output from Section 3 onward.

2.1 Probabilistic Calibration

Though the underlying statistical model and the emulator are similar for both history matching and probabilistic calibration, the assumptions placed upon , and the resulting inference, are quite different. Probabilistic calibration places a prior on

, and a Gaussian process prior for the discrepancy,

, before deriving the posterior

, and marginalizing for

. The discussion of Kennedy and O’Hagan (Citation2001), and the later article by Brynjarsdóttir and O’Hagan (Citation2014), argue that lack of identifiability between

and

mean that strong prior information on

or

is essential for effective probabilistic calibration to be possible.

2.2 History Matching and Iterative Refocusing

Note that, given a discrepancy variance, probabilistic calibration must still give a posterior that integrates to 1, thus predetermining an analysis that will point to some region of parameter space

as being “most likely.” This can be undesirable in some application areas, as often the goal is to find out if the simulator can get “close enough” to the observations, so that experiments predicting the future can be trusted. Climate model tuning is a good example of this, where part of the goal in tuning is to find out whether it is the choice of parameters, or the parameterization itself, that is leading model bias (Mauritsen et al. Citation2012; Hourdin et al. Citation2017).

The method of history matching and iterative refocusing allows the question of whether the model is fit for purpose to be answered as part of the calibration exercise, by altering the problem from one of looking for the best input directly, to one of trying to rule out regions of that could not contain

. A model unfit for purpose would have all of

ruled out. The method defines an implausibility measure,

, with

(3)

(3)

where the expectations and variances of

are derived from the Gaussian process emulator description above and are conditioned on the runs F. If

exceeds a threshold, T, that value of

is considered implausible and ruled out, thus defining a membership function for a subspace

of

that is Not Ruled Out Yet (NROY), with

The choice of T will be problem dependent, though typically, if z is one-dimensional, Pukelsheim’s three sigma rule (Pukelsheim Citation1994) is used to set

(Craig et al. Citation1996; Williamson et al. Citation2015). For

-dimensional z, Vernon, Goldstein, and Bower (Citation2010) define

, the 99.5th percentile of the

-distribution with

degrees of freedom, or a conservative T can be derived through Chebyshev’s inequality.

A key principle behind history matching is its iterative nature. Following an initial set of runs, a “wave” of history matching is conducted, leading to a certain percentage of being ruled out. A new wave can then be designed within NROY space, and the procedure repeated, refocusing the search for possible

(Williamson, Blaker, and Sinha Citation2017).

Discrepancy and observation error variances, and

, are important in both probabilistic calibration and history matching. For the latter, Equationequation (1)

(1)

(1) leads to

in EquationEquation (3)

(3)

(3) , while a Normal assumption on e in calibration means

and

appear in the likelihood.

In this article, we focus on optimal spatial calibration for both types of methodology, as the issues we shall identify in Section 3 apply equally to both, though manifest in different ways, as we shall illustrate now with our discussion of the terminal case.

2.3 The Terminal Case

Consider a computer simulator, , a discrepancy variance assessment

, and an observation error variance

, where both variance matrices are positive definite. We define the terminal case to occur when

, for T as above and for a perfect emulator, so that, in EquationEquation (3)

(3)

(3) ,

and

for all

. So, from a history matching perspective, the terminal case occurs when the model is too far from the observations at every point in parameter space according to the model discrepancy. Hence, all of

is ruled out, and the modelers must reconsider their simulator, or their error tolerance.

Within a probabilistic calibration framework, the terminal case implies a prior-data conflict so that, in some sense, has been “misspecified” or the expert is “wrong.” Lack of identifiability requires informative expert judgment for discrepancy (Brynjarsdóttir and O’Hagan Citation2014), yet the difficulty in providing such judgments for complex computer simulators (Goldstein and Rougier Citation2009) may mean that the terminal case would occur quite often in practice. It is therefore important to see how such prior-data conflict would manifest.

shows 20 steps of an iterative probabilistic calibration of a one-dimensional that we can evaluate quickly (black line), to observations (solid gray line), with

and

misspecified (dashed gray lines ±3 SDs) so that the true function does not come as close to the observations as the expert judgment indicates. Starting with an equally spaced six-point design, a Gaussian process emulator is fitted for fixed correlation length (the mean function is the solid blue line, 2 SD intervals are given by the blue dashed lines), and the posterior distribution

overlaid (solid red line). We then evaluate

at the maximum a posteriori estimate for

, refit our Gaussian process, and compute the new posterior over

to produce the next plot.

Fig. 1 Showing 20 steps of an iterative probabilistic calibration of a computer simulator (black line) to observations (solid gray line) with and

misspecified (dashed gray lines ±3 SDs). Observations of the model (red dots) iteratively taken at the MAP estimate for

following the fitting of a GP emulator (mean solid blue line, ±2 SDs dashed blue lines), and the posterior distribution of

overlaid at each step (solid red line).

From panel 6 onward, we see the issue with the terminal case for probabilistic calibration. Our posterior beliefs are highly peaked at one particular value, yet evaluating the model there completely shifts the peak to a location for which we had near zero prior density. Each evaluation of the simulator, which for climate models may take weeks or months, shifts the posterior spike to an unexpected (a priori) part of parameter space. It is often not efficient to run expensive simulators, such as climate models, that require expert time to run and manage, one run at a time (Williamson Citation2015). The scientists that manage jobs on supercomputers, for example, require batches of runs that can be run in parallel. However, batch designs could be even worse here. Guided by the posterior density at each point, batch designs would be the near equivalent of one point at the MAP estimate, simply shifting the peak of the posterior to somewhere as yet unsampled.

Eventually, as we see from the bottom four panels, posterior uncertainty in is sufficiently reduced, and

settles on the “least bad” value of

, where

is closest to the observations (though around 30 SDs away). For simulators with input spaces of much higher dimensions (the climate models we work with have typically specified 10–30 parameters to focus on, though these would be a subset of several hundred), we are unlikely to ever be able to reduce emulator uncertainty to the extent that the posterior spike settles over the least bad parameter setting. Hence, under an iterative procedure such as this, we would continue to chase the best input throughout parameter space, constantly moving the spike as in a game of whack-a-mole, until we run out of resources.

Our illustration of the terminal case shows that though careful subjective prior information is required for model discrepancy in order to overcome the identifiability issues with the calibration model, if those judgments lead to a prior-data conflict via a terminal case, good calibration will not be possible, and it will take a great deal of resource (enough data to build a near perfect emulator everywhere) to discover this. It would seem more natural to first history match in order to check we are not in a terminal case, and, if not, perform a probabilistic calibration within NROY space as in Salter and Williamson (Citation2016).

Whichever calibration method, or combination of them, is preferred, it is important to understand this terminal case, as we shall show that even for models that can reproduce observations exactly, tractable methods for calibrating high-dimensional output can result in a terminal case analysis.

3 Calibration With Spatial Output

For spatial fields, the most common approach to emulation and calibration involves projecting the model output onto a low-dimensional basis, , and emulating the coefficients, so that fewer emulators are required (Bayarri et al. Citation2007; Higdon et al. Citation2008; Wilkinson Citation2010; Sexton et al. Citation2011) (although alternatives, such as emulating every grid box individually, have been applied, e.g., by Gu and Berger (Citation2016)).

Writing the model output as a vector of length

, so that F has dimension

, the singular value decomposition (SVD) is used to give n eigenvectors that can be used as basis vectors (equivalently, finding the principal components) (Higdon et al. Citation2008; Wilkinson Citation2010; Sexton et al. Citation2011; Chang et al. Citation2014, Citation2016). For the size of model output typically explored using these methods,

will not be of full rank as

. This means that while F can be represented exactly by projection onto

, general

-dimensional fields will not have a perfect representation on

. As the majority of the variability in F is usually explained by only the first few eigenvectors, the basis is truncated after q vectors, giving a basis

of dimension

, often chosen so that more than 95% of F is explained by

. Various rules-of-thumb are used dependent on the problem, for example, Higdon et al. (Citation2008) truncate after 99%, while Chang et al. (Citation2014) use 90%.

In order to emulate the model, the runs are first centered by subtracting their mean, , from each column of F, giving the centered ensemble

(we use the term ensemble to mean the collection of runs, as is common in the study of climate models).

is then projected onto the basis

, giving q coefficients associated with each parameter choice:

(4)

(4)

Given q coefficients, a field of size is reconstructed via

(5)

(5)

with

for

. Emulators for the coefficients of the first q SVD basis vectors are then built:

(6)

(6)

Given these emulators, calibration can either be performed using the entire -dimensional output, with emulator expectations and variances transformed to the

-dimensional space of the original field (Wilkinson Citation2010), or on its q-dimensional basis representation, with the observations projected onto this basis (Higdon et al. Citation2008).

Calibration (via either history matching or probabilistic calibration) requires an informative prior process model for the spatial discrepancy, . This could be a stationary process defined through a simple covariance function over the output dimensions, though a richer class of nonstationary process defined via kernel convolution is often used (Higdon Citation1998; Chang et al. Citation2014, Citation2016). These approaches specify a number of knots over the spatial field and define discrepancy to be a mixture of kernels around each of these knots. As with any calibration problem, however, strong prior information for discrepancy processes is essential to overcome identifiability issues, as discussed in Section 2.3. The way to include this information has been to fix the correlation parameters of the kernels and to have an informative prior for their variances. With such a prior, a terminal case analysis is just as possible as for the one-dimensional example we presented earlier.

Suppose that the prior on the process is strong enough to overcome identifiability issues and is such that we do not have a terminal case. When using a basis emulator to calibrate , we may artificially induce a terminal case analysis, as reconstructions from coefficients on the basis are restricted to a q-dimensional subspace of

-dimensional space. Further, it will not be clear whether our analysis implies that the model is incapable of reproducing z, or that this was due to a poor basis choice. The SVD basis chooses the q-dimensional subspace that explains the maximum amount of variability in F with the fewest number of basis vectors. This choice does not guarantee that important directions in F that are consistent with z are preserved.

3.1 Illustrative Example

We illustrate this problem with an idealized example of a six-parameter function (detailed in Section S1), with output given over a 10 × 10 grid. Observations, z, are given by a known input parameter setting,

, with

observation error added (given in (S2), with

defined in (S3)), so that a calibration exercise should be able to identify

. In our example, the great majority of the input space leads to output that is biased away from z: the proportion of input space leading to output consistent with z is around 0.01%.

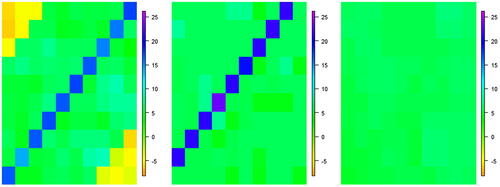

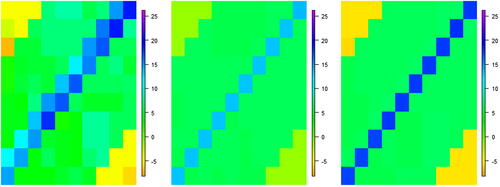

The first panel of shows the observations, z, with a strong signal on the main diagonal. The second panel is the mean of the output field over a maximin Latin hypercube sample of size 60 in (i.e., the mean of ensemble F). The strong signal in the ensemble is a biased version of z. In a climate context, this is analogous to the Gulf Stream being observed in the incorrect place in model output.

Fig. 2 Left: the observations, z, for our function. Center: the ensemble mean. Right: the reconstruction of z using the truncated SVD basis.

We calculate the SVD basis as described above. Over 95% of the ensemble variability is explained by projection onto the first four basis vectors, which we refer to as the “truncated basis,”

. If we project z onto this basis and reconstruct the original field using these coefficients, via EquationEquations (4)

(4)

(4) and Equation(5)

(5)

(5) , we obtain the field given by the third panel of : the distinctive pattern found in z has been lost. That is, spatial calibration with

would ultimately rule out parameter space that contained the true coefficients due to poor reconstruction, suggesting that, for reconstructions of the field using

, we are in the terminal case.

Fitting Gaussian process emulators to the coefficients given by projection of onto the four basis vectors, the expectation and variance at

is given by

To probabilistically calibrate or history match on the original field, we require and

in terms of the coefficient emulators. These are

where

contains the discarded basis vectors, and

is a diagonal matrix with the associated eigenvalues as the diagonal elements (Wilkinson Citation2010).

Calibrating in the coefficient subspace requires projection of , and

onto

. For example, the implausibility in (3) on the coefficients becomes

(7)

(7)

Using the 0.995 value of the chi-squared distribution with 100 degrees of freedom to history match via (3), we rule out the whole parameter space, , and so we are in the terminal case. Hence, probabilistic calibration gives peaked prediction at the incorrect value of

, consistent with the description given in Section 2.3 (see, supplementary material, Section S1.1, Figures S3, S4).

By history matching on the coefficients instead, using (7), and setting T using the chi-squared distribution with 4 degrees of freedom, we find an NROY space consisting of 3.8% of . However, we rule out 58% of the parameter space that was consistent with z, as the important directions for comparing the model to observations are not contained in

.

Whether we are calibrating on the original field, or on the coefficients, the “best” result we are able to find is that given by the reconstruction of z with , given in the final panel of . On the field, we are in the terminal case. On the coefficients, we are attempting to find runs that give coefficients that lead to this reconstruction, regardless of what happens in the directions we are interested in (i.e., the main diagonal pattern). Henceforth, we choose to focus on calibration on the field, as it compares all aspects of the observed output to the model, rather than a few summaries of it.

4 Optimal Basis Selection

For calibration, there are two main requirements for a basis, B, representing high-dimensional output: being able to represent z with B (a feature not guaranteed by principal component methods), and retaining enough signal in the chosen subspace to enable accurate emulators to be built for the basis coefficients (as principal components do).

A natural method for satisfying the first goal is to minimize the error given when the observations are reconstructed using B. Define the reconstruction error, via

(8)

(8)

where

is the norm of vector v, and W is an

positive-definite weight matrix. By setting

is analogous to (3) and is the implausibility when we know the basis coefficients exactly (so that the emulator variance is 0).

As W will not generally be a multiple of the identity matrix, the SVD projection from (4) is not appropriate for . Therefore, (4) becomes

(9)

(9)

with this projection minimizing the error in

(Section S2), hence the definition of the reconstruction error in (8).

We present everything in full generality for positive definite W. Therefore, B is an orthonormal basis if . A basis with this property can be obtained using generalized SVD (Jolliffe Citation2002), with

giving the usual SVD decomposition:

As a measure of whether emulators can be built, we use the proportion of variability explained by projection of the ensemble onto each basis vector , with

(10)

(10)

The proportion of ensemble variability explained by , is

(11)

(11)

The SVD basis maximizes for each k, given the previous vectors and subject to orthogonality.

Prior to building emulators and performing calibration for a given basis, we can assess whether we are in the terminal case or not. For history matching threshold T, if then we are in the terminal case on B, and would even rule out values of

that reproduce z exactly. If

for some

, we may view W as having been misspecified, as in the terminal case described in Section 2.3. However, we may also have underexplored the output dimension of

, so that B does not allow us to get close enough to z. We revisit this test in the context of optimal basis choice in Section 4.2.

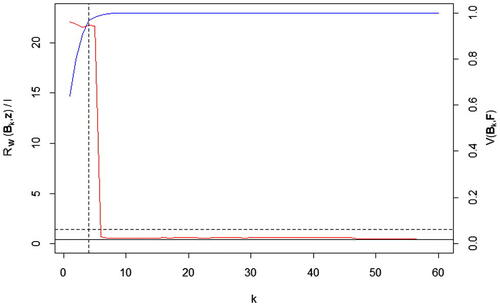

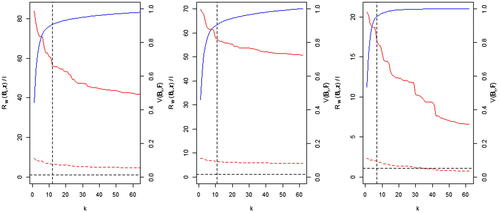

compares and

for the example of Section 3. We refer to plots of this type as varMSE plots. The red line represents

, and the blue line shows

, for each truncated basis,

. The vertical dotted line indicates where the basis is truncated if we wish to explain 95% of the ensemble variability, and the horizontal dotted line represents the history matching bound, T. The solid horizontal line is equal to

. For the SVD basis in our example, we see that

is large (compared to T) until k = 6, where the error decreases below the threshold, indicating that the sixth basis vector contains patterns that are important for explaining z. As further basis vectors are added,

continues to decrease, suggesting that patterns relevant for representing z are in fact included in B for this example. However, the later basis vectors explain low percentages of the variability in the ensemble, with the low signal-to-noise ratio of projected coefficients making accurate emulation impossible. If we could emulate the coefficients for the 5th and 6th basis vectors, we would more accurately represent z, although this rapid decrease in the reconstruction error is a feature of our example, rather than a general property of the SVD basis, and therefore we still truncate at 95% for illustrative purposes.

Fig. 3 A plot showing how the reconstruction error (red) and proportion of ensemble variability explained (blue) change as the SVD basis is increased in size, for .

The SVD basis aims to maximize the blue line for each basis vector added, whereas, for calibration, we require the red line to be below T. The problem of basis selection for calibration is one of trading off these two requirements, reducing while ensuring that each

is large enough to enable emulators to be built. Given that the full SVD basis may contain information and patterns that allow the observations to be more accurately represented, the information contained in this basis may be combined in such a way that the resulting basis is suitable for calibration, with important low-order patterns blended with those that explain more of the ensemble variability.

4.1 Rotating a Basis

Performing a rotation of an ensemble basis B using an n × n rotation matrix, , rearranges the signal from the ensemble, potentially allowing the new truncated basis to be a better representation of z. A general n × n rotation matrix

can be defined by composing

matrices that give a rotation by an angle around each pair of dimensions (Murnaghan Citation1962). Our goal is to find

such that

minimizes

, subject to constraints on

that allow the projected coefficients to be emulated.

To directly define a rotation matrix via optimization requires a large number of angles to be found, even when the ensemble size is small. Instead, we take an iterative approach, selecting new basis vectors sequentially while minimizing

at each step, in such a way that guarantees that the resulting basis is an orthogonal rotation of the original basis.

Given p < n basis vectors, , we define the “ensemble residual” as

This represents the variability in the ensemble not explained by . Define the “residual basis,”

, to be the matrix containing the right singular vectors of

. The residual basis gives basis vectors that explain the remaining variability in

, given vectors

.

4.2 The Optimal Rotation Algorithm

Given an orthogonal basis B for with dimension

; a positive definite

weight matrix

; a vector v, where vi

is the minimum proportion of the ensemble variability to be explained by the ith basis vector; the total proportion of ensemble variability to be explained by the basis

; and a bound T (usually that implied by history matching,

, we find an optimal basis for performing calibration as follows:

If

, stop and revisit the specification of W, or add more runs to

. Else set k = 1.

Let

and set

and set .

Find the residual basis given

, and form the orthogonal rank n basis

Define

as the minimum value satisfying

where

represents the first q columns of

. If

then stop, and return

as the truncated basis for calibration. Else, set

and

, and return to step 2.

Prior to applying the algorithm, we must specify an initial basis, B, a weight matrix, W, and the parameters and v to control the amount of variability explained by each basis vector. We use the SVD basis (with respect to W) for B, however, other choices are possible, for example, we could apply Gram–Schmidt to the ensemble itself and rotate this, or apply a different scaling to the SVD basis.

At each step, our algorithm selects the linear combination of a given basis that minimizes , subject to explaining a given percentage of ensemble variability, and given any previously selected basis vectors. If the defined truncation

satisfies

, then the algorithm terminates, as standard residual variance maximizing basis vectors no longer lead to a terminal case analysis. We allow a basis to be identified that satisfies our two goals: we do not rule out z and have coefficients that can be emulated, if v is set appropriately. To optimize for

, we use simulated annealing (Xiang et al. Citation2013), although any optimization scheme that converges could be used.

The check in step 1 of our algorithm is due to the following result (proved in S2):

Result 1

(Invariance of to rotation) For a rotation matrix

of dimension k × k, and a set of basis vectors

, we have

(12)

(12)

Regardless of the rotation that is applied to B, we cannot reduce the reconstruction error below that given by the full basis originally. However, because the SVD basis is always truncated prior to this minimum value being reached, we can search for a rotation that rearranges the information from the SVD basis in such a way that satisfies

(13)

(13)

incorporating important, potentially low-order, patterns into the q basis vectors that we emulate. Hence, step 1 of the algorithm provides an important test as to whether our ensemble and uncertainty assessment,

, are sufficient to avoid a terminal case analysis, and shows when a rotation exists, up to the choice of v.

Theorem 1.

in step 3 of the optimal rotation algorithm is an orthogonal rotation of B.

The results and proofs required, and the proof of Theorem 1 itself, are found in Section S2. Given that B passes step 1 of the algorithm, existence of an optimal rotation depends on the choice of v:

Theorem 2. A

t the kth iteration of the optimal rotation algorithm, given an orthogonal that satisfies

with

and

, for

, and

. In this case the algorithm converges to

.

Proof.

By construction,

. Hence, if

such that

.

If with

,

(by Theorem 1),

: let

and

be the coefficients given by projecting z onto

and

, respectively. Let

, then

Finally, with

(by convergence of the optimizer, e.g., Aarts and Van Laarhoven (Citation1985) for simulated annealing). □

In practice, when applying our algorithm to high- dimensional model output, we have found that only a small number (three or fewer) of iterations have been required, hence v often has a low dimension. The values of v required will depend on the problem, with a different approach required when a small number of vectors explain the majority of the ensemble, compared to when a large proportion of the variability is spread across many SVD basis vectors. In the former case, the values of v may be relatively high, while in the latter they can be lower, relative to the proportion explained by the equivalent SVD basis vectors. A reasonable approach is to initially set v as half of the proportion explained by the corresponding SVD basis vectors, reducing these further if the resulting q is too large. As Theorem 2 shows, it is possible to set v in such a way that the algorithm is unable to find a suitable basis. If we cannot find a kth basis vector that satisfies the variability constraint, given , then a basis does not exist for this choice of v, and the specification needs revisiting: either vk

needs to be decreased, or an earlier constraint needs relaxing.

The choice of is also a concern for the standard UQ approaches based on principal components. In our experience, using similar rules (e.g., 95% or 99%) to the SVD applications leads to 0–2 extra basis vectors required.

In an application, it may be desirable to include certain physical patterns, deemed to be important, in our basis B, which may not lie within the subspace defined by . In this case, if we have p selected physical vectors,

, combining these with the first n – p vectors of the residual basis will not necessarily explain all of the variability in

. The algorithm may be applied to the n + p vectors given by the physical vectors and the full residual basis, giving a rotation of this space rather than of

. As truncation occurs after the majority of variability of

, is explained, the resulting truncated basis, while not strictly a rotation of the subspace defined by

, will exhibit similar qualities, and may be superior for representing z, if important physical patterns can be emulated when combined with signal from the ensemble.

To perform the algorithm with basis vectors from outside the subspace defined by , rather than finding linear combinations of the residual basis at step k > 1,

is used at each step, with orthogonality imposed after each new basis vector has been selected, via Gram–Schmidt (as by Result S3, applying Gram–Schmidt does not affect

).

4.3 Idealized Example Continued

We now apply the optimal rotation algorithm to the example of Section 3. We set , and B as the SVD basis, with the projection of (4) used for consistency with Section 3.1, to show that rotation fixes the described problems. One iteration of the algorithm finds a basis that satisfies

, with q = 5 (i.e., we need the first four vectors of the residual basis so that

explains at least 95% of

).

The reconstruction of z with this basis, and associated varMSE plot, are shown in . Now, our basis allows us to accurately represent z with the leading vectors, as the important patterns from low-order eigenvectors have been combined with the leading patterns (hence an additional vector being required to explain more than 95% of ).

Fig. 4 The reconstruction of z using the truncated basis , and the varMSE plot for this basis, with the truncated SVD basis given by the red and blue dotted lines.

Performing history matching as before, and using the reconstructions of the original fields rather than the coefficients, we find that 31.5% of is now in NROY space (Figure S5). Performing our previous check on the accuracy of the match, we find that no runs consistent with z have been ruled out.

As we are no longer in the terminal case, we perform probabilistic calibration on the field. The posterior densities found by calibrating on are shown in Figure S3, with the average simulator output given by samples from this posterior in the first plot of . While the samples here are not consistent with z, as the off-diagonal is too strong, we have been able to identify runs where there is signal on the main diagonal. This is because the rotated basis allows for this direction of the output space to be searched. The limited signal in the important directions from F has been extracted and used to guide calibration.

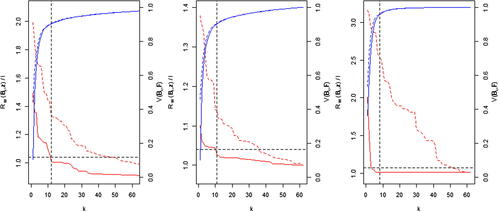

Fig. 5 The mean output for samples of

from the probabilistic calibration posterior, for the wave 1 rotated basis, the wave 2 rotated basis and the wave 3 SVD basis.

We continue the calibration by running a new design within NROY space. This new design should contain more signal in the direction of z, and hence it should be possible to find a rotation that reduces further than at the previous wave. We select 60 points from the wave 1 NROY space and run

at these points to give the wave 2 ensemble. We perform a rotation, and emulate and calibrate using the wave 2 ensemble. History matching reduces NROY space to 3.1% of

(Figure S7). If we instead perform probabilistic calibration, with zero density assigned to regions outside of the wave 1 NROY space, we find the average output field in the 2nd plot of (posteriors in Figure S6).

These results represent a large improvement over performing only one wave. We have ruled out the majority of , allowing future runs to be focused in this region. Probabilistic calibration is more accurate, with samples containing a strong diagonal, as with z.

Repeating the process, our wave 3 ensemble contains patterns more consistent with z than in previous waves, and hence the truncated SVD basis does not rule out the reconstruction of z, and no rotation is required. Following emulation for this basis, history matching leads to an NROY space consisting of 2% of (Figure S8). Probabilistic calibration (in the wave 2 NROY space) gives the average output in (posteriors in Figure S6), showing that our samples are now consistent with z.

5 Application to Tuning Climate Models

In this section, we will demonstrate that optimal rotation is an important and necessary tool if attempting to perform UQ for climate model tuning. However, as we will discuss, climate model tuning is not a solved problem, and it would be of limited value to simply show how calibration with our method can lead to an improvement in one output field over the standard methods, without necessarily improving the whole model or addressing the concerns of the community. We will motivate our discussion using the current Canadian atmosphere model, CanAM4.

CanAM4 is an Atmospheric Global Climate Model, which integrates the primitive equations on a rotating sphere employing a spherical-harmonic spatial discretization truncated triangularly at total wavenumber 63 (T63), with 37 vertical levels spanning the troposphere and stratosphere (von Salzen et al. Citation2013). CanAM4 has a large number of adjustable, “free,” parameters of which 13 will be varied here. The climatological influence of each set of free parameters is determined from 5-year “present-day” integrations with prescribed sea-surface temperatures and sea-ice. Model output is represented on the “linear” 128 × 64 Gaussian grid corresponding to the model’s T63 spectral resolution.

There are many output fields that must be checked for consistency with the observed climate when tuning the parameters of a climate model (in the case of CanAM4 there are more than 20). Here, we focus on just three two-dimensional fields: vertical air temperature (TA), the top of the atmosphere radiative balance (RTMT, W m− 2), and the cloud overlap percentage (CLTO). For RTMT and CLTO, the output is given over a longitude-latitude grid, so that . TA is the temperature averaged over longitude for each latitude and vertical pressure level so that

. There is also a temporal aspect to the output, with monthly values for every grid box; however, we remove this here by averaging over 5 years of June, July, August (JJA) output.

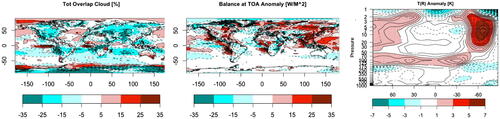

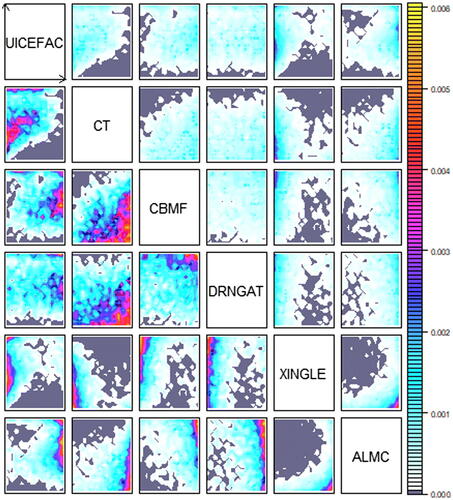

When evaluating and tuning the model, spatial anomaly plots are routinely examined to see how the model compares with observations. An anomaly plot shows the difference between the model and the observations with a blue-white-red color scale set such that blue is “too negative,” white is “alright” and red is “too positive.” So, for example, in a temperature anomaly plot, red areas show where the model is too warm (for the modelers) compared to observations. shows anomaly plots for CLTO, RTMT, and TA for the standard configuration of CanAM4, with the color scales representing the standard colors used by the modelers when tuning the model.

A goal of tuning is to try to reduce or remove biases that are visible from these plots. Yet equally important is to learn which biases cannot be removed simply by adjusting the model parameters. This is the search for “structural errors” in the model (what statisticians would call model discrepancies). Structural errors indicate that there are flaws with individual parameterizations, or with the way they interact, that cannot be fixed by tuning. Understanding what these structural errors are so that they might be addressed either as part of this phase of development or for the next is one of the major goals of tuning (Williamson et al. Citation2015). However, joint estimation of model discrepancy variances and model parameters is not possible without strong prior information (Brynjarsdóttir and O’Hagan Citation2014) due to lack of identifiability.

When working with CanAM4 then, our goal is to use history matching with a “tolerance to error” discrepancy variance (Williamson et al. Citation2015; Williamson, Blaker, and Sinha Citation2017) that aims to reduce the size of NROY space, so that, ultimately, in a calibration exercise we have strong prior information about and some structured information on discrepancy. A formal methodology for achieving this is beyond the scope of this article. However, we will demonstrate that optimal rotation is a crucial component for any attempt of this nature.

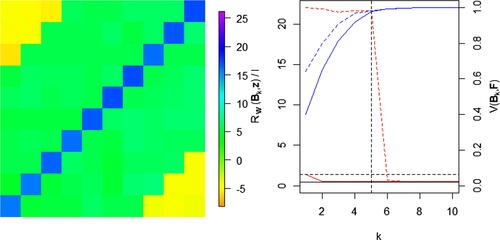

We designed 62 runs of CanAM4, varying 13 parameters and using a k-extended Latin Hypercube (Williamson Citation2015). shows varMSE plots for the output fields CLTO, RTMT, and TA for this ensemble. The weight matrix W used for the reconstructions represents our tolerance to model error [we discussed its correspondence to model discrepancy () in Section 4], and the red lines in these plots represent two alternatives based on interpreting the color scales pertaining to the white regions in . We assume that the white region represents 3 SDs (solid red line) and 1 SD (dashed red line), and set a diagonal W accordingly. The solid red lines on each plot indicate that we have a terminal case analysis under the small model discrepancy.

Fig. 7 varMSEplots for (a) CLTO, (b) RTMT, and (c) TA, with W based on 1 SD (dotted line) and 3 SDs (solid line). The dotted horizontal line indicates T.

The larger discrepancy indicates a terminal case in CLTO and RTMT, and that 35 basis vectors would be enough to adequately reconstruct TA. However, the blue line in the TA plot shows that there is so little ensemble signal on the basis vector coefficients after arguably 20 (or fewer) basis vectors, that calibration on 35 basis vectors is not possible. If discrepancy were increased (an operation that involves scaling the red line until it lies below the bound T represented by the dashed horizontal line in the plots), all three panels demonstrate that the reconstruction error under SVD decreases too slowly, so that a large number of basis vectors, each with coefficients that are increasingly difficult to emulate due to the decreasing ensemble signal, would be required to avoid a terminal case analysis.

Suppose model discrepancy so that we can consider

in the following. In order to use optimal rotation, we require W such that

, which is not true under our specification above for RTMT and CLTO. If we really believed our

represented the climate model’s ability to reproduce observed climate, then this indicates that we need a larger ensemble in order to explore the model’s variability. In that case, it may be desirable to follow a procedure like the one we present here to design these runs.

In our case, we believe it is clear that we have misspecified model discrepancy. In fact, we used a place-holder tolerance to error, so this analysis indicates that we are not tolerant enough to model error (at this stage). To explore model discrepancy, we first perform a rotation under the W given above, using the algorithm without step 1 in order to find as close to the reconstruction error of the untruncated SVD basis as possible, for small q and while retaining emulatability by setting

(as three rotated vectors is enough). Given this rotation, we then set

(14)

(14)

where j < 0.995 is a tuning parameter. This ensures that when reconstructed with the new basis, the observations will not be ruled out, and hence we can identify an NROY space likely to contain runs as consistent with z as possible, given the limited information we have with 62 ensemble members. This has the effect of “scaling” the reconstruction error for the rotated basis seen in below the horizontal dotted line at the point the basis is truncated. For our fields, we set j = 0.95 for RTMT, and j = 0.68 for the others (as j = 0.95 ruled out all of

for CLTO and TA).

Fig. 8 varMSEplots for CLTO, RTMT, and TA, with , with rotated basis (solid lines) and SVD basis (dotted lines). The dotted horizontal line indicates T.

We define NROY space as runs where is not ruled out using (3) for each of TA, CLTO and RTMT. This NROY space consists of 0.9% of

. We then design and run a new 50-member ensemble within this NROY space (discussed in Salter (Citation2017), sec. 6.3.5).

Upon inspection of the TA field for this wave 2 ensemble, we observe that every run contains the previously found strong warm bias in the Southern Hemisphere (Figure S9). As our optimal basis choice permitted the search for runs not containing this structural bias, these results are evidence that this may be a structural error. In practice, how much evidence is required before the modelers are convinced that a particular bias is structured or not is a climate modeling decision. Certainly, we could repeat our wave 1 procedure within the current NROY space and run a wave 3 and so on. This has the benefit of increasing the density of points in and the accuracy of emulators in key regions of

, thus insuring against possible “spikes” in the model input space that would correct the bias.

Assuming our modelers were convinced to treat this feature as a structural bias, we demonstrate an approach to include this information within the iterative calibration procedure. We first revisit the specification of the TA discrepancy, selecting the region with the common warm bias shown in Figure S10, deemed to be a structural error, and increasing for the grid boxes in this region. To do this, we set W as a diagonal matrix with 100 for the grid boxes in this region, and 1s elsewhere on the diagonal (note this is one possible choice. We might, instead, increase the correlation between these grid boxes in W in addition).

We redefine the wave 1 NROY space so that it only depends on CLTO and RTMT (consisting of 41.4% of ), and then include NROY wave 1 runs with the wave 2 ensemble when rotating and building emulators for wave 2. For TA, the optimal rotation algorithm is applied using the newly designed W, with the discrepancy

defined via (14), to ensure that z is not ruled out (W reflected our beliefs about the structure, not the magnitude, of

). History matching using the wave 2 bases and emulators leads to an NROY space containing 0.03% of

. Plots illustrating this NROY space for six of the more active parameters are shown in . We see that the regions with the greatest density of points in NROY space are generally found toward the edges of the parameter ranges. From the lower left plots, it is easier to identify relationships between some of the parameters, for example, CBMF generally needs to be high while UICEFAC needs to be toward the center of its allowable range.

Fig. 9 Plot showing the composition of the wave 2 NROY space for six of the parameters of CanAM4. For each cell of a pairwise plot, a large sample is taken over the remaining parameters and the proportion of space that is not ruled out is calculated. The lower left gives the same plots, with scales set for each individual plot to show more structure.

The calibration of climate models, or even simply the search for structural biases, is a massive undertaking, and a full tuning is well beyond the scope of this article. Each small ensemble of CanAM4 required 2 days of super-computer time to run and, in reality, the modelers routinely check over 20 spatial fields (and a host of other metrics) when tuning the model. UQ can help with the tuning procedure in providing tools (emulators) that allow to be explored much more quickly than is currently possible at the modeling centers. However, as this application has demonstrated, using the off-the-shelf methods based on the SVD basis does not work for tuning in general. It did not work for the three fields we showed here, nor have the authors ever found climate model output for which the problems we identified here were not present. Our application demonstrates the optimal rotation algorithm as an effective solution to quickly find bases without these issues for calibration.

6 Discussion

In this article, we highlighted the issue of terminal case analyses for the calibration of computer models. A terminal case analysis occurs when the prior assessment of model discrepancy is inconsistent with the computer simulator’s ability to mimic reality, and leads either to useless and incorrect posterior distributions (using the fully Bayesian procedure) or ruling out all of parameter space (using history matching). We showed that even when the prior assessment of model discrepancy is not inconsistent with the ability of the simulator, dimension reduction of spatial output using the ensemble-derived principal components (the SVD basis) often leads to a terminal case analysis.

We proposed a rotation of the SVD basis to highlight and incorporate important low-signal patterns that may be contained in the original SVD basis, giving a new basis that avoids the terminal case when this is possible. We then presented an efficient algorithm for optimal rotation, guaranteeing to avoid the terminal case when the model discrepancy allows, while ensuring enough signal on leading basis vectors to permit the fitting of emulators. We proved that our algorithm gives a valid rotation of the original basis, and established a fast test to see whether a given ensemble of model runs and discrepancy specification automatically leads to a terminal case analysis prior to rotation. Our methods are presented for models with spatial output, however, if basis methods were to be used for more general high-dimensional output (e.g., spatio-temporal), the optimal rotation approach would not change if, for example, PCA were taken over the entire spatio-temporal output for the design, as in Higdon et al. (Citation2008).

We demonstrated the efficacy of our method using an idealized application and showed that it scaled up to the important case of spatial output for state-of-the-art climate models. Our application highlighted the issue of the terminal case for climate model analyses, and showed the problems with using SVD in practice. We applied history matching for two waves to CanAM4 and showed how, combined with optimal rotation, we can begin to distinguish between what the modelers term “structural errors” and “tuning errors.”

A purely methodological UQ approach for tuning climate models does not exist. It may be tempting, for UQ practitioners who are not familiar with climate models, to claim that calibration of computer simulators is a “solved” problem and that “all” that is required is for the modelers to specify their model discrepancy. We believe that the challenge for model tuning lies in the understanding of this elusive quantity. For the statistical community, rather than focusing on developing comprehensive methods for calibrating climate models automatically, this should mean engaging with modelers to develop robust tools and methods to help identify and understand these errors. This type of approach would have obvious implications for tuning, but would also feed into model development as it becomes better understood which parameters control various biases, and therefore which parameterizations need particular attention during the next development cycle.

Supplementary Materials

The supplementary materials include further details and plots for the examples provided in the main text, and additional results and proofs relating to the optimal rotation algorithm. R code can be accessed at https://github.com/JSalter90/RotateBasis.

Supplemental Material

Download Zip (357 KB)Acknowledgments

The authors gratefully acknowledge support from EPSRC fellowship No. EP/K019112/1 and support from the Canadian Network for Regional Climate and Weather Processes (CNRCWP), funded by the Natural Science and Engineering Research Council (NSERC Grant 433915-2012). The authors thank the Isaac Newton Institute for Mathematical Sciences, Cambridge, for support and hospitality during the Uncertainty Quantification programme where work on this article was undertaken (EPSRC grant no EP/K032208/1). We would also like to thank Yanjun Jiao for managing our ensembles of CanAM4.

References

- Aarts, E. H., and Van Laarhoven, P. J. (1985), “Statistical Cooling: A General Approach to Combinatorial Optimization Problems,” Philips Journal of Research, 40, 193–226.

- Bayarri, M., Berger, J., Cafeo, J., Garcia-Donato, G., Liu, F., Palomo, J., Parthasarathy, R., Paulo, R., Sacks, J., and Walsh, D. (2007), “Computer Model Validation With Functional Output,” The Annals of Statistics, 35, 1874–1906. DOI: https://doi.org/10.1214/009053607000000163.

- Brynjarsdóttir, J., and O’Hagan, A. (2014), “Learning About Physical Parameters: The Importance of Model Discrepancy,” Inverse Problems, 30, 114007. DOI: https://doi.org/10.1088/0266-5611/30/11/114007.

- Chang, W., Applegate, P. J., Haran, M., and Keller, K. (2014), “Probabilistic Calibration of a Greenland Ice Sheet Model Using Spatially-Resolved Synthetic Observations: Toward Projections of Ice Mass Loss With Uncertainties,” Geoscientific Model Development Discussions, 7, 1905–1931. DOI: https://doi.org/10.5194/gmdd-7-1905-2014.

- Chang, W., Haran, M., Applegate, P., and Pollard, D. (2016), “Calibrating an Ice Sheet Model Using High-Dimensional Binary Spatial Data,” Journal of the American Statistical Association, 111, 57–72. DOI: https://doi.org/10.1080/01621459.2015.1108199.

- Chen, H., Loeppky, J. L., Sacks, J., and Welch, W. J. (2016), “Analysis Methods for Computer Experiments: How to Assess and What Counts?,” Statistical Science, 31, 40–60. DOI: https://doi.org/10.1214/15-STS531.

- Collins, M., An, S.-I., Cai, W., Ganachaud, A., Guilyardi, E., Jin, F.-F., Jochum, M., Lengaigne, M., Power, S., Timmermann, A., and Vecchi, G. (2010), “The Impact of Global Warming on the Tropical Pacific Ocean and El Niño,” Nature Geoscience, 3, 391–397. DOI: https://doi.org/10.1038/ngeo868.

- Conti, S., and O’Hagan, A. (2010), “Bayesian Emulation of Complex Multi-output and Dynamic Computer Models,” Journal of Statistical Planning and Inference, 140, 640–651. DOI: https://doi.org/10.1016/j.jspi.2009.08.006.

- Craig, P. S., Goldstein, M., Seheult, A., and Smith, J. (1996), “Bayes Linear Strategies for Matching Hydrocarbon Reservoir History,” Bayesian Statistics, 5, 69–95.

- Goldstein, M., and Rougier, J. (2009), “Reified Bayesian Modelling and Inference for Physical Systems,” Journal of Statistical Planning and Inference, 139, 1221–1239. DOI: https://doi.org/10.1016/j.jspi.2008.07.019.

- Gu, M., and Berger, J. O. (2016), “Parallel Partial Gaussian Process Emulation for Computer Models With Massive Output,” The Annals of Applied Statistics, 10, 1317–1347. DOI: https://doi.org/10.1214/16-AOAS934.

- Harris, G., Sexton, D., Booth, B., Collins, M., Murphy, J., and Webb, M. (2006), “Frequency Distributions of Transient Regional Climate Change From Perturbed Physics Ensembles of General Circulation Model Simulations,” Climate Dynamics, 27, 357–375. DOI: https://doi.org/10.1007/s00382-006-0142-8.

- Haylock, R., and O’Hagan, A. (1996), “On Inference for Outputs of Computationally Expensive Algorithms With Uncertainty on the Inputs,” Bayesian Statistics, 5, 629–637.

- Higdon, D. (1998), “A Process-Convolution Approach to Modelling Temperatures in the North Atlantic Ocean,” Environmental and Ecological Statistics, 5, 173–190.

- Higdon, D., Gattiker, J., Williams, B., and Rightley, M. (2008), “Computer Model Calibration Using High-Dimensional Output,” Journal of the American Statistical Association, 103, 570–583. DOI: https://doi.org/10.1198/016214507000000888.

- Hourdin, F., Mauritsen, T., Gettelman, A., Golaz, J.-C., Balaji, V., Duan, Q., Folini, D., Ji, D., Klocke, D., Qian, Y., Rauser, F, (2017), “The Art and Science of Climate Model Tuning,” Bulletin of the American Meteorological Society, 98, 589–602. DOI: https://doi.org/10.1175/BAMS-D-15-00135.1.

- Jolliffe, I. (2002), Principal Component Analysis, New York: Springer.

- Kaufman, C. G., Bingham, D., Habib, S., Heitmann, K., and Frieman, J. A. (2011), “Efficient Emulators of Computer Experiments Using Compactly Supported Correlation Functions, With an Application to Cosmology,” The Annals of Applied Statistics, 5, 2470–2492. DOI: https://doi.org/10.1214/11-AOAS489.

- Kennedy, M. C., and O’Hagan, A. (2001), “Bayesian Calibration of Computer Models,” Journal of the Royal Statistical Society: Series B, 63, 425–464. DOI: https://doi.org/10.1111/1467-9868.00294.

- Mauritsen, T., Stevens, B., Roeckner, E., Crueger, T., Esch, M., Giorgetta, M., Haak, H., Jungclaus, J., Klocke, D., Matei, D., and Mikolajewicz, U. (2012), “Tuning the Climate of a Global Model,” Journal of Advances in Modeling Earth Systems, 4, M00A01.

- Meehl, G. A., Boer, G. J., Covey, C., Latif, M., and Stouffer, R. J. (2000), “The Coupled Model Intercomparison Project (CMIP),” Bulletin of the American Meteorological Society, 81, 313–318. DOI: https://doi.org/10.1175/1520-0477(2000)081<0313:TCMIPC>2.3.CO;2.

- Murnaghan, F. D. (1962), The Unitary and Rotation Groups (Vol. 3), Washington, D. C.: Spartan Books.

- Pollard, D., Chang, W., Haran, M., Applegate, P., and DeConto, R. (2016), “Large Ensemble Modeling of the Last Deglacial Retreat of the West Antarctic Ice Sheet: Comparison of Simple and Advanced Statistical Techniques,” Geoscientific Model Development, 9, 1697–1723. DOI: https://doi.org/10.5194/gmd-9-1697-2016.

- Pukelsheim, F. (1994), “The Three Sigma Rule,” The American Statistician, 48, 88–91. DOI: https://doi.org/10.2307/2684253.

- Sacks, J., Welch, W. J., Mitchell, T. J., and Wynn, H. P. (1989), “Design and Analysis of Computer Experiments,” Statistical Science, 4, 409–423. DOI: https://doi.org/10.1214/ss/1177012413.

- Salter, J. M. (2017), “Uncertainty Quantification for Spatial Field Data Using Expensive Computer Models: Refocussed Bayesian Calibration With Optimal Projection,” Ph.D. thesis, University of Exeter.

- Salter, J. M., and Williamson, D. (2016), “A Comparison of Statistical Emulation Methodologies for Multi-wave Calibration of Environmental Models,” Environmetrics, 27, 507–523. DOI: https://doi.org/10.1002/env.2405.

- Scaife, A. A., Spangehl, T., Fereday, D. R., Cubasch, U., Langematz, U., Akiyoshi, H., Bekki, S., Braesicke, P., Butchart, N., Chipperfield, M. P., and Gettelman, A. (2012), “Climate Change Projections and Stratosphere–Troposphere Interaction,” Climate Dynamics, 38, 2089–2097. DOI: https://doi.org/10.1007/s00382-011-1080-7.

- Screen, J. A., and Williamson, D. (2017), “Ice-Free Arctic at 1.5 °C?,” Nature Climate Change, 7, 230–231. DOI: https://doi.org/10.1038/nclimate3248.

- Sexton, D. M., Murphy, J. M., Collins, M., and Webb, M. J. (2011), “Multivariate Probabilistic Projections Using Imperfect Climate Models Part I: Outline of Methodology,” Climate Dynamics, 38, 2513–2542. DOI: https://doi.org/10.1007/s00382-011-1208-9.

- Stocker, T. F., Qin, D., Plattner, G.-K., Tignor, M., Allen, S. K., Boschung, J., Nauels, A., Xia, Y., Bex, B., and Midgley, B. (2013), “IPCC, 2013: Climate Change 2013: The Physical Science Basis,” Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change.

- Vernon, I., Goldstein, M., and Bower, R. G. (2010), “Galaxy Formation: A Bayesian Uncertainty Analysis,” Bayesian Analysis, 5, 619–669. DOI: https://doi.org/10.1214/10-BA524.

- von Salzen, K., Scinocca, J. F., McFarlane, N. A., Li, J., Cole, J. N., Plummer, D., Verseghy, D., Reader, M. C., Ma, X., Lazare, M., and Solheim, L. (2013), “The Canadian Fourth Generation Atmospheric Global Climate Model (CanAM4). Part I: Representation of Physical Processes,” Atmosphere-Ocean, 51, 104–125. DOI: https://doi.org/10.1080/07055900.2012.755610.

- Wilkinson, R. D. (2010), “Bayesian Calibration of Expensive Multivariate Computer Experiments,” in Large-Scale Inverse Problems and Quantification of Uncertainty, Ser. Comput. Stat., eds. L. T. Biegler et al., New York: Wiley, pp. 195–216.

- Williamson, D. (2015), “Exploratory Ensemble Designs for Environmental Models Using k-Extended Latin Hypercubes,” Environmetrics, 26, 268–283. DOI: https://doi.org/10.1002/env.2335.

- Williamson, D., Blaker, A. T., Hampton, C., and Salter, J. (2015), “Identifying and Removing Structural Biases in Climate Models With History Matching,” Climate Dynamics, 45, 1299–1324. DOI: https://doi.org/10.1007/s00382-014-2378-z.

- Williamson, D. B., Blaker, A. T., and Sinha, B. (2017), “Tuning Without Over-Tuning: Parametric Uncertainty Quantification for the NEMO Ocean Model,” Geoscientific Model Development, 10, 1789–1816. DOI: https://doi.org/10.5194/gmd-10-1789-2017.

- Williamson, D., Goldstein, M., Allison, L., Blaker, A., Challenor, P., Jackson, L., and Yamazaki, K. (2013), “History Matching for Exploring and Reducing Climate Model Parameter Space Using Observations and a Large Perturbed Physics Ensemble,” Climate Dynamics, 41, 1703–1729. DOI: https://doi.org/10.1007/s00382-013-1896-4.

- Williamson, D., Goldstein, M., and Blaker, A. (2012), “Fast Linked Analyses for Scenario-Based Hierarchies,” Journal of the Royal Statistical Society: Series C, 61, 665–691. DOI: https://doi.org/10.1111/j.1467-9876.2012.01042.x.

- Xiang, Y., Gubian, S., Suomela, B., and Hoeng, J. (2013), “Generalized Simulated Annealing for Efficient Global Optimization: The GenSA Package for R,” The R Journal, 5, 13–28.