?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Using the spatial structure of various indoor environments as prior knowledge, the robot would construct the map more efficiently. Autonomous mobile robots generally apply simultaneous localization and mapping (SLAM) methods to understand the reachable area in newly visited environments. However, conventional mapping approaches are limited by only considering sensor observation and control signals to estimate the current environment map. This paper proposes a novel SLAM method, map completion network-based SLAM (MCN-SLAM), based on a probabilistic generative model incorporating deep neural networks for map completion. These map completion networks are primarily trained in the framework of generative adversarial networks (GANs) to extract the global structure of large amounts of existing map data. We show in experiments that the proposed method can estimate the environment map 1.3 times better than the previous SLAM methods in the situation of partial observation.

GRAPHICAL ABSTRACT

1. Introduction

Even for unobserved areas that could be predicted with universal common knowledge, such as a corridor or the corner of a room, it is necessary to get observations first to estimate the environment map in previous simultaneous localization and mapping (SLAM) research. To understand the shape and structure of the reachable area in a newly visited environment, autonomous mobile robots generally use a SLAM method which consists of estimating the environment map and self-position concurrently [Citation1–3]. For example, when an autonomous cleaning robot is introduced into a newly human living environment where the environmental map is unknown, the robot necessarily starts operation by acquiring observations using its own sensors to estimate the environment's reachable area. However, in previous SLAM research, such as FastSLAM [Citation4,Citation5], each cell of the occupancy grid map is estimated independently, and the estimation of the environment map is limited to observable areas only. Therefore, to execute tasks using the entire environment map estimated in previous studies [Citation5,Citation6], the robot needs to get sufficient observation beforehand.

In contrast, a human can predict the internal spatial structure of unknown areas to some extent, even if it does not reach everything inside the building, such as the corridor or the room next door. We believe that this ability of humans comes from the prior learning of structures inside various buildings from their own experience. In this study, the robot uses map completion in unknown environments to estimate the unobserved area from the partially observed area using the joint probability of all the occupancy grid map cells. Map completion, which consists of estimating the entire environment map with sufficient environmental information from a partial environment map estimated using insufficient environmental information, can be thought of as an image completion task of computer vision. In this regard, using generative adversarial networks (GANs) [Citation7] is now considered state of the art to perform image completion [Citation8,Citation9]. Indeed, GANs [Citation7,Citation10] have been attracting attention because they can generate genuine data compared to more conventional image generation methods [Citation11,Citation12]. Therefore, we adopt the framework of GANs for map completion and integrate it with a traditional SLAM method.

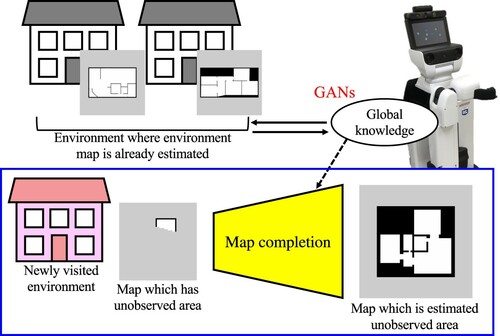

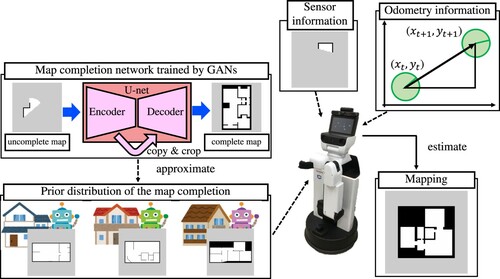

We propose a novel SLAM method, map completion network-based SLAM (MCN-SLAM), that uses map completion trained in the framework of a generative model, and incorporates the prior distribution of the map that modeled the joint distribution of the occupancy probabilities of all the grid cells. Figure shows an overview of the proposed MCN-SLAM. With this method, we extract the global structure of maps of multiple environments and use it as a prior distribution for map completion. Compared to previous SLAM methods where the map is generated only in one environment, MCN-SLAM can acquire or transfer the knowledge of the general room structure from multiple environments. Hence, our contributions with MCN-SLAM are as follows:

We extend the FastSLAM formulation by incorporating map completion using generative models.

We show that our method can estimate the map of the unobserved area from the observed area with higher accuracy than the traditional SLAM methods.

We show that our method can acquire and transfer general room structure knowledge from multiple environments.

Figure 1. Overview of the proposed method: map completion network-based SLAM (MCN-SLAM). MCN-SLAM acquires knowledge of the global room structure from the known environments and transfers it to the unknown environment. Global knowledge includes features represented by occupancy grid maps, such as wall layout and room size. The map is complemented by the interaction of global knowledge with partially generated maps using SLAM in the newly visited environment.

The remainder of the paper is structured as follows. Section 2 introduces the related work. Section 3 describes the proposed method MCN-SLAM. Section 4 compares the proposed method with previous related methods. Finally, Section 5 concludes this study with perspectives for future work.

2. Related work

This section introduces works related to the proposed method, which intersects two fields: simultaneous localization and mapping (SLAM), and image completion with deep generative models (DGMs).

2.1. Simultaneous localization and mapping (SLAM)

SLAM consists of estimating the environment map and self-position at the same time [Citation1–3]. Montemerer et al. proposed FastSLAM as an online method to solve SLAM [Citation4,Citation5] by using a Rao-Blackwellized particle filter. Gmapping [Citation13], which is implemented as a standard Robot Operating System (ROS) [Citation14] package, is a grid-based version of FastSLAM. The environment map estimated by Gmapping is in the form of an occupancy grid map and uses the mechanisms for handling occupancy grid maps prepared in ROS.

The occupancy grid map is one of the map representations that robots commonly use to accomplish various service tasks [Citation1,Citation15–17]. It is a method that divides the environment into grid cells at fixed intervals and stores the occupancy probability of each cell in a 2D list format. The cells with a high value of occupancy probability are assumed to be occupied, and the cells with a low value are assumed to be unoccupied and available for the robot to move to. The occupancy grid map is more suitable for robot navigation tasks than other map representation methods because it can search for routes not occupied by obstacles on the map. Assuming that the grid cell of the occupancy grid map with index i is , the occupancy grid map m is the space divided by grid cells such that

where S is the number of cells in the occupancy grid map. A binary occupancy value is assigned to each grid

:

when occupied and

when unoccupied. An occupancy grid map is used to expand an uncertain or unspecified region as an unsearched area. The unsearched area is a cell for which it is impossible to determine its occupancy from the sensor values.

CNN-SLAM is a method that utilizes convolutional neural networks (CNN) to improve SLAM accuracy with depth prediction [Citation18]. While CNN-SLAM is a method that uses neural networks to complement the observation, our proposed method uses neural networks to complement the environment map estimated from the observation.

There are also methods to complement the unobserved parts of the environment map using the information of the layout of the rooms [Citation19–21]. In particular, Luperto et al. proposed a method that identifies the layout of a partially known room from the walls on the 2D grid map and estimates the room layout from the known parts of the environment to the unknown parts by propagating the regularities [Citation21]. However, map completion functions as post-processing of SLAM and is limited to partial area completion. In our study, SLAM and map completion are theoretically integrated as one probabilistic generative model, and maps are sequentially generated from the global knowledge of spatial structures.

2.2. Image completion with deep generative models (DGMs)

When performing map completion, we want the robot to maintain some features from the observation to the semantic map, such as the occupancy grid and the unoccupied area estimated from the observations in the occupancy grid map. Therefore, map completion can be solved in the pix2pix [Citation8] framework, which is one approach of GANs [Citation7], by regarding the occupancy grid map completion as a similar task of image completion. Indeed pix2pix, which learns the relationship between a pair of images using U-net [Citation22] in the generator part, enables image completion and line drawing coloring. pix2pix is a method based on the conditional GAN (CGAN) framework, which is a GAN method using conditional information to learn the relationships between the training data and the condition data [Citation23]. The pix2pix's generator has skip connections to extract the features of the original image at the encoder by using the image as input and adding these features at the decoder to the output of the generator.

GANs [Citation7] are generative models that simultaneously learn two networks: the generator that performs data generation and the discriminator that estimates the distance between the distribution of the data generated by the generator and that of the real data. GANs have attracted increasing attention because they can generate genuine data compared to conventional generative models [Citation11,Citation12]. However, GANs face the problem of difficult convergence because two networks are trained simultaneously [Citation10]. In this regard, Miyata et al. proposed spectral normalization GAN (SNGAN) with improved performance using spectral normalization as the discriminator weight [Citation24]. Our study uses SNGAN to help the learning converge because SNGAN is easy to implement and can be combined with other GAN structures. For the above reasons, we adopt U-net for the generator and pix2pix and SNGAN for the discriminator.

Katyal et al. proposed an active SLAM method that combines map estimation and map completion using U-net to perform map completion and use an ambiguous position where the map cannot be complemented as the next search position [Citation25]. Shrestha et al. proposed a variational autoencoder (VAE) deep neural network that learns to predict unseen regions of building floor plans and used it for path planning to enhance exploration performance [Citation26]. These methods use local maps to improve the estimation accuracy and determine the navigation targets. In contrast, our study attempts to estimate the shape of the global environment.

3. Proposed method: MCN-SLAM

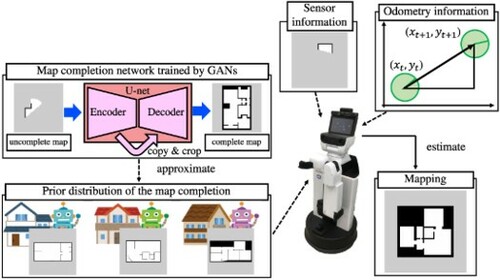

We propose a map completion network-based SLAM (MCN-SLAM) that estimates the unobserved areas based on partial observation by using the global structure extracted from multiple environmental maps. In this method, a network for map completion is trained in advance using the architecture of a generative model, and the prior distribution of the map is modeled by the joint distribution of the occupancy probabilities of all cells. An overview of the proposed MCN-SLAM method is shown in Figure .

Figure 2. The proposed method, map completion network-based SLAM (MCN-SLAM), trains a network to model the global structure extracted from multiple environmental maps using GANs and estimates the unobserved areas based on partial observation. MCN-SLAM extracts the global structure of maps of multiple environments as a prior distribution for map completion. In a newly visited environment, the robot estimates the map using that distribution and observation information. Sensor information and odometry information are used to construct the uncomplete map. The complete map is obtained as a sample from the conditional distribution.

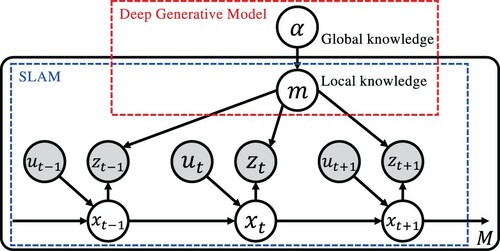

In addition, Figure shows the graphical model representation of MCN-SLAM, and Table its variables. Equations (Equation1(1)

(1) )–(Equation3

(3)

(3) ) describe the generative process of the proposed graphical model as follows:

(1)

(1)

(2)

(2)

(3)

(3)

where the probability distribution of Equation (Equation1

(1)

(1) ) represents a motion model in SLAM, i.e. a state transition model, and the probability distribution of Equation (Equation2

(2)

(2) ) a measurement model in SLAM.

Figure 3. Graphical model representation of the MCN-SLAM generative process where the gray nodes indicate observation variables and the white nodes unobserved variables. The part surrounded by the blue dotted frame is the model representation of the SLAM of one environment. The global knowledge is assumed as a parameter of the prior distribution for maps that integrate local knowledge in various environments.

Table 1. Definition of the variables in the graphical model of MCN-SLAM.

In the following, Section 3.2 describes the training of the map completion network, and Section 3.1 the algorithm that performs SLAM.

3.1. SLAM with map completion

The proposed MCN-SLAM method is an extension of FastSLAM. FastSLAM is formalized as calculating the joint distribution of the trajectory of the self-positions and the map m. Likewise, the formulation of MCN-SLAM is as follows:

(4)

(4)

where it is assumed that the parameter of the prior distribution α is learned in advance. The learning method is described in Section 3.2.

From the standpoint of generative models, the mapping process in SLAM is the inference of the posterior distribution of a map m, as shown in the first term of the Equation (Equation4(4)

(4) ). In the second term of Equation (Equation4

(4)

(4) ), the prior parameter α can be ignored as an approximation because the information outside the sensor observation has little effect on the self-localization, and self-localization is determined by a particle filter. The first term of Equation (Equation4

(4)

(4) ) is the posterior probability distribution calculated from the information up to the present and the parameters that determine the shape of the prior distribution of the map.

Here it is difficult to estimate the shape of the distribution that models the joint distribution of the occupancy probabilities of all cells used as the prior distribution of the map. Therefore, in this study, we approximate the distribution of data sampled from the prior distribution of the map by the distribution of data generated by the map completion network.

3.2. Training map completion networks

In this study, the dependency of the occupancy probability of the cells in the occupancy grid map is obtained from the estimation result of the map in the known environment. The map in an unknown environment is complemented by using the prior distribution of the occupancy grid map.

The map estimated using the partial observation up to a certain time

, where T is the last time at which sufficient observation is given, is as follows:

(5)

(5)

where

uses the value with the maximum weight among the particles estimated by the particle filter.

The map completion task consists of estimating the environment map using and the parameter α that determines the shape of the prior distribution of the map. Since

is a map of the environment estimated from

and

, we approximate it by assuming that it has the information of

and

. This approximation is similar to the calculation process used for sequential updates of grid maps. Therefore, the first term in Equation (Equation4

(4)

(4) ) is approximated as follows:

(6)

(6)

By approximating the probability distribution, various map complement candidates can be expressed stochastically. Therefore, the completed environment map

is sampled by the following:

(7)

(7)

To estimate the prior distribution for map completion, we consider the occupancy grid map as an image, train the network using an image completion method, and approximate the prior distribution by that network. In this study, the prior distribution is approximated by U-net [Citation22]. When training the map completion network, the distribution of the estimated environment map

is trained to approximate the distribution of

. Two types are prepared: with and without the discriminator loss in GANs.

With the discriminator: The objective function C for training the map completion network in the pix2pix framework, which is a CGAN [Citation23], is as follows:

(8)

(8)

where

is the Jensen-Shannon divergence (JSD) between the distribution A and B, c the condition,

the training data distribution, and

the distribution of the data generated by the generator G. CGAN learns to estimate

that minimizes the distance from the distribution

of the training data, which is the map

estimated at the last time T, to minimize this objective function.

Without the discriminator: It is also possible to train the generator G directly without using the discriminator in GANs. We use the L2 loss, i.e. the Euclidean distance, for training. The objective function C for training the map completion network using U-net is as follows:

(9)

(9)

where

is the L2 loss function between the distributions A and B.

In this study, since the condition is , the right side of Equation (Equation7

(7)

(7) ) is expressed by the following equation using a network learned in the above framework:

(10)

(10)

Assuming that generating data from the network is sampling from the distribution of the generated data, the equation for sampling the completed environment map from the prior distribution and the map

can be written from Equation (Equation10

(10)

(10) ) as follows:

(11)

(11)

3.3. Processing of the input and output map images during training

Data format: The occupancy grid map in Gmapping, which is used in the SLAM part of our proposed method, is a two-dimensional tensor. This map data is divided into the map image

and the mask image

, and is converted in a grayscale image. The image data format of the occupancy grid map is

, where S represents the number of cells in the occupancy grid map. Here,

is the i-th pixel in which each cell of the occupancy grid map is described as unoccupied area, occupancy area, or unsearched area, with three values defined as follows:

(12)

(12)

In addition, the mask image data in the unsearched area is

. Here,

is the image of each cell of the occupancy grid map binarized into a searched or an unsearched area as follows:

(13)

(13)

Input and output of the generator: The generator network receives two kind of images,

and

, and generates

and

correspondingly. The input and output size is

.

The outputs of the generator are converted into the image format of a ternary for the occupancy grid map. The cell of the inputs and

are discrete values, but the cell of the outputs

and

are continuous values because they pass through the network. The process of converting the outputs of the generator, which are continuous values, into a discrete value is performed as follows:

(14)

(14)

In the continuous value representation of the occupancy grid map at the output of the generator, it is difficult to distinguish between the unsearched area and the searched area. Therefore,

is output to obtain information that supports the restoration of the map image by estimating the unsearched area. Since U-net requires the input and output layers to have the same structure, mask images are also required for both the input and output regardless of the use of a discriminator.

Input and output of the discriminator: The inputs of the discriminator are , which is the map before completion, and

, which is the map after completion. These inputs correspond to

and

, respectively. The output of the discriminator is a binary value, i.e. true or false.

4. Experiment

4.1. Experimental dataset

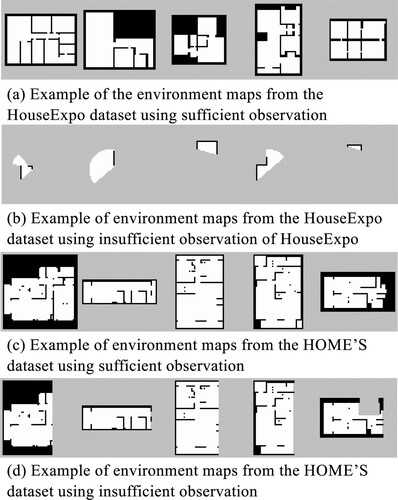

We prepared two types of large-scale home environment datasets: the HouseExpo dataset [Citation27] and the HOME'S dataset [Citation28]. The HouseExpo dataset is a large-scale image dataset of 2D indoor layouts generated from the SUNCG dataset [Citation29]. In our experiments, we use the ground truth included in the dataset as the map data estimated using sufficient observation. The map images generated by a Pseudo SLAM simulator are also included in the HouseExpo dataset, as the map data is estimated using insufficient observation. We used 5000 images as training data, 200 images as model validation data, and 100 images as test data.

On the other hand, the HOME'S dataset includes floor plans of apartments in Japan. We use map images created by classifying the colored areas of the floor plans included in the dataset into the occupancy grid, unoccupied cells, and unsearched areas as the map data estimated using sufficient observation. We also use map images created by replacing a part of these floor plans with an unsearched area as the map data estimated using insufficient observation. We used 3500 images as training data, 200 images as model validation data, and 100 images as test data. All the map images were resized to pixels.

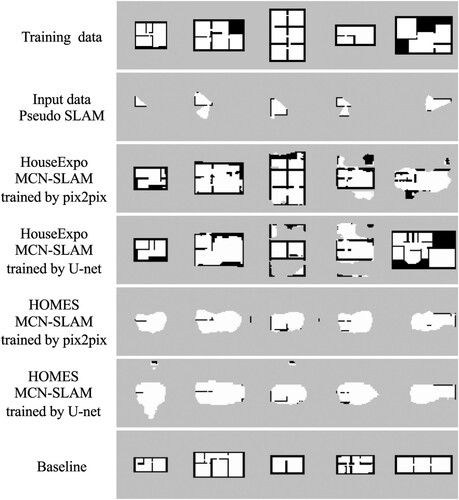

Figure shows some examples of the data. As the HOME'S data are converted from the floor plans included in the dataset, they contain more noise compared to the HouseExpo dataset created in a simulator environment. The environment maps of the HOME'S dataset using insufficient observation randomly lack 10–50% of the map size of the dataset using sufficient observation. The environment maps of the HouseExpo dataset using insufficient observation are generated by the first iteration of SLAM in the simulator.

Figure 4. Examples of the dataset: (a) example of the environment maps from the HouseExpo dataset using sufficient observation, (b) example of environment maps from the HouseExpo dataset using insufficient observation of HouseExpo, (c) example of environment maps from the HOME'S dataset using sufficient observation, and (d) example of environment maps from the HOME'S dataset using insufficient observation.

4.2. Network training

We train a map completion network in the generative model framework. The network architecture is based on U-net [Citation22] for the generator, similarly to pix2pix [Citation8]. For the map completion, we want to maintain some features from the encoder to the decoder, such as the structure of the part in the occupancy grid map estimated from observation and thus likely to be correct. Therefore, we use skip connections in the generator to retain the information.

We also use spectral normalization [Citation24] at the discriminator of pix2pix [Citation8] as it helps the convergence of GANs that are difficult to train. In addition, spectral normalization can improve performance if added to the generator as well [Citation30]. In this study, we also adopt spectral normalization for U-net.

The network's training time was 14.5 h for 10,000 epochs when using an Intel Core i9 7980XE CPU combined with an Nvidia Quadro GV100 GPU. The implementation was realized on Keras and TensorFlow.

4.3. Experiment 1: comparison of the inference models

We measure the accuracy of map completion using both the HouseExpo and HOME'S datasets. The methods to be compared are as follows:

MCN-SLAM (proposed): pix2pix (U-net+discriminator)

MCN-SLAM (proposed): U-net

Pseudo SLAM

Baseline

MCN-SLAM requires the skip connections of a U-net. In this study, we train pix2pix, which is a combination of U-net and discriminator loss. U-net, without discriminator loss, performs supervised learning using the training data. Pseudo SLAM is an occupancy grid map image included in the HouseExpo dataset that was obtained from simulation. Although the experimental results of Pseudo SLAM are not the results of the estimation by the actual robot, we consider the data obtained by the Pseudo SLAM simulator as the occupancy grid map estimated when a robot performs SLAM in the environment. We use the training data with the highest precision with for the estimated map as the result of the baseline method. This shows that the data output by the network does not simply mimic the training data but that the distribution of the training data is closer to the distribution of the generated data by the inference model.

In addition, to show that the proposed method can obtain the prior distribution corresponding to the domain of the training data, we evaluate the proposed method with the same index using another domain. Here, we compare using the test data of the HouseExpo dataset for the network of map completion trained using the HouseExpo dataset and the HOME'S dataset.

In this experiment, we assume that the test data of the dataset to be , and evaluate the accuracy of the map completion method by the proposed method and comparison methods. We evaluate them in terms of accuracy, precision, recall, and F-measure. In this case, we compare the correctness of an occupancy area that represents the shape of the room. Therefore, we use a binary classification: is an occupancy area or is not.

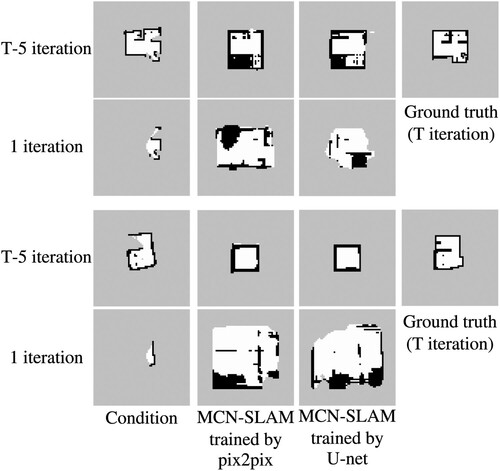

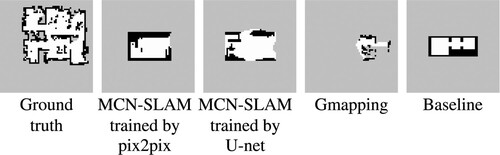

Table shows that the proposed method performed better in accuracy and F-measure than the comparison methods, and Figure shows an example of generated map images. This indicates that the network trained by the proposed method can perform map completion. In addition, comparing pix2pix and U-net, the methods without discriminator performed better to complete maps. As a result, the completed map was made according to the shape of the partially observed map extended by the acquired knowledge. Furthermore, the comparison between the proposed method and the baseline method shows that the data output by the generator network does not simply mimic the training data but that the distribution of the training data is closer to the distribution of the data generated by the inference model.

Table 2. Experiment 1: experimental results using the HouseExpo dataset as test data.

When comparing the networks trained with the HouseExpo dataset and the networks trained with the HOME'S dataset, the accuracy and F-measure performed better on networks trained using the same HouseExpo dataset as the test data. This indicates that the distribution that the map completion network can approximate is a domain-dependent prior distribution.

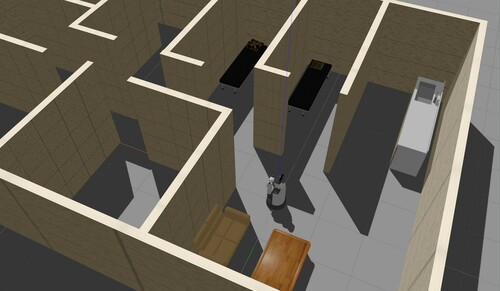

4.4. Experiment 2: map completion in simulation environment

We evaluate the performance of the proposed method that integrates the network of map completion with SLAM using a simulator environment. For the experiment, 10 environments were randomly extracted from the test data of the HouseExpo dataset and reproduced in the Gazebo [Citation31] simulator. Figure shows an example of our simulation environment.

The methods to be compared are as follows:

MCN-SLAM (proposed): pix2pix (U-net+discriminator) + Gmapping

MCN-SLAM (proposed): U-net + Gmapping

Baseline

We compared the results of these methods using only the observations that were acquired without the robot moving. We use the training data with the highest precision with for the estimated map as a result of the baseline method, same as in Section 4.3.

From the result obtained by Gmapping using sufficient observation information as , we evaluate the accuracy of the map completion methods by comparing them with

. We evaluate the methods in terms of accuracy, precision, recall, and F-measure.

Table shows the experimental results and Figure shows an example of generated map images. When comparing the map estimation methods with and without map completion, the accuracy of the previous methods without map completion was higher, but the F-measure of the proposed method with map completion was higher. Accuracy has a large effect on unsearched areas, so it is expected that the accuracy is higher in the method that does not use map completion, in which the proportion of unsearched areas is large in the estimation results. The F-measure of the proposed method using map completion was 1.3 times higher than the baseline, and it shows that map completion can be performed from limited observations.

Table 3. Experiment 2: experimental results of map completion in Gazebo simulation.

Figure 7. Experiment 2: comparison of the maps generated using pix2pix and U-net as conditions at 1 and T−5 iterations of SLAM. This comparison shows that the loss by the discriminator is useful to improve the accuracy of map completion when the observation increases and the area of map completion is narrowed. The maps of condition show the results of Gmapping.

The estimation result of the method combining pix2pix with Gmapping was lower in accuracy than using U-net with Gmapping. From this result, we consider that the discriminator loss is not effective for estimating the environment map using the observation of the initial iteration of SLAM. In order to investigate in detail the difference depending on the existence of a discriminator, the scores at T−5 iteration are shown in the bottom rows of Table . In accuracy, the difference between using U-net and pix2pix is smaller than the result of one iteration, and in F-measure, the result estimated using pix2pix is better. From this result, it is clear that the discriminator's loss is useful to improve the accuracy of map completion when the observation increases and the area of map completion is narrowed.

5. Conclusion

We proposed a map completion network-based SLAM (MCN-SLAM) method that incorporates map completion using generative models into the formulation of FastSLAM and does not require observing the entire environment. Our experiments showed that MCN-SLAM could complement an environment map estimated from few observations and improve the accuracy of the entire map estimation. The proposed method was able to transfer structural knowledge to a new environment by acquiring it from many environmental maps via the latent space.

Improving the accuracy of self-localization by the deep generative model is a challenging task. In the experiments, we did not verify the effect of the completed map on the robot's self-localization. In FastSLAM, a map is stored for each particle generated by a particle filter, and a map can be estimated for each particle using a generative model. By leaving more particles estimating the map with more accurate completion, it is possible to identify a map with incorrect completion better and remove it. Therefore, an accurately completed map will allow accurate self-localization. We would like to verify the effect of the above in the future.

In addition, Experiment 1 indicates that the distribution approximated by the map completion network is a domain-dependent prior distribution. To execute SLAM that performs map completion using the proposed method in a real environment, we can benefit from the observations made by a large number of the same robots in other similar real environments. This allows performing map estimation while performing map completion in unknown environments using the maps estimated from previously visited environments.

Finally, since our proposed MCN-SLAM method approximates the prior distribution of the environment maps, it is possible to obtain an environment map probabilistically by sampling multiple times. As a future perspective, MCN-SLAM will be extended as an approach for active exploration of positions where the map prediction is uncertain [Citation25]. This will hopefully lead to further improvement of robot autonomy.

Acknowledgments

In this paper, we used “LIFULL HOME'S Dataset” provided by LIFULL Co., Ltd. via IDR Dataset Service of National Institute of Informatics.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Yuki Katsumata

Yuki Katsumata received his M.E. degree from Ritsumeikan University, Japan, in 2020. He is currently an Engineer at Sony Group Corporation. His research interests include machine learning, intelligent robotics, and symbol emergence in robotics.

Akinori Kanechika

Akinori Kanechika received his B.E. degree from Ritsumeikan University, Japan, in 2021. His research interests include intelligent robotics and artificial intelligence.

Akira Taniguchi

Akira Taniguchi received his B.E., M.E., and Ph.D. degrees from Ritsumeikan University, Japan, in 2013, 2015, and 2018, respectively. From 2017 to 2019, he was a Research Fellow of the Japan Society for the Promotion of Science. Since 2019, he has been working as a Specially Appointed Assistant Professor at the College of Information Science and Engineering, Ritsumeikan University, Japan. His research interests include intelligent robotics, artificial intelligence, and symbol emergence in robotics. He is also a member of RSJ, JSAI, SICE, and ISCIE.

Lotfi El Hafi

Lotfi El Hafi received his M.S.E. from the Universite catholique de Louvain, Belgium, and his Ph.D. from the Nara Institute of Science and Technology, Japan, in 2013 and 2017, respectively. His thesis focused on STARE, a novel eye-tracking approach that leveraged deep learning to extract behavioral information from scenes reflected in the eyes. Today, he works as a Research Assistant Professor at the Ritsumeikan Global Innovation Research Organization, as a Specially Appointed Researcher for the Toyota HSR Community, and as a Research Advisor for Robotics Competitions for the Robotics Hub of Panasonic Corporation. His research interests include service robotics and artificial intelligence. He is also the recipient of multiple research awards and competition prizes, an active member of RSJ, JSME, and IEEE, and the President & Founder of Coarobo GK.

Yoshinobu Hagiwara

Yoshinobu Hagiwara received his Ph.D. degree from Soka University, Japan, in 2010. He was an Assistant Professor at the Department of Information Systems Science, Soka University, from 2010, a Specially Appointed Researcher at the Principles of Informatics Research Division, National Institute of Informatics, from 2013, and an Assistant Professor at the Department of Human & Computer Intelligence, Ritsumeikan University, from 2015. He is currently a Lecturer at the Department of Information Science and Engineering, Ritsumeikan University. His research interests include human-robot interaction, machine learning, intelligent robotics, and symbol emergence in robotics. He is also a member of IEEE, RSJ, IEEJ, JSAI, SICE, and IEICE.

Tadahiro Taniguchi

Tadahiro Taniguchi received his M.E. and Ph.D. degrees from Kyoto University, in 2003 and 2006, respectively. From 2005 to 2008, he was a Japan Society for the Promotion of Science Research Fellow in the same university. From 2008 to 2010, he was an Assistant Professor at the Department of Human and Computer Intelligence, Ritsumeikan University. From 2010 to 2017, he was an Associate Professor in the same department. From 2015 to 2016, he was a Visiting Associate Professor at the Department of Electrical and Electronic Engineering, Imperial College London. Since 2017, he is a Professor at the Department of Information and Engineering, Ritsumeikan University, and a Visiting General Chief Scientist at the Technology Division of Panasonic Corporation. He has been engaged in research on machine learning, emergent systems, intelligent vehicle and symbol emergence in robotics.

References

- Thrun S, Burgard W, Fox D. Probabilistic robotics (intelligent robotics and autonomous agents). Cambridge, MA: The MIT Press; 2005.

- Durrant-Whyte H, Bailey T. Simultaneous localization and mapping (SLAM): part I. IEEE Robot Autom Mag. 2006;13(2):99–110.

- Bailey T, Durrant-Whyte H. Simultaneous localization and mapping (SLAM): part II. IEEE Robot Autom Mag. 2006;13(3):108–117.

- Montemerlo M, Thrun S, Koller D, et al. Fastslam: a factored solution to the simultaneous localization and mapping problem. In: Eighteenth National Conference on Artificial Intelligence, Edmonton, Alberta, Canada; 2002. p. 593–598.

- Montemerlo M, Thrun S, Roller D, et al. Fastslam 2.0: an improved particle filtering algorithm for simultaneous localization and mapping that provably converges. In: Proceedings of the 18th International Joint Conference on Artificial Intelligence; IJCAI'03, Acapulco, Mexico; 2003. p. 1151–1156.

- Kohlbrecher S, von Stryk O, Meyer J, et al. A flexible and scalable slam system with full 3d motion estimation. In: 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan; 2011 Nov. p. 155–160.

- Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. In: Advances in Neural Information Processing Systems, Montreal, Quebec, Canada, 27; 2014. p. 2672–2680.

- Isola P, Zhu JY, Zhou T, et al. Image-to-image translation with conditional adversarial networks. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA; 2017. p. 5967–5976.

- Zheng C, Cham TJ, Cai J. Pluralistic image completion. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA; 2019.

- Salimans T, Goodfellow I, Zaremba W, et al. Improved techniques for training GANs. In: Advances in Neural Information Processing Systems, Barcelona, Spain, 29; 2016. p. 2234–2242.

- Karras T, Aila T, Laine S, et al. Progressive growing of GANs for improved quality, stability, and variation. In: International Conference on Learning Representations, Vancouver, BC, Canada; 2018.

- Karras T, Laine S, Aila T. A style-based generator architecture for generative adversarial networks. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach California; 2019 Jun.

- ROS wiki gmapping; 2019. Available from: http://wiki.ros.org/gmapping.

- Quigley M, Conley K, Gerkey BP, et al. Ros: an open-source robot operating system. In: ICRA Workshop on Open Source Software, Kobe, Japan; 2009.

- El Hafi L, Isobe S, Tabuchi Y, et al. System for augmented human-robot interaction through mixed reality and robot training by non-experts in customer service environments. Adv Robot. 2020;34(3-4):157–172.

- Taniguchi A, Hagiwara Y, Taniguchi T, et al. Spatial concept-based navigation with human speech instructions via probabilistic inference on bayesian generative model. Adv Robot. 2020;34(19):1213–1228.

- Katsumata Y, Taniguchi A, Hafi LE, et al. SpCoMapGAN: spatial concept formation-based semantic mapping with generative adversarial networks. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, USA; 2020. p. 7927–7934.

- Tateno K, Tombari F, Laina I, et al. Cnn-slam: real-time dense monocular slam with learned depth prediction. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA; 2017.

- Liu Z, von Wichert G. A generalizable knowledge framework for semantic indoor mapping based on markov logic networks and data driven mcmc. Future Gener Comput Syst. 2014;36:42–56.

- Luperto M, Amigoni F. Extracting structure of buildings using layout reconstruction. In: Intelligent Autonomous Systems 15. Baden-Baden: Springer International Publishing; 2019. p. 652–667.

- Luperto M, Arcerito V, Amigoni F. Predicting the layout of partially observed rooms from grid maps. In: 2019 International Conference on Robotics and Automation (ICRA), Montreal, Canada; 2019. p. 6898–6904.

- Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention – MICCAI 2015. Munich: Springer International Publishing; 2015. p. 234–241.

- Mirza M, Osindero S. Conditional generative adversarial nets; 2014. arXiv:1411.1784.

- Miyato T, Kataoka T, Koyama M, et al. Spectral normalization for generative adversarial networks. In: International Conference on Learning Representations, Vancouver, BC, Canada; 2018.

- Katyal K, Popek K, Paxton C, et al. Uncertainty-aware occupancy map prediction using generative networks for robot navigation. In: 2019 International Conference on Robotics and Automation (ICRA), Montreal, Canada; 2019. p. 5453–5459.

- Shrestha R, Tian FP, Feng W, et al. Learned map prediction for enhanced mobile robot exploration. In: 2019 International Conference on Robotics and Automation (ICRA), Montreal, Canada; 2019. p. 1197–1204.

- Tingguang L, Danny H, Chenming L, et al. Houseexpo: a large-scale 2d indoor layout dataset for learning-based algorithms. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Venetian Macao, Macau; 2019. p. 882.

- LIFULL Co, Ltd. LIFULL HOME'S dataset. Informatics Research Data Repository, National Institute of informatics [dataset]. 2015. DOI:https://doi.org/https://doi.org/10.32130/idr.6.0

- Song S, Yu F, Zeng A, et al. Semantic scene completion from a single depth image. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA; 2017.

- Brock A, Donahue J, Simonyan K. Large scale GAN training for high fidelity natural image synthesis. In: International Conference on Learning Representations, New Orleans, LA, USA; 2019.

- Koenig N, Howard A. Design and use paradigms for Gazebo, an open-source multi-robot simulator. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA; Vol. 3; 2004. p. 2149–2154.

Appendix. Experiment 3: map completion example in a real environment

We perform the map completion using the proposed method and baseline methods in a real environment. The experimental conditions are the same as in Section 4.4. We use a laboratory room, including an experimental space that imitates a home environment, for the experiment as shown in Figure .

Table and Figure show the experimental results. The proposed method obtained better accuracy and F-measure results than the comparison methods from the viewpoint of map completion. The environment map estimated by the proposed method complements the area where the observation was obtained. As shown in Figure , the proposed method succeeded in capturing the rough outline of the walls. However, when using a network trained with the House Expo dataset prepared by the simulator, it was difficult to capture the complex shapes of the real world because of over-fitting to the domain. Note that the sensor characteristics of the robot are also different. As a better way to successfully use the proposed method in real conditions, we are considering training the network using a map of the environment estimated in the real world. We believe that this problem can be solved in the future by deploying a large number of robots sharing the map estimated in their environment with a cloud-based system.

Figure A2. Experiment 3: example of an environment map generated in a real environment by both the proposed and comparison methods.

Table A1. Experiment 3: results of the experiment in a real environment.