Abstract

This study assessed the construct and criterion validity of the short version of the Internet Skills Scale and examined whether its four dimensions – Operational, Information Navigation, Social, and Creative skills – are influenced by a higher-order dimension of general internet skills as one second-order factor. In 2018, a face-to-face survey comprising the 20-item Internet Skills Scale and 22 other items related to digital inclusion was conducted in a sample of 814 internet users in Slovenia. The results of exploratory and confirmatory factor analyses, as well as other multivariate methods, showed that the Internet Skills Scale is characterized by high to adequate convergent and divergent validity. Acceptable criterion validity was observed for Operational and Information Navigation skills. In terms of measurement invariance, the data supported configural and metric invariance, whereas scalar invariance was not fully confirmed, suggesting that older adults’ lower scores on the Creative skills items were not related to lower levels of internet skills in the same way as they were among younger individuals. Finally, the results provided original evidence of the Internet Skills Scale as a second-order construct, meaning that a single summative Internet Skills Scale score could be created as an adequate measure of an individual’s internet skills.

Introduction

Two decades of research into the differences in how people access and use information and communication technologies (ICTs) have established internet skills as a key factor for digital inclusion (van Dijk and van Deursen Citation2014). DiMaggio et al. (Citation2004, 378) define internet skillsFootnote1 as “the capacity to respond pragmatically and intuitively to challenges and opportunities in a manner that exploits the internet’s potential and avoids frustration.” In general, younger individuals with higher socioeconomic status and more prior experience with the internet exhibit relatively high levels of internet skills (Hargittai Citation2002; Hargittai and Dobransky Citation2017; Litt Citation2013; Scheerder, van Deursen, and van Dijk Citation2017; van Dijk and van Deursen Citation2014). In turn, disparities in internet skills lead to disparities in online engagement and in the outcomes of such engagement, both online and offline (Dodel and Mesch Citation2018; Hargittai and Dobransky Citation2017; Hargittai and Litt Citation2011; Junco and Cotten Citation2012; Leung and Lee Citation2012; van Deursen et al. Citation2017). In effect, disparities in internet skills are at the core of the second-level digital divide (Hargittai Citation2002).

Since internet skills are critical for digital inclusion (Mossberger, Tolbert, and Stansbury Citation2003; Ragnedda and Muschert Citation2013; Rainie and Wellman Citation2012; Robinson et al. Citation2015), assessment of levels of skills development across various social groups in the general population is becoming increasingly important. Such assessment can help identify specific skills vital in technology-rich social environments, guide concrete policy interventions aimed at increasing digital and/or social inclusion, and enable measurement and comparison of internet skills in a cross-cultural context, among other things (Litt Citation2013). Assessments of internet skills levels for the population at large are successful, however, only when valid and easy-to-use measurement instruments are employed. Yet in the face of many theoretical and empirical challenges, survey instruments have tended to be incomplete, over-simplified, and ambiguous (Litt Citation2013; van Deursen, Helsper, and Eynon Citation2014; see section “Measuring internet skills”).

Working to overcome limitations of existing survey measures of internet skills, van Deursen, Helsper, and Eynon (Citation2016) developed long (35 items) and short (23 items) forms of a self-assessment survey instrument, the Internet Skills Scale (ISS). To date, the long form of the ISS has been validated in large online survey studies in the Netherlands (NL) and the United Kingdom (UK) (van Deursen, Helsper, and Eynon Citation2016), as well as in Italy (Surian and Sciandra Citation2019), showing promising results with regard to measurement validity (see section “Internet Skills Scale”). However, the short version of the ISS has been validated only in the Netherlands using web survey data.Footnote2 To strengthen its application in cross-cultural research, van Deursen, Helsper, and Eynon (Citation2014) pointed to the need for further studies to determine its validity, at both exploratory and confirmatory levels, in countries with lower levels of internet penetration and utilization. Following van Deursen, Helsper, and Eynon (Citation2014), this study tests the short version of the ISS in the context of Slovenia, a country that has lower levels of internet penetration and utilization, using a large representative sample of survey data collected face-to-face. Moreover, Slovenia ranks near the middle of the European Union (EU) in terms of internet use. For example, in Slovenia 66% of the population uses the internet (almost) every day, which is very near the EU-27 average of 67% (European Commission Citation2019a). Also, Slovenia ranked 14th among 27 member states on the Human Capital dimension of the Digital Economy and Society Index, which measures “internet user skills” and “advanced skills and development” (European Commission Citation2019b).

Background

Internet skills and digital inequalities

Digital inequalities research developed on an elaboration of the digital divide (NTIA Citation1995) when researchers recognized that “individuals, organizations, and countries may be differentiated by online experiences and abilities beyond core technical access” (Hargittai and Hsieh Citation2013, 129). Different theoretical models of digital inequalities have been proposed to explain the links between social and digital inclusion/exclusion (DiMaggio et al. Citation2004; Helsper Citation2012; van Deursen et al. Citation2017; van Dijk Citation2005). All these models take internet skills to be one of the core mediating factors between offline social exclusion and online digital exclusion. For example, Helsper (Citation2012) hypothesized that exclusion in the offline field of economic resources (e.g., educational attainment) influences exclusion in the corresponding digital field (e.g., engagement in information and learning activities) mediated by an individual’s level of internet skills, access, and attitudes. van Dijk (Citation2005) understood the process of appropriation of the internet as: (1) successive – motivational access is followed by material, skills and usage access and (2) recursive – this process recurs wholly or partially with every new technology. Moreover, van Deursen et al. (Citation2017) hypothesized a compound and sequential relationship between internet skills, uses, and outcomes. Compound digital exclusion occurs when a person lacks digital resources of the same type (e.g., has low levels of different types of skills). Sequential digital exclusion occurs when “a person’s digital exclusion of one type (e.g., lack of skills) leads to exclusion of a different type (e.g., low levels of internet use)” (van Deursen et al. Citation2017, 456).

Substantial research has indicated a sequential relationship between internet skills and online engagement (Blank Citation2013; Correa Citation2016; Correa, Pavez, and Contreras Citation2020; Hargittai Citation2010; Helsper and Eynon Citation2013; Reisdorf, Petrovčič, and Grošelj Citation2020; van Deursen et al. Citation2017; van Deursen and van Dijk Citation2015a). Several recent studies have also confirmed the compound relationship between different types of internet skills (van Deursen et al. Citation2017; van Deursen and van Dijk Citation2015a; van Deursen, van Dijk, and Peters Citation2011).

As ICTs have evolved, individuals’ internet skills have become even more important across different population groups “given the increasing number of types of know-how that are required to make the best use of digital media” (Hargittai and Micheli Citation2019, 109). Consequently, several authors proposed to differentiate between various types of skills (for reviews see Litt Citation2013; van Deursen, Helsper, and Eynon Citation2014), stressing the importance of distinguishing between medium-related (i.e., skills necessary to the use of the internet as a medium) and content-related (i.e., skills necessary to engage with the content provided by the internet) internet skills.

In comparison to gaps in access to ICTs, gaps in internet skills are believed to be more profound and lasting, because they may cause structural inequality between people resulting in “an information elite who commands high levels of internet skills and as a result a more diverse use of the internet” (van Deursen and van Dijk Citation2015b, 789). Therefore, digital inequalities research has also focused on determinants of internet skills. Most studies have examined demographic and socioeconomic determinants of skills (e.g., Scheerder, van Deursen, and van Dijk Citation2017). Age has been identified as one of the most important factors, and its relationship with skills has been quite widely studied because of several popular misconceptions (e.g., Hargittai and Dobransky Citation2017). For example, young people are often believed to be “digital natives,” that is, inherently better at using the internet than older people who are believed to be “digital immigrants” (Prensky Citation2001). However, the relationship between age and internet proficiency is not as straightforward. For example, several studies showed that there are considerable differences in levels of internet skills among young people (Correa Citation2010; Cotten, Davison, et al. Citation2014; Hargittai Citation2010; Livingstone and Helsper Citation2007) as well as among older adults (Cotten, Ford, et al. Citation2014; Hargittai and Dobransky Citation2017; Hargittai, Piper, and Morris Citation2019; Xie Citation2011). Furthermore, the association between age and skills is different for different types of skills. On performance tests, older internet users performed more poorly than younger internet users regarding medium-related skills (operational and formal skills), whereas age did not contribute significantly to the level of content-related skills (information and strategic skills; van Deursen and van Dijk Citation2011). 
In contrast, using a frequency-based survey instrument, van Deursen and van Dijk (Citation2015a, Citation2015b) found that both medium- and content-related types of skills were negatively associated with age. In a recent study using the ISS, van Deursen et al. (Citation2017) found no association of age with Information Navigation or Social skills, whereas the association of age with Operational and Creative skills was negative.

Measuring internet skills

Despite extensive research on internet skills, their measurement remains a significant challenge. Specifically, three principal measurement approaches have been applied to date: performance or observation measures, self-assessment or self-reported measures, and combined or unique measures (for a review see Litt Citation2013). Although performance-based measures and observational techniques are believed to show the most internal validity (van Deursen, Helsper, and Eynon Citation2014), their use is less widespread (examples include Dodge, Husain, and Duke Citation2011; Hargittai Citation2002; van Deursen and van Dijk Citation2011), as they “pose challenges due to their time-consuming nature, added expense of equipment and personnel, and difficulty in cross-comparison and replication” (Litt, 2013, 619). Consequently, the most widely adopted approach to measure internet skills is self-assessment survey scales on which respondents evaluate their own skill levels, which may lead to overrating or underrating. However, an important advantage of this approach is its effectiveness in terms of scalability, population coverage, processing, and costs (Litt Citation2013; van Deursen, Helsper, and Eynon Citation2014). Some scholars proposed unidimensional skills scales that sum respondents’ scores on individual items (Hargittai and Hsieh Citation2012; Livingstone and Helsper Citation2010; Potosky Citation2007; Zimic Citation2009). Others developed multidimensional skills models with different dimensions corresponding to different types of skills needed in online engagement (Bunz Citation2004; Helsper and Eynon Citation2013; Spitzberg Citation2006; van Deursen and van Dijk Citation2015b).

None of the previously proposed self-assessment survey measures of internet skills have been widely accepted. In part, this is because of their focus on specific aspects of skills, different measurement characteristics, their validation in specific (sub-)populations of internet users such as students, and/or lack of measurement validation in a cross-national perspective (Litt Citation2013). With the goal of overcoming some of these challenges, van Deursen, Helsper, and Eynon (Citation2014) built on existing knowledge and developed the ISS, a set of self-assessment survey measures of internet skills.

Internet Skills Scale

The development and validation of the ISS (van Deursen, Helsper, and Eynon Citation2014, Citation2016) had four stages: (1) a literature review, (2) cognitive interviews in the NL and the UK, (3) small-scale online surveys of random samples of internet users in the NL and the UK, and (4) a large-scale online survey in the NL using a sample representative of the Dutch internet user population. On the results of the small-scale surveys (UK: N = 324; NL: N = 306), the authors performed exploratory factor analysis (EFA) and confirmatory factor analysis (CFA), which resulted in a five-factor solution representing five types of skills: (1) Operational skills – basic technical skills required to use the internet. (2) Information Navigation skills – information searching skills, including the ability to find, select, and evaluate sources of information on the internet. (3) Social skills – online communication and interactions skills, including selecting, evaluating, and acting on contacts online. (4) Creative skills – skills to create content of acceptable quality to be published or shared with others online. (5) Mobile skills – skills for using mobile devices proficiently.

To validate the proposed five-factor solution of the ISS, van Deursen, Helsper, and Eynon (Citation2014, Citation2016) performed tests for discriminant validity and factorial invariance (configural, metric, scalar, and uniqueness invariance). Discriminant validity was established for all factor pairs, meaning that all the factors can be identified as separate constructs. Configural and metric invariance suggested that the proposed factor structures fit similarly in the NL and the UK. For individual factors, measurement invariance comparison indicated excellent to moderate invariance on all indicators, except for Social skills. van Deursen, Helsper, and Eynon (Citation2014) proposed a long form of the ISS consisting of 35 items and a short form consisting of 23 items, in which the Operational, Information Navigation, Social, and Creative dimensions are each measured with five items and the Mobile dimension with three items. A CFA performed on the large-scale survey data (Dutch internet user population, N = 1,107) using the short version of the ISS showed high reliability and good fit. In testing whether the scales have similar characteristics independent of the context or the population they are applied in, the authors: (1) confirmed expected differences in skill levels across different sociodemographic groups, (2) demonstrated adequate convergent and discriminant validity, and (3) confirmed the scale’s consistency. In 2019, Surian and Sciandra (Citation2019) replicated the validation of the long ISS on a sample of opt-in online panelists (N = 1,067) from Italy. The CFA results confirmed the five-dimensional structure of internet skills and demonstrated adequate internal consistency, as well as convergent and discriminant validity for all five dimensions.

Table A2. Means, standard deviations of items measuring four types of internet usage.

Table 1. An overview of the characteristics of previous validation studies of the Internet Skills Scale (ISS).

Testing of the ISS in the Slovenian context

The present large-scale validation of the short version of the ISS in Slovenia builds upon previous work in three ways. First, we collected data using random probability sampling and computer-assisted personal interviewing (CAPI). This is an important difference between the present study and previous studies (i.e., van Deursen, Helsper, and Eynon Citation2014; Surian and Sciandra Citation2019), which collected data using self-administered questionnaires in an online panel. By reviewing comparison studies focused on the online population only, Callegaro et al. (Citation2014) found that “there is more and more evidence that respondents joining online panels of nonprobability samples are much heavier internet users, and more into technology than the corresponding internet population” (47), which poses a particular problem in countries with relatively low internet use rates. By using CAPI and random sampling, we attempted to reduce potential non-coverage survey bias (Callegaro, Lozar Manfreda, and Vehovar Citation2015), ensuring that internet users with potentially very different levels of engagement with ICT had the same probability of being surveyed. This is very important in survey scale testing, because an instrument should perform equally well in different segments of a population with potentially diverse levels of various internet skills.

Second, we extend the work performed by van Deursen, Helsper, and Eynon (Citation2014) by testing whether the ISS dimensions have the same structure and meaning for the groups of younger and older internet users (i.e., testing their measurement invariance). Our focus on age stems from the ongoing debate about differences in internet skills among younger and older generations (see section “Internet skills and digital inequalities”). The requirement of measurement invariance, i.e., ensuring that internet users from different generations perceive and understand survey items measuring internet skills similarly, is expected to become even more important in the future when scholars focus specifically on the internet skills of older adults (Hargittai and Dobransky Citation2017) or aim to study age-related differences in internet skills. Although age 65 has been commonly applied as the cutoff in research on older adults, Hunsaker and Hargittai (Citation2018, 3948) suggested that using a lower age threshold “might better capture the differentiation in findings across older adults, and better pinpoint disparity.” Accordingly, we decided to apply a lower age cutoff, defining younger internet users as those aged 18‒49 years and older internet users as those aged 50 years or older. The selected age threshold is also in line with Damodaran and Sandhu’s (Citation2016) study, which reported that ICT learning interventions for the older population in the UK often defined older adults as people aged 50+. Similarly, the EU-funded research project SHARE (Survey of Health, Ageing and Retirement in Europe)Footnote3 focuses on individuals aged 50 or older to better understand the aging European population.

Third, we focus on validation of the core four dimensions of the ISS (Operational, Information Navigation, Social, and Creative skills) and work with 20 items, excluding the dimension Mobile skills.Footnote4 This decision was made to retain the full sample of internet users in the analyses (not all Slovenian internet users are mobile internet users; SURS. Citation2017a, Citation2017b). Equally important, the four core types of skills are conceptually separate and are not linked to a specific device, unlike Mobile skills (van Deursen et al. Citation2017). In effect, the four core types are universally applicable to all internet users regardless of the characteristics of their internet access. Further, this strategy permitted us to assess the criterion validity of each dimension of the short version of the ISS with its corresponding type of internet use as well as to test whether conceptually internet skills are a second-order construct with four sub-dimensions as indicated by the compound digital exclusion hypothesis (van Deursen et al. Citation2017), which suggests that individuals who lack one digital resource also lack other digital resources of the same type.

Research questions

We sought to address four research questions:

RQ1: Does the factor structure of the short version of the ISS in Slovenia resemble the factor structure of the original short form of the ISS, and if so, are the convergent validity and divergent validity of the short form adequate?

While RQ1 replicates some of the validation performed by van Deursen, Helsper, and Eynon (Citation2014, Citation2016), RQ2–RQ4 go beyond the existing research validating the short version of the ISS. In line with established scale measurement procedures, we first examine the factor structure of the short version of the ISS and test its construct validity.

Following van Dijk’s (Citation2005) model of successive access (material, skills and usage) and van Deursen et al.’s (Citation2017) hypothesis of sequential digital exclusion, we evaluate the criterion validity of the scores of the short version of the ISS by assessing their correlation with the intensity of corresponding types of internet use. While this approach, which considers internet uses as “performed internet abilities”, has already been applied to validate other internet skills measures (see section “Internet skills and digital inequalities”; Helsper and Eynon Citation2013; Litt Citation2013), it has not yet been used on the short ISS. Therefore:

RQ2: What is the criterion validity of each of the four dimensions on the short version of the ISS?

We sought to determine whether the dimensions of the short version of the ISS have the same structure and meaning for different groups of respondents. If they do, we can be confident that the scale scores are comparable across various population groups (Büchi Citation2016). To this end, we examine three types of measurement invariance: configural, metric, and scalar. Configural invariance provides evidence that the same number of latent variables with the same pattern of factor loadings, intercepts, and measurement errors underlies a set of indicators in each group. Metric invariance requires that the pattern and the value of the factor loadings in the measurement model (i.e., the strength of the relationship between the latent variable(s) and the observed variables) be statistically equal across populations. Scalar invariance, which additionally requires equal item intercepts across groups, supports cross-group comparisons of manifest (or latent) variable means on the latent variable of interest (Putnick and Bornstein Citation2016). In digital inequalities research, for instance, metric invariance is necessary for unbiased comparisons of effects or correlations between groups (e.g., defined by age, gender, or education), with skills as an independent or dependent variable. Even if the item intercepts varied (i.e., there is no scalar invariance), estimates such as regression weights could be meaningfully compared and offer insights into differential effects of or on skills between groups. Scalar invariance, then, is required if the interest is in comparing latent means, i.e., the absolute level of skills between groups. Although van Deursen, Helsper, and Eynon (Citation2014, Citation2016) showed that the ISS is an invariant measure (taking into account configural, metric, and scalar invariance) in a cross-country comparison between the NL and the UK, they also reported many statistically significant differences in the correlation matrices between the scores of the short version of the ISS when comparing different age groups (Citation2014, 33).
The present study extends their work by evaluating the measurement invariance of the short version of the ISS between younger and older internet users.

RQ3: Does the short version of the ISS demonstrate measurement equivalence between younger and older internet users?
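The three levels of invariance described above can be written compactly in standard multi-group CFA notation. The symbols below are assumptions introduced for illustration and do not appear in the original text: x_g is the vector of ISS items in group g, τ_g the item intercepts, Λ_g the factor loadings, ξ_g the latent skill factors with means κ_g, and δ_g the measurement errors.

```latex
\begin{align*}
\mathbf{x}_g &= \boldsymbol{\tau}_g + \boldsymbol{\Lambda}_g \boldsymbol{\xi}_g + \boldsymbol{\delta}_g,
  \qquad g \in \{\text{younger}, \text{older}\} \\[4pt]
\text{Configural:}\quad & \boldsymbol{\Lambda}_{\text{younger}} \text{ and } \boldsymbol{\Lambda}_{\text{older}}
  \text{ have the same pattern of free and fixed loadings} \\
\text{Metric:}\quad & \boldsymbol{\Lambda}_{\text{younger}} = \boldsymbol{\Lambda}_{\text{older}} \\
\text{Scalar:}\quad & \boldsymbol{\Lambda}_{\text{younger}} = \boldsymbol{\Lambda}_{\text{older}}
  \ \text{and}\ \boldsymbol{\tau}_{\text{younger}} = \boldsymbol{\tau}_{\text{older}} \\[4pt]
\text{Under scalar invariance:}\quad & E(\mathbf{x}_g) = \boldsymbol{\tau} + \boldsymbol{\Lambda}\boldsymbol{\kappa}_g
\end{align*}
```

Read this way, the last line shows why scalar invariance matters for mean comparisons: only when loadings and intercepts are shared can a difference in observed item means between age groups be attributed to a difference in the latent means κ_g.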

The short version of the ISS was designed as a first-order construct with multiple indicators for each dimension. Although there is considerable value in distinguishing different dimensions of internet skills, van Deursen et al. (Citation2017) hypothesized that individuals’ levels of different types of internet skills are determined by their compoundness, allowing individuals to achieve desired goals and function well in an online environment when all levels of different types of skills are high. Another analytical advantage of second-order constructs lies in the ability to combine multiple dimensions into one summative score. Some types of comparative analyses and benchmark research on digital inequalities would certainly benefit from a single skill score (Dolničar, Prevodnik, and Vehovar Citation2014). However, calculating a single summative score would be possible only if the short version of the ISS were found to be a valid second-order construct (Brown Citation2015). Therefore:

RQ4: Does the short version of the ISS measure internet skills as a second-order construct?
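As a rough illustration of what a second-order structure implies, the model can be sketched in generic CFA notation. The notation is an assumption made here for exposition, not the authors' specification: η collects the four first-order factors (Operational, Information Navigation, Social, and Creative skills), ξ is a single general internet skills factor, and γ holds the second-order loadings.

```latex
\begin{align*}
\mathbf{x} &= \boldsymbol{\tau} + \boldsymbol{\Lambda}\boldsymbol{\eta} + \boldsymbol{\varepsilon}
  && \text{(items load on the four first-order factors)} \\
\boldsymbol{\eta} &= \boldsymbol{\gamma}\,\xi + \boldsymbol{\zeta}
  && \text{(first-order factors load on one general factor)}
\end{align*}
```

In this sketch, the correlations among the four skill types are accounted for by their shared dependence on ξ, which is the property that would justify a single summative score.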

Methodology

Procedure

Data used in this study were gathered from the 2018 wave of the Slovenian Public Opinion Survey conducted as part of the International Social Survey ProgrammeFootnote5 (ISSP) in Slovenia. The fielding period was March through June 2018. Standard CAPI fielding procedures for respondent solicitation (e.g., mailing covering letters, multiple visits to a respondent’s address in case of non-response) were applied. The interviews were conducted in respondents’ homes and lasted about an hour. No monetary or non-monetary incentives were provided to participants.

The initial sample included 2,000 residents of Slovenia, aged 18 years or older, who were selected from the Central Population Register (CPR). Two-stage random sampling was used with stratification by type of settlement (i.e., urban, rural) and the statistical region of residence. The number of individuals sampled within each stratum was proportional to the size of the target population in that stratum in the CPR. The survey was completed by 1,047 respondents, a response rate (RR1) of 57% after non-eligible cases were excluded (AAPOR Citation2016). To ensure the sample was representative, its composition was compared with population data. The sociodemographic characteristics of the sample were shown to closely mirror the characteristics of the general population when compared with data retrieved from the CPR according to gender, age, region, and type of settlement. Accordingly, no post-stratification techniques were applied to correct the sample structure. The full sample characteristics are summarized in Table 2.
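The proportional allocation step mentioned above can be illustrated with a minimal sketch. The stratum names and population counts are hypothetical placeholders, not CPR figures, and the largest-remainder rounding rule is an assumption added so that the allocations sum exactly to the target sample size. The sketch does not cover the two-stage selection itself.

```python
def proportional_allocation(stratum_sizes, total_sample):
    """Allocate a sample across strata in proportion to stratum population size.

    Rounding uses the largest-remainder rule (an assumption for this sketch)
    so that the allocations add up exactly to total_sample.
    """
    total_pop = sum(stratum_sizes.values())
    exact = {name: total_sample * size / total_pop
             for name, size in stratum_sizes.items()}
    alloc = {name: int(share) for name, share in exact.items()}
    leftover = total_sample - sum(alloc.values())
    # Give the remaining units to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc


# Hypothetical strata (region x settlement type) with made-up population counts.
strata = {
    "region_A_urban": 300_000,
    "region_A_rural": 200_000,
    "region_B_urban": 150_000,
    "region_B_rural": 350_000,
}
allocation = proportional_allocation(strata, 2000)
```

With these placeholder counts, each stratum receives a share of the 2,000 sampled persons equal to its share of the population.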

Table 2. Sample characteristics.

Questionnaire

Internet users responded to 20 items on the short version of the ISS. In line with the original ISS, all items were measured on a five-point scale, ranging from 1 (“Not at all true of me”) to 5 (“Very true of me”), and included an “I do not understand what you mean by that” option, along with “Don’t know” and “Declined to answer” options (see also Table A.1 in Appendix).

To assess criterion validity, measures of four types of internet use were developed. Internet use types were measured using a 16-item instrument, adapted from the Oxford Internet Surveys (Dutton et al. Citation2013) and the Slovenian Statistical Office (SURS. Citation2017a). The items mapped respondents’ specific types of uses in the Operational, Information Navigation, Social, and Creative domains (see Table A2 in Appendix). Items were scored on a six-point frequency scale ranging from 1 (“Never”) to 6 (“Several times a day”). For each of the four use types, a composite index score was created by summing the observed values of the items in each type and dividing the total by the number of items in the corresponding type. The internal consistency of the index scores was not computed, because the multi-item indexes refer to formative constructs that are defined by an aggregation of relatively independent indicators (Diamantopoulos, Riefler, and Roth Citation2008).
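A minimal sketch of the composite index computation described above follows. The responses and the number of items per use type are hypothetical, since the text states only that the instrument has 16 items across four use types. The handling of missing answers is not specified in the text, so the sketch assumes complete responses.

```python
def composite_index(item_values):
    """Sum the observed item values and divide by the number of items."""
    return sum(item_values) / len(item_values)


# Hypothetical answers of one respondent on the 1-6 frequency scale
# (1 = "Never", 6 = "Several times a day"); four items per type is assumed.
responses = {
    "Operational": [6, 5, 4, 5],
    "Information Navigation": [4, 4, 3, 5],
    "Social": [2, 3, 1, 2],
    "Creative": [1, 1, 2, 1],
}

# One index score per use type, on the same 1-6 metric as the items.
use_scores = {use_type: composite_index(values)
              for use_type, values in responses.items()}
```

Dividing by the number of items keeps each index on the original 1 to 6 response metric, which makes the four indexes directly comparable even if the use types had differed in item count.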

All instruments reported in this study were translated into the Slovenian language using the TRAPD (Translation, Review, Adjudication, Pretesting, and Documentation) method (Harkness, Van de Vijver, and Mohler Citation2003). Two independent translations were first produced by research team members and later reviewed by a third researcher, who also checked for any potential translation annotations (e.g., for ambiguous concepts) and compared the reviewed translation with the original questionnaire. Before the translation was approved, the reviewed version was also translated back into English by two research assistants. Potential deviations from the original meanings of scale items in the back-translations were discussed among the research team members to obtain an improved version. The approved translation was then tested in a pilot study (N ∼ 30) and corrected based on the feedback from the pretest. Our goal was to use exact translations of the original ISS items. However, the process of translating and pretesting the questionnaire showed that four items had to be modified slightly to make them fully understandable in the Slovenian language and context.Footnote6

Data analysis

In addition to descriptive and bivariate statistics that were used to identify potential violations at the item level (e.g., outliers, non-normal distributions, paired correlations), we combined EFA and CFA in a sequence to address the research questions, because EFA is a very useful first step in the measurement model specification before the data are cross-validated with CFA (Gerbing and Hamilton Citation1996). The primary aim of EFA was to identify whether the underlying factor structure of the items on the ISS as found in the Slovenian context was similar to that found in the NL and the UK. EFA was also used to assess how well the items were linked to the underlying factors when no restrictions were imposed on the factor model, and to assess the severity of any potential divergence from the original factor structure. Subsequently, CFA was used to specify the ISS first-order measurement model, to estimate its convergent and discriminant validity, and to assess its measurement invariance. Criterion validity of the ISS was evaluated with a set of multiple linear regression models. Additionally, CFA was run to verify whether the ISS could be considered a second-order construct. Descriptives, bivariate statistics, EFA, and multiple regressions were performed in IBM SPSS Statistics 24.0 (IBM. Citation2016), while CFA was carried out in IBM SPSS Amos 24 (Arbuckle Citation2016).

Results

Exploratory factor analysis

Before EFA was run, the data were screened for univariate outliers and missing data. Apart from missing values on single items due to refusals to answer and “Don’t know” or “I do not understand what you mean by that” answers, no out-of-range values were identified.Footnote7 The minimum sample size requirement for factor analysis was satisfied: the final sample of 683 cases (after listwise deletion) provided a ratio of more than 29 cases per variable.

Initially, the factorability of the 20 items on the ISS was examined by inspecting the zero-order Pearson’s correlations between all pairs of items (see the Appendix). The size of the correlations (with absolute values between .20 and .80; Field Citation2013) for the majority of pairs suggested reasonable factorability without indication of multicollinearity. Additionally, the diagonal elements of the anti-image correlation matrix were all greater than .80. Therefore, we ran EFA on all items. The maximum likelihood (ML) technique was used with a varimax rotation of the factor loading matrix, without imposing restrictions on the number of factors because: (1) the primary purpose was to validate the instrument to be used on other data sets, (2) such an approach can yield better guidance for how to re-specify a model to obtain better fit in CFA, and (3) we aimed to preserve analytical consistency with ML-based CFA (Field Citation2013).

The initial factor solution yielded four factors with an eigenvalue greater than 1.0, explaining 36.0% (Operational skills), 10.1% (Creative skills), 8.7% (Information Navigation skills), and 7.9% (Social skills) of the variance, respectively. The initial factor solution replicated the ISS factorial structure in terms of the number of factors, but the analysis of the factor loadings and communalities revealed that three items had to be removed because they did not contribute to a simple factor structure. Notably, the items “I know how to change who I share content with (e.g., friends, friends of friends or public)” and “I know how to remove friends from my contact lists” did not meet the minimum criterion of a primary factor loading greater than or equal to .4 (Guadagnoli and Velicer Citation1988) on the Social skills factor and cross-loaded on other factors. The item “I find it hard to decide what the best keywords are to use for online searches” had to be removed because its extracted communality was less than .2 (Child Citation2006). To check whether the removal of these three items improved the factorial structure, an ML factor analysis with varimax rotation was rerun on the remaining 17 items, again without restrictions on the number of factors. The results confirmed the four-factor structure, with 67.0% of the variance explained by the four factors and an average extracted communality of .58. Moreover, all items in this solution had primary factor loadings greater than or equal to .47. The few identified cross-loadings had a ratio considerably below the .75 cutoff. The factor loading matrix is presented in Table 3.
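The item-retention rules described above can be illustrated with a minimal sketch. It assumes that the cross-loading ratio is the second-largest absolute loading divided by the largest one, which the text does not spell out; the function name `screen_items` and all loadings and communalities below are hypothetical and are not the values obtained in this study.

```python
def screen_items(loadings, communalities, min_primary=0.40,
                 min_communality=0.20, max_cross_ratio=0.75):
    """Return {item: [reasons]} for items that violate a retention rule.

    loadings: {item: [loading on factor 1, factor 2, ...]}
    communalities: {item: extracted communality}
    """
    flagged = {}
    for item, row in loadings.items():
        magnitudes = sorted((abs(value) for value in row), reverse=True)
        primary, secondary = magnitudes[0], magnitudes[1]
        reasons = []
        if primary < min_primary:
            reasons.append("primary loading below cutoff")
        if communalities[item] < min_communality:
            reasons.append("communality below cutoff")
        # Assumed definition: secondary / primary loading (absolute values).
        if primary > 0 and secondary / primary >= max_cross_ratio:
            reasons.append("cross-loading ratio at or above cutoff")
        if reasons:
            flagged[item] = reasons
    return flagged


# Hypothetical loadings on four factors (NOT the values from the study).
example_loadings = {
    "item_a": [0.82, 0.10, 0.05, 0.12],  # clean primary loading
    "item_b": [0.35, 0.31, 0.08, 0.02],  # weak primary, strong cross-loading
    "item_c": [0.41, 0.05, 0.03, 0.02],  # adequate loading, low communality
}
example_communalities = {"item_a": 0.70, "item_b": 0.22, "item_c": 0.17}

flagged = screen_items(example_loadings, example_communalities)
for item, reasons in flagged.items():
    print(item, "->", "; ".join(reasons))
```

In this illustration, `item_b` and `item_c` would be candidates for removal, while `item_a` would be retained.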

Table 3. Factor loadings and communalities in initial and final factor solutions of the short Internet Skills Scale.

Confirmatory factor analysis

First-order factor model of the ISS

Measurement model

Based on the EFA results, a first-order four-factor model was specified. The measurement model contained no double-loading indicators, and all measurement errors were presumed to be uncorrelated. The factors were permitted to correlate, based on previous evidence of the compound nature of internet skills (van Deursen et al. Citation2017). As the data had been screened in EFA to ensure their suitability for the ML estimator,Footnote8 they were entered in CFA directly. The covariance matrix was used as the model input.

The overall goodness-of-fit indices suggested that the four-factor model fitted the data adequately (Hu and Bentler Citation1999): χ2(113) = 398.870, p < .001, RMSEA = .061 (90% CI = .054–.067), CFI = .951. All freely estimated unstandardized parameters were statistically significant at a p value of less than .001 (). Factor loading estimates revealed that the indicators were strongly related to their purported factors (range of loadings = .50–.96). Moreover, estimates from the four-factor solution indicated a moderate relationship between the four dimensions, the lowest correlation between Social and Creative skills (θ = .296) and the strongest between Operational and Information Navigation skills (θ = .538) ().

Table 4. Factor loadings, convergent validity and reliability of the short Internet Skills Scale.

Table 5. Discriminant validity of factors of the short Internet Skills Scale.

Convergent and discriminant validity

The internal consistency of the dimensions on the ISS was estimated with reliability and convergent validity (). The reliability of the scales was evaluated with composite reliability (CR) and Cronbach alpha (α) scores. The CR estimates for the scores on the four factors within the ISS were good, with all values ≥ .75 (Gefen, Straub, and Boudreau Citation2000). Likewise, the Cronbach alpha scores revealed acceptable to high internal consistency for all four factors, ranging from α = .74 for Information Navigation skills to α = .92 for Operational skills. On the other hand, convergent validity, which refers to the expectation that measures intended to capture related traits should be correlated (Campbell and Fiske Citation1959), was not confirmed for Information Navigation and Creative skills because the value of the average variance extracted (AVE) was < .50 for both factors. Nevertheless, as Fornell and Larcker (Citation1981) suggested that when CR is > .60, AVE values > .40 can be accepted, the convergent validity of all four factors was considered adequate.
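To make the decision rule above concrete, the following sketch computes AVE and CR from standardized loadings using the usual formulas (AVE as the mean squared loading; CR as the squared sum of loadings divided by that quantity plus the summed error variances). The loadings are hypothetical and only illustrate a factor with AVE between .40 and .50; they are not the estimates from this study.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


def convergent_validity(loadings):
    """Apply AVE >= .50, or the relaxed rule (CR > .60 and AVE > .40)."""
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    if ave >= 0.50:
        verdict = "confirmed"
    elif ave > 0.40 and cr > 0.60:
        verdict = "acceptable under the relaxed criterion"
    else:
        verdict = "not supported"
    return ave, cr, verdict


# Hypothetical standardized loadings for one four-item factor.
hypothetical_loadings = [0.70, 0.60, 0.65, 0.60]
ave, cr, verdict = convergent_validity(hypothetical_loadings)
print(f"AVE = {ave:.3f}, CR = {cr:.3f}: {verdict}")
```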

Such findings allowed us to proceed with the estimation of discriminant validity, which refers to the expectation that measures of unrelated traits should not be correlated (Campbell and Fiske Citation1959) and was assessed with a comparison of the value of the shared variance (estimated with the squared correlation θ2) and the AVE for each pair of constructs. For every pair of constructs (columns A and B, ), the AVEs for both factors had to be larger than θ2 between them (Fornell and Larcker Citation1981, 45–46). This criterion was met for all pairs of factors, supporting the notion that each of the theoretically suggested dimensions of the ISS showed adequate discriminant validity ().
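The pairwise comparison described above can be sketched as follows. The two factor correlations are those reported in the text (.296 and .538); the AVE values are hypothetical placeholders chosen only to be consistent with the description (below .50 for Information Navigation and Creative skills), not the estimates from this study.

```python
def fornell_larcker(ave_by_factor, correlations):
    """For each factor pair, check that both AVEs exceed the squared correlation."""
    results = {}
    for (factor_a, factor_b), r in correlations.items():
        shared_variance = r ** 2
        results[(factor_a, factor_b)] = (
            ave_by_factor[factor_a] > shared_variance
            and ave_by_factor[factor_b] > shared_variance
        )
    return results


# Hypothetical AVE values (assumptions for illustration only).
hypothetical_ave = {
    "Operational": 0.70,
    "Information Navigation": 0.45,
    "Social": 0.55,
    "Creative": 0.45,
}

# Factor correlations reported in the text.
reported_correlations = {
    ("Social", "Creative"): 0.296,
    ("Operational", "Information Navigation"): 0.538,
}

checks = fornell_larcker(hypothetical_ave, reported_correlations)
for pair, passed in checks.items():
    shared = reported_correlations[pair] ** 2
    print(pair, f"shared variance = {shared:.3f}", "pass" if passed else "fail")
```

Even the strongest reported correlation (.538) implies a shared variance of only about .29, so any AVE above that value satisfies the criterion for that pair.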

Criterion validity

Criterion validity compares the relationship between a test and another “gold standard” measure or test of that same construct that has established psychometric properties and is known to be valid (Kline Citation1986). In this study, the concurrent criterion validity was evaluated, i.e., we assessed the capacity of the four ISS subscales to predict the performance on the corresponding four types of internet use at a given time. Notably, the criterion validity was confirmed if the selected ISS subscale was: (1) the strongest predictor of the corresponding type of internet use, and (2) its correlation with the corresponding type of use was stronger than its associations with other types of internet use. If only condition 1 or condition 2 was fulfilled, the criterion validity of the selected ISS subscale was considered partially confirmed. Before the data were entered in the linear regression model, the scale scores for all ISS factors were calculated by averaging across corresponding items, while scale scores for four types of internet use were created as reported in the data analysis section and are shown in (in Appendix).

Table 6 shows that all four regression models explained an acceptable percentage of variance in different types of internet use. All F-tests with a p value < .01 were considered statistically significant. Criterion validity was fully confirmed for Operational and Creative skills. Notably, Operational skills was the strongest significant positive predictor (β = .339, p < .01) of operational use, while Creative skills was the strongest significant positive predictor (β = .401, p < .01) of creative use; both thus showed at least moderate criterion validity.Footnote9 In addition, the values of their respective betas were the largest when compared to their betas for other types of internet use. With respect to Information Navigation skills, criterion validity was only partially confirmed. Among all types of internet use, information navigation use was the most strongly positively related to Information Navigation skills (β = .113, p < .01). However, Operational skills were the strongest positive predictor of information navigation use (β = .252, p < .01), suggesting that they were a more important antecedent of information navigation use than Information Navigation skills. Finally, as Social skills neither showed a significant correlation (β = .025, ns) with social use nor were its strongest predictor [Social skills were significantly correlated only with creative use (β = −.107, p < .01)], its criterion validity was not acceptable.
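The two-condition decision rule for criterion validity can be expressed as a short sketch. It assumes that "strongest" means the largest positive standardized beta and that the matching beta must itself be significant; both readings are our interpretation of the rule. Only the six betas quoted in the text are real; every value marked "hypothetical" is a placeholder added so that the example runs.

```python
# betas[use][skill] = (standardized beta, significant at p < .01)
betas = {
    "operational use": {
        "Operational": (0.339, True),              # reported
        "Information Navigation": (0.050, False),  # hypothetical
        "Social": (0.010, False),                  # hypothetical
        "Creative": (0.120, True),                 # hypothetical
    },
    "information navigation use": {
        "Operational": (0.252, True),              # reported
        "Information Navigation": (0.113, True),   # reported
        "Social": (0.020, False),                  # hypothetical
        "Creative": (0.080, False),                # hypothetical
    },
    "social use": {
        "Operational": (0.200, True),              # hypothetical
        "Information Navigation": (0.030, False),  # hypothetical
        "Social": (0.025, False),                  # reported
        "Creative": (0.150, True),                 # hypothetical
    },
    "creative use": {
        "Operational": (0.100, True),              # hypothetical
        "Information Navigation": (0.040, False),  # hypothetical
        "Social": (-0.107, True),                  # reported
        "Creative": (0.401, True),                 # reported
    },
}

matching_use = {
    "Operational": "operational use",
    "Information Navigation": "information navigation use",
    "Social": "social use",
    "Creative": "creative use",
}


def assess_criterion_validity(skill, betas, matching_use):
    """Classify a subscale as confirmed, partially confirmed, or not confirmed."""
    use = matching_use[skill]
    _, significant = betas[use][skill]
    # Condition 1: the subscale is the strongest predictor of its matching use.
    strongest_skill = max(betas[use], key=lambda s: betas[use][s][0])
    condition_1 = significant and strongest_skill == skill
    # Condition 2: the subscale relates most strongly to its matching use.
    strongest_use = max(betas, key=lambda u: betas[u][skill][0])
    condition_2 = significant and strongest_use == use
    if condition_1 and condition_2:
        return "confirmed"
    if condition_1 or condition_2:
        return "partially confirmed"
    return "not confirmed"


verdicts = {s: assess_criterion_validity(s, betas, matching_use) for s in matching_use}
for skill, verdict in verdicts.items():
    print(f"{skill}: {verdict}")
```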

Table 6. Summary of multiple regression analyses for criterion validity of the short Internet Skills Scale.

Measurement invariance

To assess the metric equivalence of the ISS between the groups of respondents aged less than 50 years and 50 years and older, measurement invariance was estimated (Putnick and Bornstein Citation2016). Measurement invariance suggests that a construct has the same structure or meaning for different groups. Thus, the measure can be meaningfully tested or constructed across two or more groups of subjects. In this study, we evaluated measurement invariance in three steps: configural, metric, and scalar.

In particular, multi-group CFA models were fitted to the data for both groups simultaneously, in a sequence in which a test of configural invariance was followed by increasingly restrictive tests of metric and scalar invariance. Following Byrne (Citation2010), at all three steps, model fit was evaluated based on the CFI, with > .900 as the cutoff value. To test the assumption of each type of invariance, we used ΔCFI < .010 as the cutoff value (Putnick and Bornstein Citation2016).

As shown in Table 7, the CFI value (> .900) indicated acceptable model fit for the ISS, supporting configural invariance between the two age groups. Moreover, the metric invariance hypothesis was supported, with a ΔCFI below the typical .010 cutoff value. For scalar invariance, the ΔCFI value (.022) exceeded the cutoff, suggesting that the scalar invariance hypothesis was not supported. Therefore, the scalar invariance hypothesis was tested for the intercept of each of the 17 items on the short version of the ISS to identify the source of non-invariance. Interestingly, ΔCFI was > .01 for all five items measuring Creative skills (see Table 8), suggesting that their intercepts were non-invariant.
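The stepwise decision logic can be summarized in a brief sketch that compares each model with the configural baseline. The CFI values below are hypothetical; they were chosen only so that the scalar step reproduces the reported ΔCFI of .022 and the metric step stays below the .010 cutoff.

```python
def invariance_steps(cfi_by_model, min_cfi=0.900, max_delta=0.010):
    """Compare each model with the configural baseline using CFI and delta-CFI."""
    baseline = cfi_by_model["configural"]
    report = {}
    for model, cfi in cfi_by_model.items():
        delta = round(baseline - cfi, 3)  # drop in CFI relative to the baseline
        report[model] = {
            "cfi": cfi,
            "delta_cfi": delta,
            "supported": cfi > min_cfi and delta < max_delta,
        }
    return report


# Hypothetical CFI values (assumptions for illustration only).
hypothetical_cfi = {"configural": 0.940, "metric": 0.935, "scalar": 0.918}

report = invariance_steps(hypothetical_cfi)
for model, row in report.items():
    status = "supported" if row["supported"] else "not supported"
    print(f"{model}: CFI = {row['cfi']:.3f}, dCFI = {row['delta_cfi']:.3f}, {status}")
```

The same function could be reused for the item-level follow-up by passing one constrained model per intercept against the same baseline.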

Table 7. Measurement invariance: tested models, fit indexes, and comparison against baseline model (i.e., Configural model).

Table 8. Scalar invariance analysis and comparison of unconstrained intercepts for Creative skills factor of the short Internet Skills Scale.

The ISS as a second-order construct

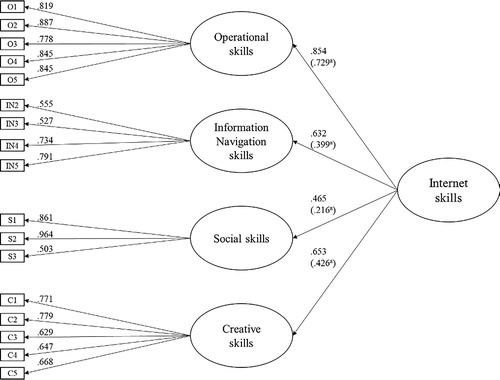

The results of the first-order CFA showed adequate construct validity of the ISS, while indicating the viability of a measurement model with four first-level factors and one second-level factor because of the pattern of correlations (θ matrix) among the four first-order factors (). To answer RQ4, a CFA second-order measurement model of the ISS was specified (see ) and analyzed, using a three-stage approach devised by Brown (Citation2015).

Figure 1. Second-order factor model of the short Internet Skills Scale with four first-order factors with standardized factor loadings.

Notes. N = 683. aExplained first-order factor variance.

In the first stage, we found that the fit of the second-order solution was adequate: χ2(115) = 401.409, p < .001, SRMR = .051, RMSEA = .060 (90% CI = .054–.067), CFI = .951. Next, the second-order model was found to fit equally well when compared to the first-order model: χ2diff(2) = 2.538, p = .281.Footnote10 Thus, it could be concluded that the model provides a good account of the correlations among the first-order factors. Finally, Figure 1 shows that each of the four first-order factors loaded adequately on the second-order factor (range of loadings = .465–.854) and that the latter accounted for 22–73% of the variance in the first-order factors (e.g., Operational skills: 1 − .271 = .729). Because the second-order factor model does not result in a significant decrease in model fit, shows adequate factor loadings, and explains enough of the first-order factors’ variance, we can conclude that there is consistent evidence that the short version of the ISS is a second-order construct. This also means that a single summative score for each respondent could be calculated based on all items included on the short version of the ISS (e.g., by averaging or summing the scores of the items).
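The nested-model comparison and the explained-variance figures above can be checked with a few lines of arithmetic. The sketch below uses only the fit statistics and loadings reported in the text; the helper `chi2_sf_even_df` is our own illustrative function, relies on the closed-form chi-square survival function, and is therefore valid only for even degrees of freedom.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variate; even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form used here requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


# Fit statistics reported in the text.
chi2_first_order, df_first_order = 398.870, 113
chi2_second_order, df_second_order = 401.409, 115

chi2_diff = chi2_second_order - chi2_first_order
df_diff = df_second_order - df_first_order
p_value = chi2_sf_even_df(chi2_diff, df_diff)
print(f"chi2 diff({df_diff}) = {chi2_diff:.3f}, p = {p_value:.3f}")

# Variance in a first-order factor explained by the second-order factor
# equals the squared standardized loading (reported range: .465 to .854).
explained = {loading: loading ** 2 for loading in (0.465, 0.854)}
for loading, share in explained.items():
    print(f"loading {loading:.3f} -> explained variance {share:.3f}")
```

With two degrees of freedom the survival function reduces to exp(−x/2), which gives a p value of about .281 and squared loadings of about .22 and .73, in line with the values reported above.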

Discussion

Drawing on a national population survey, this study investigated the measurement characteristics of the short version of the ISS in Slovenia, a country that is medial in the EU in terms of internet use. It is the first study to focus specifically on the short version of the ISS and to establish its validity using survey data collected face-to-face. Analytically, this study is the first to evaluate the criterion validity of the ISS and to establish the ISS as a second-order construct ().

RQ1 asked whether the ISS factor structure observed in the Slovenian context resembles the original factor structure. In general, the answer is affirmative; however, a detailed analysis of factor loadings and communalities showed that three items did not contribute to a simple factor structure. Notably, two of them relate to the Social skill dimension: “I know how to change who I share content with (e.g., friends, friends of friends or public),” and “I know how to remove friends from my contact lists.” They were eliminated because of low factor loadings and high cross-loadings on other dimensions of the ISS. Because the two items contain language usually connected to social network sites (SNSs) (i.e., “friends”, “friends of friends”, “share content”), it could be that respondents who do not use SNSs, or use them very little, understood these items differently from avid users. These two items also ask about specific and technically demanding actions. The remaining three items that formed the Social skill dimension are general and ask about an individual’s general orientations toward online social behavior (know when/which information to share, appropriate behavior online). In fact, the Social skill dimension was also the most problematic in the original study (van Deursen, Helsper, and Eynon Citation2014). Clearly, further conceptual and empirical research is needed in the area of Social skills, which are vital in social and communicative activities taking place online. The third item that had to be removed was part of the Information Navigation skill dimension: “I find it hard to decide what the best keywords are to use for online searches.” Accordingly, three of the four remaining items refer to the “navigation” part of the dimension and only one to its “information” part. This is not ideal, and future studies should consider including and validating the three Information Navigation items that van Deursen, Helsper, and Eynon (Citation2016) proposed in the long form of the ISS. Taken together, the final factor solution suggests that the short version of the ISS consists of 17 items, where Operational and Creative skills are each measured with five items, Information Navigation with four items, and Social skills with three items (see ).

RQ2 addressed the criterion validity of the ISS. It was fully confirmed for Operational and Creative skills, partially confirmed for Information Navigation skills, and rejected for Social skills. Again, the dimension of Social skills had the poorest performance on the criterion validity test. This is another indication that this dimension needs further investigation and validation in future research. However, in a comprehensive study of internet skills, uses, and outcomes, van Deursen et al. (Citation2017) found a direct positive relationship between social skills and social uses in informal networks, but not social uses in formal and political networks. Similarly, Helsper and Eynon (Citation2013) found a strong relationship between social skills and social uses. Both studies used somewhat different social engagement indicators. Lastly, the observed range of standardized regression coefficients suggests that for all four dimensions of the short version of the ISS, the similarity between the test and the criterion measure could be stronger. Although some scholars demonstrated high correlations between self-report measures of internet skills and internet uses as indicators of “performed internet abilities” (Litt Citation2013, 620), this finding was somewhat expected. Namely, the frequency and breadth of internet use may not always reflect the level of internet proficiency (Helsper and Eynon Citation2013; Litt Citation2013). Improvement of the substance and wording of the ISS items with concept elicitation techniques therefore remains an important avenue for future research.

Third, with respect to RQ3, younger and older internet users in Slovenia conceptualized the construct of internet skills similarly, with the same number of factors, item–factor association, and structural pattern. Furthermore, younger and older internet users responded to items on the short version of the ISS in the same way, with no disagreement about how the constructs were manifested. Although configural and metric invariances were established for all four dimensions, scalar invariance was not confirmed for Creative skills. This result indicates that younger and older internet users who have the same score for the Creative skills dimension may not obtain the same score on the corresponding scale indicators. Notably, when younger and older Slovenian internet users have the same overall value on a Creative skill dimension, the younger ones tend to report higher average agreement with “I know how to create something new from existing online images, music or video” [C1] and “I would feel confident putting video content I have created online” [C5]. Older internet users, on average, tend to agree more with “I know how to make basic changes to the content that others have produced” [C2], “I know how to design a website” [C3], and “I know which different types of licences apply to online content” [C4]. There are several possible explanations for this finding. First, these differences could result from different understandings of specific items among younger and older internet users. The item [C1] denotes the so-called “remixing” typical of digital culture. A possible explanation could be that this item resonates differently with older internet users who rate their ability to remix lower than younger users do. Likewise, creating and uploading a video may be considered an essential skill for younger internet users, who were among first adopters of smartphones and video services on social media. 
However, this line of argument was reversed for items [C2], [C3], and [C4], for which older internet users reported higher values. This result could be a consequence of older adults perceiving scant knowledge as substantial knowledge. The line of argument could be as follows: When you have more experience with a certain task, you are more aware of what it takes to perform the task (efficiently). Thus, younger internet users who are more familiar with online content creation (compared to older internet users) may be more demanding of themselves in terms of being aware of different types of licenses, creating a website, or making changes to content that others have produced. Although we are not aware of any evidence-based work that would support this rationale, the direct implication of this finding is that caution is advisable when comparing the (average) level of internet skills among different age groups, particularly when data sets are based on general population samples, which include a higher percentage of less savvy (older) internet users in comparison with (non-probability) online panels (Callegaro et al. Citation2014). In this context, further development of the ISS could include additional cognitive interviews with younger and older internet users that would inform possible modifications of creative skill items, as well as the validation of scalar invariance with other sociodemographic variables, such as education and occupation, as they may be related to how respondents interpret the ISS items.

Finally, RQ4 tested whether a single summative score can be calculated based on the short version of the ISS. Because the first-order CFA solution fitted well, showing adequate convergent and discriminant validity of the factors, and the magnitude and pattern of correlations among factors in the first-order solution were appropriate, we were able to fit the second-order factor solution. The results showed that “internet skills” can be defined (and measured) as a valid second-order construct. On the one hand, this result has high conceptual value, because it confirms van Deursen et al.’s (Citation2017) hypothesis of the compound nature of internet skills, which suggests that four conceptually distinct types of skills pertain to the latent structure of a single higher-order construct. On the other hand, this result also has an applied value, because it justifies the use of a single “internet skills” score based on the short version of the ISS. Such a score is especially useful in empirical studies where internet skills are not the topic of primary interest but serve as an important control variable. However, further research would be helpful to test different approaches to combining the ISS items into a single score (e.g., averages, factor scores) and to determine the most appropriate one.

Overall, the results indicate a stable four-dimensional structure of the short version of the ISS that corresponds to the results reported by van Deursen, Helsper, and Eynon (Citation2014, Citation2016) and Surian and Sciandra (Citation2019). This means that the short version of the ISS works as expected in the context of an average digitally advanced country using a different survey mode from that used in previous studies. This is an important contribution to research in the field of digital inclusion and skills in methodological terms. It suggests that the short version of the ISS can provide valid assessments of individuals’ levels of internet skills in countries that are differently digitally developed using different survey modes. As researchers of digital inequalities in general, and internet skills in particular, have called for the development of reliable, updated, and nuanced measures of internet skills that are not context-dependent and thus, could be used in diverse samples (Litt Citation2013; van Deursen, Helsper, and Eynon Citation2016), the present study provides valuable evidence that the short version of the ISS could be established as such a survey instrument for measuring levels of internet skills in a general population in different contexts.

Apart from the methodological contribution, the results of this study also contribute to the ongoing theoretical discussions of digital inequalities where internet skills have been established as one of the most important determinants. The results of criterion validity assessment provide evidence for further testing of the sequential digital exclusion hypothesis (van Deursen et al. Citation2017). We showed that higher levels of Operational, Creative, and Information Navigation skills are related to levels of engagement with corresponding internet use types. Such a relationship was not confirmed for Social skills and uses, which calls for further investigation. Theoretically, social uses of the internet may be broader than other types of use (Helsper Citation2012). Thus, social uses may require a whole spectrum of internet skills, and different types of skills may be needed for different utilizations of digital social platforms. Therefore, for testing the sequential digital exclusion hypothesis in the social domain further conceptual exploration of Social skills seems necessary. On the psychometric level, as mentioned above, Social skills were the poorest-performing dimension of the ISS, and items on this dimension need additional validation.

As the internet is not only no longer a new technology but also always evolving with new internet-enabled devices and applications being constantly developed, the recursive relationship between skills and uses also needs further investigation. Although such an investigation would require a longitudinal data set, the results of the study, which further confirmed the robustness of the ISS, indicate that the ISS could serve as a medium- and content-related skills assessment inventory in such a longitudinal study.

Finally, the analysis of measurement invariance reinforced the ongoing debate about age and internet proficiency (Hargittai and Dobransky Citation2017). Focusing on the measurement issues related to assessing internet skills among different generations, the present study results reveal that it is very important that measurement equivalence of employed measurement models is achieved. Finding such an assessment inventory (e.g., the ISS for Operational, Information Navigation, and Social skills) is the first step in examining important questions such as how internet skills may depend on actual age, period, or cohort effects.

The findings are set against the limitations of this study. One shortcoming is that we worked with and validated the four-dimensional short version of the ISS and not the long ISS including all 35 items. Testing the full instrument would allow for validating the original selection of items that constitute the short version of the ISS. Having additional items would be especially helpful in determining the factor structure of the Social and Information Navigation skills dimensions, where a total of three items were omitted in the present measurement model. Another limitation concerns the test of criterion validity, which involved examining correlations between different types of skills and corresponding types of internet use. It would be better if we could include other criterion variables in the validation, such as access to and breadth of utilization of internet-enabled devices. In addition, variables other than internet use, such as outcomes of online engagement (see van Deursen et al. Citation2017), could be employed in testing the criterion validity of the ISS. Alternatively, the criterion validation could be carried out by comparing the scores of the short version of the ISS with performance or observation measures of internet skills (see Litt Citation2013). Moreover, it would be helpful if tests of invariance were performed for other socioeconomic variables that have been proposed in digital inequalities research, such as education, employment, and occupational status. Such additional testing of the measurement equivalence of the ISS would warrant its use in research comparing levels of internet skills across different sociodemographic groups, which could yield important policy implications.

Conclusion

In conclusion, this investigation presented evidence of adequate construct validity of the scores on the short version of the ISS in a country that is medial in the EU in terms of internet use, using face-to-face survey methodology. Although small modifications were required in the ISS inventory to enable the factorability of Social and Information Navigation skills, the scores on the short version of the ISS are consistent with previous research in exhibiting reasonable levels of convergent and discriminant validity across all scale dimensions. Moreover, this study has extended research on the measurement quality of the short version of the ISS by evaluating its criterion validity and measurement invariance. Referring to the latter, this study showed that the short version of the ISS can be applied equally well among younger and older internet users. However, if researchers are interested in comparing average scale scores for Creative skills between the two generations, caution is needed, because the scalar equivalence of this dimension has not been established. Likewise, mixed findings were observed in terms of criterion validity. Unlike Operational and Creative skills, which were found to have acceptable validity, criterion validity was only partly supported for Information Navigation skills and not confirmed for Social skills. Therefore, further evidence is called for based on other criterion variables and on samples from other countries and cultures. There is also a need to further evaluate the short version of the ISS as a second-order construct. As the present study has provided convincing evidence for a single summative score of internet skills, it is hoped that these results will facilitate research and yield further empirical evidence in this area. This would allow wider inclusion of the ISS in general social surveys and, therefore, enable more conceptually comprehensive and methodologically rigorous research on internet skills and digital inequalities in general.

Declarations of interest

None.

Acknowledgements

The authors would like to thank the anonymous reviewers for their helpful and constructive feedback. We are grateful to Jošt Bartol for his assistance in this project.

Additional information

Funding

Notes

1 Also known as digital skills; see Scheerder, van Deursen, and van Dijk (Citation2017).

2 The short version of the ISS was also applied in a small-scale non-representative study in Cuba, where the authors tested the internal consistency of the scale using Cronbach’s alpha (van Deursen and Solis Andrade Citation2018).

4 We also ran EFA and CFA on the subsample of mobile internet users employing the 23-item ISS. The results were comparable to the results presented in the paper and are available as an online supplement.

5 More information about the ISSP is available at http://www.issp.org.

6 Items that were modified are marked with an asterisk (*) in Table A.1 in the Appendix.

7 Following van Deursen, Helsper, and Eynon (Citation2014), such responses were defined as non-valid values. Accordingly, in multivariate models, units with such responses were excluded from the analyses.

8 Alternatively, unweighted least squares (ULS) or diagonally weighted least squares (DWLS) methods could be used to estimate parameters. They could be based on polychoric correlations appropriate for ordinal data as opposed to the conventional covariance matrix treating the indicators as continuous (Diamantopoulos and Siguaw Citation2013). The decision to use ML estimators was based on the EFA results and to keep the methods and estimates comparable with the previous research on the measurement characteristics of the ISS.

9 Innes and Straker (Citation1999) described criterion validity correlation coefficients of ≥ .75 as good and those that fall in the midrange of .50 to .75 as moderate, whereas estimates of ≤ .50 are deemed poor. However, in practice, criterion validity coefficients of ≥ .60 are considered high, while those between .30 and .60 are considered moderate to good (Jang, Chern, and Lin Citation2009).

10 Brown (Citation2015, 296) noted that “a higher-order solution cannot improve goodness of fit relative to the first-order solution, where the factors are freely intercorrelated.”

References

- AAPOR. 2016. Standard definitions: Final dispositions of case codes and outcome rates for surveys. 9th ed. Washington, DC: American Association for Public Opinion Research. Accessed October 25, 2020. http://www.aapor.org/AAPOR_Main/media/publications/Standard-Definitions20169theditionfinal.pdf.

- Arbuckle, J. L. 2016. Amos (version number 26.0) [computer program]. Chicago, IL: IBM SPSS.

- Blank, G. 2013. Who creates content? Stratification and content creation on the internet. Information, Communication & Society 16 (4):590–612. doi: 10.1080/1369118X.2013.777758.

- Brown, T. A. 2015. Confirmatory factor analysis for applied research. 2nd ed. New York: Guilford Press.

- Büchi, M. 2016. Measurement invariance in comparative internet use research. Studies in Communication Sciences 16 (1):61–9. doi: 10.1016/j.scoms.2016.03.003.

- Bunz, U. 2004. The Computer-Email-Web (CEW) Fluency Scale: Development and validation. International Journal of Human-Computer Interaction 17 (4):479–506. doi: 10.1207/s15327590ijhc1704_3.

- Byrne, B. M. 2010. Structural equation modeling with AMOS: Basic concepts, applications, and programming. 2nd ed. New York: Routledge.

- Callegaro, M., K. Lozar Manfreda, and V. Vehovar. 2015. Web survey methodology. Los Angeles, CA: Sage.

- Callegaro, M., A. Villar, D. Yeager, and J. A. Krosnick. 2014. A critical review of studies investigating the quality of data obtained with online panels based on probability and nonprobability samples. In Online panel research: A data quality perspective. Vol. 2, eds. M. Callegaro, R. Baker, J. Bethlehem, A. S. Göritz, J. A. Krosnick, and P. J. Lavrakas, 23–55. New York: John Wiley.

- Campbell, D. T., and D. W. Fiske. 1959. Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin 56 (2):81–105. doi: 10.1037/h0046016.

- Child, D. 2006. The essentials of factor analysis. 3rd ed. New York: Continuum.

- Correa, T. 2010. The participation divide among “online experts”: Experience, skills and psychological factors as predictors of college students’ web content creation. Journal of Computer-Mediated Communication 16 (1):71–92. doi: 10.1111/j.1083-6101.2010.01532.x.

- Correa, T. 2016. Digital skills and social media use: How internet skills are related to different types of Facebook use among “digital natives”. Information, Communication & Society 19 (8):1095–107. doi: 10.1080/1369118X.2015.1084023.

- Correa, T., I. Pavez, and J. Contreras. 2020. Digital inclusion through mobile phones?: A comparison between mobile-only and computer users in internet access, skills and use. Information, Communication & Society 23 (7):1074–91. doi: 10.1080/1369118X.2018.1555270.

- Cotten, S. R., E. L. Davison, D. B. Shank, and B. W. Ward. 2014. Gradations of disappearing digital divides among racially diverse middle school students. In Communication and information technologies annual (Studies in Media and Communication, Vol. 8), eds. L. Robinson, S. R. Cotten, and J. Schulz, 25–54. Bingley, UK: Emerald.

- Cotten, S. R., G. Ford, S. Ford, and T. M. Hale. 2014. Internet use and depression among retired older adults in the United States: A longitudinal analysis. The Journals of Gerontology. Series B, Psychological Sciences and Social Sciences 69 (5):763–71. doi: 10.1093/geronb/gbu018.

- Damodaran, L., and J. Sandhu. 2016. The role of a social context for ICT learning and support in reducing digital inequalities for older ICT users. International Journal of Learning Technology 11 (2):156–20. doi: 10.1504/IJLT.2016.077520.

- Diamantopoulos, A., P. Riefler, and K. P. Roth. 2008. Advancing formative measurement models. Journal of Business Research 61 (12):1203–18. doi: 10.1016/j.jbusres.2008.01.009.

- Diamantopoulos, A., and J. A. Siguaw. 2013. Introducing LISREL: A guide for the uninitiated. Thousand Oaks, CA: Sage.

- DiMaggio, P., E. Hargittai, C. Celeste, and S. Shafer. 2004. Digital inequality: From unequal access to differentiated use. In Social inequality, ed. K. Neckerman, 355–400. New York: Russell Sage Foundation.

- Dodel, M., and G. Mesch. 2018. Inequality in digital skills and the adoption of online safety behaviors. Information, Communication & Society 21 (5):712–28. doi: 10.1080/1369118X.2018.1428652.

- Dodge, A. M., N. Husain, and N. K. Duke. 2011. Connected kids? K-2 children’s use and understanding of the internet. Language Arts 89 (2):86–98.

- Dolničar, V., K. Prevodnik, and V. Vehovar. 2014. Measuring the dynamics of information societies: Empowering stakeholders amid the digital divide. The Information Society 30 (3):212–28. doi: 10.1080/01972243.2014.896695.

- Dutton, W. H., and G. Blank, with D. Grošelj. 2013. Cultures of the internet: The internet in Britain (Oxford Internet Survey 2013 Report). Oxford, UK: Oxford Internet Institute, University of Oxford. Accessed November 1, 2020. http://oxis.oii.ox.ac.uk/wp-content/uploads/2014/11/OxIS-2013.pdf.

- European Commission. 2019a. Standard Eurobarometer 92: Media use in the European Union. Accessed November 1, 2020. https://ec.europa.eu/commfrontoffice/publicopinionmobile/index.cfm/Survey/getSurveyDetail/surveyKy/2255.

- European Commission. 2019b. Human capital: Digital inclusion and skills (Digital Economy and Society Index Report 2019). Accessed November 1, 2020. https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=59976.

- Field, A. 2013. Discovering statistics using SPSS. 4th ed. London: Sage.

- Fornell, C., and D. F. Larcker. 1981. Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research 18 (1):39–50. doi: 10.1177/002224378101800104.

- Gefen, D., D. Straub, and M.-C. Boudreau. 2000. Structural equation modeling and regression: Guidelines for research practice. Communications of the Association for Information Systems 4 (7):1–77. doi: 10.17705/1CAIS.00407.

- Gerbing, D., and J. G. Hamilton. 1996. Viability of exploratory factor analysis as a precursor to confirmatory factor analysis. Structural Equation Modeling: A Multidisciplinary Journal 3 (1):62–72. doi: 10.1080/10705519609540030.

- Guadagnoli, E., and W. F. Velicer. 1988. Relation of sample size to the stability of component patterns. Psychological Bulletin 103 (2):265–75. doi: 10.1037/0033-2909.103.2.265.

- Hargittai, E. 2002. Second-level digital divide: Differences in people’s online skills. First Monday 7 (4). Accessed November 15, 2020. https://firstmonday.org/ojs/index.php/fm/article/view/942. doi: 10.5210/fm.v7i4.942.

- Hargittai, E. 2010. Digital na(t)ives? Variation in internet skills and uses among members of the “net generation”. Sociological Inquiry 80 (1):92–113. doi: 10.1111/j.1475-682X.2009.00317.x.

- Hargittai, E., and K. Dobransky. 2017. Old dogs, new clicks: Digital inequality. Canadian Journal of Communication 42 (2):195–212.

- Hargittai, E., and Y. P. Hsieh. 2012. Succinct survey measures of web-use skills. Social Science Computer Review 30 (1):95–107. doi: 10.1177/0894439310397146.

- Hargittai, E., and Y. P. Hsieh. 2013. Digital inequality. In The Oxford handbook of internet studies, ed. W. H. Dutton, 129–50. Oxford, UK: Oxford University Press.

- Hargittai, E., and E. Litt. 2011. The tweet smell of celebrity success: Explaining variation in Twitter adoption among a diverse group of young adults. New Media & Society 13 (5):824–42. doi: 10.1177/1461444811405805.

- Hargittai, E., and M. Micheli. 2019. Internet skills and why they matter. In Society and the internet: How networks of information and communication are changing our lives, eds. M. Graham and W. H. Dutton, 109–24. Oxford, UK: Oxford University Press.

- Hargittai, E., A. M. Piper, and M. R. Morris. 2019. From internet access to internet skills: Digital inequality among older adults. Universal Access in the Information Society 18 (4):881–90. doi: 10.1007/s10209-018-0617-5.

- Harkness, J. A., F. J. R. Van de Vijver, and P. P. Mohler. 2003. Cross-cultural survey methods. Hoboken, NJ: Wiley-Interscience.

- Helsper, E. J. 2012. A corresponding fields model for the links between social and digital exclusion. Communication Theory 22 (4):403–26. doi: 10.1111/j.1468-2885.2012.01416.x.

- Helsper, E. J., and R. Eynon. 2013. Distinct skill pathways to digital engagement. European Journal of Communication 28 (6):696–713. doi: 10.1177/0267323113499113.

- Hu, L., and P. M. Bentler. 1999. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal 6 (1):1–55. doi: 10.1080/10705519909540118.

- Hunsaker, A., and E. Hargittai. 2018. A review of internet use among older adults. New Media & Society 20 (10):3937–54. doi: 10.1177/1461444818787348.

- IBM. 2016. SPSS statistics for Windows (version number 24.0) [computer program]. Armonk, NY: IBM.

- Innes, E., and L. Straker. 1999. Validity of work-related assessments. Work (Reading, Mass.) 13 (2):125–52.

- Jang, Y., J. Chern, and K. Lin. 2009. Validity of the Loewenstein occupational therapy cognitive assessment in people with intellectual disabilities. The American Journal of Occupational Therapy: Official Publication of the American Occupational Therapy Association 63 (4):414–22. doi: 10.5014/ajot.63.4.414.

- Junco, R., and S. R. Cotten. 2012. No A 4 U: The relationship between multitasking and academic performance. Computers & Education 59 (2):505–14. doi: 10.1016/j.compedu.2011.12.023.

- Kline, P. 1986. A handbook of test construction: Introduction to psychometric design. London: Methuen.

- Leung, L., and P. S. N. Lee. 2012. Impact of internet literacy, internet addiction symptoms, and internet activities on academic performance. Social Science Computer Review 30 (4):403–18. doi: 10.1177/0894439311435217.

- Litt, E. 2013. Measuring users’ internet skills: A review of past assessments and a look toward the future. New Media & Society 15 (4):612–30. doi: 10.1177/1461444813475424.

- Livingstone, S., and E. J. Helsper. 2007. Gradations in digital inclusion: Children, young people and the digital divide. New Media & Society 9 (4):671–96. doi: 10.1177/1461444807080335.

- Livingstone, S., and E. J. Helsper. 2010. Balancing opportunities and risks in teenagers’ use of the internet: The role of online skills and internet self-efficacy. New Media & Society 12 (2):309–29. doi: 10.1177/1461444809342697.

- Mossberger, K., C. J. Tolbert, and M. Stansbury. 2003. Virtual inequality: Beyond the digital divide. Washington, DC: Georgetown University Press.

- NTIA. 1995. Falling through the net: A survey of the “have nots” in rural and urban America. Washington, DC: National Telecommunications and Information Administration, U.S. Department of Commerce.

- Potosky, D. 2007. The internet knowledge (iKnow) measure. Computers in Human Behavior 23 (6):2760–77. doi: 10.1016/j.chb.2006.05.003.

- Prensky, M. 2001. Digital natives, digital immigrants. On the Horizon 9 (5):1–6. doi: 10.1108/10748120110424816.

- Putnick, D. L., and M. H. Bornstein. 2016. Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review 41:71–90. doi: 10.1016/j.dr.2016.06.004.

- Ragnedda, M., and G. W. Muschert. 2013. Introduction. In The digital divide: The internet and social inequality in international perspective, eds. M. Ragnedda and G. W. Muschert, 1–14. Abingdon, UK: Routledge.

- Rainie, L., and B. Wellman. 2012. Networked: The new social operating system. Cambridge, MA: MIT Press.

- Reisdorf, B. C., A. Petrovčič, and D. Grošelj. 2020. Going online on behalf of someone else: Characteristics of internet users who act as proxy users. New Media & Society. doi: 10.1177/1461444820928051.

- Robinson, L., S. R. Cotten, H. Ono, A. Quan-Haase, G. Mesch, W. Chen, J. Schulz, T. M. Hale, and M. J. Stern. 2015. Digital inequalities and why they matter. Information, Communication & Society 18 (5):569–82. doi: 10.1080/1369118X.2015.1012532.

- Scheerder, A., A. J. A. M. van Deursen, and J. A. G. M. van Dijk. 2017. Determinants of internet skills, use and outcomes: A systematic review of the second- and third-level digital divide. Telematics and Informatics 34 (8):1607–24. doi: 10.1016/j.tele.2017.07.007.

- Spitzberg, B. H. 2006. Preliminary development of a model and measure of computer-mediated communication (CMC) competence. Journal of Computer-Mediated Communication 11 (2):629–66. doi: 10.1111/j.1083-6101.2006.00030.x.

- Surian, A., and A. Sciandra. 2019. Digital divide: Addressing Internet skills. Educational implications in the validation of a scale. Research in Learning Technology 27:1–12. doi: 10.25304/rlt.v27.2155.

- SURS. 2017a. Prvo četrtletje 2017: Interneta doslej še ni nikoli uporabilo 18% oseb, starih 16–74 let. Accessed November 15, 2020. https://www.stat.si/StatWeb/News/Index/6998.

- SURS. 2017b. Uporaba pametnih telefonov in e-veščine uporabnikov interneta. Accessed November 15, 2020. https://www.stat.si/StatWeb/News/Index/7115.

- UNESCO. 2012. International standard classification of education ISCED 2011. Montreal: UNESCO Institute for Statistics. Accessed November 15, 2020. http://uis.unesco.org/sites/default/files/documents/international-standard-classification-of-education-isced-2011-en.pdf.

- van Deursen, A. J. A. M., E. J. Helsper, and R. Eynon. 2014. Measuring digital skills: From digital skills to tangible outcomes. Oxford, UK: Oxford Internet Institute.

- van Deursen, A. J. A. M., E. J. Helsper, and R. Eynon. 2016. Development and validation of the Internet Skills Scale (ISS). Information, Communication & Society 19 (6):804–23. doi: 10.1080/1369118X.2015.1078834.

- van Deursen, A. J. A. M., E. J. Helsper, R. Eynon, and J. A. G. M. van Dijk. 2017. The compoundness and sequentiality of digital inequality. International Journal of Communication 11:452–73.

- van Deursen, A. J. A. M., and L. Solis Andrade. 2018. First- and second-level digital divides in Cuba: Differences in internet motivation, access, skills and usage. First Monday 23 (8). Accessed November 15, 2020. https://www.firstmonday.org/ojs/index.php/fm/article/view/8258/7556. doi: 10.5210/fm.v23i8.8258.

- van Deursen, A. J. A. M., and J. A. G. M. van Dijk. 2011. Internet skills and the digital divide. New Media & Society 13 (6):893–911. doi: 10.1177/1461444810386774.

- van Deursen, A. J. A. M., and J. A. G. M. van Dijk. 2015a. Toward a multifaceted model of internet access for understanding digital divides: An empirical investigation. The Information Society 31 (5):379–91. doi: 10.1080/01972243.2015.1069770.

- van Deursen, A. J. A. M., and J. A. G. M. van Dijk. 2015b. Internet skill levels increase, but gaps widen: A longitudinal cross-sectional analysis (2010–2013) among the Dutch population. Information, Communication & Society 18 (7):782–97.

- van Deursen, A. J. A. M., J. A. G. M. van Dijk, and O. Peters. 2011. Rethinking internet skills: The contribution of gender, age, education, internet experience, and hours online to medium- and content-related internet skills. Poetics 39 (2):125–44. doi: 10.1016/j.poetic.2011.02.001.

- van Dijk, J. A. G. M. 2005. The deepening divide: Inequality in the information society. Thousand Oaks, CA: Sage Publications.

- van Dijk, J. A. G. M., and A. J. A. M. van Deursen. 2014. Digital skills: Unlocking the information society. New York: Palgrave Macmillan.

- Xie, B. 2011. Effects of an eHealth literacy intervention for older adults. Journal of Medical Internet Research 13 (4):e90. Accessed November 15, 2020. https://www.jmir.org/2011/4/e90/. doi: 10.2196/jmir.1880.

- Zimic, S. 2009. Not so “techno-savvy”: Challenging the stereotypical images of the “net generation”. Digital Culture and Education 1 (2):129–44.

Appendix A