ABSTRACT

Extended drought is a concern for water resource sustainability in the Colorado River Basin of the western USA. Recent instrumental data give only a limited rendering of drought risk, whereas paleoclimate data provide evidence of megadroughts that could reoccur; however, analyses of their impacts have not been reported to date. A 645-year tree-ring reconstruction of central Arizona, USA, streamflows reveals several threatening periods including a severe 16th-century multi-decade event. This study translated that record to net basin water supply, with comparison to instrumental records, to drive an operations model of a key resource system serving metropolitan Phoenix, Arizona. Analyses of cumulative system impacts and demand sensitivities find no system depletion to inoperable conditions for any drought of the past several centuries. The megadrought presently afflicting the region has become more severe than any in the paleoclimate record and should be considered the new drought of record for adaptation planning.

Editor R. Woods; Associate editor A. van Loon

1 Introduction

Drought has long been recognized as a potentially devastating natural hazard reoccurring throughout history. Although the global economic impact of drought is difficult to quantify, frequently cited is the estimated annual impact of US$6–8 billion in the USA (FEMA 1995, Wilhite et al. 2005, p. 121). Hydrological droughts – those with impacts on water resources – are of particular concern within arid and semi-arid regions, as they often tip the scale between water sufficiency and crisis due to the inherently dry climate that is otherwise attractive to many elements of the population. Drought merits specific attention for water resource systems in the Colorado River Basin (CRB) of the western USA, which have supported significant population growth with increasing water demand over the past several decades. Large surface water reservoirs have become essential infrastructure due to the high inter-annual variability of precipitation and runoff with a propensity for multi-year drought in the semi-arid climate. As well, water management in the region has been sensitized to climate threats that challenge its infrastructure, strategies, and operating tactics. Although the recent instrumental record provides important design and operation guidance, its limited length of approximately 120 years is insufficient for a thorough assessment of the probabilities and consequences of drought that might threaten future sustainability.

Figure 3. Smoothed time series comparison of the as-reported tree-ring reconstruction to the historical gaged record. There is a 168 000 acre-feet difference between medians.

Alternatively, paleoclimate records provide reconstructions of the past to augment risk assessments and inform adaptation planning. Woodhouse and Overpeck (1998) reviewed a wide range of such records from across the USA, including historical documents, dendrochronology studies, archaeological remains, lake sediments, and geomorphic data, to examine droughts that occurred under naturally varying climate conditions over the last 2000 years. They found evidence that “… droughts of the twentieth century, including those of the 1930s and 1950s, were eclipsed several times by droughts earlier in the last 2000 years, and as recently as the late sixteenth century. In general, some droughts prior to 1600 appear to be characterized by longer duration (i.e. multidecadal) and greater spatial extent than those of the twentieth century.” Notable droughts were identified during the medieval period, particularly in the 13th and 16th centuries; and they state “… the sixteenth century drought appears to have been the most severe and persistent drought in the Southwest in the past 1000–2000 years.” Consequently, the term “megadrought” came to refer to a drought period more prolonged than had been witnessed in the 20th century.

Meko et al. (2007) found evidence in a network of tree-ring chronologies from the Upper CRB of low average river flow periods in the late 12th century, end of 13th century, mid 15th century, and in the late 16th century. Each was identified by anomalously low flow as measured by a 25-year running mean that was as low as 84% of the observed 20th-century normal. Detailed review of the reconstructed time series showed a series of multi-year low-flow intervals embedded in generally dry periods. Droughts were characterized by an absence of years with flows much above normal, often with several consecutive years below the long-term median (Meko et al. 2007). Their severity during such periods, defined as the cumulative flow deficit relative to what is required to maintain a system (Mishra and Singh 2010), can be large and have complex consequences for water resource infrastructure. It has been suggested that if a medieval megadrought were to reoccur in the future it would challenge the resilience of CRB water resource systems (Woodhouse et al. 2006, Ault et al. 2014, 2016).

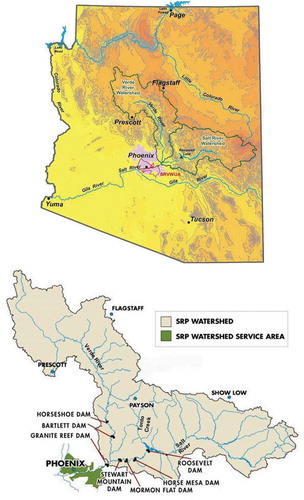

There is significant ongoing research into climatic specifics of drought, and guidance has been offered by which to apply it for hydrology planning (Brekke and Prairie 2009). But researchers acknowledge a shortfall in analyses that often only reach coarse representations of actual water system reliability (Prairie et al. 2008). Without specific impact assessments for modern infrastructure systems, a key question is difficult to answer: if a system was designed to buffer against the worst drought of record, but more severe ones have been revealed in paleoclimate evidence, how will the system respond should they reoccur? And, before system sustainability can be quantitatively assessed, how can paleoclimate evidence be accurately translated to datasets applicable to drought impact evaluation? This study performed such an assessment for a major water resource system in central Arizona, USA. The Verde River, Tonto Creek, and Salt River sub-basins of the Lower CRB encompass approximately 33 000 square kilometers of watershed. Their surface water flows are managed by the Salt River Project (SRP) as they pass through a parallel series of reservoirs on the Salt and Verde Rivers, for release to an extensive distribution system of canals and irrigation laterals in a 248 200-acre service area in metropolitan Phoenix (Fig. 1), where water deliveries can be supplemented by groundwater. The SRP has been managing the system for irrigation and municipal water treatment demand for over a century through the utility cooperative Salt River Valley Water Users’ Association. It operates alongside the public utility Salt River Project Agricultural Improvement and Power District, which provides electricity to Phoenix area customers, a portion of which is hydroelectrically generated on the Salt River. The SRP reservoir system is the first and oldest multipurpose federal reclamation project in the arid western USA, serving as a model of success for U.S. Reclamation Service infrastructure. By delivering a significant share of ongoing water demand for more than a century, the system has been a formative influence on the geographic evolution of south-central Arizona, having supported Phoenix’s growth to the fifth largest city and the 12th largest metropolitan area in the USA (Gammage 2016).

The historical SRP system record contains various pluvial and drought periods. After a pluvial era ending in the early 1990s, several sequential years of low reservoir inflows in the late 1990s and early 2000s resulted in record low reservoir storage in 2002–2003 and motivated a dendrochronology study of the watersheds. A set of tree-ring cores was collected in 2005 by researchers at the University of Arizona’s Laboratory of Tree-Ring Research to supplement the existing archive and develop an annual streamflow reconstruction of the Salt-Tonto and Verde River watersheds for the mid-14th century to 2005 (Meko and Hirschboeck 2008). Ring widths were found to have a strong annual runoff signal and the reconstruction explained 49–69% of variance in annual flows. This provided a comparison to the 1990s–2000s drought, placing it in the long-term historical context for a more complete understanding of regional climate variability. While 2002 was found to be a single-year low-flow extreme in the reconstructed record (ring absent in 60% of tree cores), more severe droughts were found earlier in the long-term record. These included medieval megadroughts, and they provide a basis upon which to test SRP system response, particularly to the late-16th-century event reported by several paleoclimate researchers.

This study first examines the tree-ring dataset in comparison to the instrumental record to yield important adjustments accounting for effects of data transformations during streamflow reconstruction. Without them, drought details would be overly optimistic. Estimates of reservoir system losses from various mechanisms are then applied to calculate the net basin supply (NBS) of surface water available for delivery from the system. The 645-year NBS time series is then passed through a reservoir operations simulation model to assess expected response of the reservoir system. Results for periods of megadrought are examined for sustainability of the SRP system during those particularly threatening periods.

2 Data

2.1 The water resource system

The Salt River Project began operation over 100 years ago with the dedication of Roosevelt Dam, and descriptions of its watersheds and reservoirs are given in Fig. 1 and Table 1. The Tonto Creek watershed lies between the Salt River and Verde River watersheds and provides about 15% supplemental inflow at the northwest side of Roosevelt Lake. Since it combines with the Salt River at that reservoir, their data are combined and the two are referred to as the Salt side of the system.

Table 1. (a) The USGS gages and (b) the reservoirs that constitute the SRP system in central Arizona.

Figure 1. Salt River Project (SRP) watersheds, reservoir system, and service area in Central Arizona (from SRP-a). Maps courtesy of SRP.

Water managers of the western USA quantify water volume in acre-feet (a-ft; 1 a-ft = 1233.5 m³) and utilize a water year calendar defined to begin 1 October. That is the point at which summer and its heavy customer demand have passed but winter precipitation has typically not yet begun. The date 1 May is a key management date in SRP’s transition from winter to summer operations and water delivery planning, although some winter snowmelt in the Salt watershed occasionally extends into May. These dates align with two climatically and hydrologically distinct runoff seasons on the SRP watersheds. The wetter and cooler winter season (1 October – 30 April) is driven by large-scale synoptic weather systems, which are crucial to replenishment of surface water storage not only due to the large precipitation event volumes, but also due to much lower evapotranspiration, which allows flows to reach the reservoirs with modest runoff efficiency. The hotter, drier summer season (1 May – 30 September) is notable for the spatially diverse convective dynamics of the North American monsoon, which combine with high evapotranspiration to yield small runoff efficiencies.
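The calendar and unit conventions above can be sketched in a few lines. This is an illustrative sketch only, not SRP code: the function names are hypothetical, and labelling a water year by the calendar year in which it ends is an assumed convention that the text does not state.

```python
from datetime import date

ACRE_FOOT_M3 = 1233.5  # conversion factor given in the text


def water_year(d: date) -> int:
    """Water year starting 1 October, labelled by the year in which it ends (assumed)."""
    return d.year + 1 if d.month >= 10 else d.year


def season(d: date) -> str:
    """'winter' for 1 October to 30 April, 'summer' for 1 May to 30 September."""
    return "summer" if 5 <= d.month <= 9 else "winter"


def acre_feet_to_m3(volume_af: float) -> float:
    """Convert a volume in acre-feet to cubic metres."""
    return volume_af * ACRE_FOOT_M3
```

With these definitions, 1 October 2002 falls in the winter season of water year 2003, and 1 May 2003 opens the summer season of the same water year.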

The Salt and Verde basins adjoin one another, resulting in seasonal correlations of streamflows captured in two parallel series of downstream reservoirs. Reservoir inflows are typically proportioned between seasons and watersheds as given in Table 2, although with year-to-year variability. Total storage capacity of the system’s six reservoirs is 2.3 million a-ft of water. Most of it (88%) is on the Salt side of the system, where Roosevelt Lake accounts for 71% of the total (Table 1). Water releases from the last of the Salt and Verde reservoirs combine just upstream of the Granite Reef Dam, where they are diverted into a delivery system of canals bounding the north and south sides of SRP’s service area in the Phoenix metropolitan area. Surface water can be supplemented with pumped groundwater at locations within the metropolitan delivery system. Contrary to general perceptions, water demand from system users has been in a long-term decline, from greater than 1 000 000 a-ft/year a few decades ago to an average 900 000 a-ft/year in 2003–2011, down to recent deliveries averaging 800 000 a-ft/year. This downward trend is a result of conversion of agricultural lands to municipal purposes, delivery system loss mitigation efforts, water efficiency education, and regulatory classification of the service area as an active management area (Gammage et al. 2011). Seasonal demand cycles sum to about 41% of water delivered in the seven winter months (Oct–Apr) and 59% in the five summer months (May–Sep), while reservoir inflows are out of phase with deliveries (75% winter, 25% summer).

2.2 Streamflow

Instrumental runoff data were sourced from the archive of daily US Geological Survey (USGS) streamflow gage data, which begins in 1913 (USGS-NWIS, SRP-b). The gages (Table 1) are located just above the first intercepting reservoir on the watersheds. Additionally, SRP produced a reconstruction back to 1889 of monthly streamflow at reservoir inputs (Phillips et al. 2009, provided in personal communication). That extended record includes the late 1890s drought and therefore was appended to the instrumental record for analyses. Flow rates were aggregated to volumes of water within seasonal time intervals.

A reconstruction of streamflows for the basins by tree-ring analysis was conducted by Meko and Hirschboeck (2008) at the University of Arizona Laboratory of Tree-Ring Research and is available from the project website. In addition to analysis by water year, the researchers examined the degree to which seasonal variations could be identified by examination of partial-ring-width measurements of earlywood and latewood to provide monthly runoff estimates. Those data (1361 to 2005; 645 years) were provided to the authors through personal communication and were aggregated to winter and summer seasons of each water year. Comparative statistical analyses were performed for the period of overlap with instrumental data and are reported below.

2.3 Reservoir releases

The USGS daily streamflow data (USGS-NWIS, SRP-b) are available for gage locations below Bartlett Dam and Stewart Mountain Dam from the dates at which the reservoirs went into service. These gages measure reservoir discharge for the Verde and Salt sides of the reservoir system, respectively.

2.4 Reservoir storage

The SRP maintains the historical series of daily water volume stored in each of the six reservoirs beginning in 1931 on the Salt and 1946 on the Verde sides of the system (SRP-a) which extend to the present day. The full historical time series for this study was provided by SRP Surface Water Resources staff (in personal communications).

2.5 Miscellaneous water loss and gain

There are important miscellaneous losses and gains of water at the reservoirs which affect storage and supply. Losses can be due to evaporation and interactions between surface and sub-surface water in the proximity of the reservoirs. As well, during periods of high precipitation the reservoirs experience gains larger than the loss mechanisms due to combinations of direct precipitation on reservoirs, ungaged ephemeral streams, overland flow bypassing a stream gage, streambed modifications, or gage calibration performance. The net loss or gain is quantified by the difference between reservoir inflows and releases compared to storage changes over a time period. The imbalance is termed miscellaneous loss because it is expected to be a volume of missing water and is therefore entered as a positive value in system balancing. A negative miscellaneous loss value denotes a gain in reservoir storage that is not supported by the difference between gaged inflow and discharge, and such volumes can be significant during pluvial periods.
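The water balance behind the miscellaneous loss term can be written out as a short sketch. The function and argument names are hypothetical; the sign convention follows the text, with a positive value for missing water and a negative value for an unexplained gain.

```python
def miscellaneous_loss(inflow, release, storage_start, storage_end):
    """Return the imbalance between gaged flows and the observed storage change.

    All arguments are volumes (e.g. a-ft) over the same time period.
    Positive result: water is missing (a loss). Negative result: storage
    rose by more than the gaged inflow minus release can explain (a gain).
    """
    expected_change = inflow - release          # what the gages imply
    observed_change = storage_end - storage_start
    return expected_change - observed_change


# Dry season: gages imply +100 000 a-ft, storage rose only 80 000 a-ft.
dry_loss = miscellaneous_loss(500_000, 400_000, 1_000_000, 1_080_000)
# Wet season: gages imply +600 000 a-ft, storage rose 650 000 a-ft.
wet_loss = miscellaneous_loss(900_000, 300_000, 1_000_000, 1_650_000)
```

In the first case the result is a positive loss of 20 000 a-ft; in the second it is −50 000 a-ft, i.e. a net gain of the kind described for pluvial periods.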

The climatic dependencies of seasonal miscellaneous loss at the reservoirs since 1946 (beginning of Verde reservoirs storage record) were examined using air temperature and precipitation data from the Parameter-elevation Regressions on Independent Slopes Model (PRISM) data archive (Daly et al. 1994, 2002). PRISM generates 4-km gridded monthly estimates of surface air temperature and precipitation depth using station data, spatial datasets, and expert guidance. Multivariate linear regression relationships for loss using the PRISM data were applied to obtain miscellaneous loss estimates for 1895 through 1945, and analogous-year sequences were used to estimate values for 1889 through 1894. The estimates were appended to post-1945 actuals to obtain a data series of miscellaneous loss for the Salt and the Verde reservoirs for each season, 1889 to the present.
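A multivariate linear regression of this kind can be sketched with ordinary least squares. This is a sketch under stated assumptions, not the authors' fitted model: the predictor set (one temperature and one precipitation value per season), the function names, and the absence of any transformation are all assumptions.

```python
import numpy as np


def fit_loss_model(temperature, precipitation, loss):
    """Least-squares fit of loss = b0 + b1 * temperature + b2 * precipitation."""
    design = np.column_stack([np.ones(len(temperature)), temperature, precipitation])
    coefficients, *_ = np.linalg.lstsq(design, np.asarray(loss, dtype=float), rcond=None)
    return coefficients


def predict_loss(coefficients, temperature, precipitation):
    """Apply fitted coefficients to seasons that lack a storage record."""
    design = np.column_stack([np.ones(len(temperature)), temperature, precipitation])
    return design @ coefficients
```

In use, the model would be fitted on seasons with observed storage (1946 onward) and then applied to PRISM temperature and precipitation for the earlier seasons.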

2.6 Net basin supply

Net basin supply (NBS) of available surface water is equivalent to runoff aggregated to volume at the reservoir input gages less the miscellaneous loss within a time frame. Comparing NBS with runoff for each watershed-season provides some insight into the contribution of miscellaneous loss factors as a function of runoff level. If there were no losses, net basin supply should equal runoff along the equivalence line. But, at low runoff levels during dry years, during both winter and summer, there are small net losses. These are most notable on the Salt side of the system where the largest reservoirs are located, and NBS tends to be lower than runoff. However, in the upper tail of the runoff distribution NBS tends to be higher than runoff when there are net storage gains during wet winters. Since the reservoir operations simulation model used in this study works from a time series of net basin supply, the four watershed-season regression analyses were used to calculate what NBS would have been for a runoff value in each watershed-season of the tree-ring dataset.
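The runoff-to-NBS translation can be illustrated as one fitted relation per watershed-season. The text does not give the form of the four regressions, so the straight-line form used here is an assumption, as are the function names and the numbers in the usage note.

```python
import numpy as np


def fit_nbs_relation(runoff, nbs):
    """Fit nbs = slope * runoff + intercept for one watershed-season (linear form assumed)."""
    slope, intercept = np.polyfit(np.asarray(runoff, float), np.asarray(nbs, float), deg=1)
    return slope, intercept


def runoff_to_nbs(runoff, slope, intercept):
    """Translate runoff volumes to net basin supply with a fitted relation."""
    return slope * np.asarray(runoff, dtype=float) + intercept
```

A slope slightly above one combined with a negative intercept reproduces the behaviour described above: NBS falls below runoff in dry seasons and exceeds it in very wet ones.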

3 Methods

3.1 Low-pass filter smoothing of time series

Climate and streamflow can scale widely across time with modes of variability from multiple origins, both decadal and sub-decadal. To assess underlying multi-decadal variability it is often useful to suppress sub-decadal weather and macro-weather components that are not of interest for an analysis. Borrowing from signal processing methods, an appropriately designed bandpass filter can be applied to display low frequencies and provide insight to important periodicities of time series. For this study a low-pass Lanczos filter (Burt and Barber 1996, pp. 529–534) was used for smoothing to reveal megadrought patterns. The cutoff filter has an amplitude response that drops to 50% at a cutoff frequency, fcut, of one cycle in 10 years, or 0.1 cycles per observation. A 15-year filter length was used with calculations applied at the center of the data interval evaluated, so a filtered value contains contributions of ±7 years from the center point. As the end of a series is approached, shorter filter lengths are sequentially applied to continue the smoothed curve closer to the end of the series.
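A Lanczos low-pass smoother with the stated settings (15-year length, cutoff of 0.1 cycles per observation, shrinking windows near the ends) might be sketched as follows. The weight formula is the common Lanczos-windowed sinc; the exact window scaling and the renormalization of weights to sum to one are assumptions, and the study's own implementation may differ in those details.

```python
import numpy as np


def lanczos_weights(half_width, f_cut=0.1):
    """Symmetric low-pass weights for a filter of length 2 * half_width + 1."""
    k = np.arange(-half_width, half_width + 1)
    ideal = 2 * f_cut * np.sinc(2 * f_cut * k)   # truncated ideal low-pass response
    window = np.sinc(k / (half_width + 1))       # Lanczos (sigma) taper, assumed scaling
    weights = ideal * window
    return weights / weights.sum()               # unit gain at zero frequency


def lanczos_smooth(series, half_width=7, f_cut=0.1):
    """Centered smoothing; the window shrinks symmetrically near the series ends."""
    x = np.asarray(series, dtype=float)
    smoothed = np.empty_like(x)
    for i in range(len(x)):
        h = min(half_width, i, len(x) - 1 - i)   # shorter filter close to either end
        smoothed[i] = np.dot(lanczos_weights(h, f_cut), x[i - h:i + h + 1])
    return smoothed
```

Because the weights are symmetric and sum to one, a constant or linearly trending series passes through unchanged, while sub-decadal fluctuations are damped.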

3.2 Tree-ring streamflow reconstruction versus observed record

To assess how well the as-reported tree-ring reconstructed streamflow can represent the distant past, comparisons to the observed record were performed for the 117-year period of overlap (1889–2005). The smoothed time series in Fig. 3 show that similar eras of drought and excess are revealed in both datasets. The deep 1890s and early-2000s droughts are present, as is the shallower 1950s drought. The medians of each dataset are indicated, and a measure of drought duration is provided where the smoothed series pass through the median lines. Crossing points are approximately aligned, but with some small differences. It can also be seen that the highs and lows of tree-ring data are more constrained than actual observations within similar time periods. The 1889–2005 medians differ by 168 000 a-ft, with the tree-ring record overstated. Approximately 90% of that difference originates in the winter season. This is a differential that will be generous to system performance assessments if also present in the prior 528 years. Mean annual differences for the four watershed-seasons and totals quantify the positive bias in tree-ring values relative to historical observations. Tree-ring researchers employ various evolving methodologies for streamflow reconstructions (Woodhouse et al. 2006). For this dataset the bias is attributable to the manner in which the tree-ring data were processed: log-transformation was applied prior to regression analyses, which also limits the tails of the distributions. Comparing the tree-ring and observational records of each water year’s runoff in the overlapping period shows a general pattern of higher tree-ring values up to about 2 300 000 a-ft; years above that level do not present accurately in the tree-ring record, as tree-ring widths are affected by more than moisture availability in wet times (Prairie et al. 2008). The cumulative frequency distributions (cfds) of the two records also show this finding.

Table 2. Proportions of typical annual reservoir system inflows.

The Meko and Hirschboeck (2008) research was primarily directed at identifying drought periods without particular attention to runoff distribution tails, since percentile behavior relative to a mean or median was sufficient to characterize times of drought. But those details are now important for the system resiliency assessment of this study due to the cumulative effect of an inflow series rendered by the reservoir simulation model. The positive bias found in the comparison will provide overly optimistic results. A simple bias correction is not appropriate because that does not address data transformation methodology artifacts, and offsets across distributions are obviously complex. Therefore, a percentile-adjustment approach was applied to each watershed-season’s paired cfds with tree-ring values adjusted per the offset identified at its level in the overlapping period cfds, under the assumption that differentials in the overlap period are representative of those for prior centuries. Percentile adjustment was also performed for totals by water year, with reassignment of adjustments proportional to watershed-season representation in the year’s tree-ring record. The results were similar whether conducted bottom-up by watershed-season or top-down by total. After applying the methodology, the scatter between tree-ring and observed values was still present, although the bias was corrected. There is no way of knowing where in the scatter distribution a particular pre-instrumental year originates or how to modify it, so that remains a feature in the corrected tree-ring data series. But, when a long multi-year sequence is passed through the reservoir simulation model, those variations converge to a representative cumulative system response.
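One way to read the percentile-adjustment step is as an empirical quantile offset: sort the overlap-period tree-ring and observed values, take their difference at each rank, and apply the offset found at a value's level to any tree-ring value. The sketch below follows that reading. Linear interpolation between ranks and holding the end offsets constant outside the overlap range are assumptions, and the function name is hypothetical.

```python
import numpy as np


def percentile_adjust(values, tree_overlap, observed_overlap):
    """Shift tree-ring values by the observed-minus-tree offset at their cfd level.

    tree_overlap and observed_overlap are equal-length samples from the
    overlapping period; they are sorted independently to form paired cfds.
    """
    tree_sorted = np.sort(np.asarray(tree_overlap, dtype=float))
    obs_sorted = np.sort(np.asarray(observed_overlap, dtype=float))
    offsets = obs_sorted - tree_sorted            # offset at each percentile level
    v = np.asarray(values, dtype=float)
    # Locate each value on the tree-ring cfd and interpolate the offset there;
    # values outside the overlap range receive the nearest end offset.
    return v + np.interp(v, tree_sorted, offsets)
```

Applied to the overlap-period tree-ring values themselves, the function returns the sorted observed values rank for rank, which is the defining property of this kind of adjustment.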

3.3 Net basin supply from the tree-ring record

Once percentile adjustments of the tree-ring data were performed they were transformed to net basin supply by the relationships described in Section 2.6. The resulting NBS time series is the best estimate of surface water supply that could have driven the reservoir system from the mid 14th century through the 19th century. Application of the percentile-adjustment methodology and the NBS translation changes the distribution as follows. The runoff distribution below the median is reduced about 20% by percentile-adjustment, about one-half that in the third quartile, and is marginally smaller in the 80th percentile. Nearly no change is applied at the 87th percentile, but above that level runoff is larger for the high flows, which were not represented well in the tree-ring data. Calculations of NBS resulted in additional reductions in the range of 5–15% for the lower half of the distribution due to miscellaneous loss mechanisms in the vicinity of the reservoirs. The net gains to the system in wet years result in about 5% more water above the 80th percentile of the distribution. The final result of these changes is a time series that reflects drought risk arising from the lower portion of the distribution and fast reservoir replenishments occurring in wet years in the upper portion of the distribution.

3.4 Reservoir operations simulation model

The cumulative interaction of runoff variability with reservoir design and operation greatly affects the response and status of the system at any point in time. The interplay is essential to understanding impacts on water availability, which is the bottom-line measure of system resilience. To perform that assessment for this study a reservoir operations simulation model (ResSim) was developed. The model incorporates a customer water demand schedule, system replenishments by net basin supply, and the web of decision rules used to manage the integrated six-reservoir system with supplemented groundwater and operating protocols. The decision rules within ResSim were established from published research (Phillips et al. 2009) and operational information as of 2010 (SRP-a, SRP-b), and were reviewed with SRP staff for accuracy and completeness. Although the system is managed day-to-day, key operational protocols can be represented in modeling on a seasonal basis, simplifying calculations while maintaining the schedule of operating decisions.

The model was built per current system configuration and operating rules, and its main features can be summarized as:

water year start date of 1 October with 1 May winter-to-summer transition;

six Salt and Verde reservoirs, rated per current storage capacities (no sedimentation considered);

representative seasonal water demand schedule, modifiable from 900 000 a-ft/year;

groundwater pumping per SRP storage planning diagram, as function of reservoir storage status; from 50 000 to 325 000 a-ft/year pumping rates;

no water sourcing from outside the system;

priority to water supply for hydroelectric generation at the Salt reservoirs;

seasonal depletion and replenishment sequences per balance of surface water demand versus net basin supply;

seasonal reservoir positioning rules, with attention to winter runoff;

defined depletion/replenishment sequences within and between the Salt and Verde sides of the reservoir system;

spillage monitoring and correction between the Salt and Verde sides of the system;

reduced water allocation delivery (two-thirds of demand) implemented below 600 000 a-ft of total remaining reservoir storage; and

reservoir system total depletion shutdown at 50 000 a-ft remaining storage.

The model outputs 28 characteristics of the reservoir system for each season, including all volumes of surface water in and out of the system, water stored in each of the six reservoirs, excess Salt or Verde surface water spillage, the customer water demand and the amount of demand delivered, the amount of groundwater pumped to supplement surface water, and coded messages associated with significant thresholds (e.g. reduced allocation, system depletion).
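To make the interplay of the listed rules concrete, the following is a heavily simplified seasonal mass balance. It is not ResSim: it lumps the six reservoirs into one store, ignores hydroelectric priorities, reservoir positioning, and Salt/Verde sequencing, and uses a hypothetical linear pumping schedule between the stated 50 000 and 325 000 a-ft/year rates. Only the thresholds and the 41%/59% seasonal demand split come from the text; all names are illustrative.

```python
CAPACITY = 2_300_000       # total storage of the six reservoirs (a-ft)
REDUCED_BELOW = 600_000    # reduced allocation applies below this storage
SHUTDOWN_AT = 50_000       # storage is not drawn below this level
SEASON_SHARE = {"winter": 0.41, "summer": 0.59}  # seasonal share of annual demand


def annual_pumping(storage):
    """Hypothetical schedule: 325 000 a-ft/yr when empty, 50 000 a-ft/yr when full."""
    fraction_full = min(max(storage / CAPACITY, 0.0), 1.0)
    return 325_000 - (325_000 - 50_000) * fraction_full


def step_season(storage, nbs, season, annual_demand=900_000):
    """Advance the lumped store by one season; return (end storage, diagnostics)."""
    demand = annual_demand * SEASON_SHARE[season]
    reduced = storage < REDUCED_BELOW
    if reduced:
        demand *= 2 / 3                           # two-thirds allocation
    # Assumption: pumping is split between seasons in proportion to demand.
    pumped = min(demand, annual_pumping(storage) * SEASON_SHARE[season])
    available = storage + nbs
    released = min(demand - pumped, max(available - SHUTDOWN_AT, 0))
    end_storage = available - released
    spill = max(end_storage - CAPACITY, 0)
    end_storage = min(end_storage, CAPACITY)
    diagnostics = {
        "delivered": released + pumped,
        "pumped": pumped,
        "spill": spill,
        "reduced_allocation": reduced,
        "depleted": end_storage <= SHUTDOWN_AT,
    }
    return end_storage, diagnostics
```

Chaining `step_season` over alternating winter and summer NBS values gives a storage trace whose flags mark reduced allocation and depletion, which is the kind of seasonal output described above, although in far less detail than the 28 characteristics reported by ResSim.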

Model evaluation was conducted to test its ability to replicate operational outcomes for the years since the SRP system was fully in operation. However, ResSim is built according to current system configuration and operating rules, and many of those have changed over time, making comparisons difficult. It is evident in a review of the historical storage record that there were significant ongoing modifications of reservoir operations into the early 1970s. And there have been documented changes since the 1970s (Phillips et al. 2009), including an expansion of Roosevelt Lake reservoir capacity and accompanying changes to operational safety-of-dams rules, revision of groundwater pumping guidelines in the mid 1990s and again in 2006, and the revision of water allocation reduction rules for conservation in 2006. ResSim emulates the current system configuration and operating guidelines in these respects.

Despite these issues, if the modeled system response on an aggregate scale approximates actual outcomes with explainable differences, it can be employed with confidence that it will respond appropriately to major drivers. While there are several variables of interest, the one most relevant to system sustainability is remaining total water storage at season transitions. The model run at 900 000 a-ft/year of demand replicates the seasonal historical progression of storage since 1971 reasonably well, although with attributable periods of offset. Co-variability of simulated and observed reservoir storage is good, with a systematic bias into the 1990s owing to use of the larger post-1996 storage capacity in ResSim. The offset continues until the early 2000s drought, which closes the bias between modeled and observed results. That threatening drought period triggered reduced water deliveries to customers and approximately 500 000 a-ft of water sourcing from the Central Arizona Project. ResSim does not consider water sourcing from outside the system. Subsequent to the drought, simulated storage is consistently less than actual storage due to the carryover effect of that external water purchase. A wet winter in 2010 refilled the reservoirs and spilled water, which is replicated by the model. Since 2012 a model bias has emerged which understates actual storage. This is attributable to a long-term trend of declining customer demand, which has recently averaged 800 000 a-ft/year rather than the 900 000 a-ft/year modeled. The cumulative differential has amounted to about a half million a-ft of water remaining in the reservoirs relative to what would be expected with the old demand level.

After application of those three offsets to simulation results it was concluded that the ResSim model can provide a representation of reservoir system response mirroring observed storage when factors external to model assumptions are taken into account. So, the model was deemed suitable for research purposes, but only to the extent that research questions are conditional to ResSim model assumptions.

3.5 Drought definitions for the resource system

When median surface water NBS (~850 000 a-ft/year) is added to a minimum operating groundwater pumping rate (50 000 a-ft/year), the system can support deliveries of 900 000 a-ft/year over the long term. Any water delivery reductions from that level readily benefit reservoir storage because the probability of reservoir inflows sufficient to sustain the system is enhanced when withdrawal volumes are below the median of the skewed NBS probability distribution. The upper tail of the distribution represents high flow years that provide sufficient surface water to readily replenish the reservoir system, as seen in years of abrupt storage recovery. Since that often takes just one wet winter, reservoir system drought is alleviated by those events, and that year is a demarcation point for drought duration. Therefore, a continuous run of years with NBS below median is an appropriate definition of hydrological drought applicable to this system, with a drought initiated by a year of NBS below and terminated by a year above the 850 000 a-ft/year criterion. Drought duration is the difference between initiation and termination times, and drought intensity is the average value of the relevant parameter (NBS) during drought (Mishra and Singh 2010). The minimum NBS within the drought is its depth, and the cumulative NBS deficiency relative to the 850 000 a-ft/year criterion is a measure of drought severity.
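These definitions translate directly into a run-of-years scan of an annual NBS series. The sketch below is illustrative: the function names are hypothetical, duration is counted as the number of consecutive below-criterion years, and a run still in progress at the end of the series is reported as it stands.

```python
def summarize_run(run, threshold):
    """Summarize one run of (year, nbs) pairs that all fall below the threshold."""
    values = [value for _, value in run]
    return {
        "start": run[0][0],
        "end": run[-1][0],
        "duration": len(run),                          # years below the criterion
        "intensity": sum(values) / len(values),        # mean NBS during the drought
        "depth": min(values),                          # lowest annual NBS
        "severity": sum(threshold - v for v in values),  # cumulative deficit
    }


def find_droughts(years, nbs, threshold=850_000):
    """Return one summary per continuous run of years with NBS below the threshold."""
    droughts, run = [], []
    for year, value in zip(years, nbs):
        if value < threshold:
            run.append((year, value))
        elif run:
            droughts.append(summarize_run(run, threshold))
            run = []
    if run:  # series ends while still in drought
        droughts.append(summarize_run(run, threshold))
    return droughts
```

For example, three consecutive years at 500 000, 300 000, and 700 000 a-ft form one drought with a duration of 3 years, an intensity of 500 000 a-ft/year, a depth of 300 000 a-ft, and a severity of 1 050 000 a-ft.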

As reported by Meko et al. (2007), the drought runs of years are often embedded within longer periods that may be classified as a megadrought if of sufficient duration and intensity. In their risk assessments for the Upper CRB region, Ault et al. (2014, 2016) proposed a criterion for multi-decadal megadrought: a 35-year mean streamflow more than 0.5 standard deviations below the long-term mean. That may be applicable where data are reasonably symmetric about the center of the distribution, but a standard deviation measure is not readily interpretable for highly skewed distributions such as those characteristic of the Lower CRB. Additionally, drought intensity over periods shorter than 35 years may be sufficient to threaten reservoir operations, as will be seen below for the 1660s. As an alternative, the identifying feature of megadrought used in this study is simply decadal average flow below the long-term median (850 000 a-ft/year), and the low-pass filter-smoothed data series readily reveals when those occurred, as can be seen in and . Such periods of a decade or longer are then assessed for reservoir system impacts and comparative severity.
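The decadal criterion can be sketched as follows. This is an illustration only: it substitutes a plain 10-year moving average for the study's low-pass filter, whose details are given in an earlier section and are not reproduced here. The function names are hypothetical; the 850 000 a-ft/year median is the value quoted in the text.

```python
MEDIAN_NBS = 850_000  # a-ft/year, long-term median quoted in the text


def moving_mean(values, window=10):
    """Mean of each consecutive block of `window` values (no padding)."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


def megadrought_windows(years, nbs, criterion=MEDIAN_NBS, window=10):
    """List (first_year, last_year, mean_nbs) for windows averaging below the criterion.

    Assumption: a simple moving average stands in for the low-pass filter
    used in the study, so flagged periods may differ in detail.
    """
    means = moving_mean(nbs, window)
    return [
        (years[i], years[i + window - 1], mean)
        for i, mean in enumerate(means)
        if mean < criterion
    ]
```

Overlapping flagged windows can then be merged into a single period of interest before its reservoir impact is assessed.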

4 Results and discussion

4.1 Reservoir system response: instrumental record

The instrumental record of NBS from the watersheds (1889–present) is shown in . The four run-of-years droughts within the modern era (1898–1904, 1953–1957, 1999–2004, 2011–2016) are noted. The most recent one is considered by regional water management to be a continuation of the 1999–2004 drought which was interrupted by a few wet years (2005, 2008, 2010). When 1999 to present is viewed as one drought era and its timing compared to earlier ones, it is interesting to note the long periodicity (50–70 years) with which significant drought periods have returned to the region, although the instrumental record is still too short to ascribe statistical significance to that observation. It does, however, lend credence to the suggestion of natural modes of low-frequency climate variability contributing to drought occurrence (Woodhouse et al. Citation2006, Ault et al. Citation2014).

portrays the ResSim model simulation of how water storage would have evolved if the reservoirs had been in place and managed per current operating guidelines across the time frame of . Water demand scenarios for 800 000 and 900 000 a-ft/year are shown. Total storage is maximized during periods of high inflows and depleted during cumulative flow deficits. The effect of the 1890s drought is readily apparent, briefly resulting in total remaining storage below 600 000 a-ft if demand had been 900 000 a-ft/year, but with 50% more remaining water if demand had been 800 000 a-ft/year. Any reduced delivery allocations in 1902–1904 would have been abruptly relieved by the very wet 1905 winter. During the pluvial 1910s–1920s period the system would have been repeatedly topped off and spilled excess water. The shallow dry period across the 1950s generally reduced reservoir storage but would not trigger any reduced water allocations for either demand level. The next pluvial period centered in the 1980s repeatedly refilled reservoirs with spillage of excess water. Then, for the early-2000s drought the simulation with demand of 900 000 a-ft/year renders a couple seasons of storage below 600 000 a-ft. As discussed in Section 3.4, the simulation provides an accurate representation of how storage did progress in that time frame when actions were, in fact, taken to adjust water deliveries and water was briefly sourced from outside the system. Those actions could have been avoided with the current system configuration and operating protocols if demand had been 800 000 a-ft/year in that time frame. Although drought relief came briefly with the El Niño winters of 2005, 2008, and 2010, below-median NBS over the subsequent years would have placed storage again at the 600 000 a-ft threshold if demand had been 900 000 a-ft/year. 
But with demand declining to 800 000 a-ft/year, the imposition of delivery reductions has been avoided, and remaining water storage has held between 45 and 75% of capacity.

Figure 9. Simulated total reservoir storage at the end of winter and summer seasons, 1889 to present, as modeled by ResSim. The simulation was initialized with full reservoirs, and they refilled in 1891.

These results demonstrate that it is not only the streamflows of surface water supply that determine condition of the resource system at a point in time, but just as important are the water demand volumes, system design, and management protocols for delivering it. The ResSim reservoir simulation model provides the tool by which to represent those factors and assess cumulative impacts of the highly variable seasonal inflows from the dual watersheds. While examination of historical instrumental records is important and highly instructive, a broader interpretation of drought risks can be supplemented by assessment of the centuries-long tree-ring record of streamflow.

4.2 Identifying tree-ring megadrought periods of interest

The 645-year tree-ring time series of annual NBS was filter-smoothed (as described in Sections 3.1 and 3.5) to reveal its decadal variations, as shown in , and identify time periods when it was below median NBS. A dozen periods of interest were identified, as labeled in the figure, and studied in detail. Key statistics from the unsmoothed data for the periods of interest are given in , including the runs of years within each. Based upon duration, intensity (average NBS), and depth (minimum NBS), those that merit detailed examination are the late-16th-century megadrought that spans 1570–1593 and the 1660s drought of 1662–1670. The 16th-century megadrought is the one that has received significant paleoclimate research attention and has been revealed in other regions (Woodhouse and Overpeck Citation1998, Meko et al. Citation2007). The tree-ring record shows that it was composed of a series of 3–5-year droughts embedded in a long period of generally low flows interrupted by a scattering of high runoff years (, ). While the 1660s drought was shorter overall, it had the longest run of years (nine) without relief by any year above median NBS (, ). And its characteristics indicate it could have had a significant effect on the reservoir system. Therefore, while system impacts of all the droughts were evaluated and summarized in , those two events merit a detailed review and a comparison to how the 2000s drought has been evolving since the tree-ring record ended in 2005.

Table 3. Mean differences between the as-reported annual tree-ring and observation data in the overlap period.

Table 4. Drought events in the tree-ring and instrumental records, and reservoir storage response.

4.3 Reservoir system response: tree-ring record

The tree-ring NBS time series was passed through the ResSim reservoir operations simulation model to test system response. Reservoir storage during the course of a water year is typically lowest at the end of the summer season, and the minimum end-summer storage during the droughts is summarized in for both 800 000 a-ft/year and 900 000 a-ft/year demand. With 800 000 a-ft/year demand the series only dipped below the 600 000 a-ft reduced allocation threshold in one year, and that was during the late-16th-century megadrought. The 1660s drought had no years below threshold. When water demand is 900 000 a-ft/year, the instances below threshold increase, as given in and shown in . They increase to three years in the late 16th century, and a one year occurrence arises in the 1440s and two in the 1660s. The tree-ring record shows no instances below the threshold during the 1890s, 1950s, and 2000s droughts, while reservoir simulation of the instrumental record did briefly cross the storage criterion in the 1890s and 2000s. This serves as a reminder that the tree-ring record is a close approximation to history but with possible differences in detail from the actual record, which should be kept in mind during interpretation of results. When periods of storage below the reduced allocation threshold are limited to a few years, the implementation of conservation measures to reduce deliveries is feasible. But, managing the customer base through such times becomes more challenging if imposition of the measures is extended to multiple years. Results indicate that multi-year conservation periods could occur with higher water demand on the system than currently envisioned.

The late-16th-century megadrought began with a very wet 1565 that would have filled the reservoirs as shown in , followed by two years below median NBS in 1566–1567. Reservoir storage would have stayed high, maintained by a modestly wet 1568. Then followed several years of low flows, of which 1571, 1573, and 1574 were particularly dry. Those would have resulted in depletion of about half of reservoir storage. A wet 1577 provided some storage recovery, followed by three dry years reducing stored water, and then a modest recovery in 1581. Four sequential dry years, 1582–1585, would have reduced reservoir storage to the 600 000 a-ft threshold, but only briefly, because high runoff in 1586 provided a fast-refresh to the system sufficient to nearly refill the reservoirs. The system would have been topped off in 1588 by a couple of modestly wet years. Then a 4-year drought with notably low NBS in 1590 would have reduced storage by half. More fast-refresh high runoff in 1594 would have been sufficient to fully refill the system and spill water, bringing that drought era to an end. So, although this megadrought period was long, occasional wet winters provided reservoir refills sufficient to keep the system in manageable water storage positions. The shorter but deeper 1660s drought () contains nine sequential years of low streamflow (1662–1670), which would have brought storage to the reduced allocation threshold. Storage recovery then ensued from a scattering of wetter years in the 1670s and a complete filling of the system in 1680.

There were no depletions of water storage to inoperable levels for any of the tree-ring or instrumental record droughts examined under the range of demand analyzed. The lowest identified storage level was 15% of capacity under a 900 000 a-ft/year demand assumption, but double that level with 800 000 a-ft/year demand, which is more representative of present demand on the system. Exploratory sensitivity analyses indicated that any finite but small depletion risks lie above one million a-ft annual demand, which is larger than what is expected in the coming decades. Robustness of the low depletion risk finding is supported by the consistent observation that amidst megadrought eras there are intermittent wet years sufficient to provide some system recovery and maintain sustainability. Those can be seen in the droughts of the late 16th century and 1660s ( and ) and through recent experience in the 2000s ( and ). Those naturally occurring replenishments are assisted by operating management protocols to increase groundwater pumping (up to a self-imposed maximum rate), and potentially impose reduced water delivery allocations during times of low storage to buffer against reservoirs reaching a totally depleted condition.

4.4 Megadrought severity: past and present

The onset and end of drought periods are often clear only in retrospect once years of data are available and impacts are identified. For this analysis the effect on reservoir water storage is the prime consideration by which to assess drought severity. Onset is established from a sequence of low streamflows forcing an extended reduction in reservoir storage. And similarly, end of drought is identified by onset of high flow sequences returning the system to a high storage condition. Severity of a drought period is defined as the accumulated deficit relative to an expectation criterion (Mishra and Singh Citation2010), which for this study is the system inflows that would maintain elevated reservoir storage under a given water demand scenario. If there is spillage of excess water from either side of the system it is not included in a severity calculation since that resource is permanently lost. So, for the case of 900 000 a-ft/year demand relying upon 850 000 a-ft/year of surface water (and 50 000 a-ft/year groundwater), drought severity is the cumulative sum:

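$$\text{Severity} = \sum_{i=1}^{n} \left(\mathrm{NBS}_i - 850\,000\right)$$

for i = 1 to n, where NBS_i is the net basin supply (a-ft) in year i of the drought and n is drought duration.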

The calculation was made for the periods identified in , and the severity evolution for some of those is given in . Runs of years with NBS below what is required to meet demand on an ongoing basis force the curves deeper into deficit. Deficits continue to accrue from drought onset until its end, and termination is evident once the cumulative deficit plateaus. Other surface water criteria could be used in the severity calculation, which would modify the slope of the curves in , but their relative positions would be unchanged.
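The evolving severity described above is a running total of annual NBS minus the surface water criterion. A minimal sketch follows, assuming an annual NBS series in a-ft and the 850 000 a-ft/year criterion from the text. The function name is hypothetical, and the exclusion of spilled water mentioned earlier is not modeled here.

```python
from itertools import accumulate

SURFACE_WATER_CRITERION = 850_000  # a-ft/year, from the 900 000 a-ft/year demand case


def severity_curve(nbs, criterion=SURFACE_WATER_CRITERION):
    """Cumulative deficit (a-ft) after each year of a drought period.

    Each entry is the running sum of (NBS_i - criterion), so values become
    more negative through runs of below-criterion years and recover
    partially in wet years.
    Assumption: spill is ignored, unlike the study's calculation.
    """
    return list(accumulate(value - criterion for value in nbs))
```

The most negative point on the curve gives the severity level reached during the period. Passing a different `criterion` changes the slope of every curve in the same way, which is consistent with the remark that relative positions would be unchanged.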

Figure 14. Evolving severity of the major historical drought periods. Severity is defined as the cumulative deficit of net basin supply versus what is required to meet demand over the drought’s duration. Accumulated shortages direct the curves downward. The current megadrought, as of 2018, is now the most severe on record.

Severity of the late-16th-century megadrought is −4.5 million a-ft accrued over 20 years. That was followed by some recovery in severity before the final 4-year run (1590–1593) accrued a deficit to the same severity level, finally ending that megadrought after 28 years.

The 1660s drought took only a decade to accrue −3.0 million a-ft severity. Calculations for the following years (1670s) are appended in , showing that those years did not accumulate to further severity below the −3.0 million a-ft level.

Effects of the 1870s and 1890s droughts are concatenated in under the hypothesis that they may constitute the same overall megadrought period. If various short-lived climate influences contributed to high streamflows in the mid-to-late 1880s, then one overall megadrought era might be suggested from , reflecting the multi-decadal low-frequency variability also found in the Upper CRB (Woodhouse et al. Citation2006). The severity of that entire period is −3.7 million a-ft over a little more than three decades.

The shallow character of the 1950s drought period is evident in , accruing only a −2.3 million a-ft deficit over 27 years per the instrumental record. A severity calculation from tree-ring data yields a more severe value. The difference between the datasets varies as the megadrought evolves, averaging −0.2 million a-ft (tree-ring more severe than instrumental). Only the 1950s period is long enough for a severity comparison between instrumental and tree-ring data. The 1890s and 2000s droughts are too short for a comparative severity calculation to average out tree-ring NBS values that were reconstructed too high or too low.

The tree-ring and instrumental records show that the current drought era began in 1995. At the time the tree-ring study was reported (Meko and Hirschboeck 2008), the cumulative deficit of the 2000s drought per instrumental data had been varying around −2.3 million a-ft. After only a decade, a megadrought was not yet in evidence, and the late-16th-century megadrought was the most severe on record. The 2011–2016 run of years then accrued a further flow deficit, making it clear that the region is in a megadrought as severe as the one in the late 16th century, at −4.5 million a-ft after 21 years. The 2018 water year has presented one of the lowest reservoir inflows on record. It places the current megadrought deficit at −5 million a-ft, which makes it the most severe in the last 700 years.

5 Conclusions

Paleoclimate research has revealed that megadroughts, defined as decadal or longer periods of average streamflow notably lower than the long-term median, have occurred in the southwestern USA during past centuries. Multi-decadal modes of natural variability influence their periodic occurrence and duration (Woodhouse et al. Citation2006) and, as confirmed by this study, raise drought risks posed to modern water resource systems. Researchers (e.g. Ault et al. Citation2014) report that state-of-the-art climate models do not capture the important low-frequency fluctuations, so those models must be set aside and impact analyses performed with available evidence from historical datasets.

The worst megadrought reported from paleoclimate research for the region occurred in the late 16th century, and the question has been posed whether such a drought, if it were to reoccur in the future, would threaten sustainability of modern water resource infrastructure. This study performed such an impact analysis for the SRP water supply system in central Arizona, USA, by applying a 645-year tree-ring reconstruction of streamflows to modeling the response of a reservoir system serving the metropolitan Phoenix area. It was found that quantitative care must be exercised in the translation of the tree-ring record to a time series of net basin supply (NBS) before system impacts can be accurately assessed. Assessments of the cumulative system impact of NBS time series were performed by a reservoir operations simulation model to evaluate surface water storage conditions.

Modeled reservoir storage did not reach a depleted state for any of the dozen megadroughts when delivering the range of water demand expectations on the system. Even during megadrought, occasional fast-refresh wet years occur to replenish water storage and sustain the system through threatening times. This is assisted by groundwater pumping and, if needed, reduced water delivery allocations that constitute part of the system’s operating protocols to buffer against reservoirs being drained during dry periods. The pumping requirements were quantified during system modeling and found to be within permitted groundwater recharge facility capacity (SRP-c), thereby supporting safe yield objectives in the Phoenix active management area (Gammage et al. Citation2011).

Reservoir storage levels modeled with 900 000 a-ft/year demand declined to briefly cross the threshold at which conservation measures would be introduced for reducing water deliveries in a couple seasons in some of the megadroughts. When modeled with the current demand of 800 000 a-ft/year, those occurrences were alleviated. Water resource systems are built to buffer against the effects of drought, and results demonstrate the resilience of system design and operating protocols. Although the paleoclimate record was unavailable when the system was designed, if the historical megadroughts were to reoccur, study results indicate the system is sustainable through such periods.

When megadrought reoccurs in the future it will evolve amid elevated greenhouse gas concentration levels in the atmosphere and higher temperatures in the SRP system. The temperature record reveals two past periods of warming (1920s–1930s, 1980s–1990s) that have elevated the time series 1.8°C above its 19th-century level. As found by Murphy and Ellis (Citation2014), precipitation has remained stationary although highly variable over the entirety of the instrumental record. Similarly, tests of winter runoff (constituting 75% of reservoir inflows, ) indicate stationarity of that time series. However, Hurst-Kolmogorov tests (Koutsoyiannis Citation2011) of summer runoff with ongoing data updates indicate emerging impairments of NBS from increasing temperatures. Later years in the tree-ring reconstruction and the majority of the overlap period analysis include those summer effects that will influence future megadrought outcomes. Future changes in watershed land use should also be anticipated in next steps of system evaluation. Significant population growth has evolved in recent decades in upper portions of the Verde River watershed, with consequent effects on tributary streamflows and groundwater, while the Salt River watershed has changed minimally by comparison.

This study confirmed that the late-16th-century megadrought was the most severe of those revealed in this tree-ring record of the Lower CRB. Other megadroughts predating this record are suggested in studies of the Upper CRB (Meko et al. Citation2007), the details of which are unavailable in Lower CRB records. While the late-16th-century megadrought was the most severe on record at the time of the Meko and Hirschboeck report (Citation2008), that is no longer the case. Analysis of the instrumental streamflow record since the late 19th century demonstrates that megadrought is a continuing hydroclimate feature in the modern era. The recent 1999–2004 and 2011–2016 runs of years below median flow were separated by a few intervening wet winters, but constitute one ongoing megadrought period now more than two decades in duration. Severity calculations as of 2018 demonstrate that this modern era megadrought is now more severe than the late-16th-century megadrought.

Considering the research findings by Woodhouse and Overpeck (Citation1998) that “… the sixteenth century drought appears to have been the most severe and persistent drought in the Southwest in the past 1000–2000 years”, the present megadrought is now a contender for the most severe in any record; and it has not yet ended. Its end will only be evident after more years of data. And, as indicated in , it could continue beyond the present time to reach a more severe level. Previous megadrought eras have ended within a few decades, as can be seen in , forced by low-frequency modes of natural variability (Ault et al. Citation2014). So, historical data indicate the current megadrought could persist for another decade. Previous megadroughts have been followed by pluvial periods, indicating there are risks of wet extremes on the watersheds in coming decades. Meanwhile, SRP system reservoirs are 47% full at the end of the 2018 water year, indicative of the system’s resilience to severe megadrought. As the present ongoing drought augments water management’s experience base, it should serve as the basis for adaptation planning and be considered the new megadrought of record for the southwest USA.

Acknowledgements

The authors thank the Salt River Project, and David Meko and Katherine Hirschboeck for the data provided for this study.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Ault, T.R., et al., 2014. Assessing the risk of persistent drought using climate model simulations and paleoclimate data. Journal of Climate, 27, 7529–7549. doi:10.1175/JCLI-D-12-00282.1.

- Ault, T.R., et al., 2016. Relative impacts of mitigation, temperature, and precipitation on 21st-century megadrought risk in the American Southwest. Science Advances, 2 (10), e1600873. doi:10.1126/sciadv.1600873

- Brekke, L. and Prairie, J., 2009. Long-term planning hydrology based on various blends of instrumental records, paleoclimate, and projected climate information [online]. U.S. Department of the Interior, Bureau of Reclamation. Available from: https://www.usbr.gov/research/projects/detail.cfm?id=6395.

- Burt, J.E. and Barber, G.M., 1996. Elementary statistics for geographers. 2nd ed. New York: The Guilford Press.

- Daly, C., et al., 2002. A knowledge-based approach to the statistical mapping of climate [online]. Climate Research, 22, 99–113. Available from: http://prism.oregonstate.edu [Accessed 17 Nov 2017].

- Daly, C., Neilson, R.P., and Phillips, D.L., 1994. A statistical-topographic model for mapping climatological precipitation over mountainous terrain. Journal of Applied Meteorology, 33, 140–158. doi:10.1175/1520-0450(1994)033<0140:ASTMFM>2.0.CO;2

- FEMA (Federal Emergency Management Agency), 1995. National mitigation strategy: partnerships for building safer communities. Washington, DC.

- Gammage, G., Jr., 2016. The future of the Suburban City. Washington, DC: Island Press.

- Gammage, G., Jr., et al., 2011. Watering the sun corridor. Phoenix, AZ: Arizona State University Morrison Institute for Public Policy.

- Koutsoyiannis, D., 2011. Hurst-Kolmogorov dynamics and uncertainty. Journal of the American Water Resources Association, 47 (3), 481–495. doi:10.1111/j.1752-1688.2011.00543.x

- Meko, D.M. and Hirschboeck, K.K., 2008. The current drought in context: a tree-ring based evaluation of water supply variability for the Salt-Verde River Basin [online]. Available from: http://www.ltrr.arizona.edu/kkh/srp2.htm

- Meko, D.M., et al., 2007. Medieval drought in the upper Colorado River Basin. Geophysical Research Letters, 34, L10705. doi:10.1029/2007GL029988

- Mishra, A.K. and Singh, V.P., 2010. A review of drought concepts. Journal of Hydrology, 391, 202–216. doi:10.1016/j.jhydrol.2010.07.012

- Murphy, K.W. and Ellis, A.W., 2014. An assessment of the stationarity of climate and stream flow in watersheds of the Colorado River Basin. Journal of Hydrology, 509, 454–473. doi:10.1016/j.jhydrol.2013.11.056

- Phillips, D.H., et al., 2009. Water resources planning and management at the Salt River Project, Arizona, USA. Irrigation and Drainage Systems, 23 (2–3), 109–124. doi:10.1007/s10795-009-9063-0

- Prairie, J., et al., 2008. A stochastic nonparametric approach for streamflow generation combining observational and paleoreconstructed data. Water Resources Research, 44, W06423. doi:10.1029/2007WR006684

- SRP-a (Salt River Project). Dams and reservoirs managed by SRP [online]. Available from: https://www.srpnet.com/water/dams

- SRP-b (Salt River Project). Watershed connection [online]. Available from: http://www.watershedconnection.com

- SRP-c (Salt River Project). SRP stores water for tomorrow [online]. Available from: https://www.srpnet.com/water/waterbanking.aspx

- USGS (US Geological Survey). National Water Information System (NWIS) [online]. Available from: http://waterdata.usgs.gov/nwis [Accessed 18 May 2018].

- Wilhite, D.A., Svoboda, M.D., and Hayes, M.J., 2005. Monitoring drought in the United States: status and trends. In: V.K. Boken, A.P. Cracknell, and R.L. Heathcote, eds. Monitoring and predicting agricultural drought: a global study. Oxford, UK: Oxford University Press, 121.

- Woodhouse, C.A., Gray, S.T., and Meko, D.M., 2006. Updated streamflow reconstructions for the Upper Colorado River Basin. Water Resources Research, 42, W05415. doi:10.1029/2005WR004455

- Woodhouse, C.A. and Overpeck, J.T., 1998. 2000 years of drought variability in the Central United States. Bulletin of the American Meteorological Society, 79, 2693–2714. doi:10.1175/1520-0477(1998)079<2693:YODVIT>2.0.CO;2