?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Mitigation of adverse effects of global warming relies on accurate flow projections under climate change. These projections usually focus on changes in hydrological signatures, such as 100-year floods, which are estimated through statistical analyses of simulated flows under baseline and future conditions. However, models used for these simulations are traditionally calibrated to reproduce entire flow series, rather than statistics of hydrological signatures. Here, we consider this dichotomy by testing whether performance indicators (e.g. Nash-Sutcliffe coefficient) are informative about model ability to reproduce distributions and trends in the signatures. Results of streamflow simulations in 50 high-latitude catchments with the 3DNet-Catch model show that high model performances according to traditional indicators do not provide assurance that distributions or trends in hydrological signatures are well reproduced. We therefore suggest that performance in reproducing distributions and trends in hydrological signatures should be included in the process of model selection for climate change impact studies.

Editor S. Archfield Associate Editor R. Singh

1 Introduction

Global warming and changes in climate conditions can have adverse effects on water resources. They can affect water supply, irrigation or hydropower production (Xu Citation1999a), and, consequently, economy, safety and ecosystem sustainability (Olsson et al. Citation2016). In many regions around the world, global warming is expected to increase the frequency of hazardous events, such as floods (Dankers and Feyen Citation2009, Dankers et al. Citation2014) or droughts (Wasko et al. Citation2021), both of which cause substantial economic losses (Roudier et al. Citation2016). Global warming acts together with rapid urbanization and population growth in some regions, thereby increasing pressure on water resources and potentially leading to water scarcity (Schewe et al. Citation2014). Therefore, adequate strategic planning and implementation of optimal climate-change adaptation measures are of vital socio-economic importance (Gangrade et al. Citation2020), especially with regard to the realization of the Agenda 2030 Sustainable Development Goals that highlights the importance of water as an integral part of human development (United Nations Water Citation2015, WWAP (United Nations World Water Assessment Programme) Citation2015). However, the identification of relevant adaptation measures largely depends on reliable assessment of climate change impacts on water resources (Feng and Beighley Citation2020).

Climate change impacts on water resources are typically assessed by comparing simulated hydrological variables over a future period to those simulated in the baseline (i.e. historical/present) period (Hakala et al. Citation2019). Climate change impact studies (CCISs) are focused primarily on flows, since they represent an integrated catchment response to alterations in hydroclimatic processes (Beck et al. Citation2017). In particular, CCISs are concerned with changes in flow statistics (Olsson et al. Citation2016), such as mean, high or low flows (Lehner et al. Citation2006, Ludwig et al. Citation2009, Gosling et al. Citation2017, Mishra et al. Citation2020). Analyses of high flows (e.g. Booij Citation2005, Kay et al. Citation2009, Dams et al. Citation2015, Chen and Yu Citation2016) and low flows (e.g. Parajka et al. Citation2016, Pokhrel et al. Citation2021) are important because these types of events can have adverse and far-reaching consequences for societies and ecosystems (Pechlivanidis et al. Citation2016). Therefore, they are essential from the perspective of water resources management (Farmer et al. Citation2018). Changes in flow seasonality and timings, or duration of some specific flows, are also often analysed (Cayan et al. Citation2001, Stewart et al. Citation2005, Addor et al. Citation2014, Mendoza et al. Citation2016, Feng and Beighley Citation2020), since they can be significant from an economic and environmental point of view (Blöschl et al. Citation2017, Blöschl et al. Citation2019b, Wasko et al. Citation2020). These flow regime features (e.g. mean or extreme flows, runoff timings and durations) are hereafter referred to as hydrological signatures (McMillan Citation2021).

Future flows are obtained by running a hydrological model with the downscaled and bias-corrected outputs of a general circulation model (GCM), which is run assuming one or several greenhouse gas concentration trajectories, i.e. representative concentration pathways (Refsgaard et al. Citation2014, Hakala et al. Citation2019). The GCM outputs have rather coarse spatial resolution and have to be downscaled to be suitable for hydrological modelling (Xu Citation1999b, Refsgaard et al. Citation2014, Teutschbein et al. Citation2010). Distributions of the downscaled GCM outputs display strong biases compared to their observed counterparts (primarily precipitation and temperature); thus, they have to be bias-corrected before being used for hydrological simulations (Teutschbein and Seibert Citation2012, Citation2013). Bias-correction improves the accuracy in reproducing distributions of climatic variables, as well as the projected flows (Osuch et al. Citation2016, Hakala et al. Citation2019).

Projected changes in the hydrological signatures depend on the assumed trajectories, and on each element in the complex modelling chain (Velazquez et al. Citation2013); thus, they are accompanied by uncertainties (Prudhomme and Davies Citation2009a, Citation2009b). Extensive research on these uncertainties often indicates climate models as the key source of uncertainty in the projections (Wilby and Harris Citation2006, Minville et al. Citation2008, Bastola et al. Citation2011, Najafi et al. Citation2011, Teng et al. Citation2012, Karlsson et al. Citation2016, Joseph et al. Citation2018). However, in some cases uncertainties induced from hydrological models can be similar or even larger than uncertainties stemming from the climate models (Bosshard et al. Citation2013, Pechlivanidis et al. Citation2016, Eisner et al. Citation2017). For example, low-flow projections are highly sensitive to the choice of hydrological models, presumably due to their limited skills in reproducing low flows (Maurer et al. Citation2010; Velazquez et al. Citation2013, Vansteenkiste et al. Citation2014, Parajka et al. Citation2016, Gangrade et al. Citation2020, Huang et al. Citation2020).

Selection of a hydrological model is a key step in a modelling study (Beck et al. Citation2017, Addor and Melsen Citation2019), including CCISs (Lespinas et al. Citation2014, Seiller et al. Citation2017). However, identification of models that are suitable for CCISs is quite challenging, as modelling under changing climate has remained one of the unsolved problems in hydrology (Blöschl et al. Citation2019a). In particular, there is a lack of specific guidance on the evaluation of model suitability for CCISs, and on model selection (Fowler et al. Citation2018b). Model suitability for CCISs is often assumed to be equal to model transferability that implies consistently good performance across various climatic conditions encountered in the record period (Kirchner Citation2006, Krysanova et al. Citation2018, Motavita et al. Citation2019). Transferable models are generally deemed to reasonably represent runoff generation processes (Euser et al. Citation2013); thus, application of such models is expected to yield credible flow projections (Krysanova et al. Citation2018). Model transferability is traditionally appraised by applying a split- or a differential split-sample test (DSST) (Klemeš Citation1986). The latter is considered more indicative of model performance under contrasting climate conditions (Seibert Citation2003). Recently, several extensions of the DSST have been proposed to enable even more rigorous evaluation of model transferability (Coron et al. Citation2012, Thirel et al. Citation2015, Fowler et al. Citation2018b). However, despite its robustness, DSST can fail to identify transferable models in some instances (Fowler et al. Citation2018b). Furthermore, good performance under current conditions (Krysanova et al. Citation2018), proven by a DSST or an extension thereof (Fowler et al. Citation2018a) is no guarantee that the model can perform well under future climate. This can be attributed to potential modifications of catchment processes (Blöschl and Montanari Citation2010, Chiew et al. Citation2015) or even emergence of processes not encountered in the record period (Peel and Blöschl Citation2011).

Model performance is typically quantified in terms of numerical indicators (efficiency measures), such as Kling-Gupta (Gupta et al. Citation2009) or Nash-Sutcliffe (Nash and Sutcliffe Citation1970) coefficients. These performance indicators (hereafter referred to as indicators) are based on aggregated statistics of the residuals, i.e. differences between observed and simulated flow series (Yilmaz et al. Citation2010). As such, they cannot fully reveal all aspects of model performance (Crochemore et al. Citation2015). For example, these indicators are often skewed towards high flows (Legates and McCabe Citation1999). To put more emphasis on low flows, various flow transformations, such as logarithms, are applied (Oudin et al. Citation2006, Fenicia et al. Citation2007, Santos et al. Citation2018). Furthermore, numerous parameter sets can yield quite similar values of a performance indicator (equifinality, Beven and Binley Citation1992), even though they result in different simulated hydrographs, especially outside the calibration period (Wagener et al. Citation2003). In addition to these indicators, various hydrological signatures can be used to complement evaluations of model performance and facilitate model diagnostics (Yilmaz et al. Citation2008, Pfannerstill et al. Citation2014, Topalović et al. Citation2020, McMillan Citation2021), and can also be used as objective functions in the model calibration (Westerberg et al. Citation2011, Shafii and Tolson Citation2015). Evaluation of model performance in extreme flows, such as annual maxima (Mizukami et al. Citation2019), has been proposed in the literature. Studies on this topic show that high performance in extreme flows is difficult to achieve because model calibration generally leads to a “squeezing” of the flow distribution (i.e. distribution tails move towards the central value), leading to overestimation of low flows and underestimation of high flows (Farmer et al. Citation2018).

Notwithstanding the significant progress in the field of hydrological model evaluation, the gap between information offered by the standard evaluation procedures and traditionally used performance indicators, and the requirements of CCISs, still persists. This discrepancy can be attributed to different approaches to calibration and evaluation of hydrological and of climate models. The former models are conditioned to reproduce entire series, while the latter are expected (and limited) to reproduce distributions over a 30-year period (Ricard et al. Citation2019). Therefore, transferability of hydrological models cannot be fully translated into transferability in the impact-assessment domain (Chen et al. Citation2016). Fowler et al. (Citation2018a) argued that research on model applicability under changing climate conditions is insufficient, and that there is still a clear need for novel, more robust model structures, innovative calibration strategies and modelling practices in general (Gelfan et al. Citation2020), including novel evaluation procedures that can identify the most suitable models for CCISs (Coron et al. Citation2012, Thirel et al. Citation2015).

Evaluation of hydrological model suitability for CCISs should be focused on hydrological signatures that are pertinent to these studies, such as extreme flows or signatures that have various eco-hydrological applications (Mendoza et al. Citation2015, Krysanova et al. Citation2017, Citation2018, Ricard et al. Citation2019). This is in line with the concept of model “fitness for the said purpose” (Xu Citation1999c). Evaluation of model suitability for CCISs should, thus, include distributions of the selected hydrological signatures, because the impact assessments rely on these distributions, rather than on the entire series. Model performance in reproducing distributions of signatures is seldom considered (e.g. Willems Citation2009), especially its potential links to commonly used performance indicators (Coffey et al. Citation2004). Nevertheless, the hydrological community recognized the importance of accurate reproduction of signatures’ distributions, so bias-correction of simulated flows was suggested to improve this aspect of model performance (González-Zeas et al. Citation2012, Farmer et al. Citation2018, Daraio Citation2020, Bum Kim et al. Citation2021). Alternatively, hydrological model parameters alone (Ricard et al. Citation2019), or together with parameters of a bias-correction transfer function, can be optimised to improve accuracy in reproducing signatures’ distributions in the baseline period (Ricard et al. Citation2020). Model ability to reproduce trends in the signatures should also be evaluated, since such trends can affect the assessment of impacts of future climate (Cunderlik and Ouarda Citation2009, Wasko et al. Citation2020). Accurate reproduction of the trends poses a challenge to hydrological modelling, and conflicting conclusions about model performance in this respect were reported (Lespinas et al. Citation2014, Fowler et al. Citation2020, Wasko et al. Citation2021).

In this paper, we hypothesized that commonly used performance indicators are not necessarily informative about hydrological model ability to reproduce observed (and potential future) distributions and trends in hydrological signatures relevant for climate change impact assessments. Such signatures include, for example, flow statistics (e.g. mean, maximum and minimum flows), or runoff timings and durations. To test this hypothesis, we (1) evaluated model performance in reproducing distributions and trends in series of the signatures, and (2) examined relationships between numerous performance indicators and model performance in terms of distributions and trends in the signatures. This study advances previous research on this subject by taking numerous indicators and signatures into consideration, and by comprehensively analysing relationships between these two facets of model performance. All analyses were conducted for 50 Swedish catchments, covering a wide range of hydroclimatic regimes in high latitudes.

2 Methodology

2.1 Catchments and data

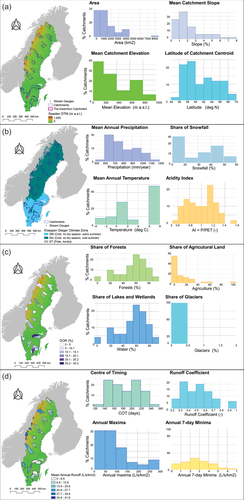

The study focuses on Sweden, a country in Northern Europe that covers an area of approximately 408 000 km2 with an elevation range of −2 to 2100 m.a.s.l. (SLU Citation2015; )). The country’s area comprises 69% forests, 9% wetlands, 8% shrubs and grassland, 8% agriculture, 3% human settlements and 3% open land or glaciers. According to the Köppen-Geiger classification (Kottek et al. Citation2006), Sweden features three major climate zones: the polar tundra climate zone (ET) in the Scandinavian Mountains in northwestern Sweden, the subarctic boreal climate (Dfc) in central and northern Sweden, and the warm-summer hemiboreal climate zone (Dfb) in southern Sweden ()).

Figure 1. The selected catchments in Sweden and their properties: (a) locations of the catchments and distributions of their key topographic properties (area, slope, elevation and latitude); (b) climate of the selected catchments, and the distributions of mean annual precipitation, percentage of precipitation in the form of snowfall, mean annual temperature and the aridity index (ratio of mean precipitation to mean potential evapotranspiration); (c) degree of regulation (DOR, percentage of mean annual runoff from the drainage area of the reservoir) in the catchments and distributions of shares of prevailing land-use types, and water surfaces and glaciers; and (d) mean annual runoff in the catchments and distributions of the centre of timing (COT, in days of water year; Kromos et al. Citation2016), runoff coefficients, and annual maxima and seven-day minima. The hydroclimatic variables presented in the figure were obtained over water years 1962–2020.

Daily precipitation, temperature, and flow series for considered catchments over the period 1961–2020 were obtained from a publicly accessible database (http://vattenwebb.smhi.se/), maintained by the Swedish Meteorological and Hydrological Institute (SMHI). Geospatial data for the streamflow stations were downloaded from SMHI’s Svenskt Vattenarkiv (SVAR) database (Eklund Citation2011, Henestål et al. Citation2012). Daily temperature and precipitation series were obtained from the SMHI’s spatially interpolated 4 km × 4 km national precipitation–temperature grid (Johansson Citation2000, SMHI Citation2005). Mean catchment precipitation and temperature were calculated as an area-weighted average of all grid cells partly or fully lying within the catchment boundaries.

Only catchments for which continuous daily series from January 1961 to December 2020 were available, and which had low percentages of lakes, glaciers and urbanized areas and a low degree of regulation (DOR, i.e. reservoir volume relative to the mean annual runoff volume from its draining area; )) were selected for this study. Regarding the DOR criterion, the catchments were selected according to the results of preliminary analyses, rather than according to a specific threshold. Specifically, we selected only those catchments (1) for which inspection of hydrographs failed to detect any abrupt changes, and (2) for which modelling results, including those presented in the relevant literature on Swedish catchments (Girons Lopez et al. Citation2021), showed satisfactory model performance. Such catchments were considered not heavily regulated, and, therefore, suitable for this study. Only 50 catchments met all the criteria above, six of which were nested within larger catchments. Seventeen selected catchments were regulated (principally with DOR below 15%; )), with the dams that were built before 1961. Key properties of the selected catchments are presented in and in Table S1 of the Supplementary material.

The 50 high-latitude catchments in this study span a latitudinal gradient from 56°N to 68°N and thus adequately reflect spatial variations in Swedish climate, topography, and land use (), Table S1). Over the past 60 years (1961–2020), annual mean temperature in those catchments was on average 2.7°C, with an annual precipitation of 762 mm. In half of the catchments, snowfall comprised approximately one third of annual precipitation, which is consistent with the relatively low mean annual temperatures, which were below 0°C in some catchments ()). The selected catchments were mainly snow-dominated (20 catchments) or transitional (21 catchments), while only nine catchments were rain-dominated according to the centre of timing (Kormos et al. Citation2016; )). Aridity indices, which were mainly lower than 1.5, suggest that the selected catchments are predominantly humid (UNEP Citation1992, Wang et al. Citation2021). Median annual runoff in the selected catchments was 379 mm (12 L/s/km2) over the record period. However, there were considerable spatial variations: specifically, the highest runoff was observed in the Scandinavian Mountains in northwestern Sweden, characterized by mountain snowmelt, and the lowest in southeastern Sweden ()), featured by less pronounced flow peaks. Hydrological regimes of the selected catchments are characterized by relatively high runoff coefficients (greater than 0.5) and high baseflow rates, i.e. baseflow index exceeded 0.5 in most catchments. Mean annual runoff was positively skewed in most catchments, suggesting a tendency towards occurrences of high flows (Carlisle et al. Citation2017).

2.2 The 3DNet-Catch model

In this study, runoff was simulated with the 3DNet-Catch model (Todorović et al. Citation2019). This model requires precipitation, temperature, and potential evapotranspiration series for simulations, and observed flows for calibration. Hydrological simulations consist of runoff simulations and routing, and can allow for correction of precipitation and temperature with elevation in semi- or fully-distributed set-ups (Stanić et al. Citation2018, Todorović et al. Citation2019).

2.2.1 Model structure

Runoff is simulated by employing the interception, snow and soil routines (see Figure S1 of the Supplementary material). Interception is modelled by a single storage with a flexible capacity that varies according to the leaf area index. All precipitation that occurs at temperatures below a particular threshold is considered snow. Snowmelt is simulated by applying the degree-day method with a seasonally varying melt factor. Spatial heterogeneity of the snow cover and snowpack sublimation are also accounted for. In the 3DNet-Catch model, soil is represented by a surface layer and an arbitrary number of subsurface layers that can have different parameters, such as thickness or porosity. Surface runoff, percolation, and evaporation take place in the surface layer (Todorović et al. Citation2019). Surface runoff is simulated by applying the Soil Conservation Service Curve Number (SCS-CN) method (Mishra and Singh Citation2003), which is combined with continuous simulation of soil moisture. Surface runoff can be augmented by the excess water from subsurface soil layer(s). Percolation into the deeper soil layer is simulated by using an analytically solved non-linear outflow equation combined with the Brooks-Corey relation (Brooks and Corey Citation1964). The water balance of the subsurface layer(s) comprises percolation from above as the inflow, and percolation to a deeper layer, or non-linear reservoir, and transpiration as outflows. Both linear and non-linear outflow equations are employed for runoff routing to a catchment outlet. Surface runoff is simulated with a linear outflow equation. Percolation from the deepest soil layer represents inflow to the non-linear reservoir with a threshold: water that exceeds the threshold is routed by an additional linear reservoir and produces subsurface runoff. Water below the threshold is routed by employing the non-linear equation and produces baseflow.

The chosen 50 catchments were characterized by relatively low variations in land use and in elevation span; hence, a spatially lumped model set-up was used in this study, without any corrections of meteorological variables with elevation. The adopted model version has one surface and one subsurface soil layer that share the same parameters, except for thickness and hydraulic conductivity. This model version has 21 free model parameters (see Table S2 of the Supplementary material for parameter list and their prior ranges).

2.2.2 Model calibration and evaluation

For each of the 50 catchments, model parameters were estimated in the first half of the record period (water years 1962–1991), and evaluated in the remainder (water years 1991–2020). Additionally, the model was run in the full record period (water years 1962–2020). All simulations were conducted at a daily time step, with one preceding water year for model warm-up. The model parameters were estimated by applying the Generalised Likelihood Uncertainty Estimation (GLUE) method (Beven and Binley Citation1992). For each catchment, only one best-performing set was chosen out of an initially sampled population of 75 000 from the uniform parameter prior distributions generated with the Latin hypercube sampling (Keramat and Kielbasa Citation1997). The parameter prior distributions were common to all catchments (Table S2). The best set in each catchment was selected according to a composite objective function (OF) that includes Kling-Gupta efficiency (KGE; Gupta et al. Citation2009) computed from daily flows and inverse-square root transformed flows (see for equations) as follows:

This composite OF was selected to obtain balanced performance in high and low flows (Santos et al. Citation2018, Mizukami et al. Citation2019). Although no optimization algorithm was employed in this study, the term “objective function” is nevertheless used to distinguish it from other performance indicators used for the analyses.

To examine impacts of parameter equifinality, we analysed the performance of the 50 best behavioural parameter sets in one catchment. The best sets were selected from the 75,000 initially sampled ones, based on their values of OF (EquationEquation 1(1)

(1) ) in the calibration period. As the reference catchment we selected Assembro ()), since it is a lowland, medium-sized catchment in central-south Sweden with catchment characteristics that correspond relatively well to the average characteristics of the selected catchment set, primarily in terms of hydrological regime features. Specifically, this is a transitional catchment (according to criteria by Kormos et al. Citation2016), with a runoff coefficient of 0.5 and a negligible percentage of lakes (7.4%). The number of behavioural sets was set to 50 to make the results of this simulation comparable to those conducted in the 50 catchments. The objective of the analyses with the best behavioural sets was to examine whether equifinality in terms of performance indicator(s) translates into equifinality in performance in reproducing distributions or trends in the series of signatures. Posterior parameter distributions (with OF as a likelihood measure), or ensembles of simulated flows with the behavioural sets, were not considered as testing the hypothesis of this study did not necessitate such results.

2.3 Evaluation of model performance in reproducing statistical properties of hydrological signatures

2.3.1 Selection of hydrological signatures

To assess the ability of 3DNet-Catch to reproduce hydrological signatures relevant for CCISs, we compiled a suite of signatures (). In addition to mean flows (Qmean), the spring flows were analysed (Qspring, March through June) to detect changes in runoff seasonality, which can be anticipated in high-latitude catchments in a warmer future climate due to a decrease in snowpack and its duration, and earlier snowmelt (Harder et al. Citation2015, Teutschbein et al. Citation2018, Kiesel et al. Citation2020).

Extremely high and low flows of given durations (e.g. annual daily maxima or minimum seven-day flows) are often used for assessment of flood hazard and environmental flows, and are important for consideration of climate change impacts on ecological and social systems (Gain et al. Citation2013). Assessment of climate change impacts on design floods is essential for dam safety (Bergström et al. Citation2001); therefore, the hydrological model should accurately reproduce distributions of maximum flows. Annual minima of given duration, or number of days with flows below a certain threshold, are relevant for assessment of allowable water withdrawals, including water supply withdrawals, or for the assessment of allowable loads from treatment plants, all of which are important for competent water management (Richter et al. Citation1996, Vis et al. Citation2015). As there are considerable uncertainties in simulating such extreme flows (Mizukami et al. Citation2019), the analyses in this study were complemented by moderately low and moderately high flows, represented by different flow percentiles (Gudmundsson et al. Citation2012).

Impact studies are often focused on changes in flow durations and timings that represent runoff seasonality (McMillan Citation2021). Consequently, model performance in reproducing runoff timing should be preferred over model efficiency in pre-defined subperiods (seasons), since these periods can vary over the years, especially under changing climate. Runoff timings are commonly computed from ordinal day of a calendar year and converted to a corresponding angular value between 0 and 2π (Cayan et al. Citation2001). Alternatively, runoff timings can be linearized if computed according to water years starting on 1 October (Wasko et al. Citation2020), which is the approach adopted in this study. Details on the selected hydrological signatures are presented in .

2.3.2 Evaluation of model performance in reproducing distributions of the hydrological signatures

In some studies, model performance in extreme flows was conducted by comparing some specific flow quantiles obtained from observed and simulated flows, such as 100-year floods (Dankers et al. Citation2014, Hoang et al. Citation2016). However, fitting a distribution, which includes selection of a distribution function and parameter estimation method, significantly affects estimated quantiles, particularly for high return periods, and can, therefore, affect reasoning on model performance in reproducing distributions of signatures (Dankers and Feyen Citation2009). Therefore, such an approach was not adopted here, and model evaluation was limited to a comparison of the series signatures obtained from observed and simulated flows.

Model ability to reproduce distributions of the selected signatures was conducted in (1) various catchments with different hydrological regimes, and (2) different periods. This set-up was adopted to reveal whether potential relationships between performance indicators and model ability to reproduce the distributions remain consistent across hydrological regimes and periods (Potter et al. Citation2010, Hannaford et al. Citation2013). Additionally, the analyses were repeated with numerous behavioural parameter sets (Beven and Binley Citation1992) in one catchment to examine whether equifinality in terms of performance indicators also implies equifinality in reproducing distributions of signatures.

The Wilcoxon rank sum (WRS) test (Conover Citation1999) was employed to evaluate model ability to reproduce distributions of the observed hydrological signatures. WRS is a nonparametric test used to compare continuous distributions of two samples, which do not necessarily have to be of the same size (Asadzadeh et al. Citation2014). The test does not imply any assumptions on the true form of probability distributions of the analysed variables, i.e. the two samples do not have to be normally distributed or equal in size (Kottegoda and Rosso Citation2008), and they can contain ties (García-Portugués Citation2022). The null hypothesis (H0) of the WRS test is that the medians of the samples’ distributions are equal, with the underlying assumption that the two distributions have the same shape and variance (Montgomery and Runger Citation2003). The WRS test statistic, z, is considered normally distributed if the sample size is greater than 15 (Kottegoda and Rosso Citation2008).

To evaluate model ability to reproduce distributions, annual series of the selected hydrological signatures () were obtained from daily observed and simulated flows. These series were compared by applying the WRS test: in the case that the test null hypothesis was not rejected at a 5% level of significance, we assumed that the model reproduced distribution of a signature to a satisfactory degree.

2.3.3 Evaluation of model performance in reproducing trends in hydrological signatures

Model performance in reproducing trends has become an important part of model evaluation, particularly in the context of CCISs (Lespinas et al. Citation2014, Pechlivanidis et al. Citation2016, Hattermann et al. Citation2017). However, a common, systematic approach to evaluating this aspect of model performance has not been adopted yet. In this paper, a model was considered to properly reproduce a trend in a series if either (1) trends in the series obtained from both observed and simulated flows were not statistically significant, or (2) trends in both series were statistically significant and had the same sign. Otherwise, we considered that the model did not properly reproduce trends.

The Mann-Kendall test (Kendall Citation1938, Mann Citation1945) with a 5% of level of significance was applied to identify statistically significant trends in the hydrological signatures. The test was run in all 50 catchments (again for the calibration, evaluation and full record periods), as well as for the model runs with the 50 best parameter sets in the Assembro catchment over the calibration period. Serial autocorrelation can affect the results of this test (e.g. Fatichi et al. Citation2009, Harder et al. Citation2015) and, therefore, all considered series were tested for the presence of autocorrelation by applying the Box-Ljung test (Ljung and Box Citation1978) prior to testing for the presence of a trend. Thereafter, the Sen’s slope estimator was used to determine the sign of a trend (Sen Citation1968).

2.4 Relationships between performance indicators and hydrological signatures

2.4.1 Selection of performance indicators

Numerous performance indicators can be used to quantify model efficiency (Crochemore et al. Citation2015), and an entire set of indicators can be attributed to every simulated flow series. In the present study, 18 indicators were chosen to represent model performance (), including indicators focused on performance in high flows (e.g. KGE or Nash-Sutcliffe (NSE) coefficients), low flows (KGE or NSE computed from transformed flows or low-flow segments of flow duration curves), runoff volume (VE) or dynamics (coefficient of determination R2). Indicators based on the squared residuals, such as NSE, and on absolute values of residuals, such as index of agreement (IA), were included (Willmott et al. Citation2012), as well as indicators computed from flow duration curve segments (Pfannerstill et al. Citation2014, Todorović et al. Citation2019). All selected indicators are dimensionless, with higher values suggesting better model performance. A vValue of 1 indicates perfect fit according to all selected indicators, and almost none of the indicators has a lower limit (except for R2, which takes values between 0 and 1).

Selection of the indicators was conditioned by the set-up of analyses of their informativeness about model ability to reproduce statistical properties of signatures. Specifically, some analyses within this study necessitated a monotonic relationship between the value of the performance indicator and model efficiency, and could not be conducted with e.g. percent bias in runoff volume, which can take positive and negative values, and where a value of 0 indicates a perfect fit. The selected performance indicators were calculated separately for each of the 50 catchments for the calibration, evaluation and full record periods, as well as for the 50 best model runs in the Assembro catchment in the calibration period (GLUE).

2.4.2 Informativeness of performance indicators about model performance in reproducing distributions of hydrological signatures

To analyse whether a specific performance indicator can provide insights about the model ability to reproduce distributions of hydrological signatures, we divided the simulations in the 50 catchments (separately for the calibration, evaluation and full record periods), as well as the simulations conducted with the 50 best parameter sets in Assembro, into two groups. One group comprised simulations that properly reproduced distributions according to the WRS test, and the second group included simulations that did not (i.e. H0 of the WRS test was rejected at a 5% level of significance). The values of a performance indicator of the two groups were then compared by applying another WRS test (for the sake of clarity, this particular test is denoted the WRS-2 test hereafter). Rejection of H0 at a 5% level of significance suggests that the median values of the performance indicators of the two groups were different. The alternative hypothesis of the WRS-2 test stated that the median of the indicators of the first group of simulations was greater than the median of the second group. As larger indicator values suggest better model performance, a rejection of the null hypothesis in favour of this alternative hypothesis suggests that higher indicator values would be indicative of better model performance in reproducing distributions of the selected signature. In other words, rejection of H0 of the WRS-2 test would suggest that a particular indicator can be informative about model ability to reproduce the distributions.

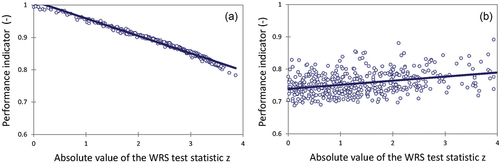

Further examinations of the relationships between performance indicators and model performance in reproducing distributions were based on Pearson correlation coefficients between values of indicators and absolute values of the WRS test statistic, z. High absolute values of the WRS test statistic suggest that it is within the rejection region and, thus, that the distributions are not well reproduced. For example, test statistics greater than 1.96 (or smaller than −1.96) imply rejection of H0 at the 5% level of significance (Kottegoda and Rosso Citation2008). Since this is a two-tailed test, absolute values of the test statistic are analysed. Strong negative correlations between the values of indicators and the absolute z values would suggest that higher indicator values would be informative about model ability to simulate the distributions of signatures ()). Here, correlation coefficients below −0.7 were considered an indication of strong correlation (Blasone et al. Citation2007). Weak negative or positive correlations would suggest that the indicators are not informative about model performance regarding distributions of signatures ()).

Figure 2. Illustration of relationships between performance indicator values and the absolute values of the Wilcoxon rank sum (WRS) test statistic. Larger values of performance indicators imply higher model efficiency, while larger absolute values of the test statistics imply rejection of the WRS test null hypothesis, i.e. the signatures obtained from the observed and simulated flows have statistically different medians. Panel (a) illustrates a performance indicator that can be considered informative about model ability to simulate the distribution of a signature, while panel (b) illustrates a non-informative indicator.

2.4.3 Informativeness of performance indicators about model performance in reproducing trends in hydrological signatures

To examine whether a specific indicator was informative in terms of model ability to reproduce trends, we followed the same logic as for the analysis of the distributions. The set of simulations was divided into two groups: one group containing the simulations that were able to reproduce trends according to the set criteria and one group containing all other simulations. The indicator values of the two groups were again compared using the WRS-2 test. Rejection of the WRS-2 test null hypothesis at a 5% level of significance in favour of the alternative hypothesis would suggest that higher indicator values could be used as an indication of model ability to reproduce trends in a simulation period.

3 Results

3.1 Hydrological model: overall performance of 3DNet-Catch

For each catchment, one parameter set that yielded maximum values of the composite OF (EquationEquation 1(1)

(1) ), which included KGE values computed from daily flows and reciprocals of square root-transformed flows (KGE1/√Q), was selected. The median values of the OF across the catchments varied between 0.71 (evaluation) and 0.76 (calibration). The minimum values of the OF ranged between 0.41 (evaluation) and 0.73 (GLUE), while the maximum values ranged between 0.75 (GLUE) and 0.88 (calibration). No clear relationship between the values of the OF and the geographical location of the catchments was found (). For example, slightly lower values of the OF were obtained in a few catchments in the northwest of Sweden at high elevations, but also in a few catchments in mid and southern Sweden. The highest values of the OF were obtained in a few catchments in the northeast and southwest of the country. Performance in the smaller, nested catchments was quite similar to performance in the larger catchments encompassing them (Table S3 of the Supplementary material). Ranges of the OF values were rather consistent across the three simulation periods, while low variation across the GLUE behavioural sets suggests strong equifinality.

Figure 3. Model performance across the 50 catchments in the calibration (left), evaluation (mid) and full record periods (right panels). Model performance is quantified in terms of the objective function (OF, EquationEquation 1(1)

(1) ; panels a–c), and Kling-Gupta efficiency coefficients obtained from daily flows (KGE; panels d–f) and log-transformed flows (KGE[1/√Q]; panels g–i).

![Figure 3. Model performance across the 50 catchments in the calibration (left), evaluation (mid) and full record periods (right panels). Model performance is quantified in terms of the objective function (OF, EquationEquation 1(1) OF=0.8KGE+0.2KGE1/Q(1) ; panels a–c), and Kling-Gupta efficiency coefficients obtained from daily flows (KGE; panels d–f) and log-transformed flows (KGE[1/√Q]; panels g–i).](/cms/asset/6f19cf1c-cd38-4b06-af73-b9fb0970d047/thsj_a_2104646_f0003_c.jpg)

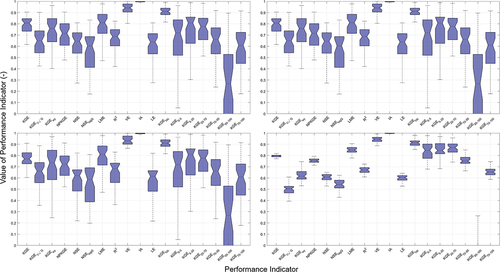

The median values of the majority of the performance indicators were satisfactory (), including KGE and KGE1/√Q, generally suggesting a fair balance between the two parts of the OF. The highest median values (>0.9) were obtained for the IA across the simulations. Good performance was also achieved in terms of VE, showing that the model can accurately simulate runoff volume. The median VE values ranged from 0.92 in the full record period to 0.95 in the GLUE simulations. Another indicator with quite high values was KGEfdc (the median values ranged between 0.88 and 0.92), suggesting that the model, on average, accurately reproduced the flow duration curve (FDC) in all simulation periods. The poorest performance, on average, was obtained in reproducing the lowest-flow FDC segment (KGE95–100), especially in the GLUE simulations that yielded negative medians of this indicator. The median values of all the other indicators exceeded 0.50; however, there were some variations across the simulations: the highest median performance was obtained in the calibration period, while performance in the remaining simulations was slightly lower in most indicators. A few exceptions in this regard included KGE computed over two low-flow segments (namely, KGE95–100 and KGE70–100), which yielded very slightly higher values in the evaluation period.

Figure 4. Model performance in the 50 catchments in the calibration, evaluation and full record periods, and performance of the 50 best parameter sets in the Assembro catchment in the calibration period. Boxes indicate 25th, 50th and 75th percentiles, while the whiskers stretch to the point nearest to 1.5 times the interquartile range from the boxes. Outliers and negative values are omitted from the figures for clarity. Abbreviations for the performance indicators are explained in .

Table 1. Hydrological signatures (flow statistics, and runoff timings and durations) used for model evaluation.

Table 2. Performance indicators used for model evaluation.

The values of high percentiles of the indicators in showed that good performance could be obtained for all the indicators, with exception of KGE95–100 in the GLUE simulations. High percentiles of Nash-Sutcliffe coefficients (NSE, NSElogQ) and KGE computed from the transformed flows (also shown in panels g–i of ) were slightly lower in comparison to the other indicators. In contrast, the low percentiles of the indicators showed that the model in some catchments or with some behavioural parameter sets yielded poor performance on many indicators, especially those related to the extreme flows (KGE computed over the highest and lowest FDC segments, KGE0–5, KGE95–100). An exception in this regard is IA, which consistently took rather high values. It should be emphasized that a high spread between low and high percentiles of the indicators was desired in this study in order to enable analyses of relationships between the indicators and model performance in reproducing distributions or trends in signatures. On the other hand, low variations across the indicator values of the GLUE sets were essential to enable proper analyses of equifinality impacts on model performance in reproducing distributions or trends in the series of signatures.

3.2 Model performance in reproducing statistical properties of hydrological signatures

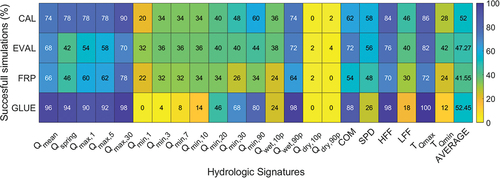

3.2.1 Model performance in reproducing distributions of the hydrological signatures

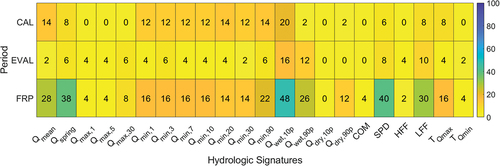

Distributions of the hydrological signatures were, on average, well reproduced by the model in most catchments (). High performances were obtained in mean flows (Qmean), and for most signatures related to high flows (30-day annual maxima: Qmax,30, high flow percentiles in the wet season: Qwet,90p, high-flow frequency: HFF, timing of annual maxima: TQmax), particularly in the GLUE simulations. The poorest performance was obtained in reproducing distributions of signatures related to low flows, especially in the dry season percentiles (Qdry,10p and Qdry,90p). For example, none of the 50 behavioural GLUE sets in the Assembro catchment could reproduce these signatures. Performances in reproducing hydrological signatures were, on average, only slightly higher in the calibration period compared to the evaluation or full record periods. The greatest drop in performance was obtained for spring flows (Qspring), followed by 90-day annual minima (Qmin,90). Conversely, the performance in reproducing 1-day low flow (Qmin,1) and its timing (TQmin) was better in the evaluation period than in the calibration period.

Figure 5. Model performance in reproducing distributions of the selected hydrological signatures (). Cell values are percentages of successful simulations, i.e. percentage of catchments in the calibration (CAL), evaluation (EVAL) or full record period (FRP), or percentage of the behavioural parameter sets in the Assembro catchment in the calibration period (GLUE) that well reproduced the distributions of the signatures. The last column in the heat map shows the average performance of the simulation across all signatures.

3.2.2 Evaluation of model performance in reproducing trends in hydrological signatures

Most of the selected signatures did not exhibit significant trends at the 5% level of significance according to the results of the Mann-Kendall test ( and Fig. S2 of the Supplementary material). Significant trends mostly emerged over the full record period (1962–2020), while they could not be detected in the two subperiods. Trends in mean and spring flows suggest that many of the selected catchments could become wetter, mainly in the north at quite high latitudes. Significant trends in annual maxima were detected in few catchments, as opposed to mostly increasing trends in annual minima detected in many catchments, particularly in the full record period. Concerning low-flow frequency (LFF), significantly increasing as well as significantly decreasing trends were detected, with a shift from decreasing trends in the northern catchments to increasing trends in the southern ones. Decreasing trends that were detected in spring pulse day (SPD) and timing of annual maxima (TQmax) suggest shifts in the annual runoff cycle that could be attributed to earlier snowmelt.

Figure 6. Percentage of catchments in which significant trends were detected in the selected hydrological signatures () according to the results of the Mann-Kendall test in the calibration (CAL), evaluation (EVAL) and full record periods (FRP). The signatures were computed from observed flows.

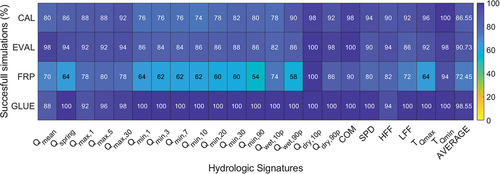

Trends in the series of selected signatures (or the lack of trends) were well reproduced in most catchments in the three simulation periods (), according to the methodology adopted. Model performance in this regard was quite high in calibration and evaluation, with a slight decrease in the full record period, primarily in annual minima and in the wet season flow percentiles. Performance of the GLUE behavioural parameter sets in reproducing trends was exceptionally high across all signatures, including daily minima (Qmin,1) and the 10th flow percentile in the wet season (Qwet,90p), which is the only observed signature that exhibited a significant trend over the full record period in Assembro catchment. Low variations in performance in reproducing trends of signatures across the GLUE behavioural parameter sets suggest that equifinality in performance indicators can also imply equifinality in reproducing trends. The performance in reproducing trends was not straightforwardly related to the presence of significant trends in the observed signatures. In other words, a high percentage of catchments with significant trends in an observed signature did not imply lower performance in reproducing trends in that signature.

Figure 7. Model performance in reproducing trends in the selected hydrological signatures (). Cell values in the heat map are percentages of successful simulations, i.e. percentage of catchments in the calibration (CAL), evaluation (EVAL) or full record period (FRP), or percentage of the behavioural parameter sets in the Assembro catchment in the calibration period (GLUE) that successfully reproduced trends (or the lack of trends) in hydrological signatures. The last column in the heat map shows average performance across all signatures.

3.3 Relationships between performance indicators and hydrological signatures

3.3.1 Informativeness of performance indicators about model performance in reproducing distributions of hydrological signatures

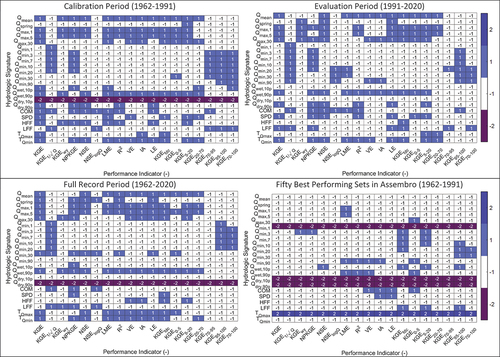

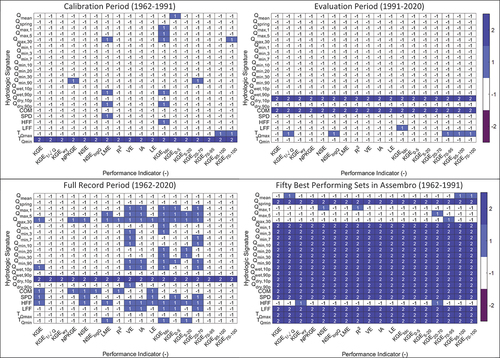

None of the selected performance indicators alone was informative about the model ability to reproduce the distributions of all signatures across different periods (). At the same time, the overall informativeness varied considerably across the indicators, with noticeably lower informativeness about the signatures related to low flows. The signatures that resulted in significantly different indicator values in most cases were mean flows, annual maxima of various durations, the 90th wet season flow percentile, and annual maxima timings, although there were significant variations in these patterns across the four simulations. Many indicators were informative about model performance in reproducing distributions of mean flows and annual maxima and minima in the three simulation periods, but these patterns could not be detected in the GLUE simulations. Such patterns included e.g. informativeness of KGE computed from daily flows and transformed flows about annual maxima and minima, respectively. The few relationships that were consistent across the four simulations include: Liu mean efficiency (LME), R2 and VE, and the 90th wet season flow percentile (Qwet,90p), KGE computed from the entire FDC and Qwet,90p and 30-day annual maxima, and KGE95-100 and 20-day annual minima.

Figure 8. Informativeness of performance indicators () about model ability to reproduce distributions of the hydrological signatures () in different simulations. Values of 1 in the heat maps indicate that the two groups of simulations (i.e. simulations in which the distributions were well reproduced versus the remaining ones) have significantly different indicator values, suggesting that a specific indicator can be informative about model performance in reproducing the distributions. Values of −1 mean that the two groups of simulations result in similar values of an indicator, suggesting that the indicator is not informative about model ability to reproduce the distributions. A value of 2 (−2) suggests that the distributions of a signature were reproduced in all (none) of the catchments or by the behavioural parameter sets (GLUE), respectively.

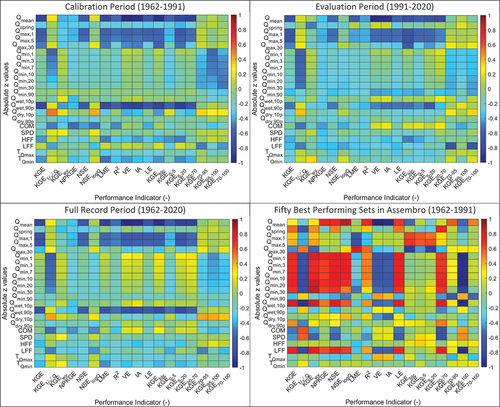

Links between the performance indicators and model ability to reproduce distributions of the signatures were also examined using the Pearson correlation coefficient between the values of indicators and absolute values of WRS test statistic z. Strong negative correlations, with Pearson correlation coefficients smaller than – 0.7 (following Blasone et al. Citation2007), can suggest whether a specific indicator is related to model ability to reproduce distributions of a selected signature. Such correlations were detected in only a few indicators for mean annual flows in the four simulations (), including VE, IA, and KGE computed from the entire FDC. There were no relationships that resulted both in rejection of the WRS-2 test null hypothesis and in a correlation coefficient smaller than −0.7 across all the simulations, both of which would imply informativeness of a performance indicator about model ability to reproduce distribution of a signature.

Figure 9. Pearson correlation coefficients between the values of performance indicators () and absolute values of the Wilcoxon rank sum test statistic, z, obtained in different simulations. Strong negative correlations suggest that a specific indicator can be informative about the model ability to reproduce the distribution of a hydrological signature (), as opposed to weak negative or positive correlations.

3.3.2 Informativeness of performance indicators about model performance in reproducing trends in hydrological signatures

An initial test for autocorrelation in the data with the Box-Ljung test revealed no significant autocorrelation in the considered series of signatures. Comparisons of the simulations that properly reproduced trends in the hydrological signatures to those that did not suggest that the indicators mostly were not informative about model performance in this respect, according to the WRS-2 test (). However, there were differences across the simulations. For example, KGE calculated from the entire FDC (KGEFDC) was informative about performance in reproducing trends in a few signatures in the calibration and full record periods, but not over the evaluation period. No consistent relationship between the performance indicators and model performance in reproducing the trends across the simulations was detected.

Figure 10. Informativeness of performance indicators () about model ability to reproduce trends in series of hydrological signatures () in different simulations. Values of 1 in the heat maps indicate that the two groups of simulations (i.e. simulations in which the trends were well reproduced versus the remaining ones) have different indicator values, which can suggest that a specific indicator is not informative about model performance in reproducing the trends. Values of −1 mean that the two groups of simulations results in similar values of a specific indicator, suggesting that a specific PI is not informative about model ability to reproduce the trends. A value of 2 (−2) suggests that the trends of a signature were reproduced in all (none) of the simulations or by the behavioural parameter sets (GLUE), respectively.

4 Discussion

The specific objectives of this research were twofold: (1) to evaluate whether hydrological models can reproduce distributions and trends in series of hydrological signatures relevant for climate change impact studies, and (2) to examine whether commonly used performance indicators can be informative about model ability to reproduce these distributions and trends. All analyses were conducted in 50 high-latitude catchments with different runoff dynamics, and over different periods to test whether potential relationships between the indicators and the model ability to reproduce the distributions or trends remain consistent. To analyse the impact of equifinality, all analyses were conducted with numerous behavioural parameter sets (Beven and Binley Citation1992) in one catchment.

4.1 Hydrological model: overall performance of 3DNet-Catch

On average, satisfactory values of different performance indicators were obtained across the simulations. The best performance was obtained in terms of index of agreement (IA), which is based on the absolute values of the residuals. This suggests that this indicator has a low sensitivity to discrepancies between simulated and observed flows and, as such, is not sufficiently informative about model performance. Similar conclusions were reported by Krause et al. (Citation2005). Slightly lower performance, on average, was shown by NSE coefficients, Lindström efficiency coefficient and KGE computed from the transformed daily flows and from the highest-flow and low-flow segments of FDCs, all of which are related to model performance in extreme flows (Oudin et al. Citation2006, Pfannerstill et al. Citation2014). Values of KGE computed over the very low-flow FDC segment that was exceeded 95–100% of time (KGE95–100) were systematically lower than KGE computed from low-flow and overall low-flow FDC segments that were exceeded 70–95% and 70–100% of time, respectively. This result shows that partitioning the low-flow segment of FDC into two subsegments (as suggested by Pfannerstill et al. Citation2014) contributes to a more comprehensive model evaluation.

Overall, the results demonstrated that it is challenging to achieve good performance in extreme flows, particularly in low flows during dry periods (Vaze et al. Citation2010, Zhang et al. Citation2015, Fowler et al. Citation2016). Poor performance over dry periods has been frequently reported in the literature, for example in studies dealing with Australian catchments affected by the Millennium drought (e.g. Thirel et al. Citation2015, Fowler et al. Citation2020, Topalović et al. Citation2020). No substantial differences in model performance across the three simulation periods were detected in this study, although there were significant variations in the indicators across the catchments in each simulation period, as opposed to the GLUE behavioural sets characterized by a strong equifinality. These results suggest that model suitability for CCISs should preferably be evaluated in different catchments, rather than in a single catchment with numerous parameter sets.

Variation in model performance across the catchments could not be attributed to variations in their properties in this study. As shown in , model performance could not be linked to e.g. catchment area, latitude, elevation or DOR ()). For example, similar performance was exhibited by catchments of various degrees of regulation, as well as in unregulated ones (e.g. catchments in the southeast of Sweden). The lack of a clear relationship between model performance and DOR can be explained by the fact that the vast majority of the selected catchments were unregulated or had rather low DOR, or that the reservoirs are managed in a way that does not considerably alter the natural runoff regime, and could be properly reproduced by the model. Slightly poorer performance was obtained in few high-elevation catchments in the northwest of Sweden, characterized by a higher percentage of snowfall and high runoff coefficients. High runoff coefficients in these catchments could be partly attributed to uncertainties in precipitation data in these regions caused by spatial interpolation from a sparse rain gauge network (Van Der Velde et al. Citation2013, Citation2014). Uncertainties in precipitation data are common, especially if precipitation occurs in the form of snowfall (Grossi et al. Citation2017), during high-intensity rainfall events (McMillan et al. Citation2011), or in high-elevation regions (Wang et al. Citation2016). Despite permanent development of spatial interpolation methods and processing of precipitation data, it is still a great challenge to obtain datasets of high quality and resolution (Hu et al. Citation2019). Consequently, high runoff coefficients are encountered in many catchments globally, such as catchments in the Pacific Northwest in the US (e.g. Addor et al. Citation2017). Bearing in mind the objectives of this study, a wide range of hydroclimatic regimes, ranging from snowmelt to rainfall driven, as well as those in regulated catchments, and a wide range of values of performance indicators were all essential for reaching valid conclusions on relationships between the two facets of model performance. Therefore, the collection of catchments across high-latitude climate is regarded as adequate for testing the hypotheses in this study. Nevertheless, further research is needed to thoroughly examine relationships between model performance and catchment properties (which was beyond the scope of this study), preferably with an extended collection of catchments, including those from other geographical regions with different streamflow regimes, and with other hydrological models.

4.2 Evaluation of model ability to reproduce statistical properties of hydrological signatures

Model ability to reproduce distributions and trends varied considerably across the hydrological signatures and the simulations. The lowest efficiency was obtained in reproducing distributions of signatures related to low flows (annual minima of various durations, dry-season percentiles, timings of annual minima), which was consistent with the conclusions reached from the indicator values. This was also confirmed by the fact that slightly better performance was obtained during the evaluation period than in the calibration period in reproducing distributions of annual minima (Qmin,1) and their timings (TQmin), as well in terms of KGE95–100 and KGE70–100. The model generally reproduced trends in the series quite well, irrespective of the presence of significant trends in the observed series of signatures. However, statistically significant trends were detected in, on average, six signatures in each catchment over the full record period, and in, on average, two signatures over the calibration and evaluation periods (see Figure S2 of the Supplementary material). Therefore, further research is needed to evaluate model ability to reproduce trends in the series of signatures in other catchments with more pronounced trends, such as some of the catchments presented by Thirel et al. (Citation2015). Model transferability in terms of the indicators generally corresponded to transferability in terms of reproducing distributions and trends in the signatures.

Equifinality affected all aspects of model performance, i.e. it could be detected regardless of the approach to quantification of model performance. For example, equifinality was reflected in low variability across indicator values of different behavioural sets (e.g. VE, ). It was also evident in model ability to reproduce distributions: for example, all behavioural sets reproduced the timings of annual maxima and most sets well reproduced distributions of annual maxima of various durations (). The impact of parameter equifinality was most pronounced in reproducing trends (or the lack of them) in the selected signatures ().

4.3 Informativeness of performance indicators about model ability to reproduce statistical properties of hydrological signatures

There were no clear and consistent relationships between the values of performance indicators and model ability to reproduce distributions or trends in series of hydrological signatures. This study was grounded on the assumption that a comparison between the indicator values of models that can reproduce the distributions, with those that cannot, can show whether specific indicators are informative about model performance in this regard, provided that such relationships remain consistent across the simulations. Few such relationships were detected in this study: for example, relationships between annual minima of various durations and KGE computed from transformed flows (KGE1/√Q) and from very low-flow FDC segments (KGE95–100). The relationship between distributions of annual maxima and KGE95–100 was also confirmed by the fact that model performance in these two aspects was better in the evaluation than the calibration period in some catchments. However, these relationships do not persist in all simulations, including the simulations with the 1% best behavioural parameter sets (Fig. S3 in the Supplementary material), and simulations with the 50 best behavioural sets in the selected 50 catchments (Fig. S4). This conclusion is further supported by combined considerations of these patterns and the correlation coefficients between the indicator values and absolute z values of the WRS test statistic.

The WRS test can suggest informativeness of a certain indicator about model ability to reproduce distributions and/or trends in the series of signatures. However, this does not necessarily imply that a performance indicator that is shown to be informative about model performance in reproducing distributions of some signatures has high values, and vice versa. For example, the median values of KGE1/√Q, KGE70–95 and KGE70–100 were satisfactory in the full record period, but the model failed to reproduce distributions of annual minima of varying durations, dry-season percentiles, and low-flow frequencies in most of the catchments. The discrepancies between these two aspects of model performance (i.e. performance indicators and model ability to reproduce distributions and trends) can be explained by the nature of the indicators themselves. Values of such indicators reflect differences between entire observed and simulated flow series, and are much less sensitive to differences in the distributions of series of signatures, as well as in potential underlying trends in these series. The results presented in this study clearly show that performance indicators and model ability to reproduce statistical properties of series of hydrological signatures represent two distinct facets of model performance that complement each other. Therefore, model evaluation procedures should be extended to include both aspects of model performance.

4.4 Limitations of the study and further research

The conclusions presented in this study build on the simulation results from one hydrological model in 50 high-latitude catchments. Further research is needed to repeat these analyses with other hydrological models, and in catchments in other hydroclimatic regions, and to compare those conclusions on the informativeness of performance indicators, regarding model ability to reproduce distributions and trends in the signatures, to those presented in this study. Similarly, the lists of performance indicators and hydrological signatures chosen in this study, although by no means exhaustive, could be extended in future research. Nevertheless, the timings of the 25th and 75th percentiles of annual runoff volume (Burn and Hag Elnur Citation2002, Harder et al. Citation2015, Melsen et al. Citation2018) might be excluded from such extensions, since initial analyses revealed strong autocorrelation in these series that affects the power of the Mann-Kendall test for trend (Fatichi et al. Citation2009, Harder et al. Citation2015), and since hydrological models have limited skill in reproducing them properly (Plavšić and Todorović Citation2018).

Most analyses conducted in this study rely on applications of the WRS test. This test was preferred for comparisons of the distributions of signatures obtained from observed and simulated flows over other suitable alternatives, such as Kolmogorov-Smirnov or Cramer-von-Mises tests (Conover Citation1999). Although the results from comparisons of the distributions of signatures with the Kolmogorov-Smirnov test depart from those obtained with the WRS test in up to 12% of all instances (results for the full record period), the conclusions about the informativeness of performance indicators about model ability to reproduce the distribution of the signatures corroborate those reached by using the WRS test (see Fig. S5 of the Supplementary material).

Application of the WRS test imposes certain limitations. This nonparametric test is based on a comparison of medians (Kvam and Vidakovic Citation2007, Kottegoda and Rosso Citation2008), with an underlying assumption of the WRS test that the two distributions have the same shape and variance, while other properties of series distributions, such as skewness, are not explicitly considered. Consequently, even if the series obtained from observed and simulated flows did not differ according to the WRS test, that does not mean that the quantiles computed from the two series would be equal, because quantile estimates are strongly affected by various properties of series, such as skewness or presence of outliers (Plavšić et al. Citation2014). Thus, further research is needed to enhance the examination of model ability to reproduce other features of hydrological signatures’ distributions. As part of such research, application of different, complementary statistical tests can be considered.

Climate change impact on floods poses a great concern and a great challenge to CCISs. Models’ ability to accurately simulate the highest flows, such as annual maxima, is generally limited (Brunner et al. Citation2021). To improve model skill in reproducing annual maxima, Mizukami et al. (Citation2019) proposed the annual peak flow bias (APFB) metric as objective function. Although specifically tailored for annual maxima, this performance indicator cannot be considered informative about model ability to reproduce their distributions, according to the methodology adopted in this study (see Fig. S6 in the Supplementary material). The same conclusions were reached for the mean flows and annual daily minima. These results once again highlighted issues with reproducing the most extreme flows, and the need for further research in this regard. Furthermore, spring floods triggered by snowmelt have different statistical properties than rainfall-induced summer or autumn floods; thus, these two series should be analysed independently (Blazkova and Beven Citation2009). The peak over threshold method can also be used for flood flow quantile estimation (Plavšić Citation2005, Todorović et al. Citation2017, Tabari Citation2021).

Gelfan and Millionshchikova (Citation2018) state that: “in order not to get lost in the ‘jungle of models,’ one needs to be able to distinguish between models appropriate for impact studies and unsuitable ones,” and emphasize that “there are a lot of ‘good’ models that pretend to be suitable for impact studies, and the number of such models grows like a snowball.” The approach applied in this paper is in line with the appeals of the hydrological community for novel evaluation procedures aimed at identifying models suitable for CCISs that can potentially mitigate uncertainty in hydrological projections under climate change (Fowler et al. Citation2018b). This study clearly shows that commonly used performance indicators fail to show model performance in reproducing statistical properties of series of hydrological signatures that climate change impact assessments build on. In other words, good model performance in terms of commonly used indicators does not warrant that the statistical properties of signatures are well reproduced, which can eventually result in misleading assessment of climate change impacts and failure in the identification of optimal adaptation strategies. This study presented an evaluation of the performance of hydrological models that are run with the observed climatic series, while hydrological series in CCISs would inevitably be affected by uncertainties in climate projections. However, it is essential to establish robust evaluation procedures that can identify hydrological models suitable for CCISs under current conditions, with observed data that allow comparisons of observed and simulated variables. The application of the WRS test to evaluate model performance in reproducing distributions of the selected signatures represents a step forward in this direction.

5 Conclusions

Climate change adaptation strategies are grounded in statistical analyses of the series of hydrological regime features (i.e. hydrological signatures), such as mean or extreme flows, or extreme flow timings and durations. Therefore, distributions and trends in series of hydrological signatures should be accurately reproduced by hydrological models. Although crucial for climate change impact studies (CCISs), this aspect of hydrological model performance is rarely analysed. In this paper, we provided novel insights on model performance and suitability for climate change impact studies by: (1) thoroughly evaluating model ability to reproduce distributions and trends in series of hydrological signatures relevant for CCISs, and (2) examining whether commonly used performance indicators are informative about how well a model can reproduce these distribution and trend properties.

The simulations with the 3DNet-Catch model for 50 high-latitude catchments in Sweden revealed considerable differences in the model performance. Specifically, distributions of low-flow signatures generally were not well reproduced, while model performance for high-flow-related signatures was noticeably better. Such behaviour was also exhibited by the performance indicators. However, no strong, clear, and consistent relationships between the values of indicators, and model performance in reproducing the distributions and trends in the signatures, were detected in this study. This means that good model performance in terms of the commonly used performance indicators cannot warrant that the distributions or trends in the series of signatures are well reproduced, and vice versa. This clearly shows that these two facets of model performance are distinct and complementary, and that model suitability for CCISs cannot be appraised merely using performance indicators. Therefore, traditional model evaluations based merely on performance indicators should be extended, and evaluation focused on distributions and trends in signatures presented in this paper can be considered a part of these efforts. Model performance in reproducing distributions cannot be used straightforwardly as an OF for model calibration; however, it can be included in a process of model selection for CCISs and, thereby, potentially reduce uncertainties in the flow projections under climate change due to model equifinality.

Supplemental Material

Download MS Word (1.8 MB)Supplemental Material

Download MS Word (1.8 MB)Acknowledgements

This research is conducted as part of the project “Reducing uncertainties in hydrological climate change impact research to allow for robust streamflow simulations” supported by the Swedish Research Council (VR starting grant: 2017-04970). The data used in this paper were obtained from the Swedish Meteorological and Hydrological Institute (SMHI). The authors thank Prof. Miloš Stanić from the University of Belgrade for his help with setting up the 3DNet-Catch model, and the two anonymous reviewers for helpful and constructive comments.

Disclosure statement

No potential conflict of interest was reported by the authors.

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/02626667.2022.2104646

Data availability statement

Hydro-climatic data can be retrieved from the Swedish Meteorological and Hydrological Institute (SMHI) database (http://vattenwebb.smhi.se/). Geospatial data for the streamflow stations can be downloaded from the SMHI SVAR database (https://www.smhi.se/publikationer/svar-svenskt-vattenarkiv-1.17833).

Additional information

Funding

References

- Addor, N., et al., 2014. Robust changes and sources of uncertainty in the projected hydrological regimes of Swiss catchments. Water Resources Research, 50 (10), 7541–7562. doi:10.1002/2014WR015549

- Addor, N., et al., 2017. The CAMELS data set: catchment attributes and meteorology for large-sample studies. Hydrology and Earth System Science, 21 (10), 5293–5313. doi:10.5194/hess-21-5293-2017

- Addor, N. and Melsen, L.A., 2019. Legacy, rather than adequacy, drives the selection of hydrological models. Water Resources Research, 55 (1), 378–390. doi:10.1029/2018WR022958

- Asadzadeh, M., Tolson, B.A., and Burn, D.H., 2014. A new selection metric for multiobjective hydrologic model calibration. Water Resources Research, 50 (9), 7082–7099. doi:10.1002/2013WR014970

- Bastola, S., Murphy, C., and Sweeney, J., 2011. The role of hydrological modelling uncertainties in climate change impact assessments of Irish river catchments. Advances in Water Resources, 34 (5), 562–576. doi:10.1016/j.advwatres.2011.01.008

- Beck, H.E., et al., 2017. Global evaluation of runoff from 10 state-of-the-art hydrological models. Hydrology and Earth System Sciences, 21 (6), 2881–2903. doi:10.5194/hess-21-2881-2017

- Bergström, S., et al., 2001. Climate change impacts on runoff in Sweden-assessments by global climate models, dynamical downscaling and hydrological modelling. Climate Research, 16 (2), 101–112. doi:10.3354/cr016101

- Beven, K. and Binley, A., 1992. The future of distributed models: model calibration and uncertainty prediction. Hydrological Processes, 6 (3), 279–298. doi:10.1002/hyp.3360060305

- Blasone, R.-S., Madsen, H., and Rosbjerg, D., 2007. Parameter estimation in distributed hydrological modelling: comparison of global and local optimisation techniques. Nordic Hydrology, 38 (4–5), 451. doi:10.2166/nh.2007.024

- Blazkova, S. and Beven, K., 2009. A limits of acceptability approach to model evaluation and uncertainty estimation in flood frequency estimation by continuous simulation: skalka catchment, Czech Republic. Water Resources Research, 45 (12), 1–12. doi:10.1029/2007WR006726

- Blöschl, G., et al., 2017. Changing climate shifts timing of European floods. Science, 357 (6351), 588–590. doi:10.1126/science.aan2506

- Blöschl, G., et al., 2019a. Twenty-three unsolved problems in hydrology (UPH) – a community perspective. Hydrological Sciences Journal, 64 (10), 1141–1158. doi:10.1080/02626667.2019.1620507

- Blöschl, G., et al., 2019b. Changing climate both increases and decreases European river floods. Nature, 573 (7772), 108–111. doi:10.1038/s41586-019-1495-6

- Blöschl, G., and Montanari, A., 2010. Climate change impacts—throwing the dice? Hydrological Processes, 381 (3), 374–381. doi:10.1002/hyp.7574

- Booij, M.J., 2005. Impact of climate change on river flooding assessed with different spatial model resolutions. Journal of Hydrology, 303 (1–4), 176–198. doi:10.1016/j.jhydrol.2004.07.013