ABSTRACT

Despite advances in the conceptualisation of facial mimicry, its role in the processing of social information is a matter of debate. In the present study, we investigated the relationship between mimicry and cognitive and emotional empathy. To assess mimicry, facial electromyography was recorded for 70 participants while they completed the Multifaceted Empathy Test, which presents complex context-embedded emotional expressions. As predicted, inter-individual differences in emotional and cognitive empathy were associated with the level of facial mimicry. For positive emotions, the intensity of the mimicry response scaled with the level of state emotional empathy. Mimicry was stronger for the emotional empathy task compared to the cognitive empathy task. The specific empathy condition could be successfully detected from facial muscle activity at the level of single individuals using machine learning techniques. These results support the view that mimicry occurs depending on the social context as a tool to affiliate and it is involved in cognitive as well as emotional empathy.

Humans often react to the emotional expressions of others with spontaneous imitation. Facial expressions that match the expressed emotion of the counterpart have been labelled “facial mimicry” and are considered central for social interactions (Fischer & Hess, 2017; Hess & Fischer, 2013). Mimicry is a ubiquitous phenomenon that can be found not only in the laboratory (Dimberg, 1982) but also in the wild (Fischer, Becker, & Veenstra, 2012) and as a reaction to strangers as well as to close interaction partners (McIntosh, 2006). Importantly, recent evidence suggests that the extent of facial mimicry depends on contextual factors such as situations, motivations, and affordance (Hess & Fischer, 2014; Seibt, Mühlberger, Likowski, & Weyers, 2015). Moreover, people seem to mimic the valence of emotions rather than discrete emotions (Hess & Fischer, 2013).

One of the most pressing questions in mimicry research concerns the role of mimicry in social processing. The new view of context-dependent mimicry offers a promising way to address this question, as social information occurs naturally within a rich context.

The extant literature emphasises two main claims regarding the function of mimicry: a role in emotion understanding (e.g. Niedenthal, Brauer, Halberstadt, & Innes-Ker, 2001) and a means of fostering affiliation (cf. Hess & Fischer, 2013). The first notion is theoretically based on the so-called Facial Feedback Hypothesis (Adelmann & Zajonc, 1989). This hypothesis posits that the automatic facial muscle activity of the perceiver of an emotion generates neural feedback. This feedback triggers a similar emotion in the perceiver. In turn, the perceiver may better understand the other person’s feelings (Barsalou, Niedenthal, Barbey, & Ruppert, 2003). The second approach regards mimicry as a reaction to an emotional signal that serves to signal affiliative intent and thereby can enhance liking and rapport in social interactions (Hess & Fischer, 2013).

Mimicry and empathy

A common conceptualisation of empathy distinguishes two facets (Blair, 2005; Shamay-Tsoory, 2011; Walter, 2012) that can be mapped to these possible functions of mimicry. The first facet, cognitive empathy, describes a person’s ability to infer the mental states of others (Baron-Cohen & Wheelwright, 2004). The second facet, emotional empathy, is defined as an observer’s emotional response to another individual’s emotional state (Eisenberg & Fabes, 1990). The conceptualisation of empathy into these two facets is supported by behavioural (Dziobek et al., 2008), neuroimaging (Dziobek et al., 2011; Fan, Duncan, de Greck, & Northoff, 2011), and neurological findings (Shamay-Tsoory, Aharon-Peretz, & Perry, 2009). A relationship with mimicry has been suggested for both facets (for an overview, see Seibt et al., 2015).

Cognitive empathy is conceptually linked with emotion recognition (Dziobek et al., 2011; Tager-Flusberg & Sullivan, 2000; Wolf, Dziobek, & Heekeren, 2010): recognising an emotion, especially a complex or subtle emotion in a rich context, requires the capacity to take the other’s perspective and to infer their mental or emotional state. Most mimicry studies have focused on the recognition of so-called basic emotion expressions. There is some evidence for reduced emotion recognition when mimicry was blocked, compared to when participants were free to mimic (Ponari, Conson, D’Amico, Grossi, & Trojano, 2012; for an overview, see Hess & Fischer, 2013). This relationship has been reported mostly for positive emotions like happiness (Oberman, Winkielman, & Ramachandran, 2007) or for the detection of smiles (Niedenthal et al., 2001; Rychlowska et al., 2014) and often only in reference to detection time rather than accuracy or only for female participants (Stel & van Knippenberg, 2008). Other studies could not find an effect (Blairy, Herrera, & Hess, 1999; Fischer et al., 2012; Hess & Blairy, 2001).

Decreased cognitive empathy is a core deficit in autism-spectrum conditions (ASC; Dziobek et al., 2006, 2008). These neurodevelopmental conditions are characterised by impairments in social communication and interaction as well as restricted interests and repetitive behaviour (American Psychiatric Association, 2013). Furthermore, aberrant automatic but intact intentional mimicry of facial expressions has been reported for people with ASC (McIntosh, Reichmann-Decker, Winkielman, & Wilbarger, 2006; Stel, van den Heuvel, & Smeets, 2008). Individuals with ASC who were instructed to imitate the facial expressions of emotions reported not experiencing them – contrary to control participants in the same study and to the Facial Feedback Hypothesis (Stel, van den Heuvel, et al., 2008). As autistic traits are assumed to be continuously distributed across the population (Baron-Cohen, Wheelwright, Skinner, Martin, & Clubley, 2001), they might confound the relationship between empathy and mimicry, as a recent study suggests (Neufeld, Ioannou, Korb, Schilbach, & Chakrabarti, 2016).

Concerning emotional empathy, previous research points to a bidirectional relationship with mimicry. Even though people with stronger empathic traits show more consistent mimicry (Dimberg, Andréasson, & Thunberg, 2011; Sonnby-Borgstrom, 2002), it has also been observed that mimicking another person’s facial emotional expression fosters a shared emotional state in the observer, a form of emotional empathy (Stel, van Baaren, & Vonk, 2008). While many studies have focused on mimicry of anger and happiness, a recent study (Rymarczyk, Żurawski, Jankowiak-Siuda, & Szatkowska, 2016a) also showed that emotional trait empathy modulated the strength of facial mimicry of fear and disgust. However, some studies did not find any relationship between the extent of such emotional contagion and the level of mimicry (Blairy et al., 1999; Hess & Blairy, 2001).

To date, only a few mimicry studies have measured cognitive and emotional empathy simultaneously. Blairy et al. (1999) conducted a series of experiments in which they presented pictures of facial expressions of basic emotions. They measured decoding accuracy as well as self-reported emotional state. Although they found that participants mimicked the emotions and that the decoding of emotions was accompanied by shared affect, they found no indication of a mediation by mimicry. In a similar study, Hess and Blairy (2001) used video clips of spontaneous emotion expressions from an emotional imagery task. This task had high ecological validity, but led to very low decoding rates for anger and disgust. The authors reported a null result regarding the relationship of mimicry with emotional contagion and emotion recognition. In both studies, mimicry was assessed by electromyography but activity of the Zygomaticus Major was not measured. This might have skewed the assessment of mimicry of happiness, as this is often based on activity of this muscle, while the mimicry of anger is often based on the activity of the Corrugator Supercilii (Rymarczyk, Żurawski, Jankowiak-Siuda, & Szatkowska, 2016b).

Hofelich and Preston (2012) used an emotional Stroop task presenting background pictures of basic emotions together with incongruent and congruent emotional adjectives. They replicated the Stroop effect in that the irrelevant emotional facial expressions slowed down the encoding of incongruent adjectives. But this effect was not associated with mimicry of the background faces. Regarding emotional empathy, their study was limited to trait empathy: they classified their participants into a high- and a low-empathic group based on three trait empathy measures. Congruent facial responses towards the emotional adjectives tended to be stronger in the high-empathic group. Sato, Fujimura, Kochiyama, and Suzuki (2013) found relationships among facial mimicry, emotional experience and emotion recognition using video clips of happiness and anger portrayed by amateur Japanese models. They asked participants to rate the valence and arousal of presented emotions. The activity of the Zygomaticus Major and Corrugator Supercilii measured with EMG correlated with the valence and arousal ratings of the recognised as well as the experienced emotions. Yet, such ratings are only a limited proxy for the assessment of discrete emotion recognition. Moreover, the results might be confounded by the intensity of the emotion.

Due to methodological characteristics and limitations, the non-findings in the reviewed studies do not necessarily represent evidence for a non-existing relationship, but point to the need for further studies. Extant research used only decontextualised facial expressions of basic emotions. However, in real life, emotions are almost always more complex, expressed subtly and within a context. For examining cognitive empathy in particular, the use of contextually embedded and rich social stimuli is advantageous, as it requires more complex processing such as perspective taking and interpretation of an entire scene. A recent study by van der Graaff et al. (2016) targeted this issue by using empathy-inducing film clips with short happy or sad episodes and measured Zygomaticus Major and Corrugator Supercilii activity as well as emotional and cognitive empathy at state and self-reported trait level. State emotional empathy was conceptualised as experiencing the same emotion. To assess participants’ state cognitive empathy, their statements about why they sympathised with the individuals in the film clips were coded with regard to their cognitive attributions. Valence-congruent muscle activity was positively associated with both facets of state empathy. However, this muscle response might be an emotional reaction to the positive and negative content of the video clips, rather than an imitation of the facial expressions. Moreover, the results are limited to adolescents.

In sum, to date, the research regarding the role of mimicry for the two facets of empathy comes to divergent conclusions. To better understand the relationship of mimicry with both the cognitive and the emotional facet of empathy, studies are needed that carefully investigate both facets together, ideally based on the same stimulus material. Too little attention has been paid to the use of context-embedded naturalistic stimuli. Further, given the argument outlined above, it seems prudent to control for autistic traits as these pose a potential confound. This was the goal of the present study. Specifically, we examined the relationship between mimicry and emotional as well as cognitive empathy in reaction to complex emotions embedded in photorealistic contexts. This more naturalistic setting further allowed us to compare directly the relationships of mimicry with cognitive empathy versus mimicry with emotional empathy. We suggest here that emotional mimicry is related to the understanding of an emotion in context and functions to regulate relations with the other person. Thus, we predicted that mimicry responses should be related to both facets of empathy. Based on previous research, we expected more mimicry for the emotional empathy task pointing to the affiliative role of mimicry. Finally, we explored the possible confounding role of autistic traits.

Method

Participants

Seventy volunteers (age range 18–36 years, mean age: 25.5 years; 31 women) participated individually. The sample size of 70 exceeded the required sample size of 67 estimated by a statistical power analysis (evaluated for bivariate correlations with power = 0.80, α = 0.05 and effect size ρ = 0.30), based on a conceptually similar study (Sato, Fujimura, & Suzuki, 2008). More than half of the participants had received a high-school degree and no one scored below 20 in the WST (see below).
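The reported power analysis can be checked roughly with the Fisher z approximation for correlations. The sketch below is an illustration only: the function name is hypothetical, the approximation is a stand-in for whatever exact procedure the authors used, and the text does not state whether the test was one- or two-tailed, so both are computed.

```python
import math
from statistics import NormalDist


def n_for_correlation(rho: float, alpha: float, power: float, two_tailed: bool) -> int:
    """Approximate sample size needed to detect a correlation `rho`.

    Uses the Fisher z approximation: n = ((z_alpha + z_beta) / atanh(rho))^2 + 3.
    This is an approximation, not an exact power computation.
    """
    norm = NormalDist()
    z_alpha = norm.inv_cdf(1 - alpha / 2) if two_tailed else norm.inv_cdf(1 - alpha)
    z_beta = norm.inv_cdf(power)
    fisher_z = math.atanh(rho)  # Fisher z-transform of the expected correlation
    return math.ceil(((z_alpha + z_beta) / fisher_z) ** 2 + 3)


# Parameters taken from the text: rho = 0.30, alpha = 0.05, power = 0.80
n_one_tailed = n_for_correlation(0.30, 0.05, 0.80, two_tailed=False)
n_two_tailed = n_for_correlation(0.30, 0.05, 0.80, two_tailed=True)
print(n_one_tailed, n_two_tailed)
```

Under this approximation the one-tailed requirement is 68 and the two-tailed requirement is 85. The one-tailed value lies close to the reported 67, but the approximation alone does not establish which test was assumed.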

All participants were screened to exclude current or prior history of psychological, neurological or psychiatric disorders including substance abuse. Moreover, students of medicine or psychology were excluded as they might guess the real purpose of the EMG electrodes. Due to the EMG measurement, participants with facial sensitivity disorders, paralysis or severe acne could not take part in the study. Participants were asked to abstain from alcohol, caffeine and tobacco for 24 h prior to the experiment. To ensure that all participants understood the emotional words used in the experiment, we assessed their levels of verbal intelligence through a German vocabulary test, the Wortschatztest (WST; Schmidt & Metzler, 1992). All participants displayed sufficient language understanding. All participants provided written informed consent prior to participation and received monetary compensation after the experiment. Ethical approval was obtained from the ethics committee of the Humboldt-Universität zu Berlin.

Procedure

After completing the informed consent form, participants were seated in a sound-attenuated and electrically shielded laboratory 1 m in front of a computer screen (1024 × 768), where they completed the revised version of the Multifaceted Empathy Test (MET; Dziobek et al., 2008; see below). During the task, facial EMG was measured from the Corrugator Supercilii (brows; associated with negative affect) and Zygomaticus Major (cheek; associated with positive affect). The experimenter told the participants that the EMG electrodes measure sweat so that they would not concentrate on their facial movements. To allow checking for artefacts after completion of the testing, the face of the participant was video recorded during the EMG measurement. After the testing session, the participants completed two personality trait measures: autistic traits were measured via the German short-version (Freitag et al., 2007) of the Autism-Spectrum Quotient (AQ; Baron-Cohen et al., 2001). To assess trait empathy, the German version (Paulus, 2009) of the Interpersonal Reactivity Index (IRI; Davis, 1983) was used.

MET

The MET (Dziobek et al., 2008) is a computer-based task for the assessment of emotional and cognitive empathy with high ecological validity. There are several advantages of the MET compared to other state empathy measures. First, as the MET uses photorealistic stimuli including complex emotions and context, it requires inferring mental states beyond the simple recognition of isolated basic emotions. Second, contrary to questionnaires, it does not rely on self-report but on performance for measuring cognitive empathy. Third, the test measures both facets of empathy within the same task paradigm, allowing for a direct comparison.

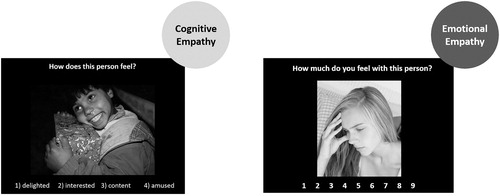

The MET consists of a total of 40 photographs depicting individuals of varying gender and age in emotionally charged situations. Half of the pictures show people expressing positive emotions (e.g. “relaxed”, showing a man receiving a massage) and the other half negative emotions (e.g. “painful”, showing a woman suffering acute pain in a hospital room). The emotional states are embedded in rich contexts, e.g. a hospital room, war scene, or a bicycle race, where other individuals are also seen. However, the target persons, including their faces, are displayed prominently in each picture to avoid ambiguity in responding. There was no evidence for a less pronounced mimicry response towards the pictures including background faces. Each picture was presented twice (once in the emotional and once in the cognitive empathy condition) and in blocks of 10 with the same valence (positive/negative) and empathy condition (cognitive/emotional). To assess cognitive empathy, the participant was asked to infer the mental state of the person in the picture by choosing one out of four possible emotional words via key press. The time between the presentation of the words and the key press was measured as reaction time. To assess emotional empathy, the participant indicated to what degree they empathised with the individual in the photo. This rating was made on a Likert scale ranging from 1 (not at all) to 9 (very much). An example for the cognitive and emotional empathy condition is shown in Figure 1.

Individual difference measures

Autism-Spectrum Quotient (AQ)

The German short-version (AQ-k; Freitag et al., 2007) of the AQ (Baron-Cohen et al., 2001) is a 33-item self-report questionnaire assessing different areas connected with ASC, such as social and communication skills, imagination and attention. Each item describes a certain behaviour or attitude. On a 4-point scale, participants indicate how strongly they agree with each statement. Every slight or strong agreement to an autistic behaviour adds one point to the total score. A score of 17 and above is seen as an indicator for an amount of autistic traits that might be clinically significant. The AQ has been shown to have good test–retest reliability and inter-rater reliability (Baron-Cohen et al., 2001) as well as good discriminative validity and good screening properties in clinical practice (Woodbury-Smith, Robinson, Wheelwright, & Baron-Cohen, 2005). While the mean AQ score was low (M = 8.8, SD = 5.4), six participants scored above the cut-off of 17, which might be indicative of a clinical diagnosis of ASC.

Interpersonal Reactivity Index (IRI)

The IRI (Davis, 1983; German version: Paulus, 2009) is a 16-item self-report questionnaire that taps different dimensions of empathy. It has four subscales: Perspective Taking, Empathic Concern, Personal Distress and Fantasy. This version has shown good reliability, factorial validity and item analysis (Paulus, 2009). We used two subscales: perspective taking, as it corresponds most closely to cognitive empathy, and empathic concern, as it matches the concept of emotional empathy. An example item of perspective taking is: “before criticising someone, I try to see the issue from their point of view”. An example of an empathic concern item is: “I would describe myself as a warm-hearted person”. The two facets were not significantly correlated in our sample (rs = −0.02, p = .794).

EMG

Before attaching the electrodes, the participant’s skin was cleaned with an alcohol pad. Bipolar surface electrodes were attached over the Zygomaticus Major and Corrugator Supercilii muscles on the non-dominant side of the face, according to Fridlund and Cacioppo (1986). Electrode impedance was kept below 50 kΩ. The EMG signals were amplified with EMG amplifiers (Becker Meditec, Karlsruhe, Germany; gain = 1230; band pass 19–500 Hz) and digitised by means of a USB multifunction card USB-6002 (National Instruments Inc., Ireland), connected to a laptop computer running DASYLab 10.0 (National Instruments Ireland Resources Limited). The raw EMG signals were sampled at 1000 Hz and digitised with 16-bit resolution. Within DASYLab, signals were online RMS (root mean square) integrated with a time constant of 50 ms. The integrated fEMG and the trigger signals were downsampled to 20 Hz and stored as an ASCII file.
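The RMS integration and downsampling steps can be illustrated offline. The following is a minimal sketch, not the DASYLab processing itself: it assumes a rectangular trailing window of 50 ms as a stand-in for the stated time constant, and it assumes that downsampling keeps every 50th sample; both function names are hypothetical.

```python
import math


def rms_envelope(raw, fs_hz=1000, window_ms=50):
    """Trailing moving-window RMS of a raw EMG signal.

    Assumption: a rectangular window approximates the 50 ms time constant.
    """
    window = max(1, fs_hz * window_ms // 1000)
    squares = [v * v for v in raw]
    envelope, running = [], 0.0
    for i, sq in enumerate(squares):
        running += sq
        if i >= window:
            running -= squares[i - window]  # drop the sample leaving the window
        n = min(i + 1, window)              # shorter window at the signal start
        envelope.append(math.sqrt(max(running, 0.0) / n))
    return envelope


def downsample(signal, fs_in_hz=1000, fs_out_hz=20):
    """Keep every k-th sample, k = fs_in / fs_out (assumed decimation scheme)."""
    step = fs_in_hz // fs_out_hz
    return signal[step - 1::step]


# One second of synthetic "EMG" alternating between +2 and -2 (RMS amplitude 2)
raw = [2.0 if i % 2 == 0 else -2.0 for i in range(1000)]
envelope_20hz = downsample(rms_envelope(raw))
print(len(envelope_20hz), envelope_20hz[0])
```

At 20 Hz, each stored sample covers 50 ms, so a 100 ms analysis bin corresponds to two stored samples.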

The muscle activity was sampled for each trial consisting of a fixation cross of 1500 ms as a baseline followed by the picture for four seconds. Afterwards the possible answers were shown, the participant could react and a blank screen appeared for 100 ms (Figure 1 shows the time course of an example trial). To avoid movement artefacts, the EMG response of this second period including the behavioural reaction was not analysed.

Data reduction and artefact control

Following established procedure (cf. Bush, Hess, & Wolford, 1993), the data were z-transformed within subjects. Standardised facial muscle activity was then averaged in intervals of 100 ms. A change-to-baseline score was calculated for each interval by subtracting the averaged activity from 500 to 1500 ms preceding stimulus onset. The 500 ms directly preceding stimulus onset could not be used due to technical difficulties. The video recording of all participants was screened visually for artefacts, such as sneezing, coughing or yawning, that could disrupt the EMG measure. EMG values recorded during artefacts were excluded. Trials with more than 25% of values missing due to artefacts were completely excluded. Additionally, an automatic outlier detection was performed excluding all values from a baseline-corrected trial that were three standard deviations above the mean value of all trials. Data from two participants had to be excluded as they had more than 20% missing trials.
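The data reduction steps can be sketched as follows. This is an illustration under stated assumptions rather than the authors' pipeline: the signal is assumed to be stored at 20 Hz with each trial starting at fixation onset, the population standard deviation is used, the outlier rule is read literally as one-sided, and all function names are hypothetical.

```python
from statistics import mean, pstdev

FS_HZ = 20                    # stored sampling rate (from the EMG section)
BIN_MS = 100                  # averaging interval
FIXATION_MS = 1500            # fixation cross shown before the picture
BASELINE_MS = (-1500, -500)   # baseline window relative to stimulus onset


def z_transform(values):
    """Standardise one participant's values (within-subject z-scores)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def bin_means(samples, fs_hz=FS_HZ, bin_ms=BIN_MS):
    """Average consecutive samples into bins of `bin_ms` milliseconds."""
    per_bin = fs_hz * bin_ms // 1000
    last_start = len(samples) - per_bin + 1
    return [mean(samples[i:i + per_bin]) for i in range(0, last_start, per_bin)]


def baseline_correct(trial, fs_hz=FS_HZ):
    """Return binned post-onset activity minus the mean baseline activity.

    `trial` holds z-scored samples beginning at fixation onset.
    """
    onset = FIXATION_MS * fs_hz // 1000
    start = onset + BASELINE_MS[0] * fs_hz // 1000
    end = onset + BASELINE_MS[1] * fs_hz // 1000
    baseline = mean(trial[start:end])
    return [b - baseline for b in bin_means(trial[onset:])]


def drop_outliers(trials, n_sd=3.0):
    """Replace values more than `n_sd` SDs above the grand mean with None."""
    flat = [v for t in trials for v in t]
    limit = mean(flat) + n_sd * pstdev(flat)
    return [[v if v <= limit else None for v in t] for t in trials]


# Synthetic trial: 1.5 s fixation at level 1.0, then 4 s picture at level 3.0
trial = [1.0] * 30 + [3.0] * 80
corrected = baseline_correct(trial)
print(len(corrected), corrected[0])
```

With a 4 s picture period and 100 ms bins, each trial yields 40 baseline-corrected values per muscle.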

Data analysis strategy

To assess the presence of mimicry, a repeated measures ANOVA with valence (positive, negative), muscle site (Zygomaticus Major, Corrugator Supercilii) and time (bins of 100 ms: 500–4000 ms) as within-subject factors was conducted. The first 500 ms after stimulus onset were excluded to avoid confounds from orienting. A significant interaction of muscle and valence was interpreted as support for a mimicking reaction. As an index of the magnitude of mimicry, a difference value of muscle activity depending on the valence was calculated: For positive expressions Corrugator Supercilii activity was subtracted from Zygomaticus Major activity. For negative expressions, the reverse difference was calculated. This difference in muscle activity was averaged over time. A positive value was interpreted as a congruent facial expression.
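The mimicry index described above can be written as a short function. This is a minimal sketch with a hypothetical function name; the inputs are assumed to be the z-standardised, baseline-corrected bin values of one trial for each muscle.

```python
from statistics import mean


def mimicry_index(zygomaticus, corrugator, valence):
    """Valence-dependent difference in muscle activity, averaged over time.

    Positive expressions: Zygomaticus Major minus Corrugator Supercilii.
    Negative expressions: Corrugator Supercilii minus Zygomaticus Major.
    A positive result indicates a congruent facial response.
    """
    if len(zygomaticus) != len(corrugator):
        raise ValueError("both muscles need the same number of time bins")
    if valence == "positive":
        diffs = [z - c for z, c in zip(zygomaticus, corrugator)]
    elif valence == "negative":
        diffs = [c - z for z, c in zip(zygomaticus, corrugator)]
    else:
        raise ValueError("valence must be 'positive' or 'negative'")
    return mean(diffs)


# Illustrative values only: smiling response to a positive picture
congruent = mimicry_index([0.2, 0.4], [-0.1, -0.1], "positive")
incongruent = mimicry_index([0.2, 0.4], [-0.1, -0.1], "negative")
print(congruent, incongruent)
```

The same muscle pattern therefore counts as congruent for a positive picture and as incongruent for a negative one.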

To analyse the relationship between facial mimicry and emotional and cognitive empathy, linear mixed effects models using residual maximum likelihood estimation were calculated with participants as random factor to account for the dependence of the repeated measures of each individual.
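Such a model can be written out explicitly. As a sketch, assuming a random intercept per participant and using notation introduced here only for illustration ($y_{ij}$ for the empathy response of participant $j$ on item $i$, and $m_{ij}$ for the corresponding mimicry index):

```latex
y_{ij} = \beta_0 + \beta_1 m_{ij} + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
```

Here $\beta_1$ is the fixed effect of mimicry, and the participant-specific intercept $u_j$ absorbs each individual's overall response level, which is what accounts for the dependence among repeated measures.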

Which condition a facial expression was presented in should be predictable if the task demands lead to different empathic reactions. We used machine learning to explore how accurately the empathy condition (emotional versus cognitive) can be predicted from facial muscle activity. This is a binary classification task with balanced classes (each facial expression is presented once in each condition). We built models mapping the feature vector x gained from the muscle activity to a value of a decision function to predict the empathy condition y. The basic feature vector of a single trial of one participant consists of the z-standardised and baseline-corrected activity values for each muscle (Zygomaticus Major and Corrugator Supercilii). The activity of both muscles was analysed from 500 to 4000 ms after stimulus onset and integrated with a time constant of 100 ms, so that $x \in \mathbb{R}^{70}$ (35 time bins for each of the two muscles). Across individuals all trials for each facial expression were averaged separately for each condition.

Additionally, based on previous studies analysing facial EMG (Hess, Kappas, McHugo, Kleck, & Lanzetta, 1989), a handcrafted feature vector was calculated on the muscle sequences: the mean and the minimal and maximal activity in a trial as well as the variance, the skewness and the kurtosis of the muscle activity response.
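The six handcrafted features can be computed per muscle sequence as in the sketch below. The function name is hypothetical, and two details are assumptions not stated in the text: population (rather than sample) moments are used, and kurtosis is reported as excess kurtosis.

```python
from statistics import mean


def handcrafted_features(activity):
    """Summary features of one muscle's activity sequence in a trial."""
    n = len(activity)
    m = mean(activity)
    # Central moments (population versions; an assumption)
    m2 = sum((v - m) ** 2 for v in activity) / n
    m3 = sum((v - m) ** 3 for v in activity) / n
    m4 = sum((v - m) ** 4 for v in activity) / n
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    kurtosis = m4 / m2 ** 2 - 3.0 if m2 > 0 else 0.0  # excess kurtosis
    return {
        "mean": m,
        "min": min(activity),
        "max": max(activity),
        "variance": m2,
        "skewness": skewness,
        "kurtosis": kurtosis,
    }


# Illustrative sequence only
features = handcrafted_features([1.0, 2.0, 3.0, 4.0, 5.0])
print(features)
```

Computing these six values for each of the two muscles would give a 12-dimensional feature vector per trial.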

For classification, a random forest classifier and a support vector machine were used. The support vector machine classifies instances by building a separating hyperplane. The algorithm maximises the margin of the training instances of the two classes from this hyperplane. The solution can be expressed as a linear combination of a subset of the training data called support vectors that lie next to the separating hyperplane (Boser, Guyon, & Vapnik, 1992). To account for non-linearly separable data, we used the hinge loss function that gives back zero for correct classification and a value proportional to the distance from the margin for a wrong classification.
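The hinge loss can be illustrated in a few lines. This sketch uses the standard textbook form, in which labels are coded as +1 and −1 (which condition receives which code is an arbitrary assumption here) and the loss is zero only when an instance is classified correctly with a margin of at least one.

```python
def hinge_loss(label, decision_value):
    """Standard hinge loss for one instance.

    label: +1 or -1 (e.g. +1 = emotional, -1 = cognitive; coding assumed)
    decision_value: signed output of the decision function f(x)
    """
    return max(0.0, 1.0 - label * decision_value)


def mean_hinge_loss(labels, decision_values):
    """Average hinge loss over a set of instances."""
    losses = [hinge_loss(y, f) for y, f in zip(labels, decision_values)]
    return sum(losses) / len(losses)


print(hinge_loss(+1, 2.0))   # correct and outside the margin
print(hinge_loss(+1, 0.5))   # correct but inside the margin
print(hinge_loss(-1, 0.5))   # wrong side of the hyperplane
```

Instances on the wrong side of the hyperplane are penalised linearly in their distance from the margin, which is what allows a solution to exist when the classes overlap.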

A random forest is an ensemble classifier that builds several decision trees on sub-samples of the data and averages the results (Breiman, 2001). The use of many trees reduces the tendency of decision trees to overfit the training data. The samples were drawn with replacement (bootstrapping). To measure the quality of the splits, Gini impurity was used. The depth of the trees and the minimal number of instances for a leaf node were hyperparameters.
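Gini impurity, the split criterion named above, can be computed directly from the class labels in a node. The sketch below uses hypothetical function names and condition labels chosen for illustration.

```python
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a node: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left, right):
    """Size-weighted Gini impurity of the two child nodes of a split."""
    n = len(left) + len(right)
    return (len(left) / n) * gini_impurity(left) + (len(right) / n) * gini_impurity(right)


mixed = ["emotional", "cognitive", "emotional", "cognitive"]
print(gini_impurity(mixed))                      # maximally mixed two-class node
print(split_impurity(mixed[0::2], mixed[1::2]))  # split that separates the classes
```

A tree chooses, at each node, the split with the lowest weighted impurity; for two balanced classes the impurity ranges from 0.5 (fully mixed) to 0 (pure).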

To train and evaluate the machine learning classifiers, the data were split into training and test sets (70/30) with a randomly shuffled stratified three-fold cross-validation. The test instances were not used for training the models. For the tuning of the hyperparameters, a nested five-fold cross-validation inside the training data was used.
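The nested evaluation scheme can be sketched at the level of index bookkeeping. The code below reflects one reading of the description, namely three stratified random 70/30 splits as the outer loop and a stratified five-fold split of each training set as the inner loop; the function names, the seed, and the assumption of 40 averaged instances per condition are illustrative only.

```python
import random
from collections import defaultdict


def stratified_shuffle_split(labels, test_fraction=0.3, n_splits=3, seed=0):
    """Yield (train, test) index lists with class proportions preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    for _ in range(n_splits):
        train, test = [], []
        for indices in by_class.values():
            shuffled = indices[:]
            rng.shuffle(shuffled)
            n_test = round(len(shuffled) * test_fraction)
            test.extend(shuffled[:n_test])
            train.extend(shuffled[n_test:])
        yield sorted(train), sorted(test)


def stratified_k_fold(indices, labels, k=5, seed=0):
    """Yield (inner_train, validation) index lists for hyperparameter tuning."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx in indices:
        by_class[labels[idx]].append(idx)
    folds = [[] for _ in range(k)]
    for class_indices in by_class.values():
        shuffled = class_indices[:]
        rng.shuffle(shuffled)
        for pos, idx in enumerate(shuffled):
            folds[pos % k].append(idx)  # deal indices round-robin into folds
    for i in range(k):
        validation = sorted(folds[i])
        inner_train = sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        yield inner_train, validation


# Assumed data layout: 40 pictures, each averaged once per condition
labels = ["emotional"] * 40 + ["cognitive"] * 40
for train, test in stratified_shuffle_split(labels):
    for inner_train, validation in stratified_k_fold(train, labels):
        pass  # fit candidate hyperparameters on inner_train, score on validation
    # refit with the best hyperparameters on train, then evaluate once on test
    print(len(train), len(test))
```

Because hyperparameters are selected using only the inner folds, the outer test instances remain untouched until the final evaluation of each split.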

The aim of the machine learning analyses differs from the aim of the ANOVA approach. While the ANOVA shows that mimicry responses in the two conditions differ on average over all individuals, the machine learning analysis investigates whether or not a condition can be detected from the mimicry responses of a single individual (Bzdok, Altman, & Krzywinski, 2018). Further, while the ANOVA is based on the mean value of the mimicry response, the machine learning approach is based on a richer, high-dimensional representation of the mimicry response, using a 70-dimensional representation in the case of the basic feature vector, and information such as minimum, maximum, variance, skewness, and kurtosis in the case of the handcrafted feature vector. Compared to the other analyses, the machine learning approach thus allows differences between the mimicry responses in the two conditions to be detected with a much higher resolution.

Results

Behavioural measurement of cognitive and emotional empathy in the MET

On average, participants indicated a level of emotional empathy comparable to previously reported levels for healthy individuals (e.g. Hurlemann et al., 2010; Ritter et al., 2011). Similarly, their accuracy in the cognitive task (see Table 1) was comparable to previous research. One participant was excluded from all analyses of the empathy task due to a detection rate more than five standard deviations below the mean. Positive and negative expressions were not rated significantly differently – neither in the cognitive nor the emotional empathy task (see Table 1).

Table 1. Cognitive and emotional empathy values of the MET.

Women scored significantly higher (M = 77%, SD = 9%, 95% CI [74%, 81%]) than men (M = 71%, SD = 10%, 95% CI [67%, 74%]) on the cognitive empathy task, t = 2.75, p = .008, d = 0.68. In addition, women’s reaction times (Mdn = 4539 ms) were slightly lower than the reaction times of men (Mdn = 5042 ms), U = 432.0, p = .057, r = 0.23. There were no gender differences for the emotional empathy task.

Cognitive empathy was negatively correlated with AQ values, rs = −0.31, p = .010; the association with the perspective taking subscale of the IRI was not significant (rs = 0.17, p = .181). In line with earlier studies (e.g. Dziobek et al., 2008), a positive association of emotional empathy with trait empathic concern (subscale of IRI) was found, rs = 0.33, p = .008 (see Table 2 for all correlations of MET scores with subscales of IRI).

Table 2. Correlations of MET scores with subscales of IRI.

Facial mimicry

To test the general occurrence of mimicry across both empathy conditions, we used a repeated measures ANOVA with the muscle activity score as dependent variable and with the within-subject factors muscle site (Zygomaticus Major versus Corrugator Supercilii), valence of the expression (pos. and neg.) and time (100 ms bins).

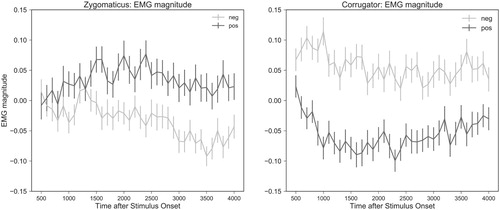

Across both empathy conditions, a significant interaction between muscle site and valence supports the assumption of a mimicry reaction, F(1, 67) = 34.24, p < .001, effect size = 0.304. Additionally, a main effect of valence was observed, F(1, 67) = 4.035, p = .049, effect size = 0.024, pointing towards a stronger facial reaction towards negative expressions.

Post hoc analyses revealed that, as expected for mimicry, the reaction of the Corrugator Supercilii across time was higher in response to negative facial expressions (M = 0.05, SD = 0.07, 95% CI [0.04, 0.07]) than to positive facial expressions (M = −0.06, SD = 0.07, 95% CI [−0.07, −0.04]), t = 6.27, p < .001, d = 0.77. Similarly, higher Zygomaticus Major activity was observed in response to positive emotions (M = 0.03, SD = 0.08, 95% CI [0.01, 0.05]) than to negative ones (M = −0.03, SD = 0.08, 95% CI [−0.05, −0.01]), t = 3.03, p = .004, d = 0.37. The activity of both muscles over time separated by valence is shown in Figure 2.

Facial mimicry and emotional empathy

The level of facial mimicry during the emotional empathy condition correlated positively with the person’s emotional empathy score, rs = 0.24, p = .049 (Table 3). The person’s emotional empathy total score in turn was positively correlated with mimicry of both negative (r = 0.26, p = .034) and positive emotions (r = 0.29, p = .019) in the emotional empathy condition. A mixed linear model with participants as random factor supported the positive relationship of the emotional empathy response and the mimicry response, b = 0.26, SE = 0.05, z = 5.02, p < .001. However, a model separating the responses by the valence of the facial expression and including the valence by mimicry interaction revealed that valence moderates the relationship of mimicry and emotional empathy. The model (see Table 4) performed significantly better than models without the interaction and pointed to a strong relationship for the individual’s reaction to positive facial expressions only. Post hoc correlation tests confirmed a positive relationship between mimicry and emotional empathy response for positive (r = 0.25, p = .045) but not for negative expressions (r = 0.15, p = .23). Additionally, a significant positive correlation was found between the average emotional empathy a photo elicited and the average mimicry the facial expression in this photo evoked, r = 0.57, p < .001, as can be seen in the corresponding figure.

Table 3. Correlations of MET scores with facial mimicry.

Table 4. Prediction of emotional empathy in the MET with dummy coded valence (0 – positive, 1 – negative).

To assess the association of mimicry with state empathy that is not explained by trait empathy, we calculated a step-wise linear regression model that controlled for emotional trait empathy as measured by the empathic concern scale of the IRI. In this model, empathic concern and mimicry both contributed significantly to the prediction of emotional empathy (see Table 5). As all responses from the emotional empathy task of the MET by each individual were aggregated, the valence of each item could not be included in the model in the same way as in the previous single-item analysis of emotional empathy measured by the MET and mimicry. There was no evidence for a direct association of any IRI subscale score with facial mimicry (see Table 6).

Table 5. Prediction of emotional empathy in the MET.

Table 6. Correlations of IRI subscale scores with facial mimicry.

Autistic traits

We calculated a multiple linear regression model with the emotional empathy MET score as dependent variable and the average mimicry response and the AQ score as independent variables to control for the influence of autistic traits. The emotional empathy score was associated positively with the mimicry response, b = 3.68, SE = 1.22, t = 3.03, p = .004, but not significantly associated with autistic traits, b = −0.04, SE = 0.03, t = −1.35, p = .182.
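The structure of such a two-predictor regression can be sketched with ordinary least squares solved through the normal equations. This is an illustration only: the helper names, the data, and the coefficients used to generate the outcome are hypothetical, and the sketch returns point estimates without the standard errors or t values reported above.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ols(predictors, outcome):
    """Least-squares coefficients [intercept, b1, b2, ...]."""
    rows = [[1.0] + list(p) for p in predictors]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical (mimicry, AQ) pairs. The outcome is generated from known
# coefficients (5.0, 3.0, -0.05) so that the fit can be checked by eye.
data = [(0.1, 12), (0.4, 20), (0.3, 15), (0.6, 9), (0.2, 25), (0.5, 18)]
scores = [5.0 + 3.0 * m - 0.05 * aq for m, aq in data]
intercept, b_mimicry, b_aq = ols(data, scores)
print(round(intercept, 3), round(b_mimicry, 3), round(b_aq, 3))
```

Entering the AQ score alongside mimicry means that the mimicry coefficient describes the association at a fixed level of autistic traits, which is the sense of "controlling for" in the analysis.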

Facial mimicry and cognitive empathy

As for emotional empathy, we calculated a linear mixed effects model with participant as random factor and valence-condition as random slope to estimate the main effects of mimicry (mean intensity) of the facial expressions as well as valence on the correct response rates for positive and negative facial expressions (two measures). Mimicry influenced the recognition performance positively (b = 0.10, SE = 0.05, z = 2.05, p = .041). The valence of the items did not influence cognitive empathy. Further, there was no significant interaction between valence and mimicry on cognitive empathy. There was no significant point-biserial correlation between the accuracy in the cognitive empathy task and the level of mimicry for each item in that task. The response time scaled with the level of mimicry, rs = 0.36, p = .003.

A mixed linear model with the log-transformed reaction times as dependent variable and with pictures as random factor supported the positive relationship of reaction time and mimicry response, b = 0.03, SE = 0.01, z = 2.39, p = .017. An analysis with participants as random factor did not reveal an association of reaction time and mimicry (p = .39).
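One way to write down the picture-level model is given below. The notation is an assumption made for illustration: the source names the dependent variable and the random factor but does not state the exact specification, so a random intercept per picture is assumed here, with i indexing responses and j indexing pictures.

```latex
\log(\mathrm{RT}_{ij}) = \beta_0 + \beta_1\,\mathrm{mimicry}_{ij} + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Under this reading, the reported b = 0.03 corresponds to \beta_1. If the natural logarithm was used, a one-unit increase in mimicry multiplies the expected reaction time by \exp(0.03) \approx 1.03, that is, by roughly 3%.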

Autistic traits

Controlling for individuals’ AQ scores by including them in the model did not affect the main effect of mimicry (b = 0.25, SE = 0.10, z = 2.52, p = .012). Further, we replicated previous findings (e.g. Dziobek et al., Citation2008) of a negative association between autistic traits and cognitive empathy (b = −0.01, SE = 0.002, z = −2.18, p = .029). There was no evidence for an influence of the interaction between mimicry and autistic traits on the cognitive empathy score (b = −0.02, SE = 0.01, z = −1.74, p = .082).

Emotional versus cognitive empathy

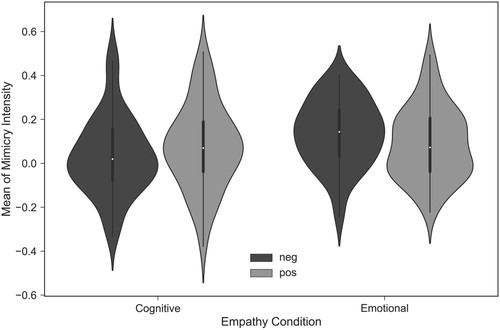

To compare the level of mimicry in the emotional and cognitive empathy task, a repeated measures ANOVA on the accumulated mimicry activity over time was calculated with valence and condition as within-factors. The analysis revealed a significant difference between the emotional and the cognitive empathy condition, F(1, 66) = 10.43, p = .002, η² = 0.03. There was no evidence for a main effect of valence. The interaction between valence and condition was not significant, F(1, 66) = 3.20, p = .078, η² = 0.02. The mean mimicry activity as a function of valence and condition is shown in the accompanying figure.
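The main effect of condition in a 2 × 2 within-subject design can be illustrated without a full ANOVA routine. For a factor with two levels, the main-effect F equals the squared paired t statistic computed on each participant's difference between the two condition means, with degrees of freedom (1, n − 1). The sketch below assumes one mean per participant and cell; the data layout, function name and values are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def condition_main_effect(cells):
    """F test for the two-level condition factor in a 2 x 2 within design.

    `cells` maps each participant to four cell means keyed by
    (condition, valence). Each participant's condition means are averaged
    over valence, and F is the squared paired t statistic of the
    emotional-minus-cognitive differences.
    """
    diffs = []
    for c in cells.values():
        emotional = (c["emotional", "positive"] + c["emotional", "negative"]) / 2
        cognitive = (c["cognitive", "positive"] + c["cognitive", "negative"]) / 2
        diffs.append(emotional - cognitive)
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t ** 2, (1, n - 1)


# Hypothetical cell means for three participants, invented for illustration.
example = {
    "p1": {("emotional", "positive"): 2.0, ("emotional", "negative"): 2.0,
           ("cognitive", "positive"): 1.0, ("cognitive", "negative"): 1.0},
    "p2": {("emotional", "positive"): 3.0, ("emotional", "negative"): 3.0,
           ("cognitive", "positive"): 1.0, ("cognitive", "negative"): 1.0},
    "p3": {("emotional", "positive"): 4.0, ("emotional", "negative"): 4.0,
           ("cognitive", "positive"): 1.0, ("cognitive", "negative"): 1.0},
}
f_value, dof = condition_main_effect(example)
print(f_value, dof)
```

The same shortcut applies to the valence factor by averaging over condition instead; the interaction would use the difference of differences per participant.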

Prediction of cognitive and emotional empathy by machine learning

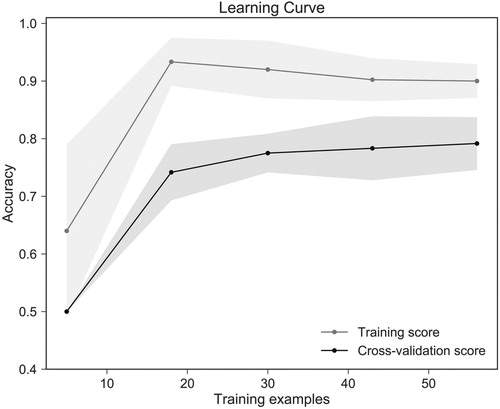

With the support vector machine, it was possible to predict the condition in which a facial expression had been presented with an accuracy of 70% from the muscle activity of the Zygomaticus Major and Corrugator Supercilii (basic feature vector). Based on the handcrafted features, the random forest reached a prediction accuracy of 72%. Figure 5 shows the learning curve of the random forest classifier.
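The idea of a learning curve, held-out accuracy as a function of the number of training trials, can be sketched as follows. To keep the example dependency-free, a simple nearest-centroid classifier stands in for the random forest and support vector machine used in the study; the two synthetic features, the effect size and all function names are assumptions made for illustration.

```python
import random


def make_trials(n, rng):
    """Synthetic (zygomaticus, corrugator) activity with a condition label."""
    trials = []
    for _ in range(n):
        label = rng.choice(["emotional", "cognitive"])
        shift = 1.0 if label == "emotional" else 0.0  # assumed effect, not from the study
        features = (rng.gauss(shift, 1.0), rng.gauss(-shift, 1.0))
        trials.append((features, label))
    return trials


def fit_centroids(train):
    """Mean feature vector per class."""
    sums, counts = {}, {}
    for (x1, x2), label in train:
        s = sums.setdefault(label, [0.0, 0.0])
        s[0] += x1
        s[1] += x2
        counts[label] = counts.get(label, 0) + 1
    return {lab: (s[0] / counts[lab], s[1] / counts[lab]) for lab, s in sums.items()}


def predict(centroids, features):
    """Label of the closest centroid (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda lab: sum((f - c) ** 2 for f, c in zip(features, centroids[lab])),
    )


def accuracy(centroids, test):
    """Proportion of held-out trials whose condition is predicted correctly."""
    hits = sum(predict(centroids, x) == y for x, y in test)
    return hits / len(test)


def learning_curve(trials, sizes, n_test):
    """Held-out accuracy after training on the first `size` remaining trials."""
    test, pool = trials[:n_test], trials[n_test:]
    return [(size, accuracy(fit_centroids(pool[:size]), test)) for size in sizes]


rng = random.Random(0)
curve = learning_curve(make_trials(1200, rng), sizes=[20, 100, 500, 1000], n_test=200)
for size, acc in curve:
    print(size, round(acc, 2))
```

A curve that flattens as the training set grows suggests that more data alone would add little, whereas a curve that is still rising suggests the classifier is limited by sample size.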

Discussion

The current study presents evidence for the mimicry of complex, context-embedded emotional expressions and its association with cognitive and emotional empathy. The results indicate a stronger association of mimicry with emotional than with cognitive empathy. These findings further support the view of mimicry as a primarily affiliative response to an emotional signal in social interaction (Hess & Fischer, Citation2013).

The individuals’ average emotional empathy score (aggregated over positive and negative stimuli) was strongly associated with the degree of mimicry towards both positive and negative emotion expressions. This result goes beyond the previously reported relationship of emotional empathy and mimicry of basic emotions (e.g. Dimberg et al., Citation2011; Sonnby-Borgstrom, Citation2002), because our study focused on emotional empathy elicited by the MET’s complex emotion pictures. Our results also extend recent findings of a study showing a relationship between the degree of emotional mimicry and pain empathy (Sun, Wang, Wang, & Luo, Citation2015) beyond negative emotions to positive emotions.

Mimicry and emotional empathy in the MET were associated, even after we controlled for empathic concern measured by the IRI. The questionnaire serves here as a measure of trait empathy, while the emotional empathy task of the MET measures empathy at the state level. Thus, the relationship of mimicry and emotional empathy is not reducible to the influence of personal attitude or motivation to empathise. As most research on mimicry and emotional empathy so far has focused on trait measures (e.g. Dimberg et al., Citation2011; Sonnby-Borgstrom, Citation2002), this finding further supports the assumption of mimicry as a mechanism to regulate affiliation within a given situation. Controlling for autistic traits also did not eliminate the association between emotional empathy and mimicry.

To better understand the relationship between mimicry and state empathy, we conducted mixed linear models that included the valence of the particular item. The analyses yielded a linear relationship between mimicry intensity and emotional empathy for positive valence only. If we considered negative emotion expressions only, individuals’ mimicry did not correlate with their reported empathy towards individuals in negative emotional contexts.

Furthermore, the correlation analysis aggregating over participants for each item showed the relationship for positive emotions not only on the individual level, but also on the stimulus level: positive facial expressions that elicited more emotional empathy across participants also led to a more pronounced mimicry response. This supports the assumption of mimicry as a reflection of the intensity with which the emotional stimulus solicits empathy instead of just a reflection of a general level of trait empathy of a person.

In contrast to previous findings (e.g. Sun et al., Citation2015) we found a linear relationship between mimicry intensity and emotional empathy only for positive valence. A possible explanation might be that the negative facial expressions used in this study (e.g. a painful expression in a hospital scene) led to a higher emotional involvement and triggered personal memories or associations, evident in a stronger mimicry response. Thus, the mimicry response might be confounded by a reactive response to the emotional scene, a problem that has previously been noted for mimicry (Hess & Fischer, Citation2014). This confounding might have led to a dissociation of the emotional empathy response and the facial muscles response and, thus, a weaker association.

Accuracy in inferring complex emotions in the MET was also positively related to mimicry. Thus, the current study extends previous findings of a positive relationship between mimicry and basic emotion recognition (e.g. Künecke, Hildebrandt, Recio, Sommer, & Wilhelm, Citation2014; Stel & van Knippenberg, Citation2008) to complex emotions. The understanding of complex emotions is considered to depend on context, culture and attribution of mental states, rather than distinctive facial expressions (Griffiths, Citation2004). Thus, the finding of valence-congruent mimicry of complex emotions and its relationship with recognition supports the assumption that individuals mimic the valence of an emotion in context.

It should be mentioned that not all studies have reported positive effects of mimicry on emotion recognition. A possible reason why we identified such a relationship might lie in the complexity of our emotional stimuli and the resulting difficulty of the emotion recognition task. Previous studies often reported ceiling effects regarding emotion recognition that might have reduced the association with mimicry. As the level of complexity in our study equals real-life situations rather than the simplicity of full-blown basic emotions, it can be assumed that mimicry comes into play in everyday life.

The mixed results in the literature regarding the relationship between cognitive empathy and mimicry might also be explained by overlooked personality traits. However, in our study the relationship of cognitive empathy and mimicry survived controlling for autistic traits. In accordance, we did not replicate previous findings of generally reduced spontaneous mimicry in individuals with high autistic traits. This might be due to the fact that we measured a non-clinical sample instead of either individuals with an autism diagnosis (McIntosh et al., Citation2006; Oberman, Winkielman, & Ramachandran, Citation2009; Stel, van den Heuvel, et al., Citation2008) or extremely high or low values on the AQ (Hermans, van Wingen, Bos, Putman, & van Honk, Citation2009).

In line with the observation that more studies in the mimicry field found a relationship with emotional than with cognitive empathy, we also found a higher level of mimicry in the MET emotional empathy condition than in the cognitive empathy condition. It seems plausible that the emotional empathy condition triggered an affiliative intent and thus enhanced mimicry (Hess & Fischer, Citation2014). This result is in accordance with a study indicating that the intensity of mimicry can be modulated by the task and the resulting goal of the participant (Cannon, Hayes, & Tipper, Citation2009). The participants in that study only showed mimicry when they were instructed to infer the emotion of facial expressions, not when they reported on the colour of the picture frame.

In our study, the effect of the emotional versus the cognitive condition on the amount of mimicry seemed much stronger for negative valence. A possible explanation for this might be that the idea of emotional empathy triggered by the request to “feel with someone” is more closely connected to showing empathy and sympathy for negative emotions than for positive ones.

We also used machine learning to analyse the predictive value of mimicry: the specific MET empathy condition (recognition versus feeling with) could be successfully predicted via a random forest and via a support vector machine (Figure 5). This suggests a dissociable pattern of muscle activity in response to the task condition. The facial expression seems to be distinct depending on the motivation of the participant to empathise versus recognise an emotion, as the classifiers were able to detect the task condition based on the muscle response. The aspect of differentiable mimicry responses has been neglected so far. Our findings encourage further research into different patterns of the mimicry response.

Figure 5. Learning curve of the random forest predicting the empathy condition (cognitive versus emotional).

Contrary to what was expected, the cognitive empathy task of the MET did not correlate with the perspective taking (PT) subscale of the IRI. There are several possible explanations for this lack of correlation, among others the different task formats, i.e. performance test versus questionnaire. Questionnaires require the ability to introspect and to abstract from single events to general trends (Dziobek et al., Citation2008). Further, questionnaires are more likely than performance tests such as the MET to be biased by the tendency to give answers in a socially desirable manner (Van de Mortel, Citation2008). This is a special concern for topics with clear societal expectations about the “right” and morally esteemed answers like empathy.

Further, the MET measures performance in cognitive empathy and, accordingly, has been shown to differentiate between individuals struggling with cognitive empathy such as those with autism and healthy controls (e.g. Dziobek et al., Citation2008, Citation2011). In contrast, the IRI PT subscale targets mainly motivational aspects of cognitive empathy (e.g. “I try to look at everybody’s side of a disagreement before I make a decision”). Thus, it is not surprising that the cognitive empathy task of the MET as a measure of capacity and the PT subscale of the IRI as a measure of motivational aspects only correlate weakly.

Furthermore, the two measures also differ regarding the valence of their items: The IRI is limited mostly to negative situations, whereas the MET measures empathy for negative as well as for positive emotions to an equal extent. This is also reflected in a trend-level correlation between the PT scale of the IRI and the cognitive task of the MET for negative items. Thus, the cognitive empathy MET score measures an additional dimension (empathy for positive emotions), which is not covered by the IRI at all. This might also contribute to the weak correlation of the two measures regarding cognitive empathy.

Our study was designed in line with the new view of mimicry as context-dependent and valence-based by presenting pictures of complex emotions embedded in a context. It could be argued that the static pictures used do not match the rich contexts of real social interactions. While this is true and more research in actual social interaction settings is needed, we used pictures depicting individuals in diverse contexts that show a variety of complex emotions with their faces and with their bodies and gestures. In addition, we used a balanced number of positive and negative emotions while most studies with dynamic stimuli have focused on basic emotions, which only include one positive emotion, i.e. happiness (Hyniewska & Sato, Citation2015; Sato et al., Citation2008). Most research on mimicry and emotional empathy focused on negative emotions, e.g. in the context of pain empathy paradigms or by using self-report measures of empathy biased towards negative emotion response options. To our knowledge, this is the first study that gives equal attention to negative and positive emotions. Future research should explore the relationship between mimicry and empathy in real social interactions.

In sum, we found evidence for an important role of mimicry in both emotional and cognitive empathy. The relationship between mimicry and emotional empathy seems stronger than the one between mimicry and cognitive empathy. Yet, with our design we can only report an association and not test for causality. Nonetheless, the present research provides strong evidence for a context-dependent view on mimicry and its affiliative role.

Acknowledgement

We would like to thank Katharina Bergunde and Christoph Klebl for help with data collection. We acknowledge support by the Open Access Publication Fund of Humboldt-Universität zu Berlin.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Adelmann, P. K., & Zajonc, R. B. (1989). Facial efference and the experience of emotion. Annual Review of Psychology, 40(1), 249–280.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington, DC: Author.

- Baron-Cohen, S., & Wheelwright, S. (2004). The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders, 34(2), 163–175.

- Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17.

- Barsalou, L. W., Niedenthal, P. M., Barbey, A. K., & Ruppert, J. A. (2003). Social embodiment. In B. H. Ross (Ed.), Psychology of learning and motivation, Vol. 43 (pp. 43–92). San Diego, CA: Academic Press, Elsevier Science.

- Blair, R. J. R. (2005). Responding to the emotions of others: Dissociating forms of empathy through the study of typical and psychiatric populations. Consciousness and Cognition, 14(4), 698–718.

- Blairy, S., Herrera, P., & Hess, U. (1999). Mimicry and the judgment of emotional facial expressions. Journal of Nonverbal Behavior, 23(1), 5–41.

- Boser, B. E., Guyon, I. M., & Vapnik, V. N. (1992). A training algorithm for optimal margin classifiers. In Proceedings of the fifth annual workshop on computational learning theory, 5 (pp. 144–152), Pittsburgh, ACM.

- Breiman, L. (2001). Random forests. Machine Learning, 45(1), 5–32.

- Bush, L. K., Hess, U., & Wolford, G. (1993). Transformations for within-subject designs: A Monte Carlo investigation. Psychological Bulletin, 113(3), 566.

- Bzdok, D., Altman, N., & Krzywinski, M. (2018). Points of significance: Statistics versus machine learning. Nature Methods, 15, 233–234.

- Cannon, P. R., Hayes, A. E., & Tipper, S. P. (2009). An electromyographic investigation of the impact of task relevance on facial mimicry. Cognition & Emotion, 23(5), 918–929.

- Davis, M. H. (1983). Measuring individual differences in empathy: Evidence for a multidimensional approach. Journal of Personality and Social Psychology, 44(1), 113–126.

- Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology, 19(6), 643–647.

- Dimberg, U., Andréasson, P., & Thunberg, M. (2011). Emotional empathy and facial reactions to facial expressions. Journal of Psychophysiology, 25(1), 26–31.

- Dziobek, I., Fleck, S., Kalbe, E., Rogers, K., Hassenstab, J., Brand, M., … Convit, A. (2006). Introducing MASC: A movie for the assessment of social cognition. Journal of Autism and Developmental Disorders, 36(5), 623–636.

- Dziobek, I., Preißler, S., Grozdanovic, Z., Heuser, I., Heekeren, H. R., & Roepke, S. (2011). Neuronal correlates of altered empathy and social cognition in borderline personality disorder. Neuroimage, 57(2), 539–548.

- Dziobek, I., Rogers, K., Fleck, S., Bahnemann, M., Heekeren, H. R., Wolf, O. T., & Convit, A. (2008). Dissociation of cognitive and emotional empathy in adults with Asperger syndrome using the multifaceted empathy test (MET). Journal of Autism and Developmental Disorders, 38(3), 464–473.

- Eisenberg, N., & Fabes, R. A. (1990). Empathy: Conceptualization, measurement, and relation to prosocial behavior. Motivation and Emotion, 14(2), 131–149.

- Fan, Y., Duncan, N. W., de Greck, M., & Northoff, G. (2011). Is there a core neural network in empathy? An FMRI based quantitative meta-analysis. Neuroscience and Biobehavioral Reviews, 35(3), 903–911.

- Fischer, A., Becker, D., & Veenstra, L. (2012). Emotional mimicry in social context: The case of disgust and pride. Frontiers in Psychology, 3, 475.

- Fischer, A., & Hess, U. (2017). Mimicking emotions. Current Opinion in Psychology, 17, 151–155.

- Freitag, C. M., Retz-Junginger, P., Retz, W., Seitz, C., Palmason, H., Meyer, J., … von Gontard, A. (2007). Evaluation der deutschen version des Autismus-Spektrum-Quotienten (AQ) – die Kurzversion AQ-K. Zeitschrift für Klinische Psychologie und Psychotherapie, 36(4), 280–289.

- Fridlund, A. J., & Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology, 23(5), 567–589.

- Griffiths, P. (2004). Is emotion a natural kind? In R. C. Solomon (Ed.), Thinking about feeling: Contemporary philosophers on emotions (pp. 233–249). New York, NY: Oxford University Press.

- Hermans, E. J., van Wingen, G., Bos, P. A., Putman, P., & van Honk, J. (2009). Reduced spontaneous facial mimicry in women with autistic traits. Biological Psychology, 80(3), 348–353.

- Hess, U., & Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. International Journal of Psychophysiology, 40(2), 129–141.

- Hess, U., & Fischer, A. (2013). Emotional mimicry as social regulation. Personality and Social Psychology Review, 17(2), 142–157.

- Hess, U., & Fischer, A. (2014). Emotional mimicry: Why and when we mimic emotions. Social and Personality Psychology Compass, 8(2), 45–57.

- Hess, U., Kappas, A., McHugo, G. J., Kleck, R. E., & Lanzetta, J. T. (1989). An analysis of the encoding and decoding of spontaneous and posed smiles: The use of facial electromyography. Journal of Nonverbal Behavior, 13(2), 121–137.

- Hofelich, A. J., & Preston, S. D. (2012). The meaning in empathy: Distinguishing conceptual encoding from facial mimicry, trait empathy, and attention to emotion. Cognition & Emotion, 26(1), 119–128.

- Hurlemann, R., Patin, A., Onur, O. A., Cohen, M. X., Baumgartner, T., Metzler, S., … Kendrick, K. M. (2010). Oxytocin enhances amygdala-dependent, socially reinforced learning and emotional empathy in humans. Journal of Neuroscience, 30, 4999–5007.

- Hyniewska, S., & Sato, W. (2015). Facial feedback affects valence judgments of dynamic and static emotional expressions. Frontiers in Psychology, 6, 150.

- Künecke, J., Hildebrandt, A., Recio, G., Sommer, W., & Wilhelm, O. (2014). Facial EMG responses to emotional expressions are related to emotion perception ability. PLoS ONE, 9(1), e84053.

- McIntosh, D. N. (2006). Spontaneous facial mimicry, liking and emotional contagion. Polish Psychological Bulletin, 37(1), 31.

- McIntosh, D. N., Reichmann-Decker, A., Winkielman, P., & Wilbarger, J. L. (2006). When the social mirror breaks: Deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Developmental Science, 9(3), 295–302.

- Neufeld, J., Ioannou, C., Korb, S., Schilbach, L., & Chakrabarti, B. (2016). Spontaneous facial mimicry is modulated by joint attention and autistic traits. Autism Research, 9(7), 781–789.

- Niedenthal, P. M., Brauer, M., Halberstadt, J. B., & Innes-Ker, Å. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition & Emotion, 15(6), 853–864.

- Oberman, L. M., Winkielman, P., & Ramachandran, V. S. (2007). Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Social Neuroscience, 2(3–4), 167–178.

- Oberman, L. M., Winkielman, P., & Ramachandran, V. S. (2009). Slow echo: Facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Developmental Science, 12(4), 510–520.

- Paulus, C. (2009). Saarbrücker Persönlichkeits-Fragebogen (SPF). Based on the interpersonal reactivity index (IRI). Saarbrücken: Universität des Saarlandes. Retrieved from http://hdl.handle.net/20.500.11780/3343/

- Ponari, M., Conson, M., D’Amico, N. P., Grossi, D., & Trojano, L. (2012). Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion, 12(6), 1398–1403.

- Ritter, K., Dziobek, I., Preißler, S., Rüter, A., Vater, A., Fydrich, T., … Roepke, S. (2011). Lack of empathy in patients with narcissistic personality disorder. Psychiatry Research, 187(1), 241–247.

- Rychlowska, M., Cañadas, E., Wood, A., Krumhuber, E. G., Fischer, A., & Niedenthal, P. M. (2014). Blocking mimicry makes true and false smiles look the same. PLoS ONE, 9(3), e90876.

- Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., & Szatkowska, I. (2016a). Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Frontiers in Psychology, 7, 1853.

- Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K., & Szatkowska, I. (2016b). Do dynamic compared to static facial expressions of happiness and anger reveal enhanced facial mimicry? PLoS ONE, 11(7), e0158534.

- Sato, W., Fujimura, T., Kochiyama, T., & Suzuki, N. (2013). Relationships among facial mimicry, emotional experience, and emotion recognition. PLoS ONE, 8(3), e57889.

- Sato, W., Fujimura, T., & Suzuki, N. (2008). Enhanced facial EMG activity in response to dynamic facial expressions. International Journal of Psychophysiology, 70(1), 70–74.

- Schmidt, K.-H., & Metzler, P. (1992). Wortschatztest: Wst. Weinheim: Beltz Test.

- Seibt, B., Mühlberger, A., Likowski, K. U., & Weyers, P. (2015). Facial mimicry in its social setting. Frontiers in Psychology, 6(576), 1104.

- Shamay-Tsoory, S. G. (2011). The neural bases for empathy. The Neuroscientist, 17(1), 18–24.

- Shamay-Tsoory, S. G., Aharon-Peretz, J., & Perry, D. (2009). Two systems for empathy: A double dissociation between emotional and cognitive empathy in inferior frontal gyrus versus ventromedial prefrontal lesions. Brain, 132(3), 617–627.

- Sonnby-Borgstrom, M. (2002). Automatic mimicry reactions as related to differences in emotional empathy. Scandinavian Journal of Psychology, 43(5), 433–443.

- Stel, M., van Baaren, R. B., & Vonk, R. (2008). Effects of mimicking: Acting prosocially by being emotionally moved. European Journal of Social Psychology, 38(6), 965–976.

- Stel, M., van den Heuvel, C., & Smeets, R. C. (2008). Facial feedback mechanisms in autistic spectrum disorders. Journal of Autism and Developmental Disorders, 38(7), 1250–1258.

- Stel, M., & van Knippenberg, A. (2008). The role of facial mimicry in the recognition of affect. Psychological Science, 19(10), 984–985.

- Sun, Y.-B., Wang, Y.-Z., Wang, J.-Y., & Luo, F. (2015). Emotional mimicry signals pain empathy as evidenced by facial electromyography. Scientific Reports, 5, 16988.

- Tager-Flusberg, H., & Sullivan, K. (2000). A componential view of theory of mind: Evidence from Williams syndrome. Cognition, 76(1), 59–90. doi:10.1016/S0010-0277

- Van de Mortel, T. F. (2008). Faking it: Social desirability response bias in self-report research. Australian Journal of Advanced Nursing, 25(4), 40.

- van der Graaff, J., Meeus, W., de Wied, M., van Boxtel, A., van Lier, P. A. C., Koot, H. M., & Branje, S. (2016). Motor, affective and cognitive empathy in adolescence: Interrelations between facial electromyography and self-reported trait and state measures. Cognition & Emotion, 30(4), 745–761.

- Walter, H. (2012). Social cognitive neuroscience of empathy: Concepts, circuits, and genes. Emotion Review, 4(1), 9–17.

- Wolf, I., Dziobek, I., & Heekeren, H. R. (2010). Neural correlates of social cognition in naturalistic settings: A model-free analysis approach. Neuroimage, 49(1), 894–904.

- Woodbury-Smith, M. R., Robinson, J., Wheelwright, S., & Baron-Cohen, S. (2005). Screening adults for Asperger syndrome using the AQ: A preliminary study of its diagnostic validity in clinical practice. Journal of Autism and Developmental Disorders, 35(3), 331–335.