ABSTRACT

Here, we present a module to introduce student peer review of laboratory reports to engineering students. Our findings show that students were positive and felt that they had learnt quite a lot from this experience. The most important part of the module was the classification scheme. The scheme was constructed to mimic the way an expert would argue when making a fair judgement of a laboratory report. Hence, our results may suggest that the success of the module design comes from actively engaging students in work that is more related to ‘arguing like an expert’ than to merely supplying feedback to peers, which would then imply a somewhat new direction for feedback research. For practitioners, our study suggests that important issues to consider in the design are (i) a clear and understandable evaluation framework, (ii) anonymity in the peer-review process and (iii) a small external motivation.

1. Introduction

Written communication is one of the engineering skills where there are strong indications of a mismatch between the skills actually developed during university studies and the higher expectations found at workplaces (Nair, Patil, and Mertova 2009); a development that seems to be part of a general decline of student writing abilities in society (Carter and Harper 2013). Hence, there is an obvious need to train and progressively improve writing skills during university studies to reach the desired level, and a number of methods have been suggested for doing this in different disciplines (Berry and Fawkes 2010; Carr 2013; Leydon, Wilson, and Boyd 2014; Walker and Sampson 2013). All these methods have in common that they try to optimise feedback and/or peer-review processes to improve writing skills. Feedback has been reported as one of the most important factors influencing student learning (Hattie and Timperley 2007). However, just providing students with feedback on their performance is not enough (Sadler 2010; Price et al. 2010). Students need to understand the feedback given, have an idea of the desired standard of performance, and engage with the feedback in order to improve their performance. A promising way forward seems to be to involve students in assessment practices in various ways (Falchikov 2005). Being involved in assessment and feedback will give students opportunities to develop an understanding of what is required of them, the standard level and how their work is assessed. Peer assessment, where students assess the work of their peers, has in recent years become more and more common in higher education (Falchikov 2007), and increased use is also recommended in early university studies (Nulty 2011).
Besides that, evidence suggests that formative peer assessment and peer feedback are more positive for learning than peer grading (Liu and Carless 2006), or in other words, peer review seems to work best when it focuses on formative rather than summative assessment. An important step in this process is for students to learn about evaluation frameworks, or assessment criteria, and to practice using these frameworks (Walker and Williams 2014; Rust, Price, and O’Donovan 2003). The ability to judge the quality of their own and others’ work is also a competence that students need to develop (Cowan 2010). In addition, there is evidence that performing a peer review is more beneficial for the student's own writing than receiving feedback from others (Lundstrom and Baker 2009; Mulder, Pearce, and Baik 2014). It seems that evaluating others’ work and producing comments for a peer review involves a reflective process where students actively compare their own work with that of others (Nicol, Thomson, and Breslin 2014). Providing reviews of others’ work involves critical thinking, reflection, evaluation and application of assessment criteria, which may explain the benefits compared to merely receiving feedback. Reviewing peers’ work enables students to practice the cognitive skills they will need when improving their own writing, which further sheds light on the benefits of peer review (Cho and MacArthur 2011).

In this work, we present a module design where peer review of lab reports in a standard course is used in connection with an evaluation framework designed to mimic how an expert would argue when assessing a report. By actively engaging the students in assessment work through the peer-review process, we hoped to increase the students’ self-awareness of the standards in such a way that they were able to start to internalise expert judgement abilities about report assessment and writing. The module design is described below together with our evaluation based on a student survey.

2. Module design

The module was originally introduced in response to an observation that too many students were not able to respond adequately to the given feedback on their written lab reports. At that time, lab reports on two different lab exercises were written jointly in groups of two students and corrected by teaching assistants, who gave direct feedback to the students about how to improve their reports. Discussions among teachers concluded that many students did not have a sufficiently deep understanding of how to write good reports and how to avoid making serious mistakes in their reports. Also, a few students attempted to pass the examination with as little effort as possible without taking proper responsibility for their own learning, which created additional and unnecessary work for the assistants. To address these issues, it was decided to redesign the module using peer review to encourage all students to work actively with their learning about how to write reports.

The revised laboratory module included 8 hours of lab work with two different lab exercises (same as before), an individual lab report and a peer-review assignment. The students worked in groups of two during the lab work and each of them was asked to (i) write a report on one of the two lab exercises and (ii) write a peer-review report on another student's lab report from the other lab exercise. This ensured that all students were obliged to go sufficiently deep into their understanding of the subjects covered by both lab exercises.
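The pairing rule described above can be illustrated with a minimal sketch. The text does not say how reviewers were actually selected, so the rotation used here, the exclusion of the lab partner as reviewer, and the function name `assign_reviewers` are all assumptions made for illustration only.

```python
from typing import Dict, List, Tuple


def assign_reviewers(groups: List[Tuple[str, str]]) -> Dict[str, str]:
    """Map each author to the student who reviews that author's report.

    Each group is (author_of_lab_a_report, author_of_lab_b_report).
    A report on one lab is reviewed by a student who wrote about the
    other lab. Assumption: the reviewer is taken from the next group
    in the list, so that nobody reviews their own lab partner.
    """
    if len(groups) < 2:
        raise ValueError("need at least two lab groups to avoid partner reviews")
    reviewer_of: Dict[str, str] = {}
    n = len(groups)
    for i, (author_a, author_b) in enumerate(groups):
        next_a, next_b = groups[(i + 1) % n]
        reviewer_of[author_a] = next_b  # lab A report goes to a lab B author
        reviewer_of[author_b] = next_a  # lab B report goes to a lab A author
    return reviewer_of
```

With this rotation every student writes one report and reviews exactly one report from the other lab exercise, which matches the workload described for the module.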

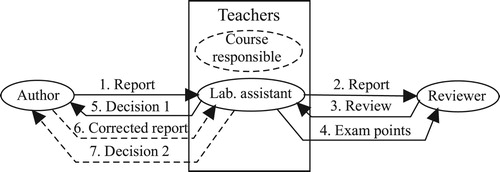

The procedures followed during the peer-review process had many similarities to the ones used by scientific journals and are shown schematically in Figure 1. Both authors and reviewers wrote their reports (reviews) using pseudonyms in order to create the feeling of a safe environment for all participating students. The students were also informed that many people feel uncomfortable when giving away their work for review for the first time and that they should keep their pseudonyms secret. To preserve the anonymity between students during the process, all communication went through the teaching assistant. The teacher responsible for the course only handled the rare cases when a disagreement occurred between a student and the assistant. Furthermore, to reduce the workload of the assistant, there were specific assistant instructions for each of the different steps. These were:

The assistant made a 30-second check of the original report for readability and overall impression (step 1) before sending it out to another student for review (step 2). For practical reasons, the assistant could optionally decide to send out the report either to two reviewers (e.g. if students from a previous year wanted to redo their peer review) or, in exceptional cases, to a teacher (e.g. if the report was of too poor quality or for some reason had been sent in very late).

The assistant read the review report (step 3) and looked for major errors in the lab report that had been missed in the review report before grading the review report (step 4). A good review report gave a few bonus points on the written exam in the course corresponding to about 6% of the total examination.

The assistant sent back a decision for acceptance or non-acceptance of the lab report to the author together with the review report(s) from the reviewer(s) and possible additional major errors found by the assistant (step 5).

If necessary, the report was improved according to the recommendations (step 6) and sent back to the assistant for a final decision (step 7).

Figure 1. The main steps of information transfer between parties during the peer-review process. Numbers indicate the timing of the different steps and dashed lines show optional parts of the process.

From a student perspective, this was the first time they wrote a peer-review report and it was necessary to explain why peer review is important for an engineer and to supply some guidance in this work. For this purpose, a half-lecture module (45 minutes) was developed with the specific goal of making the students familiar with the review process and how to make proper judgements on report quality. The lecture module included (i) an introduction to why peer assessment is important for engineers, (ii) a short introduction to how peer review is used by scientific journals, in companies and in this course, (iii) a motivation for the evaluation framework used during the peer review and (iv) a peer discussion in groups of 3–5 students about how to categorise errors/faults in a fictive lab report handed out in advance. At the end of the lecture, an exemplar of what a peer-review report on the fictive lab report could have looked like was handed out to the students.

The evaluation framework used in the peer review was based on a classification scheme for categorising the severity of various kinds of errors and faults in a lab report together with an overall judgement scheme based on the total severity of all errors and faults found in the report. The following four categories were used to categorise the severity (see Appendix for the full classification scheme):

Category 1: Incorrect or misleading reporting (very serious errors/faults)

Category 2: Non-acceptable but not misleading reporting (serious errors/faults)

Category 3: Mistakes that need to be corrected (smaller errors/faults)

Category 4: Acceptable reporting that can be improved (details)

The students were also informed about two main problems when categorising errors, namely that (i) not all types of errors/faults could be found in the scheme, and in such cases, they had to make their own judgement of the severity according to the given scale and (ii) different persons could place the same error in slightly different categories even if they had read the same report, which reflects that human beings are inherently different. The students were also told that this is not necessarily wrong, given the imprecise way in which the categories are defined. The level for finally accepting the reports to pass the examination was set to a few errors/faults in category 4. A basic idea behind this way of using categories in the evaluation was to give the students an overall idea of some basic principles behind well-informed judgements by experts and, thereby, train the students in making such judgements themselves.
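As an illustration of how the four categories and the acceptance level fit together, the following is a minimal sketch. The full classification scheme is in the Appendix and is not reproduced here. The numeric limit for "a few" category 4 remarks (`MAX_CATEGORY_4_REMARKS = 3`) and all identifier names are assumptions, not values taken from the course.

```python
from collections import Counter
from enum import IntEnum
from typing import Iterable


class Severity(IntEnum):
    INCORRECT_OR_MISLEADING = 1  # Category 1: very serious errors/faults
    NOT_ACCEPTABLE = 2           # Category 2: serious errors/faults
    NEEDS_CORRECTION = 3         # Category 3: smaller errors/faults
    CAN_BE_IMPROVED = 4          # Category 4: details


# Assumption: the text only says "a few"; 3 is a placeholder value.
MAX_CATEGORY_4_REMARKS = 3


def is_acceptable(remarks: Iterable[Severity]) -> bool:
    """Return True if a report passes under the assumed acceptance rule.

    A report passes only if no remark falls in categories 1 to 3 and the
    number of category 4 remarks does not exceed the assumed limit.
    """
    counts = Counter(remarks)
    has_serious = any(
        counts[s] > 0 for s in Severity if s < Severity.CAN_BE_IMPROVED
    )
    return (
        not has_serious
        and counts[Severity.CAN_BE_IMPROVED] <= MAX_CATEGORY_4_REMARKS
    )
```

The sketch deliberately leaves the categorisation of each individual error to the reviewer, since the text stresses that this step requires human judgement and that different reviewers may reasonably choose neighbouring categories.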

3. Method of investigation

3.1. Participants and context

The module design was used and developed over 5 years for a group of second-year undergraduate students in the Electrical Engineering program at KTH Royal Institute of Technology, Stockholm, Sweden, with about 40 participants each year. The students in this study had earlier training in writing reports as part of a first-year project course in electrical engineering (Lilliesköld and Östlund 2008), but in that case feedback on the reports was supplied by teachers. Hence, the module described here was the first time the students met peer review during their university studies. This group of students will also make a written opposition on a written report as part of their third-year bachelor thesis project, which means that the module described here fits well into a written communication unit of an engineering skills progression model such as the CDIO model (Crawley 2002).

3.2. Data collection and analysis

The participants in the latest group of students (year 2014) completed an anonymous online survey directly related to peer review (63% response rate, 27 respondents) combined with the standard anonymous course survey at KTH. The part of the survey that evaluated the peer-review process included 15 rating items on an 11-point scale (from 0 = strongly disagree to 10 = strongly agree, with 5 as neutral) and 3 free answer questions, which together constituted 42% of the total course survey. The statements in the survey were chosen to give information about four different aspects related to the module, namely the students’ views on (i) their report writing ability, (ii) their peer-review ability, (iii) the importance of different teaching activities for their understanding of peer review and (iv) their emotional reactions to the process. This rating description of the students’ views was complemented with a qualitative content analysis (Elo and Kyngäs 2008) of the free answer questions. Students from previous years (2010–2013) also completed a short anonymous survey about the module as part of the standard course survey, and the answers to the free answer questions in those surveys were included in the qualitative content analysis. Thus, the empirical material in the study comprised both quantitative and qualitative data, and in that respect the study is inspired by a mixed method design (Cresswell and Clark 2018). Although the quantitative and qualitative data were analysed separately, together they gave a more nuanced picture of students’ perception of the peer review. The main reason for doing a combined investigation was to ensure that both majority and minority aspects were properly handled in the evaluation. A pure rating description risks neglecting minority aspects, while a content analysis alone risks giving an improper balance between different aspects. Finally, the lab assistants were asked to give their opinions about the process.

4. Results

Our sample size (27 respondents) was slightly below the minimum usually considered valid for a statistical investigation within the field (see e.g. Cohen, Manion, and Morrison 2011). Furthermore, to avoid going too deeply into the long-standing debate about details in the validity of statistical interpretations of rating items (Jamieson 2004; Lantz 2013; Norman 2010), we have chosen to illustrate the answers to the rating items in our survey by the full range of the responses among the respondents, by the median value and by the range of the second and third quartiles. This gives a fair representation of our results and reflects the students’ opinions rather well.
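The descriptive summary chosen above (full range, median, and the span of the second and third quartiles) can be computed with a few lines of code. The sketch below is illustrative only: the quartile convention (`method="inclusive"`) is an assumption, since the text does not state which convention was used, and the function name `summarise_ratings` is hypothetical.

```python
from statistics import median, quantiles
from typing import Dict, Sequence


def summarise_ratings(ratings: Sequence[int]) -> Dict[str, float]:
    """Summarise answers to one rating item on the 0 to 10 scale.

    Returns the full range (min, max), the median, and the lower and
    upper quartiles that delimit the second and third quartiles.
    Assumption: quartiles use the 'inclusive' interpolation method.
    """
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return {
        "min": min(ratings),
        "q1": q1,
        "median": median(ratings),
        "q3": q3,
        "max": max(ratings),
    }
```

These five values correspond to the outer bars, the box and the middle bar used in the figures for the rating items. No means or standard deviations are computed, in line with the decision to avoid treating the ratings as interval data.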

4.1. Rating items

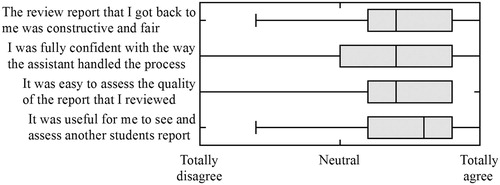

The first rating items concerned the students’ perception of their understanding of report writing and peer review after the module and a summary is shown in Figure 2. Although there was a certain individual variation, most students stated that they had a reasonable understanding of what is needed to write a good report and how to assess reports after the module. At the same time, they felt that they needed some more practice on both of these issues and that the training in the course helped them to understand how to assess reports and to a lesser extent how to write good reports. This is fully consistent with what one would expect, given that these students had been trained to write reports in a previous course but had no previous training in reviewing.

Figure 2. Student ratings on statements concerning their understanding of report writing and peer-review skills on an 11-point scale from total disagreement to total agreement. The outer bars give the range of all answers, the boxes show the range of the second and third quartiles among the answers and the middle bar is the median value. On the last question, the median value coincides with the right end of the box.

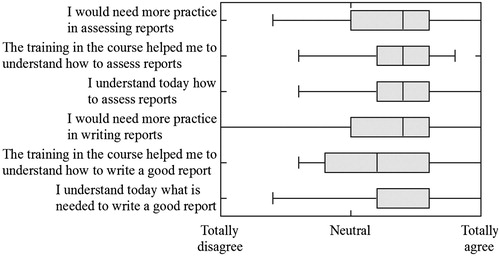

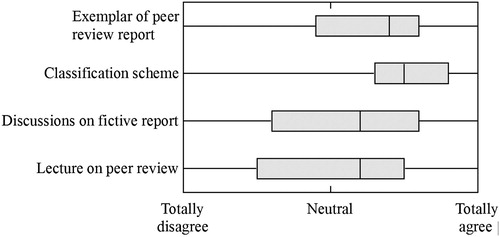

The student view on what was most important for their learning and understanding of peer review is summarised in Figure 3, where they were asked to rate how important different parts of the training were for their understanding of report assessment. The students’ rating of the classification scheme clearly stood out in this analysis, showing that they considered it to be the most important part of their learning experience. Furthermore, when analysing the original data in more detail, the width of the range of answers to this statement was to a large extent created by one single student, who totally disagreed with the statement. This student also answered other questions in the survey in a clearly different way compared to other students. He/she rated all the course activities about peer review very low (almost totally disagreed with their importance for understanding peer review), indicated a large self-confidence in understanding writing and reviewing (totally and almost totally agreed with the statements about understanding) and felt no need for further training in writing and reviewing (totally and almost totally disagreed with the statements about the need for further training). If the answer from this student was removed from the data, the range of answers became much smaller (from slightly below neutral to totally agree) and the variation in the answers decreased, which strengthened the conclusion that the classification scheme was the most important part of the module in this course.

Figure 3. Student view on the importance of different parts of the training when asked to rate statements like ‘The exemplar of a peer-review report was important for my understanding of how to assess reports’, etc. The figure should be interpreted in the same way as Figure 2.

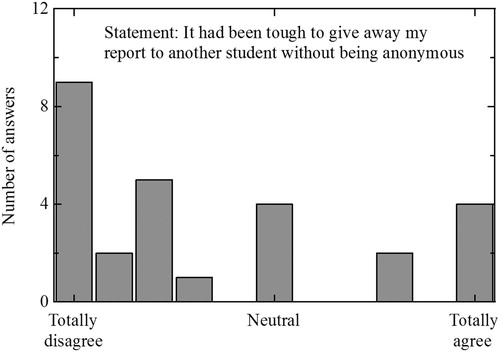

From the answers to the four rating items related to the student reactions to the peer-review process, the general opinion among the students was that they appreciated the peer-review learning experience and the way it was handled. On all of these statements, there were only a few students that answered on a level below neutral, which indicated that the learning experience with the peer-review process was positive for the students. However, the student views were highly polarised when it came to their opinions about the necessity of author anonymity in the peer-review process. There was a substantial minority group (6 out of 27 students in our investigation), which would have felt clearly uncomfortable to give away their written work to another student for review without being anonymous. On the other hand, the majority did not think it was important at all and four students were neutral to the statement. It is obviously important to be aware of this polarisation among the students when introducing peer review for the first time.

4.2. Qualitative content analysis

The student reactions to the peer-review process were also analysed by a qualitative content analysis of the free answer questions in the survey, including all the comments given by the students. All written comments (60 in total) were divided into themes and subthemes and then analysed in more detail. From this analysis, we identified four themes as described below [brackets give either the respondent number in the 2014 survey or the year of the comment].

4.2.1. Review of others’ reports

Most of the comments were related to this theme and its subthemes, and it was clear from the comments that the students appreciated the chance to ‘ … see how others did’ [R17] and ‘that you get the opportunity to review a lab report, which I seldom have met before’ [R18]. Hence, the students considered the novel experience by itself to be interesting. To some students, it was clear that the experience with the peer review also helped their own learning, as exemplified by the following two comments related to critical thinking and self-reflection, respectively:

To let us assess each other's report was a good module. One should find as many errors as possible in the report and then you learn how to think critically. [R24]

It gives an opportunity to train how to make an assessment of someone else's report. This also trains one's own ability to realise faults in the own writing. [R25]

Another aspect of letting the students review reports is that they realised the importance of subject knowledge for the review process, as phrased for example in the comment ‘to be able to write a good peer review you need to know the subject’ [R24]. Students also specifically mentioned that it is important to ‘follow the peer review scheme’ [R13], i.e. that the classification scheme was important. Finally, students also related their peer-review experience in the course to professional knowledge, as indicated by the comments ‘it is good to know how the teacher is correcting’ [R16] and ‘it gave a good insight about how you can work with reports in real workplaces’ [R10].

4.2.2. Feedback on own report

There were two main issues related to the received feedback mentioned in the comments – constructive criticism on one's own work and the feeling of unfair assessment. These very different reactions are related to how well the criticism was written and how individual students perceived the criticism. A positive comment that related both to constructive criticism and improvements was phrased:

The feedback that I got on my report was very good, so I got an opportunity to improve my own report writing. [R19]

The negative comments concerned either the feeling of unfairness due to non-objective criticism in the review report or due to differences in different persons’ interpretation of the evaluation criteria. Students were disappointed both when the review reports were too short and when they went too much into detail, as seen in these two comments:

… I would have liked to see a longer evaluation of my own work even if it is not possible to prevent that people do a bad job with their review. [R25]

Most of us have never done anything similar before and can, therefore, be very critical to details. Most of us seem to have corrected the reports with an iron hand instead of comparing the author's report against the goals. It must be clearer that the reviewer should correct against the goals. [2012]

The grading of the review report also gave the students some feedback on the quality of their peer review, although one student commented on the need for ‘feedback on what was good and what was bad with the peer review you wrote’ [2012].

4.2.3. External reward

In the module design, students got an external reward for a good peer-review report in the form of bonus points on the exam in the course (6% of the total exam). This motivated all students to write their review report in due time. 40% of the students were able to achieve the maximum bonus points on their review reports. One student even wrote ‘bonus points have been of great use when making the peer review’ [R23].

4.2.4. Anonymity

It was clear from the rating items that there was a strong polarisation within the group of students concerning the necessity of anonymity during the process. This was further reflected in the comments and one student summarised it in a good way:

Pseudonyms: Personally, I have no problem giving out my own name, but I understand if others don't like it. So, it was good for them. [2010]

One student also made us aware of a conflict between anonymity and the default settings in standard software, which we in the future need to communicate to students in order to preserve anonymity in the review process.

… The assistant ought to check that the documents, which he sends between the participants, do not contain personal identifying information, as e.g. the author field in pdf documents (ctrl-d). [Some software] saves the name of the logged in user in each document. This can be changed in the settings. [R3]

5. Discussion

In this paper, we reported on a module where engineering students were introduced to peer review of a lab report using a classification scheme to find things to improve in their peer's report. The classification scheme was invented to concretise for students what they should look for when evaluating a laboratory report and at the same time give them a fundamental understanding about how severe different types of errors were for the laboratory report as a whole. The inspiration for the scheme came from the concepts of fuzzy sets (Klir and Yuan 1995) and multi-dimensionality, the necessity to include both physics skills and writing skills in the overall evaluation and an intention to make the complex evaluation of a laboratory report easily understandable for engineering students.

It is clear from both the quantitative and the qualitative data that the students in our study thought that the peer review was a positive learning experience and that the opportunity to review others’ reports gave new insights. By actively engaging students in critical thinking about reports through the peer-review process, we speculate that they simultaneously built up their own basic knowledge about how objective judgement is made, which in turn should help them in their own writing. Reading the report and producing comments require students to think critically, reflect, evaluate and make judgements, which is beneficial for their learning (Cho and MacArthur 2011). There is also evidence that when engaging in activities where students get insights into how other students solve the same task, they become aware of their own level of knowledge and performance (Weurlander et al. 2012; Nicol, Thomson, and Breslin 2014). This process, called inner feedback, seems more important for their learning than the external feedback given by peers (Lundstrom and Baker 2009; Mulder, Pearce, and Baik 2014; Nicol and Macfarlane-Dick 2006) and it seems reasonable to hypothesise that training of inner feedback will be beneficial for learning.

The feedback given on their own report was appreciated when the students perceived it to be fair and constructive. Constructive comments were helpful to improve the report. However, some students felt that the feedback was unfair and/or too short, and consequently did not help them to improve their report. These experiences highlight the importance of clear guidance to students and a discussion about what constructive feedback entails. Furthermore, our findings suggest that it is important to foster a nonthreatening environment when introducing peer review or peer assessment. For some students in our study, the fact that the peer review was anonymous was important. They would not have liked the peer review if it had been open. Having other students scrutinise and judge your knowledge and the quality of your work can cause anxiety and this needs to be taken into account when introducing peer review or peer assessment (Cartney 2010). A way to minimise anxiety and stress related to being scrutinised by peers for the first time seems to be to ensure anonymity.

External reward in the form of bonus points on the final exam was appreciated by the students and probably motivated many to put some extra effort into the peer-review task. There is, however, evidence that suggests that external rewards may decrease intrinsic motivation and thereby influence learning in an unwanted way (Deci, Koestner, and Ryan 2001). If students engage in a learning task merely for the external reward, and if they interpret the reward as a way to control their behaviour (to do the peer review), they might not benefit fully from the experience. In our case, the reward was rather small (bonus points corresponding to 6% of the total examination in the course) and could be considered as a way to encourage students to dare to engage in something completely new to them. However, care should be taken in how rewards are used for learning, and the effect should be further explored within this context.

5.1. Classification scheme

The students clearly rated the classification scheme much higher than any of the other parts of their training and this fact requires a more thorough discussion. One reason for the success of the classification scheme may be that it gave students a concrete tool, which they could easily use in their own assessment work. However, the most important learning contribution is expected to come from the peer-review process that forced the students to work actively with the peer assessment criteria of the report (Rust, Price, and O’Donovan 2003). In line with this, the use of exemplars and student involvement in assessment using criteria have been shown to be beneficial for students’ understanding of the assessment procedure (Sadler 2010; Price et al. 2010). An important part of the evaluation framework consisted of making judgements based on multiple criteria and combining them into an overall judgement. This mimics the way an expert would argue when writing down a justification for a fair overall judgement. Hence, instead of relying on a direct holistic judgement method, the students were actively engaged in finding arguments for their overall judgement. We therefore speculate that it was the ‘arguing like an expert’ component of the evaluation framework that the students found so attractive when making their peer review. In educational research, there is an ongoing discussion about rethinking feedback practices in higher education from a peer-review perspective (Sadler 2010; Nicol, Thomson, and Breslin 2014). Our study seems to support this line of thought, with the additional twist that it could be the active work with a task that trains the ability to find proper arguments for a judgement that leads to the actual learning. In such a case, the design used in this study could correspond to a direct training of the students’ inner feedback mechanisms.
This would then suggest that the evaluation framework acts in a formative way, which would distinguish it from the evaluation-oriented approach often found in traditional rubrics.

Another aspect that may suggest that the evaluation framework used here is particularly suitable for engineering students (or presumably, in a broader sense, for science students) is that students in this study are familiar with handling problems by first making an analysis of the situation and then putting together the different parts into an overall synthesis. From this, we speculate that the structure of the classification scheme may be more attractive and clear for engineering students than trying to make a holistic judgement. We had one single opposing answer saying that the classification scheme was not at all important for the understanding of assessment. Since this student showed a large self-confidence in report writing and peer review on other statements in our survey, it may suggest that the classification scheme is important for the initial learning of how to assess reports, but that already experienced writers may have gained the assessment ability through other learning paths. However, we cannot draw any safe conclusions from the answers of one single student and this is still a fully open question. The only thing we can safely state at the present stage is that the two issues raised above in our discussion about the classification scheme clearly call for further investigation.

5.2. Implications for practice

An obvious advantage of the presented module design is that it is fairly simple to introduce it into already existing courses containing lab work and traditional teacher-corrected reports. Hence, the teacher can create an enhanced and deeper student learning about report writing without too much additional effort. The additional initial work concerns preparing the lecture on peer review, writing down a classification scheme with errors/faults that guide students in their peer review and informing the assistants of their role in the process. The additional workload during the course comes from the one-hour introduction lecture on peer review, while the total workload with the reports is roughly unchanged and should even be possible to reduce with the aid of appropriate software. However, there was a tendency for the assistants to put in more work than was actually required. The reason for this was probably that in the design used here, there was no clear division between formative and summative assessment of the lab reports when seen from the assistant's perspective. In a lab course with more labs, a better design would probably be to use the first lab reports for formative assessment by peer review as here and only use the last lab report for summative assessment by the assistant.

As seen from our quantitative data, anonymity turns out to be very important for some of the students, while the majority have no problem at all showing their real names on the reports. From the qualitative data, it is also clear that this is an aspect where student views are highly polarised. In our view, it is important to keep the authors and reviewers anonymous to each other when students review each other's reports for the first time. This creates an inclusive and safe environment for those students who are frightened by the process, and anonymity can help them to overcome their fear and build their self-confidence for the future. When students later become more acquainted with peer review, anonymity should probably be much less of a problem. When implementing this in practice, we let the students freely choose pseudonyms from a long, predefined list of names (with a rough gender balance and also including names from other ethnic backgrounds) to ensure that students get different pseudonyms and that they are free to choose a pseudonym they feel comfortable with.

A final aspect is that although most students wrote quite good peer-review reports, a few concentrated on details while missing major errors/faults in the lab reports. In such cases, the assistants needed to add comments to ensure a sufficiently high quality of the final lab report that was used for grading. In a modified design, this could be mitigated by, e.g., requiring more review reports for each lab report, thereby reducing the consequences of a single review report of poor quality.
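
As an illustration only, the modified design with several review reports per lab report could be administered with a small script such as the sketch below. It is not part of the module as it was run; the function name `assign_reviewers`, the parameter `reviews_per_report` and the example pseudonyms are hypothetical. The sketch shuffles the list of pseudonyms and lets each report be reviewed by the next few students in the shuffled order, so that nobody reviews their own report and every student writes the same number of reviews.

```python
import random


def assign_reviewers(pseudonyms, reviews_per_report=2, seed=None):
    """Map each author pseudonym to the pseudonyms of its reviewers.

    Hypothetical helper: reviewers are picked by a cyclic shift over a
    shuffled list, so no author reviews their own report and every
    student ends up writing exactly `reviews_per_report` reviews.
    """
    n = len(pseudonyms)
    if not 1 <= reviews_per_report < n:
        raise ValueError("need at least 1 review and fewer reviews than students")

    # Shuffle a copy so the pairing does not follow the sign-up order.
    order = list(pseudonyms)
    random.Random(seed).shuffle(order)

    assignment = {}
    for i, author in enumerate(order):
        # The next `reviews_per_report` students in the circle review this report.
        assignment[author] = [
            order[(i + k) % n] for k in range(1, reviews_per_report + 1)
        ]
    return assignment


if __name__ == "__main__":
    # Example pseudonyms are invented for this illustration.
    for author, reviewers in assign_reviewers(
        ["Alex", "Kim", "Robin", "Sam", "Noor"], reviews_per_report=2, seed=1
    ).items():
        print(author, "is reviewed by", ", ".join(reviewers))
```

With two or more reviews per report, a single review that misses a major error/fault is more likely to be compensated by another review, at the cost of a proportionally higher review workload for each student.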

Acknowledgements

We thank the lab teachers in the course, Olof Götberg and Viktor Jonsson, for their comments and suggestions for improvements during the development of this module.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes on contributors

Magnus Andersson planned, prepared and developed the module and was also responsible for the course. He holds a Ph.D. degree in materials physics and his main research interests are in superconductivity and complex problems. He has more than 20 years of teaching experience and is presently appointed as pedagogic developer at KTH.

Maria Weurlander assisted in the discussions and writing of the article. She holds a Ph.D. degree in medical education and her research is focused on student learning in higher education and designing for enhanced learning. She has more than 10 years of experience in educational development in higher education.

ORCID

Magnus Andersson http://orcid.org/0000-0002-9858-6235

Maria Weurlander http://orcid.org/0000-0002-3027-514X

References

- Berry, D. E., and K. L. Fawkes. 2010. “Constructing the Components of a Lab Report Using Peer Review.” Journal of Chemical Education 87 (1): 57–61.

- Carr, J. M. 2013. “Using a Collaborative Critiquing Technique to Develop Chemistry Students’ Technical Writing Skills.” Journal of Chemical Education 90 (6): 751–754.

- Carter, M. J., and H. Harper. 2013. “Student Writing: Strategies to Reverse Ongoing Decline.” Academic Questions 26 (3): 285–295.

- Cartney, P. 2010. “Exploring the Use of Peer Assessment as a Vehicle for Closing the Gap Between Feedback Given and Feedback Used.” Assessment & Evaluation in Higher Education 35 (5): 551–564.

- Cho, K., and C. MacArthur. 2011. “Learning by Reviewing.” Journal of Educational Psychology 103 (1): 73–84.

- Cohen, L., L. Manion, and K. Morrison. 2011. Research Methods in Education. 7th ed. Abingdon: Routledge.

- Cowan, J. 2010. “Developing the Ability for Making Evaluative Judgements.” Teaching in Higher Education 15 (3): 323–334.

- Crawley, E. F. 2002. “Creating the CDIO Syllabus, a Universal Template for Engineering Education.” 32nd annual conference on Frontiers in Education (FIE 2002), Boston, November 6–9, (2) F3F:8–13.

- Creswell, J. W., and V. L. P. Clark. 2018. Designing and Conducting Mixed Methods Research. 3rd ed. London: SAGE Publications Ltd.

- Deci, E. L., R. Koestner, and R. M. Ryan. 2001. “Extrinsic Rewards and Intrinsic Motivation in Education: Reconsidered Once Again.” Review of Educational Research 71 (1): 1–27.

- Elo, S., and H. Kyngäs. 2008. “The Qualitative Content Analysis Process.” Journal of Advanced Nursing 62 (1): 107–115.

- Falchikov, N. 2005. Improving Assessment Through Student Involvement: Practical Solutions for Aiding Learning in Higher and Further Education. London: RoutledgeFalmer.

- Falchikov, N. 2007. “The Place of Peers in Learning and Assessment.” In Rethinking Assessment in Higher Education: Learning for the Longer Term, edited by D. Boud and N. Falchikov, 128–143. London: Routledge.

- Hattie, J., and H. Timperley. 2007. “The Power of Feedback.” Review of Educational Research 77 (1): 81–112.

- Jamieson, S. 2004. “Likert Scales: How to (Ab)use Them.” Medical Education 38 (12): 1217–1218.

- Klir, G. J., and B. Yuan. 1995. Fuzzy Sets and Fuzzy Logic: Theory and Applications. Upper Saddle River, NJ: Prentice Hall. Chapter 1: From classical (crisp) sets to fuzzy sets: a grand paradigm shift.

- Lantz, B. 2013. “Equidistance of Likert-Type Scales and Validation of Inferential Methods Using Experiments and Simulations.” The Electronic Journal of Business Research Methods 11 (1): 16–28.

- Leydon, J., K. Wilson, and C. Boyd. 2014. “Improving Student Writing Abilities in Geography: Examining the Benefits of Criterion-Based Assessment and Detailed Feedback.” Journal of Geography 113 (4): 151–159.

- Lilliesköld, J., and S. Östlund. 2008. “Designing, Implementing and Maintaining a First Year Project Course in Electrical Engineering.” European Journal of Engineering Education 33 (2): 231–242.

- Liu, N., and D. Carless. 2006. “Peer Feedback: The Learning Element of Peer Assessment.” Teaching in Higher Education 11 (3): 279–290.

- Lundstrom, K., and W. Baker. 2009. “To Give Is Better Than to Receive: The Benefits of Peer Review to the Reviewer’s Own Writing.” Journal of Second Language Writing 18 (1): 30–43.

- Mulder, R. A., J. M. Pearce, and C. Baik. 2014. “Peer Review in Higher Education: Student Perception Before and After Participation.” Active Learning in Higher Education 15 (2): 157–171.

- Nair, C. S., A. Patil, and P. Mertova. 2009. “Re-engineering Graduate Skills – a Case Study.” European Journal of Engineering Education 34 (2): 131–139.

- Nicol, D., and D. Macfarlane-Dick. 2006. “Formative Assessment and Self-regulated Learning: A Model and Seven Principles of Good Feedback Practice.” Studies in Higher Education 31 (2): 199–218.

- Nicol, D., A. Thomson, and C. Breslin. 2014. “Rethinking Feedback Practices in Higher Education: A Peer Review Perspective.” Assessment & Evaluation in Higher Education 39 (1): 102–122.

- Norman, G. 2010. “Likert Scales, Levels of Measurement and the ‘Laws’ of Statistics.” Advances in Health Sciences Education 15 (5): 625–632.

- Nulty, D. D. 2011. “Peer and Self-assessment in the First Year of University.” Assessment & Evaluation in Higher Education 36 (5): 493–507.

- Price, M., K. Handley, J. Millar, and B. O’Donovan. 2010. “Feedback: All that Effort, but What Is the Effect?” Assessment & Evaluation in Higher Education 35 (3): 277–289.

- Rust, C., M. Price, and B. O’Donovan. 2003. “Improving Students’ Learning by Developing Their Understanding of Assessment Criteria.” Assessment & Evaluation in Higher Education 28 (2): 147–164.

- Sadler, D. R. 2010. “Beyond Feedback: Developing Student Capability in Complex Appraisal.” Assessment & Evaluation in Higher Education 35 (5): 535–550.

- Walker, J. P., and V. Sampson. 2013. “Argument-driven Inquiry: Using the Laboratory to Improve Undergraduates’ Science Writing Skills Through Meaningful Science Writing, Peer-Review and Revision.” Journal of Chemical Education 90 (10): 1269–1274.

- Walker, M., and J. Williams. 2014. “Critical Evaluation as an Aid to Improved Report Writing: A Case Study.” European Journal of Engineering Education 39 (3): 272–281.

- Weurlander, M., M. Söderberg, M. Scheja, H. Hult, and A. Wernerson. 2012. “Exploring Formative Assessment as a Tool for Learning: Students’ Experiences of Different Methods of Formative Assessment.” Assessment & Evaluation in Higher Education 37 (6): 747–760.

Appendix: Classification scheme

Category 1: Incorrect or misleading reporting (very serious errors/faults)

Incorrect description of the physics or incorrect equations

Physical properties have incorrect units or units are totally missing

Incorrect calculations and/or numerical errors (answer is misleading)

Conclusions are incorrect or totally missing

Summary is misleading or totally missing

References to others’ work used in the report are missing or incorrect

Category 2: Non-acceptable but not misleading reporting (serious errors/faults)

Physical property has missing unit, but correct unit is obvious from the text

Figures have no grading of the axes or text on the axes

Figures and/or tables have no captions

Small numerical errors or too many digits in a numerical answer

Front page is missing or badly formatted

Summary does not include essential information or is vaguely written

References to others’ work are not sufficiently clear to be easily understood

One or more essential details are missing in the report and must be added

Too many spelling and grammatical errors that disturb the general impression

Generally hard to follow the argumentation in the report

Category 3: Mistakes that need to be corrected (smaller errors/faults)

Irritatingly large or too small text in figures and/or tables

Grading of axes in figures is not aesthetically appealing

Front page is somewhat badly formatted

Summary is in part somewhat vaguely written

References to others’ work can be understood, but should be better presented

One or two less important details are missing in the report and need to be added

Some spelling and/or grammatical errors that slightly disturb the impression

In some parts difficult to follow the argumentation

Category 4: Acceptable reporting that can be improved (details)

Slightly too large or too small text in figures and/or tables

Minor aesthetic errors in the layout of the report

Front page can be somewhat improved

Summary can be somewhat improved

One or two minor details are missing in the report and could optionally be added

A few minor spelling and/or grammatical errors

Slightly difficult to follow the argumentation in the report