ABSTRACT

Return on investment (ROI) has become part of the policymaking toolkit, particularly pertinent for activities like school-based career guidance deemed optional by some policymakers. There are institutions supporting ideal ROI methods alongside an academic critique, but little research on how ROI has been applied in practice in a guidance setting. In this systematic review, we document 32 ROI studies across nine countries that address either school-based guidance or one of three congruent fields: widening participation in education, behaviour in schools and adult career guidance. We find the corpus highly heterogeneous in methods and quality, leading to problems in comparability. We argue for a pragmatic approach to improving consistency and the importance of policymakers’ capacity for critically reading ROI studies.

Introduction

School-based career guidance supports individuals to “discover more about work, leisure and learning and to consider their place in the world and plan for their futures” (Hooley et al., 2018; Mann et al., 2020; Covacevich et al., 2021). It is an educational activity that has been identified by numerous policymakers as important to the achievement of policy goals in relation to the labour market, education, social equity, health and wellbeing, the environment and justice (Robertson, 2021). Following the Covid crisis, key international bodies including the Organisation for Economic Co-operation and Development (OECD), the International Labour Organization (ILO) and the United Nations Educational, Scientific and Cultural Organization (UNESCO) came together into an international policy Working Group on Career Guidance (WGCG) to argue for investment in career guidance (WGCG, 2021).

Yet, despite high-level championing, career guidance has often struggled to establish itself. The WGCG (2021) reports conclude that in “too many countries access to guidance is insufficient, particularly for those who are in greatest need”. The seeming contradiction faced by career guidance of being simultaneously celebrated by some as a policy cure-all whilst struggling to access sufficient funding and support is perhaps partly because it is the focus of a small community who are not wholly contained within any one policy area (Hooley & Godden, 2022).

Consequently, career guidance frequently finds itself scrabbling for resources and seeking to demonstrate its value to multiple possible masters. In such cases career guidance, as with many other educational interventions, is asked to demonstrate the evidence underpinning its claims and ultimately that it offers a return on investment (ROI), or equivalently, that it has net positive value in cost-benefit analyses (CBA) or benefit-cost analyses (BCA). Since the early 2000s policy actors have been more explicit about the need to build this kind of evidence, with the OECD (2004) calling for more information on outcomes and cost-effectiveness as part of its international review of guidance policy and practice.

UK policymakers and their delivery bodies have returned to the question of ROI for school-based career guidance in recent years. This has seen a slew of new projects and papers investigating the costs and potential economic benefits of guidance in Northern Ireland (Hughes & Percy, 2022), Wales (Careers Wales, 2021), Scotland (Hooley et al., 2021) and England (Percy & Tanner, 2021). The UK is not alone in this concern, with considerable energy also devoted to guidance and ROI within the European Union (Cedefop, 2020), as well as emphasis in the related fields of US school choice (Page et al., 2019) and international perspectives on technical education (UNESCO & National Centre for Vocational Education Research [NCVER], 2020).

Given the growing efforts in this area among both policymakers and researchers, it is valuable to step back and consider what ROI really means and to take stock of the state of the art in this area.

What is ROI?

At its most straightforward, a fiscal perspective can be adopted, assessing whether public investment in career guidance will deliver a return on investment to the Treasury. In other words, if a government’s finance ministry invests in career guidance, what does it get back for its investment in terms of changes to future government outgoings (e.g. payments for public services or welfare) and incomings (e.g. tax)? Another way of presenting ROI-type insights is a breakeven analysis, which is helpful when ROI point estimates are hard to justify. This identifies what level of impact on a particular outcome needs to be believed for an intervention’s cost to be recouped. If this assumption feels intuitively plausible or conservative, then funders may be convinced the programme will add value. In this paper, we focus on ROI analyses that generate point estimates, excluding those that only produce breakeven analyses.
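The difference between a point estimate and a breakeven analysis can be illustrated with a minimal sketch. It assumes ROI is expressed as benefit divided by cost (so 1.0 is breakeven), and every figure and function name below is hypothetical, chosen only for illustration rather than drawn from any study in the corpus.

```python
def roi_ratio(cost, benefit):
    """Return per unit invested (1.0 is breakeven under this convention)."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return benefit / cost


def fiscal_benefit(impact, gain_per_success):
    """Expected fiscal gain per participant.

    impact: extra probability of the target outcome caused by the programme.
    gain_per_success: fiscal value (tax gained plus spending avoided) of one
    additional participant achieving the outcome.
    """
    return impact * gain_per_success


def breakeven_impact(cost, gain_per_success):
    """Smallest impact at which the fiscal benefit equals the cost."""
    return cost / gain_per_success


# Hypothetical inputs, not taken from the reviewed studies.
COST_PER_PARTICIPANT = 100
GAIN_PER_SUCCESS = 5000
ASSUMED_IMPACT = 0.05  # five extra successes per 100 participants

point_estimate = roi_ratio(
    COST_PER_PARTICIPANT, fiscal_benefit(ASSUMED_IMPACT, GAIN_PER_SUCCESS)
)
threshold = breakeven_impact(COST_PER_PARTICIPANT, GAIN_PER_SUCCESS)

print(f"ROI point estimate: {point_estimate:.2f}x")
print(f"Impact needed to break even: {threshold:.1%}")
```

The point estimate requires the analyst to defend the assumed impact, whereas the breakeven figure only asks the reader whether an impact of at least that size is believable.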

An extensive methodological literature and supporting organisations have developed to facilitate ROI-related practice. In the UK, the Green Book (Her Majesty’s Treasury [HMT], 2020) sets out the government’s aspirations and preferred methods for policy appraisal and evaluation, including Social Cost Benefit Analysis. The UK’s What Works Network was launched in 2013 and comprises several government-supported centres to improve the quality of quantitative analysis and financial evaluation available to guide policy and practice. In the US, the Center for Benefit-Cost Studies in Education supports good practice approaches and conducts research; Washington State Institute for Public Policy (WSIPP) is supported by the Washington State Legislature to document technical BCA methods and apply them to a wide range of policies (WSIPP, 2019). Levin et al. (2018) are into their third edition of a popular textbook which addresses BCA methods in the field of education.

At the same time, an academic critique exists, raising both methodological and conceptual challenges. Methodological challenges include what to measure and how to measure it, including the incorporation of outcomes like wellbeing, health or environmental improvement (e.g. Pathak & Dattani, 2014). Conceptual challenges include: whether ROI research and the quantitative evidence base behind it can ever fully, or even substantially, capture the impact of an educational activity such as career guidance; the limitations of ROI as developed in a social dynamic characterised by inequality and the potentially distorting funding structures of a marketised context; and the elevation of more easily-measured outcomes and inputs over others (e.g. House, 1996; Percy & Dodd, 2021; Watts, 1995).

All these critiques are serious and need to be engaged with as ROI practice in career guidance is developed. However, the realpolitik is such that it is difficult to abandon the method altogether and conclude that ROI is too morally, methodologically and philosophically problematic to pursue. To do so would be to leave policy analysts to continue to calculate ROI without critical scrutiny from the academic community.

It is also possible to make a defence of ROI as a methodology, even where its common applications to date might be unsatisfactory. In a world where resources are constrained, it is reasonable for policymakers to seek ways to compare different kinds of policies and interventions and to consider what the relative impacts of them will be. Seen as one among several inputs into decision-making, ROI provides a framework for surfacing and challenging assumptions made in such comparisons, where alternatives may lead to overreliance on rhetoric or political convenience.

Article contribution

In this article we want to provide a concrete description of what ROI practice looks like in career guidance and related fields, both to guide efforts to improve practice in the future and to ground the ongoing ethical and policy debates about ROI: what costs and returns are people measuring, what calculations are they performing and what level of ROI are they finding? The article is centred on ROI as it relates to career guidance in secondary education (termed “school-based career guidance” here for short). Our scope is also limited to publicly available studies, recognising that policymakers may also draw on in-house, unpublished ROI analyses, such as those produced by the Government Economic Service in the UK. School-based career guidance was therefore the primary field of study for this article. However, the volume of public ROI studies related specifically to school-based career guidance turned out to be too limited and so we expanded our field of study into three additional closely aligned fields to gather a substantial body of ROI studies that related to education, training, lifelong learning, and life or career planning more generally, resulting in a total of four fields across which to gather ROI calculations.

The three congruent fields, identified through a literature review explained further in the Method section, are: adult career guidance or support, particularly as delivered in public employment services; widening participation in education; and behaviour in schools. Except for the first one, these have all proved challenging fields for generating high quality ROI estimates, both in terms of securing resource and commitment to run evaluations and in terms of implementing an effective methodology. They typically comprise small, diverse, often personalised interventions that target multiple long-term outcomes, mediated via a complex theory of change. As such it is perhaps unsurprising that there has been little dedicated systematic review analysis on them to date, unlike for instance the 11 fields analysed by WSIPP (2019).

The approach to ROI within each of these four fields (the original one plus the three congruent to it) will be of direct interest to scholars and advocates working in each of them, enabling them to draw on studies that are typically hard to collate – only 15 of the 32 studies in our corpus are conveniently indexed to an academic database and we relied on 24 experts to source the whole list. But the findings should also be of interest to anyone reflecting on the use of ROI as part of evaluation and impact assessment or policy advocacy, particularly within smaller policy areas with less developed evidence bases. As we will demonstrate, the term ROI hides a multitude of approaches; attempts to calculate ROI can proceed from very different assumptions and be characterised by varied research quality. In this article we will draw out the approaches that are employed and use this grounded description of what ROI actually is to present a critical discussion of how ROI could be used more effectively and consistently in the future.

Method

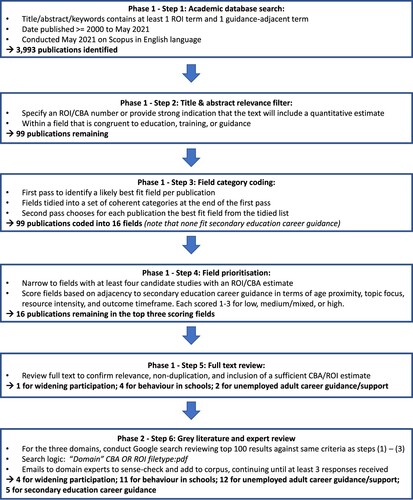

We undertook the review in three phases. The first phase was a structured search of academic databases to identify ROI calculations in the field of school-based career guidance and adjacent fields, out of which we prioritised three fields adjacent to school-based career guidance with sufficient literature to inform the research. Note that no academic papers with ROI calculations on school-based career guidance were identified at this stage. The second phase drew on expert input and general web searches to build as strong a corpus as possible around the four target fields (the original one plus the three congruent to it). With the corpus identified, the third phase involved coding the corpus on criteria grouped around six topics to drive subsequent analysis. Figure 1 provides an overview of the five steps comprising Phase 1 and one step comprising Phase 2.

Phase 1: structured search

The Scopus database was used to drive a search for papers published from 2000 to mid-2021. The search required paper titles, abstracts or keywords to have at least one term that reflected return on investment and at least one term that suggested that the paper was focused on career guidance or on an adjacent area.

The search terms for ROI were CBA, ROI, SROI, BCA, “cost-benefit analysis”, “cost benefit analysis”, “return on investment” or “benefit cost analysis”. We did not include “cost effectiveness”, “cost utility”, “economic evaluation” or “financial value” as these terms do not consistently reflect studies that estimate a monetary value for both costs and benefits (Levin et al., 2018). The search terms for school-based career guidance and possible adjacent fields were education, guidance, apprenticeship, college, school, university, TVET, career, careers, employer engagement, employment or unemployment.
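The two-part inclusion rule (at least one ROI term and at least one field term) can be sketched as a local filter over a record's combined title, abstract and keywords. This is an illustrative approximation only: the function names are hypothetical, whole-word case-insensitive matching is an assumption, and the matching behaviour of the Scopus search engine itself may differ.

```python
import re

# Term lists as stated in the text above.
ROI_TERMS = [
    "CBA", "ROI", "SROI", "BCA",
    "cost-benefit analysis", "cost benefit analysis",
    "return on investment", "benefit cost analysis",
]
FIELD_TERMS = [
    "education", "guidance", "apprenticeship", "college", "school",
    "university", "TVET", "career", "careers", "employer engagement",
    "employment", "unemployment",
]


def contains_any(text, terms):
    """True if any term appears in text as a whole word or phrase."""
    return any(
        re.search(r"\b" + re.escape(term) + r"\b", text, re.IGNORECASE)
        for term in terms
    )


def in_scope(record_text):
    """Apply the rule: one or more ROI terms AND one or more field terms."""
    return contains_any(record_text, ROI_TERMS) and contains_any(
        record_text, FIELD_TERMS
    )


print(in_scope("A cost-benefit analysis of school counselling"))
print(in_scope("Cost effectiveness of school meals"))
```

The second record is rejected because “cost effectiveness” is deliberately absent from the ROI term list, mirroring the exclusion described above.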

This broad approach identified 3,993 papers, reduced to 99 based on title and abstract review, indications that a specific CBA/ROI estimate would be provided and a field that had some congruence with career guidance. None of the identified academic papers directly addressed school-based career guidance. The 99 papers were coded bottom-up into 16 fields which were then prioritised to identify three fields for Phase 2. Fields with three or fewer potential papers identified by the review were excluded on volume. Adjacency was coded with three values (1. Low, 2. Medium/Mixed or 3. High) for each of the following four equally-weighted categories:

– Age proximity – The closer to secondary education phase, the higher the score.

– Topic focus – The more the interventions focused on career or education pathway choices, the higher the score.

– Resource intensity – The lower the resourcing per participant, the higher the score, given that career guidance in schools is often a very low proportion of total education spend.

– Outcome timeframe – The longer the timescale models for outcomes, the higher the score, as theories of change for school-based guidance often set out fairly long-term outcomes such as post-secondary pathway progression and labour market success.
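The prioritisation step above can be sketched as follows. The source states only that the four categories were equally weighted and scored from 1 to 3, so combining them with a simple mean is an assumption, and the field names, paper counts and scores in the example are hypothetical.

```python
from statistics import mean

CRITERIA = (
    "age_proximity",
    "topic_focus",
    "resource_intensity",
    "outcome_timeframe",
)
MIN_PAPERS = 4  # fields with three or fewer candidate papers are excluded


def adjacency_score(scores):
    """Equal-weight average of the four 1-3 adjacency codes (assumed rule)."""
    for criterion in CRITERIA:
        if scores[criterion] not in (1, 2, 3):
            raise ValueError(f"{criterion} must be coded 1, 2 or 3")
    return mean(scores[criterion] for criterion in CRITERIA)


def prioritise(fields, top_n=3):
    """Drop low-volume fields, then rank the rest by adjacency score."""
    eligible = [f for f in fields if f["papers"] >= MIN_PAPERS]
    ranked = sorted(
        eligible, key=lambda f: adjacency_score(f["scores"]), reverse=True
    )
    return [f["name"] for f in ranked[:top_n]]


def codes(age, topic, resource, outcome):
    """Helper to build a score dictionary in CRITERIA order."""
    return dict(zip(CRITERIA, (age, topic, resource, outcome)))


# Hypothetical candidate fields, for illustration only.
candidate_fields = [
    {"name": "Field A", "papers": 12, "scores": codes(3, 3, 2, 3)},
    {"name": "Field B", "papers": 2, "scores": codes(3, 3, 3, 3)},
    {"name": "Field C", "papers": 6, "scores": codes(2, 2, 2, 2)},
    {"name": "Field D", "papers": 9, "scores": codes(1, 2, 1, 1)},
    {"name": "Field E", "papers": 5, "scores": codes(3, 2, 2, 2)},
]

print(prioritise(candidate_fields))
```

In this example Field B scores highest on adjacency but is excluded on volume, which shows how the two filters interact.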

The top three fields adjacent to school-based career guidance surfaced by this method are: career guidance or support for unemployed adults, widening participation in education and behaviour in schools. The first refers to support provided to unemployed adults to return to work or make progress towards work. To merit inclusion in the scope, a guidance or job search related component had to be significant in the programme. Studies were excluded where the following elements were dominant: supported employment, financial incentives, vocational rehabilitation, job creation/subsidy and formal education or training courses.

Secondly, widening participation in education refers to school-based campaigns to increase education uptake, such as entry to higher education among financially disadvantaged students. Target interventions typically prioritise inspiration, guidance, curriculum activities, visits and application support. Such activities relate directly to pathway choices, like career guidance, and often only account for a small proportion of timetable time, making it a close match to school-based guidance. Activities are excluded where they prioritise direct financial subsidies.

Thirdly, behaviour in schools refers to mostly light touch interventions in primary or secondary school to improve student behaviour, mostly focused on social and emotional learning initiatives, school-wide positive behavioural interventions or anti-bullying programmes.

Phase 2: corpus expansion

Following the identification of an initial corpus through academic database searching, we undertook a second phase by engaging a series of experts and conducting web searches across the primary target field (school-based career guidance) and the three congruent fields identified via the database search. We kept contacting experts until at least three replied per field. This process resulted in feedback from 24 experts (see Table 1).

Table 1. Number of experts approached by field.

All papers and sources suggested by experts were reviewed and subjected to the same full-text inclusion criteria as the first phase papers: they must arrive at an ROI estimate (or provide numbers that trivially identify such estimates, such as monetising both costs and benefits) and be relevant to one of the four fields. No quality or accuracy measure is applied, as our primary purpose is to understand the methodology applied to arrive at ROI/CBA estimates.

Table 2 summarises the relevant literature identified in the four selected fields.

Table 2. Number of papers by field.

The corpus of 32 papers was primarily surfaced by the web search and expert review stage – 25 out of 32. This reflects the majority of papers (17) being grey literature, except for the school behaviour field where there is much stronger academically-published literature.

Corpus coding for analysis

The third phase was to code the final corpus of 32 papers on criteria related to six topics: study description and context, conceptual design of the ROI, benefit selection, cost coverage, counterfactual determination and ROI results and robustness checks. We discussed edge cases and terminology clarifications as required throughout.

The first topic is the study context, including a summary of the intervention, the country it takes place in, and the timespan it covers, and whether the study focuses on a specific, historical intervention or models the likely impact of a generic intervention. Who funded the research and what is their relationship to the intervention being examined? Is ROI primary, secondary or tangential to the main paper focus?

The second topic concerns how the analysts define their ROI scope conceptually. What perspective(s) do they seek to model? The ROI for the taxpayer may be very different from the ROI for the programme participant. The third and fourth topics flow from the definition of ROI perspective and scope: Which specific benefits are selected and how do analysts seek to monetise them? Which costs are covered? The fifth topic is counterfactual determination. How do the authors estimate what would have happened without the intervention? The sixth topic summarises the headline ROI results generated by the analysts and the types of robustness checks deployed.

Findings

In this section we examine the corpus against the six topics described, discussing the range of practice present across the papers and highlighting possible areas of good practice that might guide future research.

Context

The interventions cover a broad span of Anglosphere and European countries: 12 in the US; 10 in the UK; two each in Australia, Germany and Sweden; and one each in Canada, Denmark, Ireland and the Netherlands. The duration of interventions is similarly broad: from a few hours of guidance support in the adult welfare-to-work setting (Oxford Economics, 2008) to long-term interventions, spanning multiple years across whole phases of education (Bowden et al., 2020; Klapp et al., 2017). Most papers contain a concise summary or citation to describe the main components of an intervention, but it was often hard to identify which components of an intervention account for most of the resource or what engagement is required from third parties, whether voluntary or paid for.

The majority of studies make ROI or CBA a centrepiece of their results, with 26 out of 32 being coded as presenting the ROI analysis as a primary part of the paper. In three papers, core space is dedicated to ROI analysis, but it remains secondary to the main focus on short-term impact evaluation. For three papers, the ROI analysis is tangential, perhaps briefly addressed in a discussion section or in an appendix.

Outside of studies on behaviour in schools, which are primarily academically published, 16 out of 21 studies were published as grey literature, typically by a government body or sector organisation that was responsible for delivering or funding the intervention. Such organisations are often committed to robust evaluation but may also seek to use findings to advocate for their intervention. Indirect, non-financial conflicts of interests can be equally relevant but were hard to identify, such as the authors’ relationships with organisations to access data or their long-term commitments to certain policy positions.

Conceptual frame

Key to any ROI study is how it is framed conceptually. What is the study seeking to achieve and therefore which costs and benefits are being monetised and from whose perspective? In principle, the conceptual framing should be identified first and then the costs and benefits derived from this, but in practice, studies are strongly influenced by the available costs and benefits data. There is however good practice in several studies that can be used to guide future work, even in contexts where the available data do not cover all the desired topics.

Ford et al. (2012, Table 7.1) present an accounting perspective diagram, in which all material flows of benefits and costs are marked against different perspectives, to indicate whether the flow is theorised to be negative, positive or neutral. Flows are not quantified at this point and some may turn out to be immaterial or unmonetisable – the goal is only to list them and their theorised directions. In this case, the cited diagram differentiates four perspectives: the participant, the federal government, the provincial government (as different taxes flow to different levels of government) and society. For instance, an intervention might lead to higher individual wages and higher taxes paid as separate accounting items. Isolating the higher taxes and considering the direct effects only, the diagram illustrates how this is negative for the participant (they lose the money), positive for the government (they gain the money) and neutral for society (the money stays somewhere in the system).
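The logic of an accounting perspective table can be sketched with a small ledger. This is a simplified illustration rather than a reproduction of the cited diagram: it collapses the two levels of government into one, treats society as the sum of participant and government, and uses hypothetical amounts where the original lists directions only.

```python
# Each flow records its signed value per perspective (hypothetical amounts).
# A tax payment is a transfer: the participant loses what government gains.
FLOWS = {
    "higher gross wages": {"participant": 1000, "government": 0},
    "higher taxes paid": {"participant": -200, "government": 200},
    "programme delivery cost": {"participant": 0, "government": -300},
}


def net_by_perspective(flows):
    """Sum flows per perspective; society is the sum of the other two here."""
    totals = {
        perspective: sum(flow[perspective] for flow in flows.values())
        for perspective in ("participant", "government")
    }
    totals["society"] = totals["participant"] + totals["government"]
    return totals


def direction(value):
    """Translate a signed amount into the diagram's three categories."""
    if value > 0:
        return "positive"
    if value < 0:
        return "negative"
    return "neutral"


tax_only = net_by_perspective({"higher taxes paid": FLOWS["higher taxes paid"]})
for perspective, value in tax_only.items():
    print(f"higher taxes paid, {perspective}: {direction(value)}")

print(net_by_perspective(FLOWS))
```

Isolating the tax flow reproduces the pattern described above: negative for the participant, positive for government and neutral for society.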

Diagrams can also be used to highlight the flows that are formally monetised in the ROI, those which are only indirectly monetised, perhaps partially captured by other outcomes, and those which are not monetised at all (whether due to lack of data availability, analytical capacity or any other reason). For instance, in Percy (2020, ), a theory of change diagram is shaded to reflect how comprehensively different benefit strands are thought to be captured by the ROI estimation.

Benefits

The corpus varies in terms of the benefits selected, the time horizons they are modelled over and how they are monetised. All five papers for school-based career guidance prioritise labour market outcomes, typically examining benefits across a lifetime for at least some of the benefits in scope. The logic model, that is, the summarised description of how an intervention produces its outcomes (Public Health England, 2018), primarily relates wage and employment outcomes to education participation influenced by career guidance, such as reduced education drop-out (e.g. Engelman et al., 2016) or increased uptake of education (e.g. Ford et al., 2012). Such income and employment-related benefits are translated to fiscal benefits via straightforward albeit simplified assumptions on direct tax take, excluding any multiplier or indirect effects.

Adult career guidance and support similarly focuses on labour market outcomes, typically over a few years from the intervention point, leaving any long-term benefits as upside to their core ROI estimates. However, the other two fields span collectively a broader set of possible benefits. Two widening participation studies monetise such aspects as secondary school students’ comfort and familiarity with university life and confidence in discussing future pathways with their parents (Ravulo et al., 2019), and benefits for improved relationships and subject interest (IntoUniversity, 2010). These two papers draw on a particular social return on investment (SROI) methodology to monetise these values, linking subjective responses from participants on programme benefit to market prices for goods or services claimed to provide a similar type of benefit. For instance, Ravulo et al. (2019) link feeling more comfortable on a university campus to the price of clothing, which is also argued to make people feel more comfortable. While the logic behind such reasoning is plausible in principle, specific monetary estimates that emerge are highly debatable and would benefit from sense-checking across different individuals and studies.

Creative approaches to benefits and monetisation are also present in the school behaviour field. For instance, social willingness-to-pay estimates to reduce bullying are derived from surveys, noting that the misery of bullying is a present-day harm to be prevented (Beckman & Svensson, 2015). Elsewhere, shadow prices are used to infer a state’s willingness to pay for education participation, for instance, the average cost to the state of a day’s schooling (total annual budget divided by school days in year and pupils in school) as a shadow price for a day of school missed due to bullying (Belfield et al., 2015). The field often adopts a public health perspective, as seen most directly in three of its 11 papers using national government spend benchmarks for healthcare interventions to provide Quality-Adjusted or Disability-Adjusted Life Years (Turner et al., 2020; Legood et al., 2021; Jadambaa et al., 2021).
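The schooling shadow price described above is a simple ratio, sketched here with hypothetical inputs. The function names and all figures are illustrative assumptions and are not taken from the cited study.

```python
def shadow_price_per_pupil_day(total_annual_budget, school_days_per_year, pupils):
    """Average cost to the state of one pupil attending school for one day."""
    if school_days_per_year <= 0 or pupils <= 0:
        raise ValueError("school days and pupil numbers must be positive")
    return total_annual_budget / (school_days_per_year * pupils)


def value_of_days_recovered(days_recovered, price_per_day):
    """Monetised benefit of school days no longer missed due to bullying."""
    return days_recovered * price_per_day


# Hypothetical system: a budget of 19 million, 190 school days, 1,000 pupils.
price = shadow_price_per_pupil_day(19_000_000, 190, 1_000)
benefit = value_of_days_recovered(days_recovered=250, price_per_day=price)

print(f"Shadow price per pupil-day: {price:.2f}")
print(f"Benefit from 250 recovered days: {benefit:.2f}")
```

The resulting benefit inherits every assumption behind the budget figure, which is one reason the choice of shadow price merits explicit discussion in an ROI study.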

The use of third-party estimates to drive an ROI calculation merits careful attention, not least because it is so common in our corpus. Twenty-two out of 32 papers have made extensive use of potentially contestable third-party estimates, such as the lifetime wage benefits of educational achievement, the lifetime costs of adolescent drug use or the likely short-term impact of a hypothetical intervention. Most of these papers have little to no discussion of whether multiple, competing third-party estimates are available and, if so, why one is chosen over another, how it might need adjusting to fit their particular model or how robust their results are to different choices. Only seven discuss the presence of alternative estimates available, of which only four papers provide a structured discussion to motivate the chosen third-party estimates. Some additional papers draw on standard government estimates for monetising outcomes and may not consider it necessary to defend these given their audience. Examples of better practice include Aos et al. (2004) who have a formal search strategy for finding evaluations of programmes and choose meta-analyses where available for longer-term consequences and Percy and Hughes (2022) who have a formal search strategy and specified criteria for choosing their preferred third-party counterfactual impact estimate. Only Percy and Hughes (2022) provided enough detail on all the third-party estimates to permit challenge of their role in the calculation, for example, one or two paragraphs on which benefits the chosen third-party estimate monetises, over what time frame and discount rate and with what type of monetisation method.

Costs

Twenty-seven of 32 studies in our corpus deliberately capture costs only for the direct programmatic costs of delivering the intervention as a contained initiative relative to a business-as-usual service. They do not typically adjust for the leveraged time of school staff or volunteer contributions. Opportunity costs for target beneficiaries are rarely addressed, although the benefit of saving time through not participating in an intervention is partly captured in counterfactuals where comparison group or matched sample designs are used.

Studies that seek to estimate a broader range of costs include: Bowden et al. (2020), who estimate induced costs as a result of third-party programme participation following the identification of individualised support plans per student; Fleissig (2014), who looks at training participants’ foregone earnings during a training period; and IntoUniversity (2010), who capture the time cost of volunteers, universities and other third parties supporting their widening participation programme.

The cost per person across our corpus varies widely, although typically well below the costs of providing education and training leading to formal qualifications. At the low end, we have light-touch support to students in full-time education, such as approximately USD 75 (about £60) per student over three years for changing policies and staff approaches to behaviour (Legood et al., 2020). At the high end, we have approximately USD 5000 (about £4000) per student receiving intensive post-18 pathway advice (Castleman & Goodman, 2018).

Counterfactual determination

Papers draw on a wide range of empirical methods for the identification of short-term programme impact. The most common, in 11 papers, is the comparison of participants against non-participants outside of a randomised setting but using statistical techniques to improve the comparison; for example, controlling for socioeconomic background, locality and other possible confounders (e.g. Osikominu, 2013), matching similar geographical areas (e.g. Hasluck et al., 2000) or regression discontinuity designs (e.g. Castleman & Goodman, 2018). Nine draw on trials with random assignment, particularly those focused on monetising short-term outcomes, such as one- or two-year return-to-work outcomes (Krug & Stephan, 2013; Michaelides et al., 2012) or changes in bullying experiences (Legood et al., 2020; Belfield et al., 2015). Systematic reviews are drawn on in two studies, although detail is typically not provided on the quality of underlying trials in the meta-analyses.

Less robust empirical methods are also present in the corpus, illustrating the potential to gain insights from a range of qualitative and quantitative methods. Five rely on interviewing stakeholders directly or using single group pre/post survey data. For instance, Ravulo et al. (2019) asked stakeholders whether they thought certain benefits are a result of the programme. Five use sectoral benchmarks or propose an impact directly, without any statistical adjustments to reflect the intervention population or study context. For instance, Engelman et al. (2016) compared outcomes relative to a benchmark, which was the drop-out rate from the participating schools in 2010/11 prior to the start of the programme.

Among papers interested in consequences that extend beyond a few years, it is common for the authors of the papers to draw on observational longitudinal evidence, since individuals are rarely followed for decades in randomised trials. This evidence is sometimes presented directly, such as the wage outcomes descriptively associated with certain education levels (Ford et al., 2012), but the majority of studies use statistical adjustments in an attempt to make the non-participants more comparable to participants (e.g. the third-party studies used in Bowden et al. [2020] and Percy [2020]).

ROI results

The ROI estimates vary widely across the studies, even within fields. All fields include at least one estimate for which the intervention returns are below breakeven. However, of the 45 qualifying ROI estimates contained in the studies, only six are negative and one breakeven. The typical range is from about breakeven to high single digits and occasional very high ROI outliers (Table 3).

Table 3. ROI by field.

In the chosen fields in mature, mixed-market economies, high ROIs for at-scale interventions might merit particular scrutiny or adjustment before being used in policymaking. The benefits for a small number of participants may be very high, particularly where decisions early in life have a butterfly effect on later outcomes, but average outcomes across a large group will typically be much lower for interventions at scale. This corpus potentially includes five such outlier papers, with estimates for ROI over 30x (i.e. a return 30 times larger than the investment); the other estimates are all below 15x. Very high large-scale ROI activities can, of course, exist, particularly in lower-income country contexts, such as health interventions to combat malaria or parasitic worms, well targeted infrastructure such as electricity/internet access or bridges, and basic literacy, but in any case such strong claims should be interrogated closely before being accepted.

Studies typically emphasise that their ROI estimates are uncertain and the corpus exemplifies a wide range of robustness checks on headline estimates. Robustness checks in this sense refer to checks on the ROI modelling itself rather than the various specification and sensitivity tests that might be applied to underlying econometric analyses. A few studies present a back-of-the-envelope estimate with no checks at all, while others dedicate dozens of pages to them. Reviewing the corpus suggests that ROI robustness checks can be usefully grouped into four broad categories.

First, the consequences of changing the model conceptually – which benefits and costs are modelled, what time horizons are of interest, what perspective is adopted and so on. Second, adjusting input parameters in a given model or calculation chain to see how the results vary. Altering one or a few parameters at a time gives rise to static scenario comparisons, or perhaps tornado charts if each variable is adjusted individually. Altering all parameters simultaneously, according to defined probability distributions to capture the uncertainty in each parameter, for example, via Monte Carlo simulation techniques, can populate probabilistic sensitivity charts, supporting claims like an 80% confidence interval of a certain ROI range, given a particular model design. Third, examining heterogeneity. For instance, would a different ROI be identified for particular subgroups of the population or for particular variants or components of an intervention? Fourth, triangulation using third-party insights or other models. For instance, do stakeholders, sector experts, practitioners, funders or beneficiaries find the results convincing? What proportion of individuals (who could in principle be appropriately sampled) suspect that the true ROI would be higher or lower? What other ROI studies exist on similar interventions, whether formal or approximate replications? How do those ROI estimates vary and what explanations can be found for variations?
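The second category of checks can be illustrated with a minimal sketch. It is not taken from any study in the corpus: the toy ROI model (discounted earnings gains divided by cost per person), the parameter names, the central values, the low/high ranges and the uniform and triangular distributions are all hypothetical assumptions chosen for illustration. The sketch shows one-at-a-time variation, which yields the inputs for a tornado chart, and a simple Monte Carlo simulation, which yields a central 80% interval for the ROI under that particular model design.

```python
import random
import statistics


def roi(cost_per_person, effect_on_earnings, years, discount_rate):
    """Toy ROI: discounted annual earnings gain divided by cost per person."""
    benefits = sum(
        effect_on_earnings / (1 + discount_rate) ** t for t in range(1, years + 1)
    )
    return benefits / cost_per_person


# Hypothetical central values and ranges, NOT drawn from any corpus study.
BASE = {
    "cost_per_person": 100.0,
    "effect_on_earnings": 50.0,
    "years": 10,
    "discount_rate": 0.035,
}
RANGES = {
    "cost_per_person": (80.0, 120.0),
    "effect_on_earnings": (0.0, 120.0),
    "discount_rate": (0.02, 0.05),
}


def one_at_a_time(base, ranges):
    """Vary each parameter alone between its low and high value.

    Returns (name, roi_at_low, roi_at_high) rows sorted by swing size,
    widest first, which is the ordering used in a tornado chart.
    """
    rows = []
    for name, (low, high) in ranges.items():
        rows.append((name, roi(**{**base, name: low}), roi(**{**base, name: high})))
    return sorted(rows, key=lambda r: abs(r[2] - r[1]), reverse=True)


def monte_carlo(n=10_000, seed=0):
    """Draw all uncertain parameters at once and return sorted ROI draws."""
    rng = random.Random(seed)
    draws = [
        roi(
            cost_per_person=rng.uniform(80.0, 120.0),
            effect_on_earnings=rng.triangular(0.0, 120.0, 50.0),  # low, high, mode
            years=10,
            discount_rate=rng.uniform(0.02, 0.05),
        )
        for _ in range(n)
    ]
    return sorted(draws)


def interval(sorted_draws, level=0.80):
    """Central interval of the simulated ROI distribution."""
    n = len(sorted_draws)
    tail = (1 - level) / 2
    return sorted_draws[int(tail * n)], sorted_draws[int((1 - tail) * n) - 1]


if __name__ == "__main__":
    for name, at_low, at_high in one_at_a_time(BASE, RANGES):
        print(f"{name}: ROI {at_low:.2f} to {at_high:.2f}")
    draws = monte_carlo()
    low, high = interval(draws)
    print(f"median ROI {statistics.median(draws):.2f}, 80% interval {low:.2f} to {high:.2f}")
```

Any interval produced this way is conditional on the model design and on the assumed distributions, so it captures parameter uncertainty only and does not address the first category of checks (conceptual changes to the model).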

Discussion

The corpus of 32 ROI studies across four fields of interventions provides a sense of the wide range of practice adopted by analysts. Some ROIs can draw on high quality counterfactual data, such as RCTs and multiple longitudinal studies; others rely on pre/post comparisons or subjective assessment by participants. Some use half a page of narrative motivation to suggest a possible point estimate of ROI; others devote whole papers to ROI estimation with dozens of pages of robustness checks. Some focus on a few impacts measured over a one- or two-year time horizon; others seek to capture as many types of benefit as possible stretching over decades. This observation of heterogeneity, both within and between fields of intervention, leads to three general points for discussion: comparability, quality and realpolitik.

Comparability

The most direct consequence of heterogeneity is the limited comparability of the resulting ROI estimates. Consumers of such research need to consider what combination of three likely explanations accounts for any significant differences in ROI. First, that the interventions or their contexts are sufficiently different such that they genuinely have very different returns. Second, that while the interventions and contexts may be similar in some respects, the modelling choices or data availability are different, perhaps capturing different subsets of the true benefits and costs. Third, that the methodologies or calculations are incorrectly applied in some studies, or the input assumptions are simply wrong: garbage in, garbage out.

In principle, a conservative net positive ROI, reviewed and found convincing by a funder, can still be taken on its own merits to motivate investment decisions, that is, without comparison to alternatives. In practice, however, short-term budget constraints are fixed and decision makers want to invest in the best option available, rather than investing in all net positive options.

Unfortunately, ROIs in our corpus cannot be directly compared to each other, as is typically the case for other ROI studies as well. In some cases, studies are specifically designed to support comparisons, such as the WSIPP database (www.wsipp.wa.gov/BenefitCost). Such comparisons typically become possible only by applying a lowest common denominator logic to the methodology, so the gains in comparability come at the cost of rigour in individual estimates. Where explicitly comparative studies are not available or not suitable, there is a case for engaging a third-party methodological expert to review available studies, to consider how and why ROI estimates vary for a particular type of intervention and identify which might be most appropriate in a given context.

Quality

Heterogeneity includes wide variation in quality and rigour. However, even at the top end of quality, none of the corpus papers reviewed can be considered to have identified the full, true ROI of an intervention. The theoretical ideal for an ROI estimation is effectively unattainable for complex social interventions whose theory of change ricochets through an individual’s lifetime and ripples through their social interactions. For an intervention like career guidance, which may lead to subtle changes in self-awareness, self-esteem, and pathway decision-making, influencing the shape and experience of life trajectories, there are simply too many overlapping benefits to model all of them convincingly.

In theory, it is also important to understand indirect and systemic consequences of interventions. In practice, uncertainty on such estimates can mean analysts sensibly treat them as out of scope. None of the papers in this corpus discussed or attempted a full quantitative analysis of indirect effects, system effects, or possible distributional consequences. As an example of such effects, increased earnings have implications for economic growth via multiplier effects and new opportunities for spending taxes (wisely or otherwise), as well as possible distributional effects that might be relevant for community cohesion or as policy priorities in their own right. Behavioural adjustments at the individual level can be captured through well-designed comparison groups, but systemic consequences are not always easily captured. Regardless of whether analysts formally model systemic effects, it is important to acknowledge them and for policymakers to consider them in their decision making, even if only qualitatively.

The key systemic consideration for career guidance is possible displacement. In a trivial sense, most jobs are gained at another’s expense. However, the fiscal benefit of guidance would only suffer materially if the person who misses out would go on to be unemployed with the same level of difficulty as the individual supported into the role. Conversely, if the person gaining the job is better matched than the person missing out, that is, they perform better in the role, perhaps being happier or more productive in it for longer, then there are positive economic consequences. Empirical evidence on this topic is highly limited, with a note of caution raised in one France-based study (Crépon et al., 2013). Percy (2023) provides a broader discussion of displacement and how it might be empirically tested in a schools setting.

Higher quality papers typically draw on formal identification of counterfactual outcomes. However, where appropriate econometric estimates do not exist, it is reasonable for analysts to use benchmarks, subjective reports, or expert estimates. Such assumptions allow the calculation of an initial ROI and can act as placeholders to guide further research. In some cases, robustness checks in the modelling may reveal that the results are not sensitive to a plausible, yet broad range of estimates for a given placeholder assumption, such that research effort can be better directed elsewhere.

Realpolitik

Where does the near unattainability of theoretically accurate or comparable ROI estimates leave decision-making? Incremental improvements in ROI studies, with better benefits coverage and understanding of systemic effects like displacement, will result in more useful estimates – provided the uncertainty introduced by working with hard-to-measure effects is reflected in appropriately nuanced claims consumed thoughtfully by decision makers. However, the range of practice we observe in the corpus suggests that useful ROI estimates, at least deemed useful by peer review or by research funders, can be derived even when ideal data are not available or analytical time is highly limited. Our view is that light touch ROI estimates can be useful inputs to decision making, provided their limitations in scope are engaged with the same intensity as their headline results.

The wide diversity of counterfactual methods used in the corpus underlines differences between ROI work as an input to policymaking and causal inference as an academic discipline. In the latter, there is a stronger focus on immediate consequences from historic interventions, which makes it easier to apply experimental methods and make context-constrained estimates. As such, it can often be reasonable to withhold judgement pending the improvement of an evidence base. In the former, policy decisions are always informed by expert judgement in the context of uncertainty. Historical interventions, no matter how stringently evaluated, only ever shed a little light on the effects of likely future interventions. Choosing not to intervene, or to intervene in a different way because of withholding judgement on a particular topic, has consequences too. The point is that no perfect evidence exists to predict the future financial impact of policy interventions in complex domains with long-term consequences – the best we can do is use all available evidence, with its varying degrees of imperfection, to the best of our ability.

A better evidence base would be greatly helped by more standardisation of ROI methodology and terminology (e.g. using accounting perspective diagrams, motivating third-party evidence selection, listing the unmonetised direct and indirect effects) and commissioning that incorporates cost capture and at least light touch ROI estimates into standard impact evaluations.

There would be value in adapting economic evaluation checklists to ROIs for guidance and life pathway interventions in the social sciences, reflecting on standards that have been developed for the health domain (e.g. the “CHEERS” method described in Husereau et al. [2013] and the “CHEC” method described in Evers et al. [2005]). Standards for meta-analysis applied to social sciences ROI would also be welcome, building on the distributional and multivariable analysis of library provision ROI studies from Aabø (2009) or of geospatial systems by Trapp et al. (2015). Such techniques need greater analytical clarity on the consistent measurement of uncertainty and on the minimum necessary similarity (or adjustments necessary to support certain comparability goals) to substitute for the role of standard errors in traditional meta-analysis.

A more subtle limitation of ROIs for decision-making concerns the distinction between average ROI as typically surfaced by ROI estimates and marginal ROI as better reflecting the actual decision often facing policymakers (HMT, 2020). For instance, if everyone who will have significant benefits from a particular intervention is already being served, the marginal ROI on additional investments will be much lower than an average ROI calculated on the intervention’s record against all current or historical participants. None of the corpus papers addressed this distinction directly.
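The gap between average and marginal ROI can be shown with a small worked sketch. All figures are hypothetical and are not drawn from the corpus; the sketch also assumes a constant cost per person and that the participants who benefit most are served first, which will not hold for every intervention.

```python
def average_roi(benefits, cost_per_person):
    """Total benefit across everyone served, divided by total cost."""
    return sum(benefits) / (cost_per_person * len(benefits))


def marginal_roi(next_benefit, cost_per_person):
    """Benefit from serving one additional person, divided by the cost of doing so."""
    return next_benefit / cost_per_person


# Hypothetical per-person benefits for current participants, highest gains first.
served = [900.0, 600.0, 300.0]
# Hypothetical benefit for the next person who would be reached by extra funding.
next_in_line = 60.0
COST_PER_PERSON = 100.0  # assumed constant, which is itself a simplification

avg = average_roi(served, COST_PER_PERSON)
marg = marginal_roi(next_in_line, COST_PER_PERSON)

if __name__ == "__main__":
    print(f"average ROI on current participants: {avg:.1f}x")
    print(f"marginal ROI on the next participant: {marg:.1f}x")
```

Under these assumptions, the historical record looks strongly positive while the additional investment would not break even, which is the situation the average figure alone would conceal.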

These limitations of ROI reinforce that such analysis can only ever be one input among several into decision-making. Benefits, costs and system interaction effects that are not modelled, or modelled only very partially, need to be incorporated separately into decision-making, and not given less weight as a result of not being modelled in the ROI. Ethical considerations, political feasibility, operational tractability and precedent setting also matter and can often dominate ROI consideration. Sometimes we might want to incorporate more complex benefits or considerations (such as implications for inequality) as best we can into future ROI models, to increase their usefulness. In other cases, such considerations are best understood qualitatively and reviewed alongside model outputs, rather than subsumed within them.

The alternative to ROI-informed decision making is not that imperfect estimates of the costs and benefits of a decision are suddenly ignored or cease to be relevant. The alternative is that costs and benefits are assessed intuitively and illegibly by decision-makers – indeed perhaps conflictingly and incorrectly. A transparent approach that surfaces poor assumptions for challenge and improvement is preferred to having such assumptions hidden and untested.

A framework for career guidance ROI

The discussion highlights some of the weaknesses of the extant ROI literature on career guidance and congruent areas. There is a need to improve both quality and consistency and to give greater consideration as to how these findings can be fed into policymaking and resourcing decisions in a way that will improve policy and practice. To aid this, we propose a framework of high-level questions in Table 4, as a complement to the technical, quality-focused checklists that we have cited. The framework is based around the six topics outlined in the analysis section. It provides key questions that should shape the ROI and then more detailed questions that may be helpful in guiding studies which particularly focus on school-based career guidance. The contents of this table should be read alongside the Findings section, which contains additional discussion of the issues and more examples drawn from the corpus.

Table 4. A framework for ROI in school-based career guidance.

It would be possible to take this framework further and, for example, identify what a gold standard approach to ROI calculation is. However, such judgements risk ignoring policy realities where researchers, policymakers and other actors often need to operate with less than perfect information and limited funding for evidence and evaluation. In such a situation it is important to recognise that there is a range of legitimate decisions that researchers can make, whilst also trying to increase the consistency in the way these decisions are communicated.

Conclusion

In this paper, we have drawn on a structured academic literature search and expert input to assemble a corpus of 32 studies that estimate a return on investment (ROI) metric in complex education-related interventions: school-based career guidance, adult career guidance, widening participation in education and behaviour in schools. Such interventions commonly have features that make it hard to develop high quality impact evidence and ROI models, the latter including a focus on multiple long-term outcomes, a light-touch intervention with low cost per person and a personalised approach to delivery. The corpus illustrates the considerable heterogeneity in analytical methods and ROI results both within and between fields. We argue that such heterogeneity and the corresponding lack of standardisation make it hard to compare ROI studies and relate to the near intractability of generating full ROI estimates of complex interventions. Nonetheless, imperfect ROI studies can be useful, both as frameworks for understanding key assumptions or areas of future work and as one input among several into informed policy decision making. Our analysis and discussion sections draw out examples of good practice and recommendations for both analysts and users of ROI papers.

Acknowledgements

We would like to thank the sector experts who helped identify additional studies and verify our corpus. We also thank Ian Turek who provided research assistance in corpus coding.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

All corpus documents are publicly available at the time of writing. Corpus details are available from the corresponding author upon reasonable request.

Additional information

Notes on contributors

Chris Percy

Chris Percy is a Visiting Research Fellow at the University of Derby, UK. His research interests focus on using data, financial modelling and policy research to better support career pathways and school-to-work transitions.

Tristram Hooley

Tristram Hooley researches and writes about career and career guidance. He is Professor of Career Education at the University of Derby, UK, and Professor II at the Inland Norway University of Applied Sciences, Norway.

References

- Aabø, S. (2009). Libraries and return on investment (ROI): A meta-analysis. New Library World, 110(7/8), 311–324.

- Aos, S., Lieb, R., Mayfield, J., Miller, M. G., & Pennucci, A. (2004). Benefits and costs of prevention and early intervention programs for youth. Washington State Institute for Public Policy. https://www.ojp.gov/ncjrs/virtual-library/abstracts/benefits-and-costs-prevention-and-early-intervention-programs-youth.

- Beckman, L., & Svensson, M. (2015). The cost-effectiveness of the Olweus bullying prevention program: Results from a modelling study. Journal of Adolescence, 45, 127–137. https://doi.org/10.1016/j.adolescence.2015.07.020

- Beesley, A., Gulemetova, M., & DiFuccia, M. (2015). Georgia governor’s office of student achievement innovation fund SROI analysis final report. IMPAQ International.

- Belfield, C., Bowden, A. B., Klapp, A., Levin, H., Shand, R. D., & Zander, S. (2015). The economic value of social and emotional learning. Journal of Benefit-Cost Analysis, 6, 508–544. https://doi.org/10.1017/bca.2015.55

- Bowden, A. B., Shand, R., Levin, H. M., Muroga, A., & Wang, A. (2020). An economic evaluation of the costs and benefits of providing comprehensive supports to students in elementary school. Prevention Science, 21(8), 1126–1135. https://doi.org/10.1007/s11121-020-01164-w

- Careers Wales. (2021). Brighter futures: our vision 2021-2026. Cardiff: Careers Wales. https://careerswales.gov.wales/about-us/brighter-futures

- Castleman, B., & Goodman, J. (2018). Intensive college counseling and the enrollment and persistence of low-income students. Education Finance and Policy, 13(1), 19–41. https://doi.org/10.1162/edfp_a_00204

- Cedefop. (2020). Call for papers. Towards European standards for monitoring and evaluation of lifelong guidance systems and services. cedefop.europa.eu/files/2020_11_16_call_for_papers_cedefop_careersmonitoring.pdf.

- Covacevich, C., Mann, A., Santos, C., & Champaud, J. (2021). Indicators of teenage career readiness: An analysis of longitudinal data from eight countries. OECD Education Working Papers, 258. https://doi.org/10.1787/cec854f8-en

- Crépon, B., Duflo, E., Gurgand, M., Rathelot, R., & Zamora, P. (2013). Do labor market policies have displacement effects? Evidence from a clustered randomized experiment. The Quarterly Journal of Economics, 128(2), 531–580. https://doi.org/10.1093/qje/qjt001

- Engelman, A., Morgan, G., Ruthven, M., & Pugh, E. (2016). 2016 legislative report Colorado school counselor corps grant program. Colorado Department of Education. https://www.cde.state.co.us/postsecondary/2016-school-counselor-corps-grant-program.

- Evers, S., Goossens, M., de Vet, H., van Tulder, M., & Ament, A. (2005). Criteria list for assessment of methodological quality of economic evaluations: Consensus on health economic criteria. International Journal of Technology Assessment in Health Care, 21(2), 240–5. https://doi.org/10.1017/S0266462305050324

- Fleissig, A. (2014). Return on investment from training programs and intensive services. Atlantic Economic Journal, 42(1), 39–51. https://doi.org/10.1007/s11293-013-9394-y

- Ford, R., Frenette, M., Nicholson, C., Kwakye, I., Hui, T., Hutchinson, J., Dobrer, S., Fowler, H., & Hebert, S. (2012). Future to discover: Post-secondary impacts report. Social Research and Demonstration Corporation. http://www.srdc.org/publications/Future-to-Discover-FTD–Post-secondary-Impacts-Report-details.aspx.

- Foster, E. M., Jones, D. E., & Conduct Problems Prevention Research Group (2006). Can a costly intervention be cost-effective? Archives of General Psychiatry, 63(11), 1284–1291. https://doi.org/10.1001/archpsyc.63.11.1284

- Frontier Economics. (2021). The economic value of WorldSkills UK. WorldSkills UK.

- Gauge Ireland. (2012). Social return on investment – Final report (December 2012). e-Merge - An Initiative of the Mount Street Trust – Employment Initiative delivered by Ballymun Job Centre. Ballymun Job Centre.

- Gough, D., & Thomas, J. (2016). Systematic reviews of research in education: Aims, myths and multiple methods. Review of Education, 4(1), 84–102. https://doi.org/10.1002/rev3.3068

- Hasluck, C., McKnight, A., & Elias, P. (2000). Evaluation of the new deal for lone parents: Early lessons from the Phase One Prototype - Cost-benefit and econometric analyses research report No. 110. Department of Social Security.

- HM Treasury. (2020). The green book: Central government guidance on appraisal and evaluation. https://www.gov.uk/government/publications/the-green-book-appraisal-and-evaluation-in-central-governent.

- Hollenbeck, K., & Huang, W. (2016). Net impact and benefit-cost estimates of the workforce development system in Washington State. Upjohn Institute Technical Report, 16-033. W.E. Upjohn Institute for Employment Research. https://doi.org/10.17848/tr16-033

- Hooley, T., & Godden, L. (2022). Theorising career guidance policymaking: Watching the sausage get made. British Journal of Guidance & Counselling, 50(1), 141–156. https://doi.org/10.1080/03069885.2021.1948503

- Hooley, T., Percy, C., & Alexander, R. (2021). Exploring Scotland’s career ecosystem. Skills Development Scotland.

- Hooley, T., Sultana, R. G., & Thomsen, R. (2018). The neoliberal challenge to career guidance. Mobilising research, policy and practice around social justice. In T. Hooley, R. G. Sultana, & R. Thomsen (Eds.), Career guidance for social justice. Contesting neoliberalism. Routledge.

- House, R. (1996). ‘Audit-mindedness’ in counselling: Some underlying dynamics. British Journal of Guidance & Counselling, 24(2), 277–283. https://doi.org/10.1080/03069889608260415

- Hughes, D., & Percy, C. (2022). Transforming careers support for young people and adults in northern Ireland. Department for the Economy. https://www.economy-ni.gov.uk/publications/transforming-careers-support-young-people-and-adults-northern-ireland.

- Huitsing, G., Barends, S., & Lokkerbol, J. (2020). Cost-benefit analysis of the KiVa anti-bullying program in The Netherlands. International Journal of Bullying Prevention, 2, 215–224. https://doi.org/10.1007/s42380-019-00030-w

- Husereau, D., Drummond, M., Petrou, S., et al. (2013). Consolidated health economic evaluation reporting standards (CHEERS) statement. Value Health, 16(2), e1–e5. https://doi.org/10.1016/j.jval.2013.02.010

- ICF International. (2016). Social innovation fund – return on investment study for three national fund for workforce solutions (NFWS) workforce partnership programs in Ohio. ICF.

- IntoUniversity. (2010). Intouniversity social return on investment.

- Jadambaa, A., Graves, N., Cross, D., Pacella, R., Thomas, H., Scott, J., Cheng, Q., & Brain, D. (2021). Economic evaluation of an intervention designed to reduce bullying in Australian schools. Applied Health Economics and Health Policy, 20, 79–89. https://doi.org/10.1007/s40258-021-00676-y

- Klapp, A., Belfield, C., Bowden, B., Levin, H., Shand, R. D., & Zander, S. (2017). A benefit-cost analysis of a long-term intervention on social and emotional learning in compulsory school. The International Journal of Emotional Education, 9, 3–19. https://repository.upenn.edu/cbcse/7/

- Krug, G., & Stephan, G. (2013). Is the contracting-out of intensive placement services more effective than provision by the PES? Evidence from a randomized field experiment. IZA Discussion Papers, 7403. Institute for the Study of Labor (IZA).

- Legood, R., Opondo, C., Warren, E., Jamal, F., Bonell, C., Viner, R., & Sadique, Z. (2021). Cost-utility analysis of a complex intervention to reduce school-based bullying and aggression: An analysis of the inclusive RCT. Value in Health, 24(1), 129–135. https://doi.org/10.1016/j.jval.2020.04.1839

- Levin, H. M., McEwan, P. J., Belfield, C., Bowden, A. B., & Shand, R. (2018). Economic evaluation in education: Cost-effectiveness and benefit-cost analysis (3rd ed.). SAGE publications.

- Maibom, J., Rosholm, M., & Svarer, M. (2017). Experimental evidence on the effects of early meetings and activation. The Scandinavian Journal of Economics, 119(3), 541–570. https://doi.org/10.1111/sjoe.12180

- Mann, A., Denis, V., & Percy, C. (2020). Career ready? How schools can better prepare young people for working life in the era of COVID-19 (OECD Education Working Paper 241). Paris: OECD. https://doi.org/10.1787/e1503534-en

- Michaelides, M., Poe-Yamagata, E., Benus, J., & Tirumalasetti, D. (2012). Impact of the reemployment and eligibility assessment (REA) initiative in Nevada. IMPAQ International.

- OECD. (2004). Career guidance: A handbook for policy makers. OECD Publications.

- Osikominu, A. (2013). Quick job entry or long-term human capital development? The dynamic effects of alternative training schemes. Review of Economic Studies, 80(1), 313–342. https://doi.org/10.1093/restud/rds022

- Oxford Economics. (2008). Examining the impact and value of EGSA to the NI economy: Final report.

- Page, L., Iriti, J., Lowry, D., & Anthony, A. (2019). The promise of place-based investment in postsecondary access and success: Investigating the impact of the Pittsburgh promise. Education Finance and Policy, 14(4), 572–600. https://doi.org/10.1162/edfp_a_00257

- Pathak, P., & Dattani, P. (2014). Social return on investment: Three technical challenges. Social Enterprise Journal, 4(1), 3–18. https://doi.org/10.1332/204080513X661554

- Percy, C. (2020). Personal guidance in English secondary education: An initial return-on-investment estimate. The Careers & Enterprise Company.

- Percy, C. (2023). Quantifying the economic impact of career guidance in secondary education. [PhD Thesis, University of Derby]. UDORA. https://doi.org/10.48773/9x456

- Percy, C., & Dodd, V. (2021). The economic outcomes of career development programmes. In P. Robertson, T. Hooley, & P. McCash (Eds.), The Oxford handbook of career development (pp. 35–48). Oxford University Press.

- Percy, C., & Hughes, D. (2022). Lifelong guidance and welfare to work in Wales: Linked return on investment methodology. In Cedefop (Ed.), Towards European standards for monitoring and evaluation of lifelong guidance systems and services (Vol. I, pp. 109–138). Publications Office of the European Union. http://data.europa.eu/doi/10.2801/422672.

- Percy, C., & Tanner, E. (2021). The benefits of Gatsby Benchmark achievement for post-16 destinations. The Careers & Enterprise Company.

- Public Health England. (2018). Creating a logic model for an intervention: evaluation in health and wellbeing. https://www.gov.uk/guidance/evaluation-in-health-and-wellbeing-creating-a-logic-model.

- Ravulo, J., Said, S., Micsko, J., & Purchase, G. (2019). Utilising the social return on investment (SROI) framework to gauge social value in the fast forward program. Education Sciences, 9(4), 290. https://doi.org/10.3390/educsci9040290

- Robertson, P. J. (2021). The aims of career development policy: Towards a comprehensive framework. In P. J. Robertson, T. Hooley, & P. McCash (Eds.), The Oxford handbook of career development (pp. 113–128). Oxford University Press.

- Swain-Bradway, J., Lindstrom Johnson, S., Bradshaw, C., & McIntosh, K. (2017). What are the economic costs of implementing SWPBIS in comparison to the benefits from reducing suspensions? PBIS Technical Assistance Center, University of Oregon. https://www.pbis.org/resource/what-are-the-economic-costs-of-implementing-swpbis-in-comparison-to-the-benefits-from-reducing-suspensions.

- The Sutton Trust. (2010). The mobility manifesto: A report on cost-effective ways to achieve greater social mobility through education.

- Trapp, N., Schneider, U., McCallum, I., Fritz, S., Schill, C., Borzacchiello, M., Heumesser, C., & Craglia, M. (2015). A meta-analysis on the return on investment of geospatial data and systems: A multi-country perspective. Transactions in GIS, 19(2), 169–187.

- Turner, A. J., Sutton, M., Harrison, M., Hennessey, A., & Humphrey, N. (2020). Cost-effectiveness of a school-based social and emotional learning intervention: Evidence from a cluster-randomised controlled trial of the promoting alternative thinking strategies curriculum. Applied Health Economics and Health Policy, 18(April 2020), 271–285. https://doi.org/10.1007/s40258-019-00498-z

- UNESCO & NCVER. (2020). Understanding the return on investment of TVET: A practical guide. United Nations Educational, Scientific and Cultural Organization & National Centre for Vocational Education Research.

- Van Reenen, J. (2001). No more skivvy schemes? Active labour market policies and the British new deal for the young unemployed in context. The Institute for Fiscal Studies.

- Watts, A. (1995). Applying market principles to the delivery of careers guidance services: A critical review. British Journal of Guidance & Counselling, 23(1), 69–81. https://doi.org/10.1080/03069889508258061

- WGCG (The Inter-Agency Working Group on Career Guidance). (2021). Investing in career guidance. https://www.etf.europa.eu/sites/default/files/2021-09/investing_in_career_guidance.pdf.

- WSIPP. (2019). Benefit-cost technical documentation (Dec 2019 edition). Washington State Institute for Public Policy.