?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Motivated by applications in matrix constructions used in the inverse eigenvalue problem for graphs, we study a concept of genericity for eigenvectors associated with a given spectrum and a connected graph. This concept generalizes the established notion of a nowhere-zero eigenbasis. Given any simple graph G on n vertices and any spectrum with no multiple eigenvalues, we show that the family of eigenbases for symmetric matrices with this graph and spectrum is generic, strengthening a result of Monfared and Shader. We illustrate applications of this result by constructing new achievable ordered multiplicity lists for partial joins of graphs and providing several families of joins of graphs that are realizable by a matrix with only two distinct eigenvalues.

1. Introduction

Let G be a simple graph with vertex set and edge set

, and consider

, the set of all real symmetric

matrices

such that, for

,

if and only if

, with no restriction on the diagonal entries of A. The inverse eigenvalue problem for graphs (IEP-G) seeks to characterize all possible spectra

of matrices

. The IEP-G is solved for a few families of graphs (complete graphs, paths, generalized stars [Citation1], cycles [Citation2,Citation3], generalized barbell graphs [Citation4], linear trees [Citation5]) and for graphs of order at most 5[Citation6]; the problem remains open in many other cases.

The closely related ordered multiplicity inverse eigenvalue problem for graphs seeks to characterize all possible ordered multiplicities of eigenvalues of matrices in , i.e. to characterize the ordered lists of nonnegative integers

for which there exists a matrix

and

, such that

, where

denotes

copies of

. We call such an ordered list

an ordered multiplicity vector of G. The ordered multiplicity inverse eigenvalue problem has been resolved for all connected graphs of order at most 6[Citation7]. For some specific families of connected graphs, several ordered multiplicity vectors have been determined (see, e.g. [Citation4,Citation6,Citation8]). Moreover, Monfared and Shader proved the following theorem in [Citation9], showing that

is an ordered multiplicity vector of any connected graph G, which can be realized with a nowhere-zero eigenbasis, that is, a basis of eigenvectors, each containing no zero entry.

Theorem 1.1

[Citation9,Theorem 4.3]

For any connected graph G on n vertices and distinct real numbers , there exists

with spectrum

and an eigenbasis consisting of nowhere-zero vectors.

Our main result, Theorem 2.4, is a strengthening of Monfared and Shader's theorem. We show that we can make considerably more stringent genericity requirements of the eigenbasis than simply asking that it is nowhere-zero; in the language of [Citation10], we show that any spectrum with only simple eigenvalues is generically realizable, for any connected graph G.

Recall that a matrix is nowhere-zero if each of its entries is nonzero. Let be a multiset of real numbers. For a connected graph G, we say the multiset σ is realizable for G if

for some

; we say σ is generically realizable for G if, moreover, for any finite set

there is an orthogonal matrix U so that

, where D is a diagonal matrix with spectrum σ and

is nowhere-zero for all

. (As noted above, this condition is stronger than the realizability of σ in

with a nowhere-zero eigenbasis: consider

.) Let

be the set of strictly positive integers. An ordered multiplicity vector

is spectrally arbitrary for G if for any real numbers

, the multiset

is realizable for G. Further, we say

is generically realizable for G if it is spectrally arbitrary for G, and every assignment of eigenvalues results in a generically realizable multiset for G, as defined above.

We will also need to deal with disconnected graphs. The notion of the multiplicity matrix of a disconnected graph was introduced in [Citation10] as a generalization of the ordered multiplicity vector of a connected graph, as follows. Let G be a graph with connected components

. For

, an

matrix V with non-negative integer entries is said to be a multiplicity matrix for G if, for

, the ith column of V is an ordered multiplicity list realized by a matrix in

. Note that a trivial necessary condition for V to be a multiplicity matrix of G is that the orders of the connected components of G are the same as the column sums of V; we abbreviate this by saying that V fits G. If every column of a multiplicity matrix V for a graph G is generically realizable for the corresponding connected component of G, then we say V is generically realizable for G.

The methods used in [Citation6,Citation11] to resolve the IEP-G and the ordered multiplicity IEP-G for small graphs make essential use of strong properties. In particular, a symmetric matrix A is said to have the strong spectral property (the SSP) if the zero matrix 0 is the only symmetric matrix X satisfying AX = XA and

, where ○ denotes the Hadamard product. The SSP was first defined in [Citation11]. One of its key features is the following perturbation result.

Theorem 1.2

[Citation11,Theorem 10]

Let be a spanning subgraph of a graph G. If

is a matrix with the SSP, then for any

there is a matrix

with the SSP such that A and

have the same eigenvalues and

.

To establish Theorem 2.4, we first prove it for trees, and then apply the SSP in the case that is a spanning tree of G. A 0–1 matrix contains only 0s and 1s; casting Theorem 2.4 in this language, we obtain Theorem 2.5: every 0–1 multiplicity matrix which fits a graph G is generically realizable for G. In particular, this implies that every multiplicity matrix which fits a path

is generically realizable for

. In the terminology of [Citation10], we say the graph

is generically realizable.

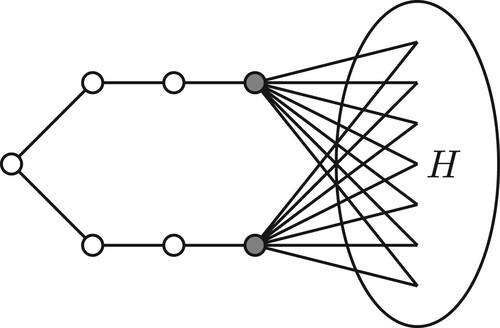

Figure 1. Sketch of the partial join , where pendant vertices X of

are coloured grey. The edges of H are not drawn.

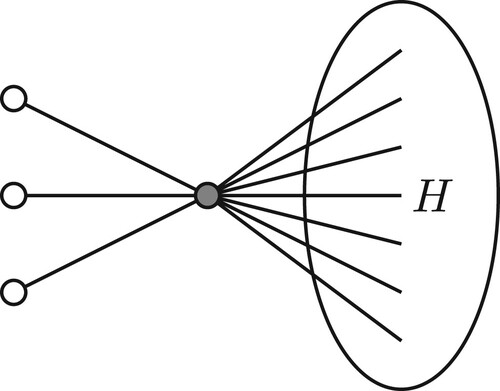

Figure 2. Sketch of the partial join , where the high degree vertex v is coloured grey. The edges of H are not drawn.

We provide some applications in Section 3. In particular, we are interested in finding examples of joins of graphs that allow a small number of distinct eigenvalues. The minimum number of distinct eigenvalues of a graph

where

denotes the number of distinct eigenvalues of a square matrix A, is one of the parameters motivated by IEP-G. The study of

was initiated by Leal–Duarte and Johnson in [Citation12], and it has been broadly studied since then (see e.g. [Citation9–11,Citation13–17]). Monfared and Shader [Citation9,Theorem 5.2] proved that

if G and H are connected graphs with

. A consequence of our result is the following generalization (Theorem 3.4): if G and H are arbitrary graphs, each with k connected components

and

, so that

for each i, then we still have

.

In this paper, we use the following notation. For an integer n, let us denote , and we also write

. Column vectors are typically written using boldface; for example,

denotes the column vector of ones in

, and

is the vector with the 1 in the ith entry and 0s elsewhere. We write

for the

identity matrix,

is the zero

matrix, and we also write

and

. Where the context allows, we may omit these subscripts altogether.

For a vector let us denote by

the vector

with its ith component removed, and for a matrix A let

denote its principal submatrix with the ith row and column of A removed.

All graphs considered are simple undirected graphs with non-empty vertex sets

. The order of G is

and we often assume (without loss of generality) that

. For a connected graph G, the distance between a pair of vertices is the number of edges in a shortest path between them, and the diameter

is the largest distance between any pair of vertices. A subgraph H is a spanning subgraph of G if

. The join

of two graphs G and H is the disjoint union

together with all the possible edges joining the vertices in G to the vertices in H. We abbreviate the disjoint graph union of k copies of the same graph G by

. For a subgraph H of G and a matrix

, we define the matrix

as the principal submatrix of A whose rows and columns are the vertices of H. We write

,

and

for the path, the cycle and the complete graph on n vertices, respectively, and we denote the complete bipartite graph on two disjoint sets of cardinalities m and n by

.

2. Generic realizability of 0–1 matrices

In this section, we will prove that any 0–1 multiplicity matrix that fits a graph G is generically realizable for G.

Throughout this section, we fix , real numbers

, and the diagonal matrix

. Further, let

denote the smooth manifold of all

symmetric matrices with eigenvalues

. This manifold was studied in [Citation11], where the following lemma was proven in the special case that

for all i, j. A nearly identical argument yields the more general result we require, and we give the details for completeness. (In fact, yet further generalizations may be proven, although we will not need those here.)

Lemma 2.1

Let G be a connected graph of order m. Given a function and

, consider the family of manifolds given by

(1)

(1) For every

, there exists

and a matrix

with the SSP so that

.

Proof.

In this proof, we use definitions and notations from [Citation11]. In particular, denotes the normal space to a smooth submanifold

of the

matrices at some

, and two such manifolds

and

intersect transversally at

if

.

Let be the smooth family of manifolds of

symmetric matrices defined in (Equation1

(1)

(1) ). Note that

is the set of diagonal matrices. By [Citation11], we have

and

. Since these two normal spaces have trivial intersection, the manifolds

and

intersect transversally at Λ.

By [Citation11,Theorem 3], there exists r>0 and a continuous function such that

and for

, the manifolds

and

intersect transversally at

. Hence, for any

, for sufficiently small

, the matrix

has

and

. To see that A has the SSP, we have to prove that

and

intersect transversally at A (see [Citation11,page 11]). Since

, it follows that

. Further, since

and

intersect transversally, we have

, proving that

and

intersect transversally at A.

Lemma 2.2

For , let

and let

be an orthogonal matrix with non-negative diagonal entries satisfying

. If

as

, then

as

.

Proof.

For each , let

be a polynomial with

for

. Then

is the orthogonal projection onto the

-eigenspace of

. Hence

In particular,

. Since

, this implies that

, which implies in turn that

if

. Hence,

, proving

.

In the previous lemma, we showed that the off-diagonal elements of decay to zero. We now show that when we also have

where

, it is sometimes possible to precisely determine the rate of decay of off-diagonal elements of

.

Lemma 2.3

Let G be a tree of order m. Given , let

with

and let

,

.

For , let

be the subgraph of G consisting of the shortest path from i to j,

, and

. (In particular,

,

and

.)

Suppose with

, and

with

as

. For

, let

be an orthogonal matrix with non-negative diagonal entries so that

. Then

as

, for all

.

Proof.

Assuming and

, it follows that

for each

. Since

and

, we may assume (by taking n sufficiently large) that

and for

,

is bounded away from zero.

Fix and consider the vector

. This is a normalized eigenvector of

with eigenvalue

. Equivalently, for

we have

(2)

(2) where

is the set of neighbours of i in G.

For , let

denote the distance in G from i to

. We claim that for

:

if

with

, then

as

and

if

with

, then

as

.

We will establish this claim by induction on x.

Since converges to Λ, the

th column of

converges to

by Lemma 2.2. This implies that the claim holds for x = 0.

Now assume inductively that the claim holds for all x with . We proceed to prove that it holds for

as well. First consider claim (a), and suppose

with

. Rearranging (Equation2

(2)

(2) ), we obtain

For any

, we have

, so

, and

Since

, we have

for all

, hence by part (a) of the claim for

, each term in the sum above converges to 0. Since we also have

, part (a) of the claim holds for

. This proves (a) for all x with

.

Now consider part (b). Suppose . Since G is a tree, there exists precisely one

with

, and by the definition of s, we have

. We have

, so

and we can rearrange (Equation2

(2)

(2) )as follows:

(3)

(3) For every

we have

Hence, by part (a) of the claim, all the terms under the sum in (Equation3

(3)

(3) ) converge to zero. Taking limits and using part (b) of the claim show that

.

Theorem 2.4

Let G be a connected graph of order m, and choose a finite set . There exists a matrix

with the SSP and an orthogonal matrix U with

so that

is nowhere-zero for all

.

Proof.

First we prove the statement for trees, so let be a tree. Suppose that the statement is false for

. Choose

and

as in Lemma 2.3 so that the corresponding function

satisfies

if and only if

. (This may be guaranteed by a suitable choice of g.) Further, let

also be as in Lemma 2.3, with the SSP (which we can guarantee by Lemma 2.1) and let

be orthogonal matrices with non-negative diagonal satisfying

. Since we suppose the statement is false for

, for every n there exists

so that some entry of

is zero. Passing to a subsequence, we may assume that there is some fixed vector

and a fixed index

so that

for every

. Let

denote the kth row of

, hence we are assuming

for all

. We aim to arrive at the contradiction by proving

. From Lemma 2.3 we know that

, hence

. Let

be such that

, and

be minimal with

. Now

By Lemma 2.3, we know that

converges to a nonzero constant and

converges to zero for

. Hence

, a contradiction, showing that the statement holds for any tree

.

Now, let G be a connected graph, and be a spanning tree of G. Since the claim holds for

, there exists

with the SSP so that

,

, and

is nowhere-zero for all

. Since

has the SSP, by Theorem 1.2 there exists

with the SSP arbitrarily close to

and with the same eigenvalues as

. Since the eigenvalues are distinct, the

eigenspaces are close for A and

, so for A and

sufficiently close, there is an orthogonal matrix U diagonalizing A which is sufficiently close to

to ensure that

is also nowhere zero for all

.

Theorem 2.5

If G is any graph, then every 0–1 multiplicity matrix which fits G is generically realizable for G.

Proof.

Given such a matrix V, label the connected components of G so that the multiplicity vector

fits

. Every entry of

is 0 or 1, so by Theorem 2.4,

is generically realizable for

. Hence, V is generically realizable for G.

If every multiplicity matrix for a graph G is generically realizable for G, then we say G is generically realizable. This is a strong requirement for a graph; in particular, it implies that G is spectrally arbitrary for every multiplicity matrix that can be realized by G. In fact, the only families of generically realizable graphs previously known are unions of complete graphs [Citation10]. We can now extend this to include paths.

Corollary 2.6

The path

is a generically realizable graph, for every

.

If every connected component of a graph G is either a complete graph or a path, then G is generically realizable.

Proof.

The maximum multiplicity of an eigenvalue of a path is 1 [Citation18], so every multiplicity vector for is a 0–1 vector, hence it is generically realizable by Theorem 2.5. Complete graphs are also generically realizable [Citation10], so the second assertion follows immediately.

3. Applications to the inverse eigenvalue problem

In [Citation10], the authors developed an approach to study , where G is the join of two graphs. We now give some applications of preceding results to this problem.

First we briefly review the set up from [Citation10]. For a matrix X with at least 3 rows, we write for the matrix obtained by deleting the first row and the last row of X. Let

denote the set of

matrices with entries in a set

, and let

be the set of non-negative integers. Given

with

, the matrices

and

are said to be compatible if

and

is nowhere-zero. We say the graphs G and H have compatible multiplicity matrices if there exist compatible matrices V and W where V is a multiplicity matrix for G and W is a multiplicity matrix for H.

Which graphs G and H have ? The answer is closely related to the existence of compatible multiplicity matrices.

Theorem 3.1

[Citation10,Theorems 2.5 and 2.14]

Let G and H be two graphs. If , then G and H necessarily have compatible multiplicity matrices.

Moreover, if there exist compatible generically realizable multiplicity matrices V and W for G and H, then .

By Corollary 2.6, in the case of unions of paths or complete graphs we can strengthen this to a necessary and sufficient condition.

Corollary 3.2

If G and H are unions of paths or complete graphs, then if and only if G and H have a pair of compatible multiplicity matrices.

For general graphs, by combining Theorem 2.5 and Theorem 3.1, we obtain the following purely combinatorial sufficient condition for to be 2.

Corollary 3.3

Let G and H be two graphs. If there exist compatible 0–1 matrices V and W that fit G and H, respectively, then .

In general, deciding whether there exist compatible 0–1 matrices with prescribed column sums seems to be a difficult combinatorial question, which we plan to examine further in upcoming work.

Monfared and Shader [Citation9,Theorem 5.2] proved that if G and H are connected graphs with

. Using Corollary 3.3, it is now simple to generalize their result to the case

, and also to pairs of disconnected graphs with equal numbers of connected components.

Theorem 3.4

Let and suppose G and H are graphs that both have k connected components. If we can label these components of G and H as

and

, respectively, so that

for each

, then

Moreover, if

for all

, then

Proof.

Let for

,

and

. Let

and consider

(4)

(4) where

are chosen so that

, and

for all

. (The existence of such vectors is assured by the condition

.) Since

and the first row of E is nowhere-zero, V and W are compatible 0–1 matrices fitting

and

, respectively, hence

by Corollary 3.3.

If , then the assumption

for all

implies the existence of compatible matrices V and W as in (Equation4

(4)

(4) ), except that we don't require the first and last rows of V to be 0–1 vectors, i.e.

. By [Citation10] and Theorem 2.5, V and W are generically realizable multiplicity matrices for G and H, respectively, so q = 2 by Theorem 3.1.

For connected graphs G and H, we have just seen that if

. We now show that we generally cannot relax this inequality.

Example 3.5

Take above, and suppose G is a connected graph with

. In this case, let us prove that the condition

, i.e.

, is also necessary for

to be equal to 2.

If , then by Theorem 3.1 there exist compatible multiplicity matrices

and

for G and

, respectively. The maximum multiplicity of an eigenvalue of a path is 1, so

. By compatibility, we have

and without loss of generality, we can delete any zeros in these column vectors to reduce to the case

where

and

. Since these matrices fit G and

, respectively, we have

and

, as required.

Recall that if T is a tree and , then the extreme eigenvalues of A have multiplicity one. Hence, by a similar argument to that of the previous paragraph, we have

if and only if

for any trees

.

We now turn to another class of applications of Theorem 2.5, in which we obtain certain achievable spectra of partial joins. We require additional terminology given below.

Recall that if G is a graph and , then the vertex boundary of W in G is the set of all vertices in

which are joined to some vertex of W by an edge of G.

Suppose that and

are vertex-disjoint graphs and let

for i = 1, 2. The partial join of graphs

and

is the graph

formed from

by joining each vertex of

to each vertex of

. If

, then we write

.

Suppose G and are graphs so that G is obtained from

by deleting s vertices and adding arbitrary number of edges (in particular,

). If a matrix

has the SSP, then by the Minor Monotonicity Theorem [Citation6,Theorem 6.13] there exist a matrix

with the SSP, such that

, where

for distinct

and

for

. In the next result, we provide a statement of a similar flavour that does not depend on the SSP. The construction given in the lemma was first developed in the context of the nonnegative inverse eigenvalue problem [Citation19,Citation20].

Lemma 3.6

Let G be a graph, a partition of

, and

be the vertex boundary of

in G. Suppose H is any connected graph with

and consider the graph

.

Let and suppose that

is a multiset of real numbers so that

is generically realizable for H. Then there exists

with spectrum

.

Proof.

Let us denote for i = 1, 2 and

. Assume without loss of generality that

,

and

. We have

, with

,

, and

. Let

be an orthogonal matrix, such that

. Write

, and let

. Moreover, let

with eigenvalues

.

Since is the vertex boundary of

in G, we have

, where no column of

is zero. Let us denote the set of columns of

by

, and let

denote the set of vectors obtained from elements of

by appending t zeros. Then

. Since Q is orthogonal, all vectors in

are nonzero.

Since is generically realizable for H, there exist a matrix

with

, and an orthogonal matrix

, such that

and

is a nowhere-zero vector for all

.

Let

where

Since

is a nowhere-zero vector for

, the matrix

is nowhere-zero. Hence,

, and

as desired.

Lemma 3.6 can be used to build explicit examples or to provide more general results. Implementation is faced with two issues: first we need some information on , and second, we need to prove generic realizability of

for H. In our first application, we rely on Theorem 2.5 for generic realizability.

Theorem 3.7

Let G be a graph, a partition of

, and

be the vertex boundary of

in G. Suppose H is any connected graph with

and consider the graph

.

If there exists so that

has distinct eigenvalues

, then there exists

with spectrum

where

and

are any distinct real numbers satisfying

for all

.

Theorem 3.7 allows us, in some sense, to replace a submatrix with distinct eigenvalues with a matrix corresponding to an arbitrary connected graph of equal or bigger size. Note the technical requirement that has distinct eigenvalues is automatically satisfied when

is a path. This observation allows us to improve an upper bound for the number of distinct eigenvalues of joins of graphs that is given in [Citation15,Section 4.2], where it is proved that for connected graphs G and H,

is bounded above by

.

Corollary 3.8

For any and a graph H with

we have

In particular, if G and H are both connected, then

Proof.

The first part follows easily from Theorem 3.7. Assume now that both G and H are connected. If , then

by Theorem 3.4. To cover the case

, we note that Theorem 3.4 implies

and hence we have

. By symmetry the statement follows.

Next we explore applications of Lemma 3.6 in the special case where G is a cycle. Cycles are a nice family to use for this purpose, since every induced connected subgraph of a cycle is a path, and the IEP-G for cycles is known to have the following solution.

Lemma 3.9

[Citation3,Theorem 3.3]

Let be a list of real numbers,

. Then

is the set of eigenvalues of a matrix in

if and only if

Example 3.10

Fix and let X be the set of degree one vertices of

. Given a connected graph H, consider

(see Figure ). We claim that if

and

, then

is an unordered multiplicity list for some matrix in

. Let

. By Lemma 3.9, there is a matrix in

with unordered multiplicity list

. Partition the vertices of G as

so that

and

, and apply Theorem 3.7.

Note that Lemma 3.6 allows us to increase the multiplicities of eigenvalues of M, provided the technical conditions of the lemma are satisfied. For example, the eigenvalues that we add in Lemma 3.6 can be chosen to agree with eigenvalues in

, hence increasing their multiplicity, provided they are not also eigenvalues of

. When this condition is not satisfied, Theorem 2.5 cannot be applied to assure generic realizability. Examples of both eventualities are given in the two examples below. In the first example, we exhibit ordered multiplicity lists that achieve

, where G is a wheel graph of order 4m−1.

Example 3.11

Let us apply Lemma 3.6 to ,

and

. Choose any distinct real numbers

. By Lemma 3.9, there exists a matrix

with

Let

be the leading principal

submatrix of M. By interlacing and [Citation18], we have

where

for

.

Let and let

. By Lemma 3.9, there exists

with

. Let us prove that we can choose a nowhere-zero eigenbasis for A. Every eigenspace

of A is spanned by two linearly independent vectors

and

. If

for some

, then

and

are linearly independent eigenvectors of

corresponding to the same eigenvalue, which contradicts the fact every matrix corresponding to a path has simple eigenvalues, [Citation18]. Hence for each i either

or

, so we can choose their linear combinations to be nowhere-zero and hence we can choose a nowhere-zero eigenbasis for A.

Since A has a nowhere-zero eigenbasis and no simple eigenvalues, the proof of [Citation10,Proposition 3.1] shows that is generically realizable for

. By Lemma 3.6, there exists a matrix

with spectrum

Hence the ordered multiplicity list

is realizable for

, which are wheel graphs.

Note that Lemma 3.9 prohibits an odd number of eigenvalues between any two double eigenvalues of a matrix corresponding to a cycle. Hence by interlacing a matrix corresponding to cannot have an even number (including zero) of eigenvalues between any two triple eigenvalues. Therefore with the above construction we have found a matrix corresponding to

with the maximal multiplicity of an eigenvalue and the minimal number of distinct eigenvalues. In particular,

.

Example 3.12

If and H is any connected graph with 3m−2 vertices, then the multiplicity list

is spectrally arbitrary for

and, in particular,

. To see this, take

,

and

. For any

, let

with

. Then

, where

for

(since a path has only simple eigenvalues). Now,

is a principal submatrix of

, so by [Citation21,Problem 4.3.P17], the eigenvalues of

strictly interlace those of

. In particular, the eigenvalues of

do not intersect

. Applying Theorem 3.7 we see that the spectrum

is realized by a matrix in

, as required.

Remark 3.13

In particular, by interlacing, the maximal multiplicity of an eigenvalue of a matrix corresponding to is equal to 3 and hence

. This result complements [Citation15,Example 4.5], where they proved that

. It would be interesting to determine

in general.

In Theorem 3.7, we can make use of graphs for which the IEP-G is solved (or better understood) to construct new realizable multiplicity lists for partial joins; we illustrate this idea also for generalized stars [Citation1].

Example 3.14

Let and H be an arbitrary connected graph. If

, such that

,

, and

, then

is an unordered multiplicity list for

(see Figure ).

To prove this, let be a generalized star with k arm lengths equal to 1 and one arm length equal to

,

,

, and let

be the high degree vertex of G. By [Citation1,Theorems 14 and 15], any matrix in

has the unordered multiplicity list

, such that

is majorized by

, hence

. By Theorem 3.7, there exists a matrix in

with the same eigenvalues as a matrix in

.

Acknowledgements

Polona Oblak acknowledges the financial support from the Slovenian Research Agency (research core funding no. P1-0222).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Johnson CR, Leal-Duarte A, Saiago CM. Inverse eigenvalue problems and lists of multiplicities of eigenvalues for matrices whose graph is a tree: the case of generalized stars and double generalized stars. Linear Algebra Appl. 2003;373:311–330. doi: 10.1016/S0024-3795(03)00582-2

- Ferguson Jr WE. The construction of Jacobi and periodic Jacobi matrices with prescribed spectra. Math Comp. 1980;35(152):1203–1220. doi:10.1090/S0025-5718-1980-0583498-3.

- Fernandes R, da Fonseca CM. The inverse eigenvalue problem for Hermitian matrices whose graphs are cycles. Linear Multilinear Algebra. 2009;57(7):673–682. doi: 10.1080/03081080802187870

- Lin JC-H, Oblak P, Šmigoc H. On the inverse eigenvalue problem for block graphs. Linear Algebra Appl. 2021;631:379–397. doi: 10.1016/j.laa.2021.09.008

- Johnson CR, Wakhare T. The inverse eigenvalue problem for linear trees. Discrete Math. 2022;345(4):112737 doi: 10.1016/j.disc.2021.112737

- Barrett W, Butler S, Fallat SM, et al. The inverse eigenvalue problem of a graph: multiplicities and minors. J Combin Theory Ser B. 2020;142:276–306. doi: 10.1016/j.jctb.2019.10.005

- Ahn J, Alar C, Bjorkman B, et al. Ordered multiplicity inverse eigenvalue problem for graphs on six vertices. Electron J Linear Algebra. 2021;37:316–358. doi: 10.13001/ela.2021.5005

- Adm M, Fallat S, Meagher K, et al. Achievable multiplicity partitions in the inverse eigenvalue problem of a graph. Spec Matrices. 2019;7:276–290. doi: 10.1515/spma-2019-0022

- Monfared KH, Shader BL. The nowhere-zero eigenbasis problem for a graph. Linear Algebra Appl. 2016;505:296–312. doi: 10.1016/j.laa.2016.04.028

- Levene RH, Oblak P, Šmigoc H. Orthogonal symmetric matrices and joins of graphs. Linear Algebra Appl. 2022;652:213–238. doi: 10.1016/j.laa.2022.07.007

- Barrett W, Fallat S, Hall HT, et al. Generalizations of the strong Arnold property and the minimum number of distinct eigenvalues of a graph. Electron J Combin. 2017;24(2):28 doi: 10.37236/5725 Paper 2.40.

- Leal-Duarte A, Johnson CR. On the minimum number of distinct eigenvalues for a symmetric matrix whose graph is a given tree. Math Inequal Appl. 2002;5(2):175–180. doi: 10.7153/mia-05-19

- Adm M, Fallat S, Meagher K, et al. Corrigendum to ‘Achievable multiplicity partitions in the inverse eigenvalue problem of a graph’ [Spec. Matrices 2019; 7:276–290.], Special Matrices; 2020; Vol. 8(1). pp. 235–241. doi: 10.1515/spma-2020-0117

- Ahmadi B, Alinaghipour F, Cavers MS, et al. Minimum number of distinct eigenvalues of graphs. Electron J Linear Algebra. 2013;26:673–691. doi: 10.13001/1081-3810.1679

- Bjorkman B, Hogben L, Ponce S, et al. Applications of analysis to the determination of the minimum number of distinct eigenvalues of a graph. Pure Appl Funct Anal. 2018;3(4):537–563.

- da Fonseca CM. A lower bound for the number of distinct eigenvalues of some real symmetric matrices. Electron J Linear Algebra. 2010;21:3–11. doi: 10.13001/1081-3810.1409

- Levene RH, Oblak P, Šmigoc H. A Nordhaus–Gaddum conjecture for the minimum number of distinct eigenvalues of a graph. Linear Algebra Appl. 2019;564:236–263. doi: 10.1016/j.laa.2018.12.001

- Fiedler M. A characterization of tridiagonal matrices. Linear Algebra Appl. 1969;2:191–197. doi: 10.1016/0024-3795(69)90027-5

- Laffey TJ, Šmigoc H. Spectra of principal submatrices of nonnegative matrices. Linear Algebra Appl. 2008;428(1):230–238. doi: 10.1016/j.laa.2007.06.029

- Šmigoc H. Construction of nonnegative matrices and the inverse eigenvalue problem. Linear Multilinear Algebra. 2005;53(2):85–96. doi: 10.1080/03081080500054281

- Horn RA, Johnson CR. Matrix analysis. 2nd ed., Cambridge: Cambridge University Press; 2013. doi: 10.1017/CBO9781139020411