Abstract

DALDIS is a three-year EU-funded Erasmus+ project on Digital Assessment for Learning (2019–2022) involving five European countries. DALDIS is pilot testing a digital assessment for learning solution focused on Science and Modern Foreign Language Learning in years 5–9. Underpinned by the Study Quest technology, which is designed to drive students’ learning progression using well-designed question-sets and student feedback, DALDIS is built on the principle that formative assessment is one of the best methods to encourage student achievement. Although Assessment for Learning (AFL) using technology has great potential for learning, it is not widely used in Europe. As project lead, Ireland was responsible for the first adaptation of StudyQuest, known as JCQuest, for its Junior Cycle science and French curricula. Unexpectedly, the commencement of DALDIS coincided with school closures due to Covid-19, and this appears to have boosted student uptake of JCQuest to support online learning and exam preparation. This paper provides an insight into how students engaged with JCQuest, key usage patterns and the types of devices used, based on Google Analytics data from two crucial school closure periods during the pandemic in Ireland.

Introduction

The evolution of digital technologies has provided educators with new ways of accessing and processing knowledge (Martinovic and Zhang 2012). Technology has the potential to transform teaching and learning practices by providing new ways to engage learners (Finger 2015). While education has traditionally been slow to change, particularly when it comes to technology adoption, one of the unanticipated consequences of Covid-19 is how rapidly schools have had to adapt to new digital environments to ensure continuity in teaching (Shonfeld, Cotnam-Kappel, and Judge 2021). An interesting example of this phenomenon is the DALDIS Erasmus+ Project (2019–2022), which commenced shortly before Covid-19 but whose implementation has benefitted from the crisis.

The DALDIS (Digital Assessment for Learning informed by Data to motivate and Incentivise students) project involves five countries: Ireland, Poland, Greece, Turkey, Denmark and Belgium, representing the primary, secondary and third-level sectors. One of the project partners developed the project’s technology, ‘Study Quest’ (www.study-quest.com).

Although digital Assessment for Learning (AFL) has great classroom potential (Maier 2014), its use in Europe is not widespread. DALDIS is attempting to address this by testing the application of AFL methodology using technology in the project partners’ schools. Informed by AFL research, DALDIS aims to develop and research a technology-based AFL solution that drives student learning progression using well-designed question-sets and student feedback. The two target curriculum areas are science and modern foreign language (MFL) learning for students aged 11–15.

As lead partner for DALDIS, Ireland is responsible for the first adaptation of StudyQuest, known as JCQuest (www.jcquest.ie), which is designed to support AFL in the context of the ‘new’ Junior Cycle curriculum. Ten Irish schools participated in a pre-pilot of JCQuest in 2020. This case study presents and discusses the implications of key access and usage patterns of JCQuest, based on Google Analytics data, against the backdrop of school closures and reopening during the Covid-19 pandemic. Although the data presented here are mainly descriptive, they provide a valuable insight into how JCQuest supported the online curriculum and assessment needs of students preparing for exams during the pandemic.

Assessment for learning

DALDIS is underpinned by AFL theory and educational technology. The project is built on the principle that formative assessment is one of the best methods to encourage student achievement (Hattie 2009) and on Wiliam and Black’s (1998) definition of formative assessment practices as methods of feedback that inform teaching and learning activities. Since then, research has examined various aspects of AFL, in particular feedback. Many empirical studies see feedback as central to learning, with Hattie (1999, 9) describing it as ‘the most powerful single moderator that enhances achievement’. According to Gamlem and Smith (2013), feedback is most useful to learners when it is delivered immediately after a test and includes specific comments about errors and suggestions for improvement. Students who receive positive feedback on how to improve become more interested and engaged in a subject and elaborate more deeply on the content (Rakoczy et al. 2008).

Computer-based assessment (CBA)

The use of technology within education affects many aspects of learning, including assessment. Good assessment practices are essential for learning, and technology can be used to enhance assessment and feedback (Deeley 2018). Computer-Based Assessment (CBA) is seen as a promising way to promote formative assessment practices in schools (Russell 2010), as it can ‘individualize feedback, increase student engagement, and support self-regulated learning’ (Van der Kleij and Adie 2018, 612).

Although CBA tools such as Clickers, Kahoot and RecaP are widely used in schools, the research evidence on their impact is mixed (Zhu and Urhahne 2018; Lasry 2008). Both the ad hoc way in which they are deployed in classrooms and the limits of their affordances, as tools rather than systems, are undoubtedly constraining factors. This is why the US Office of Educational Technology (2017) called for more advanced systems that provide teachers with high-quality formative assessment tools. However, as Luckin et al. (2012, 64) advise, technology alone does not promote learning; instead, there is a need to focus more on ‘evolving teaching practice to make the most of technology’. This is particularly true of Assessment for Learning, whose implementation to date has encountered many challenges.

These challenges are well documented in the academic literature, where research shows that teachers and students do not fully engage with formative assessment processes (Van der Kleij and Adie 2018). Multiple studies indicate a deficit in teachers’ assessment literacy, with novice teachers especially feeling unprepared for assessment in schools (Herppich et al. 2018), leading to urgent calls for ongoing professional development to build teachers’ assessment capability (Lysaght and O’Leary 2017). Furthermore, effective implementation of AFL, like all educational innovations, is strongly influenced by teachers’ beliefs, attitudes and pedagogical perspectives (Judge 2020; Sach 2013; Lee, Feldman, and Beatty 2012). Teachers with a more constructivist, student-centered approach to learning tend to use AFL more often and in a more meaningful way (Hargreaves 2013; Birenbaum, Kimron, and Shilton 2011). Given the increasing emphasis on the use of technology for assessment, this is particularly significant, as the link between constructivist beliefs and teachers’ adoption of technology is well established. This suggests that many of the first- and second-order barriers (Ertmer 2005; Judge 2013) associated with the use of ICT in schools, such as computer competency, infrastructure, openness to change, attitudes and beliefs, are also important factors influencing the implementation and adoption of Assessment for Learning.

DALDIS, JCQuest implementation, school closures and Covid-19

The backbone of the DALDIS project is the Study Quest technology platform, which has been designed to support a variety of systems, devices and browsers for both school and home use to ensure maximum accessibility. At a conceptual level, Study Quest is informed by research, in particular Hattie and Timperley’s (2007) guidelines on feedback, based on their synthesis of meta-analyses covering 196 studies on the topic. In StudyQuest, carefully designed formative feedback for every question ‘nudges’ students towards the correct answer, reinforcing basic knowledge while also helping students develop conceptual understanding.

As the lead partner in DALDIS, Ireland is the first country to adapt Study Quest, through the creation of its own curriculum-specific platform, ‘JCQuest’ (www.jcquest.ie). JCQuest is fully aligned with the ‘new’ Junior Cycle (JC) curriculum, which has undergone a major revision in recent years. As the ‘new’ JC encourages the use of formative assessment, with Classroom-Based Assessment now constituting 10% of the overall JC examination mark, it provides an ideal opportunity to test the development of a fully curriculum-aligned AFL system like JCQuest.

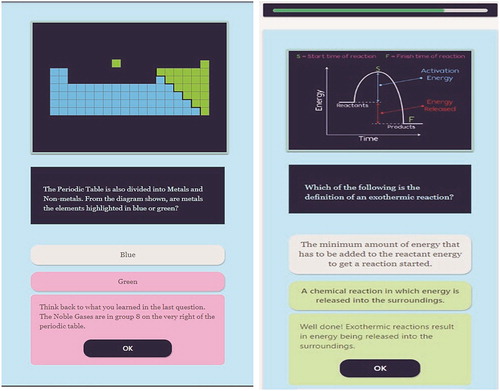

JCQuest comprises a repository of multiple-choice question-sets to support Science and French language learning. These question-sets are based on popular textbooks for ease of integration in the classroom. The system has been designed to support learning progression by providing immediate feedback that helps students build understanding and reinforces key curriculum concepts. For both correct and incorrect answers, students receive appropriate feedback to promote further learning (Figure 1).

Figure 1. Feedback for right and wrong answers supports the student with positive nudges in JCQuest.

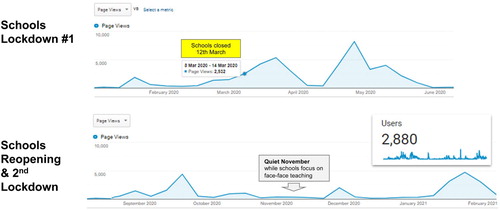

A beta version of JCQuest was launched with ten Irish schools for piloting in January 2020. Uptake was slow until Ireland’s first national pandemic lockdown was announced on March 12th, when schools closed immediately; they did not reopen until August. Despite the closures, the Government initially insisted that the Junior and Senior Cycle terminal examinations would still go ahead as normal in June 2020. However, on April 29th the Government backtracked and announced the cancellation of the JC examination; instead, students would be awarded a standard certificate of completion based on an assessment of their work. Schools had until May 29th to complete additional tests for students if required.

Findings

From the perspective of DALDIS, these dates and events are significant, as can be seen from JCQuest’s online engagement patterns. The surge in usage once lockdown commenced is striking, with average weekly users increasing from just 46 before Covid-19 to over 200 during the first lockdown (March–June), more than a four-fold increase. Apart from the Easter break in April, online engagement remained strong until the end of May, with over 50,000 page impressions recorded in this period. Interestingly, the usage data reveal three daily activity peaks: from 11 am to 12.30 pm, from 2 to 4 pm and again from 6 to 8 pm.
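The growth figure quoted above can be checked from the two weekly averages alone. The short Python sketch below is illustrative only: the constant and function names are hypothetical, it treats 46 and 200 as the pre-Covid-19 and first-lockdown weekly averages, and because the lockdown figure is reported as ‘over 200’, the ratio it computes is a lower bound rather than a measured value.

```python
def fold_change(baseline: float, later: float) -> float:
    """Return how many times larger `later` is than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be a positive average")
    return later / baseline


def percent_increase(baseline: float, later: float) -> float:
    """Return the relative increase from `baseline` to `later`, in percent."""
    return (fold_change(baseline, later) - 1.0) * 100.0


# Weekly-user averages quoted in the text; 200 is a lower bound ("over 200").
PRE_COVID_WEEKLY_USERS = 46
FIRST_LOCKDOWN_WEEKLY_USERS = 200

if __name__ == "__main__":
    ratio = fold_change(PRE_COVID_WEEKLY_USERS, FIRST_LOCKDOWN_WEEKLY_USERS)
    pct = percent_increase(PRE_COVID_WEEKLY_USERS, FIRST_LOCKDOWN_WEEKLY_USERS)
    print(f"at least {ratio:.2f}-fold, i.e. at least {pct:.0f}% more weekly users")
```

With these inputs the ratio is roughly 4.3; a full five-fold rise would have required an average of about 230 weekly users (5 × 46) during the first lockdown.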

An analysis of the top 50 of the 174 page titles accessed indicates high-quality usage of JCQuest question-sets, based on session duration and bounce rates. In JCQuest, a question-set generally comprises 15 pages and takes approximately 4–5 min to complete, whereas the analytics show that students spend 6.5 min on average on each question-set. This suggests a high level of engagement, which bodes well for the future development of JCQuest and the DALDIS project. Although the French material was not released until April, its uptake was rapid, with usage levels matching science by the end of May. Among the science material, it is worth noting that seven of the ten most-viewed pages relate to biology.
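The dwell-time reasoning above can be made explicit with a little arithmetic. The Python sketch below assumes, as the text states, a typical set length of 15 pages, an expected completion time of 4–5 min and an observed average of 6.5 min; the constant and function names are hypothetical. Analytics dwell time can also include idle time, so a longer-than-expected average is consistent with careful engagement rather than proof of it.

```python
PAGES_PER_SET = 15             # typical question-set length quoted in the text
EXPECTED_MINUTES = (4.0, 5.0)  # approximate completion-time range
OBSERVED_MINUTES = 6.5         # average time per question-set from the analytics


def seconds_per_page(minutes: float, pages: int = PAGES_PER_SET) -> float:
    """Convert time spent on a whole question-set into seconds per page."""
    return minutes * 60.0 / pages


def dwell_ratio(observed: float, expected: float) -> float:
    """How many times longer students stayed than the expected completion time."""
    return observed / expected


if __name__ == "__main__":
    low, high = (seconds_per_page(m) for m in EXPECTED_MINUTES)
    print(f"expected: {low:.0f}-{high:.0f} s per page")
    print(f"observed: {seconds_per_page(OBSERVED_MINUTES):.0f} s per page")
    for expected in EXPECTED_MINUTES:
        ratio = dwell_ratio(OBSERVED_MINUTES, expected)
        print(f"vs {expected:.0f} min estimate: {ratio:.2f}x")
```

With these inputs, students spent about 26 s per page against an expected 16–20 s, i.e. roughly 1.3 to 1.6 times the estimated completion time.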

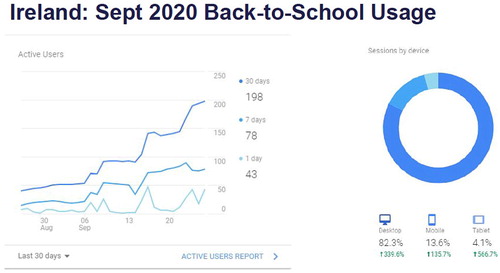

When students finally returned to school in late August, usage of JCQuest gradually increased. By September, the number of users accessing JCQuest (almost 200) closely matched peak March lockdown figures. October, however, saw a drop in usage, especially approaching the mid-term break.

The flatlining in usage that began at the October mid-term continued into November. This was followed by a spike in December and another large spike from late January 2021 onwards, when teaching moved completely online due to another national lockdown. Taken together, these patterns provide an interesting comparative snapshot of JCQuest usage during the school closure and reopening periods of the pandemic to date.

Discussion

While these are still very early days in the unfolding narrative of JCQuest’s deployment in schools, the initial beta piloting of the system has yielded some interesting data while also raising additional questions requiring further investigation. Uniquely, and somewhat fortuitously, the data provide an insight into how Irish students used a digital AFL system to support learning during the pandemic, how they organized their learning day and the types of devices they used.

The noticeable spike in the usage of JCQuest once schools closed in March 2020 suggests that the system addressed a clear need for students, and for JC examination students in particular. As all question-sets were fully aligned with the curriculum, JCQuest was an ideal companion for students forced to work independently and online, with limited teacher interaction, once schools closed. Although the system has not yet been fully data-mined to establish which year groups engaged the most, our hypothesis is that it was mainly Year 3 students preparing for the upcoming JC examination. The system data show that students spent most of their time on Year 1 and Year 2 material, which suggests that they were revising topics covered in earlier years, as these would be less well remembered than content covered in the current academic year. This hypothesis is further supported by the sharp drop-off in online engagement from mid-May onwards, following the April 29 announcement cancelling the 2020 JC examination.

It is also interesting to see how students organized their learning time during lockdown. JCQuest’s usage pattern indicates that students followed a standard school day, comprising an intense period of morning study followed by a similar afternoon pattern. The evening engagement period (6–8 pm) mirrors the time normally spent on ‘homework’ in the pre-pandemic world. This suggests that students who were independently engaging with schoolwork online were sticking to a tried and trusted ‘offline’ formula to manage their studies.
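One way to test this ‘school day’ interpretation on exported analytics data would be to tag each session start time with the peak window it falls into. The sketch below is a hypothetical helper, not part of JCQuest or Google Analytics: it hard-codes the three daily peaks reported in the Findings (11 am to 12.30 pm, 2 to 4 pm and 6 to 8 pm), assumes session start times are available as local clock times, and uses invented sample times purely for illustration.

```python
from collections import Counter
from datetime import time

# Daily peaks reported in the Findings (start inclusive, end exclusive).
ACTIVITY_WINDOWS = {
    "morning study": (time(11, 0), time(12, 30)),
    "afternoon study": (time(14, 0), time(16, 0)),
    "evening homework": (time(18, 0), time(20, 0)),
}


def classify(start: time) -> str:
    """Label a session start time with the school-day window it falls into."""
    for label, (begin, end) in ACTIVITY_WINDOWS.items():
        if begin <= start < end:
            return label
    return "outside peak windows"


def tally(starts: list[time]) -> Counter:
    """Count sessions per window for a list of session start times."""
    return Counter(classify(s) for s in starts)


if __name__ == "__main__":
    # Hypothetical start times, for illustration only (not JCQuest data).
    sample = [time(11, 20), time(12, 45), time(14, 5), time(15, 59), time(19, 30)]
    for label, count in tally(sample).items():
        print(label, count)
```

A high share of sessions inside the three windows would be consistent with the school-day interpretation; a large ‘outside peak windows’ count would argue against it.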

The types of devices used to access JCQuest are also worth noting. While the system had been designed with the expectation that students would mainly use mobile phones, the high percentage of students using desktops/laptops is a surprise. The fact that 62% of students were using desktops during the first lockdown suggests that larger devices provided a more comfortable working environment for sustained online interaction. The even higher usage of desktops (82%) when students returned to school likely reflects in-class usage of JCQuest, where desktops/laptops are the norm. The increase in mobile usage during that same period (18.9%), therefore, likely points to students using JCQuest for independent learning, revision or homework, as mobiles are largely discouraged in schools. Tablet usage, which declined from 10.1% during lockdown to just 1.4% post-lockdown, is surprisingly low and would support the view that tablets are consumer devices rather than serious workhorses for education or enterprise.

In terms of additional questions requiring further investigation, two stand out in particular: first, the popularity of biology and, second, the peaks and troughs in online/offline engagement.

The popularity of biology may simply be due to the fact that studying biology involves a lot of memorization of facts, diagram labelling and definitions, and, therefore, it can be argued that JCQuest’s multiple-choice question format provides an ideal examination preparation tool. However, it is also possible that gender factors are at play, particularly when one considers that girls in developed countries are more conscientious than boys when it comes to schoolwork and preparing for exams (OECD 2015); that subject choices in senior cycle are influenced by how well one performs in the junior cycle; and that, internationally, girls in post-primary schools, unlike boys, are increasingly interested in studying biology rather than physics and engineering subjects (Baram-Tsabari and Yarden 2011). This gender imbalance in STEM subject choices is a complex phenomenon involving multiple factors operating at individual, institutional, familial and societal levels, so much so that the STEM field itself is segregated along gender lines. For example, males outnumber females in engineering and technology fields, whereas occupations such as medicine and the life sciences are more gender-balanced (Makarova, Aeschlimann, and Herzog 2019). Thus, like their international counterparts, girls who continue to study science at senior cycle in Irish schools are more inclined to choose biology (Delaney and Devereux 2019), especially when the highly prized university courses they wish to pursue, such as medicine, dentistry, veterinary medicine, physiotherapy and nursing, all have a strong biology component. This is in line with Blickenstaff’s (2005) findings that biology-related careers, which are more closely associated with caring, sit at the more ‘feminine’ end of a continuum of science disciplines.

Understandably, the motivation to do well in Junior Cert science, particularly biology, is very strong for girls interested in pursuing science careers, and this may explain the high usage of biology material on JCQuest.

The recurrent online/offline usage pattern for JCQuest is also interesting and, in truth, probably raises more questions than it answers. Clearly there was good use of the system when schools first reopened after lockdown, but from October onwards usage declined sharply, almost until the second lockdown. As researchers, we wonder why. Was it because in September teachers were familiarizing students with a system that they would use later, once more curriculum material had been covered and JCQuest’s assessment capabilities could be more fully utilized? Was it because teachers were preparing students to use the system should another lockdown be required, which was a real possibility in September when Covid-19 figures began to rise again nationally? As it turned out, schools did not face another lockdown until January 2021, and with this second lockdown usage of JCQuest rose rapidly again, with engagement figures returning to levels experienced in the first lockdown. While this is reassuring, it should be remembered that JCQuest was primarily designed as a digital AFL solution to support classroom teaching and learning, not as a pandemic stop-gap for online learning. Therefore, the lull in usage when teachers were back in their classrooms may be concerning, as it could indicate that once teachers returned to ‘normal’ face-to-face teaching, they were less inclined to use JCQuest for assessment, reverting instead to more traditional methods. If this is the case, one must ask to what extent some of the challenges surrounding the adoption of AFL discussed earlier in this paper, such as teachers’ assessment literacy deficit, pedagogical beliefs and attitudes, and the lack of ongoing professional development in AFL methods and practices, are barriers that urgently need addressing.

Conclusion

While the DALDIS project predated Covid-19, the surge in usage of JCQuest by Irish students during both school lockdown periods indicates that a well-designed digital platform fully aligned with the curriculum can be a valuable AFL solution once students and teachers use it. This paper has only discussed the Irish experience, based on a beta launch of the JCQuest platform whose implementation, perversely, appears to have benefitted from the need to move to online learning during a national pandemic. However, in the wider DALDIS context, the intention is to produce ‘JCQuest equivalents’ based on StudyQuest for each partner country throughout the project’s three-year lifecycle (2019–2022). These will contain question-sets that closely match national curricula and local classroom teaching practices, and over time differences in teaching and learning approaches, pedagogies and curriculum structures will be researched to advance the understanding of Assessment for Learning (AFL) in classrooms at a European level.

In so doing, the project researchers are fully cognisant of Hargreaves’s (2005) position that AFL is better approached from an inquiry rather than a measurement perspective. Understandably, when using a CBA system like JCQuest, which provides such ready access to quantitative data, the temptation is to go down the measurement route. However, as the pilot implementation of JCQuest illustrates, it is the issues that cannot be so readily measured, such as why the biology material proved so popular and why system usage declined when normal face-to-face teaching resumed, that raise the more interesting questions.

Therefore, as DALDIS develops, the Google Analytics data will be supplemented with qualitative data from interviews, observations and surveys to shed light on these more nuanced and challenging issues. Adopting a mixed-methods approach will, we believe, result in a fuller and more comprehensive understanding of the implementation and adoption of a digital AFL solution that reflects and respects the ‘lived experience’ of everyday classroom life, not just in Ireland but in European classrooms more widely.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Miriam Judge

Dr Miriam Judge is a lecturer in digital media in the School of Communications at Dublin City University, Ireland. Her main research interest is in the use of digital media and educational technology in schools. She has led and managed a number of high-profile projects in the area of technology-enabled learning, both nationally and internationally, including the Micool Project (2015–2017) on the deployment of tablet technologies in European schools (www.micool.org) and the DALDIS project (2019–2022) on Digital Assessment for Learning (www.daldis.eu). She is treasurer and a member of the National Executive of the Computers in Education Society of Ireland (www.cesi.ie), an organisation which promotes and supports the use of digital technology in education, a steering committee member of the National Digital Skills Coalition and a former Advisory Board member of TechWeek (www.techweek.ie), Ireland’s nationwide festival of technology. In 2019 Dr Judge was made a fellow of the Irish Computer Society (www.ics.ie) in recognition of her work in promoting and supporting the educational technology agenda in Ireland.

References

- Baram-Tsabari, A., and A. Yarden. 2011. “Quantifying the Gender Gap in Science Interests.” International Journal of Science and Mathematics Education 9: 523–550. doi:10.1007/s10763-010-9194-7.

- Birenbaum, M., H. Kimron, and H. Shilton. 2011. “Nested Contexts That Shape Assessment ‘for’ Learning: School-Based Professional Learning Community and Classroom Culture.” Studies in Educational Evaluation 37: 35–48. doi:10.1016/j.stueduc.2011.04.001.

- Blickenstaff, J. 2005. “Women and Science Careers: Leaky Pipeline or Gender Filter?” Gender and Education 17 (4): 369–386.

- Deeley, S. J. 2018. “Using Technology to Facilitate Effective Assessment for Learning and Feedback in Higher Education.” Assessment & Evaluation in Higher Education 43 (3): 439–448. doi:10.1080/02602938.2017.1356906.

- Delaney, J., and P. J. Devereux. 2019. “Understanding Gender Differences in STEM: Evidence from College Applications.” Economics of Education Review 72: 219–238.

- Ertmer, P. A. 2005. “Teacher Pedagogical Beliefs: The Final Frontier in our Quest for Technology Integration?” Educational Technology Research and Development 53 (4): 25–39.

- Finger, G. 2015. “Creativity, Visualization, Collaboration and Communication.” In Teaching and Digital Technologies: Big Issues and Critical Questions, edited by M. Henderson, and G. Romeo, 89–103. Melbourne: Cambridge University Press.

- Gamlem, S. M., and K. Smith. 2013. “Student Perceptions of Classroom Feedback.” Assessment in Education: Principles, Policy and Practice 20 (2): 150–169. doi:10.1080/0969594X.2012.749212.

- Hargreaves, E. 2005. “Assessment for Learning? Thinking Outside the (Black) Box.” Cambridge Journal of Education 35 (2): 213–224. doi:10.1080/03057640500146880.

- Hargreaves, E. 2013. “Inquiring into Children’s Experiences of Teacher Feedback: Reconceptualising Assessment for Learning.” Oxford Review of Education 39: 229–246. doi:10.1080/03054985.2013.787922.

- Hattie, J. A. C. 1999. “Influences on Student Learning.” Inaugural lecture, University of Auckland, New Zealand, 2 August.

- Hattie, J. 2009. Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement. Abingdon: Routledge.

- Hattie, J., and H. Timperley. 2007. “The Power of Feedback.” Review of Educational Research 77 (1): 81–112.

- Herppich, S., A. Praetorius, N. Forster, I. Glogger-Frey, K. Karst, D. Leutner, L. Behrmann, et al. 2018. “Teachers’ Assessment Competence: Integrating Knowledge-, Process-, and Product-Oriented Approaches Into a Competence-Oriented Conceptual Model.” Teaching and Teacher Education 76: 181–193.

- Judge, M. 2013. “Mapping Out the ICT Integration Terrain in the School Context: Identifying the Challenges in an Innovative Project.” Irish Educational Studies 32 (3): 309–333. doi:10.1080/03323315.2013.826398.

- Judge, M. 2020. “Computer-Based Training and School ICT Adoption, A Sociocultural Perspective.” In Encyclopedia of Education and Information Technologies, edited by A. Tatnall. Cham: Springer. doi:10.1007/978-3-319-60013-0_77-1.

- Lasry, N. 2008. “Clickers or Flashcards: Is There Really a Difference?” The Physics Teacher 46 (4): 242–244.

- Lee, H., A. Feldman, and I. D. Beatty. 2012. “Factors That Affect Science and Mathematics Teachers’ Initial Implementation of Technology-Enhanced Formative Assessment Using a Classroom Response System.” Journal of Science Education and Technology 21: 523–539. doi:10.1007/s10956-011-9344-x.

- Luckin, R., B. Bligh, A. Manches, S. Ainsworth, C. Crook, and R. Noss. 2012. Decoding Learning: The Proof, Promise and Potential of Digital Education. London: Nesta. https://media.nesta.org.uk/documents/decoding_learning_report.pdf.

- Lysaght, Z., and M. O’Leary. 2017. “An Instrument to Audit Teachers’ Use of Assessment for Learning.” Irish Educational Studies 32 (2): 217–232. doi:10.1080/03323315.2013.784636.

- Maier, U. 2014. “Computer-based, Formative Assessment in Primary and Secondary Education – A Literature Review on Development, Implementation and Effects.” Unterrichtswissenschaft 42 (1): 69–86.

- Makarova, E., B. Aeschlimann, and W. Herzog. 2019. “The Gender Gap in STEM Fields: The Impact of the Gender Stereotype of Math and Science on Secondary Students’ Career Aspirations.” Frontiers in Education 4: 60. doi:10.3389/feduc.2019.00060.

- Martinovic, D., and Z. Zhang. 2012. “Situating ICT in the Teacher Education Program: Overcoming Challenges, Fulfilling Expectations.” Teaching and Teacher Education: An International Journal of Research and Studies 28 (3): 461–469.

- OECD. 2015. The ABC of Gender Equality in Education: Aptitude, Behaviour, Confidence. Paris: OECD Publishing. doi:10.1787/9789264229945-en.

- Rakoczy, K., E. Klieme, A. Bürgermeister, and B. Harks. 2008. “The Interplay Between Student Evaluation and Instruction: Grading and Feedback in Mathematics Classrooms.” Journal of Psychology 216: 111–124. doi:10.1027/0044-3409.216.2.111.

- Russell, M. 2010. “Technology-Aided Formative Assessment of Learning.” In Handbook of Formative Assessment, edited by H. L. Andrade, and G. J. Cizek, 125–138. New York: Routledge.

- Sach, E. 2013. “An Exploration of Teachers’ Narratives: What are the Facilitators and Constraints Which Promote or Inhibit ‘Good’ Formative Assessment Practices in Schools?” Education 3–13 43: 322–335. doi:10.1080/03004279.2013.813956.

- Shonfeld, M., M. Cotnam-Kappel, and M. Judge. 2021. “Learning in Digital Environments: A Model for Cross-Cultural Alignment.” Educational Technology Research and Development. doi:10.1007/s11423-021-09967-6.

- Van der Kleij, F., and L. Adie. 2018. “Formative Assessment and Feedback Using Information Technology.” In Second Handbook of Information Technology in Primary and Secondary Education, edited by J. Voogt, 601–615. Springer International Handbooks of Education. Cham, Switzerland: Springer International Publishing AG. doi:10.1007/978-3-319-71054-9.

- Wiliam, D., and P. Black. 1998. Inside the Black Box: Raising Standards Through Classroom Assessment. London: King’s College London.

- Zhu, C., and D. Urhahne. 2018. “The Use of Learner Response Systems in the Classroom Enhances Teachers’ Judgement Accuracy.” Learning and Instruction 58: 255–262.