Abstract

Artificial Intelligence (hereinafter, AI), and specifically Machine Learning (hereinafter, ML), has shown tremendous potential to revolutionize the internal audit (hereinafter, IA) profession, from enabling audit coverage of entire test populations to introducing objectivity into the analysis of key areas. However, prior literature shows that the multiplicity of innovation options can be overwhelming. This paper aims to offer a theoretical framework that would enable audit practitioners, in both industry and professional services, to consider how ML capabilities can be harnessed to their fullest potential across the internal audit lifecycle, from audit planning to reporting. Drawing on extant literature, the paper discusses how data analytics (hereinafter, DA) and ML capabilities relate to the remit of the internal audit function (hereinafter, IAF). In doing so, it identifies specific options available to IAFs to drive innovation across each segment of the audit lifecycle using various DA and ML techniques, and supports the assertion that auditors require a continuous innovation mind-set to be effective change agents. The paper also highlights the need for effective guardrails, especially around emerging technology such as Generative AI. Finally, it discusses how IAFs can measure the value arising from these efforts.

INTRODUCTION

The role of Internal Audit as the “third line” in an organization’s Three Lines Model is well entrenched across most organizations today, and was most recently reinforced by the Institute of Internal Auditors (IIA) in its 2020 publication (The Institute of Internal Auditors [IIA], 2020). However, when the model was originally introduced by the IIA in 2013 (Taft et al., 2020), the business world was arguably less affected by disruptive technology and AI than it is today. Technology has introduced complexity across multiple organizational layers (Betti & Sarens, 2021; Cangemi, 2013), prompting organizations to fundamentally rethink how they do business and demonstrate compliance. This principle no doubt extends to IAFs as well, so it should come as no surprise that expectations of IAFs have increased significantly. Specifically, the IAF’s role relates, directly or indirectly, to evaluating, improving, or developing an organization’s controls framework in response to evolving risks, and is therefore organizationally significant (Roussy et al., 2020). This risk-based approach to auditing is perhaps the only consistent, visible attribute of an IAF’s structure, a principle accepted in academia and practice alike. Indeed, the use of a risk-based approach to internal auditing has been the subject of research over the last decade (Castanheira et al., 2010; Coetzee & Lubbe, 2013). Coetzee and Lubbe (2013) summarize this well, identifying the value of a risk-based audit approach as the more effective and efficient performance of audit engagements. Importantly, they also highlight the role of a risk-based internal audit approach in “ensuring that scarce internal audit resources are used optimally.”

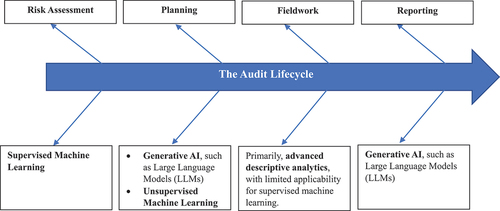

As established by Betti and Sarens (2021), the increased digitization of the business environment means that internal auditors are increasingly expected to acquire “digital skills”, consider the growing importance of information technology (IT) risks such as cybersecurity threats, and demonstrate greater agility in audit planning. Read together with the principle that an IAF is risk-focused, it stands to reason that innovation within the IAF should maintain a focus on risk and enable agile audit responses, from the timely identification of high-risk organizational units to comprehensive assurance test coverage, ultimately driving the timely detection of control failures and improvement opportunities. These goals are aided to some extent by the progressive and rapidly growing implementation of new technologies such as data analytics, but the scalability of such progress depends on the rapid upskilling of IAFs across industry and professional services firms. This position is further reinforced by Lehmann and Thor (2020), who identify machine learning and advanced analytics as key components of innovation in the internal audit profession as it looks to the future (see Figure 1). The “Auditor of the Future” should therefore demonstrate strong innovation capabilities, to challenge historical mind-sets and, ultimately, deliver on the IAF’s mandate for the organization.

It is therefore crucial to understand the “what” and the “how” in the context of innovation for IAFs. A few obvious questions emerge:

What does innovation look like for an IAF?

What has been done in the past, and what lessons have been learnt?

Are there thematic principles that can be used to drive consistent adoption of innovative techniques, such as machine learning or advanced data analytics, across the entirety of the internal audit lifecycle?

How can IAFs measure progress and value-add?

This paper attempts to work through the four questions listed above and offers a theoretical framework that maps machine learning techniques to existing elements of the internal audit lifecycle.

This framework is expected to enable a holistic appreciation of the possibilities arising from innovation, which could help IAFs draft a roadmap for reaching their desired “North Star” position as early as possible.

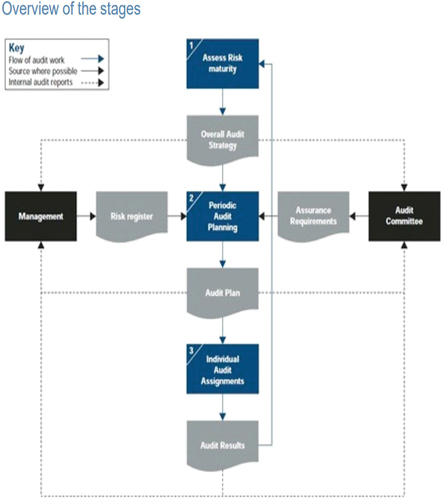

For the purposes of this paper, the internal audit lifecycle is defined as a cyclical process, also referred to as the “risk-based internal audit model”, originally set out by the IIA in its 2023 publications (The Institute of Internal Auditors [IIA], 2023a, 2023b), reproduced in Figure 2 and summarized below:

Assessing Risk Maturity.

Periodic Audit Planning.

Fieldwork - for individual audit assignments.

Reporting - of audit results to the audit committee, which in turn informs future assessments of risk maturity.

LITERATURE REVIEW

Approach

The literature review was initiated using the following keywords:

Internal Audit Risk Assessment, Continuous Auditing, Audit Data Analytics, Automation in Internal Audit, Innovation in Internal Audit, Anomaly Detection in Internal Audit, Process Mining in Internal Audit, Machine Learning in Audit and Blockchain for Auditing.

The above keywords fall into two broad categories:

Generic: The keywords ‘Internal Audit Risk Assessment’, ‘Audit Data Analytics’, ‘Continuous Auditing’, ‘Automation in Internal Audit’ and ‘Innovation in Internal Audit’ are considered fundamental to the literature review, given that together they enable a direct assessment of how academics and organizations have approached innovation in IAFs.

Specific: ‘Anomaly Detection in Internal Audit’, ‘Process Mining in Internal Audit’, ‘Machine Learning in Audit’ and ‘Blockchain for Auditing’ represent specific aspects of innovation and were expected to reveal more targeted approaches to innovation in internal audit, enabling a review of the current state of the art. These reviews were expected to be supported by contextual examples, enabling a detailed examination of specific case studies.

Search Engines – Databases and Pre-Print Servers

Once defined, the keywords were used to search for literature across three sources:

Inclusion Criteria

As a final step, the following inclusion criteria were defined to select papers for assessment:

Language: Only papers written in English were selected for the literature review.

Time period: Only literature published in the last twenty years (2003–2023 inclusive) was selected, with a bias toward research published in the most recent ten years (2013–2023). This timeframe was deliberately chosen to capture a wide array of approaches, analyses, and perspectives, whilst remaining cognizant of the impact of recent innovation.

Abstract Assessment: The abstract of each candidate paper was assessed qualitatively, to finalize the inclusion of the most relevant and impactful research for a systematic literature review.

The above approach yielded the primary sources of research for this paper and was repeated with individual keywords to delve deeper into specific points of research as required.

Findings

As part of their exploratory study into the use of audit data analytics in practice, Eilifsen et al. (2020) interviewed the Heads of Professional Practice at five international public accounting firms in Norway and analyzed the “perceptions towards audit data analytics” of 216 senior audit practitioners in the country. Eilifsen et al. (2020) concluded that a) firms differ in their strategies for implementing advanced data analytics, b) the use of advanced data analytics is “rare”, and c) there is significant uncertainty about how supervisory inspection authorities will respond to the use of analytics. The paper, published in a journal of the American Accounting Association (AAA), has been cited repeatedly since publication and could therefore be considered a seminal study of the use of advanced data analytics by auditors. The Eilifsen et al. (2020) study and its conclusions are representative of broader attempts to assess the impact of data-driven audit capabilities and innovation in the field of audit, and research by Bibler et al. (2023) supports the assertion that an innovation mind-set, applied by leveraging data analytics across the audit lifecycle to provide insights, brings increased benefits.

Prior literature examining the qualitative impact of audit data analytics tends to focus on individual stages of the internal audit lifecycle, as set out above, and appears to be motivated largely by two drivers. The first is the use of analytics to enable greater coverage of the transactions subject to audit (typically referred to as “full population testing”), as opposed to basing audit conclusions on sample-based test work (“audit sampling”) (Butkė & Dagilienė, 2022; Chiu & Jans, 2017; Huang et al., 2022; Nonnenmacher & Gomez, 2021). The second is the expectation that technology-based innovation, such as audit data analytics, could relieve pressure on internal auditors (Cardinaels et al., 2021; Eulerich et al., 2020, 2022). Additionally, attempts to introduce innovation within the internal audit lifecycle show a sense of proactive experimentation, as seen in the application of multiple technologies, most notably ML and data analytics (Austin et al., 2020). It is therefore unsurprising that investment in data analytics capabilities is likely to be in the region of $9 billion over the next few years (Austin et al., 2020). Research also supports the assertion that increased use of data analytics correlates with the IAF’s ability to build stronger relationships with relevant stakeholders, such as the audit and risk committees (Rakipi et al., 2021).

From the use of statistical programming languages such as Python and R (Stratopoulos & Shields, 2018) to the deployment of advanced data analytics techniques, IAFs have not shied away from experimenting with innovation in audit delivery. However, three striking features emerge from the prevailing literature. Firstly, previous attempts have focused on specific case studies, which may not lend themselves to a thematic assessment. Secondly, anecdotal attempts at introducing innovation have met with mixed success. Thirdly, these studies largely point to a positive attitude toward, and perception of, advanced data analytics within the internal audit community.

Accordingly, in the remainder of this section, the literature review findings are mapped across the stages of the internal audit lifecycle:

Risk Assessment and Audit Planning

Limited academic literature exists on internal audit risk assessment and audit planning. This is supported by Awuah et al. (2021), who analyzed data from 75 private and state-owned enterprises and noted that the actual use of data analytics in risk assessment, fraud detection and substantive testing remains low, despite existing research showing how analytics could support some of these objectives, such as fraud prediction (Perols et al., 2015). A detailed paper by Kotb et al. (2020), profiling the academic literature published since the Enron accounting fraud, further contextualizes this position. Among the most relevant examples in prior literature examining the impact of advanced analytics on internal audit risk assessment are studies by Eulerich et al. (2020), who specifically examined continuous auditing (CA) as an audit methodology to support risk assessment, and Kahyaoglu and Aksoy (2021), who discussed the challenges and opportunities that AI brings to internal audit risk assessment. The Eulerich et al. (2020) study analyzed responses from 264 Chief Audit Executives and concluded that CA plays a growing role in risk-based audit planning, that there is consensus in the audit practitioner community on the increasing importance of data analytics, and that improved risk assessment is one of the main benefits of adopting forensic data analytics techniques such as CA. However, this study does not offer insight into how such an improvement might be achieved. In contrast, Kahyaoglu and Aksoy (2021) clearly position AI as a driver of agility in detecting risks arising from increased business complexity. Similarly, whilst S. S. Cao et al. (2019) offer an encouraging analysis of the application of blockchain technology to internal auditing, other literature, such as Dai and Vasarhelyi (2017), Fuller and Markelevich (2019), Munevar and Patatoukas (2020), and Cagle (2020), shows a lack of consensus, or of adequate research, on how such technology would realistically be applied for auditing purposes.
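Continuous auditing is discussed in this literature at the level of methodology rather than mechanics. To make the idea concrete, the following is a minimal sketch, assuming that an IAF receives periodic readings of key risk indicators (KRIs) for each auditable unit and re-ranks units whenever new readings arrive. The KRI names, thresholds and readings are hypothetical, and the breach-count score is a deliberately simple stand-in for whatever risk model an IAF actually uses.

```python
from dataclasses import dataclass


@dataclass
class AuditableUnit:
    name: str
    # Latest key risk indicator (KRI) readings for this unit; names are hypothetical.
    kri_values: dict


# Hypothetical KRI thresholds that an IAF might agree with management.
KRI_THRESHOLDS = {
    "late_payments_pct": 5.0,
    "manual_journals_pct": 10.0,
    "access_violations": 3.0,
}


def risk_score(unit, thresholds=KRI_THRESHOLDS):
    """Count how many KRIs breach their threshold (a missing reading counts as no breach)."""
    return sum(
        1 for kri, limit in thresholds.items()
        if unit.kri_values.get(kri, 0.0) > limit
    )


def rank_for_planning(units, thresholds=KRI_THRESHOLDS):
    """Re-rank units after each monitoring cycle so the audit plan follows current risk.

    Returns (score, unit name) pairs, highest score first; ties are broken
    alphabetically so the ranking is stable between runs.
    """
    scored = [(risk_score(u, thresholds), u.name) for u in units]
    return sorted(scored, key=lambda pair: (-pair[0], pair[1]))


# Illustrative readings for one monitoring cycle (made-up values).
units = [
    AuditableUnit("Procurement", {"late_payments_pct": 7.2, "manual_journals_pct": 12.0, "access_violations": 1}),
    AuditableUnit("Payroll", {"late_payments_pct": 1.0, "manual_journals_pct": 4.0, "access_violations": 5}),
    AuditableUnit("Treasury", {"late_payments_pct": 0.5, "manual_journals_pct": 2.0, "access_violations": 0}),
]

for score, name in rank_for_planning(units):
    print(f"{name}: {score} KRI breach(es)")
```

Running the same ranking on the next cycle's readings would reorder the plan automatically, which is the kind of agility the literature associates with CA. In practice an IAF would likely weight KRIs by impact rather than simply counting breaches.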

As such, the prevailing literature does indicate a preference for the use of classical artificial intelligence capabilities based on previous experimentation with the use of data analytics for audit planning and risk assessments.

Audit Fieldwork

The use of data analytics in audit fieldwork offers a wide spectrum of possibilities, given that the nature of fieldwork naturally varies from one audit engagement to another. However, “professional skepticism” is a consistent feature that auditors are required to demonstrate across the internal audit lifecycle, and specifically during fieldwork. Barr-Pulliam et al. (2020) conducted empirical research on how data analytics influences the way auditors apply professional skepticism on audit engagements. In their experiment, they observed how auditors assess visualizations from a data analytics tool that had analyzed financial and non-financial measures (NFM) for a hypothetical audit engagement. The study demonstrated that an increase in the rate of false positives from data analytics was negatively associated with the auditor’s level of professional skepticism, as auditors became “more comfortable” dismissing red flags highlighted by the analytics. This is consistent with the research of Nelson (2009), who proposed a model describing “how audit evidence combines with auditor knowledge, traits and incentives to produce actions that reflect relatively more or less professional scepticism”. Nelson’s (2009) model shows that preexisting knowledge, traits and incentives work together to affect professional skepticism and, by extension, auditor judgment and conclusions. Indirectly, these experiments highlight the significant influence that data analytics, and auditors’ perception of it, has on the effectiveness of audit fieldwork.

Additional research by Butkė and Dagilienė (2022), on a selection of companies across the agricultural, retail and manufacturing sectors where a risk-based audit approach was implemented, showed that the use of advanced data analytics yielded much higher productivity and completeness of testing. This finding was consistent across all analytics techniques deployed in the research, which included Classification, Clustering, Regression, Association Rules, Text Analysis and Visualization. The study also found that the results of most data analytics tools deploying these techniques were easily interpretable, without requiring additional skills or expertise. This research strongly advocates the consistent use of analytics capabilities for full population testing during audit fieldwork. To this end, Huang et al. (2022) assert that audit sampling is beginning to lose its relevance in the era of big data, and offer a qualitative framework for applying audit data analytics to full population testing. This literature, supported by multiple supplementary studies, makes a powerful case for the positive qualitative impact of data analytics on the execution of individual internal audit assignments. It also positions innovation in internal auditing as a necessity rather than a “nice to have” within the profession, which could serve as a much-needed catalyst for adoption, including training and awareness.
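The contrast between full population testing and audit sampling can be sketched in a few lines. The example assumes a hypothetical control (payments above an approval limit require two approvers) and a synthetic population of 10,000 payments containing five planted exceptions; it illustrates the principle discussed by Huang et al. (2022) and is not their framework. The full population test applies the rule to every record, whereas the sample test only sees the records drawn.

```python
import random
from dataclasses import dataclass

APPROVAL_LIMIT = 10_000.0  # hypothetical: payments above this need two approvers


@dataclass(frozen=True)
class Payment:
    payment_id: int
    amount: float
    approvals: int  # number of recorded approvers


def is_exception(payment):
    """Hypothetical control rule: high-value payments require dual approval."""
    return payment.amount > APPROVAL_LIMIT and payment.approvals < 2


def full_population_test(population):
    """Apply the rule to every record and return all exception IDs."""
    return [p.payment_id for p in population if is_exception(p)]


def sample_test(population, sample_size, seed=0):
    """Apply the same rule to a simple random sample only."""
    sample = random.Random(seed).sample(population, sample_size)
    return [p.payment_id for p in sample if is_exception(p)]


def build_population(size=10_000, n_exceptions=5, seed=42):
    """Synthetic ledger: compliant low-value payments plus a few planted exceptions."""
    rng = random.Random(seed)
    population = [Payment(i, round(rng.uniform(100, 9_999), 2), 1) for i in range(size)]
    for i in rng.sample(range(size), n_exceptions):
        population[i] = Payment(i, 25_000.0, 1)  # high value, single approver
    return population


population = build_population()
full = full_population_test(population)
sampled = sample_test(population, sample_size=60)
print(f"Full population: {len(full)} exception(s); sample of 60: {len(sampled)} exception(s)")
```

With five exceptions in 10,000 records, a sample of 60 is expected to contain only 0.03 of them on average (60 × 5 / 10,000), so the sample will usually report none while the full population test reports all five. The sample size of 60 is arbitrary and chosen only for illustration.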

In their submission to the International Journal of Accounting Information Systems, Bäßler and Eulerich (2023) offer a rare empirical insight into how deploying a machine learning technique on a classic internal audit test of payment timeliness enabled the auditors on the team to “provide assurance, reduce risk and prevent undesired outcomes”. The study showed that machine learning (in this case, supervised machine learning using classification) could predict control failures in the payments process before the formal completion of the audit, whilst retaining the objectivity and independence of the audit. The empirical study considered the impact and usefulness of:

Predicting control failures too close to the audit deadline, which showed the highest accuracy but limited additional value, given that first- and second-line management teams could not prevent the control failure in good time.

Predicting control failures too early, which could confuse roles and responsibilities between the Internal Audit function and the first and second lines of defense.

Identifying the ‘optimal cutoff’, defined in this experiment as seven business days before the audit deadline. Bäßler and Eulerich (2023) identified this threshold as the point that delivered the highest value-add from advanced analytics, whilst retaining auditor objectivity and independence.
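The supervised classification idea behind the Bäßler and Eulerich (2023) study can be illustrated with a small logistic regression, written in plain Python so that it has no dependencies. This is a minimal sketch under stated assumptions, not the authors' model: the two features (days an invoice has been pending approval, and whether a purchase order is missing), the ten training records and the 0.5 decision threshold are all hypothetical, and the features are assumed to be captured at the cutoff, i.e. seven business days before the deadline.

```python
import math

# Hypothetical historical payments, with features as captured at the cutoff
# (assumed: seven business days before the payment deadline).
# Each row: (days_pending_approval, missing_purchase_order, paid_late)
TRAINING = [
    (0, 0, 0), (1, 0, 0), (2, 0, 0), (1, 1, 0), (3, 0, 0),
    (6, 0, 1), (8, 1, 1), (9, 0, 1), (7, 1, 1), (10, 1, 1),
]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic(rows, lr=0.1, epochs=2000):
    """Fit two weights and a bias by batch gradient descent on the log-loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for x1, x2, y in rows:
            error = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            grad_w[0] += error * x1
            grad_w[1] += error * x2
            grad_b += error
        w[0] -= lr * grad_w[0] / n
        w[1] -= lr * grad_w[1] / n
        b -= lr * grad_b / n
    return w, b


def predict_late(model, days_pending, missing_po):
    """Probability that a payment still open at the cutoff will breach its deadline."""
    w, b = model
    return sigmoid(w[0] * days_pending + w[1] * missing_po + b)


model = train_logistic(TRAINING)
for days, po in [(0, 0), (9, 1)]:
    p = predict_late(model, days, po)
    flag = "flag for first-line follow-up" if p >= 0.5 else "no action"
    print(f"pending {days} days, missing PO={po}: p(late)={p:.2f} -> {flag}")
```

Taking the snapshot closer to the deadline would typically yield features that separate late and on-time payments more cleanly, mirroring the accuracy-versus-usefulness trade-off the study describes; where to place the cutoff remains a business decision rather than something the model can settle.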

Advances in ML and data analytics are also being applied in the public sector. A concerted effort by a government regulator, the Internal Revenue Service (IRS; responsible for tax administration and collection in the United States), is a case in point for the use of analytics for full population testing yielding rich outcomes. Specifically, research by Houser and Sanders (2018) shows that the IRS has invested in both predictive and descriptive analytics to support a preliminary review of tax returns, with a view to detecting potentially non-compliant behavior and profiling taxpayers for potential audits where needed. The IRS’ significant investment in a dedicated analytics program, the Automated Substitute for Returns, staffed by just 65 full-time equivalent employees, finalized c. 400,000 cases resulting in $542.8 million in additional assessments in fiscal year 2016 alone. This works out to a cost of 35 cents for every $100 collected.
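The unit economics implied by the IRS figures above can be worked through directly. This short derivation assumes, as the text does, that the $542.8 million in additional assessments is the amount “collected”, and uses the approximate figure of 400,000 cases, so the results are indicative only.

```latex
\[
\begin{aligned}
\text{Implied program cost} &\approx \frac{\$0.35}{\$100} \times \$542.8\,\text{m} = 0.0035 \times \$542.8\,\text{m} \approx \$1.90\,\text{m} \\
\text{Assessment per case} &\approx \frac{\$542.8\,\text{m}}{400{,}000} \approx \$1{,}357 \\
\text{Cases per FTE} &\approx \frac{400{,}000}{65} \approx 6{,}154
\end{aligned}
\]
```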

The above examples show the targeted use of data analytics and/or ML capabilities in individual fieldwork case studies. The literature also offers examples where data analytics has unlocked efficiency gains for IAFs. Eulerich et al. (2022) surveyed 268 auditors to assess the qualitative impact and effectiveness of technology-based audit techniques (TBATs), including data analytics, and found that the use of TBATs is positively associated with audit fieldwork effectiveness, consistent with the perceptions of auditors and Chief Audit Executives (CAEs). Their analysis also showed that TBAT use positively affects audit efficiency: a one standard deviation increase in TBAT usage is associated with a 13.5% decrease in the number of days needed to complete an audit.
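To give the Eulerich et al. (2022) coefficient a sense of scale, assume a hypothetical audit that would otherwise take 30 days and a hypothetical annual plan of 40 such audits; both figures are illustrative and do not come from the study. Because the finding is an association rather than a causal estimate, the result indicates an order of magnitude, not a predicted saving.

```latex
\[
\begin{aligned}
\Delta d &\approx 0.135 \times 30\ \text{days} = 4.05\ \text{days per audit} \\
d_{\text{new}} &\approx 30 - 4.05 = 25.95\ \text{days} \\
\text{Plan-level saving} &\approx 40 \times 4.05 = 162\ \text{audit days}
\end{aligned}
\]
```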

We therefore arrive at three interim conclusions for this segment:

The lack of explainability could hinder the adoption of advanced analytics capabilities, such as artificial intelligence, in audit fieldwork.

Sponsorship from senior stakeholders, including regulators, is paramount for scalable adoption.

Prevailing literature shows a preference for efficiency gains and full population testing as the desired outcomes of adopting data analytics within audit fieldwork.

Audit Reporting

Cardinaels et al. (2021) found that auditors felt less pressure when drawing their final conclusions if their test work was supported by data analytics, and that, as hypothesized, the use of analytics improves overall internal audit quality. Gao et al. (2020) reached a similar conclusion in their research on the impact of data analytics on audit quality. Perhaps the best empirical evidence in prior literature for the application of advanced data analytics within audit reporting comes from Stanisic et al. (2019), who show that a combination of statistical techniques and ML capabilities could be used to develop models that predict internal audit opinions with high accuracy and, importantly, interpretability. The empirical analysis in the Stanisic study was based on a hand-collected dataset of 13,561 pairs of audit reports, which makes its findings particularly impactful. Additionally, the Stanisic study was stratified across audit reports where prior client information was available and cases where such information was missing, supporting scalable adoption of the modeling approach across organizations. In all instances, the emphasis is on using these capabilities to generate efficiency gains for the IAF, i.e., reducing auditors’ manual effort, for example in producing the final version of audit reports prior to issuance.

In summary, prior academic literature and practical research offer plenty of promising examples where the application of ML and data analytics has proven impactful across the internal audit lifecycle. However, research also shows that the uptake of innovation is limited by a) awareness and b) a lack of training opportunities outside the “big four accounting firms” (Stratopoulos & Shields, 2018). Furthermore, T. Cao et al. (2021) suggest that a major source of auditors’ reluctance to consider bolder, more innovative uses of advanced analytics in audit delivery is the concern that regulatory authorities have yet to accept the efficacy of data analytics outputs across the audit lifecycle. Indeed, prior literature has recognized the important role played by regulators in the adoption of advanced analytics techniques and emerging technologies (Chatzigiannis et al., 2021; Locke & Bird, 2020; Warren & Smith, 2006; Wellmeyer, 2022), as well as the reasons why auditors do not adopt advanced data analytics capabilities (Krieger et al., 2021). Research has also explored how regulation could be built into complex business processes and emerging technology, such as decentralized finance (Auer, 2022). Wellmeyer (2022) found that, despite increasing investment in data [analytics] technologies, auditors continue to rely significantly on audit sampling techniques, citing “fear of inspection findings and lack of clarity from regulators/firms on testing full populations, cross-border data usage and independence issues” as key drivers of this approach. Additionally, Kipp et al. (2020) found that whilst advanced data analytics could identify a large population of exceptions (presumably as a result of full population testing), the risk of auditor liability for undetected misstatements is lowered only through the application of auditor experience and expertise. The Kipp et al. (2020) analysis complements the Wellmeyer (2022) findings and highlights the caution typically exercised by audit functions purely to mitigate liability, which may presumably arise from regulatory intervention or inspections.

Collectively, it is safe to conclude that whilst prior literature reinforces the message that internal audit innovation holds massive potential across the audit lifecycle, this potential is beset with challenges that require a considered approach to enable scalable adoption by IAFs across industry sectors and geographies (Butkė & Dagilienė, 2022; Eulerich et al., 2022; Hampton & Stratopoulos, 2016; Nonnenmacher & Gomez, 2021).

A FRAMEWORK FOR ML ON THE AUDIT LIFECYCLE

Prior research (Omidi Firouzi & Wang, 2020) has shown that ML and Artificial Intelligence not only have the potential to enrich every stage of the internal audit lifecycle, but could also disrupt the fundamentals of the traditional IAF lifecycle. The transformative impact of ML on the IAF should therefore be treated as a concept requiring constant evaluation and challenge, rather than as a one-off, anecdotal exercise.

Analysis of the prevailing literature across planning, fieldwork and reporting has yielded the interim conclusions set out in the previous section, which have been transposed into the following fishbone framework. The framework uses these conclusions to consider how the most appropriate machine learning and advanced data analytics techniques map to each stage of the audit lifecycle:

The above framework takes a relatively simple two-level structure, with the internal audit lifecycle forming the “spine”:

The “head” of the fishbone represents a constituent component of the internal audit lifecycle, and

The “branches” extending from the spine represent the proposed categories of ML/advanced data analytics.

The mapped ML/data analytics techniques:

Draw on the preliminary/interim conclusions identified from the literature review, as highlighted in bold in Section 2, and

Represent the most relevant approaches that can be effectively applied to generate efficiencies, objectivity, or full population testing, the three main value aspirations for these capabilities on the internal audit lifecycle identified in the literature review. The theory underlying these attributes is explained further in Section 4.
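The fishbone structure described above can be represented as a simple mapping from spine stages to branches. The sketch below is illustrative only: the stage names follow the lifecycle defined earlier in this paper, but the branch entries are populated solely with techniques named in the literature review and are an assumption for the example, not a reproduction of the framework figure.

```python
# Spine: stages of the internal audit lifecycle, in order (as defined earlier).
# Branches: illustrative technique categories drawn from the literature review;
# these entries are assumptions for the example, not the paper's framework figure.
FISHBONE = {
    "Assessing Risk Maturity": ["AI-based risk detection", "Fraud prediction"],
    "Periodic Audit Planning": ["Continuous auditing"],
    "Fieldwork": [
        "Classification", "Clustering", "Regression", "Association rules",
        "Text analysis", "Visualization", "Predictive process monitoring",
    ],
    "Reporting": ["Audit opinion prediction", "Generative AI (large language models)"],
}


def render_fishbone(fishbone):
    """Render the spine in lifecycle order with each stage's branches indented below it."""
    lines = []
    for stage, branches in fishbone.items():  # dicts preserve insertion order
        lines.append(stage)
        lines.extend(f"  - {branch}" for branch in branches)
    return "\n".join(lines)


print(render_fishbone(FISHBONE))
```

Keeping the mapping as data rather than as a static diagram makes it straightforward for an IAF to add, remove or re-order branches as the framework is refined in practice.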

That said, each IAF, and indeed each audit engagement, may present different nuances and challenges that prevent the effective delivery of one or more of the above stages; these could be examined in detail in a follow-up study. The rationale for this suggested framework is explained below:

The above framework should be considered a starting position for practitioners and researchers alike when considering the thematic adoption of innovation techniques at scale across an IAF’s audit portfolio. When applied consistently, the framework will naturally lend itself to further refinement and necessitate appropriate re-skilling of internal auditors, because nuances in each stage present practical challenges at the point of implementation. Additionally, this paper does not seek to offer practical guidance on implementing specific actions across each of the above components, as these would vary across organizations and rely heavily on senior stakeholder sponsorship. These aspects should therefore be considered avenues for future research on this subject.

MEASURING VALUE

As a synthesis of extant literature shows, traditional attempts to introduce innovation broadly exhibit three main value aspirations:

Full population testing - also referred to as assurance coverage. See Huang et al. (2022) and Butkė and Dagilienė (2022).

Audit efficiencies, through the reduction of manual effort brought about by the use of advanced descriptive analytics, machine learning and/or generative AI capabilities. See Eulerich et al. (2022).

Objectivity, through the reduction of an auditor’s confirmation bias, defined as the human tendency, once a potential explanation has been identified, to seek evidence that supports that preexisting conclusion whilst ignoring evidence that could disconfirm it (Luippold et al., 2015). The Bäßler and Eulerich (2023) analysis demonstrates how this can be achieved in practice, as does the Barr-Pulliam et al. (2020) analysis. Similarly, research by Mökander et al. (2023) and Emett et al. (2023) shows how Generative AI capabilities, such as large language models, can be used to drive objectivity within the audit lifecycle.

The common thread across the three objectives above is that they all serve to reduce the risk of an IAF expressing a flawed audit opinion, also known as audit risk (IIA, 2020). As a corollary, an innovation designed to meet at least one of the above objectives should be prioritized for delivery by the IAF.
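The prioritization rule above can be expressed as a small filter over an innovation backlog. In this minimal sketch, each hypothetical initiative is tagged with the value aspirations it is designed to meet, and initiatives meeting none are dropped, as the rule implies. Ordering the remaining initiatives by the number of aspirations met is an added assumption, not part of the rule stated in the text.

```python
from dataclasses import dataclass

# The three value aspirations identified from the literature.
VALUE_ASPIRATIONS = frozenset({"coverage", "efficiency", "objectivity"})


@dataclass(frozen=True)
class Initiative:
    name: str
    targets: frozenset  # subset of VALUE_ASPIRATIONS the initiative is designed to meet


def prioritize(initiatives):
    """Keep initiatives that meet at least one aspiration, most aspirations first.

    Ties are broken by name so the ordering is deterministic.
    """
    for item in initiatives:
        unknown = item.targets - VALUE_ASPIRATIONS
        if unknown:
            raise ValueError(f"{item.name}: unknown aspiration(s) {sorted(unknown)}")
    eligible = [item for item in initiatives if item.targets]
    return sorted(eligible, key=lambda item: (-len(item.targets), item.name))


# Hypothetical backlog for illustration.
BACKLOG = [
    Initiative("Full-population payments test", frozenset({"coverage", "efficiency"})),
    Initiative("LLM-assisted report drafting", frozenset({"efficiency"})),
    Initiative("Dashboard redesign", frozenset()),
    Initiative("Predictive control-failure model", frozenset({"objectivity", "coverage"})),
]

for item in prioritize(BACKLOG):
    print(item.name, sorted(item.targets))
```

Validating the tags against the fixed set of three aspirations keeps the backlog honest: an initiative cannot be prioritized on the strength of a value claim that falls outside the framework.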

Finally, the profession should not lose sight of the fact that the impact of AI on Internal Audit presents not only a fantastic opportunity to rethink the fundamentals of the internal audit lifecycle, but also an opportunity to recalibrate the skillsets of the internal auditor, a position reiterated by previous research (see Ruhnke, 2022). By continuously honing these skills and embracing the transformative potential of emerging technologies, internal auditors can thrive in an ever-evolving business landscape and contribute to the success of their organizations.

CONCLUDING REMARKS

This paper should be viewed as a starting point for detailed experimental research and practical implementation of advanced data analytics and/or machine learning capabilities across the audit lifecycle. Empirical studies building on the above theoretical framework should be undertaken to assess actual value-add and identify improvement opportunities.

It is also clear that IAFs should invest in advanced skillsets to unlock value from the ML capabilities discussed in this paper, and ensure the “explainability” of any implementations at senior leadership level to secure ongoing sponsorship. Whilst specialists might be an obvious starting point for implementation efforts, scalable implementation across an IAF will require auditors to develop at least a basic understanding of key ML concepts so that they can contribute to the innovation journey. Indeed, the concepts raised in this paper will require significant upskilling by IAFs over the short to long term. To some extent, “low code” analytics software can support audit professionals along this learning curve; however, it will not absolve auditors of the need for a solid fundamental understanding of machine learning concepts to fully appreciate the possibilities at each stage of the assurance lifecycle. IAFs should therefore earnestly incorporate Data Analytics and ML fundamentals into in-house training programs, to enable auditors to upskill for the future.

ACKNOWLEDGMENTS

Dr. Andrea Bracciali, University of Stirling (the author’s academic supervisor on the Professional Doctorate in Data Science Programme)

DISCLOSURE STATEMENT

No potential conflict of interest was reported by the author(s).

REFERENCES

- Arzhenovskiy, S. V., Bakhteev, A. V., Sinyavskaya, T. G., & Hahonova, N. N. (2019). Audit risk assessment model. International Journal of Economics & Business Administration, 7(1), 74–85. Retrieved December 20, 2023, from https://doi.org/10.35808/ijeba/253

- Auer, R. (2022). Embedded supervision: How to build regulation into decentralised finance (CESifo Working Paper No. 9771). Retrieved August 12, 2023, from http://dx.doi.org/10.2139/ssrn.4127658

- Austin, A. A., Carpenter, T., Christ, M. H., & Nielson, C. (2020). The data analytics journey: Interactions among auditors, managers, regulation, and technology. Contemporary Accounting Research, 38(3), 1, 3, 7. Retrieved July 31, 2023, from https://doi.org/10.2139/ssrn.3214140

- Awuah, B., Onumah, J. M., & Duho, K. C. T. (2021). Information technology adoption within internal auditing in Ghana: Empirical analysis (Dataking Working Paper Series No. WP2021-04-04). Retrieved August 7, 2023, from http://dx.doi.org/10.2139/ssrn.3824403

- Barr-Pulliam, D., Brazel, J. F., McCallen, J., & Walker, K. (2020). Data analytics and skeptical actions: The countervailing effects of false positives and consistent rewards for skepticism. 4–6. Retrieved July 31, 2023, from https://doi.org/10.2139/ssrn.3537180

- Bäßler, T., & Eulerich, M. (2023). Predictive process monitoring for internal audit: Forecasting payment punctuality from the perspective of the three lines model. 6–7, 14–19. Retrieved July 31, 2023, from http://dx.doi.org/10.2139/ssrn.4080238

- Bertomeu, J., Cheynel, E., Floyd, E., & Pan, W. (2019). Using machine learning to detect misstatements. Review of Accounting Studies. Retrieved August 12, 2023, from https://ssrn.com/abstract=3496297

- Betti, N., & Sarens, G. (2021). Understanding the internal audit function in a digitalised business environment. Journal of Accounting & Organizational Change, 17(2), 197–216. Retrieved August 8, 2023, from https://doi.org/10.1108/JAOC-11-2019-0114

- Bibler, S., Carpenter, T., Christ, M., & Gold, A. (2023). Thinking outside of the box: Engaging auditors’ innovation mindset to improve auditors’ fraud actions in a data-analytic environment (Foundation for Auditing Research (2023/03-13) Working Paper). Retrieved August 12, 2023, from https://foundationforauditingresearch.org/files/papers/2023-03-13-far-wp-gold2019b02-full.pdf

- Butkė, K., & Dagilienė, L. (2022). The interplay between traditional and big data analytic tools in financial audit procedures. SSRN Electronic Journal, 5, 17–19. Retrieved August 7, 2023, from https://doi.org/10.2139/ssrn.4239405

- Cagle, M. (2020). A mapping analysis of blockchain applications within the field of auditing. The World of Accounting Science, 22(4), 695–724. Retrieved August 7, 2023, from https://ssrn.com/abstract=3878577

- Cangemi, M. P. (2013). The new era of risk management: Collaboration across the business, risk, compliance and audit. The EDP Audit, Control, and Security Newsletter, 48(3), 14–16. Abstract only from Taylor & Francis Online. Retrieved July 22, 2023, from https://doi.org/10.1080/07366981.2013.830912

- Cao, S. S., Cong, L., & Yang, B. (2019). Financial reporting and blockchains: Audit pricing, misstatements, and regulation. Retrieved August 7, 2023, from http://dx.doi.org/10.2139/ssrn.3248002

- Cao, T., Duh, R.-R., Tan, H.-T., & Xu, T. (2021). Enhancing auditors’ reliance on data analytics under inspection risk using fixed and growth mindsets. Retrieved July 31, 2023, from https://doi.org/10.2139/ssrn.3850527

- Cardinaels, E., Eulerich, M., & Salimi Sofla, A. (2021). Data analytics, pressure, and self-determination: Experimental evidence from internal auditors. SSRN Electronic Journal, 3–7, 22–24. Retrieved August 6, 2023, from http://dx.doi.org/10.2139/ssrn.3895796

- Castanheira, N., Lima Rodrigues, L., & Craig, R. (2010). Factors associated with the adoption of risk‐based internal auditing. Managerial Auditing Journal, 25(1), 79–98. Abstract only from Emerald. Retrieved July 22, 2023, from https://doi.org/10.1108/02686901011007315

- Chatzigiannis, P., Baldimtsi, F., & Chalkias, K. (2021, June 21–24). Applied cryptography and network security: 19th international conference, ACNS 2021. Proceedings, Part II (pp. 311–337). Retrieved August 12, 2023, from https://doi.org/10.1007/978-3-030-78375-4_13

- Chiu, T., & Jans, M. J. (2017). Process mining of event logs: A case study evaluating internal control effectiveness. 16–22. Retrieved August 12, 2023, from http://dx.doi.org/10.2139/ssrn.3136043

- Coetzee, P., & Lubbe, D. (2013). Improving the efficiency and effectiveness of risk-based internal audit engagements. International Journal of Auditing. Abstract only from Wiley Online. Retrieved July 22, 2023, from https://doi.org/10.1111/ijau.12016

- Dai, J., & Vasarhelyi, M. A. (2017). Toward blockchain-based accounting and assurance. Journal of Information Systems, 31(3), 5–21. Retrieved August 12, 2023, from https://doi.org/10.2308/isys-51804

- Eilifsen, A., Kinserdal, F., Messier, W. F., Jr., & McKee, T. (2020). An exploratory study into the use of audit data analytics on audit engagements. Accounting Horizons, 34(4), 3–4, 17–21. Retrieved July 31, 2023, from https://doi.org/10.2139/ssrn.3458485

- Emett, S. A., Eulerich, M., Lipinski, E., Prien, N., & Wood, D. A. (2023). Leveraging ChatGPT for enhancing the internal audit process – A real-world example from a large multinational company. Retrieved August 8, 2023, from https://doi.org/10.2139/ssrn.4514238

- Eulerich, M., Georgi, C., & Schmidt, A. (2020). Continuous auditing and risk-based audit planning. 4, 7, 10–12. Retrieved August 7, 2023, from http://dx.doi.org/10.2139/ssrn.3330570

- Eulerich, M., Masli, A., Pickerd, J. S., & Wood, D. A. (2022). The impact of audit technology on audit task outcomes: Evidence for technology-based audit techniques. 3–7, 16–20. Retrieved August 6, 2023, from http://dx.doi.org/10.2139/ssrn.3444119

- Eulerich, M., Pawlowski, J., Waddoups, N., & Wood, D. A. (2021). A framework for using robotic process automation for audit tasks. Contemporary Accounting Research. Retrieved August 12, 2023, from https://ssrn.com/abstract=3904734

- Fuller, S., & Markelevich, A. J. (2019). Should accountants care about blockchain? Retrieved August 7, 2023, from https://doi.org/10.2139/ssrn.3447534

- Gao, R., Huang, S., & Wang, R. (2020). Data analytics and audit quality (Research Paper No. 2022-151). Singapore Management University School of Accountancy. 2–3, 14–15. Retrieved August 6, 2023, from http://dx.doi.org/10.2139/ssrn.3928355

- Hampton, C., & Stratopoulos, T. C. (2016). Audit data analytics use: An exploratory analysis. 3, 16–17. Retrieved August 6, 2023, from https://doi.org/10.2139/ssrn.2877358

- Houser, K., & Sanders, D. (2018). The use of big data analytics by the IRS: What tax practitioners need to know. Journal of Taxation, 128(2), 4–5. Retrieved July 31, 2023, from https://ssrn.com/abstract=3120741

- Huang, F., No, W. G., Vasarhelyi, M., & Yan, Z. (2022). Audit data analytics, machine learning, and full population testing. 6–8. Retrieved August 6, 2023, from https://doi.org/10.2139/ssrn.4033165

- Imoniana, J. O., & Gartner, I. R. (2008). Towards a multi-criteria approach to corporate auditing risk assessment in Brazilian context. Retrieved August 8, 2023, from https://doi.org/10.2139/ssrn.1095950

- The Institute of Internal Auditors. (2020). The IIA’s three lines model: An update of the three lines of defence. 3–6. Retrieved July 22, 2023, from https://www.theiia.org/globalassets/documents/resources/the-iias-three-lines-model-an-update-of-the-three-lines-of-defense-july-2020/three-lines-model-updated-english.pdf

- The Institute of Internal Auditors. (2023a). Annual internal audit coverage plans. 2–4. Retrieved July 22, 2023.

- The Institute of Internal Auditors. (2023b). Risk based internal auditing. 1–3. Retrieved July 22, 2023, from https://www.iia.org.uk/resources/risk-management/risk-based-internal-auditing/doing-a-risk-based-audit/

- Kahyaoglu, S. B., & Aksoy, T. (2021). Artificial intelligence in internal audit and risk assessment. In Financial ecosystem and strategy in the digital era. Contributions to Finance and Accounting. Springer. Retrieved November 24, 2023, from https://doi.org/10.1007/978-3-030-72624-9_8

- Kipp, P., Olvera, R., Robertson, J. C., & Vinson, J. (2020). Examining algorithm aversion in jurors’ assessments of auditor negligence: Audit data analytic exception follow up with artificial intelligence. https://ssrn.com/abstract=3775740

- Kotb, A., Elbardan, H., & Halabi, H. (2020). Mapping of internal audit research: A post-Enron structured literature review. Accounting, Auditing & Accountability Journal, 33(8), 1969–1996. Retrieved August 8, 2023, from https://doi.org/10.1108/AAAJ-07-2018-3581

- Krieger, F., Drews, P., & Velte, P. (2021). Explaining the (non-) adoption of advanced data analytics in auditing: A process theory. International Journal of Accounting Information Systems, 41, 100511. Retrieved August 8, 2023, from https://doi.org/10.1016/j.accinf.2021.100511

- Lehmann, D., & Thor, M. (2020). The next generation of internal audit: Harnessing value from innovation and transformation. The CPA Journal. Retrieved July 23, 2023, from https://www.cpajournal.com/2020/02/18/the-next-generation-of-internal-audit/

- Locke, N., & Bird, H. L. (2020). Perspectives on the current and imagined role of artificial intelligence and technology in corporate governance practice and regulation. Australian Journal of Corporate Law. Retrieved August 12, 2023, from https://ssrn.com/abstract=3534898

- Luippold, B. L., Perreault, S., & Wainberg, J. (2015). 5 ways to overcome confirmation bias. Journal of Accountancy. Retrieved November 24, 2023, from https://www.journalofaccountancy.com/issues/2015/feb/how-to-overcome-confirmation-bias.html

- Mökander, J., Schuett, J., Kirk, H. R., & Floridi, L. (2023). Auditing large language models: A three‑layered approach. AI and Ethics. Retrieved December 20, 2023, from https://doi.org/10.1007/s43681-023-00289-2

- Munevar, K., & Patatoukas, P. N. (2020). The blockchain evolution and revolution of accounting. Information for Efficient Decision Making: Big Data, Blockchain and Relevance, 1, 5, 19. Retrieved August 12, 2023, from https://doi.org/10.2139/ssrn.3681654

- Nelson, M. W. (2009). A model and literature review of professional skepticism in auditing. Auditing: A Journal of Practice & Theory, 28(2), 1–34. Retrieved November 19, 2023, from https://doi.org/10.2308/aud.2009.28.2.1

- Nonnenmacher, J., & Gomez, J. M. (2021). Unsupervised anomaly detection for internal auditing: Literature review and research agenda. International Journal of Digital Accounting Research, 21, 1–22. Retrieved August 6, 2023, from https://doi.org/10.4192/1577-8517-v21_1

- Omidi Firouzi, H., & Wang, S. (2020). A fresh look at internal audit framework at the age of Artificial Intelligence (AI). Retrieved August 12, 2023, from http://dx.doi.org/10.2139/ssrn.3595389

- Perols, J., Bowen, R. M., Zimmermann, C., & Samba, B. (2015). Finding needles in a haystack: Using data analytics to improve fraud prediction. 1, 10–20. Retrieved August 12, 2023, from https://doi.org/10.2139/ssrn.2590588

- Rakipi, R., De Santis, F., & D’Onza, G. (2021). Correlates of the internal audit function’s use of data analytics in the big data era: Global evidence. Journal of International Accounting, Auditing & Taxation, 42, 100357. Abstract only from Science Direct. Retrieved July 22, 2023, from https://doi.org/10.1016/j.intaccaudtax.2020.100357

- Roussy, M., Barbe, O., & Raimbault, S. (2020). Internal audit: From effectiveness to organizational significance. Managerial Auditing Journal, 35(2), 322–342. Abstract only from Emerald. Retrieved July 22, 2023, from https://doi.org/10.1108/MAJ-01-2019-2162

- Ruhnke, K. (2022). Empirical research frameworks in a changing world: The case of audit data analytics. Journal of International Accounting, Auditing and Taxation, 51, 100545. Retrieved August 7, 2023, from https://doi.org/10.2139/ssrn.3941961

- Stanisic, N., Radojevic, T., & Stanic, N. (2019). Predicting the type of auditor opinion: Statistics, machine learning, or a combination of the two? The European Journal of Applied Economics, 16(2), 1–58. Retrieved August 7, 2023, from https://doi.org/10.5937/EJAE16-21832

- Stratopoulos, T. C., & Shields, G. (2018). Basic audit data analytics with R. SSRN Electronic Journal. Abstract only from SSRN. Retrieved July 31, 2023, from https://doi.org/10.2139/ssrn.3142207

- Taft, J. P., Webster, M. S., Bisanz, M., & Tsai, J. (2020). The blurred lines of organizational risk management. Retrieved November 24, 2023, from https://www.mayerbrown.com/en/perspectives-events/publications/2020/07/the-blurred-lines-of-organizational-risk-management

- Tysiac, K. (2022). A refreshed focus on risk assessment. Journal of Accountancy. Retrieved December 20, 2023, from https://www.journalofaccountancy.com/issues/2022/jan/refreshed-focus-risk-assessment.html

- Warren, J. D., & Smith, M. (2006). Continuous auditing: An effective tool for internal auditors. Internal Auditing, 21(2), 27–35. Retrieved August 12, 2023, from https://ssrn.com/abstract=905144

- Wellmeyer, P. (2022). In the era of audit data analytics, what’s happened to audit sampling? Retrieved August 12, 2023, from http://dx.doi.org/10.2139/ssrn.3690569

- Western Illinois University. (n.d.). Internal auditing > value added audit services > risk assessment process. Retrieved October 5, 2023, from https://www.wiu.edu/internal_auditing/value_added_audit_services/risk.php