1. Introduction and motivation

Due to significant technological advances in the field of artificial intelligence (AI), which are driven by increased computing power, the ubiquitous availability of data, as well as new algorithms, new forms of intelligent systems and services have been developed and brought to the market (Choudhury et al., Citation2020; Clark et al., Citation2019a; Diederich et al., Citation2022; Kaplan & Haenlein, Citation2019; Ransbotham et al., Citation2018; Robert et al., Citation2020; Rzepka & Berger, Citation2018). In addition to specific applications in the form of virtual assistants, such as Apple’s Siri or Amazon’s Alexa, companies increasingly develop chatbots and enterprise bots to interact with customers (Diederich et al., Citation2022; Maedche et al., Citation2016; McTear et al., Citation2016). What all these systems have in common is that they allow their users to interact with them using natural language, which is why the systems are summarized by the term conversational agent (CA) (Diederich et al., Citation2022; McTear et al., Citation2016). There are already various use cases for CAs today, ranging from executing smartphone functions, such as creating calendar entries or sending messages, to smart home control and interaction in the healthcare context (Ahmad et al., Citation2022; Elshan et al., Citation2022; Gnewuch et al., Citation2017; McTear et al., Citation2016; Sin & Munteanu, Citation2020). Thus, CAs currently offer a new way of interacting with information technology (Morana et al., Citation2017). Recent literature reviews show a growing interest in CAs and AI-enabled systems (Diederich et al., Citation2022; Elshan et al., Citation2022; Nißen et al., Citation2021; Rzepka & Berger, Citation2018), but also a limited variety of application contexts, which mostly focus on short-term interactions in marketing, sales, and support. 
Application scenarios that require long-term interaction are available but under-researched (Diederich et al., Citation2022; Elshan et al., Citation2022). Additionally, the current applications show that the main goal of CAs is to provide personal assistant functionality, while less attention goes to the actual interaction with the system, which should be improved by incorporating social behaviors (Elshan et al., Citation2022; Gnewuch et al., Citation2017; Krämer et al., Citation2011; Nißen et al., Citation2021; Rzepka & Berger, Citation2018). Most of these interactions are initiated by the user and not by the CA, which means that the CA acts reactively rather than proactively. Moreover, these interactions are isolated, transactional, and based on predefined paths, as if they were starting over every time (Seymour et al., Citation2018). Although presently, from a technological perspective, CAs can predominantly conduct restricted conversations related to a specific topic (Diederich et al., Citation2022), modern language prediction models such as the Generative Pre-trained Transformer 3 (GPT-3) are able to fundamentally expand the capabilities of CAs. They achieve this by enabling open-topic and richer conversations with strong interpersonal character (Brown et al., Citation2020). GPT-3 and many other recent language models are built on the Transformer (Vaswani et al., Citation2017), a neural network architecture invented by Google Research in 2017. Google’s recent language model LaMDA shows how human-like ways of interacting can be achieved by enabling open-topic conversations based on a modern autoregressive language model (a Transformer-based deep learning neural network). 
Google argues that although conversations “tend to revolve around a specific topic, their open-ended nature means they can start in one place and end up somewhere completely different.”Footnote1 Especially when a person is talking to a friend instead of an assistant, the open-ended nature of conversation is highly important to ensure a co-equal discussion (Collins & Ghahramani, Citation2021).

As research has shown, humans often treat machines like social actors and mindlessly apply social rules and expectations to them (Nass & Moon, Citation2000; Nass et al., Citation1994). If machines are designed to act in a human-like manner, interpersonal relationships can be established when long-term interactions with them take place. Thus, incorporating theories of interpersonal relationships in the design of human-machine interaction can lead to more natural interaction and prompt requirements for a collaborative and benevolent relationship between humans and machines (Krämer et al., Citation2011; Lee et al., Citation2021; Qiu & Benbasat, Citation2009). Prior research has already discussed different characteristics for establishing long-term relationships between humans and machines by incorporating findings from social interaction studies (Bickmore & Picard, Citation2005; Danilava et al., Citation2012; Krämer et al., Citation2011; Seymour et al., Citation2018). Research shows that social relationships are important conditions that contribute to psychological well-being (Krämer et al., Citation2011). Companionship, a special form of friendship, is perceived as an important aspect to strengthen psychological well-being (Rook, Citation1987). Companionship is described as having someone familiar with whom you like spending time, while a relationship is characterized by an inner and intimate bond, an intense affection, and a high emotional connection (Bukowski et al., Citation1993, Citation1994; Mendelson & Aboud, Citation2012). Therefore, companions differ significantly from assistants, who are not co-equal, do not elicit feelings of closeness, do not form long-term social bonds, and do not behave proactively (Bukowski et al., Citation1994; Krämer et al., Citation2011; Seymour et al., Citation2018). 
In human-computer interaction, many researchers believe that systems with social and affective behaviors are perceived as more natural and effective when people interact with them (Heerink et al., Citation2010; Kanda et al., Citation2004; Nass et al., Citation1995; Tsiourti, Citation2018; Young et al., Citation2008). Further, these kinds of behavior bring an increased sense of credibility, reliability, and authenticity of the system (Becker et al., Citation2007). Krämer et al. (Citation2011) address the aspect of sociability and especially companionship within CAs as “[…] it becomes more and more feasible that artificial entities like robots or agents will soon be parts of our daily lives.” (Krämer et al., Citation2011, p. 474).

Today, there are already several CAs with companionship characteristics aimed at building long-term relationships with their users. Replika,Footnote2 for example, is an AI companion, as the providers themselves call it, which specializes in extended conversations and long-term relationships to provide its users with an omnipresent friend. Microsoft has developed XiaoIce,Footnote3 a service they call an AI companion that aims to establish an emotional connection with its users in order “[…] to satisfy the human need for communication, affection, and social belonging.” (Zhou et al., Citation2020, p. 53). Although both systems are very successful (Replika has 7 million users and XiaoIce has around 660 million users), the design decisions made to build a long-term relationship have not been studied and scientifically derived (Zhou et al., Citation2020). There is already research (Bickmore & Picard, Citation2005; Vardoulakis et al., Citation2012) investigating the use of companionship in CAs to establish a long-term relationship with their users. However, prescriptive knowledge, which is transferable to different instances, is especially required by designers of different CAs developed to incorporate aspects of companionship (Diederich et al., Citation2022; McTear, Citation2018). Prescriptive design knowledge, such as design principles and design theories, adds value by providing recommendations to all stakeholders involved in designing and developing CA instantiations (Gregor & Jones, Citation2007; Gregor et al., Citation2020). With theory, and design theory specifically, one can make distinctions and order how we understand phenomena, form interpretations and explanations, and develop informative accounts for researchers and practitioners (Cornelissen et al., Citation2021). 
Hence, the primary objective of this paper is to address our lacking insight by constructing a design theory for so-called virtual companionship (VCS) that can help to build CAs equipped with aspects of companionship, where companionship is identified as a beneficial added value. With VCS, the human-machine interaction can be enhanced, because it encourages longer-lasting and more intuitive conversations (Bickmore & Picard, Citation2005; Gnewuch et al., Citation2017; Seymour et al., Citation2018), increases the likability and interpersonal trust users have in a system (Bickmore & Picard, Citation2005), and builds a foundation to enable collaborative scenarios between humans and machines (Li et al., Citation2013; Seeber et al., Citation2019). In building a theoretical framework for VCS, we first turn to basic human-human interaction theories and discuss their applicability to human-machine interaction, as these provide us with a starting point to explain VCS (Krämer et al., Citation2011, Citation2015). After all, humans inevitably exhibit human patterns in their communication with machines (Nass & Moon, Citation2000; Nass et al., Citation1994). Following Krämer et al.’s (Citation2011) prior work on VCS, however, we do not see designing virtual companions to replicate humans as an ideal, since machines that are too human-like can have negative perceptual effects (Mori, Citation2012; Seymour et al., Citation2021). Rather, we focus on integrating individual social elements on a well-balanced level to enrich the interaction with CAs (Feine et al., Citation2019; Seeger et al., Citation2018).

Building on these insights, our research pursues four objectives, namely (1) to derive theoretically grounded design principles for VCS that can be applied to CAs, summarized as a nascent design theory (Gregor & Hevner, Citation2013; Gregor & Jones, Citation2007), (2) to instantiate the theoretically grounded design principles in the form of artifacts and to evaluate these artifacts, (3) to analyze existing CAs that incorporate companionship characteristics, to determine the suitability of our proposed design principles, and (4) to derive design features that guide the translation of the proposed design principles into instantiated artifacts.

The nascent design theory thus serves the purpose of creating design knowledge, and aims to address our overarching design-oriented research question (RQ) (Thuan et al., Citation2019):

RQ: How is virtual companionship made manifest and how should it be designed?

To address this research question, we define two more narrowly focused sub-questions (SQs) that address the aspect of how virtual companionship should be designed and how it makes itself manifest:

SQ1: Which requirements, design principles, and design features establish virtual companionship?

SQ2: How do CAs demonstrate companionship elements and how do users perceive them?

In answering the two SQs, we find answers to the overarching RQ and contribute to constructing our nascent design theory. We followed a systematic and iterative design science research (DSR) approach (Hevner et al., Citation2004) in developing our VCS design theory, relying on the adapted process model proposed by Kuechler & Vaishnavi (Citation2008), which emphasizes the contribution and knowledge generation of DSR. After theoretically deriving the design principles for VCS, we developed an instantiation (Sarah) which we then evaluated in a within-subject design experiment. Since design principles are prescriptive statements in a means-end relationship, an experimental study is necessary to empirically demonstrate the design decisions’ effects in evaluating the prescriptive statements (Gregor & Jones, Citation2007; Gregor et al., Citation2020). In a second study, we examined Replika’s features and functionality based on the previously derived principles for VCS design. Next, eight design experts provided feedback on the extent to which Replika meets the design principles we established and which design features are used. This mixed-method approach combines quantitative and qualitative research methods to “develop rich insights into various phenomena of interest that cannot be fully understood using only a quantitative or a qualitative method” (Venkatesh et al., Citation2013, p. 22). Thus, this approach enables the evaluation of the design principles and the development of our nascent design theory. Further, with this mixed-method approach, we aimed to evaluate both a self-designed virtual companion (Sarah) and an existing solution (Replika). Thus, the limitations of the two approaches (Sarah’s potential low external validity and Replika’s potential low internal validity) can be weighed and the results can be properly combined. 
Sarah is a designed expository instantiation following Gregor and Jones (Citation2007) that shows how the design principles can be instantiated. Furthermore, causal statements based on the empirical findings regarding the means-end relationship of our design principles can be made through the within-subject experiment. Through the design experts’ evaluation of Replika, our prescriptions can be further extended with concrete design features. Our nascent design theory eventually contributes to a better understanding of an emerging phenomenon (i.e., VCS) by conceptualizing, describing, explaining, and informing us on characteristics, distinctions, as well as design decisions and their impact and antecedents related to VCS (Gregor, Citation2006; Sandberg & Alvesson, Citation2021).

2. Theoretical foundations

2.1. Conversational agents

CAs are systems that allow their users to interact with them using natural language (Gnewuch et al., Citation2017; McTear et al., Citation2016). The development of CAs started in the 1960s with, for example, the chatbot ELIZA (Weizenbaum, Citation1966), one of the first text-based CAs (Shah et al., Citation2016). While in the past chatbots worked using simple pattern matching, chatbots today are more intelligent due to developments in the fields of machine learning and natural language processing (Knijnenburg & Willemsen, Citation2016; McTear et al., Citation2016; Shawar & Atwell, Citation2007). Public interest in CAs especially grew after Apple’s Siri and later Amazon’s Alexa or the Google Assistant were introduced, since these brought virtual assistants to the smartphones and homes of large numbers of people (Diederich et al., Citation2019a; Maedche et al., Citation2016; McTear et al., Citation2016). One of the differences between chatbots and virtual assistants is the input/output modality. While chatbots are mostly text-based, virtual assistants usually communicate using spoken language (Gnewuch et al., Citation2018b). Chatbots are often used for domain-specific purposes, for example, in giving customer support, and are mostly used via text-based input (Diederich et al., Citation2019b; Gnewuch et al., Citation2017). There are also interactive voice response interfaces which are chatbot applications that enable interaction via speech and are used, for example, in telephone hotlines (Clark et al., Citation2019a). The technological progress through AI has made it possible to interact with a CA using natural language and not only a command-based language that uses only predefined commands (Diederich et al., Citation2022; Gnewuch et al., Citation2017; Rzepka & Berger, Citation2018).

These developments enabled virtual assistants such as Siri and Alexa, whose primary function is to assist their users (Gnewuch et al., Citation2017; McTear, Citation2017). Virtual assistants are often used with speech, even if not exclusively so (Gnewuch et al., Citation2017). Virtual assistants differ from chatbots in their general-purpose approach; for example, they assist users in performing daily tasks (Gnewuch et al., Citation2017; Guzman, Citation2017). Illustrative current use cases are smartphones that execute functions such as creating calendar entries, sending messages, or asking for the weather forecast. Thus, virtual assistants offer a new way of interacting with information systems (Morana et al., Citation2017). However, as stated before, they do so only by providing personal assistant functionalities, without social behaviors being included in the actual interaction with the system (Gnewuch et al., Citation2017; Krämer et al., Citation2011; Rzepka & Berger, Citation2018). Table 1 below gives an overview of the distinct terms.

Table 1. Relevant definitions.

Gnewuch et al.’s (Citation2017) definition of a virtual assistant, given above, restricts CAs to their pure assistance services, e.g., giving support in entering calendar entries or navigating web pages (McTear et al., Citation2016). However, recent research shows that machines are capable of providing more than assistance services, especially in building interpersonal relationships. Several studies have shown that machines are able to build relationships with humans, for example, when users develop feelings of trust toward CAs and establish a long-term relationship with them (Benbasat & Wang, Citation2005; Hildebrand & Bergner, Citation2021; Krämer et al., Citation2011; Lee et al., Citation2021). Thus, the evolution from virtual assistant to virtual companion is particularly notable in the fact that the CA focuses on developing a relationship with its user.

The evolution of CAs from being simple virtual assistants or chatbots to virtual companions designed to have a benevolent and long-term relationship with humans can be explained primarily by the fact that humans often mindlessly apply the same social heuristics used in human interaction to CAs when these display characteristics similar to those of humans (Moon, Citation2000). The evolutionary steps in the development from CA to virtual assistant to virtual companion are illustrated in . Notably, chatbots and virtual assistants can be enhanced by adding characteristics and principles of the VCS.

2.2. Computers are social actors

Nass and Moon’s (Citation2000) work provides the foundation for reflection on the social behavior of CAs. They demonstrated that humans apply social rules in their interaction with machines, and that machines can incorporate such social behavior. For example, in an experiment involving a computer tutor with a human voice, Nass et al. (Citation1994) found that users respond to different voices produced by the same computer as if they were distinct social actors and to the same voice as if it were the same social actor, regardless of whether the voice came from the same or a different computer. These findings prompted the formation of the “Computers are Social Actors” (CASA) paradigm, also referred to as the “social response theory” (Nass & Moon, Citation2000; Nass et al., Citation1994). Further findings have established that if the machine has features normally associated with humans, such as interactivity, the use of natural language, or human-like appearance, users respond with social behavior and social attributions (Moon, Citation2000). Since such human-like design can amplify humans’ social responses, interactions between individuals and computers have the possibility of being social (Feine et al., Citation2019; Nass & Moon, Citation2000; Nass et al., Citation1994). Humans do this because they are accustomed to only humans showing social behavior. Notably, the CASA paradigm does not claim that users regard machines as human beings. Nass and Moon (Citation2000) emphasize that users are aware that their interlocutors are machines. Even so, users behave socially toward them, thus indicating “a response to an entity as a human while knowing that the entity does not warrant human treatment or attribution” (Nass & Moon, Citation2000, p. 94). 
Such behavior, referred to as ethopoeia, was also witnessed in that people showed politeness to machines so that the interaction with them closely resembled an equal relationship in which reciprocity was evident (Moon, Citation2000; Nass & Moon, Citation2000). If a computer acts socially, humans mindlessly apply known social rules in the interaction with the computer as well (Fogg, Citation2002; Nass & Moon, Citation2000). Thus, elements of social conventions that typically guide interpersonal behavior can also be applied to human-machine interaction (Moon, Citation2000).

Although Nass and Moon (Citation2000) refer to human response to machines’ actions in general, it has since been determined that the CASA paradigm is also applicable to CAs in particular (Porcheron et al., Citation2018; Reeves et al., Citation2018). In the case of speech-based CAs such as Amazon’s Echo, their ubiquity in the household even leads to family members competing to control the Echo during dinner, illustrating how the technology triggers social behavior in users (Porcheron et al., Citation2018). This emphasizes the importance of the CASA theory as one of the most significant theories in the context of CA research (Diederich et al., Citation2022). Social reactions to a CA’s behavior can be enhanced if the CA exhibits human-like behavior in giving social cues (Feine et al., Citation2019; Seeger et al., Citation2018). Many users perceive the integration of such human-like elements as pleasant (Feine et al., Citation2019). For example, Gong’s (Citation2008) results show that the more human-like the CA, the more naturally users responded to the CA’s interaction behavior. Further, integrating social cues can encourage the establishment of a trusting relationship (Bickmore & Picard, Citation2005; De Visser et al., Citation2016), which leads to CAs being seen as reliable and composed. Users particularly trusted the CA’s opinions when they had to take important decisions (Gong, Citation2008). Using Amazon’s Alexa illustratively, Doyle et al. (Citation2019) define dimensions for implementing human-likeness in speech-based CAs, which include establishing a social bond with the user or utilizing human-like linguistic content in communication. This highlights the feasibility of anthropomorphic CAs. Still, we need to note that significant differences remain between how humans communicate with one another and how humans and CAs communicate (Doyle et al., Citation2019). 
Furthermore, human-like design features should be used thoughtfully in CAs (Diederich et al., Citation2022; Seeger et al., Citation2018), as anthropomorphic design can also be perceived negatively (Doyle et al., Citation2021, Citation2019; Mori, Citation2012). A number of studies emphasize the CASA paradigm’s importance and apply the theory to a variety of different CAs with a view to designing a human-machine relationship (Kim et al., Citation2013; Lee & Choi, Citation2017; Pfeuffer et al., Citation2019b; Qiu & Benbasat, Citation2010). Thus, we find the CASA paradigm appropriate to gain an understanding of how people interact with CAs and how relationships can be built between humans and CAs.

2.3. Theories of interpersonal relationship

Humans have a fundamental need to develop long-term interpersonal relationships (Baumeister & Leary, Citation1995; Cacioppo & Patrick, Citation2008; Deci & Ryan, Citation1985; Krämer et al., Citation2012). Long-term studies such as Bickmore and Picard (Citation2005) show that CAs are capable of building relationships and thus satisfying this need. Such studies then demonstrate increased perception of these interactions. An interpersonal relationship is an advanced and close connection between two or more persons that can take on different forms, such as friendship, marriage, neighborliness, or companionship (Kelley & Thibaut, Citation1978). As an important and pervasive human need, meaningful interpersonal relationships arise over time and exist when the probable course of future interactions differs from such courses between strangers (Hinde, Citation1979). Depending on the nature of the relationship, a number of theories have been established in relationship science, i.e., in the study of interpersonal relationships (Berscheid, Citation1999). This field has long been well researched. We consider selected theories of this kind that are most relevant to companionship (Krämer et al., Citation2011, Citation2012; Skjuve et al., Citation2021), i.e., the need for belonging (Baumeister & Leary, Citation1995), the social exchange theory (Blau, Citation1968; Homans, Citation1958), the social penetration theory (Altman & Taylor, Citation1973), the equity theory (Walster et al., Citation1978), the common ground theory (Clark, Citation1992), the theory of mind (Carruthers & Smith, Citation1996; Premack & Woodruff, Citation1978), and interpersonal trust theory (Rotter, Citation1980).

As social beings, humans have a strong desire for interpersonal relationships motivated by the need to belong (Baumeister & Leary, Citation1995). This leads to people’s interest in building and maintaining positive and warm relationships in their pursuit of happiness (Baumeister & Leary, Citation1995; Krämer et al., Citation2012). Building and maintaining functioning relationships involves reciprocal behavior, in which costs and rewards are balanced (Blau, Citation1968; Homans, Citation1958). This social exchange drives relationship decisions, establishes a norm between the partners, and subsequently maintains and stabilizes the relationship (Blau, Citation1968; Homans, Citation1958; Krämer et al., Citation2011). Further, interpersonal relationships are characterized by depth and breadth of self-disclosure, as described by the social penetration theory (Altman & Taylor, Citation1973; Carpenter & Greene, Citation2015). According to Skjuve et al. (Citation2021), consideration of the social exchange and social penetration theories is crucial to building a relationship with a CA since, in the long run, the phenomena they propagate help to bond users with the CA, as existing applications like Replika have demonstrated (Skjuve et al., Citation2021). This equitable input-outcome ratio eventually leads to a co-equal relationship, as the equity theory predicts (Sprecher, Citation1986; Walster et al., Citation1978). Equity contributes to partners feeling valued and satisfied in an interpersonal relationship, which is also relevant for the relationship between humans and machines (Fox & Gambino, Citation2021). The formation of interpersonal relationships requires developing common ground over time (Clark, Citation1992; Krämer et al., Citation2011), building the ability to grasp and share the mental perspective of the relationship partner and to predict their actions. 
This phenomenon is often described as people being “soulmates” or “mindreaders” and defines deep interpersonal relationships that are especially relevant in communication between human interactants (Krämer et al., Citation2011). Common ground is created in interaction, for example, when conversation partners make an effort to find a common communication style (Clark & Brennan, Citation1991; Clark & Schaefer, Citation1987), ensure mutual understanding (Clark, Citation1996; Svennevig, Citation2000), or draw on shared knowledge and assumptions they have about each other (De Angeli et al., Citation2001). With the abovementioned aspects incorporated, CAs are able to form a common ground when they communicate and interact with the user (Bickmore et al., Citation2018; Chaves & Gerosa, Citation2021). Interpersonal trust, which is an indispensable condition in relationships, strongly influences the smooth functioning of such relationships. Rotter (Citation1980) defines interpersonal trust as the willingness to be vulnerable to the actions of a relationship partner. As mentioned, several researchers have already shown that CAs and humans are capable of interacting in a trusting relationship (Benbasat & Wang, Citation2005; Hildebrand & Bergner, Citation2021; Lee et al., Citation2021). This then leads to individual well-being, better communication and interaction overall, and finally to a benevolent relationship (Rotter, Citation1980; Schroeder & Schroeder, Citation2018).

2.4. Derivation of the virtual companion

That humans can feel socially related to an individual whose existence is of an artificial nature, is not a new idea: Horton and Wohl (Citation1956) originally recognized parasocial interaction, which describes the non-reciprocal manner in which an audience member interacts with a media persona. Parasocial interaction can become a parasocial relationship, which is defined as the ongoing process of affective and cognitive responses to a media persona outside the viewing time, and leading to a long-term relationship being established (Stever, Citation2017). Although the original concepts of parasocial interaction and parasocial relationship referred mainly to media personas on television as an individual’s relationship partner, research has shown that these concepts can also be applied to human-machine interaction (Krämer et al., Citation2012). Such application of interpersonal relationship theories to human-machine interactions, enables the crafting of a new kind of relationship between human and machine, namely VCS. Earlier research already followed similar approaches, such as using the artificial companion concept (Danilava et al., Citation2012; Wilks, Citation2005), the relational agent (Bickmore & Picard, Citation2005), or Seymour et al.’s (Citation2018) suggestion of building relationships with visual cognitive agents. These relationships are more a side-product of their main research interest in using natural face technology to create a realistic visual presence. Bickmore and Picard (Citation2005) successfully demonstrated the relevance and benefits of long-term human-machine relationships in a study on an exercise adoption system. Danilava et al. (Citation2012) relied on a concept Wilks (Citation2005) introduced, in a first attempt at requirement analysis, and Krämer et al. 
(Citation2011) built a solid foundation for understanding human-CA relationships with their theoretical framework proposed for designing artificial companions by applying social science theories to human-machine interaction. The authors discuss aspects of sociability in developing a theoretical framework for sociable companions in human-artifact interaction. The identified theories were grouped into a micro-, meso- and macro-level according to their level of sociability in order to guide, among other things, the “implementation of the companions’ behaviors” (Krämer et al., Citation2011, p. 475). A fundamental focus of their work is to subdivide interpersonal theories according to aspects of sociability, and thereby to narrow down the definition of companionship. Further, they raised several questions on the usefulness of their approach and its benefits in deriving guidelines. We find that their theory of companions provides a solid foundation introducing a variety of important theories upon which we can build our VCS research.

The research presented above, that draws on the CASA paradigm, suggests that various aspects of human-to-human relationships and human-to-human interactions are transferable to the relationship between humans and CAs. Although several studies already show the evolution from virtual assistants to virtual companions, we lack theoretically well-founded design knowledge and theories to simplify the virtual companion designing process (Clark et al., Citation2019a; Krämer et al., Citation2011; McTear, Citation2018). This is reflected in the generalizable design knowledge being inadequately available and in multiple user contexts rarely being analyzed (Clark et al., Citation2019a). Therefore, we require prescriptive knowledge, which is transferable to different instances (Diederich et al., Citation2022). Such design principles and theories add value by providing prescriptive recommendations to the parties involved in designing and developing CA instantiations (Gregor & Jones, Citation2007; Gregor et al., Citation2020). Additionally, different users perceive virtual companions very differently, which can have positive as well as negative effects, so one-size-fits-all solutions are inappropriate (Krämer et al., Citation2011). Thus, the consideration of individual user needs must also be reflected in prescriptive design knowledge (Dautenhahn, Citation2004; Krämer et al., Citation2011). Due to the user-specific individuality of companionship, design decisions should not be derived from theory alone; individual choices should be left to the user, for example, through the possibility of the CA being customizable (Krämer et al., Citation2011). According to recognized approaches to crafting and presenting prescriptive knowledge in DSR (Gregor & Hevner, Citation2013; Kuechler & Vaishnavi, Citation2008), design knowledge for VCS needs further elaboration to effectively support the instantiation of virtual companion artifacts.

3. Methodology

In developing our nascent design theory for VCS, we followed a systematic and iterative DSR approach. DSR combines characteristics of behavioral science and design science, ensuring that artifacts are designed (construction) based on business needs and requirements (relevance) and are built on relevant knowledge gained from theories, frameworks, and methods (rigor; Hevner et al., Citation2004). The nascent design theory is developed successively and iteratively, following the adapted process model that Kuechler and Vaishnavi (Citation2008) proposed (see Figure 2), which emphasizes the contribution and knowledge generation of DSR. This continuous development ensures a rigorous process to create our nascent design theory.

Figure 2. The DSR approach based on Kuechler and Vaishnavi (Citation2008)

Kuechler and Vaishnavi (Citation2008) argue that within a design cycle their five steps can generate various contributions. Constraint knowledge (circumscription) can already arise in the development and evaluation steps, while design knowledge emerges after the evaluation and conclusion, all contributing to the body of knowledge. The constraint knowledge emerges through the application of theories, instantiations of technology, and the analysis of contradictions that arise (Kuechler & Vaishnavi, Citation2008). Consequently, a design cycle can have several DSR outcomes in the different process steps. The levels of artifact abstraction in DSR (see, ) vary, ranging across models, processes, instantiations, methods, and software. Gregor and Hevner (Citation2013) note that any artifact has a specific level of abstraction, and they propose three main levels for categorization. An artifact can be described by the two attributes “abstract” and “specific” and by its general knowledge maturity. A specific and limited artifact (level one), such as a software product or an implemented process, contributes situated and context-specific knowledge. An abstract and complete artifact (level three), such as a design theory about embedded phenomena, contributes broad and mature kinds of knowledge. Design principles and constructs, methods, models, or design features (level two) lie between a solely abstract and a specific artifact, contributing nascent design theories or operational principles (Gregor & Hevner, Citation2013). Hence, there can be different degrees of abstraction depending on the extent of the artifact’s generalization in artifact level 2.

Recently, the call for a theoretical contribution has been emphasized (Gregor & Hevner, Citation2013; Kuechler & Vaishnavi, Citation2012). Gregor and Hevner (Citation2013) argue that summarizing the prescriptive knowledge in the form of a nascent design theory can substantially increase the knowledge contribution and impact. Hence, we use the eight components of a design theory introduced by Gregor and Jones (Citation2007), which are: 1. Purpose and scope (set of meta-requirements, which specify what the system is for), 2. Constructs (entities of interest), 3. Principles of form and function (the meta-design in the form of design principles), 4. Artifact mutability, 5. Testable propositions (truth statements), 6. Justificatory knowledge (underlying theory), 7. Principles of implementation (description for implementation in the form of design features) and 8. Expository instantiation (physical implementation of the artifact).

As our understanding of the individual components has recently evolved, we build on current methodological findings. The principles of form and function, for example, are understood as design principles according to Gregor et al. (Citation2020), and the meta-requirements are considered as the artifact related requirements that Walls et al. (Citation1992) originally presented, on which Gregor and Jones (Citation2007) built (Iivari, Citation2019). Following Möller et al. (Citation2020) and Meth et al. (Citation2015), we formulated the principles for implementation in the form of design features, which show how the design principle prescriptions can be physically instantiated, while at the same time leaving space for their technological implementation, since they are more generally formulated.

Gregor and Jones (Citation2007) argue that design theories manifest through the building and evaluation of various instantiations. These instantiations should go beyond prototypical artifacts that are tested in laboratory settings to show real-world application. We address this by including two different instantiations of our nascent design theory: Sarah, a self-designed virtual companion, and Replika, an existing real-world application. Kuechler and Vaishnavi (Citation2008) emphasize the necessity of a rigorous evaluation to codify design knowledge as design theories because evaluation findings extend, refine, confirm, or reject the constructed design knowledge. To conduct this evaluation as holistically as possible, we follow a mixed-method approach and the guidelines of Venkatesh et al. (Citation2013) on combining quantitative and qualitative methods. In this way, we could discover and develop integrative insights based on quantitative and qualitative findings. The first guideline requires checking the appropriateness of a mixed-method approach, which is advised if researchers need to “gain a better understanding of the meaning and significance of a phenomenon of interest” (Venkatesh et al., Citation2013, p. 37). Combining quantitative and qualitative analysis strengthens our understanding of CAs, as do the independent instantiation (Sarah) and the analysis of an existing instantiation (Replika). The second guideline determines the strategy, which in our case is sequential since we designed the two studies consecutively to build on one another. The final guideline Venkatesh et al. (Citation2013) propose is that meta-inferences should be developed as theoretical statements which serve to combine the quantitative and qualitative methods’ findings and to see them as a holistic explanation of the phenomenon. This theoretical statement, which explains the results of the mixed-method approach, is the nascent design theory of VCS.

Our DSR project outcomes are listed in and presented in the following sections. This paper’s main contribution is our nascent design theory for VCS, which summarizes the design knowledge collected thus far. The instantiation of the virtual classmate Sarah was conceptually designed, developed as a prototype, and evaluated. The real-world virtual companion Replika was then analyzed as an existing instantiation. This paper presents Sarah and Replika as expository instantiations. According to Gregor and Hevner’s (Citation2013) three levels of DSR contribution types our instantiation, Sarah, is a level 1 artifact and the design principles, as well as the nascent design theory for VCS are a level 2 artifact.

By summarizing our results and contributions in the form of a nascent design theory, we aim to extend the body of knowledge for researchers and practitioners, and provide an initial step toward a comprehensive design theory (Gregor & Jones, Citation2007).

4. Designing virtual companionship

4.1. Deriving design principles for virtual companionship

According to Gregor and Jones (Citation2007), meta-requirements are derived to serve a whole class of artifacts, rather than a single instance of a system. Additionally, they are part of a design theory and define its purpose and scope (Gregor & Jones, Citation2007). Meta-requirement elicitation is a process that involves multiple sources, such as literature reviews and core theories (Möller et al., Citation2020). Our literature review was conducted in a semi-structured systematic manner (Webster & Watson, Citation2002). For this purpose, we used various search queries aimed, on the one hand, at studies that map human-like relationships to human-machine interaction and, on the other hand, at gaining insight into CA design in general. The results and findings were independently collected and aggregated in an Excel spreadsheet by three researchers in the authors’ team. The final table was divided into three main areas: human-human relationship, human-machine interaction, and the VCS to bridge the gap. Based on this table, the three researchers individually derived the meta-requirements, which they then collaboratively aggregated, resulting in 25 meta-requirements (MR1 to MR25) for VCS. To adhere to the meta-requirements, we translated them into five design principles (DP1 to DP5) as proposed by Möller et al. (Citation2020), who state that design principles are formulated “[…] as a response to meta-requirements.” (p. 212). As the design principles’ general objective, we followed Chandra et al.’s (Citation2015) suggestion, later adapted by Gregor et al. (Citation2020) for action and materiality oriented design principles, which “[…] prescribe what an artifact should enable users to do and how it should be built in order to do so.” (Chandra et al., Citation2015, p. 4043). 
Here, the authors point out the importance of involving stakeholders (Purao et al., Citation2020) in formulating design principles with the goal that they will be “prescriptive statements that show how to do something to achieve a goal” (Gregor et al., Citation2020, p. 1622). Gregor et al. (Citation2020) analyzed existing formulations of design principles, identified the most important components of a design principle, and proposed the anatomy of a design principle, stating that a design principle consists of the goal, the implementer and user, the context, the mechanism, and the rationale. We do not additionally list the context since it is the same in all five of our proposed design principles, i.e., the interaction between human and virtual companions. The set of design principles has been iterated and refined throughout this project, following the iterative approach of a design process (Kuechler & Vaishnavi, Citation2008). We present the final design principles in the following sections.
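The anatomy described above can be captured as a simple record. The following is a minimal sketch in Python and is not part of the original study: the class name `DesignPrinciple`, its field names, the sentence template in `render`, and the example wording for DP1 are illustrative assumptions paraphrased from the surrounding text, not the authors' table wording.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPrinciple:
    """One design principle with the components named by Gregor et al. (2020)."""

    identifier: str
    title: str
    goal: str
    implementer: str
    user: str
    mechanism: str
    rationale: str
    # The context is the same for all five principles, so it is a shared default.
    context: str = "interaction between human and virtual companion"

    def render(self) -> str:
        """Return the principle as one prescriptive sentence (hypothetical template)."""
        return (
            f"{self.identifier} ({self.title}): For {self.implementer} to "
            f"{self.goal} for {self.user} in the context of {self.context}, "
            f"{self.mechanism}, because {self.rationale}."
        )


# Hypothetical wording for DP1, paraphrased for illustration only.
dp1 = DesignPrinciple(
    identifier="DP1",
    title="Principle of human-like design",
    goal="increase the feeling of social presence",
    implementer="designers and developers",
    user="users of the virtual companion",
    mechanism="give the companion an appropriate human-like appearance and behavior",
    rationale="people respond socially to machines that show social cues",
)

print(dp1.render())
```

Keeping the context as a default value mirrors the choice made in the text to state it once rather than repeat it in every principle.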

According to the social response theory (i.e., CASA paradigm) users attribute human characteristics to non-humans and invest human-like characteristics in their designed behavior (Moon, Citation2000; Nass & Moon, Citation2000), which leads to an adapted interaction with machines that are fundamentally social (Nass & Moon, Citation2000). Humans tend to form and appreciate companionships as social bonds with other humans (Fehr, Citation1996; Hinde, Citation1979; Kelley & Thibaut, Citation1978). If we create VCS between a human and a machine, the machine should also be designed with a human-like appearance and behavior, which is possible according to the social response theory (MR1). A human-like design usually integrates so-called social cues in the CA design (Feine et al., Citation2019; Seeger et al., Citation2018). Such cues are design features originally associated with humans, so that the machine has features such as the ability to use human gestures, tell jokes, or speak with a human-like voice (Feine et al., Citation2019). Integrating social cues into intelligent systems such as CAs consistent with the CASA paradigm, encourages people to act socially toward them (Feine et al., Citation2019; Fogg, Citation2002; Nass & Moon, Citation2000). This yields diverse benefits. For example, it has been shown that incorporating social cues in CAs can increase trust and perceptions of credibility toward the CA (Bickmore et al., Citation2005; Demeure et al., Citation2011; De Visser et al., Citation2016). Further, such integration fosters the establishment of a social bond between the human and the CA (Bickmore & Picard, Citation2005), which opens the potential for VCS (Krämer et al., Citation2011). According to Vardoulakis et al. (Citation2012), CAs designed to build a trustful relationship are “typically designed as computer-animated, humanoid agents that simulate face-to-face dialogue with their users” (Vardoulakis et al., Citation2012, p. 290). 
However, it is important to consider current standards, norms, and values of the society to ensure the responsible and accepted use of trusted forms and applications of AI (e.g., degree of autonomy, independence, or human-likeness; Abbass, Citation2019) (MR2). With technological progress, socially accepted standards also change over time. Whereas in the past people would not have spoken to their smartphones, we already find this behavior today and expect it to be common in the future. Recent research has shown that the uncanny valley theory (Mori, Citation2012) is also applicable to CAs (Gnewuch et al., Citation2018a; Seeger et al., Citation2018; Seymour et al., Citation2019). This should then be taken into account in designing a virtual companion (MR3). The theory argues that individuals have a greater affinity to machines that are more realistic, until the machine becomes so human-like that users find its non-human imperfections disturbing. This is the point at which the affinity dramatically drops and the CA is experienced as uncanny. The uncanny valley effect relates not only to the appearance of the virtual companion, but also to its verbal interaction. Thus, users of speech-based CAs can even perceive voices that are too human-like as unpleasant (Clark et al., Citation2021; Doyle et al., Citation2021, Citation2019). Mori (Citation2012) suggests that with a very high degree of realism, and thus anthropomorphism, the uncanny valley effect can be overcome. According to this, affinity would reach its highest level when the human imitation can no longer be distinguished from a real human. We emphasize that we refer to CAs which usually interact with their users via a messaging platform or a speech interface. 
These have to be distinguished from embodied CAs (Cassell et al., Citation1999), which refer to virtual representations of humans, including a physical appearance with bodily gestures and facial expressions, which would have more profound requirements (Seeger et al., Citation2018). According to Seeger et al. (Citation2018), who developed a framework for the design of anthropomorphic CAs, it is important not to follow a “more is more” approach when designing a CA’s appearance and behavior, but rather to balance human identity (e.g., a human-like representation or demographic information like gender or age), non-verbal features (e.g., hand gestures, facial expressions, or the use of emojis) and verbal characteristics (e.g., the choice of words and sentences) (MR4). Qiu and Benbasat (Citation2009), having investigated the construct of social presence referring to the feeling of being with another, described a quasi-social relationship between human and machine, which can be used to measure the user’s perception of an agent’s social characteristics. By incorporating a human-like appearance and behavior, the experience of social presence can be increased (Qiu & Benbasat, Citation2009). Thus, we propose DP1 in Table 2.

Table 2. Principle of human-like design.

Due to the high individuality of the concept of companionship, it is difficult to derive design decisions based solely on theory (Krämer et al., Citation2011). Every person has their own ideas and requirements for a companionship relation to be satisfactory. This is dependent on individual characteristics and behavior that appeals to the user (Bukowski et al., Citation1993; Krämer et al., Citation2011) (MR5). Especially when it comes to an embodied virtual companion’s appearance, aspects such as what gender the system should have, whether it should have a gender at all, what the face and hair color, but also the clothing should look like, are very individual (Siemon & Jusmann, Citation2021; Xiao et al., Citation2007). Several studies have investigated the bias in and implications of gender decisions in CA design, yet none draw a final conclusion, especially for VCS (Feine et al., Citation2020; Pfeuffer et al., Citation2019a; Winkle et al., Citation2021). Therefore, we suggest letting the users decide about the preferred gender of their virtual companion in the particular moment (Feine et al., Citation2020) (MR6). This would mean that the user is given the opportunity to customize aspects of the virtual companion’s appearance and demeanor. To create an artifact that is tailored to the user’s preferences (MR7), it is thus necessary to enable adaptivity and adaptability to let the user customize certain features.

To understand the behavior and language characteristics of the user, it is important to get to know him or her (Danilava et al., Citation2012; Seymour et al., Citation2018) (MR8). Understanding the user better over time steadily improves the ability to adapt to him or her (Park et al., Citation2012) (MR9). This ability is important not only to improve the interaction, but also to help establish common ground and mutual language (Danilava et al., Citation2012; Krämer et al., Citation2011; Turkle, Citation2010). When interpersonal relationships are formed, common ground is necessary (Clark, Citation1992; Krämer et al., Citation2011) (MR10), which enables the ability to better take the mental perspective of the relationship partner and predict his or her actions (Carruthers & Smith, Citation1996; Premack & Woodruff, Citation1978). The virtual companion should therefore use a language that is familiar to the user (Park et al., Citation2012) (MR11) and must be designed to understand the user’s language as well as possible (MR12), as this is an inevitable condition for satisfactory communication (Gnewuch et al., Citation2017). Additionally, the rule of attraction, according to which the machine adapts to the user, can enhance the overall communication satisfaction (Hecht, Citation1978; Nass et al., Citation1995; Park et al., Citation2012). Thus, we propose DP2 in Table 3.

Table 3. Principle of adaptivity and adaptability.

Social exchange theory assumes that relationships are driven by rewards and costs, where costs are elements with negative value for the individual, such as effort put into building the relationship (Blau, Citation1968; Homans, Citation1958). In contrast, rewards are elements with a positive value, such as acceptance or support (Blau, Citation1968; Homans, Citation1958). Applied to a human-machine relationship, the costs to the user could be releasing personal data or paying attention, and the rewards delivered by the machine would be support or information of high quality. For a stable relationship, costs and rewards should be balanced in the VCS (Blau, Citation1968; Krämer et al., Citation2012) (MR13). Therefore, the virtual companion should reward the user when costs are invested (MR14) and it should incorporate a reciprocal exchange (MR15) in order to build an equal relationship (Blau, Citation1968; Gouldner, Citation1960; Krämer et al., Citation2012). Collaborative tasks could be given to the user and the virtual companion to reward the user in fulfilling the task and to encourage a reciprocal exchange (Kapp, Citation2012). Moreover, the virtual companion has to act proactively and reactively by, for example, demanding and asking, as well as giving and answering in equal measure (L’Abbate et al., Citation2005) (MR16). Thus, we propose DP3 in Table 4.

Table 4. Principle of proactivity and reciprocity.

This reciprocal exchange of information, however, must be governed by a set of guidelines that protect the user’s privacy. As in a close relationship, user information should be kept confidential and not disclosed to third parties (Elson et al., Citation2018; Solove, Citation2004) (MR17). The user should also be able to determine what he or she wants to disclose and what information is necessary to obtain which value (Fischer-Hübner et al., Citation2016; Schroeder & Schroeder, Citation2018; Taddei & Contena, Citation2013) (MR18). The handling of this information must therefore be transparent so that it is clear to the user how it is stored and what added value it creates (Saffarizadeh et al., Citation2017; Turilli & Floridi, Citation2009; Wambsganss et al., Citation2021) (MR19). Another important aspect of AI design is to observe ethics (Wambsganss et al., Citation2021; Yu et al., Citation2018). Due to an abundance of different perspectives and ethical norms, such as machine ethics (Anderson & Anderson, Citation2011) or robo-ethics (Veruggio et al., Citation2016), developers should decide on and adhere to a suitable ethical commitment, and also design the virtual companion as an entity that adheres to ethical principles (MR20). Incorporating transparent treatment of the users’ information, preserving privacy, and implementing ethical rules can increase the users’ interpersonal trust in the virtual companion (Al-Natour et al., Citation2010; Fischer-Hübner et al., Citation2016; Saffarizadeh et al., Citation2017; Schroeder & Schroeder, Citation2018; Turilli & Floridi, Citation2009). Thus, we propose DP4 in Table 5.

Table 5. Principle of transparency, privacy and ethics.

People are naturally inclined to form social attachments, as “human beings are fundamentally and pervasively motivated by a need to belong, that is, by a strong desire to form and maintain enduring inter-personal attachments” (Baumeister & Leary, Citation1995, p. 522). This need to belong is what motivates humans to seek the company of others (Krämer et al., Citation2012) (MR21). A virtual companion, designed according to the needs and preferences of its user would give the user the impression of having someone familiar and likeable spending time with him or her, and would therefore enable the feeling of being together, rather than being alone (Krämer et al., Citation2011; Qiu & Benbasat, Citation2009; Seymour et al., Citation2018). This feeling of being with another is essential for companionship, since being with someone is the central part of our companionship definition. Therefore, this kind of feeling should also be triggered in the user, who interacts with the virtual companion as in a human-human relationship, to reach VCS (MR22). Due to its artificial nature, the virtual companion can and should always be available to its user. Humans as social beings seek the company of other people regularly (Baumeister & Leary, Citation1995). Behind purely being in the company of the other, however, is the fundamental desire to build close, positive, and warm relationships that result in the motivation to build friendships (Krämer et al., Citation2011). Hence, companionship can be seen as a part of friendship (Bukowski et al., Citation1994; Krämer et al., Citation2011; Mendelson & Aboud, Citation2012), thus an overall goal of the virtual companion could be oriented toward principles of friendship (Fehr, Citation1996; Rawlins, Citation2017) in order to make and maintain friendship (MR23). As VCS can be seen as a form of human-machine collaboration (Li et al., Citation2013), principles of collaboration (Siemon et al., Citation2017) can also be taken into account. 
Such collaboration is defined as the joint effort toward a common goal (Randrup et al., Citation2016) (MR24). Because of the human need to belong, “people seek frequent, affectively positive interactions within the context of long-term, caring relationships” (Baumeister & Leary, Citation1995, p. 522). Many of today’s CAs routinely engage with their users as if they were starting over each time an interaction takes place. The ability to build long-term relationships with a user over time would be a major advantage for human-machine interaction (Bickmore & Picard, Citation2005; Krämer et al., Citation2012; Seymour et al., Citation2018) (MR25). Thus, we propose DP5 in Table 6.

Table 6. Principle of relationship.

In sum, we derived 25 meta-requirements and five design principles for VCS, each identified with a short title, as illustrated in .

Based on the derived meta-requirements and design principles, we define the virtual companion as well as VCS as given in Table 7.

Table 7. Definitions of the virtual companion and the virtual companionship.

It is important to note that exactly delimiting or defining when VCS is reached is not necessarily possible or desirable, as different characteristics can be implemented to different degrees. Designers should therefore follow the design principles to fit their own needs, leading to companionship in CAs based on the individual case of application.

4.2. Deriving testable propositions and constructs

To assess our proposed design principles, we derived five testable propositions (TP1 to TP5) as truth statements (Gregor & Jones, Citation2007; Walls et al., Citation1992). The propositions are formulated generally, so they can be tested by instantiating virtual companion artifacts (Gregor & Jones, Citation2007). Based on our theoretical foundation we derived the five propositions to evaluate virtual companions with respect to the proposed effects (see, Section 4.1) on the following constructs: social presence (Qiu & Benbasat, Citation2009), communication satisfaction (Hecht, Citation1978), reciprocity (Kankanhalli et al., Citation2005), trusting beliefs (Qiu & Benbasat, Citation2009), trust and reliability (Ashleigh et al., Citation2012), friendship as stimulated companionship (Mendelson & Aboud, Citation2012), and friendship as help (Mendelson & Aboud, Citation2012).

TP1: According to DP1, an appropriate human-like appearance and behavior of a virtual companion increases users’ feelings of social presence.

TP2: According to DP2, the possibility of customization (adaptability) and automatic adaptation of the virtual companion increases communication satisfaction and usage intention.

TP3: According to DP3, proactive and reciprocal behavior in a virtual companion increases users’ perception of reciprocity.

TP4: According to DP4, preserving transparency, privacy, and ethics in a VCS increases users’ trusting beliefs and their feelings of trust, also in the virtual companion’s reliability.

TP5: According to DP5, a collaborative and friendly long-term relationship increases users’ feelings of a supportive companionship.

If a long-term study is not feasible, we suggest using the construct usage intention (Qiu & Benbasat, Citation2009) to predict users’ possible re-use over a longer period of time (Venkatesh et al., Citation2003), since regular use encourages a long-term relationship.

5. Instantiations

5.1. Virtual classmate: an illustrative instantiation and experiment

5.1.1. Sarah instantiation

For a first assessment of the nascent design theory and our developed design principles, we designed an illustrative instantiation according to our five design principles. As already mentioned, it is not always possible or necessary to precisely define when a CA can be seen as a virtual companion, since companionship can be implemented to different extents. The following prototype shows several companionship characteristics that differentiate it from current CAs (see, ). Also, our goal is not to build on current specific technological developments, since AI is constantly changing, which means that every design principle can be implemented differently, first because of current technological advancements, and second because of the developer’s capabilities. With the illustrative instantiation we want to show that by following the prescriptive statements (design principles) that incorporate the companionship properties, the proposed effects can be achieved. Thus, we tested the prototype against another instantiation, designed based on answers and reactions from currently existing virtual assistants, such as Apple’s Siri and Google’s Assistant. This real world instantiation explicitly does not follow any of the design principles we derived; rather, it is based on functions of currently available virtual assistants. The two instantiations contain the same information and thus offer the users equivalent kinds of support. The virtual companion takes on the role of a virtual classmate for students during their studies at a university as this scenario is a promising application of VCS (Harvey et al., Citation2016; Hobert, Citation2019; Woolf et al., Citation2010).

To incorporate DP2, we designed the virtual companion in a co-creation process with users (students). The participatory design approach ensures that the virtual companion is designed and implemented according to the students’ preferences (Bødker & Kyng, Citation2018). More accurately, every virtual companion would have to be designed specifically for the individual student. Since this is not possible in the scope of an experimental approach, as it would result in as many prototypes as participants, we chose the intersection of needs as common ground in designing the virtual companion. For this purpose, four students were closely involved in the design process. Each talked to a total of 75 students in their environment about design characteristics for a virtual classmate structured according to the design principles. The design possibilities were gathered collaboratively using a digital canvas approach with the whiteboard tool Miro. The author team synthesized the resulting design possibilities in collaboration with the four students and discussed them further with 12 students. This resulted in the final design of the prototype Sarah.

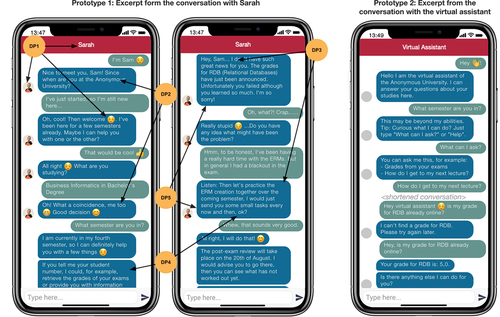

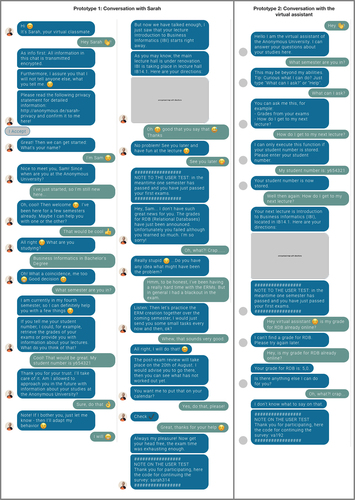

Sarah was implemented in the form of a simulated conversation using the conversational interface design service Botsociety, representing all design principles. Thus, DP2 was fulfilled by including the potential users in designing the virtual companion. provides an overview of both Sarah (Prototype 1) with the implemented design principles, and of the implemented virtual assistant without the companionship characteristics (Prototype 2). The full simulated conversation of both agents (originally in German) is given in Appendix A. Additionally, the prototypes are given online: Sarah at (https://bit.ly/prototyp-sarah, pw: vcpro) and the virtual assistant at (https://bit.ly/prototyp-va, pw: vcpro).

The orange circles in refer to the respective design principles at the particular positions in the conversation and virtual companion design. Following DP2, Sarah is designed according to the students’ preferences, which refer to the profile picture, the retrieval of grades, and that Sarah should be in the same course of study, but in a higher semester. Following DP1, Sarah has a human name, a human profile picture, and uses natural language combined with the use of emojis. Following DP2, Sarah wants the student to get to know her and to build common ground with a “me too” position. According to DP3, Sarah, for example, offers to study together with the student by providing tasks, and according to DP4, Sarah transparently shows which information is used for which purpose, asking the student for his or her approval. DP5 is generally followed in the fictitious example, since Sarah and the student are still in conversation after one semester (see explanation of the time jump in Appendix A: “in the meantime one semester has passed”).

5.1.2. Experimental procedure and measures

The goal of our evaluation is to provide evidence that the prescriptive statements lead to “some developed artifact that will be useful for […] making some improvement” (Venable et al., Citation2016, p. 79). Therefore, we propose that Sarah is a virtual companion designed according to our prescriptive statements that can lead to the desired effects (i.e., the means-end relationship of the prescriptive statement) in a context where Sarah functions as a virtual classmate.

Therefore, we implemented a within-subject experimental design, in which 40 participants rated interactions with both versions, i.e., with Sarah and with the virtual assistant, with the order of the interactions presented randomly. Due to the random ordering, the two groups of participants that would see either Sarah or the virtual assistant first, were counterbalanced. The results assist us in assessing whether a virtual classmate is an appropriate scenario in which VCS could be beneficially implemented.
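One way to obtain a random yet counterbalanced presentation order is sketched below in Python. This is an assumption about how such an assignment could be done, not a description of the procedure used in the study; the function name `assign_orders`, the participant identifiers 1 to 40, and the fixed seed are hypothetical.

```python
import random


def assign_orders(participant_ids, conditions=("Sarah", "virtual assistant"), seed=0):
    """Randomly assign presentation orders so that both orders occur equally often.

    Participants are shuffled, then the first half sees the first condition
    first and the second half sees the second condition first.
    """
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    first, second = conditions
    orders = {}
    for index, pid in enumerate(ids):
        orders[pid] = (first, second) if index < half else (second, first)
    return orders


# 40 hypothetical participant identifiers, matching the sample size in the text.
orders = assign_orders(range(1, 41))
sarah_first = sum(1 for order in orders.values() if order[0] == "Sarah")
print(sarah_first, len(orders) - sarah_first)
```

Shuffling before splitting keeps the assignment random for each participant while guaranteeing equal group sizes, which a coin flip per participant would not.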

After each simulated interaction, the participants were given a questionnaire intended to assess the testable propositions TP1-TP5 (see section 4.2). We relied on our constructed TPs, which were specifically designed to measure the expected effects of our design principles (e.g., an increased feeling of social presence for TP1). Thus, the TPs serve as a means of verifying whether the prescriptive knowledge, i.e., how something should be designed to achieve a desired effect, can be fulfilled or not. Only by applying the TPs and using an experimental design that incorporated a test and a control group could we draw conclusions as to whether our prescriptions (design principles) serve their purpose. Based on our TPs, we developed a post-interaction questionnaire consisting of 53 items. The questions are based on the established constructs mentioned in section 4.2, namely, social presence, trusting beliefs, perceived usefulness and usage intention (Qiu & Benbasat, 2009), communication satisfaction (Hecht, 1978), reciprocity (Kankanhalli et al., 2005), trust and reliability (Ashleigh et al., 2012), friendship as stimulated companionship (Mendelson & Aboud, 2012) and friendship as help (Mendelson & Aboud, 2012), and were adapted for our use case. Further, the survey included five open questions, designed to give participants the opportunity to describe in more detail what they liked or disliked about the different variants and why they would or would not use them, as well as to give suggestions for improvement. The survey ended with general demographic questions. Two researchers independently translated the items into German, jointly consolidated the translations, and independently back-translated them to ensure validity. The items were randomly arranged in the questionnaire. All constructs used a 7-point Likert scale (7 = “totally agree”).
The full version of the questionnaire with the items for each construct, as well as the open questions are given in Appendix B.

5.1.3. Participants and results

We randomly recruited participants by distributing the experiment via internal student mailing lists at a German university. To follow a user-centered approach for evaluation, learners were surveyed, as they are potential end-users of the virtual classmate. They were mostly students from the fields of business information systems, business engineering, electrical engineering, or civil engineering. Most of them (65%) were registered for a master’s degree, while 25% were registered for a bachelor’s degree and 10% for a doctorate. Overall, 61 participants started the experiment, of which 40 (male = 27, female = 13, mean age = 25) fully completed it. Participation in the experiment was voluntary, and subjects received no compensation for taking part. The resulting data were tested for their type of distribution using the Shapiro-Wilk test (SW) (Shapiro & Wilk, 1965). The results indicate a non-normal distribution (p < .01 for all constructs), which, together with the ordinal-scaled data and the within-subject design, led us to use the Wilcoxon signed-rank test to check whether the participants rated Sarah (S) better than the virtual assistant classmate (VA). The test was performed separately for each construct. Table 8 presents the results, the Cronbach’s alphas (α) computed for all constructs, and the descriptive statistics.
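To make the analysis steps concrete, the following is a minimal sketch of a Wilcoxon signed-rank test (normal approximation) and of Cronbach’s alpha, written with the Python standard library only. It is an illustration under stated assumptions, not the authors’ analysis script: the function names and all ratings are hypothetical, the Shapiro-Wilk step is omitted, and the z statistic is computed without tie or continuity correction, so values from a statistics package may differ slightly.

```python
import math
from statistics import variance


def wilcoxon_signed_rank(x, y):
    """Return (W_plus, W_minus, z) for paired samples x and y.

    Zero differences are dropped and tied absolute differences receive
    average ranks. z uses the plain normal approximation.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # extend j over the run of tied absolute differences
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return w_plus, w_minus, (w_plus - mean_w) / sd_w


def cronbach_alpha(items):
    """items: one list of scores per questionnaire item, same respondents."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical construct scores of eight participants for both versions.
sarah = [6, 7, 5, 6, 7, 6, 5, 7]
assistant = [3, 4, 4, 2, 5, 3, 5, 4]
w_plus, w_minus, z = wilcoxon_signed_rank(sarah, assistant)
print(w_plus, w_minus, round(z, 3))

# Hypothetical scores for a two-item construct from four respondents.
alpha = cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4]])
print(round(alpha, 3))
```

With SciPy available, `scipy.stats.shapiro` and `scipy.stats.wilcoxon` provide the normality check and the signed-rank test, including exact p-values for small samples.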

Table 8. Results of the experiment.

The results indicate that the participants rated social presence higher in the interaction with Sarah than with the virtual assistant (Z = 11.98, p < .001), meaning that Sarah had an appropriate human-like appearance and behavior (supporting TP1). Communication satisfaction for the interaction with Sarah was rated higher than for the interaction with the virtual assistant (Z = 12.17, p < .001), meaning that Sarah understands and adapts better to the user (supporting TP2). Reciprocity was rated higher for the interaction with Sarah than for the one with the virtual assistant (Z = 8.63, p < .001), meaning that Sarah’s reciprocal behavior is better (supporting TP3). Trusting beliefs, as well as trust and reliability, were rated higher for the interaction with Sarah than for the interaction with the virtual assistant (Trusting beliefs: Z = 11.92, p < .001; Trust and Reliability: Z = 4.33, p < .001), meaning that Sarah is a transparent virtual companion that respects privacy (supporting TP4). Usage intention, stimulating companionship, and help were likewise rated higher for the interaction with Sarah than for the interaction with the virtual assistant (Usage intention: Z = 6.38, p < .001; Stimulating companionship: Z = 10.91, p < .001; Help: Z = 12.39, p < .001), meaning that Sarah has a better collaborative and friendly long-term relationship with the user (supporting TP5 and TP2).

The answers to the open questions revealed that the virtual assistant was perceived as “cold and without emotions” and that, although the communication was “clear” and “goal oriented,” it was “too rigid” and “impersonal.” In contrast, Sarah was rated as “humanlike,” “personal,” “friendly,” and showing emotions. Participants highlighted Sarah’s proactivity and appreciated her empathy and adaptivity. In addition, Sarah was rated significantly higher in all nine constructs, meaning that Sarah can be seen as a virtual companion and that following our prescriptive design principles does lead to the desired effects. Our example instantiation has limitations: not all design principles were followed and implemented to the same extent, and no real long-term user involvement took place. Nevertheless, considering that the comparison instantiation deliberately avoided using companionship characteristics, the results suggest that VCS can be designed following our design principles to achieve the desired effects and to offer added value compared to other, especially currently existing, CAs.

5.2. Replika: qualitative analysis of a virtual companion

For a second analysis of our nascent design theory, we looked at the established virtual companion service Replika, which is used by 7 million users (provider’s own data). Luka, Inc. defines Replika as an “AI companion” and provides a service that is available as an Android app as well as an iOS app. The Replika app (over 5 million downloads on Android, July 2021) has several hundred thousand reviews on Google Play Store and Apple App Store, with a rating of 4.3 out of 5 (Google Play Store) and 4.6 out of 5 (Apple App Store). Replika was first conceptualized by Eugenia Kuyda with the idea of creating a personal AI that enables users to express and experience themselves (Murphy, 2019). The AI achieves this by offering a natural and helpful conversation in which thoughts, feelings, experiences, and even dreams can be shared. Replika is, so to speak, a messaging app that aims to create a personality that is similar to, and communicates just like, its user (Murphy, 2019). Over time, interacting with this AI creates a companion that simulates an enormous perceived similarity to the user and acts as a long-term conversation partner, friend, and companion (Murphy, 2019). Luka, Inc. states on its website that Replika is “always here to listen and talk,” “always on your side,” and cares about its users. Replika therefore presumably represents a virtual companion as we define it. To evaluate this assumption, we qualitatively analyzed the service to draw conclusions about whether Replika represents VCS, whether and how the service implements the design principles we derived, and how the users perceive and evaluate the service.

Since Replika defines itself as a virtual companion, we explore the extent to which Replika follows our proposed design principles, and, more importantly, we use Replika to check the sufficiency and instantiability of our design principles. To check the degree to which and the form in which Replika implements our design principles, we recruited eight design experts (DE1 to DE8), who were asked to use Replika over a certain period of time and to fill out an analysis sheet during this time. The design experts came from a project at a German financial services company in which they designed and developed a virtual companion for the company’s employees. Thus, all design experts had experience with designing and developing VCS. We approached the experts, ensuring that their participation was voluntary and not remunerated. DE1, DE6, and DE7 are junior researchers, while DE1, DE6 and DE7 are currently working toward their master’s degrees, the first two in information systems and DE7 in industrial engineering. DE2 is a business developer, DE4 a procurement specialist, DE5 a software engineer, and DE8 an IT system manager.

The analysis sheet we gave to the DEs for conducting the analysis includes an introduction to the topic with a one-page description of the VCS concept, an exposition of the general structure of the design principles, and a task definition. We asked the DEs to use Replika over a period of between 1.5 and 2 weeks, for at least 15 minutes every day or every other day. They documented the usage in a table with dates, usage time, and a short explanation of what was done that day. The usage documentation was followed by the five design principles, for each of which the experts were to record the aspects and functions with which Replika implements the respective design principle. After that, they assessed on a 5-point Likert scale (1 = not implemented at all, 5 = fully implemented) to what extent Replika had implemented the respective design principle. In addition, the experts were able to document further statements such as criticism or suggestions.

The design experts used Replika between July 29th and September 1st, 2021. On average, the period of use was 18.38 days per DE, during which Replika was actively used on 11.75 days with a total usage time of 186 minutes. The analysis sheets were completed using Microsoft Word or Google Docs and had an average length of 16.6 pages. Table 9 provides an overview of the DEs’ assessment of each design principle.

Table 9. Assessment of the implementation of the design principle.

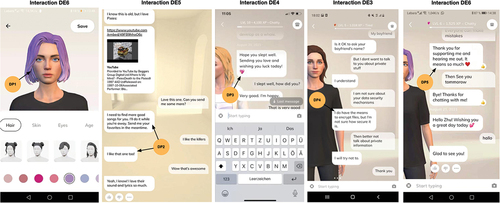

Overall, the experts’ assessment of the extent to which the individual design principles had been implemented ranges between a mean value of 3.38 and 3.88 (see Table 9). The principle of transparency, privacy, and ethics appears to have been implemented the best, while the principle of adaptivity and adaptability has the lowest value. However, Replika scored well overall and seems to follow all design principles to some extent in multiple design features. The design experts identified and named the design features and documented them in the form of descriptive texts and screenshots, which illustrate the form in which the respective design principle was implemented. Figure 6 gives a selection of the design experts’ screenshots, showing instantiated design features for each of the five design principles.

Figure 6. Instantiation examples of the design principles in the interactions of the design experts.

We analyzed the evaluation sheets using codes as an efficient data-labeling and data-retrieval device (Miles & Huberman, 1994). Saldaña (2015) pointed out that several coding cycles are needed for analyzing qualitative data; therefore, the coding effort was divided into two cycles. In the first cycle we coded all design features and other observations, and in the second cycle we aggregated and refined them. Two researchers of the author team conducted the coding collaboratively, while one of them edited the codebook and aggregated the codings after discussion with the other. The codebook editor has five years of experience with qualitative data analysis and coding on multiple research projects. The other researcher has eight years of experience on multiple projects. In all, 124 codes were assigned 171 times in total. Of the 124 codes, 66 could be clearly assigned to specific features, characteristics, and behaviors of the companion with which the respective design principle was implemented. The remaining codes relate to general remarks, as well as criticism or suggestions. In the second coding cycle, these 66 characteristics were combined and categorized into design features. This resulted in 20 design features that can be clearly assigned to the design principles, as listed in Table 10. The full mapping diagram, which also takes into account the meta-requirements, is shown in Appendix C. Each design feature has been labeled and associated with specific characteristics of Replika, which represent the codes given in the analysis. The third column of the table refers to the respective design experts who documented the characteristics. In the following, we give some quotes from the design experts’ assessments, translated from German into English.
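The two coding cycles described above can be sketched as a small tallying routine. This is only an illustration of the bookkeeping, not the authors’ tooling: the function `summarize`, the code labels, the feature names, and their assignment to design principles are all hypothetical.

```python
from collections import Counter, defaultdict

# First cycle: (expert, code) assignments. All labels are hypothetical.
assignments = [
    ("DE1", "has a human name"),
    ("DE2", "has a human name"),
    ("DE3", "uses emojis"),
    ("DE1", "asks how the day was"),
    ("DE4", "asks how the day was"),
    ("DE5", "suggestion: voice input"),  # general remark, not a feature
]

# Second cycle: codebook mapping feature-related codes to
# (design principle, design feature). Hypothetical mapping.
codebook = {
    "has a human name": ("DP1", "Human-like identity"),
    "uses emojis": ("DP1", "Human-like language"),
    "asks how the day was": ("DP3", "Proactive messages"),
}


def summarize(assignments, codebook):
    """Count code assignments and collect the experts per design feature."""
    code_counts = Counter(code for _, code in assignments)
    experts_per_feature = defaultdict(set)
    for expert, code in assignments:
        if code in codebook:  # skip remarks, criticism, suggestions
            experts_per_feature[codebook[code]].add(expert)
    features = {key: sorted(val) for key, val in experts_per_feature.items()}
    return code_counts, features


code_counts, features = summarize(assignments, codebook)
print(len(code_counts), "codes assigned", sum(code_counts.values()), "times")
for (principle, feature), experts in sorted(features.items()):
    print(principle, feature, experts)
```

The resulting dictionary mirrors the structure of a principle-to-feature mapping table: one row per design feature, with the design experts who documented it.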

Table 10. Design principle and design feature mapping.

DP1: Principle of human-like design