ABSTRACT

The use of expressive Virtual Characters is an effective complementary means of communication for social networks offering multi-user 3D chatting environments. In such contexts, the facial expression channel offers a rich medium to translate the ongoing emotions conveyed by the text-based exchanges. However, until recently, only purely symmetric facial expressions have been considered for that purpose. In this article we examine human sensitivity to facial asymmetry in the expression of both basic and complex emotions. The rationale for introducing asymmetry in the display of facial expressions stems from two well-established observations in cognitive neuroscience: first, that the expression of basic emotions generally displays a small asymmetry; second, that more complex emotions such as ambivalent feelings may be reflected in the partial display of different, potentially opposite, emotions on each side of the face. A frequent occurrence of this second case results from the conflict between the truly felt emotion and the one that should be displayed due to social conventions. Our main hypothesis is that a much larger expressive and emotional space can only be automatically synthesized by means of facial asymmetry when modeling emotions with a general Valence-Arousal-Dominance dimensional approach. We also explore the general human sensitivity to the introduction of a small degree of asymmetry into the expression of basic emotions. We conducted an experiment by presenting 64 pairs of static facial expressions, one symmetric and one asymmetric, illustrating eight emotions (three basic and five complex ones) alternately for a male and a female character. Each emotion was presented four times by swapping the symmetric and asymmetric positions and by mirroring the asymmetrical expression. Participants were asked to grade, on a continuous scale, the correctness of each facial expression with respect to a short definition. Results confirm the potential of introducing facial asymmetry for a subset of the complex emotions. Guidelines are proposed for designers of embodied conversational agents and emotionally reflective avatars.

Introduction

The use of expressive Virtual Characters is an effective complementary means of communication for social networks offering multi-user 3D chatting environments (Gobron et al. 2012). We examine here the interaction context in which users are unable to directly control their avatar’s facial expression through their own facial expressions (this type of real-time performance animation has already been successfully addressed in Ichim, Bouaziz, and Pauly (2015)). Compared to real-life human-human conversations, how to deliver realistic and effective communication between virtual characters on VR social platforms is still an open question (Gratch and Marsella 2005).

Ahn et al. (2012) proposed an emotional model to formulate and visualize the facial expression of virtual humans in a conversational environment by using Valence-Arousal-Dominance (VAD) parameters. This approach offers a particularly interesting degree of freedom: it can generate asymmetric facial expressions. Indeed, despite a large body of experimental work revealing facial asymmetry during the expression of basic emotions (Borod, Haywood, and Koff 1997), no systematic effort has been made to transfer this human characteristic onto online conversational agents and real-time autonomous virtual humans. We attribute this gap to two causes: the limited computing resources available during real-time interaction, which led designers to sacrifice the integration of a model of asymmetric facial expression, and the lack of knowledge about our sensitivity to the facial asymmetry of virtual characters. One consequence is that purely symmetric faces and emotion display may have contributed to what makes virtual characters look artificial. Conversely, synthetic characters appearing in feature films can nowadays display the full spectrum of human emotions, including complex ones inducing facial asymmetry, because they are finely crafted by animators from the input of directors over a much larger time frame.

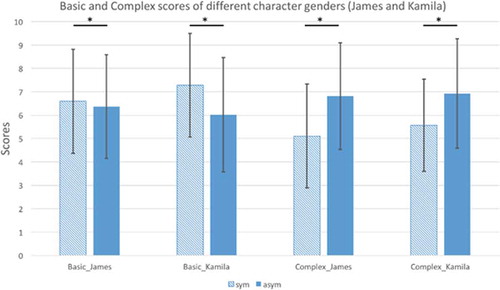

Yet, beyond the facial animation level, the automatic control of virtual characters requires a higher-level model of emotion that is also compatible with real-time performance. For this reason we rely on a well-known three-dimensional model allowing the instantaneous expression of emotions driven by the VAD parameters. Within that framework we can automatically map a 3D VAD emotional state to a facial expression (Ahn et al. 2012). In this way, a much larger range of emotions can be synthesized beyond the six basic emotions identified by Ekman (1971) (anger, disgust, fear, happiness, sadness, surprise). This approach also overcomes the significant effort required by the facial morphing technique (Parke and Waters 2008), for which predefined facial expressions have to be designed for each character. However, the VAD model cannot produce some complex emotions such as ambivalent feelings that simultaneously integrate more than a single emotion. A frequent occurrence of such a context results from the conflict between the truly felt emotion and the one that should be displayed due to social conventions. In such a case, the scalar valence parameter is not sufficient to model the conflict between a negative and a positive emotional context (Norman et al. 2011). Instead it tends to smooth down the two conflicting valences into an average scalar value. Hence, we have proposed a FACS-based system synthesizing complex and ambivalent emotions by combining two distinct VAD emotions for the left and the right sides of the face (Ahn et al. 2013). Likewise, the system can also introduce a small degree of asymmetry in the display of basic emotions to produce more ecologically valid expressions (Borod, Haywood, and Koff 1997). A controlled experiment on the human perception of symmetric and asymmetric facial expressions has been conducted and the first results have been presented in Ahn et al. (2013). The results are summarized in Figure 1, which shows that the symmetric facial expression is preferred for basic emotional words, regardless of the virtual character’s gender. However, the opposite result was found for complex emotional words, for which the asymmetric facial expression is significantly more effective than the symmetric one.

Figure 1. Summary of the results from Ahn et al. (2013). Results are the same for both the male (James) and the female (Kamila) characters. Basic emotions were rated as less realistic with asymmetry whereas complex emotions were rated as more realistic with a degree of asymmetry.

Yet, the extent to which the asymmetrically altered or the bivalent expressions are correctly perceived was not analyzed. Other important questions to examine are whether:

The side of the asymmetry (left-right vs right-left) induces a different perception of the displayed emotion.

There exists an interaction between subject gender and character gender.

Male and female subjects have the same perception of symmetrical and asymmetrical facial expressions.

The paper is organized as follows. The next section reviews past works establishing the asymmetry of human facial expressions and how it has been reflected in the synthesis of real-time facial expression and models of emotions. We then describe the experimental protocol in detail prior to examining how the above-mentioned questions are reflected in the evaluation results. We conclude by providing guidelines for designers of real-time virtual characters and proposing further research directions.

Related works

Asymmetry of human facial expression

Despite the intrinsic symmetry of the human skeleton and face with respect to the medial/sagittal plane, numerous experimental studies have reported with statistical significance that the expression of emotions is more intense on one side of the face [see the survey from Borod et al. (1998)]. It was initially reported in Sackeim, Gur, and Saucy (1978) that emotions are more intense on the left side, independently of the right or left handedness of the subjects. Given the brain organization of motor control, this characterizes a laterality effect with a greater involvement of the right brain hemisphere in emotional expression (Schwartz, Ahern, and Brown 1979). Asymmetry also spreads through the different time scales that are at play in the expression of emotions, from the small timing nuance of a smile (Ekman and Friesen 1982) to the longer-lasting emotional coloring that pervades emotional life (Cowie 2009).

Mixed emotions

As recalled in the Introduction, Norman et al. (2011) have demonstrated the existence of a bivalent state of mind, i.e. the simultaneous presence of emotions with positive and negative valences due to a conflicting situation between avoiding a source of danger and being attracted toward a potential reward. A recent review has confirmed the possibility of experimentally eliciting mixed emotions in humans, including happy-sad, fearful-happy, and positive-negative (Berrios, Totterdell, and Kellett 2015). Research on mixed emotions dates back to Kellogg (1915), where mixed emotions were understood as a transient state between opposite affects until one of them dominates. Their duration is sufficient to make such mixed emotions subjectively felt by the subject but also perceived by an external observer. As reported in Berrios, Totterdell, and Kellett (2015), mixed emotions are complex affective experiences that are more than the sum of the individual emotions involved; this makes them difficult to fit into existing dimensional models of emotion (see the section on the 3D emotion model below).

Facial expression synthesis

A large body of work has been performed on facial expressivity in general (Parke and Waters 2008) and in real-time interaction with autonomous virtual humans in particular (Vinayagamoorthy et al. 2006). Pelachaud and Poggi (2002) provide a rich overview of a large set of expressive means to convey an emotional state, including head orientation. Albrecht et al. (2005) describe a text-to-speech system capable of displaying emotion by radially interpolating key emotions within a 2D emotion space (hence the paper title “mixed feelings,” although the proposed approach does not simultaneously integrate two distinct emotions). Pelachaud (2009) acknowledges the whole-body scale of emotion expression and the temporal organization of its multimodal signals. She describes a componential approach where a complex expression is obtained by combining (symmetric) facial areas of source expressions, the final expression resulting from the resolution of potential conflicts induced by the context (e.g. due to social display rules). A study on emotion expression through gaze (Lance and Marsella 2010) stresses the relationship between a three-dimensional emotion model and multiple postural factors including the head and torso inclination and velocity (Patterson, Pollick, and Sanford 2001). The influence of autonomic signals such as blushing, wrinkles, or perspiration on the perception of emotions has been evaluated in de Melo, Kenny, and Gratch (2010). However, asymmetry is not acknowledged as a determinant factor in these studies.

3D emotion model and facial expression mapping

In the mid 1970’s, a number of psychologists took up the challenge of defining a dimensional model of emotion. An emotion space spanned by three independent dimensions has been proposed with slightly different terms depending on the authors (Averill 1975; Bush 1973; Russell 1980). In the present paper, we adopt the terms Valence, Arousal, and Dominance for these three axes. The Valence axis, also referred to as the Pleasure axis, represents the positivity or negativity of an emotion. The Arousal axis describes the degree of energy of the emotion. Finally, the Dominance axis indicates the feeling of power carried by the emotion. Based on this 3D emotion space, Ahn et al. (2013) described a linear mapping of an emotion expressed as a 3D point in VAD space to the activity of antagonist facial muscle groups to produce the corresponding facial expression. The identification of this mapping was initiated by exploiting the valuable resource of the Affective Norms for English Words (ANEW) (Bradley and Lang 1999), a study that experimentally quantified a set of 1034 English words, rated by male and female subjects, in terms of their expressed Valence, Arousal, and Dominance. Given a homogeneous sampling of eighteen ANEW words over the VAD space, including the six basic emotions, Ahn et al. were able to quantify each muscle group activity as a linear function of the three VAD emotion parameters. Asymmetric facial expressions can then be built either by introducing a small left-right bias for basic emotions, or by combining a different VAD emotion on each side of the face for more complex and ambivalent feelings.
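To make the idea of a linear VAD-to-muscle mapping concrete, the following minimal Python sketch evaluates one linear function per antagonist muscle group and applies it independently to each half of the face. It is only an illustration under stated assumptions: the muscle group names, the coefficients, and the rescaling of VAD coordinates to [-1, 1] are hypothetical and are not the values identified in Ahn et al. (2013).

```python
from typing import Dict, Tuple

# (valence, arousal, dominance), assumed here to be rescaled to [-1, 1].
VAD = Tuple[float, float, float]

# Placeholder coefficients (w_valence, w_arousal, w_dominance, offset) for
# each antagonist muscle group. These names and numbers are invented for
# illustration; they are NOT the mapping identified by Ahn et al. (2013).
MUSCLE_WEIGHTS: Dict[str, Tuple[float, float, float, float]] = {
    "lip_corner_up_vs_down": (0.8, 0.1, 0.0, 0.0),
    "brow_raise_vs_lower": (0.2, 0.5, -0.4, 0.0),
    "jaw_open_vs_closed": (0.0, 0.6, 0.0, 0.0),
}


def clamp(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Keep an activation inside the assumed valid range."""
    return max(lo, min(hi, x))


def muscle_activity(vad: VAD) -> Dict[str, float]:
    """Evaluate each muscle group as a linear function of the VAD point."""
    v, a, d = vad
    return {
        name: clamp(wv * v + wa * a + wd * d + offset)
        for name, (wv, wa, wd, offset) in MUSCLE_WEIGHTS.items()
    }


def face_from_sides(left: VAD, right: VAD) -> Dict[str, Dict[str, float]]:
    """Drive each half of the face with its own VAD point.

    Passing the same point twice yields a symmetric expression; passing two
    different points yields an asymmetric one.
    """
    return {"left": muscle_activity(left), "right": muscle_activity(right)}
```

With such a structure, a symmetric expression and an ambivalent one differ only in whether the two sides receive the same VAD point, which is what keeps the approach compatible with real-time use.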

Materials and methods

Participants

We recruited 58 naïve participants (33 M, 25 F), aged 18 to 32 (mean 24, standard deviation 2.51), through the EPFL-UNIL online platform. Each participant received a monetary reward of 10 CHF for 30 minutes. Their degree of familiarity with 3D avatars and real-time characters ranged from null to very familiar.

Materials

Eight facial expressions encompassing three basic emotions and five ambivalent feelings were produced for both a male and a female 3D virtual character with the VAD-based approach (Ahn et al. 2013). The static images were rendered from the 3D models in Unity3D with the same white background as the evaluation screen (Figure 4, right), the same set of virtual lights, and the same virtual camera.

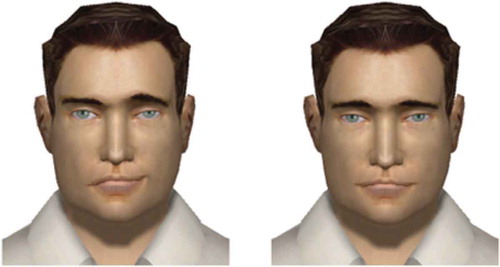

Figure 2. Facial expressions for a basic emotion (sadness). This asymmetric facial expression (left) is slightly amplified on the character’s left side of the face. It is built by biasing a single emotion (right).

Figure 3. Facial expressions for a complex emotion (vicious) combining two distinct VAD states for the asymmetric facial expression (left). The symmetric facial expression is obtained by averaging the VAD coordinates of the two asymmetric components (right).

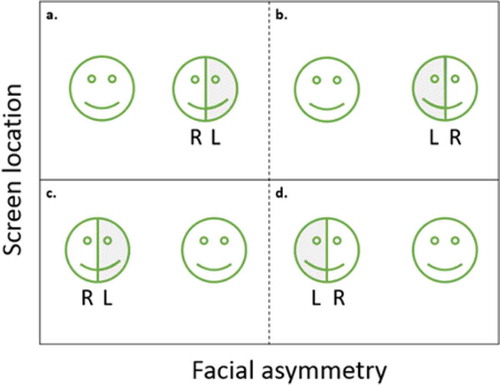

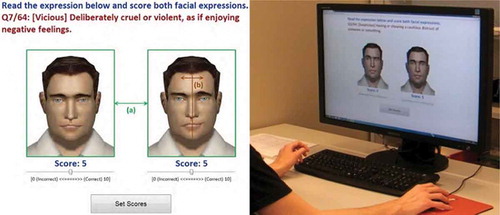

Figure 4. (left) One of the four illustrations of a keyword showing a symmetric and an asymmetric facial expression on the male agent. The other three combinations for this agent and keyword were obtained by swapping the location of the two faces (a) and by mirroring the left and right sides of the asymmetrical face (b). (Right) The stimuli display setup on a 30” screen.

The eight chosen facial expressions were characterized by a single keyword or a short expression, together with an additional short definition to reduce ambiguity. The keywords associated with the basic emotions were sadness, peaceful, and fear, while the keywords and expressions used for the more complex feelings were smirk, vicious, “pretend to be cool,” “too good to be true,” and suspicious. Their associated short definitions are provided in the Appendix (Table 5), while illustrations of all emotions are gathered in the Appendix (Table 7).

Table 1. Screen location influence (p-value).

Table 2. Side of the facial asymmetry influence (p-value).

Table 3. Subject gender and character gender (p-value), different scores of symmetry and asymmetry given by male and female subjects.

Table 4. Subject gender and asymmetry (p-value).

Table 5. Keywords and the associated short definitions.

Table 6. VAD coordinates of ANEW keywords exploited for building the asymmetric expressions. LR indicates the left-biased asymmetric expression.

Table 7. One of the four combinations of each of the eight keywords for the male and female agent’s facial expressions. Facial expression stimuli (one of the four possible combinations per character) for the eight emotions:

For a basic emotion such as sadness, the asymmetric facial expression (Figure 2, left) is built by biasing the symmetric one (Figure 2, right). Both possible asymmetric biases (left-intense vs. right-intense) are compared to the unbiased symmetric expression. For a complex feeling described by the keyword vicious (Figure 3), the asymmetric facial expression (Figure 3, left) is built by combining two distinct emotions, namely the one corresponding to the ANEW word jealousy on the left side (image left) and the one corresponding to the ANEW word pleasure on the right side. The symmetric facial expression (Figure 3, right) is obtained from the average VAD value of the two asymmetric components. Both this asymmetric facial expression and its mirror image are compared to the control condition (symmetric). Table 6 in the Appendix gathers the VAD coordinates of all ANEW keywords used to build the basic and complex emotions.
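The construction of the three stimuli for a complex keyword (asymmetric, mirrored asymmetric, and symmetric control) can be sketched in a few lines of Python. The coordinates below are placeholders chosen only to make the arithmetic visible on an assumed 1 to 9 rating scale; they are not the ANEW ratings of jealousy and pleasure, and the function names are hypothetical.

```python
from typing import NamedTuple, Tuple


class VAD(NamedTuple):
    valence: float
    arousal: float
    dominance: float


def average(a: VAD, b: VAD) -> VAD:
    """Symmetric control: component-wise mean of the two side emotions."""
    return VAD(*((x + y) / 2.0 for x, y in zip(a, b)))


def mirror(pair: Tuple[VAD, VAD]) -> Tuple[VAD, VAD]:
    """Mirrored stimulus: swap the emotions assigned to the two sides."""
    left, right = pair
    return (right, left)


# Placeholder coordinates (NOT the actual ANEW values).
negative_component = VAD(valence=2.0, arousal=6.0, dominance=3.0)
positive_component = VAD(valence=8.0, arousal=5.0, dominance=6.0)

asymmetric = (negative_component, positive_component)  # (left, right)
mirrored = mirror(asymmetric)
symmetric_control = average(*asymmetric)
```

Note how the averaged valence of the control (5.0 with these placeholders) sits at the midpoint of the scale although both components are strongly valenced. This is the smoothing effect of a scalar valence that motivates driving each side of the face separately.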

Figure 4 (left) depicts one of the four illustrations of an emotional keyword for the male character. The other three combinations for this agent were obtained by swapping the location of the two faces [Figure 4(a), left] and by mirroring the left and right sides of the asymmetrical face [Figure 4(b), left]. The illustration also features the instruction to the subject (in blue) at the top of the screen. Below this line (in red) were visible: (1) an index of the current evaluation with respect to the total of 64; (2) a keyword in square brackets; and (3) a short definition using well-known words. Below each facial expression, a continuous scale from 0 (= incorrect) to 10 (= correct) was provided with an initial value of 5. The “set score” button at the bottom of the screen allowed the subject to move to the next illustration only after both scores had been edited. Each illustration was presented on a 30” (76 cm) diagonal screen where each facial expression occupied a surface of 16.6 cm × 13.55 cm (6.5” × 5.3”) (Figure 4, right).

Experimental design and procedure

Subjects were asked to score both symmetric and asymmetric facial expressions for eight emotional keywords: sadness, peaceful, fear, smirk, vicious, “pretend to be cool,” “too good to be true,” and suspicious. Among these eight keywords, sadness, peaceful, and fear are labeled as basic emotions and the others are labeled as complex emotions. We also instructed subjects to spend around 25 seconds per question and to pay attention to the correspondence between the given emotional keyword and the conversational agent’s facial expression rather than to the graphics rendering of the agent (Figure 4).

For each emotional keyword, both virtual character genders were used for displaying symmetric and asymmetric expressions. Each keyword was presented in the four combinations obtained by swapping the screen location of the two faces (Figure 4, a/c and b/d) and by mirroring the left and right sides of the asymmetrical face (Figure 4, a/b and c/d). In total, eight emotional keywords times four combinations times two character genders result in sixty-four questions that were shown in a randomized order. We only enforced an alternation of male/female character gender to minimize the gender influence. A white screen was displayed for 2 seconds between trials to minimize any influence of the previous trial.
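The trial structure described above (8 keywords × 2 screen layouts × 2 asymmetry sides × 2 characters = 64 trials, randomized with alternating character gender) can be reproduced with the following sketch. The shuffling strategy, i.e. shuffling each character’s 32 trials separately and then interleaving them, is only one possible way to satisfy the alternation constraint and is an assumption; the field names are hypothetical.

```python
import random
from itertools import product

KEYWORDS = [
    "sadness", "peaceful", "fear",                       # basic emotions
    "smirk", "vicious", "pretend to be cool",
    "too good to be true", "suspicious",                 # complex emotions
]
CHARACTERS = ("James", "Kamila")                         # male, female
FACE_POSITIONS = ("asymmetric_on_left", "asymmetric_on_right")
ASYMMETRY_SIDES = ("original", "mirrored")


def build_trials(seed=None):
    """Return 64 trials in random order with alternating character gender."""
    rng = random.Random(seed)
    blocks = []
    for character in CHARACTERS:
        # 8 keywords x 2 positions x 2 asymmetry sides = 32 trials.
        block = [
            {"character": character, "keyword": k,
             "position": p, "asymmetry": s}
            for k, p, s in product(KEYWORDS, FACE_POSITIONS, ASYMMETRY_SIDES)
        ]
        rng.shuffle(block)
        blocks.append(block)
    rng.shuffle(blocks)  # randomly pick which character appears first
    # Interleave the two shuffled blocks so that genders strictly alternate.
    return [trial for pair in zip(*blocks) for trial in pair]
```

Calling `build_trials(seed=42)` yields a reproducible sequence of 64 dictionaries; omitting the seed gives a fresh order for each participant.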

Statistical analysis and results

In the present analysis we examine whether:

The screen location of the facial expression influences the subject’s decision.

The side of the asymmetry (left-right vs right-left) induces a different perception of the displayed emotion.

There exists an interaction between subject gender and character gender.

Male and female subjects have the same perception of symmetrical and asymmetrical facial expressions.

Screen location

First we examine the potential influence of the screen layout. As expected, no significant difference was observed among the ratings of any emotion when presented on the left or the right side of the screen (p-values are listed in the Appendix, Table 1).

Side of the facial asymmetry

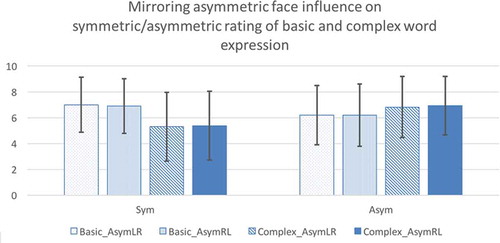

Then we examine the potential influence of the face side of the asymmetry. Figure 5 depicts the scores obtained for the two variants of asymmetric expressions for basic emotions and complex emotions. A paired-samples t-test was conducted to determine whether the differences are significant. No significant differences were observed for the present choice of virtual characters and emotions (p-values in Table 2).
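For readers unfamiliar with the paired-samples t-test, the sketch below computes its statistic with the Python standard library only. The scores are made up for illustration and are not data from this study. Obtaining the p-value additionally requires the Student t distribution with n − 1 degrees of freedom, which a statistics package such as SciPy (`scipy.stats.ttest_rel`) provides.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Return (t statistic, degrees of freedom) for paired samples x and y."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Made-up ratings given by the same five subjects to the two mirrored
# variants of one asymmetric expression (0 to 10 scale).
left_intense = [6.0, 7.5, 5.0, 8.0, 6.5]
right_intense = [5.5, 7.0, 5.5, 7.5, 6.0]

t_value, dof = paired_t(left_intense, right_intense)
```

The test is paired because each subject rated both variants, so the analysis operates on per-subject differences rather than on two independent groups.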

Subject gender and character gender

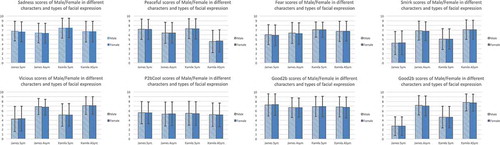

We examine here the potential influence of the subject’s gender and the virtual character’s gender (Male: James, Female: Kamila). For all emotional words, the results of a Welch’s t-test indicate that there is no significant difference in symmetric/asymmetric ratings with respect to subject gender or virtual character gender (Figure 6; Appendix, Table 3).
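Welch’s t-test is suited to comparing two independent groups of unequal size and variance, as is the case for the 33 male and 25 female subjects. The following standard-library sketch computes its statistic and the Welch-Satterthwaite degrees of freedom on made-up scores (not data from this study); the p-value would again come from the Student t distribution, for instance via `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    na, nb = len(a), len(b)
    # Squared standard error of each group mean (sample variance / n).
    sa, sb = variance(a) / na, variance(b) / nb
    t = (mean(a) - mean(b)) / math.sqrt(sa + sb)
    dof = (sa + sb) ** 2 / (sa ** 2 / (na - 1) + sb ** 2 / (nb - 1))
    return t, dof


# Made-up ratings from two independent groups of different sizes.
group_a = [5.0, 6.0, 7.0, 8.0]
group_b = [4.0, 5.0, 6.0]

t_value, dof = welch_t(group_a, group_b)
```

Unlike the classical two-sample t-test, no pooled variance is used, so the degrees of freedom are generally not an integer.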

Subject gender and asymmetry

Male and female subjects evaluated the eight emotional words consistently (Appendix, Table 4). For the basic emotional words sadness and peaceful, the symmetric facial expression was rated significantly higher than the asymmetric one (p < 0.05). In contrast, for the complex words smirk, vicious, and suspicious, both male and female subjects gave higher ratings to the asymmetric facial expression (p < 0.05). For the basic emotion word fear and the complex emotion word “pretend to be cool” (P2bCool), both subject genders perceived the symmetric and asymmetric expressions similarly, with no significant difference (p > 0.05). For the complex emotion word “too good to be true” (Good2b), the symmetric facial expression was preferred over the asymmetric one by both male and female subjects.

Discussion and conclusion

Based on the results presented in the previous sections, we can provide some refined guidelines compared to Ahn et al. (2013) for designing intuitive user interfaces that leverage the automated expression of emotions. We first confirmed that the experimental evaluation protocol was not biased by the location of the facial expressions on the screen by showing that this factor had no influence on the ratings. We then investigated human sensitivity to the side of the face carrying the asymmetry in facial expressions of basic and complex emotions, and found no statistically significant evidence that the expression of emotions was perceived more intensely on one side of the face. This result is useful because, despite the observations gathered on real humans (Borod, Haywood, and Koff 1997), the design of virtual characters does not need to take this factor into consideration for the level of detail and lighting conditions that we used. Then we studied whether the gender of the subjects and of the virtual characters had an influence on the evaluation of facial expressions. Likewise, the results showed no influence. Finally, when examining in more detail the influence of asymmetry, we can refine the guidelines for embodied conversational agents as follows:

Basic emotions should still be designed with symmetry, otherwise there is a risk of suggesting an ambivalent emotion.

Asymmetry is successful at conveying only some of the complex emotions. We conjecture that, due to their transient nature (Berrios, Totterdell, and Kellett 2015), mixed emotions may not be optimally conveyed in a static picture. In particular, we observe that complex emotions involving a closed mouth were much better rated than those with a partially open mouth, which may appear less believable when the time dimension is missing.

In the future, further experimental studies should: (1) include a greater variety of synthetic characters to reduce confounding factors linked to the numerous character attributes; and (2) integrate a short-term temporal dimension to highlight the transient nature of mixed emotions. We believe the integration of time should reinforce the expressive strength of asymmetry in more classes of complex expressions, e.g. those including a component of surprise that involves the opening of the mouth.

Acknowledgments

The authors wish to thank Mireille Clavien for the design of the virtual male and female 3D characters, Stéphane Gobron and Quentin Silvestre for their contributions to the Cyberemotions chatting system software architecture, and Dr Arvid Kappas for fruitful discussions on the evaluation of facial asymmetry.

Funding

This work was supported by the EU FP7 CyberEmotions Project (grant 231323).

References

- Ahn, J., S. Gobron, D. Garcia, Q. Silvestre, D. Thalmann, and R. Boulic. 2012. An NVC emotional model for conversational virtual humans in a 3D chatting environment. In Proceedings of the 7th International Conference on Articulated Motion and Deformable Objects (AMDO 2012), Port d’Andratx, Mallorca, Spain, July 11–13, 2012, Lecture Notes in Computer Science 7378, 47–57. Heidelberg, Berlin: Springer.

- Ahn, J., S. Gobron, D. Thalmann, and R. Boulic. 2013. Asymmetric facial expressions: Revealing richer emotions for embodied conversational agents. Computer Animation and Virtual Worlds 24 (6):539–51. doi:10.1002/cav.1539.

- Albrecht, I., M. Schröder, J. Haber, and H.-P. Seidel. 2005. Mixed feelings: Expression of non-basic emotions in a muscle-based talking head. Virtual Reality 8 (4):201–12. doi:10.1007/s10055-005-0153-5.

- Averill, J. R. 1975. A semantic atlas of emotional concepts. JSAS Catalog of Selected Documents in Psychology 5 (330):1–64.

- Berrios, R., P. Totterdell, and S. Kellett. 2015. Eliciting mixed emotions: A meta-analysis comparing models, types and measures. Frontiers in Psychology 6 (428). doi:10.3389/fpsyg.2015.00428.

- Borod, J. C., C. S. Haywood, and E. Koff. 1997. Neuropsychological aspects of facial asymmetry during emotional expression: A review of the normal adult literature. Neuropsychology Review 7 (1):41–60.

- Borod, J. C., E. Koff, S. Yecker, C. Santschi, and J. M. Schmidt. 1998. Facial asymmetry during emotional expression: Gender, valence, and measurement technique. Neuropsychologia 36 (11):1209–15. doi:10.1016/S0028-3932(97)00166-8.

- Bradley, M. M., and P. J. Lang. 1999. Affective Norms for English Words (ANEW): Stimuli, instruction manual and affective ratings (Tech. Report C-1). Gainesville: University of Florida, Center for Research in Psychophysiology.

- Bush, L. E. 1973. Individual differences multidimensional scaling of adjectives denoting feelings. Journal of Personality and Social Psychology 25 (1):50–57. doi:10.1037/h0034274.

- Cowie, R. 2009. Perceiving emotion: Towards a realistic understanding of the task. Philosophical Transactions of the Royal Society B: Biological Sciences 364 (1535):3515–25. doi:10.1098/rstb.2009.0139.

- de Melo, C. M., P. Kenny, and J. Gratch. 2010. Influence of autonomic signals on perception of emotions in embodied agents. Applied Artificial Intelligence 24 (6):494–509. doi:10.1080/08839514.2010.492159.

- Ekman, P. 1971. Universals and cultural differences in the judgments of facial expressions of emotion. Nebraska Symposium on Motivation 5 (330):207–83.

- Ekman, P., and W. V. Friesen. 1982. Felt, false, and miserable smiles. Journal of Nonverbal Behavior 6 (4):238–52. doi:10.1007/BF00987191.

- Gobron, S., J. Ahn, D. Garcia, Q. Silvestre, D. Thalmann, and R. Boulic. 2012. An event-based architecture to manage virtual human non-verbal communication in 3D chatting environment. In Proceedings of the 7th International Conference on Articulated Motion and Deformable Objects (AMDO 2012), Port d’Andratx, Mallorca, Spain, July 11–13, 2012, Lecture Notes in Computer Science 7378, 58–68. Heidelberg, Berlin: Springer.

- Gratch, J., and S. Marsella. 2005. Lessons from emotion psychology for the design of lifelike characters. Applied Artificial Intelligence 19:215–33. doi:10.1080/08839510590910156.

- Ichim, A. E., S. Bouaziz, and M. Pauly. 2015. Dynamic 3D avatar creation from hand-held video input. ACM Transactions on Graphics 34 (4):45:1–45:14. doi:10.1145/2766974.

- Kellogg, C. E. 1915. Alternation and interference of feelings. Princeton, N. J., and Lancaster, Pa.: Psychological review company.

- Lance, B., and S. Marsella. 2010. The expressive gaze model: Using gaze to express emotion. IEEE Computer Graphics and Applications 30 (4):62–73. doi:10.1109/MCG.2010.43.

- Norman, G. J., C. J. Norris, J. Gollan, T. A. Ito, L. C. Hawkley, J. T. Larsen, J. T. Cacioppo, and G. G. Berntson. 2011. Current emotion research in psychophysiology: The neurobiology of evaluative bivalence. Emotion Review 3 (3):349–59. doi:10.1177/1754073911402403.

- Parke, F. I., and K. Waters. 2008. Computer facial animation, 2nd ed. AK Peters Ltd.

- Patterson, H. M., F. E. Pollick, and A. J. Sanford. 2001. The role of velocity in affect discrimination. Proceedings of the 23rd Annual Conference of the Cognitive Science Society, Edinburgh, Scotland. 756–61.

- Pelachaud, C. 2009. Modelling multimodal expression of emotion in a virtual agent. Philosophical Transactions of the Royal Society B: Biological Sciences 364 (1535):3539–48. doi:10.1098/rstb.2009.0186.

- Pelachaud, C., and I. Poggi. 2002. Subtleties of facial expressions in embodied agents. The Journal of Visualization and Computer Animation 13 (5):301–12. doi:10.1002/vis.299.

- Russell, J. A. 1980. A circumplex model of affect. Journal of Personality and Social Psychology 39 (6):1161–78. doi:10.1037/h0077714.

- Sackeim, H. A., R. C. Gur, and M. C. Saucy. 1978. Emotions are expressed more intensely on the left side of the face. Science 202 (4366):434–36.

- Schwartz, G. E., G. L. Ahern, and S.-L. Brown. 1979. Lateralized facial muscle response to positive and negative emotional stimuli. Psychophysiology 16 (6):561–71. doi:10.1111/j.1469-8986.1979.tb01521.x.

- Vinayagamoorthy, V., M. Gillies, A. Steed, E. Tanguy, X. Pan, C. Loscos, and M. Slater. 2006. Building expression into virtual characters. In Eurographics 2006 - State of the art reports, 21–61. Eurographics, Switzerland: Eurographics Association. http://www.eg.org/EG/DL/Conf/EG2006/stars.