ABSTRACT

The job shop scheduling problem (JSSP) is an important NP-hard practical scheduling problem that has various applications in the fields of optimization and production engineering. In this paper an effective scheduling method based on particle swarm optimization (PSO) for the minimum makespan problem of the JSSP is proposed. New variants of the standard PSO operators are introduced to adapt the velocity and position update rules to the discrete solution space of the JSSP. The proposed algorithm is improved by incorporating two neighborhood-based operators to improve population diversity and to avoid early convergence to local optima. First, the diversity enhancement operator tends to improve the population diversity by relocating neighboring particles to avoid premature clustering and to achieve broader exploration of the solution space. This is achieved by enforcing a circular neighboring area around each particle if the population diversity falls beneath the adaptable diversity threshold. The adaptive threshold is utilized to regulate the population diversity throughout the different stages of the search process. Second, the local search operator based on critical path analysis is used to perform local exploitation in the neighboring area of the best particles. Variants of the genetic well-known operators “selection” and “crossover” are incorporated to evolve stagnated particles in the swarm. The proposed method is evaluated using a collection of 123 well-studied benchmarks. Experimental results validate the effectiveness of the proposed method in producing excellent solutions that are robust and competitive to recent state-of-the-art heuristic-based algorithms reported in literature for nearly all of the tested instances.

Introduction

Job shop scheduling problem (JSSP) is a well-known practical optimization problem that is crucial in the planning and organization of industrial systems. JSSP is one of the most challenging scheduling problems due to the various constraints that are inevitable in practical applications. The objective of JSSP is to determine a schedule (the order of processing jobs over machines) in the shop so that a specified design criterion is optimized. The minimization of the total completion time of all operations known as the makespan (Cmax) of the production sequence is mostly investigated in the literature. The minimization of the makespan reduces the production time and cost, increases resource utilization, and thus leads to high efficiency production systems. Another important criterion is the minimization of the average job tardiness which designates the ability to meet specific production due dates that exist in real manufacturing systems. JSSP has been a vigorous research area since it was first presented by Fisher and Thomson in 1963 and it was shown to be NP-complete (Garey, Johnson, and Sethi Citation1976). For a typical JSSP the number of possible solutions (both feasible and infeasible) is

. Therefore, exhaustive search of the solution space is computationally expensive even for small-sized problems. Many approaches employing mathematical formulations and approximation methods have been presented. Exact methods, such as branch and bound (Heilmann Citation2003) and dynamic programming (Lorigeon, Billaut, and Bouquard Citation2002) have been efficient in solving small-sized instances of the problem. Though, these methods are computationally expensive for solving medium or large instances. Therefore, many approximate and heuristic strategies have been proposed to overcome the shortcomings of exact enumeration techniques and to find high-quality solutions in an acceptable computational time. Lagrangian relaxation (Chen and Luh Citation2003; Kaskavelis and Caramanis Citation1998), and Tabu search (TS) (Geyik and Cedimoglu Citation2004; Watson et al. Citation2003) have made significant achievements in improving the solution quality in JSSP.

In recent years, nature-inspired heuristics have emerged and have been applied successfully to solve difficult JSSP that are hard to solve by traditional techniques. Intelligent optimization algorithms such as ant colony optimization (Neto and Godinho Filho Citation2013; Zhang et al. Citation2006), artificial bee colony (ABC) (Banharnsakun, Sirinaovakul, and Achalakul Citation2012; Chong et al. Citation2006), genetic algorithms (GA) (Amirthagadeswaran and Arunachalam Citation2006; Liu, Tsai, and Chou Citation2006), particle swarm optimization (PSO) (Lian, Jiao, and Gu Citation2006; Xia and Wu Citation2006), simulated annealing (SA) (Suresh and Mohanasundaram Citation2006), neural networks (Yu and Liang Citation2001), and memetic algorithm (MA) (Gao et al. Citation2011) were reported to find optimal/near optimal solutions within reasonable computational time. Nevertheless, each metaheuristic has its own strength and weakness. Some of these methods can achieve excellent effectiveness and efficiency but require extensive implementation efforts and their performance is significantly dependent on the initial parameter selection and structure. PSO is a computational paradigm that efficiently utilizes the distributed and parallel computing capabilities of living swarms in nature (Eberhart and Kennedy Citation1995). The foremost advantage of PSO is its fast convergence, ease of implementation, and robustness which compares favorably with other optimization methods. Due to the attractive features of PSO, it has been applied to a wide-range of optimization problems including JSSP. Liao, Tseng, and Luarn (Citation2007) extended the discrete version of PSO to solve the flowshop scheduling problems. Yongxian, Xiaotian, and Jinfu (Citation2008) established a job shop scheduling model based on the PSO and investigated the problem of optimized execution of operations under limited resources. Tseng and Liao (Citation2008) have exploited the PSO algorithm to solve the problem of the scheduling jobs on parallel machines with a total tardiness objective. Liu et al. (Citation2011) proposed a multi-objective optimization model based on PSO to minimize the bottleneck machines’ makespan and the total products’ tardiness for machine tool job shop scheduling.

However, PSO lacks the ability to achieve good exploration-exploitation balance as it cannot effectively explore the whole solution space and possibly converge prematurely to local optima. Hybrid algorithms that combine two or more meta-heuristic techniques were developed to improve effectiveness and efficiency. Hybrid approaches aim to improve the performance by combining the advantages of different algorithms to improve both global and local search capabilities. Motivated by these perspectives, various hybrid PSO-based algorithms have been reported to improve the solution quality of the JSSP. Sha and Hsu (Citation2006) proposed a PSO-TS hybrid algorithm. Tasgetiren et al. (Citation2006) presented a hybrid method based on PSO and differential evolution and enhanced with an efficient local search based on variable neighborhood search (VNS). Ge, Du, and Qian (Citation2007) utilized the efficient global search capability of PSO with the great ability of escaping local minima of SA by introducing an algorithm called hybrid evolutionary algorithm. In another research effort, Ge et al. (Citation2008) introduced a hybrid algorithm incorporating PSO and artificial immune system (AIS) to solve the minimum makespan JSSP. Another hybrid swarm intelligence algorithm (MPSO) consisting of PSO, SA, and a multi-type individual enhancement scheme was developed by Lin et al. (Citation2010). Tang et al. (Citation2010) introduced a hybrid algorithm combining PSO and newly proposed evolutionary genetic operators to solve the JSSP. The algorithm was found to outperform regular standalone PSO and GA. Another PSO-GA hybrid algorithm was proposed by Liu et al. (Citation2015). In their work, the PSO algorithm is redefined and modified by introducing genetic operators such as crossover and mutation to update particles in the population. Although most of these algorithms attain different mechanisms to escape local optima and premature convergence, no single algorithm is proven to be successful for finding an optimal solution for all problem sizes and complexities. In additions, most of the genetic and PSO operators utilized in these algorithms do not make use of knowledge of the JSSP problem domain. This may lead to the construction of infeasible solutions and inefficient exploration of the solution space.

In this paper, an improved PSO-based algorithm for the JSSP is introduced. The proposed algorithm incorporates several features to address drawback of prior algorithms. The main contributions of this work are as follows:

The conventional PSO velocity and position operators are modified making use of the knowledge of the JSSP domain.

New neighborhood-based operators are proposed to improve the population diversity through the search process. Neighborhood-based operators improve the search process by relocating neighboring particles to achieve broader exploration of the solution space. The neighborhood-based operators are adaptive to regulate the population diversity thru the different stages of the search process.

An efficient neighborhood-based local search operator is introduced to perform local exploitation nearby best particles.

Specially designed genetic operators are utilized to evolve stagnated particles in the swarm.

The structure of this paper is organized as follows: in the next section, the mathematical formulation of the JSSP is presented. In the section “Particle swarm optimization”, the conventional PSO algorithm is provided. The improved PSO algorithm and the proposed genetic and neighborhood-based diversity operators are explained in sections “Proposed algorithm” and “Genetic and neighborhood-based operators”, respectively. Computational results for benchmark instances and comparisons to existing algorithms are reported in the section “Experimental results”. Finally, concluding remarks and future research are summarized in the section “Conclusions and future work”.

Job shop scheduling problem

A typical JSSP can be described as follows: There are

different jobs J = (J1, J2, …. Jn). Each job contains

operations that have to be processed through

different machines M = (M1, M2, ….. Mm). Each operation has to be performed without preemption on each machine for a predefined processing time. The order of processing machines is different from one job to another. The aim of JSSP is to find the optimal schedule and the starting time on each machine so that one or more design criteria are optimized. In this study, we attempt to find a schedule that minimizes the makespan of the jobs. That is the completion time of the last job processed in the system. The difficulty with JSSP is due to the high number of conjunctive and disjunctive constraints associated with real-world industrial applications. Two methods will be employed through this paper to formally model and represent solutions to the JSSP problem: the disjunctive graph model to specify the relative order of operations of each job processed on different machines and the Gantt chart to specify the set of start and completion times for all operations.

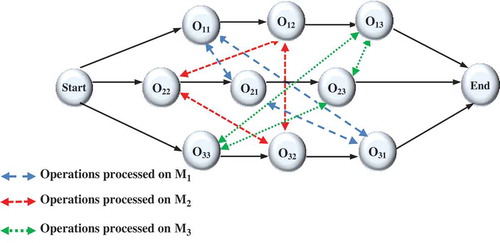

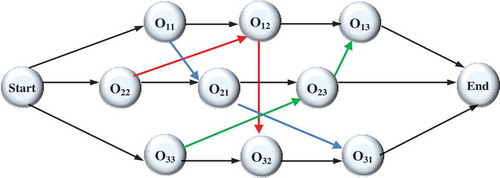

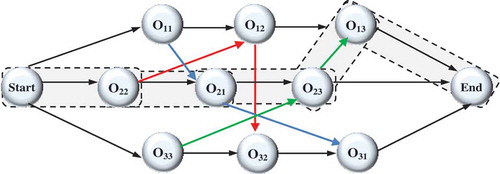

Consider the JSSP presented in . A typical JSSP may be characterized with a disjunctive graph G(N, A, E) as presented in . N = {Start, End, Oij for i = 1,2,…,

; j = 1,2,….

} is the set of operations to be scheduled (represented by the nodes in the graph). Oij characterizes the operation of the job i processed on machine j and is associated with a predefined processing time tij. Start and End are two dummy nodes with zero processing time used to characterize the beginning and the termination of the schedule, respectively. A is the set of conjunctive directed edges that correspond to precedence relations of operations of the same job (designated with directed solid lines). A = {(Start,O11), (O11,O12), (O12,O13), (O13, End), (Start,O22), (O22,O21), (O21,O23), (O23, End), (Start,O33), (O33,O32), (O32,O31), (O31, End)). E is the set of disjunctive non-directed edges which correspond to the precedence constraints of operations processed on the same machine the (denoted by dashed lines). E is the union of the subsets E1 = {(O11,O21), (O21,O31), (O31,O11)}, E2 = {(O12,O22), (O22,O32), (O32,O12)} and E3 = {(O13,O23), (O23,O33), (O33,O13)}, where subset Ej designates edges which connect the operations processed on a particular machine j. The aim of the schedule is to determine the sequence of the operations processed on every machine, i.e. to find the directions of the non-directed edges that construct a path that traverse all nodes in each edge subset. A candidate schedule to the

JSSP is presented in . The critical path is defined as the longest path from the Start to the End node. Consequently, the length of critical path governs the longest completion time of the schedule.

Table 1. An example of JSSP.

Given any feasible schedule, we can determine the operation sequences and the completion time on each machine. The following symbols will be used throughout this paper.

Oij: The operation of job i processed on machine j.

tij: The processing time of operation Oij

S(Oij): The start time of operation Oij.

C(Oij): The completion time of operation Oij.

Cmax: maximum makespan.

The JSPS problem based on minimizing the maximal makespan can be described as follows.

Subject to:

where D denotes a large positive number. and

designate two index coefficient defined as follows:

Particle swarm optimization

PSO is a novel metaheuristic based on the phenomenon of cooperative intelligence proposed by Eberhart and Kennedy in 1995. PSO is derived from the social behavior of insect colonies and animal societies that live and interact in large groups. In particular, it mimics swarming actions observed in bird flocking or fish schooling. PSO is a population-based algorithm consisting of a group of processing elements (swarm) conceptualized as particles. Each particle, explores the solution space of the optimization problem to search for the optimum solution. Thus, each particle position characterizes a candidate solution for the problem. The position of the ith particle in D-dimensional search space is represented as Xi = (xi1, xi2,….., xiD). The particle velocity characterizes the position variation between consecutive iterations and is represented by Vi = (vi1, vi2, …….., viD). Evolution is guided with a fitness function defined to evaluate the goodness of the acquired solution. The fitness value is calculated for each particle in the swarm and is compared to the fitness of the best previous value for that particular particle and to that of the best particle in the swarm

. Similar to other nature-inspired algorithms, PSO is driven by two fundamental processes: the deviation process, which enables exploring different regions of the solution space, and the selection process, which assures the exploitation of previous information about the search space based on the defined fitness function. Each particle moves according to the velocity determined by the distance between the prior position of the particle and the

,

values. The main objective of the particle velocity are to keep the particle moving toward the global and local best solutions while maintaining inertia to avoid becoming trapped in local optima. The particles evolve by adjusting their velocity and position values according to the following equations:

where {i = 1, 2,…….,N}, and N is the swarm size. Constants c1 and c2 are cognitive and social parameters ∈[0, 1]. Appropriate fine-tuning of c1 and c2 results in faster convergence and alleviation of local minima. r1 and r2 are two random numbers, with uniform distribution U[0,1]. Vmax is the maximum velocity value that confines the velocity vector, such that -Vmax ≤ Vi(t + 1) ≤ Vmax . In equation (7), the first term ω represents the inertia of previous velocity. Proper adjustment of ω provides a balance between global and local explorations and exploitations. Experimental results indicate that the PSO performance is improved if the inertia is initially set to a large value to promote global exploration at the early stages of the search process. This value should be gradually decreased to get more refined solutions as we approach the end of the search process. This can significantly reduce the number of iterations required to find a satisfactorily optimal solution. The inertia weight approach proposed by Eberhart and Shi (Citation2001) is utilized in this work as follows:

where ωmin and ωmax are the minimum and maximum values of , tmax is the maximum number of iterations, and t is the current iteration. The second term in (7) is the “cognitive” component demonstrating the particular knowledge of the particle itself. The last term in (7) is the “social” component, representing the collaboration between all particles in the swarm. The iterative steps will continue until the termination condition is satisfied.

Proposed algorithm

Particle encoding

Defining the appropriate particle representation is essential to develop an appropriate problem mapping and to construct an efficient PSO-based schedule. Determining a schedule is equivalent to finding the order of the operations processed on each machine. Operation-based (permutation) encoding method is adopted in this paper to encode a schedule as a sequence of operations (Tsujimura, Mafune, and Gen Citation2001). For a JSSP, the length of a particle is

containing a non-partitioned combination of integers from 1 to n. This encoding schema uses integer encoding to encode the schedule as a sequence of jobs and operations. Since each job consists of different operations that have to be processed on the m machines, each job number appears exactly m times in the particle. Each gene in particle denotes a specific operation of one of the n jobs. By examining the particle from left to right, the kth occurrence of a job number refers to the kth operation in the technological sequence of this job. For example, for the

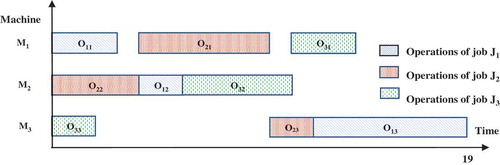

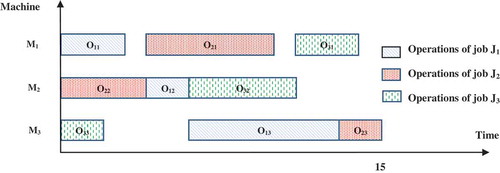

JSSP described in and , one possible solution is presented in . The schedule can be represented by the particle [2 3 1 2 2 1 3 1 3], where 1, 2, and 3 denote the corresponding jobs J1, J2,and J3, respectively. The particle in the above example can be interpreted as follows: the first 2 in the particle represents to the first operation of job J2 to be processed first on machine M2. Then, the first 3 characterizes the first operation of job J3 to be processed on M3. Similarly, the first 1 corresponds to the first operation of job J1, which will be processed on M1. The second 2 corresponds to the second operation of job J2, which will be processed on machine M1. The third 2 in the particle corresponds to the third operation of job J2, which will be processed on machine M3. Therefore, the particle [2 3 1 2 2 1 3 1 3] is decoded into the following operation sequence [O22 O33 O11 O21 O23 O12 O32 O13 O31] as presented in . The prominent advantage of this encoding is that it guarantees that any permutation in the particle can be indirectly decoded into a feasible solution. Operations are processed on the corresponding machines consecutively at the earliest permissible time. The corresponding feasible schedule is represented by the Gantt chart shown in . Due to the precedence restrictions between different operations of the same job, idle time can occur in the Gantt chart between operations processed on the same machine.

Initial population

Conforming to the operation-based representation method, the particle is set as a string of integers in the range [1, n]. It must be assured that each job index occurs exactly m times in each particle. This process is repeated to construct the N particles in the swarm. The initial population can be acquired by many methods such as diverse insertion, shifting bottleneck, or random methods, etc. However, the initial population has a limited effect on the quality of the final solution; nevertheless, it may affect the convergence time.

Fitness function

PSO preserves a swarm of particles that are evaluated in every iteration. In the proposed framework, evolution is guided with a fitness function that assesses the goodness of the particles based on their ability to minimize the makespan calculated according to the description in the section “Job shop scheduling problem”. Particle fitness values are calculated in every generation. Lower fitness values correspond to superior particles that need to be conserved for the subsequent generations.

Updating feasible solutions by PSO operators

Conventional PSO velocity and position equations are modified to conform to the discrete solution space of the JSSP. The velocity index of particle i at iteration t is defined as a sequence of swap operations as follow:

where , represents job positions

1,

] and L represents the length of the swap list which is an integer

1, n]. After generating the velocity index

, the new particle velocity is computed using (7). The basic operations implemented for updating the particle velocity and position are explained below:

Subtraction between two positions: Assume that X1and X2 are two position vectors representing two different particles in the swarm. The subtraction between X2–X1 is a velocity vector. In (7) subtracting positions () and (

) result in two velocity vectors characterized as a sequence of swap operations. For example, consider the following values for the position vectors at iteration t:

[2 3 1 2 2 1 3 1 3],

[2 3 1 2 1 1 3 2 3] and

[3 3 1 2 3 1 2 1 2]

() =

means the required sequence of swap operations to reach the target particle

given the initial starting particle

). This is achieved by swapping the genes in positions 5 and 8 {

.

Similarly,

() = (

is the required sequence of swap operators to reach the particle

given the initial particle

. To achieve this, first genes in positions 1 and 7 are swapped {(1, 7)} to get

. Then genes in positions 5 and 9 {(5, 9)} are swapped to get

which is the target particle. It should be mentioned that the arrangement of the swap operators in V is important as the operators in the sequence act on the particle in order.

Addition between position and velocity: Assume a position vector X and a velocity vector V. A new position X’ = X + V is found by applying the sequence of swap operations of V to X.

For example if [2 3 1 2 2 1 3 1 3] and V = {(1, 3), (4, 6)} then X’ is obtained by exchanging the genes in positions 1 and 3 followed by exchanging the genes in positions 4 and 6. Consequently, the new position particle X’ = [1 3 2 1 2 2 3 1 3].

Addition between two velocities: let V1 and V2 be two velocity vectors. To compute V1 + V2, we perform the sequence of swap operations of V1, followed by the swap operations of V2. The arrangement of the swap operators in V is important as the operators in the sequence act on the particle in order. For example if V1 = {(1, 3), (4, 6)} and V2 = {(2, 5)} then the velocity vector V1 + V2 = {(1, 3), (4, 6), (2, 5)}

Multiplication: Let c be the learning coefficient and V be the velocity. Coefficient c indicates the probability of performing a particular swap operator from the velocity list on the position vector. In every iteration, constants c1 and c2 are generated randomly in the interval [0, 1] such that c1+ c2 ≤ 1. The particle will move towards the local best with probability c1, and will move towards the global best

with probability c2. Therefore, if c1> c2 then the term

in (7) will dominate and the velocity vector will be characterized by the movement towards

. Otherwise, the velocity will move towards

For example, consider the following values for a

JSSP at iteration t:

[2 3 1 2 2 1 3 1 3],

[2 3 1 2 1 1 3 2 3]and

[3 3 1 2 3 1 2 1 2]

{(2, 5), (7, 1)}, c1 = 0.7, c2 = 0.3, and

Termination condition

The iterative section of the algorithm will be repeated until the maximum number of iteration tmax is attained or the change in the fitness function does not exceed a certain threshold value. The algorithm terminates whenever one of these two conditions occurs. When the stopping criterion is met, the best particle in the swarm characterized by will be the recommended solution for the JSSP.

Genetic and neighborhood-based operators

Similar to other stochastic algorithms, premature convergence presents a major limitation for PSO during the search process. This is due to the fact that conventional PSO utilizes a deterministic attraction phase, in which particles are drawn to their local and global best values. This causes particles in the swarm to move rapidly to the same area of the search space increasing the similarities among the particles and hence decreasing the diversity of the population. Furthermore, it is often useful to search the neighborhood area of local optimal solutions as it may contain the global optimum. Motivated by these perspectives, genetic and two neighborhood-based search mechanisms are proposed in this section to enhance the population diversity and to perform local search.

Diversity enhancement operator

Different variants of the PSO algorithm have been reported in the literature to maintain swarm diversity and to avoid premature convergence. This is achieved through modifying the movement schema or the learning strategy of the particles. Riget and Vesterstrøm (Citation2002) proposed a diversity-guided PSO, called ARPSO, which describes a repulsion phase based on a modified velocity update model. Sun, Xu, and Fang (Citation2006) proposed a mutation operator conducted on the Pgbest to enhance the swarm diversity when it falls under a predefined threshold value. Arani et al. (Citation2013) introduced a territorial PSO algorithm with a new collision operator and a social interaction schema which guides the particles in the direction of the weighted average of their ‘‘elite’’ neighbors. In the proposed algorithm a variable neighborhood-based diversity enhancement mechanism is utilized to improve population diversity. This is achieved by enforcing a circular neighboring area with a pre-defined radius R around each particle if the diversity of the population is less than a variable threshold value δ(t). The diversity of the swarm is characterized in terms of the scatter index among the particles measured by the average mean square distance as follows:

If Diversity (t) < δ(t), we check for neighborhood overlap between neighboring particles. If the neighborhood of one particle collides with another particle

, a shifting operator operates on both of them according to Eq. (12) as presented in . The weaker particle (the particle with higher makespan) will be shifted to the distance of 2R opposite to the stronger particle to generate new particles

and

as follows:

The shifted particle replaces the original particle only if it has a better fitness value. Otherwise the particle remains unchanged. For example particle Pi will be updated as follows:

where Fi and Fj are the fitness values of the two neighboring particles, and Pi(t) and Pj(t) are the positions of two particles at iteration t. This neighborhood operator guarantees that weak particles keep a definite distance from strong ones so that the precipitous clustering of particles can be prevented and weak particles are guided to search other regions of the solution space. The variable threshold δ(t) used in the algorithm gives the ability to control the diversity of the population throughout the different stages of the search process. Intuitively, it is crucial for the algorithm to have greater population diversity in the initial stages of the search process to avoid premature convergence. However, the propensity for convergence accelerates at the final stages of the search process. Therefore, the diversity threshold will start with a big value that is decreased gradually as the algorithm reaches. In this paper, the exponential decay function is employed for δ(t) as it provides an efficient particle distribution during the search process defined as follows:

where is the initial diversity threshold and K > 0 is the exponential decay constant.

Local search operator

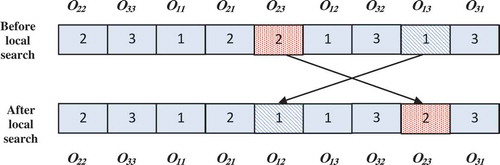

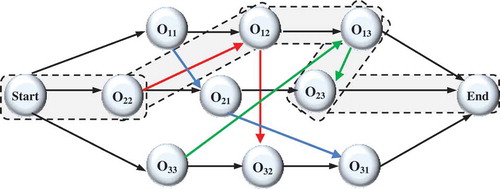

The local search process is imperative for efficient exploration of the solution space and avoiding getting trapped in local optima. The basic idea of the local search procedure is to transfer from one solution to another in the neighborhood of a candidate solution until an optimal solution is found. In this paper, neighbor particles are constructed based on critical path exploration. The makespan of a candidate solution is characterized by the length of its critical paths. Therefore, the makespan cannot be decreased without changing the present critical paths. The objective of the local search operator is to distinguish and break the existing critical paths to obtain a new schedule with a smaller makespan. A possible critical path can be characterized with a set of successive operations processed one by one without interruption and repetition from the start towards the end node. For example, if we consider the feasible schedule in , the sequence of operations O22→ O21→ O23→ O13, represent the critical path of the schedule as shown in . The Gantt chart presented earlier in designates the possible schedule of the JSSP. Idle time may exist in the Gantt chart between operations processed on the same machine due to the precedence constraints among operations of the same job. The makespan can be computed as the length of the critical path: Cmax = t22 + t21 + t23 + t13 = 4 + 6 + 2 + 7 = 19.

In this paper, the critical path is determined using the algorithm presented by Qing-Dao-Er-Ji and Wang (Citation2012). Any operation on the critical path is called a critical operation. It is possible to partition the critical path into a number of blocks in which each block is the arrangement of adjacent critical operations that are processed on a particular machine. In , the critical path is divided into three blocks, B1 = {O21} to be processed on M1, B2 = {O22} to be processed on M2 and B3 = {O23, O13} to be processed on M3. In the proposed algorithm, a local search operator based on the critical-path is employed to obtain new solutions by moving critical operations. The local search operator is performed on the global best particle and can be described as follows:

Step 1: Randomly select two operations α and β critical block Bj, where j

[1, m]. If a critical block contains only one operation then the local search operator cannot be applied to that block.

Step 2: Generate a new particle by exchanging the positions of operations α and β.

Step 3: Evaluate, if F(

then set

and terminate. Else go back to Step 1.

If we apply the local search operator to the feasible solution shown in , block B3 = {O23, O13} is the only critical block containing more than one operation. The exchange of the two critical operations is illustrated in . The distinctive graph and Gantt chart in and present the feasible solution and the critical path after the local search operator. Critical path operations after the local search are O22, O12, O13, O23 and new Cmax = t22 + t12 + t13 + t23 = 4 + 2 + 7 + 2 = 15.

Genetic operators

Genetic operators impose a significant computational overhead if applied to all particles in the swarm. Therefore, genetic operators such as crossover and selection are applied to particles in which their local best does not change for a designated number of iterations. The selection of the particles is based on a non-linear ranking selection schema as explained in the following subsections.

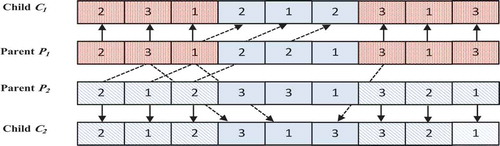

Crossover operator

Two-point crossover is executed on the stagnated particle and another particle selected from the population poll. The conventional crossover operator is modified to guarantee that the offspring represents a feasible solution to the JSSP. The proposed crossover operator is described as follows:

Step 1: Given an JSSP, select two candidate solutions P1 and P2, respectively.

Step 2: Select two random indices r1 and r2 in the range [1,] such that r1 < r2.

Step 3: Copy the genes before r1 and after r2 in P1 to the corresponding location in child C1. Similarly, copy the genes before r1 and after r2 in P2 to the corresponding location in child C2.

Step 4: Empty genes in C1 will be filled with genes of P2 in the order presented by P2. Genes in parent P2 are read from left to right. If the gene has already occurred in C1 m-times, then ignore it; otherwise, place the gene in the first empty location in C1. The same procedure is utilized to construct child C2.

Step 5: Evaluate C1 and C2, the child with a smaller makespan is to replace its parent in the current population.

An illustration of the crossover operator is presented in .

Non-linear ranking selection

Ranking methods employ the fitness function to map candidate solutions to a partially arranged set. All particles in the swarm are ranked from best to worst based on their fitness values. The selection probability of a particle (p) is calculated based on its rank (r) as follows:

Subject to:

where q ∈ [0, 1] is the probability of selecting the best individual and r is the rank of the individual defined as follows:

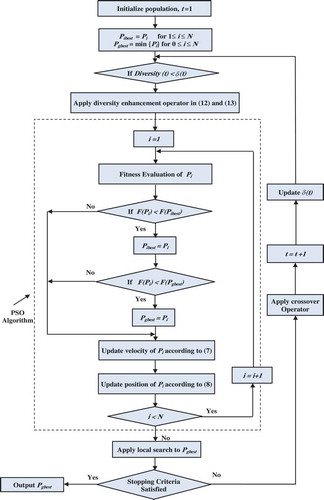

It can be seen that this selection probability doesn’t employ the absolute value of the fitness and hence it avoids fitness value scale transformations and prevents premature convergence. The proposed algorithm aims to balance the exploration and exploitation capabilities of the search process by containing both deterministic and stochastic components. The deterministic component in the algorithm is the iterative application of the conventional PSO. This component is helpful as it exploits the distributed capabilities of swarm intelligence to accelerate the convergence speed due to the attraction to preceding best particles and the global best particle. Whereas the stochastic component is present in the randomized selection, mutation, and crossover operators utilized in the neighborhood search phase. This component is beneficial in increasing the diversity of the swarm by adjusting the disparities between the particles. The main framework of the proposed PSO algorithm with neighborhood and genetic operators (PSO-NGO) is presented in the flowchart shown in .

Experimental results

The performance of the PSO-NGO was assessed using benchmarks taken from the Operations Research (OR) Library. In addition, to verify the efficiency and validity of our algorithm on larger scale problems, we have chosen the test set designed by Taillard (Citation1993) with sizes that correspond to real dimensions of practical industrial problems. In particular, a set of 123 instances from three classes of standard JSSP test problems were considered: 3 problems designed by Fisher and Thompson (Citation1963): referred as Ft06, Ft10, and Ft20, 40 problems designed by Lawrence (Citation1984): referred as La01–La40, and 80 problems designed by Taillard (Citation1993): referred as Ta01–Ta80. The dimension of these problem instances range from 6 to 100 jobs and 5 to 20 machines. The objective of the PSO-NGO was the minimization of makespan described in (1). Constant D that confirms the satisfaction of operation precedence constraints and machine constraints in equations (2) and (3) was set to 109. The proposed algorithm was implemented in C++ on an Intel Pentium IV 3GHz PC with 2GB of RAM. Due to the probabilistic nature of the algorithm and to ensure result consistency, 10 independent runs for each problem were performed to ensure meaningful results.

PSO and GA parameter setting

PSO parameters were chosen experimentally in order to get a satisfactory solution quality in an acceptable time span. Different parameter combinations from the PSO literature were examined (Eberhart and Shi Citation2001). During the preliminary experiment, four swarm sizes (N) of 10, 20, 50, and 100 particles were selected to test the algorithm. The outcome of N = 20 was superior and used for all further experiments. The maximal number of iterations was set to 200. The inertial weight ω was decreased linearly from ωmax to ωmin during iterations using equation (9). ωmax and ωmin were selected from the set {0.5, 0.7, 0.9} and {0.1, 0.3, 0.5}, respectively. The parameter combination that yielded the best results and that was used throughout the experiments is presented in .

Table 2. Parameter settings for PSO and GA.

Genetic parameters such as the crossover probability (Pc) and the probability of selecting the best individual (q) also affect the performance of PSO-NGO. To select better values for these two control parameters, we investigated the performance of PSO-NGO under variant Pc and q values. Pc and q were selected from the set {0, 0.2, 0.4, 0.6, 0.8, 1} and {0.1, 0.3, 0.5, 0.7, 0.9, 1.0}, respectively. Therefore, there are possible choices for the combination. Preliminary experiments demonstrated that Pc = 0.8 and q = 0.7 are the relatively best choices and were used throughout the experiments.

Diversity enhancement operator parameter setting

The neighborhood-based diversity enhancement mechanism enforces a circular neighboring area with a predefined radius R around each particle. Experimental tests were conducted to find the best value for R. Radius R was chosen to change from 0 to 10 with 0.1 steps. In the preliminary experiments all test instances were considered for finding the best value of R. Experiments were conducted 10 times for each test instance and the average percentage error (APE) was calculated as follows:

where is the average makespan of the 10 runs of the test instance and

is the value of the best known solution for the problem. The best results for all test instances were obtained when R was between 5.2 and 5.9. Furthermore, for high values of R, the performance of the algorithm would be degraded due to the fact that the particles would be stuck in the early stages of search process. Similarly, the performance of the algorithm is not satisfactory for low values of R since the particles would be trapped in local minima. Thus, it would be reasonable to use the value 5.6 for R.

Similarly, the initial diversity threshold and exponential decay constant (K) are used to adjust the diversity of the population throughout the different stages of the search process. It is essential to provide the particles with a greater diversity in the early stages of the search process to prevent them from prematurely clustering around local optima. Experimental tests have been conducted to obtain the best possible setting values for

and K. Test instances La21, La27, La29, La37, La39, and La40 were employed for this purpose.

was chosen to change from 0 to 5 with 0.1 steps and K was chosen to change from 0.1 to 2 with 0.1 steps. Each experiment was conducted 10 times for each test instance and the APE values were recorded. Experimental results demonstrated that that the minimum APE values for all test instances were obtained when

was between 2.5 and 3 and K was between 1.2 and 1.4. Therefore, the values

= 2.75 and K = 1.3 were used throughout the experiments.

Effect of different strategies

The proposed approach employs two strategies: neighborhood-based operators and genetic operators. To investigate the effect of these strategies, we studied the performance of the standard PSO, PSO with neighborhood-based operators (NPSO), PSO with genetic operators (GPSO), and the proposed PSO-NGO which includes both mechanisms. This will help to verify the effectiveness of these strategies separately. For the parameters, we have used the same settings as described in the sections “PSO and GA parameter setting” and “Diversity enhancement operator parameter setting”.

presents the name of the benchmark problem, the problem size, the best known solution to the problem (BKS), and the value of the best solution found by each strategy in the 10 replications. In addition, presents the APE calculated as presented earlier in (18) and the average percentage improvement (API) of the PSO-NGO against the other approaches (averaged over the 10 replications). The API is calculated as follows:

Table 3. Comparison of the different strategies: PSO, NPSO, GPSO, and PSO-NGO.

Where is the solution obtained by the proposed algorithm and H is the solution obtained by the approach compared against.

The PSO-NGO obtained the BKS for 41 test instances compared to 18, 34, and 29 for PSO, NPSO, and GPSO, respectively. The APE for the proposed PSO-NGO was 0.026 compared to 0.555, 0.189, and 0.350 for PSO, NPSO, and GPSO, respectively. The PSO-NGO achieved an API of 0.529, 0.163, and 0.324 over PSO, NPSO and GPSO.

From the comparison of NPSO with PSO, we can see that incorporating the neighborhood-based operators was effective for most test instances. NPSO achieved better results than stand-alone PSO in all test cases except for instances Ft06, Ft10, Ft20, La1- La15, and La29 where it achieved similar results. For La29, both NPSO and GPSO hardly improved the quality of solution. PSO performs better than GPSO on La29, while GPSO outperforms or achieves similar results to PSO for all the other test instances. By combining these two strategies, PSO-NGO obtains excellent performance. It outperforms other three PSO algorithms in most of the test instances. Specifically, for some of the high-dimensional problems using a single strategy can hardly achieve promising solutions such as in instances La37- La40 where both NPSO and GPSO fall into local minima, while PSO-NGO achieves satisfactory results. This is due to the fact that the neighborhood-based operators are improved variants of the conventional PSO algorithm that are based on the attraction to or

. They help to accelerate the convergence rate of PSO, but run the risk of losing swarm diversity. On the other hand, the genetic operators help to increase the swarm diversity. Yet, they can slow down the convergence rate especially for high-dimensional problems. The hybridization of these two strategies in PSO-NGO achieves the desired balance between the exploration and exploitation search abilities.

Comparison with other metaheuristics

Results obtained by the PSO-NGO were compared to results reported in the literature such as: results obtained by TS with shifting bottleneck (TSSB) (Pezzella and Merelli Citation2000), hybrid PSO (HPSO) (Sha and Hsu Citation2006), hybrid PSO with VNS(PSO-VNS) (Tasgetiren et al. Citation2006), a multiple-type individual enhancement PSO (MPSO) (Lin et al. Citation2010), best-so-far artificial ABC (Banharnsakun, Sirinaovakul, and Achalakul Citation2012), the PSO and AIS Hybrid (PSO-AIS) (Ge et al. (Citation2008), hybrid genetic algorithm (HGA) (Qing-Dao-Er-Ji and Wang Citation2012) and the MA (Gao et al. Citation2011). The parameters setting used for the proposed algorithm was reported earlier in the section “PSO and GA parameter setting”.

presents the computational results of the experiments performed on 43 benchmark instances: Ft06, Ft10, Ft20, and La01–La40, taken from the OR Library. The table includes the name of the benchmark problem, the size, BKS, the value of the best solution found by the proposed algorithm in the 10 replications, the best values reported for the other algorithms, the APE for each algorithm and the API of the proposed PSO-NGO against the other approaches. For small benchmark instances Ft06, Ft10, Ft20, and La01– La19, all algorithms attained the optimal solution. For relatively larger instances La16–La40, the performance of the proposed algorithm was better than or similar to other algorithms. According to , the PSO-NGO algorithm found the best known solution for 41 out of the 43 test instances. This is similar to the results obtained by HPSO and Best-so-far ABC and better than MPSO, PSO-AIS, HGA, and MA which found 35, 32, 33, and 33 best known solutions, respectively. In particular, the two instances where the PSO-NGO algorithm did not yield the optimal solution were La29 and La40. For instance La29 the result obtained by the PSO-NGO algorithm (1163) was similar to HPSO and was worse than the results obtained by the HGA (1160). For instance La40, the solution found by the PSO-NGO algorithm (1224) was similar to the result obtained by HPSO, PSO-VNS, and Best-so-far ABC. The APE for the proposed PSO-NGO was 0.026 compared to 0.026, 0.087, 0.087, 0.148, 0.028, 0.325, 0.176 and 0.330 for HPSO, PSO-VNS, MPSO, Best-so-far ABC, PSO-AIS, HGA, and MA, respectively. The PSO-NGO achieved an API of 0.006, 0.122, 0.001, 0.299, 0.161, and 0.304 over PSO-VNS, MPSO, Best-so-far ABC, PSO-AIS, HGA, and MA, respectively.

Table 4. Computational results of PSO-NGO, HPSO, PSO-VNS, MPSO, Best-so-far ABC, PSO-AIS, HGA, and MA for Ft and La test problems.

We further tested PSO-NGO on Ta test problems: Ta01-Ta80 (Taillard Citation1993). The computational results are shown in . We particularly compared PSO-NGO with HPSO (Sha and Hsu Citation2006) and TSSB (Pezzella and Merelli Citation2000). The PSO-NGO obtained the BKS for 42 test instances compared to 27 and 31 for HPSO and TSSB, respectively. The APE for the proposed PSO-NGO was 0.433 compared to 0.566 and 0.812 for HPSO and TSSB, respectively. The PSO-NGO achieved an API of 0.155 and 0.378 over HPSO and TSSB. PSO-NGO performed better than HPSO on 37 test instance, obtained similar results for 39 test instances and worse results on only 4 test instances. In particular, in large scale problems () problem instances PSO-NGO outperformed HPSO in 9 out of 10 instances and obtained similar results in 1 test instance. Whereas, PSO-NGO performed better than TSSB on 44 test instance and obtained similar results for 36 test instances.

Table 5. Computational results of PSO-NGO, HPSO, and TSSB for Ta test problems.

Experimental results presented in , and validate the effectiveness of the proposed PSO-NGO algorithm in solving the minimal makespan JSSP in terms of robustness and solution quality.

Conclusions and future work

In this paper, an effective PSO-based scheduling algorithm for minimizing the makespan in the JSSP is presented. In the PSO system, a new model for updating the velocity and the position of the particles is proposed to conform to the JSSP. The conventional PSO algorithm is improved by defining two neighborhood-based operators to enhance population diversity and to avoid premature convergence to local optima. First, the diversity enhancement operator tends to improve the population diversity by relocating neighboring particles to avoid premature clustering and to achieve broader exploration of the solution space. This is achieved by enforcing a circular neighboring area around each particle if the diversity of the population falls less than the diversity threshold value. The diversity threshold is adaptively modified to regulate the population diversity throughout the different stages of the search process. Second, the local search operator is used to create new solutions in the neighborhood of candidate solutions based on critical path analysis. Moreover, the algorithm is reinforced with genetic well-known operators “selection” and “crossover” to evolve stagnated particles in the swarm. The proposed algorithm has been evaluated using 123 well-studied benchmarks. Computational results validate the effectiveness of the proposed algorithm in producing high quality solutions. Results are compared with those obtained using other existing algorithms and the proposed algorithm was found to yield reasonable improvement in solution robustness and quality in particular for large-sized problem instances.

There are several potential improvements to the proposed algorithm that can be investigated in future research. In this paper a single-criterion objective function based on makespan was studied. The proposed framework can be utilized for a wider range of objective functions or extended to multi-criteria objective functions. This is extremely important in practical real-life production problems where the appropriateness of a schedule is measured according to various objectives simultaneously. Further research can possibly be taken to compare the performance of various crossover and mutation operators in the proposed algorithm. Another possible research direction is considering a parallel implementation of the algorithm to reduce the computational time associated with the diversity enhancement operators. Moreover, the proposed algorithm can be extended to handle practical manufacturing situations such as dynamic arrivals, machine breakdown, or other factors that affect job status over time.

References

- Amirthagadeswaran, K. S., and V. P. Arunachalam. 2006. Improved solutions for job shop scheduling problems through genetic algorithm with a different method of schedule deduction. The International Journal of Advanced Manufacturing Technology 28(5–6):532–40. doi:10.1007/s00170-004-2403-1.

- Arani, B. O., P. Mirzabeygi, and M. S. Panahi. 2013. An improved PSO algorithm with a territorial diversity-preserving scheme and enhanced exploration–Exploitation balance. Swarm and Evolutionary Computation 11:1–15. doi:10.1016/j.swevo.2012.12.004.

- Banharnsakun, A., B. Sirinaovakul, and T. Achalakul. 2012. Job shop scheduling with the best-so-far ABC. Engineering Applications of Artificial Intelligence 25(3):583–93. doi:10.1016/j.engappai.2011.08.003.

- Chen, H., and P. B. Luh. 2003. An alternative framework to Lagrangian relaxation approach for job shop scheduling. European Journal of Operational Research 149(3):499–512. doi:10.1016/S0377-2217(02)00470-8.

- Chong, C. S., A. I. Sivakumar, M. Y. H. Low, and K. L. Gay. 2006. A bee colony optimization algorithm to job shop scheduling. Proceedings of the 38th conference on Winter simulation, 1954–61. Winter Simulation Conference. Monterey, CA, USA: IEEE.

- Eberhart, R. C., and J. Kennedy. 1995. A new optimizer using particle swarm theory. Proceedings of the sixth international symposium on micro machine and human science, vol. 1, 39–43. Piscataway NJ, United States: IEEE Press.

- Eberhart, R. C., and Y. Shi. 2001. Particle swarm optimization: Developments, applications and resources. Evolutionary Computation, 2001. Proceedings of the 2001 Congress on, vol. 1, 81–86. Seoul, South Korea: IEEE.

- Fisher, H., and G. L. Thompson. 1963. Probabilistic learning combinations of local job-shop scheduling rules. Industrial Scheduling 3:225–51.

- Gao, L., G. Zhang, L. Zhang, and X. Li. 2011. An efficient memetic algorithm for solving the job shop scheduling problem. Computers & Industrial Engineering 60(4):699–705. doi:10.1016/j.cie.2011.01.003.

- Garey, M. R., D. S. Johnson, and R. Sethi. 1976. The complexity of flowshop and jobshop scheduling. Mathematics of Operations Research 1(2):117–29. doi:10.1287/moor.1.2.117.

- Ge, H., W. Du, and F. Qian. 2007. A hybrid algorithm based on particle swarm optimization and simulated annealing for job shop scheduling. Natural Computation, 2007. ICNC 2007. Third International Conference on, vol. 3, 715–19. Haikou, China: IEEE.

- Ge, H. W., L. Sun, Y. C. Liang, and F. Qian. 2008. An effective PSO and AIS-based hybrid intelligent algorithm for job-shop scheduling. Systems, Man and Cybernetics, Part A: Systems and Humans, IEEE Transactions On 38(2):358–68. doi:10.1109/TSMCA.2007.914753.

- Geyik, F., and I. H. Cedimoglu. 2004. The strategies and parameters of Tabu search for job-shop scheduling. Journal of Intelligent Manufacturing 15(4):439–48. doi:10.1023/B:JIMS.0000034106.86434.46.

- Heilmann, R. 2003. A branch-and-bound procedure for the multi-mode resource-constrained project scheduling problem with minimum and maximum time lags. European Journal of Operational Research 144(2):348–65. doi:10.1016/S0377-2217(02)00136-4.

- Kaskavelis, C. A., and M. C. Caramanis. 1998. Efficient Lagrangian relaxation algorithms for industry size job-shop scheduling problems. IIE Transactions 30(11):1085–97. doi:10.1080/07408179808966565.

- Lawrence, S. 1984. Resource constrained project scheduling: An experimental investigation of heuristic scheduling techniques (supplement). Pittsburgh: Graduate School of Industrial Administration, Carnegie Mellon University.

- Lian, Z., B. Jiao, and X. Gu. 2006. A similar particle swarm optimization algorithm for job-shop scheduling to minimize makespan. Applied Mathematics and Computation 183(2):1008–17. doi:10.1016/j.amc.2006.05.168.

- Liao, C. J., C. T. Tseng, and P. Luarn. 2007. A discrete version of particle swarm optimization for flowshop scheduling problems. Computers & Operations Research 34(10):3099–111. doi:10.1016/j.cor.2005.11.017.

- Lin, T. L., S. J. Horng, T. W. Kao, Y. H. Chen, R. S. Run, R. J. Chen, and I. H. Kuo. 2010. An efficient job-shop scheduling algorithm based on particle swarm optimization. Expert Systems with Applications 37(3):2629–36. doi:10.1016/j.eswa.2009.08.015.

- Liu, L. L., R. S. Hu, X. P. Hu, G. P. Zhao, and S. Wang. 2015. A hybrid PSO-GA algorithm for job shop scheduling in machine tool production. International Journal of Production Research, 53(19):5755–5781.

- Liu, L. L., G. P. Zhao, S. S. Ou’Yang, and Y. J. Yang. 2011. Integrating theory of constraints and particle swarm optimization in order planning and scheduling for machine tool production. The International Journal of Advanced Manufacturing Technology 57(1–4):285–96. doi:10.1007/s00170-011-3294-6.

- Liu, T. K., J. T. Tsai, and J. H. Chou. 2006. Improved genetic algorithm for the job-shop scheduling problem. The International Journal of Advanced Manufacturing Technology 27(9–10):1021–29. doi:10.1007/s00170-004-2283-4.

- Lorigeon, T., J. C. Billaut, and J. L. Bouquard. 2002. A dynamic programming algorithm for scheduling jobs in a two-machine open shop with an availability constraint. Journal of the Operational Research Society 53(11):1239–46. doi:10.1057/palgrave.jors.2601421.

- Neto, R. T., and M. Godinho Filho. 2013. Literature review regarding Ant Colony Optimization applied to scheduling problems: Guidelines for implementation and directions for future research. Engineering Applications of Artificial Intelligence 26(1):150–61. doi:10.1016/j.engappai.2012.03.011.

- Pezzella, F., and E. Merelli. 2000. A tabu search method guided by shifting bottleneck for the job shop scheduling problem. European Journal of Operational Research 120(2):297–310. doi:10.1016/S0377-2217(99)00158-7.

- Qing-Dao-Er-Ji, R., and Y. Wang. 2012. A new hybrid genetic algorithm for job shop scheduling problem. Computers & Operations Research 39(10):2291–99. doi:10.1016/j.cor.2011.12.005.

- Riget, J., and J. S. Vesterstrøm. 2002. A diversity-guided particle swarm optimizer-the ARPSO. Dept. Comput. Sci., Univ. of Aarhus, Aarhus, Denmark, Tech. Rep, 2, p.2002.

- Sha, D. Y., and C. Y. Hsu. 2006. A hybrid particle swarm optimization for job shop scheduling problem. Computers & Industrial Engineering 51(4):791–808. doi:10.1016/j.cie.2006.09.002.

- Sun, J., W. Xu, and W. Fang. 2006. A diversity-guided quantum-behaved particle swarm optimization algorithm. In Wang TD. et al. (ed.), Simulated evolution and learning, SEAL 2006, 497–504. Springer, Berlin, Heidelberg.

- Suresh, R. K., and K. M. Mohanasundaram. 2006. Pareto archived simulated annealing for job shop scheduling with multiple objectives. The International Journal of Advanced Manufacturing Technology 29(1–2):184–96. doi:10.1007/s00170-004-2492-x.

- Taillard, E. D. 1993. Benchmarks for basic scheduling problems. European Journal of Operational Research 64:278–85. doi:10.1016/0377-2217(93)90182-M.

- Tang, J., G. Zhang, B. Lin, and B. Zhang. 2010. A hybrid PSO/GA algorithm for job shop scheduling problem. In Tan K.C. (ed.), Advances in Swarm Intelligence, ICSI 2010, 566–73. Springer, Berlin, Heidelberg.

- Tasgetiren, M. F., M. Sevkli, Y. C. Liang, and M. M. Yenisey. 2006. A particle swarm optimization and differential evolution algorithms for job shop scheduling problem. International Journal of Operations Research 3(2):120–35.

- Tseng, C. T., and C. J. Liao. 2008. A particle swarm optimization algorithm for hybrid flow-shop scheduling with multiprocessor tasks. International Journal of Production Research 46(17):4655–70. doi:10.1080/00207540701294627.

- Tsujimura, Y., Y. Mafune, and M. Gen. 2001. Effects of symbiotic evolution in genetic algorithms for job-shop scheduling. Proceedings of the 34th IEEE Annual International Conference on System Sciences, Hawaii, 1–7.

- Watson, J. P., J. C. Beck, A. E. Howe, and L. D. Whitley. 2003. Problem difficulty for Tabu search in job-shop scheduling. Artificial Intelligence 143(2):189–217. doi:10.1016/S0004-3702(02)00363-6.

- Xia, W. J., and Z. M. Wu. 2006. A hybrid particle swarm optimization approach for the job-shop scheduling problem. The International Journal of Advanced Manufacturing Technology 29(3–4):360–66. doi:10.1007/s00170-005-2513-4.

- Yongxian, L., L. Xiaotian, and Z. Jinfu. 2008. Research on job-shop scheduling optimization method with limited resources. The International Journal of Advanced Manufacturing Technology 38(3–4):386–92. doi:10.1007/s00170-007-1345-9.

- Yu, H., and W. Liang. 2001. Neural network and genetic algorithm-based hybrid approach to expanded job-shop scheduling. Computers & Industrial Engineering 39(3):337–56. doi:10.1016/S0360-8352(01)00010-9.

- Zhang, J., X. Hu, X. Tan, J. H. Zhong, and Q. Huang. 2006. Implementation of an ant colony optimization technique for job shop scheduling problem. Transactions of the Institute of Measurement and Control 28(1):93–108. doi:10.1191/0142331206tm165oa.