ABSTRACT

In this paper, we propose a low-cost solution for driver fatigue detection based on micro-sleep patterns. Unlike conventional methods, we acquired images with a camera placed on the extreme left side of the driver, and we propose two algorithms that enable accurate face and eye detection even when the driver is not facing the camera or the driver's eyes are closed. Eye-state (open/closed) classification is performed on the right eye only, using SVM and Adaboost. Micro-sleep patterns are determined from the eye states, and an alarm is triggered to warn the driver when needed. Our dataset includes multiple subjects of both genders with different appearances, recorded under different lighting conditions. The proposed scheme achieves 99.9% and 98.7% accuracy for face and eye detection, respectively. Across all subjects, the average accuracy of SVM and Adaboost is 96.5% and 95.4%, respectively.

Introduction

In the past few years, there has been a tremendous increase in the number of road accidents caused by driver fatigue (Dhivya and Suresh Babu 2015). According to a report published by the European Union, driver fatigue is a contributing factor in about 20% of road crashes. Drivers with diminished vigilance experience a notable drop in their capacity for recognition, response, and control, which increases the risk of an accident. This increases the need for systems that can detect symptoms of fatigue and warn the driver in advance (Commission 2016). More recently, a National Sleep Foundation study in the US found that more than 51% of adult drivers had driven a vehicle while experiencing fatigue symptoms and 17% had momentarily fallen asleep while driving. An estimated 76,000 injuries and 1,200 deaths are caused annually by fatigue-induced accidents (National Sleep Foundation 2016). Owing to the recent attention to fatigue-related crashes, fatigue detection systems have become an active area of research.

Driver Fatigue Detection Systems

Monitoring driver fatigue is important for preventing road accidents and improving road safety. A fatigued driver shows particular signs that can be observed easily (e.g. yawning, eyes closed for relatively long periods, and head bouncing). Fatigue can also be determined by monitoring driving behavior, e.g. steering movements, lane keeping, car speed, gear changing, acceleration, and braking (Wierwille 1995). A driver fatigue detection system automatically analyzes the driving behavior or the driver's behavior and, if the driver is fatigued, generates an alarm that can wake the driver.

Symptoms of Fatigue

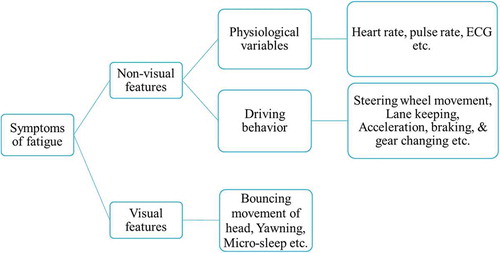

When fatigued, a driver shows multiple visual and nonvisual signs that can be measured to detect fatigue, as summarized in Figure 1.

Besides the physical state, the mental state of the driver also affects driving; hence, physiological variables that provide information about stress, heart rate, brain activity, etc., can also be used to analyze the driver's attentiveness. Eye closure is a widely used fatigue measure, and micro-sleep patterns can be determined from eye behavior. A micro-sleep (MS) is a brief, unintended, temporary episode of loss of attention or sleep that may occur when a driver is fatigued. It may be associated with prolonged eye closure lasting from a fraction of a second up to 30 s.

Fatigue Detection Techniques

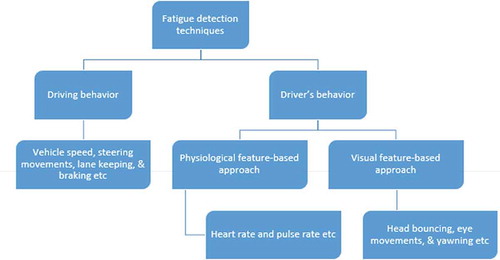

Driver fatigue detection techniques can be broadly divided into two categories (Figure 2): those that monitor driving behavior and those that monitor the driver's behavior. The latter is further divided into two approaches: a physiological feature-based approach and a visual feature-based approach. The physiological approach relies on heart rate, pulse rate, brain activity, etc., whereas the visual approach tracks the driver's eye and head movements, yawning, and facial expressions (Kong et al. 2015).

Methods based on driving behavior are affected by individual variation in driving behavior and by the vehicle type. Physiological feature-based approaches usually perform well at fatigue detection: electroencephalography (EEG) for brain waves, electrocardiography (ECG) for heart rate and heart rate variability (HRV), and electrooculography (EOG) for eye movements can give good results. However, these methods are intrusive because the sensors must be in contact with the driver's body (Kong et al. 2015). Moreover, the specialized hardware devices used for monitoring driver fatigue are costly in terms of both money and energy consumption.

Visual feature-based approaches are generally preferred because they are nonintrusive. Among the visual features, head bouncing can be misleading because it may have other causes, e.g. a bumpy road or music. Similarly, yawning is not a reliable measure of driver fatigue because the mouth may be open for other reasons, e.g. talking to someone or a habit of keeping the mouth open.

Eye movements correlate most directly with the most obvious symptom of fatigue, i.e. micro-sleep. In this research work, we focus on the visual feature-based approach to monitor the driver's attentiveness, and micro-sleep patterns are determined from the driver's eye behavior.

Contributions

Although much research has been done in this area, there is still room to improve face detection, eye detection, and eye-state classification. Moreover, most fatigue detection systems acquire images with a camera placed in front of the driver; although this makes eye-closure detection relatively easy, it is impractical because the camera can block the driver's view. The contributions of this research work are as follows:

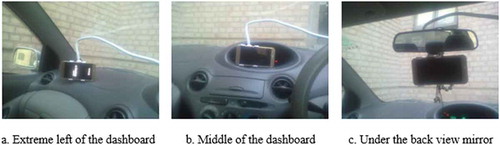

First, we introduced a new video acquisition setup in which the camera neither distracts the driver nor blocks his/her view. Three camera locations, with and without zoom, were tested with two subjects; after detailed analysis, we concluded that the most suitable camera location is the extreme left of the dashboard without zoom.

Second, we generated a dataset containing multiple subjects of both genders with different appearances, recorded in different cars and under different lighting conditions.

Third, we proposed face and eye-detection algorithms that enable accurate detection even when the driver is not facing the camera or the driver's eyes are closed.

Fourth, the achieved results are comparable with those reported in the existing literature.

Fifth, the overall cost of the proposed system is very low because no specialized experimental setup is required.

The rest of the paper is organized as follows. The “Review of Related Literature” section surveys prior work. The “Proposed Scheme” section explains the overall framework of the proposed scheme in detail. The “Results” section presents and discusses the experimental results and the performance evaluation of the proposed scheme. The “Conclusion” section concludes the paper, and suggestions for future research are briefly presented in the “Future Work” section.

Review of Related Literature

Extensive research has been carried out in the domain of driver fatigue detection, and visual features (e.g. eye movements, yawning, and head nodding) are considered key symptoms of fatigue. We reviewed the existing literature and categorized the schemes by approach, i.e. whether a scheme is based on eye behaviors, on yawn analysis, or is a hybrid.

Eye Behaviors

In the literature, eye behaviors, particularly eye closure, blink rate, and blink frequency, have mostly been used to determine fatigue (AL-Anizy 2015; Bergasa et al. 2006; Budiharto, Putra, and Putra 2013; Bharambe and Mahajan 2015; Batchu and Kumar 2015; Diddi and Jamge 2015; Devi, Choudhari, and Bajaj 2011; Dasgupta et al. 2013; Fazli and Esfehani 2012; Flores et al. 2010a; Jo, Lee, and Jung 2011; Jayanthi and Bommy 2012; Jiménez-Pinto and Torres-Torriti 2013; Karchani, Mazloumi, and Saraji 2015; Kong et al. 2015; Kumari 2014; Lenskiy and Lee 2012; Liu et al. 2010; Liu et al. 2010; Patel et al. 2010; Patel, Patel, and Sharma 2015; Punitha and Geetha 2015; Sugur, Hemadri, and Kulkarni 2013; Suryaprasad et al. 2013; Sacco and Farrugia 2012a; Shewale and Pranita 2014; Shen and Xu 2015; Tang, Fang, and Hu 2010; Vikash and Barwar 2014; Zhang, Cheng, and Lin 2012).

Patel et al. (2010) presented a driver fatigue detection system that captures video with a JVC color camcorder. A skin segmentation model based on a Gaussian distribution was used for face detection, followed by iris-based eye detection on binary images. Finally, fatigue was detected by assessing the eye blink rate. The authors claim good performance; however, the system has limitations, such as false eye detections and unreliable iris detection in partially opened eyes. Moreover, the system tracks the eyes in every frame, which increases the processing load.

For eye detection, Lenskiy and Lee (2012) proposed a novel skin-color segmentation algorithm based on a neural network (NN) and a texture segmentation algorithm based on SURF features. Blink frequency and eye closure duration were then estimated by tracking the localized eyes with an extended Kalman filter.

Jo, Lee, and Jung (2011) used NIR LEDs with a narrow band-pass filter to illuminate the driver's face and then acquired filtered images. Their eye-detection algorithm combines Adaboost, template matching, and blob detection, with eye validation using SVM, PCA, and LDA. The eye-state detection algorithm combines statistical features with appearance features, and an SVM with an RBF kernel is used for classification.

Zhang, Cheng, and Lin (2012) presented an eye-detection algorithm designed to cope with changes in driver pose and lighting conditions. Six measures were used to evaluate drowsiness, and Fisher's linear discriminant functions were used for classification. The experimental evaluation was performed with six participants on a high-fidelity simulator.

Fazli and Esfehani (2012) proposed a novel eye-state tracking technique for drowsiness detection. A CCD camera was used to capture the video, and the images were converted to the YCbCr color space. First, the face is detected and cropped from the image. Then, the eyes are localized using a Canny edge detector. Finally, drowsiness is detected based on the white pixel count of the remaining region.

In Jayanthi and Bommy (2012), face detection is done using a skin color model, followed by eye tracking using dynamic template matching. The templates were trained using an artificial neural network (ANN). The authors claim good overall performance; however, the method is limited by quick head rotation, large head movements, and the distance from the camera. Jiménez-Pinto and Torres-Torriti (2013) presented a novel drowsiness detection technique that uses the driver's kinematic model and optical flow analysis (Lucas and Kanade 1981).

Batchu and Kumar (2015) proposed another drowsiness monitoring system in which the face and eyes are detected using the built-in Haar classifier cascades in OpenCV. An eye camera was used to capture facial images of the driver, and only the open-eye state is detected to speed up processing. Vikash and Barwar (2014) used a similar approach, with the aim of developing an efficient yet nonobtrusive drowsiness detection system.

In contrast to the previous techniques, Sugur, Hemadri, and Kulkarni (2013) argued that the Haar cascade classifier is inefficient when working with different facial views and multiple image frames. As an alternative, local Successive Mean Quantization Transform (SMQT) features and the split-up SNoW classifier are used for face detection. The eye region is then extracted and binarized, and eye blinks are detected using shape measurement. Finally, drowsiness is detected using three different criteria.

The Viola–Jones algorithm (Viola and Jones 2004) is one of the most efficient tools for object detection and is widely used to detect faces (Lopar and Slobodan 2013). Several researchers have used the Viola–Jones algorithm for driver drowsiness detection (AL-Anizy 2015; Bharambe and Mahajan 2015; Diddi and Jamge 2015; Flores et al. 2010a; Karchani, Mazloumi, and Saraji 2015; Kong et al. 2015; Kumari 2014; Liu et al. 2010; Liu et al. 2010; Patel, Patel, and Sharma 2015; Punitha and Geetha 2015; Suryaprasad et al. 2013; Shewale and Pranita 2014; Shen and Xu 2015).

Suryaprasad et al. (2013) presented a drowsiness detection system composed of five modules, from video acquisition to fatigue detection. First, the region of interest is marked, followed by face and eye detection using predefined Haar cascade samples. Likewise, Shewale and Pranita (2014) presented a fatigue monitoring system based on the Viola–Jones algorithm, which uses Haar-like features to detect the face and eyes. However, that paper lacks an experimental evaluation.

Karchani, Mazloumi, and Saraji (2015) proposed a drowsiness detection system design employing a virtual-reality driving simulator. Twenty professional male urban bus drivers with normal eyesight (without glasses) participated in the study, and images of the driver's face were taken with a CC camera placed in front of the driver. Image processing techniques (i.e. the Viola–Jones algorithm and binary and histogram methods) were employed to detect the eyes. Once the eyes are extracted, the frames are converted to grayscale. Finally, the level of drowsiness was determined by an MLP neural network on the basis of eye closure, eye blink duration, and blink frequency.

Patel, Patel, and Sharma (2015) used a standard webcam to continuously capture images of the driver, followed by face detection and eye tracking using Viola–Jones. An alarm is issued if the eyes are found closed, or the blink rate is abnormal, for 4–5 consecutive frames. However, the paper lacks an experimental evaluation.

Kong et al. (2015) proposed an improved drowsiness detection strategy in which the Viola–Jones Adaboost algorithm is trained on both frontal and deflected faces. First, the images were converted to grayscale and enhanced by histogram equalization. Second, the modified algorithm is used for face detection and eye-state recognition. Finally, fatigue is determined on the basis of eye closure.

The aim of Kumari's work (Kumari 2014) was to develop an economical yet efficient drowsiness detection system. A low-quality webcam was used to capture the video, and the Viola–Jones algorithm was used for face detection and facial feature extraction. Unlike previous approaches, average intensity variations were used to determine the eye state (open/closed). However, the paper does not include any experimental results.

Bharambe and Mahajan (2015) proposed another real-time vision-based driver drowsiness detection system that adopts the Viola–Jones classifier. A webcam was used to capture videos of eight drivers under normal lighting conditions. First, the images were preprocessed using histogram equalization to remove noise. Second, the face and eyes were detected using a Haar-like feature-based object detection algorithm and a template matching method, respectively. Finally, drowsiness is detected on the basis of the eye state (open/closed) in consecutive frames. Diddi and Jamge (2015) used a similar approach for drowsiness detection; the authors claim that their scheme is low cost and can detect all types of eyes of either gender.

Liu et al. (2010) proposed another fatigue detection algorithm based on the Viola–Jones cascaded classifier algorithm and the diamond search algorithm. A simple feature is extracted from a temporal difference image, and the distance between the lower and upper eyelids is then used to analyze the eye state. Three criteria were used to determine the driver's attentiveness. Similarly, Liu et al. (2010) proposed a novel algorithm to detect eye closure using an infrared camera. The Viola–Jones framework was used to localize the face and eyes, followed by extraction of the eye region. The algorithm then detects the eye corners and eyelid movement, from which the eye state (open/closed) was classified.

Several researchers used a combination of the Viola–Jones framework and a support vector machine (SVM) for image processing and eye-state classification, respectively (AL-Anizy 2015; Flores et al. 2010b; Punitha and Geetha 2015; Shen and Xu 2015).

Flores et al. (2010b) presented a real-time fatigue detection system that works under different illumination conditions. An IR camera was used for image acquisition, followed by face detection using the Viola–Jones framework. A Gabor filter and the condensation algorithm were applied for feature extraction. Finally, drowsiness is detected using SVM based on the eye state (open/closed).

The drowsiness detection scheme proposed in Punitha and Geetha (2015) uses a Viola–Jones cascade of face classifiers to detect the face, and the eyes are then located heuristically (i.e. relative to the height and width of the face). The eye images were preprocessed using histogram equalization, and minimum intensity projection is used to extract eye-state features, which are then fed to an SVM that classifies the eye as open or closed. Finally, drowsiness is detected on the basis of the eye state (open/closed) in consecutive frames.

Shen and Xu (2015) proposed an embedded driver drowsiness monitoring system with four modules, i.e. image detection, image processing, fatigue detection, and display. An infrared camera is used to minimize lighting effects. The underlying algorithm is based on the Viola–Jones framework, and SVM is used to determine the eye state. Finally, fatigue is detected on the basis of eye closure.

Similarly, AL-Anizy (2015) proposed a two-phase scheme in which image processing is based on the Viola–Jones Haar face detection algorithm and SVM is used as the classifier. Six drivers participated in the study, and a standard HP laptop webcam was used to capture images. Unlike previous approaches, template matching was replaced by histogram equalization to speed up processing.

Yawn Analysis

Several studies have proposed methods for driver drowsiness detection based on yawn analysis (Abtahi 2012; Saradadevi and Bajaj 2008).

Saradadevi and Bajaj (2008) used the Viola–Jones framework for face detection and mouth region extraction. For fatigue detection, an SVM was trained to recognize yawns.

Abtahi (2012) introduced three methods for driver fatigue detection based on yawn analysis, namely color segmentation, an active contour model, and the Viola–Jones method. Participants with varied characteristics (e.g. male/female, with/without glasses, with/without beard) were chosen for the study, and a Canon A720 IS digital camera was used for video acquisition.

Hybrid

Some researchers have also proposed hybrid drowsiness detection schemes that combine two or more facial features (Churiwala et al. 2012; Sacco and Farrugia 2012a; Sigari, Fathy, and Soryani 2013).

Churiwala et al. (2012) combined eye movement, yawn detection, and head rotation for driver drowsiness detection. An algorithm based on a Haar classifier was used to compute four parameters (i.e. eye closure duration, eye blink frequency, yawning, and head rotation), whose outputs are combined to obtain the result. The authors claim good performance; however, the paper does not include test results.

Sacco and Farrugia (2012a) used the Viola–Jones object detection framework to detect facial features, along with correlation coefficient template matching to determine the state of these features. Finally, the overall fatigue level is determined by applying SVM to the combination of three features.

Sigari, Fathy, and Soryani (2013) proposed an adaptive fuzzy expert system that combines eye region-related symptoms (i.e. distance between the eyelids and eye closure) and face region-related symptoms (i.e. head rotation) for drowsiness detection. A digital camera is used for image acquisition, followed by face detection using the Viola–Jones method. The conventional eye detection step is bypassed; instead, the horizontal projection of the top half of the face is used to extract the eye region-related symptoms, while template matching is used for the face region. Finally, the fuzzy expert system, which requires only a short training phase, is used for drowsiness detection.

From the literature review, we observed that eye behaviors, particularly eye closure, have mostly been used to determine fatigue, typically by combining Viola–Jones for eye detection with a Support Vector Machine (SVM) for eye-state classification. The issues pertaining to the real-time driver fatigue detection problem are as follows:

Dataset collection is the biggest challenge, since no standard datasets are available for studying the fatigue detection problem.

Determine a suitable camera location for video acquisition so that the camera does not distract the driver or block his/her view.

Detect face and eyes:

When the driver is not facing the camera.

When driver’s eyes are closed.

Across genders and variations in physical appearance.

Under different lighting conditions.

In the presence of interference and noise while driving.

Devise a low-cost solution for fatigue detection.

Proposed Scheme

Workflow

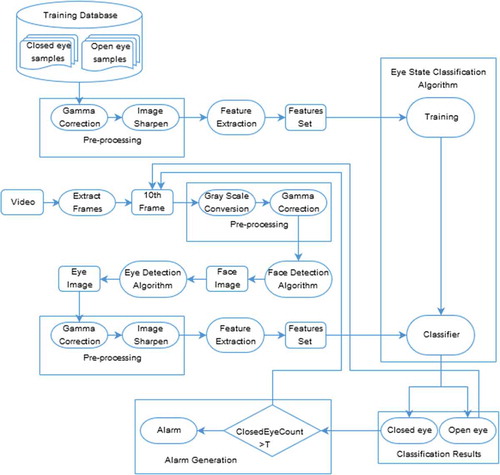

First, the driver's video is captured and the input frames are preprocessed, followed by face and eye detection. After the right eye is localized, its state is determined; if the eye is found closed for a certain number (i.e. a threshold) of consecutive frames, the episode is marked as a micro-sleep. Once a micro-sleep pattern is detected, an alarm is triggered to warn the driver. The pseudo-code for the basic workflow of the proposed system and a block diagram of its major phases are given in the accompanying figures.
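The workflow above can be sketched as a small pipeline. This is a minimal Python illustration, not the authors' MATLAB implementation: the stage functions (`preprocess`, `detect_face`, `detect_right_eye`, `classify_eye`) are hypothetical placeholders supplied by the caller, and recording `None` when no face or eye is found is our assumption, since the paper does not say how such frames are handled.

```python
from itertools import islice

CLOSED, OPEN = 1, 2  # label convention used for the eye-state dataset


def eye_states_for_video(frames, preprocess, detect_face, detect_right_eye,
                         classify_eye, step=10):
    """Return one eye-state label per processed frame.

    Only every `step`-th frame is processed. If no face or right eye is
    found, None is recorded (an assumption, not taken from the paper).
    """
    states = []
    for frame in islice(frames, 0, None, step):
        gray = preprocess(frame)
        face = detect_face(gray)
        if face is None:
            states.append(None)
            continue
        eye = detect_right_eye(face)
        if eye is None:
            states.append(None)
            continue
        states.append(classify_eye(eye))
    return states
```

Passing the stages in as functions keeps the sketch independent of any particular detector or classifier; the resulting label sequence is what a micro-sleep counter would consume.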

Performance is evaluated in terms of face and eye detection accuracy with the corresponding miss rates, eye-state classification accuracy, and overall fatigue recognition accuracy, along with the number of missed and false alarms.

Method Description

Face and Eye Detection

The Viola–Jones framework (Viola and Jones 2004), proposed in 2001 by Paul Viola and Michael Jones, was the first object detection framework to provide competitive detection rates in real time. It is a widely used object detector that can detect people's faces, noses, eyes, mouths, or upper bodies based on Haar-like features.
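To make the Haar-feature idea concrete, the sketch below computes an integral image and evaluates a two-rectangle Haar-like feature with four lookups per rectangle, which is what makes Viola–Jones fast. It is a minimal Python illustration under our own assumptions (a grayscale image given as a list of rows, a left-minus-right feature); it omits AdaBoost feature selection and the attentional cascade, and it is not the MATLAB “ProfileFace” or “RightEyeCART” detector used in this work.

```python
def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (zero border)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a w-by-h window (vertical-edge feature)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

For the 2x2 image `[[1, 2], [3, 4]]`, the whole-window sum is 10 and the left-minus-right feature is (1 + 3) - (2 + 4) = -2, regardless of window position cost.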

Feature Extraction

Feature extraction is the process of deriving relevant attributes from the data for use in constructing the model. This phase has two main objectives:

Enhance the dataset quality and classifier performance by eliminating the redundant/irrelevant features.

Reduce the size of the input data.

Principal Component Analysis (PCA) is used for feature extraction. PCA extracts the directions along which the input images vary most, giving a compact representation of the differences among them. It uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components. The extracted feature set is then passed to the classifiers for training and testing.
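A minimal, dependency-free sketch of PCA follows, assuming each eye image has already been flattened into a list of numbers. It builds the sample covariance matrix, finds the leading eigenvector by power iteration, and deflates to obtain further components. The number of components `k` is a hypothetical parameter (the paper does not report it), and for real eye patches with hundreds of pixels a library routine such as MATLAB's `pca` or an SVD would be the practical choice.

```python
import math


def pca(samples, k, iters=500):
    """Return (mean, components): up to k principal axes of `samples`."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    centered = [[s[j] - mean[j] for j in range(d)] for s in samples]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    components = []
    for _ in range(k):
        # deterministic, non-symmetric start vector, normalized
        v = [1.0 + 0.1 * j for j in range(d)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):  # power iteration: v <- C v / |C v|
            w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # no variance left to explain
                return mean, components
            v = [x / norm for x in w]
        eigval = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d))
                     for a in range(d))
        components.append(v)
        # deflation: remove the variance captured by this component
        cov = [[cov[a][b] - eigval * v[a] * v[b] for b in range(d)]
               for a in range(d)]
    return mean, components


def project(sample, mean, components):
    """Feature vector: coordinates of `sample` along each principal axis."""
    centered = [x - m for x, m in zip(sample, mean)]
    return [sum(c * x for c, x in zip(comp, centered)) for comp in components]
```

For points lying on the line y = x, the first component is (1/√2, 1/√2) and no second component is returned, because all variance lies along that single direction.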

Classification Methods

The two classification methods used for eye-state classification are briefly described in the following subsections.

Support Vector Machine (SVM)

A support vector machine (SVM) is a supervised learning model that analyzes data and recognizes patterns for classification. SVM is based on the maximum-margin principle: it represents the samples as points in a feature space and finds the boundary that separates the classes by the widest possible gap. New test samples are mapped into the same space and classified according to the side of the boundary on which they fall. As with other supervised classifiers, separate training and testing datasets are used.

Given a set of training samples, each belonging to one of two classes, the SVM training algorithm builds a model that assigns new test samples to one class or the other.
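The maximum-margin idea can be illustrated with a linear soft-margin SVM trained by full-batch subgradient descent on the hinge loss. This is a minimal sketch under our own assumptions: the paper does not state the kernel or solver, the hyperparameters `lam`, `lr`, and `epochs` are hypothetical, and labels must be mapped to +1/-1 (e.g. closed eye to +1, open eye to -1) from the 1/2 convention used in our dataset.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Soft-margin linear SVM via full-batch subgradient descent.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))).
    Labels must be +1 / -1.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularizer
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # hinge loss is active for this sample
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def svm_predict(w, b, x):
    """Classify x by the side of the hyperplane w . x + b = 0 it falls on."""
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1
```

A kernel SVM (e.g. with an RBF kernel) would replace the dot product with a kernel function and is usually trained with a dedicated solver; the linear version is shown only because it fits in a few lines.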

Adaboost

Adaboost is an ensemble learning algorithm for classification that creates a strong learner by iteratively adding weak learners. In each training round, a new weak learner is added to the ensemble and the sample weights are adjusted to focus on samples that were previously misclassified.
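The reweighting loop described above can be sketched as discrete AdaBoost with decision stumps (one-split trees) as weak learners. This is a minimal illustration under our own assumptions: labels are +1/-1, `rounds` is a hypothetical parameter, and stumps stand in for whatever tree depth the actual MATLAB ensemble used.

```python
import math


def stump_predict(stump, x):
    j, thr, pol = stump
    return pol if x[j] >= thr else -pol


def fit_stump(X, y, weights):
    """Pick (feature, threshold, polarity) with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for pol in (1, -1):
                err = sum(w for x, t, w in zip(X, y, weights)
                          if stump_predict((j, thr, pol), x) != t)
                if best is None or err < best[0]:
                    best = (err, (j, thr, pol))
    return best


def adaboost_fit(X, y, rounds=10):
    """Discrete AdaBoost with decision stumps; labels must be +1 / -1."""
    n = len(X)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        err, stump = fit_stump(X, y, weights)
        if err >= 0.5:  # weak learner no better than chance: stop
            break
        alpha = 0.5 * math.log((1 - err) / max(err, 1e-10))
        ensemble.append((alpha, stump))
        if err == 0:  # perfect stump, nothing left to reweight
            break
        # up-weight misclassified samples, down-weight correct ones
        weights = [w * math.exp(-alpha * t * stump_predict(stump, x))
                   for x, t, w in zip(X, y, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1
```

On the 1-D labels (+1, -1, +1), no single stump is correct everywhere, but three boosting rounds combine three stumps into an ensemble that classifies all three points correctly.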

Dataset Collection

In our dataset, we considered multiple subjects of both genders with different appearances, in different cars and under different lighting conditions (Table 2).

We placed a mobile camera on the extreme left of the dashboard and recorded videos of each driver in different vehicles, covering multiple scenarios, i.e. parked car, while driving, in shade, and in sunlight (Table 3).

The datasets used in the research papers we studied are not standardized, and there is considerable variation in the experimental setups as well (Table 4).

Implementation Steps

The proposed scheme is implemented in MATLAB and consists of several sequential steps, which are described in the following sections.

Video Acquisition

Initially, we performed an exercise to find the most suitable camera location for video acquisition, such that the camera would not distract the driver or block his/her view (Figure 5). Three camera locations, with and without zoom, were tested with two subjects:

Extreme left of the dashboard

Middle of the dashboard

Under the rear-view mirror

First, the frames extracted from the videos were preprocessed, followed by face and eye detection. Second, we asked the drivers which camera location was less distracting while driving. After analyzing the face and eye detection results and the drivers' opinions, we finalized the camera location (i.e. the extreme left of the dashboard without zoom).

Figure 5. Camera locations tested for video acquisition (extreme left of the dashboard, middle of the dashboard, under the rear-view mirror).

For video acquisition in our proposed system, we placed a mobile camera on the extreme left of the dashboard and recorded videos of each driver in different vehicles, covering multiple scenarios, i.e. parked car, while driving, in shade, and in sunlight.

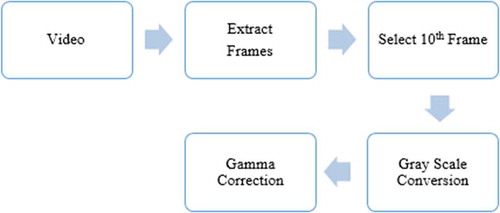

Preprocessing

In this step, frames are extracted from the video and every 10th frame is taken as input. Each input frame is preprocessed by converting it to grayscale, followed by gamma correction. Gamma correction controls the overall lightness or darkness of the image and is used to improve image quality.
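The per-pixel preprocessing can be sketched as follows. The grayscale weights are the ITU-R BT.601 luma coefficients that MATLAB's `rgb2gray` uses; the power-law form out = 255 · (in / 255)^γ is the standard gamma mapping. The value `gamma=0.8` is a hypothetical choice, since the paper does not report the gamma it used, and frames are assumed to be lists of rows of (R, G, B) tuples.

```python
def rgb_to_gray(pixel):
    """Luma with the BT.601 weights used by MATLAB's rgb2gray."""
    r, g, b = pixel
    return round(0.2989 * r + 0.5870 * g + 0.1140 * b)


def gamma_correct(value, gamma):
    """Map an 8-bit intensity through out = 255 * (in / 255) ** gamma.

    gamma < 1 brightens mid-tones; gamma > 1 darkens them.
    """
    return round(255 * (value / 255) ** gamma)


def preprocess_frame(rgb_frame, gamma=0.8):
    """Grayscale conversion followed by gamma correction (hypothetical gamma)."""
    return [[gamma_correct(rgb_to_gray(p), gamma) for p in row]
            for row in rgb_frame]
```

Black and white pixels are fixed points of the mapping, so gamma correction only redistributes the intermediate intensities.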

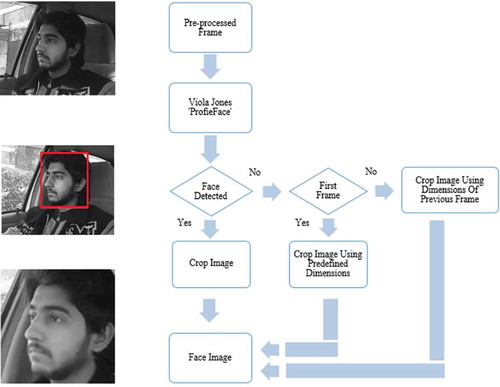

Face Detection

After the input frame is preprocessed, the face is detected. Our face-detection algorithm uses the Viola–Jones “ProfileFace” model, since the video is captured from the left side of the driver.

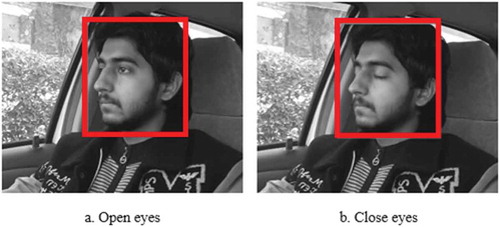

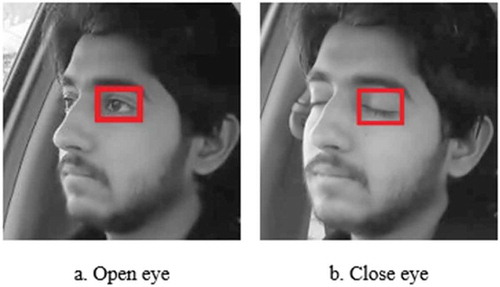

Eye Detection

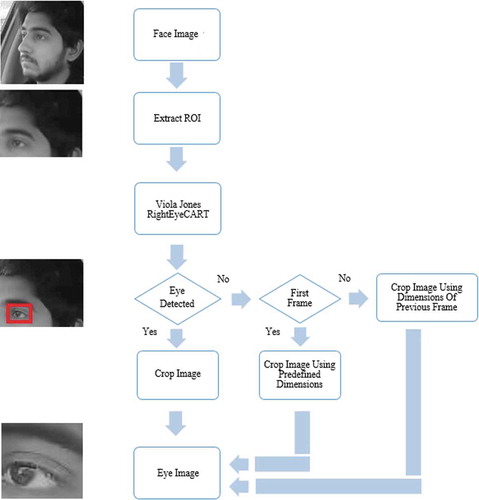

After the face image is extracted, the right eye is detected in each frame. Our eye-detection algorithm uses the Viola–Jones “RightEyeCART” model, since the video is captured from the left side of the driver.

Feature Extraction

Principal Component Analysis (PCA) is used for feature extraction, and the extracted feature set is then passed to the classifiers for training and testing.

Eye-State Classification

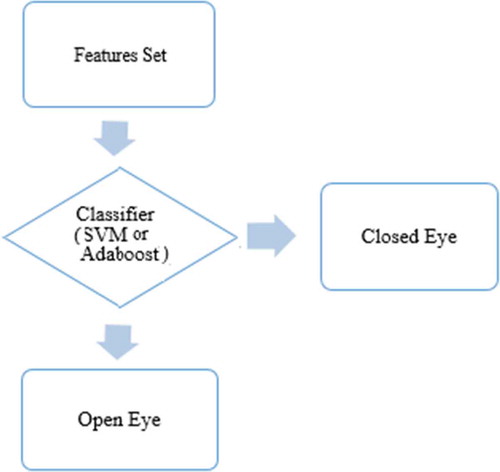

Once the right eye has been localized, the next step is eye-state classification using two different techniques, i.e. Support Vector Machine (SVM) and Adaboost.

Dataset

First, we compiled the eye samples (both open and closed) of all the subjects into a dataset and assigned the corresponding labels (i.e. 1 for a closed eye and 2 for an open eye). Both classifiers are trained and tested on this dataset (Table 5).

Training Dataset

The training dataset includes frames from all the videos of all the subjects except the subject being used for testing.

Testing Dataset

All the videos are tested one by one, such that the videos of the test subject are not included in training.
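The subject-wise split described above is commonly called leave-one-subject-out validation. A minimal sketch, assuming each sample is stored as a hypothetical `(subject_id, features, label)` tuple:

```python
def leave_one_subject_out(samples):
    """Yield (test_subject, train, test) folds.

    `samples` is a list of (subject_id, features, label) tuples. Every
    sample of the held-out subject goes to the test set, and none of that
    subject's samples appear in the training set.
    """
    for test_subject in sorted({s[0] for s in samples}):
        train = [s for s in samples if s[0] != test_subject]
        test = [s for s in samples if s[0] == test_subject]
        yield test_subject, train, test
```

Splitting by subject rather than by frame prevents near-identical frames of the same person from appearing in both the training and test sets, which would inflate the measured accuracy.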

Classification

For classification, the following two methods are explored to determine which performs better.

Support Vector Machine (SVM)

Support Vector Machine (SVM) with 10-fold cross validation is used for eye-state classification.
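The 10-fold cross-validation mentioned above partitions the samples into ten folds, each used once for testing while the other nine are used for training. The sketch below shows one such partition over sample indices; the paper does not say how its folds were formed, so contiguous blocks are our assumption, and shuffling or class stratification would be left to the caller.

```python
def k_fold_indices(n, k=10):
    """Split indices 0..n-1 into k contiguous folds; return (train, test) pairs."""
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)  # spread the remainder
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        folds.append((train, test))
        start += size
    return folds
```

With 23 samples, the first three folds hold three samples each and the remaining seven hold two, so every sample is tested exactly once.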

Adaboost

An Adaboost classifier, i.e. an ensemble of decision trees trained using adaptive boosting, is used for eye-state classification.

Alarm Generation

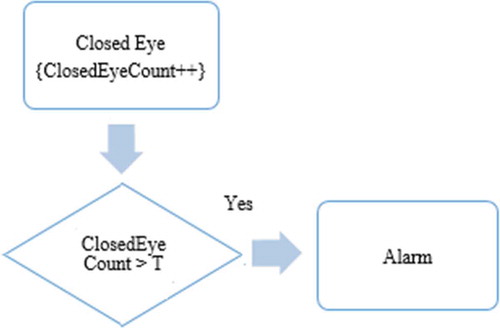

If the eye remains closed for longer than a certain threshold, a micro-sleep is identified and an alarm is set off to alert the driver. “ClosedEyeCount” denotes the number of consecutively processed frames in which the eye is found closed, and “T” denotes the threshold, i.e. the number of consecutively processed frames with a closed eye after which the driver is considered fatigued and the alarm is generated. In our system, the threshold is set to three consecutive frames.
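The alarm rule can be sketched as a counter over the sequence of eye-state labels. This minimal version makes three assumptions not stated in the paper: the alarm fires when ClosedEyeCount reaches T (rather than strictly exceeding it), it fires once per closed-eye run, and a frame with no detection (`None`) resets the count just like an open eye.

```python
CLOSED = 1  # label for a closed eye in the eye-state dataset


def microsleep_alarms(eye_states, threshold=3):
    """Return the indices of processed frames at which an alarm fires.

    closed_eye_count plays the role of ClosedEyeCount and `threshold` the
    role of T. Any state other than CLOSED resets the count.
    """
    alarms = []
    closed_eye_count = 0
    for i, state in enumerate(eye_states):
        if state == CLOSED:
            closed_eye_count += 1
            if closed_eye_count == threshold:  # fire once per closed run
                alarms.append(i)
        else:
            closed_eye_count = 0
    return alarms
```

Because only every 10th frame is processed, three consecutive processed frames span at least 20 frame intervals; at an assumed 30 fps, that is roughly 0.7 s of continuous eye closure.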

Performance Evaluation

A detailed performance evaluation of the proposed scheme is carried out using a confusion matrix and the alarm generation accuracy.

Confusion Matrix

The confusion matrix given in Table 6 is used to evaluate the results obtained by the proposed scheme.
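The rates reported for eye-state classification (accuracy, sensitivity, specificity, false-positive rate, and false-negative rate) all follow from the four confusion-matrix counts. A minimal sketch, assuming the closed eye (label 1) is treated as the positive class; the paper does not state which class it treats as positive.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary labeling."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(tp, fp, tn, fn):
    """Standard rates derived from a 2x2 confusion matrix."""
    def ratio(num, den):
        return num / den if den else float("nan")
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),  # true-positive rate
        "specificity": ratio(tn, tn + fp),  # true-negative rate
        "false_positive_rate": ratio(fp, fp + tn),
        "false_negative_rate": ratio(fn, fn + tp),
    }
```

Note that the false-positive rate equals 1 - specificity and the false-negative rate equals 1 - sensitivity, so the five figures carry only three independent quantities.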

Alarm Generation Accuracy

$$\text{Alarm Generation Accuracy} = \frac{\text{Correct Alarms} - \text{Missed Alarms}}{\text{Manual Alarms}} \times 100$$

Comparison of Results

The results obtained from the proposed scheme are compared with the results of the studied literature. The comparison table is given in the “Results” section.

Results

Results of Proposed Scheme

First, we manually recorded the results (ground truth) for all the frames of all the videos. We then compared them with the automated results obtained by applying SVM and Adaboost. The results are grouped into four categories:

Face detection results

Eye detection results

Eye-state classification results

Alarm generation results

Face Detection Results

Face detection results are given in Table 7. The Viola–Jones algorithm performs better on images captured from the front; because our dataset was created with the camera on the extreme left side of the driver, there are a few detection failures. The average accuracy is 99.9% with zero miss rate.

Table 1. Comparison of fatigue detection techniques.

Table 2. Characteristics of the subjects that participated in the study.

Table 3. Experimental setup for video acquisition.

Table 4. Comparison with existing literature with respect to the dataset and experimental setup.

Table 5. Dataset used for eye-state classification.

Table 6. Confusion Matrix used to evaluate the results of the proposed scheme.

Table 7. Face detection results.

Eye Detection Results

Eye detection results are given in Table 8. The Viola–Jones algorithm performs better for eye detection under artificial light; because our dataset was created in natural light, there are a few tracking failures. The average accuracy is 98.7% with zero miss rate.

Table 8. Eye detection results.

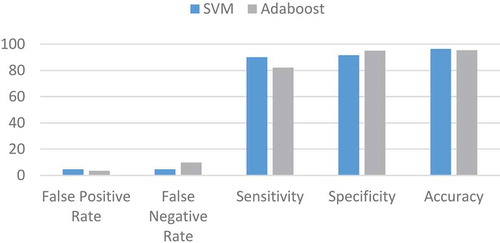

Eye-State Classification Results

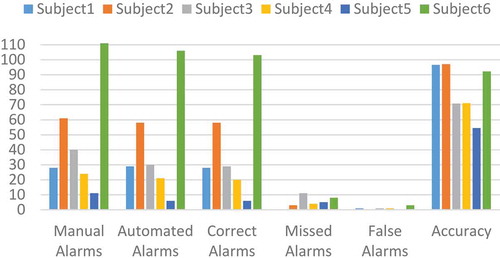

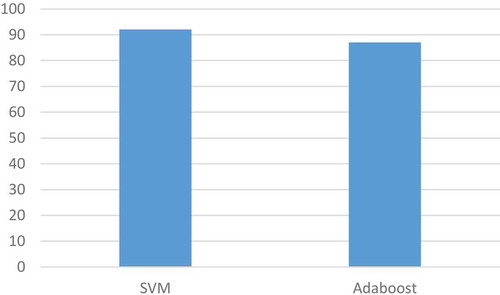

Eye-state classification results obtained with SVM and Adaboost are given in and , respectively. In , the overall results of SVM and Adaboost are compared in terms of false-positive rate, false-negative rate, accuracy, sensitivity, and specificity. This comparison shows that SVM provided comparatively better results.
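The five measures listed above can all be derived from the four confusion-matrix cells. The sketch below uses the standard definitions and assumes that “eye closed” is the positive class, so sensitivity is the share of closed-eye frames detected and specificity the share of open-eye frames recognised as open. The function name is hypothetical, and the counts in the example are illustrative, not the paper's data.

```python
import math
from typing import Dict


def _pct(num: int, den: int) -> float:
    # A ratio with an empty denominator is undefined; report NaN instead of raising.
    return 100.0 * num / den if den else math.nan


def eye_state_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Compute FPR, FNR, accuracy, sensitivity and specificity in percent.

    Assumption: positive class = "eye closed".
    """
    return {
        "false_positive_rate": _pct(fp, fp + tn),
        "false_negative_rate": _pct(fn, fn + tp),
        "accuracy": _pct(tp + tn, tp + fp + tn + fn),
        "sensitivity": _pct(tp, tp + fn),
        "specificity": _pct(tn, tn + fp),
    }
```

With illustrative counts TP = 90, FP = 5, TN = 95, and FN = 10, this gives an accuracy of 92.5%, a sensitivity of 90%, and a specificity of 95%. Note that sensitivity and the false-negative rate always sum to 100%, as do specificity and the false-positive rate.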

Because a graphical representation is easier to interpret, we also plotted the comparison of the two classification techniques. shows the overall accuracy of both techniques: SVM provided 96.5% accuracy and Adaboost provided 95.4% accuracy.

Support Vector Machine (SVM)

Table 9. Eye-state classification results using SVM.

Adaboost

Table 10. Eye-state classification results using Adaboost.

Table 11. Comparison of eye-state classification results using SVM and Adaboost.

Alarm Generation Results

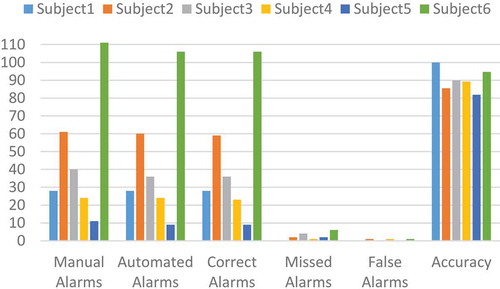

In the last step, we computed the alarm generation results separately for both techniques. , , and show the alarm generation results using SVM and Adaboost, respectively. The overall alarm generation results are given in and .

Table 12. Alarm generation results using SVM.

Table 13. Alarm generation results using Adaboost.

Table 14. Comparison of results with existing literature.

Comparison of Results

A direct comparison with existing fatigue detection techniques is difficult because the experimental setups and datasets vary across the research papers we studied. Details of the dataset collection can be found in chapter 3. The results of the existing literature and of our proposed scheme are summarized in .

Conclusion

This research studied the problem of driver fatigue detection. First, the face and right eye of the driver are detected, followed by eye-state classification. If the eye is found closed for a particular number of consecutive frames, an alarm is triggered to warn the driver. In our dataset, we considered multiple subjects from both genders, having different appearances, in different cars and under different lighting conditions. The Viola–Jones object detection algorithm is used to detect the face and right eye of the driver, and two different classification techniques, i.e., Support Vector Machine (SVM) and Adaboost, are then used to determine the eye state. After a detailed performance evaluation, we concluded that SVM gave better results than Adaboost.

The contributions of this research work are as follows. First, we introduced a new approach to video acquisition in which the camera neither distracts the driver nor blocks his/her view. Three camera locations, with and without zoom, were tested with two subjects, and after detailed analysis we concluded that the most suitable location for the camera is on the extreme left of the dashboard, without zoom. Second, we generated a dataset in which we considered multiple subjects from both genders, having different appearances, in different cars and under different lighting conditions. Third, we proposed face and eye detection algorithms that facilitate accurate face and eye detection, even when the driver is not facing the camera or the driver’s eyes are closed. Fourth, the results achieved for face detection, eye detection, and eye-state classification are comparable with the state of the art. Fifth, the overall cost of the proposed system is very low because no specialized experimental setup is used.

Future Work

Our future research objective is to further improve the accuracy of face and eye detection by using other techniques, e.g., a kinematic model with the extended Kalman filter, the KLT algorithm, etc. Currently, we have recorded videos under different lighting conditions during daytime only; fatigue detection at nighttime is not within the current scope of work. In the future, we will also include videos taken at night in our dataset. Similarly, we used multiple subjects with varying facial features for our experiments, but no subject wore glasses. Subjects wearing glasses can later be included in the dataset so that performance can be improved for that case as well. Moreover, the proposed scheme can be extended to develop a mobile application. To achieve these objectives, additional experiments will be performed in a more systematic way, using additional drivers and incorporating scenarios such as nighttime driving and drivers wearing glasses.

Abbreviated title

Driver Fatigue Detection

References

- Abtahi, S. 2012. Driver drowsiness monitoring based on yawning detection. http://www.ruor.uottawa.ca/fr/handle/10393/23295.

- AL-Anizy, G. J. 2015. Automatic driver drowsiness detection using Haar algorithm and support vector machine techniques. Asian Journal of Applied 8:149–57. http://www.researchgate.net/profile/Md_Jan_Nordin/publication/272997195_Automatic_Driver_Drowsiness_Detection_Using_Haar_Algorithm_and_Support_Vector_Machine_Techniques/links/54f4476b0cf24eb8794d9e67.pdf.

- Batchu, S., and S. P. Kumar. 2015. Driver drowsiness detection to reduce the major road accidents in automotive vehicles. International Research Journal of Engineering and Technology (IRJET) 2 (1). http://www.irjet.net/archives/V2/i1/Irjet-v2i163.pdf.

- Bergasa, L. M., J. Nuevo, M. A. Sotelo, R. Barea, and M. E. Lopez. 2006. Real-time system for monitoring driver vigilance. IEEE Transactions on Intelligent Transportation Systems 7 (1):63–77. doi:10.1109/TITS.2006.869598.

- Bharambe, S. S., and P. M. Mahajan. 2015. Implementation of real time driver drowsiness detection system. International Journal of Science and Research (IJSR) 4 (1). http://www.ijsr.net/archive/v4i1/SUB15742.pdf.

- Budiharto, W., W. D. Putra, and Y. P. Putra. 2013. Design and analysis of fast driver’s fatigue estimation and drowsiness detection system using android. Journal of Software 8. http://ojs.academypublisher.com/index.php/jsw/article/view/10081.

- Churiwala, K., R. Lopes, A. Shah, and N. Shah. 2012. Drowsiness detection based on eye movement, yawn detection and head rotation. International Journal of Applied Information Systems (IJAIS) 2. http://research.ijais.org/volume2/number6/ijais12-450368.pdf.

- Commission, European. 2016. Professional drivers - European commission. http://ec.europa.eu/transport/road_safety/users/professional-drivers/index_en.htm.

- Dasgupta, A., A. George, S. L. Happy, and A. Routray. 2013. A vision-based system for monitoring the loss of attention in automotive drivers. IEEE Transactions on Intelligent Transportation Systems 14 (4):1825–38. doi:10.1109/TITS.2013.2271052.

- Devi, M. S., M. V. Choudhari, and P. Bajaj. 2011. Driver drowsiness detection using skin color algorithm and circular hough transform. Emerging Trends in. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6120568.

- Dhivya, R., and P. Suresh Babu. 2015. A survey on driver cognitive distraction detection. International Journal of Research. http://edupediapublications.org/journals/index.php/ijr/article/view/1804.

- Diddi, V. K., and S. B. Jamge. 2015. A vision based system for monitoring the loss of attention in automotive drivers. International Journal of Science and Research (IJSR) 4 (7). http://www.ijsr.net/archive/v4i8/SUB157300.pdf.

- Fazli, S., and P. Esfehani. 2012. Tracking eye state for fatigue detection. Conference on Advances in Computer http://psrcentre.org/images/extraimages/3. 1112021.pdf.

- Flores, M. J., J. M. Armingol, and A. de la Escalera. 2010a. Real-time warning system for driver drowsiness detection using visual information. Journal of Intelligent & Robotic Systems 59 (2):103–25. doi:10.1007/s10846-009-9391-1.

- Flores, M. J., J. M. Armingol, and A. de la Escalera. 2010b. Driver drowsiness warning system using visual information for both diurnal and nocturnal illumination conditions. EURASIP Journal on Advances in Signal Processing 2010. http://dl.acm.org/citation.cfm?id=1928491.

- Jayanthi, D., and M. Bommy. 2012. Vision-based real-time driver fatigue detection system for efficient vehicle control. International Journal of Engineering and Advanced. http://scholar.google.com.pk/scholar?q=Vision-based+Real-time+Driver+Fatigue+Detection+System+for+Efficient+Vehicle+Control&btnG=&hl=en&as_sdt=0%2C5&as_ylo=2011#0.

- Jiménez-Pinto, J., and M. Torres-Torriti. 2013. Optical flow and driver’s kinematics analysis for state of alert sensing. Sensors 13:4225–57. doi:10.3390/s130404225. http://www.mdpi.com/1424-8220/13/4/4225/htm.

- Jo, J., S. J. Lee, and H. G. Jung. 2011. Vision-based method for detecting driver drowsiness and distraction in driver monitoring system. Optical Engineering 50:127202. http://opticalengineering.spiedigitallibrary.org/article.aspx?articleid=1158525.

- Karchani, M., A. Mazloumi, and G. N. Saraji. 2015. The steps of proposed drowsiness detection system design based on image processing in simulator driving. http://irjabs.com/files_site/paperlist/r_2607_150608134844.pdf.

- Kong, W., L. Zhou, Y. Wang, J. Zhang, J. Liu, and S. Gao. 2015. A system of driving fatigue detection based on machine vision and its application on smart device. Journal of Sensors 2015:1–11. http://www.hindawi.com/journals/js/aa/548602/.

- Kumari, K. B. M. 2014. Real time detecting driver’s drowsiness using computer vision. International Journal of Research in Engineering and Technology. http://esatjournals.org/Volumes/IJRET/2014V03/I15/IJRET20140315027.pdf.

- Lenskiy, A. A., and J.-S. Lee. 2012. Driver’s eye blinking detection using novel color and texture segmentation algorithms. International Journal of Control, Automation and Systems 10 (2):317–27. doi:10.1007/s12555-012-0212-0.

- Liu, A., L. Zhichao, L. Wang, and Y. Zhao. 2010. A practical driver fatigue detection algorithm based on eye state. 2010 Asia Pacific Conference on Postgraduate Research in Microelectronics and Electronics (PrimeAsia), 235–38. IEEE. doi:10.1109/PRIMEASIA.2010.5604919.

- Liu, D., P. Sun, Y. Xiao, and Y. Yin. 2010. Drowsiness detection based on eyelid movement. 2010 Second International Workshop on Education Technology and Computer Science, 2:49–52. IEEE. doi:10.1109/ETCS.2010.292.

- Lopar, M., and R. Slobodan. 2013. An overview and evaluation of various face and eyes detection algorithms for driver fatigue monitoring systems. Computer Vision and Pattern Recognition, October. http://arxiv.org/abs/1310.0317.

- Lucas, B. D., and T. Kanade. 1981. An iterative image registration technique with an application to stereo vision. IJCAI. http://flohauptic.googlecode.com/svn-history/r3/trunk/optic_flow/docs/articles/LK/Barker_unifying/lucas_bruce_d_1981_2.pdf.

- National Sleep Foundation. 2016. Drowsy driving: symptoms & solutions - National sleep foundation. http://sleepfoundation.org/sleep-topics/drowsy-driving.

- Patel, M., S. Lal, D. Kavanagh, and P. Rossiter. 2010. Fatigue detection using computer vision. International Journal of Electronics and Telecommunications 56 (4):457–61. doi:10.2478/v10177-010-0062-8.

- Patel, S. P., B. P. Patel, and M. Sharma. 2015. Detection of drowsiness and fatigue level of driver. International Journal for Innovative Research in Science & Technology 1 (11). http://www.ijirst.org/articles/IJIRSTV1I11075.pdf.

- Punitha, A., and M. K. Geetha. 2015. Driver eye state detection using minimum intensity projection - An application to driver fatigue alertness. Indian Journal of Science and Technology 8. doi:10.17485/ijst/2015/v8i17/69918.

- Sacco, M., and R. Farrugia. 2012a. Driver fatigue monitoring system using support vector machines. Communications Control and Signal. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6217754.

- Sacco, M., and R. A. Farrugia. 2012b. Driver fatigue monitoring system using support vector machines. 2012 5th International Symposium on Communications, Control and Signal Processing, 1–5. IEEE. doi:10.1109/ISCCSP.2012.6217754.

- Saradadevi, M., and P. Bajaj. 2008. Driver fatigue detection using mouth and yawning analysis. Journal of Computer Science and Network. http://paper.ijcsns.org/07_book/200806/20080624.pdf.

- Shen, H. M., and M. H. Xu. 2015. Design and implementation of embedded driver fatigue monitor system. 2015 International Conference on Artificial. http://www.atlantis-press.com/php/download_paper.php?id=22072.

- Shewale, A. N., and C. Pranita. 2014. Real time driver drowsiness detection system. International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering 3 (12):13685–89. http://www.ijareeie.com/upload/2014/december/31_Real.pdf.

- Sigari, M. H., M. Fathy, and M. Soryani. 2013. A driver face monitoring system for fatigue and distraction detection. International Journal of Vehicular 2013:1–11. http://www.hindawi.com/journals/ijvt/aip/263983/.

- Sugur, S. M., V. B. Hemadri, and U. P. Kulkarni. 2013. Drowsiness detection based on eye shape measurement. IJCER. http://ohs.ijcer.org/index.php/ojs/article/view/189.

- Suryaprasad, J., D. Sandesh, V. Saraswathi, D. Swathi, and S. Manjunath. 2013. Real time drowsy driver detection using haarcascade samples. In Computer science & information technology (CS & IT), 45–54. Academy & Industry Research Collaboration Center (AIRCC). doi:10.5121/csit.2013.3805.

- Tang, J., Z. Fang, and H. Shifeng. 2010. Driver fatigue detection algorithm based on eye features. 2010 Seventh International Conference on Fuzzy Systems and Knowledge Discovery, 5:2308–11. IEEE. doi:10.1109/FSKD.2010.5569306.

- Vikash, and D. N. C. Barwar. 2014. Monitoring of driver vigilance using Viola-Jones technique. International Journal of Application or Innovation in Engineering & Management (IJAIEM) 3 (5). http://www.ijaiem.org/volume3issue5/IJAIEM-2014-05-29-102.pdf.

- Viola, P., and M. J. Jones. 2004. Robust real-time face detection. International Journal of Computer Vision 57 (2):137–54. doi:10.1023/B:VISI.0000013087.49260.fb.

- Wierwille, W. W. 1995. Overview of research on driver drowsiness definition and driver drowsiness detection. Proceedings of the fourteenth international technical conference on enhanced safety of vehicles. http://trid.trb.org/view.aspx?id=476858.

- Zhang, W., B. Cheng, and Y. Lin. 2012. Driver drowsiness recognition based on computer vision technology. Tsinghua Science and Technology 17 (3):354–62. doi:10.1109/TST.2012.6216768.