ABSTRACT

The current study explores the determinants of ChatGPT adoption and utilization among a sample of Norwegian university students. The theoretical perspective of the study is anchored in the Unified Theory of Acceptance and Use of Technology (UTAUT2) and based on a previously tested model. The proposed model integrates six constructs to explain the Behavioral intention and actual usage patterns of ChatGPT in a higher education context. The study analyzed responses from 104 students attending universities in West and Central Norway using the partial least squares approach to structural equation modeling. The data showed that Performance expectancy emerged as the construct with the largest impact on Behavioral intention, followed by Habit. This study contributes to the research on the factors influencing university students’ engagement with generative AI technologies. Furthermore, it contributes to a more comprehensive understanding of how tools like ChatGPT can be integrated effectively in educational contexts, in both students’ learning and instructors’ teaching.

Introduction

Chatbots have evolved into sophisticated AI-driven virtual assistants, marking a significant shift in human-computer interaction. The early chatbots were limited in their capabilities. These programs were rule-based and able to respond only to predefined commands (Agarwal and Wadhwa Citation2020). However, the integration of neural networks marked a central advancement in chatbot technology, enabling them to “learn” from data and enhance their responses over time (Agarwal and Wadhwa Citation2020). This led to the creation of chatbots capable of handling complex conversations with users (Kasthuri and Balaji Citation2021). The ability of these machines to understand and respond in a convincing human-like manner (Svenningsson and Faraon Citation2019) is a result of advancements in the technology, which enable them to interpret human language effectively (Khadija, Zahra, and Naceur Citation2021). The functioning of chatbots relies on a combination of machine learning algorithms, statistical models, and linguistic rules, allowing them to interact with language and context, and even to improve their responses based on previous interactions with the user (Adamopoulou and Moussiades Citation2020). These chatbots have reached human-level performance in a number of tasks that were traditionally possible only for humans, for example cognitive tasks testing creative behavior (Grassini and Koivisto Citation2024; Guzik, Byrge, and Gilde Citation2023; Koivisto and Grassini Citation2023).

In the business sector, chatbots have become invaluable for streamlining customer support and reducing the workload on human agents. They are particularly beneficial in e-commerce, assisting with product searches, handling queries on shipping and returns, and facilitating purchases (Camilleri and Troise Citation2023; Kim et al. Citation2023; Moriuchi et al. Citation2021; Suhel et al. Citation2020). Chatbots have been extensively deployed on websites and social media platforms to provide 24/7 customer support (Xu et al. Citation2017). Furthermore, they are being used in healthcare, finance, and education for personalized assistance (González-González et al. Citation2023; Krishnan et al. Citation2022; Luo et al. Citation2021). As AI technology continues to progress, the applications of chatbots are expected to become even more diverse and sophisticated, spanning various industries (Androutsopoulou et al. Citation2019; Buhalis and Cheng Citation2019; Casillo et al. Citation2020; Okuda and Shoda Citation2018).

ChatGPT, the chatbot offered by OpenAI, currently represents the forefront of AI for natural language processing. This service was first launched in November 2022 and has since undergone several updates and improvements. The advent of generative AI, with ChatGPT as a prominent instance, has provoked discussions about its implications across various sectors, including scientific production (Grimaldi et al. Citation2023) and education (Grassini Citation2023b). In the sphere of higher education, ChatGPT offers an opportunity to rethink traditional educational approaches, especially in assessment and teaching methods. Educational institutions have the potential to use ChatGPT not only for evaluation purposes but also to cultivate critical thinking and advanced writing skills. Furthermore, it provides a medium to engage in discussions about the role of AI in contemporary society. Thus, ChatGPT represents a transformative element in education, promoting a dynamic and inclusive learning environment. The exploration of ChatGPT’s applications in higher education covers several aspects. These encompass aiding in essay writing (Crawford, Cowling, and Allen Citation2023), offering personalized feedback on student assignments and acting as a coach and teaching assistant for students (Møgelvang et al. Citation2023), and facilitating critical conversations about AI’s societal impact (Van Dis et al. Citation2023). The academic sector, particularly educators, is beginning to integrate ChatGPT into teaching strategies. This integration serves to reveal the technology’s limitations and to challenge its capabilities. As higher education institutions ponder the future integration of AI in their curricula, ChatGPT stands as a key player, presenting a broad spectrum of groundbreaking and transformative educational opportunities (Lim et al. Citation2023).

The integration of ChatGPT in the academic landscape has provoked significant scholarly interest, as evidenced by the extensive range of topics covered in current peer-reviewed literature. This body of work includes investigations into the application of ChatGPT in various educational settings, with notable emphasis on its incorporation into general education (Cotton, Cotton, and Shipway Citation2023) and medical education (Gilson et al. Citation2023). The methodologies employed in these studies are diverse and innovative, ranging from conducting interviews directly with ChatGPT to evaluate its educational impact (Lund and Wang Citation2023), to exploring the broader spectrum of implications associated with ChatGPT in academic research, writing, and publishing. These studies highlight the potential advantages and challenges presented by ChatGPT (Perkins Citation2023). It is important to note, however, that a portion of the contributions to this field, such as commentaries, letters, and editorials, may not have undergone stringent peer-review processes, reflecting the nascent nature of this research domain.

To examine and analyze technology acceptance among the student demographic, our study utilizes the Unified Theory of Acceptance and Use of Technology (UTAUT2) as the foundational theoretical framework. This model, initially conceptualized and subsequently refined by Venkatesh and colleagues (Venkatesh et al. Citation2003; Venkatesh and Xu Citation2012), has demonstrated its efficacy as a diagnostic instrument for interpreting technology assimilation within diverse educational settings. The UTAUT2 model has been employed in a spectrum of studies exploring an array of educational technologies, including, but not limited to, animations (Dajani and Abu Hegleh Citation2019), lecture capture systems (Farooq et al. Citation2017), e-learning platforms (Zacharis and Nikolopoulou Citation2022), mobile devices (Hoi Citation2020), and learning management systems (Raza et al. Citation2021; Zwain Citation2019). The application of UTAUT2 in our investigation is anticipated to facilitate a holistic understanding of student engagement and acceptance patterns in relation to ChatGPT, thereby augmenting the body of knowledge in this nascent area of research.

This methodological approach aligns with the one recently employed in a study that investigated the factors influencing the acceptability and use of ChatGPT among Polish university students (Strzelecki Citation2023). The findings reported in that study (Strzelecki Citation2023) corroborated the suitability of the adapted UTAUT2 model for understanding ChatGPT usage among the student population, identifying Habit as the construct with the strongest impact on Behavioral intention, followed by Performance Expectancy and Hedonic motivation. The study also observed statistically significant effects of Effort expectancy and Social influence on Behavioral intention, although with minimal effect sizes (f² < 0.02), indicating negligible impact (Sarstedt, Ringle, and Hair Citation2021). Additionally, the inclusion of Personal Innovativeness (a construct not typically encompassed in the UTAUT2 framework) exhibited a slightly positive influence (f² < 0.02) on Behavioral intention.

The Present Study

Our study seeks to build upon and provide further context to the findings of Strzelecki (Citation2023) by examining the same research questions within a different socio-cultural setting: a sample of higher education students in Norway, roughly six months after Strzelecki’s original study. This investigation into the Norwegian higher education context can help uncover how distinct cultural and educational environments influence the dynamics of technology acceptance and use. Despite the relatively short interval since the original study, the rapid pace of ChatGPT adoption could have already significantly affected student attitudes and behaviors toward the AI tool. Our approach is supported by previous literature advocating for the examination of technology acceptance across a variety of cultural backgrounds (Menon and Shilpa Citation2023; Strzelecki Citation2023), emphasizing the importance of diverse samples to improve our understanding of how new AI technologies are adopted in different contexts.

Our proposed hypotheses were based on the hypotheses related to the standard UTAUT2 model (Venkatesh and Xu Citation2012) that were proposed and confirmed in the study of Strzelecki (Strzelecki Citation2023) and were pre-registered prior to data collection (see pre-registration information in the method section). The hypotheses that were tested were the following:

H1:

Performance Expectancy affects directly and significantly Behavioral intention.

Performance expectancy, as conceptualized in earlier work (Davis Citation1989; Venkatesh et al. Citation2003), is an individual’s belief in how a specific technology will enhance their task performance. Its crucial role in the adoption of educational technologies has been emphasized (El-Masri and Tarhini Citation2017). This role has been reported in the published scientific literature on the use and acceptance of Google Classroom (Kumar and Bervell Citation2019), as well as in the context of mobile learning and learning management systems (Arain et al. Citation2019; Raman and Don Citation2013). In the setting of ChatGPT’s use in higher education, performance expectancy pertains to students’ expectations of its educational benefits.

H2:

Effort expectancy affects directly and significantly Behavioral intention.

Effort expectancy (Moore and Benbasat Citation1991; Venkatesh et al. Citation2003) relates to how effortless a technology appears to users. Recent research (Hu, Laxman, and Lee Citation2020; Jakkaew and Hemrungrote Citation2017; Raza et al. Citation2021) highlights its importance in adopting educational technologies like mobile learning and learning management systems. In the context of ChatGPT, effort expectancy refers to students’ perceptions of its ease of use.

H3:

Social influence affects directly and significantly Behavioral intention.

In the context of technology adoption within educational contexts, the concept of Social influence is considered to be critical. Such a concept is defined as the perceived support or encouragement from influential figures in one’s social sphere – such as peers, teachers, or other key individuals – for using a particular technology (Ajzen Citation1991; Venkatesh et al. Citation2003). This phenomenon is fundamental in shaping students’ intentions to use technology in educational environments. Several pieces of evidence have highlighted the substantial impact of Social influence on adopting various educational technologies. This includes mobile learning (Nikolopoulou, Gialamas, and Lavidas Citation2020), e-learning platforms (Samsudeen and Mohamed Citation2019), and learning management systems (Ain, Kaur, and Waheed Citation2016). Within the parameters of this study, Social influence is conceptualized as the extent to which students perceive their peers, educators, and other prominent figures in their educational setting as supportive of their engagement with innovative tools like ChatGPT.

H4:

Facilitating conditions affect directly and significantly Use behavior.

Facilitating conditions, which pertain to the perceived availability of necessary resources and support for effective technology use (Taylor and Todd Citation1995; Venkatesh et al. Citation2003), play a critical role in influencing learners’ Behavioral intention and Use behavior. This concept is recognized as a significant factor in technology adoption (Faqih and Jaradat Citation2021; Kang et al. Citation2014; Osei, Kwateng, and Boateng Citation2022), particularly in the context of educational technologies such as mobile learning, e-learning platforms, and augmented reality. In relation to ChatGPT, facilitating conditions focus on students’ access to the tool, including technical support and training availability, especially during periods of high demand.

H5:

Hedonic motivation affects directly and significantly Behavioral intention.

Hedonic motivation is crucial for technology adoption in educational settings and refers to the use of technology for enjoyment or novelty (Van Der Heijden Citation2004; Venkatesh and Xu Citation2012). Previous studies have shown its significant impact in various contexts, such as mobile learning (Azizi, Roozbahani, and Khatony Citation2020) and e-learning (Twum et al. Citation2021). This concept is particularly relevant in understanding the adoption of new and potentially enjoyable AI tools like ChatGPT among students.

H6 and H7:

Habit affects directly and significantly Behavioral intention and Habit affects directly and significantly Use behavior.

Habit, defined as the degree to which the use of technology becomes a routine and automatic behavior, plays a significant role in the adoption of technology among higher education students (Limayem and Cheung Citation2007; Venkatesh and Xu Citation2012). Its importance has been shown in the case of mobile learning adoption (Ameri et al. Citation2020; Yu et al. Citation2021), as well as in the use of e-learning platforms (Alotumi Citation2022; Zacharis and Nikolopoulou Citation2022). In studying ChatGPT usage, habit is characterized by the regularity and consistency of its integration into students’ academic practices, considering usage frequency. Habit has often shown a direct effect on use behavior (e.g., Tamilmani et al. Citation2021).

H8:

Behavioral intention affects directly and significantly Use behavior.

Behavioral intention is defined as an individual’s likelihood or plan to use a technology in the future (Davis Citation1989; Venkatesh and Xu Citation2012). In the proposed context it relates to students’ intentions to use ChatGPT in higher education. This intention is a crucial predictor of actual technology use and is shaped by the UTAUT2 model constructs.

Use behavior refers to the actual employment of a technology, influenced by prior Behavioral intentions (Venkatesh and Xu Citation2012). In this context, it encompasses the frequency, duration, and patterns of ChatGPT usage in academic work, and is also affected by Habit.

Furthermore, the present research examines Experience, Age, and Gender as potential moderating variables that may influence the dynamics between predictive factors and both Behavioral intention and Use Behavior in relation to students’ ChatGPT usage. These moderators are integral to the standard UTAUT2 framework. The study of Strzelecki (Strzelecki Citation2023) did not include Experience, as the ChatGPT technology was very new when the data were collected. Thus, experience was deemed to be low and not relevant for all the participants. Furthermore, the study of Strzelecki (Strzelecki Citation2023) used Study year, and not Age as in the original UTAUT2 model, as a moderating factor. In our study, we decided to adhere to the standard UTAUT2 model and include Age as a moderating factor. Price value is a construct included in the original UTAUT2 formulation (Venkatesh and Xu Citation2012) and is often included in adaptations of the UTAUT2 model, including those investigating technology adoption in education (see e.g., Nikolopoulou, Gialamas, and Lavidas Citation2020). The Price value construct was not included in the present study (as it was not included in Strzelecki Citation2023), since the basic ChatGPT service is for the moment free to use. The existing literature provides insufficient evidence to formulate a definitive hypothesis regarding the interaction between predictive and moderating factors. Consequently, the analysis of the interaction between model predictors and moderator variables is primarily exploratory (pre-registered).

Method

The study hypotheses, methodology, and data analysis plan were pre-registered using the Open Science Framework service. The pre-registration can be consulted using this link (https://osf.io/kcwyb/?view_only=0bafb17ac0694419bc58f7e0999b76df; the link is anonymized for peer review).

Sample and Procedure

The adequate sample size for PLS-SEM depends on multiple factors, such as the complexity of the model, the number of latent constructs and indicators, the effect sizes of the studied phenomena, and the targeted statistical power level (Hair, Ringle, and Sarstedt Citation2013). While some scholars (e.g., Kock Citation2018) advocate a minimum of 100–200 observations, the empirical literature shows that PLS-SEM studies are often performed with smaller sample sizes (N < 100; see Zeng et al. Citation2021). It has been suggested that PLS-SEM is effective even with limited sample sizes (Hair, Ringle, and Sarstedt Citation2011), and it has been advised that the minimum sample should be at least ten times the maximum number of formative indicators employed for a construct or ten times the largest number of structural paths pointing at any latent variable, whichever is greater (Hair, Ringle, and Sarstedt Citation2011). According to these guidelines, our study’s minimum sample size is calculated at sixty, derived by multiplying ten by the highest number of paths pointing at the latent construct Behavioral intention, given that each of our constructs comprises fewer than seven items. The stopping rule for data collection was pre-registered as 15 December 2023 or, if fewer than 100 participants had responded by that date, the point at which data from at least 100 participants had been collected. On the stop date, we had collected data from 105 participants, and data collection was therefore considered complete.
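The ten-times heuristic described above reduces to a single multiplication. The following is a minimal sketch, not the pre-registered calculation itself: the function name is hypothetical, and the inputs (at most four indicators per construct, six structural paths pointing at Behavioral intention) are assumptions chosen to be consistent with the item counts and the minimum of sixty reported in the text.

```python
def ten_times_rule(max_indicators: int, max_structural_paths: int) -> int:
    """Minimum sample size under the ten-times heuristic.

    Takes ten times the larger of (a) the largest number of indicators
    on any single construct and (b) the largest number of structural
    paths pointing at any single latent variable.
    """
    return 10 * max(max_indicators, max_structural_paths)


# Assumed inputs: the largest construct has 4 items, and 6 paths point
# at Behavioral intention (the most heavily predicted construct).
minimum_n = ten_times_rule(max_indicators=4, max_structural_paths=6)
print(minimum_n)
```

Under these assumptions the collected sample of 104 exceeds the heuristic minimum, although the heuristic says nothing about statistical power for small effects.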

The data collection was carried out using a survey in Microsoft Forms (Office 365) and complied with the General Data Protection Regulation (GDPR) and the Declaration of Helsinki. No further assessments were needed for this type of study in line with local and national regulations. The participants were informed of the purpose of the study, that their participation was voluntary, and that no personal, sensitive, or identifiable data were collected. The survey was distributed directly to the e-mail addresses of students enrolled in various universities located in the western and central regions of Norway during the months of October and November 2023. Concurrently, an in-person recruitment strategy was employed by placing posters promoting the study on the university campuses. These posters included printed QR codes for easy survey access. The survey was kept open until the 15th of December 2023. From this process, 105 responses were collected. However, following our pre-registered data exclusion criteria, one respondent was omitted from the data analysis due to incomplete responses (less than 80% completion). Consequently, data from 104 participants were subjected to analysis. Demographically, the sample comprised 67 female students (64.4%) and 37 male students (35.6%). The mean age of participants was 25 years (SD = 4.19), with a minimum and maximum age of 20 and 47 years, respectively.

Instruments

The present research utilized the scale approach proposed in Strzelecki (Citation2023) and items adapted from the Unified Theory of Acceptance and Use of Technology scales, UTAUT and UTAUT2 (Venkatesh et al. Citation2003; Venkatesh and Xu Citation2012). These adaptations were specifically revised to evaluate the usage of ChatGPT, modifying the original context from “using the system” and “using mobile Internet” to “using ChatGPT”. The constructs measured (Venkatesh et al. Citation2003; Venkatesh and Xu Citation2012) were Behavioral intention (3 items), Effort expectancy (4 items), Facilitating conditions (3 items), Hedonic motivation (3 items), Habit (4 items), Performance Expectancy (4 items), and Social influence (3 items). These items were all measured using a seven-point Likert scale, offering choices from strongly disagree to strongly agree. The Use behavior construct was measured using a single item, adapted from Venkatesh and Xu’s (Citation2012) study, as proposed in Strzelecki (Citation2023), on a seven-option scale ranging from never to several times a day. Numerical values from 1 to 7 were assigned to these options so that the variable could be included in the model. These were as follows: 1 for Never, 2 for Once a month, 3 for Several times a month, 4 for Once a week, 5 for Several times a week, 6 for Once a day, and 7 for Several times a day. The items of the Norwegian version of the scale are available in the supplementary materials. Please note that in the study of Strzelecki (Citation2023), the construct Facilitating conditions originally had 4 items, but 1 was eliminated due to a poor factor loading, and therefore only the 3-item version of the scale was used in this study.
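The ordinal coding of the Use behavior item can be written as a simple lookup table. The sketch below is illustrative only: the English labels are taken from the list above (the survey itself was administered in Norwegian), and the function name and example responses are hypothetical.

```python
# Ordinal coding of the single Use behavior item, as listed in the text.
USE_BEHAVIOR_CODES = {
    "Never": 1,
    "Once a month": 2,
    "Several times a month": 3,
    "Once a week": 4,
    "Several times a week": 5,
    "Once a day": 6,
    "Several times a day": 7,
}


def encode_use_behavior(responses):
    """Map response labels to 1-7 scores; an unknown label raises KeyError."""
    return [USE_BEHAVIOR_CODES[label] for label in responses]


# Hypothetical responses for illustration (not study data).
example = ["Never", "Several times a week", "Once a day"]
print(encode_use_behavior(example))
```

Raising an error on unknown labels, rather than silently skipping them, makes mislabeled or untranslated response options visible before the scores enter the model.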

In total, this study utilized 25 survey items to investigate the proposed hypotheses. These items were translated and adapted into Norwegian from the original English version developed by Strzelecki (Citation2023), and reported in the research article. This translation process involved several stages to ensure accuracy. Initially, the items were translated from English to Norwegian by a proficient Norwegian-speaking researcher. Subsequently, a process of retro-translation was undertaken by a different individual, who translated the Norwegian version back into English. This step is critical in cross-cultural research to ensure that the translated items retain the meaning and nuance of the original items. Finally, a third expert reviewed both the translation and adaptation to confirm the fidelity and appropriateness of the translated items. This multi-step process is important to maintain the integrity and validity of the survey items, ensuring that they are both linguistically accurate and culturally sensitive (Brislin Citation1970).

Data Analysis and Statistics

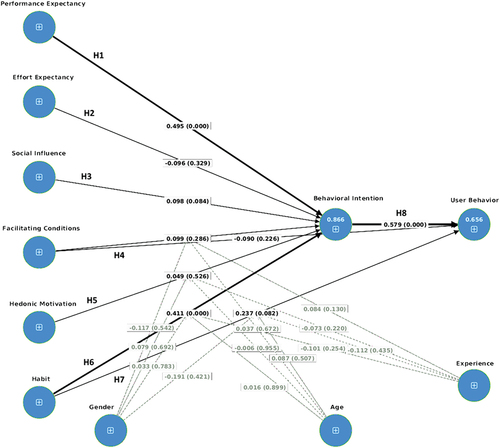

In the present research, the Partial Least Squares Structural Equation Modeling (PLS-SEM) algorithm was employed to estimate the model, utilizing the SmartPLS 4 software (Version 4.0.9.8). The analysis was conducted with the path weighting scheme, setting a cap of 3000 iterations, and using the software’s default settings for initial weights. To assess the statistical significance of the PLS-SEM outcomes, a nonparametric bootstrapping approach was used, running a total of 5000 samples. This method is in line with published literature (Hair Jr, Howard, and Nitzl Citation2020; Hair, Ringle, and Sarstedt Citation2011). The tested model is presented in Figure 1. A standard significance level of alpha = .05 was used for all the analyses.
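The logic of the nonparametric bootstrapping mentioned above can be sketched outside SmartPLS. The example below is not the PLS-SEM algorithm and does not reproduce the software's procedure: it resamples cases with replacement 5000 times and builds a percentile interval for a single standardized coefficient (the Pearson correlation, which equals the standardized slope in a one-predictor model). All data are synthetic, and the function names and seeds are assumptions made for illustration.

```python
import random
import statistics


def pearson_r(x, y):
    """Pearson correlation (standardized slope in a one-predictor model)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_ci(x, y, n_boot=5000, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for pearson_r(x, y)."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        # Resample whole cases (respondents) with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        estimates.append(pearson_r([x[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Synthetic scores for 104 hypothetical respondents (not the study data).
rng = random.Random(0)
predictor = [rng.gauss(0, 1) for _ in range(104)]
outcome = [0.5 * p + rng.gauss(0, 1) for p in predictor]

low, high = bootstrap_ci(predictor, outcome)
print(f"r = {pearson_r(predictor, outcome):.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

A coefficient is conventionally treated as significant at the .05 level when the 95% interval excludes zero; in a full PLS-SEM analysis the entire model is re-estimated on every resample rather than a single correlation.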

Results

Reflective constructs were examined through their respective indicator loadings. An indicator loading surpassing the threshold of 0.7 was considered indicative of satisfactory item reliability. Analyses showed that all items had an acceptable loading on their respective constructs (Table 1).

Table 1. Loading, Means and Standard Deviations (SD) for the study constructs.

Composite reliability (rho c and rho a values) was used to evaluate construct reliability. While almost all the computed values ranged between 0.70 and 0.95, indicative of adequate to high reliability (Afthanorhan Citation2013), the rho c values for Behavioral intention and Performance expectancy, as well as the rho a value for Habit, exceeded the upper threshold of 0.95. It is worth mentioning that some studies (see e.g., Diamantopoulos et al. Citation2012) have highlighted that very high reliability values, exceeding 0.95, might indicate problems in the measurement instruments.

Internal consistency reliability was evaluated using Cronbach’s alpha, and all constructs showed acceptable values. The average variance extracted (AVE) from all items related to a specific reflective variable was calculated to ascertain the convergent validity of the measurement models. An AVE threshold of 0.50 or higher was deemed satisfactory for this purpose (Sarstedt, Ringle, and Hair Citation2021). The results for composite reliability, Cronbach’s alpha, and AVE satisfied the standard quality criteria (Table 2).
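For readers unfamiliar with these coefficients, composite reliability (rho c) and AVE can both be computed directly from standardized indicator loadings using the standard formulas. The sketch below is a minimal illustration with hypothetical loadings; it does not use the study's values, and the function names are assumptions.

```python
def composite_reliability(loadings):
    """rho c: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each error variance is 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Hypothetical standardized loadings for a three-item construct.
loadings = [0.80, 0.85, 0.90]
rho_c = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(round(rho_c, 3), round(ave, 3))
```

Because both quantities rise with the loadings, a construct whose items all load very highly can exceed the 0.95 reliability ceiling discussed above while still comfortably passing the AVE criterion of 0.50.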

Table 2. Reliability and validity coefficients for the study constructs.

For the assessment of discriminant validity within the PLS-SEM framework, the Heterotrait-Monotrait ratio of correlations (HTMT) was employed (Henseler, Ringle, and Sarstedt Citation2014). Generally, an HTMT threshold of .90 is recommended for constructs with some degree of conceptual similarity (Henseler, Ringle, and Sarstedt Citation2014). The values are presented in Table 3. The analysis revealed substantial overlap among certain constructs. In particular, Effort expectancy and Facilitating conditions showed a high HTMT value of 0.926, indicating a considerable lack of distinctiveness between these variables. This suggests a potential ambiguity in how participants perceive and interpret these constructs. Similarly, Behavioral intention and Performance Expectancy displayed an HTMT value of 0.949. All other relationships between variables fell below the threshold of 0.90. These findings collectively suggest that some of the constructs used may overlap somewhat and may not capture distinct phenomena as initially theorized and as shown in previous research (Strzelecki Citation2023).
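The HTMT ratio can be computed from an item correlation matrix: the mean correlation between items of different constructs is divided by the geometric mean of the average within-construct item correlations. The sketch below is a minimal illustration under stated assumptions; the correlation matrix is hypothetical, the function name is an assumption, and each construct must have at least two items.

```python
from itertools import combinations, product
from statistics import fmean


def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs.

    corr is a square item correlation matrix (nested lists); items_a and
    items_b are the row/column indices of each construct's items.
    """
    # Mean correlation between items belonging to different constructs.
    hetero = fmean(corr[i][j] for i, j in product(items_a, items_b))
    # Mean correlation among items within each construct.
    mono_a = fmean(corr[i][j] for i, j in combinations(items_a, 2))
    mono_b = fmean(corr[i][j] for i, j in combinations(items_b, 2))
    return hetero / (mono_a * mono_b) ** 0.5


# Hypothetical correlations: items 0-1 measure construct A, items 2-3 construct B.
corr = [
    [1.0, 0.8, 0.4, 0.5],
    [0.8, 1.0, 0.6, 0.5],
    [0.4, 0.6, 1.0, 0.5],
    [0.5, 0.5, 0.5, 1.0],
]
ratio = htmt(corr, items_a=[0, 1], items_b=[2, 3])
print(round(ratio, 3))
```

In this toy example the ratio stays below .90; values above that threshold, such as those reported for Effort expectancy and Facilitating conditions, arise when cross-construct correlations approach the within-construct ones.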

Table 3. Values obtained from the HTMT analyses.

In examining the efficacy of predictive constructs and the overall statistical model, researchers often utilize the coefficient of determination, denoted as R². This metric, which can assume values between 0 and 1, indicates a model’s explanatory capacity, with higher values representing greater explanatory strength. Within this context, R² values of 0.25, 0.50, and 0.75 are traditionally interpreted as indicative of weak, moderate, and substantial explanatory power, respectively (Hair, Ringle, and Sarstedt Citation2011). Furthermore, the effect size of a variable, Cohen’s f², is interpreted using thresholds of 0.35, 0.15, and 0.02, representing large, medium, and small effects, respectively. Importantly, values below 0.02 are in general interpreted as indicative of a negligible impact (Sarstedt, Ringle, and Hair Citation2021). The analysis of the PLS-SEM model is illustrated in Figure 2, where path coefficients reveal the relationships among variables (with p-values in parentheses), and R² values are shown within circles. The analysis highlights Performance Expectancy as the strongest predictor of Behavioral intention with a path coefficient of .495, followed by Habit with a path coefficient of .411. Behavioral intention exerts the most pronounced impact on Use Behavior (.579). No other significant relationships were revealed by the analyses. The complete results of these tests, including R², significance level, and f², are presented in Table 4.
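Cohen's f² for a predictor compares the R² of the model with and without that predictor, relative to the variance left unexplained by the full model. The following sketch applies the standard formula and the thresholds quoted above; the function names and the example R² values are hypothetical and are not taken from the study's results.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """Effect size of one predictor: the gain in R2 from including it,
    divided by the variance left unexplained by the full model."""
    return (r2_included - r2_excluded) / (1 - r2_included)


def label_f2(f2: float) -> str:
    """Classify f2 using the conventional 0.02 / 0.15 / 0.35 thresholds."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical example: R2 drops from 0.50 to 0.40 when a predictor is removed.
effect = cohens_f2(r2_included=0.50, r2_excluded=0.40)
print(round(effect, 2), label_f2(effect))
```

The same change in R² produces a larger f² when the full model already explains much of the variance, because the denominator shrinks as R² grows.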

Figure 2. Results of the PLS-SEM for the UTAUT2 model adapted to ChatGPT acceptance and use in university students. Path coefficients and p-values (in parentheses) are shown within the arrows. Arrow thickness reflects the absolute values of the path coefficients. R-squared values are shown in blue circles.

Table 4. Path coefficient, significance tests, effect size, and hypotheses support.

The study also tested – as exploratory analyses – the moderating roles of Gender, Age, and Experience. The results indicate that none of the tested moderating variables had a statistically significant influence on the relationships between the predictors and dependent variables under examination ().

Discussion

In this investigation, we explored the perception and utilization of the AI-driven platform, ChatGPT, among a cohort of Norwegian university students. This exploration was anchored in an adapted framework of the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2), a model previously proposed by Strzelecki (Strzelecki Citation2023). Our empirical findings corroborate Strzelecki’s (Citation2023) findings that Performance Expectancy and Habit emerge as principal determinants in shaping Behavioral intention. Further, as in Strzelecki’s study (Strzelecki Citation2023), our findings also show that Behavioral intention significantly predicts Use behavior. However, contrary to Strzelecki’s (Citation2023) findings, our study reveals that other variables within the model, i.e., Effort expectancy, Social influence, and Hedonic motivation, do not significantly influence Behavioral intention. Moreover, neither Facilitating conditions nor Habit significantly predicts Use behavior.

These outcomes are in line with previous research (Venkatesh, Thong, and Xu Citation2016; Yu et al. Citation2021), which underscored the pivotal role of Performance Expectancy and Habit in embracing nascent technologies within the higher education sector. Specifically, within the realm of AI-enabled conversational agents like ChatGPT, our data indicate that students’ expectations of the technology’s potential to augment academic performance, coupled with their usage frequency, foster the development of Behavioral intention.

Performance Expectancy emerges as the predominant predictor of Behavioral intention, aligning with prior research that identified a positive correlation between these two constructs across various domains, such as learning management systems (Raza et al. Citation2021) and mobile learning environments (Kang et al. Citation2014). Furthermore, Performance Expectancy has been recognized as a significant forecaster of Behavioral intention in diverse studies, including examination of students’ perceptions of video conferencing tools (Edumadze et al. Citation2022) and research on the intention to use interactive whiteboards (Wong, Teo, and Goh Citation2013).

In our study, Habit was identified as the second most robust predictor of Behavioral intention toward ChatGPT use among university students. This finding aligns with the broader narrative in technology acceptance research within higher education, where Habit is often seen as a significant positive influence on Behavioral intention. For instance, some research (Osei, Kwateng, and Boateng Citation2022; Zwain Citation2019) highlighted Habit as a key driver in the acceptance and adoption of learning management systems and e-learning, respectively. However, our results deviate from other research (Ain, Kaur, and Waheed Citation2016; Twum et al. Citation2021) that reported an absence of direct impact of Habit on the Behavioral intention to use learning management systems and e-learning. Our research did not find a significant connection between Habit and Use Behavior (for a review see Tamilmani, Rana, and Dwivedi Citation2018).

In contrast to the findings reported by Strzelecki (Citation2023), our study did not observe a positive correlation between Hedonic motivation and Behavioral intention in the usage of ChatGPT. This discrepancy may be attributed to the varying timeframes of the two studies. Strzelecki’s study was based on data gathered during March 2023, a relatively short time after ChatGPT’s launch in late 2022. During its initial phase, ChatGPT might have been perceived as an enjoyable novelty, owing to its unique dialog-based interface that differed from previous computerized tools. However, as users have become more accustomed to ChatGPT, its appeal as a fun tool has possibly diminished, with users focusing more on its capability to enhance work performance. This shift is in line with the findings of previous studies (Alotumi Citation2022; Mehta et al. Citation2019), where Hedonic motivation did not significantly predict Behavioral intention in the context of Google Classroom and e-learning adoption. However, Hedonic motivation is often found to be associated with Behavioral intention (for a review, see Tamilmani et al. Citation2019).

Moreover, our investigation indicates that Effort expectancy and Social influence do not significantly impact Behavioral intention to use ChatGPT, in contrast to Strzelecki’s (Citation2023) findings, where these variables exhibited minimal effect sizes. Our results suggest that the ease of using ChatGPT in higher education does not significantly influence Behavioral intention. This is consistent with observations in studies on e-learning platform usage during social distancing (Zacharis and Nikolopoulou Citation2022) and Microsoft PowerPoint adoption in higher education (Chávez Herting, Cladellas Pros, and Castelló Tarrida Citation2020). Students’ rapid adaptability and skill acquisition toward innovative technologies could be a contributing factor to these findings.

Our research indicates that Social influence does not impact the Behavioral intention to utilize ChatGPT among university students. This finding may suggest that people with an educated background, such as university students, are less swayed by external societal pressures. Given the novelty of ChatGPT and the fact that it has yet to reach mainstream status, our findings imply an absence of significant social pressure to adopt this tool. However, it is conceivable that as academic institutions begin to formalize policies around the usage of AI tools like ChatGPT, Social influence might emerge as a more prominent factor. This hypothesis is supported by existing literature where Social influence has been a crucial determinant in the adoption of mobile devices (Hoi Citation2020) and mobile learning (Ameri et al. Citation2020). Nonetheless, other studies focusing on technologies like Google Classroom (Alotumi Citation2022; Kumar and Bervell Citation2019) have not established a significant role for Social influence.

Additionally, our study revealed that Facilitating conditions do not significantly influence Use Behavior. This finding diverges from that of Strzelecki (Citation2023), where Facilitating conditions were found to influence use behavior, albeit with a modest effect size (f² = .051). It is possible that differences in demographic characteristics or levels of technological familiarity between our sample and that used by Strzelecki (Citation2023) could account for the varying perceptions of the importance and impact of Facilitating conditions.
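
To make the reported effect size easier to interpret, the following minimal Python sketch computes Cohen's f² for a single predictor from the R² of the endogenous construct with and without that predictor, and labels it with the conventional benchmarks (0.02 small, 0.15 medium, 0.35 large). The function names and the R² values are hypothetical and chosen only for illustration; they are not taken from our analysis or from Strzelecki's.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f2 for one predictor: the drop in R2 when the predictor is
    removed, relative to the variance left unexplained by the full model."""
    if not 0.0 <= r2_excluded <= r2_included < 1.0:
        raise ValueError("expected 0 <= r2_excluded <= r2_included < 1")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def label_f2(f2: float) -> str:
    """Conventional benchmarks: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# Hypothetical R2 values (assumption, for illustration only).
example_f2 = cohens_f2(r2_included=0.50, r2_excluded=0.475)
print(round(example_f2, 3), label_f2(example_f2))

# The value reported by Strzelecki falls in the "small" band.
print(label_f2(0.051))
```

Under these benchmarks, an f² of .051 sits just above the lower bound for a small effect, which is consistent with describing it as modest.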

Other variables, such as Age, Gender, and Experience, did not modulate or mediate any of the relationships where they were tested. This is in line with what was found in the study of Strzelecki (Citation2023). The absence of Experience as a mediator in ChatGPT adoption is noteworthy and is potentially attributable to the ease of use of ChatGPT compared to many other digital technologies. Unlike other technologies where experience can influence several aspects of adoption by reducing perceived complexity, ChatGPT’s user-friendly design ensures that both novice and expert users can use it with similar ease. This equalizes user competence across experience levels, suggesting that the platform’s intuitive nature minimizes the impact of experience on the factors that affect willingness to use it.

This pre-registered study was designed as a conceptual replication and extension of Strzelecki’s work (Strzelecki Citation2023), aiming to scrutinize a cohort of students from a different nation and to offer a fresh temporal perspective on the development of the use of ChatGPT in the context of education. In contrast to Strzelecki’s research, which focused on the initial responses of students to ChatGPT, our analysis captures the views of students who have interacted with this technology for approximately one year post-release. This indirect longitudinal element is fundamental, shedding light on the evolving perceptions and adaptations of users over time. Such insights are important for understanding long-term engagement and the progression of attitudes toward ChatGPT within an educational context.

The present investigation aligns with the recommendations of Menon and Shilpa (Citation2023), who highlighted the necessity of region-specific research for a more effective generalization of the factors influencing user intentions toward ChatGPT. Furthermore, our study takes into consideration the differential levels of technology adoption across cultures by investigating the use of ChatGPT in a different cultural context, particularly contrasting the Nordic regions’ higher technology adoption rates with those of Poland. Statistics have shown that Nordic countries exhibit a generally higher rate of technology adoption compared to Poland (Kinnunen, Androniceanu, and Georgescu Citation2019; Paraschiv et al. Citation2021). Consequently, the disparities observed between the findings of our study and those obtained from the investigation involving Polish university students (Strzelecki Citation2023) could potentially be attributed to the broader societal attitudes toward technology prevalent in these regions. Nonetheless, it is important to note that this interpretation remains speculative. To support this possible explanation, additional data from nations with varying levels of technology adoption and acceptance are needed.

Our study acknowledges several limitations, most notably in some of the observed HTMT values, which exceed the 0.9 threshold. This contrasts with previous research in technology acceptance, such as that conducted by Strzelecki (Citation2023). One potential factor contributing to these elevated HTMT values could be the unique characteristics of our participant sample. Variations in demographics, cultural contexts, or technological expertise among the participants might have led to distinct interpretations of the survey constructs. The relatively small effect size may also have affected the HTMT analyses. The specificities of our sample are significant as they can greatly impact the perception and reporting of constructs within the study. The design and translation/adaptation of our measurement items might also have introduced variations. Even slight alterations in the phrasing of questions or the context in which they are presented can shift respondents’ understanding and responses. This can result in construct relationships that differ from those identified in prior research. These contextual settings of our research could have influenced the outcomes of the HTMT analyses, potentially leading to a closer correlation between constructs that were previously more distinct.
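
As an illustration of what the HTMT (heterotrait-monotrait ratio of correlations) criterion measures, the sketch below computes it for two constructs from item-level data: the mean correlation between items of different constructs, divided by the geometric mean of the mean within-construct item correlations. It is a simplified sketch on simulated data, not the software or data used in our analysis. The function names, the three-items-per-construct layout, the noise level, and the latent correlation of 0.95 are all assumptions made for the example.

```python
from __future__ import annotations

import numpy as np


def htmt(data: np.ndarray, items_a: list[int], items_b: list[int]) -> float:
    """HTMT ratio for two constructs, given the column indices of their items."""
    if len(items_a) < 2 or len(items_b) < 2:
        raise ValueError("each construct needs at least two items")
    corr = np.corrcoef(data, rowvar=False)

    # Heterotrait-heteromethod: items belonging to different constructs.
    hetero = corr[np.ix_(items_a, items_b)].mean()

    def mean_within(items: list[int]) -> float:
        # Monotrait-heteromethod: distinct items of the same construct.
        block = corr[np.ix_(items, items)]
        return block[np.triu_indices(len(items), k=1)].mean()

    return float(hetero / np.sqrt(mean_within(items_a) * mean_within(items_b)))


def simulate_items(n: int, rho: float, seed: int = 0) -> np.ndarray:
    """Simulate six items: columns 0-2 load on one latent variable,
    columns 3-5 on a second one correlated with the first at rho."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, rho], [rho, 1.0]])
    latent = rng.multivariate_normal(np.zeros(2), cov, size=n)
    noise = rng.normal(scale=0.5, size=(n, 6))
    return np.repeat(latent, 3, axis=1) + noise


# Hypothetical scenario: two strongly related constructs, n = 104 respondents.
sample = simulate_items(n=104, rho=0.95)
value = htmt(sample, [0, 1, 2], [3, 4, 5])
print(f"HTMT = {value:.2f}; exceeds 0.9 threshold: {value > 0.9}")
```

Because the ratio approximates the correlation between the underlying constructs corrected for measurement error, values close to or above 0.9 suggest that respondents did not clearly distinguish the two constructs, and with small samples the estimate can vary noticeably from one sample to the next.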

Some studies (Diamantopoulos et al. Citation2012) have highlighted that high reliability values, exceeding 0.95, might indicate issues in measurement instruments, and in our study some of the constructs exceeded this threshold for some of the values. While these high values might suggest a robust instrument, they often signal construct issues. Redundant indicators are a common cause, reducing construct validity by reflecting excessive similarity among items. Additionally, these high values might indicate straight-lining, a response pattern where participants consistently choose the same responses, leading to inflated error term correlations. However, it is important to note that certain constructs, particularly those that are well established and narrowly defined, may naturally yield high reliability scores due to their inherent consistency and specificity. In such cases, high reliability does not necessarily imply redundancy or response bias, but rather the precise capture of a specific psychological construct.
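
The two diagnostics mentioned above can be sketched in a few lines of Python. The example uses Cronbach's alpha as one common reliability coefficient (the reliability values discussed here may have been obtained with other coefficients as well) and a simple check for respondents who gave the identical answer to every item. The function names and the small response matrix are hypothetical and serve only as an illustration.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of one construct."""
    k = items.shape[1]
    sum_item_variances = items.var(axis=0, ddof=1).sum()
    total_score_variance = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - sum_item_variances / total_score_variance))


def straight_lining_share(responses: np.ndarray) -> float:
    """Share of respondents whose answers are identical across all items."""
    identical = (responses == responses[:, [0]]).all(axis=1)
    return float(identical.mean())


# Hypothetical 7-point Likert responses: 6 respondents, 3 items of one construct.
responses = np.array([
    [7, 7, 7],
    [6, 6, 6],
    [2, 2, 3],
    [5, 5, 5],
    [1, 1, 1],
    [4, 3, 4],
])

alpha = cronbach_alpha(responses)
share = straight_lining_share(responses)
print(f"alpha = {alpha:.3f}; above 0.95: {alpha > 0.95}")
print(f"share of straight-lining respondents = {share:.2f}")
```

A high share of identical response rows alongside a very high alpha would point toward straight-lining or item redundancy, whereas a high alpha with varied response rows is more compatible with a narrowly defined construct.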

The results of our study should be interpreted considering several limitations. Primarily, our research was geographically limited to Norway, reducing the broader applicability of our findings to other regions with different cultural and educational backgrounds. However, the study contributes to the generalization of some of the findings reported previously for a sample from a culturally different student population (Strzelecki Citation2023).

Furthermore, the rapid evolution of AI technology means our findings might quickly need to be updated. As new updates to ChatGPT are released, this tool may become even more suited for students’ tasks, thereby creating a need to update our findings. However, this limitation applies to all research within the rapidly evolving field of AI. It is important to understand the evolution of the field and its tools over time, including perceptions and usage, as addressed in our study.

Another significant limitation was our study’s sample size, which was relatively small. Our sample may not provide a comprehensive view of the broader student population’s attitudes and behaviors toward ChatGPT. However, it is worth noting that the population of reference (students at universities in western and central Norway) is also relatively small by international standards. Moreover, we did not consider the impact of university policies on AI tool usage, which could be a crucial factor in technology adoption. Our study purposely focused solely on the UTAUT2 model, leaving out other potentially influential factors.

The presented findings suggest that many factors of the UTAUT2 model do not significantly affect user behavior toward ChatGPT. Such results indicate that the motivations behind the acceptability and usage of AI tools may be different from those generally identified in the context of prior technologies. Future research should attempt to explore other variables potentially impacting AI technology acceptance and usage. Important factors that may potentially influence the acceptability and use of AI tools include attitudes toward AI (Grassini Citation2023a), ethical considerations in AI usage (Safdar, Banja, and Meltzer Citation2020), perceived trustworthiness of AI technology (Kaur et al. Citation2022), AI transparency and interpretability (also referred to as explainability; see Ehsan et al. Citation2021), perceived ethicality of AI (Schelble et al. Citation2022), and the role of user autonomy in technology interaction (Laitinen and Sahlgren Citation2021). These dimensions have the potential to offer a more comprehensive understanding of the factors influencing the acceptability and use of AI technologies in various societal and individual contexts.

Conclusion

The present study highlights the role of user perceptions in the adoption of AI technologies like ChatGPT in educational settings. Performance Expectancy and Habit were identified as critical in shaping Norwegian university students’ intentions to use ChatGPT. However, the lack of significant impact from Effort Expectancy, Social Influence, and Hedonic Motivation challenges prevailing assumptions in technology acceptance models. This research sheds light on the factors influencing AI technology adoption among university students and stimulates further inquiry into the evolving dynamics of user interaction with emerging AI technologies.

Author contribution Statement

Conceptualization, S.G., M.L.A.; methodology, S.G., M.L.A., A.M.; survey localization, M.L.A., A.M.; formal analysis, S.G.; data collection, S.G., M.L.A.; writing – original draft, S.G.; writing – review and editing, S.G., M.L.A., A.M. All authors have read and agreed on the submitted version of the manuscript.

Acknowledgements

We thank Aleksandra Sevic (University of Stavanger) for helping with the translation and localization of the questionnaire from English into Norwegian.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data supporting the conclusion of the present article are available in OSF and can be downloaded using the following link: https://osf.io/download/6581e515034461092c68f4f0/. The Norwegian version of the UTAUT ChatGPT use questionnaire is available in OSF and can be downloaded using the following link: https://osf.io/download/6581ec6a7094e90932a17485/.

References

- Adamopoulou, E., and L. Moussiades. 2020. Chatbots: History, technology, and applications. Machine Learning with Applications 2:100006. doi:10.1016/j.mlwa.2020.100006.

- Afthanorhan, W. 2013. A comparison of partial least square structural equation modeling (pls-sem) and covariance based structural equation modeling (cb-sem) for confirmatory factor analysis. International Journal of Engineering Science and Innovative Technology 2 (5):198–24.

- Agarwal, R., and M. Wadhwa. 2020. Review of state-of-the-art design techniques for chatbots. SN Computer Science 1 (5). doi:10.1007/s42979-020-00255-3.

- Ain, N., K. Kaur, and M. Waheed. 2016. The influence of learning value on learning management system use. Information Development 32 (5):1306–21. doi:10.1177/0266666915597546.

- Ajzen, I. 1991. The theory of planned behavior. Organizational Behavior and Human Decision Processes 50 (2):179–211. doi:10.1016/0749-5978(91)90020-T.

- Alotumi, M. 2022. Factors influencing graduate students’ behavioral intention to use google classroom: Case study-mixed methods research. Education and Information Technologies 27 (7):10035–63. doi:10.1007/s10639-022-11051-2.

- Ameri, A., R. Khajouei, A. Ameri, and Y. Jahani. 2020. Acceptance of a mobile-based educational application (LabSafety) by pharmacy students: An application of the UTAUT2 model. Education and Information Technologies 25 (1):419–35. doi:10.1007/s10639-019-09965-5.

- Androutsopoulou, A., N. Karacapilidis, E. Loukis, and Y. Charalabidis. 2019. Transforming the communication between citizens and government through ai-guided chatbots. Government Information Quarterly 36 (2):358–67. doi:10.1016/j.giq.2018.10.001.

- Arain, A. A., Z. Hussain, W. H. Rizvi, and M. S. Vighio. 2019. Extending utaut2 toward acceptance of mobile learning in the context of higher education. Universal Access in the Information Society 18 (3):659–73. doi:10.1007/s10209-019-00685-8.

- Azizi, S. M., N. Roozbahani, and A. Khatony. 2020. Factors affecting the acceptance of blended learning in medical education: Application of utaut2 model. BMC Medical Education 20 (1):367–67. doi:10.1186/s12909-020-02302-2.

- Brislin, R. W. 1970. Back-translation for cross-cultural research. Journal of Cross-Cultural Psychology 1 (3):185–216. doi:10.1177/135910457000100301.

- Buhalis, D., and E. S. Y. Cheng. 2019. Exploring the use of chatbots in hotels: Technology providers’ perspective. In Information and Communication Technologies in Tourism 2020, 231–42. Cham, Switzerland: Springer International Publishing.

- Camilleri, M. A., and C. Troise. 2023. Live support by chatbots with artificial intelligence: A future research agenda. Service Business 17 (1):61–80. doi:10.1007/s11628-022-00513-9.

- Casillo, M., F. Colace, L. Fabbri, M. Lombardi, A. Romano, and D. Santaniello. 2020. Chatbot in industry 4.0: An approach for training new employees. 2020 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), 371–76. IEEE.

- Chávez Herting, D., R. Cladellas Pros, and A. Castelló Tarrida. 2020. Habit and social influence as determinants of PowerPoint use in higher education: A study from a technology acceptance approach. Interactive Learning Environments 31 (1):497–513. doi:10.1080/10494820.2020.1799021.

- Cotton, D. R. E., P. A. Cotton, and J. R. Shipway. 2023. Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International 61 (2):228–39. doi:10.1080/14703297.2023.2190148.

- Crawford, J., M. Cowling, and K.-A. Allen. 2023. Leadership is needed for ethical chatgpt: Character, assessment, and learning using artificial intelligence (ai). Journal of University Teaching and Learning Practice 20 (3). doi:10.53761/1.20.3.02.

- Dajani, D., and A. S. Abu Hegleh. 2019. Behavior intention of animation usage among university students. Heliyon 5 (10):e02536–36. doi:10.1016/j.heliyon.2019.e02536.

- Davis, F. D. 1989. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 13 (3):319. doi:10.2307/249008.

- Diamantopoulos, A., M. Sarstedt, C. Fuchs, P. Wilczynski, and S. Kaiser. 2012. Guidelines for choosing between multi-item and single-item scales for construct measurement: A predictive validity perspective. Journal of the Academy of Marketing Science 40 (3):434–49. doi:10.1007/s11747-011-0300-3.

- Edumadze, J. K. E., K. A. Barfi, V. Arkorful, and N. O. Baffour Jnr. 2022. Undergraduate student’s perception of using video conferencing tools under lockdown amidst COVID-19 pandemic in ghana. Interactive Learning Environments 31 (9):5799–810. doi:10.1080/10494820.2021.2018618.

- Ehsan, U., Q. V. Liao, M. Muller, M. O. Riedl, and J. D. Weisz. 2021. Expanding explainability: Towards social transparency in ai systems. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama Japan, 1–19.

- El-Masri, M., and A. Tarhini. 2017. Factors affecting the adoption of e-learning systems in Qatar and USA: Extending the Unified Theory of Acceptance and Use of Technology 2 (utaut2). Educational Technology Research & Development 65 (3):743–63. doi:10.1007/s11423-016-9508-8.

- Faqih, K. M. S., and M.-I. R. M. Jaradat. 2021. Integrating ttf and utaut2 theories to investigate the adoption of augmented reality technology in education: Perspective from a developing country. Technology in Society 67:101787. doi:10.1016/j.techsoc.2021.101787.

- Farooq, M. S., M. Salam, N. Jaafar, A. Fayolle, K. Ayupp, M. Radovic-Markovic, and A. Sajid. 2017. Acceptance and use of lecture capture system (lcs) in executive business studies. Interactive Technology & Smart Education 14 (4):329–48. doi:10.1108/ITSE-06-2016-0015.

- Gilson, A., C. W. Safranek, T. Huang, V. Socrates, L. Chi, R. A. Taylor, and D. Chartash. 2023. How does chatgpt perform on the United States Medical Licensing Examination? The implications of large language models for medical education and knowledge assessment. JMIR Medical Education 9:e45312–12. doi:10.2196/45312.

- González-González, C. S., V. Muñoz-Cruz, P. A. Toledo-Delgado, and E. Nacimiento-García. 2023. Personalized gamification for learning: A reactive chatbot architecture proposal. Sensors 23 (1):545. doi:10.3390/s23010545.

- Grassini, S. 2023a. Development and validation of the ai attitude scale (aias-4): A brief measure of general attitude toward artificial intelligence. Frontiers in Psychology 14:14. doi:10.3389/fpsyg.2023.1191628.

- Grassini, S. 2023b. Shaping the future of education: Exploring the potential and consequences of ai and chatgpt in educational settings. Education Sciences 13 (7):692. doi:10.3390/educsci13070692.

- Grassini, S., and M. Koivisto. 2024. Artificial creativity? Evaluating AI against human performance in creative interpretation of visual stimuli. International Journal of Human–Computer Interaction 1–12.

- Grimaldi, G., B. Ehrler, and Ai. 2023. Machines are about to change scientific publishing forever. ACS Energy Letters 8 (1):878–80. doi:10.1021/acsenergylett.2c02828.

- Guzik, E. E., C. Byrge, and C. Gilde. 2023. The originality of machines: Ai takes the Torrance test. Journal of Creativity 33 (3):100065. doi:10.1016/j.yjoc.2023.100065.

- Hair Jr, J. F., M. C. Howard, and C. Nitzl. 2020. Assessing measurement model quality in pls-sem using confirmatory composite analysis. Journal of Business Research 109:101–10. doi:10.1016/j.jbusres.2019.11.069.

- Hair, J., C. Ringle, and M. Sarstedt. 2013. Partial least squares structural equation modeling: Rigorous applications, better results and higher acceptance. Long Range Planning 46 (1–2):1–12. doi:10.1016/j.lrp.2013.01.001.

- Hair, J. F., C. M. Ringle, and M. Sarstedt. 2011. Pls-sem: Indeed a silver bullet. Journal of Marketing Theory & Practice 19 (2):139–52. doi:10.2753/mtp1069-6679190202.

- Henseler, J., C. M. Ringle, and M. Sarstedt. 2014. A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science 43 (1):115–35. doi:10.1007/s11747-014-0403-8.

- Hoi, V. N. 2020. Understanding higher education learners’ acceptance and use of mobile devices for language learning: A rasch-based path modeling approach. Computers & Education 146:103761. doi:10.1016/j.compedu.2019.103761.

- Hu, S., K. Laxman, and K. Lee. 2020. Exploring factors affecting academics’ adoption of emerging mobile technologies-an extended utaut perspective. Education and Information Technologies 25 (5):4615–35. doi:10.1007/s10639-020-10171-x.

- Jakkaew, P., and S. Hemrungrote. 2017. The use of utaut2 model for understanding student perceptions using google classroom: A case study of introduction to information technology course. Paper presented at the 2017 International Conference on Digital Arts, Media and Technology (ICDAMT), Chiang Mai, Thailand.

- Kang, M., B. Y. T. Liew, H. Lim, J. Jang, and S. Lee. 2014. Investigating the determinants of mobile learning acceptance in korea using utaut2. Emerging Issues in Smart Learning, 209–16. Berlin Heidelberg: Springer. https://link.springer.com/chapter/10.1007/978-3-662-44188-6_29.

- Kasthuri, E., and S. Balaji. 2021. A chatbot for changing lifestyle in education. 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV), IEEE. doi:10.1109/icicv50876.2021.9388633.

- Kaur, D., S. Uslu, K. J. Rittichier, and A. Durresi. 2022. Trustworthy artificial intelligence: A review. ACM Computing Surveys (CSUR) 55 (2):1–38. doi:10.1145/3491209.

- Khadija, A., F. F. Zahra, and A. Naceur. 2021. Ai-powered health chatbots: Toward a general architecture. Procedia Computer Science 191:355–60. doi:10.1016/j.procs.2021.07.048.

- Kim, W., Y. Ryoo, S. Lee, and J. A. Lee. 2023. Chatbot advertising as a double-edged sword: The roles of regulatory focus and privacy concerns. Journal of Advertising 52 (4):504–22. doi:10.1080/00913367.2022.2043795.

- Kinnunen, J., A. Androniceanu, and I. Georgescu. 2019. Digitalization of EU Countries: A clusterwise analysis. Proceedings of the 13th International Management Conference: Management Strategies for High Performance, Bucharest, Romania, October, 1–12.

- Kock, N. 2018. Minimum sample size estimation in PLS-SEM: An application in tourism and hospitality research. In Applying Partial Least Squares in Tourism and Hospitality Research, ed. F. Ali, S. M. Rasoolimanesh, and C. Cobanoglu, 1–16. Leeds, England: Emerald Publishing Limited.

- Koivisto, M., and S. Grassini. 2023. Best humans still outperform artificial intelligence in a creative divergent thinking task. Scientific Reports 13 (1):13601. doi:10.1038/s41598-023-40858-3.

- Krishnan, C., A. Gupta, A. Gupta, and G. Singh. 2022. Impact of artificial intelligence-based chatbots on customer engagement and business growth. In Deep learning for social media data analytics, 195–210. Cham: Springer International Publishing.

- Kumar, J. A., and B. Bervell. 2019. Google classroom for mobile learning in higher education: Modelling the initial perceptions of students. Education and Information Technologies 24 (2):1793–817. doi:10.1007/s10639-018-09858-z.

- Laitinen, A., and O. Sahlgren. 2021. Ai systems and respect for human autonomy. Frontiers in Artificial Intelligence 4. doi:10.3389/frai.2021.705164.

- Limayem, H., and Cheung. 2007. How habit limits the predictive power of intention: The case of information systems continuance. MIS Quarterly 31 (4):705. doi:10.2307/25148817.

- Lim, W. M., A. Gunasekara, J. L. Pallant, J. I. Pallant, and E. Pechenkina. 2023. Generative ai and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. The International Journal of Management Education 21 (2):100790. doi:10.1016/j.ijme.2023.100790.

- Lund, B. D., and T. Wang. 2023. Chatting about chatgpt: How may ai and gpt impact academia and libraries? Library Hi Tech News 40 (3):26–29. doi:10.1108/LHTN-01-2023-0009.

- Luo, B., R. Y. K. Lau, C. Li, and Y. W. Si. 2021. A critical review of state‐of‐the‐art chatbot designs and applications. WIREs Data Mining and Knowledge Discovery 12 (1). doi:10.1002/widm.1434.

- Mehta, A., N. P. Morris, B. Swinnerton, and M. Homer. 2019. The influence of values on e-learning adoption. Computers & Education 141:103617. doi:10.1016/j.compedu.2019.103617.

- Menon, D., and K. Shilpa. 2023. “Chatting with chatgpt”: Analyzing the factors influencing users’ intention to use the open ai’s chatgpt using the utaut model. Heliyon 9 (11):e20962. doi:10.1016/j.heliyon.2023.e62.

- Møgelvang, A., K. Ludvigsen, C. Bjelland, and O. M. Schei. 2023. Hvl-studenters bruk og oppfatninger av ki-chatboter i utdanning. HVL-Rapport.

- Moore, G. C., and I. Benbasat. 1991. Development of an instrument to measure the perceptions of adopting an information technology innovation. Information Systems Research 2 (3):192–222. doi:10.1287/isre.2.3.192.

- Moriuchi, E., V. M. Landers, D. Colton, and N. Hair. 2021. Engagement with chatbots versus augmented reality interactive technology in e-commerce. Journal of Strategic Marketing 29 (5):375–89. doi:10.1080/0965254X.2020.1740766.

- Nikolopoulou, K., V. Gialamas, and K. Lavidas. 2020. Acceptance of mobile phone by university students for their studies: An investigation applying utaut2 model. Education and Information Technologies 25 (5):4139–55. doi:10.1007/s10639-020-10157-9.

- Okuda, T., and S. Shoda. 2018. Ai-based chatbot service for financial industry. Fujitsu Scientific & Technical Journal 54 (2):4–8.

- Osei, H. V., K. O. Kwateng, and K. A. Boateng. 2022. Integration of personality trait, motivation and utaut 2 to understand e-learning adoption in the era of COVID-19 pandemic. Education and Information Technologies 27 (8):10705–30. doi:10.1007/s10639-022-11047-y.

- Paraschiv, A. M., N. A. Panie, T. M. Nae, and L. Ciobanu. 2021. EU Countries’ Performance in Digitalization. International Conference on Business Excellence, 11–24, Cham: Springer International Publishing, March.

- Perkins, M. 2023. Academic integrity considerations of ai large language models in the post-pandemic era: Chatgpt and beyond. Journal of University Teaching and Learning Practice 20 (2). doi:10.53761/1.20.02.07.

- Raman, A., and Y. Don. 2013. Preservice teachers’ acceptance of learning management software: An application of the utaut2 model. International Education Studies 6 (7). doi:10.5539/ies.v6n7p157.

- Raza, S. A., Z. Qazi, W. Qazi, and M. Ahmed. 2021. E-learning in higher education during covid-19: Evidence from blackboard learning system. Journal of Applied Research in Higher Education 14 (4):1603–22. doi:10.1108/JARHE-02-2021-0054.

- Safdar, N. M., J. D. Banja, and C. C. Meltzer. 2020. Ethical considerations in artificial intelligence. European Journal of Radiology 122:108768. doi:10.1016/j.ejrad.2019.68.

- Samsudeen, S. N., and R. Mohamed. 2019. University students’ intention to use e-learning systems: A study of higher educational institutions in Sri Lanka. Interactive Technology & Smart Education 16 (3):219–38. doi:10.1108/ITSE-11-2018-0092.

- Sarstedt, M., C. M. Ringle, and J. F. Hair. 2021. Partial least squares structural equation modeling. In Handbook of Market Research, 587–632. Cham: Springer International Publishing.

- Schelble, B. G., J. Lopez, C. Textor, R. Zhang, N. J. Mcneese, R. Pak, and G. Freeman. 2022. Towards ethical ai: Empirically investigating dimensions of ai ethics, trust repair, and performance in human-ai teaming. Human Factors 66 (4):1037–55. doi:10.1177/00187208221116952.

- Strzelecki, A. 2023. To use or not to use chatgpt in higher education? A study of students’ acceptance and use of technology. Interactive Learning Environments 1–14. doi:10.1080/10494820.2023.2209881.

- Suhel, S. F., V. K. Shukla, S. Vyas, and V. P. Mishra. 2020. Conversation to automation in banking through chatbot using artificial machine intelligence language. 2020 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), 611–18. IEEE.

- Svenningsson, N., and M. Faraon. 2019. Artificial intelligence in conversational agents: A study of factors related to perceived humanness in chatbots. Proceedings of the 2019 2nd Artificial Intelligence and Cloud Computing Conference, ACM. doi:10.1145/3375959.3375973.

- Tamilmani, K., N. P. Rana, and Y. K. Dwivedi. 2018. Use of ‘habit’ is not a habit in understanding individual technology adoption: A review of utaut2 based empirical studies. In IFIP Advances in Information and Communication Technology, 277–94. Springer International Publishing. ISBN 978-3-030-04315-5. doi:10.1007/978-3-030-15-5_19.

- Tamilmani, K., N. P. Rana, N. Prakasam, and Y. K. Dwivedi. 2019. The battle of brain vs. Heart: A literature review and meta-analysis of “hedonic motivation” use in utaut2. International Journal of Information Management 46:222–35. doi:10.1016/j.ijinfomgt.2019.01.008.

- Tamilmani, K., N. P. Rana, S. F. Wamba, and R. Dwivedi. 2021. The extended unified theory of acceptance and use of technology (utaut2): A systematic literature review and theory evaluation. International Journal of Information Management 57:102269. doi:10.1016/j.ijinfomgt.2020.102269.

- Taylor, S., and P. A. Todd. 1995. Understanding information technology usage: A test of competing models. Information Systems Research 6 (2):144–76. doi:10.1287/isre.6.2.144.

- Twum, K. K., D. Ofori, G. Keney, and B. Korang-Yeboah. 2021. Using the utaut, personal innovativeness and perceived financial cost to examine student’s intention to use e-learning. Journal of Science and Technology Policy Management 13 (3):713–37. doi:10.1108/JSTPM-12-2020-0168.

- Van Der Heijden, H. 2004. User acceptance of hedonic information systems. MIS Quarterly 28 (4):695. doi:10.2307/25148660.

- Van Dis, E. A. M., J. Bollen, W. Zuidema, R. Van Rooij, and C. L. Bockting. 2023. Chatgpt: Five priorities for research. Nature 614 (7947):224–26. doi:10.1038/d41586-023-00288-7.

- Venkatesh, T., and Xu. 2012. Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly 36 (1):157. doi:10.2307/41410412.

- Venkatesh, V., M. G. Morris, G. B. Davis, and F. D. Davis. 2003. User acceptance of information technology: Toward a unified view. MIS Quarterly 27 (3):425. doi:10.2307/30036540.

- Venkatesh, V., J. Thong, and X. Xu. 2016. Unified theory of acceptance and use of technology: A synthesis and the road ahead. Journal of the Association for Information Systems 17 (5):328–76. doi:10.17705/1jais.00428.

- Wong, K.-T., T. Teo, and P. S. C. Goh. 2013. Understanding the intention to use interactive whiteboards: Model development and testing. Interactive Learning Environments 23 (6):731–47. doi:10.1080/10494820.2013.806932.

- Xu, A., Z. Liu, Y. Guo, V. Sinha, and R. Akkiraju. 2017. A new chatbot for customer service on social media. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, ACM. doi:10.1145/3025453.3025496.

- Yu, C.-W., C.-M. Chao, C.-F. Chang, R.-J. Chen, P.-C. Chen, and Y.-X. Liu. 2021. Exploring behavioral intention to use a mobile health education website: An extension of the utaut 2 model. Sage Open 11 (4):215824402110557. doi:10.1177/21582440211055721.

- Zacharis, G., and K. Nikolopoulou. 2022. Factors predicting university students’ behavioral intention to use elearning platforms in the post-pandemic normal: An utaut2 approach with ‘learning value’. Education and Information Technologies 27 (9):12065–82. doi:10.1007/s10639-022-11116-2.

- Zeng, N., Y. Liu, P. Gong, M. Hertogh, and M. König. 2021. Do right pls and do pls right: A critical review of the application of pls-sem in construction management research. Frontiers of Engineering Management 8 (3):356–69. doi:10.1007/s42524-021-0153-5.

- Zwain, A. A. A. 2019. Technological innovativeness and information quality as neoteric predictors of users’ acceptance of learning management system. Interactive Technology & Smart Education 16 (3):239–54. doi:10.1108/ITSE-09-2018-0065.