?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Because of the existence of irrelevant, redundant, and noisy attributes in large datasets, the accuracy of a classification model has degraded. Hence, feature selection is a necessary pre-processing stage to select the important features that may considerably increase the efficiency of underlying classification algorithms. As a popular metaheuristic algorithm, particle swarm optimisation has successfully applied to various feature selection approaches. Nevertheless, particle swarm optimisation tends to suffer from immature convergence and low convergence rate. Besides, the imbalance between exploration and exploitation is another key issue that can significantly affect the performance of particle swarm optimisation. In this paper, a conditional opposition-based particle swarm optimisation is proposed and used to develop a wrapper feature selection. Two schemes, namely opposition-based learning and conditional strategy are introduced to enhance the performance of the particle swarm optimisation. Twenty-four benchmark datasets are used to validate the performance of the proposed approach. Furthermore, nine metaheuristics are chosen for performance verification. The findings show the supremacy of the proposed approach not only in obtaining high prediction accuracy but also in small feature sizes.

1. Introduction

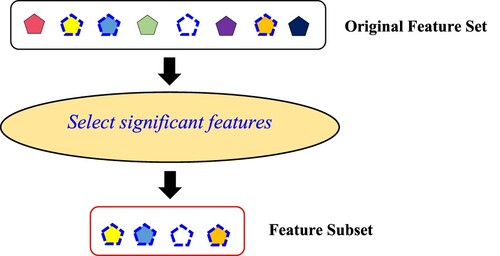

Due to the rapid growth of computer science and technology, the dataset is becoming much bigger and bigger. However, a big dataset normally contains an enormous number of features. Some of these features might be considered redundant, irrelevant, or noisy information that can negatively affect the performance of the classifier (AbdEl-Fattah Sayed et al., Citation2016; Kumar et al., Citation2020). Also, a large number of features in a dataset will exceedingly raise temporal and spatial complexity, which is a challenge that is inevitable in real-world problems. This explains why the classification algorithms usually face difficulty when dealing with high dimensional feature vector (Lin et al., Citation2018; Nagra et al., Citation2020). Thus, feature selection (FS) can be a good option for dimensionality reduction (see Figure ).

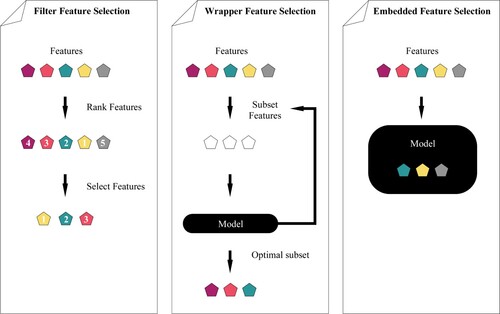

FS is one of the popular dimensionality reduction techniques to eliminate redundant and noisy attributes and select the relevant features as well. Consequently, selecting a set of significant features from a big dataset not only enhances the accuracy of the classifier, but also decreases the unnecessary computational complexity (Emary et al., Citation2016; Sreeja, Citation2019). Generally speaking, FS can be classified into filter, wrapper, and embedded approaches (see Figure ). Filter approaches are independent of the learning algorithm, and they are often computationally cheaper (Nguyen, Ly, et al., Citation2020). In contrast, wrapper approaches make use of a specific classifier as part of the evaluation. In embedded approaches, the model includes a built-in FS algorithm. Because of the inclusion of a learning model, the wrapper approaches can usually perceive excellent classification results (Xue et al., Citation2014).

In wrapper approaches, the FS is known as an NP-hard combinatorial optimisation. To resolve this challenging problem, many researchers employ metaheuristic algorithms to perform the local and global searches for finding an optimal feature subset (Nayak et al., Citation2018). In recent years, many metaheuristic algorithms have been proposed for the optimisation tasks such as particle swarm optimization (PSO) (Kennedy, Citation2011), genetic algorithm (GA) (Holland, Citation1992), moth flame optimization (Mirjalili, Citation2015), grey wolf optimizer (Mirjalili et al., Citation2014), gravitational search algorithm (Rashedi et al., Citation2009), salp swarm algorithm (Mirjalili et al., Citation2017), and artificial bee colony (Karaboga & Basturk, Citation2007). For wrapper-based FS, PSO and GA are the most frequently used.

PSO is a swarm intelligence algorithm that mimics the social interaction behaviuor of bird flocking and fish schooling in nature. Previous works show that PSO can provide excellent performance when dealing with the FS problem. However, the imbalance between exploration and exploitation is still an issue in PSO, which may limit the performance of PSO in feature evaluation. Therefore, an adaptive mechanism that can automatically adjust the conditions (exploration phase or exploitation phase) is desired. Besides, No Free Lunch theorem indicates that no single optimiser can handle all the problems perfectly (Wolpert & Macready, Citation1997). This theorem encourages the researchers to modify, enhance, and evolve the algorithm for improving the performance of the model to better solve a particular set of problems.

In this article, a new variant of PSO called conditional opposition-based particle swarm optimization (COPSO) is proposed for FS problems. The COPSO utilises opposition-based learning (OBL) and conditional strategy. The former generates the opposite solutions, which aims to ameliorate the quality of initial solutions and global best solution (gbest) in the population. The latter guides the particles to explore or exploit based on their fitness values. In this way, a well stable balance between local search and global search can be achieved. Twenty-four benchmark datasets are collected to test the performance of the COPSO. Nine metaheuristic algorithms are employed to measure the efficacy and efficiency of the proposed algorithm. Our experimental results reveal that the COPSO was highly capable of selecting a set of significant features, which led to excellent classification results. The primary contributions of this work are as follows:

A COPSO is proposed for FS problems. The OBL strategy is embedded into the COPSO to enhance the quality of the initial solutions and global best solution.

A conditional strategy is introduced and implemented into COPSO. This strategy enables the COPSO to adaptively switch the local search and global search behaviours.

The performance of COPSO is validated and tested using 24 benchmark datasets (from low to high dimensions).

The COPSO is compared with nine modern optimisers. The experimental results reveal its effectiveness in FS works.

The rest of this paper is organised as follows: Section 2 provides reviews of related works and details the standard PSO. Section 3 describes the proposed COPSO and its application for FS. The experimental results are discussed in Section 4. Finally, Section 5 concludes the outcomes of this paper.

2. Background

In this section, the related work that representing the state of the arts have been presented and discussed accordingly. Besides, the background and preliminaries of the standard PSO algorithm are presented in this section as follows.

2.1. Related works

In the past decade, metaheuristic algorithms have been regarded as favourable and predominant tools to seek out the potential features over the search space. Due to their excellent search capability, these algorithms are often used by the research community to tackle the FS problems (Faris et al., Citation2020; Hancer, Citation2019). Different metaheuristic algorithms such as PSO (Xue et al., Citation2014), GA (Huang & Wang, Citation2006), and ant colony optimisation (Aghdam et al., Citation2009) were employed to handle such problems. The studies in (Ibrahim et al., Citation2021; Nguyen, Xue, et al., Citation2020) also pointed out the effectiveness of metaheuristic algorithms in FS works. Additionally, (Agrawal et al., Citation2021) presented the comprehensive review of binary metaheuristic algorithms in tackling the FS tasks.

The GA is an evolutionary algorithm inspired by the biological evolution in nature. It evolves the solutions through three major operators: selection, crossover, and mutation. The GA was first applied to handle FS problems in (Siedlecki & Sklansky, Citation1989). However, the major drawback of GA is that it required long processing time to reach an optimal solution (Gunasundari et al., Citation2016). Thus, (Jude Hemanth & Anitha, Citation2019) modified the GA by implementing the “OR” and “AND” logic operations for FS. The proposed approach was able to reduce the randomness and achieved an increment of 4-6% accuracy in magnetic resonance image classification. Lately, a fast rival genetic algorithm was proposed in (Too & Abdullah, Citation2020b) for FS problems. The study showed that the proposed method could often find the informative feature subset within a short period. Another study in (Mazaheri & Khodadadi, Citation2020) demonstrated the efficiency of NSGA II in choosing the potential features.

In (Kashef & Nezamabadi-pour, Citation2015), an advanced version of ant colony optimization (ACO) was developed for FS. The proposed approach used the statistical properties to initialise the heuristic information, which provided a better guidance for the ants to seek the optimal feature subset. To enhance the accuracy of the Mars image classification, (Rashno et al., Citation2017) proposed two variants of ACO algorithms to select high-quality features. They reported their proposed method could outperform GA with the confidence level higher than 0.95. The authors in (Mafarja & Mirjalili, Citation2017) performed a hybridisation of whale optimisation algorithm and simulated annealing for FS. In their hybrid approach, the simulated annealing was applied to enhance the exploitation capability of the search agents when searching the promising areas. Another similar work was done in (Too & Abdullah, Citation2020a), in which a binary atom search optimisation was proposed to tackle the FS problems. Furthermore, (Gokalp et al., Citation2020) utilised the Iterated Greedy metaheuristic to select the quality features for improving the performance of the sentiment classification.

In recent days, PSO has successfully applied to many FS applications. As compared to GA, PSO is computationally less expensive, thereby receive much attention from the researchers in FS studies. However, PSO suffers from immature convergence and slow convergence rate, which makes it less efficient in finding the global optimum (Gou et al., Citation2017; Zhang et al., Citation2017). Besides, the imbalance between exploration and exploitation is another key factor that degrades the performance of the PSO algorithm (Xia et al., Citation2019). Hence, the authors in (Lu et al., Citation2015) enhanced the performance of the standard PSO in text-based-FS by modifying the inertia weight and constriction factor. Furthermore, a pbest guided binary PSO was designed to handle the FS problems in electromyography signals classification (Too et al., Citation2019). The proposed approach borrowed the utility of differential evolution to evolve the quality of personal best solutions when the stagnation happened. However, their method was computationally expensive compared to PSO algorithm. Recently, (Ji et al., Citation2020) developed an improved binary PSO to solve the FS issues. They developed local search and global search operators to improve exploitation and exploration behaviours.

From the literature review, the FS problem with a large number of features is still an open challenge that needs some extra attention by researchers to find out the way to improve existing methods or develop a new strategy.

2.2. Standard particle swarm optimisation

Particle swarm optimization (PSO) was developed by Kennedy and Eberhart in 1995 (Kennedy, Citation2011). It is a swarm intelligence algorithm that mimics the social interaction behaviour of bird flocking and fish schooling in nature. Initially, a population of N particles (solutions) is randomly initialised in a D-dimensional search space. Each particle maintains two vectors in the population, namely velocity, and position as follows:

where Xi and Vi represent the position and velocity of particle i. In the swarm, the particle is guided by its best experience and the best experience of the whole swarm to move toward the global optimum. Iteratively, the particle updates its velocity and position as below:

(1)

(1)

(2)

(2) where t is the current iteration, d refers to the dimension, pbest and gbest denote the personal best solution and global best solution, respectively. The R1 and R2 are two vectors randomly distributed in [0,1], c1 and c2 are the acceleration coefficients, and w is the inertia weight.

3. Proposed conditional opposition-based particle swarm optimization

To date, PSO has been widely employed to various engineering applications. However, its mechanisms require tuning and modification to perform the best on a particular class of problems. This is because PSO suffers from immature convergence, and it cannot effectively escape from the local optima, thus leading to an ineffective solution (Gou et al., Citation2017; Zhang et al., Citation2017). Besides, PSO has the limitation of weak robustness to various problem structures (Cheng & Jin, Citation2015a). In addition, the particles are guided by pbest and gbest toward the global regions. From this point of view, the particles will slowly, and then become similar to gbest. If gbest is itself trapped in the local optimal, the search spaces of particles are limited to the same areas, thereby reducing the efficacy of PSO in finding the optimal solution (Cheng & Jin, Citation2015b; Chuang et al., Citation2008). Moreover, the contradiction between exploration and exploitation is another issue that strongly affects the performance of PSO in FS (Xia et al., Citation2019).

In this work, a conditional opposition-based particle swarm optimization (COPSO) is proposed to improve the performance of the PSO algorithm. Correspondingly, COPSO utilises OBL and conditional strategy. The former aims to evolve the quality of initial solutions and gbest in which the opposite solutions are well-considered. The latter automatically switches the local and global search, which intends to maintain the well stable balance between local search and global search.

3.1. Opposition based learning

Generally speaking, opposition-based learning (OBL) is a strategy that defines the opposite solution for each current solution in the population, and it then identifies whether the opposite solution can provide better fitness value than the current solution (Ewees et al., Citation2018). In any metaheuristic optimisation algorithm, an initial population of random solutions is generated and used to seek out the global optimum for a given optimisation problem. Due to the randomness and absence of knowledge in the initial solutions, these algorithms cannot dramatically converge to the global optimum. Hence, OBL is employed to overcome this defect.

In (Jabeen et al., Citation2009), the OBL was implemented into PSO to enhance the initial population by considering the opposite solution of initial particles. (Kang et al., Citation2018) implemented the hybrid algorithm that integrated probabilistic OBL into PSO to enhance the population diversity. The authors in (Aladeemy et al., Citation2020) proposed different variants of Opposition-based Self-Adaptive Cohort Intelligence to tackle the FS problems. Their approaches adopted the OBL to generate the opposite solutions for improving the diversity of the candidates. In OBL, the opposite number, of the present X can be calculated as follow:

(3)

(3) where ub and lb denote the maximum value and minimum value of the search space. As for multidimensional search space, the opposite solution can be reformulated as:

(4)

(4) where D is referred to the number of dimensions. It is believed that the convergence of the algorithm can be improved by performing the search in the opposite direction (Abd Elaziz et al., Citation2017).

In the proposed approach, COPSO first integrates OBL to improve the quality of initial solutions, which enables the particles to perform the search more effectively. Algorithm 1 shows the procedure of OBL. Let N and D to be the population size and the total number of dimensions in the search space. At the beginning, COPSO randomly generates an initial population of N solutions. The fitness values of the solutions are then evaluated. Next, the initial solutions are used as the input for the OBL process. In this process, the opposite solutions are computed, and the fitness values of opposite solutions are calculated. After that, the initial solutions and opposite solutions are merged, and the best N solutions are chosen to build the new initial population.

Secondly, to assist the COPSO for escaping the local optimal, the OBL is applied to the gbest. At the end of each iteration, the OBL is used to compute the opposite solution of gbest. The opposite solution of gbest is defined as below:3.2. Conditional strategy

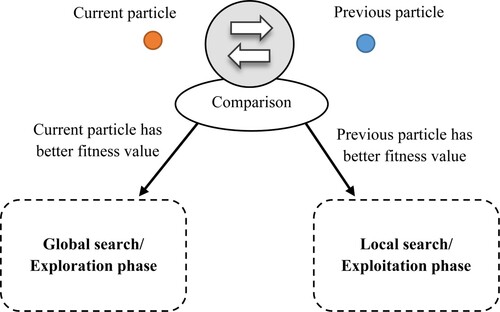

In PSO, the proper balance between exploration and exploitation has regarded as a key factor that can significantly affect the performance of the algorithm when searching for an optimal solution (Jensi & Jiji, Citation2016). However, it is difficult to decide whether the particle should perform the global search or local search since the situation is varying according to various problem structures. In this paper, a new conditional strategy is proposed, which aims to automatically switch the local search and global search in the searching process.

Figure illustrates the concept of the conditional strategy. As shown in Figure , a comparison is made between the particle and its previous one. If the particle offers better performance than its previous one, then an exploration phase is executed. Conversely, an exploitation phase is promoted when the performance of the existing particle has become worst. Considering the minimisation case, the conditional strategy is formulated as:

(6)

(6) where F(.) is the objective function, and t is the iteration number. Note that the condition of each particle is examined to ensure all of them can perform the search according to proper circumstances.

3.2.1. Exploration phase

From the aforementioned discussion, the condition (exploitation phase or exploration phase) is determined by comparing the fitness values obtained by the corresponding particle X(t) and its previous one X(t−1). If the fitness value achieved by the corresponding particle is better than or equal to previous one, this means that the particle is able to maintain or enhance the performance. Thereby, the particle shall search globally around the neighbours to find more promising regions. By this way, the diversity of swarm can be improved. In the exploration phase, the particle updates its velocity and position as below:

(7)

(7)

(8)

(8)

(9)

(9) where R3 and R4 are two vectors randomly distributed in [0,1], and

is the mean position of the current swarm.

3.2.2. Exploitation phase

On the other side, if the fitness value achieved by the corresponding particle is worse than its previous one, this indicates that the particle might be facing difficulty in finding the optimal solution. Thus, the particle shall search locally around the current best solutions and select the best solutions. In the exploitation phase, the particle updates its velocity and position as follows:

(10)

(10)

(11)

(11)

(12)

(12) where R5 and R6 are two vectors randomly distributed in [0,1], and

represents the mean position of global best particles over the course of the iterations. Instead of moving toward gbest, the mean position of gbest is less likely to introduce a bias toward the particular particle, which can improve the diversity of swarm in local search.

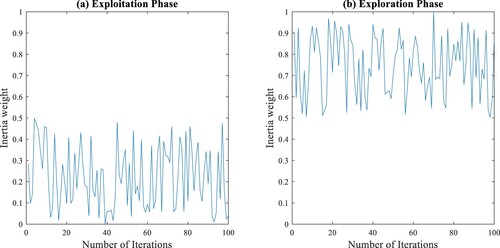

3.2.3. Adaptive inertia weight

Inertia weight is another key parameter that can substantially affect the performance of COPSO in FS. Generally speaking, a smaller value of inertia weight promotes the particle to exploit the best solutions. On the contrary, a larger value of inertia weight allows the algorithms to give more focus on exploration (Jiao et al., Citation2008). In COPSO, a conditional strategy is used to manipulate the exploration and exploitation behaviour. Thereby, an adaptive inertia weight is proposed, and it is defined as follows:

(13)

(13) where w is the inertia weight, R7 and R8 are two random numbers distributed in [0,1]. An illustration of adaptive inertia weight is demonstrated in Figure . In the exploration phase, the inertia weight was randomly generated in the ranges of 0.5 and 1, which encouraged the particle to search globally on the search space. For the exploitation phase, the inertia weight was allocated between 0 and 0.5, which enabled the particle to search locally around the promising regions. The utilisation of the adaptive inertia weight scheme promotes a well stable balance between local search and global search. Algorithm 2 presents the pseudocode of COPSO.

3.2.4. Computational complexity

The computational complexity of the COPSO mainly depends on the complexity of OBL for initialisation (OBL1), OBL for gbest (OBL2), and conditional strategy (CS). To sum up, the complexity of the proposed approach is given by

(14)

(14)

where N denotes the number of particles, D is the dimension size, T refers to the maximum number of iterations, and C denotes the cost of fitness function.

3.3 Feature selection using proposed method

This study proposes the COPSO to handle the FS problem in classification tasks. In fact, FS is a challenging combinatorial optimisation problem. The number of possible solutions increases exponentially with the number of dimensions (number of features). Let assume a dataset with D features, and the possible combinations of features will be 2D, which is difficult to analyze by using the exhaustive search strategy (Nemati et al., Citation2009). Therefore, the proposed COPSO is used to evaluate the best combination of significant features for classification tasks.

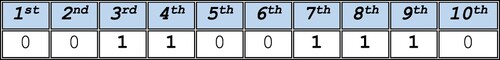

In the proposed approach, the solutions are presented in binary form, in which “1” denotes the selected features and “0” represents the unselected features. Figure illustrates the sample solution with ten features. It can be observed that the total number of five features (3rd, 4th, 7th, 8th, and 9th features) has been chosen in this sample solution.

Generally speaking, FS consists of two objectives, which are high classification performance and minimal feature size. In this framework, a fitness function that considers both objectives are employed as:

(15)

(15) where |S| is the length of feature subset, |C| is the total number of features, ER is the error rate, and it can be defined as the ratio of the number of wrongly classified instances to the total number of instances. The parameters α and β are the weight vectors to control the importance of classification performance and feature size, where the summation of α and β is equal to 1.

4. Results and discussions

This section consists of four sub-sections. The first sub-section provides the details of 24 used datasets. The second sub-section presents the comparison algorithms and evaluation metrics. The third sub-section shows the experimental results. The final sub-section discusses the findings of the experiment.

4.1. Experimental data

This sub-section validates the performance of COPSO in several real-world problems. Twenty-four datasets obtained from (UCI Machine Learning Repository, Citationn.d.) and (Datasets | Feature Selection @ ASU, Citationn.d.) are used to validate the performance of COPSO. Table tabulates the summary of these datasets. From Table , the datasets with various numbers of features (from low to high dimensions) were chosen to inspect the performance of COPSO.

Table 1. The twenty-four datasets used in this study.

4.2. Comparison algorithms and evaluation metrics

To evaluate the efficacy of COPSO, the opposition based PSO (OPSO), GA, and the other seven metaheuristics include the binary particle swarm optimization (BPSO) (Kennedy & Eberhart, Citation1997), moth flame optimization (MFO) (Mirjalili, Citation2015), multi-verse optimizer (MVO) (Mirjalili et al., Citation2016), generalized normal distribution optimization (GNDO) (Zhang et al., Citation2020), and pathfinder algorithm (PFA) (Yapici & Cetinkaya, Citation2019), opposition based sine cosine algorithm (OBSCA) (Abd Elaziz et al., Citation2017), and improved salp swarm algorithm (ISSA-OBL) (Tubishat et al., Citation2020) are selected for performance comparison. Table outlines parameter settings of utilised methods. The numbers of solutions and maximum iterations are set at 10 and 100 to ensure the fair comparison. The total number of dimensions (D) is equal to the number of features in the dataset.

Table 2. The parameter settings of utilised methods.

For wrapper-based FS, the k-nearest neighbour (KNN, k = 5) classifier is adopted as the learning algorithm to calculate the fitness value of each solution. The KNN is chosen because of its fast processing speed, easy implementation, and promising performance in previous work (Emary et al., Citation2016).

For each individual dataset, the data is partitioned into training and testing sets with the ratio of 7:3. In the FS phase, the stratified 10-fold cross-validation manner is employed to assess the performance of the proposed approach. In this method, the dataset set is randomly separated into 10 equal parts. Each part is employed as the validation set in succession, whereas the remaining parts (9 parts) are adopted to train the classifier. According to (Ouadfel & Abd Elaziz, Citation2020), the α is set at 0.9. For each algorithm, the experiment is repeated for 20 runs to obtain meaningful statistical results. Finally, the measurements achieved from all the evaluations are recorded, and their averages are presented.

4.3. Experimental results

Six evaluation metrics, including the mean fitness, standard deviation of fitness, validation accuracy, testing accuracy, number of selected features, and computational time, are calculated to measure the effectiveness of proposed COPSO. All the simulation and analysis are conducted using MATLAB 2021 in a computer with 2.90 GHz CPU and 16.0GB RAM.

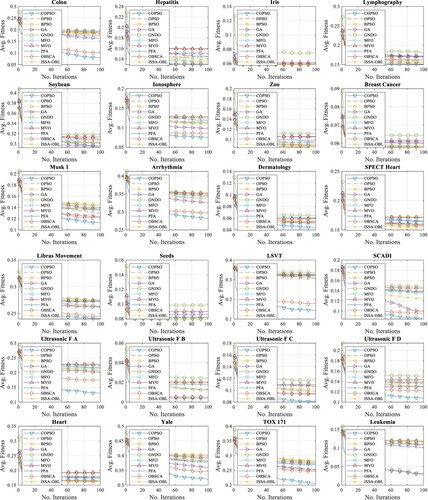

Figure depicts the results of the convergence curve. As can be seen, COPSO yielded promising performance for most datasets. In comparison with other algorithms, COPSO can usually converge faster to find an optimal solution. Besides, COPSO achieved very good diversity. The observed improvement in FS problems is attributed to OBL to provide high-quality solutions that enhance the convergence of the algorithm. As compared to OPSO, COPSO can often perform the search on more promising regions. This is mainly due to the proper balance between exploration and exploitation contributed by the conditional strategy, which properly adjusts the local search and global search exceedingly.

Tables and list the mean fitness and standard deviation of fitness values. Successively, COPSO achieved the lowest mean fitness in most cases. These results imply that the COPSO comprised of high potential in searching the global optimal solution, thus leading to superior performance. The foremost cause of this improved efficacy of the COPSO is that it utilises the OBL scheme. Hence, COPSO can effectively improve the quality of initial solutions and accelerate the convergence rate. Based on the result obtained in Table , the optimal mean fitness value is obtained by COPSO (21 datasets). In terms of robustness and consistency, COPSO can often offer highly consistent results due to smaller STD values. As such, COPSO utilises conditional and OBL strategies, which significantly improves the global search and local search capabilities.

Table 3. Result of the mean fitness value.

Table 4. Result of the standard deviation of fitness value.

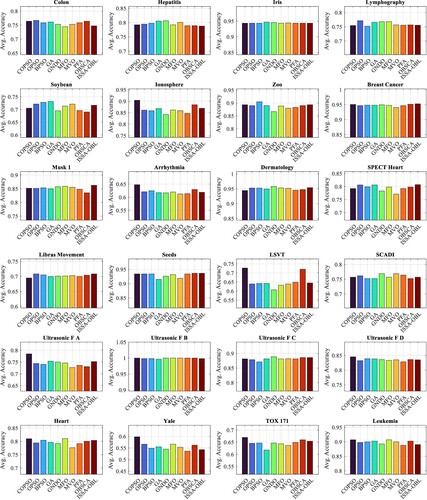

Table presents the result of the validation accuracy. Inspecting the results, COPSO contributed the highest validation accuracy in 15 datasets. Among the comparative algorithms, COPSO scored the highest average accuracies in most cases. The result of testing accuracy is shown in Figure . Successively, COPSO achieved the highest testing accuracy in many datasets. In comparison with the other nine algorithms, COPSO was highly capable of selecting the subset of significant features, thus resulting in optimal classification performance.

Table 5. Result of the average validation accuracy.

Table presents the result of the number of selected features. According to findings, COPSO perceived the smallest average number of selected features in most cases (14 datasets). As compared to OPSO, the COPSO can often select fewer features in the process of evaluation. The foremost cause for the improved efficiency of the COPSO is that it could maintain a well stable balance between local search and global search when dealing with FS problems. Table presents the experimental result of computational time. As can be seen, the computation time consumed by COPSO was competitive. On the one hand, the GNDO is found to be the slowest algorithm.

Table 6. Result of the average number of selected features.

Table 7. Result of the average computational time.

The Wilcoxon signed-rank test with 95% confidence level is used to inspect the fitness values obtained by COPSO algorithm. In this statistical test, the performances of two different algorithms are significantly different if the p-value is less than 0.05; otherwise, the performances of two different algorithms are similar. The result of the Wilcoxon test is demonstrated in Table . From Table , the performance of COPSO was significantly better than other algorithms for most of the datasets. The results again prove the efficacy of the COSPO in the present work. Based on the results obtained, it can be concluded that COPSO is a useful FS tool.

Table 8. The p-value of Wilcoxon test.

4.4. Discussions

From the empirical result and analysis, the proposed COPSO was shown to be a reliable and powerful method for FS problems. According to results, the COPSO was able to produce an initial population of high-quality solutions. These quality solutions accelerated the convergence behaviour of the COPSO algorithm. In comparison with other conventional methods, COPSO was more capable of finding the average minimum during the search process. The experimental results obtained in Table supported this argument. Taking TOX_171 as example, COPSO can search for the nearly optimal solution faster and overtook other algorithms in FS. From an optimisation perspective, COPSO not only finds the optimal solution effectively, but also offers a good convergence rate.

As compared to OPSO, COPSO has retained the optimal mean fitness value and highest validation accuracy in 23 and 20 datasets, respectively. For feature size, COPSO can often maintain a small subset of significant features, thereby improving the performance of the learning model. Also, the features chosen by COPSO were able to contribute to the optimal testing accuracy. In terms of convergence rate, COPSO can explore the feature space effectively and accelerate to the global best optimum. The results affirm the supremacy of COPSO in providing higher accuracies while selecting a smaller number of features.

Several observations can be made on the superiority of the COPSO method. Firstly, the OBL assists the algorithm in creating a set of high-quality initial solutions. Moreover, the OBL is applied to enhance the gbest in each iteration, which is beneficial in escaping the local optimal. Secondly, the conditional strategy enables the particles to make the search decisions based on their previous experiences, which helps the particles to decide whether to explore or exploit in the dynamic condition. This strategy is also associated with an adaptive inertia weight to maintain the well stable balance between exploration and exploitation. With the conditional strategy, the particles are highly capable of exploring and exploiting the promising regions over the feature space. Thanks to OBL and conditional strategy, the superiority of COPSO in avoiding the local optimal and improving the search capability can be ensured. Hence, COPSO can often contribute to the optimal performance when applied to FS problems.

Even though the performance of COPSO is superior, it also has some limitations. Firstly, the conditional strategy generated the inertia weight randomly for both exploitation and exploration conditions. This randomness may limit the search tendency of the COPSO algorithm. Secondly, the controlling parameters c1 and c2 are set according to the literature. These parameter settings are commonly used in the PSO algorithm. However, the parameter setting of COPSO can be further investigated for improving the performance of the algorithm in other applications.

5. Conclusion

In this paper, a conditional opposition-based particle swarm optimization (COPSO) was proposed for FS problems. The proposed COPSO utilised OBL to evolve the quality of the initial solutions and global best solution, thus improving the convergence rate of the algorithm. In addition, the conditional strategy assisted COPSO in balancing the exploration and exploitation tendency, which greatly increased the global search and local search abilities. By making full use of these mechanisms, COPSO was highly capable of escaping the local optima, and it could further enhance the efficacy of the algorithm in FS problems. The performance of the proposed COPSO was tested using 24 benchmark datasets, and the result was compared with the other nine FS algorithms. The comprehensive experimental results showed the supremacy of COPSO not only at producing high classification accuracy but also in minimal number of features.

Future work can centralise on the effectiveness of the COPSO in various applications such as numerical optimisation, welded beam design, and optimised support vector machine. Moreover, the concept and idea of conditional strategy can be also implemented into other swarm intelligence algorithms to maintain a well stable balance between exploration and exploitation. The applications of COPSO in different fields are worthy of investigating due to the black-box nature of this algorithm.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abd Elaziz, M., Oliva, D., & Xiong, S. (2017). An improved opposition-based sine cosine algorithm for global optimization. Expert Systems with Applications, 90, 484–500. https://doi.org/10.1016/j.eswa.2017.07.043

- AbdEl-Fattah Sayed, S., Nabil, E., & Badr, A. (2016). A binary clonal flower pollination algorithm for feature selection. Pattern Recognition Letters, 77, 21–27. https://doi.org/10.1016/j.patrec.2016.03.014

- Aghdam, M. H., Ghasem-Aghaee, N., & Basiri, M. E. (2009). Text feature selection using ant colony optimization. Expert Systems with Applications, 36(3, Part 2), 6843–6853. https://doi.org/10.1016/j.eswa.2008.08.022

- Agrawal, P., Abutarboush, H. F., Ganesh, T., & Mohamed, A. W. (2021). Metaheuristic algorithms on feature selection: A survey of one decade of research (2009–2019). IEEE Access, 9, 26766–26791. https://doi.org/10.1109/ACCESS.2021.3056407

- Aladeemy, M., Adwan, L., Booth, A., Khasawneh, M. T., & Poranki, S. (2020). New feature selection methods based on opposition-based learning and self-adaptive cohort intelligence for predicting patient no-shows. Applied Soft Computing, 86, 105866/1-19. https://doi.org/10.1016/j.asoc.2019.105866

- Cheng, R., & Jin, Y. (2015a). A social learning particle swarm optimization algorithm for scalable optimization. Information Sciences, 291, 43–60. https://doi.org/10.1016/j.ins.2014.08.039

- Cheng, R., & Jin, Y. (2015b). A competitive swarm optimizer for large scale optimization. IEEE Transactions on Cybernetics, 45(2), 191–204. https://doi.org/10.1109/TCYB.2014.2322602

- Chuang, L.-Y., Chang, H.-W., Tu, C.-J., & Yang, C.-H. (2008). Improved binary PSO for feature selection using gene expression data. Computational Biology and Chemistry, 32(1), 29–38. https://doi.org/10.1016/j.compbiolchem.2007.09.005

- Datasets | Feature Selection @ ASU. (n.d.). Retrieved October 3, 2019, from http://featureselection.asu.edu/datasets.php

- Emary, E., Zawbaa, H. M., & Hassanien, A. E. (2016). Binary grey wolf optimization approaches for feature selection. Neurocomputing, 172, 371–381. https://doi.org/10.1016/j.neucom.2015.06.083

- Ewees, A. A., Abd Elaziz, M., & Houssein, E. H. (2018). Improved grasshopper optimization algorithm using opposition-based learning. Expert Systems with Applications, 112, 156–172. https://doi.org/10.1016/j.eswa.2018.06.023

- Faris, H., Heidari, A. A., Al-Zoubi, A. M., Mafarja, M., Aljarah, I., Eshtay, M., & Mirjalili, S. (2020). Time-varying hierarchical chains of salps with random weight networks for feature selection. Expert Systems with Applications, 140, 112898/1-17. https://doi.org/10.1016/j.eswa.2019.112898

- Gokalp, O., Tasci, E., & Ugur, A. (2020). A novel wrapper feature selection algorithm based on iterated greedy metaheuristic for sentiment classification. Expert Systems with Applications, 146, 113176/1-10. https://doi.org/10.1016/j.eswa.2020.113176

- Gou, J., Lei, Y.-X., Guo, W.-P., Wang, C., Cai, Y.-Q., & Luo, W. (2017). A novel improved particle swarm optimization algorithm based on individual difference evolution. Applied Soft Computing, 57, 468–481. https://doi.org/10.1016/j.asoc.2017.04.025

- Gunasundari, S., Janakiraman, S., & Meenambal, S. (2016). Velocity bounded boolean particle swarm optimization for improved feature selection in liver and kidney disease diagnosis. Expert Systems with Applications, 56, 28–47. https://doi.org/10.1016/j.eswa.2016.02.042

- Hancer, E. (2019). Fuzzy kernel feature selection with multi-objective differential evolution algorithm. Connection Science, 31(4), 323–341. https://doi.org/10.1080/09540091.2019.1639624

- Holland, J. H. (1992). Genetic algorithms. Scientific American, 267(1), 66–72. https://doi.org/10.1038/scientificamerican0792-66

- Huang, C.-L., & Wang, C.-J. (2006). A GA-based feature selection and parameters optimizationfor support vector machines. Expert Systems with Applications, 31(2), 231–240. https://doi.org/10.1016/j.eswa.2005.09.024

- Ibrahim, R. A., Abd Elaziz, M., Ewees, A. A., El-Abd, M., & Lu, S. (2021). New feature selection paradigm based on hyper-heuristic technique. Applied Mathematical Modelling, 98, 14–37. https://doi.org/10.1016/j.apm.2021.04.018

- Jabeen, H., Jalil, Z., & Baig, A. R. (2009). Opposition based initialization in particle swarm optimization (O-PSO). In Proceedings of the 11th Annual Conference Companion on Genetic and Evolutionary Computation Conference: Late Breaking Papers (pp. 2047–2052). https://doi.org/10.1145/1570256.1570274

- Jensi, R., & Jiji, G. W. (2016). An enhanced particle swarm optimization with levy flight for global optimization. Applied Soft Computing, 43, 248–261. https://doi.org/10.1016/j.asoc.2016.02.018

- Ji, B., Lu, X., Sun, G., Zhang, W., Li, J., & Xiao, Y. (2020). Bio-inspired feature selection: An improved binary particle swarm optimization approach. IEEE Access, 8, 85989–86002. https://doi.org/10.1109/ACCESS.2020.2992752

- Jiao, B., Lian, Z., & Gu, X. (2008). A dynamic inertia weight particle swarm optimization algorithm. Chaos, Solitons & Fractals, 37(3), 698–705. https://doi.org/10.1016/j.chaos.2006.09.063

- Jude Hemanth, D., & Anitha, J. (2019). Modified genetic algorithm approaches for classification of abnormal magnetic resonance brain tumour images. Applied Soft Computing, 75, 21–28. https://doi.org/10.1016/j.asoc.2018.10.054

- Kang, Q., Xiong, C., Zhou, M., & Meng, L. (2018). Opposition-Based hybrid strategy for particle swarm optimization in noisy environments. IEEE Access, 6, 21888–21900. https://doi.org/10.1109/ACCESS.2018.2809457

- Karaboga, D., & Basturk, B. (2007). A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. Journal of Global Optimization, 39(3), 459–471. https://doi.org/10.1007/s10898-007-9149-x

- Kashef, S., & Nezamabadi-pour, H. (2015). An advanced ACO algorithm for feature subset selection. Neurocomputing, 147, 271–279. https://doi.org/10.1016/j.neucom.2014.06.067

- Kennedy, J. (2011). Particle swarm optimization. In laude Sammut & Geoffrey I. Webb (Eds.), Encyclopedia of machine learning (pp. 760–766). Springer. https://doi.org/10.1007/978-0-387-30164-8_630

- Kennedy, J., & Eberhart, R. C. (1997). A discrete binary version of the particle swarm algorithm. In Computational Cybernetics and Simulation 1997 IEEE International Conference on Systems, Man, and Cybernetics (Vol. 5, pp. 4104–4108). https://doi.org/10.1109/ICSMC.1997.637339

- Kumar, A., Kumar, Y., & Kukkar, A. (2020). A feature selection model for prediction of software defects. International Journal of Embedded Systems, 13(1), 28–39. https://doi.org/10.1504/IJES.2020.108279

- Lin, K.-C., Hung, J. C., & Wei, J. (2018). Feature selection with modified lion’s algorithms and support vector machine for high-dimensional data. Applied Soft Computing, 68, 669–676. https://doi.org/10.1016/j.asoc.2018.01.011

- Lu, Y., Liang, M., Ye, Z., & Cao, L. (2015). Improved particle swarm optimization algorithm and its application in text feature selection. Applied Soft Computing, 35, 629–636. https://doi.org/10.1016/j.asoc.2015.07.005

- Mafarja, M. M., & Mirjalili, S. (2017). Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing, 260, 302–312. https://doi.org/10.1016/j.neucom.2017.04.053

- Mazaheri, V., & Khodadadi, H. (2020). Heart arrhythmia diagnosis based on the combination of morphological, frequency and nonlinear features of ECG signals and metaheuristic feature selection algorithm. Expert Systems with Applications, 161, 113697/1-14. https://doi.org/10.1016/j.eswa.2020.113697

- Mirjalili, S. (2015). Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowledge-Based Systems, 89, 228–249. https://doi.org/10.1016/j.knosys.2015.07.006

- Mirjalili, S., Gandomi, A. H., Mirjalili, S. Z., Saremi, S., Faris, H., & Mirjalili, S. M. (2017). Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Advances in Engineering Software, 114, 163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

- Mirjalili, S., Mirjalili, S. M., & Hatamlou, A. (2016). Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Computing and Applications, 27(2), 495–513. https://doi.org/10.1007/s00521-015-1870-7

- Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey wolf optimizer. Advances in Engineering Software, 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

- Nagra, A. A., Han, F., Ling, Q. H., Abubaker, M., Ahmad, F., Mehta, S., & Apasiba, A. T. (2020). Hybrid self-inertia weight adaptive particle swarm optimisation with local search using C4.5 decision tree classifier for feature selection problems. Connection Science, 32(1), 16–36. https://doi.org/10.1080/09540091.2019.1609419

- Nayak, S. K., Rout, P. K., Jagadev, A. K., & Swarnkar, T. (2018). Elitism-based multi-objective differential evolution with extreme learning machine for feature selection: A novel searching technique. Connection Science, 30(4), 362–387. https://doi.org/10.1080/09540091.2018.1487384

- Nemati, S., Basiri, M. E., Ghasem-Aghaee, N., & Aghdam, M. H. (2009). A novel ACO–GA hybrid algorithm for feature selection in protein function prediction. Expert Systems with Applications, 36(10), 12086–12094. https://doi.org/10.1016/j.eswa.2009.04.023

- Nguyen, B. H., Xue, B., & Zhang, M. (2020). A survey on swarm intelligence approaches to feature selection in data mining. Swarm and Evolutionary Computation, 54, 100663/1-16. https://doi.org/10.1016/j.swevo.2020.100663

- Nguyen, T.-K., Ly, V. D., & Hwang, S. O. (2020). Effective feature selection based on MANOVA. International Journal of Internet Technology and Secured Transactions, 10(4), 383–395. https://doi.org/10.1504/IJITST.2020.108133

- Ouadfel, S., & Abd Elaziz, M. (2020). Enhanced crow search algorithm for feature selection. Expert Systems with Applications, 159, 113572/1-16. https://doi.org/10.1016/j.eswa.2020.113572

- Rashedi, E., Nezamabadi-pour, H., & Saryazdi, S. (2009). GSA: A gravitational search algorithm. Information Sciences, 179(13), 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004

- Rashno, A., Nazari, B., Sadri, S., & Saraee, M. (2017). Effective pixel classification of Mars images based on ant colony optimization feature selection and extreme learning machine. Neurocomputing, 226, 66–79. https://doi.org/10.1016/j.neucom.2016.11.030

- Siedlecki, W., & Sklansky, J. (1989). A note on genetic algorithms for large-scale feature selection. Pattern Recognition Letters, 10(5), 335–347. https://doi.org/10.1016/0167-8655(89)90037-8

- Sreeja, N. K. (2019). A weighted pattern matching approach for classification of imbalanced data with a fireworks-based algorithm for feature selection. Connection Science, 31(2), 143–168. https://doi.org/10.1080/09540091.2018.1512558

- Too, J., & Abdullah, A. R. (2020a). Binary atom search optimisation approaches for feature selection. Connection Science, 32(4), 406–430. https://doi.org/10.1080/09540091.2020.1741515

- Too, J., & Abdullah, A. R. (2020b). A new and fast rival genetic algorithm for feature selection. The Journal of Supercomputing, 1–31. https://doi.org/10.1007/s11227-020-03378-9

- Too, J., Abdullah, A. R., Mohd Saad, N., & Tee, W. (2019). EMG feature selection and classification using a pbest-guide Binary Particle swarm optimization. Computation, 7(1), 12/1-20. https://doi.org/10.3390/computation7010012

- Tubishat, M., Idris, N., Shuib, L., Abushariah, M. A. M., & Mirjalili, S. (2020). Improved salp swarm algorithm based on opposition based learning and novel local search algorithm for feature selection. Expert Systems with Applications, 145/1-10, 113122. https://doi.org/10.1016/j.eswa.2019.113122

- UCI Machine Learning Repository. (n.d.). Retrieved March 24, 2019, from https://archive.ics.uci.edu/ml/index.php

- Wolpert, D. H., & Macready, W. G. (1997). No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation, 1(1), 67–82. https://doi.org/10.1109/4235.585893

- Xia, X., Xing, Y., Wei, B., Zhang, Y., Li, X., Deng, X., & Gui, L. (2019). A fitness-based multi-role particle swarm optimization. Swarm and Evolutionary Computation, 44, 349–364. https://doi.org/10.1016/j.swevo.2018.04.006

- Xue, B., Zhang, M., & Browne, W. N. (2014). Particle swarm optimisation for feature selection in classification: Novel initialisation and updating mechanisms. Applied Soft Computing, 18(Supplement C), 261–276. https://doi.org/10.1016/j.asoc.2013.09.018

- Yapici, H., & Cetinkaya, N. (2019). A new meta-heuristic optimizer: Pathfinder algorithm. Applied Soft Computing, 78, 545–568. https://doi.org/10.1016/j.asoc.2019.03.012

- Zhang, Y., Jin, Z., & Mirjalili, S. (2020). Generalized normal distribution optimization and its applications in parameter extraction of photovoltaic models. Energy Conversion and Management, 224, 113301. https://doi.org/10.1016/j.enconman.2020.113301

- Zhang, Y.-D., Zhang, Y., Lv, Y.-D., Hou, X.-X., Liu, F.-Y., Jia, W.-J., Yang, M.-M., Phillips, P., & Wang, S.-H. (2017). Alcoholism detection by medical robots based on Hu moment invariants and predator–prey adaptive-inertia chaotic particle swarm optimization. Computers & Electrical Engineering, 63, 126–138. https://doi.org/10.1016/j.compeleceng.2017.04.009