Abstract

A medical digital twin (MDT), the virtual replica of a person, is expected to become a fundamental service in the near future. An MDT applies IoT, AI and big data technologies to predict the state of health and offer clinical suggestions. Securing medical digital twins requires a deep understanding of their design and a new vulnerability-tolerant approach. In this paper, we present a new medical digital twin, which systematically combines Haptic-AR navigation and deep learning techniques to achieve a virtual replica and cyber–human interaction. We report an innovative study of cyber–human interaction performance in different scenarios. With the focus on cyber resilience, a vulnerability-tolerant solution is a must in real-world MDT scenarios. We propose a novel scheme for recognising and fixing MDT vulnerabilities, in which a new CodeBERT-based neural network is applied to better understand risky code and capture cybersecurity semantics. We develop a prototype of the new MDT and collect several real-world datasets. In the empirical study, a number of well-designed experiments are conducted to evaluate the performance of the digital twin, cyber–human interaction and vulnerability detection. The results confirm that our new platform works well, can support clinical decisions and has great potential in cyber resilience.

1. Introduction

The digital twin will be an important 6G service in the near future (Samsung, 2020), aggregating new technologies such as artificial intelligence (AI) (Boden, 2017), the Internet of Things (IoT) and big data analytics. Digital twins can be the virtual replicas of many physical entities, such as systems, devices, people and even places. One benefit of a digital twin is that, before actual actions are performed on physical entities, professionals can run simulations and tests using the twin (Hu et al., 2021; Zhang et al., 2020). Owing to the explosion of IoT sensors, digital twin applications can be seen in the manufacturing, automotive and healthcare sectors. With their real-time monitoring capability, digital twins can significantly reduce maintenance burdens.

A medical digital twin (MDT) is a virtual replica of a person. Employing a life-long data record and AI-powered models, an MDT can predict the health state and give clinical suggestions (Corral-Acero et al., 2020). For example, an MDT can predict the outcome of a lung cancer diagnosis. In the real world, a GE MDT platform combined discrete events and applied agent-based methods to deal with the nuances of care delivery and predict patient flow. An MDT can track a patient's records, cross-check them against known patterns, and analyse the disease features through AI. With the support of real-time data acquisition and processing, advanced data analytics and machine learning algorithms can produce accurate outcomes (Cao et al., 2021; Topol, 2019). An MDT can bring long-term benefits to healthcare organisations through substantial cost savings. It can also improve healthcare organisations' preventive maintenance, e.g. by predicting cardiopulmonary arrest, respiratory arrest and lung cancer.

We aim to develop a new MDT with a focus on lung biopsy. Traditional lung biopsy navigation systems have two stages, preoperative and intraoperative. The intraoperative stage has two concerns: the real-time location of the operational tool and the next stage of the operation. During the whole process, each of the guiding clues should be available to the surgeon in real time. For professional surgeons, the real-time force feedback from the hands may be more sensitive and important than the image guiding clues. In the new MDT, haptic sensation is introduced in the intraoperative stage to assist the image guidance for lung biopsy. We propose a new haptic-AR-enabled guiding method with deep learning to improve the robustness of the lung MDT.

In recent years, video-assisted thoracoscopic surgery (VATS) has become a popular method for lung cancer surgery. VATS is a minimally invasive operation, which requires only a few small incisions in the patient's skin. It reduces the exposure of internal organs to external pollutants during surgery. Compared with traditional surgery, VATS has better cosmetic effects, a shorter postoperative recovery time and a shorter period of painkiller use. Therefore, our new lung MDT chooses minimally invasive surgery as its reference. Recently, machine–human interaction through virtual reality has attracted considerable research. For example, Delp et al. proposed the application of VR technology in medical practice (Delp et al., 1990). However, there is a lack of quantitative research on machine–human interaction in MDT with VATS. The well-known laparoscopic surgery simulator and evaluation method (Veronesi et al., 2016) does not consider the key characteristics of thoracoscopic surgery MDT: the narrow space, dangerous operation and complex details. In this paper, we bridge the gap through new MDT research.

Cyber resilience (Chen et al., 2020; Miao et al., 2022; Samsung, 2020; Zhang et al., 2021) is a big concern in real-world critical applications, including the development and application of MDT, where vulnerability tolerance is a fundamental requirement (Ahamad & Pathan, 2021; Liu et al., 2018). Exploitable MDT vulnerabilities are important security threats to healthcare organisations and affect a large number of users (Lin et al., 2020; Qiu et al., 2021). To deal with the limitations of conventional static and dynamic techniques, machine learning (ML) has become popular and has been applied in software vulnerability detection. Compared to traditional techniques, ML-based software vulnerability detection has great potential for discovering unknown vulnerabilities and their variants (Lin et al., 2019; Sun et al., 2019). Existing ML-based methods use programming code features, traditional ML algorithms (Coulter et al., 2020a) and programming-related data such as developer activities and code commits (Lin et al., 2018) to build a detection model. Feature engineering in traditional ML depends on programming experience, vulnerability expertise and IoT domain knowledge (Coulter et al., 2020b), all of which are unreliable. To address this problem, deep learning has been proposed and has demonstrated excellent performance in various scenarios (Liu et al., 2019; Wang et al., 2020). Existing methods include applying FCNs, CNNs and RNNs to model code characteristics and recognise vulnerability patterns in software. However, in the real world, we need an end-to-end solution that protects MDT while taking human-centric factors into account.

The innovation and contribution of the paper are threefold.

We developed a novel Haptic-AR intervention MDT for advanced lung biopsy.

We conducted a new study on XR-based machine–human interaction for lung biopsy prediction.

We proposed a novel vulnerability tolerance scheme using CodeBERT for cyber resilience of lung MDT.

The healthcare industry can use the new lung-biopsy-focused MDT to revolutionise clinical processes and hospital management. It will enhance medical care with digital tracking and advanced modelling of the human body. In industrial applications, the innovative haptic sensation can assist the image guidance for lung biopsy. Moreover, the new vulnerability detection technique will secure industrial software and significantly improve the cyber resilience of the healthcare industry.

We organise the rest of the paper as follows. Section 2 reviews the related work. A new MDT framework and a new cyber vulnerability resilience solution are presented in Section 3. Section 4 reports the experiments and results to demonstrate the effectiveness of the new MDT and techniques. Section 5 concludes this paper.

2. Related work

This section reviews the work related to our research in three parts: MDT with XR and AI, MDT and its security, and neural models for vulnerability detection. Recently, XR and AI have been applied to build MDTs and provide intelligence and new functions. Cyberattacks have targeted networked MDTs to compromise critical medical infrastructure. It is therefore urgent to research cyber resilience when developing new MDTs, which motivates the work reported in this paper.

2.1. MDT with XR and AI

MDT with XR (Ratcliffe et al., 2021) can support doctors with more information during the operation, e.g. rebuilding the three-dimensional virtual patient for 3D surgical guiding. There are two key components: the three-dimensional reconstruction from CT/MRI images and the model-patient registration. Recent research focuses on two critical problems affecting system performance, physiological patient motion and surgical instrument interactions (Villanueva et al., 2020). Existing commercial systems adopt either visual-guide or optical-guide mechanisms based on the infrared (IR) NDI Polaris. A precise registration between the reconstructed model and the patient is difficult because of the posture impurity and the lesions' heterogeneity. IR-based navigation is significantly affected by signal blocking during actual operations. We research high-performance MDT with XR and provide a new proposal.

MDT systems use deep learning for tasks such as detecting COVID-19 pathogens (Burrer et al., 2020) and defending against cyber-attacks. For example, the Internet of Things has been leveraged to collect real-time physiological data, with the data encrypted to protect patient privacy. Researchers studied new anonymous IoT models and developed RFID proof-of-concept systems. In the relevant scenarios, the blockchain technique was applied to protect contract deployment and function execution. AI plays an important role in risk prediction and prognosis treatment in MDT. To relieve the burdens on hospital staff and other health personnel, blockchain-based applications were developed to monitor and manage COVID-19 patients digitally. 5G technology can support the improvement of virus tracking, patient monitoring, data collection and analysis. Along with other synchronous technologies such as IoT and AI, it has great potential to revolutionise healthcare (Ting et al., 2020).

2.2. MDT and its security

The number of MDTs deployed has been constantly increasing (Zhang et al., 2020). Although the application of MDT has huge potential, it must take practical constraints into consideration. For example, vending machines can be combined with IoT technology to facilitate a healthy lifestyle. However, cyber-attacks on MDT will have terrible consequences for critical medical infrastructure. With the widespread application of MDT, such attacks pose a severe threat to the secure operation not only of medical devices but also of the entire MDT ecosystem (Coulter et al., 2020a).

To protect MDT security, cyber researchers and professionals have been working on secure systems and solutions to combat the increasing cyber-attacks (Lin et al., 2020; Wang et al., 2020; Xie et al., 2021). Extensive efforts have been made to ensure MDT security and privacy, providing practical guidance for medical industries. Recent survey papers discussed the opportunities and possible threats that MDTs face at home and in hospital, and the classification of MDT-targeted cyber-attacks (Fu et al., 2017; Yang et al., 2017). A new method (Boejen & Grau, 2011) uses unmanned aerial vehicles to launch an attack in a smart hospital environment, which can compromise wearable healthcare sensors. A deep learning approach was proposed for real-time cyber-attack detection for MDT security protection, reporting high accuracy and significant time savings (Sethuraman et al., 2020).

In recent years, it has become common for MDT to combine virtual reality (VR) technology with medical disciplines. Unity software was combined with a brain–computer interface to control the VR environment and MDT devices for future medical applications (Coogan & He, 2018). To improve the operation performance of the entire medical platform, our new research proposes to integrate VR technology, IoT and smart medical devices to duplicate the clinical process of lung cancer with pulmonary embolism. In the education field, the new solution can significantly improve the learning experience by seamlessly combining conceptual learning with practical experience.

2.3. Neural models for vulnerability detection

The fully connected network (FCN) was first applied to code representation learning in software vulnerability detection. Compared with traditional machine learning, FCN is able to create a complex model that captures nonlinear characteristics from a large data set (Lin et al., 2020; Liu et al., 2021). Researchers have used FCN to analyse source code and identify the invariance of security vulnerabilities. FCN can take various forms of input data, i.e. it is independent of the input structure, which enables researchers to explore different handcrafted features and information for software vulnerability discovery.

However, the vulnerability detection performance of existing FCN-based methods relies on manual feature engineering. Moreover, FCN is not good at processing sequentially dependent data such as program code, whereas it is normally the joint action of context in the code control flow that leads to vulnerable programs. Hence, researchers utilised context-aware neural network structures for software vulnerability detection. Considering that program code shares semantic and syntactic similarity with natural languages, natural language processing (NLP) techniques have been applied to learn the patterns of vulnerable code. With the capability of dealing with structured spatial data (Ramsundar & Zadeh, 2018), the convolutional neural network (CNN) was proposed to recognise semantically similar pixels for image classification. CNN has also achieved certain success in text classification by capturing the semantics in the context window. This motivates researchers to design CNN-based solutions to learn context-aware code semantics.

The RNN is designed to model context dependence and process sequential data, so it has been applied in vulnerability pattern recognition. LSTM, a variant of RNN, was used to predict vulnerabilities in binary programs, and the research results show that LSTM is better than traditional multi-layer perceptrons in this task (Wu et al., 2017). Because LSTM only considers code relationships in one direction, a bidirectional LSTM (Bi-LSTM) network was proposed for detecting vulnerabilities with ‘code gadgets’ (Li et al., 2016). The new model has some ability to capture the semantics of software vulnerabilities that are associated with multiple code fragments, e.g. buffer overflow. Other studies preferred to extract abstract syntax trees (ASTs) from source code and used the ASTs in representation learning through the Bi-LSTM network (Lin et al., 2021, 2017, 2018).

3. Human centric smart MDT with security

In a future era with AI, 6G and smart sensors, the healthcare system can seamlessly link the real-world patient and the digital replica through MDT to achieve precision medicine (Hamet & Tremblay, 2017; Saad et al., 2019; Wang et al., 2020). For example, future doctors will be able to explore and monitor the patient, and to detect and predict health problems remotely.

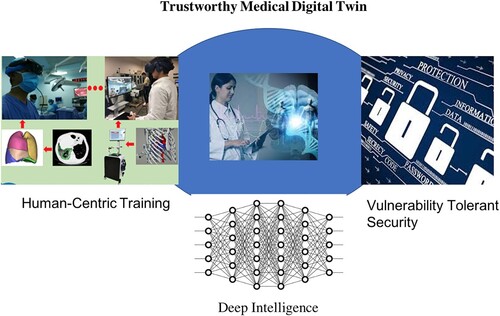

The figure above shows a new secure MDT linking the real-world patient, AI prediction and telemedicine through a data-driven approach. Specifically, deep learning is applied to support intelligence in human-centric training and vulnerability-tolerant security. The new MDT provides a degree of trustworthiness through intelligent detection of software vulnerabilities. In the following three subsections, we introduce the technical details of AI for MDT, machine–human interaction and secure MDT with advanced vulnerability detection.

3.1. Data-driven intelligent MDT

A real-world dataset is created by collecting clinical data of lung cancer patients from three hospitals in Yunnan and Chongqing. We conducted essential data analysis for evidence-based medicine, including risk factor analysis, meta-data analysis and epidemiology analysis. The result is a set of features consisting of age, gender, leukocyte, pathological type, risk factors, d-dimer level, ECG, TNM stage, pathological location and thoracic CT findings.

The new MDT supports smart telemedicine and surgery navigation. We achieve the visual rendering through a mesh reconstructed from patient-specific CT images, which is rendered with a physics-based rendering algorithm. Our new method can build up a microfacet model with more flexibility during the visual rendering. Due to the new Fresnel term, the diffuse and specular reflections in our visual rendering have different forms and can accurately simulate the specific glistening effects of human organs. Moreover, we apply a multi-platform integrated game development tool to develop a Unity application that allows healthcare managers to easily create interactive content. Our Unity application is developed for cross-platform use, e.g. Android, iOS, PC and Web. It can deploy an APK of a VR project to an Android device, run the project and display it through a headset.

Our MDT uses a customised CNN network to predict anomalies, e.g. pulmonary embolism in lung cancer. The neural network takes the labelled clinical data as input and builds a prediction model to support a treatment decision. It has five layers for convolution, full connection and sigmoid activation. Compared to relevant work, our technique uses relatively little pre-processing for visual rendering. Instead of hand-engineered filtering and construction, the new method optimises the filters through automated learning and improves simulation performance. This data-driven approach is independent of prior knowledge and human intervention and has a significant advantage in feature extraction.

3.2. Machine–human interaction

Considering machine–human interaction through the proposed MDT, we implement a VatsSim-XR surgery simulator that integrates VR, AR and MR modes for clinical training. The training system consists of a laptop computer, two force feedback devices, a helmet-mounted display and two positioners. The computer-simulated virtual environment integrates cognitive and motor skills to achieve VR-based clinical training. AR simulation and high-quality cameras are used to implement the digital twin and achieve comfortable machine–human interaction. We use a panoramic camera to record the natural environment of an operating room and combine it with a virtual environment shown in Oculus helmets for MR-based doctor training. There are three learning modules in our MDT. The virtual simulator has two primary functions, visual rendering and tactile simulation. The visual rendering reconstructs the surgical instruments and environment. The tactile simulation utilises force feedback to implement tactile–visual interaction through timely displacement and force updates. Contact made by the surgical instrument triggers the force feedback device to generate the corresponding force feedback.

We invite tens of doctors to take part in the real-world use experiments. A t-test analysis is performed on the data collected in the experiments. The novice doctors receive 2 weeks of training after their first attempt and then repeat the experiments so that a comparative study can be conducted. All novice and expert doctors must learn how to operate the MDT simulator before officially participating in the experiments. They must understand the operating rules of peg transfer, blood vessel cutting and shearing, and rope perforation. The training experiments include peg transfer, blood vessel cutting and shearing, and rope perforation. All doctors conduct the experiments in three modules: VR, AR and MR.

The participating doctors are given the operating requirements and the scoring specification before conducting the experiments of machine–human interaction in the MDT. In the module of peg transfer, the task is to use the left surgical forceps to pick up the left object and transfer it to the right forceps. The participant is also asked to do the same task starting with the right forceps. The number of failures to move the objects correctly is recorded for analysis. In the module of blood vessel trimming, the task is first to use the left surgical clip to clamp the left end of the blood vessel. The same task is then repeated with the left surgical clamp. Finally, the task is to use the right surgical clamp to cut the blood vessel in the middle and catch the rope at the other end of the small tunnel. The number of ropes dropped in the experiments is recorded for analysis. In the module of rope perforation, the task is to use the left surgical clamp to grab one end of the rope and pass it through the small hole. The task is repeated using the right surgical clamp.

3.3. Secure MDT with advanced vulnerability detection

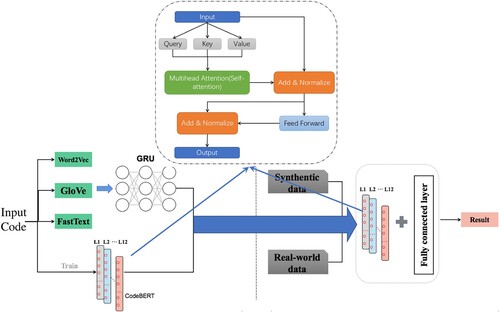

Our new research methodology is shown in the figure above. Several real-world software projects and a large number of synthetic vulnerable functions are used in the learning and validation of deep neural models. We process the source code and transform it into a form compatible with advanced deep embedding models such as CodeBERT (Feng et al., 2020). The source code files are loaded and processed to generate sequence data and labels, which are passed to the next stage to produce the code embedding vectors in a high-dimensional space. These vectors are then partitioned and fed to a neural network such as a GRU for the deep learning process. We feed CodeBERT with the synthetic data to obtain a fine-tuned model, which can output the probability that a function is vulnerable. Based on the fine-tuned model, we investigate the impact of several key parameters such as the length of the input sequence, batch size, epoch and learning rate.
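The step of turning source code into sequence data and labels can be illustrated with a minimal sketch. The regular-expression tokenizer, the function names and the toy samples below are assumptions made for illustration; they are not the processing code used in this work, and a real pipeline would rely on the tokenizer of the chosen embedding model.

```python
import re

# Hypothetical tokenizer: identifiers/keywords, integer literals, then any
# single non-space symbol. This is an illustrative stand-in only.
TOKEN_PATTERN = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def to_sequence(function_source):
    """Split the source text of one C function into a flat token sequence."""
    return TOKEN_PATTERN.findall(function_source)


def build_samples(functions):
    """Turn (source, label) pairs into parallel lists of sequences and labels."""
    sequences = [to_sequence(source) for source, _ in functions]
    labels = [label for _, label in functions]
    return sequences, labels


# Toy inputs: label 1 = vulnerable, 0 = non-vulnerable (illustrative only).
toy_functions = [
    ("void f(char *s) { char b[8]; strcpy(b, s); }", 1),
    ("int add(int a, int b) { return a + b; }", 0),
]
sequences, labels = build_samples(toy_functions)
```

The resulting token sequences would then be mapped to embedding vectors by the chosen model before being passed to the downstream network.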

Our research addresses the following key questions. First, what is the impact of deep code embedding on the vulnerability resilience of MDT? We design a new scheme to facilitate different embedding models, including CodeBERT, Word2vec, GloVe and FastText. The novelty involves code processing, transformation and representation. Second, can synthetic data help to improve the robustness and effectiveness of embedding models? We apply a new approach that combines a real-world dataset and a synthetic dataset to obtain fine-tuned models such as a fine-tuned CodeBERT, and explore the nature of the data and model dependence. Third, how does the input sequence length affect code embedding? We analyse the impact of various sizes of input code sequences on the detection performance of deep neural models.

Context information is crucial for analysing various vulnerable functions. In different contexts, a given variable has different meanings. CodeBERT can be customised to extract high-level code representations for the task of detecting vulnerable C functions. Conventional embedding models do not consider the value variation of a variable and convert a word into a fixed vector, which limits how well they capture code semantics. CodeBERT has two training objectives, masked language modelling and replaced token detection. For vulnerability detection, we take advantage of the second objective and use a large amount of unimodal source code. We leverage the encoder to retain useful information through its residual connection design. To learn the patterns of the C language, we incorporate transfer learning into our new scheme and obtain relevant syntactic and semantic information from the syntactic data of other languages.
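To make the replaced token detection objective concrete, the following minimal sketch builds one training example from a token sequence. It assumes, purely for illustration, that replacement tokens are drawn uniformly from a toy vocabulary; in practice a generator model proposes plausible replacements. The function name and parameters are hypothetical.

```python
import random


def make_rtd_example(tokens, vocabulary, replace_prob=0.15, seed=0):
    """Corrupt a token sequence; label each position 1 (replaced) or 0 (original)."""
    rng = random.Random(seed)
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < replace_prob:
            # Assumption: uniform sampling stands in for a learned generator.
            new_token = rng.choice(vocabulary)
        else:
            new_token = token
        corrupted.append(new_token)
        # A sampled token identical to the original counts as original.
        labels.append(int(new_token != token))
    return corrupted, labels


tokens = ["char", "b", "[", "8", "]", ";"]
corrupted, labels = make_rtd_example(tokens, vocabulary=["int", "x", "(", ")"])
```

The discriminator is then trained to predict the label at every position, so each token of unimodal source code contributes a training signal.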

Checking only contiguous information is generally insufficient to generate semantically rich vector representations. Vulnerable functions may contain declarations, assignments, control flow and other operational logic. Multi-head attention enables the model to focus on multiple key points, which facilitates the capture of potentially vulnerable functions. In addition, long-distance contextual information is crucial for vulnerability detection, so a positional encoding layer is added to our new scheme. A vulnerable code fragment usually has constituent parts linked to previous code, subsequent code, or both. Hence, the new design of our neural embedding network takes these into consideration and detects long-term dependencies in both the forward and backward directions.
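As an illustration of what a positional encoding layer provides, the sketch below computes the fixed sinusoidal encoding of the original Transformer. This specific form is an assumption: the text does not state which positional encoding is used, and pre-trained models of the BERT family typically learn their position embeddings instead.

```python
import math


def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position codes."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each (even, odd) dimension pair shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


encoding = sinusoidal_positional_encoding(seq_len=4, d_model=4)
```

Each row is added to the embedding of the token at that position, so that attention layers can distinguish tokens that are far apart in the function body.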

We propose a fine-tuning solution that allows a deep neural model to learn the syntax and structure of the C programming language and understand more code semantics. Through transfer learning, the customised CodeBERT can learn characteristics of the C language from the synthetic functions. Our synthetic dataset offers sufficient quantity and diversity, which is hard to obtain from real-world open-source projects because of time-consuming labelling. It includes basic code patterns and syntax and accurate labels for balanced training and optimisation.

In our fine-tuning method, we use CodeBERT as the source model pre-trained on the source data set. Then, we build a target deep neural network, which replicates all source model structures and parameters except the output layer. Next, we add a fully connected output layer to the target model, whose output size is the number of classes in the target data set. Finally, we train the target model using the target data set to achieve the fine-tuning purpose.
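The four steps above can be sketched without any deep learning framework by treating a model as a dictionary of named parameter blocks. The layer names, sizes and initialisation below are illustrative assumptions only, and the final training step is omitted.

```python
import copy
import random


def build_target_model(source_params, hidden_size, num_classes, seed=0):
    """Copy every source layer except the output layer, then add a new one."""
    rng = random.Random(seed)
    target = {
        name: copy.deepcopy(weights)
        for name, weights in source_params.items()
        if name != "output"
    }
    # New fully connected output layer: one weight row per target class.
    target["output"] = {
        "weight": [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
                   for _ in range(num_classes)],
        "bias": [0.0] * num_classes,
    }
    return target


# Toy "pre-trained" parameters; names and shapes are hypothetical.
source_params = {
    "embedding": [[0.1, 0.2, 0.3, 0.4]],
    "encoder": [[0.5, 0.6, 0.7, 0.8]],
    "output": {"weight": [[0.0] * 4 for _ in range(10)], "bias": [0.0] * 10},
}
target_params = build_target_model(source_params, hidden_size=4, num_classes=2)
```

For binary vulnerability detection the number of classes is two, so the new output layer maps the encoder's hidden representation to vulnerable and non-vulnerable scores.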

Source code functions have varying lengths, which exerts a tremendous influence on the detection performance. Over-long code needs to be truncated to avoid excessively long vectors, at the risk of information loss. There are also long-distance dependencies, given that some vulnerable functions may lie many sentences away from their locus of attention. Due to the bidirectional structure, previous information may slip from the model's memory in recent embedding models.
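A minimal sketch of the length handling discussed above is given below. It assumes simple head truncation and right padding with a hypothetical padding id of 0; the text does not specify the truncation strategy actually used.

```python
def fit_to_length(token_ids, max_len, pad_id=0):
    """Truncate or right-pad a token id sequence to exactly max_len items."""
    kept = token_ids[:max_len]  # tokens beyond max_len are discarded
    n_pad = max_len - len(kept)
    # The mask marks real tokens with 1 and padding with 0.
    attention_mask = [1] * len(kept) + [0] * n_pad
    return kept + [pad_id] * n_pad, attention_mask


short_ids, short_mask = fit_to_length([5, 6, 7], max_len=5)
long_ids, long_mask = fit_to_length([1, 2, 3, 4, 5, 6], max_len=4)
```

The second call shows the information loss directly: tokens 5 and 6 never reach the model, which is why the choice of input length matters for detection performance.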

We conduct a new study on the length of input sequences for deep neural models and focus exactly on the key points of function vulnerability.

4. Experiments and results

We design and carry out different experiments to evaluate the performance and security of the new MDT and demonstrate the effectiveness of proposed techniques.

4.1. How does our MDT affect surgical training?

Our MDT has its built-in metrics in different modules of surgical training and performs data recording and analysis. The peg transfer module records and analyses the total procedure time and instrument pathway. The module of blood vessel clipping and cutting uses the metrics of the total procedure time, instrument pathway, and the error of clipping and cutting. In the rope perforation module, we consider the total procedure time and instrument pathway. In addition, we also investigate some other facts including the number of successfully transferred small objects, the number of times the rope successfully passed through the small holes and the number of failures. A large number of experiments are conducted to evaluate the effectiveness of the new MDT through comprehensively analysing the data from the novice group and the expert group, and the data collected before and after the training. Our questionnaire uses a 5-point Likert scale to assess the visual and tactile sensations of the four training methods. The questionnaire is filled out by senior doctors.

In our study, descriptive statistical methods are used to analyse the questionnaire data. First, we conduct a reliability analysis on the questionnaire data and consider it credible when Cronbach's alpha coefficient is greater than 0.7. In terms of construct validation, an independent-sample t-test is applied and the simulator effect is considered significant when the p-value is less than 0.05. Regarding improvement, we use a histogram to show whether each doctor improves their skills after training. The entropy method is used to determine the index weight of each evaluation item in different modules. We apply the range method to remove the dimensions of each evaluation item, eliminating the influence of physical quantities, and compute the coefficient of variation to obtain the index weight.
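The reliability coefficient and the entropy weighting described above can be sketched as follows. This is a minimal illustration using the textbook formulas, with hypothetical function names and toy inputs; it is not the analysis code used in the study. Cronbach's alpha is computed as $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_j s_j^2}{s_T^2}\right)$ for $k$ items, and each entropy weight is the normalised value of one minus the entropy of the range-scaled item.

```python
import math
from statistics import variance


def cronbach_alpha(scores):
    """scores: one row per respondent, one column per questionnaire item."""
    k = len(scores[0])
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def entropy_weights(matrix):
    """matrix: one row per doctor, one column per evaluation item."""
    n, m = len(matrix), len(matrix[0])
    diversities = []
    for j in range(m):
        column = [row[j] for row in matrix]
        low, high = min(column), max(column)
        # Range scaling removes the units of each item; a constant column
        # is treated as uniform, which gives it (near) zero weight.
        if high == low:
            scaled = [1.0] * n
        else:
            scaled = [(x - low) / (high - low) for x in column]
        total = sum(scaled)
        proportions = [s / total for s in scaled]
        # By convention 0 * ln(0) is taken as 0.
        entropy = -sum(p * math.log(p) for p in proportions if p > 0) / math.log(n)
        diversities.append(1 - entropy)
    total_diversity = sum(diversities)
    return [d / total_diversity for d in diversities]


alpha = cronbach_alpha([[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]])
weights = entropy_weights([[1, 1], [2, 1], [3, 1], [4, 4]])
```

In the toy weighting example, the second item separates one doctor sharply from the rest, so it carries less entropy and receives the larger weight.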

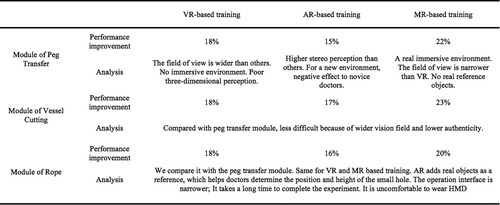

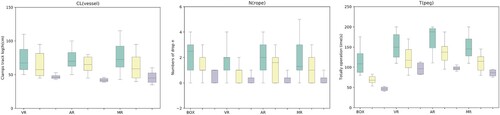

To demonstrate that our new MDT system works well, the participating doctors conduct the required operations step by step and the MDT outputs their performance based on pre-defined criteria. The performance computed automatically by the MDT can accurately differentiate novices from experts. It also shows that novice doctors can improve their surgical skills after training with our MDT. In the module of small object transferring, expert doctors perform better than in the other three training methods. Compared with VR-based training, AR-based training can enhance the reference of the environment, but it may have the opposite effect and increase the difficulty of the operation. MR-based training can improve the doctor's perception of the operating room and gives a higher sense of immersion than VR-based training. We take various indicators, including environment reference and simulation time, into consideration when analysing the experiment results. In the module of rope perforation, the performance of expert doctors is significantly better than that of novice doctors due to the high difficulty of the operation. Wearing a helmet display may cause some discomfort, so the experts take a long time in the training module. The novice doctors' performance gradually approaches that of the expert doctors. The influence of different training methods is reported in Figure . We can see that the novice group improves their surgical skills through training. After training, the movement trajectory of the surgical clip operated by the novice doctor is better.

We analyse the results obtained in the module of peg transfer. Figure shows that the novice group improves their surgical skills through training. Some novice doctors achieve the expert level in the operation. Considering the time to complete the training experiments, the AR-based training takes the longest time while the Box-based training takes the shortest time. The VR-based and MR-based training take similar times. The results suggest that the actual operating environment can affect the operation performance of a doctor. We next analyse the moving distance of the surgical clip. The moving distance is the longest in the AR-based training, followed by the VR-based training. Combining different objects as a reference for the position may affect the judgment of the novice doctor and negatively affect their performance. The motion trajectory of the novice doctor's surgical clip is more concentrated after the training. In terms of the number of drops of small objects, the Box-based and AR-based training have more drops. The MR-based training can significantly decrease the average number of drops of novice doctors. Based on the entropy analysis method, the MR-based training can quickly improve this particular operation skill of novice doctors.

Figure 4. Comparison of training results in three modules (left to right in each subfigure: novice, after training and expert).

We investigate the impact of the various training methods on the operation of vessel cutting. Figure shows that the novice group improves the surgical skills of vessel cutting and shearing through training. Some novice doctors achieve the expert operation level after training. The Box-based training takes less time than the other three training approaches but has the most significant error affecting blood vessel cutting and shearing. The authenticity of the box module is lower than that of the others, which suggests that module authenticity may affect the operation performance. We also consider the movement distance of the surgical clip. Regarding the time to complete, the improvement of the VR-based training is the largest, and the AR-based training takes the shortest time. The module authenticity and the environment can speed up a doctor's judgment, but that may affect the operation accuracy. The AR-based training can improve the thinking quality and increase the operation accuracy, so the trajectory movement distance is shorter and the errors are minor. The shorter time spent on MR shows that experts need less time to adapt in a familiar environment. The results suggest that vascular clipping and shearing errors are related to time and the movement distance of the surgical clip. The MR-based training is suitable for medical training, which is partially supported by the movement trajectory of the surgical clip. The doctor can use the actual object as a reference standard in the MR module, as supported by the entropy analysis.

We analyse the results of the experiments with rope. As shown in Figure 4, the novice group improves their surgical skills through training, as measured against the data of expert doctors. The rope module offers a different field of vision in each of the competing approaches. The VR-based and AR-based training provide a broader field of vision than MR, so the AR-based training takes the shortest time while the MR-based training takes the longest. The group of novice doctors spends similar time on the AR-based training and the VR-based training. Rope piercing is difficult, and the expert doctors do not demonstrate significantly better performance than the novice doctors. The MR-based training still yields the largest improvement in the time to complete for novice doctors, and the fidelity of the environment has a great impact on this improvement. The trajectory of the VR-based training is the most concentrated because its field of view is the widest. Through training, novice doctors achieve performance close to that of experts.
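The moving distance of the surgical clip compared across the three modules can be illustrated with a short sketch. It assumes that the clip position is sampled as a sequence of 3D points and that the distance is the summed straight-line length between consecutive samples; the function name `path_length` and the sample coordinates are hypothetical and are not taken from the prototype.

```python
import math
from typing import Sequence, Tuple

# Assumed representation: one (x, y, z) sample of the clip tip per time step.
Point = Tuple[float, float, float]


def path_length(trajectory: Sequence[Point]) -> float:
    """Sum the Euclidean distances between consecutive sampled positions."""
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


if __name__ == "__main__":
    # Hypothetical trajectory: two straight segments of length 5 and 12.
    samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
    print(path_length(samples))
```

Under this assumption, a shorter path for the same task indicates a more direct and more concentrated motion of the clip.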

4.2. What vulnerabilities could be detected through advanced neural learning?

Two datasets (Lin et al., Citation2021) are used in the empirical study to evaluate the performance of advanced neural learning for MDT vulnerability detection. The real-world dataset consists of 12 open-source software projects and libraries, including Asterisk, Httpd, Imagemagick, LibPNG, LibTIFF, OpenSSL, Pidgin, qemu, samba, VLCPlayer and Xen. It is a dual-granularity vulnerability detection dataset, providing labelled file-level and function-level vulnerabilities according to the information from the public NVD (https://nvd.nist.gov/) and CVE (https://cve.mitre.org/). The NVD is the U.S. government repository of standards-based vulnerability management data. The mission of the CVE program is to identify, define and catalogue publicly disclosed cybersecurity vulnerabilities. Our experiments use about 2000 vulnerable functions and 130,000 non-vulnerable functions for performance evaluation. Our synthetic vulnerability dataset contains function samples from the SARD project, which are artificially constructed code fragments based on known vulnerability patterns.

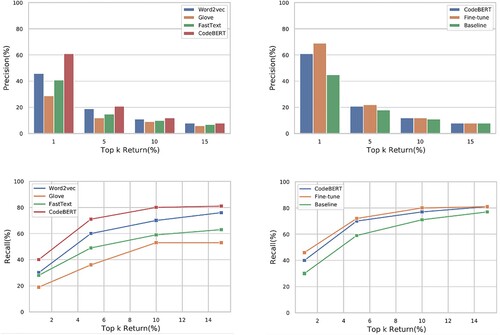

We implement several methods and conduct a set of experiments to evaluate the impact of different neural embedding techniques on vulnerability detection performance. The corresponding figure shows the results of detecting vulnerable functions among the top-k returned functions. The embedding technique affects the detection precision significantly, which varies from about 30% to about 60% when we check the top 1% of ranked functions. The recall of CodeBERT is much better than that of Word2Vec, GloVe and FastText. The difference reaches around 10% for the top 1% of returned functions.
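The top-k evaluation used here can be sketched as follows. This is a minimal illustration, assuming each function receives a vulnerability score and a binary ground-truth label; the function name `precision_recall_at_top` and the toy scores are hypothetical and do not reproduce the experimental data.

```python
from typing import List, Tuple


def precision_recall_at_top(
    scores: List[float], labels: List[int], fraction: float
) -> Tuple[float, float]:
    """Rank functions by score (highest first) and inspect the top fraction.

    labels use 1 for vulnerable and 0 for non-vulnerable functions.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    # Always inspect at least one function, even for tiny fractions.
    n_checked = max(1, round(len(scores) * fraction))
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = sum(label for _, label in ranked[:n_checked])
    total_vulnerable = sum(labels)
    precision = hits / n_checked
    recall = hits / total_vulnerable if total_vulnerable else 0.0
    return precision, recall


if __name__ == "__main__":
    # Toy data: 10 functions, 3 of them vulnerable.
    toy_scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
    toy_labels = [1, 0, 1, 0, 0, 0, 1, 0, 0, 0]
    print(precision_recall_at_top(toy_scores, toy_labels, fraction=0.2))
```

Because vulnerable functions are rare (about 2000 against 130,000 in the real-world dataset), precision and recall over a small inspected fraction are more informative than overall accuracy.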

A set of experiments is designed and carried out to evaluate the impact of fine-tuning on CodeBERT. To conduct the model fine-tuning, we add a fully connected layer to the model and apply the synthetic SARD dataset for the parameter optimisation. As shown in the corresponding figure, our fine-tuning method can improve the precision by up to 10% when retrieving 1% of vulnerable functions. In particular, our system can discover most of the vulnerable functions by checking the top 15% of ranked functions. Compared to other detection models, our fine-tuning method can significantly improve the performance, by about 25% in precision and 20% in recall.

We conducted a set of experiments to choose a suitable sequence length for software vulnerability detection in MDT. For the C language, the results show the best and most reliable performance when the sequence length is 256. The precision for the setting of 128 is inferior, at only 25%. The essential information of a vulnerable function includes the header file, variable declarations, parameters, logic code and return value. If the sequence length is too short, the model may miss critical information and produce bad results. When the sequence length is too long, the performance is poor due to high noise: function sequences shorter than the chosen length are automatically padded with 1 during the embedding process, and too much irrelevant information misleads the focus of the detection model.
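The trade-off between truncation and padding can be made concrete with a short sketch. It assumes that each function has already been converted to a list of integer token ids; the helper name `fit_to_length` is hypothetical, and only the padding value 1 and the length 256 come from the text.

```python
from typing import List

PAD_ID = 1      # padding value stated in the text
MAX_LEN = 256   # best-performing sequence length reported for C functions


def fit_to_length(
    token_ids: List[int], max_len: int = MAX_LEN, pad_id: int = PAD_ID
) -> List[int]:
    """Truncate a token sequence to max_len, or right-pad it with pad_id."""
    clipped = token_ids[:max_len]
    return clipped + [pad_id] * (max_len - len(clipped))


if __name__ == "__main__":
    short_function = [101, 57, 88]        # hypothetical token ids
    long_function = list(range(2, 402))   # 400 hypothetical token ids
    print(fit_to_length(short_function, max_len=5))   # padded on the right
    print(len(fit_to_length(long_function)))          # truncated to MAX_LEN
```

A short limit discards the tail of long functions, while a long limit fills most inputs with padding, which matches the two failure modes described above.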

Unlike the Flawfinder baseline, which ranks functions according to their vulnerability level, our neural embedding approach ranks functions by their probability of being vulnerable. The experiment results show that the deep neural model with an optimised sequence length substantially outperforms Flawfinder. Our model can achieve a precision of 70% and a recall of about 50% in the top 1% of returned functions. Flawfinder has very poor precision and recall in this case.

We carried out a set of experiments to evaluate the performance of Word2Vec, GloVe, FastText and CodeBERT for vulnerability detection. Word2Vec learns word embeddings according to word co-occurrence and proximity in space. However, it does not pay attention to the context. GloVe and FastText make some progress over Word2Vec, but all of them use a single vector to represent a word and therefore suffer from the issue of polysemy. Researchers proposed contextual word embeddings to take into account the context of a single word in a sentence. A vulnerability pattern can span several lines and therefore exhibits long-distance dependence. CodeBERT generates different vectors for a word in C code depending on its context, instead of a one-to-one correspondence.

The corresponding figure reports the performance of the different embedding methods and shows their varying capacities in learning vulnerability patterns. CodeBERT demonstrates the ability to generate high-level, deep, context-dependent representations. We use the synthetic dataset and an additional fully connected layer to fine-tune CodeBERT and improve vulnerability detection accuracy. Compared to the other three models, our method can achieve over 10% improvement in precision and recall. We also examine the impact of the batch size, the number of epochs and the input sequence length on the detection performance. An optimised input sequence length is expected to benefit the model and reduce information loss, improving the detection capability. In the experiments, we can improve from 7% to 44% in the top 1% of returned functions. The results confirm that our new method can learn complex patterns and generate high-level representations better than conventional embedding methods.

Active MDTs are increasingly connected to the Internet to enhance their functionality and ability. Increasingly, MDTs can be controlled via a mobile phone, and data can be transmitted remotely to support new applications. The connectivity of MDTs to the Internet facilitates information sharing and treatment delivery, but it also exposes MDTs to the risk of potential cybersecurity threats. The threats can be reduced and managed by implementing a vulnerability resilience strategy. The responsibility for implementing and maintaining the cyber resilience of MDTs falls upon all stakeholders. Our research demonstrated a novel technique for MDT cyber resilience and can significantly benefit the medical industry.

5. Conclusion

This paper presented a new medical digital twin (MDT) combining XR navigation and deep learning to achieve cyber–human interaction for clinical training and cybersecurity. We designed a new system and three training modules to support cyber–human interaction and improve clinical operation performance. We developed a new CodeBERT-based neural network to better understand risky code and capture cybersecurity semantics, so as to detect MDT vulnerabilities effectively. A large number of well-designed experiments were carried out to evaluate the effectiveness and efficiency of the new MDT. The experiment results and comprehensive analysis show that the proposed techniques work well and that the new MDT can support clinical decisions and has great potential in cyber resilience.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Ahamad, S. S., & Pathan, A. S. K. (2021). A formally verified authentication protocol in secure framework for mobile healthcare during COVID-19-like pandemic. Connection Science, 33(3), 532–554. https://doi.org/10.1080/09540091.2020.1854180

- Boden, M. A. (2017). Is deep dreaming the new collage? Connection Science, 29(4), 268–275. https://doi.org/10.1080/09540091.2017.1345855

- Boejen, A., & Grau, C. (2011). Virtual reality in radiation therapy training. Surgical Oncology, 20(3), 185–188. https://doi.org/10.1016/j.suronc.2010.07.004

- Burrer, S. L., de Perio, M. A., Hughes, M. M., Kuhar, D. T., Luckhaupt, S. E., & CDC COVID-19 Response Team. (2020). Characteristics of health care personnel with COVID-19–United States, February 12–April 9, 2020. Morbidity and Mortality Weekly Report, 69(15), 477–481. https://doi.org/10.15585/mmwr.mm6915e6

- Cao, Z., Zhou, Y., Yang, A., & Peng, S. (2021). Deep transfer learning mechanism for fine-grained cross-domain sentiment classification. Connection Science, 33(4), 911–928. https://doi.org/10.1080/09540091.2021.1912711

- Chen, X., Li, C., Wang, D., Wen, S., Zhang, J., Nepal, S., Xiang, Y., & Ren, K. (2020). Android HIV: A study of repackaging malware for evading machine-learning detection. IEEE Transactions on Information Forensics and Security, 15, 987–1001. https://doi.org/10.1109/TIFS.10206

- Coogan, C., & He, B. (2018). Brain-computer interface control in a virtual reality environment and applications for the internet of things. IEEE Access, 6, 10840–10849. https://doi.org/10.1109/ACCESS.2018.2809453

- Corral-Acero, J., Margara, F., Marciniak, M., Rodero, C., Loncaric, F., Feng, Y., Gilbert, A., Fernandes, J. F., Bukhari, H. A., Wajdan, A., & Martinez, M. V. (2020). The digital twin to enable the vision of precision cardiology. European Heart Journal, 41(48), 4556–4564. https://doi.org/10.1093/eurheartj/ehaa159

- Coulter, R., Han, Q. L., Pan, L., Zhang, J., & Xiang, Y. (2020a). Code analysis for intelligent cyber systems: A data-Driven approach. Information Sciences, 50(7), 3081–3093. https://doi.org/10.1016/j.ins.2020.03.036

- Coulter, R., Han, Q. L., Pan, L., Zhang, J., & Xiang, Y. (2020b). Data-driven cyber security in perspective–intelligent traffic analysis. IEEE Transactions on Cybernetics, 50(7), 3081–3093. https://doi.org/10.1109/TCYB.6221036

- Delp, S. L., Loan, J. P., Hoy, M. G., Zajac, F. E., Topp, E. L., & Rosen, J. M. (1990). An interactive graphics-based model of the lower extremity to study orthopaedic surgical procedures. IEEE Transactions on Biomedical Engineering, 37(8), 757–767. https://doi.org/10.1109/10.102791

- Feng, Z., Guo, D., Tang, D., Duan, N., Feng, X., Gong, M., Shou, L., Qin, B., Liu, T., Jiang, D., & Zhou, M. (2020). CodeBERT: A pre-trained model for programming and natural languages. arXiv preprint arXiv:2002.08155.

- Fu, K., Kohno, T., Lopresti, D., Mynatt, E., Nahrstedt, K., Patel, S., Richardson, D., & Zorn, B. (2017). Safety, security, and privacy threats posed by accelerating trends in the internet of things. Computing Community Consortium (CCC) Technical Report, 29(3), 1–9. arXiv:2008.00017

- Hamet, P., & Tremblay, J. (2017). Artificial intelligence in medicine. Metabolism, 69(3), S36–S40. https://doi.org/10.1016/j.metabol.2017.01.011

- Hu, J., Liang, W., Hosam, O., Hsieh, M. Y., & Su, X. (2021). 5GSS: A framework for 5G-secure-smart healthcare monitoring. Connection Science, 1–23. https://doi.org/10.1080/09540091.2021.1977243

- Li, Z., Zou, D., Xu, S., Jin, H., Qi, H., & Hu, J. (2016). VulPecker: An automated vulnerability detection system based on code similarity analysis. In Proceedings of the 32nd ACCSA (pp. 201–213). Springer International Publishing.

- Lin, G., Wen, S., Han, Q., Zhang, J., & Xiang, Y. (2020). Software vulnerability detection using deep neural networks: A survey. Proceedings of the IEEE, 108(10), 1825–1848. https://doi.org/10.1109/PROC.5

- Lin, G., Xiao, W., Zhang, J., & Xiang, Y. (2019). Deep learning-based vulnerable function detection: A benchmark. In Proceedings of the International Conference on Information and Communications Security (pp. 219–232). Springer International Publishing.

- Lin, G., Zhang, J., Luo, W., Pan, L., De Vel, O., Montague, P., & Xiang, Y. (2021). Software vulnerability discovery via learning multi-domain knowledge bases. IEEE Transactions on Dependable and Secure Computing, 18(5), 2469–2485. https://doi.org/10.1109/TDSC.2019.2954088

- Lin, G., Zhang, J., Luo, W., Pan, L., & Xiang, Y. (2017). POSTER: Vulnerability discovery with function representation learning from unlabeled projects. In Proceedings of the 2017 Sigsac Conference on CCS (pp. 2539–2541). Springer International Publishing.

- Lin, G., Zhang, J., Luo, W., Pan, L., Xiang, Y., De Vel, O., & Montague, P. (2018). Cross-project transfer representation learning for vulnerable function discovery. IEEE Transactions on Industrial Informatics, 14(7), 3289–3297. https://doi.org/10.1109/TII.2018.2821768

- Liu, L., De Vel, O. Y., Han, Q., Zhang, J., & Xiang, Y. (2018). Detecting and preventing cyber insider threats: A survey. IEEE Communications Surveys & Tutorials, 20(2), 1397–1417. https://doi.org/10.1109/COMST.2018.2800740

- Liu, S., Lin, G., Han, Q. L., Wen, S., Zhang, J., & Xiang, Y. (2019). DeepBalance: Deep-learning and fuzzy oversampling for vulnerability detection. IEEE Transactions on Fuzzy Systems, 28(7), 1329–1343. https://doi.org/10.1109/TFUZZ.2019.2958558

- Liu, W., Hu, E. W., Su, B., & Wang, J. (2021). Using machine learning techniques for DSP software performance prediction at source code level. Connection Science, 33(1), 26–41. https://doi.org/10.1080/09540091.2020.1762542

- Miao, Y., Chen, C., Pan, L., Han, Q. L., Zhang, J., & Xiang, Y. (2022). Machine learning based cyber attacks targeting on controlled information: A survey. ACM Computing Surveys, 54(7), 136:1–136:36. https://doi.org/10.1145/3465171

- Qiu, J., Zhang, J., Pan, L., Luo, W., Nepal, S., & Xiang, Y. (2021). A survey of android malware detection with deep neural models. ACM Computing Surveys, 53(6), 126:1–126:36. https://doi.org/10.1145/3417978

- Ramsundar, B., & Zadeh, R. B. (2018). TensorFlow for deep learning: From linear regression to reinforcement learning. O'Reilly Media, Inc.

- Ratcliffe, J., Soave, F., Bryan-Kinns, N., Tokarchuk, L., & Farkhatdinov, I. (2021). Extended Reality (XR) remote research: A survey of drawbacks and opportunities. In Proceedings of the 2021 Chi Conference on Human Factors in Computing Systems (pp. 1–13). Springer International Publishing.

- Saad, W., Bennis, M., & Chen, M. (2019). A vision of 6G wireless systems: Applications, trends, technologies, and open research problems. IEEE Network, 34(3), 134–142. https://doi.org/10.1109/MNET.65

- Samsung (2020). 6G, the next hyper connected experience for all (Technical Report).

- Sethuraman, S. C., Vijayakumar, V., & Walczak, S. (2020). Cyber attacks on healthcare devices using unmanned aerial vehicles. Journal of Medical Systems, 44(1), 1–10. https://doi.org/10.1007/s10916-019-1489-9

- Sun, N., Zhang, J., Rimba, P., Gao, S., Zhang, L. Y., & Xiang, Y. (2019). Data-driven cybersecurity incident prediction: A survey. IEEE Communications Surveys & Tutorials, 21(2), 1744–1772. https://doi.org/10.1109/COMST.2018.2885561

- Ting, D. S. W., Carin, L., Dzau, V., & Wong, T. Y. (2020). Digital technology and COVID-19. Nature Medicine, 26(4), 459–461. https://doi.org/10.1038/s41591-020-0824-5

- Topol, E. J. (2019). High-performance medicine: The convergence of human and artificial intelligence. Nature Medicine, 25(1), 44–56. https://doi.org/10.1038/s41591-018-0300-7

- Veronesi, G., Novellis, P., Voulaz, E., & Alloisio, M. (2016). Robot-assisted surgery for lung cancer: State of the art and perspectives. Lung Cancer (Amsterdam, Netherlands), 101(5), 28–34. https://doi.org/10.1016/j.lungcan.2016.09.004

- Villanueva, C., Xiong, J., & Rajput, S. (2020). Simulation-based surgical education in cardiothoracic training. ANZ Journal of Surgery, 90(6), 978–983. https://doi.org/10.1111/ans.v90.6

- Wang, M., Zhu, T., Zhang, T., Zhang, J., Yu, S., & Zhou, W. (2020). Security and privacy in 6G networks: New areas and new challenges. Digital Communications and Networks, 6(3), 281–291. https://doi.org/10.1016/j.dcan.2020.07.003

- Wu, F., Wang, J., Liu, J., & Wang, W. (2017). Vulnerability detection with deep learning. In Proceedings of the 3rd IEEE International Conference on Computer and Communications (ICCC) (pp. 1298–1302). Springer International Publishing.

- Xie, Y., Ji, L., Li, L., Guo, Z., & Baker, T. (2021). An adaptive defense mechanism to prevent advanced persistent threats. Connection Science, 33(2), 359–379. https://doi.org/10.1080/09540091.2020.1832960

- Yang, Y., Wu, L., Yin, G., Li, L., & Zhao, H. (2017). A survey on security and privacy issues in internet-of-things. IEEE Internet of Things Journal, 4(5), 1250–1258. https://doi.org/10.1109/JIOT.2017.2694844

- Zhang, J., Li, L., Lin, G., Fang, D., Tai, Y., & Huang, J. (2020). Cyber resilience in healthcare digital twin on lung cancer. IEEE Access, 8, 201900–201913. https://doi.org/10.1109/ACCESS.2020.3034324

- Zhang, J., Pan, L., Han, Q.-L., Chen, C., Wen, S., & Xiang, Y. (2021). Deep learning based attack detection for cyber-physical system cybersecurity: A survey. IEEE/CAA Journal of Automatica Sinica. https://doi.org/10.1109/JAS.2021.1004261