Abstract

Many software trustworthiness metric models are based on attribute decomposition. However, little attention has been paid to using more rigorous methods or to validating these models theoretically. Axiomatic methods formalise the empirical understanding of software attributes by defining ideal metric properties, and they can offer precise terms for quantifying those attributes. We have previously used them to assess software trustworthiness on the basis of attribute decomposition, presented four properties, and constructed a software trustworthiness measure (STMBDA for short). In this paper, we extend the set of properties: we introduce two new properties, namely non-negativity and proportionality, and refine substitutivity and expectability. We verify the theoretical rationality of STMBDA by demonstrating that it conforms to the new property set, and its empirical validity by evaluating the trustworthiness of 23 spacecraft software systems. The validation results show that STMBDA can effectively assess spacecraft software trustworthiness and identify weaknesses in the development process.

1. Introduction

Software is increasingly ubiquitous in our everyday lives and plays an indispensable role in the functioning of our society. A system failure caused directly or indirectly by software can have very severe consequences, resulting not only in monetary, time, or property losses but also in casualties (Wong et al., 2010, 2017). As a result, software trustworthiness has attracted widespread attention in recent years, and its measurement has become a hot topic among researchers (He et al., 2018; Maza & Megouas, 2021). Software trustworthiness can be represented through many attributes (Chen & Tao, 2019; Gupta et al., 2021; Steffen et al., 2006); in this paper, these attributes are referred to as trustworthy attributes. Trustworthy attributes are often at levels that cannot be measured directly, so they are further decomposed into sub-attributes. Many software trustworthiness metric models have been established on the basis of attribute decomposition. However, few studies have focused on more rigorous methods for measuring software trustworthiness or on theoretically validating these measures. Measuring the trustworthiness of software rigorously can assist with its assessment and improvement. Theoretical validation is a necessary activity for defining meaningful metric models and a required step before their empirical validation (Srinivasan & Devi, 2014). Theoretical validation methods can be divided into two categories (Srinivasan & Devi, 2014): those based on measurement theory (Briand, Emam, et al., 1996; Zuse & Bollmann-Sdorra, 1991) and those based on axiomatic approaches (Briand, Morasca, et al., 1996). Axiomatic approaches, which formally describe the empirical understanding of software attributes by defining desired metric properties, have been used to measure internal attributes such as size, inheritance and complexity (Meneely et al., 2012).

To make software trustworthiness measurement more rigorous, we previously used axiomatic approaches to assess software trustworthiness on the basis of attributes. We then extended this work to attribute decomposition: we gave the expected properties of software trustworthiness measurement from the viewpoint of attribute decomposition, including monotonicity, acceleration, sensitivity, substitutivity and expectability, and established a software trustworthiness measure (STMBDA) (Tao et al., 2015). In this paper, we complete the property set and introduce two new properties, namely non-negativity and proportionality. The reasons for introducing these two properties are as follows. The measurement result of software trustworthiness cannot be negative, and non-negativity describes this requirement. Software trustworthiness is the user's subjective recognition of the objective quality of software, so its quantification needs to truly reflect users' approval. Trusted software requires that no individual attribute (sub-attribute) value be too low, and proportionality characterises this situation. At the same time, substitutivity and expectability are improved. The improved substitutivity adds two constraints to better depict substitution. The first is that substitution between critical and non-critical attributes should be harder than both substitution between critical attributes and substitution between non-critical attributes. The second is that substitution between sub-attributes should be harder than that between attributes. The improved expectability adds user expectations related to sub-attributes. We verify STMBDA's theoretical rationality by proving that it satisfies the new property set, and its empirical validity by using it to measure the trustworthiness of 23 spacecraft software systems. Compared with some established software trustworthiness metric models, STMBDA evaluates software trustworthiness better.

The rest of the paper is organised as follows. We present the related works in Section 2. The expected measurement properties from the viewpoint of attribute decomposition are described in Section 3, including the properties presented in Tao et al. (2015), the newly introduced properties and the improved properties. STMBDA is introduced in Section 4, and we carry out its theoretical validation in the same section. We give the STMBDA-based measurement process in Section 5. We conduct an empirical validation of STMBDA on a real case in Section 6. The comparative study is presented in Section 7. We end the paper with the conclusion and future work in the last section.

2. Related works

Typical software trustworthiness metric models are based on uncertainty theory (Shi et al., 2008), evidence theory (Ding et al., 2012), machine learning (Devi et al., 2019; Lian & Tang, 2022; Medeiros et al., 2020; Xu et al., 2021), system testing (Muhammad et al., 2018), crowd wisdom (H. M. Wang, 2018), a social-to-software framework (Yang et al., 2018), a heuristic-systematic processing model (Gene & Tyler, 2018), user feedback (B. H. Wang et al., 2019), STRAM (Security, Trust, Resilience, and Agility Metrics) (Cho et al., 2019), a vulnerability loss speed index (Gul & Luo, 2019), input validation coding practices (Lemes et al., 2019), a trajectory matrix (Tian & Guo, 2020), trustworthy evidence of source code (Liu et al., 2021), axiomatic approaches (Tao & Chen, 2009, 2012; J. Wang et al., 2015), etc.

Next, we introduce some of the typical models mentioned above in more detail. Shi et al. (2008) first build a software dependability indicator system on the basis of existing research results and then give a software dependability metric method by combining AHP with the fuzzy synthesised evaluation method. Ding et al. (2012) develop a software trustworthiness evaluation model on the basis of evidential reasoning. In this method, they also introduce two discounting factor estimation approaches to measure the reliability of the evaluation result. Medeiros et al. (2020) use machine learning algorithms to obtain knowledge about vulnerabilities from software metrics extracted from the source code of some representative software projects. Devi et al. (2019) analyse the effects of class imbalance and class overlap in traditional learning models, and their results can be used to classify software trustworthiness assessment data sets that exhibit class imbalance. Xu et al. (2021) propose a QoS prediction model in which neural networks and matrix factorisation are combined to perform non-linear collaborative filtering on the latent feature vectors of users and services. Lian and Tang (2022) give an API recommendation method based on the neural graph collaborative filtering technique. Their experimental results show that this method performs better than the most advanced API recommendation methods. Falcone and Castelfranchi (2002) study the relationship between trust and control. Muhammad et al. (2018) give a software trustworthiness rating strategy, which uses the system test execution completion score to measure software trustworthiness. Yang et al. (2018) present a Social-to-Software software trustworthiness framework. This framework consists of a generalised index loss, the ability trustworthiness measurement solution, the basic standard trustworthiness measurement solution and the identity trustworthiness measurement solution. B. H. Wang et al. (2019) establish an updating model of software component trustworthiness. The component's trustworthy degree is calculated according to users' feedback, the updating weight is obtained from the number of users, and the final trustworthy degree of the system is computed by the Euler distance. Xie et al. (2022) present an approach that covers specifications of nominal behaviour and security, in which combined validation is used to verify the nominal behaviour of SysML models and Fault Tree Analysis is used for security analysis.

3. Properties of software trustworthiness measures based on attribute decomposition

Trustworthy attributes are divided into critical and non-critical attributes. Critical attributes are the trustworthy attributes that the software must have, and the others are called non-critical attributes (Tao & Chen, 2009). Using the same notations as Tao and Chen (2009), denote the values of critical attributes by , and the values of non-critical attributes by

. Assuming that there are n sub-attributes to form trustworthy attributes, set their values as

, respectively. Let

be attribute measure functions about

, and T be the software trustworthiness measure function of

. The expected measure properties from the viewpoint of attribute decomposition are as follows. Among them, monotonicity, acceleration, and sensitivity are properties that were proposed in Tao et al. (2015), non-negativity and proportionality are newly introduced properties, and substitutivity and expectability are improved properties.

(1) Non-negativity

.

Non-negativity implies that the evaluation result of software trustworthiness is non-negative.

(2) Proportionality

,

.

Proportionality refers to the assumption that there should be an appropriate proportionality between attributes (sub-attributes). For example, supposing that critical attributes of a certain type of software consist of resilience and survivability, high-confidence software of this type requires both good resilience and high survivability. Very good resilience and low survivability or very high survivability and poor resilience are not appropriate. There are similar reasons for the proportional suitability of sub-attributes.

(3) Monotonicity (Tao et al., 2015)

,

.

That is, the increased value of the attribute does not cause a decrease in the software's trustworthy degree, and the increase in the sub-attribute value does not result in a lower attribute value.

(4) Acceleration (Tao et al., 2015)

,

.

Acceleration is used to characterise the rate of change of an attribute (sub-attribute). When only one attribute is increased and the other attributes

remain unchanged, the efficiency of using attribute

will decrease. For the sub-attributes, there are similar explanations.

(5) Sensitivity (Tao et al., 2015)

,

,

where is the ith attribute's weight,

is the weight of kth sub-attribute that constitutes the ith attribute,

is a function of

and

,

is a function of

and

. Sensitivity represents the percentage change in software trustworthiness (attribute value) caused by a percentage change in the attribute value (sub-attribute value). These sensitivities should be non-negative and associated with the attributes (sub-attributes) and their weights. Furthermore, software trustworthiness should be more sensitive to the smallest critical attribute relative to its weight. The reason is that improving it can greatly increase software trustworthiness; therefore, a percentage change in its value results in a relatively larger percentage change in software trustworthiness.

(6) Substitutivity

,

where

(1)

are applied to indicate the difficulty of substituting between attributes, complying with

. The smaller

, the harder it is to replace the rth and tth attributes.

,

where

(2)

are applied to represent the difficulty of replacement between the sub-attributes that constitute the ith attribute. Similarly,

meets

, the smaller

, the harder it is to replace the kth and the lth sub-attribute.

Properties 1 and 2 indicate that attributes can substitute for each other to a certain extent, and so can sub-attributes.

,

,

,

.

,

.

Property 3 states that the substitutivity between critical and non-critical attributes should be not only harder than that between critical attributes but also more difficult than that between non-critical attributes. Property 4 means that the substitutivity between sub-attributes should be harder than that between attributes.

(7) Expectability

,

,

where and

are the user's minimum expected values for sub-attributes and attributes, respectively. Expectability implies that if all sub-attributes (attributes) meet the user's expectations, then the attribute trustworthiness (software trustworthiness) should also achieve the user's expectations and be less than or equal to the maximum value of all sub-attributes (attributes).
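Some of the properties above can be checked numerically for a candidate measure. The following is a minimal sketch in Python, not part of the original work: it tests non-negativity, monotonicity and expectability on a grid of attribute values, following only the prose descriptions given above. The function names, the grid, the weights and the stand-in measure (a weighted geometric mean, i.e. a product of power functions) are all assumptions made for illustration; the stand-in is not STMBDA.

```python
import math
from itertools import product


def weighted_geometric_mean(values, weights):
    """Hypothetical stand-in measure (product of power functions), not STMBDA."""
    return math.prod(v ** w for v, w in zip(values, weights))


def check_properties(measure, weights, grid, expected_min, step=0.5):
    """Numerically test three properties at every point of a value grid."""
    results = {"non_negativity": True, "monotonicity": True, "expectability": True}
    tol = 1e-12  # tolerance for floating-point rounding
    for point in product(grid, repeat=len(weights)):
        t = measure(point, weights)
        # Non-negativity: the measured value must never be negative.
        if t < 0:
            results["non_negativity"] = False
        # Monotonicity: raising any single attribute must not lower the result.
        for i in range(len(point)):
            bumped = list(point)
            bumped[i] += step
            if measure(bumped, weights) < t - tol:
                results["monotonicity"] = False
        # Expectability: if every attribute meets the expectation, the result
        # must meet it too and must not exceed the largest attribute value.
        if min(point) >= expected_min:
            if not (expected_min - tol <= t <= max(point) + tol):
                results["expectability"] = False
    return results


weights = [0.5, 0.3, 0.2]        # assumed weights summing to 1
grid = [1.0, 4.0, 7.0, 10.0]     # assumed attribute values to test
report = check_properties(weighted_geometric_mean, weights, grid, expected_min=7.0)
print(report)
```

A grid check of this kind can only refute a property by finding a counter-example; it does not replace the proofs in Section 4.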

4. A software trustworthiness measure based on the decomposition of attributes and its theoretical validation

In this section, we introduce the software trustworthiness measure based on attribute decomposition constructed in Tao et al. (2015) and theoretically validate it by demonstrating that it conforms to the properties described in Section 3.

Definition 4.1

Software trustworthiness measure based on the decomposition of attributes (STMBDA for short) (Tao et al., 2015)

STMBDA is defined as follows.

Where

m, s and n are the number of critical attributes, non-critical attributes and sub-attributes, respectively;

are the values of attributes;

T is the software trustworthiness measure function of

;

α and β represent the proportion of critical and non-critical attributes, such that

and

.

are the weight values of critical attributes, satisfying

and

;

express the weight values of the non-critical attributes with

and

;

are the weight values of sub-attributes making up the ith attribute with

and

, if the kth sub-attribute is not one of the sub-attributes forming the ith attribute, set

;

ϵ is utilised to control the effect of the smallest critical attribute on the software trustworthiness with

, among them,

is a value that all attributes must achieve and

is the i with

;

ρ is a parameter associated with the substitutivity between critical and non-critical attributes with

;

are parameters related to the substitutivity between the sub-attributes that make up the ith attribute satisfying

;

are the values of sub-attributes with

, among them,

is a value that all sub-attributes must reach.

For convenience, we denote the i with by

, the i with

by

, the i with

by

, the i with

by

, and let

The proof of Proposition 4.2(2) has been given in Claim 1 of Tao et al. (2015). Here we only give the proof of Proposition 4.2(1).

Proposition 4.2

.

T conforms to non-negativity and

.

Proof.

(1) Because

then

Substituting

in the above inequality, we can get

Raising to

power in the above inequality, it follows that

From the definition of STMBDA, we know that

then

Proposition 4.3

Proportionality holds for T.

Proof.

Since

then for

,

According to Proposition 4.2(1), we can obtain that

it follows that for

,

The proposition follows immediately from what we have proved.

Proposition 4.4

Monotonicity is satisfied by T (Tao et al., 2015).

Proposition 4.5

T complies with acceleration (Tao et al., 2015).

Proposition 4.6

Sensitivity holds for T (Tao et al., 2015).

The proofs of Propositions 4.4, 4.5 and 4.6 can be found in Tao et al. (2015).

Proposition 4.7

T meets substitutivity.

Proof.

By Equation (2), the substitutivity between the sub-attributes making up the ith attribute can be determined as follows:

(3)

Similarly, through Equation (1), for the substitutivity between critical attributes, it follows that

(4)

for the substitutivity between non-critical attributes, we can obtain

(5)

and the substitutivity between critical and non-critical attributes can be obtained as

Since for

,

and

then we have

therefore,

Observe that for

,

and

it follows

(6)

Similarly, for

,

,

Note that for

,

and

it follows

(7)

It can be seen from Equation (3) that the sub-attributes can be replaced with each other to a certain extent. According to Equations (4), (5), (6) and (7), attributes can be replaced by each other to a certain extent. By Equations (6) and (7), the substitutivity between critical and non-critical attributes is harder than both the substitutivity between critical attributes and that between non-critical attributes, and the substitutivity between sub-attributes is harder than that between attributes.

In summary, the proposition is proved.

Proposition 4.8

T satisfies expectability.

Proof.

By the definition of STMBDA, we can obtain that

According to Proposition 4.2(1), it follows that

Since

and from the proof process of Proposition 4.2(1) we know that

Then

Likewise, we can prove

Because of

and

it follows that

Due to

, we obtain that

So

Recall that we have proved that

then

Since

it follows that

and we can deduce that

From Propositions 4.2 to 4.8, the following theorem can be obtained.

Theorem 4.9

STMBDA conforms to all the seven properties introduced in Section 3.

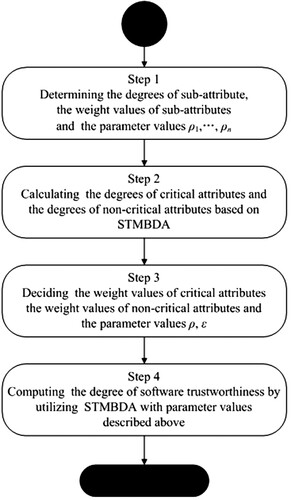

5. Measurement process based on STMBDA

The STMBDA-based measurement process is given in Figure 1. For a given software system, Step 1 determines the sub-attribute values , the sub-attribute weight values

and the parameter values

. Then the attribute values

are calculated by STMBDA in Step 2. Step 3 computes critical attribute weight values

, the non-critical attribute weight values

, and the parameter values ρ and ϵ. The above weight values can be calculated using the method proposed in Tao and Chen (2012). In Step 4, STMBDA is used to obtain the degree of software trustworthiness from the results of Steps 2 and 3.
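The four steps above form a two-level aggregation: sub-attribute values are first combined into attribute values, which are then combined into a trustworthy degree. The sketch below, in Python, illustrates only this data flow. The `aggregate` function is a placeholder (a weighted geometric mean) and is not the STMBDA formula; the split into critical and non-critical attributes and the parameters ρ and ϵ are deliberately omitted. All names and numbers are hypothetical.

```python
import math


def aggregate(values, weights):
    """Placeholder aggregation (weighted geometric mean), NOT the STMBDA formula."""
    return math.prod(v ** w for v, w in zip(values, weights))


def measure_software(sub_values, sub_weights, attr_weights):
    """Run the two aggregation levels of the process for one software system.

    sub_values:   {sub_attribute: value}                 (result of Step 1)
    sub_weights:  {attribute: {sub_attribute: weight}}   (result of Step 1)
    attr_weights: {attribute: weight}                    (result of Step 3)
    """
    # Step 2: compute each attribute value from its own sub-attributes.
    attr_values = {}
    for attr, weights in sub_weights.items():
        names = list(weights)
        attr_values[attr] = aggregate(
            [sub_values[n] for n in names], [weights[n] for n in names]
        )
    # Step 4: combine the attribute values into the trustworthy degree.
    names = list(attr_weights)
    degree = aggregate(
        [attr_values[n] for n in names], [attr_weights[n] for n in names]
    )
    return attr_values, degree


# Hypothetical inputs: three sub-attributes forming two attributes.
sub_values = {"s1": 9, "s2": 7, "s3": 10}
sub_weights = {"a1": {"s1": 0.5, "s2": 0.5}, "a2": {"s3": 1.0}}
attr_weights = {"a1": 0.6, "a2": 0.4}
attr_values, degree = measure_software(sub_values, sub_weights, attr_weights)
print(attr_values, degree)
```

Keeping the aggregation function separate from the pipeline means a different measure can be substituted without changing the step structure.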

6. Empirical validation

Empirical validation comprises case studies, surveys and experiments (Fenton & Bieman, 2015). In this section, an empirical validation of STMBDA is conducted on a real case. The trustworthiness of spacecraft software is one of the crucial factors in guaranteeing the success of a space mission. However, its current evaluation is only qualitative. To make the measurement of spacecraft software trustworthiness more rigorous, we assess it with STMBDA, that is, by using axiomatic approaches. The trustworthy attributes of spacecraft software comprise 9 attributes, subdivided into 28 sub-attributes (J. Wang et al., 2015). The attributes, sub-attributes and the corresponding weight values are described in Table 1 (J. Wang et al., 2015).

Table 1. Trustworthy attributes, sub-attributes of spacecraft software and their weight values.

Following the weight values of the nine attributes, the first four attributes are regarded as critical attributes and the last five attributes as non-critical attributes. The following parameter values for STMBDA are then derived from Table 1: m = 4, s = 5, n = 28, ,

, and

If the kth sub-attribute is not in the set of the sub-attributes constituting the ith attribute, then

, which are not given in this table.

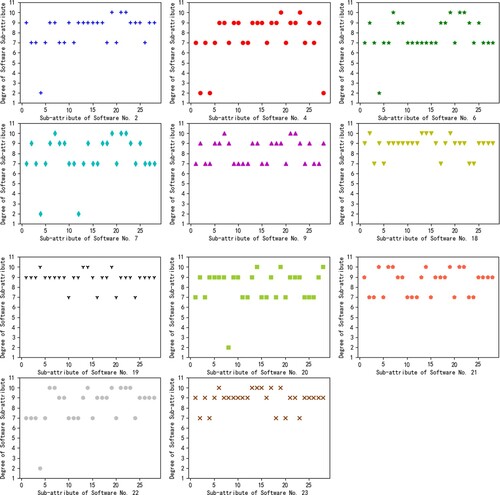

The trustworthiness of each sub-attribute is classified into four levels: A, B, C and D. To calculate the attribute values with STMBDA, the levels of the sub-attributes are converted to specific values: Level A is converted to 10, Level B to 9, Level C to 7, and Level D to 2. A panel of 10 experts was invited to classify the 28 sub-attributes of the 23 spacecraft software systems (J. Wang et al., 2015). Each expert assigns one of the four grades A, B, C and D by combining subjective and objective principles. For the specific classification standards, please refer to Section 7.2 of Chen and Tao (2019). We chose 11 representative software systems as subjects, which are numbered 2, 4, 6, 7, 9, 18, 19, 20, 21, 22 and 23. Figure 2 shows the distributions of the sub-attribute values of these 11 software systems. The horizontal axis of each subgraph of Figure 2 displays the sub-attribute number, and the vertical axis shows the sub-attribute value.
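The level-to-value conversion described above can be written as a simple lookup. This is a minimal sketch in Python; the function name and the example grades are hypothetical and are not data from the study. How the grades of the 10 experts are combined into one level is not specified here, so the sketch starts from a single level per sub-attribute.

```python
# Conversion stated in the text: A -> 10, B -> 9, C -> 7, D -> 2.
LEVEL_TO_VALUE = {"A": 10, "B": 9, "C": 7, "D": 2}


def levels_to_values(levels):
    """Convert a sequence of sub-attribute levels to numeric values."""
    try:
        return [LEVEL_TO_VALUE[level] for level in levels]
    except KeyError as err:
        raise ValueError(f"unknown trustworthiness level: {err.args[0]!r}") from None


example_levels = ["A", "C", "B", "D"]  # hypothetical grades, not data from the study
print(levels_to_values(example_levels))  # [10, 7, 9, 2]
```

The scale is deliberately non-uniform: the gap between C and D (5 points) is much larger than the gap between A and B (1 point), so a single Level D sub-attribute is penalised heavily.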

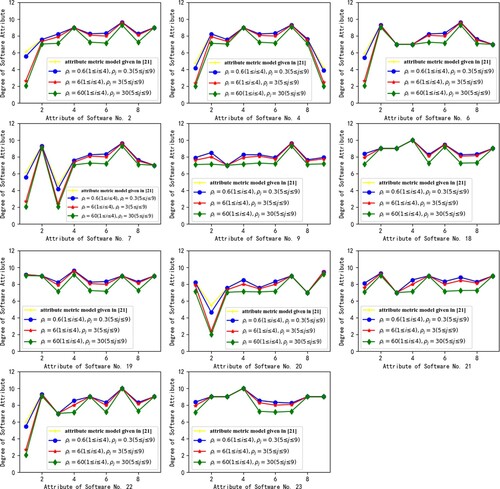

Given that the difficulty of the substitution between the sub-attributes constituting critical attributes should be greater than that between the sub-attributes making up non-critical attributes, let . For simplicity, set

, their specific values used in this case are given in Figure 3. The distributions of the attribute values of these 11 software systems computed through STMBDA are presented in Figure 3. In each subgraph of Figure 3, the vertical axis represents the attribute number and the horizontal axis displays the attribute value. For comparison with the model given in J. Wang et al. (2015) (referred to as PBSTE3), PBSTE3 is also used to measure the attribute trustworthiness of these 11 representative software systems. In PBSTE3, both the trustworthiness measurement model and the attribute measurement model take the form of a product of power functions. According to the sub-attribute weight values given in Table 1, the attribute value distributions calculated by the attribute measurement model in PBSTE3 are shown as the yellow line in Figure 3.

It can be seen from Figures 2 and 3 that the measurement results of attribute trustworthiness obtained by STMBDA reflect the actual development of spacecraft software. For instance, since such software is developed according to the GJB5000A standard, the software change control for these 11 software systems is generally good. Meanwhile, the weaknesses in the software development process can be easily identified. For example, these 11 software systems generally lack special testing. The reason is that the testing verification technology is not advanced because of the design of dynamic timing, space, data use, control behaviour, etc. Furthermore, the attribute metric model presented herein is more universal than the attribute metric model built in J. Wang et al. (2015). We can adjust software attribute trustworthiness through the parameters in STMBDA. If we have higher trustworthiness requirements for an attribute, then we raise the values of

, and vice versa. However, the distributions of sub-attribute values in Figure 2 demonstrate that some grading criteria are too high to be achieved and others are too low and are met too easily; thus the grading standards of sub-attribute trustworthiness need to be improved in future applications.

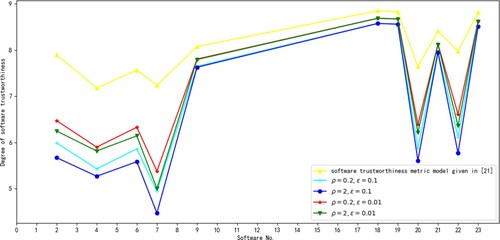

For the given parameter values

the distributions of the trustworthy degrees of these 11 software systems calculated through STMBDA are given in Figure 4. The yellow line in Figure 4 is obtained by the software trustworthiness metric model presented in J. Wang et al. (2015) according to the weight values presented in Table 1. As can be seen from Figure 4, STMBDA does not greatly raise the trustworthy degree of the software because of high values of individual sub-attributes, whereas sub-attributes with lower values reduce the trustworthy degree. That is, for the software to be trusted, each attribute must be trusted to a certain value. The software trustworthiness measurement model built in J. Wang et al. (2015) cannot reflect this situation well. In a similar way, we can adjust the software trustworthiness through the parameter ρ as required. If we have a higher trustworthiness requirement for the software, then we raise the value of ρ, and vice versa. At the same time, we can regulate the influence of the minimum critical attribute on software trustworthiness by modifying the parameter ϵ.
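The qualitative effect of raising a substitutivity parameter can be illustrated with a small numerical experiment. The sketch below, in Python, assumes a CES-style aggregate of the form (Σ w_k x_k^(-ρ))^(-1/ρ) with ρ > 0. This functional form is an assumption chosen for illustration and is not taken from the STMBDA definition, which is not reproduced here; the values and weights are hypothetical.

```python
def ces_aggregate(values, weights, rho):
    """CES-style aggregate (sum_k w_k * x_k**(-rho)) ** (-1/rho), for rho > 0.

    An assumed illustrative form, not the STMBDA formula.
    """
    if rho <= 0:
        raise ValueError("rho must be positive in this sketch")
    return sum(w * v ** (-rho) for v, w in zip(values, weights)) ** (-1.0 / rho)


values = [10, 9, 2]          # hypothetical values on the 2 to 10 scale
weights = [0.4, 0.4, 0.2]    # hypothetical weights summing to 1

# Larger rho makes substitution harder, so the low value dominates more.
for rho in (0.1, 1.0, 5.0):
    print(rho, round(ces_aggregate(values, weights, rho), 3))
```

Under this assumed form, the aggregate decreases towards the smallest value as ρ grows, so high values compensate less and less for a low one. This matches the behaviour described above, where a stricter trustworthiness requirement corresponds to a larger parameter value.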

This case demonstrates that STMBDA is suited to measuring spacecraft software trustworthiness: it can effectively assess the trustworthiness of such software and accurately discover weaknesses in the development process, which is very important for improving the development level of this type of software.

7. Comparative study

In this section, we compare STMBDA with PBSTE1 (Tao & Chen, 2009), PBSTE2 (Tao & Chen, 2012), PBSTE3 (J. Wang et al., 2015), the evidence theory-based software trustworthiness measure (ERBSTM) (Ding et al., 2012) and the fuzzy theory-based software trustworthiness measure (FTBSTM) (Shi et al., 2008) with respect to the properties described in Section 3. The comparison results are given in Table 2, where × indicates that the measure does not meet the corresponding property and √ indicates that the measure conforms to it.

Table 2. Comparative study by the properties introduced in Section 3.

It is easy to prove that all the metric models comply with non-negativity. PBSTE1, PBSTE2, PBSTE3, ERBSTM and FTBSTM do not take proportionality into account, so none of them meets proportionality. Ding et al. (2012) and Shi et al. (2008) demonstrate that ERBSTM and FTBSTM, respectively, satisfy expectability. J. Wang et al. (2015) show that PBSTE3 conforms to monotonicity, acceleration, sensitivity, substitutivity and expectability.

Next, we give a counter-example to show that neither PBSTE1 nor PBSTE2 satisfies expectability. For a given software system, suppose the number of its critical attributes m is 3 and the number of its non-critical attributes s is 2. The weight vector of critical attributes is , and that of non-critical attributes is

. Let

and

. Then the trustworthy degree of this software is 6.69 when calculated by PBSTE1 and 6.54 when computed by PBSTE2, both of which are less than the minimum value of all the trustworthy attributes. Thus, neither PBSTE1 nor PBSTE2 complies with expectability.

The rest of the comparison results can be obtained from the comparative study of Tao and Zhao (2018).

Finally, it can be concluded from Table 2 that STMBDA is superior to all five methods from the perspective of the properties introduced in Section 3.

8. Conclusion and future work

In this paper, we complete the set of expected properties of software trustworthiness measurement based on attribute decomposition, give two new properties, namely non-negativity and proportionality, improve substitutivity and expectability, and introduce STMBDA as given in Tao et al. (2015). We verify the theoretical rationality of STMBDA by showing that it complies with the new property set, and its empirical validity by measuring the trustworthiness of 23 spacecraft software systems. We also present a comparative study, which shows that STMBDA outperforms the other five models in terms of the new property set. It should be noted that we only give the expected property set from the perspective of experience, which is reasonable but not complete. In addition, in the empirical validation, we specify the values of the parameters ϵ and ρ and of the sub-attribute substitutivity parameters in advance and do not give specific algorithms for solving them.

Several issues are worthy of further investigation. Firstly, we believe that the software trustworthiness measure properties given in this paper are necessary but not sufficient, and we are interested in extending and perfecting this set of properties. Secondly, attribute decomposition-based software trustworthiness measures that do not meet the properties described in Section 3 cannot be taken as legitimate measures. However, metrics satisfying these properties should be used only as candidate metrics, and they still need to be checked further. We have applied STMBDA to spacecraft software trustworthiness measurement, and we will use it to measure the trustworthiness of other types of software in order to conduct a comprehensive empirical validation in the future. Thirdly, we do not give a way to calculate the values of the parameters ϵ and ρ and of the sub-attribute substitutivity parameters in STMBDA, and how to determine these values is also important future work.

Acknowledgments

A preliminary version of this work was presented at the 20th IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C) [Decomposition of Attributes Oriented Software Trustworthiness Measure Based on Axiomatic Approaches (Tao et al., 2020)].

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Briand, L. C., Emam, K. E., & Morasca, S. (1996). On the application of measurement theory in software engineering. Empirical Software Engineering, 1(1), 61–88. https://doi.org/10.1007/BF00125812

- Briand, L. C., Morasca, S., & Basili, V. R. (1996). Property-based software engineering measurement. IEEE Transactions on Software Engineering, 22(1), 68–86. https://doi.org/10.1109/32.481535

- Chen, Y. X., & Tao, H. W. (2019). Software trustworthiness measurement evaluation and enhancement specifications. Science Press.

- Cho, J. H., Xu, S. H., Hurley, P. M., Mackay, M., Benjamin, T., & Beaumont, M. (2019). STRAM: Measuring the trustworthiness of computer-based systems. ACM Computing Surveys, 51(6), 1–47. https://doi.org/10.1145/3277666

- Devi, D., Biswas, S. K., & Purkayastha, B. (2019). Learning in presence of class imbalance and class overlapping by using one-class SVM and undersampling technique. Connection Science, 31(2), 105–142. https://doi.org/10.1080/09540091.2018.1560394

- Ding, S., Yang, S. L., & Fu, C. (2012). A novel evidential reasoning based method for software trustworthiness evaluation under the uncertain and unreliable environment. Expert Systems with Applications, 39(3), 2700–2709. https://doi.org/10.1016/j.eswa.2011.08.127

- Falcone, R., & Castelfranchi, C. (2002). Issues of trust and control on agent autonomy. Connection Science, 14(4), 249–263. https://doi.org/10.1080/0954009021000068763

- Fenton, N., & Bieman, J. (2015). Software metrics: A rigorous and practical approach (3rd ed.). CRC Press.

- Gene, M. A., & Tyler, J. R. (2018, January 3–6). Trustworthiness perceptions of computer code: A heuristic-systematic processing model. Proceedings of the 51st Hawaii International Conference on System Sciences, Hilton Waikoloa Village, Hawaii (pp. 5384–5393).

- Gul, J., & Luo, P. (2019, August 5–8). A unified measurable software trustworthy model based on vulnerability loss speed index. Proceedings of the 18th IEEE International Conference on Trust, Security and Privacy in Computing and Communications/13th IEEE International Conference on Big Data Science and Engineering, Rotorua, New Zealand (pp. 18–25).

- Gupta, S., Arora, H. D., Naithani, A., & Chandra, A. (2021). Reliability assessment of the planning and perception software competencies of self-driving cars. International Journal of Performability Engineering, 17(9), 779–786. https://doi.org/10.23940/ijpe.21.09.p4.779786

- He, J. F., Shan, Z. G., Wang, J., Pu, G. G., Fang, Y. F., Liu, K., Zhao, R. Z., & Zhang, Z. T. (2018). Review of the achievements of major research plan of trustworthy software. Bulletin of National Natural Science Foundation of China, 32(3), 291–296. https://doi.org/10.16262/j.cnki.1000-8217.2018.03.009

- Lemes, C. I., Naessens, V., & Vieira, M. (2019, October 28–31). Trustworthiness assessment of web applications: Approach and experimental study using input validation coding practices. Proceedings of the IEEE 30th International Symposium on Software Reliability Engineering (ISSRE), Berlin, Germany (pp. 435–445).

- Lian, S. X., & Tang, M. D. (2022). API recommendation for Mashup creation based on neural graph collaborative filtering. Connection Science, 34(1), 124–138. https://doi.org/10.1080/09540091.2021.1974819

- Liu, H., Tao, H. W., & Chen, Y. X. (2021). An approach for trustworthy evidence of source code oriented aerospace software trustworthiness measurement. Aerospace Control and Application, 47(2), 32–41. https://doi.org/10.3969/j.issn.1674-1579

- Maza, S., & Megouas, O. (2021). Framework for trustworthiness in software development. International Journal of Performability Engineering, 17(2), 241–252. https://doi.org/10.23940/ijpe.21.02.p8.241252

- Medeiros, N., Ivaki, N., Costa, P., & Vieira, M. (2020). Vulnerable code detection using software metrics and machine learning. IEEE Access, 8, 219174–219198. https://doi.org/10.1109/Access.6287639

- Meneely, A., Smith, B., & Williams, L. (2012). Validating software metrics: A spectrum of philosophies. ACM Transactions on Software Engineering and Methodology, 21(4), 1–28. https://doi.org/10.1145/2377656.2377661

- Muhammad, D. M. S., Fairul, R. F., Loo, F. A., Nur, F. A., & Norzamzarini, B. (2018). Rating of software trustworthiness via scoring of system testing results. International Journal of Digital Enterprise Technology, 1(1/2), 121–134. https://doi.org/10.1504/IJDET.2018.092637

- Shi, H. L., Ma, J., & Zou, F. Y. (2008, August 29–September 2). Software dependability evaluation model based on fuzzy theory. Proceedings of the International Conference on Computer Science & Information Technology, Singapore (pp. 102–106).

- Srinivasan, K. P., & Devi, T. (2014). Software metrics validation methodologies in software engineering. International Journal of Software Engineering & Applications, 5(6), 87–102. https://doi.org/10.5121/ijsea

- Steffen, B., Wilhelm, H., Alexandra, P., Becker, S., Boskovic, M., Dhama, A., Hasselbring, W., Koziolek, H., Lipskoch, H., Meyer, R., Muhle, M., Paul, A., Ploski, J., Rohr, M., Swaminathan, M., Warns, T., & Winteler, D. (2006). Trustworthy software systems: A discussion of basic concepts and terminology. ACM SIGSOFT Software Engineering Notes, 31(6), 1–18. https://doi.org/10.1145/1218776.1218781

- Tao, H. W., & Chen, Y. X. (2009, September 15–18). A metric model for trustworthiness of softwares. Proceedings of the 2009 IEEE/WIC/ACM International Conference on Web Intelligence and International Conference on Intelligent Agent Technology, Milan, Italy (pp. 69–72).

- Tao, H. W., & Chen, Y. X. (2012). A new metric model for trustworthiness of softwares. Telecommunication Systems, 51(2–3), 95–105. https://doi.org/10.1007/s11235-011-9420-9

- Tao, H. W., Chen, Y. X., & Pang, J. M. (2015, May 18). A software trustworthiness measure based on the decompositions of trustworthy attributes and its validation. Proceedings of Industrial Engineering, Management Science and Applications, Tokyo, Japan (pp. 981–990).

- Tao, H. W., Chen, Y. X., & Wu, H. Y. (2020, December 11–12). Decomposition of attributes oriented software trustworthiness measure based on axiomatic approaches. Proceedings of the 20th IEEE International Conference on Software Quality, Reliability and Security Companion (QRS-C), Macau, China (pp. 308–315).

- Tao, H. W., & Zhao, J. (2018). Source codes oriented software trustworthiness measure based on validation. Mathematical Problems in Engineering, 2018(3), 1–10. https://doi.org/10.1155/2018/6982821

- Tian, J. F., & Guo, Y. H. (2020). Software trustworthiness evaluation model based on a behaviour trajectory matrix. Information and Software Technology, 119(1), 106233. https://doi.org/10.1016/j.infsof.2019.106233

- Wang, B. H., Chen, Y. X., Zhang, S., & Wu, H. Y. (2019). Updating model of software component trustworthiness based on users feedback. IEEE Access, 7, 60199–60205. https://doi.org/10.1109/Access.6287639

- Wang, H. M. (2018). Harnessing the crowd wisdom for software trustworthiness: Practices in China. Software Engineering Notes, 43(1), 6–11. https://doi.org/10.1145/3178315.3178328

- Wang, J., Chen, Y. X., Gu, B., Guo, X. Y., Wang, B. H., Jin, S. Y., Xu, J., & Zhang, J. Y. (2015). An approach to measuring and grading software trust for spacecraft software. Scientia Sinica Technologica, 45(2), 221–228. https://doi.org/10.1360/N092014-00479

- Wong, W. E., Debroy, V., Surampudi, A., Kim, H., & Siok, M. F. (2010, June 9–11). Recent catastrophic accidents: Investigating how software was responsible. Proceedings of the 4th IEEE International Conference on Secure Software Integration and Reliability Improvement (SSIRI), Singapore (pp. 14–22).

- Wong, W. E., Li, X. L., & Laplante, P. A. (2017). Be more familiar with our enemies and pave the way forward: A review of the roles bugs played in software failures. Journal of Systems and Software, 133(2/3), 68–94. https://doi.org/10.1016/j.jss.2017.06.069

- Xie, J., Tan, W. A., Yang, Z. B., Li, S. M., Xing, L. Q., & Huang, Z. Q. (2022). SysML-based compositional verification and safety analysis for safety-critical cyber-physical systems. Connection Science, 34(1), 911–941. https://doi.org/10.1080/09540091.2021.2017853

- Xu, J. L., Xiao, L. J., Li, Y. H., Huang, M. W., Zhuang, Z. C., Weng, T. H., & Liang, W. (2021). NFMF: Neural fusion matrix factorisation for QoS prediction in service selection. Connection Science, 33(3), 753–768. https://doi.org/10.1080/09540091.2021.1889975

- Yang, X., Jabeen, G., Luo, P., Zhu, X. L., & Liu, M. H. (2018). A unified measurement solution of software trustworthiness based on social-to-software framework. Journal of Computer Science and Technology, 33(3), 603–620. https://doi.org/10.1007/s11390-018-1843-2

- Zuse, H., & Bollmann-Sdorra, P. (1991, May 5). Measurement theory and software measures. Proceedings of the BCS-FACS Workshop on Formal Aspects of Measurement, South Bank University, London (pp. 219–259).