?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

The search space of large-scale multi-objective optimization problems (LSMOPs) is huge because of the hundreds or even thousands of decision variables involved. It is very challenging to design efficient algorithms for LSMOPs to search the whole space effectively and balance the convergence and diversity at the same time. In this paper, to tackle this challenge, we develop a new algorithm based on a weighted optimization framework with two effective strategies. The weighted optimization framework transforms an LSMOP into multiple small-scale multi-objective optimization problems based on a problem transformation mechanism to reduce the dimensionality of the search space effectively. To further improve its effectiveness, we firstly propose a centerline symmetry strategy to select reference solutions to transform the LSMOPs. It takes not only some non-dominated solutions but also their centerline symmetric points as the reference solutions, which can enhance the population diversity to avoid the algorithm falling into local minima. Then, a new double-line transformation function is designed to expand the search range of the transformed problem to further improve the convergence and diversity. With the two strategies, more widely distributed potential search areas are provided and the optimal solutions can be found easier. To demonstrate the effectiveness of our proposed algorithm, numerical experiments on widely used benchmarks are executed and the statistical results show that our proposed algorithm is more competitive and performs better than the other state-of-the-art algorithms for solving LSMOPs.

1. Introduction

Many real-world applications can be formulated as multi-objective optimisation problems (MOPs), such as route resource allocation (Gong et al., Citation2012; Y. Zhang et al., Citation2017), scheduling (Yuan & Xu, Citation2015), image processing (Gong & Zhou, Citation2018), cyber-physical social systems (Wang et al., Citation2020), and so on. Evolutionary algorithms, such as genetic algorithm (GA) (Zhao et al., Citation2020), particle swarm optimisation (PSO) (Nagra et al., Citation2020; Too et al., Citation2022), and differential evolution (DE) (Li et al., Citation2022; Zhao et al., Citation2021), have the characteristics of self-organisation and self-learning. Therefore, they are widely used in the field of optimisation. In the past few decades, many multi-objective optimisation evolutionary algorithms (MOEAs) have been developed for solving MOPs. They can be classified into three categories: Pareto dominance-based MOEAs (Deb et al., Citation2002; J. Liu, Wang, Wang, et al., Citation2019; Maltese et al., Citation2018; Sun et al., Citation2020; Xue & Wang, Citation2017), decomposition-based MOEAs (Dai & Wang, Citation2015a, Citation2015b; Dai et al., Citation2015; J. Liu, Wang, Fan, et al., Citation2019; E. Song & Li, Citation2022), and indicator-based MOEAs (Bader & Zitzler, Citation2011; Beume et al., Citation2007; Bringmann & Friedrich, Citation2010; Russo & Francisco, Citation2014; While et al., Citation2012). These MOEAs are very effective for small and medium scale MOPs.

However, with the development of technology, more and more decision variables are involved in the multi-objective optimisation models established for real-world engineering problems. For example, flight safety systems optimisation (Everson & Fieldsend, Citation2006) has more than 1500 decision variables, EEG time series data optimisation (Goh et al., Citation2015, may 25–28) involves more than 4800 decision variables, and the number of decision variables increases exponentially with the increase of layers in the deep neural network architecture optimisation problem (Tian et al., Citation2021). These MOPs with more than 100 decision variables are called LSMOPs. As the number of decision variables increases, the search space of MOPs will increase exponentially. The existing MOEAs perform not well for LSMOPs because of the huge search space. Therefore, many scholars have been attracted to study LSMOPs in recent years and numerous related algorithms have been developed, which can be classified into three categories.

The algorithms in the first category apply the divide-and-conquer technique to solve LSMOPs (Antonio & Coello, Citation2013, Citation2016; Cao et al., Citation2020, Citation2017; H. Chen et al., Citation2020; Ma et al., Citation2016; A. Song et al., Citation2016; X. Zhang et al., Citation2018). For example, Antonio et al. divide the decision variables into several equal-length subcomponents using the existing random grouping method. Each subproblem evolves collaboratively with other subproblems based on a differential evolution algorithm to optimise the LSMOPs (Antonio & Coello, Citation2013). Song et al. propose a dynamic random grouping method, which determines the number of groups dynamically according to the quality of the obtained solutions in each cycle (A. Song et al., Citation2016). After grouping, a decomposition-based MOEA, i.e. MOEA/D (Q. Zhang & Li, Citation2007), is used to optimise each subgroup. Because of the conflicts among the objectives, the existing grouping methods proposed for large-scale single-objective optimisation problems cannot be well applied in LSMOPs. Some decision variable analysis techniques and grouping strategies are proposed to group the decision variables for LSMOPs. Ma et al. propose an MOEA based on decision variables analysis (MOEA/DVA). In MOEA/DVA, the decision variables are classified into three types, i.e. convergence-related, diversity-related and mixed variables, according to their contributions to the convergence and diversity of the algorithm. Then the existing large-scale global optimisation grouping method is used to further divide the convergence-related variables into subgroups and the small-scale sub-MOPs are optimised collaboratively (Ma et al., Citation2016). To improve the grouping accuracy, Cao et al. apply the recursive differential grouping method to MOEA/DVA to adjust the misclassified variables in the three types of groups (Cao et al., Citation2017). Based on the main idea of MOEA/DVA, Zhang et al. group the decision variables into convergence-related and diversity-related variables instead of the three types by using the k-means clustering strategy to reduce the dimensionality of the LSMOPs (X. Zhang et al., Citation2018). However, these decision interaction analysis methods will consume a large number of computation resources, leading to low computational efficiency.

The algorithms in the second category propose some effective strategies to accelerate the search for LSMOPs (Gu & Wang, Citation2020; He et al., Citation2022; Hong et al., Citation2019; Tian et al., Citation2020; Yi et al., Citation2018, Citation2020; Y. Zhang et al., Citation2020). The evolutionary algorithm based on a direction-guided adaptive offspring generation (DGEA) (He et al., Citation2022) proposes an adaptive reproduction mechanism to guide the population evolution, thereby producing promising offspring based on the selected parents with strong convergence and diversity. Hong et al. present a diversity enhancement strategy to guide the population towards different regions of the Pareto frontier, to avoid the algorithm falling into local minima (Hong et al., Citation2019). The large-scale multi-objective competitive swarm optimiser algorithm (LMOCSO) (Tian et al., Citation2020) designs a new two-stage particle updating mechanism to update the position of each particle to solve LSMOPs with complicated landscapes. The updated particles are determined by the competitive mechanism of the competitive swarm optimiser (Tian et al., Citation2020). However, since these algorithms do not apply any dimensionality reduction strategy, the scale of the problem is still large and it requires a large number of function evaluations for the algorithms to search the whole decision variable space.

The algorithms belonging to the third category are based on non-grouping dimensionality reduction strategy, e.g. problem transformation technology and fuzzy technology (He et al., Citation2019; R. Liu et al., Citation2020; Qin et al., Citation2021; Tang et al., Citation2020; Zille et al., Citation2018). For example, A large-scale multi-objective optimisation framework via problem reformulation called LSMOF is proposed to reduce the dimension of the decision variables. It first presents a bi-direction weight variable association mechanism that associates two weight vectors to one selected solution to specify the search directions to accelerate the population evolution. Then by using a performance indicator, it reformulates an LSMOP into a small-scale SOP (He et al., Citation2019). By doing this, the search space is narrowed down from the whole space to several straight lines, which accelerates the convergence of the algorithm for solving LSMOPs. However, its performance is easily affected by the selection of reference points. Qin et al. design a directed sampling strategy based on LSMOF, which samples solutions based on the uniformly distributed search directions to reformulate the original problem to improve the population diversity (Qin et al., Citation2021). However, the sharp reduction of the search space still affects the population diversity of this kind of algorithm to a certain extent. Zille et al. propose a weighted optimisation framework (WOF) to transform the LSMOP into several small-scale MOPs. It first divides the n-dimensional decision variables into g (g<n) subgroups by adopting the existing grouping methods, e.g. random grouping (Omidvar et al., Citation2010), ordered grouping (W. Chen et al., Citation2010), and differential grouping (Omidvar et al., Citation2014). The variables in one subgroup are appointed with the same weight variable. By using a transformation function, an original n-dimensional LSMOP can be converted into a g-dimensional MOP with g weight vectors as decision variables, thereby reducing the dimensionality of the original LSMOP. Yang et al. present a fuzzy decision variables framework for LSMOPs to reduce the decision variable search space. It consists of two stages: fuzzy evolution and precise evolution (Yang et al., Citation2021). In the fuzzy evolution, it blurs the decision variables to reduce the search space to accelerate the convergence of the algorithm. Then the existing MOEAs are used to optimise the original problem to maintain the population diversity in the precise evolution stage. The algorithms in this category are more effective than those of the other two categories. Among the algorithms in this category, WOF is a very effective algorithm. However, its effectiveness is also affected by the selection of reference points. And the form of the transformation function also has a great impact on the search range of the transformed problem, thus affecting the performance of WOF. It is necessary for us to study effective strategies to enhance the performance of WOF to improve the ability to solve LSMOPs.

Based on the analysis of the related works, we can know that the research on large-scale multi-objective optimisation algorithms (LSMOAs) is still in the early stage. In this paper, to alleviate the limitations of WOF and improve the accuracy of solving LSMOPs, we present a new algorithm with two effective strategies based on WOF. The main innovations are summarised as follows:

A new centreline symmetry strategy for selecting reference solutions is presented to improve the diversity of WOF.

A new transformation function is designed to expand the search range for the selected reference solutions.

A new algorithm with the above two strategies is proposed and numerical experiments are carried out on widely used LSMOP benchmarks to verify its performance.

2. Preliminary and motivation

The purpose of LSMOAs based on problem transformation is to achieve dimensionality reduction. Among them, WOF is a very effective algorithm. Because our algorithm is designed based on WOF, in this section, we elaborate on the main idea of WOF and then analyse its limitations. Subsequently, the motivation of this paper is presented.

2.1. The main idea of WOF

There are two stages in WOF. In the first stage, the original large-scale problem is transformed into several low-dimensional MOPs to reduce the search space of the decision variable space. In the second stage, one of the existing MOEAs is used to optimise the original problem

. Specifically, in the first stage, suppose

is an optimal solution of the n-dimensional problem

. For any solution

and a linear transformation function

, we can find a real number optimal vector

such that

. Then, for an arbitrary but fixed solution

, we can optimise

instead of optimising

. To reduce the dimensionality of the original problem, WOF decomposes n decision variables into g groups, where

. Then assign the same weight

to the variables in the same group

, where

, i.e.

(1)

(1) where l denotes the size of each group. Then, by taking a fixed

, the problem

of optimising n-dimensional

is transformed into the problem

of optimising g-dimensional

, thereby reducing the dimensionality of the LSMOPs. In WOF, to keep the population diversity, m solutions in the first non-dominated front are selected as the reference solutions according to the crowding distance to execute the transformation, and then m transformed low-dimensional MOPs are optimised. Finally, m optimal weight vectors

are used to update the individuals of the original problem according to the transformation function.

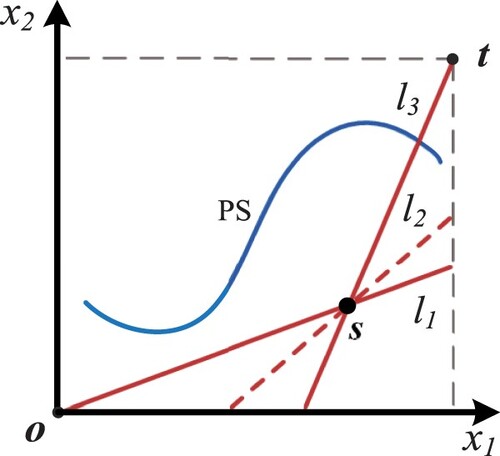

Geometrically speaking, the result of the above problem transformation is to narrow the whole search space to m straight lines. In Figure (a), the blue curve PS denotes the Pareto optimal set (PS) and is a selected reference solution.

and

denote the origin and the upper boundary point, respectively. After using transformation functions, e.g. the linear transformation function, the search range is reduced from the whole 2D space to one straight line. Searching along the straight line can obtain the optimal solution or the sub-optimal solution more quickly than searching on the entire two-dimensional space.

Figure 1. Illustration examples of WOF. (a) The search space transformation and (b) The effect of reference solution selection on the search range.

Based on the principle of the problem transformation method, we can know that the search for the transformed problem focuses on some potential areas which may result in faster convergence. However, due to the drastic reduction of the search space, the population diversity will deteriorate. Therefore, in the second stage of WOF, to make up for the diversity loss caused by optimising the transformed problem, the original problem

is optimised by the existing MOEAs. Since optimising the original problem

in the whole search space may obtain all possible solutions in the whole space, which may improve the population diversity. For details of the algorithm, please refer to WOF (Zille et al., Citation2018).

2.2. Motivation

According to the principle of WOF, we can know that factors affecting the search range are the selection of the grouping method, reference solutions, and the transformation function. Since the correlation between decision variables of LSMOPs is complex, there is still no effective large-scale multi-objective grouping method to group the decision variables of LSMOPs reasonably. At present, the commonly used grouping methods of WOF are the ones proposed for large-scale global optimisation problems, e.g. random grouping, linear grouping, ordered grouping, and differential grouping. The influence of the four grouping methods on WOF has been investigated in the original paper and the results show that WOF using the ordered grouping method is more competitive. Therefore, in our proposed algorithm, the ordered grouping method is still used to group the decision variables. In this paper, we focus on the influence of the latter two factors, i.e. the selection of reference solutions and the transformation function.

As shown in Figure (b), if the selected reference solutions are located in a small area of the search space, the transformed search range may be concentrated in a certain area as shown by the six straight lines. The search range is far away from PS, which may cause the algorithm to fail to find the optimal solutions. Thus, how to select appropriate reference solutions to expand the search coverage of the algorithm and improve the population diversity is an urgent problem to enhance the performance of WOF.

Additionally, the transformation function can determine the search directions for the selected reference solutions. As shown in Figure , the linear transformation function transforms the search space into one straight line based on one reference solution. If the LSMOP is complicated and the optimal solutions or the sub-optimal solutions do not locate near the line, the efficiency of WOF will be affected. Thus, it is an effective way to improve the performance of the WOF in solving LSMOPs by designing a new transformation function to add potential search directions.

3. The proposed algorithm

In this section, we propose a new weighted optimisation algorithm with a centerline symmetry strategy for selecting reference solutions and a double-line transformation function, referred to as CSDT, for solving LSMOPs. The main framework is shown in Algorithm 1. CSDT begins with the population initialisation and is followed by two stages. In the first stage, according to our proposed selection mechanism in Section 3.1, m solutions with relatively wide distribution are selected as reference solutions for the problem transformation. Subsequently, one of the existing grouping methods is used to group the decision variables into g subgroups. For each reference solution, the original problem is transformed into a g-dimensional problem

based on the transformation function designed in Section 3.2. Then, the transformed problem

is optimised by the existing MOEAs to obtain promising weight vectors. These weight vectors are utilised to reproduce new promising offspring based on the original population by applying the transformation function. Optimizing the transformed problem can reduce the search space of the algorithm and can concentrate the search in the potential search areas, thereby significantly accelerating the population convergence. Subsequently, the existing MOEAs are utilised to optimise the problem

to obtain solutions with good diversity in the second stage.

In the following, we will introduce the details of the proposed selection strategy and transformation function.

3.1. A new reference solutions selection strategy

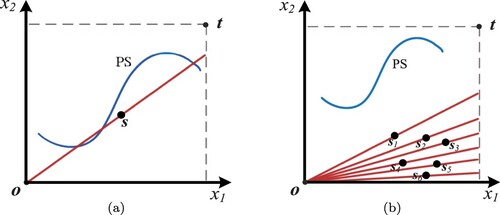

In this section, we design a strategy to select widely distributed solutions to transform the original problem to get some widely distributed search lines. For the convenience of description, we define the diagonal line connecting the upper and lower boundary points of the decision variable space as the centreline. The details are as follows.

Firstly, select m/2 best solutions by using the existing environmental selection method, e.g. NSGA-II, where m is the number of the reference solutions to be selected. Generate m/2 centreline symmetry points based on the m/2 best solutions selected before. The method of generating centreline symmetry points is described in the following.

As shown in Figure (a), suppose that is one of the selected best solutions,

is its projection point on the centreline. For the convenience, suppose

and the origin

are the upper and lower boundary points of the decision variable space in this paper, respectively. Since points

,

and

are on the same straight line, we can get

, where

is a real number between [0,1]. Once we get the value of k, the coordinates of

can be obtained. Since

, where θ is the angle between the vector

and

, and also the angle between

and

, we can get

. Then, the coordinates of

can be gotten by

. Based on the obtained

, the symmetry point of

can be generated by

.

Figure 2. Illustrations of the reference solution generation mechanism. (a) Centerline symmetry point generation for one solution and (b) Centerline symmetry point generation for multiple solutions.

According to the above method, m/2 centreline symmetry points can be generated. These points and the m/2 best solutions selected previously are taken as reference solutions. After transformation, the search space of the transformed problem can be expanded to widely distributed straight lines.

Take a 2D space in Figure (b) as an example. Suppose that m is 6. are the reference solutions selected by NSGA-II. Three straight lines passing through

are the search range of the transformed problem using the linear transformation function, which is far away from the PS. According to the reference solutions generation method, we can obtain the centreline symmetry points of

, i.e.

. Then,

and

are taken as reference solutions to transform the original problem. From Figure (b), we can see that after the transformation, the search range of the transformed problem is increased by three straight lines passing through

. The six lines are relatively widely distributed in the decision variable space. Searching along these lines, the algorithm can find the solutions of PS easier.

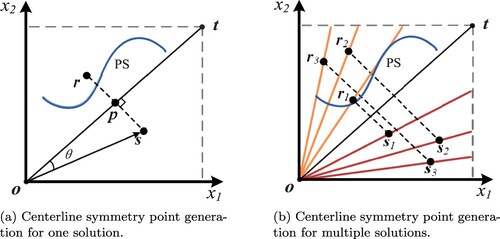

3.2. A new transformation function

In WOF, three transformation functions are proposed to transform the original problem. Specifically, the forms of the product transformation and the interval-intersection transformation

are basically the same. They narrow the whole search subspace to a straight line connecting the origin

and the corresponding selected reference solution, which is denoted by

in Figure . The p-value transformation

narrows the search space to the straight line passing through the corresponding selected reference solution and parallel to the centreline as displayed by

in Figure .

Here, we design a double-line transformation function to increase the search range and search directions for the selected reference solutions. The new transformation function is as follows.

(2)

(2) where

is the value of ith dimension of

, and

is the weight vector assigned to the jth group of decision variables that

belongs to.

Based on the designed transformation function, the search range of the original problem can be narrowed down to two straight lines, i.e. and

as shown in Figure . If

, the algorithm searches along the line

, otherwise it searches along the line

. From Figure , we can see that the search range is expanded to more promising areas, which may make it easier to find promising solutions quickly. This can further improve the efficiency of the existing MOEAs to solve LSMOPs.

4. Experiments and results

In this section, we conduct the numerical experiments on widely used multi-objective benchmarks UF1-UF10 (Q. Zhang et al., Citation2008), WFG1-WFG9 (Huband et al., Citation2006), and LSMOP1-LSMOP9 (Cheng et al., Citation2017) to empirically demonstrate the effectiveness of our CSDT. And the comparison with MOEA/DVA (Ma et al., Citation2016), LSMOF (He et al., Citation2019), DGEA (He et al., Citation2022), and WOF (Zille et al., Citation2018) is made. All the numerical experiments are carried out on PlatEMO (Tian et al., Citation2017) of Matlab 2015b on a computer with Intel(R) Xeon(R) CPU E5-2630 v4 @2.20GHz and 128G RAM. IGD (Zitzler et al., Citation2003) is used to comprehensively access the convergence and diversity of the obtained non-dominated solutions set. The smaller the IGD value is, the better the algorithm performs.

4.1. Parameter settings

For each test problem, the number of the objectives is set to 2 and 3, respectively. The dimension of the decision variables is set to 200, 500, and 1000, respectively. Since our proposed algorithm is an improved version of WOF and the main framework of WOF is not changed, the values of the same parameters in CSDT and WOF are consistent with those set in WOF (Zille et al., Citation2018). For example, the number of reference solutions m is set to 10. The ratio of function evaluations spent in the first stage δ is 0.5, which means that we allocate 50 percent of the total function evaluations to each stage. The ordered grouping method is used in these two algorithms and the number of groups g is 4. In WOF, the p-value transformation function is applied to transform the original problems since it is the best one among the three proposed functions. The optimisation algorithm used in each stage is particle swarm optimisation in CSDT and the original WOF. As for the other three compared algorithms, i.e. MOEA/DVA, DGEA, and LSMOF, the parameters are set to their default values. To ensure the fairness of the comparison experiments, the population size is set to 100 for all the compared algorithms. To ensure the convergence of the compared algorithms, we set the maximum number of function evaluations (FEs) to 500,000 for all algorithms.

On all instances, every algorithm runs independently 20 times. The Wilcoxon rank-sum test with a significance level of 0.05 (Carrasco et al., Citation2020) is applied to display the statistical results of compared algorithms. In the statistical results, “+”, “=”, and “−” mean that the compared algorithm is statistically better than, comparable to, and worse than the proposed algorithm.

4.2. Investigation of effectiveness of the two strategies

To investigate the effectiveness of our proposed strategies, we conducted numerical experiments on UF and WFG benchmarks. In the experiments, WOF-CS is the algorithm WOF with the reference solution selection strategy embedded, and WOF-DT is the algorithm WOF with the new transformation function embedded. We compare the performance of WOF-CS, WOF-DT, and the proposed algorithm CSDT with that of the original algorithm WOF on UF and WFG benchmarks. The IGD results are shown in Tables and .

Table 1. IGD values of WOF with different strategies on UF benchmarks, where the best results on each test instance is highlighted.

Table 2. IGD values of WOF with different strategies on WFG benchmarks, where the best results on each test instance is highlighted.

Firstly, comparing the results of WOF-CS and WOF in Tables and , we can see that the results obtained by WOF-CS are statistically better than those obtained by WOF on the two benchmark suites. Specifically, for UF benchmarks, WOF-CS wins on 11 out of 30 instances and ties on the remaining instances. It performs significantly better than WOF mainly on UF1, UF2, UF5, UF8, and UF9 instances. For WFG benchmarks, WOF-CS wins on 25/54 instances and loses only on 1/54 instances on WFG benchmarks. The better results obtained by WOF-CS mainly focus on all instances of WFG2, WFG3, WFG4, and WFG7, and bi-objective instances of WFG1, WFG6, WFG8, and WFG9. This indicates that the proposed reference solution strategy is effective. Select widely distributed solutions to transform the original problem can provide widely distributed search lines for the algorithm to search. Along these lines, the algorithm can obtain solutions with better diversity, which may help WOF jump out the local minima and find better optimal solutions while ensuring the acceleration for population convergence.

Secondly, comparing the results of WOF-DT and WOF, we can see that the performance of WOF-DT is significantly better than that of WOF on UF and WFG benchmarks. To be specific, for UF benchmarks, WOF-DT performs statistically better than WOF on 19 instances. It obtains better results than WOF on all UF benchmarks except UF5 and 200-D UF7. For WFG benchmarks, WOF-CS performs statistically better than WOF on 32 out of 54 instances and worse than WOF only on 1 instance. The better results obtained by WOF-DT mainly focus on all instances of WFG1, WFG2, WFG3, WFG4, WFG6, WFG7, and WFG9, and bi-objective instances of WFG5 and WFG8, and tri-objective WFG1. This demonstrates that our designed transformation function is more competitive. As described in Section 3.2, our proposed transformation function can narrow down the search space into two straight lines based on one reference solution and provide more potential search areas and search directions than the existing ones. It can help the algorithms find more promising solutions quickly, thereby improving the efficiency of solving LSMOPs.

Furthermore, the performance of CSDT that combines the two proposed strategies on WOF is also investigated. From Tables and , CSDT outperforms WOF with 22 wins, 1 lose, and 7 ties on UF benchmarks, and performs statistically better than WOF with 32 wins, 1 lose, and 21 ties on WFG benchmarks. This demonstrates that our proposed algorithm is more efficient than the original WOF for solving LSMOPs. Moreover, the best results obtained by CSDT are more than those obtained by the algorithms embedded with one single strategy. Specifically, WOF-CS obtains 5 best results, WOF-DT obtains 6 best results, and CSDT finds 17 best results on 30 UF benchmarks. And on 54 WFG instances, WOF-CS gets 14 best results, WOF-DT gets 13 best results, and CSDT obtains 24 best results. This proves that CSDT gives full play to the advantages of the two strategies and greatly improves the ability of WOF to solve LSMOPs.

In summary, the above comparative analysis demonstrates that the two strategies we proposed are effective and can significantly improve the performance of WOF in solving LSMOPs.

4.3. Comparisons with state-of-the-arts

To demonstrate the performance of CSDT, we compare CSDT with three state-of-the-art LSMOAs, i.e. MOEA/DVA, LSMOF, and DGEA, on UF benchmarks, WFG benchmarks, and LSMOP benchmarks, respectively. The statistical IGD results achieved by the four compared algorithms are shown in Tables to .

Table 3. IGD values of the four compared algorithms on UF benchmarks, where the best results on each test instance is highlighted.

Table 4. IGD values of the four compared algorithms on WFG benchmarks, where the best results on each test instance is highlighted.

Table 5. IGD values of the four compared algorithms on LSMOP benchmarks, where the best results on each test instance is highlighted.

From Table , we can find that our proposed CSDT obtains 18 best results, MOEA/DVA obtains 6 best results, and LSMOF obtains 6 best results on 30 UF instances. Specifically, CSDT outperforms MOEA/DVA with 20 wins, 8 loses, and 2 ties. It performs significantly better than LSMOF on all instances except UF5 and UF10. And on all instances, the performance of CSDT is statistically better than that of DGEA.

On 54 WFG instances, it can be seen from Table that our proposed CSDT obtains 45 best results and LSMOF obtains 13 best results. To be specific, CSDT outperforms MOEA/DVA and DGEA on all WFG test instances. CSDT performs statistically better than LSMOF on 37 instances and performs worse on 9 instances. It performs very well on almost all test instances of WFG2, WFG3, WFG7, and WFG8. On bi-objective WFG6, tri-objective WFG5, and most instances of WFG1, CSDT also has a good performance.

From Table , we can see that among the four compared algorithms, CSDT obtains 37 best results, MOEA/DVA obtains 6 best results, LSMOF obtains 6 best results, and DGEA obtains 5 best results on 54 LSMOP instances. Specifically, CSDT outperforms MOEA/DVA with 47 wins, 5 loses, and 2 ties. It performs significantly better than LSMOF on 41 instances and performs worse on 5 instances. Compared to LSMOF, it finds better solutions for all the instances of LSMOP1, LSMOP2, LSMOP4, LSMOP5, LSMOP8, and bi-objective LSMOP6. As for DGEA, CSDT outperforms it with 42 wins, 7 loses, and 5 ties.

Overall, CSDT is the best one among the four compared algorithms for solving LSMOPs. The poor performance of MOEA/DVA may be due to the numerous FEs consumption on decision variable correlation analysis which is not required in CSDT. The sub-problems obtained by grouping variables cannot be fully optimised, thus failing to find better solutions. DGEA needs to search the entire decision variable space of LSMOPs in the optimisation process, which makes it not easy to find optimal solutions quickly. Whereas in CSDT, we only need to search along several potential straight lines in the first stage, which significantly accelerates the population convergence. Therefore, the optimisation efficiency of CSDT is much higher than that of DGEA. As for LSMOF, it narrows the entire search space into several straight lines, which accelerates the convergence of the algorithm significantly. However, the search space is too small compared to the whole decision search space, which may lose the population diversity and make the algorithm fall into local minima. CSDT pays attention to ensuring the diversity of the population when reducing the search space. It searches in more areas with convergence and diversity potential, which can enhance the diversity of the obtained offspring while ensuring their convergence, thereby finding better optimal solutions than LSMOF.

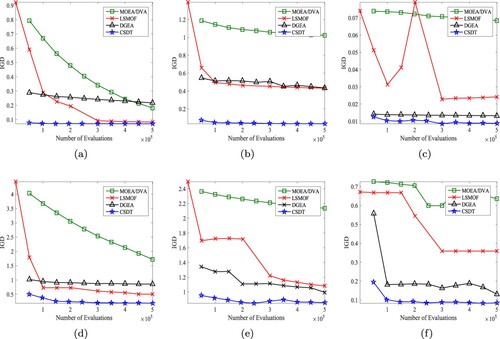

To more intuitively illustrate the effectiveness of CSDT, we give the variations of IGD curves got by MOEA/DVA, LSMOF, DGEA, and CSDT on 500-dimensional bi-objective UF2, WFG2, LSMOP2, and 1000-dimensional tri-objective UF8, WFG8, LSMOP8 in Figure . In Figure , the horizontal axis represents the number of FEs and the vertical axis denotes the IGD values obtained by the algorithms. These curves can reflect the search performance of the compared algorithms with the evolution. As can be observed, the convergence curve of CSDT can quickly reach smaller IGD values than the other three compared algorithms on these six instances. In the first stage of CSDT, i.e. before 250,000 FEs, the curves converging quickly to a small value without stagnation indicates that our proposed strategies can provide more potential search range to avoid the algorithm falling into the local optimal solutions and accelerate the convergence of the algorithm.

Figure 4. The variations of IGD values obtained by the four compared algorithms on UF2, UF8, WFG2, WFG8, LSMOP2, and LSMOP8. (a) UF2. (b) WFG2. (c) LSMOP2. (d) UF8. (e) WFG8 and (f) LSMOP8.

Based on the above results and analysis, it can be seen that our proposed CSDT is effective, and its comprehensive performance is more competitive than the state-of-the-art algorithms for solving LSMOPs.

4.4. Parameter sensitivity analysis

To analyse the effect of the number of reference vectors m, in this section, we conduct a parameter sensitivity analysis experiment on UF and WFG benchmarks. The values of m are set to 4, 10, 20, and 50, respectively. The experimental results are displayed in Tables and .

Table 6. IGD values of CSDT with different values of m on UF benchmarks, where the best results on each test instance is highlighted.

Table 7. IGD values of CSDT with different values of m on WFG benchmarks, where the best results on each test instance is highlighted.

From Table , we can find that on 30 UF instances, the number of the best results obtained by CSDT with m = 4, 20, 50, and 10 is 4, 8, 1, and 17, respectively. When m = 10, the performance of CSDT is the best. CSDT with m = 20 performs better than that with m = 4 and 50, and performs slightly worse than that with m = 10. For convenience, we use CSDT-num to represent CSDT with m = num. When compared with CSDT-10, CSDT-4 performs significantly worse on 22 instances, CSDT-50 performs significantly worse on 19 instances, and CSDT-20 performs significantly worse on only 4 instances. The performance of CSDT-10 is comparable to that of CSDT-20 on 26/34 instances.

From Table , we can see that on 54 WFG instances, the number of the best results obtained by CSDT with m = 4, 20, 50, and 10 is 1, 10, 1, and 42, respectively. Similar to UF benchmarks, when m = 10, the performance of CSDT is also the best on WFG benchmarks. When compared with CSDT-10, both CSDT-4 and CSDT-50 perform statistically worse on 36 instances, and CSDT-20 performs significantly worse on 12 instances. Moreover, the performance of CSDT-10 is comparable to that of CSDT-20 on 42/54 instances.

Overall, we can conclude that when m takes a moderate value, such as or 20, the performance of CSDT is good. When m takes a relatively large value, such as m = 50, or a relatively small value, such as

, the performance of CSDT is not as good as that when m takes 10 or 20. When m is relatively large, the algorithm needs to optimise relatively more transformed problems in the first stage. Since the computing resources allocated to each stage are fixed, it will reduce the computing resources that can be allocated to each transformed problem, resulting in insufficient optimisation of each transformed problem. Therefore, the solutions found by the algorithm are relatively poor. However, when m is relatively small, the number of the transformed problems is relatively less. Although each transformed problem can be optimised sufficiently, the search range is relatively small than that when

or 20.

5. Conclusion

In this paper, we present a new algorithm based on WOF for LSMOPs, named CSDT. To alleviate the limitations of WOF, in the first stage, we take the centreline symmetry points to replace half of the reference solutions chosen in WOF to transform the problem. It can avoid the search range of the transformed problem being concentrated in a small area of search space, thereby improving the search efficiency of the algorithm. Then, to enhance the diversity of the algorithm while ensuring its convergence, a double-line transformation function is designed to add more potential search areas. Subsequently, in the second stage, PSO is applied to search the whole space of the original problem to improve the diversity of CSDT. To investigate the effectiveness of the two strategies and the general performance of CSDT, we conducted numerical experiments on three widely used benchmark suites. The performance of CSDT is compared with that of the original WOF, MOEA/DVA, LSMOF, and DGEA. The statistical results indicate that CSDT significantly improves the performance of the WOF and outperforms the other four compared algorithms.

However, since our proposed algorithm pays more attention to diversity than WOF, its performance may be slightly inferior to WOF for problems that are difficult to obtain convergence solutions. Moreover, since our proposed algorithm is proposed based on WOF, its performance will be affected by the results of decision variable grouping. In the future, an important task for us is to study advanced multi-objective grouping techniques to reasonably group the decision variables of LSMOPs. Furthermore, finding potential areas and directions to guide the algorithm search is also an effective method that we need to study for solving LSMOPs.

We have to point out that there are many other kinds of computational intelligence algorithms applicable to the problems, e.g. the algorithms to mimic the behaviours of different animals (like monarch butterfly optimisation (MBO), earthworm optimisation algorithm (EWA), elephant herding optimisation (EHO)). Due to the page limits, we do not list these references.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Antonio, L. M., & Coello, C. A. C. (2013, June 20–23). Use of cooperative coevolution for solving large scale multiobjective optimization problems. In IEEE congress on evolutionary computation, CEC 2013 (pp. 2758–2765). IEEE.

- Antonio, L. M., & Coello, C. A. C. (2016, September 17–21). Decomposition-based approach for solving large scale multi-objective problems. In International conference on parallel problem solving from nature (pp. 524–534). Springer.

- Bader, J., & Zitzler, E. (2011). HypE: An algorithm for fast hypervolume-based many-objective optimization. Evolutionary Computation, 19(1), 45–76. https://doi.org/10.1162/EVCO_a_00009

- Beume, N., Naujoks, B., & Emmerich, M. T. M. (2007). SMS-EMOA: Multiobjective selection based on dominated hypervolume. European Journal of Operational Research, 181(3), 1653–1669. https://doi.org/10.1016/j.ejor.2006.08.008

- Bringmann, K., & Friedrich, T. (2010). An efficient algorithm for computing hypervolume contributions. Evolutionary Computation, 18(3), 383–402. https://doi.org/10.1162/EVCO_a_00012

- Cao, B., Zhao, J., Gu, Y., Ling, Y., & Ma, X. (2020). Applying graph-based differential grouping for multiobjective large-scale optimization. Swarm and Evolutionary Computation, 53(3), Article 100626. https://doi.org/10.1016/j.swevo.2019.100626

- Cao, B., Zhao, J., Lv, Z., & Liu, X. (2017). A distributed parallel cooperative coevolutionary multiobjective evolutionary algorithm for large-scale optimization. IEEE Transactions on Industrial Informatics, 13(4), 2030–2038. https://doi.org/10.1109/TII.9424

- Carrasco, J., Garcıa, S., Das, S., & Herrera, F. (2020). Recent trends in the use of statistical tests for comparing swarm and evolutionary computing algorithms: Practical guidelines and a critical review. Swarm and Evolutionary Computation, 54(6), Article 100665. https://doi.org/10.1016/j.swevo.2020.100665

- Chen, H., Cheng, R., Wen, J., Li, H., & Weng, J. (2020). Solving large-scale many-objective optimization problems by covariance matrix adaptation evolution strategy with scalable small subpopulations. Information Sciences, 509(1), 457–469. https://doi.org/10.1016/j.ins.2018.10.007

- Chen, W., Weise, T., Yang, Z., & Tang, K. (2010, September 11–15). Large-scale global optimization using cooperative coevolution with variable interaction learning. In International conference on parallel problem solving from nature (pp. 300–309). Springer.

- Cheng, R., Jin, Y., Olhofer, M., & Sendhoff, B. (2017). Test problems for large-scale multiobjective and many-objective optimization. IEEE Transactions on Cybernetics, 47(12), 4108–4121. https://doi.org/10.1109/TCYB.2016.2600577

- Dai, C., & Wang, Y. (2015a). A new decomposition based evolutionary algorithm with uniform designs for many-objective optimization. Applied Soft Computing, 30(2), 238–248. https://doi.org/10.1016/j.asoc.2015.01.062

- Dai, C., & Wang, Y. (2015b). A new uniform evolutionary algorithm based on decomposition and CDAS for many-objective optimization. Knowledge-Based Systems, 85(6), 131–142. https://doi.org/10.1016/j.knosys.2015.04.025

- Dai, C., Wang, Y., & Ye, M. (2015). A new multi-objective particle swarm optimization algorithm based on decomposition. Information Sciences, 325(9), 541–557. https://doi.org/10.1016/j.ins.2015.07.018

- Deb, K., Agrawal, S., Pratap, A., & Meyarivan, T. (2002). A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Transactions on Evolutionary Computation, 6(2), 182–197. https://doi.org/10.1109/4235.996017

- Everson, R. M., & Fieldsend, J. E. (2006). Multiobjective optimization of safety related systems: An application to short-term conflict alert. IEEE Transactions on Evolutionary Computation, 10(2), 187–198. https://doi.org/10.1109/TEVC.2005.856067

- Goh, S. K., Tan, K. C., Mamun, A. A., & Abbass, H. A. (2015, May 25–28). Evolutionary big optimization (bigopt) of signals. In IEEE congress on evolutionary computation, CEC 2015 (pp. 3332–3339). IEEE.

- Gong, Y., Zhang, J., Chung, H. S., Chen, W., Zhan, Z., Li, Y., & Shi, Y. (2012). An efficient resource allocation scheme using particle swarm optimization. IEEE Transactions on Evolutionary Computation, 16(6), 801–816. https://doi.org/10.1109/TEVC.2012.2185052

- Gong, Y., & Zhou, Y. (2018). Differential evolutionary superpixel segmentation. IEEE Transactions on Image Processing, 27(3), 1390–1404. https://doi.org/10.1109/TIP.2017.2778569

- Gu, Z., & Wang, G. (2020). Improving NSGA-III algorithms with information feedback models for large-scale many-objective optimization. Future Generation Computer Systems, 107(4598), 49–69. https://doi.org/10.1016/j.future.2020.01.048

- He, C., Cheng, R., & Yazdani, D. (2022). Adaptive offspring generation for evolutionary large-scale multiobjective optimization. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 52(2), 786–798. https://doi.org/10.1109/TSMC.2020.3003926

- He, C., Li, L., Tian, Y., Zhang, X., Cheng, R., Jin, Y., & Yao, X. (2019). Accelerating large-scale multiobjective optimization via problem reformulation. IEEE Transactions on Evolutionary Computation, 23(6), 949–961. https://doi.org/10.1109/TEVC.4235

- Hong, W., Tang, K., Zhou, A., Ishibuchi, H., & Yao, X. (2019). A scalable indicator-based evolutionary algorithm for large-Scale multiobjective optimization. IEEE Transactions on Evolutionary Computation, 23(3), 525–537. https://doi.org/10.1109/TEVC.4235

- Huband, S., Hingston, P., Barone, L., & While, L. (2006). A review of multiobjective test problems and a scalable test problem toolkit. IEEE Transactions on Evolutionary Computation, 10(5), 477–506. https://doi.org/10.1109/TEVC.2005.861417

- Li, W., Sun, Y., Huang, Y., & Yi, J. (2022). An adaptive differential evolution algorithm using fitness distance correlation and neighbourhood-based mutation strategy. Connection Science, 34(1), 829–856. https://doi.org/10.1080/09540091.2021.1997913

- Liu, J., Wang, Y., Fan, N., Wei, S., & Tong, W. (2019). A convergence-diversity balanced fitness evaluation mechanism for decomposition- based many-objective optimization algorithm. Integrated Computer Aided Engineering, 26(2), 159–184. https://doi.org/10.3233/ICA-180594

- Liu, J., Wang, Y., Wang, X., Guo, S., & Sui, X. (2019). A new dominance method based on expanding dominated area for many-objective optimization. International Journal of Pattern Recognition and Artificial Intelligence, 33(3), 1959008:1–24. https://doi.org/10.1142/S0218001419590080

- Liu, R., Liu, J., Li, Y., & Liu, J. (2020). A random dynamic grouping based weight optimization framework for large-scale multi-objective optimization problems. Swarm and Evolutionary Computation, 55(2), Article 100684. https://doi.org/10.1016/j.swevo.2020.100684

- Ma, X., Liu, F., Qi, Y., Wang, X., Li, L., Jiao, L., Yin, M, & Gong, M. (2016). A multiobjective evolutionary algorithm based on decision variable analyses for multiobjective optimization problems with large-scale variables. IEEE Transactions on Evolutionary Computation, 20(2), 275–298. https://doi.org/10.1109/TEVC.2015.2455812

- Maltese, J., Ombuki-Berman, B. M., & Engelbrecht, A. P. (2018). A scalability study of many-objective optimization algorithms. IEEE Transactions on Evolutionary Computation, 22(1), 79–96. https://doi.org/10.1109/TEVC.2016.2639360

- Nagra, A. A., Han, F., Ling, Q., Abubaker, M., Ahmad, F., Mehta, S., & Apasiba, A. T. (2020). Hybrid self-inertia weight adaptive particle swarm optimisation with local search using C4.5 decision tree classifier for feature selection problems. Connection Science, 32(1), 16–36. https://doi.org/10.1080/09540091.2019.1609419

- Omidvar, M. N., Li, X., Mei, Y., & Yao, X. (2014). Cooperative co-evolution with differential grouping for large scale optimization. IEEE Transactions on Evolutionary Computation, 18(3), 378–393. https://doi.org/10.1109/TEVC.4235

- Omidvar, M. N., Li, X., Yang, Z., & Yao, X. (2010, July 18–23). Cooperative co-evolution for large scale optimization through more frequent random grouping. In IEEE congress on evolutionary computation, CEC 2010 (pp. 1–8). IEEE.

- Qin, S., Sun, C., Jin, Y., Tan, Y., & Fieldsend, J. E. (2021). Large-Scale evolutionary multiobjective optimization assisted by directed sampling. IEEE Transactions on Evolutionary Computation, 25(4), 724–738. https://doi.org/10.1109/TEVC.2021.3063606

- Russo, L. M. S., & Francisco, A. P. (2014). Quick hypervolume. IEEE Transactions on Evolutionary Computation, 18(4), 481–502. https://doi.org/10.1109/TEVC.2013.2281525

- Song, A., Yang, Q., Chen, W., & Zhang, J. (2016, July 24–29). A random-based dynamic grouping strategy for large scale multi-objective optimization. In IEEE congress on evolutionary computation, CEC 2016 (pp. 468–475). IEEE.

- Song, E., & Li, H. (2022). A hybrid differential evolution for multi-objective optimisation problems. Connection Science, 34(1), 224–253. https://doi.org/10.1080/09540091.2021.1984396

- Sun, J., Miao, Z., Gong, D., Zeng, X., Li, J., & Wang, G. (2020). Interval multiobjective optimization with memetic algorithms. IEEE Transactions on Cybernetics, 50(8), 3444–3457. https://doi.org/10.1109/TCYB.6221036

- Tang, D., Wang, Y., Wu, X., & Cheung, Y. (2020, July 19–24). A symmetric points search and variable grouping method for large-scale multi-objective optimization. In IEEE congress on evolutionary computation, CEC 2020 (pp. 1–8). IEEE.

- Tian, Y., Cheng, R., Zhang, X., & Jin, Y. (2017). PlatEMO: A MATLAB platform for evolutionary multi-objective optimization. IEEE Computational Intelligence Magazine, 12(4), 73–87. https://doi.org/10.1109/MCI.2017.2742868

- Tian, Y., Liu, R., Zhang, X., Ma, H., Tan, K. C., & Jin, Y. (2021). A multipopulation evolutionary algorithm for solving large-Scale multimodal multiobjective optimization problems. IEEE Transactions on Evolutionary Computation, 25(3), 405–418. https://doi.org/10.1109/TEVC.2020.3044711

- Tian, Y., Zheng, X., Zhang, X., & Jin, Y. (2020). Efficient large-scale multiobjective optimization based on a competitive swarm optimizer. IEEE Transactions on Cybernetics, 50(8), 3696–3708. https://doi.org/10.1109/TCYB.6221036

- Too, J., Sadiq, A. S., & Mirjalili, S. M. (2022). A conditional opposition-based particle swarm optimisation for feature selection. Connection Science, 34(1), 339–361. https://doi.org/10.1080/09540091.2021.2002266

- Wang, G., Cai, X., Cui, Z., Min, G., & Chen, J. (2020). High performance computing for cyber physical social systems by using evolutionary multi-objective optimization algorithm. IEEE Transactions on Emerging Topics in Computing, 8(1), 20–30. https://doi.org/10.1109/TETC.2017.2703784

- While, L., Bradstreet, L., & Barone, L. (2012). A fast way of calculating exact hypervolumes. IEEE Transactions on Evolutionary Computation, 16(1), 86–95. https://doi.org/10.1109/TEVC.2010.2077298

- Xue, X., & Wang, Y. (2017). Improving the efficiency of NSGA-II based ontology aligning technology. Data and Knowledge Engineering, 108(5), Article 43114. https://doi.org/10.1016/j.datak.2016.12.002

- Yang, X., Zou, J., Yang, S., Zheng, J., & Liu, Y. (2021). A fuzzy decision variables framework for large-scale multiobjective optimization. IEEE Transactions on Evolutionary Computation, 3(6), 1–15. https://doi.org/10.1109/TEVC.2021.3118593

- Yi, J., Deb, S., Dong, J., Alavi, A. H., & Wang, G. (2018). An improved NSGA-III algorithm with adaptive mutation operator for big data optimization problems. Future Generation Computer Systems, 88(2), 571–585. https://doi.org/10.1016/j.future.2018.06.008

- Yi, J., Xing, L., Wang, G., Dong, J., Vasilakos, A. V., Alavi, A. H., & Wang, L. (2020). Behavior of crossover operators in NSGA-III for large-scale optimization problems. Information Sciences, 509(15), 470–487. https://doi.org/10.1016/j.ins.2018.10.005

- Yuan, Y., & Xu, H. (2015). Multiobjective flexible job shop scheduling using memetic algorithms. IEEE Transactions on Automation Science and Engineering, 12(1), 336–353. https://doi.org/10.1109/TASE.8856

- Zhang, Q., & Li, H. (2007). MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Transactions on Evolutionary Computation, 11(6), 712–731. https://doi.org/10.1109/TEVC.2007.892759

- Zhang, Q., Zhou, A., Zhao, S., Suganthan, P. N., Liu, W., & Tiwari, S. (2008). Multiobjective optimization test instances for the CEC 2009 special session and competition. University of Essex, Colchester, UK and Nanyang Technological University, Singapore, Special Session on Performance Assessment of Multi-objective Optimization Algorithms, Technical Report, 264, 1–30.

- Zhang, X., Tian, Y., Cheng, R., & Jin, Y. (2018). A decision variable clustering-based evolutionary algorithm for large-scale many-objective optimization. IEEE Transactions on Evolutionary Computation, 22(1), 97–112. https://doi.org/10.1109/TEVC.2016.2600642

- Zhang, Y., Gong, Y., Gu, T., Li, Y., & Zhang, J. (2017). Flexible genetic algorithm: A simple and generic approach to node placement problems. Applied Soft Computing, 52, 457–470. https://doi.org/10.1016/j.asoc.2016.10.022

- Zhang, Y., Wang, G., Li, K., Yeh, W., Jian, M., & Dong, J. (2020). Enhancing MOEA/D with information feedback models for large-scale many-objective optimization. Information Sciences, 522(1), 1–16.

- Zhao, F., Du, S., Lu, H., Ma, W., & Song, H. (2021). A hybrid self-adaptive invasive weed algorithm with differential evolution. Connection Science, 33(4), 929–953. https://doi.org/10.1080/09540091.2021.1917517

- Zhao, F., Zhang, L., Zhang, Y., Ma, W., Zhang, C., & Song, H. (2020). An improved water wave optimisation algorithm enhanced by CMA-ES and opposition-based learning. Connection Science, 32(2), 132–161. https://doi.org/10.1080/09540091.2019.1674247

- Zille, H., Ishibuchi, H., Mostaghim, S., & Nojima, Y. (2018). A framework for large-scale multiobjective optimization based on problem transformation. IEEE Transactions on Evolutionary Computation, 22(2), 260–275. https://doi.org/10.1109/TEVC.2017.2704782

- Zitzler, E., Thiele, L., Laumanns, M., Fonseca, C. M., & da Fonseca, V. G. (2003). Performance assessment of multiobjective optimizers: An analysis and review. IEEE Transactions on Evolutionary Computation, 7(2), 117–132. https://doi.org/10.1109/TEVC.2003.810758