ABSTRACT

The enhancement of computing power, the maturity of learning algorithms, and the richness of application scenarios make Artificial Intelligence (AI) solutions increasingly attractive for solving Geo-spatial Information Science (GSIS) problems. These problems include image matching, image target detection, change detection, image retrieval, and the generation of various types of data models. This paper discusses the connection and synthesis between AI and GSIS in four areas: block adjustment, image search and discovery in big databases, automatic change detection, and the detection of abnormalities. It demonstrates that AI can be integrated with GSIS. Finally, the concepts of the Earth Observation Brain and the Smart Geo-spatial Service (SGSS) are introduced, and they are expected to promote the development of GSIS toward broader applications.

1. Introduction

Artificial Intelligence (AI) (Russell and Norvig 2002) is increasingly applied in various fields, driven by growing computing power, maturing learning algorithms, and richer application scenarios. AI is a comprehensive technical science that develops theories, methods, techniques, and application systems for simulating and extending human intelligence. Through AI, researchers seek to understand the essence of intelligence and to produce a new kind of intelligent machine that can respond in ways similar to human intelligence. The term "Artificial Intelligence" was proposed in 1956 at the Dartmouth Conference (Hamet and Tremblay 2017), a meeting regarded as the official birth of AI as a discipline. Decades later, in 1997, IBM's "Deep Blue" computer defeated the reigning world chess champion, a landmark demonstration of AI technology. An increasing number of AI applications target big data, computing power, the Internet of Things, object detection, abnormality and change detection, image interpretation, and robotic mapping.

The significance of AI has risen to the level of national strategy in many countries. The United States has issued a series of policies to promote AI development (Krishnan 2016):

- In April 2013, it announced a new Brain Research Program to promote innovative neurotechnology.
- In January 2014, an NIH group developed a detailed plan for the following 10 years.
- The DARPA "Future Technology Forum" was held in October 2015.
- In November 2015, CSIS published the National Defense 2045 plan pertaining to AI.
- DARPA also supports the third "offset strategy" proposed by the United States in February 2016.
- In May 2016, the White House established an artificial intelligence committee.

Japan implemented an AI Comprehensive Development Plan, which includes the New Robot Project initiated in January 2015. Linked to this plan, Japan established a Research Center of Artificial Intelligence in June 2015 and created the Advanced Integrated Intelligent Platforms Program in early 2016. The Republic of Korea likewise considers AI one of its five key areas for development (Zhang 2016). It released the Exobrain Plan and a Second Master Plan for Intelligent Robots, and it launched the AI Star Lab, emphasizing natural language dialogue systems, robot technologies, and AI integration.

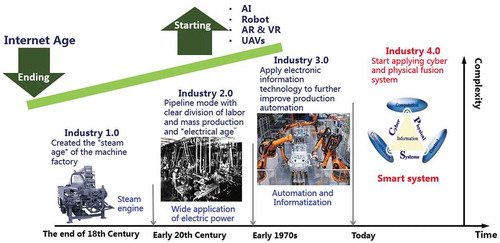

China has also issued a series of policies related to AI development. In 2015, China announced the "Made in China 2025" plan, which includes a focus on AI (Liu 2016). Key documents include the State Council's "Guiding Opinions on Actively Promoting the 'Internet +' Action" and the "Outline of the Thirteenth Five-Year Plan for National Economic and Social Development (Draft)". These directives also include the "Three-Year Action Plan" for implementing the "Internet + Artificial Intelligence" program, issued between 2015 and 2016 (Fan 2006). The rapid development of AI will likely lead the fourth industrial revolution, as illustrated in Figure 1.

The development of AI enhances Geo-spatial Information Science (GSIS) (Chen et al. 2009; Li 2012a). This is especially true when AI is combined with advances in big data analysis (Weng et al. 2009; Li et al. 2015; Yang et al. 2017; Zhang et al. 2013), deep learning, and other AI techniques. Neural networks were revived in the 1980s. AI and GSIS then converged as AI solved GSIS problems such as image matching and map generation (Voženílek 2009). AI provides sophisticated techniques for GSIS projects, while GSIS in turn offers AI vast data sets and a wide scope of applications. Since 2006, a new generation of information technologies has emerged, such as the Internet of Things and cloud computing. These technologies drive the comprehensive integration of industrialization and informatization (Li and Shao 2009; Li, Shao, and Yang 2011; Li et al. 2014).

A ubiquitous sensor network links the networked world to the real world, forming a new kind of cyber-physical space. This space can automatically perceive, in real time, the states of and changes in people and things in the real world. Cloud-computing centers can solve massive, complex computational problems and generate intelligent control and feedback. In 2009, the collaborative construction of a Smart Earth was officially proposed around the world (Mei 2009). However, a Smart Earth cannot be realized without AI-enabled GSIS to support supervision, management, and decision-making.

With the development of AI, more and more GSIS researchers at the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing (LIESMARS), Wuhan University, have integrated AI methods into their work (Xin, Li, and Sun 2003; Zhan, Zhang, and Li 2008; Shao et al. 2019; Xiao et al. 2017). The following sections describe applications developed at LIESMARS that combine AI methods with GSIS:

Large-scale block adjustment

Image search in big databases

Automatic change detection in images

Abnormal target and event detection

Earth Observation Brain (EOB) and Smart Geo-spatial Service (SGSS)

An Internet + Spaceborne Information Real-time Service System (PNTRC)

All of these applications rely on AI to achieve high performance and to make progress toward breakthroughs that integrate various fields. Together with AI, GSIS is crucial for thoroughly understanding changes in time and space. It has solved problems that were difficult in the past and will likely expand possibilities in the future.

2. Large-scale block adjustment

Block adjustment refers to the simultaneous refinement of three groups of unknowns: the 3D coordinates describing the scene geometry, the relative motion parameters, and the optical characteristics of the cameras that acquired the images. This technology has attracted the attention of many GSIS researchers. AI technology and large-scale storage now allow block adjustment to tackle very large optimization problems. Parallel processing on combined CPU and GPU hardware also makes large-scale block adjustment far less computationally demanding.
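The sketch below makes "simultaneous refinement" concrete on a deliberately simplified, hypothetical planar problem. Each image has only an unknown 2D shift, and tie points are measured in several overlapping images. One image is held fixed to define the datum. The solver alternates between two steps: estimating tie-point ground coordinates with the shifts held fixed, then estimating the shifts with the ground coordinates held fixed. This is a coordinate-descent solver for the resulting linear least-squares problem. Real block adjustment of satellite imagery uses full sensor models and sparse solvers. The function name, the shift-only model, and the iteration count here are assumptions for illustration only.

```python
from collections import defaultdict


def planar_block_adjustment(observations, fixed_image, iterations=200):
    """Toy planar block adjustment by alternating least squares.

    observations: list of (image_id, point_id, u, v) tuples, where (u, v) is
    a tie point measured in the image's local frame. Hypothetical model:
    ground = local + offset[image], i.e. each image is only shifted.
    The offset of `fixed_image` stays at (0, 0) to define the datum.
    Returns (offsets, ground), both dicts mapping ids to (x, y) tuples.
    """
    offsets = {obs[0]: (0.0, 0.0) for obs in observations}
    ground = {}
    for _ in range(iterations):
        # Step 1: with offsets fixed, the least-squares ground position of a
        # tie point is the mean of its projections from all images.
        acc = defaultdict(lambda: [0.0, 0.0, 0])
        for img, pt, u, v in observations:
            tx, ty = offsets[img]
            acc[pt][0] += u + tx
            acc[pt][1] += v + ty
            acc[pt][2] += 1
        ground = {pt: (sx / n, sy / n) for pt, (sx, sy, n) in acc.items()}
        # Step 2: with ground positions fixed, the least-squares offset of an
        # image is the mean difference between ground and local coordinates.
        acc = defaultdict(lambda: [0.0, 0.0, 0])
        for img, pt, u, v in observations:
            gx, gy = ground[pt]
            acc[img][0] += gx - u
            acc[img][1] += gy - v
            acc[img][2] += 1
        for img, (sx, sy, n) in acc.items():
            if img != fixed_image:
                offsets[img] = (sx / n, sy / n)
    return offsets, ground
```

Each sweep can only lower the sum of squared tie-point residuals, so a connected block converges to the least-squares solution. Convergence is slow for large blocks, however, and practical systems solve the sparse normal equations directly instead.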

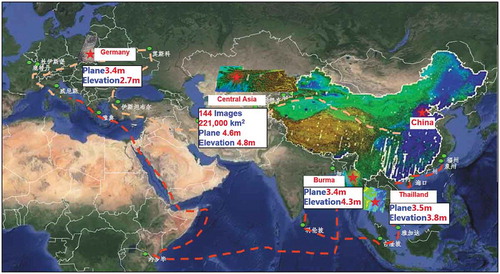

Large-scale block adjustment of imagery from the ZY-3 satellite is now solved with the help of AI. ZY-3 (Li 2012b), launched in 2012, acquires stereo imagery. This imagery is used to produce high-precision digital orthophoto maps (DOMs) and digital surface models (DSMs) (Zhang et al. 2014a; Wang et al. 2017). The satellite provides high-precision spatial references for surveying and mapping, land resources, and national defense. ZY-3 images are used in the "Global Automatic Mapping Major Projects" covering Central Asia, Thailand, Burma, and Germany. Figure 2 shows information about these projects.

AI made it possible to perform block adjustment without ground control over the entire regional network of 8810 scenes in the national ZY-3 satellite database (Wang et al. 2014a; Zhang et al. 2014b, 2014; Wang et al. 2013). A gross error detection method based on weighted iteration with posterior variance estimation was used. It automatically selected three million strong tie points from two billion matched points. The autonomous positioning accuracy of the remote sensing images improved from 15 m to better than 5 m, which meets global mapping needs. Figure 3 shows the results of block adjustment without ground control points (GCPs) for Shandong Province.
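The gross-error screening idea can be illustrated with a simplified, hypothetical stand-in: an iteratively reweighted least-squares line fit. Any observation whose residual exceeds k times the a posteriori standard deviation of unit weight receives weight 0. The fit is then repeated until the weights stop changing. This is not the authors' weighted-iteration method, which works on block-adjustment residuals with its own weight function. The line model, `k = 2`, and the `sigma_floor` guard are assumptions chosen for illustration.

```python
import math


def robust_line_fit(xs, ys, k=2.0, sigma_floor=1e-9, max_iter=20):
    """Fit y = a + b*x while iteratively rejecting gross errors.

    Observations whose residual exceeds k times the a posteriori standard
    deviation of unit weight get weight 0; the fit is repeated until the
    set of weights stops changing. Returns (a, b, weights), where a weight
    of 0 marks a suspected gross error.
    """
    weights = [1.0] * len(xs)
    a = b = 0.0
    for _ in range(max_iter):
        # Weighted least-squares estimate of intercept a and slope b.
        w_sum = sum(weights)
        xm = sum(w * x for w, x in zip(weights, xs)) / w_sum
        ym = sum(w * y for w, y in zip(weights, ys)) / w_sum
        sxx = sum(w * (x - xm) ** 2 for w, x in zip(weights, xs))
        if sxx == 0.0:
            raise ValueError("need at least two distinct x values with weight > 0")
        sxy = sum(w * (x - xm) * (y - ym) for w, x, y in zip(weights, xs, ys))
        b = sxy / sxx
        a = ym - b * xm
        residuals = [y - (a + b * x) for x, y in zip(xs, ys)]
        # A posteriori variance of unit weight: v'Pv / (n_used - 2 unknowns).
        dof = max(w_sum - 2.0, 1.0)
        sigma0 = math.sqrt(sum(w * v * v for w, v in zip(weights, residuals)) / dof)
        sigma0 = max(sigma0, sigma_floor)  # avoid a zero scale on exact data
        new_weights = [1.0 if abs(v) <= k * sigma0 else 0.0 for v in residuals]
        if new_weights == weights:
            break
        weights = new_weights
    return a, b, weights
```

With one observation corrupted by +50 on an otherwise exact line, the first pass flags it. The second pass then recovers the true intercept and slope from the remaining points.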

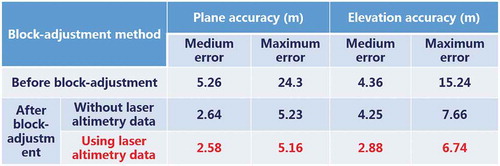

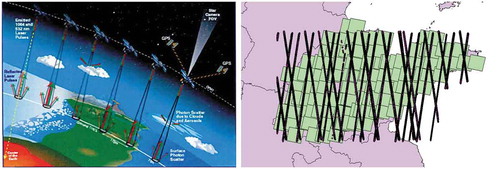

AI has also been applied successfully to combined block adjustment of ZY-3 images and laser altimetry data. ICESat-1 (Li et al. 2016a) was launched in 2003. Its laser footprint is 70 m in diameter, with a planimetric accuracy of 10 m and an elevation accuracy of 15 cm, so its altimetry data can serve as elevation control in combined block adjustment. Elevation accuracy with laser-data-assisted adjustment is significantly higher than without it (Li et al. 2016b). Combined block adjustment can further improve the elevation accuracy of ZY-3 without GCPs to better than 3 m. Figure 4 illustrates the combined block adjustment of ZY-3 images and ICESat-1 data.
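A full combined adjustment adds the laser footprints as weighted elevation observations inside the block adjustment itself. The much simpler sketch below shows only the underlying idea of using altimetry footprints as height control. It assumes, hypothetically, that the stereo-derived heights carry a systematic bias plus a linear tilt. It then estimates that correction plane, dz = c0 + c1·x + c2·y, from laser heights by least squares. The function names and the planar error model are illustrative assumptions, not the method of the cited work.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, rhs):
    """Solve the 3x3 system m @ x = rhs with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("footprints are collinear; the plane is not determined")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        solution.append(det3(mc) / d)
    return solution


def fit_height_correction(footprints):
    """Least-squares plane dz = c0 + c1*x + c2*y from laser footprints.

    footprints: list of (x, y, h_stereo, h_laser); dz = h_laser - h_stereo.
    Returns [c0, c1, c2].
    """
    # Accumulate the normal equations N c = t for design rows [1, x, y].
    n = [[0.0] * 3 for _ in range(3)]
    t = [0.0] * 3
    for x, y, h_stereo, h_laser in footprints:
        row = (1.0, x, y)
        dz = h_laser - h_stereo
        for i in range(3):
            t[i] += row[i] * dz
            for j in range(3):
                n[i][j] += row[i] * row[j]
    return solve3(n, t)
```

A corrected stereo height at (x, y) would then be `h_stereo + c0 + c1 * x + c2 * y`. In a combined adjustment, the sensor orientation is refined instead, so that the corrected heights agree with the laser control.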

3. Image retrieval from big databases

Demand from both military and civilian users of GSIS has made automatic image retrieval from big remote sensing databases an increasingly urgent need. Its broad applications have also attracted growing research interest.

According to how the image content is described, image retrieval methods can be divided into two categories:

- Text-Based Image Retrieval (TBIR) (Zhu and Shao 2011)
- Content-Based Image Retrieval (CBIR) (Wang et al. 2014b)

TBIR methods were common in early remote sensing image retrieval systems (Shao, Li, and Zhu 2011; Shao et al. 2015). They rely on manual annotations and on keywords describing sensor type, waveband information, and the geographical location of each image. Because labeling requires manual intervention, TBIR becomes time-consuming and prohibitively expensive. This problem worsens as advances in satellite technology keep increasing the volume of remote sensing image databases. TBIR cannot easily cope with large amounts of image data, high feature dimensionality, and short response-time requirements. As a consequence, CBIR has been increasingly applied in remote sensing to keep up with the growing need for automation.
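The limitation of TBIR becomes clear once a search is written down. The sketch below, a hypothetical metadata catalogue, consults only the annotated fields (sensor, waveband, bounding box, keywords) and never the pixels. An image is therefore found only if an annotator described it with matching terms. The record layout and field names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRecord:
    """Hypothetical catalogue entry holding manually annotated metadata."""
    image_id: str
    sensor: str
    bands: frozenset
    bbox: tuple  # (min_lon, min_lat, max_lon, max_lat)
    keywords: frozenset


def boxes_overlap(a, b):
    """True if two (min_lon, min_lat, max_lon, max_lat) boxes intersect."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def text_based_search(catalogue, sensor=None, band=None, bbox=None, keyword=None):
    """Return ids of records whose annotations satisfy every given criterion."""
    hits = []
    for rec in catalogue:
        if sensor is not None and rec.sensor != sensor:
            continue
        if band is not None and band not in rec.bands:
            continue
        if bbox is not None and not boxes_overlap(rec.bbox, bbox):
            continue
        if keyword is not None and keyword not in rec.keywords:
            continue
        hits.append(rec.image_id)
    return hits
```

Every record must be annotated by hand before it can be found, which is the cost that grows with archive size.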

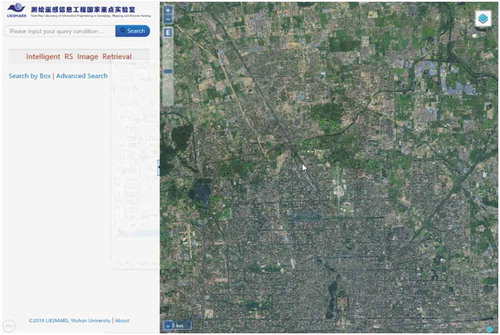

Traditional CBIR algorithms generally consist of two steps: feature extraction and image matching. The first step extracts high-dimensional features that represent the whole image. The second step matches these features against those of the query image to retrieve the corresponding or relevant images from the dataset. Image retrieval targets search and discovery in large-scale tiled remote sensing image databases (Zhou et al. 2015; Shao et al. 2014). Manual information extraction from such big data is therefore time-consuming and prohibitively expensive. Applying AI to image retrieval in GSIS brings deep learning and high-performance online retrieval engines to bear on large-scale databases. Thus, many efficient image retrieval algorithms have been proposed based on AI and high-performance computing (Zhou et al. 2017; Liang et al. 2016). LIESMARS has developed a deep-learning-based, high-performance online search engine for a Large-Scale Tiled Remote Sensing Image Database of 10 million tiled images. The engine combines object-level, land-cover-level, and scene-level image retrieval. The retrieval system interface, shown in Figure 5, integrates keyword retrieval, information lists, and a map engine.
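The two CBIR steps can be sketched as follows. Step 1 turns each image into a feature vector, and Step 2 ranks the database by similarity to the query's vector. As an assumption for illustration, a normalized grey-level histogram stands in for the learned deep features used by the LIESMARS engine, and cosine similarity serves as the matching measure. Images are plain nested lists of 8-bit values.

```python
import math


def histogram_feature(image, bins=8):
    """Step 1: normalized grey-level histogram (a stand-in for deep features)."""
    counts = [0] * bins
    total = 0
    for row in image:
        for value in row:
            counts[min(value * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def cosine_similarity(f, g):
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(f, g))
    norm = math.sqrt(sum(a * a for a in f)) * math.sqrt(sum(b * b for b in g))
    return dot / norm if norm else 0.0


def retrieve(query, database, top_k=3):
    """Step 2: rank database images by feature similarity to the query."""
    q = histogram_feature(query)
    scored = [(cosine_similarity(q, histogram_feature(img)), name)
              for name, img in database.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]
```

At database scale, features would be extracted once and stored in an index rather than recomputed per query. This precomputation is what makes online retrieval over millions of tiles feasible.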

4. Automatic change detection in images

Change detection and analysis (Hussain et al. 2013; Radke et al. 2005; Jiang et al. 2007) is one of the major topics in remote sensing. Singh (1989) defined it (as cited in Mahmoodzadeh 2007) as "the process of identifying differences in the state of an object or phenomenon by observing it at different times". In GSIS, change detection concerns alterations of surface components that occur at varying rates. It is used as an evaluation index in many applications, including forestry, damage assessment, disaster monitoring, urban planning, and land management. Remote sensing technology makes such detection fast, automatic, and accurate. Automatic change detection in GSIS can be divided into two categories: 2D change detection and 3D change detection.
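Singh's definition leads directly to the simplest pixel-based approach: compare two co-registered observations pixel by pixel. The sketch below uses change vector analysis. It marks a pixel as changed when the Euclidean length of its spectral difference vector exceeds a threshold. The threshold value is a user-chosen assumption. The method is also sensitive to misregistration and to radiometric differences between dates, which motivates the approaches discussed next.

```python
import math


def change_map(before, after, threshold):
    """Pixel-wise change vector analysis on two co-registered multiband images.

    before/after: nested lists indexed [row][col][band].
    A pixel is marked changed (1) when the Euclidean norm of its spectral
    difference vector exceeds `threshold`, otherwise unchanged (0).
    """
    result = []
    for row_b, row_a in zip(before, after):
        out_row = []
        for px_b, px_a in zip(row_b, row_a):
            magnitude = math.sqrt(sum((a - b) ** 2 for a, b in zip(px_a, px_b)))
            out_row.append(1 if magnitude > threshold else 0)
        result.append(out_row)
    return result
```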

2D change detection

Traditional 2D change detection methods are typically based on classifiers that use ensemble learning. Ensemble learning combines the strengths of multiple supervised classifiers, each using multiple object-level contextual features. The aim is stable and highly accurate change detection results in urban areas from high-resolution remote sensing images. However, the image pairs used in change detection may represent the same location differently (Alberga 2009). The differing modalities and properties of multi-resolution data make ensemble learning methods ill-suited to such change detection problems.
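The sketch below shows how an ensemble fuses its members using majority voting, one of the simplest fusion rules. It is applied to per-object change labels produced by several classifiers. The labels are hypothetical, and the cited urban change detection work may use a more elaborate fusion scheme.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-object labels from several classifiers by majority vote.

    predictions: list of label lists, one list per classifier, all of the
    same length. Ties go to the label seen first in classifier order
    (Counter.most_common keeps first-encountered order for equal counts).
    """
    n_objects = len(predictions[0])
    fused = []
    for i in range(n_objects):
        votes = Counter(classifier[i] for classifier in predictions)
        fused.append(votes.most_common(1)[0][0])
    return fused
```

Voting only helps when the members see the same kind of evidence. If the two dates come from different sensors, every member inherits the same modality mismatch, which is the weakness noted above.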

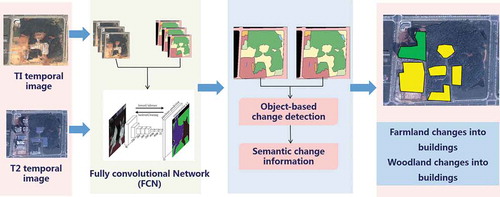

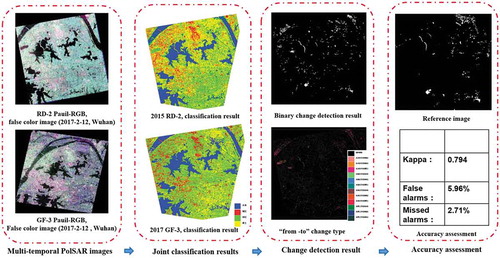

Methods that combine AI with other approaches for 2D change detection yield a more robust evaluation of each pixel. For example, one change detection algorithm combines deep-architecture-based unsupervised feature learning with mapping-based feature change analysis (Zhang et al. 2016). Its learned mapping function bridges the different representations of the two images and highlights changes. Daudt, Saux, and Boulch (2018) developed three fully convolutional neural network architectures that perform change detection on a pair of co-registered images. Two of these are Siamese extensions that integrate two fully convolutional branches. Figure 6 shows an overview of 2D change detection at LIESMARS based on fully convolutional networks. These networks go beyond pixel-based change detection (CD) to discover semantic information and create new knowledge from changed objects. Figure 7 shows an overview of change detection from multi-source SAR sensors at LIESMARS. Together, these results illustrate that AI-based methods can provide high performance for 2D change detection.

3D change detection (Qin, Tian, and Reinartz 2016)

Digital Elevation Models (DEMs) and 3D city models have become more accessible than ever before. Unprecedented advances in acquiring and generating 3D data from spaceborne, airborne, and close-range platforms have likewise made image-based and Light Detection and Ranging (LiDAR)-based point clouds widely available. Moreover, 3D change detection has attracted attention for its high measurement accuracy. It also supports dynamic visualization well suited to decision support (Taneja, Ballan, and Pollefeys 2013; Yang, Fang, and Li 2013). Traditional 3D change detection methods rely on separate bundle adjustment processes and manual intervention. This leads to spatial co-registration errors and high false alarm rates.

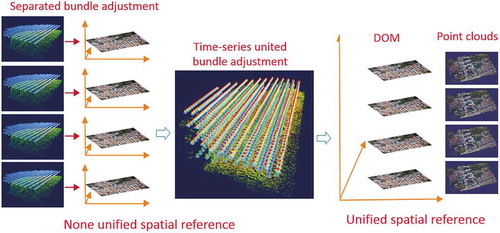

Integrating AI enables automatic 3D change detection with high accuracy and robustness across multi-source sensors. Li et al. (2017b) propose a novel bundle adjustment strategy, called united bundle adjustment (UBA), for co-registering multi-temporal UAV images. UBA automatically achieves high co-registration accuracy. This extends the capacity of consumer-level UAVs to meet the growing need for automatic building change detection and dynamic monitoring using only RGB images. Meanwhile, deep-learning-based multi-stereo and multi-view matching algorithms are used for change detection in 3D point clouds. Figure 8 shows an overview of 3D change detection on time-series multiple UAV images (Li et al. 2017b).
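Once the epochs are co-registered, a common baseline for 3D building change detection is to difference the two DSMs. Cells with a significant height change are then grouped into candidate objects. In the cited work, UBA performs this co-registration. The sketch below implements only that generic differencing baseline, not the UBA pipeline or the deep-learning matching described above. The 2.5 m height threshold and 3-cell minimum region size are hypothetical parameters.

```python
from collections import deque


def building_changes(dsm_before, dsm_after, min_height=2.5, min_cells=3):
    """Flag 3D changes by differencing two co-registered DSM grids.

    Cells whose absolute height difference exceeds `min_height` are grouped
    into 4-connected regions with the same sign of change; regions smaller
    than `min_cells` are discarded as noise. Returns a list of
    (sign, sorted_cells): sign +1 = new/raised, -1 = demolished/lowered.
    """
    rows, cols = len(dsm_before), len(dsm_before[0])
    diff = [[dsm_after[r][c] - dsm_before[r][c] for c in range(cols)]
            for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or abs(diff[r][c]) <= min_height:
                continue
            sign = 1 if diff[r][c] > 0 else -1
            # Breadth-first flood fill over neighbours with the same change sign.
            queue, cells = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                cr, cc = queue.popleft()
                cells.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc]
                            and abs(diff[nr][nc]) > min_height
                            and (diff[nr][nc] > 0) == (sign > 0)):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(cells) >= min_cells:
                regions.append((sign, sorted(cells)))
    return regions
```

Residual misregistration between epochs shows up as thin false-change slivers along building edges. This is why accurate co-registration matters so much for this step.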

Figure 8. Overview of 3D change detection on time-series multiple UAV images (Li et al. 2017b).

5. Abnormal target and event detection

Abnormal target and abnormal event detection are vital in various applications such as earthquake warnings, fire detection, and urban traffic supervision. In the field of GSIS, numerous publications focus on such tasks (Liu 2000; Solheim, Hogda, and Tommervik 1995; Mercier and Girard-Ardhuin 2006). Traditional anomaly detection algorithms can be divided into three categories: model-based, proximity-based (distance-metric), and density-based techniques.

Model-based techniques establish a data model and train its parameters on known samples. In subsequent predictions, abnormal points are those that do not fit the model well. Proximity-based techniques define a proximity measure between objects. Anomalies are then objects that lie far away from most other objects. When the data can be rendered in a two- or three-dimensional scatter plot, distance-based outliers can be detected visually. Density-based techniques estimate local density, which is straightforward to compute when a proximity measure between objects is available. Objects in low-density regions are relatively far from their neighbors and may be considered anomalies.
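The proximity-based category can be sketched in a few lines. Each object is scored by its distance to its k-th nearest neighbour, so objects far from most others receive high scores. A density-based method such as the Local Outlier Factor would go one step further and compare this distance with the same quantity for the object's neighbours. The function name and the choice of k are assumptions. The brute-force search is O(n²), which suits only small illustrative data sets.

```python
import math


def knn_outlier_scores(points, k=2):
    """Proximity-based anomaly score: distance to the k-th nearest neighbour.

    points: list of coordinate tuples of equal dimension.
    Returns one score per point; larger scores indicate likelier anomalies.
    """
    if not 1 <= k < len(points):
        raise ValueError("k must be between 1 and len(points) - 1")
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores
```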

With recent advances in AI, many researchers (Ravanbakhsh et al. 2018; Wei et al. 2018) have proposed deep-learning-based algorithms for abnormal target and abnormal event detection. In GSIS, high-resolution remote sensing images and videos enable abnormal target and event detection. This in turn supports numerous disaster reconstruction and rescue operations. Published results (Deng et al. 2017; Liang et al. 2018) and various projects demonstrate that combining AI and GSIS can provide anomaly detection for military and civilian users. Figure 9 shows a result of post-earthquake collapsed-house extraction at LIESMARS based on multi-feature, multi-kernel learning. This result provides vital information for earthquake reconstruction.

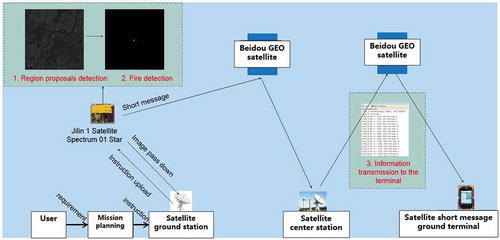

"On-orbit intelligent processing technology" is one of the projects under China's National Key Research and Development Program, led by Wuhan University. The team developed a satellite on-orbit processing system based on deep learning and GSIS. It can automatically identify, search for, and locate forest fire points and surface vessels from orbit. The system integrates BeiDou short-message transmission, providing real-time processing and transmission capabilities anywhere in the world. On 21 March 2019, the on-orbit processing system independently identified a forest fire in the Mekong River Basin. It extracted the fire information in orbit in only 2.02 s, and transmitting the short message from the camera to the ground terminal took only 13 s. Figure 10 shows an overview of the forest fire emergency rescue system based on satellite on-orbit processing. The system uses infrared remote sensing data to detect fires and relays this information to the ground through the BeiDou satellite network.
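The on-orbit system described above is deep-learning based. For readers unfamiliar with infrared fire detection, the sketch below shows a classic, much simpler alternative: a contextual threshold test on a brightness-temperature grid. A pixel is flagged if it is very hot in absolute terms, or if it is much hotter than its local background. This is not the algorithm flown on the satellite. The thresholds (360 K, 3 standard deviations, 1 K minimum background spread) and window radius are hypothetical values.

```python
import statistics


def detect_fire_pixels(bt, abs_threshold=360.0, k=3.0, radius=1, min_std=1.0):
    """Flag candidate fire pixels in a brightness-temperature grid (kelvin).

    Hypothetical contextual test: a pixel is flagged if it exceeds
    `abs_threshold`, or if it is hotter than the mean of its neighbours
    (centre excluded) by more than k standard deviations. `min_std` keeps
    a perfectly uniform background from flagging tiny fluctuations.
    Returns a list of (row, col) tuples in row-major order.
    """
    rows, cols = len(bt), len(bt[0])
    fires = []
    for r in range(rows):
        for c in range(cols):
            value = bt[r][c]
            if value > abs_threshold:
                fires.append((r, c))
                continue
            neighbours = [bt[rr][cc]
                          for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                          for cc in range(max(0, c - radius), min(cols, c + radius + 1))
                          if (rr, cc) != (r, c)]
            if not neighbours:
                continue
            mean = statistics.fmean(neighbours)
            std = max(statistics.pstdev(neighbours), min_std)
            if value > mean + k * std:
                fires.append((r, c))
    return fires
```

Only the flagged coordinates, not the image, need to be sent to the ground. This is why a compact short-message channel can be sufficient for alerting.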

6. Earth Observation Brain (EOB) and Smart Geo-spatial Service (SGSS)

Artificial intelligence is associated with brain science, cognitive science, psychology, statistics, and computer science. Progress in brain science and cognitive science strongly influences the development of AI, and researchers in many disciplines strive to build machines similar to the human brain. In GSIS, combining theoretical knowledge with cognitive science will raise the level of intelligence across the entire geo-spatial information network. With brain science and SGSS, remote sensing can use a variety of spatial information brains to automate three processes:

- massive geo-spatial data acquisition;
- intelligent geo-spatial data processing and mining;
- rapid geo-spatial data-driven responses.

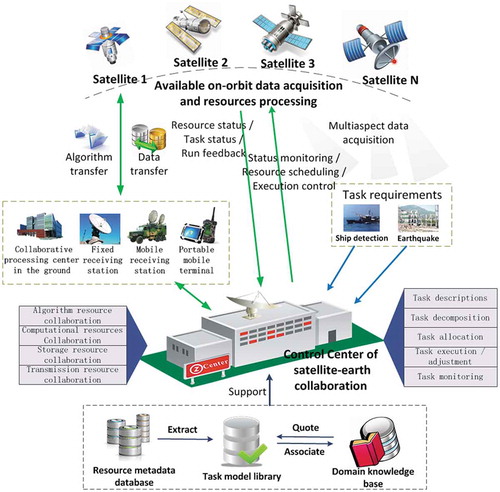

Satellite sensors and intelligent onboard processing systems can be regarded as an Earth Observation Brain (Li et al. 2017a). Images are acquired and processed quickly, and useful information is automatically extracted and sent directly to end users. Figure 11 shows the EOB concept.

Figure 11. The concept of EOB (Li et al. 2017a).

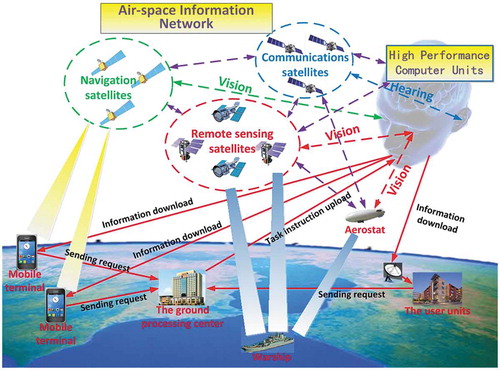

This figure illustrates how a human brain obtains information from the surrounding environment through vision, hearing, and other senses. The information is then transmitted along neurons to the left and right hemispheres. The hemispheres analyze the surrounding environment and thus guide behavior. Like the human brain, the EOB can achieve on-board sensing, cognition, and transmission. In addition, the EOB concept enables a satellite–ground collaborative processing system for task-driven remote sensing imagery. Figure 12 shows an overview of this system.

The EOB has three objectives:

EOB is an intelligent Earth observation system that simulates the cognitive process of the brain. It integrates geo-spatial information science, computer science, data science, and brain cognition science. Through this integration, EOB will deliver real-time sensing, object extraction, target cognition, change detection, and information transmission for quick responses.

EOB is a space–air–ground integrated information network linking remote sensing and navigation satellites, communication airships, and aircraft. The EOB will automatically process and analyze the information through on-board image processing and satellite–ground collaborative cloud computing. In this way, it will deliver useful information and knowledge to real-time users.

EOB is a smart service system for geo-spatial information retrieval that will send the right data, information, and knowledge to the right person at the right time and place.

7. An Internet + Spaceborne Information Real-time Service System (PNTRC)

An Internet + Spaceborne Information Real-time Service System (PNTRC) is proposed, with the following features:

About 500 high- and low-Earth-orbit communication, navigation, and remote sensing satellites will form a spaceborne information network with on-board image processing.

The intelligent remote sensing information service can reach a spatial resolution of 0.5 m, with a revisit cycle shorter than 5 min.

The real-time navigation and positioning accuracy can be sub-meter.

Global mobile communication: global coverage for speech, video, and image communications.

With PNTRC, any end user can obtain real-time responses and services through a smartphone. The Luojia satellites 1–3 have been undergoing tests toward this goal over the past two years.

In recent years, China has also prioritized the development of brain and cognitive science. The "National Long-Term Scientific and Technological Development Plan (2006–2020)" recognized "brain science and cognitive science" as one of eight frontier scientific fields. In 2012, the Chinese Academy of Sciences launched a Strategic Priority Research Program (Category B), the "Brain Function Link Map", which laid the basis for a future "Brain Science Plan". In the future, the "Brain Science Plan" will promote the development of brain science in China, brain disease prevention and control, and artificial intelligence.

8. Conclusions

Many applications in photogrammetry and remote sensing can be solved automatically using AI. Examples include image matching, image target detection, change detection, image retrieval, and the generation of Digital Orthophoto Quads (DOQs) and Digital Surface Models (DSMs). However, many other applications remain difficult to solve with AI. These include generating Digital Elevation Models (DEMs) from DSMs, generating Digital Line Graphs (DLGs) from DOQs and Multispectral Scanner (MSS) images, and generating 3D topological relationships for house models.

In the future, spatial cognition applications will be broadly applied across the Smart Earth. Examples include Earth Observation systems, smart cities, smartphone brains, and the Internet + Spaceborne Information Real-time Service. Additionally, a smart air- and space-borne real-time service system can deliver geo-spatial information to end users' smartphones by integrating the Global Navigation Satellite System (GNSS), remote sensing, and communication.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes on contributors

Deren Li

Deren Li is a professor at the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing, Wuhan University. He was elected a member of the Chinese Academy of Sciences in 1991 and of the Chinese Academy of Engineering in 1994. He received his bachelor's and master's degrees from Wuhan Technical University of Surveying and Mapping in 1963 and 1981, respectively, and his doctoral degree from the University of Stuttgart, Germany, in 1985. He was awarded an honorary doctorate by ETH Zürich, Switzerland, in 2008.

Zhenfeng Shao

Zhenfeng Shao is a professor at the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing, Wuhan University. He received his bachelor's and master's degrees from Wuhan Technical University of Surveying and Mapping in 1998 and 2001, respectively, and his PhD degree from Wuhan University in 2004. His research focuses mainly on urban remote sensing applications. Specifically, he works on high-resolution remote sensing image processing and analysis, and on key technologies and applications ranging from digital cities to smart cities and sponge cities.

Ruiqian Zhang

Ruiqian Zhang is a PhD student at the School of Remote Sensing and Information Engineering, Wuhan University. She received her bachelor's degree in remote sensing science and technology from Wuhan University, Wuhan, China, in 2015. She is currently working toward her PhD degree in photogrammetry and remote sensing at the same school. Her research interests include image/video processing and object detection.

References

- Alberga, V. 2009. “Similarity Measures of Remotely Sensed Multi-sensor Images for Change Detection Applications.” Remote Sensing 1 (3): 122–143. doi:10.3390/rs1030122.

- Chen, J., J. Jiang, X. Zhou, Y. Zhai, W. Zhu, and M. Ding. 2009. “Design of National Geo-spatial Information Service Platform: Overall Structure and Key Components.” Geomatics World 3: 7–11.

- Daudt, R. C., B. L. Saux, and A. Boulch. 2018. “Fully Convolutional Siamese Networks for Change Detection.” The 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, October 7–10.

- Deng, Z., H. Sun, S. Zhou, J. Zhao, and H. Zou. 2017. “Toward Fast and Accurate Vehicle Detection in Aerial Images Using Coupled Region-based Convolutional Neural Networks.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 10 (8): 3652–3664. doi:10.1109/JSTARS.2017.2694890.

- Fan, C. C. 2006. “China’s Eleventh Five-year Plan (2006-2010): From ‘Getting Rich First’ to ‘Common Prosperity’.” Eurasian Geography and Economics 47 (6): 708–723. doi:10.2747/1538-7216.47.6.708.

- Hamet, P., and J. Tremblay. 2017. “Artificial Intelligence in Medicine.” Metabolism 69: 36–40. doi:10.1016/j.metabol.2017.01.011.

- Hussain, M., D. Chen, A. Cheng, H. Wei, and D. Stanley. 2013. “Change Detection from Remotely Sensed Images: From Pixel-based to Object-based Approaches.” ISPRS Journal of Photogrammetry and Remote Sensing 80: 91–106. doi:10.1016/j.isprsjprs.2013.03.006.

- Jiang, L., M. Liao, L. Zhang, and H. Lin. 2007. “Unsupervised Change Detection in Multitemporal SAR Images Using MRF Models.” Geo-spatial Information Science 10 (2): 111–116. doi:10.1007/s11806-007-0051-y.

- Krishnan, A. 2016. “Military Neuroscience and the Coming Age of Neurowarfare.” Routledge. doi:10.1080/01495933.2018.1486100.

- Li, D. 2012a. “The Geo-spatial Information Science Mission.” Geo-spatial Information Science 15 (1): 1–2. doi:10.1080/10095020.2012.708142.

- Li, D. 2012b. “China’s First Civilian Three-line-array Stereo Mapping Satellite: ZY-3.” Acta Geod. Cartogr. Sin 41 (3): 317–322. doi:10.1007/s11783-011-0280-z.

- Li, D., and Z. Shao. 2009. “The Era of New Geographic Information.” Science in China (F: Information Science) 39 (6): 579–587.

- Li, D., Z. Shao, and X. Yang. 2011. “Theory and Practice from Digital City to Smart City.” Geospatial Information 6: 002.

- Li, D., M. Wang, Z. Dong, X. Shen, and L. Shi. 2017a. “Earth Observation Brain (EOB): An Intelligent Earth Observation System.” Geo-spatial Information Science 20 (2): 134–140. doi:10.1080/10095020.2017.1329314.

- Li, D., Y. Yao, Z. Shao, and L. Wang. 2014. “From Digital Earth to Smart Earth.” Chinese Science Bulletin 59 (8): 722–733. CNKI:SUN:JXTW.0.2014-08-003.

- Li, G., X. Tang, X. Gao, H. Wang, and Y. Wang. 2016b. "ZY‐3 Block Adjustment Supported by GLAS Laser Altimetry Data." The Photogrammetric Record 31 (153): 88–107. doi:10.1111/phor.12138.

- Li, G., X. Tang, X. Gao, C. Zhang, and T. Li. 2016a. “Improve the ZY-3 Height Accuracy Using ICESAT/GLAS Laser Altimeter Data.” International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Prague, Czech Republic, July 12–19.

- Li, N., X. Huang, F. Zhang, and D. Li. 2015. “Registration of Aerial Imagery and Lidar Data in Desert Areas Using Sand Ridges.” The Photogrammetric Record 30 (151): 263–278. doi:10.1111/phor.12110.

- Li, W., K. Sun, D. Li, T. Bai, and H. Sui. 2017b. "A New Approach to Performing Bundle Adjustment for Time Series UAV Images 3D Building Change Detection." Remote Sensing 9 (6): 625. doi:10.3390/rs9060625.

- Liang, D., Y. Guo, S. Zhang, S. Zhang, P. Hall, M. Zhang, and S. Hu. 2018. “LineNet: a Zoomable CNN for Crowdsourced High Definition Maps Modeling in Urban Environments.” https://arxiv.org/pdf/1807.05696.pdf

- Liang, R., L. Shi, H. Wang, J. Meng, J. J. Wang, Q. Sun, and Y. Gu. 2016. "Optimizing Top Precision Performance Measure of Content-based Image Retrieval by Learning Similarity Function." The 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, December 4–8.

- Liu, D. 2000. “Abnormal Detection on Satellite Remote Sensing OLR before ChiChi Earthquakes.” Geo-information Science 1. doi:10.3969/j.issn.1560-8999.2000.01.009.

- Liu, S. X. 2016. “Innovation Design: Made in China 2025.” Design Management Review 27 (1): 52–58.

- Mahmoodzadeh, H. 2007. “Digital Change Detection Using Remotely Sensed Data for Monitoring Green Space Destruction in Tabriz.” International Journal of Environmental Research 1 (1): 35–41. doi:10.1080/09603120701695593.

- Mei, F. 2009. “Smart Earth and Reading China——Analysis on Development of Internet of Things.” Agriculture Network Information 12: 5–7.

- Mercier, G., and F. Girard-Ardhuin. 2006. “Partially Supervised Oil-slick Detection by SAR Imagery Using Kernel Expansion.” IEEE Transactions on Geoscience and Remote Sensing 44 (10): 2839–2846. doi:10.1109/tgrs.2006.881078.

- Qin, R., J. Tian, and P. Reinartz. 2016. “3D Change Detection–approaches and Applications.” ISPRS Journal of Photogrammetry and Remote Sensing 122: 41–56. doi:10.1016/j.isprsjprs.2016.09.013.

- Radke, R. J., S. Andra, O. Al-Kofahi, and B. Roysam. 2005. “Image Change Detection Algorithms: A Systematic Survey.” IEEE Transactions on Image Processing 14 (3): 294–307. doi:10.1109/tip.2004.838698.

- Ravanbakhsh, M., M. Nabi, H. Mousavi, E. Sangineto, and N. Sebe. 2018. "Plug-and-play CNN for Crowd Motion Analysis: An Application in Abnormal Event Detection." IEEE Winter Conference on Applications of Computer Vision (WACV), Nevada, U.S., March 12–14.

- Russell, S. J., and P. Norvig. 2002. Artificial Intelligence: A Modern Approach. Upper Saddle River, NJ: Prentice Hall.

- Shao, Z., J. Cai, P. Fu, L. Hu, and T. Liu. 2019. “Deep Learning-based Fusion of Landsat-8 and Sentinel-2 Images for a Harmonized Surface Reflectance Product.” Remote Sensing of Environment 235: 111425. doi:10.1016/j.rse.2019.111425.

- Shao, Z., D. Li, and X. Zhu. 2011. “A Multi-scale and Multi-orientation Image Retrieval Method Based on Rotation-invariant Texture Features.” Science China Information Sciences 54 (4): 732–744. doi:10.1007/s11432-011-4207-x.

- Shao, Z., W. Zhou, Q. Cheng, C. Diao, and L. Zhang. 2015. “An Effective Hyperspectral Image Retrieval Method Using Integrated Spectral and Textural Features.” Sensor Review 35 (3): 274–281. doi:10.1108/SR-10-2014-0716.

- Shao, Z., W. Zhou, L. Zhang, and J. Hou. 2014. “Improved Color Texture Descriptors for Remote Sensing Image Retrieval.” Journal of Applied Remote Sensing 8 (1): 083584. doi:10.1117/1.jrs.8.083584.

- Solheim, I., K. A. Hogda, and H. Tommervik. 1995. “Detection of Abnormal Vegetation Change in the Monchegorsk, Russia, Area.” 1995 International Geoscience and Remote Sensing Symposium (IGARSS), Firenze, Italy, July 10–14.

- Taneja, A., L. Ballan, and M. Pollefeys. 2013. "City-scale Change Detection in Cadastral 3D Models Using Images." The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, June 23–28.

- Voženílek, V. 2009. “Artificial Intelligence and GIS: Mutual Meeting and Passing.” IEEE International Conference on Intelligent Networking and Collaborative Systems, Barcelona, Spain, November 4–6.

- Wang, M., B. Yang, D. Li, J. Gong, and Y. Pi. 2017. “Technologies and Applications of Block Adjustment without Control for ZY-3 Images Covering China.” Geomatics and Information Science of Wuhan University 42 (4): 427–433. doi:10.13203/j.whugis20160534.

- Wang, T., G. Zhang, D. Li, X. Tang, Y. Jiang, H. Pan, and X. Zhu. 2014a. “Planar Block Adjustment and Orthorectification of ZY-3 Satellite Images.” Photogrammetric Engineering and Remote Sensing 80 (6): 559–570. doi:10.14358/pers.80.6.559-570.

- Wang, T., G. Zhang, D. Li, X. Tang, Y. Jiang, H. Pan, X. Zhu, and C. Fang. 2013. “Geometric Accuracy Validation for ZY-3 Satellite Imagery.” IEEE Geoscience and Remote Sensing Letters 11 (6): 1168–1171. doi:10.1109/LGRS.2013.2288918.

- Wang, X., Z. Shao, X. Zhou, and J. Liu. 2014b. “A Novel Remote Sensing Image Retrieval Method Based on Visual Salient Point Features.” Sensor Review 34 (4): 349–359. doi:10.1108/SR-03-2013-640.

- Wei, H., Y. Xiao, R. Li, and X. Liu. 2018. “Crowd Abnormal Detection Using Two-stream Fully Convolutional Neural Networks.” The 10th International Conference on Measuring Technology and Mechatronics Automation (ICMTMA), Changsha, China, February 10–11.

- Weng, M., X. Wei, R. Qu, and Z. Cai. 2009. “A Path Planning Algorithm Based on Typical Case Reasoning.” Geo-spatial Information Science 12 (1): 66–71. doi:10.1007/s11806-009-0185-1.

- Xiao, Z., Y. Gong, Y. Long, D. Li, X. Wang, and H. Liu. 2017. “Airport Detection Based on a Multiscale Fusion Feature for Optical Remote Sensing Images.” IEEE Geoscience and Remote Sensing Letters 14 (9): 1469–1473. doi:10.1109/LGRS.2017.2712638.

- Xin, X., D. Li, and H. Sun. 2003. "Multiscale SAR Image Segmentation Using a Double Markov Random Field Model." Seventh International Symposium on Signal Processing and Its Applications, Paris, France, July 4.

- Yang, B., L. Fang, and J. Li. 2013. “Semi-automated Extraction and Delineation of 3D Roads of Street Scene from Mobile Laser Scanning Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 79: 80–93. doi:10.1016/j.isprsjprs.2013.01.016.

- Yang, C., M. Yu, F. Hu, Y. Jiang, and Y. Li. 2017. “Utilizing Cloud Computing to Address Big Geospatial Data Challenges.” Computers, Environment and Urban Systems 61: 120–128. doi:10.1016/j.compenvurbsys.2016.10.010.

- Zhan, Q., X. Zhang, and D. Li. 2008. “Ontology-based Semantic Description Model for Discovery and Retrieval of Geospatial Information.” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Beijing, China, July 3–11.

- Zhang, B. T. 2016. “Humans and Machines in the Evolution of AI in Korea.” AI Magazine 37 (2): 108–112. doi:10.1609/aimag.v37i2.2656.

- Zhang, F., X. Huang, W. Fang, and D. Li. 2013. “Non-rigid Registration of Mural Images and Laser Scanning Data Based on the Optimization of the Edges of Interest.” Science China Information Sciences 56 (6): 1–10. doi:10.1007/s11432-011-4440-3.

- Zhang, G., Y. Jiang, D. Li, W. Huang, H. Pan, X. Tang, and X. Zhu. 2014a. "In-orbit Geometric Calibration and Validation of ZY-3 Linear Array Sensors." The Photogrammetric Record 29 (145): 68–88.

- Zhang, G., T. Wang, D. Li, X. Tang, Y. Jiang, W. Huang, and H. Pan. 2014b. “Block Adjustment for Satellite Imagery Based on the Strip Constraint.” IEEE Transactions on Geoscience and Remote Sensing 53 (2): 933–941. doi:10.1109/tgrs.2014.2330738.

- Zhang, P., M. Gong, L. Su, J. Liu, and Z. Li. 2016. “Change Detection Based on Deep Feature Representation and Mapping Transformation for Multi-spatial-resolution Remote Sensing Images.” ISPRS Journal of Photogrammetry and Remote Sensing 116: 24–41. doi:10.1016/j.isprsjprs.2016.02.013.

- Zhang, Y., M. Zheng, X. Xiong, and J. Xiong. 2014. “Multistrip Bundle Block Adjustment of ZY-3 Satellite Imagery by Rigorous Sensor Model without Ground Control Point.” IEEE Geoscience and Remote Sensing Letters 12 (4): 865–869. doi:10.1109/LGRS.2014.2365210.

- Zhou, W., S. Newsam, C. Li, and Z. Shao. 2017. “Learning Low Dimensional Convolutional Neural Networks for High-resolution Remote Sensing Image Retrieval.” Remote Sensing 9 (5): 489. doi:10.3390/rs9050489.

- Zhou, W., Z. Shao, C. Diao, and Q. Cheng. 2015. “High-resolution Remote-sensing Imagery Retrieval Using Sparse Features by Auto-encoder.” Remote Sensing Letters 6 (10): 775–783. doi:10.1080/2150704X.2015.1074756.

- Zhu, X., and Z. Shao. 2011. “Using No-parameter Statistic Features for Texture Image Retrieval.” Sensor Review 31 (2): 144–153. doi:10.1108/02602281111110004.