Abstract

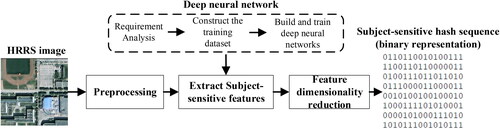

The effective use of high-resolution remote sensing (HRRS) images presupposes that their data integrity and authenticity are guaranteed. This paper proposes a new subject-sensitive hashing algorithm for the integrity authentication of HRRS images. The algorithm uses AGIM-net (Attention Gate-based improved M-net), proposed in this paper, to extract the subject-sensitive features of HRRS images, and a Principal Component Analysis (PCA)-based method to compress and encode the extracted features. AGIM-net is an attention-based improvement of U-net: it adds multi-scale inputs in the encoder stage to extract rich image features, adds multi-scale outputs in the decoder stage, and uses Attention Gates to suppress features irrelevant to the subject, which improves the robustness of the algorithm. Experiments show that the proposed algorithm is more robust than existing algorithms, while its tamper sensitivity and security are essentially equivalent to theirs.

1. Introduction

With the rapid development of earth observation and information technology, high-resolution remote sensing (HRRS) images are widely used in disaster monitoring, urban planning, environmental science, surveying and mapping, and many other fields. However, the effective use of HRRS images presupposes that their data integrity and authenticity are guaranteed. If an HRRS image has been tampered with before it reaches the user, its effective content will be changed or even distorted.

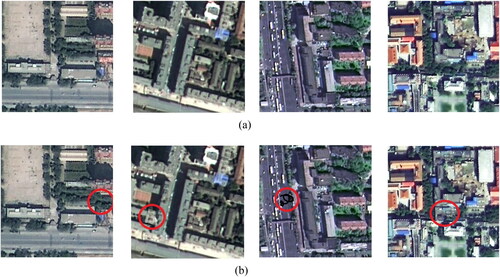

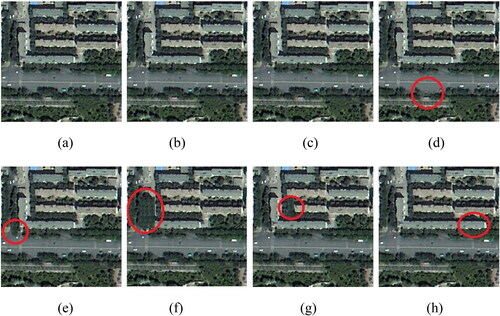

Figure 1 shows a set of examples of tampered HRRS images: for such images, it is difficult for users to determine whether they have been tampered with. If the user relies on a tampered HRRS image, the analysis results will be inaccurate or even wrong; if the user is not sure whether an HRRS image has been tampered with, its use value is greatly reduced. Therefore, HRRS images need to be shared and used on the basis of guaranteed data integrity and authenticity.

Figure 1. Tampering examples of high-resolution remote sensing images. (a) Original images; (b) Tampered images.

To solve the above problems, integrity authentication technology is generally used to ensure data integrity and authenticity. The mainstream authentication methods include cryptographic hash algorithms (Aqeel-Ur-Rehman et al. Citation2016), digital signatures (Sinha and Singh Citation2003), fragile watermarking (Jiang et al. Citation2021), blockchain (Zhou et al. Citation2019) and perceptual hashing (Ding et al. Citation2019; Zhang et al. Citation2020a). Cryptographic hash algorithms and digital signatures authenticate data at the binary level; they are over-sensitive to changes, which limits their applications in the multimedia domain (Karsh et al. Citation2016). Blockchain is mainly implemented with cryptographic techniques and has the same shortcomings as cryptographic hash algorithms. Fragile watermarking modifies the original data to a certain extent, which limits its use in many applications of HRRS images; for example, HRRS images with low redundancy cannot accommodate enough fragile watermark information. Perceptual hashing (Niu and Jiao Citation2008) realizes content-based authentication, which overcomes the above problems to a certain extent. However, as the resolution of HRRS images improves and they are applied in more fields, the performance of existing perceptual hashing algorithms has gradually hit bottlenecks, and new security problems have begun to emerge.

In fact, users in different applications of HRRS images focus on different image content: hydrologists tend to pay more attention to rivers and lakes, researchers in outdoor navigation pay more attention to roads and bridges, and researchers who extract moving targets are more interested in planes, ships and vehicles. In short, the integrity authentication requirements of users in different fields are often tied to specific subjects. However, current perceptual hashing algorithms cannot achieve ‘subject-sensitive’ integrity authentication. Ding et al. (Citation2020) proposed the concept of ‘subject-sensitive hashing’ to deal with this type of authentication problem. As existing subject-sensitive hashing algorithms still have major shortcomings in robustness, in this paper we propose a new subject-sensitive hashing algorithm based on the attention mechanism to improve robustness. The contributions and novelties of this work are as follows:

We propose a deep neural network named AGIM-net, a U-net derivative based on the Attention Gate and a multi-scale strategy. Experiments show that AGIM-net is more suitable for subject-sensitive hashing of HRRS images than existing networks such as the original U-net and AAU-net.

Based on AGIM-net, we propose a new subject-sensitive hashing algorithm for the integrity authentication of HRRS images.

We conduct comprehensive experiments to evaluate the performance of the proposed AGIM-net-based algorithm and compare it with existing methods; the results show that its overall performance is better than that of existing algorithms.

The rest of this article is organized as follows. Section 2 reviews related work. Section 3 describes AGIM-net and the proposed subject-sensitive hashing algorithm in detail. Section 4 presents the experiments and discussion. Finally, Section 5 concludes the paper.

2. Related works

2.1. Perceptual hashing

Perceptual hashing, also known as perceptual hash, maps multimedia data with the same perceptual content to a unique digital digest. Its most important properties are robustness to content-preserving operations and sensitivity to malicious tampering (Karsh et al. Citation2018). Compared with cryptographic hashes, digital signatures and blockchain, perceptual hashing authenticates HRRS images based on their content rather than their carrier.

According to the feature extraction method adopted, perceptual hashing algorithms can be roughly divided into the following categories (Du et al. Citation2020):

Frequency-domain robust feature-based algorithms (Qin et al. Citation2013; Huang et al. Citation2014) are robust to content-preserving operations, but are often not robust enough to geometric transformations.

Statistical feature-based algorithms (Tang et al. Citation2013) achieve a good balance between robustness and distinguishability, but often have major shortcomings in security.

Dimensionality reduction-based algorithms (Sun and Zeng Citation2014; Karsh and Laskar Citation2017; Hosny et al. Citation2018) perform better against geometric attacks, but are not as sensitive to tampering as the first two types.

Feature point-based algorithms (Wang et al. Citation2012b; Pun et al. Citation2018) are strongly resistant to geometric transformation attacks, especially rotation, but are not suitable for HRRS images.

Learning-based algorithms (Strecha et al. Citation2012; Wang et al. Citation2012a) have higher computational complexity and are gradually being replaced by deep learning-based algorithms.

In recent years, some hashing algorithms have used a variety of mixed features to generate hash sequences; for example, a hashing algorithm combining LWT (Lifting Wavelet Transform) and DCT (Discrete Cosine Transform) has also achieved good results.

In the existing research on perceptual hash algorithms for HRRS images, Ding et al. (Citation2019) proposed a perceptual hash algorithm based on an improved U-net, which enhanced robustness while maintaining high tamper sensitivity; Zhang et al. (Citation2020a) combined the global features and local features of HRRS images to generate perceptual hash sequences, which have better tampering localization capabilities.

However, with the improvement of resolution of HRRS images and the integration of geography and cyberspace security, perceptual hashing has gradually been unable to meet the integrity authentication requirements of HRRS images. New theories and methods are urgently needed to solve the problems in HRRS image authentication.

2.2. Subject-sensitive hashing

Subject-sensitive hashing (Ding et al. Citation2020) is a one-way mapping that takes into account the types of objects users focus on and uniquely maps the perceptual content of an image to a digital digest. It can be seen as a special case of perceptual hashing: it inherits the robustness and tamper sensitivity of perceptual hashing, and additionally applies focused integrity authentication to specific types of features. For example, if users mainly use building information from HRRS images, the building information should be authenticated more strictly. What ‘stricter authentication’ means depends on the perceptual feature extraction method adopted by the subject-sensitive hashing algorithm and should not be generalized. In the experiments of this paper, we take buildings as the example subject to describe the algorithm and test its performance.

Although subject-sensitive hashing is difficult to achieve with traditional image processing techniques, the rise of deep learning provides a feasible way: by learning from specific samples, deep neural networks can acquire the ability to extract subject-sensitive features. Compared with traditional methods, deep learning has excellent feature representation ability and can mine essential information hidden in the data (Li et al. Citation2019). Deep learning-based methods learn features automatically from training samples, reduce the complexity of hand-crafted feature design, and show strong potential for enhancing the robustness of subject-sensitive hashing. However, existing deep learning-based hashing techniques (Lu et al. Citation2017; Song et al. Citation2022; Sun et al. Citation2022a, Citation2022b) are mainly oriented to image retrieval, whose requirements differ considerably from those of image authentication, so they cannot be used directly for image authentication.

The framework of a deep learning-based subject-sensitive hashing algorithm is as follows. First, construct a training sample set that meets the requirements of subject-sensitive feature extraction; then, design and train the corresponding deep neural network model; next, extract subject-sensitive features with the trained model, and map and encode the features through dimension reduction to obtain the final hash sequence. In this process, the deep neural network used for feature extraction is the core of the algorithm. Besides MUM-Net (Ding et al. Citation2020), other deep neural networks such as U-net (Ronneberger et al. Citation2015) and MultiResUNet (Ibtehaz and Rahman Citation2020) can also be used for subject-sensitive hashing.

In deep learning, the attention mechanism lets the feature extraction process focus on salient features and suppress irrelevant regions through sample learning, which makes it well suited to extracting subject-sensitive features. Essentially, the attention mechanism is a weight-allocation mechanism: important content receives a higher weight and other content a relatively small weight. It enables the network to dynamically select a subset of input attributes in a given input-output pair setting to improve decision accuracy (Zhao et al. Citation2020a). This allows the attention mechanism to focus on subject-sensitive information, highlighting the features relevant to the prediction and improving prediction accuracy. The attention mechanism has been widely used in image classification (Xing et al. Citation2020; Zhu et al. Citation2021), machine translation (Zhang et al. Citation2020b; Lin et al. Citation2021) and natural language description (Li et al. Citation2021; Ji and Li Citation2020), showing strong application prospects.

Attention U-net (Oktay et al. Citation2018) is an improved U-net based on the attention mechanism. Compared with the original U-net, Attention U-net automatically focuses on regions with salient features during inference without additional supervision or annotation, and adds only minimal parameters and computational overhead. In this paper, we draw on the Attention Gate of Attention U-net and the multi-scale input and output structure of M-net (Adiga and Sivaswamy Citation2019) to propose a deep neural network named Attention Gate-based improved M-net (AGIM-net) for subject-sensitive feature extraction from HRRS images.

3. Methods

3.1. AGIM-net

The core of subject-sensitive hashing lies in the deep neural network model used for subject-sensitive feature extraction, which directly determines the performance of the algorithm. AGIM-net, the U-net variant proposed in this paper, is used to extract the subject-sensitive features of HRRS images.

In the original U-net, part of the spatial information is lost due to pooling and strided operations (Gu et al. Citation2019), and multi-scale input can greatly alleviate the impact of this loss (Fu et al. Citation2018). Inspired by this, AGIM-net builds a multi-scale input (image pyramid) by down-sampling the input image, reducing the loss of spatial information caused by convolution and pooling so that each encoder module can learn richer features.
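A minimal sketch of how such an image pyramid could be built for a 256 × 256 grid cell is shown below. The text does not name the down-sampling operator, so 2 × 2 average pooling is an assumption here, as is the choice of four levels (the input plus one copy per pooling step in the three-level encoder); the function name `image_pyramid` is hypothetical.

```python
import numpy as np


def image_pyramid(image, levels=4):
    """Build a multi-scale input pyramid by repeated 2x2 average pooling.

    Assumptions (not stated in the paper): average pooling is the
    down-sampling operator, and `levels` counts the full-resolution input
    plus one down-sampled copy per encoder pooling step.
    """
    pyramid = [np.asarray(image, dtype=np.float32)]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        # Crop to even height/width so the array splits into 2x2 blocks.
        h, w = prev.shape[0] // 2 * 2, prev.shape[1] // 2 * 2
        prev = prev[:h, :w]
        # Average each non-overlapping 2x2 block (works for 2-D or H x W x C input).
        pooled = prev.reshape(h // 2, 2, w // 2, 2, *prev.shape[2:]).mean(axis=(1, 3))
        pyramid.append(pooled)
    return pyramid
```

For a 256 × 256 input this yields arrays of 256, 128, 64 and 32 pixels per side, one for each encoder level that receives an extra input.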

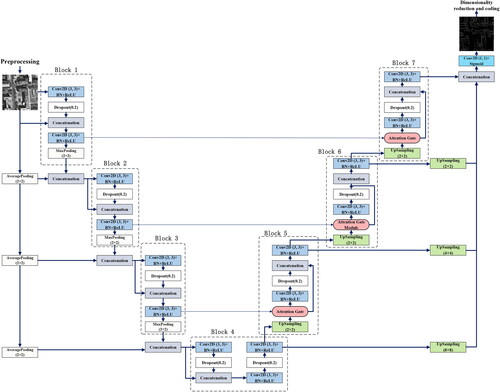

The network structure of AGIM-net is shown above. Overall, AGIM-net adopts a symmetric network structure, but it differs clearly from U-net and Attention U-net: because multi-scale inputs are added in the encoder and multi-scale outputs in the decoder, the overall architecture of AGIM-net is ‘M’-shaped.

The left half of AGIM-net is the encoding path and the right half is the decoding path, which down-sample and up-sample three times, respectively. Each module of the encoding path contains convolution operations, batch normalization (BN) and activation functions, and ends with a pooling operation; each module of the decoding path contains, in addition to convolution operations, BN and activation functions, an Attention Gate (AG) module that connects the encoding path and the decoding path. All layers of the network use the rectified linear unit (ReLU) as the activation function, except the last layer, which uses sigmoid.

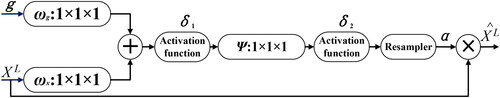

The Attention Gate (AG) plays an important role in AGIM-net: it strengthens the learning of subject-related features and suppresses the learning of subject-unrelated features, so that the extracted subject-sensitive features meet the needs of integrity authentication. The structure of the AG is shown above: g is the gating vector applied to each pixel, which contains contextual information and is used to reduce the response of lower-level features; XL is the input feature map; δ1 and δ2 are two activation functions; α is the attention coefficient related to a specific subject, used to suppress the expression of features unrelated to that subject; and the output feature map is the product of the attention coefficient α and the input feature map XL.

In the AG, the activation functions δ1 and δ2 can be sigmoid, ReLU, ELU, LeakyReLU, PReLU or other activation functions, and different choices lead to different model performance. After repeated experiments, we chose ReLU for δ1 and sigmoid for δ2.
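To make the data flow through the AG concrete, the following NumPy sketch follows the additive form used in Attention U-net, with δ1 = ReLU and δ2 = sigmoid as chosen above. It is an illustration, not the authors' Keras layer: the 1 × 1 convolutions are written as per-pixel matrix products, the gating signal g is assumed to have already been resampled to the spatial size of XL, and all weight names (`w_x`, `w_g`, `psi`, and so on) are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(x, g, w_x, w_g, b, psi, b_psi):
    """Additive attention gate in the style of Attention U-net (illustrative sketch).

    x:     skip-connection feature map XL, shape (H, W, C_x)
    g:     gating signal, shape (H, W, C_g); assumed already resampled to H x W
    w_x:   (C_x, C_int) and w_g: (C_g, C_int), acting as 1x1 convolutions
    b:     (C_int,) bias; psi: (C_int,) weights of the final 1x1 convolution
    b_psi: scalar bias
    Returns the gated feature map and the attention coefficients alpha, shape (H, W, 1).
    """
    inter = relu(x @ w_x + g @ w_g + b)               # delta_1 = ReLU
    alpha = sigmoid(inter @ psi + b_psi)[..., None]   # delta_2 = sigmoid, one coefficient per pixel
    return x * alpha, alpha                           # alpha is broadcast over the channels of XL
```

Because α lies in (0, 1) for every pixel, the gate can only attenuate XL; during training the weights learn to keep α near 1 on subject-related regions and push it toward 0 elsewhere.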

In addition to Attention U-net and our AGIM-net, other researchers have also studied attention-based improvements of U-net. Jiang et al. (Citation2020) proposed Attention M-net, which adds three Squeeze-and-Excitation (SE) blocks each to the encoding path and the decoding path; this differs from the Attention Gate adopted by AGIM-net, and its network structure is quite different from that of AGIM-net. AAU-Net, proposed by Ding et al. (Citation2021), is also an Attention Gate-based improvement of U-net. Its distinctive characteristic is structural asymmetry: the encoding path contains 4 down-sampling layers while the decoding path has only 2 up-sampling layers, so the output feature map is much smaller than the input image.

3.2. Subject-sensitive hashing based on AGIM-net

The subject-sensitive hashing algorithm based on AGIM-net consists of three steps. The first step pre-processes the HRRS image, including grid division and grid cell normalization; the second step extracts the subject-sensitive features of the HRRS image with AGIM-net; the third step, compression coding, performs dimension reduction, compression, coding and encryption on the extracted features to obtain the subject-sensitive hash sequence of the HRRS image. The details are as follows:

Step 1: Pre-processing of HRRS images.

Considering the massive data volume of HRRS images, our algorithm uses quadrilateral grid division to divide an HRRS image into W × H grid cells (W and H are positive integers). Regarding the granularity of grid division, our algorithm follows the conclusions of existing methods: each grid cell should be at least 256 × 256 pixels and smaller than 512 × 512 pixels.

Bilinear interpolation is used to normalize each grid cell to m × m pixel size. In the experiments of this paper, m = 256, which is the size of the input image of AGIM-net. The normalized grid cell is denoted as Rij.
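Step 1 can be sketched as below for a single-band image. The rule for choosing the number of cells is an assumption (the text only bounds the cell size): taking floor(side / 256) rows and columns, with integer cell boundaries, keeps every cell side between 256 and 511 pixels. The bilinear resize uses an align-corners mapping, which is also an assumption; the function names are hypothetical.

```python
import numpy as np


def grid_divide(image, min_cell=256):
    """Split an image into rows x cols cells with sides in [min_cell, 2 * min_cell).

    Assumption (not from the paper): rows = floor(height / min_cell) and
    cols = floor(width / min_cell); with integer boundaries every cell side
    stays between 256 and 511 pixels whenever the image side is at least 256.
    """
    h, w = image.shape[:2]
    rows, cols = max(h // min_cell, 1), max(w // min_cell, 1)
    r_edges = [i * h // rows for i in range(rows + 1)]
    c_edges = [j * w // cols for j in range(cols + 1)]
    return [[image[r_edges[i]:r_edges[i + 1], c_edges[j]:c_edges[j + 1]]
             for j in range(cols)] for i in range(rows)]


def bilinear_resize(cell, m=256):
    """Resize a single-band cell to m x m pixels by bilinear interpolation.

    Uses an align-corners mapping (corner pixels map onto corner pixels);
    the paper does not state which convention it uses.
    """
    h, w = cell.shape
    ys, xs = np.linspace(0, h - 1, m), np.linspace(0, w - 1, m)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    c = cell.astype(np.float64)
    # Interpolate along x on the two neighbouring rows, then along y.
    top = c[np.ix_(y0, x0)] * (1 - wx) + c[np.ix_(y0, x1)] * wx
    bottom = c[np.ix_(y1, x0)] * (1 - wx) + c[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


def preprocess(image, m=256):
    """Step 1: grid division followed by normalization of every cell (Rij)."""
    return [[bilinear_resize(cell, m) for cell in row] for row in grid_divide(image)]
```

For example, a 1000 × 1500 image is split into 3 × 5 cells of roughly 333 × 300 pixels, each then normalized to 256 × 256.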

Step 2: Extract subject-sensitive features of HRRS images based on AGIM-net.

According to the selected subject, construct a training sample set and train AGIM-net. The specific training dataset and training process will be discussed in detail in the experimental section.

For single-band HRRS images, the pre-processed grid cell Rij is input to AGIM-net, and the output feature is denoted as Fij.

For multi-band HRRS images, band fusion is performed first. The fused single-channel image is input to AGIM-net, and the output feature is denoted as Fij.

Step 3: Compression coding.

Our algorithm uses a compression coding method based on principal component analysis (PCA) to process the extracted features Fij. The subject-sensitive feature Fij extracted by AGIM-net is essentially a two-dimensional matrix of pixel values. After PCA decomposition, the first few principal components contain most of the effective content information of Fij, so our algorithm selects the first column of principal components as the compressed subject-sensitive feature.
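A minimal NumPy sketch of this PCA step follows. It assumes that the rows of Fij are treated as observations and its columns as variables, and reads "the first column of principal components" as the score vector on the first principal axis; both readings are assumptions, and `first_principal_component` is a hypothetical name. The sign of a singular vector is arbitrary, so the sketch fixes it explicitly; otherwise two runs on identical features could produce opposite-signed scores and therefore different hash bits.

```python
import numpy as np


def first_principal_component(feature):
    """Project a 2-D feature map onto its first principal axis.

    Assumptions: rows of Fij are observations, columns are variables, and the
    returned score vector (length = number of rows) is the compressed feature.
    The axis sign is fixed so that its largest-magnitude loading is positive.
    """
    f = np.asarray(feature, dtype=np.float64)
    centered = f - f.mean(axis=0, keepdims=True)
    # The rows of vt are the principal axes of the centered data.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    axis = vt[0]
    if axis[np.argmax(np.abs(axis))] < 0:
        axis = -axis
    return centered @ axis
```

For a 256 × 256 feature map this reduces 65,536 values to a 256-element vector.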

Since the low-order bits of the subject-sensitive feature Fij reflect more clearly whether a feature value has changed, and considering the digestibility (compactness) of the hash sequence, we binarize each Fij following the low-bit principle: each value is represented in binary, and its lowest two bits are kept to characterize Fij. The resulting 0-1 sequence is encrypted with the Advanced Encryption Standard (AES) to obtain the hash sequence of the grid cell, denoted as SHij. The hash sequences SHij of all grid cells are concatenated to obtain the final subject-sensitive hash sequence of the HRRS image, denoted as SH.
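The low-bit binarization and concatenation can be sketched as follows. The text does not say how real-valued or negative PCA scores are quantized before their low bits are taken, so rounding the magnitude to the nearest integer is an assumption, as are the function names. AES encryption is left out, as it also is in the experiments of Section 4; under these assumptions a 256-element PCA vector yields 512 bits per grid cell.

```python
import numpy as np


def low_bit_binarize(values, n_bits=2):
    """Keep the n_bits least significant bits of each (rounded) value.

    Assumption: each score is rounded to the nearest integer and its magnitude
    is used, since the paper does not specify how scores are quantized.
    Bits are emitted per value from the higher kept bit down to bit 0.
    """
    ints = np.rint(np.abs(np.asarray(values, dtype=np.float64))).astype(np.int64)
    bits = [(ints >> k) & 1 for k in range(n_bits - 1, -1, -1)]
    return np.stack(bits, axis=-1).reshape(-1).astype(np.uint8)


def concatenate_cells(cell_bits):
    """Join the per-cell sequences SHij (row-major cell order assumed) into SH."""
    return np.concatenate([np.asarray(b, dtype=np.uint8) for b in cell_bits])
```

For instance, the scores 5, 6, -3.2 and 7.6 round to 5, 6, 3 and 8, whose lowest two bits give the sequence 01 10 11 00.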

The above process can also be summarized as follows:

Algorithm 1:

AGIM-net based Subject-sensitive hashing algorithm

Input: An HRRS image

Output: Subject-sensitive hash sequence SH

Step 1 Input an HRRS image

Step 2 Divide the HRRS image into W × H grid cells

Step 3 Normalize each grid cell to m × m pixels and denote it as Rij

Step 4 for each Rij

Fij = AGIM-net (Rij)

SHij = AES ( Binarize ( PCA (Fij) ) ), where Binarize keeps the lowest two bits of each value

end

Step 5 Concatenate all SHij to obtain SH

Step 6 Output the subject-sensitive hash sequence SH

The integrity authentication process of our algorithm is similar to that of existing methods: generate the hash sequence of the HRRS image to be authenticated, compare it with the hash sequence of the original image, and judge the content integrity of the image from the difference. Our algorithm uses the normalized Hamming distance to measure the difference between two hash sequences. Let h1 and h2 be two binary hash sequences of length L; their normalized Hamming distance is:

$$
d(h_1, h_2) = \frac{1}{L} \sum_{i=1}^{L} \left| h_1(i) - h_2(i) \right| \tag{1}
$$

If the normalized Hamming distance between h1 and h2 is less than the preset threshold T, the content of the image grid cell has not changed significantly; otherwise, the content of the grid cell is judged to have been tampered with.
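The comparison in equation (1) and the threshold decision can be sketched in a few lines of NumPy. The function names are hypothetical, and the default T = 0.02 simply mirrors one of the thresholds used in the experiments.

```python
import numpy as np


def normalized_hamming(h1, h2):
    """Normalized Hamming distance of equation (1): the fraction of differing bits."""
    h1 = np.asarray(h1, dtype=np.uint8)
    h2 = np.asarray(h2, dtype=np.uint8)
    if h1.shape != h2.shape:
        raise ValueError("hash sequences must have the same length L")
    return float(np.mean(h1 != h2))


def authenticate(h_original, h_received, threshold=0.02):
    """Return True if the grid cell passes authentication (distance below T)."""
    return normalized_hamming(h_original, h_received) < threshold
```

With L = 100, one flipped bit gives a distance of 0.01 and passes at T = 0.02, while two flipped bits give exactly 0.02 and fail, because the test is strictly "less than".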

4. Experiments and discussion

4.1. Implementation details and performance metric

In the experiments, Python 3.7 is used as the programming language, and the deep learning framework Keras 2.3.1 (with a TensorFlow 1.15.5 backend) is used to implement AGIM-net. For compatibility, the subject-sensitive hashing algorithm is also implemented in Python 3.7.

The hardware consists of an Intel i7-9700K CPU, 32 GB of DDR4 memory and an NVIDIA RTX 2080 Ti GPU (11 GB memory). AGIM-net is trained with a batch size of 4 for 100 epochs, using the Adam optimizer with a learning rate of 1e-5.

Our algorithm takes buildings as the example subject to test its performance. The model is trained on the same dataset as Ding et al. (Citation2020), a composite training sample set built from the WHU building dataset (Ji and Wei Citation2019) combined with manually drawn robust edge features. It contains 3166 pairs of training samples, each 256 × 256 pixels. Since edge pixels make up only a small proportion of each training sample, that is, positive and negative samples are unbalanced, Focal loss (FL) (Lin et al. Citation2017) is used as the loss function. In addition, to facilitate discussion and comparison, the encryption and decryption of the hash sequence are omitted here; this does not affect the evaluation of robustness and tamper sensitivity.
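For reference, the binary form of Focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), can be written as below. The paper does not report its γ and α settings, so the defaults γ = 2 and α = 0.25 (the values recommended by Lin et al.) are assumptions. The sketch uses NumPy for clarity; in a Keras model the same expression would be written with backend tensor operations.

```python
import numpy as np


def binary_focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Mean binary focal loss over all pixels.

    y_true: 0/1 edge labels; y_pred: sigmoid outputs in (0, 1).
    gamma and alpha are assumed values; the paper does not state them.
    """
    y_true = np.asarray(y_true, dtype=np.float64)
    p = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    p_t = np.where(y_true == 1, p, 1.0 - p)               # probability of the true class
    alpha_t = np.where(y_true == 1, alpha, 1.0 - alpha)   # class-balancing weight
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))
```

The (1 - p_t)^γ factor shrinks the loss of pixels that are already classified confidently, so the abundant, easy background pixels contribute little and training concentrates on the sparse edge pixels.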

In the experiment, the following algorithms are used as comparison algorithms to test the performance of our algorithm:

U-net based subject-sensitive hashing algorithm (referred to as ‘U-net based algorithm’);

MultiResUnet based subject-sensitive hashing algorithm (referred to as ‘MultiResUnet based algorithm’);

M-net based subject-sensitive hashing algorithm (referred to as ‘M-net based algorithm’);

Attention ResUNet (Zhao et al. Citation2020b) based subject-sensitive hashing algorithm (referred to as ‘Attention ResUNet based algorithm’);

Attention R2U-Net (Alom et al. Citation2019) based subject-sensitive hashing algorithm (referred to as ‘Attention R2U-Net based algorithm’);

AAU-Net based subject-sensitive hashing algorithm (referred to as ‘AAU-Net based algorithm’);

Attention U-net-based subject-sensitive hashing algorithm (referred to as ‘Attention U-Net based algorithm’).

The implementation process of each comparison algorithm also uses Python 3.7, and the training data set used is the same as that of AGIM-net.

We compare the performance of the algorithms in terms of the following metrics:

Robustness. After an HRRS image undergoes an operation that does not change its content, its hash sequence should not change, or should change by less than a preset threshold.

Sensitivity to Tampering. If the content of an HRRS image is tampered with, its hash sequence should change by more than a preset threshold, that is, the algorithm is sensitive to tampering.

Digestibility. The hash sequence generated by the algorithm should be as short as possible.

Security. The effective content information of the original HRRS image should not be obtainable from the hash sequence.

Computational performance. The algorithm should generate the hash sequence of an HRRS image as quickly as possible.

4.2. Examples of integrity authentication

To intuitively illustrate the integrity authentication process of each subject-sensitive hashing algorithm, this section takes the HRRS images shown in Figure 5 as an example for a preliminary comparison of the algorithms. Figure 5(a) shows the original HRRS image, which is in TIFF format with a size of 256 × 256 pixels; Figure 5(b) to (h) are divided into the following three groups:

Figure 5. Examples of integrity authentication for HRRS image. (a) Original image in TIFF format; (b) Image converted to PNG format; (c) Image after LSB watermark embedding; (d) Subject-unrelated tampering test 1; (e) Subject-unrelated tampering test 2; (f) Subject-related tampering test 1; (g) Subject-related tampering test 2; (h) Subject-related tampering test 3.

Operations that do not change the content of the image. Figure 5(b) and (c) are the image after format conversion (from TIFF to PNG) and the image after watermark embedding (taking LSB watermarking as an example). These two operations do not change the content of the HRRS image, although the image changes dramatically at the binary level;

Subject-unrelated tampering. Figure 5(d) and (e) are two examples of subject-unrelated tampering, in which the image content is tampered with but the tampering is unrelated to buildings;

Subject-related tampering. Figure 5(f), (g) and (h) are three examples of subject-related tampering, in which the tampered content is related to buildings.

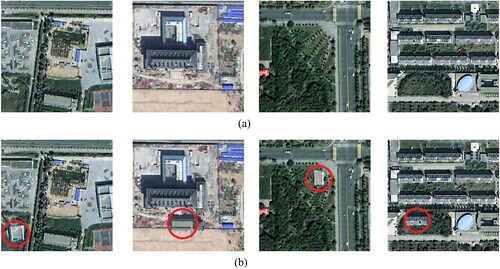

Figure 6. Examples of subject-related tampering (adding buildings). (a) Original images; (b) Tampered images.

Our AGIM-net based algorithm and each comparison algorithm listed in Section 4.1 are used to generate the hash sequences of the images shown in Figure 5, and the normalized Hamming distances between the hash sequences of Figure 5(b) to (h) and that of Figure 5(a) are calculated. The results are shown in Table 1:

Table 1. Normalized Hamming distance of each algorithm.

First, with the threshold T set to 0.02, the integrity authentication results based on Table 1 are shown in Table 2:

Table 2. Integrity authentication results based on Table 1 (threshold T = 0.02).

It can be seen from Table 2 that every algorithm is robust to the operations that do not change the image content, overcoming the deficiency that cryptographic hashing can only authenticate images at the binary level. For subject-related tampering, every comparison algorithm is sufficiently sensitive and detects all three cases. For subject-unrelated tampering, however, the algorithms differ: at T = 0.02, the U-net based, Attention R2U-Net based and Attention U-net based algorithms failed to detect one of the subject-unrelated tampering examples in Figure 5(d) and (e); the MultiResUnet based and Attention ResUNet based algorithms likewise failed to detect one of these examples; and the M-net based, AAU-Net based and AGIM-net based algorithms detected all types of tampering.

An ideal subject-sensitive hashing algorithm should not only detect tampering, but also distinguish between ‘subject-related tampering’ and ‘subject-unrelated tampering’ through different thresholds T. When the threshold is 0.03, the integrity authentication results based on Table 1 are shown in Table 3:

Table 3. Integrity authentication results based on Table 1 (threshold T = 0.03).

It can be seen from Table 3 that after the threshold T is increased, subject-unrelated tampering from Figure 5 passes the authentication of the AGIM-net based algorithm, whereas subject-related tampering is still regarded as malicious by the AGIM-net based algorithm and fails the integrity authentication. Combining the results of Table 2 and Table 3, we can draw the preliminary conclusion that our AGIM-net based algorithm distinguishes well between subject-related and subject-unrelated tampering.

By setting a higher threshold T, other algorithms may also be able to distinguish between subject-related and subject-unrelated tampering. For example, with T set to 0.05, the integrity authentication results based on Table 1 are shown in Table 4:

Table 4. Integrity authentication results based on Table 1 (threshold T = 0.05).

Combining these results, the AGIM-net based algorithm is more in line with the characteristics of an ideal subject-sensitive hashing algorithm, namely that subject-related and subject-unrelated tampering can be distinguished by different threshold settings in integrity authentication. Although the M-net based algorithm can also distinguish between subject-related and subject-unrelated tampering, the distinction is less pronounced than with the AGIM-net based algorithm. The MultiResUnet based algorithm fails to detect subject-unrelated tampering even with a small threshold, indicating insufficient tamper sensitivity.

4.3. Performance of robustness

In this section, the dataset Datasets10,000, used by Ding et al. (Citation2021) in their study of the AAU-Net based subject-sensitive hashing algorithm, is used to compare the robustness of the algorithms. Datasets10,000 contains 10,000 HRRS images in TIF format with a size of 256 × 256 pixels, drawn from sources such as the Gaofen-2 (GF-2) satellite and DOTA (Xia et al. Citation2018).

First, we test the robustness of each algorithm to digital watermark embedding. The Least Significant Bit (LSB) method is used to embed 32 bits of watermark information into each image of Datasets10,000, and the normalized Hamming distance between the hash sequences before and after embedding is calculated.
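A minimal LSB embedding of the kind described can be sketched as follows. Where the watermark bits are placed is not stated, so writing them into the first pixels in raster order is an assumption, and `embed_lsb` and `extract_lsb` are hypothetical names. The sketch makes the point of the test visible: each pixel changes by at most one gray level, so the image content is untouched while its binary representation differs.

```python
import numpy as np


def embed_lsb(image, bits):
    """Write a bit string into the least significant bits of the first len(bits) pixels.

    Assumption: pixels are visited in raster order of the flattened uint8 array;
    the paper does not say where the 32 (or 128) watermark bits are placed.
    """
    img = np.asarray(image, dtype=np.uint8)
    flat = img.reshape(-1).copy()
    bits = np.asarray(bits, dtype=np.uint8) & 1
    if bits.size > flat.size:
        raise ValueError("watermark longer than the image")
    # Clear bit 0 of each target pixel, then set it to the watermark bit.
    flat[:bits.size] = (flat[:bits.size] & 0xFE) | bits
    return flat.reshape(img.shape)


def extract_lsb(image, n_bits):
    """Read back the first n_bits least significant bits in raster order."""
    return np.asarray(image, dtype=np.uint8).reshape(-1)[:n_bits] & 1
```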

Since different thresholds are often set in different integrity authentication applications, testing robustness under various thresholds better characterizes the robustness of an algorithm. Therefore, the experiments describe robustness by the proportion of images whose normalized Hamming distance is below each threshold. The robustness test results of each algorithm for digital watermarking are shown in Table 5:

Table 5. Robustness test comparison of watermark embedding (32 bits embedded).

It can be seen from Table 5 that even at a relatively low threshold (e.g. T = 0.02), every algorithm is highly robust to the embedding of 32 bits of watermark information, and the AGIM-net based, MultiResUnet based and Attention ResUNet based algorithms are even completely robust.
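The robustness figures in these tables are proportions of images whose distance falls below each threshold. A small helper such as the following, whose name and example threshold list are hypothetical, computes them from the per-image normalized Hamming distances; the strict "below" matches the authentication rule in Section 3.2.

```python
import numpy as np


def robustness_ratios(distances, thresholds=(0.01, 0.02, 0.03, 0.05)):
    """Fraction of images whose normalized Hamming distance is below each threshold T."""
    d = np.asarray(distances, dtype=np.float64)
    return {t: float(np.mean(d < t)) for t in thresholds}
```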

Since embedding different amounts of watermark information modifies the image to different degrees, we further test the robustness of each algorithm under 128-bit watermark embedding; the results are shown in Table 6.

Table 6. Robustness test comparison of watermark embedding (128 bits embedded).

It can be seen from Table 6 that as the amount of embedded watermark information increases, the robustness of each algorithm decreases, but only slightly, indicating that every algorithm is robust to digital watermarking.

Next, we test the robustness of each algorithm to Joint Photographic Experts Group (JPEG) compression. Here, we use C++ and call the relevant interface of OpenCV 2.4.13 to compress the images as JPEG at 95% quality. The robustness test results are shown in Table 7.

Table 7. Robustness test comparison of JPEG compression.

As can be seen from Table 7, our AGIM-net based algorithm is the most robust to JPEG compression. The Attention ResUNet and Attention U-net based algorithms are also relatively robust, though less so than the AGIM-net based algorithm, while the Attention R2U-Net based algorithm is relatively weak against JPEG compression and is not recommended for practical use.

Overall, our proposed AGIM-net based subject-sensitive hashing algorithm is more robust than existing subject-sensitive hashing algorithms.

4.4. Performance of sensitivity to tampering

In addition to good robustness, a subject-sensitive hashing algorithm should have good tamper sensitivity, that is, the ability to detect tampering with the content of HRRS images.

First, we test the sensitivity of each algorithm to random content tampering. In each image of Datasets10,000, the content of a 16 × 16 pixel region at a random location is tampered with. The proportion of tampering detected by each algorithm under different thresholds is shown in Table 8.
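The random-location tampering and the detection ratio can be sketched as follows. The assumptions are loud ones: the image is a 2-D list of 8-bit values, the 16 × 16 block is overwritten with random values (the text does not say what content replaces the original), and a tampered image counts as detected when its normalized Hamming distance is at least the threshold, the complement of the "lower than the threshold" criterion used for robustness. Function names are hypothetical, and computing the distances themselves requires the hashing network, which is not shown.

```python
import random

def tamper_random_block(image, block=16, rng=random):
    """Copy a 2-D image (list of rows) and overwrite one block x block region,
    placed uniformly at random, with random 8-bit values (the replacement
    content is an assumption). Returns the tampered copy and its (top, left)."""
    h, w = len(image), len(image[0])
    top = rng.randrange(h - block + 1)
    left = rng.randrange(w - block + 1)
    out = [list(row) for row in image]  # leave the original image untouched
    for y in range(top, top + block):
        for x in range(left, left + block):
            out[y][x] = rng.randrange(256)
    return out, (top, left)

def detection_ratio(distances, threshold):
    """Fraction of tampered images detected, assuming an image is flagged
    when its normalized Hamming distance is >= threshold."""
    return sum(d >= threshold for d in distances) / len(distances)

# Usage: tamper a synthetic 256 x 256 image and report the block corner.
img = [[128] * 256 for _ in range(256)]
tampered, corner = tamper_random_block(img, 16, random.Random(1))
print(corner)
```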

Table 8. Location random tamper sensitivity testing (16 × 16 pixel tampering area).

It can be seen from Table 8 that our AGIM-net based algorithm is basically on par with the existing algorithms: at lower thresholds (such as 0.02 or 0.03), every algorithm except the Attention ResUNet based algorithm shows ideal tamper sensitivity, but at higher thresholds (such as 0.1 or higher) the tamper sensitivity of every algorithm decreases significantly.

Next, we test the tamper sensitivity of each algorithm to noise addition. After 64 white noise points are randomly added to each image in Datasets10,000, the proportions of tampered images detected by each algorithm under different thresholds are shown in Table 9.
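One possible reading of the noise-addition test is sketched below. It assumes that "64 white noises" means 64 distinct, randomly chosen pixels of an 8-bit single-band image set to white (255); both this interpretation and the function name `add_white_points` are assumptions, not taken from the paper.

```python
import random

def add_white_points(image, n_points=64, rng=random, white=255):
    """Return a copy of a 2-D 8-bit image in which n_points distinct,
    randomly chosen pixels are set to white (assumed noise model)."""
    h, w = len(image), len(image[0])
    out = [list(row) for row in image]
    for idx in rng.sample(range(h * w), n_points):  # distinct flat indices
        y, x = divmod(idx, w)
        out[y][x] = white
    return out

# Usage on a synthetic black 256 x 256 image.
noisy = add_white_points([[0] * 256 for _ in range(256)], 64, random.Random(2))
print(sum(v == 255 for row in noisy for v in row))  # 64
```

Sampling without replacement guarantees exactly 64 altered pixels, so every noisy image differs from its source by the same amount.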

Table 9. Tamper sensitivity test for noise addition (64 white noises added).

It can be seen from Table 9 that our AGIM-net based algorithm has the best tamper sensitivity to noise addition; even at a relatively high threshold (such as 0.1), most tampering can still be detected.

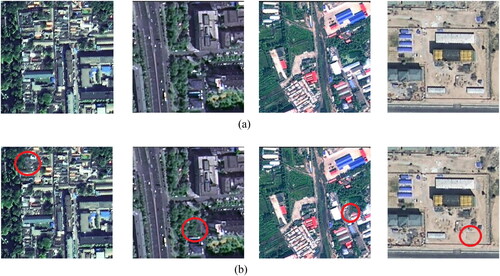

Unlike conventional perceptual hashing, subject-sensitive hashing can perform integrity authentication focused on a specific subject. As there is currently no publicly available dataset for testing subject-related tampering, we use HRRS images from the GaoFen-2 (GF-2) satellite to construct two tampering datasets, one for adding buildings (named DatasetaddBuilding) and one for deleting buildings (named DatasetdeleteBuilding), each containing 100 tampering instances. Figures 6 and 7 show some examples from DatasetaddBuilding and DatasetdeleteBuilding, respectively.

Figure 7. Examples of subject-related tampering (deleting buildings). (a) Original images; (b) Tampered images.

The tamper sensitivity test results of each algorithm for adding buildings and deleting buildings are shown in Tables 10 and 11, respectively.

Table 10. Tamper sensitivity test for subject-related tampering (add buildings).

Table 11. Tamper sensitivity test for subject-related tampering (delete buildings).

It can be seen from Table 10 that at medium and low thresholds (less than or equal to 0.05), every algorithm except the Attention ResUNet based algorithm has good tamper sensitivity to adding buildings. Our AGIM-net based algorithm, the Attention R2U-Net based algorithm and the M-net based algorithm detect all added buildings. At higher thresholds (0.1 or greater), however, the tamper sensitivity of every algorithm begins to decrease.

It can be seen from Table 11 that our AGIM-net based algorithm is only slightly less sensitive to deleting buildings than the Attention R2U-Net based algorithm.

Overall, the tamper sensitivity of our AGIM-net based algorithm is slightly lower than that of the Attention R2U-Net based algorithm, but the Attention R2U-Net based algorithm has the worst robustness, which seriously affects its overall performance.

4.5. Digestibility and security analysis

The hash sequence generated by our algorithm is a 128-bit 0-1 sequence; that is, its digest length is the same as that of MD5 and shorter than that of SHA-1 (160 bits). As every comparison algorithm adopts the same feature coding process, there is no difference in their digestibility.
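The digest-length comparison can be checked with Python's standard `hashlib`: MD5 produces a 16-byte (128-bit) digest and SHA-1 a 20-byte (160-bit) one. The hypothetical helper `to_hex` shows that a 128-bit 0-1 hash sequence packs into 32 hexadecimal characters, the same length as an MD5 hex digest; it illustrates storage size only and says nothing about how the subject-sensitive hash bits are produced.

```python
import hashlib

HASH_BITS = 128  # length of the subject-sensitive hash sequence stated in the text

md5_bits = hashlib.md5().digest_size * 8    # 16 bytes -> 128 bits
sha1_bits = hashlib.sha1().digest_size * 8  # 20 bytes -> 160 bits

def to_hex(bits):
    """Pack a 0-1 sequence (length a multiple of 4) into hex, 4 bits per digit."""
    if len(bits) % 4:
        raise ValueError("bit length must be a multiple of 4")
    return format(int("".join(map(str, bits)), 2), f"0{len(bits) // 4}x")

print(md5_bits, sha1_bits)                           # 128 160
print(HASH_BITS == md5_bits, HASH_BITS < sha1_bits)  # True True
print(len(to_hex([1, 0] * 64)))                      # 32
```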

The security of our proposed AGIM-net based subject-sensitive hashing algorithm mainly depends on the following two aspects:

The difficulty of interpreting deep neural networks. Although this lack of interpretability is an obvious disadvantage in other fields, it helps guarantee the security of subject-sensitive hashing: even if attackers obtain the hash sequence, it is difficult for them to recover the content of the HRRS image from it.

In the compression encoding stage of the algorithm, the AES algorithm is used to encrypt the extracted features. Since the security of AES has long been widely recognized, its use further strengthens the security of our algorithm.

4.6. Compute performance

To test the computational performance of each algorithm under different data volumes, we select 30, 300, 1000 and 10,000 HRRS images from Datasets10,000 to construct four datasets and test each algorithm on them. We evaluate computational performance from two perspectives: total calculation time and average calculation time per image. The comparison results are shown in Table 12.

Table 12. Tests of compute performance.

As can be seen from Table 12, the computational performance of our AGIM-net based algorithm is at a medium level: it is better than that of the MultiResUnet, Attention ResUNet and Attention R2U-Net based algorithms, but inferior to several other algorithms. The reason is straightforward: AGIM-net is an improved version of U-net that adds multi-scale input and attention mechanisms, which inevitably increases model complexity. In addition, since the model must be loaded every time the subject-sensitive hashing algorithm is started, the more HRRS images are processed in a batch, the shorter the average time needed to compute each image's hash sequence, as Table 12 also shows.
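The batch effect described above follows from a simple cost model, sketched here under the assumption that run time is a one-off model-loading cost plus a fixed cost per image. The function name and the timings in the usage lines are hypothetical placeholders, not values from Table 12.

```python
def average_time_per_image(load_time, per_image_time, n_images):
    """Average seconds per image when the model is loaded once and then
    n_images hash sequences are computed (simplified cost model)."""
    if n_images <= 0:
        raise ValueError("n_images must be positive")
    total = load_time + n_images * per_image_time
    return total / n_images

# Hypothetical timings: 5 s to load the network, 0.05 s per image.
for n in (30, 300, 1000, 10000):
    print(n, round(average_time_per_image(5.0, 0.05, n), 4))
```

Under this model the average approaches the per-image cost as the batch grows, because the loading cost is spread over more images.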

4.7. Discussion

Subject-sensitive hashing is an integrity authentication technology derived from perceptual hashing and can be regarded as a special kind of perceptual hashing. A subject-sensitive hashing algorithm needs good robustness and good tamper sensitivity at the same time, even though these two properties are inherently contradictory.

In this paper, we propose a new subject-sensitive hashing algorithm for the integrity authentication of HRRS images. The core of the algorithm is the deep neural network AGIM-net, which extracts features from HRRS images. In AGIM-net, the multi-scale input of the encoder and the multi-scale output of the decoder allow the extracted features to meet the needs of fine-grained tampering detection, and Attention Gates in the decoder extract subject-related features to avoid interference from irrelevant features. Combining the experimental results and analysis in Sections 4.2–4.5, the following conclusions can be drawn:

Robustness. Overall, the AGIM-net based algorithm is more robust than existing algorithms to operations that do not alter image content, such as JPEG compression and digital watermark embedding, especially at low thresholds such as 0.02 or 0.03. Moreover, our algorithm is more robust than those based on other attention-based deep neural network models, including Attention U-net, AAU-Net and Attention ResUNet.

Tamper Sensitivity. Although the Attention R2U-Net based algorithm has the best tamper sensitivity, its robustness is the worst, resulting in poor overall performance. The tamper sensitivity of our AGIM-net based algorithm is inferior to that of Attention R2U-Net, basically the same as that of Attention U-net, and better than that of the other models.

Digestibility and Security. Because the algorithms share a similar feature encoding process, each generates a 128-bit hash sequence, and the security of each comparison algorithm relies on the one-wayness that results from the uninterpretability of deep neural networks and on the security of the encryption algorithm. There is therefore no obvious difference in security or digestibility among the comparison algorithms.

5. Conclusion

In this paper, we propose a subject-sensitive hashing algorithm for HRRS images based on AGIM-net. AGIM-net is an improved M-net based on Attention Gates: in the encoder stage it extracts as much feature information as possible through multi-scale input, and in the decoder stage it extracts subject-related features through Attention Gates, avoiding interference from unnecessary features and thereby enhancing the robustness of the algorithm. In the experiments, our AGIM-net based subject-sensitive hashing algorithm is compared with seven related algorithms, including the M-net based and Attention U-net based algorithms. The results show that the overall performance of the AGIM-net based algorithm is better than that of existing algorithms.

Our future research will focus on further improving the robustness of the algorithm to operations such as JPEG compression, aiming to make it as close to completely robust as possible. We will also focus on building a dataset for testing sensitivity to subject-related tampering.

Data availability statement

The code used in this study is available from the corresponding author upon request.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Adiga VS, Sivaswamy J. 2019. FPD-M-net: fingerprint image denoising and inpainting using M-Net based convolutional neural networks. arXiv preprint arXiv:1812.10191.

- Alom MZ, Yakopcic C, Hasan M, Taha TM, Asari VK. 2019. Recurrent residual U-Net for medical image segmentation. J Med Imaging (Bellingham). 6(1):014006.

- Aqeel-Ur-Rehman LX, Kulsoom A, Ullah S. 2016. A modified (dual) fusion technique for image encryption using SHA-256 hash and multiple chaotic maps. Multimed Tools Appl. 75:11241–11266.

- Ding KM, Chen SP, Wang Y, Liu YM, Zeng Y, Tian J. 2021. AAU-Net: attention-based asymmetric U-Net for subject-sensitive hashing of remote sensing images. Remote Sens. 13:5109.

- Ding KM, Liu YM, Xu Q, Lu FQ. 2020. A subject-sensitive perceptual hash based on MUM-Net for the integrity authentication of high resolution remote sensing images. ISPRS Int J Geo-Inf. 9(8):485.

- Ding KM, Yang ZD, Wang YY, et al. 2019. An improved perceptual hash algorithm based on U-Net for the authentication of high-resolution remote sensing image. Appl Sci. 9:2972.

- Du L, Ho ATS, Cong R. 2020. Perceptual hashing for image authentication: a survey. Signal Process Image Commun. 81:115713.

- Fu H, Cheng J, Xu Y, Wong DWK, Liu J, Cao X. 2018. Joint optic disc and cup segmentation based on multi-label deep network and polar transformation. IEEE Trans Med Imaging. 37(7):1597–1605.

- Gu ZW, Cheng J, Fu HZ, Zhou K, Hao HY, Zhao YT, Zhang TY, Gao SH, Liu J. 2019. CE-Net: context encoder network for 2D medical image segmentation. IEEE Trans Med Imaging. 38(10):2281–2292.

- Hosny KM, Khedr YM, Khedr WI, Mohamed ER. 2018. Robust color image hashing using quaternion polar complex exponential transform for image authentication. Circuits Syst Signal Process. 37:5441–5462.

- Huang L, Tang Z, Yang F. 2014. Robust image hashing via colour vector angles and discrete wavelet transform. IET Image Proc. 8(3):142–149.

- Ibtehaz N, Rahman MS. 2020. MultiResUNet: rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 121:74–87.

- Jiang Y, Zhao CH, Ding W, Hong L, Shen Q. 2020. Attention M-net for automatic pixel-level micro-crack detection of photovoltaic module cells in electroluminescence images. Proceedings of the 9th IEEE Data Driven Control and Learning Systems Conference, Liuzhou, China.

- Jiang L, Zheng H, Zhao C. 2021. A Fragile Watermarking in Ciphertext Domain Based on Multi-Permutation Superposition Coding for Remote Sensing Image. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 p. 5664–5667.

- Ji Z, Li S. 2020. Multimodal alignment and attention-based person search via natural language description. IEEE Internet Things J. 7:11147–11156.

- Ji SP, Wei SY. 2019. Building extraction via convolutional neural networks from an open remote sensing building dataset. Acta Geod Cartographica Sin. 48:448–459.

- Karsh RK, Laskar RH, Aditi. 2017. Robust image hashing through DWT-SVD and spectral residual method. J Image Video Proc. 2017:31.

- Karsh RK, Laskar RH, Richhariya BB. 2016. Robust image hashing using ring partition-PGNMF and local features. Springerplus. 5(1):1995.

- Karsh RK, Saikia A, Laskar RH. 2018. Image authentication based on robust image hashing with geometric correction. Multimed Tools Appl. 77:25409–25429.

- Lin TY, Goyal P, Girshick R, He KM, Dollár P. 2017. Focal loss for dense object detection. IEEE Trans Pattern Anal Mach Intell. 42:2999–3007.

- Lin H, Ren S, Yang Y, Zhang YY. 2021. Unsupervised image-to-image translation with self-attention and relativistic discriminator adversarial networks. Acta Autom Sin. 47(9):2226–2237.

- Li S, Song W, Fang L, Chen Y, Ghamisi P, Benediktsson JA. 2019. Deep learning for hyperspectral image classification: an overview. IEEE Trans Geosci Remote Sens. 57(9):6690–6709.

- Li X, Yuan A, Lu X. 2021. Vision-to-language tasks based on attributes and attention mechanism. IEEE Trans Cybern. 51(2):913–926.

- Lu X, Song L, Xie R, Yang XK, Zhang WJ. 2017. Deep hash learning for efficient image retrieval. IEEE International Conference on Multimedia & Expo Workshops (ICMEW). p. 579–584.

- Niu XM, Jiao YH. 2008. An overview of perceptual hashing. Acta Electronica Sinica. 36(7):1405–1411.

- Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, Mori K, McDonagh S, Hammerla NY, Kainz B, et al. 2018. Attention u-net: learning where to look for the pancreas. arXiv preprint arXiv:1804.03999.

- Pun CM, Yan CP, Yuan XC. 2018. Robust image hashing using progressive feature selection for tampering detection. Multimed Tools Appl. 77(10):11609–11633.

- Qin C, Chang CC, Tsou PL. 2013. Robust image hashing using non-uniform sampling in discrete Fourier domain. Digital Signal Process. 23(2):578–585.

- Ronneberger O, Fischer P, Brox T. 2015. U-net: convolutional networks for biomedical image segmentation. Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, Oct p. 234–241.

- Sinha A, Singh K. 2003. A technique for image encryption using digital signature. Opt Commun. 218(4–6):229–234.

- Song W, Gao Z, Dian R, Ghamisi P, Zhang Y, Benediktsson JA. 2022. Asymmetric hash code learning for remote sensing image retrieval. IEEE Trans Geosci Remote Sens. 60:1–14.

- Strecha C, Bronstein AM, Bronstein MM, Fua P. 2012. LDAHash: improved matching with smaller descriptors. IEEE Trans Pattern Anal Mach Intell. 34(1):66–78.

- Sun Y, Feng S, Ye Y, Li X, Kang J, Huang Z, Luo C. 2022b. Multisensor fusion and explicit semantic preserving-based deep hashing for cross-modal remote sensing image retrieval. IEEE Trans Geosci Remote Sens. 60:5219614.

- Sun Y, Ye Y, Li X, Feng S, Zhang B, Kang J, Dai K. 2022a. Unsupervised deep hashing through learning soft pseudo label for remote sensing image retrieval. Knowl Based Syst. 239:107807.

- Sun R, Zeng W. 2014. Secure and robust image hashing via compressive sensing. Multimed Tools Appl. 70(3):1651–1665.

- Tang Z, Zhang X, Huang L, Dai Y. 2013. Robust image hashing using ring-based entropies. Signal Process. 93(7):2061–2069.

- Wang J, Kumar S, Chang SF. 2012a. Semi-supervised hashing for large-scale search. IEEE Trans Pattern Anal Mach Intell. 34(12):2393–2406.

- Wang X, Xue J, Zheng Z. 2012b. Image forensic signature for content authenticity analysis. J Vis Commun Image Represent. 23(5):782–797.

- Xia GS, Bai X, Ding J, Zhu Z, Belongie S, Luo J, Zhang L. 2018. DOTA: a large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 p. 3974–3983.

- Xing XH, Yuan YX, Meng MQH. 2020. Zoom in lesions for better diagnosis: attention guided deformation network for WCE image classification. IEEE Trans Med Imaging. 39:4047–4059.

- Zhang B, Xiong D, Xie J, Su J. 2020a. Neural machine translation with GRU-gated attention model. IEEE Trans Neural Netw Learn Syst. 31(11):4688–4698.

- Zhang XG, Yan HW, Zhang LM, Wang H. 2020b. High-resolution remote sensing image integrity authentication method considering both global and local features. ISPRS Int J Geo-Inf. 9:254.

- Zhao ZP, Bao ZT, Zhang ZX, Deng J, Cummins N, Wang H, Tao J, Schuller B. 2020a. Automatic assessment of depression from speech via a hierarchical attention transfer network and attention autoencoders. IEEE J Sel Topics Signal Process. 14:423–434.

- Zhao S, Liu T, Liu BW, Ruan K. 2020b. Attention residual convolution neural network based on U-net (AttentionResU-Net) for retina vessel segmentation. IOP Conf Ser: Earth Environ Sci. 440:032138.

- Zhou XM, Li CP, Tang DJ, Song YC, Hu Z, Zhang JS. 2019. Design of remote sensing image sharing service system based on block chain technology. In Proceedings of the IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019. p. 1–4.

- Zhu MH, Jiao LC, Liu F. 2021. Residual spectral–spatial attention network for hyperspectral image classification. IEEE Trans Geosci Remote Sens. 59:449–462.