Abstract

Modern earth observation sensors have revolutionized the remote sensing community by improving remote sensing image quality. However, pixel-based image analysis methods struggle to handle very high-resolution (VHR) imagery. Geographic Object-Based Image Analysis (GEOBIA) has yielded promising results, but it is not flexible enough to capture domain experts’ expressions; geographic information system professionals have therefore turned to ontologies for remote sensing science. This paper advocates for the adoption of knowledge representation using ontologies in remote sensing. To this end, a survey of GEOBIA studies for image analysis and classification is presented, and the limitations of existing methods in reaching the remote sensing expert-level expectation are clarified. New GEOBIA development techniques and opportunities for improving GEOBIA models are examined. Recent studies that adopted ontologies in forest image classification are analyzed, and recommendations for the remote sensing science community are provided to highlight the advantages of ontologies in interpreting satellite images.

1. Introduction

The launch of the first civilian satellite for earth observation (Landsat-1) has significantly transformed the remote sensing science community (Castilla and Hay Citation2008) by making it possible to acquire near real-time, high-quality satellite imagery on demand. Remote sensing substantially simplifies the automated study of urban, suburban, and natural environments for applications such as monitoring urban expansion, detecting changes, crop prediction, forestation/deforestation, surveillance, human activities, and mining Qin and Liu (Citation2022). Satellite earth observation sensors, coupled with the evolution of web services, have tremendously improved access to satellite images, and principal agencies such as the National Aeronautics and Space Administration (NASA), United States Geological Survey (USGS), Brazilian National Institute for Space Research (INPE), and Group on Earth Observation (GEO) have ensured that large amounts of data are freely available to users (Arvor et al. Citation2013). The advent of modern remote sensors has improved the quality of the remote sensing images made available to the community of users. Supervised pixel-based methods have been widely used for tasks relating to change detection in land use as well as multi-temporal land cover mapping Lu et al. (Citation2013). However, traditional pixel-based methods are unable to handle images produced by very high resolution (VHR) satellite sensors (Castilla and Hay Citation2008), that is, imagery with a pixel size below 5 m. Pixel-based methods have been heavily criticized for treating information as a digital number, i.e. how bright each pixel in an image is, without conveying the spatial concepts of neighborhood, homogeneity, and proximity Souza-Filho et al. (Citation2018).
Machine learning (ML) is a sub-branch of artificial intelligence whose algorithms are designed to learn from data in order to predict corresponding outputs. Land cover classification has become a popular research topic in remote sensing, where both pixel-level classification and boundary mapping are considered. Machine learning classifiers such as classification and regression trees (CART) Xiang et al. (Citation2008), Random Forest (RF) Breiman (Citation2001), and Support Vector Machines (SVM) Cortes and Vapnik (Citation1995) have proved to perform well and have therefore been widely used in land cover classification. CART works by predicting a target variable using decision rules inferred from data features. The advantages of CART for land cover applications include its simple, explicit, and intuitive classification structure based on a set of ‘if-then’ rules. Because CART is by nature a non-parametric model, it can also be trained with any set of inputs without the need to adjust any parameters. CART was the first machine learning classifier to be used in land cover classification Pal and Mather (Citation2003). However, CART has issues with computational complexity, high variable correlation, and noise from data collection and calibration, which can negatively affect classification performance and efficiency Zhang and Yang (Citation2020).
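
As a minimal sketch of how a CART-style ‘if-then’ rule can be inferred from data, the following Python fragment searches for the threshold on a single feature that minimizes the weighted Gini impurity. The feature values (labeled here as NDVI), the class names, and the function names are illustrative assumptions and do not come from the cited studies; a full CART implementation would apply this search recursively over all features.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best rule 'if value <= threshold'."""
    best = (None, float("inf"))
    # The largest value is skipped because it would leave the right branch empty.
    for t in sorted(set(values))[:-1]:
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best


# Hypothetical per-pixel vegetation index values and reference labels.
ndvi = [0.10, 0.15, 0.20, 0.60, 0.70, 0.80]
cover = ["bare", "bare", "bare", "forest", "forest", "forest"]

threshold, impurity = best_threshold(ndvi, cover)
print(f"if NDVI <= {threshold}: bare, else: forest (impurity {impurity})")
```

The resulting rule is directly readable by a domain expert, which is the interpretability advantage noted above.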

The SVM, first introduced in 1995 by Cortes and Vapnik (Zhang et al. Citation2020), is a classification algorithm that defines a hyperplane so as to maximize the margin, that is, the distance between the separating hyperplane and the closest samples. The main advantage of SVM is its relative insensitivity to the amount of training data, which makes it suitable for cases where training samples are limited Foody and Mathur (Citation2004). Liu et al. (Citation2006) employed SVM to perform forest disease classification on 1-meter resolution airborne images. Van der Linden and Hostert (Citation2009) adopted SVM to map land cover in urban areas using airborne imagery with a resolution of 4 meters. Asma and Abdelhamid (Citation2020) proposed a novel approach for the classification of VHR remote sensing images by combining pixel-based and object-based classification techniques. A super-pixel algorithm was employed to group pixels into batches, usually referred to as segments. Super-pixels were then merged into more significant objects by using the metric distance between all neighboring segments. The resulting image was classified using SVM into regions for water, trees, grass, and rocks. ML algorithms are also employed to detect changes, e.g. forest change detection. For instance, SVM and genetic algorithms can be combined to detect land cover changes. In this case, the radial basis kernel and associated parameters such as C and Ω for SVM are optimized using a genetic algorithm. This hybrid approach produced efficient results when implemented on the Mexico dataset and Sardinia dataset Pati et al. (Citation2020). The challenge encountered with the SVM classifier is the selection of kernel parameters, which is done through a computationally intensive cross-validation process.
The Radial Basis Function (RBF), based on the Gaussian function, is the most widely used non-linear kernel function in SVM. Tuning an RBF kernel is challenging because it involves defining appropriate value ranges for each parameter and determining the best combination through cross-validation. Another problem is that the performance of SVM-RBF decreases whenever the number of features is much greater than the number of training samples.
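
To make the cost of this parameter search concrete, the sketch below evaluates a Gaussian RBF kernel and enumerates a candidate grid of parameter pairs. The kernel width is written as `gamma` here, which is an assumption about notation (the text above writes Ω); the candidate values and the five-fold setting are likewise illustrative and are not taken from the cited studies.

```python
import math
from itertools import product


def rbf_kernel(x, y, gamma):
    """Gaussian RBF kernel: exp(-gamma * squared Euclidean distance)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


# Hypothetical candidate ranges for the penalty C and the kernel width gamma.
C_values = [0.1, 1.0, 10.0, 100.0]
gamma_values = [0.001, 0.01, 0.1, 1.0]
grid = list(product(C_values, gamma_values))

# Each (C, gamma) pair must be trained and scored once per fold.
folds = 5
n_fits = len(grid) * folds

similarity = rbf_kernel([0.0, 0.0], [1.0, 1.0], gamma=0.5)
print(f"{len(grid)} parameter pairs, {n_fits} model fits, k = {similarity:.4f}")
```

Even this small grid requires 80 model fits, which illustrates why alternatives to exhaustive search, such as the genetic algorithm mentioned above, are attractive.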

Just like SVM, RF is a non-parametric classifier. The RF classifier is a bagging algorithm that uses a set of decision trees and classifies each instance by majority vote. RF is computationally efficient and is capable of handling high-dimensional data without over-fitting. Therefore, it has been successfully used in land cover mapping using VHR imagery. Adelabu et al. (Citation2014) successfully used an RF classifier for insect defoliation classification, and Van Beijma et al. (Citation2014) classified forest habitats on 2-meter resolution airborne imagery. Cuypers et al. (Citation2023) employed an RF classifier on VHR optical imagery to improve object recognition for GEOBIA land use and land cover (LULC) classification. The study identified ten LULC classes on a satellite image of the city of Nice, France, obtained from Google Earth Engine. The study investigated the impact of adding Gray-Level Co-occurrence Matrix (GLCM) texture information and spectral indices, and the results showed that classification accuracy improved from 67.05 to 74.30%. However, the RF classifier is very difficult to visualize and interpret in detail, and it has been shown to overfit on noisy datasets.
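
The GLCM texture information mentioned above can be illustrated with a short sketch. The fragment below counts how often pairs of gray levels co-occur at a fixed pixel offset and derives the contrast statistic from those counts. The 4 × 4 patch, the horizontal offset, and the function names are assumptions chosen for illustration; they do not reproduce the configuration of the cited study.

```python
def glcm(image, levels, dx=1, dy=0):
    """Count co-occurrences of gray levels (i, j) at pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    return counts


def contrast(counts):
    """GLCM contrast: squared gray-level difference weighted by pair frequency."""
    total = sum(sum(row) for row in counts)
    return sum(
        ((i - j) ** 2) * n / total
        for i, row in enumerate(counts)
        for j, n in enumerate(row)
    )


# Hypothetical image patch quantized to four gray levels (0..3).
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]

matrix = glcm(patch, levels=4)
texture_contrast = contrast(matrix)
print(matrix, round(texture_contrast, 4))
```

Statistics of this kind can be appended to the spectral features of each image object before classification.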

Deep neural networks are gaining wide recognition in the fields of semantic segmentation He, Zhang et al. (Citation2016) Szegedy et al. (Citation2017), image classification He, Zhang, et al. (Citation2016) Szegedy et al. (Citation2017), and object detection Redmon et al. (Citation2016). This technology has quickly spread to remote sensing image applications; in particular, semantic segmentation has been widely used for land cover classification. With the aim of increasing accuracy in pixel-level land cover classification, Dong et al. (Citation2020) designed a feature ensemble network (FE-Net) comprising multi-scale feature encapsulation and two enhancement phases. The first phase adopts three layers of features (shallow, middle, and deep-scale) from the ResNet-101 backbone, and the second is the multi-scale feature description enhancement. Optimal channel selection was also applied to each intra-scale and inter-scale feature sequentially. The model achieved classification accuracies of 68.08 and 65.16% on the ISPRS and GID data sets, respectively. In the same vein, Zhou et al. (Citation2023) proposed EG-UNet, an enhancement model for open pit mining land cover with irregular and sparse spatial distribution features. The model is composed of two main modules: the edge feature enhancement module and the long-range information extraction module. Since the edges of mine land contain more detailed information than other spectral locations, the Sobel operator was used to extract object boundaries, which increases the weight of edge features so that they are preserved before the pooling operation. The information extraction module’s purpose is to extract tiny objects such as dumping grounds in the mining area. The EG-UNet model recorded the best performance, particularly on classes with few samples.
However, existing deep learning approaches in the area of remote sensing are still in their infancy and therefore lack a holistic approach Zhang et al. (Citation2020). Also, deep learning models are black boxes: their network structure is so complex that it is very difficult to understand how they make decisions. Domain experts therefore cannot be certain whether the model has learned correct knowledge, which undermines users’ confidence in deep learning models Sarker (Citation2021).
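
The Sobel-based boundary extraction mentioned for EG-UNet can be sketched in isolation. The fragment below applies the two standard 3 × 3 Sobel kernels to a small single-band tile and returns the gradient magnitude. The tile values are hypothetical, border pixels are simply left at zero, and the code does not reproduce how the cited model integrates edge features into its network.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges


def sobel_magnitude(image):
    """Gradient magnitude of a 2-D image; border pixels are left at 0.0."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * pixel
                    gy += SOBEL_Y[i][j] * pixel
            out[r][c] = math.hypot(gx, gy)
    return out


# Hypothetical tile with a vertical boundary between two land cover types.
tile = [[0, 0, 10, 10] for _ in range(4)]
edges = sobel_magnitude(tile)
print(edges[1])
```

Pixels adjacent to the boundary receive a large response while homogeneous areas stay at zero, which is what allows edge locations to be given extra weight.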

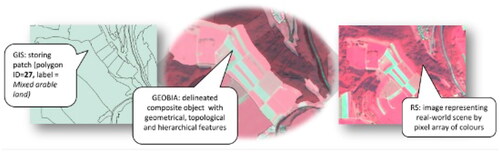

New methods such as Geographic Object-Based Image Analysis (GEOBIA) have been of significant importance to the remote sensing science community. GEOBIA offers several advantages over pixel-based methods. It can generate a large set of features by deriving objects from the textural, spectral, and spatial properties of a group of pixels Souza-Filho et al. (Citation2018). The ability of GEOBIA to process images with a very high spatial resolution has led to its promotion as a tool for monitoring land cover and land use changes in agricultural, forested, and urban areas Tompoulidou et al. (Citation2016). The pixel-based approach ignores the fact that pixels are not isolated, but rather knitted together into a complex image with spectral patterns (Castilla and Hay Citation2008). It has been shown for VHR imagery that individual pixels are too small to refer to a land cover class; a footprint big enough to represent recurring elements such as forests is therefore required (Blaschke and Strobl Citation2001). GEOBIA was introduced to provide answers to problems faced by pixel-based methods (Blaschke and Strobl Citation2001). GEOBIA is a branch of Geographic Information Science that aims to bridge the gap between the pixel and vector worlds (Castilla and Hay Citation2008; Blaschke Citation2010). GEOBIA, however, is heavily criticized for being excessively subjective because it approximates a degree of computer-aided photo interpretation Arvor et al. (Citation2019). As a result, GEOBIA rules are not transferable, as they correspond to those of image processing chains. Therefore, GEOBIA is not suited to address issues related to the era of big data Arvor et al. (Citation2013). Ontologies, which provide a way of representing knowledge, offer great potential to address such problems.
They are able to represent numerical and symbolic knowledge, provide cognitive semantic reasoning capabilities, and exchange information on the deduced interpretation of remote sensing images. In Artificial Intelligence, an ontology is defined as the formal, explicit specification of a shared conceptualization. In this definition, (1) formal means that the rules are expressed in a way that can be executed by computers, (2) explicit means that the definitions of all concepts and relations are clear and unambiguous, and (3) shared means that the definitions of all concepts and relations are commonly agreed upon by a community in the knowledge domain. Formal ontologies provide shared definitions of concepts and associated relations to allow computer applications to communicate with each other Gruber (Citation1995). They define the domain knowledge by expressing concepts and the relationships that bind them together (e.g. ‘a woodland is-a-kind-of forest type’, ‘an orchard is-a-kind-of artificial vegetation’, etc.). With respect to description logic (DL), the advantages that ontologies bring to remote sensing science applications include:

Symbolic grounding. It precisely associates concepts with the right sensing data and also provides for valid associations between concepts. DL ontologies translate low-level representations into high-level representations that can be easily assimilated by human beings.

Knowledge sharing. Standardization of ontology language and use of consensual conceptualization allow mechanisms for remote sensing image representations to be shared and reused by intelligent agents in the same domain.

Reasoning. The description logic in ontology language provides a reasoning capacity that helps to infer new knowledge from existing explicit descriptions.
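
The reasoning advantage listed above can be illustrated with a deliberately simplified sketch: a few explicit ‘is-a-kind-of’ statements are stored, and further subsumption facts are derived from them by following the chain of parent concepts. Only the woodland and orchard statements come from the examples in the text; the remaining concepts and the function names are hypothetical, and a real system would use an ontology language with a description logic reasoner rather than a dictionary.

```python
# Explicit 'is-a-kind-of' statements (child concept -> parent concept).
# Only the first and third entries mirror the examples in the text;
# the others are hypothetical additions for illustration.
IS_A = {
    "woodland": "forest",
    "forest": "natural_vegetation",
    "orchard": "artificial_vegetation",
    "natural_vegetation": "vegetation",
    "artificial_vegetation": "vegetation",
}


def ancestors(concept, is_a=IS_A):
    """Return every concept that subsumes `concept`, nearest parent first."""
    result = []
    while concept in is_a:
        concept = is_a[concept]
        result.append(concept)
    return result


def is_kind_of(concept, candidate):
    """True if `candidate` subsumes `concept` through a chain of is-a links."""
    return candidate in ancestors(concept)


# 'woodland is a kind of vegetation' is never stated, yet it can be inferred.
print(ancestors("woodland"))
print(is_kind_of("orchard", "vegetation"), is_kind_of("orchard", "forest"))
```

The inferred facts are traceable to the explicit statements that produced them, which is what distinguishes this style of knowledge from the black-box behavior of learned models.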

The rest of the paper is organized as follows. Sections 2 and 3 outline the current challenges with forest classification using VHR images and the existing methods to handle VHR images, respectively. GEOBIA studies, challenges, and new developments are then considered in Sections 4–6, respectively. In Section 7, the use of ontologies for remote sensing image classification is explored. Section 8 proposes a state-of-the-art ontology-based model for forest image classification. Future directions and recommendations are highlighted in Section 9, and Section 10 concludes the paper with a summary of findings.

2. Challenges with forest cover classification with VHR images

There are three major issues with using remote sensing (RS) images for forest cover classification with VHR data: (i) scaling up well-trained classifiers from a single dataset leads to huge domain gaps across scenes and geographical locations; (ii) a lack of balanced, consistent, and high-quality training data hinders the development of accurate classifiers; and (iii) inter-class similarity and intra-class variability reduce classification accuracy.

2.1. Domain gaps across scenes and geographical locations

Transferability is a desired feature of trained models: a transferable model still achieves satisfactory results on data collected by different sensors in different geographical locations with a variety of land patterns, compared with its results on the actual training data. Applications related to computer vision have outstanding transferability, hence they are widely used in different domain setups Yosinski et al. (Citation2014). Tasks such as semantic segmentation and prediction on outdoor crowd-sourced images generalize well because their prediction outcome is largely determined by the structure of the scene as seen in a ground-view image Li and Snavely (Citation2018). However, in RS images, the content of different parts of the images may vary greatly and is thus completely unstructured, and atmospheric effects create even greater variations in object appearance, let alone the drastic change of land patterns across different geographical regions (e.g. urban vs. suburban, tropical vs. frigid). Therefore, transferability remains one of the main challenges when trying to scale up classification capabilities. To address this challenge, Fang et al. (Citation2019) proposed the Geometric-consistent Generative Adversarial Network (GcGAN), which eliminates discrepancies between labeled and unlabeled images without losing their intrinsic land cover information by translating labeled feature images from the source domain to the target domain. Another approach is the adoption of transfer learning models in remote sensing science applications; these techniques are able to produce a generalizable classifier by minimizing gaps in the feature space Qin and Liu (Citation2022). These methods can be applied to data collected from a variety of sensors in a variety of geographical locations.

2.2. Lack of balanced, consistent, and high-quality training data

More training data are needed because both the amount of VHR data and the complexity of the models are growing. Traditional manual labeling methods, which were mostly used when processing coarse resolution data (like MODIS, Landsat, and Sentinel) Cai et al. (Citation2014) or VHR data from a small area of interest (AOI), are not optimal and are no longer feasible as models shift to deep learning, which needs more data. To solve this problem, researchers have tried to obtain training data from many different sources, such as crowd-sourcing services (like Amazon Mechanical Turk) [24] and public datasets (like OpenStreetMap) Haklay and Weber (Citation2008). On the one hand, these extra datasets make it much easier to train high-accuracy classifiers; on the other hand, they introduce the common training data problems explained below.

Imbalanced training samples: When the classes or categories in the training data contain differing numbers of images or samples, the model generally performs poorly in prediction. Traditional manual labeling approaches handled this because samples were drawn on purpose and reassembled afterward for shallow classifiers. However, for deep learning-based models, all available training data are often fed into a network, regardless of how balanced they are. To tackle the imbalance problem in VHR images, Sun et al. (Citation2020) developed an impartial semi-supervised learning approach based on the extreme gradient boosting algorithm (ISS-XGB). ISS-XGB incorporates several semi-supervised classifiers to solve the multi-class classification problem. The model first employs multi-group unlabeled data to suppress the imbalance of training samples and then uses extreme gradient boosting regression to simulate the target classes with positive and unlabeled samples.
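
A simpler and widely used way to counter class imbalance, distinct from the ISS-XGB approach described above, is to weight each class inversely to its frequency so that rare classes contribute as much to the training loss as common ones. The sketch below computes such weights; the class names and sample counts are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical, strongly imbalanced set of training labels.
labels = ["forest"] * 80 + ["water"] * 15 + ["bare"] * 5
weights = inverse_frequency_weights(labels)

for cls, weight in weights.items():
    print(f"{cls}: {weight:.3f}")
```

With these weights, each class has the same total weight across its samples, so the rare `bare` class is no longer dominated by `forest`.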

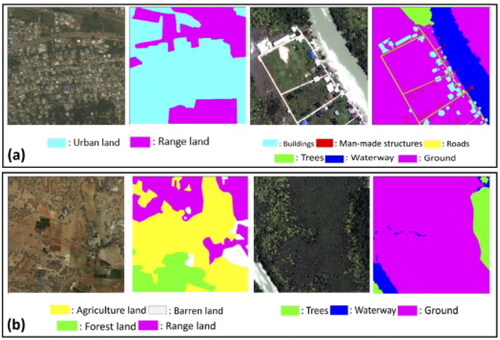

Inconsistent training samples: Researchers in the RS community who want to do semantic segmentation are now able to use more crowd-sourced or public datasets Demir et al. (Citation2018) Schmitt et al. (Citation2021). But the class definitions and level of detail in these crowd-sourced datasets or public benchmark datasets may not be the same. Figure 1 shows an example of a more detailed classification that separates buildings from other man-made structures. Some datasets define the ground class as including low vegetation, grass, and barren land, while others separate the ground into rangeland with low vegetation and barren land. So, the first problem with using this kind of data is figuring out how to change or improve their labels to fit the specific needs and details of the classification task. Inconsistency is also caused by using different types of remote sensing data Sui et al. (Citation2020) and by having a data set with images consisting of different numbers of bands. To address discrepancies in VHR data, Jin et al. (Citation2022) proposed a multi-source data fusion technique that requires re-sampling to unify the spatial resolution. The technique filters training samples and can offer product correction at a fine resolution. A superpixel algorithm was adopted to correct unreliable information from multiple products and produce a new land cover fusion product. The technique achieved an accuracy of 85.80% on Landsat images.

Lack of quality training data: The majority of present machine learning models in RS tend to underestimate their accuracy because they are contaminated by low-quality training data, as shown in Schmitt et al. (Citation2021). Low-quality data remain an obstacle for learning algorithms despite active efforts to address the issue, for example by providing the community with new sample data. Techniques such as image enhancement and restoration help to deal with the lack of quality data. Image enhancement techniques such as histogram equalization and linear congruent adjustment improve image quality by balancing parameters such as contrast, brightness, and sharpness Kundu (Citation2022). Image restoration techniques remove artifacts such as noise or blur from images.

Figure 1. Inconsistencies of the class definition and level of details (Qin and Liu Citation2022).

2.3. Intra-class variability and inter-class similarity for VHR data

VHR data with a ground resolution of a meter or less have improved earth observation by providing more detailed information. The increased resolution has also increased intra-class variability and inter-class similarity: based on spectral information alone, a pixel can be assigned to multiple land cover classes, and different classes may contain pixels with similar spectral signatures Qin (Citation2015). Possible solutions to this problem, such as object-based approaches or spatial-spectral characteristics Ghamisi et al. (Citation2015), have been researched extensively, but the advances could not keep up with the higher resolution and volume of data. The problem may therefore become more challenging when more sophisticated (and complex) models are used with larger numbers of annotated datasets. To tackle inter-class similarity and intra-class variance, Venkataramanan et al. (Citation2021) developed a model that automatically picks the classes to be clustered and determines an optimal number of classes to be generated. The obtained clusters are treated as independent classes. Inter-class similarity is dealt with by employing a triplet loss function to separate the features of each class. Zhang et al. (Citation2022) proposed a technique that tackles intra-class variance by developing a machine-learning model that organizes input instances as a graph. From the obtained graph, a normalized cut surrogate metric is used to measure intra-class variance within the training batch. A feature aggregation scheme is proposed by considering the equivalence between the normalized cut and random walk, and is developed under the guidance of transition probability. By supervising the aggregated features, transition probabilities are constrained to create a graph partition consistent with the given labels, so that the normalized cut and intra-class variance are well suppressed.
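
The triplet loss mentioned above can be written in a few lines. It compares an anchor feature vector with a positive example of the same class and a negative example of another class, and is zero only when the negative is farther from the anchor than the positive by at least a margin. The sketch below uses plain Euclidean distance; the margin value and the feature vectors are illustrative assumptions, and some formulations use squared distances instead.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """max(0, d(anchor, positive) - d(anchor, negative) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Hypothetical 2-D features: the positive shares the anchor's class.
anchor, positive = [0.0, 0.0], [0.0, 1.0]

well_separated = triplet_loss(anchor, positive, negative=[3.0, 4.0])
too_close = triplet_loss(anchor, positive, negative=[0.0, 1.5])
print(well_separated, too_close)
```

Minimizing this quantity over many triplets pushes the features of different classes apart, which is how inter-class similarity is reduced.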

3. Proposed methods to handle VHR remote sensing images

The main obstacles to current VHR RS image classification are those already discussed. In addition to improving model performance, efforts have been made to solve these issues using multi-source/multi-resolution data, unlabeled data, more noise-tolerant models, and learning techniques. These initiatives can be generally described as follows:

Weakly/Semi-supervised learning for small, imprecise, and incomplete samples.

Weak supervision assumes that the underlying training data are inexpensive to collect (like publicly available GIS data) but noisy, imprecise, and asymmetrical. Because these methods attempt to incorporate heuristics, limitations, and error distributions that are unique to each situation, they are not universal. Semi-supervised learning in RS assumes the existence of a large amount of unlabeled data and relies on limited training data to achieve high classification performance; it is thus relevant to applications that deal with crowd-sourced labels or labels with minor temporal differences Larochelle (Citation2020). Li et al. (Citation2017) proposed zero-shot scene classification (ZSSC), which can recognize images from unseen scene classes even when labeled data are incomplete. The approach uses word2vec, a natural language processing model, to map the names of seen and unseen scene classes to semantic vectors. The relationships between seen and unseen classes are defined with the help of a semantic-directed graph constructed from the semantic vectors. To transfer knowledge from seen classes to unseen classes, an initial label prediction on a test image is performed, and a label propagation algorithm is then developed for ZSSC. A label refinement approach is adopted to suppress noise in the zero-shot classification results. The approach outperformed state-of-the-art learning models in scene classification.
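
The idea of relating unseen classes to seen ones through semantic vectors can be sketched as follows. Each class name is represented by a vector, and an unseen class is linked to its most similar seen classes by cosine similarity, which is one plausible way to obtain the edges of a directed semantic graph. The three-dimensional vectors below are made-up stand-ins for word2vec embeddings, and the function names and the choice of two neighbors are assumptions; the sketch does not reproduce the label propagation of the cited method.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy vectors standing in for word embeddings of class names (hypothetical).
seen = {
    "forest": [0.9, 0.1, 0.0],
    "river": [0.0, 0.2, 0.9],
    "farmland": [0.6, 0.7, 0.1],
}
unseen = {"orchard": [0.7, 0.6, 0.0]}


def nearest_seen(name, k=2):
    """Return the k seen classes most similar to an unseen class name."""
    scores = {cls: cosine(unseen[name], vec) for cls, vec in seen.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(nearest_seen("orchard"))
```

Knowledge learned for the linked seen classes can then be propagated to the unseen class along these edges.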

Transfer Learning and domain adaptation to fill domain gaps.

Within machine learning, transfer learning (TL) rests on the presumption that knowledge gained from completing one task may be valuable when transferred to another task. A model that learns to conduct per-pixel semantic segmentation of scenes, for instance, has the potential to make human detection more accurate. In the field of RS, the term mostly refers to methods that aim to produce a generalizable classifier by minimizing gaps in the feature space. These methods can be applied to data collected from a variety of sensors in a variety of geographical locations. Pan et al. (Citation2016) designed a multi-layer transfer learning model that caters to specific latent features for domain adaptation. First, the model generates specific latent features, which are then combined into one latent feature space layer. Since the layers have different pluralism, multiple layers are generated to correspond to each distribution layer. The difference in pluralism between layers means that learning distributions from one layer helps learn distributions on other layers. An iterative algorithm based on Non-negative Matrix Tri-Factorization was adopted to solve the optimization problem. The multi-layer transfer learning model outperformed state-of-the-art methods on sentiment classification tasks.

Use of low-resolution images or public GIS data as sources of labeled or partially labeled data.

Nearly 80% of the world’s GIS data coverage is provided by OpenStreetMap, although its quality varies Barrington-Leigh and Millard-Ball (Citation2017), and some local governments make their GIS data available for public use. Researchers presented their work in this context and drew conclusions that were directly linked to the datasets. Additionally, as low-resolution labeled data with global coverage are gradually becoming more complete (e.g. National Land Cover Database Homer et al. (Citation2012)), these low-resolution labels can be used as a general guide to address domain gaps across locations when scaling up the land cover classification of VHR data. Wu et al. (Citation2019) developed an effective unsupervised deep feature algorithm for classifying low-resolution images. The approach does not require any fine-tuning of the ConvNet filters, which are used to extract features from both high- and low-resolution images; the obtained features are fed into a two-layer feature transfer network for knowledge transfer. The network can transfer discriminative features from a high-resolution feature space to a low-resolution feature space. The model was implemented on the VOC2007 dataset and showed significant improvement over baseline methods.

Fusion of multi-modality and multi-view data. Unlabeled data sources such as Light Detection and Ranging (LiDAR), Synthetic Aperture Radar (SAR), and nighttime data can be used to study heuristics and improve latent representation learning Qin and Liu (Citation2022). Lei et al. (Citation2021) proposed a multi-modality and multi-scale attention fusion network for land cover classification of VHR images. The multi-modality fusion was designed on the basis of an encoding-decoding network that eliminates redundant features and fuses only useful features, which improves the classification of land cover products. A novel multi-scale spatial context enhancement module was adopted to improve feature fusion and alleviate the problem of large-scale variation of objects. The model was implemented on the Vaihingen and Potsdam datasets and obtained F1-scores of 88.6 and 92.3%, respectively.

3.1. Semisupervised learning (SSL) methods

One of the major challenges in RS classification is that collecting labeled training samples for VHR images is a tedious task. The RS science community has therefore adopted SSL methods to tackle this challenge Yin et al. (Citation2014); Bazi et al. (Citation2012). SSL works by trying to extract a wealth of information from the available unlabeled data, despite having few labeled data, with the aim of improving the performance of the classifier. Such approaches assume that points within the same structure are likely to have the same label Wang et al. (Citation2015). In VHR images it seems reasonable to assume that samples with close spectral information are likely to have similar labels. SSL methods have been successfully used in image classification applications such as vegetation mapping, land cover mapping, and urban planning Kwak and Kim (Citation2023). Fan et al. (Citation2020) proposed a semi-supervised multi-Convolutional Neural Network (CNN) ensemble learning method (Semi-MCNN) for urban land cover classification. The model combined the multi-CNN ensemble approach and a semi-supervised strategy to build an end-to-end architecture. This hybrid approach generally improves classification accuracy and generalization ability. The purpose of the semi-supervised technique was to leverage unlabeled images alongside labeled samples, and ensembled teacher model dataset generation (EMDG), an automatic sample selection technique, was adopted to select appropriate samples and to generate large datasets from unlabeled samples automatically. The model was implemented on Shenzhen’s land cover data and achieved an overall accuracy of 92.5%. Ekanayake et al. (Citation2018) developed a semi-supervised approach for mapping boundaries between two vegetation zones using satellite hyperspectral data.
The approach employed the Maximum Likelihood Classification technique to detect pure vegetation pixels. To determine the boundary between the two major vegetation zones, the technique considers the degree of correlation of pixels containing vegetation at various spatial coordinates. Finally, a systematic procedure comprising Fisher’s Discriminant Analysis (FDA) and spectral clustering is used to divide the vegetation pixels into two vegetation zones.
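
The assumption that spectrally close samples share a label can be turned into a simple pseudo-labeling step, sketched below. Each class is summarized by the centroid of its labeled spectra, and an unlabeled sample receives the label of the nearest centroid only if it lies within a distance threshold. The two-band spectra, the threshold, and the function names are hypothetical, and the sketch is a generic self-training step rather than any of the cited methods.

```python
def centroid(points):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def pseudo_label(labeled, unlabeled, max_sq_dist):
    """Label each unlabeled sample with its nearest class centroid,
    keeping only samples that fall within max_sq_dist of that centroid."""
    centroids = {cls: centroid(pts) for cls, pts in labeled.items()}
    accepted = []
    for x in unlabeled:
        cls = min(centroids, key=lambda c: sq_dist(x, centroids[c]))
        if sq_dist(x, centroids[cls]) <= max_sq_dist:
            accepted.append((x, cls))
    return accepted


# Hypothetical two-band reflectance samples (red, near-infrared).
labeled = {
    "vegetation": [[0.05, 0.50], [0.07, 0.54]],
    "water": [[0.03, 0.02], [0.05, 0.04]],
}
unlabeled = [[0.06, 0.50], [0.04, 0.05], [0.30, 0.30]]

new_samples = pseudo_label(labeled, unlabeled, max_sq_dist=0.01)
print(new_samples)
```

The third sample is rejected because it is far from both centroids; accepted samples can be added to the training set and the classifier retrained.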

3.2. Deep learning approaches

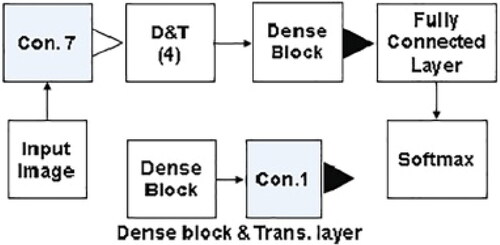

Deep learning technologies have been widely used to perform multi-class segmentation on VHR images Sertel et al. (Citation2022). The number of classes to segment should be carefully examined prior to the application of deep learning technologies. Yuan et al. (Citation2021) conducted a critical review of semantic segmentation using deep learning methods. The findings showed that segmentation of VHR datasets such as ISPRS Vaihingen (five classes), ISPRS Potsdam (five classes), and Massachusetts (two classes) achieved high accuracy, ranging from 85 to 99%. Audebert et al. (Citation2018) developed an efficient multi-scale deep fully convolutional network based on ResNet and SegNet with multi-modal inputs to perform segmentation on high-resolution remote sensing data. The results showed that the fusion of multi-modal data significantly increases the accuracy of semantic segmentation, an improvement attributed to the network's capability to learn multi-modal features jointly. Fu et al. (Citation2017) integrated atrous convolution into a Fully Convolutional Network (FCN) to build a multi-scale network architecture for semantic segmentation of VHR images obtained from the GF-2 and IKONOS datasets. Conditional Random Fields were also added to the network in order to refine the output class maps. The model performed well, obtaining precision, recall, and kappa values of 0.81, 0.78, and 0.83, respectively. Other developments such as the Densely Connected Convolutional Network (DenseNet) Huang et al. (Citation2017) and ShuffleNet Zhang et al. (Citation2018) have been extended to remote sensing segmentation to address issues of computational complexity, and these designs are producing satisfactory performance in semantic segmentation of remote sensing data Chen, Fu et al. (Citation2018). DenseNet is an extension of ResNet that introduces extra connections from each layer to its subsequent layers, which increases information flow and feature reuse Huang et al. (Citation2017). 
The building block of DenseNet is the dense block, which is made up of stacked layers of two filters (a 3 × 3 followed by a 1 × 1 filter). The dense blocks are interconnected with a 1 × 1 convolutional layer for feature dimensionality reduction. The network structure alleviates the vanishing gradient problem and enables feature reuse. Figure 2 shows the DenseNet architecture.

Figure 2. DenseNet architecture (Yuan et al. Citation2021).
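The feature reuse described above can be made concrete by counting channels. The sketch below assumes the usual DenseNet convention that each layer receives the concatenation of all earlier feature maps and contributes a fixed number of new ones (the growth rate). The function name and the example numbers are illustrative only.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return the input channel count seen by each layer and the block's output channels."""
    inputs = []
    channels = in_channels
    for _ in range(num_layers):
        inputs.append(channels)   # the layer sees all earlier feature maps concatenated
        channels += growth_rate   # and appends growth_rate new feature maps
    return inputs, channels


# Illustrative values: 64 input channels, growth rate 32, four layers.
per_layer_inputs, out_channels = dense_block_channels(64, 32, 4)
```

Because the channel count grows linearly with depth, a 1 × 1 convolution between dense blocks is a natural place to reduce dimensionality again.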

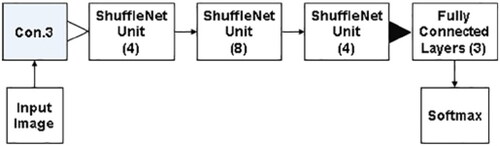

ShuffleNet Zhang et al. (Citation2018) significantly reduces computational cost by lowering the computation complexity of 1 × 1 convolutions, and utilizes channel shuffle to help information flow across feature channels. The group convolution divides the computation into individual shares to be processed by the GPU, and the output is reorganized into a matrix whose rows correspond to the groups and whose columns correspond to the channels in each group. A depthwise convolution is used instead of a standard 3 × 3 convolution. The second group convolution restores the channel dimensionality to match the residual for concatenation. Figure 3 shows the ShuffleNet architecture.

Figure 3. ShuffleNet architecture (Yuan et al. Citation2021).
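The channel shuffle step can be sketched on a plain list of channel indices: arrange the channels as a matrix with one row per group, transpose it, and flatten it again. This is a minimal illustration rather than a network layer, and the function name is an assumption.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups: reshape to (groups, n), transpose, flatten."""
    if len(channels) % groups:
        raise ValueError("channel count must be divisible by the number of groups")
    per_group = len(channels) // groups
    # One row per group, each holding that group's consecutive channels.
    rows = [channels[g * per_group:(g + 1) * per_group] for g in range(groups)]
    # Reading the matrix column by column mixes channels from different groups.
    return [rows[g][k] for k in range(per_group) for g in range(groups)]


shuffled = channel_shuffle(list(range(6)), groups=2)
```

After the shuffle, each group in the next group convolution receives channels that originated in every group of the previous one, which is what lets information cross group boundaries.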

3.3. Multi-resolution data classification

The multi-resolution data classification technique considers different levels of information granularity when analyzing task data. It is widely used to perform classification tasks on data such as VHR images, graphs, and time series. It works by extracting patterns or features from images at different resolutions and integrating them into the classification. Duarte et al. (Citation2018) developed a multi-resolution feature fusion approach for classifying building images using CNNs. This approach integrates feature maps produced from different resolution levels (terrestrial, aerial, satellite) in order to categorize damage to buildings from remote sensing images. The results of the study demonstrated that multi-resolution fusion techniques outperform the traditional methods in classifying building images, with 89% compared to 84%. Using multi-resolution features produces better accuracy and localization capability than using single-resolution features. Teruggi et al. (Citation2020) proposed a hierarchical machine learning approach for multi-resolution 3D point cloud classification. The study extended machine learning approaches with a multi-level and multi-resolution strategy. The integration of the hierarchical concept optimized 3D classification results and improved the learning process. The multi-level and multi-resolution procedure was tested and assessed on two large datasets (the Pomposa Abbey and Milan Cathedral, both in Italy). The model managed to identify the necessary architectural classes at each geometric resolution. Network structures fixed at a single resolution struggle to characterize surface targets that have bright colors and different shapes with fixed sizes. To address this challenge, Cong et al. (Citation2022) proposed a structure defined by sample characteristic (SDSC) multi-resolution classification network that learns samples using a multi-resolution strategy and the principle of maximum classification probability. 
In order to improve the credibility and classification accuracy, the results obtained from the multi-resolution strategy were integrated into the final classification results. The proposed method is suitable for classifying high spatial resolution remote sensing images because of its better cognitive performance and insensitivity to noise.

4. GEOBIA studies

GEOBIA is a remote sensing tool used for land cover mapping and detecting land cover changes. It is a new discipline in remote sensing science that has evolved from pixel-based approaches and has significantly improved the workflow of imagery processing, particularly for land cover classification and detection Arvor et al. (Citation2013). GEOBIA’s main goal is to deal with more complicated classes that are determined by spatial and hierarchical relationships both within and outside of the classification process Lang (Citation2008). Of course, one might perform a multi-spectral classification in an RS system first, then group and rearrange the labeled pixels to construct objects using GIS software. However, the analyst may be skewed by this sequence, which limits the number of classes that may be handled. The outcomes achieved through this process differ from those that would be obtained with a single conceptual step, as is the case with human perception. Instead of examining the spectral behavior of individual pixels, the object-based approach groups adjacent pixels into objects, which then serve as the observation units. This classification circumvents the issue of artificially square objects as used in the per-pixel analysis Fisher (Citation1997); Burnett and Blaschke (Citation2003); Blaschke (Citation2010), so long as the objects of interest cover a sufficient number of pixels to permit a meaningful representation of their shape. GEOBIA has been used in a range of applications such as geo-morphology Drăguţ et al. (Citation2011), agriculture Vogels et al. (Citation2017), archeology research Hegyi et al. (Citation2020), and soil science Dornik et al. (Citation2018). GEOBIA has managed to bridge the gap between remote sensing and Geographic Information Science (GIScience). 
In the GIScience community, the term Object-Based Image Analysis (OBIA) was first introduced in 2006 Lang and Blaschke (Citation2006), and was later reformulated as GEOBIA in 2008, with a central focus on Earth Observation (EO) applications and the integration of geo-spatial-temporal reasoning to deal with high volumes of EO imagery and other related information extraction challenges Lang et al. (Citation2019). GIScience scholars have reached a consensus that GEOBIA is a paradigm shift Blaschke et al. (Citation2014) that has managed to bridge the semantic information gap from big data in the image domain. The representation of 2D imagery as a gridded array of pixels does not provide descriptive content with regard to semantic information or object boundaries Lang et al. (Citation2019). Such information needs to be documented in metadata. The image content in the current setup cannot be queried, but attempts to meet this vision exist Blaschke (Citation2010). Geographic Information System (GIS) datasets are discrete, and the finite vector set handles discrete categorical nominal variables rather than numerical variables Lang et al. (Citation2019). The success of GEOBIA as measured by bibliometric measures Blaschke et al. (Citation2014) is attributed to its mediating power between geospatial entities and continuous field representations, which caters to the needs of both the GIS and remote sensing communities. The harmonisation of these two models is presented in Figure 4.

Figure 4. Impact of land cover type on the evolution of NPSS in the near-infrared (Lang et al. Citation2019).

The classification system of traditional pixel-based methods suffers from the salt-and-pepper effect. This problem was alleviated by the Object-Based Image Analysis (OBIA) methodology when implemented on the Northern California vegetation inventory (Yu et al. Citation2006). OBIA adds object shape and context to spectral and textural information, which significantly reduces the salt-and-pepper effect.

Another study by Chubey et al. (Citation2006) used object-based analysis of IKONOS-2 imagery to extract forest inventory parameters, rather than the traditional pixel-based image analysis approaches. Object-based analysis was first introduced to remote sensing by Kettig and Landgrebe (Citation1976); however, the approach did not receive as much attention as its pixel-based counterpart (Lu et al. Citation2013). Later, object-based analysis techniques proved to be of significant importance in forest information extraction (Hay et al. Citation1996; Pekkarinen Citation2002; Imaging Citation2002). This was reinforced by the introduction of commercial object-based image analysis software such as eCognition (Arvor et al. Citation2019), Feature Analyst, etc. Chubey et al. (Citation2006) developed a novel method that used the eCognition software and decision tree statistical analysis to extract forest inventory parameters. The IKONOS-2 images were segmented into image objects using eCognition. Multi-resolution segmentation was employed, partitioning the image into homogeneous multi-pixel regions. The size, spectral homogeneity, spatial homogeneity, and shape of the generated image objects were used to guide the segmentation procedure. The segmentation process was further tested against several other input/weighting combinations, and each combination was evaluated on its ability to delineate meaningful landscape components. The delineated image objects carried crucial forest-related information derived from the spectral and spatial characteristics of forest stand composition. Furthermore, decision trees were used to determine correlations between image object metrics obtained from the input data and individual forest inventory parameters. 
Decision trees were chosen because (a) they can handle high-dimensional datasets, (b) they work on both non-continuous and continuous variables, (c) they are non-parametric, and (d) they are easy to implement. However, decision trees have some limitations. Their performance depends on the quality and representativeness of the training data (Friedl et al. Citation1999), and accuracy increases with more training data. The requirement for large training datasets is therefore a concern from an operational perspective.

A number of studies have shown that OBIA methods are re-applicable and transferable to other images. This is achieved by re-applying the rule set under other conditions, since rule sets can adapt to changed conditions. Hofmann et al. (Citation2011) developed a new method to measure the robustness of a rule set. The method is based on the assumption that the level of adaptation to be measured is in congruence with the quality of classification achieved. The robustness $x_i$ of an unchanged rule set applied to an image $M_i$ is expressed by the ratio of quality values:

$$x_i = \frac{q_i}{q_r}$$

where $q_i$ is the classification quality achieved on $M_i$ and $q_r$ is the quality achieved on the reference image. If $x_i > 1$, it implies a better result for $M_i$, and vice versa for $x_i < 1$. The mean robustness over all $n$ images $M_1, \dots, M_n$ is expressed as

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

and the greater $\bar{x}$, the more robust the rule set, for $q_r > 0$.
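As a worked illustration, the sketch below assumes that the robustness of an unchanged rule set is the plain ratio of the quality obtained on an image to the quality obtained on the reference image, and that the mean robustness is the arithmetic mean of those ratios. This is a reading of "ratio of quality values", not a transcription of the formulas of Hofmann et al. (Citation2011). The function names and quality scores are hypothetical.

```python
def robustness(q_i, q_r):
    """Ratio of the quality on image M_i to the quality on the reference image (assumed form)."""
    if q_r <= 0:
        raise ValueError("reference quality q_r must be positive")
    return q_i / q_r


def mean_robustness(qualities, q_r):
    """Arithmetic mean of the per-image robustness ratios."""
    ratios = [robustness(q, q_r) for q in qualities]
    return sum(ratios) / len(ratios)


# Made-up quality scores: the rule set matches the reference on one image and halves on another.
mean_x = mean_robustness([0.8, 0.4], q_r=0.8)
```

A ratio above 1 means the rule set performed better on the new image than on the reference, and a ratio below 1 means it performed worse.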

The studies reviewed in this part examine the importance of evaluating segmentation results and consider segmentation evaluation metrics such as the Inverse of the Number of Objects (INO), the Normalised Post Segmentation Standard Deviation (NPSS), and the Bhattacharyya Distance (BD). A method for evaluating the quality of segmentation results in object-based classification was presented by Radoux and Defourny (Citation2008). The proposed method consisted of two indices: one evaluated the extent to which the classification could be improved, while the other assessed the boundary quality of the delineated land cover classes. Using a combination of three parameters from the same segmentation technique, the method was used to segment a QuickBird image. It has been established that grouping the pixels of an image into large groups aids in the reduction of variance (Edwards and Cavalli-Sforza Citation1965). The study opted for a small intra-class variance on the assumption that it improves parametric classification. Over- and under-segmentation were assessed using indices based on mean-sized objects. As a first quantitative goodness metric, the INO was utilized. INO measures the ability of a model to segment an image into individual objects. INO is expressed as

$$\mathrm{INO} = \frac{1}{N}$$

where $N$ represents the number of objects. The second global index was the NPSS. It examines segmentation quality based on the variability of the segmented image against the variability of the entire image. NPSS is expressed as

$$\mathrm{NPSS} = \frac{\sigma_s}{\sigma_x}$$

where $\sigma_s$ is the standard deviation of the pixel intensity values in the segmented region and $\sigma_x$ is the standard deviation of the intensity values of the whole image (that is, it includes both the segmented and non-segmented regions). The NPSS was used to calculate class uniformity by replacing each pixel value with the mean value of its parent object. A small intra-class variance does not always improve classification results; in some circumstances, a considerably large variance between two classes can improve the classification. Therefore, a dissimilarity metric, the Bhattacharyya Distance (BD), was adopted in the study to test the relevance of the proposed goodness indices, since it contains a term that compares covariance matrices and also accounts for classification errors (Webb Citation2003). BD is a measure of dissimilarity between two probability distributions. For probability distributions $p$ and $q$ on the same domain $X$, BD is expressed as

$$D_B(p, q) = -\ln\big(BC(p, q)\big)$$

where

$$BC(p, q) = \int_X \sqrt{p(x)\,q(x)}\,dx$$

and $p(x)$ and $q(x)$ are probability density functions.
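The three metrics can be sketched in a few lines. The code assumes INO is 1/N, NPSS is the standard deviation of the image after each pixel is replaced by its parent object's mean divided by the standard deviation of the original image, and BD is computed in its discrete form on two histograms over the same bins. These are illustrative readings of the definitions in the text, and all function names are hypothetical.

```python
from math import log, sqrt
from statistics import pstdev


def ino(num_objects):
    """Inverse of the number of objects."""
    return 1.0 / num_objects


def npss(pixels, object_ids):
    """sigma_s / sigma_x, with sigma_s taken on the object-mean image (assumed reading).

    Assumes the image is not constant, so sigma_x is non-zero.
    """
    totals, counts = {}, {}
    for value, obj in zip(pixels, object_ids):
        totals[obj] = totals.get(obj, 0.0) + value
        counts[obj] = counts.get(obj, 0) + 1
    means = {obj: totals[obj] / counts[obj] for obj in totals}
    segmented = [means[obj] for obj in object_ids]
    return pstdev(segmented) / pstdev(pixels)


def bhattacharyya_distance(p, q):
    """Discrete BD: -ln(sum_i sqrt(p_i * q_i)) for two normalized histograms."""
    bc = sum(sqrt(a * b) for a, b in zip(p, q))
    return float("inf") if bc == 0 else -log(bc)


# Four pixels grouped into two objects (values are made up).
score = npss([0, 2, 4, 6], [0, 0, 1, 1])
```

Identical histograms give a BD of zero, and histograms with no overlap give an infinite distance, which matches the interpretation of BD as a dissimilarity measure.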

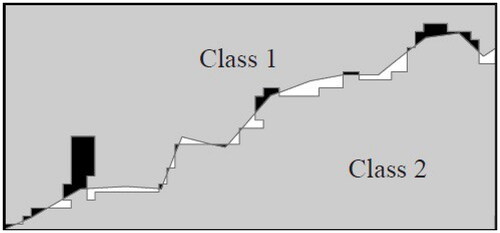

Artifacts along the boundaries and missing boundaries are the key challenges with segmentation algorithms. The precision of a segmentation is determined by the number of artifacts along the boundary. The accuracy and precision criteria proposed by Mowrer and Congalton (Citation2000) were utilized to evaluate the positional quality of the edges. The bias and mean of the distribution of boundary errors were used to determine the accuracy and precision, respectively. Figure 5 shows sample errors along the edges of a segmentation result. Negative values were assigned to omission errors and positive values to commission errors. The goodness of the indices was evaluated using NPSS and BD. Both indices gave valuable insight into the segmentation findings. Results showed that NPSS was more strongly correlated with BD than INO was. The positive results can be attributed to the fact that the mean class values were not modified by the segmentation. However, global NPSS was shown to be sensitive to the segmentation parameters, with the object size parameter accounting for more than 80% of the variance. The effect of segmentation on NPSS varied from class to class, which reflects the sensitivity of segmentation algorithms to the land cover class. The absolute boundary error was sensitive to under-segmentation and was able to detect artifacts along class boundaries. There was a higher correlation between shape parameters and boundary errors. Table 1 shows the average absolute errors for various scale parameters under smooth, mixed, and compact settings. These studies reveal that most segmentation algorithms face challenges, including artifacts and missing values along the boundaries, that prevent them from achieving good segmentation results. Evaluation metrics such as NPSS, INO, BD, accuracy, and precision were considered in these studies for assessing segmentation quality.

Figure 5. Errors along the edges of a segmentation output. Black polygons are omissions (−) and white polygons are commissions (+) with respect to class 1 (Xie et al. Citation2008).

Table 1. Average of absolute errors (in meters) on boundary position between deciduous and coniferous forests, for a combination of segmentation parameters, i.e. scale parameter between 10 and 60 and compactness, illustrated for forest/arable land interfaces (Xie et al. Citation2008).

A study by Osio et al. (Citation2018) used OBIA-based monitoring of riparian vegetation to assess the effect of flooding on the vegetation species of the Lake Nakuru Riparian Reserve. The authors proposed an OBIA methodology to serve as the basis for the classification of riparian vegetation. The methodology comprised four pillars: data capture, pre-processing, processing, and analysis. Satellite data were downloaded from the USGS site, from the Landsat 5 TM, Landsat 8 OLI (collected in 2014), and Landsat 8 OLI (collected in 2016) datasets. Pre-processing consisted of removing noise and ensuring uniformity between the datasets. The Ehlers fusion technique was employed to pan-sharpen each image to 15 m resolution. Raw image values were converted to top-of-atmosphere (TOA) reflectance in ArcGIS 10.4 using a Spatial Analyst tool in ArcToolbox. The planetary reflectance $\rho_{\lambda}'$ is defined as

$$\rho_{\lambda}' = M_{\rho}\, Q_{cal} + A_{\rho}$$

where $Q_{cal}$ is the quantized and calibrated standard product pixel value and $M_{\rho}$ and $A_{\rho}$ are the band-specific multiplicative and additive re-scaling factors, respectively. A multi-resolution segmentation algorithm was adopted to convert pixels into image objects. Four bands, namely green, red, near-infrared (NIR), and shortwave infrared (SWIR), were used to classify vegetation indices on each dataset. NDVI values were obtained from the rule set established in the feature view, and supervised classification was carried out for each image using the K-NN algorithm. The classification scales varied across images, so that there were different numbers of instances per image. Multi-resolution segmentation was used to segment images into image objects based on the feature parameters of layer weights, scale parameter, and composition of the homogeneity criterion. The parameters were set in eCognition Developer 9.2 and were used by the multi-resolution segmentation to divide the image into homogeneous objects. 
Table 2 shows the segmentation scales set.

Table 2. Segmentation scales (Osio et al. Citation2018).
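The reflectance conversion and the NDVI used in this workflow can be sketched as follows. The code assumes the linear rescaling described above (a band-specific multiplicative factor times the pixel value plus an additive factor) and the standard NDVI definition, (NIR − Red) / (NIR + Red). The rescaling values in the example are illustrative; real values come from each scene's metadata, and the function names are hypothetical.

```python
def toa_reflectance(q_cal, m_rho, a_rho):
    """Linear rescaling of a quantized pixel value to top-of-atmosphere reflectance."""
    return m_rho * q_cal + a_rho


def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


# Illustrative rescaling factors and digital numbers (not taken from the study).
M_RHO, A_RHO = 2.0e-5, -0.1
red = toa_reflectance(10000, M_RHO, A_RHO)
nir = toa_reflectance(25000, M_RHO, A_RHO)
index = ndvi(nir, red)
```

In an object-based workflow the index would typically be averaged per image object after segmentation rather than thresholded pixel by pixel.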

Hossain and Chen (Citation2019) reviewed object-based image segmentation algorithms and challenges from a remote sensing perspective. The authors concluded that the quality of image segmentation has a significant impact on the final feature extraction and classification in OBIA (Hossain and Chen Citation2019; Vickers Citation2017; Su Citation2017). Many other studies (Blaschke et al. Citation2008; Cheng et al. Citation2001; Zhang et al. Citation2017) argued that the most crucial step in OBIA is image segmentation. Geographic Object-Based Image Analysis (GEOBIA) was established to provide for image analysis by remote sensing scientists, environmental disciplines, and GIS specialists (De Jong and Van der Meer Citation2007). A comprehensive review of studies related to GEOBIA was undertaken by Blaschke (Citation2010).

Chiu and Lin (Citation2005) formulated the mathematical definition of segmentation as follows: given $P$, the homogeneity criterion, and $R$, an entire image, the regions $R_i$ ($i = 1, \dots, n$) are segments of $R$ if the following conditions hold:

$$\bigcup_{i=1}^{n} R_i = R \tag{1}$$

$$R_i \cap R_j = \emptyset, \quad i \neq j \tag{2}$$

$$P(R_i) = \mathrm{TRUE}, \quad \forall i \tag{3}$$

and

$$P(R_i \cup R_j) = \mathrm{FALSE} \tag{4}$$

where $i \neq j$ and $R_i$ and $R_j$ are neighbours. Segmentation algorithms have been categorized into (a) pixel-based (Friedl et al. Citation1999), (b) edge-based, (c) region-based, and (d) hybrid (Beveridge et al. Citation1989). In edge-based segmentation, the algorithm determines the edges, which are boundaries between objects (Cao et al. Citation2016); the edges are then closed up by continuous algorithms (Martin et al. Citation2004). Filtering, enhancement, and detection are the three key processes in edge detection (Jain et al. Citation1995). Filtering methods are necessary as they produce minimum blurring edges (Jain et al. Citation1995; Chen et al. Citation2006; Sahin and Ulusoy Citation2013). Enhancement highlights the pixels with large changes in local intensity levels, and the enhanced data is utilized to detect genuine edges. The next stage uses techniques such as the Hough transform (Kiryati et al. Citation1991), neighborhood search (Ghita and Whelan Citation2002), and watershed transformation (WT) (Vincent and Soille Citation1991). For natural segmentation, WT is commonly utilized (Hossain and Chen Citation2019). Region-based segmentation starts from the inside of the image and grows outwards until reaching the object boundaries (Zhang et al. Citation2016). Merging and splitting are the two basic operations in region-based segmentation (Fan et al. Citation2005). The segmentation process follows a systematic approach (Bins et al. Citation1996): (a) the first step performs an initial (seed) segmentation of the image, (b) the next step merges adjacent segments that are similar while splitting those that are dissimilar, and (c) the previous step is repeated until there are no more segments to merge or split. Region growing or merging is defined by two main issues (Lucchese and Mitra Citation2001): (a) selection of a seed region and (b) similarity. Algorithms such as K-means clustering (Wang et al. 
Citation2010), hybrid region merging, single-seeded region growing (Verma et al. Citation2011), the Particle Swarm Optimization (PSO) method, etc. (Mirghasemi et al. Citation2013) are used to generate the initial seeds. However, researchers are still in search of algorithms that work without seeds (Wu et al. Citation2015) or that are influenced by neighbors, even though seeded (Fan et al. Citation2005). After seed selection, the region grows sequentially by adding similar pixels, guided by specific homogeneity criteria. The criteria determine whether a pixel belongs to the growing region or not (Nock and Nielsen Citation2004). Region splitting and merging uses the homogeneity criterion (based on attributes such as grey values, texture, internal edges, etc.) to split the image into several segments (De Jong and Van der Meer Citation2007). If the seed image is not homogeneous, the image is split into four sub-regions, which serve as seeds for the next level (Martin et al. Citation2004). The process continues until all sub-regions become homogeneous. Bottom-up and top-down strategies are combined in the split-and-merge method (Guindon Citation1997). Bottom-up approaches grow image objects by combining or merging comparable pixels, whereas top-down approaches split an entire image into image objects depending on the heterogeneity criterion (Benz et al. Citation2004). Edge-based methods are excellent at detecting edges but face problems in generating closed segments, while region-based methods are good at generating closed segments but imprecise in detecting edges (Wang and Li Citation2014). Hence, Chu and Aggarwal (Citation1993) proposed an algorithm that integrates both edge-based and region-based segmentation maps as inputs. The technique utilized a maximum likelihood estimator to predict initial edge positions from multiple inputs. An iterative procedure is then employed to smooth the resultant edge patterns. 
Finally, the edge map is converted to a region map using closed-edge contours. The regions are then merged to ensure that every region has the required properties.
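Seeded region growing, as described above, can be sketched on a small grey-level grid. The homogeneity criterion used here (a pixel joins the region if it lies within a tolerance of the running region mean) and the 4-connected neighborhood are illustrative choices rather than the criteria of any cited study. The function name is hypothetical.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a 4-connected region from seed, adding pixels close to the running region mean."""
    rows, cols = len(image), len(image[0])
    region = {seed}
    total = float(image[seed[0]][seed[1]])
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                # Homogeneity test against the mean of the pixels accepted so far.
                if abs(image[nr][nc] - total / len(region)) <= tolerance:
                    region.add((nr, nc))
                    total += image[nr][nc]
                    frontier.append((nr, nc))
    return region


# A dark 2 x 2 patch in the top-left corner surrounded by bright pixels (made-up values).
grid = [[10, 11, 50],
        [12, 10, 52],
        [49, 51, 50]]
patch = region_grow(grid, seed=(0, 0), tolerance=5)
```

The result depends on the seed position and on the tolerance, which is one reason seed selection and the similarity measure are singled out as the two main issues in region growing.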

5. Challenges of GEOBIA

In the past two decades, GEOBIA has been successfully adopted for land cover mapping (Blaschke and Strobl Citation2001; Blaschke et al. Citation2014). However, GEOBIA techniques require that regions of interest or objects be identified before classification rules are applied to the extracted objects (Blaschke and Strobl Citation2001). The segmentation step relies on either user expertise or empirical training, and must be adapted for each new scene to be processed (Drăguţ et al. Citation2014; Ming et al. Citation2015). Hence, GEOBIA is not well suited to Big Geodata, where large-scale analysis requires methods that are both very fast and robust (Merciol et al. Citation2018). Furthermore, GEOBIA's effectiveness has not yet been quantitatively verified, although numerous researchers broadly agree on it (Tehrany et al. Citation2014).

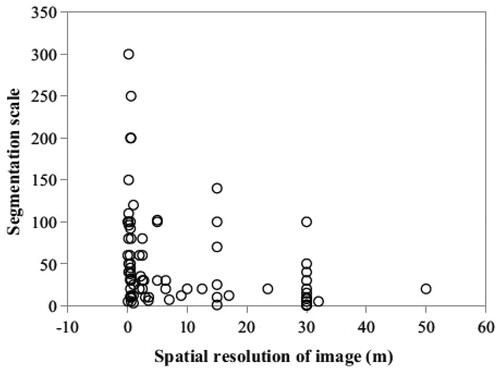

GEOBIA has been extensively used in land cover mapping applications. Land cover mapping is a complicated process, as it incorporates factors such as image type, segmentation methods, accuracy assessment, classification algorithms, input features, etc. that have a great influence on the quality of the final product (Khatami et al. Citation2016). It is still a major problem to come up with a standard GEOBIA technique that provides an optimal solution for every study area. Spatial resolution is inversely proportional to segmentation scale. Figure 6 shows the relationship between spatial resolution and segmentation scale.

Figure 6. Correlation between spatial resolution and segmentation scale (Cai et al. Citation2014).

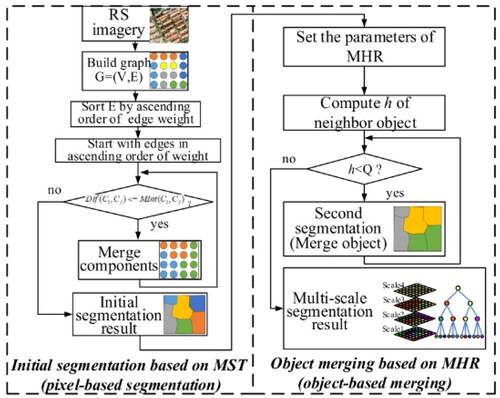

As the spatial resolution becomes higher, the segmentation scales become smaller; the lower the spatial resolution, the greater the configured optimal segmentation scales. It is very complex (Johnson and Xie Citation2011) to determine optimal segmentation scales, because the scale is also affected by other image characteristics such as the size of the study area. The scale issue has emerged as a major problem for OBIA studies that rely on multi-scale segmentation methods. Therefore, there is a need to determine the appropriate segmentation scale necessary to obtain optimized segmentation results (Arbiol et al. Citation2007). Many researchers have explored trial-and-error approaches, varying segmentation scales based on their experience (Laliberte and Rango Citation2009); however, this approach is not advisable (Johnson and Xie Citation2011). To counteract this challenge, Gu et al. (Citation2018) proposed an efficient multi-scale segmentation method based on graph theory and the Fractal Net Evolution Approach (FNEA). The proposed model is shown in Figure 7. The contributions of this approach are that (a) the Minimum Spanning Tree (MST) algorithm, which performs the initial segmentation, and the Minimum Heterogeneity Rule (MHR) algorithm, adopted for object merging in FNEA, are hybridized, and (b) the segmentation strategy is implemented using data partition and the reverse searching forward processing chain with the Message Passing Interface (MPI) parallel technology. This approach is highly effective since it uses a fast graph segmentation algorithm, and because it is a multi-scale segmentation it is suitable for a variety of landscapes, such as industrial or agricultural areas. The problem of multi-scale segmentation also arises in defining semantic rules to relate lower landscape units to high-level organizations. 
To address this issue, Burnett and Blaschke (Citation2003) developed the Hierarchical Patch Dynamics (HPD) framework, which aids in describing the patterns and processes, acting through a range of scales, that make up landscapes. The framework was implemented in two different projects. In the first project, habitat mapping was done using a multi-scale GIS database. The landscape segments were generated using sub-patch information, including dominant tree crown densities and species. In the second project, fractal-based segmentation was adopted to produce agricultural scene segments, and the decision framework was used to choose the best combination of segmentation levels to identify shrub encroachment.

Figure 7. Multi-segmentation based on MST and MHR Gu et al. (Citation2018).
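The idea of using a minimum spanning tree for an initial segmentation can be illustrated with a simplified sketch. It is not the implementation of Gu et al. (Citation2018): the MHR merging step and the MPI parallelization are omitted, and the edge weight (absolute grey-level difference between 4-connected pixels) and the `max_weight` threshold are assumptions. The code runs Kruskal's algorithm with a union-find structure and stops at the threshold, which is equivalent to building the MST and cutting every edge heavier than `max_weight`.

```python
def mst_segment(image, max_weight):
    """Label pixels by the connected components left after cutting heavy MST edges."""
    rows, cols = len(image), len(image[0])
    parent = list(range(rows * cols))

    def find(a):
        # Union-find lookup with path halving.
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    edges = []
    for r in range(rows):
        for c in range(cols):
            i = r * cols + c
            if c + 1 < cols:
                edges.append((abs(image[r][c] - image[r][c + 1]), i, i + 1))
            if r + 1 < rows:
                edges.append((abs(image[r][c] - image[r + 1][c]), i, i + cols))

    # Kruskal: process edges from lightest to heaviest, stopping at the threshold.
    for weight, a, b in sorted(edges):
        if weight > max_weight:
            break
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    return [[find(r * cols + c) for c in range(cols)] for r in range(rows)]


# Two homogeneous areas separated by a strong grey-level step (made-up values).
labels = mst_segment([[1, 2, 9],
                      [1, 2, 9]], max_weight=1)
```

Raising `max_weight` yields fewer and larger segments, so sweeping the threshold gives a crude multi-scale hierarchy from a single sorted edge list.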

The next section presents recent developments in GEOBIA.

6. New GEOBIA developments

This section reviews new GEOBIA developments in terms of data sources, object based feature extraction, geo-object-based modelling frameworks, new forms of image objects, GEOBIA systems for novice GEOBIA users, and the use of knowledge from other disciplines.

6.1. Data sources

Modern high spatial resolution sensors have provided a new landscape for the remote sensing community to study free-scale objects or phenomena anywhere on the Earth’s surface Chen, Weng, et al. (Citation2018). Early GEOBIA studies worked with classic, single-image optical scenes for proof-of-concept studies; however, recent developments in remote sensing have brought a sharp increase in non-conventional image data types that are richer in spectral, spatial, and temporal information, thereby improving the modelling of geographical entities Chen, Weng, et al. (Citation2018). Conventional GEOBIA data is defined as high-resolution imagery with limited spectral bands acquired by remote sensors mounted on relatively stable satellite/airborne platforms.

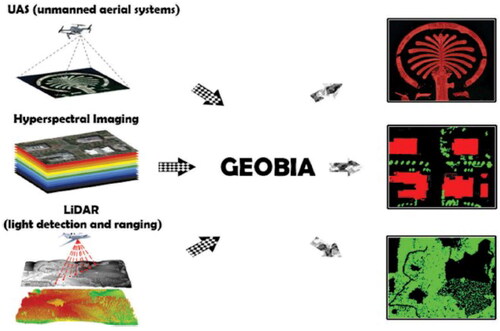

As depicted in Figure 8, rather than collecting images through satellite or airborne sensors, unmanned aerial systems (UAS), or drones, can collect either sub-meter or sub-decimeter resolution data with high flexibility and very little demand for resources.

Figure 8. Conventional data types and image objects (Cai et al. Citation2014).

Similarly, Light Detection and Ranging (LiDAR) complements the conventional 2D spectral features with 3D structural information (bottom of Figure 8). Since segmentation in GEOBIA is applied solely to 2D imagery, LiDAR point clouds or waveforms are converted to raster-format image models before they are used in a GEOBIA framework. The GEOBIA community has taken advantage of LiDAR’s penetration capacity to retrieve the 3D structure of non-solid objects with gaps, such as trees. Chen, Weng, et al. (Citation2018) argued that the LiDAR representation resembles real forest structure more closely than sharpened WorldView-2 optical imagery at a 0.5 m resolution.

Hyperspectral images have been extensively used by GEOBIA experts to distinguish between geographical objects with similar spectral characteristics. Traditionally, hyperspectral images covered a very limited spectral range, spanning the visible to near-infrared section of the electromagnetic spectrum. These types of images have been successfully used in classifying mangrove species with 30 bands (Kamal and Phinn Citation2011), examining post-fire severity with a 50-band MASTER mosaic (Powers et al. Citation2015), and assessing tropical forest area diversity with a 129-band AisaEAGLE imaging spectrometer (Schäfer et al. Citation2016). Hyperspectral imagery (middle of Figure 8) is very rich in spectral information compared to multispectral data sets, hence the extra bands can be used to obtain other useful information such as textural, object-based shape, and contextual features. However, obtaining this extra information adds to computational costs because of the rigorous analysis needed for feature extraction. Advances in sensor technology are extending spectral information beyond the red, green, blue, and near-infrared segments of the spectrum; e.g. WorldView-2 has additional coastal-blue (400–450 nm), yellow (585–625 nm), red-edge (705–745 nm), and near-infrared-2 (840–1040 nm) bands at 1.84 m resolution Vermeulen and van Niekerk (Citation2016).

6.2. Object based feature extraction

Classic features such as spectral, texture and context measures have been extracted through unsupervised image segmentation. GEOBIA has since progressed to deriving these features solely by analyzing the characteristics of geographic objects.

6.2.1. Novel object features

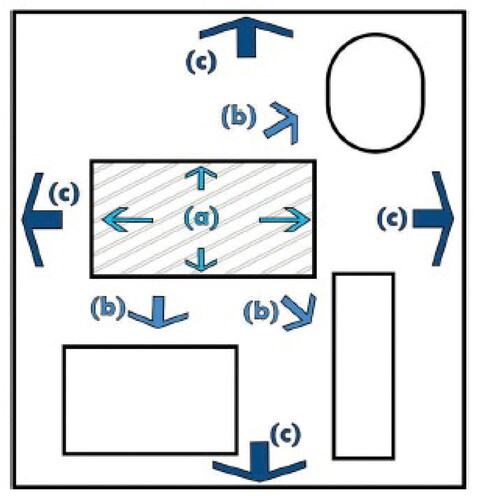

The traditional way of characterizing features of individual regions of interest involves measuring shape complexity (Mowrer and Congalton Citation2000; Cao et al. Citation2016), extracting features from interval-valued data modeling (He et al. Citation2016a), and establishing semivariogram descriptors for quantifying spatial correlation and patterns within objects as presented in . A study by Wang et al. (Citation2017) extended the approach to include the relationship between objects and lines. The authors explained that geographical objects in urban setups have more regular shapes than those in natural environments and have systematically distributed lines. Cai et al. (Citation2014) extracted geostatistical features by examining the temporal behavior of each object’s internal structure in relation to object-based change detection.

Figure 9. Object-based features obtained from three scales (a) image-objects (b) neighborhood image objects and (c) individual communities (Cai et al. Citation2014).

Chubey et al. (Citation2006) proposed incorporating neighboring image objects to improve the description of contextual information. Chen et al. (Citation2011) developed a geographic object-based image texture (GEOTEX) model that produces a set of texture measures by examining each image object together with its corresponding neighbors through a natural window/kernel.
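The semivariogram descriptors mentioned earlier in this subsection can be made concrete with the standard empirical estimator, γ(h) = Σ (z_i − z_j)² / (2 N(h)), where the sum runs over the N(h) pixel pairs separated by lag h. The sketch below is only an illustration under simplifying assumptions: pixel pairs are formed along rows and columns only, lags are measured in whole pixels, and cells outside the image object are marked with None. The function name and the sample object are hypothetical.

```python
def semivariogram(pixels, max_lag):
    """Empirical semivariance per lag for the pixels of one image object.

    pixels: 2D list of values; None marks cells outside the object.
    Returns {lag: gamma} for lags 1..max_lag that have at least one pair.
    """
    n_rows, n_cols = len(pixels), len(pixels[0])
    gamma = {}
    for h in range(1, max_lag + 1):
        sq_sum, n_pairs = 0.0, 0
        for r in range(n_rows):
            for c in range(n_cols):
                z = pixels[r][c]
                if z is None:
                    continue
                # Pair the pixel with its neighbours h cells to the right and below.
                for r2, c2 in ((r, c + h), (r + h, c)):
                    if r2 < n_rows and c2 < n_cols and pixels[r2][c2] is not None:
                        sq_sum += (z - pixels[r2][c2]) ** 2
                        n_pairs += 1
        if n_pairs:
            gamma[h] = sq_sum / (2 * n_pairs)
    return gamma

# Hypothetical 3 x 3 object with one masked corner and a smooth gradient.
obj = [
    [1, 2, 3],
    [2, 3, 4],
    [None, 4, 5],
]
gamma = semivariogram(obj, max_lag=2)
```

In this toy object the semivariance grows with the lag, which is the behaviour expected from a spatially correlated surface.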

In GEOBIA techniques, the segmentation process produces a large number of object-based features (), which reduces computational efficiency and increases modeling uncertainties. To mitigate this challenge, Powers et al. (Citation2015) combined Principal Component Analysis (PCA) and Minimum Noise Fraction (MNF) to reduce the airborne MASTER sensor’s 50 input spectral bands prior to segmentation.
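As a rough illustration of the PCA part of such a band reduction, the sketch below estimates the first principal component of a small set of pixels by power iteration on the band covariance matrix and projects the pixels onto it. It is a simplified, assumption-laden example and not the procedure of Powers et al.: it covers PCA only, the function names and the three-band sample pixels are hypothetical, and power iteration is used here merely to keep the example free of external libraries.

```python
from math import sqrt

def covariance_matrix(pixels):
    """Return (centered pixels, band covariance matrix) for a list of pixel vectors."""
    n = len(pixels)
    n_bands = len(pixels[0])
    means = [sum(p[b] for p in pixels) / n for b in range(n_bands)]
    centered = [[p[b] - means[b] for b in range(n_bands)] for p in pixels]
    cov = [[sum(row[i] * row[j] for row in centered) / (n - 1)
            for j in range(n_bands)] for i in range(n_bands)]
    return centered, cov

def first_principal_component(cov, n_iter=200):
    """Dominant eigenvector of cov by power iteration.

    Assumes the all-ones start vector is not orthogonal to that eigenvector.
    """
    n = len(cov)
    v = [1.0 / sqrt(n)] * n
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    return v

# Hypothetical pixels with three bands; band 2 is twice band 1, band 3 is constant.
pixels = [(1.0, 2.0, 0.0), (2.0, 4.0, 0.0), (3.0, 6.0, 0.0)]
centered, cov = covariance_matrix(pixels)
pc = first_principal_component(cov)
# One score per pixel replaces the three original band values.
scores = [sum(c * w for c, w in zip(row, pc)) for row in centered]
```

Because the sample bands are perfectly correlated, a single component carries all the variance, so three input bands collapse to one layer that could be passed to segmentation.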

6.2.2. Feature selection space

Techniques ranging from statistical analysis to machine learning and deep learning have been employed to obtain optimal features through the reduction of feature space. The majority of these techniques minimize the number of input features while maximizing the separation distance between classes. Once processed, features are ranked in order of significance. Machine learning approaches have evolved for feature space reduction. However, for GEOBIA techniques, a consensus has not yet been reached as to which machine learning algorithm is best for feature space reduction in particular applications. Algorithms that have been applied successfully for object-based feature selection include Winnow (Littlestone Citation1988; Powers et al. Citation2015), random forest (Breiman Citation2001; Franklin et al. Citation2000), minimal redundancy maximum relevance (Peng et al. Citation2005) and Support Vector Machine (SVM) (Huang and Zhang Citation2013). Generally, the choice of algorithm for optimal feature space reduction depends on performance, ease of use, and accessibility.

With the aim of improving feature selection from VHR images, Chaib et al. (Citation2022) proposed a framework based on Vision Transformer (ViT) models. First, the ViT model is used to extract informative features from the VHR image scene, and the obtained features are merged into a single dataset. A feature selection algorithm is then applied to trim off features that do not provide information for describing scenes such as beaches and agriculture, since such features tend to degrade classification accuracy. The proposed model outperformed other state-of-the-art models when implemented on the VHR benchmark. Chen et al. (Citation2016) proposed an efficient semi-supervised feature selection (ESFS) technique that selects the desirable features by exploiting all the details available on the unlabeled objects. First, instead of using traditional graphs, it uses the probability matrix of unlabeled objects in the loss function to obtain the features that are relevant to each class. Second, norm regularization is employed to ensure that the rows of the selection matrix have the required sparsity. ESFS outperformed other classical methods when implemented on a VHR image. Too and Abdullah (Citation2021) proposed an improved genetic algorithm (GA) that enhances the performance of GA in feature selection. The approach uses a competition strategy that integrates new selection and cross-over schemes to improve the global search capability. A dynamic mutation rate is also incorporated to improve the search power of the algorithm in the mutation process.
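The general idea of ranking features by how well they separate classes can be sketched with a Fisher score, i.e. the ratio of between-class to within-class variance for each feature. This is a deliberately simple stand-in and not one of the algorithms cited above; the function names, feature names and sample objects are hypothetical.

```python
def fisher_scores(samples, labels):
    """Fisher score per feature: between-class over within-class variance."""
    n_features = len(samples[0])
    classes = sorted(set(labels))
    scores = []
    for j in range(n_features):
        column = [s[j] for s in samples]
        overall_mean = sum(column) / len(column)
        between, within = 0.0, 0.0
        for c in classes:
            values = [s[j] for s, y in zip(samples, labels) if y == c]
            mean_c = sum(values) / len(values)
            var_c = sum((v - mean_c) ** 2 for v in values) / len(values)
            between += len(values) * (mean_c - overall_mean) ** 2
            within += len(values) * var_c
        # A feature with zero within-class spread separates the classes perfectly.
        scores.append(between / within if within > 0 else float("inf"))
    return scores

def rank_features(samples, labels, names, keep):
    """Return the `keep` best (name, score) pairs, highest score first."""
    scores = fisher_scores(samples, labels)
    ranked = sorted(zip(names, scores), key=lambda item: item[1], reverse=True)
    return ranked[:keep]

# Hypothetical image objects described by three object-based features.
names = ["mean_nir", "texture", "compactness"]
samples = [
    (0.80, 0.30, 0.5),  # forest
    (0.82, 0.50, 0.6),  # forest
    (0.10, 0.32, 0.5),  # water
    (0.12, 0.48, 0.6),  # water
]
labels = ["forest", "forest", "water", "water"]
scores = fisher_scores(samples, labels)
top = rank_features(samples, labels, names, keep=1)
```

In this toy data only the near-infrared mean differs between the two classes, so it receives by far the highest score and is the single feature retained.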

6.3. Geo-object based frameworks

GEOBIA has been predominantly used in land-cover/use classification. Recently it has also been successfully used to detect features such as archaeological remains (Lasaponara et al. Citation2016), green roofs (Theodoridou et al. Citation2017), alluvial fans (Pipaud and Lehmkuhl Citation2017), and dunes (Vaz et al. Citation2015). Various modifications have also been made to meet specific needs in real-world applications. Eckert et al. (Citation2017) improved the classification of fine geographical objects which only existed in certain landscape zones. Another study by Guo et al. (Citation2013) employed a two-step strategy to enhance classification by using object-neighbour context and scene context, respectively. However, these methods lack the ability to analyze latent spatial phenomena. This challenge was addressed by Lang et al. (Citation2008), who introduced geons, which serve as spatial units that are homogeneous in terms of varying space-time phenomena under policy concern. Lang et al. (Citation2014) further improved geons and came up with composite geons, which provided solutions to policy-relevant phenomena such as societal vulnerability to hazards. The GEOBIA framework relied on basic geo-object-based principles to derive image-object classes. However, the GEOBIA workflow has been improved by adding parametric and non-parametric models to analyse object-based variation within a specific class.

6.4. New forms of image objects

Because 3D geographical objects are represented as image objects in 2D format, some spatial dimensions are neglected, which can introduce uncertainties and errors in identifying ground features (Alexander et al. Citation2010). Techniques in remote sensing and computer vision have progressed to the extent that it is now possible to capture geographical objects in 3D (Vaz et al. Citation2015). A new trend in GEOBIA is to incorporate vertical features in modelling, e.g. carbon estimation at the tree cluster level using canopy height from a LiDAR sensor (Godwin et al. Citation2015). Extraction of object features by these techniques was centred on calculating the boundaries of 2D image objects (Godwin et al. Citation2015; Zhang et al. Citation2013). The remote sensing science community now advocates generating image object features directly from a 3D scene model, which accurately represents real-world geographical objects. Photogrammetry and computer vision techniques can be used to construct 3D scene models (Luhmann et al. Citation2019). Using 3D information to delineate object boundaries remains a challenging task, as crisp boundaries can be lost for certain components, for instance, in a transition zone between wetland and water (Bian Citation2007). Defining fuzzy boundaries seemingly provides a better solution, whereby an image object is treated as a homogeneous unit and equal parts (in terms of homogeneity) have a possibility of belonging to a certain class.
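The notion of a fuzzy boundary can be illustrated with a simple membership function. The sketch below assumes a single hypothetical "wetness" index per image object and a linear transition between two illustrative thresholds; it is not drawn from the cited studies, and real applications would define memberships from several features.

```python
def linear_membership(value, low, high):
    """Membership that is 0 below `low`, 1 above `high`, and linear in between."""
    if value <= low:
        return 0.0
    if value >= high:
        return 1.0
    return (value - low) / (high - low)

def classify_fuzzy(wetness, low=0.2, high=0.6):
    """Return membership degrees for 'water' and 'wetland' instead of one crisp label.

    `low` and `high` are illustrative thresholds, not calibrated values.
    """
    water = linear_membership(wetness, low, high)
    return {"water": water, "wetland": 1.0 - water}

# Hypothetical objects: clearly wetland, in the transition zone, clearly water.
memberships = [classify_fuzzy(w) for w in (0.1, 0.4, 0.9)]
```

An object in the transition zone receives partial membership in both classes, rather than being forced onto one side of a crisp boundary.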

6.5. GEOBIA systems for novice GEOBIA users

GIScience and other communities took two decades to adopt GEOBIA frameworks and software packages (Chen, Weng, et al. Citation2018). GEOBIA experts play a major role in incorporating users’ knowledge and experience when developing GEOBIA models in order to achieve high accuracies. For GEOBIA applications to reach a wider audience, GEOBIA models should provide a way of translating novice GEOBIA users’ understanding of geographical entities into an appropriate choice of algorithms. This could be achieved with systems that have three key components, namely (1) data query, (2) processing chain and (3) product sharing, which interactively guide a user through the entire GEOBIA process. Krizhevsky et al. (Citation2012) argued that the translation of novice users’ understanding into GEOBIA language can be facilitated by rule sets that are clearly defined and trained by machine or deep learning methods.

6.6. Embracing knowledge from other disciplines

Although GEOBIA has been extensively used in several disciplines (e.g. forestry, urban planning, etc.), a gap still exists in how to integrate these disciplines to effectively support geo-object-based modelling (Chen, Weng, et al. Citation2018). Because of the nature of image analysis, GEOBIA benefits greatly from the knowledge provided by computer vision, which simulates human perception of digital imagery (Blaschke et al. Citation2014). Insufficient information on spectral, spatial, or temporal resolutions may result in experienced photo interpreters or computer programs producing incorrect perceptions of geographic entities (Castilla and Hay Citation2008). A potential solution proposed by Castilla and Hay (Citation2008) is to take advantage of the Earth-centric nature of GEOBIA, where perceived geo-objects and their corresponding spatiotemporal dynamics obey rules or laws in natural or built environments. In a study of vegetation transitional zones from dense to bare ground in California by Chen, Weng, et al. (Citation2018), a GEOBIA framework was employed to map disease-caused mortality, and the results indicated that patches of dead trees were over-estimated because dead tree crowns and ground/shrub grass have similar textural, geometrical and spectral characteristics.

To enhance effective knowledge exchange and management, the GEOBIA community has adopted ontologies for specific applications (Andrés et al. Citation2017; Baraldi et al. Citation2017). Ontologies have played an important role in GEOBIA frameworks, but there is still no comprehensive and universally accepted GEOBIA framework that provides guidelines for formalizing expert knowledge with ontologies. The next section discusses the use of ontologies in GEOBIA.

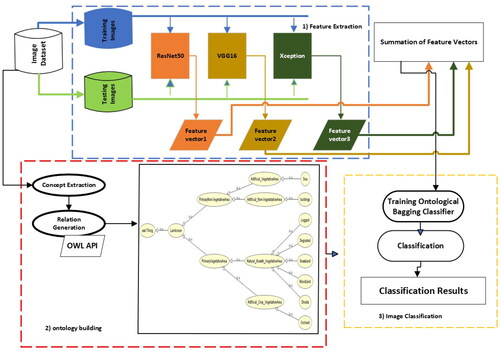

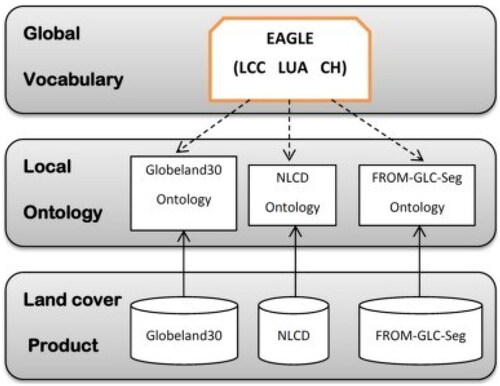

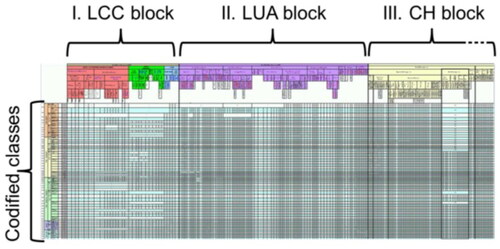

7. Ontologies for forest remote sensing image classification

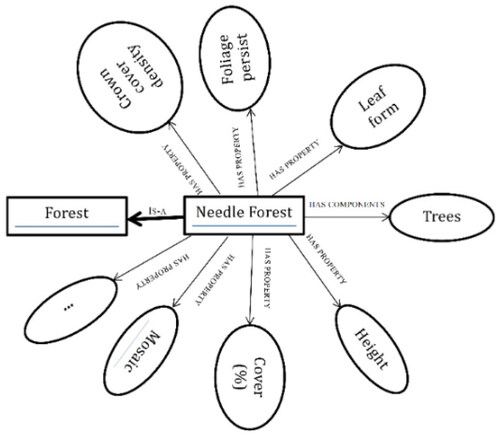

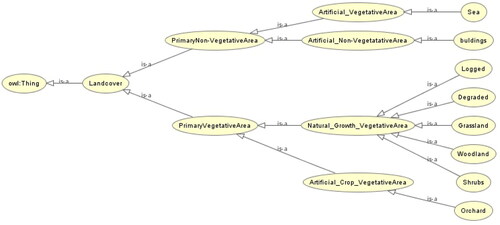

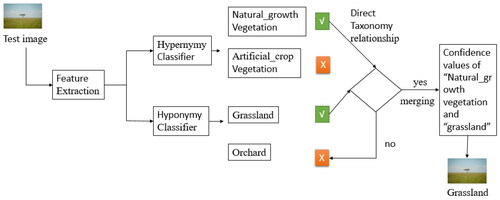

Although data-driven approaches have attracted significant research interest, knowledge-driven approaches remain an important future direction for the remote sensing (RS) science community (Arvor et al. Citation2019). In this regard, ontologies, with their strengths in knowledge representation, common-sense inference, knowledge sharing, and semantic cognition, have gained much attention in the RS community (Li et al. Citation2022). The Thailand Flora Ontology (TFO) (Panawong et al. Citation2018) was proposed to establish a semantic lexicon on the web to assist plant biologists in the discovery of flora knowledge. The ontology was developed against the backdrop that non-botanists were unable to understand or obtain accurate information about plants because plant information was expressed in English using botanical terms. The ontology was designed in two steps: domain analysis for knowledge organization, followed by the ontology development process. In the first step, a qualitative research method was employed to construct the Flora of Thailand knowledge structure through (1) selection of existing resources pertaining to plant ontology, biological classification, the flora of Thailand, and plant taxonomy, (2) flora content analysis of the selected resources, (3) adoption of a domain analytic approach for the organization of Thailand’s flora, and (4) explicit clarification of the flora knowledge organization in consultation with domain experts. The ontology development process requires ontology engineers and developers to have the requisite knowledge of ontology specification and ontology development environments (Chansanam and Tuamsuk Citation2016). The construction of the TFO followed the guidelines suggested in Ontology Development 101 by Noy and McGuinness (Citation2001). The scope of the TFO was limited to the Flora of Thailand, therefore the study recommended further development into an ontology-based retrieval system.
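To give a flavour of how an ontology supports inference over a class hierarchy, the sketch below stores subclass statements as triples and derives every ancestor of a term by following them transitively. It is a toy illustration only: the triples, the `subClassOf` predicate name and the function are assumptions made for this example and are not taken from the TFO, which would normally be expressed in a dedicated ontology language and edited in an ontology development environment.

```python
def ancestors(term, triples):
    """All classes reachable from `term` by following subClassOf statements."""
    parents = {}
    for subject, predicate, obj in triples:
        if predicate == "subClassOf":
            parents.setdefault(subject, set()).add(obj)
    found, stack = set(), [term]
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found

# Hypothetical taxonomy fragment (species -> genus -> family -> top class).
triples = [
    ("Dipterocarpus alatus", "subClassOf", "Dipterocarpus"),
    ("Dipterocarpus", "subClassOf", "Dipterocarpaceae"),
    ("Dipterocarpaceae", "subClassOf", "Plant"),
    ("Dipterocarpus alatus", "hasHabit", "Tree"),  # ignored by the hierarchy walk
]
lineage = ancestors("Dipterocarpus alatus", triples)
```

Only the direct parent of the species is stated explicitly; the family and the top-level class are inferred, which is the kind of implicit knowledge a retrieval system built on an ontology could expose to non-expert users.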