Abstract

Recent technological advancements have enabled the development of smarter (more automated) and more intelligent (adaptable) environments. To understand what factors lead users to reject or adopt Intelligent Environments (IEs), we reviewed nine prominent technology adoption theories. We conducted a literature review to investigate the acceptance and adoption of different types of IEs. We found that perceived usefulness, ease of use, perceived control or self-efficacy, affect and enjoyment, and perceived risks are the common factors across the studies explaining the adoption of IEs. However, shortcomings in the design and methods of the reviewed studies present major concerns in the generalizability and application of existing theories to emerging IEs. We identify eight lacunae in the existing literature and propose a new conceptual model for explaining the adoption of IEs. Through this study, we contribute to the formulation of the theoretical background for the successful introduction of IEs and their integration into users’ everyday life.

1. Introduction

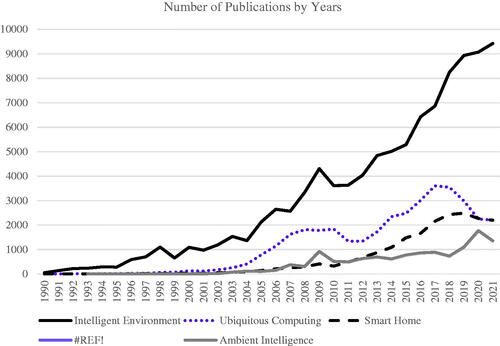

Recent technological advancements in artificial intelligence (AI) and information and communication technologies (ICT) are transforming our physical worlds. Computing systems are increasingly being embedded in homes, offices, and cars through mobile devices, wearables, sensors, data transmission, and cloud computing to improve the occupants’ lifestyle by catering to their needs. These new emerging intelligent and personalized environments have been investigated under different terms, such as intelligent environment (IE), ubiquitous (or pervasive) computing, Ambient Intelligence (AmI), and context-aware environments.

Figure 1 presents the increasing trend in the number of peer-reviewed publications on the subject of smart and intelligent environments.

Aside from factors describing technological capabilities, user technology literacy, marketing, or regulation issues, uncovering the mechanisms and factors involved in the acceptance and use of IEs is the key to explaining how AI-enabled environments should be designed. Little is known about what drives users to accept and adopt IEs, and one stream of research that can address this problem is user technology adoption.

In this article, we discuss the core constructs of current technology adoption models and the extent to which those constructs explain and predict user behavior. We categorize models into different classes to show their similarities and differences. In addition, we comment on the abilities of each to explain user acceptance and use of IE. The discussion covers considerations about the variables that mediate characteristics of an IE and its actual use, and how these variables relate to one another and user behavior. Finally, we discuss whether or not the current technology adoption models can answer our research questions: Can we apply any of the existing technology adoption models to the acceptance and use of IEs? What are the prominent factors of IEs to users? What are the unique concerns around IEs that have limited their adoption rate? How can we build more informative models to explain user adoption of IEs? Does a generic model work across different contexts (cars, homes, offices, etc.)?

2. Terminologies and basic concepts

The idea of embedding computers into the physical world was first investigated by Weiser (Citation1991) who coined the term “ubiquitous computing.” Malik (Citation2015) pointed to characteristics of ubiquitous or pervasive computing environments as follows: embedded (devices are implemented within the environments), transparent (users see the services rather than the operating systems), context-aware or -sensitive (operations are aware of the physical and logical context), human-centric (the system enables natural and intuitive interfaces), intelligent (operations can be automated and adapt to demands), and autonomous (operations can happen without user intervention or order).

Similarly, Ambient Intelligence (AmI) environments are often referred to as “embedded, context-aware, personalized, and adaptive with interconnected devices and sensors” (Acampora & Loia, Citation2005). When the concept was first proposed, AmI was described as an environment where “humans will be surrounded by intelligent interfaces supported by computing and networking technology which is everywhere, embedded in everyday objects” (Ducatel, Citation2001). According to Little and Briggs (Citation2005), there are five requirements for AmI: (1) unobtrusive hardware, (2) a seamless mobile or fixed communications infrastructure, (3) dynamic and massively distributed device networks, (4) naturalistic human interfaces, and (5) dependability and security.

Augusto et al. (Citation2013) explained how these concepts are different from one another. They explained pervasive computing as more focused on distributed computing, such as computer networks and distributed architecture, ubiquitous computing as more focused on human–computer interactions, and AmI to be centered around intelligence and the role of AI. Based on Augusto et al. (Citation2013), IEs refer to an environment where “the actions of numerous networked controllers (controlling different aspects of an environment) are orchestrated by self-programming preemptive processes (e.g., intelligent software agents) in such a way as to create an interactive holistic functionality that enhances occupants’ experiences” (p. 1). Therefore, the key characteristics of IEs include the use of intuitive interfaces, sensors and detectors, proactive functions, unobtrusiveness and invisibility, pervasive or mobile computing, sensor networks, AI, robotics, multimedia computing, middleware solutions, and agent-based software.

Abowd et al. (Citation1998) presented seven key requirements for ubiquitous smart spaces, another name for IEs: capturing everyday experiences, access to information, communication and collaboration support, natural interfaces, environmental awareness, automatic receptionist and tour guide, and training. Kaasinen et al. (Citation2012) characterized intelligent environments by the pervasive embedding of information and communication technologies into physical spaces. From their perspective, a user is not merely a passive subject but a “co-crafter” who is actively engaged in creating and updating the functions. According to Kaasinen et al. (Citation2012), an IE is any space in our surroundings, such as a home, car, office, or street, that is equipped with embedded sensors and devices which can interact with and adapt to users (who are also co-crafters of their environment) through AI-enabled context awareness and learning capabilities.

The words “smart” and “intelligent” have been used interchangeably in previous studies. The two terms are often treated as synonyms, but they are conceptually distinct when used to describe technology-enabled devices. According to Minerva et al. (Citation2015), “smart” refers to devices that can act independently, while “intelligent” devices can learn and adapt to situations in a way similar to humans. However, there is no apparent consensus, and the distinction has not been addressed in the literature.

Understanding what drives users to adopt an IE provides information about critical user needs, design considerations, and the barriers and factors that affect adoption. Existing technology acceptance and adoption theories, each with a different perspective, often originate in social psychology and were built around non-intelligent technologies, so they are limited in addressing why and how people adopt and use IEs. In this article, we analyze the most prominent technology adoption theories through a concept-centric review of a selective list of widely cited articles. We classify the theories based on their core constructs and discuss their benefits, application areas, and limitations when applied to the adoption of IEs.

3. Technology acceptance and adoption theories

In this section, technology adoption theories are classified into two types: theories that explain acceptance and adoption of technologies at the user level versus those that explain this phenomenon at the societal and cultural level.

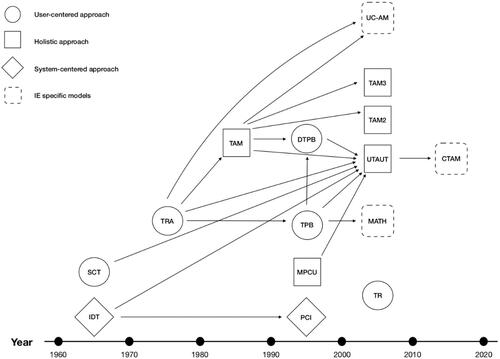

Figure 2 presents a summary of all the theories discussed in this article, ordered by the year in which their first version was published.

Figure 2. A summary of all the discussed theories. The year refers to when the first version of the theories was published. The figure only includes relationships between theories covered in the article. The arrows show the origins of the models.

3.1. Theories describing technology acceptance and adoption by individual users

The theories in this class are classified further into three categories: theories at the level of the user, system-centered theories, and theories with a holistic approach. The categories are not mutually exclusive but highlight the focal point of each theory and their core constructs.

3.1.1. Theories at the level of the user

Theories belonging to this category focus on user-related factors, such as motivation and attitude, to explain which user characteristics lead to the adoption of a technology.

3.1.1.1. Theory of Reasoned Action (TRA) and Theory of Planned Behavior (TPB)

The Theory of Reasoned Action (TRA) was developed by Ajzen and Fishbein (Citation1974) in the field of social psychology to predict human behavior. According to this theory, attitude (A) and subjective norms (SN) are the two predictors of behavioral intention (BI), and intention is most likely to lead to performing a behavior (BI = A + SN) (Lai, Citation2017).

The Theory of Planned Behavior (TPB) (Ajzen, Citation1991) complemented TRA by adding the construct of perceived behavioral control (PBC) as another predictor of behavioral intention. According to TPB, in some situations even strong intentions do not lead to actual behavior (Ajzen, Citation1991; Wahdain & Ahmad, Citation2014). Adding perceived behavioral control allows realistic limitations as well as self-efficacy to be considered. Ajzen (Citation1991) defined subjective norms as an individual’s perceptions of other people’s opinions of the behavior, whereas perceived behavioral control refers to people’s perception of the ease or difficulty of performing the behavior of interest (Wahdain & Ahmad, Citation2014).
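The additive shorthand BI = A + SN is usually read as a weighted combination rather than a literal sum. A minimal sketch of both theories under that reading follows; the weights $w_A$, $w_{SN}$, and $w_{PBC}$ are symbols introduced here for illustration (they would be estimated empirically, for example by regression) and are not taken from the cited sources.

```latex
\begin{align}
\text{TRA:}\quad BI &= w_{A}\,A + w_{SN}\,SN \\
\text{TPB:}\quad BI &= w_{A}\,A + w_{SN}\,SN + w_{PBC}\,PBC
\end{align}
```

Written this way, TPB reduces to TRA when $w_{PBC} = 0$, which makes explicit that perceived behavioral control is an added predictor rather than a replacement for attitude or subjective norms.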

TPB has been widely used in the domain of public health. Predictive factors in this theory (attitude, subjective norms, availability of resources, opportunities and skills, and perceived significance of the resources) mostly emphasize the social context and user characteristics. Although the core constructs of this model can be informative in explaining why people adopt technologies, the model is not specific to technology usage and lacks technology- and task-related factors when applied to explain acceptance and use of IEs.

3.1.1.2. Technology Readiness (TR)

Technology readiness (TR), an individual’s tendency to adopt and use new technologies (Parasuraman, Citation2000), is measured using the technology readiness index (TRI), which consists of 36 items broadly categorized into four constructs: optimism, innovativeness, discomfort, and insecurity. Parasuraman (Citation2000) defined innovativeness as “a tendency to be a technology pioneer and thought leader.” In TRI, optimism refers to an individual’s positive view toward a technology that shapes their perceived control, flexibility, and efficiency, while discomfort identifies a user’s perceived lack of control over the technology. Insecurity reflects a user’s inability to rely on technology. Optimism and innovativeness promote TR, while discomfort and insecurity inhibit it.

Parasuraman and Colby (Citation2015) updated the original index to account for new themes and advancements in technology. They streamlined TRI by rewording existing items and adding new items to reflect other potential technology adoption determinants, such as distraction, impacts on relationships, dependency, and social pressures. The revised index (TRI 2.0) is more succinct, with 16 items, and more applicable to a contemporary context because the items are technology-neutral and worded in a way that is relevant to current technologies. Although TR has been used to examine the adoption behavior of a wide variety of technology-based devices and services, ranging from cryptocurrency (Alharbi & Sohaib, Citation2021) to e-learning (Kaushik & Agrawal, Citation2021), few studies have used this model in the context of IEs.
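The promoting and inhibiting roles of the four constructs can be illustrated with a small scoring sketch. It assumes four items per construct (consistent with the 16 items of TRI 2.0), a 1 to 5 agreement scale, and an overall score formed by reverse-coding the two inhibitors before averaging. The item keys and the aggregation rule are illustrative assumptions, not the published instrument.

```python
from statistics import mean

# Hypothetical item keys: four items per construct, 16 in total.
DIMENSIONS = {
    "optimism": ["opt1", "opt2", "opt3", "opt4"],
    "innovativeness": ["inn1", "inn2", "inn3", "inn4"],
    "discomfort": ["dis1", "dis2", "dis3", "dis4"],
    "insecurity": ["ins1", "ins2", "ins3", "ins4"],
}
INHIBITORS = {"discomfort", "insecurity"}  # constructs that lower readiness
SCALE_MIN, SCALE_MAX = 1, 5                # assumed agreement scale


def dimension_scores(responses):
    """Average the item responses belonging to each construct."""
    return {
        dim: mean(responses[item] for item in items)
        for dim, items in DIMENSIONS.items()
    }


def overall_readiness(responses):
    """Reverse-code the inhibitors, then average the four construct scores."""
    scores = dimension_scores(responses)
    adjusted = [
        (SCALE_MIN + SCALE_MAX - score) if dim in INHIBITORS else score
        for dim, score in scores.items()
    ]
    return mean(adjusted)


# Hypothetical respondent: optimistic, moderately innovative,
# low discomfort, very low insecurity.
respondent = {}
for dim, value in [("optimism", 4), ("innovativeness", 3),
                   ("discomfort", 2), ("insecurity", 1)]:
    for item in DIMENSIONS[dim]:
        respondent[item] = value

print(dimension_scores(respondent))
print(overall_readiness(respondent))
```

Reverse-coding keeps the direction of the overall score consistent: higher values always indicate greater readiness, whichever construct contributed them.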

3.1.1.3. Social Cognitive Theory (SCT)

SCT originated in psychology (Bandura, Citation1986). Researchers (e.g., Compeau et al., Citation1999) used this theory to understand individuals’ cognitive (self-efficacy, performance-related outcome expectations, and personal outcome expectations), affective (affect and anxiety), and usage reactions to technologies. In a longitudinal study by Compeau et al. (Citation1999), perceived consequences of using computers were divided into two dimensions: performance-related and personal outcomes. Their findings show that computer self-efficacy and outcome expectations significantly impacted individuals’ affective and behavioral reactions to information technology. Moreover, they found that computer self-efficacy is a continuing predictive factor of technology use and adoption over a long period of time. Although SCT provides a solid theoretical framework by considering different intervening factors in human behavior, it has not widely been adopted in the area of technology adoption.

3.1.2. System-centered theories

In this category, theories focus on the characteristics of the technologies.

3.1.2.1. Innovation Diffusion Theory (IDT) or Diffusion of Innovation Theory (DoI)

Innovation Diffusion Theory (IDT) or Diffusion of Innovation Theory (DoI) is rooted in sociology and has been used since 1960 to study innovations in different fields (Rogers, Citation1995; Venkatesh et al., Citation2003). IDT puts forward a framework that explains the innovation diffusion process and how users ultimately decide whether to adopt or reject an innovation (Hubert et al., Citation2019). According to this theory, five factors influence the rate of diffusion of an innovation: relative advantage, compatibility, complexity, trialability, and observability (Rogers, Citation1995). Relative advantage is the value assigned to the innovation relative to the current practice. Rogers defined compatibility as the extent to which the user perceives that the innovation aligns with their values, experiences, and needs. Complexity refers to the user’s perception of the difficulty of understanding and using an innovation, trialability measures the extent to which the innovation can be experimented with before adoption, and observability refers to the visibility of an innovation’s results to prospective users (Kaminski, Citation2011; Rogers, Citation1995; Walldén & Mäkinen, Citation2014).

Past studies have used IDT in conjunction with the technology acceptance model (TAM, see section 3.1.3) to gain a better understanding of the decision-making process involved in users’ adoption behavior (Hubert et al., Citation2019; Lee, Citation2014). For example, Hubert et al. (Citation2019) drew from TAM, IDT, and perceived risk theory when developing an adoption model. They suggested that a combination of TAM and IDT can enhance our understanding of adoption behavior and explain the differences between intention to use and actual usage.

3.1.2.2. Perceived Characteristics of Innovation (PCI)

Moore and Benbasat (Citation1991) adapted Innovation Diffusion Theory (IDT) and identified factors that explain the use and acceptance of new technology: relative advantage, ease of use, image, visibility, compatibility, result demonstrability, and voluntariness of use (Walldén & Mäkinen, Citation2014). In an effort to address the limitations of IDT, Moore and Benbasat (Citation1991) noted an important distinction between the primary characteristics of innovations and the perception of these characteristics. They suggested that the latter is of greater relevance because users’ behavior depends on their perception of the primary characteristics. They therefore argued that studies of the diffusion of innovations should focus on perceptions of using the innovation, and they reworded the pre-existing constructs accordingly. Moreover, the observability construct from IDT was divided into result demonstrability and visibility because it was found to encompass two separate concepts. Image refers to the user’s status in a social system, and voluntariness is the user’s perception that use of a technology is voluntary (Moore & Benbasat, Citation1991). These two factors were added because the researchers felt that IDT lacked measures of social approval and of users’ freedom to make their own decision to adopt or reject an innovation, both of which can influence adoption behavior.

3.1.3. Theories with a holistic approach

In this category, theories combine several different aspects of the technology adoption process by considering the characteristics of the user, system, environment, tasks, and context.

3.1.3.1. Technology Acceptance Model (TAM)

TAM is the most commonly used and empirically supported theory (Legris et al., Citation2003; Peek et al., Citation2014; Wahdain & Ahmad, Citation2014). TAM was developed from the theory of reasoned action (TRA) and was built to study the use of IT systems in organizations where usage was voluntary (Dishaw & Strong, Citation1999). Similar to TRA, TAM suggests that user beliefs mediate between the external variables and intention to use (Venkatesh, Citation2000). Two key beliefs, perceived ease of use (PEU) and perceived usefulness (PU), predict behavioral intention in this model (Dishaw & Strong, Citation1999). In the early version of TAM, attitude was defined as the mediator between these two beliefs (PEU and PU) and intention to use. However, as the model was developed, the attitude construct was eliminated because it was found not to be a significant predictor of intention to use (e.g., Legris et al., Citation2003). While PEU and PU are both independent variables, PU is more important in determining system use, and PEU also shapes people’s perception of a system’s usefulness (Dishaw & Strong, Citation1999; Legris et al., Citation2003).
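In TAM, perceived ease of use is commonly modeled as affecting behavioral intention both directly and indirectly through perceived usefulness. The sketch below shows how a total effect is decomposed in such a path model. The path coefficients are hypothetical values chosen for illustration, not estimates from any of the cited studies, and the helper assumes the path graph has no cycles.

```python
# Hypothetical standardized path coefficients (illustration only).
PATHS = {
    ("PEU", "PU"): 0.40,  # ease of use -> usefulness
    ("PEU", "BI"): 0.20,  # ease of use -> intention (direct)
    ("PU", "BI"): 0.50,   # usefulness -> intention
}


def total_effect(paths, source, target):
    """Sum the products of coefficients along every directed path
    from source to target (assumes an acyclic path graph)."""
    if source == target:
        return 1.0
    return sum(
        coef * total_effect(paths, mid, target)
        for (start, mid), coef in paths.items()
        if start == source
    )


direct = PATHS[("PEU", "BI")]
total = total_effect(PATHS, "PEU", "BI")
indirect = total - direct  # the part of the effect carried through PU

print(f"direct={direct:.2f} indirect={indirect:.2f} total={total:.2f}")
```

With these illustrative numbers, half of the total effect of PEU on intention runs through PU, which is one way to express why PU can dominate even when PEU matters.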

To explain PEU, PU, and intention to use, Venkatesh and Davis (Citation2000) developed TAM2. This extended model was tested in both voluntary and mandatory technology use settings, accounting for social influence (subjective norms, voluntariness, and image), cognitive instrumental processes (job relevance, output quality, and result demonstrability), and experience, and explaining 60 percent of user adoption.

In 2008, Venkatesh and Bala developed TAM3 (Venkatesh & Bala, Citation2008). Similar to TAM2, TAM3 was built to further uncover the determinants of PEU and PU. They found that subjective norms, image, job relevance, output quality, and result demonstrability influence PU. PEU is influenced by anchor variables (computer self-efficacy, perceptions of external control, computer anxiety, computer playfulness) and adjustment variables (perceived enjoyment and objective usability), while experience and voluntariness act as modifiers of behavioral intention.

Previous research has discussed the shortcomings of TAM, which may limit its applicability to IEs. Although TAM stems from psychology, it does not explain individual differences (Walldén & Mäkinen, Citation2014). McFarland and Hamilton (Citation2006) also pointed out that TAM may be too general and does not fully consider the impacts of contextual variables. They explained that this can be related to the fact that TAM does not differentiate between the task and the complexity of the system so users may score the system based on the difficulty of the task rather than the characteristics of the system. Another issue with TAM is that the items and questions ask about the user experience of a system that is supposed to accomplish specific goals. However, in intelligent environments, user interactions, goals, and tasks are undefined and unlimited.

Given that TAM was developed based on TRA, the role of control, which was defined in TPB, was not explicitly incorporated in the theoretical development of TAM (Lai, Citation2017; Venkatesh, Citation2000; Venkatesh & Davis, Citation1996). Taylor and Todd (Citation1995) combined TAM and TPB and created a version of TPB called the decomposed TPB. In this model, subjective norms, attitudes, and PBC are replaced with constructs from information systems research to account for different usage situations. The theory also provides a generic questionnaire, which avoids the need to tailor an instrument to each setting as TPB requires. Results from an empirical comparison between TAM and the decomposed TPB showed a slight increase in the predictive ability of the latter over TAM, at the cost of more complexity (Lai, Citation2017; Mathieson et al., Citation2001).

Another critique of TAM is that it ignores social factors that could play a role in the adoption of technology. Previous studies investigating the influence of social factors on people’s adoption of a technology show contradictory results (e.g., Malhotra & Galletta, Citation1999). Davis et al. (Citation1989) explained that the subjective norm scale was very weak from a psychometric standpoint and might not explain consumers’ behavioral intentions. Lai (Citation2017) explained that social norms can mostly explain behavioral intention to use in a mandatory environment, and this may be evident in IE settings. For example, smart home services might be adopted by a household of four in which only one or two of the residents decided to incorporate the services and the other residents became mandatory users. Moreover, when TAM is applied to collaborative systems, it is often observed that the belief structures (perceived ease of use and perceived usefulness) are not stable (Malhotra & Galletta, Citation1999). This is an important point when applying this model to IEs because the environment may be used by more than one user.

3.1.3.2. Unified Theory of Acceptance and Use of Technology (UTAUT)

Venkatesh et al. (Citation2003) built the Unified Theory of Acceptance and Use of Technology out of eight existing theories: TAM, TRA, combined TAM and TPB, TPB, MPCU, IDT, Motivational Model, and SCT. The new model has four predictors of users’ behavioral intention: performance expectancy, effort expectancy, social influence, and facilitating conditions. Effort expectancy represents perceived ease of use and complexity, and performance expectancy includes perceived usefulness, extrinsic motivation, job-fit, relative advantage, and outcome expectations (Lai, Citation2017). In addition, four significant moderating variables were identified: gender, experience, age, and voluntariness of use (Taherdoost, Citation2018).

UTAUT is an extension from TAM2 and TAM3 to include social influence and is capable of explaining up to 70% of adoption (Peek et al., Citation2014). Although UTAUT resolves several issues that previous theories have, it is built upon system usage in a workplace environment. The workplace environment and the factors that influence adoption in the workplace would likely differ from determinants of usage in the home or other environments. Moreover, this model—like the other existing models—is built to measure the intention to use and use behavior of specific systems with limited and defined goals which might not be the case in IEs.

3.1.3.3. PC Utilization Model or Model of PC Utilization (MPCU)

The Model of PC Utilization (MPCU) was built by Thompson et al. (Citation1991) and is based on Triandis’ Theory of Human Behavior (Venkatesh et al., Citation2003). In this model, personal computer usage can be directly explained and predicted by the following factors: affect, facilitating conditions, long-term consequences of use, perceived consequences, social influences, complexity, and job fit. Although the model was created with the intention of predicting PC utilization, the nature of the model also allows us to predict a wider range of information technologies acceptance.

For the first test of MPCU, Thompson et al. (Citation1991) surveyed workers who optionally used a PC in their job. They found that all but two factors (affect and facilitating conditions) had a significant impact on PC utilization. In another study, Thompson et al. (Citation1994) extended MPCU to study how experience with PCs influences the utilization of PCs. In this study, a questionnaire was sent to participants from eight different companies (N = 219). The main findings of the study can be summarized in three points. First, experience directly influences utilization. Second, experience also indirectly affects utilization, but these effects are less prominent than the direct effects. Third, experience plays an important role in moderating the effects of all the aforementioned factors on utilization, except for job fit. However, MPCU is limited to a specific system, and its predictive factors are derived from people’s interaction with PCs, which may not include all the factors involved in user adoption of an IE.

3.2. Theories describing technology acceptance and adoption at the societal level

In addition to theories and models describing individual users’ adoption of technology, some frameworks outlined how technology acceptance and adoption take place at a macro and societal level as described in this section.

3.2.1. Domestication theory

Domestication theory first began to take shape at the beginning of the 1990s and stemmed from anthropology and studies of consumption behaviors (Haddon, Citation2006). This theory is used to study the ways in which technology becomes integrated into people’s lives, and aims to illustrate the importance of technology and what it means to people in their day-to-day routines. One unique feature of the domestication approach compared to other theories is that it examines the wider context to better understand how people give meaning to different technologies, what roles these technologies play for people, and how people experience them in day-to-day life. Early domestication studies primarily examined the usage and adoption of either a collection of ICTs or specific devices in the home (Haddon, Citation2006). Unlike other theories, domestication considers group structure in households and workplaces as well as individual behavior. With regard to smart homes, this theory is particularly appropriate because the decision to start using new technologies may depend on or be influenced by familial interactions, or as Haddon (Citation2006) puts it, “the politics of the home.” In short, the advantage of domestication theory is that it allows researchers to examine technology usage in realistic settings by considering the social context and the interaction of complex factors on different levels, such as media and culture (Hynes & Richardson, Citation2009).

Despite the many advantages of this theory, it is equally important to note its limitations. Haddon (Citation2006) comments on two drawbacks of domestication theory. First, its methodologies are largely qualitative. Second, there is no centralized method or factor to refer to when applying the theory. Addressing these two limitations would help studies apply the domestication approach with more practical implications.

3.2.2. Innovation Diffusion Theory (IDT)

We placed IDT in the category of system-centered theories and defined it as a theory that focuses on the characteristics of a system or an innovation. However, this theory has other angles that can explain adoption beyond the system level. In addition to the concept of innovation and its characteristics discussed above, the theory introduces the elements of communication, time, and social system (Lundblad, Citation2003). Rogers (Citation1995) highlighted the importance for the adoption rate of communication, that is, the process by which innovations are transmitted to or within the social system through channels such as mass media and interpersonal contact. The time element covers the timeframe from when the innovation is introduced to the first adopters, the inclination of an individual to adopt a new idea, and the rate or speed of adoption (Lundblad, Citation2003). According to Rogers (Citation1995), the adoption of an innovation does not happen at the same speed across the individuals of a society. He introduced adopter categories that many organizations use to investigate their product success and marketing: innovators, early adopters, early majority, late majority, and laggards.
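Rogers’ adopter categories are conventionally assigned by how far an individual’s time of adoption lies from the population mean, measured in standard deviations. The sketch below assumes that convention (the thresholds are not stated in this article) and uses hypothetical adoption times. Which category receives a value that falls exactly on a boundary is an arbitrary choice made here.

```python
from statistics import mean, pstdev


def adopter_category(adoption_time, mu, sigma):
    """Assign a category from the distance between an adoption time
    and the mean adoption time, in standard deviations."""
    if adoption_time < mu - 2 * sigma:
        return "innovator"
    if adoption_time < mu - sigma:
        return "early adopter"
    if adoption_time < mu:
        return "early majority"
    if adoption_time < mu + sigma:
        return "late majority"
    return "laggard"


def categorize(adoption_times):
    """Categorize every adopter using the sample's own mean and spread."""
    mu = mean(adoption_times)
    sigma = pstdev(adoption_times)
    return [adopter_category(t, mu, sigma) for t in adoption_times]


# Hypothetical months-to-adoption for five households.
print(categorize([1, 2, 3, 4, 5]))
```

Under a normal distribution of adoption times, these thresholds yield the familiar bell-shaped split in which the two majority groups make up most adopters; a small sample such as the one above will not reproduce those proportions.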

With its consideration of different distribution channels, IDT has helped industry and market research to understand the diffusion of an innovation based on the target user and the stage of product development in sectors such as education, healthcare, and information technology. IDT is a useful example for future research, specifically in the area of IEs, of how to incorporate a more holistic approach to understanding adoption through user attitude and personality characteristics.

Table 1 shows a summary of the theories and groups into the same category the core constructs of different models that have similar meanings but different names.

Table 1. The core constructs of the nine prominent theories.

Core constructs of different technology adoption models address different aspects of consumers’ needs and desires; emotional response is one of them. Among all the core constructs shown in Table 1, the following relate to future consumers’ capabilities, limitations, and emotional responses: attitude, optimism, perceived control, self-efficacy, discomfort, trust, affect, subjective norm, social influence, image, perceived value, usefulness, relative advantage, performance expectancy, and long-term consequences of use. This long list shows the importance of emotional responses as a strong predictive factor of consumers’ technology adoption. Therefore, future research should investigate how developers can take these factors into account and address them practically.

4. Literature review on technology acceptance and adoption of intelligent environments

Various comparative studies have been conducted to determine which technology adoption theories explain acceptance and predict adoption of these environments, and which factors have the greatest impact on purchase intention. Kaasinen et al. (Citation2012) identified ten factors in previous studies that have significant impacts on user acceptance of IEs: usefulness, value, ease of use, a sense of being in control, integrating into practices, ease of taking into use, trust, social issues, cultural differences, and individual differences. Another study concentrated on the user experience of IoT (Park et al., Citation2017). Based on the extraction of factors from expert interviews and subsequent testing of hypotheses about factor relationships, a new model was presented. Findings revealed that users’ attitude toward IoT technologies is the greatest predictor of their intention to use them. Moreover, perceived compatibility and cost also showed major impacts on intention to use. However, this study did not consider the types and numbers of IoT services, and it investigated only experts’ opinions rather than those of actual users.

A study by Shuhaiber and Mashal (Citation2019) investigated the acceptance of smart homes by residents through a survey. They showed that in addition to perceived usefulness and perceived ease of use (the two predictive factors from the technology acceptance model), trust, awareness, enjoyment, and perceived risks significantly influence attitude toward smart homes and consequently the intention to use. However, there are some limitations to the design of this study. First, the source of the employed questionnaire is unknown. Second, participants were recruited on the basis of their self-reported awareness of smart home concepts. In other words, the researchers did not explain the specifications or functions of a smart home. Self-reported awareness is a broad concept that can cover a variety of respondents, from frequent users of many smart home services to non-users who are aware of some of the existing products.

Yang et al. (2018) explored the association between four critical factors (perceived automation, perceived controllability, perceived interconnectedness, and perceived reliability) and the adoption of smart homes. Results of their survey showed that controllability, interconnectedness, and reliability, but not automation, were significant in influencing smart home adoption. However, their rationale for choosing these four factors and excluding other constructs was not explained in the article.

Interestingly, the researchers found varied results when samples were divided by residence type, gender, age, and experience, which suggests that different users value different features in IoT products. For example, controllability and interconnectedness were found to affect adoption for apartment dwellers, whereas automation and reliability were of greater importance for general home dwellers. Another trend in the group comparisons was that men valued interconnectedness more than women did, while women valued reliability more than men did. One limitation of this study is that it is unclear how and why the researchers decided to focus on these factors. Moreover, the research model is not based on existing adoption models or theories, which makes it difficult to evaluate the applicability of these findings to the adoption of IEs.

De Boer et al. (2019) extended TAM by investigating the role of user skills as predictors of IoT technology acceptance and usage in the home. IoT skills were hypothesized to determine usage by influencing PEU and PU. Since it is unclear exactly what skills are required to operate IoT technology, the study uses Internet skills as an indicator of IoT skills based on the logic that Internet skills are transferable. The results from an online survey showed that IoT skills do in fact contribute to IoT use; skills were found to influence PU and PEU in indirect and direct ways. The researchers believe that these findings demonstrate the importance of users’ evaluation of their own IoT skills when beginning to use IoT technology, and that this judgment in turn shapes their assessment of ease of use and usefulness. While the findings seem promising, one limitation is that the systems and features explored in this study are not clear. Another limitation is that the original TAM was intended to test specific tasks, but this study did not apply the model in that way. The researchers considered that the lack of a clearly defined task might have caused mixed results in technology perceptions.

Venkatesh and Brown (2001) developed the model of adoption of technology in households (MATH) based on TPB (Ajzen, 1985). In line with Taylor and Todd’s (1995) version of TPB, Brown and Venkatesh (2005) put forth a decomposed belief structure for home PC adoption. In this model, the attitudinal belief structure is made up of utilitarian outcomes, hedonic outcomes, and social outcomes; the normative belief structure is composed of social influences from the people in an individual’s social network; and the control belief structure consists of three constraints that inhibit adoption behavior: knowledge, difficulty of use, and cost. Brown and Venkatesh (2005) defined utilitarian outcomes as the effectiveness of household activities, hedonic outcomes as the experience of pleasure, and social outcomes as public recognition. In this study, two rounds of large-scale phone interviews with different households helped pinpoint detailed dimensions within the theorized constructs. For example, the overarching social influence factor can be divided into influences from friends and family, and the influence of information from secondary sources. One of the key contributions of this model is that it highlights the differences in driving factors between adopters and non-adopters.

Brown and Venkatesh (2005) conducted a quantitative test of MATH and extended the model by exploring the effects of life cycle characteristics and income on adoption behavior. The results from a survey of households that had not adopted a PC show that the influence of attitudinal beliefs varies by life cycle stage. For example, the utility for work-related use was of greater importance in the stages of life that are associated with prime working-age years. Consistent with the typical life cycle of a working individual, the importance of this factor increased and then decreased toward retirement age. Another key finding was that income interacts with the normative and control beliefs within the life cycle stage. In the stages of life where normative beliefs were deemed important, the influence of other people’s opinions decreased as income increased. With regard to control beliefs, the influence of fear of technological change, declining cost, and cost decreased as income increased. Thus, it is important for companies to consider how the life cycle characteristics of households influence technology adoption. Brown and Venkatesh (2005) also point out that income is a determinant but is not the sole driver of non-adoption. One of the strengths of this model is that it examines the adoption of technology in households while attempting to understand household decision-making processes. However, one limitation is that MATH specifically examines determinants of PC adoption, so the applicability of the model to the usage of smart home devices has yet to be determined. Also, MATH is tailored to a PC, which differs from a smart home in several ways. For instance, applying the model to smart home devices may require more constructs because smart homes can be used for a wider variety of purposes than a PC. Additionally, the primary decision maker may hold views that differ from those of other household members.

Another relevant theory in the area of IEs is the Ubiquitous Computing Acceptance Model (UC-AM), which was built to investigate factors that drive consumers to adopt, or impede them from adopting, a new service landscape (Spiekermann, 2007). UC-AM was developed based on TRA and TAM to predict whether potential users accept ubiquitous computing environments. Attitude is a central concept in this model, as TPB and TRA have shown its strong correlation with behavioral intentions. Spiekermann (2007) differentiates two types of attitude: cognitive and affective. Cognitive attitude refers to the perceived advantage or disadvantage of performing the behavior, and affective attitude refers to a person’s feelings about performing it; the model assumes that both have positive impacts on the intention to use a system. UC-AM indicates that cognitive and affective attitudes are the primary determinants of technology acceptance or intention to use. Moreover, this model assumes that the usefulness construct from TAM is applicable to ubiquitous computing environments, since this construct refers not only to job performance but also to the outcome of system adoption as rooted in expectancy-valence theory. One difference is that ease of use from TAM is not included in this model because intuitive interaction is a requirement in ubiquitous computing environments.

UC-AM also accounts for user-perceived control, perceived risk, and influential factors of user emotion and technology acceptance. Spiekermann (2007) found that privacy concerns hardly show any influence on behavioral intention, affective attitude, and cognitive effort toward a service, thereby concluding that people’s privacy concerns do not drive their behavior. This study also reports that functional risk shows no significant influence on attitudes or the intention to use ubiquitous computing services. Shin (2009, 2010) assessed the model in the ubiquitous city context to provide guidelines for u-city designers. Results showed that trust has an indirect impact on intention to use and is mediated by perceived risk and benefit. Therefore, contrary to Spiekermann’s (2007) claim, risk may influence behavior depending on the type of technology and should be considered in the context of IEs.

Although UC-AM is built on strong theories in the area of technology adoption, there are some limitations to its methodology. The model was tested on people’s perception and acceptance of scenarios accompanied by graphics that are supposed to represent three ubiquitous computing services from different contexts: an intelligent fridge that autonomously places orders, an intelligent speed adaptation system (ISA) that brakes the car automatically when the route’s speed limit is exceeded, and a car that services itself and schedules a meeting with a garage if parts are about to wear out. People’s perceptions of these scenarios might differ from their actual usage of the system or experience of living in an AI-enabled environment.

User adoption of highly automated cars as a kind of intelligent system and environment has been studied as well. Studies using UTAUT to measure the acceptance of driver support systems (Adell, 2010) and automated road transport systems (Madigan et al., 2016) report low explanatory power (20%) for behavioral intention. These findings suggest that UTAUT fails to capture other determinant factors. To address the gaps in UTAUT, Osswald et al. (2012) built the Car Technology Acceptance Model (CTAM) upon the UTAUT model. They introduced anxiety and perceived safety as additional factors that should be considered in the evaluation of in-car information systems. Hewitt et al. (2019) adapted CTAM to autonomous vehicles and called the result the Autonomous Vehicle Acceptance Model (AVAM). They found that people’s intention to use autonomous vehicles decreases as the level of automation, defined by the SAE levels of automation (SAE International, 2018), increases.

User adoption of higher automation levels (SAE levels 3, 4, and 5) was investigated by Detjen et al. (2021). Based on the findings of previous studies and the gaps in existing models, they found five categories that explain the acceptance of autonomous vehicles: Security & Privacy, Trust & Transparency, Safety & Performance, Competence & Control, and Positive Experiences.

Table 2 shows all the predictive factors identified in previous studies on user adoption of IEs. Among these, usefulness, ease of use, sense of being in control, affect, and perceived risk are the most common factors across the reviewed articles.

Table 2. A summary of predictive factors in user adoption of IEs.

5. Existing gaps and future considerations

Adoption and acceptance models provide a solid base for understanding and investigating technologies and how behavior (usage and adoption) is a result of beliefs and/or affective reactions to a technology in a specific context. Each of the existing theories uncovered determinant factors in individuals, situations, tasks, and society that can be used as a base for future studies. However, these theories may not be able to describe and predict user acceptance and adoption of IEs. Below, we identify the drawbacks of the current studies.

First, the existing models and theories were built to understand the adoption of simpler technologies which do not represent current sophisticated, multi-purpose, embedded, intelligent, and intuitive technologies and their ecosystems. In IEs, users are surrounded by many computing and communicating devices. These complex environments introduce new evaluation challenges, such as invisibility (implementing computing devices into the environment), interoperability (the ability of different systems to work together), and modulability (capability to adjust and accommodate changes in the environment and user’s preferences) (Malik, 2015), all of which require new assessment measures and considerations.

Second, many of the previous models have been developed to test the technology in the workplace where an individual user’s attitudes may be less determinative of system adoption (Davis et al., 1989), regardless of the voluntariness of system use. Attitude is likely to be of greater importance in the domain of IEs because it probably holds more weight in the user’s decision to adopt the technology relative to workplace environments.

Third, many of the existing models have been tested by other studies in the early stages of new technology acceptance which does not account for the technology adoption journey and continuous usage. The main dependent variable in many of the models is the intention to use a system, which is important in terms of its ability to describe system acceptance but may not be enough to predict the actual long-term usage and successful distribution and delivery of promised benefits.

Fourth, the meaning of core constructs and factors should be reassessed and updated. New technologies introduce new functionalities and expand the applications and concepts. Kaasinen et al. (2012) discussed how concepts such as “value” or “benefit” might explain usefulness better, where the former addresses users’ appreciation of and motivation for the technology rather than a specific need.

Fifth, with new technologies and higher degrees of automation, new use cases and needs will be introduced to users. Kaasinen et al. (2012) noted that users expect an intelligent environment to help them with decision-making, keeping up with popular trends, increasing their safety and personal freedom, acquiring higher social status, improving cost management, and other higher-level, complicated tasks. New models need to consider the impact of automation on users and challenges such as security, privacy, trust, and transparency (e.g., Detjen et al., 2021) in highly automated environments, which might not have been determinant factors for older technologies.

Sixth, most of the models and theories do not provide sufficient information for designers and developers about the system design considerations that increase user acceptance of new systems. For example, many existing IEs require users to understand basic computing technologies, so the skill factor, which is closely related to previous experience and self-efficacy, is also important in the design of IEs.

Seventh, many of the models have been tested with samples that lack diversity in user characteristics. Some studies (e.g., Lee, 2014; Morris & Venkatesh, 2000) showed that the predictive factors of technology adoption are different for older and younger people.

Finally, reviewing the core constructs of the technology adoption models shows that only a few factors are consistent across the models. This suggests that the models overlap little and take diverse views. Social influence, usefulness, and ease of use are the three factors that recur most often across the technology adoption models. Among factors specific to IEs, usefulness, ease of use, sense of being in control, enjoyment, attitude, and perceived risk are highlighted by the studies (Table 2). Limitations in the design and methodology of these studies (e.g., reliance on laboratory studies or expert interviews) raise two questions: what are the most important factors that we should focus on, and what are the other determinant factors in user adoption of IEs?

6. Toward a new model for intelligent environment adoption

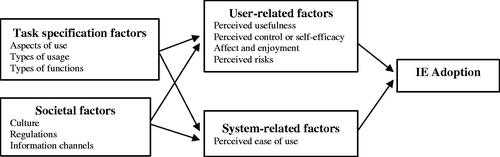

With the identified lessons and gaps, we propose a conceptual model (Figure 3) that summarizes the insights from the reviewed studies and their limitations. The goal of this preliminary model is to show where the current IE adoption research stands and what directions are open for future studies.

Figure 3. A preliminary model that summarizes predictive factors in IE adoption in accordance with four major themes.

In this model, we define four themes. All themes are identified by grouping the factors and core constructs from previous models to cover all the existing categories of technology adoption factors. However, we only selected technology adoption factors that have been repeatedly shown as important and predictive ones in our literature review. The identified factors fall into one of these themes: user-related factors, system-related factors (i.e., environment), task-specific factors, and societal factors.

Among the most common factors across IE adoption studies, perceived ease of use falls into the system-related category that can also be identified as “environment” in this scope.

Perceived usefulness, perceived control or self-efficacy, affect and enjoyment, and perceived risks fall into the user-related category, which defines the characteristics of user attitudes that can be counted as predictive factors in IE adoption.

Regarding societal factors, which play an important role in today’s diffusion of ideas and new products given the growing number of information channels entering our lives, three general factors (culture, regulations, and information channels) have been highlighted. With all the infrastructure and outside services that can be involved in different layers of IEs, culture, regulations, information channels, and speed of information distribution can have great impacts on the adoption process.

The literature review showed little agreement on task-specific factors. Different aspects of use (consuming, communicating, etc.), types of usage (general or specific use), and types of functions (shared and personal) with respect to technology adoption have been briefly discussed in different studies. The place and impacts of the last two themes (societal and task-specific factors) require more investigation.
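For readers who want to work with the four themes programmatically (for example, to code survey items or tag findings from further studies), the grouping described above can be sketched as a simple lookup structure. This is only an illustrative sketch: the theme and factor labels are taken from the text, while the dictionary layout, the name `IE_ADOPTION_MODEL`, and the helper `theme_of` are assumptions introduced here and are not part of the proposed model.

```python
from typing import Dict, List, Optional

# Themes and factors as named in the text. The dictionary layout is an
# illustrative assumption, not part of the published model.
IE_ADOPTION_MODEL: Dict[str, List[str]] = {
    "user-related": [
        "perceived usefulness",
        "perceived control or self-efficacy",
        "affect and enjoyment",
        "perceived risks",
    ],
    "system-related (environment)": ["perceived ease of use"],
    "societal": ["culture", "regulations", "information channels"],
    # The review reports little agreement on this theme, so these entries are
    # dimensions discussed in the literature rather than settled factors.
    "task-specific": ["aspects of use", "types of usage", "types of functions"],
}


def theme_of(factor: str) -> Optional[str]:
    """Return the theme a factor is grouped under, or None if it is not listed."""
    wanted = factor.strip().lower()
    for theme, factors in IE_ADOPTION_MODEL.items():
        if wanted in factors:
            return theme
    return None


if __name__ == "__main__":
    for name in ("perceived ease of use", "culture", "trust"):
        print(f"{name!r} -> {theme_of(name)}")
```

A factor that the model does not list, such as trust, returns `None`, which mirrors the point that the preliminary model includes only factors repeatedly shown to be predictive in the reviewed literature.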

7. Summary and implications

The ubiquitous, immersive, and pervasive nature of IEs means that an IE is more than a simple apparatus or an artifact that, at most, users engage with; it is a space that users exist within. These properties make IEs different, and their adoption perhaps cannot be understood through a single existing technology adoption model or even a synthesis of several. This study aimed at understanding what theories and factors can best explain user adoption of IEs. To this end, nine prominent and widely used technology adoption theories were discussed. The discussion was followed by introducing previous studies on IE adoption and new models and approaches that attempted to explain predictive factors in the adoption of IEs.

Each adoption theory provides a solid base for future studies to understand different angles of technology adoption and can be borrowed and applied to the area of IE adoption. Perceived usefulness, ease of use, and social influence have been determined as predictive factors of technology adoption by several theories. In the area of IEs, our literature review shows that the studies emphasized perceived usefulness, ease of use, perceived control or self-efficacy, affect and enjoyment, and perceived risks as the prominent factors, regardless of the type of environment and goals. The extracted factors from the literature require more studies to bridge the gap between these high-level concepts and design considerations. Field studies, usability testing, and other methods should be applied to understand the role of these factors, their importance, and their connection to one another.

However, the existing technology adoption theories fall short in several respects. The current theories were built on user interaction with simple technologies in the workplace, where factors such as perceived risks, privacy, security, voluntariness, and control may have different meanings and perceived importance than in other spaces, such as homes. In addition, new meanings and expectations can be associated with the same constructs, or their concepts can be expanded, as more complex and automated technologies are considered. Another significant critique of existing models is that they provide very limited information on how the technology can be improved and what design considerations should be taken into account. The narrow scope of research in the types of recruited participants or stages of adoption is another shortcoming that opens the door to further investigation of determinant factors in the acceptance and adoption of IEs.

Based on the advantages and limitations discussed around existing theories, this article presents a new preliminary model to understand, describe and predict user acceptance and adoption of IEs. The conceptual components of the present model may not include the entire set, but this preliminary model may serve as a good starting point to provide a legitimate framework for research on the acceptance and adoption of IEs. The themes and the components of this model are supported by the literature reviewed in the present article. The quantitative relationship between the themes and their components should be validated with further empirical research, revealing more critical factors and plausible mediating effects.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Shabnam FakhrHosseini

Shabnam FakhrHosseini is a Research Scientist at the MIT AgeLab. She received her PhD in Applied Cognitive Science and Human Factors and conducts research on a variety of topics including technology adoption, human-robot interaction, and distracted driving.

Kathryn Chan

Kathryn Chan graduated magna cum laude from Wellesley College, where she received a BA in Cognitive Science and Economics. Her global upbringing and bilingual background sparked her interest in investigating how the performance of downstream natural language processing models is impacted by sociolinguistic variation.

Chaiwoo Lee

Chaiwoo Lee is a Research Scientist at the MIT AgeLab, a research program dedicated to inventing new ideas to improve the quality of life of older adults. Chaiwoo's research focuses on understanding acceptance of technologies across generations, and looks into people's perceptions, attitudes and experiences with new devices and services.

Myounghoon Jeon

Myounghoon Jeon is an Associate Professor of Industrial and Systems Engineering and Computer Science at Virginia Tech and director of the Mind Music Machine Lab. His research focuses on emotions and sound in the areas of Automotive User Experiences, Assistive Robotics, and Arts in XR Environments.

Heesuk Son

Heesuk Son received the BS and PhD degrees from the School of Computing at KAIST, Korea, in 2011 and 2019, respectively. From 2020 to 2022, he worked as a postdoctoral research scholar at the MIT AgeLab. Since 2022, he has been a Research Scientist at Meta, USA.

John Rudnik

John Rudnik currently is a PhD student at the School of Information in the University of Michigan. Previously, at MIT AgeLab, John worked on a variety of topics including technology adoption, user needs of smart home services, retirement, and longevity planning.

Joseph Coughlin

Joseph Coughlin is a Director of the Massachusetts Institute of Technology AgeLab. He teaches in MIT's Department of Urban Studies & Planning and the Sloan School's Advanced Management Program. Coughlin conducts research on the impact of global demographic change and technology trends on consumer behavior and business strategy.

References

- Adell, E. (2010). Acceptance of driver support systems. In Proceedings of the European Conference on Human Centred Design for Intelligent Transport Systems (Vol. 2, pp. 475–486).

- Abowd, G., Atkeson, C., & Essa, I. (1998). Ubiquitous smart spaces. A white paper submitted to DARPA.

- Acampora, G., & Loia, V. (2005). Using FML and fuzzy technology in adaptive ambient intelligence environments. International Journal of Computational Intelligence Research, 1(2), 171–182. https://doi.org/10.5019/j.ijcir.2005.33

- Ajzen, I. (1985). From intentions to actions: A theory of planned behavior. In Action control (pp. 11–39). Springer.

- Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211. https://doi.org/10.1016/0749-5978(91)90020-T

- Ajzen, I., & Fishbein, M. (1974). Factors influencing intentions and the intention-behavior relation. Human Relations, 27(1), 1–15. https://doi.org/10.1177/001872677402700101

- Alharbi, A., & Sohaib, O. (2021). Technology readiness and cryptocurrency adoption: PLS-SEM and deep learning neural network analysis. IEEE Access, 9, 21388–21394. https://doi.org/10.1109/ACCESS.2021.3055785

- Augusto, J. C., Callaghan, V., Cook, D., Kameas, A., & Satoh, I. (2013). Intelligent environments: A manifesto. Human-Centric Computing and Information Sciences, 3(1), 1–18. https://doi.org/10.1186/2192-1962-3-12

- Bandura, A. (1986). Social foundations of thought and action (pp. 23–28). Englewood Cliffs.

- Brown, S. A., & Venkatesh, V. (2005). Model of adoption of technology in households: A baseline model test and extension incorporating household life cycle. MIS Quarterly, 29(3), 399–426. https://doi.org/10.2307/25148690

- Compeau, D., Higgins, C. A., & Huff, S. (1999). Social cognitive theory and individual reactions to computing technology: A longitudinal study. MIS Quarterly, 23(2), 145–158. https://doi.org/10.2307/249749

- Davis, F. D., Bagozzi, R. P., & Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35(8), 982–1003. https://doi.org/10.1287/mnsc.35.8.982

- De Boer, P. S., Van Deursen, A. J., & Van Rompay, T. J. (2019). Accepting the Internet-of-Things in our homes: The role of user skills. Telematics and Informatics, 36, 147–156. https://doi.org/10.1016/j.tele.2018.12.004

- Detjen, H., Faltaous, S., Pfleging, B., Geisler, S., & Schneegass, S. (2021). How to increase automated vehicles’ acceptance through in-vehicle interaction design: A review. International Journal of Human–Computer Interaction, 37(4), 308–330. https://doi.org/10.1080/10447318.2020.1860517

- Dishaw, M. T., & Strong, D. M. (1999). Extending the technology acceptance model with task–technology fit constructs. Information & Management, 36(1), 9–21. https://doi.org/10.1016/S0378-7206(98)00101-3

- Ducatel, K. (2001). Union européenne. Technologies de la société de l‘information, Union européenne. Institut d‘études de prospectives technologiques, & Union européenne. Société de l‘information conviviale. Scenarios for ambient intelligence in 2010.

- Haddon, L. (2006). The contribution of domestication research to in-home computing and media consumption. The Information Society, 22(4), 195–203. https://doi.org/10.1080/01972240600791325

- Hewitt, C., Politis, I., Amanatidis, T., & Sarkar, A. (2019, March). Assessing public perception of self-driving cars: The autonomous vehicle acceptance model. In Proceedings of the 24th International Conference on Intelligent User Interfaces (pp. 518–527).

- Hubert, M., Blut, M., Brock, C., Zhang, R. W., Koch, V., & Riedl, R. (2019). The influence of acceptance and adoption drivers on smart home usage. European Journal of Marketing, 53(6), 1073–1098. https://doi.org/10.1108/EJM-12-2016-0794

- Hynes, D., & Richardson, H. (2009). What use is domestication theory to information systems research? In Handbook of research on contemporary theoretical models in information systems (pp. 482–494). IGI Global.

- Kaasinen, E., Kymäläinen, T., Niemelä, M., Olsson, T., Kanerva, M., & Ikonen, V. (2012). A user-centric view of intelligent environments: User expectations, user experience and user role in building intelligent environments. Computers, 2(1), 1–33. https://doi.org/10.3390/computers2010001

- Kaminski, J. (2011). Diffusion of innovation theory. Canadian Journal of Nursing Informatics, 6(2), 1–6. https://cjni.net/journal/?p=1444

- Kaushik, M. K., & Agrawal, D. (2021). Influence of technology readiness in adoption of e-learning. International Journal of Educational Management, 35(2), 483–495. https://doi.org/10.1108/IJEM-04-2020-0216

- Lai, P. C. (2017). The literature review of technology adoption models and theories for the novelty technology. Journal of Information Systems and Technology Management, 14(1), 21–38. https://doi.org/10.4301/S1807-17752017000100002

- Lee, C. (2014). Adoption of smart technology among older adults: Challenges and issues. Public Policy & Aging Report, 24(1), 14–17. https://doi.org/10.1093/ppar/prt005

- Legris, P., Ingham, J., & Collerette, P. (2003). Why do people use information technology? A critical review of the technology acceptance model. Information & Management, 40(3), 191–204.

- Little, L., & Briggs, P. (2005). Designing ambient intelligent scenarios to promote discussion of human values.

- Lundblad, J. P. (2003). A review and critique of Rogers’ diffusion of innovation theory as it applies to organizations. Organization Development Journal, 21(4), 50. https://www.proquest.com/scholarly-journals/review-critique-rogers-diffusion-innovation/docview/197971687/se-2

- Madigan, R., Louw, T., Dziennus, M., Graindorge, T., Ortega, E., Graindorge, M., & Merat, N. (2016). Acceptance of automated road transport systems (arts): An adaptation of the UTAUT model. Transportation Research Procedia, 14, 2217–2226. https://doi.org/10.1016/j.trpro.2016.05.237

- Malhotra, Y., & Galletta, D. F. (1999, January). Extending the technology acceptance model to account for social influence: Theoretical bases and empirical validation. In Proceedings of the 32nd Annual Hawaii International Conference on Systems Sciences, 1999, HICSS-32, Abstracts and CD-ROM of Full Papers (p. 14). IEEE.

- Malik, Y. (2015). Towards evaluating pervasive computing systems [Unpublished Ph.D. thesis]. University of Sherbrooke.

- Mathieson, K., Peacock, E., & Chin, W. W. (2001). Extending the technology acceptance model: The influence of perceived user resources. ACM SIGMIS Database, 32(3), 86–112. https://doi.org/10.1145/506724.506730

- McFarland, D. J., & Hamilton, D. (2006). Adding contextual specificity to the technology acceptance model. Computers in Human Behavior, 22(3), 427–447. https://doi.org/10.1016/j.chb.2004.09.009

- Minerva, R., Biru, A., & Rotondi, D. (2015). Towards a definition of the Internet of Things (IoT). IEEE Internet Initiative, 1(1), 1–86.

- Moore, G. C., & Benbasat, I. (1991). Development of an instrument to measure the perceptions of adopting an information technology innovation. Information Systems Research, 2(3), 192–222. https://doi.org/10.1287/isre.2.3.192

- Morris, M. G., & Venkatesh, V. (2000). Age differences in technology adoption decisions: Implications for a changing work force. Personnel Psychology, 53(2), 375–403. https://doi.org/10.1111/j.1744-6570.2000.tb00206.x

- Osswald, S., Wurhofer, D., Trösterer, S., Beck, E., & Tscheligi, M. (2012, October). Predicting information technology usage in the car: Towards a car technology acceptance model. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (pp. 51–58).

- Parasuraman, A. (2000). Technology Readiness Index (TRI): A multiple-item scale to measure readiness to embrace new technologies. Journal of Service Research, 2(4), 307–320. https://doi.org/10.1177/109467050024001

- Parasuraman, A., & Colby, C. L. (2015). An updated and streamlined technology readiness index: TRI 2.0. Journal of Service Research, 18(1), 59–74. https://doi.org/10.1177/1094670514539730

- Park, E., Cho, Y., Han, J., & Kwon, S. J. (2017). Comprehensive approaches to user acceptance of Internet of Things in a smart home environment. IEEE Internet of Things Journal, 4(6), 2342–2350. https://doi.org/10.1109/JIOT.2017.2750765

- Peek, S. T., Wouters, E. J., Van Hoof, J., Luijkx, K. G., Boeije, H. R., & Vrijhoef, H. J. (2014). Factors influencing acceptance of technology for aging in place: A systematic review. International Journal of Medical Informatics, 83(4), 235–248. https://doi.org/10.1016/j.ijmedinf.2014.01.004

- Rogers, E. M. (1995). Diffusion of innovations: Modifications of a model for telecommunications. In Die Diffusion von Innovationen in der Telekommunikation (pp. 25–38). Springer.

- SAE International. (2018). SAE J3016B standard: Taxonomy and definitions for terms related to on-road motor vehicle automated driving systems. https://doi.org/10.4271/J3016_201806

- Shin, D. H. (2009). Ubiquitous city: Urban technologies, urban infrastructure and urban informatics. Journal of Information Science, 35(5), 515–526. https://doi.org/10.1177/0165551509100832

- Shin, D. H. (2010). Ubiquitous computing acceptance model: End user concern about security, privacy and risk. International Journal of Mobile Communications, 8(2), 169–186. https://doi.org/10.1504/IJMC.2010.031446

- Shuhaiber, A., & Mashal, I. (2019). Understanding users’ acceptance of smart homes. Technology in Society, 58, 101110. https://doi.org/10.1016/j.techsoc.2019.01.003

- Spiekermann, S. (2007). User control in ubiquitous computing: Design alternatives and user acceptance. Habilitation thesis, Humboldt-Universität zu Berlin.

- Taherdoost, H. (2018). A review of technology acceptance and adoption models and theories. Procedia Manufacturing, 22, 960–967. https://doi.org/10.1016/j.promfg.2018.03.137

- Taylor, S., & Todd, P. (1995). Decomposition and crossover effects in the theory of planned behavior: A study of consumer adoption intentions. International Journal of Research in Marketing, 12(2), 137–155. https://doi.org/10.1016/0167-8116(94)00019-K

- Thompson, R. L., Higgins, C. A., & Howell, J. M. (1991). Personal computing: Toward a conceptual model of utilization. MIS Quarterly, 15(1), 125–143. https://doi.org/10.2307/249443

- Thompson, R. L., Higgins, C. A., & Howell, J. M. (1994). Influence of experience on personal computer utilization: Testing a conceptual model. Journal of Management Information Systems, 11(1), 167–187. https://doi.org/10.1080/07421222.1994.11518035

- Venkatesh, V. (2000). Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Information Systems Research, 11(4), 342–365. https://doi.org/10.1287/isre.11.4.342.11872

- Venkatesh, V., & Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39(2), 273–315. https://doi.org/10.1111/j.1540-5915.2008.00192.x

- Venkatesh, V., & Brown, S. A. (2001). A longitudinal investigation of personal computers in homes: Adoption determinants and emerging challenges. MIS Quarterly, 25(1), 71–102. https://doi.org/10.2307/3250959

- Venkatesh, V., & Davis, F. D. (1996). A model of the antecedents of perceived ease of use: Development and test. Decision Sciences, 27(3), 451–481. https://doi.org/10.1111/j.1540-5915.1996.tb01822.x

- Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204. https://doi.org/10.1287/mnsc.46.2.186.11926

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478. https://doi.org/10.2307/30036540

- Wahdain, E. A., & Ahmad, M. N. (2014). User acceptance of information technology: Factors, theories and applications. Journal of Information Systems Research and Innovation, 6(1), 17–25. http://seminar.utmspace.edu.my/jisri/

- Walldén, S., & Mäkinen, E. (2014). On accepting smart environments at user and societal levels. Universal Access in the Information Society, 13(4), 449–469. https://doi.org/10.1007/s10209-013-0327-y

- Weiser, M. (1991). The computer for the 21st century. Scientific American, 265(3), 94–104. https://doi.org/10.1038/scientificamerican0991-94

- Yang, H., Lee, W., & Lee, H. (2018). IoT smart home adoption: The importance of proper level automation. Journal of Sensors, 2018, 1–11. https://doi.org/10.1155/2018/6464036